1. Introduction

In [1], we developed an axiomatic system for thermodynamics that incorporated information as a fundamental concept. This system was inspired by previous axiomatic approaches [2,3] and discussions of Maxwell’s demon [4,5]. The basic concept of our system is the eidostate, which is a collection of possible states from the point of view of some agent. The axioms imply the existence of additive conserved quantities called components of content and an entropy function $\mathbb{S}$ that identifies reversible and irreversible processes. The entropy includes both thermodynamic and information components.

One of the surprising things about this axiomatic system is that despite the absence of probabilistic ideas in the axioms, a concept of probability emerges from the entropy $\mathbb{S}$. If state e is an element of a uniform eidostate E, then we can define

$$P(e|E) = 2^{\mathbb{S}(e) - \mathbb{S}(E)}.$$

States in E with higher entropy are assigned higher probability. As we will review below, this distribution has a uniquely simple relationship to the entropies of the individual states and the overall eidostate E.

The emergence of an entropic probability distribution motivates us to ask several questions. Can this idea be extended beyond uniform eidostates? Can we interpret an arbitrary probability distribution over a set of states as an entropic distribution within a wider context? What does the entropic probability tell us about probabilistic processes affecting the states within our axiomatic system? In this paper, we will address these questions.

2. Axiomatic Information Thermodynamics

In physics, a formal axiomatic system can serve several purposes. It can serve to ensure consistent reasoning with unfamiliar or counter-intuitive mathematical objects. Furthermore, it may clarify the underlying concepts of a subject and their relations. This in turn can help us to understand how widely, and under what circumstances, those concepts may apply to the physical world. Here, we review the system in [1] and describe the ideas represented by the definitions and axioms.

Our system aims to capture how an agent with finite knowledge might describe states and processes in the external world. The set of states is denoted $\mathscr{S}$, but the agent’s knowledge is represented by an eidostate, which is a finite collection of possible states in $\mathscr{S}$. (The term eidostate comes from the Greek eidos, meaning to see.) Thus, the agent’s knowledge of the state may be incomplete. No probability distribution is assumed a priori for the eidostate; it is a simple enumeration of possibilities. The set of eidostates is called $\mathscr{E}$. (Without too much confusion, we may identify a state $s$ with the singleton eidostate $\{s\}$ so that $\mathscr{S}$ can be regarded as a subset of $\mathscr{E}$.)

Eidostates may be combined by the operation +, which simply represents the Cartesian product of the sets. Thus, if A and B are distinct eidostates, the combination $A + B$ is not the same as $B + A$. Two eidostates A and $A'$ are similar ($A \sim A'$) if they are formed by the same Cartesian factors, perhaps put together in a different way. Our first axiom is

Axiom 1 (Eidostates). $\mathscr{E}$ is a collection of sets called eidostates such that

- (a)

Every $E \in \mathscr{E}$ is a finite non-empty set with a finite prime Cartesian factorization.

- (b)

$A + B \in \mathscr{E}$ if and only if $A, B \in \mathscr{E}$.

- (c)

Every non-empty subset of an eidostate is also an eidostate.

The agent may control state transformations, including those that involve the acquisition, use, or deletion of information. This produces a relation → on $\mathscr{E}$. We interpret $A \to B$ to mean that there exists a dynamical evolution that transforms A into B, perhaps in the presence of some other “apparatus” states that undergo no net change. We assume that any simple rearrangement of an eidostate’s components is always possible, that transformations can be composed in succession, and so on:

Axiom 2 (Processes). Let eidostates $A, B, C \in \mathscr{E}$, and $s \in \mathscr{S}$.

- (a)

If $A \sim B$, then $A \to B$.

- (b)

If $A \to B$ and $B \to C$, then $A \to C$.

- (c)

If $A \to B$, then $A + C \to B + C$.

- (d)

If $A + s \to B + s$, then $A \to B$.

Formally, a process is a pair of eidostates $\langle A, B \rangle$, and it is said to be possible if either $A \to B$ or $B \to A$. An eidostate A is uniform if, for all $a, b \in A$, the process $\langle a, b \rangle$ is possible. Uniform eidostates, as we will see, have particularly tractable properties. The existence of non-uniform eidostates is neither forbidden nor guaranteed by the axioms, and there are models both with them and without them.

Consider a non-deterministic process in which initial state a might evolve into either $a_1$ or $a_2$. We describe this as a deterministic process on eidostates (that is, $a \to \{a_1, a_2\}$). The principle underlying the “Second Law” in our axiomatic system is simple to state. Given a collection A of possible states, no process can deterministically eliminate one or more of the elements of A without any other effect. Possibilities cannot simply be “deleted” by the agent. That is,

Axiom 3. If $A \in \mathscr{E}$ and B is a proper subset of A, then $A \not\to B$.

To borrow an example from the next section, suppose the agent does not know whether a coin is showing “heads” (h) or “tails” (t). This might be represented by the eidostate $\{h, t\}$. The agent might examine the coin to discover its actual state, but this is not a deterministic process, and it might end up in either h or t. To guarantee that the coin is h, the agent will need to intervene in the coin’s state, which in principle may involve changes to other states.

On the other hand, it is reasonable to assume the existence of certain “conditional” processes:

Axiom 4 (Conditional processes).

- (a)

Suppose and . If and then .

- (b)

Suppose A and B are uniform eidostates that are each disjoint unions of eidostates: $A = A_1 \cup A_2$ and $B = B_1 \cup B_2$. If $A_1 \to B_1$ and $A_2 \to B_2$ then $A \to B$.

We now introduce information concepts into the system. This is based on the idea of a record state. State r is a record state if there exists another state $a$ such that $a \to a + r$ and $a + r \to a$. That is, a particular record state may be freely created or deleted. An information state is an eidostate containing only record states; the set of these is called $\mathscr{I}$. A bit state is an information state with exactly two elements, and a bit process is a process of the form . Then, we have

Axiom 5 (Information). There exist a bit state and a possible bit process.

Devices such as Maxwell’s demon accomplish state transformations by acquiring information. We accommodate these into our theory via a new axiom:

Axiom 6 (Demons). Suppose $a, b \in \mathscr{S}$ and $J \in \mathscr{I}$ such that $a \to b + J$.

- (a)

There exists $I \in \mathscr{I}$ such that $b \to a + I$.

- (b)

For any $I \in \mathscr{I}$, either $a \to b + I$ or $b + I \to a$.

The first clause asserts that what one demon can do (transforming a into b by acquiring the information in J), another demon can undo (transforming b into a by acquiring information in I). The second clause envisions nearly reversible demons.

Two more axioms serve more technical purposes. The first regularizes the → relation by its asymptotic behavior:

Axiom 7 (Stability). Suppose $A, B \in \mathscr{E}$ and $J \in \mathscr{I}$. If $nA + J \to nB$ for arbitrarily large values of n, then $A \to B$.

The second posits the existence of states in which conserved quantities may be stored—e.g., the height of a weight used for storing energy. We have

Axiom 8 (Mechanical states). There exists a subset $\mathscr{M} \subseteq \mathscr{S}$ of mechanical states such that

- (a)

If $l, m \in \mathscr{M}$, then $l + m \in \mathscr{M}$.

- (b)

For $l, m \in \mathscr{M}$, if $l \to m$ then $m \to l$.

(This axiom is identical to one posited by Giles [2], whose system was a model for our own.) Note that the axiom does not assert that $\mathscr{M}$ is non-empty; it is perfectly consistent to have a model for the axioms with no mechanical states.

We introduce one additional axiom that allows us to relate uniform eidostates to singleton states. Consider, for instance, a situation in which a one-particle “Szilard” gas is confined to a volume. This situation corresponds to a single state $g$ (as in the first part of Figure 2 below). Now, we introduce a partition so that the gas particle might be either in one side or the other (that is, state $g_L$ or $g_R$). If we remove the partition, the original state is restored. Thus, $g \to \{g_L, g_R\}$ and $\{g_L, g_R\} \to g$. Considering many such examples, we arrive at our final axiom:

Axiom 9 (State equivalence). If E is a uniform eidostate, then there exist states such that and .

The system yields some striking results. For instance, we can establish the existence of components of content, which are numerical quantities that are conserved in every possible process. A component of content Q is a real-valued additive function on the set of states $\mathscr{S}$. (Additive in this context means that $Q(a + b) = Q(a) + Q(b)$.) In a uniform eidostate E, every element has the same values of all components of content, so we can without ambiguity refer to the value $Q(E)$. The set of uniform eidostates is denoted $\mathscr{U}$. This set includes all singleton states in $\mathscr{S}$, all information states in $\mathscr{I}$, and so forth, and it is closed under the + operation.

Perhaps the most far-reaching theorem established by the axioms is the following (Theorem 8 in [1]):

Theorem 1. There exist an entropy function $\mathbb{S}$ and a set of components of content Q on $\mathscr{U}$ with the following properties:

- (a)

For any $E, F \in \mathscr{U}$, $\mathbb{S}(E + F) = \mathbb{S}(E) + \mathbb{S}(F)$.

- (b)

For any $E, F \in \mathscr{U}$ and component of content Q, $Q(E + F) = Q(E) + Q(F)$.

- (c)

For any $E, F \in \mathscr{U}$, $E \to F$ if and only if $\mathbb{S}(E) \le \mathbb{S}(F)$ and $Q(E) = Q(F)$ for every component of content Q.

- (d)

$\mathbb{S}(m) = 0$ for all $m \in \mathscr{M}$.

On uniform eidostates, the entropy function is completely determined (up to a non-mechanical component of content) by the → relations among the eidostates.

3. Coin-and-Box Model

Now that we have described our system in some detail, we will introduce a very simple model of the axioms. None of our later results depend on this model, but a definite example will be convenient for explanatory purposes. Our theory deals with configurations of coins and boxes; the states are arrangements of coins, memory records, and closed boxes containing coins. We have the following:

Coin states, which can be either h (heads) or t (tails) or combinations of these. It is also convenient to define a stack state to be a particular combination of n coin states h: $\mathbf{n} = h + h + \cdots + h$ (n terms). The coin value Q of a compound of coin states is just the total number of coins involved. A finite set K of coin states is said to be Q-uniform if every element has the same Q-value.

Record states r. As the name suggests, these should be interpreted as specific values in some available memory register. The combination of two record states is another record state. Thus, r, $r + r$, $r + r + r$, etc., are all distinct record states. Record states are not coins, so $Q(r) = 0$.

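Box states. For any Q-uniform set of coin states C, there is a sequence of box states $\mathcal{B}_0(C), \mathcal{B}_1(C), \mathcal{B}_2(C), \ldots$. Intuitively, $\mathcal{B}_n(C)$ represents a kind of closed box containing coins, so that $Q(\mathcal{B}_n(C)) = n\,Q(C)$. If $C = \{h, t\}$, then we denote the corresponding “basic” box states by $b_n$.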

We now must define the relation → among eidostates.

We assume that similar eidostates can be transformed into each other in accordance with our axioms. As far as the → relation is concerned, we can freely rearrange the “pieces” in a compound eidostate.

For coin states, $h \to t$ and $t \to h$.

If r is a record state, $a \to a + r$ and $a + r \to a$ for any a. In a similar way, for an empty box state, $a \to a + \mathcal{B}_0(C)$ and $a + \mathcal{B}_0(C) \to a$.

If K is a Q-uniform eidostate of coin states, $K + \mathcal{B}_n(K) \to \mathcal{B}_{n+1}(K)$ and $\mathcal{B}_{n+1}(K) \to K + \mathcal{B}_n(K)$.

Now, we add some rules that allow us to extend these to more complex situations and satisfy the axioms. In what follows, A, $A'$, B, etc., are eidostates, and s is a state.

- Transitivity.

If $A \to B$ and $B \to C$, then $A \to C$.

- Augmentation.

If $A \to B$, then $A + C \to B + C$.

- Cancelation.

If $A + s \to B + s$, then $A \to B$.

- Subset.

If $A \to B$ and $A' \subseteq A$, then $A' \to B$.

- Disjoint union.

If A and B are both disjoint unions $A = A_1 \cup A_2$ and $B = B_1 \cup B_2$, and both $A_1 \to B_1$ and $A_2 \to B_2$, then $A \to B$.

Using these rules, we can prove a lot of → relations. For example, for a basic box state, we have $\{h, t\} + b_n \to b_{n+1}$. From the subset rule, we have

$$h + b_n \to b_{n+1}$$

(but not the reverse). Then, we can say

$$h + b_n \to b_{n+1} \to \{h, t\} + b_n,$$

from which we can conclude (via transitivity and cancelation) that $h \to \{h, t\}$. The use of a basic box allows us to “randomize” the state of one coin.

Or consider the two coin states h and t and distinct record states $r_h$ and $r_t$. Then

$$h \to h + r_h \quad \text{and} \quad t \to h + r_t,$$

from which (by the disjoint union rule) we can show that $\{h, t\} \to h + \{r_h, r_t\}$. That is, we can set an unknown coin state to h if we also make a record of which state it is. A similar argument establishes the following:

$$h + \{r_h, r_t\} + b_n \to b_{n+1} \quad \text{and} \quad b_{n+1} \to h + \{r_h, r_t\} + b_n.$$

The bit state $\{r_h, r_t\}$ can be deleted at the cost of a coin absorbed by the basic box. The basic box is a coin-operated deletion device, and since each step above is reversible, we can also use it to dispense a coin together with a bit state (that is, an unknown bit in a memory register).

These examples help us to clarify an important distinction. What is the difference between the box state $b_1$ and the eidostate $\{h, t\}$? Could we simply replace all box states $\mathcal{B}_n(C)$ with a simple combination $C + \cdots + C$ of possible coin eidostates? We cannot, because such a replacement would preclude us from using the subset rule to obtain Equation (2). The whole point of the box state is that the detailed state of its contents is entirely inaccessible for determining possible processes. From the point of view of the agent, putting a coin in a box effectively randomizes it.

It is not difficult to show that our model satisfies all of the axioms presented in the last section with the mechanical states in $\mathscr{M}$ identified as coin states. The key idea in the proof is that we can reversibly reduce any eidostate to one with a special form:

$$\mathbf{q} + I_k,$$

where $\mathbf{q}$ is a stack state of q coins and $I_k$ is an information state containing k possible record states. (This is true because all box states can be reversibly “emptied”, and any Q-uniform eidostate of coin states can be reversibly transformed into a stack state and an information state, as in Equation (5).) Relations between eidostates are thus reduced to relations between states of this form. We note that the coin value q is conserved in every → relation, and no relation allows us to decrease the value of k. In our model, there is just one independent component of content (Q itself), and the entropy function is $\mathbb{S}(\mathbf{q} + I_k) = \log k$. (We use base-2 logarithms throughout.)
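The reduction to the form $\mathbf{q} + I_k$ makes the model easy to explore numerically. The Python sketch below is our own illustration, not code from [1]: it encodes a reduced eidostate by a hypothetical pair (q, k), combines eidostates by adding coin values and multiplying numbers of possibilities, and decides whether one eidostate can be turned into another by the criterion just stated (q conserved, k not decreased). The encodings of h, $\{h, t\}$, a bit state, and the basic boxes $b_n$ (assumed to empty reversibly into n coins and $2^n$ possible records) are assumptions that follow the derivations above.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Reduced:
    """Reduced form q + I_k of a coin-and-box eidostate (hypothetical helper).

    q: coin value Q, i.e. the size of the stack state
    k: number of possible record states in the information state I_k
    """
    q: int
    k: int

    def __add__(self, other: "Reduced") -> "Reduced":
        # Cartesian combination: coin values add, numbers of possibilities multiply.
        return Reduced(self.q + other.q, self.k * other.k)

    def entropy(self) -> float:
        # S(q + I_k) = log2 k
        return math.log2(self.k)


def can_transform(e: Reduced, f: Reduced) -> bool:
    """E -> F iff the coin value is conserved and k does not decrease.

    Comparing k directly is equivalent to comparing log2 k and avoids rounding.
    """
    return e.q == f.q and e.k <= f.k


HEADS = Reduced(1, 1)         # the state h
COIN_UNKNOWN = Reduced(1, 2)  # {h, t}, equivalent to h + {r_h, r_t}
BIT = Reduced(0, 2)           # a bit state {r_h, r_t}


def basic_box(n: int) -> Reduced:
    # Emptying b_n reversibly gives n coins and 2**n possible records.
    return Reduced(n, 2 ** n)


print(can_transform(HEADS, COIN_UNKNOWN))          # True: h -> {h, t}
print(can_transform(COIN_UNKNOWN, HEADS))          # False: no deleting possibilities
print(HEADS + BIT + basic_box(3) == basic_box(4))  # True: coin-operated bit deletion
```

The last line reproduces, in this encoding, the statement that a bit state together with one coin is interchangeable with one extra coin in the basic box.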

4. The Entropy Formula and Entropic Probability

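Now, let us return to the general axiomatic system. One general result of [1] is a formula for computing the entropy of a uniform eidostate E in terms of the entropies of its elements e. This is

$$\mathbb{S}(E) = \log \left( \sum_{e \in E} 2^{\mathbb{S}(e)} \right).$$

It is this equation that motivates our definition of the entropic probability of e within the eidostate E:

$$P(e|E) = \frac{2^{\mathbb{S}(e)}}{\sum_{e' \in E} 2^{\mathbb{S}(e')}}.$$

Then,

$$P(e|E) = 2^{\mathbb{S}(e) - \mathbb{S}(E)},$$

and the probabilities sum over E to 1. As we have mentioned, the entropy function $\mathbb{S}$ may not be quite unique; nevertheless, two different admissible entropy functions lead to the same entropic probability distribution. (They can differ only by a component of content, which takes the same value on every element of a uniform eidostate and therefore cancels in $\mathbb{S}(e) - \mathbb{S}(E)$.) Even better, our definition gives us a very suggestive formula for the entropy of E:

$$\mathbb{S}(E) = \langle \mathbb{S}(e) \rangle + H(P),$$

where the mean $\langle \mathbb{S}(e) \rangle$ is taken with respect to the entropic probability, and

$$H(P) = -\sum_{e \in E} P(e|E) \log P(e|E)$$

is the Shannon entropy of the distribution P [6,7].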
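A short numerical check of these formulas may help. The sketch below (plain Python; the element entropies are made-up values, not taken from any model in the text) computes $\mathbb{S}(E)$ from the entropy formula, the entropic probabilities $P(e|E) = 2^{\mathbb{S}(e) - \mathbb{S}(E)}$, and confirms that $\mathbb{S}(E) = \langle \mathbb{S}(e) \rangle + H(P)$. It also shifts every entropy by one constant, mimicking two admissible entropy functions that differ by a component of content, and shows that the distribution does not change.

```python
import math


def eidostate_entropy(state_entropies):
    """S(E) = log2(sum over e in E of 2**S(e)), for a uniform eidostate E."""
    return math.log2(sum(2.0 ** s for s in state_entropies))


def entropic_probabilities(state_entropies):
    """P(e|E) = 2**(S(e) - S(E)) for each element e."""
    s_total = eidostate_entropy(state_entropies)
    return [2.0 ** (s - s_total) for s in state_entropies]


def shannon_entropy(probs):
    """H(P) = -sum p log2 p, skipping zero-probability terms."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Made-up element entropies (in bits) for a uniform eidostate with three elements.
S = [0.0, 1.0, 1.0]
P = entropic_probabilities(S)          # approximately [0.2, 0.4, 0.4]
mean_S = sum(p * s for p, s in zip(P, S))

# S(E) and <S(e)> + H(P) agree up to floating-point rounding.
print(eidostate_entropy(S), mean_S + shannon_entropy(P))

# Shifting every entropy by one constant (as a component of content does on a
# uniform eidostate) leaves the entropic distribution unchanged.
P_shifted = entropic_probabilities([s + 7.0 for s in S])
```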

Equation (9) is very special. If we choose an arbitrary distribution (say $p(e)$), then with respect to this probability, we find

$$\langle \mathbb{S}(e) \rangle_p + H(p) \le \mathbb{S}(E),$$

with equality if and only if $p(e)$ is the entropic distribution [7]. Therefore, we might define the entropic probability to be the distribution that maximizes the sum of average state entropy and Shannon entropy, a kind of “maximum entropy” characterization.
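For completeness, here is one way to see the inequality (a standard argument via Gibbs' inequality, written in our notation). Using $\mathbb{S}(E) - \mathbb{S}(e) = -\log P(e|E)$ and $\sum_e p(e) = 1$,

$$\mathbb{S}(E) - \langle \mathbb{S}(e) \rangle_p - H(p) = \sum_{e \in E} p(e)\left[\mathbb{S}(E) - \mathbb{S}(e) + \log p(e)\right] = \sum_{e \in E} p(e) \log \frac{p(e)}{P(e|E)} = D(p\,\|\,P) \ge 0,$$

where $D(p\,\|\,P)$ is the relative entropy, which vanishes if and only if $p(e) = P(e|E)$ for every e.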

How is the entropic probability related to other familiar probability concepts? To quote [1],

Every formal basis for probability emphasizes a distinct idea about it. In Kolmogorov’s axioms [8], probability is simply a measure on a sample space. High-measure subsets are more probable. In the Bayesian approach of Cox [9], probability is a rational measure of confidence in a proposition. Propositions in which a rational agent is more confident are also more probable. Laplace’s early discussion [10] is based on symmetry. Symmetrically equivalent events—two different orderings of a shuffled deck of cards, for instance—are equally probable. (Zurek [11] has used a similar principle of “envariance” to discuss the origin of quantum probabilities.) In algorithmic information theory [7], the algorithmic probability of a bit string is related to its complexity. Simpler bit strings are more probable.

We may remark further that axiom systems like those of Kolmogorov or Cox do not assign actual probabilities in any situation; they simply enforce rules that any such assignment must satisfy. Entropic probability is an actual assignment of a particular distribution, which is determined by objective facts about state transformations.

5. Uniformization

A unique entropic probability rule arises from our → relations among eidostates, which in the real world might summarize empirical data about possible state transformations. But so far, this entropic probability distribution is only defined within a uniform eidostate E.

In part, this makes sense. An eidostate represents the knowledge of an agent, i.e., that the state must be one of those included in the set. This is the knowledge upon which the agent will assign probabilities, which is why we have indicated the eidostate E as the condition for the distribution. Furthermore, these might be the only eidostates, since the axioms themselves do not guarantee that any non-uniform eidostates exist. (Some models of the axioms have them, and some do not.) But can we generalize the probabilities to distributions over non-uniform collections of states?

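Suppose $A = \{a_1, \ldots, a_N\}$ is a finite set of states that is possibly not uniform. Then, we say that A is uniformizable if there exists a uniform eidostate

$$\tilde{A} = \{a_1 + m_1, \ldots, a_N + m_N\},$$

where the states $m_k$ are mechanical states in $\mathscr{M}$. The idea is that the states in A, which vary in their components of content, can be extended by mechanical states that “even out” these variations. Since $\tilde{A}$ is uniform, the process $\langle a_j + m_j, a_k + m_k \rangle$ is possible for any j and k. The abstract process $\langle a_j, a_k \rangle$ is said to be adiabatically possible [2]. Mechanical states have $\mathbb{S}(m_k) = 0$, so the entropy of the extended $\tilde{A}$ is just

$$\mathbb{S}(\tilde{A}) = \log \sum_{k} 2^{\mathbb{S}(a_k)},$$

which is independent of our choice of the uniformizing mechanical states.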

What is the significance of this entropy? Suppose A and B are not themselves uniform, but their union is uniformizable. Then, we may construct uniform eidostates $\tilde{A}$ and $\tilde{B}$, both subsets of the uniformized union and hence sharing the same components of content, such that either $\tilde{A} \to \tilde{B}$ or $\tilde{B} \to \tilde{A}$, depending on whether $\mathbb{S}(\tilde{A}) \le \mathbb{S}(\tilde{B})$ or the reverse. In short, the entropies of the extended eidostates determine whether the set of states A can be turned into the set B if we imagine that these states can be augmented by mechanical states, embedding them in a larger, uniform context.

Given the entropy of the extended state, we can define

$$P(a|A) = 2^{\mathbb{S}(a) - \mathbb{S}(\tilde{A})}.$$

This extends the entropic probability to the uniformizable set A.
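As a minimal sketch of the uniformization procedure, the Python below uses a hypothetical non-uniform pair of basic box states $\{b_1, b_3\}$ (our own choice, not the example that follows), assuming $Q(b_n) = n$ coins and $\mathbb{S}(b_n) = n$ bits. It pads each state with stack states up to a common coin value and then computes entropic probabilities. Because the padding states are mechanical and carry no entropy, the result does not depend on how much padding we choose.

```python
import math


def uniformizing_stacks(coin_values, target=None):
    """Number of extra coins (stack states) to add to each state so all share one Q-value.

    Hypothetical helper: by default, pads up to the largest coin value present.
    """
    if target is None:
        target = max(coin_values)
    if target < max(coin_values):
        raise ValueError("target must be at least the largest coin value")
    return [target - q for q in coin_values]


def entropic_probabilities(state_entropies):
    """P(a|A) = 2**(S(a) - S(A~)), with S(A~) = log2 sum 2**S(a)."""
    s_total = math.log2(sum(2.0 ** s for s in state_entropies))
    return [2.0 ** (s - s_total) for s in state_entropies]


# Hypothetical set {b_1, b_3}: Q(b_n) = n coins and S(b_n) = n bits.
box_sizes = [1, 3]
stacks = uniformizing_stacks(box_sizes)           # [2, 0]
stacks_more = uniformizing_stacks(box_sizes, 10)  # [9, 7]; also uniform
# Stack states are mechanical (zero entropy), so only S(b_n) = n enters:
probs = entropic_probabilities(box_sizes)         # approximately [0.2, 0.8]
```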

Let us consider an example from our coin-and-box model. We start out with the non-uniform set

. These two basic box states have different numbers of coins. But we can uniformize this set by adding stack states, so that

is a uniform eidostate. The entropy of a basic box state is $\mathbb{S}(b_n) = n$, so we have

The entropic probabilities are thus

6. Reservoir States

So far, we have uniformized a non-uniform set A by augmenting its elements with mechanical states, which act as a sort of “reservoir” of components of content. These mechanical states have no entropy of their own. But we can also consider a procedure in which the augmenting states act more like the states of a thermal reservoir in conventional thermodynamics.

We begin with a mechanical state $\mu$, and posit a sequence of reservoir states $\theta_0, \theta_1, \theta_2, \ldots$, which have the following properties.

For any

n and

m, we have that

, so that $\delta = \mathbb{S}(\theta_{n+1}) - \mathbb{S}(\theta_n)$ is a non-negative constant for the particular sequence of reservoir states. This sequence $\theta_n$ is characterized by the state $\mu$ and the entropy increment $\delta$. Note that we can write

, where

.

For example, in our coin-and-box model, the basic box states $b_n$ act as a sequence of reservoir states with a mechanical (coin) state $\mu = h$ and an entropy increment $\delta = 1$. The more general box states $\mathcal{B}_n(K)$ form a reservoir state sequence with $\mu = \mathbf{q}$ and $\delta = \log k$, where $q = Q(K)$ and k is the number of states in K. For each of these box-state reservoir sequences, .

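One particular type of reservoir is a mechanical reservoir consisting of the states $\mu$, $\mu + \mu$, $\mu + \mu + \mu$, etc. We denote the nth such state by $n\mu$. For the $n\mu$ reservoir states, $\delta = 0$. If we have a finite set of states $A = \{a_1, \ldots, a_N\}$ that can be uniformized by the addition of the $n_k\mu$ states, they can also be uniformized by a corresponding set of non-mechanical reservoir states $\theta_{n_k}$:

$$\tilde{A}_\theta = \{a_1 + \theta_{n_1}, \ldots, a_N + \theta_{n_N}\}.$$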

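As before, we can find the entropy of this uniform eidostate and define entropic probabilities. But the $\theta$ reservoir states now contribute to the entropy and affect the probabilities.

First, the entropy:

$$\mathbb{S}(\tilde{A}_\theta) = \log \sum_{k} 2^{\mathbb{S}(a_k) + \mathbb{S}(\theta_{n_k})}.$$

The entropic probability, which now depends on the choice of reservoir states, is

$$P(a_k | A, \theta) = 2^{\mathbb{S}(a_k) + \mathbb{S}(\theta_{n_k}) - \mathbb{S}(\tilde{A}_\theta)}.$$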

The reservoir states affect the relative probabilities of the states. For example, suppose $\mathbb{S}(a_j) = \mathbb{S}(a_k)$ for a pair of states in A. We might naively think that these states would end up with the same entropic probability, as they would if we uniformized A by mechanical states. But since we are uniformizing using the $\theta$ reservoir states, it may be that $\theta_{n_j}$ and $\theta_{n_k}$ have different entropies. Then, the ratio of the probabilities is

$$\frac{P(a_j | A, \theta)}{P(a_k | A, \theta)} = 2^{\mathbb{S}(\theta_{n_j}) - \mathbb{S}(\theta_{n_k})} = 2^{(n_j - n_k)\delta},$$

which may be very different from 1.
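The effect of the reservoir can be sketched numerically. The Python below is an illustration under an assumption we state explicitly: the reservoir entropies grow linearly, $\mathbb{S}(\theta_n) = \mathbb{S}(\theta_0) + n\delta$, which is what a constant increment $\delta$ implies. Two states of equal entropy, padded by hypothetical reservoir states $\theta_0$ and $\theta_2$ with $\delta = 1$ (the basic-box value), end up with probabilities in the ratio $2^{2\delta} = 4$, and the unknown offset $\mathbb{S}(\theta_0)$ drops out.

```python
import math


def reservoir_probabilities(state_entropies, ladder_indices, delta, s_theta0=0.0):
    """P(a_k | A, theta) when each a_k is augmented by the reservoir state theta_{n_k}.

    Assumption: S(theta_n) = s_theta0 + n * delta (constant entropy increment).
    """
    exponents = [s + s_theta0 + n * delta
                 for s, n in zip(state_entropies, ladder_indices)]
    total = math.log2(sum(2.0 ** x for x in exponents))  # S of the extended eidostate
    return [2.0 ** (x - total) for x in exponents]


# Two hypothetical states with equal entropy, padded by theta_0 and theta_2.
S = [0.0, 0.0]
n = [0, 2]
delta = 1.0  # the basic-box increment

P = reservoir_probabilities(S, n, delta)
ratio = P[1] / P[0]  # should equal 2 ** ((2 - 0) * delta) = 4

# The unknown offset S(theta_0) cancels out of the probabilities.
P_offset = reservoir_probabilities(S, n, delta, s_theta0=3.5)
```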

Again, let us consider our coin-and-box model. We begin with the non-uniform set

. Each of these states has the same entropy

, that is, zero. We choose to uniformize using basic box states $b_n$. For instance, we might have

Recalling that $\mathbb{S}(b_n) = n$, the entropy is

This yields probabilities

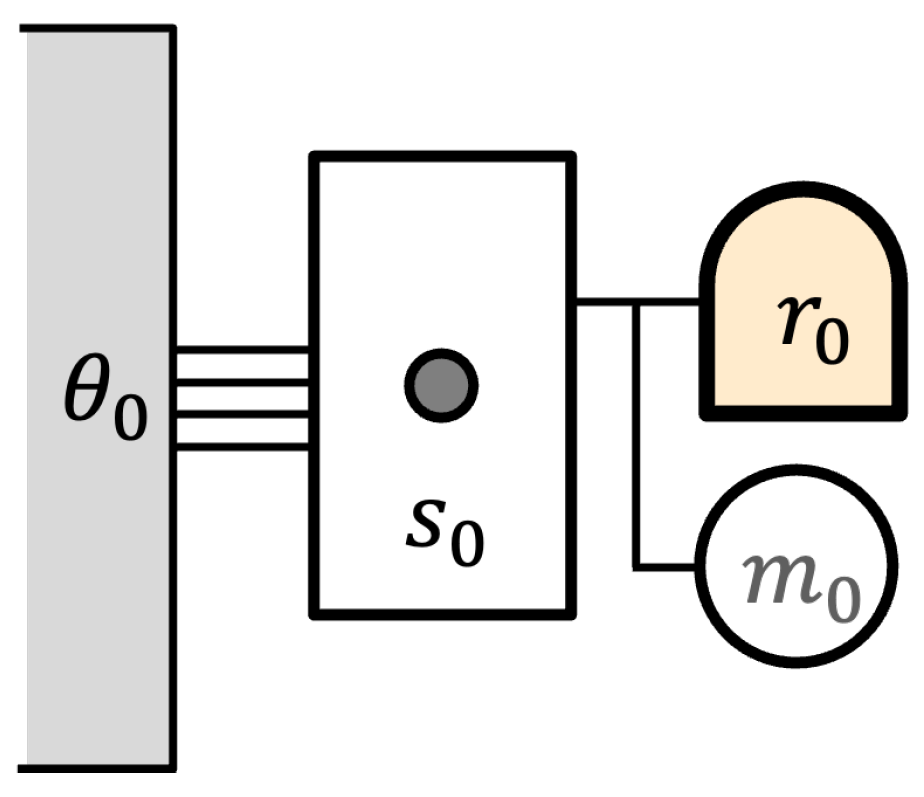

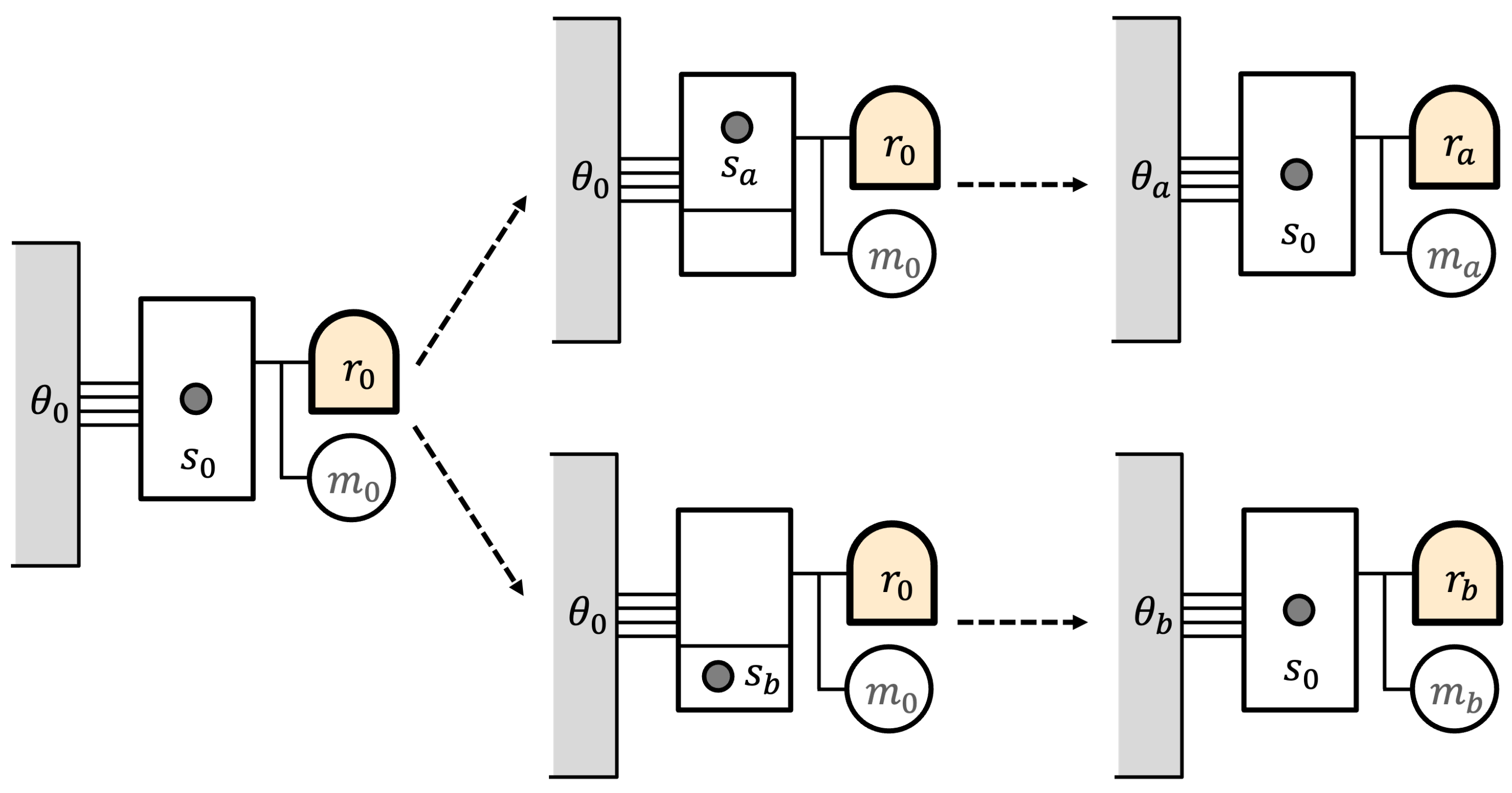

As an illustration of these ideas, consider the version of Maxwell’s demon shown in Figure 1.

The demon is a reversible computer with an initial memory state $d_0$. It is equipped with a reversible battery for storing energy, initially in mechanical state $m_0$. The demon interacts with a one-particle “Szilard” gas, in which the single particle can move freely within its volume (state $g$). The gas is maintained in thermal equilibrium with a heat reservoir whose initial state is $R_0$. We might denote the overall initial state by $d_0 + m_0 + g + R_0$.

Now, the demon introduces a partition into the gas, separating the enclosure into unequal subvolumes, as in Figure 2. The two resulting states are $g_L$ and $g_R$, which are not equally probable. The probabilities here are entropic probabilities due to the difference in entropy of $g_L$ and $g_R$.

Now, the demon records the location of the particle in its memory and uses this to control the isothermal expansion of the one-particle gas. The work is stored in the battery. At the end of this process, the demon retains its memory record ($d_L$ or $d_R$), and the battery is in one of two mechanical states $m_L$ and $m_R$. The gas is again in state $g$. But different amounts of heat have been extracted from the reservoir during the expansion, so the reservoir has two different states $R_L$ and $R_R$.

The overall final eidostate might be represented as

$$F = \{d_L + m_L + g + R_L,\; d_R + m_R + g + R_R\}.$$

The states of the demon and the gas, $d_L + m_L + g$ and $d_R + m_R + g$, have different energies and the same entropy. It is the reservoir states $R_L$ and $R_R$ that (1) make F uniform (constant energy) and (2) introduce the entropy differences leading to different entropic probabilities for the two states.

A conventional view would suppose that the unequal probabilities for the two final demon states come from their history, that is, that the probabilities are inherited from the unequal partition of the gas. In the entropic view, the unequal probabilities are due to differences in the environment of the demon, which is represented by the different reservoir states $R_L$ and $R_R$. The environment, in effect, serves as the “memory” of the history of the process.

7. Context States

When we uniformize a non-uniform A by means of a sequence of reservoir states, the reservoir states affect the entropic probabilities. We can use this idea more generally.

For example, in our coin-and-box model, suppose we flip a coin but do not know how it lands. This might be represented by the eidostate $\{h, t\}$. Without further information, we would assign the coin states equal probability 1/2, which is the simple entropic probability. But suppose we have additional information about the situation that would lead us to assign probabilities 1/3 and 2/3 to the coin states. This additional information (this context) must be reflected in the eidostate. The example in Equation (14) tells us that this does the job:

The extended coin-flip state

includes extra context so that the entropic probability reflects our additional information.

In general, we can adjust our entropic probabilities by incorporating context states. Suppose we have a uniform eidostate $A = \{a_1, \ldots, a_N\}$, but we wish to specify a particular non-entropic distribution $p_k$ over these states. Then, for each $a_k$, we introduce eidostates $C_k$, leading to an extended eidostate

$$A_C = \bigcup_{k} \left(a_k + C_k\right),$$

which we assume is uniform. The $C_k$ values are the context states. Our challenge is to find a set of context states so that the entropic probability in $A_C$ equals the desired distribution $p_k$.

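We cannot always do this exactly, but we can always approximate it as closely as we like. First, we note that we can always choose our context eidostates to be information states. The information state $I_n$ containing n record states has entropy $\log n$. Now, for each k, we closely approximate the ratio $p_k / P(a_k|A)$ by a rational number; and since there are finitely many of these numbers, we can represent them using a common denominator D. In our approximation,

$$\frac{p_k}{P(a_k|A)} \approx \frac{n_k}{D}.$$

Now, choose $C_k = I_{n_k}$ for each k. The entropy of $A_C$ becomes

$$\mathbb{S}(A_C) = \log \sum_k n_k\, 2^{\mathbb{S}(a_k)} = \mathbb{S}(A) + \log \sum_k n_k P(a_k|A) \approx \mathbb{S}(A) + \log D.$$

From this, we find that the entropic probability is

$$P(a_k|A_C) = \frac{n_k\, 2^{\mathbb{S}(a_k)}}{2^{\mathbb{S}(A_C)}} \approx \frac{n_k P(a_k|A)}{D} \approx p_k,$$

as desired.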
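The construction above is easy to mechanize. In the Python sketch below (an illustration; the helper names and the use of `fractions.Fraction.limit_denominator` for the rational approximation are our own choices), each ratio $p_k / P(a_k|A)$ is replaced by a nearby fraction, the fractions are brought to a common denominator D with `math.lcm` (Python 3.9 or later), and the numerators become the record counts $n_k$ of the context information states $I_{n_k}$. It assumes every target probability is positive, since an information state must contain at least one record.

```python
import math
from fractions import Fraction


def context_record_counts(target_probs, entropic_probs, max_denominator=1000):
    """Return (counts, D) with counts[k] / D close to target_probs[k] / entropic_probs[k]."""
    ratios = [Fraction(p / q).limit_denominator(max_denominator)
              for p, q in zip(target_probs, entropic_probs)]
    D = math.lcm(*(r.denominator for r in ratios))
    # Exact integer arithmetic: D is a multiple of every denominator.
    counts = [r.numerator * (D // r.denominator) for r in ratios]
    if any(n <= 0 for n in counts):
        raise ValueError("each target probability must be large enough to give n_k >= 1")
    return counts, D


def tuned_probabilities(counts, entropic_probs):
    """Entropic probabilities in A_C when C_k = I_{n_k}.

    S(a_k + I_{n_k}) = S(a_k) + log2(n_k), so each weight is n_k * P(a_k|A).
    """
    weights = [n * q for n, q in zip(counts, entropic_probs)]
    total = sum(weights)
    return [w / total for w in weights]


# Coin flip {h, t}: entropic probabilities 1/2 each; target 1/3 and 2/3.
counts, D = context_record_counts([1 / 3, 2 / 3], [0.5, 0.5])
print(counts, D)  # [2, 4] 3
print(tuned_probabilities(counts, [0.5, 0.5]))
```

Here the coin states are tagged with information states of two and four records, respectively, which is one (not necessarily minimal) choice of context.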

We find, therefore, that the introduction of context states allows us to “tune” the entropic probability to approximate any distribution that we like. This is more than a trick. The distribution represents additional implicit information (beyond the mere list of possible states), and such additional information must have a physical representation. The context states are that representation.

8. Free Energy

The tools we have developed can lead to some interesting places. Suppose we have two sets of states, $A = \{a_i\}$ and $B = \{b_j\}$, endowed with a priori probability distributions $p_i$ and $q_j$, respectively. We wish to know when the states in A can be turned into the states in B, both perhaps augmented by reservoir states. That is, we wish to know when $\tilde{A} \to \tilde{B}$.

We suppose we have a mechanical state $\mu$, leading to a ladder of mechanical reservoir states $n\mu$. The mechanical state is non-trivial in the sense that $s + \mu \not\to s$ for any s. This means that there is a component of content Q such that $Q(\mu) \neq 0$. The set $A \cup B$ can be uniformized by augmenting the $a_i$ and $b_j$ states by mechanical reservoir states.

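However, we still need to realize the $p_i$ and $q_j$ probabilities. We do this by introducing as context states a corresponding ladder of reservoir states $\theta_n$ such that the entropy increment $\delta$ is very small. Essentially, we assume that the reservoir states are “fine-grained” enough that we can approximate any positive number by $2^{n\delta}$ for some positive or negative integer n. Then, if we augment the $a_i$ and $b_j$ states by combinations of $n\mu$ and $\theta_n$ states, we can uniformize $A \cup B$ and also tune the entropic probabilities to match the a priori $p_i$ and $q_j$. The final overall uniform eidostate is

$$\{a_i + m_i\mu + \theta_{k_i}\} \cup \{b_j + m'_j\mu + \theta_{k'_j}\}$$

for integers $m_i$, $k_i$, $m'_j$, and $k'_j$. The uniformized $\tilde{A}$ and $\tilde{B}$ eidostates are subsets of this and thus are themselves uniform eidostates. The entropic probabilities have been adjusted so that

$$P(a_i + m_i\mu + \theta_{k_i} \,|\, \tilde{A}) = p_i, \qquad P(b_j + m'_j\mu + \theta_{k'_j} \,|\, \tilde{B}) = q_j.$$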

We now choose a component of content

Q such that

. Since the overall state is uniform, it must be true that

for all choices of

. Of course, if all of these values are the same, we can average them together and obtain

We can write the average change in the

Q-value of the mechanical state as

Since all of the states lie within the same uniform eidostate,

if and only if

—that is,

From this, it follows that

If we substitute this inequality into Equation (33), we obtain

We can obtain insight into this expression as follows. Given the process ,

is the average increase in Q-value of the mechanical state, which we can call . Intuitively, this might be regarded as the “work” stored in the process.

We can denote the change in the Shannon entropy of the probabilities by $\Delta H = H(q) - H(p)$. Since each $a_i$ or $b_j$ state could be augmented by a corresponding record state, this is the change in the information entropy of the stored record.

For each state a, we can define the free energy $F(a) = Q(a) - \frac{Q(\mu)}{\delta}\,\mathbb{S}(a)$. We call this free “energy”, even though Q does not necessarily represent energy, because of the analogy with the familiar expression $F = E - TS$ for the Helmholtz free energy in conventional thermodynamics. The average change in the free energy F is

$$\Delta\langle F \rangle = \sum_j q_j F(b_j) - \sum_i p_i F(a_i).$$

The free energy F depends on the particular reservoir states only via the ratio $Q(\mu)/\delta$. Given this value, $\Delta\langle F \rangle$ depends only on the $a_i$ and $b_j$ states, together with their a priori probabilities.

To return to our coin-and-box example, suppose we use the basic box states $b_n$ as reservoir states $\theta_n$, and we choose the coin number Q as our component of content. Then, $Q(\mu) = 1$ and $\delta = 1$, so that the free energy function is $F(a) = Q(a) - \mathbb{S}(a)$. (If we use different box states as reservoir states, the ratio $Q(\mu)/\delta$ is different.)

With these definitions, Equation (36) becomes

Many useful inferences can be drawn from this. For example, the erasure Q-cost of one bit of information in the presence of the $\theta$-reservoir is $Q(\mu)/\delta$. This cost can be paid from the mechanical Q-reservoir state, from the average free energy, or from a combination of these. This amounts to a very general version of Landauer’s principle [12]: one that involves any type of mechanical component of content.
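A small numerical illustration of the free energy and the erasure cost follows. It is a sketch under our reading of the text: the reservoir enters only through the ratio $T = Q(\mu)/\delta$, the free energy is $F(a) = Q(a) - T\,\mathbb{S}(a)$, and erasing one bit costs T units of Q. For the basic-box reservoir ($Q(\mu) = 1$, $\delta = 1$) this gives one coin per bit, matching the coin-operated deletion described for the coin-and-box model. The general box reservoir uses $Q(\mu) = Q(K)$ and $\delta = \log k$; the particular set K below is a hypothetical example.

```python
import math


def reservoir_ratio(q_mu, delta):
    """T = Q(mu) / delta: the only way the reservoir enters the free energy."""
    return q_mu / delta


def free_energy(q_value, entropy, q_mu, delta):
    """F(a) = Q(a) - (Q(mu) / delta) * S(a)."""
    return q_value - reservoir_ratio(q_mu, delta) * entropy


def erasure_cost(bits, q_mu, delta):
    """Q-cost of erasing `bits` bits in the presence of the theta-reservoir."""
    return bits * reservoir_ratio(q_mu, delta)


# Basic-box reservoir: Q(mu) = 1 coin and delta = 1, so F(a) = Q(a) - S(a).
print(erasure_cost(1, q_mu=1, delta=1))      # 1.0 coin per bit
print(free_energy(1, 1.0, q_mu=1, delta=1))  # 0.0 for b_1 (one coin, one bit)

# General box reservoir B_n(K): Q(mu) = Q(K) and delta = log2 k.
# Hypothetical K = {h+h, h+t, t+h}: two coins per element, k = 3.
print(erasure_cost(1, q_mu=2, delta=math.log2(3)))  # about 1.26 coins per bit
```

Different box reservoirs thus charge different amounts of Q per erased bit, which is the sense in which the ratio $Q(\mu)/\delta$ plays the role of a temperature.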