1. Introduction

Image steganography is a critical technology in modern digital communication, particularly for ensuring secure data transmission [1,2,3,4]. It allows secret information to be embedded within cover images in a way that is imperceptible to the human eye, enabling covert communication and securing transmission channels in scenarios where traditional encryption alone may be less effective or more vulnerable. In addition, image steganography underpins many digital watermarking applications, providing practical tools for copyright protection and content authentication [5]. However, conventional schemes, such as Least Significant Bit (LSB) embedding and frequency-domain transformations, are often susceptible to detection and lack the robustness needed to withstand advanced signal-processing attacks [4,6]. This gap between practical requirements and algorithmic resilience has motivated a growing demand for more sophisticated and robust steganographic techniques to address evolving security challenges [7,8].

The emergence of deep learning has substantially advanced image steganography, enabling more adaptive and powerful hiding strategies [1,5,9]. Generative Adversarial Networks (GANs) and autoencoders, in particular, have made it possible to directly learn joint encoding–decoding functions from large image collections [10,11,12,13]. GAN-based methods exploit the competition between a generator and a discriminator to improve the visual realism of stego images and reduce detectability, while autoencoder-based approaches learn compact latent representations that support efficient embedding and reliable recovery [9,14,15]. These deep learning-based techniques alleviate several limitations of traditional methods, including limited embedding capacity and poor robustness to common image manipulations or compression, thereby broadening the application scope of secure, hidden communication [11,16].

Despite these advances, a key practical challenge remains in what may be termed mechanistic overfitting. Many contemporary systems still rely on a single, task-agnostic encoder–decoder pipeline that implicitly assumes that both the secret payloads and the transmission channel follow a relatively narrow distribution. In practice, the shared feature extractor tends to specialize to the statistics seen during training, so performance can deteriorate once the payload or channel conditions deviate from this regime. At the same time, many pipelines adopt a restricted view of the transmission channel: they are optimized under weak perturbations (often only mild Gaussian noise) or a single distortion model [17,18]; therefore, they degrade sharply under more realistic perturbation families, including JPEG quantization, impulse noise, complex sensor noise, blur, geometric warps, weather-like artifacts, and random erasing. Together, a structurally overfitted representation (tied to a single encoder–decoder path) and a channel-overfitted training regime (tied to a narrow perturbation model) help explain why current state-of-the-art models often struggle to retain both secrecy and perceptual fidelity once deployed in real acquisition, compression, and transmission chains [15,19,20].

In practical steganographic scenarios, secret images are highly heterogeneous in both semantics and statistics (scenery, faces, pets, logos, etc.), with very different texture, frequency, edge, and color patterns. Processing all such secrets with a single encoder–decoder leads to latent representations that favor dominant categories and generalize poorly under distribution shifts, i.e., mechanistic overfitting. To mitigate this, we introduce a secret pre-processing stage that standardizes each secret (including resizing to a fixed resolution) and extracts discriminative cues to assign a coarse semantic label (e.g., “scenery”, “face”, “pet”). This label is extensible as new categories are added and is used as an explicit routing signal to select a specialized expert path for hiding and recovery; in principle, any original secret size can be embedded via this standardized input, although aggressive downsampling of very large or highly detailed secrets inevitably limits the maximum reconstruction fidelity.

On the reconstruction side, the architecture is designed to separate secret-related information from cover content in the latent space: the cover is encoded into a cover feature, the resized secret into a secret feature, and the two are fused into a shared stego representation that is decoded along the single expert path selected by the semantic label. A dedicated secret decoder branch operates on this stego feature, and joint losses on cover fidelity, stego fidelity, and secret reconstruction encourage disentanglement between cover and secret information. The routing label is produced by a lightweight dual-branch selector that combines evidence from the fused stego feature and the (potentially noisy) stego image. Covers and secrets are drawn from disjoint pools, while the same heterogeneous secret pool is shared between the pre-processing classifier and the hiding/recovery modules, and the cover encoder is independent of the secret label, whereas the secret branch does not depend on the cover type. As a result, the method is intended to remain robust whether the cover and secret belong to the same semantic category or not, a behavior confirmed empirically on diverse datasets.

Extensive experiments on benchmark datasets, including ImageNet, MNIST, MetFACE, and Stock1K, were conducted to evaluate the performance of the proposed model. The evaluation considers key metrics such as Secret Recovery Accuracy (SRA), Stego-Image Quality (SSIM, PSNR), and resilience to image distortions. We analyze the controllable trade-off between cover imperceptibility and secret fidelity across different effective compression ratios (including the impact of resizing and payload size), robustness under various noise and corruption conditions, and comparisons with existing deep learning-based steganographic methods. When compared with models such as HiNet, InvMIHNet, and a baseline CNN autoencoder, the proposed framework achieves consistently competitive or improved PSNR, SSIM, and SRA, along with substantially reduced detection rates in steganalysis tests, with AUC values close to 0.5 in the evaluated setting. These results suggest that the framework provides both theoretical and empirical support for the design of robust image steganography systems for secure image transmission, digital watermarking, and privacy-preserving communication under a wide range of real-world distortions and data complexities.

The rest of this paper is organized as follows: Section 2 provides the preliminaries, including the key notations and performance metrics. Section 3 describes the proposed steganographic framework in detail, including the secret pre-processing, multi-path architecture, and training strategy. Section 4 presents the experimental results and compares the proposed approach with existing methods through ablation and comparative experiments. Finally, Section 5 concludes the paper and discusses potential directions for future research.

2. Preliminaries

This section introduces the essential mathematical concepts, key notations, and performance metrics used in our steganographic framework. We define the information capacity, along with performance metrics such as Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), Message Recovery Accuracy (ACC), and Area Under the Curve (AUC) that are integral to evaluating the quality of both the stego images and the recovered secret data.

The PSNR is a standard metric for evaluating the quality of the stego image by comparing it to the original cover image. It is defined as follows:

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right),$$

where $\mathrm{MAX}$ is the maximum possible pixel value (usually 255 for 8-bit images), and $\mathrm{MSE}$ is the Mean Squared Error between the original image $X$ and the stego image $\hat{X}$:

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(X_i - \hat{X}_i\right)^2,$$

where $N$ is the number of pixels, and $X_i$ and $\hat{X}_i$ are the pixel values of the cover and stego images, respectively. A higher PSNR indicates better imperceptibility, as the stego image is visually closer to the original cover image.
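The two definitions above can be checked numerically with a minimal NumPy sketch. The function names `mse` and `psnr` and the tiny 4×4 test images are illustrative assumptions, not part of the paper's code.

```python
import numpy as np

def mse(cover: np.ndarray, stego: np.ndarray) -> float:
    """Mean squared error over all pixels (and channels, if present)."""
    diff = cover.astype(np.float64) - stego.astype(np.float64)
    return float(np.mean(diff ** 2))

def psnr(cover: np.ndarray, stego: np.ndarray, max_val: float = 255.0) -> float:
    """PSNR in dB; returns +inf when the two images are identical."""
    err = mse(cover, stego)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / err))

# Toy 8-bit example (illustrative data): one of 16 pixels differs by 4,
# so MSE = 4**2 / 16 = 1 and PSNR = 20 * log10(255), about 48.13 dB.
cover = np.full((4, 4), 100, dtype=np.uint8)
stego = cover.copy()
stego[0, 0] = 104
print(mse(cover, stego), psnr(cover, stego))
```

Casting to `float64` before subtracting avoids the wrap-around that unsigned 8-bit arithmetic would otherwise introduce.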

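The SSIM is another important metric for measuring the perceptual similarity between the cover and stego images. It is defined as follows:

$$\mathrm{SSIM}(X, \hat{X}) = \frac{\left(2\mu_X \mu_{\hat{X}} + C_1\right)\left(2\sigma_{X\hat{X}} + C_2\right)}{\left(\mu_X^2 + \mu_{\hat{X}}^2 + C_1\right)\left(\sigma_X^2 + \sigma_{\hat{X}}^2 + C_2\right)},$$

where $\mu_X$ and $\mu_{\hat{X}}$ are the mean values of the cover and stego images, respectively; $\sigma_X^2$ and $\sigma_{\hat{X}}^2$ are the variances of the cover and stego images, respectively; $\sigma_{X\hat{X}}$ is the covariance between the cover and stego images; and $C_1$ and $C_2$ are constants to stabilize the division.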

SSIM evaluates the structural similarity between the cover and stego images. Higher SSIM values suggest that the stego image maintains more structural integrity, leading to higher imperceptibility.
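As a rough illustration, the SSIM expression can be evaluated once over whole-image statistics. This is a simplified sketch: practical SSIM implementations average the index over local windows, and the constants used here, $C_1 = (0.01 \cdot 255)^2$ and $C_2 = (0.03 \cdot 255)^2$, are the conventional defaults, assumed rather than taken from the paper. The name `global_ssim` is hypothetical.

```python
import numpy as np

def global_ssim(x: np.ndarray, y: np.ndarray, max_val: float = 255.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM computed from global means, variances, and covariance (no windows)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (k1 * max_val) ** 2  # stabilizes the luminance term
    c2 = (k2 * max_val) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

# Illustrative data: a random "cover" and a noisy copy standing in for the stego.
rng = np.random.default_rng(0)
cover = rng.integers(0, 256, size=(32, 32)).astype(np.float64)
stego = np.clip(cover + rng.normal(0.0, 5.0, size=cover.shape), 0, 255)
print(global_ssim(cover, cover), global_ssim(cover, stego))
```

An identical pair scores 1, and any perturbation lowers the value.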

The ACC quantifies the precision with which the secret message is recovered from the stego image. It is defined as the percentage of correctly recovered bits from the total number of embedded bits:

$$\mathrm{ACC} = \frac{1}{L}\sum_{i=1}^{L} \mathbb{1}\left(b_i = \hat{b}_i\right) \times 100\%,$$

where $L$ is the length of the secret message, $b_i$ and $\hat{b}_i$ are the original and recovered secret bits, respectively, and $\mathbb{1}(\cdot)$ is the indicator function, which is equal to 1 if the condition is true and 0 otherwise.

The ACC measures the ability of the system to recover the secret data accurately. Higher ACC values indicate better recovery accuracy, meaning the system is more reliable in retrieving the secret message.
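A minimal sketch of this bit-level accuracy, expressed as a percentage, is given below. The helper name `bit_accuracy` and the 8-bit example message are illustrative assumptions.

```python
import numpy as np

def bit_accuracy(sent_bits, recovered_bits) -> float:
    """Percentage of positions where the recovered bit equals the sent bit."""
    sent = np.asarray(sent_bits)
    recovered = np.asarray(recovered_bits)
    if sent.shape != recovered.shape:
        raise ValueError("bit sequences must have the same length")
    return 100.0 * float(np.mean(sent == recovered))

# Illustrative message: 2 of 8 bits are flipped during recovery.
sent = [1, 0, 1, 1, 0, 0, 1, 0]
recovered = [1, 0, 0, 1, 0, 0, 1, 1]
print(bit_accuracy(sent, recovered))  # 75.0
```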

The AUC is a standard metric used to evaluate the effectiveness of a steganographic system against steganalysis. It is particularly useful for assessing the detectability of the stego image by a classifier or detector. AUC measures the ability of a detection system to distinguish between cover and stego images. It is computed from the Receiver Operating Characteristic (ROC) curve, which plots the True Positive Rate (TPR), representing the fraction of correctly detected stego images, against the False Positive Rate (FPR), representing the fraction of cover images incorrectly identified as stego images.

The AUC is defined as the area under the ROC curve:

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR}(x)\, dx,$$

where $x$ represents the False Positive Rate (FPR), and $\mathrm{TPR}(x)$ is the corresponding True Positive Rate (TPR) as a function of the FPR.

Unless otherwise noted, AUC is measured for the steganalysis detector. Therefore, AUC ≈ 0.5 is desirable for the hider (good resistance), while AUC → 1.0 signifies that the detector is highly effective (poor resistance).
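For intuition, the area under the ROC curve equals the probability that a randomly chosen stego image receives a higher detector score than a randomly chosen cover image, with ties counted as one half (the Mann–Whitney equivalence). The sketch below uses that equivalence instead of integrating the ROC curve explicitly. The function name `auc_from_scores` and the score values are made up for illustration.

```python
import numpy as np

def auc_from_scores(cover_scores, stego_scores) -> float:
    """AUC of a detector whose higher scores mean 'more likely stego'."""
    c = np.asarray(cover_scores, dtype=np.float64)[None, :]
    s = np.asarray(stego_scores, dtype=np.float64)[:, None]
    # Compare every stego score with every cover score; ties count as 0.5.
    return float(np.mean((s > c) + 0.5 * (s == c)))

# A detector that cannot tell the classes apart gives 0.5 (desirable for the
# hider); a detector that separates them perfectly gives 1.0.
print(auc_from_scores([0.5, 0.5], [0.5, 0.5]))  # 0.5
print(auc_from_scores([0.1, 0.2], [0.8, 0.9]))  # 1.0
```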

3. Proposed Steganographic Framework

3.1. Overview

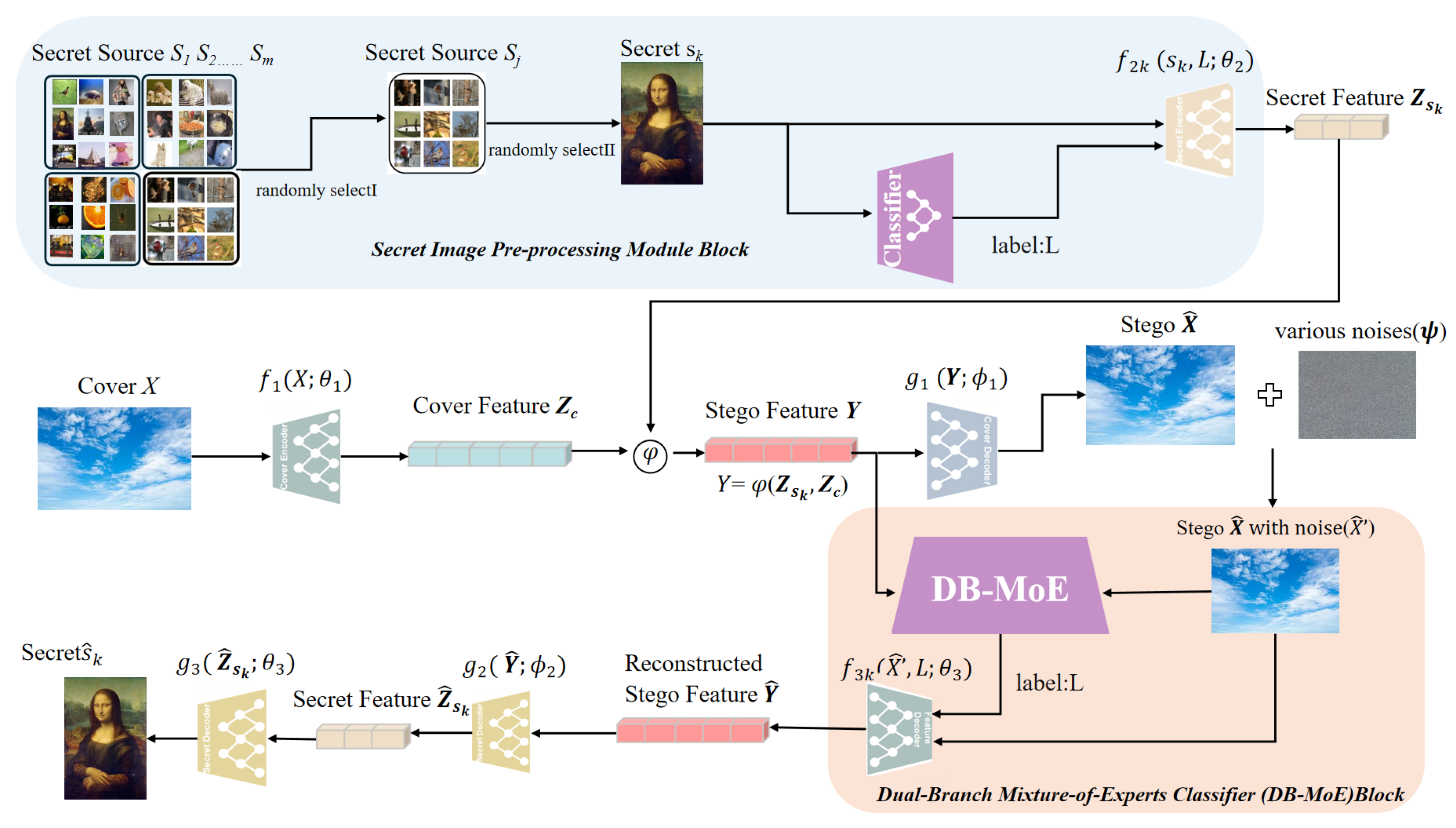

As depicted in Figure 1, the proposed framework begins by pre-processing the secret image: salient features such as texture, structure, and color distribution are extracted, and a semantic label is assigned to categorize the image (e.g., scenery, facial, or pet images). Second, the secret data is embedded into the cover image through a multi-encoder–decoder architecture that employs specialized encoder–decoder pairs tailored to the characteristics of each secret data category. The encoder transforms the cover image into a latent space, where it is combined with the secret data and passed through the decoder, producing the stego image while minimizing perceptual distortion. Third, to simulate real-world distortions, noise is intentionally added to the stego image, which enhances the robustness of the framework against various attacks. The noisy stego image is then processed by a dual-parallel classifier: one classifier evaluates the high-level feature representation of the image, while the other operates directly on the noisy stego image itself. The outputs of both classifiers are integrated and passed to the decoder, which refines the secret recovery process. Finally, the decoder reconstructs the original secret data with high fidelity, even in the presence of noise. This workflow supports efficient data embedding and recovery, maintaining imperceptibility while remaining resilient to a wide range of distortions.

In the following subsections, we focus on three pivotal innovations of our model: the secret image pre-processing module, the dual-branch mixture-of-experts (DB-MoE) classifier, and the multi-encoder–decoder architecture with static and dynamic mechanisms. These components are integral to the framework’s ability to achieve high-quality, imperceptible steganography and robust secret recovery.

3.2. Secret Image Pre-Processing Module

At the outset, the secret image undergoes a sequence of pre-processing steps (as illustrated by the blue-highlighted block in Figure 1) to enhance the system’s robustness and adaptability. First, a secret image is randomly selected from heterogeneous sources (natural scenes, facial photographs, or pet pictures) and resized to a fixed spatial resolution compatible with the network, so that all subsequent modules operate on a standardized secret tensor regardless of the original image size. Next, a lightweight classifier extracts discriminative features (structure, texture, color distribution, edge density, and spatial-frequency content) and, based on these cues, assigns a semantic label from the predefined classes: Category 0: Scenery (e.g., landscapes, architecture, sky), Category 1: Facial Appearance (e.g., portraits, face photographs), and Category 2: Pet (e.g., animal photographs). These classes are characterized by distinct visual attributes: scenery images typically exhibit abundant texture and high spatial frequencies; facial images show pronounced anatomical features and relatively smooth skin; and pet images present distinctive shapes, colors, and fur textures. Importantly, the scheme is extensible and can be augmented with additional categories (e.g., object, indoor scene), with the classifier readily adaptable to such additions.

In simple terms, this pre-processing module—beginning the overall pipeline—forms a coherent sequence of selection → classification → category-specific encoding, ensuring proper categorization and standardization while strengthening the framework’s robustness and generalizability.

Formally, before starting the embedding process, we first consider the set of $m$ candidate secret sources. Let each secret source be denoted as $\mathcal{S}_i$ for $i = 1, \dots, m$. Each secret source $\mathcal{S}_i$ contains a set of secret images, which we denote as $\{s_{i,1}, s_{i,2}, \dots, s_{i,n_i}\}$, where $n_i$ is the number of secret images in source $\mathcal{S}_i$. We first randomly select one secret source $\mathcal{S}_i$ from the set of $m$ sources. Then, from the selected source $\mathcal{S}_i$, a secret image is randomly chosen. We denote this image by $s_{i,k}$, where $k$ is the index of the selected secret image.

The selected secret image is then resized to a fixed spatial resolution by a deterministic operator $\mathcal{R}$:

$$\tilde{s}_{i,k} = \mathcal{R}(s_{i,k}), \tag{7}$$

where $\tilde{s}_{i,k}$ has the canonical height and width required by the network.

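Next, a lightweight classifier $C_{\text{pre}}$ assigns the resized secret image $\tilde{s}_{i,k}$ to one of $K$ semantic classes:

$$\ell_{i,k} = C_{\text{pre}}(\tilde{s}_{i,k}) \in \{0, 1, \dots, K-1\}, \tag{8}$$

where $\ell_{i,k}$ is the class label of $\tilde{s}_{i,k}$, determined by characteristic structure, texture, color distribution, edge density, and spatial-frequency content.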
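The selection, resizing, and labeling steps can be sketched as follows. This is only a schematic under stated assumptions: `resize_nearest` uses nearest-neighbour sampling as one possible deterministic resize operator, and `toy_classifier` is a hypothetical stand-in for the lightweight CNN that simply buckets mean edge strength with arbitrary thresholds. The 64×64 target resolution and the random images are likewise placeholders.

```python
import numpy as np

def sample_secret(sources, rng):
    """Pick a source uniformly at random, then an image within that source."""
    i = int(rng.integers(len(sources)))
    k = int(rng.integers(len(sources[i])))
    return i, k, sources[i][k]

def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Deterministic nearest-neighbour resize of an (H, W, C) array."""
    h, w = img.shape[:2]
    rows = (np.arange(out_h) * h) // out_h
    cols = (np.arange(out_w) * w) // out_w
    return img[rows[:, None], cols[None, :]]

def toy_classifier(img: np.ndarray, num_classes: int = 3) -> int:
    """Hypothetical classifier: buckets the mean edge strength (not a CNN)."""
    gray = img.astype(np.float64).mean(axis=2)
    edge = (np.abs(np.diff(gray, axis=0)).mean()
            + np.abs(np.diff(gray, axis=1)).mean())
    return int(min(num_classes - 1, edge // 20))  # arbitrary threshold of 20

rng = np.random.default_rng(0)
# Three placeholder sources, each holding two random images of different sizes.
sources = [[rng.integers(0, 256, size=(h, w, 3), dtype=np.uint8)
            for h, w in [(40, 60), (128, 96)]] for _ in range(3)]

i, k, secret = sample_secret(sources, rng)
resized = resize_nearest(secret, 64, 64)
label = toy_classifier(resized)
print(i, k, secret.shape, resized.shape, label)
```

Whatever the original size, the downstream modules only ever see the standardized 64×64 tensor and its label.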

After classification, one secret image is selected for embedding based on the downstream policy (e.g., a required class or sampling rule). Let $\tilde{s}$ denote its resized version as in (7); by abuse of notation, we define the standardized secret input used for hiding as

$$S := \tilde{s}.$$

As part of the secret image pre-processing module, the selected secret image $S$ and its corresponding label $L$ are fed into a dedicated encoder. This encoder, denoted as $E_s$, processes both the secret image and its label to generate a feature representation $F_s$. The function $E_s$ is parameterized by $\theta_s$, which represents the set of model parameters learned during training. By incorporating the label $L$ (typically taken as the class label $\ell$ predicted for $S$), the encoder can produce a more accurate and context-aware feature representation. The output feature $F_s$ is then used in the subsequent stages of the framework for embedding into the cover image. This process ensures that the model efficiently handles secret data by transforming it into a compact and discriminative feature representation, while preserving the essential information for embedding:

$$F_s = E_s(S, L; \theta_s).$$

Although the mapping $C_{\text{pre}}$ in Equation (8) is implemented as a lightweight CNN classifier, its role in our framework goes beyond standard image categorization. In a conventional setting, classification is typically used only to assign a semantic tag to an image. In contrast, in our design, the predicted label $\ell$ serves as a task-aware control signal that (i) routes the secret image to a dedicated encoder–decoder expert tuned to its statistics, (ii) partitions the secret space according to low- and mid-level cues (texture, frequency content, edge density) that directly affect embedding capacity and robustness, and (iii) standardizes the secret representation before fusion with the cover feature. Because the same heterogeneous secret-image pool is used both to train the pre-processing classifier and to train the embedding and reconstruction modules, the decision boundaries of $C_{\text{pre}}$ are aligned with the regimes where different experts are most effective, rather than being optimized for classification accuracy alone. As a result, the pre-processing module does not merely recognize image content but actively shapes how model capacity is allocated across secret types and how robust the subsequent steganographic pipeline is to distribution shifts in the secret payloads.

In practice, Section 4.3 shows that this label-driven routing, together with the corresponding expert branches, yields consistently higher PSNR/SSIM and bit accuracy (and lower LPIPS) than a single-path variant without secret pre-processing or semantic labels. This comparison indicates that the classification-and-routing stage contributes materially to both hiding and reconstruction quality. Section 4.2 further demonstrates that the performance of the full model remains stable when the label space is expanded from three to five classes, supporting the extensibility of the proposed design.

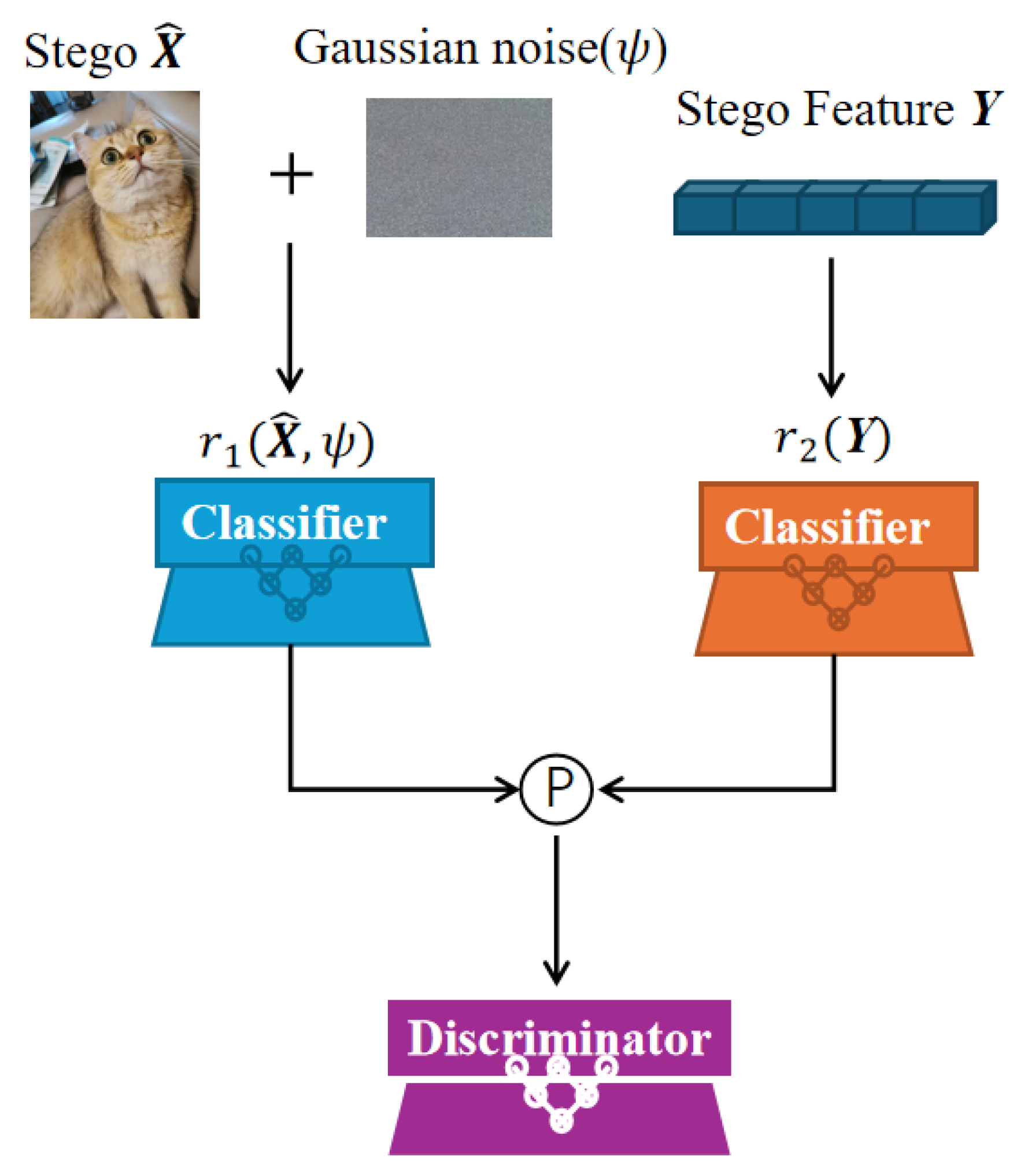

3.3. Dual-Branch Mixture-of-Experts Classifier (DB-MoE)

This module corresponds to the skin-toned block in Figure 1. Specifically, our DB-MoE model comprises a pair of parallel classifiers (a main classifier and an auxiliary classifier) and a discriminator, as shown in Figure 2. It implements a parallel, dual-branch classifier that operates within the cover–stego pipeline. The module takes two inputs: (i) the fused stego feature $F_{st}$, obtained by feature fusion of the secret and cover; and (ii) a noise-processed stego image $\tilde{X}$ produced by the noise module. The main branch consumes the high-level fused representation $F_{st}$, while the auxiliary branch acts directly on the noise-processed stego $\tilde{X}$. Each branch produces a score vector, and these scores are combined at the decision level to yield a single predicted label $L$. Finally, the predicted label $L$ is forwarded, together with the stego-with-noise $\tilde{X}$, to the downstream decoder, enabling conditioned decoding while preserving parallel, disentangled feature extraction and improving robustness.

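Let $F_{st}$ denote the stego feature and $\tilde{X}$ the (noise-processed) stego image. We employ a main classifier $C_m$ acting on $F_{st}$ and an auxiliary classifier $C_a$ acting on $\tilde{X}$, with parameters $\theta_m$ and $\theta_a$. Both produce class logits in $\mathbb{R}^K$, and we denote their outputs by

$$z_m = C_m(F_{st}; \theta_m), \qquad z_a = C_a(\tilde{X}; \theta_a).$$

Next, we fuse these logits by a weighted sum, where $w_m$ and $w_a$ are the fusion weights. These weights satisfy $w_m + w_a = 1$, ensuring that the logits are combined in a balanced manner:

$$z = w_m z_m + w_a z_a. \tag{12}$$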

In our implementation, the fusion ratio is fixed, which corresponds to constant coefficients $w_m$ and $w_a$ in Equation (12). These coefficients are not learned but, rather, chosen once as hyper-parameters and kept constant throughout training and evaluation.

The fused logits $z$ are then passed through the softmax function to convert them into probabilities:

$$p = \mathrm{softmax}(z), \qquad p_k = \frac{\exp(z_k)}{\sum_{j} \exp(z_j)},$$

where $p$ is the probability distribution across the classes, and $p_k$ represents the probability of class $k$.

To obtain the final predicted label $L$, we take the class with the highest probability:

$$L = \arg\max_{k} \, p_k,$$

where $L$ is the predicted label (the class with the highest probability).
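The decision-level fusion described above can be sketched in a few lines. The weights 0.6 and 0.4 and the logit values are hypothetical placeholders (the fixed ratio used in the paper is not reproduced here), and the sketch assumes the two weights sum to one.

```python
import numpy as np

def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D logit vector."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def fuse_and_predict(z_main, z_aux, w_main: float = 0.6, w_aux: float = 0.4):
    """Weighted sum of branch logits, then softmax and argmax."""
    if abs(w_main + w_aux - 1.0) > 1e-9:
        raise ValueError("fusion weights are assumed to sum to one")
    z = w_main * np.asarray(z_main, dtype=np.float64) \
        + w_aux * np.asarray(z_aux, dtype=np.float64)
    probs = softmax(z)
    return int(np.argmax(probs)), probs

# The main branch favours class 0, the auxiliary branch strongly favours
# class 1; with these placeholder weights the fused decision is class 1.
z_main = np.array([2.0, 0.5, -1.0])
z_aux = np.array([0.0, 3.0, -1.0])
label, probs = fuse_and_predict(z_main, z_aux)
print(label, probs)
```

Because softmax is monotone, taking the argmax of the fused logits would give the same label; the probabilities are only needed for the cross-entropy loss.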

After that, we introduce the noise-processed stego image $\tilde{X}$, which is obtained by adding Gaussian noise $n$ to the stego image $\hat{X}$:

$$\tilde{X} = \hat{X} + n, \qquad n \sim \mathcal{N}(0, \sigma^2 I),$$

where $\sigma^2$ represents the noise variance, and $n$ is the Gaussian noise added to $\hat{X}$. Then, the predicted label $L$ is used directly to condition the subsequent stego feature reconstruction. Since no one-hot encoding is used, the label $L$ is passed as is to the decoder:

$$\hat{F}_{st} = D_{\text{rec}}(\tilde{X}, L),$$

where $D_{\text{rec}}$ is the decoder that reconstructs the stego feature $\hat{F}_{st}$ conditioned on the noisy stego image $\tilde{X}$ and the label $L$.
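A minimal sketch of the additive Gaussian noise layer is shown below. It assumes images scaled to [0, 1]; the final clipping step and the value `sigma=0.05` are assumptions for illustration and are not stated in the text.

```python
import numpy as np

def add_gaussian_noise(stego: np.ndarray, sigma: float,
                       rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian noise with standard deviation sigma."""
    noise = rng.normal(0.0, sigma, size=stego.shape)
    # Clipping keeps the result a valid image (an assumption of this sketch).
    return np.clip(stego + noise, 0.0, 1.0)

rng = np.random.default_rng(0)
stego = np.full((100, 100), 0.5)           # placeholder mid-gray stego image
noisy = add_gaussian_noise(stego, 0.05, rng)
print(noisy.shape, float(noisy.std()))     # empirical std is close to 0.05
```

Training the selector and decoder on `noisy` rather than `stego` is what exposes them to channel perturbations.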

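Finally, we can define the training objectives for this stage. The classifier loss $\mathcal{L}_{\text{cls}}$ is based on cross-entropy between the true label $y$ and the predicted probabilities $p$, and the feature consistency loss $\mathcal{L}_{\text{feat}}$ ensures that the reconstructed stego feature $\hat{F}_{st}$ matches the original stego feature $F_{st}$:

$$\mathcal{L}_{\text{cls}} = -\sum_{k} \mathbb{1}(y = k)\,\log p_k, \qquad \mathcal{L}_{\text{feat}} = \left\|\hat{F}_{st} - F_{st}\right\|_2^2,$$

where $\mathcal{L}_{\text{cls}}$ minimizes the classification error, and $\mathcal{L}_{\text{feat}}$ minimizes the reconstruction error for the stego features.

The total loss for this module is the weighted sum of the classifier loss and feature consistency loss:

$$\mathcal{L}_{\text{DB-MoE}} = \lambda_{\text{cls}}\,\mathcal{L}_{\text{cls}} + \lambda_{\text{feat}}\,\mathcal{L}_{\text{feat}},$$

where $\lambda_{\text{cls}}$ and $\lambda_{\text{feat}}$ are the loss weights for classification and feature consistency, respectively.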
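The module objective can be illustrated numerically as below. The sketch assumes a mean squared error for the feature consistency term (the exact norm is an assumption) and uses arbitrary loss weights; all function names are hypothetical.

```python
import numpy as np

def cross_entropy(probs: np.ndarray, true_label: int, eps: float = 1e-12) -> float:
    """Negative log-probability assigned to the true class."""
    return float(-np.log(probs[true_label] + eps))

def feature_consistency(f_rec: np.ndarray, f_orig: np.ndarray) -> float:
    """Mean squared error between reconstructed and original stego features."""
    return float(np.mean((f_rec - f_orig) ** 2))

def module_loss(probs, true_label, f_rec, f_orig,
                lam_cls: float = 1.0, lam_feat: float = 1.0) -> float:
    """Weighted sum of the classification and feature consistency losses."""
    return (lam_cls * cross_entropy(probs, true_label)
            + lam_feat * feature_consistency(f_rec, f_orig))

# Illustrative values: the true class gets probability 0.5 (CE = ln 2) and
# every feature entry is off by 0.1 (MSE = 0.01).
probs = np.array([0.25, 0.5, 0.25])
f_orig = np.zeros(8)
f_rec = f_orig + 0.1
total = module_loss(probs, 1, f_rec, f_orig, lam_cls=1.0, lam_feat=10.0)
print(total)  # ln(2) + 10 * 0.01
```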

This dual-parallel classifier processes the fused stego feature and the noise-processed stego image concurrently, thereby enhancing robustness and classification accuracy. The main branch operates on the fused, high-level representation derived from the cover–secret pair, while the auxiliary branch classifies directly from stego-specific cues. A weighted fusion of their outputs yields a balanced consensus, integrating complementary evidence from both branches.

3.4. Multi-Encoder–Decoder Embedding Module

In this subsection, we will introduce the multi-encoder–decoder process, where the cover image and the secret image are encoded and fused to produce the stego feature, which is then decoded to reconstruct the secret data. Then, we will delve into the training process, where the objective is to minimize the difference between the original and reconstructed stego features while also optimizing the extraction of secret features and their reconstruction. This involves the application of several loss functions and a dynamic loss adjustment strategy, which will be discussed in detail.

3.4.1. Mathematical Formulation of the Multi-Encoder–Decoder Pairs

After the secret pre-processing block, we take as inputs the category label $L$ and the secret feature $F_s$ extracted from the selected secret image $S$. As shown in the white region of Figure 1, the first step is to pass the cover image $X$ through the cover encoder $E_c$, which generates a latent cover feature $F_c$. The secret feature $F_s$ is then fused with $F_c$ through a fusion operator $\Phi$, producing the stego feature $F_{st}$. The cover decoder $D_c$ is used to reconstruct the stego image $\hat{X}$ from $F_{st}$.

To introduce noise, a noise layer is applied to the reconstructed stego image $\hat{X}$, generating the noise-processed stego $\tilde{X}$. This pair is then passed to the downstream Dual-Branch Mixture-of-Experts (DB-MoE) classifier (skin-toned block). For the purpose of this subsection, we focus on the output: after the DB-MoE module, we obtain a reconstructed stego feature $\hat{F}_{st}$, which will be used by the secret-decoding stage (outside the scope of this subsection).

The multi-encoder–decoder part can be formulated as follows: First, the cover image $X$ is encoded using the cover encoder $E_c$ to produce the cover feature $F_c$:

$$F_c = E_c(X),$$

where $E_c$ is a deep neural network encoder that maps the cover image to a feature space $\mathcal{F}$.

Next, we fuse the secret feature $F_s$ and the cover feature $F_c$ using the fusion operator $\Phi$ to produce the stego feature $F_{st}$:

$$F_{st} = \Phi(F_c, F_s).$$

This fusion operation, which could be concatenation, gated addition, or attention-based fusion, combines the cover and secret features to form a feature that carries the embedded secret data. After that, the cover decoder $D_c$ is applied to the fused stego feature $F_{st}$ to reconstruct the stego image $\hat{X}$:

$$\hat{X} = D_c(F_{st}),$$

where $D_c$ decodes the stego feature back into the image space, generating a visually plausible stego image.

Then, we focus on the process of extracting the secret feature from the stego feature and subsequently decoding it to recover the secret image. The process begins with the extraction of the secret feature from the reconstructed stego feature $\hat{F}_{st}$, which is performed through the secret decoder. This feature is then used in the decoding step to recover the original secret image $S$.

The stego feature $\hat{F}_{st}$ is passed through the secret decoder $D_s^{\text{feat}}$, which extracts the secret feature $\hat{F}_s$:

$$\hat{F}_s = D_s^{\text{feat}}(\hat{F}_{st}),$$

where $D_s^{\text{feat}}$ represents the secret decoder function that extracts the secret feature $\hat{F}_s$ from the given stego feature $\hat{F}_{st}$. This step effectively isolates the secret information embedded within the stego image.

Next, the secret feature $\hat{F}_s$ is decoded by the secret decoder $D_s^{\text{img}}$ to reconstruct the original secret image $\hat{S}$:

$$\hat{S} = D_s^{\text{img}}(\hat{F}_s),$$

where $D_s^{\text{img}}$ is the decoder that reconstructs the original secret image $\hat{S}$ from the extracted secret feature $\hat{F}_s$.

Because the cover encoder $E_c$ only processes the cover image $X$ and never sees the raw secret, while the secret decoders $D_s^{\text{feat}}$ and $D_s^{\text{img}}$ operate solely on the fused stego feature and its routed label, the model is explicitly encouraged to factor cover- and secret-related information into separate latent channels.
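The data flow of this subsection (shared cover encoder, label-routed secret experts, fusion, and two-step secret decoding) can be sketched with untrained random linear maps. Everything in this block is a hypothetical stand-in: the dimensions, the use of concatenation as the fusion operator, and the `linear` helper are assumptions made only to show how the label selects one expert path. For brevity, the sketch also passes the stego feature straight to the secret decoders and skips the noise layer and DB-MoE reconstruction.

```python
import numpy as np

rng = np.random.default_rng(0)
IMG_DIM, FEAT_DIM, NUM_EXPERTS = 48, 16, 3  # placeholder sizes

def linear(in_dim: int, out_dim: int):
    """Untrained random linear layer standing in for a deep network."""
    w = rng.normal(0.0, 0.1, size=(out_dim, in_dim))
    return lambda v: w @ v

# One shared cover encoder/decoder; one secret expert per semantic label.
cover_encoder = linear(IMG_DIM, FEAT_DIM)
cover_decoder = linear(2 * FEAT_DIM, IMG_DIM)
secret_encoders = [linear(IMG_DIM, FEAT_DIM) for _ in range(NUM_EXPERTS)]
secret_feature_decoders = [linear(2 * FEAT_DIM, FEAT_DIM) for _ in range(NUM_EXPERTS)]
secret_image_decoders = [linear(FEAT_DIM, IMG_DIM) for _ in range(NUM_EXPERTS)]

def hide(cover: np.ndarray, secret: np.ndarray, label: int):
    """Encode the cover, route the secret by label, fuse, and decode a stego."""
    f_cover = cover_encoder(cover)                 # independent of the label
    f_secret = secret_encoders[label](secret)      # expert chosen by the label
    f_stego = np.concatenate([f_cover, f_secret])  # fusion by concatenation
    return f_stego, cover_decoder(f_stego)

def recover(f_stego_rec: np.ndarray, label: int) -> np.ndarray:
    """Extract the secret feature, then decode it along the routed expert."""
    f_secret_hat = secret_feature_decoders[label](f_stego_rec)
    return secret_image_decoders[label](f_secret_hat)

cover = rng.random(IMG_DIM)    # flattened placeholder cover image
secret = rng.random(IMG_DIM)   # flattened placeholder secret image
f_stego, stego = hide(cover, secret, label=1)
recovered = recover(f_stego, label=1)
print(f_stego.shape, stego.shape, recovered.shape)
```

Since the weights are random, the recovered vector is meaningless; the point is only that the cover path never depends on the label, while the secret path is selected entirely by it.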

3.4.2. Training Objective for Multi-Encoder–Decoder Feature Reconstruction

The training process now aims to minimize the difference between the reconstructed stego feature $\hat{F}_{st}$ and the original stego feature $F_{st}$. To achieve this, we use a feature consistency loss function, which is defined as follows:

$$\mathcal{L}_{\text{feat}} = \left\|\hat{F}_{st} - F_{st}\right\|_2^2,$$

which enters the overall objective with a weight $\lambda_{\text{feat}}$. This ensures that the reconstructed stego feature $\hat{F}_{st}$ remains close to the original feature $F_{st}$, preserving the embedded secret data.

The next step in the process is to decode the secret from the reconstructed stego feature $\hat{F}_{st}$. The training loss combines both the feature reconstruction loss and a classification loss $\mathcal{L}_{\text{cls}}$ (if applicable). The total loss for training is the weighted sum of these two losses:

$$\mathcal{L}_{\text{total}} = \lambda_{\text{cls}}\,\mathcal{L}_{\text{cls}} + \lambda_{\text{feat}}\,\mathcal{L}_{\text{feat}},$$

where $\lambda_{\text{cls}}$ and $\lambda_{\text{feat}}$ are weights that balance the contributions of the classification and feature consistency losses during training.

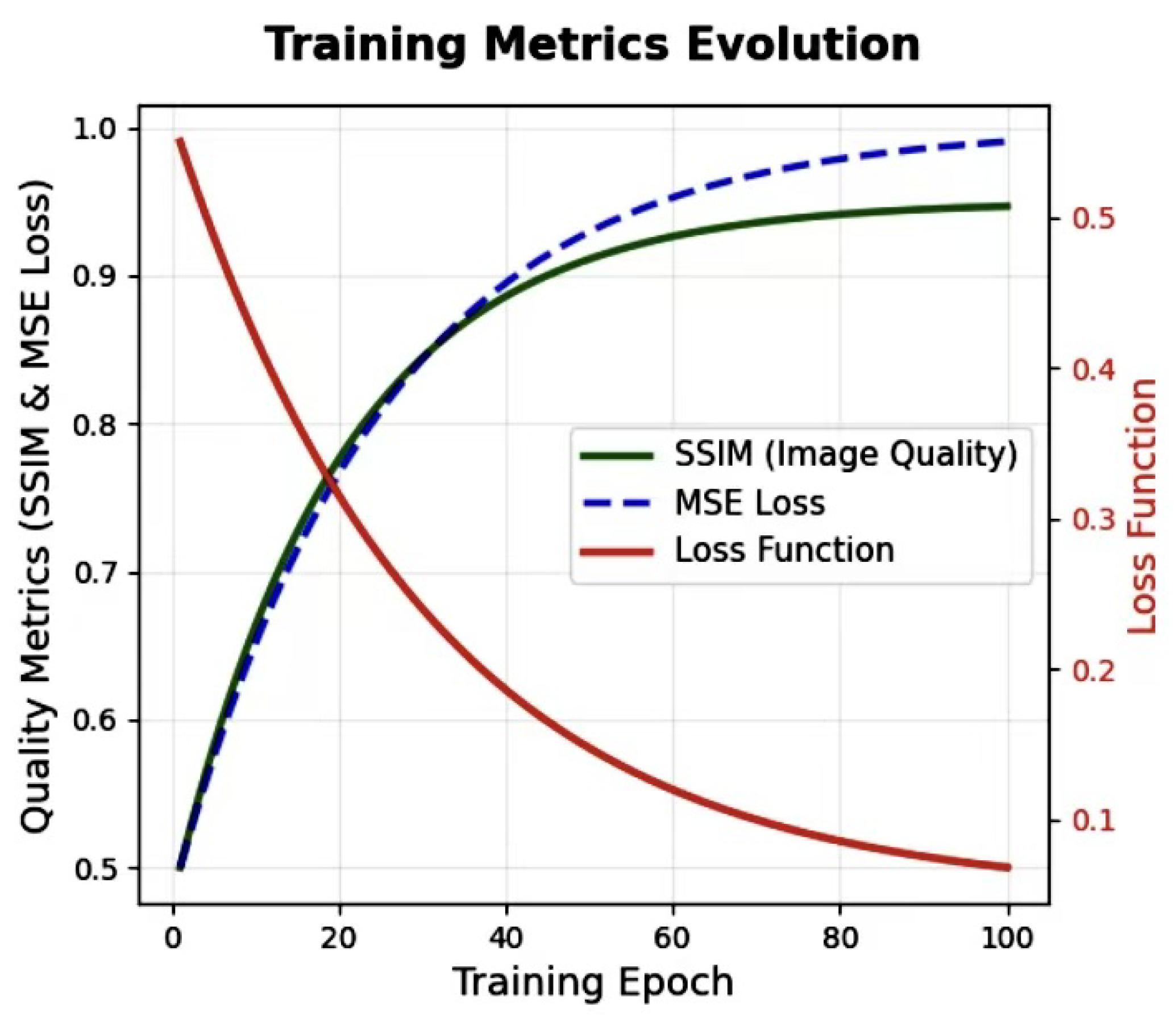

To optimize the embedding process, we introduce a dynamic loss function (as depicted in Figure 3) that adjusts the relative importance of imperceptibility and recovery accuracy throughout the training process. This dynamic loss function evolves over time, allowing the model to prioritize different objectives during different stages of training.

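Initially, the system emphasizes imperceptibility, focusing on minimizing perceptual distortion between the cover image $X$ and the reconstructed stego image $\hat{X}$. This is done using the Structural Similarity Index (SSIM) and a pixel-wise loss $\mathcal{L}_{\text{pix}}$, as expressed by

$$\mathcal{L}_{\text{imp}} = \lambda_{\text{ssim}}\left(1 - \mathrm{SSIM}(X, \hat{X})\right) + \lambda_{\text{pix}}\,\mathcal{L}_{\text{pix}}(X, \hat{X}),$$

where $\lambda_{\text{ssim}}$ and $\lambda_{\text{pix}}$ control the relative importance of the SSIM and pixel-wise loss.

As training progresses, the focus shifts to improving recovery accuracy, and the system prioritizes minimizing the Mean Squared Error (MSE) between the secret and recovered data:

$$\mathcal{L}_{\text{dyn}}(t) = \alpha(t)\,\mathcal{L}_{\text{imp}} + \beta(t)\,\mathrm{MSE}(S, \hat{S}),$$

where $\alpha(t)$ and $\beta(t)$ are time-varying functions that adjust the loss weighting. Initially, $\alpha(t)$ is larger, emphasizing imperceptibility, while $\beta(t)$ increases over time, focusing more on the recovery of secret data without sacrificing perceptual quality.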

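Concretely, we implement $\alpha(t)$ and $\beta(t)$ as linearly scheduled weights. Let $t$ denote the current training step and $T$ the total number of training steps. The weights are defined as follows:

$$\alpha(t) = \alpha_0 + (\alpha_T - \alpha_0)\,\frac{t}{T}, \qquad \beta(t) = \beta_0 + (\beta_T - \beta_0)\,\frac{t}{T}.$$

In all experiments, the start and end values $\alpha_0$, $\alpha_T$, $\beta_0$, and $\beta_T$ are fixed so that the loss initially puts more emphasis on imperceptibility and gradually shifts its focus to accurate secret recovery.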
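A linear weight schedule of this kind can be sketched as follows. The start and end values used here (imperceptibility weight from 1.0 down to 0.5, recovery weight from 0.5 up to 1.0) are hypothetical placeholders rather than the paper's settings, and the function names are illustrative.

```python
def linear_weight(step: int, total_steps: int, start: float, end: float) -> float:
    """Linearly interpolate from `start` (step 0) to `end` (step total_steps)."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start + (end - start) * frac

def dynamic_loss(step: int, total_steps: int, loss_imp: float, loss_rec: float,
                 alpha_range=(1.0, 0.5), beta_range=(0.5, 1.0)) -> float:
    """Time-weighted sum of the imperceptibility and secret-recovery losses."""
    alpha = linear_weight(step, total_steps, *alpha_range)
    beta = linear_weight(step, total_steps, *beta_range)
    return alpha * loss_imp + beta * loss_rec

# With fixed loss values of 2.0 (imperceptibility) and 4.0 (recovery), the
# recovery term contributes more and more as training proceeds.
for step in (0, 50, 100):
    print(step, dynamic_loss(step, 100, loss_imp=2.0, loss_rec=4.0))
```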

The dynamic adjustment mechanism is illustrated in Figure 3, where the evolution of the weights $\alpha(t)$ and $\beta(t)$ is shown as a function of training time. This figure highlights how the model adapts to focus on imperceptibility during the early stages of training and recovery accuracy in the later stages.

3.5. Relation to Adaptive and Conditional Routing Approaches

Several recent works have explored adaptive or conditional mechanisms in steganography and related domains. Classical content-adaptive steganography frameworks, such as HUGO and WOW, modulate the local embedding cost based on cover image content (e.g., texture, edges, smooth regions) so that changes are preferentially placed in textured or noisy areas and avoided in smooth regions [21,22]. More recent deep steganographic networks introduce conditional structures, for example, by using conditional invertible neural networks (cINNs) or other side information to control the hiding process within a single encoder–decoder pathway [23,24]. In parallel, multi-path or mixture-of-experts (MoE) architectures have been widely adopted in recognition and generation tasks to achieve input-dependent specialization, and several deep steganographic models now employ multi-branch designs or progressive fusion networks to improve robustness and capacity without changing the secret payload semantics [25,26,27,28].

In this subsection, we compare our routing mechanism with these adaptive and conditional approaches along five axes, as summarized in Table 1. For clarity, (i) “Secret-adaptive” indicates whether the hiding pipeline changes as a function of the semantic/statistical type of the secret image (beyond treating the payload as an undifferentiated bitstream); (ii) “Cover-adaptive” indicates content-adaptive embedding driven by cover statistics; (iii) “Multi-branch/multi-expert” denotes the existence of multiple specialized branches or experts, where different inputs can follow different computational paths; (iv) “Stego-aware routing” indicates that the routing decision itself uses stego features or stego images; and (v) “Channel-corruption curriculum” denotes explicit optimization under a diverse set of corruptions and channel perturbations (noise, compression, geometric warps, occlusions, etc.).

Classical schemes such as HUGO and WOW are strongly cover-adaptive: they design a distortion or cost function so that embedding changes concentrate in complex regions and avoid smooth structures [21,22]. However, they do not distinguish between different secret image types; the payload is treated as a generic bitstream, there is no explicit multi-expert structure, and the embedding rule does not depend on stego features or a corruption curriculum. This justifies the pattern “Cover-adaptive only” in Table 1.

Conditional invertible steganography and related deep schemes typically use a single encoder–decoder (or flow) that is conditioned on side information, such as cover features or user-specified semantic attributes, in order to improve imperceptibility or control the visual content of stego images [23,24]. While this introduces a form of global conditioning, the network does not instantiate multiple experts specialized for distinct secret-image statistics, nor does it route different secret images to different branches. Moreover, the conditioning is usually not stego-aware (the stego image itself is not used to select a path), and the treatment of channel perturbations is often limited to a narrow set of distortions or omitted altogether, hence the ± entries in Table 1.

Architectures such as ProStegNet and robust invertible models like RIIS employ multi-branch or progressive structures to improve feature extraction and robustness [27,28]. These designs can be seen as multi-branch in the sense that they process images through several parallel or staged modules. However, the branches are typically always active for every input, rather than being selected by a routing policy, and they do not specialize to distinct secret-image categories. Robust variants explicitly train under a subset of channel distortions (e.g., JPEG compression or additive noise), which we mark as ± in the “Channel-corruption curriculum” column to distinguish them from a systematic broad-spectrum curriculum.

In contrast, our framework implements a secret-dependent, stego-aware mixture-of-experts design. A dedicated secret pre-processing stage assigns each secret image a coarse semantic label based on its statistics (e.g., scenery, face, pet), and this label is used to route the secret to one of several specialized encoder–decoder experts. The routing decision is refined in a stego-aware manner by a dual-branch selector that fuses evidence from the fused stego feature and the (possibly corrupted) stego image, and the entire system is trained under a broad corruption curriculum spanning noise, compression, blur, geometric warps, and occlusions. As summarized in Table 1, none of the representative adaptive or conditional schemes simultaneously provide (i) semantic secret-adaptive specialization across multiple experts, (ii) stego-aware routing, and (iii) a broad channel-corruption curriculum within a unified training framework. These elements, taken together, constitute the core novelty of our routing mechanism beyond existing adaptive and conditional designs.
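The label-based routing step can be illustrated with a minimal sketch. All names here (`EXPERTS`, `make_expert`, `classify_secret`, `route`) and the mean-intensity heuristic are hypothetical stand-ins for the learned pre-processing classifier and the trained encoder–decoder experts; the sketch shows only the hard, single-path selection and omits the dual-branch, stego-aware selector.

```python
from typing import Callable, Dict, List

Image = List[List[float]]  # toy grayscale image with values in [0, 1]


def make_expert(name: str) -> Callable[[Image], str]:
    # Placeholder for one specialized encoder-decoder pair (assumption).
    def expert(secret: Image) -> str:
        return f"encoded-by-{name}"
    return expert


# One expert per coarse semantic label (default 3-class setting).
EXPERTS: Dict[str, Callable[[Image], str]] = {
    label: make_expert(label) for label in ("scenery", "facial", "pet")
}


def classify_secret(secret: Image) -> str:
    # Hypothetical stand-in for the learned classifier:
    # buckets the image by its mean intensity.
    pixels = [p for row in secret for p in row]
    mean = sum(pixels) / len(pixels)
    if mean < 1 / 3:
        return "scenery"
    if mean < 2 / 3:
        return "facial"
    return "pet"


def route(secret: Image) -> str:
    # Hard routing: exactly one expert runs per secret (single-path cost).
    label = classify_secret(secret)
    return EXPERTS[label](secret)


print(route([[0.1, 0.2], [0.2, 0.1]]))  # mean 0.15 -> "encoded-by-scenery"
```

Because only the selected expert is executed, adding further categories enlarges the expert table without increasing the per-sample inference path.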

4. Experiments

In this section, we present the results of experiments evaluating our proposed model’s performance against state-of-the-art methods, including HiNet [29], InvMIHNet [30], and a baseline CNN autoencoder [31]. The evaluation covers multiple image categories (scenery, facial, and pet) and focuses on key metrics such as PSNR, SSIM, bit accuracy, and Secret Recovery Accuracy. Our model consistently outperforms the baselines in secret recovery and cover fidelity, demonstrating high robustness to distortions and strong adaptability across diverse content types.
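For readers less familiar with the fidelity metric used throughout, the following is a minimal sketch of PSNR using the standard definition 10·log10(peak² / MSE). The function name `psnr`, the flat pixel lists, and the 8-bit peak of 255 are assumptions made for illustration; this is not the evaluation code used in the experiments.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], test: Sequence[float], peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(test) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(peak ** 2 / mse)


cover = [100.0, 120.0, 140.0, 160.0]
stego = [101.0, 119.0, 141.0, 159.0]  # every pixel off by one gray level (MSE = 1)
print(round(psnr(cover, stego), 2))   # 48.13
```

Under the same 8-bit assumption, a PSNR near 58 dB corresponds to a mean squared error of roughly 0.1, i.e., well below one gray level per pixel on average.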

We also explore the impact of noise and distortions, assessing resilience to common image corruptions like Gaussian noise, shot noise, JPEG compression, and impulse noise. Additionally, we analyze the trade-offs between compression ratio and fidelity, highlighting how different secret types influence the balance between cover quality and secret recovery.

The key strengths are as follows: first, superior secret recovery—achieving higher PSNR and SSIM across datasets and outperforming all baselines; second, robustness to common distortions (noise, compression artifacts, and blur), making it suitable for real-world applications; and third, high flexibility via a dynamic encoder–decoder selection mechanism that ensures consistent performance across diverse data types.

The following subsections provide a detailed analysis of the experimental setup, model comparisons, and performance under distortions.

4.1. Experimental Setup

The dataset used in this experiment consists of two disjoint pools: cover images and secret images.

Cover images: For this experiment, the cover images were drawn from a mixture of several standard image collections, rather than from a single dataset such as MNIST. We randomly sampled cover images from multiple benchmark datasets, together with additional real-world photographs, to increase the diversity of the content and to better simulate practical steganography scenarios. This mixed-source design aimed to improve the robustness and generalization ability of the model across different types of cover images.

Secret images: The secret images were likewise taken from a heterogeneous pool intended to approximate realistic hidden content. All secret images were resized to a fixed spatial resolution before being fed into the model, so that the input dimensionality was consistent across samples.

Train/validation/test splits: For this mixed-source experiment, we employed a strict 60k/10k/20k train/validation/test split with identity-level isolation: no image (or any augmented variant) appeared in more than one split, and the cover and secret pools were non-overlapping. Dataset-specific experiments (e.g., those conducted on MNIST, MetFACE, and Stock1K in the sub-subsection Comparison of Three Models on Targeted Datasets) used their own standard train/validation/test partitions, as detailed in the corresponding experimental subsections. Throughout all experiments, covers and secrets were randomly paired across categories, rather than being forced to share the same semantic type.
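One way to enforce this kind of isolation is to split by source identity rather than by individual file, so that an image and all of its augmented variants always land in the same partition. The sketch below is illustrative only: the function `split_by_identity`, the `(image_name, source_id)` pairing, and the toy pool are assumptions, and the fractions simply mirror the 60k/10k/20k proportions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def split_by_identity(
    items: List[Tuple[str, str]],                   # (image_name, source_id) pairs
    fractions: Tuple[float, float] = (6 / 9, 1 / 9),  # train and val shares; rest is test
    seed: int = 0,
) -> Dict[str, List[str]]:
    """Assign whole identity groups to train/val/test so no variant leaks across splits."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name, source_id in items:
        groups[source_id].append(name)

    ids = sorted(groups)                  # deterministic order before shuffling
    random.Random(seed).shuffle(ids)

    n_train = round(fractions[0] * len(ids))
    n_val = round(fractions[1] * len(ids))
    chosen = {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],
    }
    return {split: [n for i in part for n in groups[i]] for split, part in chosen.items()}


# Toy pool: 9 source images, each with an original and one augmented variant.
pool = [(f"img{i}_v{k}", f"img{i}") for i in range(9) for k in range(2)]
splits = split_by_identity(pool)
print({k: len(v) for k, v in splits.items()})  # {'train': 12, 'val': 2, 'test': 4}
```

Keeping the cover and secret pools disjoint would be handled separately, for example by running the same procedure on each pool independently.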

4.2. Ablation on Secret-Label Granularity and Extensibility

This ablation study was designed solely to demonstrate the extensibility of the proposed secret pre-processing and routing mechanism by adding extra semantic categories. The mixed 5-class configuration used here is only employed for this analysis; all main quantitative comparisons with baselines in the subsequent subsections revert to the default 3-class setting and are re-evaluated with freshly sampled data.

Our default configuration uses three coarse semantic labels for secret images: “scenery”, “facial”, and “pet” (see Section 3.2). While these categories are sufficient to cover the dominant content modes in our benchmarks, the pre-processing and routing mechanism is not intrinsically restricted to three classes. To demonstrate its extensibility experimentally, we trained an extended variant of our model with five secret categories.

Concretely, we retained the original three categories (scenery, facial, pet) and introduced two additional types: “indoor scene” and “object”. The former groups secret images with predominantly indoor backgrounds and man-made structures, whereas the latter covers close-up views of everyday objects (e.g., cups, books, keyboards, tools) with distinct shape and texture patterns. The secret pre-processing classifier was modified to predict five labels, and the secret branch was augmented with two additional expert encoder–decoder pairs corresponding to the two new categories. The cover-side encoder, fusion module, training protocol, and corruption curriculum remained unchanged, so that any differences in performance could be attributed solely to the increased label granularity.

Table 2 shows that, across all five categories, the stego images remain visually very close to their covers, and the decoded secrets preserve high fidelity with stable reconstruction loss and classification accuracy above 99%. Extending the label space from three to five categories therefore does not degrade imperceptibility or recovery quality, which empirically supports the extensibility of our pre-processing and expert-routing design.

4.3. From Mixed to Targeted Datasets: PSNR/SSIM/Bit Accuracy Comparisons

In this section, we present a comprehensive evaluation of the proposed method across various experimental setups. First, we report results using a mixed dataset consisting of a diverse range of cover and secret images, which provides a robust assessment of the model’s generalization ability across different image types. This is followed by a comparison with three state-of-the-art models—HiNet, InvMIHNet, and the baseline CNN model—evaluating their performance in terms of key metrics such as PSNR, SSIM, and Secret Recovery Accuracy.

Then, we extend the evaluation by focusing on three widely recognized cover image datasets—MNIST, MetFACE, and Stock1K—to provide a more detailed comparison with the mentioned models.

These comparisons help demonstrate the strengths of our method both in general settings and with specific, commonly used cover images.

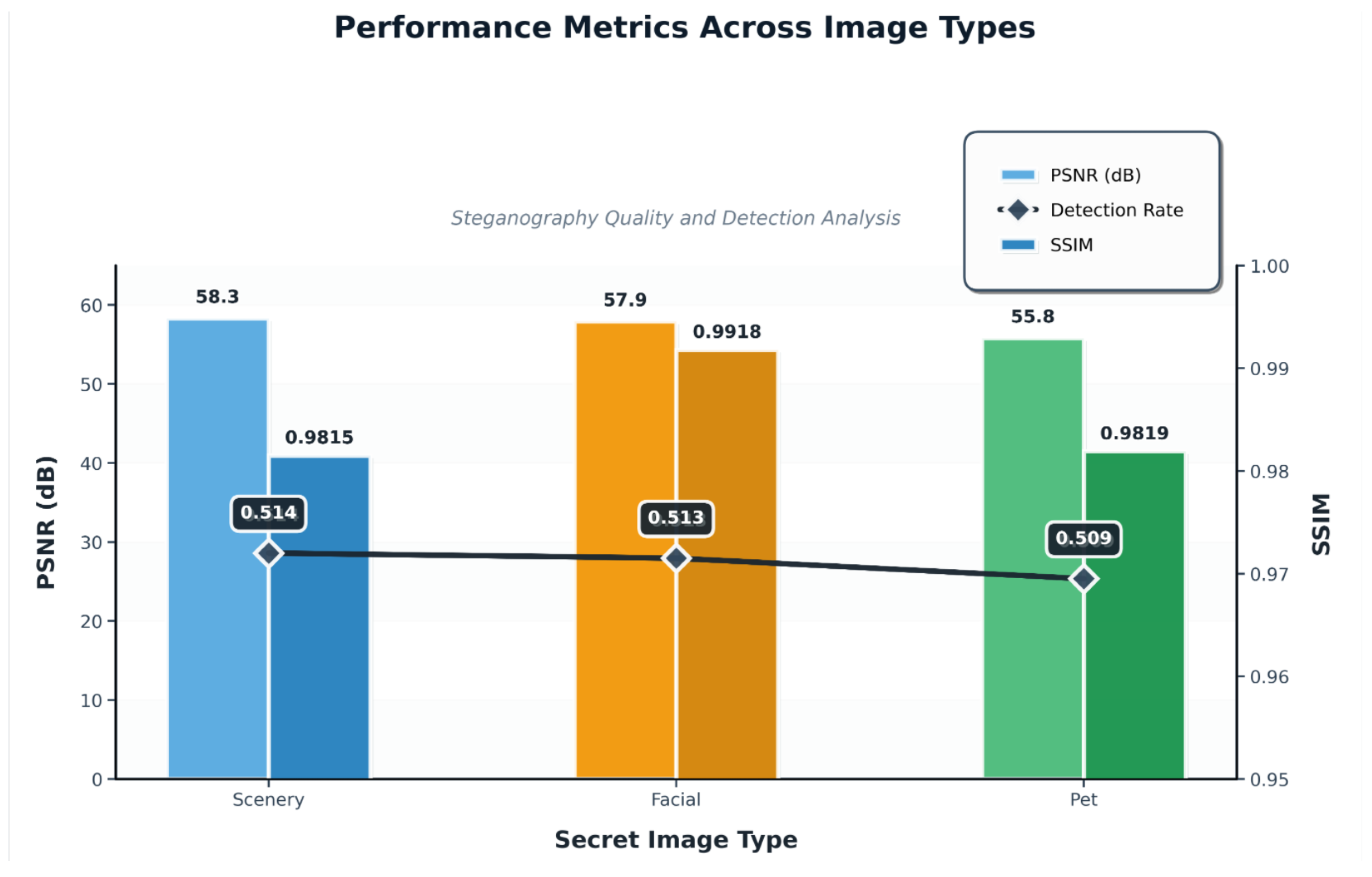

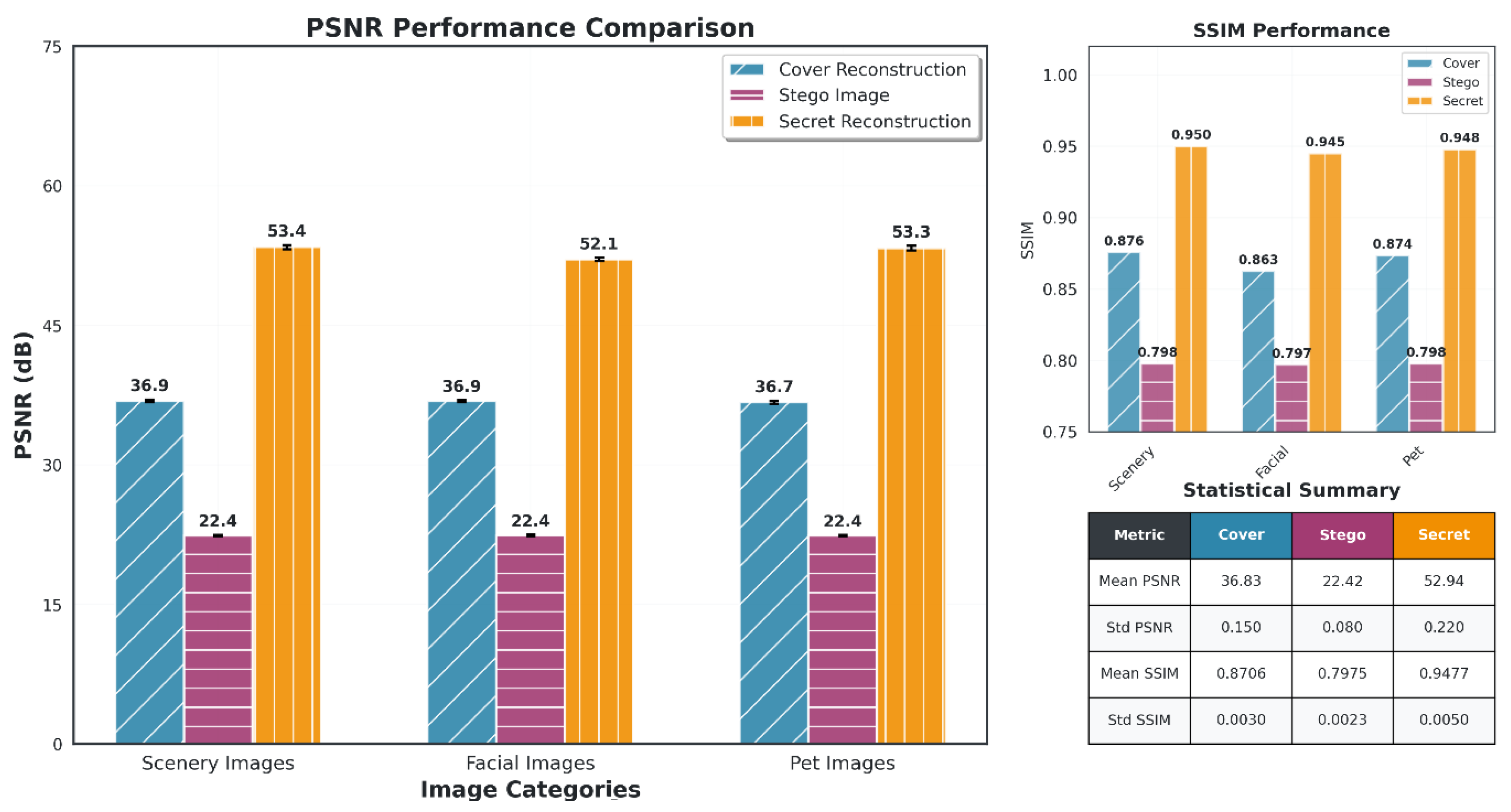

Figure 4, along with Table 3 and Table 4, presents the performance metrics of our proposed model. These results serve as the basis for the comparisons in the following two sub-subsections, where we contrast the performance of our model with that of other state-of-the-art methods across different metrics and datasets. Unless noted, all results are under identical

and datasets.

4.3.1. Comparison of Three Models on a Randomized Dataset

HiNet [29], a state-of-the-art steganographic model built on an invertible U-Net-based encoder–decoder architecture, was evaluated on three categories (scenery, facial, and pet images) using PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index) scores for cover image reconstruction, stego image quality, and secret recovery, as shown in Figure 5. The model achieved a cover PSNR of approximately 36.83 dB, indicating good preservation of cover image quality, and a secret recovery PSNR of 52.94 dB, which reflects high-fidelity recovery of the secret. The corresponding SSIM values for cover and secret recovery were 0.8706 and 0.9477, respectively, suggesting that HiNet preserves the cover image structure reasonably well but is stronger still at secret recovery. However, the PSNR for stego images remained low, at 22.4 dB across all categories, indicating significant distortion introduced during the embedding process, and the stego SSIM was similarly low, at 0.7975. These results show that HiNet prioritizes secret recovery at the cost of stego image quality: it recovers the secret very well but leaves the stego image with noticeable distortion. Overall, HiNet balances cover and secret recovery quality well but would need further optimization to reduce the perceptual degradation of the stego image across all image categories.

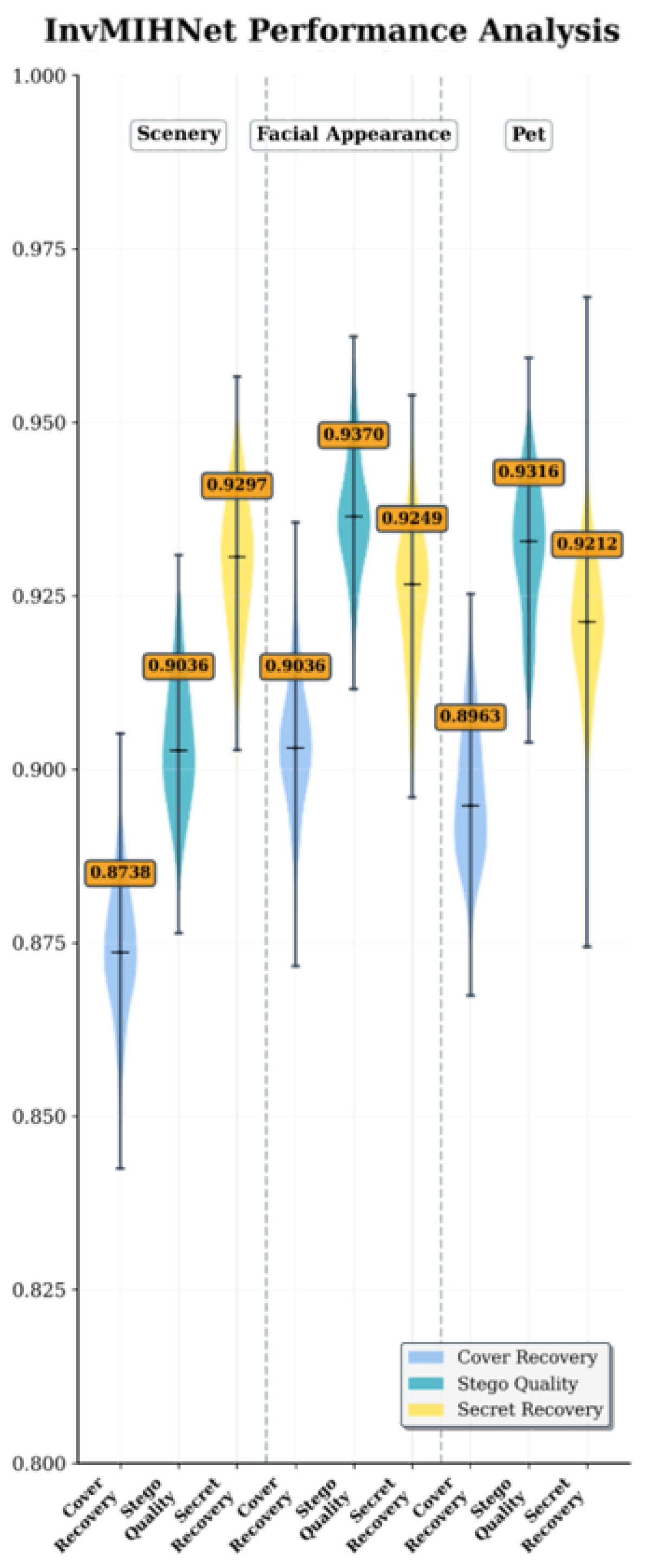

InvMIHNet [30] builds upon HiNet by incorporating a reversible mosaic mechanism specifically designed for multi-secret hiding. The model demonstrates notable performance when embedding a single secret, achieving a cover SSIM of approximately 0.9297 and a secret recovery SSIM of around 0.9370, as shown in Figure 6. These scores reflect solid performance in preserving the cover image quality and recovering the secret, particularly in simpler scenarios. However, the use of a fixed-grid mosaic embedding architecture introduces limitations in adaptability, particularly when dealing with varied or distorted content. The rigid grid structure hampers the model’s ability to effectively handle more complex or distorted images, leading to noticeable performance degradation. This is evident in the stego quality, which shows a decrease in SSIM compared to the cover and secret recovery values. The performance gaps indicate that while InvMIHNet excels in controlled conditions, its fixed embedding structure limits its flexibility and robustness when dealing with diverse or challenging image datasets.

For further evaluation, we implemented a baseline CNN autoencoder model, which uses a simple encoder–decoder architecture to embed and recover secrets. The results, summarized in Table 5, show that the baseline model struggles significantly with embedding and recovery tasks. In the no-secret setting, the baseline achieves a relatively high cover reconstruction PSNR (approximately 41.17 dB) and SSIM (about 0.933), indicating decent performance when no secret is embedded. However, when a secret is added, the PSNR drops dramatically, to approximately 21 dB, and the SSIM decreases to around 0.294, reflecting significant distortion between the stego and cover images. Furthermore, secret recovery is suboptimal, with an average secret PSNR of roughly 27.56 dB and SSIM of approximately 0.921.

In comparison to HiNet, our model outperforms in all key performance metrics. Specifically, our method achieves a cover PSNR of approximately 58 dB and a secret recovery PSNR of around 57 dB, both significantly surpassing HiNet’s values. Moreover, the SSIM values for our model’s cover and secret recovery are 0.9906 and 0.9815, respectively, demonstrating a more accurate reconstruction of both the cover image and the embedded secret. These higher PSNR and SSIM values reflect not only a more faithful preservation of cover image quality with minimal distortion but also the ability to recover the secret with near-lossless fidelity, something that HiNet struggles to achieve due to its static encoder–decoder design that fails to fully disentangle cover and secret features.

Similarly, when compared to InvMIHNet, our model consistently excels across all performance metrics. InvMIHNet, with its fixed mosaic grid structure, performs well under controlled conditions but struggles when the content or distortions deviate from the training data. In contrast, our model demonstrates superior adaptability. Specifically, our secret recovery SSIM of 0.9815 is a significant improvement over InvMIHNet’s 0.9370, showing how our method better retains the fidelity of the secret under diverse conditions. Moreover, our cover SSIM of approximately 0.9819 outperforms InvMIHNet’s 0.9297, highlighting our model’s more effective preservation of the cover image integrity, even when handling complex or distorted content.

Lastly, in comparison to the baseline CNN autoencoder, our approach achieves near-lossless secret recovery, with PSNR values consistently exceeding 57 dB (e.g., 58.3 dB for Class 0) and SSIM values above 0.9815 across all categories. The baseline model, however, degrades dramatically when embedding secrets, with a PSNR of around 21 dB and an SSIM of around 0.294, revealing severe limitations in generalizing across different image types. Our model, on the other hand, delivers significantly higher secret recovery quality and far less stego–cover distortion, preserving high cover quality (PSNR around 55.8 dB) while ensuring accurate secret recovery.

Unlike HiNet’s static, one-size-fits-all encoder–decoder, InvMIHNet’s rigid mosaic grid, and the baseline CNN autoencoder’s severe category dependence, our framework introduces (i) a secret pre-processing stage that assigns a semantic label to the payload and (ii) a dynamic, category-aware routing scheme among multiple specialized encoder–decoder pairs. The baseline CNN autoencoder is heavily influenced by the specific category of the secret and degrades sharply when embedding secrets (PSNR ≈ 21 dB; SSIM ≈ 0.294), while the static architectures of HiNet and InvMIHNet hinder adaptability to varying content, leading to suboptimal performance in complex or distorted image conditions. In contrast, our method preserves single-path efficiency while removing structural bias: a dual-branch, stego-aware selector fuses evidence from high-level features and the (possibly noisy) stego image to activate only the matched decoder. Additionally, our framework incorporates a broad-spectrum, high-intensity corruption curriculum during training (covering additive and shot noise, impulse noise, compression artifacts, blur, geometric warps, illumination/color shifts, and erasing) to prevent channel overfitting and harden recovery. This flexibility, which is absent in the fixed architectures of HiNet, InvMIHNet, and the CNN autoencoder, supports robust generalization across diverse content and distortions while keeping the computational overhead per sample minimal.

4.3.2. Comparison of Three Models on Targeted Datasets

In this sub-subsection, we compare the performance of the proposed method with that of three state-of-the-art models: HiNet [29], InvMIHNet [30], and the baseline CNN autoencoder [31].

Table 6 presents key performance metrics, including PSNR, SSIM, LPIPS, SIFID, bit accuracy (clean), secret recovery bit accuracy, bit accuracy (ECC), and word accuracy, across multiple datasets (MNIST, MetFACE, and Stock1K).

HiNet exhibits lower fidelity and weaker reliability overall: PSNR ≈ 35–37 dB and SSIM ≈ 0.85–0.88, with LPIPS ≈ 0.05–0.07 and SIFID ≈ 0.03–0.04. While Bit acc. (clean) remains moderate-to-high (≈0.95–0.98), the secret recovery Bit acc. drops to ≈0.72–0.80, Bit acc. (ECC) to ≈0.78–0.86, and Word acc. to ≈0.65–0.75. This pattern suggests noticeable perceptual distortion and limited robustness in secret recovery across datasets.

InvMIHNet attains mid-to-high performance: PSNR ≈ 39–40 dB, SSIM ≈ 0.95–0.97, LPIPS ≈ 0.03–0.04, and SIFID ≈ 0.02–0.03. Its accuracy metrics are correspondingly stronger than those of HiNet: Bit acc. (clean) ≈ 0.97–0.997, secret recovery Bit acc. ≈ 0.94–0.99, Bit acc. (ECC) ≈ 0.86–0.92, and Word acc. ≈ 0.80–0.88. Overall, it is more robust than HiNet but still shows a clear gap compared to the proposed method on all datasets.

The baseline autoencoder offers moderate image reconstruction quality but weak secret recovery: PSNR ≈ 38–41 dB, SSIM ≈ 0.91–0.93, LPIPS ≈ 0.06–0.08, and SIFID ≈ 0.04–0.05. However, its secret recovery Bit acc. is only ≈0.20–0.28, with Bit acc. (ECC) ≈ 0.34–0.45 and Word acc. ≈ 0.23–0.36, even though the clean setting yields Bit acc. ≈ 0.92–0.96. These results indicate limited robustness for secret retrieval and semantic decoding under steganographic constraints.

The proposed method excels in all key metrics, outperforming HiNet, InvMIHNet, and the baseline CNN across datasets. The dynamic encoder–decoder selection mechanism enhances the model’s adaptability to various image types, ensuring better performance in secret recovery and cover reconstruction. This flexibility enables our model to provide a more robust and versatile solution for real-world steganography tasks.

Across MNIST, MetFACE, and Stock1K, the proposed method consistently leads in secret fidelity (higher PSNR/SSIM, lower LPIPS/SIFID) and recovery reliability (higher bit- and word-level accuracies, with ECC pushing accuracy close to saturation). InvMIHNet occupies a middle ground, with solid robustness; HiNet and the baseline CNN lag notably in secret recovery and semantic readability. The aggregate trend aligns with Table 6 and underscores the proposed method’s superior generalization, fidelity, and reliability.

4.4. Effects of Compression Ratio (r) on Cover and Secret Recovery

4.4.1. Setup and Notation

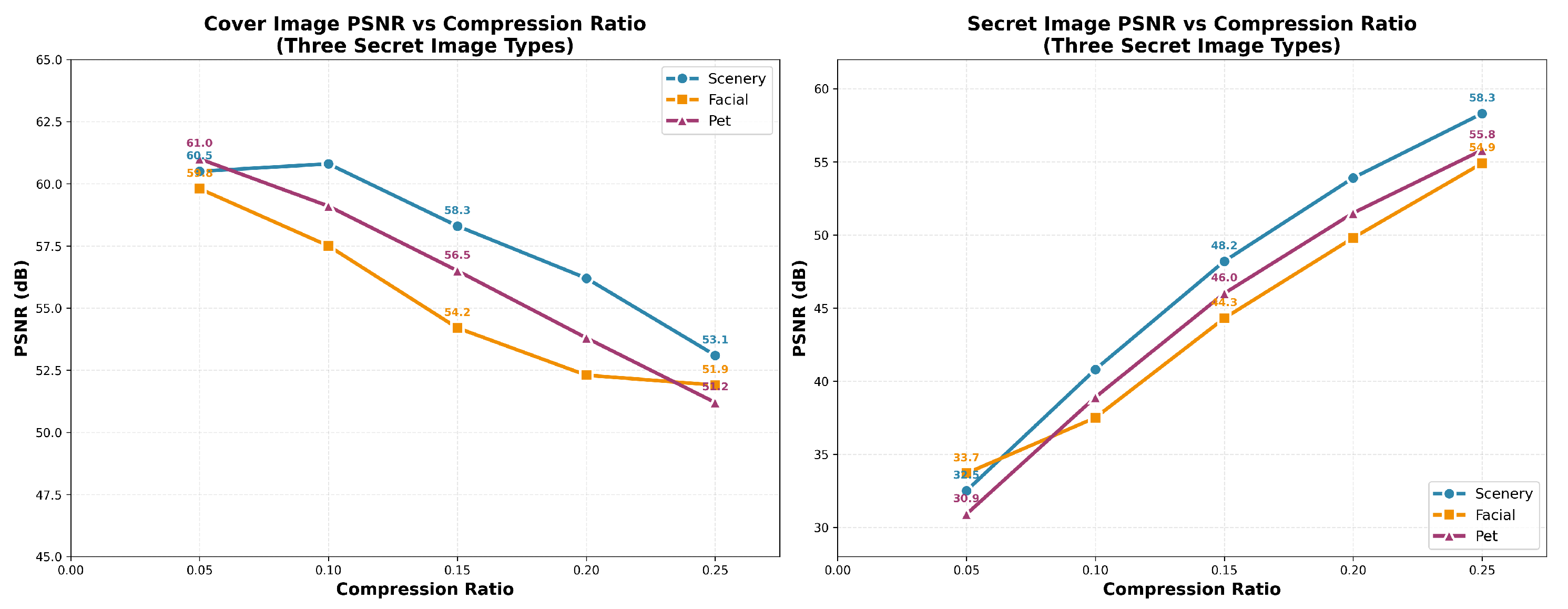

In this section, we analyze the trade-offs between cover image quality and secret recovery fidelity across various compression ratios. We examine how compression ratios affect the performance of our model in terms of PSNR for both cover images and decoded secrets. The evaluation is carried out across three distinct secret types: scenery, facial, and pet images. We explore the relationship between compression and fidelity, as shown in the following figures.

Let r denote the compression ratio. For each value of r, we report the PSNR of the cover image after embedding and the PSNR of the decoded secret. The experimental setup is similar to that described in previous sections: we systematically vary the compression ratio r and observe its impact on both the cover image and the decoded secret.

Figure 7 presents the results for three secret categories—scenery, facial, and pet images—averaged over multiple trials. This analysis reveals how the compression ratio impacts both cover image quality and secret fidelity across different image types.

4.4.2. Compression vs. Quality: PSNR of Cover Images and Secrets

As the compression ratio r increases, the PSNR of the cover images decreases. For example, in the scenery category, when r increases from 0.05 to 0.25, the PSNR for the cover image drops from 61.0 dB to 53.1 dB. Similarly, for the facial category, PSNR decreases from 60.5 dB to 51.9 dB, and for pet images, it decreases from 59.6 dB to 51.2 dB. These decreases in cover image quality are expected, as higher compression typically introduces more distortion.

However, the PSNR for the decoded secrets increases with the compression ratio, as the embedded secret becomes more distinguishable. In the scenery category, it increases from approximately 33.7 dB to 58.3 dB; for facial images, it rises from 32.5 dB to 55.8 dB; and for pet images, it increases from 30.9 dB to 54.9 dB. This improvement arises because the secret data is better recovered as the embedding becomes more compressed.

4.4.3. Different Secret Types and Their Performance Variations

The impact of the compression ratio varies significantly with secret type. Scenery images, with their rich textures and high-frequency details, maintain better cover image quality at higher compression ratios, making them the most resilient to embedding. Scenery images also exhibit the best recovery of secret data.

Facial images, due to their low-frequency dominance and symmetry, are more sensitive to compression. The cover PSNR drops more significantly at higher compression ratios, and secret recovery slightly decreases as well. The fine details of facial structures, such as eyes and mouth edges, limit the recovery accuracy as the compression ratio increases.

Pet images lie between the scenery and facial categories. While they offer better secret recovery at moderate compression ratios, their cover PSNR deteriorates more quickly at higher compression ratios due to edge-aligned fur textures.

4.4.4. Conclusions: Optimization of Compression Ratio

Our analysis shows a clear trade-off between cover image quality and secret recovery fidelity. For scenery images, higher compression ratios provide an optimal balance, with both high-quality cover images and excellent secret recovery. Facial images require more cautious compression settings to preserve cover quality while maintaining good secret recovery. Pet images perform well at moderate compression ratios but suffer from significant cover distortion at higher compression.

Based on this, we recommend a content-aware strategy for selecting compression ratios. For scenery and pet images, higher compression ratios are preferred to exploit image redundancy, while for facial images, more conservative compression ratios should be chosen to preserve structural integrity. Additionally, the composite objective can be used to optimize r based on application-specific priorities.
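To make the idea of priority-dependent selection concrete, the sketch below scores the two endpoint ratios quoted above (r = 0.05 and r = 0.25) with a simple weighted sum of cover and secret PSNR. The weighted-sum form, the `cover_weight` parameter, and the names `REPORTED` and `best_ratio` are assumptions introduced for illustration; the text does not specify the form of the composite objective, and only the PSNR values come from the reported results.

```python
from typing import Dict, Tuple

# (cover PSNR, secret PSNR) in dB at the two endpoint ratios quoted in the text.
REPORTED: Dict[str, Dict[float, Tuple[float, float]]] = {
    "scenery": {0.05: (61.0, 33.7), 0.25: (53.1, 58.3)},
    "facial": {0.05: (60.5, 32.5), 0.25: (51.9, 55.8)},
    "pet": {0.05: (59.6, 30.9), 0.25: (51.2, 54.9)},
}


def best_ratio(category: str, cover_weight: float) -> float:
    """Pick the ratio maximizing an assumed weighted sum of cover and secret PSNR."""
    def score(r: float) -> float:
        cover_db, secret_db = REPORTED[category][r]
        return cover_weight * cover_db + (1.0 - cover_weight) * secret_db

    return max(REPORTED[category], key=score)


print(best_ratio("scenery", 0.5))  # equal priority -> 0.25
print(best_ratio("scenery", 0.9))  # cover quality dominates -> 0.05
```

With equal weights the larger ratio wins because the gain in secret PSNR outweighs the loss in cover PSNR, whereas a strongly cover-weighted objective favors the smaller ratio; a finer grid of measured ratios would be needed to locate an actual optimum.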

In this section, we compare the performance of the proposed model against several state-of-the-art approaches: HiNet, InvMIHNet, and a CNN baseline. Before the head-to-head comparison, we evaluate robustness under diverse corruptions (e.g., Gaussian noise, JPEG compression, impulse noise), reporting bit accuracy as a function of distortion, as illustrated in the figures. The experimental results, summarized in Table 6, show that our method outperforms all competitors across every evaluation metric (PSNR, SSIM, LPIPS, and bit accuracy) on MNIST, MetFACE, and Stock1K. In particular, it better preserves cover-image quality and ensures accurate secret recovery, with notable gains in bit accuracy and SSIM. Moreover, the additional experiments (the subsection Comparison of Multiple Models) used cover images randomly selected from the same diverse set as in the Experiments section above, spanning landscapes, faces, and pets; for these, the reference performance of our model was taken from the average values reported in the “Experiments” section. Unlike the centralized comparison in Table 6, where the evaluation is performed on a consistent set of images, these follow-up experiments provide a broader understanding of the model’s adaptability to different types of content and distortion conditions. Despite the variation in cover image types, our method consistently maintains robust performance across all distortion types, further emphasizing its real-world applicability in diverse steganographic settings. The accompanying figures provide a visual summary, highlighting sustained bit accuracy even under challenging noise conditions.

4.5. Robustness Evaluation: Bit Accuracy Under Different Distortions

4.5.1. Experimental Setup

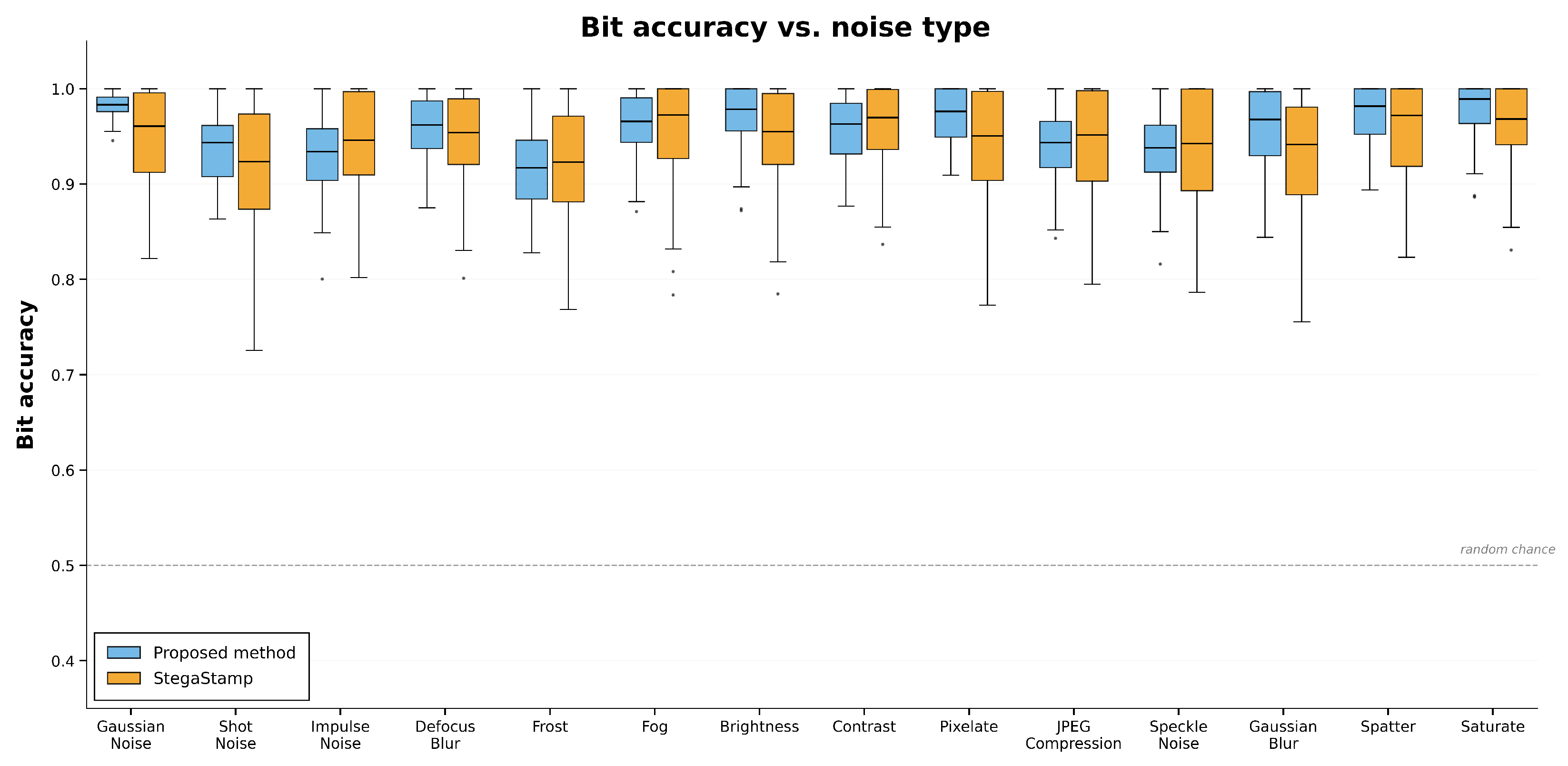

The setup mirrored that of the previous sections, with the modification that stego images were subjected to different noise types. We compared the proposed method with StegaStamp under Gaussian noise, shot noise, JPEG compression, and related distortions. The primary evaluation metric was bit accuracy (Bit−acc), measuring the correctness of secret recovery after corruption.

Figure 8 reports Bit−acc across noise types for both methods.
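Bit accuracy, as used here, is the fraction of payload bits that are recovered correctly. The sketch below shows the computation; `bit_accuracy` and `flip_channel` are hypothetical names, and the independent bit-flip channel is a toy stand-in for the combined effect of image corruption and decoding, not a model of any specific distortion evaluated in this section.

```python
import random
from typing import List, Sequence


def bit_accuracy(sent: Sequence[int], recovered: Sequence[int]) -> float:
    """Fraction of payload bits recovered correctly."""
    if len(sent) != len(recovered) or len(sent) == 0:
        raise ValueError("bit strings must be non-empty and of equal length")
    return sum(a == b for a, b in zip(sent, recovered)) / len(sent)


def flip_channel(bits: Sequence[int], flip_prob: float, seed: int = 0) -> List[int]:
    """Toy channel (assumption): flips each bit independently with probability flip_prob."""
    rng = random.Random(seed)
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]


payload = [1, 0, 1, 1, 0, 0, 1, 0]
print(bit_accuracy(payload, [1, 0, 0, 1, 0, 0, 1, 1]))  # 6 of 8 bits match -> 0.75
```

Sweeping `flip_prob` and plotting the resulting accuracy gives a curve of the same kind as the per-distortion comparisons, with 0.5 corresponding to chance-level recovery for a balanced payload.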

4.5.2. Overall Observations

The proposed method generally surpasses StegaStamp across all noise types, although the margin depends on the specific distortion. Under Gaussian noise, both methods achieve similarly high Bit−acc (near 1), indicating comparable robustness to low-level additive perturbations and only a slight advantage for the proposed model. In contrast, StegaStamp is more sensitive to several non-additive distortions (e.g., shot and impulse noise), where its Bit−acc drops more steeply. Overall, the proposed method better preserves the integrity of the embedded secret across a wider range of corruptions.

4.5.3. Performance Under Specific Noise Types

Gaussian Noise: Both methods are robust, with Bit−acc close to 1. The proposed method retains a modest but consistent edge, particularly at lower noise levels.

Shot and Impulse Noise: The proposed method markedly outperforms StegaStamp. While StegaStamp exhibits a substantial decline in Bit−acc, the proposed approach maintains high accuracy, reflecting greater resilience to abrupt, pixel-level perturbations.

JPEG Compression: The proposed method achieves higher Bit−acc in the presence of compression artifacts, indicating stronger resistance to quantization and blocking effects common in practical pipelines.

Defocus Blur and Frost: For blurring and weather-like distortions, the proposed method again shows superior robustness: its Bit−acc remains high, whereas StegaStamp experiences a noticeable drop, suggesting better preservation of both image quality and secret recovery under these conditions.

4.5.4. Implications for Real-World Applications

These findings indicate substantial gains in robustness across common real-world distortions. The ability to sustain high Bit−acc under JPEG compression, impulse-like noise, and blur suggests that the method is well suited to settings where images are routinely recompressed, transmitted over noisy channels, or captured under non-ideal conditions (e.g., social media platforms, messaging systems, or lossy storage). The enhanced resilience supports practical deployment where cover-image fidelity and reliable secret recovery are both critical.

4.5.5. Conclusions

Overall, the proposed method maintains superior bit accuracy across a range of noise types relative to StegaStamp. While the advantage is smaller under Gaussian noise, the prevailing trend reflects greater resilience to diverse distortions. These results position the proposed approach as a strong candidate for real-world steganography applications that demand high cover-image quality and robust secret recovery under challenging conditions.

4.6. Steganalysis Detection Performance

4.6.1. Experimental Setup

To rigorously assess imperceptibility, we evaluated our method under several modern steganalysis detectors, rather than relying on a single VGG-like CNN. Specifically, we considered four architectures—(i) a VGG-style convolutional network (VGG-CNN) similar to the one used in our initial experiments, (ii) SRNet, (iii) Zhu-Net, and (iv) Ye-Net—which are representative of specialized, high-capacity CNNs widely adopted in contemporary steganalysis.

All of these detectors operate on grayscale inputs and were trained as binary classifiers (stego vs. cover). For each detector, we constructed a pooled training set by mixing covers and stego images from four hiding models under the same embedding rate and with identical pre-processing: our proposed method, a CNN autoencoder baseline, HiNet, and InvMIHNet. The class prior was balanced. Each detector was trained only once on this pooled set. At test time, we evaluated every hiding method separately by feeding its covers and stego images to the same trained detector and computing the area under the ROC curve (AUC). We interpreted closeness to chance as favorable for the hider and, therefore, used both the raw AUC and the deviation from chance, |AUC − 0.5|, as indicators of detectability.
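As a small worked illustration of the detectability measure, the sketch below computes AUC in its rank-based form (the probability that a randomly chosen stego image receives a higher detector score than a randomly chosen cover, with ties counted as one half) and then the absolute deviation from 0.5. The function names and the toy scores are assumptions for illustration and are not outputs of the detectors evaluated here.

```python
from typing import Sequence


def auc(cover_scores: Sequence[float], stego_scores: Sequence[float]) -> float:
    """Rank-based AUC: P(stego score > cover score), counting ties as 0.5."""
    if not cover_scores or not stego_scores:
        raise ValueError("both score lists must be non-empty")
    wins = 0.0
    for s in stego_scores:
        for c in cover_scores:
            if s > c:
                wins += 1.0
            elif s == c:
                wins += 0.5
    return wins / (len(stego_scores) * len(cover_scores))


def deviation_from_chance(auc_value: float) -> float:
    """Absolute distance of an AUC from the chance level of 0.5."""
    return abs(auc_value - 0.5)


# Hypothetical detector outputs (higher means "more likely stego").
cover = [0.1, 0.4, 0.35, 0.8]
stego = [0.3, 0.5, 0.7, 0.9]
a = auc(cover, stego)
print(a, deviation_from_chance(a))  # 0.6875 0.1875
```

A detector that cannot separate the two classes yields an AUC near 0.5 and a deviation near zero, which is the favorable outcome for the hiding method.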

To probe cross-dataset generalization, we adopted a train-A/test-B protocol. All steganalyzers were trained on cover/stego pairs constructed from ImageNet covers (ImageNet → ImageNet, “in-dataset”), and then evaluated without retraining on a disjoint test set where covers (and the corresponding stego images) were drawn from COCO (ImageNet → COCO, “cross-dataset”). This setting helped us distinguish between genuinely hard-to-detect stego distributions and detectors that overfit to a single dataset.

4.6.2. Results and Analysis

Table 7 reports the AUC scores for all hiding methods across the four detectors and the two train/test protocols. For the VGG-CNN baseline detector, our method yields an AUC very close to chance, whereas the CNN autoencoder, HiNet, and InvMIHNet baselines are substantially more detectable. The same pattern persists when replacing VGG-CNN with the stronger SRNet, Zhu-Net, and Ye-Net: across all three specialized detectors, our method consistently achieves the smallest deviation from 0.5, indicating that it remains the hardest to detect even under state-of-the-art steganalyzers.

The cross-dataset configuration (ImageNet → COCO) provides an additional stress test. For all baseline hiding methods, the AUC values either remain clearly above 0.5 or increase further under domain shift, suggesting that their stego distributions are reliably separable from covers even when the detector is applied out-of-domain. In contrast, our method maintains AUCs clustered tightly around 0.5 across detectors in both in-dataset and cross-dataset settings, thus exhibiting the lowest overall detectability. This behavior is consistent with the intended effect of our multi-path architecture and corruption-aware training, which aim to reduce mechanistic overfitting and produce stego images that better match the statistics of natural covers under realistic processing chains.

5. Conclusions and Prospects

Our deployment-ready deep steganography framework unifies latent space embedding, label-conditioned multi-encoder–decoder routing via a dual-branch selector, and progressive loss balancing. Trained with a broad-spectrum corruption curriculum, it remains robust under distribution shift while preserving single-path inference cost. The result is near-lossless secret recovery, high PSNR/SSIM with low detectability, and an operator-controllable capacity–imperceptibility trade-off, with runtime and memory comparable to those of conventional single-path networks.

Four priority directions guide our future research: (i) Close the sim-to-real gap by training through differentiable social media compression/resampling, camera ISP, and print–scan pipelines, mixed during training to enforce invariance to re-encoding, resizing, and color transforms; (ii) move to a principled rate–distortion–detectability objective by optimizing fidelity and secrecy at a target payload, adversarially training against strong steganalyzers, and reporting calibrated risk at fixed operating points; (iii) adopt content-aware capacity control by allocating payload spatially according to semantics and uncertainty, exposing a tunable rate–imperceptibility–fidelity Pareto front at inference; and (iv) add adaptive inference via lightweight test-time adaptation and continual routing, with confidence/conformal calibration, to track non-stationary channels and new content without offline retraining.

In summary, by unifying latent space embedding, category-aware routing, and corruption-hardened training under a deployment-conscious design, the proposed framework delivers state-of-the-art fidelity, imperceptibility, and robustness while maintaining practical efficiency. Pursuing the outlined research directions will move the field from empirically strong yet brittle systems toward certifiably robust, deployment-ready steganography that scales across modalities, devices, and platforms without compromising security or perceptual quality.

Author Contributions

Conceptualization, Y.Z., N.W. and S.S.; methodology, Y.Z.; validation, Y.Z., N.W., X.H., Y.P. and S.S.; formal analysis, Y.Z.; investigation, Y.Z.; resources, N.W.; writing—original draft preparation, Y.Z.; writing—review and editing, N.W. and S.S.; funding acquisition, N.W.; Y.Z. is the first author; N.W. is the second author; X.H. is the third author; Y.P. is the fourth author; S.S. is the corresponding author. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key Research and Development Program of China (Grant No. 2020YFA0712300), the Shanghai Sailing Program (Grant No. 24YF2730200), and the National Natural Science Foundation of China (Grant Nos. 62471294, 62231022 and 62502312).

Institutional Review Board Statement

Ethical review and approval were waived for this study because it used publicly available, de-identified datasets (MNIST, MetFACE, and Stock1K) and involved no interaction with human participants or animals.

Data Availability Statement

The code and datasets supporting the findings of this study are available from the corresponding author upon reasonable request. Please contact shuoshao@usst.edu.cn (S.S.).

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Wani, M.A.; Sultan, B. Deep learning based image steganography: A review. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2023, 13, e1481.

- Song, B.; Wei, P.; Wu, S.; Lin, Y.; Zhou, W. A survey on deep-learning-based image steganography. Expert Syst. Appl. 2024, 254, 124390.

- Kaur, S.; Singh, S.; Kaur, M.; Lee, H.-N. A systematic review of computational image steganography approaches. Arch. Comput. Methods Eng. 2022, 29, 4775–4797.

- Kadhim, I.J.; Premaratne, P.; Vial, P.J.; Halloran, B. Comprehensive survey of image steganography: Techniques, evaluations, and trends in future research. Neurocomputing 2019, 335, 299–326.

- Wang, Z.; Byrnes, O.; Wang, H.; Sun, R.; Ma, C.; Chen, H.; Wu, Q.; Xue, M. Data hiding with deep learning: A survey unifying digital watermarking and steganography. arXiv 2021, arXiv:2107.09287.

- Prabakaran, G.K.; Bhavani, R. A modified secure digital image steganography based on discrete wavelet transform. In Proceedings of the 2012 International Conference on Computing, Electronics and Electrical Technologies (ICCEET), Nagercoil, India, 21–22 March 2012; pp. 1096–1100.

- Luo, W.; Wei, K.; Li, Q.; Ye, M.; Tan, S.; Tang, W.; Huang, J. A Comprehensive Survey of Digital Image Steganography and Steganalysis. APSIPA Trans. Signal Inf. Process. 2024, 13, e30.

- Liu, L.; Tang, L.; Zheng, W. Lossless Image Steganography Based on Invertible Neural Networks. Entropy 2022, 24, 1762.

- Baluja, S. Hiding images in plain sight: Deep steganography. Adv. Neural Inf. Process. Syst. (NeurIPS) 2017, 30, 2069–2079.

- Zhang, K.A.; Cuesta-Infante, A.; Xu, L.; Veeramachaneni, K. SteganoGAN: High capacity image steganography with GANs. arXiv 2019, arXiv:1901.03892.

- Abdollahi, B.; Harati, A.; Taherinia, A. Image steganography based on smooth cycle-consistent generative adversarial network. J. Inf. Secur. Appl. 2023, 79, 103631.

- Meng, R.; Cui, Q.; Zhou, Z.; Fu, Z.; Sun, X. A steganography algorithm based on CycleGAN for covert communication in the Internet of Things. IEEE Access 2019, 7, 90574–90584.

- Li, Z.; Cheng, Z.; Zhang, Y.; Zhang, X. Robust image steganography framework based on generative adversarial network. J. Electron. Imaging 2021, 30, 023006.

- Zhu, J.; Kaplan, R.; Johnson, J.; Fei-Fei, L. HiDDeN: Hiding data with deep networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 657–672.

- Lu, W.; Zhang, J.; Zhao, X.; Zhang, W.; Huang, J. Secure Robust JPEG Steganography Based on AutoEncoder with Adaptive BCH Encoding. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 2909–2922.

- Wang, Z.; Zhang, X.; Zhang, X.; Zhang, H. Deep Image Steganography Using Transformer and Recursive Permutation. Entropy 2022, 24, 878.

- Fan, H.; Jin, C.; Li, M. AGASI: A Generative Adversarial Network-Based Approach to Strengthening Adversarial Image Steganography. Entropy 2025, 27, 282.

- Shang, F.; Lan, Y.; Yang, J.; Li, E.; Kang, X. Robust data hiding for JPEG images with invertible neural network. Neural Netw. 2023, 163, 219–232.

- Peng, Y.; Wang, Y.; Hu, D.; Chen, K.; Rong, X.; Zhang, W. LDStega: Practical and robust generative image steganography based on latent diffusion models. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 3001–3009.

- Duan, X.; Li, B.; Yin, Z.; Zhang, X.; Luo, B. Robust Image Steganography Against Lossy JPEG Compression Based on Embedding Domain Selection and Adaptive Error Correction. Expert Syst. Appl. 2023, 229, 120416.

- Pevný, T.; Filler, T.; Bas, P. Using high-dimensional image models to perform highly undetectable steganography. In Information Hiding (IH); Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6387, pp. 161–177.

- Holub, V.; Fridrich, J. Designing steganographic distortion using directional filters. In Proceedings of the IEEE International Workshop on Information Forensics and Security (WIFS), Costa Adeje, Spain, 2–5 December 2012; pp. 234–239.

- Zhu, H.; Zhang, T.; Li, B. Hiding data in colors: Secure and lossless image steganography via conditional invertible neural networks. arXiv 2022, arXiv:2201.07444.

- Liu, Y.; Wang, X.; Zhang, J. Research on image steganography based on a conditional invertible neural network. Signal Image Video Process. 2025, 19, 262.

- Shazeer, N.; Mirhoseini, A.; Maziarz, K.; Davis, A.; Le, Q.V.; Hinton, G.; Dean, J. Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. In Proceedings of the International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

- Zhou, Y.; Lei, T.; Liu, H.; Du, N.; Huang, Y.; Zhao, V.; Dai, A.M.; Chen, Z.; Le, Q.V.; Laudon, J. Mixture-of-experts with expert choice routing. Adv. Neural Inf. Process. Syst. 2022, 35, 7103–7114.

- Li, M.; Zhan, J.; Ge, Y. Image progressive steganography based on multi-frequency fusion deep network with dynamic sensing. Expert Syst. Appl. 2025, 264, 125829.

- Xu, Y.; Mou, C.; Hu, Y.; Xie, J.; Zhang, J. Robust invertible image steganography. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 7875–7884.

- Jing, J.; Deng, X.; Xu, M.; Wang, J.; Guan, Z. HiNet: Deep image hiding by invertible network. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.

- Chen, Z.; Liu, T.; Huang, J.-J.; Zhao, W.; Bi, X.; Wang, M. Invertible mosaic image hiding network for very large capacity image steganography. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 4520–4524.

- Bui, T.-V.; Agarwal, S.; Yu, N.; Collomosse, J. RoSteALS: Robust steganography using autoencoder latent space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 18–22 June 2023.

- Zhang, C.; Benz, P.; Karjauv, A.; Sun, G.; Kweon, I.S. UDH: Universal deep hiding for steganography, watermarking, and light field messaging. Adv. Neural Inf. Process. Syst. (NeurIPS) 2020, 33, 10223–10234.

Figure 1.

Overall System Architecture.

Figure 2.

Dual-Branch Mixture-of-Experts Classifier (DB-MoE).

Figure 3.

The relationship between the loss function, SSIM, and MSE loss.

Figure 4.

Performance comparison across different image types: PSNR (left), SSIM (right), and detection rate (center). The model performs well on scenery, facial, and pet images, with consistently high PSNR and SSIM values. The detection rate is close to that of random guessing, which indicates high security for the hidden information.

Figure 5.

Performance comparison of PSNR and SSIM for cover reconstruction, stego image, and secret reconstruction across different image categories: scenery, facial, and pet images. The statistical summary provides the mean PSNR, SSIM, and their respective standard deviations.

Figure 6.

Violin plots of SSIM for InvMIHNet across categories (scenery, facial, pet), showing cover recovery, stego quality, and secret recovery. The y-axis represents the SSIM, with width indicating sample density, and orange labels denote mean values.

Figure 7.

Reading guide: Two-panel line plots of PSNR (y-axis, dB; higher is better) versus compression ratio/embedding rate (x-axis). Left: cover-image PSNR; Right: secret-image PSNR. Each curve corresponds to a secret category (Scenery, Facial, Pet; see legend), with numeric labels indicating the mean PSNR at each rate. Note the opposite trends—cover PSNR generally decreases as the rate increases, while secret PSNR increases—illustrating the capacity–imperceptibility trade-off.

Figure 8.

Reading guide: Box plots of bit accuracy (y-axis, 0–1; higher is better) for each noise type (x-axis). For every distortion, two boxes are shown—blue: proposed method; orange: StegaStamp (see legend). Each box spans the interquartile range (25–75%), and the central line is the median; whiskers extend to 1.5 × IQR, and dots denote outliers. The gray dashed line at 0.5 indicates random-chance accuracy. Compare median height and box position (closer to 1.0 is better) and the box width (narrower implies more stable performance) across noise types to assess robustness.

Table 1.

Comparison between our routing mechanism and representative adaptive/conditional approaches. “Secret-adaptive (semantic)” indicates whether the hiding pipeline changes with the semantic/statistical type of the secret image (beyond treating the payload as raw bits); “Cover-adaptive” indicates content-adaptive embedding on the cover; “Multi-branch/multi-expert” denotes the use of multiple specialized branches or experts; “Stego-aware routing” denotes the use of stego features/images in the routing decision; and “Channel-corruption curriculum” denotes explicit optimization under diverse corruptions and channel perturbations. The symbol ± indicates partial or indirect support (e.g., limited conditioning or a restricted set of channel models).

Table 1.