1. Introduction

Machine learning (ML) comprises a set of techniques for analyzing large volumes of data and is now a key tool in diverse fields, including statistics, condensed matter, high energy physics, astrophysics, cosmology, and quantum computing. ML encompasses three main approaches: supervised learning (using labeled data to learn mappings and make predictions), unsupervised learning (discovering patterns without labels, such as clustering and dimensionality reduction), and reinforcement learning (where an agent learns optimal policies through rewards and penalties).

In this work, we apply supervised and unsupervised deep learning methods to investigate disorder-induced phase transitions in the Biswas–Chatterjee–Sen (BChS) model. In this model, opinions are continuous, taking values in $[-1, 1]$, and pairwise interactions can be cooperative (+) or antagonistic (−). The fraction $q$ of antagonistic interactions controls the disorder. On fully connected networks, the model exhibits a continuous phase transition with Ising mean-field exponents [1].

Various geometries have been explored in the literature, motivating our analysis. On regular lattices, such as the square and cubic lattices, the continuous version exhibits a second-order transition and belongs to the Ising universality class in the corresponding dimension [2]. On Solomon networks (two coupled networks), both the discrete and continuous versions show a continuous transition, with exponents that depend on dimensionality and may differ from those of the Ising model [3,4,5].

On Barabási–Albert networks, the discrete version exhibits a second-order transition whose universality class differs from that found on other topologies [6]. On random and complex graphs, extensions with memory and bias confirm the occurrence of transitions and discuss changes in universality class [7]. Modular structures with two groups reveal, in the mean-field regime, a stable antisymmetric ordered state in addition to symmetric ordered and disordered states [8].

The aim of this work is to employ deep learning methods to investigate the continuous phase transition in the BChS model, demonstrating the applicability of these techniques to various network geometries. We generate data using kinetic Monte Carlo dynamics and analyze the resulting configurations with dense neural network classifiers, principal component analysis (PCA), and variational autoencoders (VAE) to accurately identify the critical point and characterize the critical behavior, even in the presence of disorder.

In the following sections, we apply machine learning techniques to study the continuous phase transition of the BChS model on both square and triangular lattices. We begin by presenting our results using supervised learning with dense neural networks, followed by unsupervised learning with PCA and VAEs.

3. Supervised Learning

Neural networks have been widely applied to study second-order phase transitions in models such as the Ising model [10,11,12], directed percolation [13], the pair contact process with diffusion [14], and quantum phase transitions [15]. In this work, we extend these methods to the BChS model on square and triangular lattices. Dense neural networks are trained to classify configurations as ferromagnetic ($q < q_c$) or paramagnetic ($q > q_c$), where $q_c$ is the critical noise. Training is performed on square lattice data, and inference is carried out on stationary configurations from both square and triangular lattices, allowing us to assess the network's ability to identify the critical point in a nonequilibrium system with continuous states.

To account for the $Z_2$ symmetry, each configuration generated during simulation is paired with its inverted counterpart. The resulting training dataset comprises stationary samples for each of 200 noise values on the square lattice, for which the critical noise $q_c$ of the BChS model is reported in [2]. A fraction of the total dataset is reserved for validation.

During the Monte Carlo simulations, an initial set of steps is discarded so that the dynamics reach the stationary regime, and further steps are skipped between stored configurations to reduce correlations. One Monte Carlo step corresponds to updating all spins. The lattice sizes used include $L = 20$, 24, 32, and 40. Configurations sampled at $q < q_c$ are labeled as ferromagnetic, while those at $q > q_c$ are labeled as paramagnetic.
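The data preparation described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `build_labeled_dataset`, the label convention (1 for ferromagnetic, 0 for paramagnetic), the toy lattice size, and the placeholder threshold `q_c=0.2` are all assumptions introduced here for the example.

```python
import numpy as np

def build_labeled_dataset(configs, noises, q_c):
    """Pair each configuration with its inverted copy and label it by phase."""
    configs = np.asarray(configs, dtype=float)
    noises = np.asarray(noises, dtype=float)
    # Z2 symmetry: append the globally inverted counterpart of every sample.
    x = np.concatenate([configs, -configs], axis=0)
    q = np.concatenate([noises, noises], axis=0)
    # Assumed convention: 1 = ferromagnetic (q < q_c), 0 = paramagnetic.
    y = (q < q_c).astype(int)
    return x, q, y

# Toy data: 6 flattened 4x4 configurations with values in [-1, 1].
rng = np.random.default_rng(0)
toy_configs = rng.uniform(-1.0, 1.0, size=(6, 16))
toy_noises = np.array([0.05, 0.05, 0.10, 0.30, 0.35, 0.40])

# q_c = 0.2 is a placeholder threshold, not a value taken from the text.
x, q, y = build_labeled_dataset(toy_configs, toy_noises, q_c=0.2)
```

Because every sample appears together with its inverse, the augmented dataset has exactly zero mean magnetization, so the classifier cannot rely on the sign of the global opinion.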

The neural network architecture is as follows:

Input layer of size $L^2$, with each input representing a continuous spin value in $[-1, 1]$;

First hidden layer with 128 neurons, ReLU activation, regularization, batch normalization, and dropout;

Second hidden layer with 64 neurons, ReLU activation, regularization, batch normalization, and dropout;

Output layer with two neurons and softmax activation.
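The layer structure listed above can be illustrated with a plain NumPy forward pass. This sketch is an assumption-laden stand-in for the Keras model: the weights are random rather than trained, the helper names `init_dense` and `forward` are hypothetical, and regularization, batch normalization, and dropout are omitted because they only matter during training (dropout is inactive at inference, and batch normalization reduces to an affine map).

```python
import numpy as np

def init_dense(rng, n_in, n_out):
    """Random weights and zero biases for one dense layer (illustrative only)."""
    weights = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
    return weights, np.zeros(n_out)

def forward(x, params):
    """ReLU hidden layers followed by a two-neuron softmax output."""
    h = x
    for weights, bias in params[:-1]:
        h = np.maximum(h @ weights + bias, 0.0)  # ReLU activation
    weights, bias = params[-1]
    logits = h @ weights + bias
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(logits)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
L = 20
layer_sizes = [L * L, 128, 64, 2]  # input, two hidden layers, output
params = [init_dense(rng, a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]

batch = rng.uniform(-1.0, 1.0, size=(5, L * L))  # 5 toy configurations
probs = forward(batch, params)
```

Each row of `probs` holds the two softmax scores for one configuration, and the two scores sum to one by construction.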

The first output, $p_{\mathrm{F}}$, represents the score for a pure ferromagnetic state, while the second, $p_{\mathrm{P}} = 1 - p_{\mathrm{F}}$, is the complementary score for a paramagnetic state. Each configuration carries one of two class labels, one for the ferromagnetic phase and one for the paramagnetic phase. The point of maximum confusion, corresponding to the transition threshold, occurs when $p_{\mathrm{F}} = p_{\mathrm{P}} = 1/2$. We chose a dense neural network architecture because it does not rely on spatial structure and is therefore well suited to classification problems on arbitrary geometries. The neural network is implemented and trained using the Keras 3.11.3 and TensorFlow 2.20.0 libraries in Python 3.13.5.
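One simple way to locate the point of maximum confusion is to average the ferromagnetic output at each noise value and interpolate linearly to the noise at which it equals 1/2. The sketch below assumes such an averaged curve is available. The function name `crossing_point` and the synthetic sigmoid curve centered at 0.2 are illustrative choices, not results from the paper.

```python
import numpy as np

def crossing_point(q, p_ferro):
    """Noise value at which the averaged ferromagnetic output crosses 1/2."""
    q = np.asarray(q, dtype=float)
    d = np.asarray(p_ferro, dtype=float) - 0.5
    # Indices where the curve changes sign between consecutive noise values.
    idx = np.where(d[:-1] * d[1:] <= 0.0)[0]
    if idx.size == 0:
        raise ValueError("output never crosses 1/2 in the sampled range")
    i = idx[0]
    if d[i] == d[i + 1]:  # both points sit exactly on 1/2
        return float(q[i])
    # Linear interpolation between the two bracketing points.
    return float(q[i] - d[i] * (q[i + 1] - q[i]) / (d[i + 1] - d[i]))

# Synthetic averaged output: a smooth step centered at a toy value of 0.2.
q_grid = np.linspace(0.0, 0.4, 40)
p_toy = 1.0 / (1.0 + np.exp((q_grid - 0.2) / 0.02))
q_star = crossing_point(q_grid, p_toy)
```

Since the two softmax outputs sum to one, the crossing of the ferromagnetic output with 1/2 is also the crossing of the two output curves.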

The neural network was trained with a batch size of 128, using the Adam optimizer, and the chosen loss function is the sparse categorical cross-entropy, which for two classes reads

$$\mathcal{L}(\theta) = -\frac{1}{n}\sum_{i=1}^{n}\left[y_i \ln \hat{y}_i(\theta) + (1 - y_i)\ln\left(1 - \hat{y}_i(\theta)\right)\right],$$

where $n$ is the size of the dataset, $y_i$ is the true label of the configuration, $\hat{y}_i$ is the label predicted by the neural network, and $\theta$ represents the neural network parameters (weights and biases), which are optimized during training. The categorical cross-entropy loss function measures the dissimilarity between the true and predicted labels, encouraging the neural network to make accurate classifications.
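As a concrete illustration, the sparse categorical cross-entropy averages the negative logarithm of the probability that the network assigns to the true class of each sample. The NumPy function below is a minimal sketch of that definition, not the Keras implementation. The function name, the clipping constant `eps`, and the toy labels and probabilities are assumptions made for the example.

```python
import numpy as np

def sparse_categorical_cross_entropy(labels, probs, eps=1e-12):
    """Mean negative log-probability assigned to the true integer label."""
    labels = np.asarray(labels, dtype=int)
    probs = np.clip(np.asarray(probs, dtype=float), eps, 1.0)
    # Pick, for each sample, the predicted probability of its true class.
    picked = probs[np.arange(labels.size), labels]
    return float(-np.mean(np.log(picked)))

toy_labels = [1, 0, 1]
toy_probs = [[0.1, 0.9],   # confident and correct
             [0.8, 0.2],   # fairly confident and correct
             [0.5, 0.5]]   # maximally confused
loss = sparse_categorical_cross_entropy(toy_labels, toy_probs)

# A fully confused two-class network gives a loss of ln 2 per sample.
confused = sparse_categorical_cross_entropy([0, 1], [[0.5, 0.5], [0.5, 0.5]])
```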

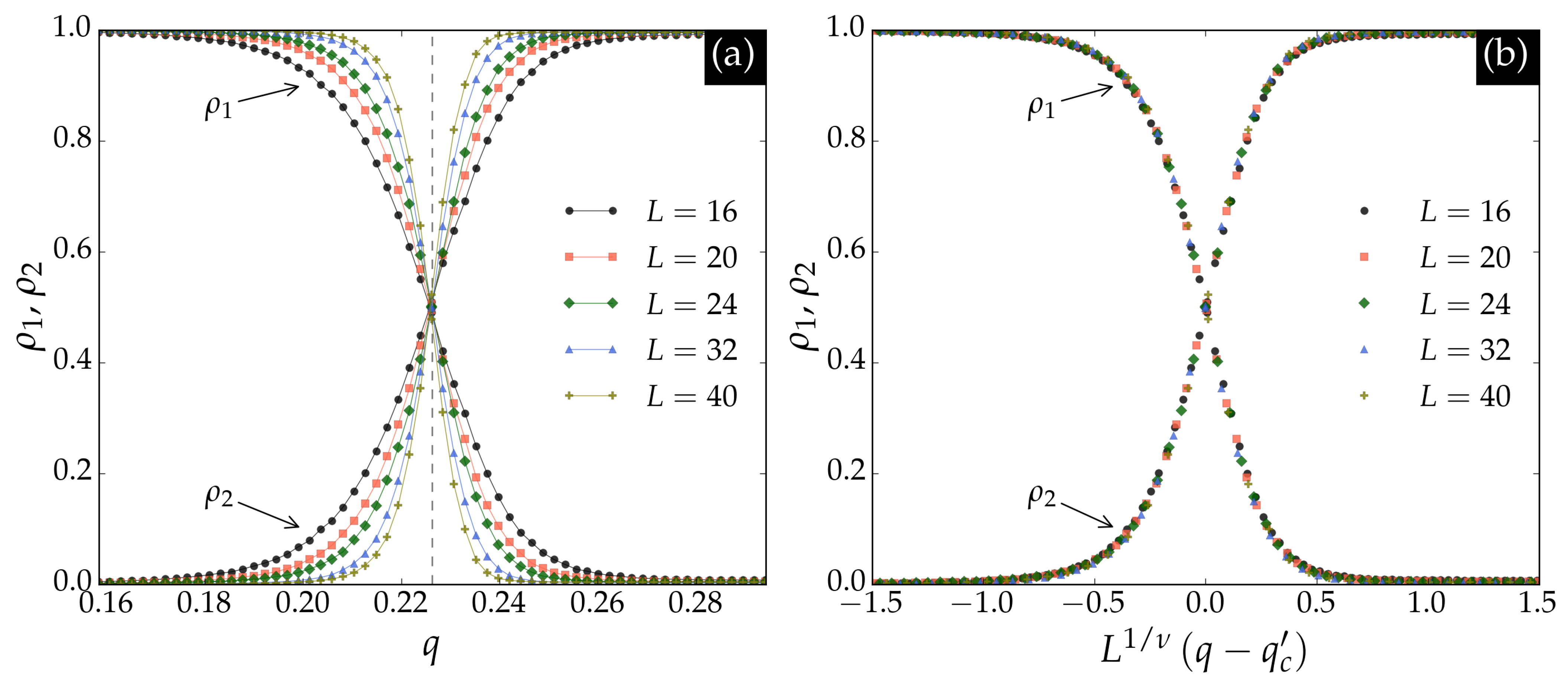

Figure 1 shows the classification results for the BChS model on the square lattice. In panel (a), the crossing point $q^*$ of the two outputs closely matches the critical noise $q_c$, indicated by the dashed vertical line. Panel (b) demonstrates that the outputs $p_{\mathrm{F}}$ and $p_{\mathrm{P}}$ collapse according to the finite-size scaling relation

$$p_{\mathrm{F},\mathrm{P}} = f_{\mathrm{F},\mathrm{P}}\left[(q - q^*)\,L^{1/\nu}\right], \tag{4}$$

where $\nu = 1$ is the correlation length exponent for the Ising universality class in two dimensions, and $q^*$ denotes the crossing abscissas. The scaling functions $f_{\mathrm{F}}$ and $f_{\mathrm{P}}$ are universal up to a rescaling of the argument. These results confirm that the neural network accurately identifies the critical point $q_c$ of the BChS model on the square lattice.
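A data collapse of this kind can be checked numerically by rescaling the noise axis of each curve to the variable $(q - q^*)L^{1/\nu}$ and measuring how far the rescaled curves lie from one another. The sketch below uses synthetic tanh-shaped outputs that collapse exactly for $\nu = 1$. The helper names, the toy crossing point 0.2, and the spread measure are illustrative assumptions rather than the procedure used for the figures.

```python
import numpy as np

def rescale_noise(q, size, q_star, nu=1.0):
    """Scaling variable (q - q*) * L**(1/nu)."""
    return (np.asarray(q, dtype=float) - q_star) * size ** (1.0 / nu)

def collapse_spread(q, curves, q_star, nu=1.0, n_points=50):
    """Largest gap between rescaled curves over their common abscissa range."""
    scaled = {size: rescale_noise(q, size, q_star, nu) for size in curves}
    lo = max(x.min() for x in scaled.values())
    hi = min(x.max() for x in scaled.values())
    grid = np.linspace(lo, hi, n_points)
    # Interpolate every curve onto the same rescaled grid.
    stacked = np.array([np.interp(grid, scaled[size], curves[size])
                        for size in curves])
    return float(np.max(stacked.max(axis=0) - stacked.min(axis=0)))

# Synthetic outputs that depend on q and L only through (q - q*) L.
q = np.linspace(0.1, 0.3, 201)
q_star_toy = 0.2
curves = {size: 0.5 * (1.0 - np.tanh((q - q_star_toy) * size))
          for size in (20, 24, 32, 40)}

spread_good = collapse_spread(q, curves, q_star_toy, nu=1.0)  # correct exponent
spread_bad = collapse_spread(q, curves, q_star_toy, nu=2.0)   # wrong exponent
```

With the correct exponent the spread is limited only by interpolation error, whereas a wrong exponent leaves the curves visibly separated. Minimizing such a spread over $\nu$ is one possible way to estimate the exponent from data.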

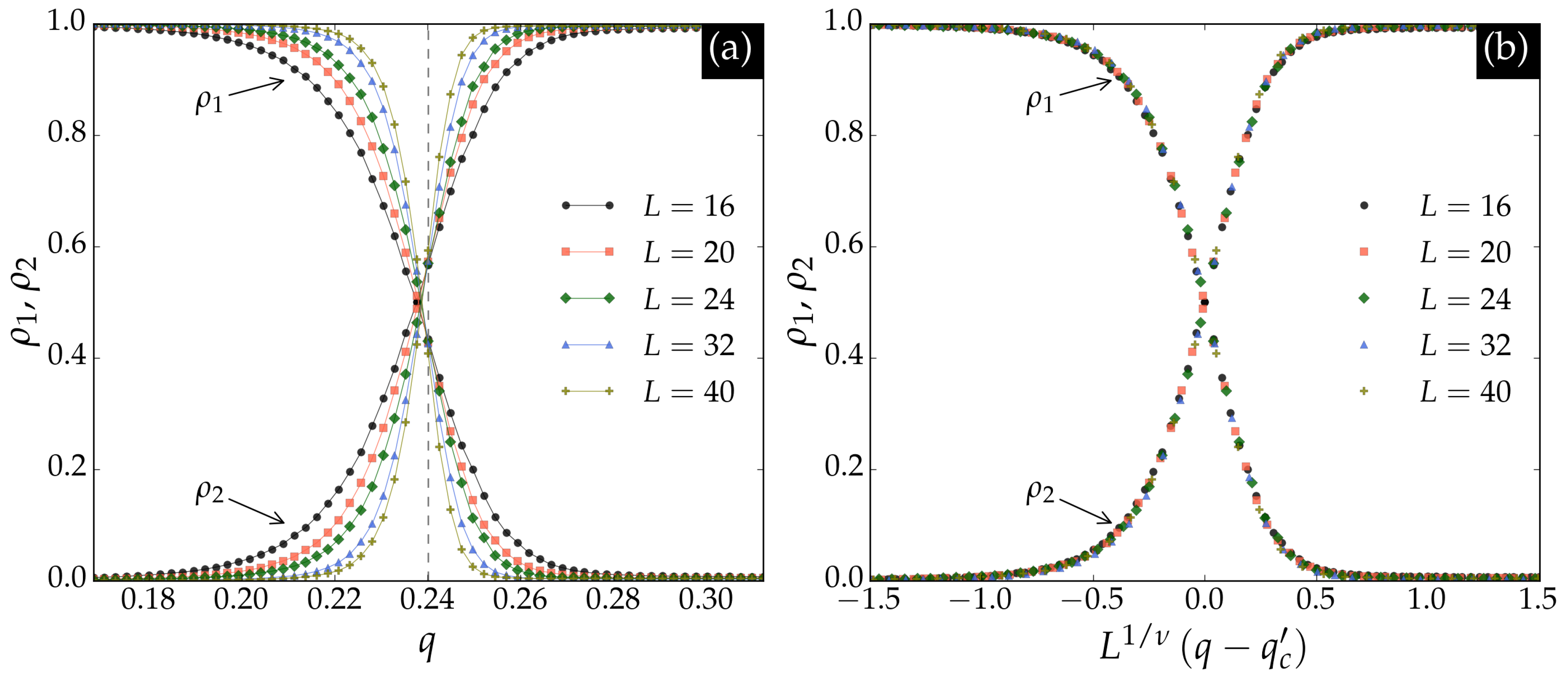

Next, we generated an inference dataset for the triangular lattice using the same parameters as for the square lattice. The neural network trained on square lattice data was then used to classify the triangular lattice configurations. The results are shown in Figure 2. In panel (a), the crossing point is close to the critical noise, indicated by the dashed vertical line. Panel (b) shows that the outputs collapse according to Equation (4).

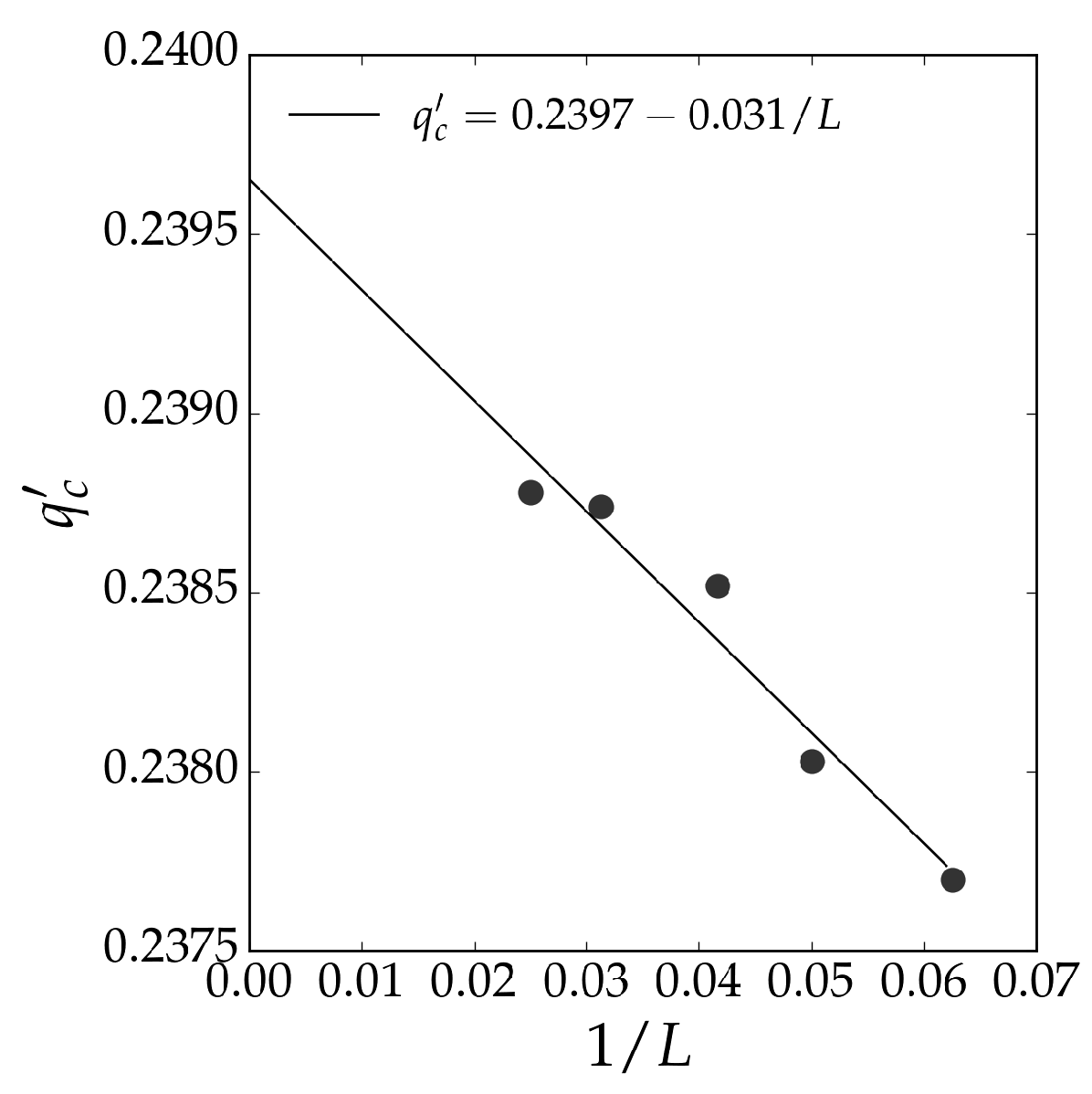

An extrapolation of the crossing points $q^*(L)$ seen in Figure 2 as a function of $L^{-1/\nu}$ is shown in Figure 3. The extrapolation is performed according to the linear regression

$$q^*(L) = q_c + a\,L^{-1/\nu},$$

where $a$ is a constant. The intercept of the regression provides an estimate of the critical noise $q_c$ of the BChS model on the triangular lattice.
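The extrapolation step can be sketched as an ordinary least-squares fit of the crossing points against $L^{-1/\nu}$, with the intercept taken as the infinite-size estimate. The example assumes the regression is linear in $L^{-1/\nu}$ with $\nu = 1$. The crossing values are synthetic (generated from an intercept of 0.25 and a slope of 0.3 chosen for illustration), not the data behind Figure 3.

```python
import numpy as np

def extrapolate_critical_noise(sizes, crossings, nu=1.0):
    """Fit crossings = q_c + a * L**(-1/nu) and return (q_c, a)."""
    x = np.asarray(sizes, dtype=float) ** (-1.0 / nu)
    slope, intercept = np.polyfit(x, np.asarray(crossings, dtype=float), 1)
    return float(intercept), float(slope)

sizes = np.array([20, 24, 32, 40])
# Synthetic crossing points lying exactly on a straight line in 1/L.
toy_crossings = 0.25 + 0.3 / sizes

q_c_est, a_est = extrapolate_critical_noise(sizes, toy_crossings)
```

For real data the fit residuals, or a bootstrap over the crossing points, would be needed to attach an uncertainty to the intercept.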

Results for the BChS model on the triangular lattice are not available in the literature. To compare the classification neural network results with standard methods, we simulated the model on triangular lattices using the kinetic Monte Carlo method. The fundamental observable is the average opinion balance (magnetization) per spin:

$$m = \frac{1}{N}\sum_{i=1}^{N} o_i,$$

where $o_i$ is the opinion at site $i$ and $N = L^2$ is the number of sites. The order parameter is the time average of $m$ in the stationary regime, and its fluctuation defines the susceptibility. The order parameter $M$, susceptibility $\chi$, and Binder cumulant $U$ are defined as [16]

$$M = \langle |m| \rangle, \qquad \chi = N\left(\langle m^2\rangle - \langle |m|\rangle^2\right), \qquad U = 1 - \frac{\langle m^4\rangle}{3\langle m^2\rangle^2},$$

where $\langle \cdots \rangle$ denotes the time average over the Markov chain. All observables depend on the noise parameter $q$, so independent simulations are performed for each value of $q$.
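Given a stationary time series of the magnetization per spin, these observables reduce to a few moment averages. The sketch below assumes the conventional definitions $M = \langle |m| \rangle$, $\chi = N(\langle m^2\rangle - \langle |m|\rangle^2)$, and $U = 1 - \langle m^4\rangle / (3\langle m^2\rangle^2)$. The function name and the two synthetic time series are illustrative.

```python
import numpy as np

def observables(m_series, n_sites):
    """Order parameter, susceptibility, and Binder cumulant from m(t)."""
    m = np.asarray(m_series, dtype=float)
    m_abs = np.mean(np.abs(m))
    m2 = np.mean(m ** 2)
    m4 = np.mean(m ** 4)
    order_parameter = m_abs
    susceptibility = n_sites * (m2 - m_abs ** 2)
    binder = 1.0 - m4 / (3.0 * m2 ** 2)
    return float(order_parameter), float(susceptibility), float(binder)

# Toy ordered phase: magnetization flips between +0.9 and -0.9.
ordered = np.tile([0.9, -0.9], 500)
M_ord, chi_ord, U_ord = observables(ordered, n_sites=100)

# Toy disordered phase: Gaussian fluctuations around zero.
rng = np.random.default_rng(1)
disordered = rng.normal(0.0, 0.05, size=200_000)
M_dis, chi_dis, U_dis = observables(disordered, n_sites=100)
```

The cumulant tends to 2/3 deep in the ordered phase and to 0 for Gaussian fluctuations, which is why curves for different lattice sizes cross near the critical noise.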

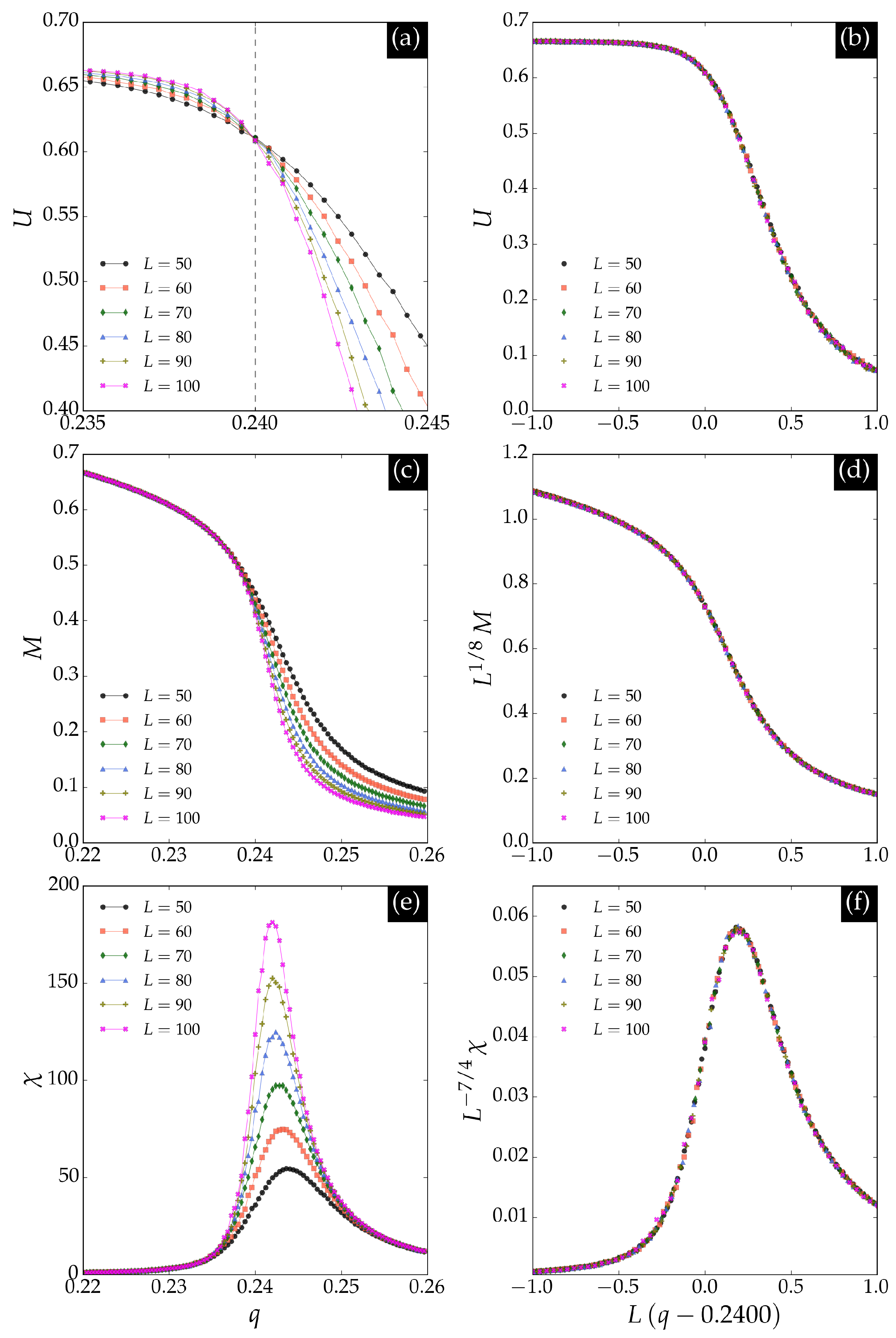

We performed simulations on triangular lattices with sizes up to $L = 100$, including $L = 60$, 70, 80, 90, and 100. For each noise value, we discarded an initial set of Monte Carlo steps to ensure stationarity and then collected samples, skipping 10 steps between samples to reduce correlations. The results are shown in Figure 4. We estimated the critical noise $q_c$ using Binder's cumulant method [17], and the result is close to the extrapolation estimate shown in Figure 3. The critical behavior matches that of the square lattice, as expected, except for the non-universal value of the critical noise. The agreement between the critical noise estimated by the neural network and that obtained via Monte Carlo simulations confirms the effectiveness of supervised learning in identifying phase transitions in nonequilibrium systems with continuous states.

4. Unsupervised Learning

Unsupervised learning methods have also been applied to study phase transitions in the Ising model [18,19,20]. Here, we extend both supervised and unsupervised learning approaches to the BChS model on square and triangular lattices. We first employ PCA, a classical unsupervised technique, followed by VAEs, a deep learning method.

PCA identifies the directions (principal components) along which the variance in the data is maximized. The first principal component captures the largest variance, the second captures the next largest, and so on. These components correspond to the eigenvectors of the covariance matrix, with their associated eigenvalues indicating the amount of variance explained. PCA is commonly used for dimensionality reduction, visualization, and feature extraction.
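The procedure can be sketched directly from this description: center the data, diagonalize the covariance matrix, sort the eigenvectors by eigenvalue, and project. The example below is a minimal NumPy version applied to synthetic ordered configurations on a $4 \times 4$ lattice. The function name `pca`, the toy magnetization of $\pm 0.8$, and the noise amplitude are assumptions for illustration.

```python
import numpy as np

def pca(data, n_components=2):
    """Leading eigenvalues, eigenvectors, and projections of the data."""
    x = np.asarray(data, dtype=float)
    centered = x - x.mean(axis=0)
    cov = centered.T @ centered / (x.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]
    return eigvals[order], components, centered @ components

# Toy ordered phase: half the samples near +0.8, half near -0.8.
rng = np.random.default_rng(0)
L = 4
signs = np.repeat([1.0, -1.0], 200)
data = 0.8 * signs[:, None] + 0.05 * rng.standard_normal((400, L * L))

eigvals, components, proj = pca(data)
magnetization = data.mean(axis=1)
```

In this toy case the first principal component is close to the uniform vector, so the first projection divided by $L$ tracks the magnetization per spin up to a global sign, and the two ordered states appear as two separate clusters along the first axis.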

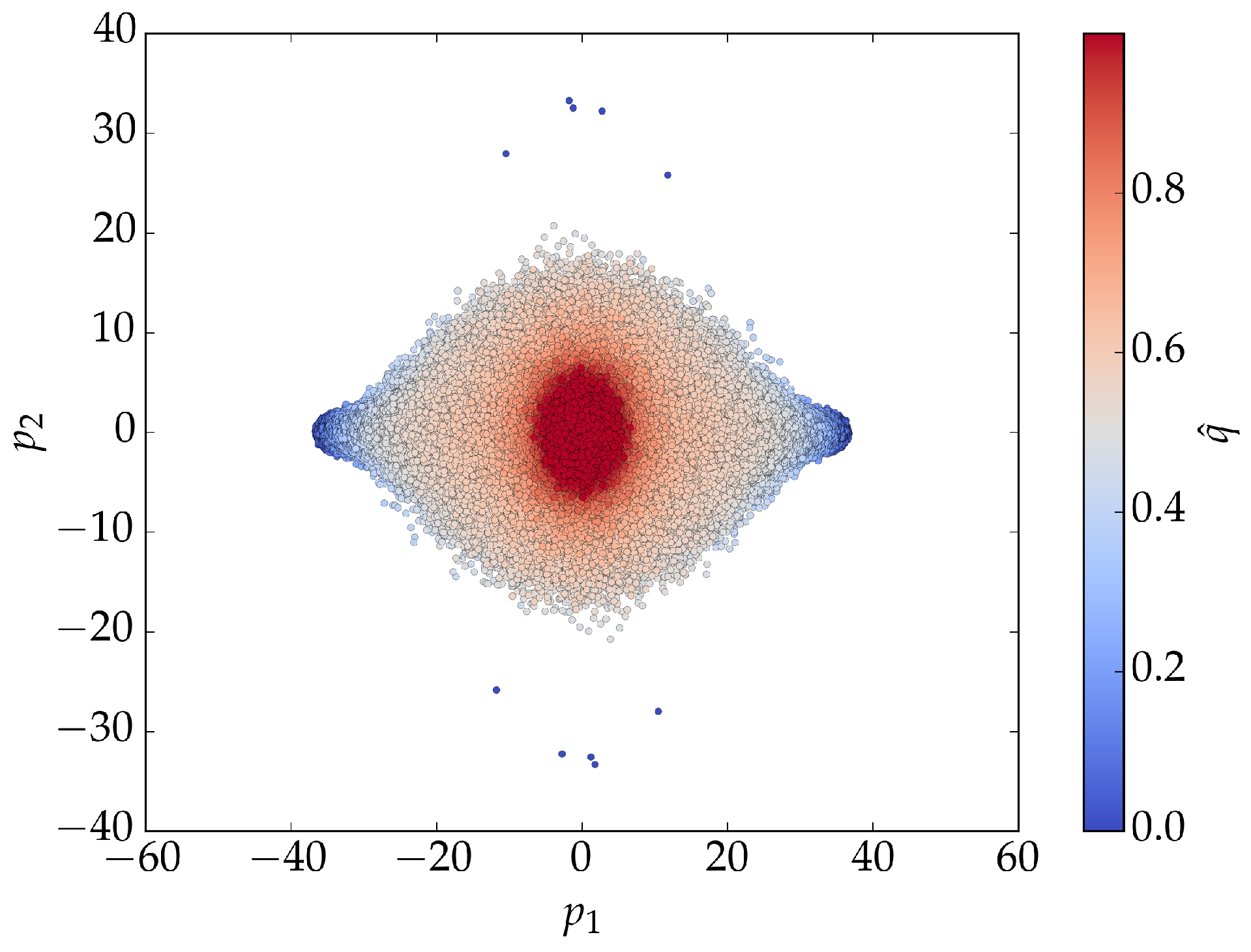

Figure 5 shows the results of PCA applied to the BChS model training data. For low noise values, the projections onto the principal components form two separate clusters. For higher noise values, a single cluster appears. The cluster plot provides a clear visualization of the phase transition in the principal component space.

We further analyzed the principal component data using finite-size scaling techniques. Specifically, we considered the ratio of the two largest eigenvalues, $\lambda_1$ and $\lambda_2$, of the covariance matrix, as well as the averages of the absolute values of the first and second principal components, denoted $\langle |p_1| \rangle$ and $\langle |p_2| \rangle$, respectively. Each of these observables obeys a finite-size scaling relation in the variable $(q - q_c)L^{1/\nu}$, with its own universal scaling function.
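These observables can be computed for the configurations sampled at one noise value as in the following sketch, which uses a singular value decomposition of the centered data. The choice of $\lambda_2/\lambda_1$ as the ratio, the absence of any size-dependent normalization of the projections, and the two synthetic datasets are assumptions made for this example.

```python
import numpy as np

def pca_observables(data):
    """Eigenvalue ratio and mean absolute first and second projections."""
    x = np.asarray(data, dtype=float)
    centered = x - x.mean(axis=0)
    # Singular values of the centered data give the covariance eigenvalues.
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    eigvals = s ** 2 / (x.shape[0] - 1)
    proj = u[:, :2] * s[:2]  # projections onto the two leading components
    ratio = eigvals[1] / eigvals[0]
    return float(ratio), float(np.mean(np.abs(proj[:, 0]))), float(np.mean(np.abs(proj[:, 1])))

rng = np.random.default_rng(0)

# Toy ordered phase: one dominant direction of variance.
signs = np.repeat([1.0, -1.0], 200)
ordered = 0.8 * signs[:, None] + 0.05 * rng.standard_normal((400, 16))
ratio_ord, p1_ord, p2_ord = pca_observables(ordered)

# Toy disordered phase: independent uniform values, no preferred direction.
disordered = rng.uniform(-1.0, 1.0, size=(2000, 16))
ratio_dis, p1_dis, p2_dis = pca_observables(disordered)
```

The ratio is close to zero when a single direction dominates the variance and approaches one when no direction is preferred, so it interpolates between the two phases as the noise increases.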

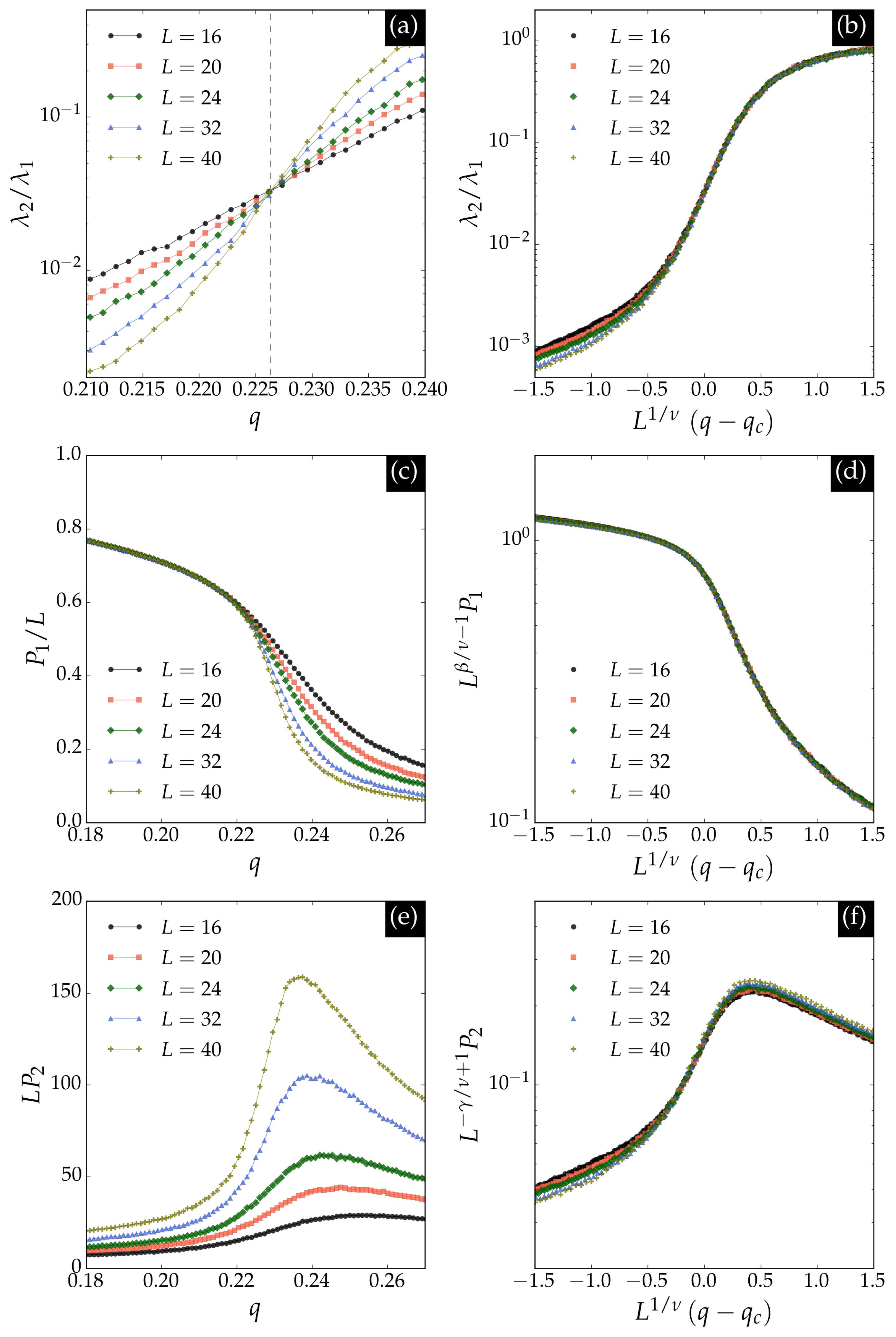

Figure 6 summarizes the results for these PCA observables. Panel (a) shows that the eigenvalue ratio is universal at the critical noise $q_c$ for the square lattice. Panel (b) demonstrates the scaling collapse, allowing estimation of the critical exponent $\nu$. Panel (c) presents the average of the first principal component as a function of noise, which coincides with the average magnetization per spin and scales as $L^{-\beta/\nu}$ at the critical point, where $\beta$ is the order parameter exponent, as confirmed by the collapse in panel (d). Panel (e) displays the average of the second principal component, whose maximum grows with the lattice size $L$ at the critical point, as shown in panel (f).

We also investigated the continuous phase transition using VAEs, which are generative models combining autoencoder architectures with variational inference. A VAE consists of an encoder that maps input data to a latent space and a decoder that reconstructs the input from the latent representation. The encoder learns a probabilistic mapping, enabling the generation of new samples by sampling from the latent space.

The encoder architecture is as follows:

Input layer of size $L^2$, with each input corresponding to a continuous spin variable in $[-1, 1]$;

First hidden layer with 625 neurons, ReLU activation, regularization, batch normalization, and dropout;

Second hidden layer with 256 neurons, ReLU activation, regularization, batch normalization, and dropout;

Third hidden layer with 64 neurons, ReLU activation, regularization, batch normalization, and dropout;

Output layer with two neurons (linear activation): one outputs the mean and the other outputs the logarithm of the variance of the latent variable $Z$.

The decoder mirrors the encoder structure and receives the latent encoding Z as input. Additionally, it includes an extra input neuron for the normalized noise of the configuration, making the neural network a conditional VAE.

The VAE was trained with a batch size of 128, using the RMSprop optimizer. The loss function is the sum of the mean squared error and the Kullback–Leibler loss,

$$\mathcal{L}(\theta) = \mathcal{L}_{\mathrm{MSE}}(\theta) + \mathcal{L}_{\mathrm{KL}}(\theta),$$

where $\mathcal{L}_{\mathrm{MSE}}$ is the mean squared error between the input and reconstructed configurations,

$$\mathcal{L}_{\mathrm{MSE}}(\theta) = \frac{1}{n}\sum_{i=1}^{n}\left\lVert x_i - \hat{x}_i(\theta)\right\rVert^2,$$

with $n$ the dataset size, $x_i$ the input configuration, $\hat{x}_i$ the reconstructed configuration, and $\theta$ the network parameters. The Kullback–Leibler loss [21] is

$$\mathcal{L}_{\mathrm{KL}} = -\frac{1}{2}\sum_{j=1}^{d}\left(1 + \ln\sigma_j^2 - \mu_j^2 - \sigma_j^2\right),$$

where $d$ is the dimension of the latent space, and $\mu_j$, $\sigma_j$ are the mean and standard deviation of the latent variable. The Kullback–Leibler loss regularizes the latent space by encouraging the learned distribution to approximate a standard normal distribution, preventing overfitting and enabling sampling of new artificial configurations.

The latent space consists of a single statistical variable $Z$, sampled from a normal distribution with mean and variance provided by the encoder. This minimal latent space encourages the encoder to capture only the most relevant features of the data and prevents trivial reproduction of the input configurations. The VAE was implemented and trained using the Keras and TensorFlow libraries in Python.
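The sampling of the latent variable and the Kullback–Leibler term can be sketched in a few lines of NumPy. The example assumes the usual reparameterization $Z = \mu + \sigma\,\epsilon$ with $\epsilon$ drawn from a standard normal distribution, and the closed-form divergence between a Gaussian and the standard normal. The function names and toy encoder outputs are illustrative, and averaging the divergence over the batch is a choice made here.

```python
import numpy as np

def sample_latent(mu, log_var, rng):
    """Reparameterized draw Z = mu + sigma * eps with eps ~ N(0, 1)."""
    mu = np.asarray(mu, dtype=float)
    log_var = np.asarray(log_var, dtype=float)
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_loss(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), averaged over the batch."""
    mu = np.asarray(mu, dtype=float)
    log_var = np.asarray(log_var, dtype=float)
    per_sample = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)
    return float(np.mean(per_sample))

rng = np.random.default_rng(0)

# Toy encoder outputs for a one-dimensional latent space (d = 1).
mu_toy = np.full((100_000, 1), 2.0)
log_var_toy = np.full((100_000, 1), np.log(0.25))  # sigma = 0.5
z = sample_latent(mu_toy, log_var_toy, rng)

kl_zero = kl_loss(np.zeros((4, 1)), np.zeros((4, 1)))  # already standard normal
kl_shifted = kl_loss(np.ones((4, 1)), np.zeros((4, 1)))  # mean shifted by 1
```

The divergence vanishes only when the encoder outputs match the standard normal, and a unit shift of the mean at unit variance costs exactly 1/2.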

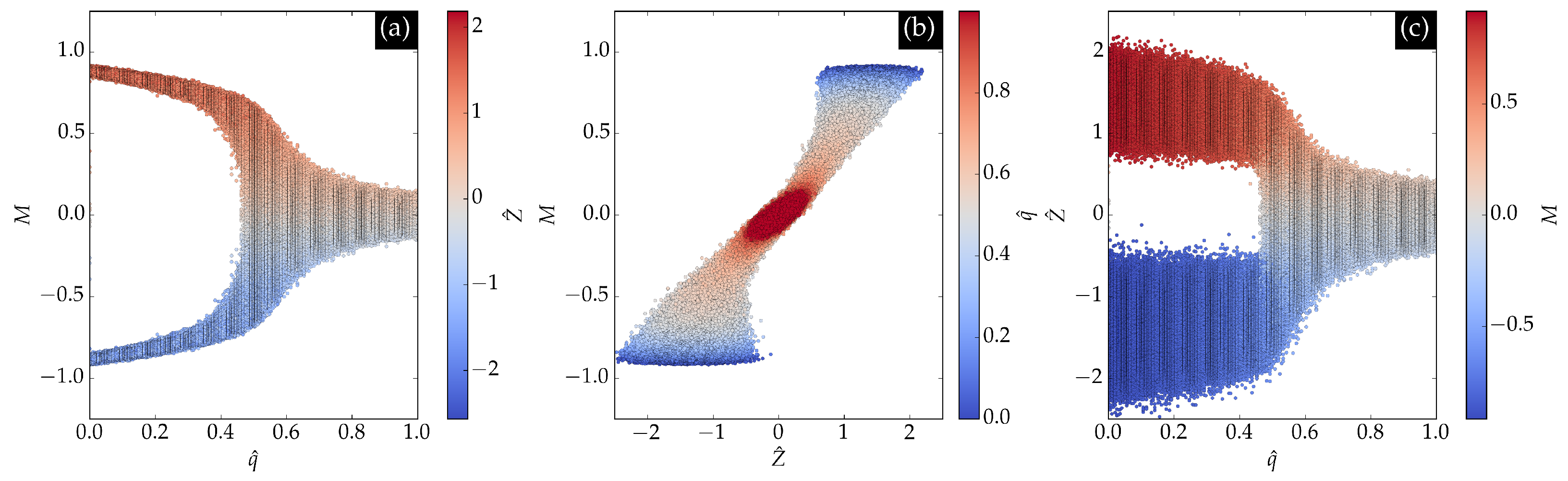

Figure 7 shows the latent encoding $Z$ of the input data as a function of magnetization and normalized noise. In panel (a), a clear separation between positive and negative magnetizations is observed according to the sign of $Z$, reflecting the $Z_2$ symmetry. Panel (b) demonstrates that the relationship learned by the neural network between magnetization $m$ and latent encoding $Z$ is approximately linear. At low noise values, two separate clusters appear, while at higher noise values, a single cluster emerges, indicating the phase transition. Panel (c) further confirms that the phase transition is evident from the latent encoding.

We define a correlation function $C$ between the real data configurations and those reconstructed by the VAE. Its definition combines an average over the dataset with the average magnetization of the real data and the average magnetization of the reconstructed data. The correlation function is universal at the critical point, enabling estimation of the transition threshold in the same way as the Binder cumulant in Monte Carlo simulations. One can therefore expect the scaling dependence

$$C = f_C\left[(q - q_c)\,L^{1/\nu}\right],$$

which allows estimation of the correlation length exponent $\nu$.

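We also calculate the binary cross-entropy loss function,

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{n}\sum_{i=1}^{n}\left[x_i \ln \hat{x}_i + (1 - x_i)\ln\left(1 - \hat{x}_i\right)\right],$$

by renormalizing the input configurations to the interval $[0, 1]$, where $x_i$ and $\hat{x}_i$ denote the rescaled input and reconstructed configurations and $n$ is the dataset size. The loss functions $\mathcal{L}_{\mathrm{MSE}}$ and $\mathcal{L}_{\mathrm{BCE}}$ between the input and reconstructed output configurations serve as indicators of the phase transition. In the paramagnetic regime ($q > q_c$), the input and reconstructed outputs behave as two effectively random configurations, so both losses approach the limiting values expected for random uniform data. Consequently, the differences between these limiting values and the respective losses act as order parameters and obey finite-size scaling relations in which the loss functions scale with system size, consistent with the universality class of the two-dimensional Ising model.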
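The two reconstruction losses can be evaluated as in the sketch below, which maps values from $[-1, 1]$ to $[0, 1]$ before computing the binary cross-entropy. The per-site averaging, the clipping constant `eps`, and the function name are assumptions made for the example. For two independent uniform configurations this particular normalization gives limits of 2/3 for the mean squared error and 1 for the binary cross-entropy, and whether the figures use the same normalization is not established here.

```python
import numpy as np

def reconstruction_losses(x, x_hat, eps=1e-7):
    """Per-site mean squared error and binary cross-entropy."""
    x = np.asarray(x, dtype=float)
    x_hat = np.asarray(x_hat, dtype=float)
    mse = np.mean((x - x_hat) ** 2)
    # Map values from [-1, 1] to [0, 1] so that the cross-entropy is defined.
    target = 0.5 * (x + 1.0)
    pred = np.clip(0.5 * (x_hat + 1.0), eps, 1.0 - eps)
    bce = -np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred))
    return float(mse), float(bce)

rng = np.random.default_rng(0)

# Toy disordered limit: input and reconstruction are independent and uniform.
x_rand = rng.uniform(-1.0, 1.0, size=(2000, 100))
x_hat_rand = rng.uniform(-1.0, 1.0, size=(2000, 100))
mse_rand, bce_rand = reconstruction_losses(x_rand, x_hat_rand)

# A perfect reconstruction has zero mean squared error.
mse_perfect, bce_perfect = reconstruction_losses(x_rand, x_rand)
```

Subtracting each loss from its disordered-phase limit then yields a quantity that is positive in the ordered phase and vanishes once the reconstructions carry no information about the inputs.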

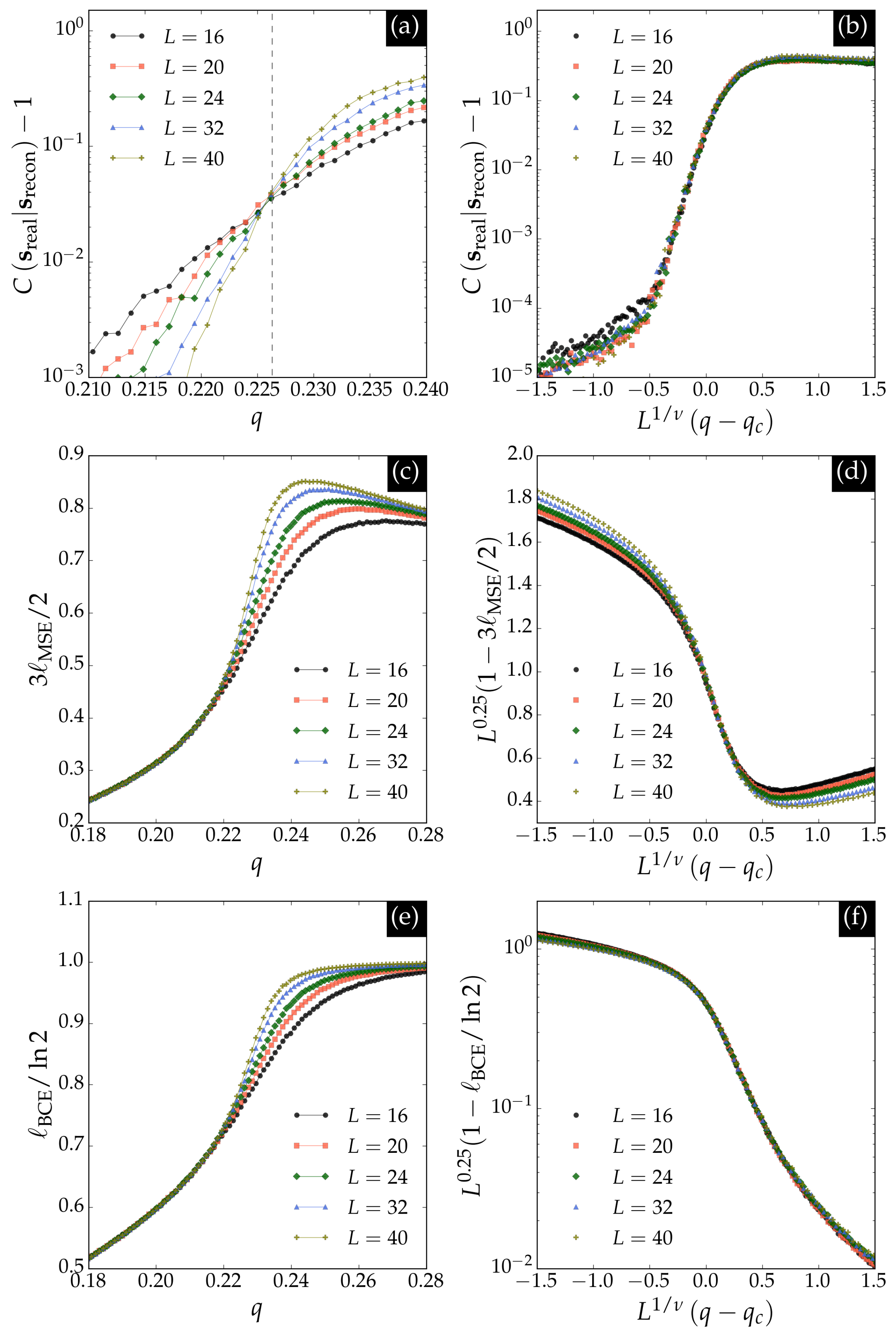

Figure 8 presents the VAE observables for the BChS model. Panel (a) shows the correlation function defined in Equation (12), which is universal at the critical noise $q_c$ for the square lattice. The scaling collapse in panel (b) confirms the finite-size scaling relation for the correlation function with the Ising critical exponent $\nu = 1$. Panels (c) and (e) display the two loss-based order parameters, both of which vanish at the transition. The scaling collapses in panels (d) and (f) confirm the expected scaling relations for these quantities with Ising exponents.