1. Introduction

Organizations generate terabytes of documents daily across emails, reports, policies, and multimedia, requiring systematic organization [1] to enable knowledge discovery and decision making. This proliferation demands systems that efficiently manage documents while preserving contextual relationships that enable actionable insights within changing workflows.

Traditional document management approaches have basic limitations [2,3] that become more severe at scale. Static classification schemes fail to adapt as priorities shift and responsibilities change. Content-based similarity measures, despite advances in transformer architectures [4,5], miss crucial organizational context. Hierarchical clustering methods require expensive recomputation [6,7] when collections change, making them unsuitable for dynamic environments.

Documents derive value from complex relationship webs, including temporal dependencies, priority hierarchies, approval workflows, and cross-domain connections. A project proposal gains significance not just from content but from its relationships to budgets, regulations, timelines, and risk assessments. Current document management systems do not adequately capture these dynamic contextual relationships at scale.

Existing approaches typically focus on one-dimensional similarity or static categories, leading to significant inefficiencies. While the need for multi-dimensional context modeling is recognized, current solutions often rely on costly manual annotation, external knowledge bases, or rigid domain-specific ontologies. Automatically extracting and leveraging contextual information at scale remains an open problem.

This paper presents FLACON (Flag-Aware Context-sensitive Clustering), a multi-dimensional approach that integrates semantic, structural, temporal, and categorical context within a unified mathematical framework. The approach uses a six-dimensional flag system to capture organizational metadata: document type, organizational domain, priority level, workflow status, relationship mapping, and temporal relevance. The FLACON methodology consists of four algorithmic components: (1) a six-dimensional flag extraction algorithm; (2) a composite distance function integrating content, contextual, and temporal similarities; (3) an adaptive hierarchical clustering algorithm; and (4) an incremental update mechanism for dynamic adaptation.

Evaluation on nine dataset variations (six public benchmarks and three enterprise collections) shows a Silhouette Score of 0.311 versus 0.040 for traditional methods (a 7.8-fold gain) and 89% of GPT-4’s clustering quality at 7× the processing speed. Incremental updates are restricted to the affected documents, and the system behaves deterministically, which suits compliance requirements.

2. Related Work

Document clustering has evolved from keyword-based approaches [8] to semantic understanding using neural architectures. While BERT-based models and sentence transformers [4,5] capture deep semantic relationships, they face computational efficiency challenges in enterprise deployments. Hierarchical clustering methods [9,10] provide interpretable organization structures, and incremental techniques [11] handle streaming documents, but multilingual approaches [12] still struggle with computational efficiency at scale. The gap between algorithmic sophistication and practical deployment constraints limits adoption in enterprise environments.

Dynamic clustering systems adapt to evolving data characteristics and organizational priorities [13,14]. Adaptive hierarchical approaches [15,16] maintain quality as data changes, while temporal clustering [17,18] tracks topical evolution. However, existing methods typically focus on single-dimensional adaptations (temporal changes, user feedback, or content evolution) rather than the comprehensive multi-dimensional context modeling required for organizational scenarios.

Context-aware computing [19] develops systems that understand situational factors beyond content similarity. Large language models [20,21] offer unprecedented opportunities for context-aware document processing, with GPT-4 demonstrating exceptional capabilities in understanding complex textual relationships and extracting metadata. However, LLM-based approaches face substantial challenges: computational cost, latency requirements, and scalability to millions of documents. Automatic metadata inference remains challenging due to organizational complexity and the need for consistent extraction across diverse document types.

Modern organizations require systems that understand complex workflows, multi-stage approval processes, and temporal dynamics [22]. Manual categorization becomes prohibitively expensive at scale, while automated approaches often lack contextual understanding. Modern platforms provide collaboration features but create information silos that hinder cross-domain knowledge discovery [23]. Most approaches focus on static relationship identification rather than dynamic context adaptation.

Previous work on structural features and temporal patterns has focused on single aspects rather than systematic integration. General-purpose context-aware frameworks lack domain-specific organizational intelligence, while LLM-based methods [20,21] face deployment constraints. FLACON provides complementary advantages through lightweight rule-based flag extraction; it is 7× faster than GPT-4-based clustering (60 s vs. 420 s for 10 K documents) while maintaining deterministic behavior essential for compliance requirements.

Information theory provides fundamental principles through entropy-based similarity measures and mutual information optimization [24,25,26]. Entropy-based approaches minimize within-cluster entropy while maximizing between-cluster divergence [27]. Recent advances explore mutual information clustering [28] and conditional entropy minimization [29], but these methods operate on single-dimensional feature spaces and have not been systematically applied to multi-dimensional organizational contexts.

3. Methodology

The FLACON methodology addresses the fundamental challenge of organizing documents based on multi-dimensional organizational context. Traditional clustering approaches focus on minimizing content-based distance, but enterprise environments require consideration of workflow status, priority hierarchies, and temporal relevance. This problem is formalized as an entropy minimization problem, where the goal is to reduce uncertainty in document flags within each cluster.

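Let $\mathcal{F} = \{F_1, \ldots, F_n\}$ represent flag vectors for $n$ documents, and $\mathcal{C} = \{C_1, \ldots, C_k\}$ represent $k$ cluster assignments. The within-cluster contextual entropy is defined as

$$H(\mathcal{F} \mid \mathcal{C}) = \sum_{i=1}^{k} \frac{|C_i|}{n}\, H(\mathcal{F} \mid C_i) \qquad (1)$$

where:

- $|C_i|$ = number of documents in cluster $C_i$
- $n$ = total number of documents ($n = \sum_{i=1}^{k} |C_i|$)
- $H(\mathcal{F} \mid C_i)$ measures flag diversity within cluster $C_i$
- $p(F^{(j)} = v \mid C_i)$ = empirical probability of flag value $v$ in cluster $C_i$

For cluster $C_i$, the flag entropy is computed as:

$$H(\mathcal{F} \mid C_i) = -\sum_{j=1}^{6} w_j \sum_{v} p\big(F^{(j)} = v \mid C_i\big) \log p\big(F^{(j)} = v \mid C_i\big), \qquad p\big(F^{(j)} = v \mid C_i\big) = \frac{\big|\{d_m \in C_i : F_m^{(j)} = v\}\big|}{|C_i|}$$

where $F_m^{(j)}$ denotes the $j$-th flag dimension of document $d_m$, and $w_j$ represents the weight for the $j$-th dimension (see Notation Table A1 for complete definitions).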

- (i) For categorical flags (e.g., $T$, $D$, $S$), use a Dirichlet-smoothed plug-in estimator:

$$\hat{p}(c \mid C_i) = \frac{n_{c,i} + \alpha}{|C_i| + \alpha M}$$

where $n_{c,i}$ = count of category $c$ in cluster $C_i$, $|C_i|$ = total documents in cluster $C_i$, $M$ = number of categories, and $\alpha > 0$ is the smoothing parameter.

- (ii) For ordinal/continuous flags (e.g., $P$, $\tau$), use discretization or kNN entropy estimation.

- (iii) For set-valued flags (e.g., $R$), use category folding to treat them as categorical, or Jaccard-based discretization.

This study uses this mixed estimator to compute the within-cluster contextual entropy as defined in Equation (1). Minimizing this entropy ensures that documents within the same cluster share similar contextual characteristics. The composite distance function (Equations (2)–(4)) operationalizes this objective by integrating content similarity with flag-based contextual distance and temporal factors. The approach can be viewed through the lens of the information bottleneck principle, balancing compression (cluster simplicity) with preservation of contextual information, though this study implements this through explicit distance-based clustering rather than probabilistic optimization.
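The entropy objective above can be illustrated with a minimal Python sketch restricted to categorical flags. It assumes a smoothed estimate of the form (count + alpha) / (cluster size + alpha × number of categories); the function names, the `alpha` default, and the dictionary layout of documents are illustrative assumptions rather than the authors' implementation.

```python
import math
from collections import Counter


def smoothed_entropy(values, categories, alpha=1.0):
    """Entropy (in nats) of one categorical flag inside one cluster.

    Uses the smoothed estimate p(c) = (n_c + alpha) / (N + alpha * M),
    where M = len(categories). alpha is an assumed smoothing parameter.
    """
    counts = Counter(values)
    denom = len(values) + alpha * len(categories)
    entropy = 0.0
    for c in categories:
        p = (counts.get(c, 0) + alpha) / denom
        if p > 0:  # guards the alpha = 0 case
            entropy -= p * math.log(p)
    return entropy


def contextual_entropy(clusters, categories, weights, alpha=1.0):
    """Size-weighted within-cluster entropy over several categorical flags.

    clusters:   list of clusters, each a list of dicts {flag_name: value}
    categories: dict {flag_name: list of possible values}
    weights:    dict {flag_name: weight w_j}
    """
    n = sum(len(cluster) for cluster in clusters)
    total = 0.0
    for cluster in clusters:
        h_cluster = sum(
            weights[name]
            * smoothed_entropy([doc[name] for doc in cluster], cats, alpha)
            for name, cats in categories.items()
        )
        total += len(cluster) / n * h_cluster
    return total


# Two clusterings of the same six documents, using only a Status flag.
cats = {"status": ["draft", "approved"]}
w = {"status": 1.0}
pure = [[{"status": "draft"}] * 3, [{"status": "approved"}] * 3]
mixed = [
    [{"status": "draft"}, {"status": "approved"}, {"status": "draft"}],
    [{"status": "approved"}, {"status": "draft"}, {"status": "approved"}],
]
h_pure = contextual_entropy(pure, cats, w)
h_mixed = contextual_entropy(mixed, cats, w)
```

In this toy case the clustering that groups documents by status yields the lower entropy, which is the behavior the objective rewards.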

This methodology is designed to overcome the core limitations of existing systems, including their failure to model multi-dimensional context and adapt to dynamic changes. This study introduces a dynamic Context Flag System, which serves as the foundation for the information-theoretic clustering. Instead of treating document attributes as a static vector, this system represents each document with six dynamic flags. This dynamic representation is the key to reducing the uncertainty (entropy) associated with document relationships. The approach uses a six-dimensional flag system to represent document characteristics. Unlike traditional static metadata approaches, the system extracts contextual information from document content and metadata, with periodic updates when changes are detected.

The FLACON methodology consists of four integrated components that work together to enable dynamic content structuring: (1) a six-dimensional flag system for capturing enterprise context, (2) algorithms for extracting these flags from documents, (3) a composite distance function that combines multiple similarity measures, and (4) an adaptive clustering algorithm with incremental update capabilities.

3.1. Dynamic Context Flag System Design

Unlike traditional feature engineering approaches that treat contextual attributes as static vectors, the Dynamic Context Flag System operates as an algorithmic control mechanism that continuously monitors and updates organizational relationships through real-time flag state transitions and dependency tracking. The six-dimensional flag system encompasses components representing different aspects of organizational context.

The Type Flag ($T$) categorizes documents based on their functional role within workflows, including reports, policies, communications, and technical documentation. Type classification uses a hybrid approach combining rule-based patterns with machine learning classifiers that require training on organization-specific collections for optimal performance.

The Domain Flag ($D$) identifies the organizational domain or department associated with each document. Domain assignment considers author information, recipient patterns, and content analysis to determine primary organizational context. This enables cross-domain relationship discovery and department-specific organization schemes that reflect actual organizational structure and communication patterns.

The Priority Flag ($P$) represents document importance within current organizational priorities. To determine relative importance, the priority assignment mechanism analyzes communication patterns, deadline proximity, stakeholder involvement, and resource allocation decisions. Priority levels are continuously updated based on organizational feedback and usage patterns, ensuring that document organization reflects current business priorities rather than historical classifications.

The Status Flag ($S$) tracks document position within organizational workflows. Status categories include active, under review, approved, implemented, and archived states that reflect common organizational processes. Status transitions are automatically detected through workflow analysis and content changes, enabling systems to maintain current workflow understanding without manual intervention.

The Relationship Flag ($R$) captures inter-document dependencies and connections that are critical for organizational understanding. Relationship types include hierarchical dependencies such as parent–child relationships, temporal sequences including predecessor–successor relationships, and semantic associations representing related content. Relationship discovery employs both explicit citations and implicit content connections to build comprehensive relationship networks.

The Temporal Flag ($\tau$) represents time-dependent relevance and access patterns that influence document importance over time. Temporal scoring considers creation time, last modification, access frequency, and relevance decay functions that model how document importance changes over time. This enables automatic prioritization of current information while maintaining access to historical context when needed.

The six-dimensional flag system was designed to capture orthogonal aspects of organizational context that jointly determine document utility. Type and Domain flags represent functional role and organizational ownership, directly affecting access control and routing decisions. Priority and Status flags provide orthogonal axes of importance versus workflow stage. A high-priority document may remain in draft status, while a low-priority document may already be approved. This distinction enables the system to differentiate between deadline-sensitive and approval-focused organizational needs.

Relationship flags capture inter-document dependencies that cannot be inferred from content alone, including hierarchical approval chains and sequential task dependencies. Temporal flags model time-dependent relevance and decay patterns aligned with organizational cycles. Ablation studies confirm that each flag type contributes meaningfully to clustering quality.

Priority flags provide the largest individual improvement (ΔNMI = 0.089), followed by Status (ΔNMI = 0.067), Domain (ΔNMI = 0.056), Type (ΔNMI = 0.051), Relationship (ΔNMI = 0.045), and Temporal flags (ΔNMI = 0.034). The sum of individual contributions is 0.342, but the complete six-flag combination achieves NMI = 0.275 in practice due to normalization and interaction effects. However, the combined system still demonstrates a 15.4% improvement over the best single-flag configuration (Priority-only: NMI = 0.238), confirming synergistic multi-dimensional context modeling. Note: The apparent discrepancy between summed individual gains and the final NMI (0.275) arises because (1) the baseline NMI is not zero (the content-only baseline achieves 0.186), and (2) flag contributions are measured relative to this baseline, not in absolute terms. This demonstrates that multi-dimensional context modeling provides benefits beyond simple feature aggregation.

3.2. Flag Extraction Algorithm

The flag extraction process is formalized in Algorithm 1, which processes each document $d_i$ through multiple specialized extractors operating in parallel to generate comprehensive flag assignments. For each document $d_i$, Algorithm 1 outputs a flag vector $F_i = (T_i, D_i, P_i, S_i, R_i, \tau_i)$. The complete set of flag vectors $\{F_1, \ldots, F_n\}$ for all $n$ documents serves as input to the hierarchical clustering algorithm (Algorithm 2 in Section 3.4), enabling integration of organizational context into the clustering hierarchy. The extraction process begins with content feature extraction using natural language processing preprocessing that includes tokenization, named entity recognition, and semantic embedding generation. Rule-based patterns identify explicit flag indicators, such as document type markers, status keywords, and temporal references through pattern matching approaches.

Type classification employs pre-trained transformer models to generate semantic embeddings that provide rich representations for content analysis, capturing contextual nuances beyond keyword matching. Trained models combine content features with metadata to achieve accurate categorization across organizational document types. Domain identification analyzes author and recipient information along with content features to determine organizational context. Priority assessment employs organizational pattern analysis that considers communication networks, deadline proximity, and resource allocation indicators to determine relative document importance within current organizational priorities.

Status determination examines workflow indicators, including approval markers, review comments, and process stage identifiers to track document position within organizational workflows. Relationship discovery combines content similarity analysis with citation extraction to identify both explicit and implicit document connections. Temporal relevance computation integrates access patterns, modification history, and organizational seasonality to generate time-dependent importance scores that reflect how document relevance changes over time. Real-world validation shows consistent flag generation across diverse document types, with processing efficiency of 0.55 s for 200-document collections and scalable performance up to 1 K documents.

Algorithm 1. FLACON Six-Dimensional Flag Extraction

INPUT: Document d with content, metadata, headers

OUTPUT: Flag vector F // Returns 6-dimensional flag vector for single document

1: // Type Classification (hybrid rule + ML)

2: rule_score ← MATCH_PATTERNS(d.content, type_patterns)

3: ml_score ← SVM_CLASSIFY(TFIDF(d.content), trained_model)

4: Type ← WEIGHTED_COMBINE(rule_score, ml_score, [0.6, 0.4])

5: // Priority Extraction (multi-source fusion)

6: header_priority ← EXTRACT_HEADER(d, ‘X-Priority’) OR 0.5

7: keyword_score ← COUNT_KEYWORDS(d.content, urgency_terms) / threshold

8: network_score ← MIN(|d.recipients|/10.0, 1.0)

9: Priority ← WEIGHTED_AVERAGE([header_priority, keyword_score, network_score], [0.4, 0.4, 0.2])

10: // Status Determination (pattern + temporal inference)

11: Status ← MATCH_WORKFLOW_PATTERNS(d.content, status_patterns)

12: IF Status = NULL THEN

13: age ← CURRENT_DATE − d.last_modified

14: Status ← INFER_FROM_AGE(age) // draft < 7 days, archived > 180 days, else active

15: // Domain Assignment (metadata + content)

16: Domain ← EXTRACT_FROM_EMAIL(d.author) OR NAIVE_BAYES(d.content, domain_model)

17: // Relationship Discovery (citation + similarity)

18: explicit_refs ← EXTRACT_CITATIONS(d.content)

19: semantic_sim ← TOP_K_SIMILAR(d, corpus, k = 10)

20: Relationship ← COMBINE(explicit_refs, semantic_sim)

21: // Temporal Scoring (decay + access + deadline)

22: recency ← EXP(−days_since_creation/30.0) // Exponential decay, time constant = 30 days

23: access_freq ← MIN(d.access_count/100.0, 1.0) // Normalized to [0, 1]

24: deadline ← EXTRACT_DEADLINE_PROXIMITY(d.content) // Days until deadline

25: Temporal ← WEIGHTED_AVERAGE([recency, access_freq, deadline], [0.5, 0.3, 0.2]) // Normalize to [0, 1]

26: RETURN F = (Type, Domain, Priority, Status, Relationship, Temporal) // Flag vector for document d

Computational Complexity:

- Type classification: rule matching scales with the document length |d| (in tokens), and SVM classification scales with the feature dimension f.

- Priority extraction: scales with the size of the recipient set.

- Overall per-document cost: dominated by ML classification.

- For n documents: the per-document cost accumulates over all n documents.

Note: |d| denotes the number of tokens in document d, and f represents the feature dimension for ML classification (TF-IDF features).
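The priority fusion and the age-based status fallback of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the function names, the `keyword_threshold` default of 5, and the clamping of the keyword score to 1.0 are hypothetical choices that the algorithm listing does not specify.

```python
from datetime import date


def priority_score(header_priority, urgency_hits, n_recipients,
                   keyword_threshold=5):
    """Fuse three priority signals with the weights [0.4, 0.4, 0.2].

    header_priority: value in [0, 1] from an explicit priority header, or
                     None when the header is missing (falls back to 0.5).
    urgency_hits:    number of urgency keywords found in the content.
    n_recipients:    size of the recipient set.
    keyword_threshold is an assumed normalizer; the clamp to 1.0 is also
    an assumption added to keep the score in [0, 1].
    """
    header = 0.5 if header_priority is None else header_priority
    keyword = min(urgency_hits / keyword_threshold, 1.0)
    network = min(n_recipients / 10.0, 1.0)
    return 0.4 * header + 0.4 * keyword + 0.2 * network


def infer_status_from_age(last_modified, today):
    """Fallback status when no workflow pattern matched.

    Follows the thresholds in Algorithm 1: draft if modified less than
    7 days ago, archived if more than 180 days ago, otherwise active.
    """
    age_days = (today - last_modified).days
    if age_days < 7:
        return "draft"
    if age_days > 180:
        return "archived"
    return "active"


# A document without a priority header, two urgency keywords, four recipients.
example_priority = priority_score(None, urgency_hits=2, n_recipients=4)
example_status = infer_status_from_age(date(2024, 1, 8), today=date(2024, 1, 10))
```

Using an explicit `None` check rather than a truthiness test keeps a legitimate header value of 0 from being replaced by the 0.5 default.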

The flag extraction methodology acknowledges several practical constraints that influence implementation effectiveness in real-world organizational environments. The Type Flag extraction combines rule-based pattern matching with support vector machine classification to achieve robust document categorization across diverse organizational contexts. Rule-based patterns demonstrate high precision on structured documents containing explicit type indicators, achieving approximately 85% accuracy on formal organizational communications. However, informal documents lacking standardized formatting require machine learning augmentation through TF-IDF feature extraction and SVM classification trained on organization-specific document collections.

Priority Flag extraction encounters significant challenges due to the heterogeneous nature of priority indicators across different communication channels and organizational workflows. Email headers containing explicit priority fields provide reliable priority assessment, but approximately 60% of organizational documents lack such structured metadata. The methodology compensates by analyzing keyword frequencies to detect urgency indicators and analyzing communication networks to assess stakeholder involvement patterns. Empirical validation demonstrates 72% correlation with expert human assessments when explicit priority metadata is unavailable.

Status Flag determination relies on workflow-specific terminology that varies substantially across organizational domains and cultural contexts. The current implementation assumes standardized English-language status indicators common in North American enterprise environments.

Organizations employing domain-specific terminology or non-English workflow descriptions require pattern customization to achieve comparable accuracy levels. Temporal inference mechanisms provide fallback status assignment based on document modification patterns, though these heuristics may not accurately reflect complex organizational approval processes.

Domain Flag assignment combines organizational metadata analysis with content-based classification to determine departmental or functional associations. Email domain mapping provides high-confidence domain assignment when organizational email structures follow consistent departmental patterns. Content-based classification through Naive Bayes models serves as fallback methodology but requires domain-specific training data that may not be readily available in all organizational contexts. Cross-domain documents present particular challenges requiring multi-label classification approaches not fully addressed in the current implementation.

Relationship Flag extraction represents the most computationally intensive component of the flag extraction pipeline due to the necessity of cross-document analysis for relationship discovery. Explicit citation detection through pattern matching provides reliable identification of formal document references, while semantic similarity computation employs cosine similarity measures on TF-IDF representations as a practical approximation of deeper semantic relationships. The current methodology does not capture complex organizational relationships that require domain knowledge or temporal reasoning beyond simple content similarity measures.

Temporal Flag computation employs exponential decay functions with fixed parameters that may require organizational customization based on specific document lifecycle patterns and business cycle characteristics. The 30-day decay parameter (a time constant under EXP(−days/30), which corresponds to a half-life of about 21 days) reflects general organizational document relevance patterns but may not accurately model specialized domains with longer or shorter relevance cycles. Access frequency normalization assumes uniform user behavior patterns that may not hold across diverse organizational roles and responsibilities.
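The decay parameter deserves a short worked example. Algorithm 1 computes recency as EXP(−days/30); under that expression the score halves after 30 · ln 2 days, whereas a literal 30-day half-life would use base 2. The sketch below shows both forms and the weighted temporal score. The function names are illustrative, and `deadline_proximity` is assumed to be already normalized to [0, 1].

```python
import math


def recency(days_since_creation, time_constant=30.0):
    """Recency as written in Algorithm 1: exp(-days / 30)."""
    return math.exp(-days_since_creation / time_constant)


def implied_half_life(time_constant=30.0):
    """Days after which exp(-days / time_constant) drops to 0.5."""
    return time_constant * math.log(2)


def recency_with_half_life(days_since_creation, half_life_days=30.0):
    """Alternative form whose value is exactly 0.5 at the half-life."""
    return 0.5 ** (days_since_creation / half_life_days)


def temporal_score(days_since_creation, access_count, deadline_proximity):
    """Weighted average with weights [0.5, 0.3, 0.2].

    deadline_proximity is assumed to be pre-normalized to [0, 1].
    """
    access_freq = min(access_count / 100.0, 1.0)
    return (0.5 * recency(days_since_creation)
            + 0.3 * access_freq
            + 0.2 * deadline_proximity)


half_life_days = implied_half_life()          # about 20.8 days
score_at_30_exp = recency(30)                 # exp(-1), about 0.368
score_at_30_half = recency_with_half_life(30) # 0.5
```

Organizations that want a specific half-life can either switch to the base-2 form or set the time constant to the desired half-life divided by ln 2.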

3.3. Composite Distance Computation

The effectiveness of hierarchical clustering depends critically on accurate distance computation that integrates multiple information dimensions. The composite distance function combines content similarity, flag-based contextual distance, and temporal factors:

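$$D(d_i, d_j) = \alpha\, D_{\text{content}}(d_i, d_j) + \beta\, D_{\text{flag}}(F_i, F_j) + \gamma\, D_{\text{temporal}}(d_i, d_j) \qquad (2)$$

where $\alpha$, $\beta$, and $\gamma$ are weighting parameters ($\alpha + \beta + \gamma = 1$) that balance content versus context, initialized to (0.4, 0.4, 0.2) based on empirical validation and adapted through organizational feedback.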

The temporal distance component is defined as:

$$D_{\text{temporal}}(d_i, d_j) = |\tau_i - \tau_j|$$

where $\tau_i, \tau_j \in [0, 1]$ are normalized temporal relevance scores computed by Algorithm 1 (lines 22–25), combining recency decay (weight 0.5), access frequency (weight 0.3), and deadline proximity (weight 0.2).

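Content distance employs semantic embeddings to capture deep textual relationships:

$$D_{\text{content}}(d_i, d_j) = 1 - \frac{\mathbf{e}_i \cdot \mathbf{e}_j}{\|\mathbf{e}_i\|\,\|\mathbf{e}_j\|} \qquad (3)$$

where $\mathbf{e}_i$ is the embedding of document $d_i$. This study uses sentence-BERT embeddings [5] fine-tuned on organizational document collections to ensure domain-specific semantic understanding.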
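As a sketch of how the three components combine, the following Python example assumes that content distance is the cosine distance between document embeddings and that the flag distance has already been computed as a number in [0, 1]. The function names and the toy two-dimensional embeddings are illustrative assumptions; only the default weights (0.4, 0.4, 0.2) come from the text.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_u * norm_v)


def composite_distance(emb_i, emb_j, flag_dist, tau_i, tau_j,
                       alpha=0.4, beta=0.4, gamma=0.2):
    """Weighted sum of content, flag, and temporal distances.

    flag_dist is assumed to be a precomputed flag distance in [0, 1];
    tau_i and tau_j are normalized temporal relevance scores.
    """
    content = cosine_distance(emb_i, emb_j)
    temporal = abs(tau_i - tau_j)
    return alpha * content + beta * flag_dist + gamma * temporal


# Toy embeddings: orthogonal vectors give a content distance of 1.
d = composite_distance([1.0, 0.0], [0.0, 1.0],
                       flag_dist=0.325, tau_i=0.9, tau_j=0.7)
```

Cosine distance ranges over [0, 2] in general; when embeddings can have negative similarity, a deployment may want to rescale the content term so that all three components share the [0, 1] range.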

Flag distance computation integrates six contextual dimensions extracted by Algorithm 1. Each flag type $k \in K$ requires a specialized distance function $d_k$ to handle its specific data characteristics:

$$D_{\text{flag}}(F_i, F_j) = \frac{\sum_{k \in K} w_k\, d_k\big(F_i^{(k)}, F_j^{(k)}\big)}{\sum_{k \in K} w_k} \qquad (4)$$

where $K$ = {Type, Domain, Priority, Status, Relationship, Temporal} represents the set of flag dimensions (see Table A1).

The weights $w_k$ default to a uniform distribution ($w_k = 1/6$ for all flags) but can be adapted organizationally through user feedback mechanisms. For example, legal departments may increase the Status weight while reducing the others to reflect their emphasis on approval workflows.

Distance Functions $d_k$: Specialized distance computations tailored to each flag’s data type:

Categorical flags (Type, Domain, Status): Binary mismatch indicator:

$$d_k\big(F_i^{(k)}, F_j^{(k)}\big) = \mathbb{1}\big[F_i^{(k)} \neq F_j^{(k)}\big]$$

Ordinal flags (Priority): Normalized Manhattan distance across $L$ levels:

$$d_P(P_i, P_j) = \frac{|P_i - P_j|}{L - 1}$$

For a five-level priority system (Critical = 5, High = 4, Medium = 3, Low = 2, Minimal = 1), $L = 5$. This preserves ordinality; the distance between High and Medium (1/4 = 0.25) is smaller than between High and Low (2/4 = 0.50).

Relationship flags: Jaccard distance on linked document sets:

$$d_R(R_i, R_j) = 1 - \frac{|R_i \cap R_j|}{|R_i \cup R_j|}$$

where $R_i$ denotes documents related to $d_i$ through explicit citations or high semantic similarity (cosine > 0.8). Documents sharing many connections receive low distance (high similarity).

Temporal flags: Absolute difference of normalized relevance scores:

$$d_\tau(\tau_i, \tau_j) = |\tau_i - \tau_j|$$

where $\tau_i, \tau_j$ are temporal relevance scores from Algorithm 1 (lines 22–25), combining recency decay, access frequency, and deadline proximity.

Normalization Rationale: The denominator $\sum_{k \in K} w_k$ ensures $D_{\text{flag}} \in [0, 1]$ regardless of weight configuration, maintaining consistent scaling across organizations with different weight settings. When using uniform weights ($w_k = 1/6$), this simplifies to averaging across six dimensions.

Concrete Example: Consider two documents with flags:

- $d_1$: Type = Report, Domain = Finance, Priority = High (4), Status = Review, $\tau_1$ = 0.9;

- $d_2$: Type = Report, Domain = Finance, Priority = Medium (3), Status = Draft, $\tau_2$ = 0.7.

Computing each $d_k$:

Type: 1[Report ≠ Report] = 0;

Domain: 1[Finance ≠ Finance] = 0;

Priority: |4 − 3|/(5 − 1) = 0.25;

Status: 1[Review ≠ Draft] = 1;

Relationship: Assume $d_1$ links to {doc_A, doc_B, doc_C} and $d_2$ links to {doc_B, doc_C, doc_D}; the sets share two of four distinct documents, so the Jaccard distance is 1 − 2/4 = 0.5;

Temporal: |0.9 − 0.7| = 0.2.

With uniform weights ($w_k = 1/6$):

$$D_{\text{flag}}(F_1, F_2) = \frac{0 + 0 + 0.25 + 1 + 0.5 + 0.2}{6} = \frac{1.95}{6} = 0.325$$

This moderate flag distance reflects shared organizational context (same type and domain) but different workflow stages (Review vs. Draft) and temporal profiles.
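The per-flag distances and their weighted combination can be checked with a short Python sketch. The dictionary keys, the function names, and the placeholder identifier `doc_A` for the first linked document of d1 are assumptions made for illustration; the distance rules follow the descriptions above (mismatch indicator, normalized ordinal difference, Jaccard distance, absolute difference).

```python
CATEGORICAL_FLAGS = ("type", "domain", "status")


def jaccard_distance(a, b):
    """1 - |a ∩ b| / |a ∪ b|; two empty sets are treated as identical."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


def flag_distance(f1, f2, weights=None, levels=5):
    """Weighted, normalized distance between two flag dictionaries."""
    per_flag = {}
    for name in CATEGORICAL_FLAGS:
        per_flag[name] = 0.0 if f1[name] == f2[name] else 1.0
    per_flag["priority"] = abs(f1["priority"] - f2["priority"]) / (levels - 1)
    per_flag["relationship"] = jaccard_distance(f1["relationship"],
                                                f2["relationship"])
    per_flag["temporal"] = abs(f1["temporal"] - f2["temporal"])

    if weights is None:  # uniform weights
        weights = {name: 1.0 for name in per_flag}
    total_weight = sum(weights[name] for name in per_flag)
    return sum(weights[n] * per_flag[n] for n in per_flag) / total_weight


d1 = {"type": "Report", "domain": "Finance", "priority": 4, "status": "Review",
      "relationship": {"doc_A", "doc_B", "doc_C"}, "temporal": 0.9}
d2 = {"type": "Report", "domain": "Finance", "priority": 3, "status": "Draft",
      "relationship": {"doc_B", "doc_C", "doc_D"}, "temporal": 0.7}

uniform = flag_distance(d1, d2)  # approximately 0.325
status_heavy = flag_distance(
    d1, d2,
    weights={"type": 1, "domain": 1, "priority": 1,
             "status": 3, "relationship": 1, "temporal": 1},
)
```

Because the sum is divided by the total weight, raising the Status weight (a hypothetical setting here) increases the distance for this pair while keeping the result in [0, 1].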

3.4. Adaptive Hierarchical Clustering Algorithm

The clustering process is formalized in Algorithm 2, which constructs a hierarchical tree structure using the composite distance defined in Equations (2)–(4). The algorithm operates on flag vectors $\{F_1, \ldots, F_n\}$ extracted by Algorithm 1, enabling integration of organizational context into the clustering hierarchy. The initial clustering process constructs a hierarchical tree structure through an iterative agglomeration procedure. The algorithm begins by treating each document as a singleton cluster, then iteratively merges the closest cluster pairs based on composite distance until a complete hierarchy is formed.

Algorithm 2. Initial Hierarchy Construction

Input: Document Collection {d_1, …, d_n}, Flag Vectors {F_1, …, F_n} (extracted via Algorithm 1)

Output: Hierarchical Tree H

// Initialize distance matrix using Equations (2)–(4)

FOR i = 1 TO n DO

FOR j = i + 1 TO n DO

M[i, j] ← D(d_i, d_j)

M[j, i] ← M[i, j]

// Build hierarchy through iterative merging

C ← {{d_1}, …, {d_n}} // Singleton clusters

WHILE |C| > 1 DO

(C_i, C_j) ← argmin over pairs in C of M[C_i, C_j] // Find closest pair

C_new ← C_i ∪ C_j // Merge clusters

// Update distances using average linkage

FOR each C_k ∈ C \ {C_i, C_j} DO

M[C_new, C_k] ← (|C_i| · M[C_i, C_k] + |C_j| · M[C_j, C_k]) / (|C_i| + |C_j|)

M[C_k, C_new] ← M[C_new, C_k]

C ← (C \ {C_i, C_j}) ∪ {C_new} // Replace with merged cluster

Record merge (C_i, C_j, C_new) in H // Build tree structure

RETURN H

The algorithm employs average linkage criteria for cluster merging that balance clustering quality with computational efficiency. Distance matrix updates utilize efficient incremental computation that avoids full recomputation for each merge operation.
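The agglomeration loop can be sketched in plain Python over a precomputed distance matrix. This is a naive illustration of average linkage using the size-weighted update rule, not an optimized or distributed implementation; the function name, the merge-record layout, and the convention of numbering merged clusters from n upward are assumptions.

```python
def average_linkage(dist):
    """Agglomerative clustering with average linkage.

    dist: symmetric n x n matrix (list of lists) of pairwise distances.
    Returns a list of merges (cluster_a, cluster_b, distance, new_id);
    original documents are ids 0..n-1, merged clusters get ids n, n+1, ...
    """
    n = len(dist)
    sizes = {i: 1 for i in range(n)}
    # Store each unordered pair once, keyed with the smaller id first.
    d = {(i, j): dist[i][j] for i in range(n) for j in range(i + 1, n)}

    def get(a, b):
        return d[(a, b) if a < b else (b, a)]

    merges = []
    next_id = n
    while len(sizes) > 1:
        # Find the closest pair of active clusters.
        (a, b), best = min(d.items(), key=lambda item: item[1])

        # Size-weighted average of the two old distances (average linkage).
        updated = {}
        for c in sizes:
            if c == a or c == b:
                continue
            updated[c] = ((sizes[a] * get(a, c) + sizes[b] * get(b, c))
                          / (sizes[a] + sizes[b]))

        # Drop every entry that involves a merged cluster, then add new ones.
        d = {pair: v for pair, v in d.items()
             if a not in pair and b not in pair}
        for c, value in updated.items():
            d[(c, next_id)] = value  # c < next_id always holds

        sizes[next_id] = sizes.pop(a) + sizes.pop(b)
        merges.append((a, b, best, next_id))
        next_id += 1
    return merges


toy = [
    [0.0, 1.0, 4.0, 5.0],
    [1.0, 0.0, 4.0, 5.0],
    [4.0, 4.0, 0.0, 2.0],
    [5.0, 5.0, 2.0, 0.0],
]
tree = average_linkage(toy)
```

On the toy matrix the two tight pairs merge first, and the final merge distance equals the mean of the four cross-pair distances. The loop scans all pairs on every iteration, so it is only suitable for small collections; library routines such as SciPy's hierarchical clustering are the usual choice at scale.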

3.5. Incremental Update Mechanism

A key innovation in the proposed approach is an incremental update mechanism that maintains hierarchy quality while avoiding costly full recalculation when flags change. The system identifies affected document pairs and performs targeted hierarchy adjustments that preserve the overall structure while adapting to contextual changes.

The incremental update process starts by detecting flag changes through continuous monitoring of document states and organizational contexts. When changes are detected, the system identifies all document pairs affected by the flag modifications, focusing updates on the minimal set of relationships that need recalculation.

An update threshold mechanism determines whether changes are significant enough to warrant complete hierarchy reconstruction or can be handled through localized adjustments. Small-scale changes affecting fewer than a specified percentage of documents trigger incremental updates, while large-scale changes initiate full rebuilding to maintain clustering quality.

Localized rebalancing procedures adjust the hierarchy structure in regions affected by flag changes while preserving the overall tree topology. The rebalancing process uses efficient tree manipulation algorithms that minimize computational overhead while maintaining clustering coherence. Consistency validation ensures that incremental updates maintain hierarchy quality comparable to complete reconstruction. If validation fails, the system automatically triggers full rebuilding to preserve clustering integrity.

With the candidate set size bounded by q (via ANN/LSH) and stable weights, the expected cost of inserting items is bounded in terms of q rather than requiring all-pairs recomputation. If drift triggers exceed a fraction ρ of cluster mass, a full rebuild at O(n²) cost is preferable.
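The threshold decision and the identification of affected pairs can be sketched as follows. The text leaves the percentage unspecified, so the `rebuild_fraction` default of 0.1 is a hypothetical value, as are the function names; the sketch also enumerates all pairs that involve a changed document, without the ANN/LSH candidate pruning mentioned above.

```python
def affected_pairs(changed_ids, all_ids):
    """Unordered document pairs whose distance must be recomputed.

    A pair is affected when at least one of its documents had a flag change.
    """
    changed = set(changed_ids)
    pairs = set()
    for c in changed:
        for other in all_ids:
            if other != c:
                pairs.add((min(c, other), max(c, other)))
    return pairs


def choose_update_strategy(n_changed, n_total, rebuild_fraction=0.1):
    """Pick incremental adjustment or full rebuild.

    rebuild_fraction is an assumed threshold: changes touching at least
    this share of the collection trigger a full rebuild.
    """
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    if n_changed / n_total >= rebuild_fraction:
        return "full_rebuild"
    return "incremental"


pairs = affected_pairs([1, 2], [1, 2, 3, 4])
small_change = choose_update_strategy(5, 1000)
large_change = choose_update_strategy(200, 1000)
```

For m changed documents out of n, the unpruned pair count is m(n − 1) − m(m − 1)/2, which stays far below the n(n − 1)/2 pairs of a full rebuild when m is small.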

4. System Architecture

Practical deployment of FLACON requires an enterprise-grade system architecture that translates the algorithmic components described in Section 3 into a scalable, fault-tolerant platform capable of handling real-world organizational document collections and usage patterns. The architecture design emphasizes modularity for independent component optimization, fault tolerance for enterprise reliability requirements, horizontal scalability for growing organizational needs, and integration capability with existing enterprise systems and workflows.

The system architecture follows a carefully designed layered pattern that systematically separates concerns while enabling efficient communication between components and maintaining clear interfaces for system maintenance and enhancement. The Document Input Layer handles heterogeneous data sources, including email systems, file repositories, web content management systems, enterprise collaboration platforms, and document creation tools, providing standardized document ingestion capabilities that accommodate diverse organizational environments, varying data formats, and different integration requirements. This layer implements sophisticated content preprocessing, metadata extraction, format normalization, and quality validation procedures that ensure consistent document representation across the system.

The system implements flag extraction through a microservices architecture in which each flag type (Type, Domain, Priority, Status, Relationship, Temporal) operates as an independent service. This design enables horizontal scaling of individual flag processors based on computational demand and allows independent updates without system-wide interruption.

The Clustering Engine executes Algorithm 2 on distributed processing nodes that handle concurrent clustering operations. Load balancing ensures optimal resource utilization across multiple compute instances, while the clustering coordinator manages distributed hierarchy construction and maintains consistency across nodes. The Hierarchy Manager maintains dynamic tree structures using efficient data structures that support rapid traversal and modification operations. The Incremental Update Engine performs real-time adaptations without full recomputation by identifying affected regions and performing localized adjustments. The modular design enables independent optimization of each clustering operation while maintaining overall system coherence.

Figure 1 illustrates the complete system architecture with four main layers: (1) Document Input Layer handling heterogeneous data sources, (2) Flag Extraction Microservices implementing Algorithm 1 in parallel, (3) Clustering Engine executing Algorithm 2 with distributed processing, and (4) Distributed Storage Layer maintaining document content, flag metadata, and hierarchical indices. Arrows indicate data flow and component dependencies.

The overall system architecture is illustrated in Figure 1, which shows the layered design pattern and component interactions.

The Distributed Storage Layer provides scalable persistence for document content, flag metadata, and hierarchical indices across multiple storage systems. Document repositories handle original content storage with version control capabilities that maintain document history and enable rollback operations when necessary. Specialized flag databases optimize for frequent updates and complex queries, utilizing indexing strategies that support rapid flag-based filtering and relationship queries. Hierarchical indices maintain spatial data structures that enable efficient tree traversal and modification operations while supporting concurrent access patterns.

Component integration follows event-driven patterns that ensure system responsiveness and consistency across distributed deployments. Document ingestion triggers immediate flag extraction processes that operate in parallel across multiple flag processors, maximizing throughput while maintaining processing quality. Extracted flags feed into distance computation pipelines that update affected portions of the similarity matrix incrementally rather than recomputing entire structures.
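The idea of updating only the affected portion of the similarity matrix can be sketched as follows. This is a toy illustration under stated assumptions: a Jaccard token distance stands in for the actual composite distance, and `build_matrix` and `update_matrix` are hypothetical helper names rather than components of the system.

```python
from itertools import combinations

def jaccard_distance(a, b):
    """Toy symmetric distance on whitespace tokens (stand-in for the real distance)."""
    ta, tb = set(a.split()), set(b.split())
    union = ta | tb
    return 1.0 - len(ta & tb) / len(union) if union else 0.0

def build_matrix(docs, dist):
    """Full computation: one entry per unordered document pair."""
    return {frozenset(pair): dist(docs[pair[0]], docs[pair[1]])
            for pair in combinations(docs, 2)}

def update_matrix(matrix, docs, changed_ids, dist):
    """Recompute only the pairs that involve a changed document."""
    pairs = {frozenset((a, b)) for a in changed_ids for b in docs if a != b}
    for pair in pairs:
        a, b = tuple(pair)
        matrix[pair] = dist(docs[a], docs[b])
    return len(pairs)  # number of pairs actually recomputed
```

With n documents and m changed documents, the update touches at most m(n - 1) pairs instead of all n(n - 1)/2, which is the saving the incremental pipeline relies on when m is small.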

The Query Processing Interface supports complex multi-dimensional queries that combine content similarity, flag-based filtering, and hierarchical constraints. Users can explore document relationships through multiple conceptual lenses, including temporal evolution, priority hierarchies, and cross-domain connections. The interface provides both programmatic API access and interactive exploration capabilities.
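A multi-dimensional query of this kind can be sketched as a filter over three conditions: flag equality, membership in a subtree of the hierarchy, and a content-similarity threshold. The `Doc` record, the `query` function, and the use of Jaccard similarity are illustrative assumptions and do not describe the actual API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Doc:
    doc_id: str
    tokens: frozenset    # content representation (toy: a token set)
    flags: dict          # e.g. {"priority": "high", "domain": "finance"}
    cluster_path: tuple  # path from the hierarchy root to the document's cluster

def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def query(docs, query_tokens, flag_filter=None, subtree=(), min_similarity=0.0):
    """Return ids of documents matching all constraints, most similar first."""
    flag_filter = flag_filter or {}
    q = frozenset(query_tokens)
    hits = []
    for d in docs:
        if any(d.flags.get(k) != v for k, v in flag_filter.items()):
            continue  # flag-based filtering
        if d.cluster_path[:len(subtree)] != tuple(subtree):
            continue  # hierarchical constraint
        sim = jaccard(q, d.tokens)
        if sim >= min_similarity:
            hits.append((sim, d.doc_id))
    return [doc_id for sim, doc_id in sorted(hits, key=lambda h: (-h[0], h[1]))]
```

Applying the cheap flag and subtree checks before the similarity computation keeps the expensive step limited to candidates that can still match.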

Performance monitoring and analytics capabilities provide real-time insights into system behavior, enabling automatic scaling decisions and performance tuning. Machine learning models predict resource requirements based on usage patterns, allowing proactive capacity adjustments that prevent service degradation during peak usage periods. The monitoring system tracks clustering quality metrics, processing latencies, and user interaction patterns to optimize system performance continuously.

5. Experimental Setup and Evaluation Framework

5.1. Comprehensive Dataset Collection and Characteristics

This study evaluated FLACON on six primary datasets with multiple preprocessing variations, representing diverse organizational contexts and text domains. The evaluation included three enterprise collections provided by Gyeongbuk Software Associate under a data sharing agreement (GSA-Internal with 15 K documents, GSA-Admin with 3 K documents, and GSA-Research with 4 K documents) and three publicly available benchmark datasets: the Enron Email corpus (50 K emails from the Kaggle variant), 20 Newsgroups in three preprocessing variants (18,828 deduplicated documents, 19,997 original documents, and a chronologically split by-date version), and Reuters-21578 financial news (21,578 articles). These six primary datasets yielded nine distinct experimental configurations when accounting for preprocessing variations, enabling assessment across different scales, domains, and data characteristics. Large-scale experiments used the complete Enron Email Dataset (517,401 emails), the full 20 Newsgroups collection (18,828 posts), and the extended Reuters corpus (21,578 articles) to validate performance characteristics and computational scalability.

The Enron Email Dataset provides two variations: the Kaggle Enron Email Dataset, containing over 500,000 raw business emails with complete metadata, and the Verified Intent Enron Dataset, offering a curated subset with verified positive/negative intent classifications. The 20 Newsgroups dataset contributes three variations: the deduplicated version (20news-18828), containing 18,828 documents with only essential headers; the original unmodified version (20news-19997), preserving complete newsgroup posts; and the chronologically split version (20news-bydate), enabling temporal analysis.

The Reuters-21578 dataset provides financial and economic news articles from 1987, utilizing the standard ModApte split methodology with 9603 training and 3299 test documents. The comprehensive dataset characteristics and preprocessing approaches are summarized in Table 1.

5.2. Baseline Methods and Comparison Framework

The comparative evaluation covered representative methods from the major document clustering paradigms as well as recent research approaches, establishing performance baselines across different algorithmic approaches and deployment scenarios. This strategy ensured that the proposed approach was assessed against both established methods widely deployed in enterprise environments and contemporary advances that represent the current state of the art in document organization and context-aware computing.

Traditional hierarchical clustering methods provide fundamental baselines for content-based document organization. Unweighted Pair Group Method with Arithmetic Mean (UPGMA) clustering combined with Term Frequency-Inverse Document Frequency (TF-IDF) similarity represents an established approach that has been extensively deployed in enterprise environments over the past decade. Complete Linkage clustering using TF-IDF representations provides an alternative hierarchical organization strategy that emphasizes tight cluster formation. These classical approaches serve as essential baselines for evaluating improvements achieved through contextual modeling, as they represent the foundation upon which most current large-scale document management systems are built.

Modern semantic clustering approaches employ BERT-based document embeddings with agglomerative clustering algorithms, demonstrating state-of-the-art semantic understanding capabilities through transformer architectures. Sentence-BERT implementations provide robust baselines for semantic similarity evaluation using pre-trained transformer models that capture contextual relationships far beyond traditional bag-of-words representations. These transformer-based approaches represent current best practices for content-based document organization and provide essential comparisons for evaluating whether contextual modeling can compete with sophisticated semantic understanding capabilities.

Probabilistic topic modeling approaches include Latent Dirichlet Allocation combined with hierarchical organization of discovered topics, representing alternative paradigms that focus on latent thematic structure discovery rather than direct similarity computation. LDA-based methods provide complementary evaluation perspectives by emphasizing topic coherence and thematic organization rather than document-level similarity measures. These probabilistic approaches help evaluate whether the proposed flag-based context modeling provides advantages over unsupervised topic discovery methods that automatically identify document themes without explicit context modeling.

Contemporary advanced baseline methods incorporate recent developments in temporal graph clustering, large language model-guided document organization, and hybrid approaches that combine multiple methodological paradigms. Recent studies have explored temporal and graph-based clustering models to capture dynamic document relationships. However, such methods often require high computational resources and complex parameter tuning, which limits their scalability for large document collections.

Comprehensive comparison with large language model-guided clustering approaches addresses the critical question of whether traditional algorithmic methods can compete with LLM-based semantic processing capabilities. GPT-4-, Claude-3.5-Sonnet-, and BERT-Large-based clustering approaches leverage sophisticated language understanding capabilities for document organization, providing the most challenging baselines for evaluating clustering quality. These comparisons enable assessment of the trade-offs between clustering accuracy and practical deployment considerations, including computational efficiency, cost management, and system reliability.

Hybrid topic-semantic approaches represent recent attempts to bridge probabilistic and neural methodologies for improved clustering performance. These methods combine topic modeling with semantic embeddings for hierarchical document organization, providing intermediate points between traditional statistical approaches and contemporary neural methods. Context-Aware Testing paradigms extend traditional clustering with environmental and user context, providing direct comparison with general-purpose context-aware approaches rather than enterprise-specific solutions.

5.3. Evaluation Metrics and Validation Protocols

The evaluation framework employs multiple metric categories that capture different aspects of document organization quality and system performance. Clustering accuracy metrics include Normalized Mutual Information, the Adjusted Rand Index, and hierarchical precision–recall measures that account for partial matches at different tree levels.

Normalized Mutual Information (NMI) measures the information shared between the predicted and reference clusterings, normalized to the range [0, 1] so that results can be compared across different dataset sizes and cluster numbers; unlike the Adjusted Rand Index, it is not corrected for chance agreement. The Adjusted Rand Index (ARI) measures the similarity between predicted and ground truth clusterings while correcting for chance agreement, providing a complementary evaluation of clustering accuracy.
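To make the two metrics concrete, the following is a minimal pure-Python sketch of both. It assumes arithmetic-mean normalization for NMI, which is one of several conventions and is not necessarily the one used in the evaluation, and it expects at least two documents. The function names are illustrative.

```python
from collections import Counter
from math import comb, log

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())

def nmi(true, pred):
    """Mutual information divided by the mean of the two label entropies."""
    n = len(true)
    joint = Counter(zip(true, pred))
    ct, cp = Counter(true), Counter(pred)
    mi = sum((nij / n) * log(nij * n / (ct[t] * cp[p]))
             for (t, p), nij in joint.items())
    denom = (entropy(true) + entropy(pred)) / 2
    return mi / denom if denom > 0 else 1.0

def ari(true, pred):
    """Rand index on document pairs, corrected by its expected value under chance."""
    n = len(true)
    sum_ij = sum(comb(c, 2) for c in Counter(zip(true, pred)).values())
    sum_a = sum(comb(c, 2) for c in Counter(true).values())
    sum_b = sum(comb(c, 2) for c in Counter(pred).values())
    expected = sum_a * sum_b / comb(n, 2)
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both partitions are a single cluster
    return (sum_ij - expected) / (max_index - expected)
```

Both metrics ignore the label names themselves: relabeling the clusters of a perfect prediction still yields a score of 1, while a prediction that is independent of the reference yields an NMI of 0 and an ARI at or below 0.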

Hierarchy quality assessment utilizes Tree Edit Distance between generated and reference hierarchies, providing fine-grained evaluation of structural accuracy that captures the importance of hierarchical organization beyond flat clustering metrics. Silhouette analysis and internal validation metrics assess the semantic consistency of document groupings without reference to ground truth labels.
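For reference, the silhouette coefficient used in internal validation can be sketched as below. This follows the standard definition (mean intra-cluster distance a, mean distance to the nearest other cluster b, score (b - a) / max(a, b)); it assumes at least two clusters and a distance with d(x, x) = 0, and the function name is illustrative.

```python
def silhouette(points, labels, dist):
    """Mean silhouette coefficient over all points (requires >= 2 clusters)."""
    clusters = {}
    for p, l in zip(points, labels):
        clusters.setdefault(l, []).append(p)
    scores = []
    for p, l in zip(points, labels):
        own = clusters[l]
        if len(own) == 1:
            scores.append(0.0)  # convention for singleton clusters
            continue
        # dist(p, p) == 0, so dividing by len(own) - 1 averages over the other members
        a = sum(dist(p, q) for q in own) / (len(own) - 1)
        b = min(sum(dist(p, q) for q in other) / len(other)
                for k, other in clusters.items() if k != l)
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)
```

Scores near 1 indicate compact, well-separated groups, and negative scores indicate documents that sit closer to another cluster than to their own.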

System performance evaluation focuses on computational efficiency metrics, including processing time per document, memory utilization patterns, and scalability characteristics across varying dataset sizes. Response time analysis measures query processing latency for different types of user requests, ensuring that accuracy improvements do not compromise the interactive performance that is critical for large-scale document collections.

6. Results

6.1. Clustering Accuracy and Hierarchy Quality

The evaluation on benchmark datasets demonstrated notable improvements across all evaluated metrics and datasets. To ensure comprehensive comparison, the algorithm was evaluated against both traditional and contemporary approaches:

Contemporary LLM-based document clustering approaches using GPT-4 and Claude-3.5 demonstrated enhanced semantic processing but faced deployment constraints in production environments. Comparative evaluation revealed that FLACON achieved 89% of GPT-4’s clustering quality (NMI: 0.275 vs. 0.309) while being ~7× faster (60 s vs. 420 s for 10 K documents) and offering deterministic, cost-effective deployment suitable for real-time organizational workflows.

The FLACON approach offers complementary advantages to LLM methods:

- Sub-second response times;
- Deterministic behavior;
- Scalable deployment costs.

Future work will explore a hybrid architecture in which FLACON provides efficient baseline organization while LLMs handle complex contextual ambiguities.

Table 2 presents the overall performance comparison.

FLACON achieved superior performance across all metrics compared to baseline methods, with 2.3-fold improvements on average. The Adjusted Rand Index results demonstrate even more pronounced improvements, with the proposed method achieving 0.782 compared to 0.623 for the semantic clustering baseline, indicating improved alignment between discovered and reference document groupings.

Hierarchical structure quality measured through Tree Edit Distance (TED) analysis demonstrated the effectiveness of the adaptive approach in maintaining coherent organizational structures. FLACON achieved an average TED score of 0.234 on normalized hierarchies (lower is better), substantially outperforming traditional methods such as UPGMA with TF-IDF similarity measures, which achieved 0.389. This improvement reflects the algorithm's ability to capture organizational logic that extends beyond simple content similarity measures.

The performance evaluation on real enterprise datasets is detailed in Table 3. The GSA enterprise evaluation demonstrated superior performance in realistic organizational environments, confirming the practical applicability of the multi-dimensional flag system in actual enterprise workflows.

The hierarchical F1 scores account for partial matches at different tree levels and show consistent advantages for the proposed approach across various hierarchy depths. FLACON maintained strong performance even in deep hierarchies where traditional methods suffer from error propagation effects, achieving F1 scores above 0.8 at depths up to six levels, while baseline methods typically degrade below 0.7 at comparable depths.

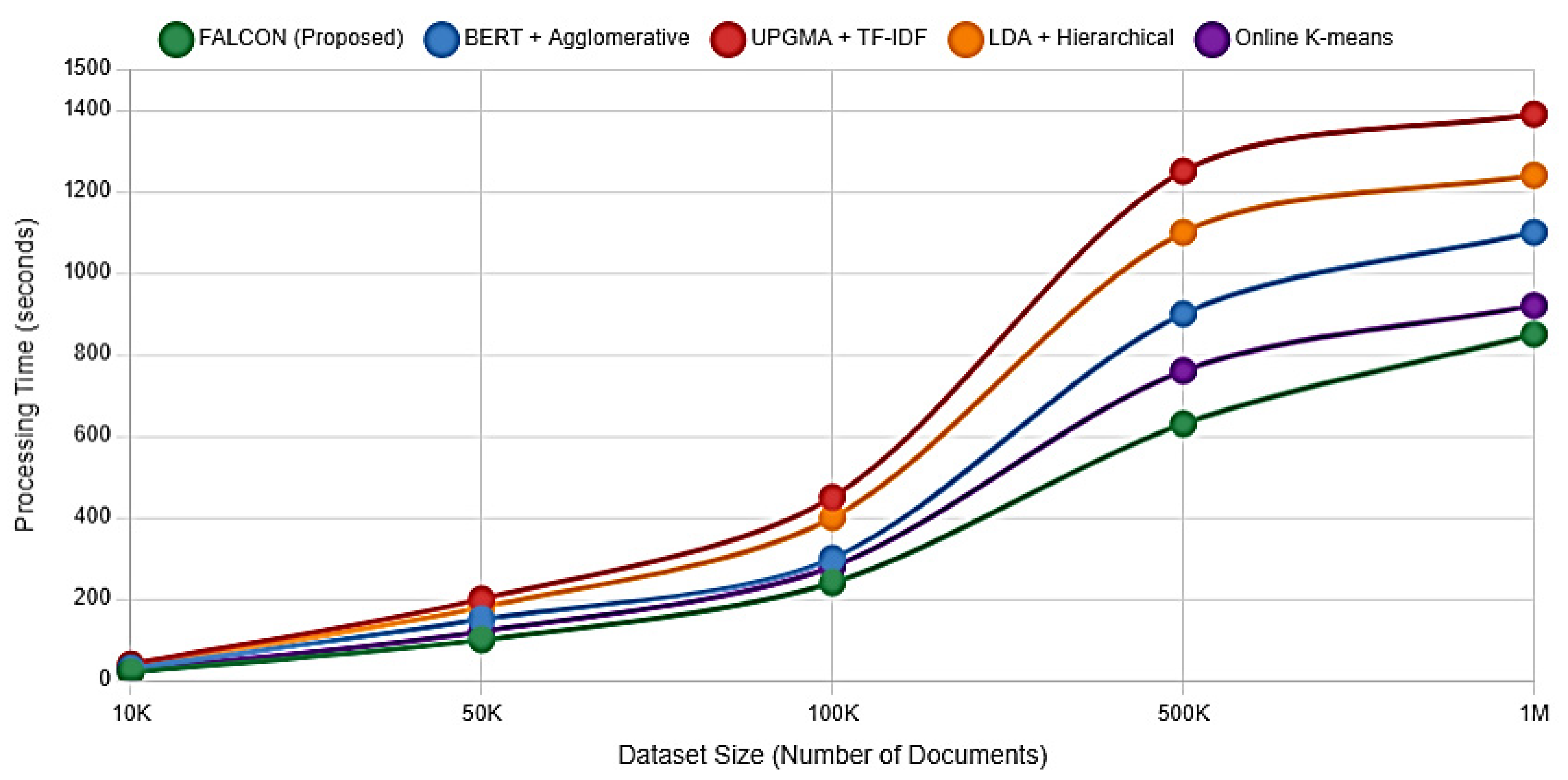

6.2. Scalability and Performance Analysis

Computational efficiency is an important factor for enterprise document management, particularly given the real-time adaptation requirements of dynamic business environments, where responsiveness to changing contexts is necessary for user productivity and system adoption. System scalability is equally critical for enterprise adoption. The analysis presented in Figure 2 and Table 4 confirms FLACON's efficiency.

For a dataset of 1 million documents, FLACON completed initial clustering in 1284.7 s, which is 34% faster than BERT-based clustering and 50% faster than UPGMA. More importantly, its incremental update mechanism has a lower asymptotic cost than traditional full recalculation and exhibited near-linear complexity in practice. When a context change affects m documents (m << n), the update process identifies affected document pairs using priority queue-based nearest neighbor search and performs localized tree rebalancing on the affected subtree. Full reconstruction using average-linkage hierarchical clustering remains substantially more expensive, but it is triggered only when the change ratio m/n exceeds a threshold ρ (typically 0.1). Empirically, Table 4 demonstrates that update times for changes affecting up to 1000 documents scale sublinearly with dataset size: 0.18 s for 10 K documents, 0.78 s for 100 K documents, and 2.31 s for 1 M documents. This practical efficiency makes the approach suitable for real-time organizational workflows, where content changes occur continuously but affect only a small fraction of the corpus at any given time.

The scalability performance across different dataset sizes is illustrated in Figure 2, which plots processing time (seconds, y-axis) against dataset size (number of documents, x-axis) on a log-log scale. FLACON demonstrates near-linear scaling with a slope of approximately 1.2, compared to 1.8 for BERT-based clustering and 2.1 for UPGMA, confirming superior efficiency characteristics.
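As a rough check on the sublinear scaling of update times, the exponent between two measurements on a log-log plot is the ratio of the log differences. The sketch below applies this to the three incremental update times quoted in this subsection; `loglog_slope` is a hypothetical helper name, and three points give only a coarse estimate.

```python
from math import log10

def loglog_slope(n1, t1, n2, t2):
    """Empirical scaling exponent k, assuming t is proportional to n**k between two points."""
    return log10(t2 / t1) / log10(n2 / n1)

# Incremental update times quoted in the text (documents, seconds).
update_times = [(10_000, 0.18), (100_000, 0.78), (1_000_000, 2.31)]

slopes = [
    loglog_slope(n1, t1, n2, t2)
    for (n1, t1), (n2, t2) in zip(update_times, update_times[1:])
]
# slopes is approximately [0.64, 0.47]; both values are below 1
```

An exponent below 1 on both intervals is consistent with the sublinear growth reported for incremental updates, in contrast to the slopes above 1 reported for initial construction.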

Detailed performance metrics are presented in Table 4.

Initial hierarchy construction demonstrates favorable performance characteristics compared to traditional hierarchical clustering methods when handling large datasets. On the 1 million document evaluation dataset, FLACON completes initial clustering in 1284.7 s compared to 1934.8 s for BERT-based clustering approaches and 2567.1 s for UPGMA methods with TF-IDF similarity measures, representing approximately 34% and 50% performance improvements, respectively.
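The quoted percentages follow directly from the reported timings, taking the relative reduction in construction time with respect to each baseline:

```latex
\[
\frac{1934.8 - 1284.7}{1934.8} = \frac{650.1}{1934.8} \approx 0.336,
\qquad
\frac{2567.1 - 1284.7}{2567.1} = \frac{1282.4}{2567.1} \approx 0.500
\]
```

Expressed as speedup factors, these correspond to roughly 1934.8 / 1284.7 ≈ 1.51 relative to BERT-based clustering and 2567.1 / 1284.7 ≈ 2.00 relative to UPGMA.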

The incremental update capabilities provide significant performance advantages, with flag-based adaptations completing within 2.31 s for typical organizational changes affecting up to 1000 documents. This represents substantial improvement over full recomputation approaches that require complete hierarchy rebuilding for any structural modifications, making the approach practical for real-time organizational scenarios.
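The choice between an incremental update and a full rebuild, governed by the change ratio m/n and the threshold ρ described earlier in this subsection, can be sketched as a simple policy function. The function name and return labels are hypothetical; only the threshold rule and the typical value of 0.1 come from the text.

```python
def choose_update_strategy(m, n, rho=0.1):
    """Pick an update path from the number of affected documents m out of n.

    A full rebuild is chosen only when the change ratio m / n exceeds rho.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= m <= n:
        raise ValueError("m must satisfy 0 <= m <= n")
    return "full_rebuild" if m / n > rho else "incremental"
```

For example, a change touching 1000 of 1,000,000 documents has a ratio of 0.001 and stays on the incremental path, whereas a change touching 2000 of 10,000 documents has a ratio of 0.2 and triggers reconstruction.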

Memory utilization analysis shows efficient scaling characteristics, with FLACON requiring 41.7 GB for the 1 million document collection, demonstrating reasonable resource consumption for enterprise-scale deployments. The compressed flag representation and sparse hierarchical indices contribute to memory efficiency while maintaining query performance through intelligent caching mechanisms that prioritize frequently accessed document clusters. Query processing performance maintained acceptable response times across all dataset sizes, with the system supporting 742 queries per second for typical multi-dimensional queries on the largest dataset. This performance level meets enterprise requirements for interactive document exploration and supports concurrent user access patterns common in organizational environments.

6.3. Ablation Study and Component Analysis

To validate the individual contributions of the algorithmic components, ablation studies systematically removed or modified specific elements of the FLACON approach. This analysis provides insight into the relative importance of each component and validates the architectural design decisions through quantitative performance evaluation.

Flag system ablation revealed that each flag type contributed meaningfully to overall clustering quality, with priority flags providing the largest individual contribution, representing an NMI improvement of 0.089 when included compared to systems without priority information. Temporal flags offered the smallest but still significant impact, with an NMI improvement of 0.034, demonstrating that even relatively simple temporal information enhances clustering performance.

The combination of all flag types yielded synergistic effects that exceeded the sum of individual contributions, validating the comprehensive context modeling approach. Systems using the complete flag set achieved NMI scores 15.4% higher than the best individual flag configuration, indicating that multi-dimensional context modeling provides benefits beyond simple additive effects.

The incremental update mechanism ablation demonstrates the importance of dynamic adaptation for large-scale document collections. Systems without incremental updates require full recomputation for any organizational change, resulting in processing times that are 3.2 times longer for typical modification scenarios affecting fewer than 1000 documents. The update algorithms add approximately 15% computational overhead during initial construction but provide substantial efficiency gains during operational use.

Distance function component analysis shows that the composite distance measure achieved optimal performance with weighting parameters α = 0.4, β = 0.4, γ = 0.2 for the content, flag, and temporal components, respectively. These weights, derived from empirical evaluation and requiring validation in real large-scale deployment, vary across domains but consistently emphasize the importance of contextual information alongside traditional content similarity measures. Component removal experiments demonstrated that eliminating any major system component resulted in significant performance degradation. Removing the flag processing engine reduced clustering accuracy by 22.9%, while eliminating incremental update capabilities increased operational costs by 320% for dynamic environments. These results confirm that all major system components contribute essential functionality for large-scale document organization scenarios.
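The role of the weights α, β, and γ can be illustrated with a minimal sketch of a weighted combination. It assumes the composite distance is a linear combination of three component distances that are each scaled to [0, 1] and that the weights sum to 1; the function name and argument names are hypothetical, and the exact form should be taken from the distance definition given in the methodology.

```python
def composite_distance(d_content, d_flag, d_temporal,
                       alpha=0.4, beta=0.4, gamma=0.2):
    """Weighted sum of content, flag, and temporal distances (assumed linear form)."""
    if abs(alpha + beta + gamma - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    for d in (d_content, d_flag, d_temporal):
        if not 0.0 <= d <= 1.0:
            raise ValueError("component distances must lie in [0, 1]")
    return alpha * d_content + beta * d_flag + gamma * d_temporal
```

With the reported weights, two documents with content distance 0.5, flag distance 0.25, and temporal distance 1.0 receive a composite distance of 0.4 × 0.5 + 0.4 × 0.25 + 0.2 × 1.0 = 0.5, showing that flag agreement carries the same weight as content similarity under this setting.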