1. Introduction

Entropy, one of the fundamental concepts in physics, plays a pivotal role in the study of complex climate systems. In its thermodynamic formulation, it describes the degree of disorder and energy dissipation [1,2,3], while in Shannon’s information theory, it serves as a quantitative measure of uncertainty and complexity in data such as temperature and precipitation time series [1,4,5,6]. Contemporary research suggests a significant relationship between physical and informational entropy, opening new avenues for the quantitative assessment of climate variability [7,8].

The Earth’s climate system operates as an open, far-from-equilibrium system in which temperature and pressure gradients drive atmospheric and oceanic circulations [1,9,10]. These processes lead to the transport of heat and moisture and to the production of entropy through energy dissipation, phase transitions, and diffusion [11].

Shannon entropy enables the assessment of uncertainty in the behavior of the climate system, and its analysis across time and space allows for the identification of regions particularly prone to extreme weather events [12,13,14].

In recent years, the concept of information entropy has gained recognition as a powerful tool for characterizing the complexity and unpredictability of climate-related processes. Although explicit references are limited, several hydrological studies, such as those conducted in the Shiyang River Basin, have demonstrated strong conceptual alignment with entropy-based approaches [15].

In particular, the variability of river flows in response to seasonal and spatial fluctuations in precipitation, temperature, and evaporation reflects the uncertainty and dynamic structure that entropy aims to capture. Observed asymmetries in the correlations between streamflow and meteorological factors, along with the effects of permafrost thawing, underscore the need for analytical frameworks that go beyond traditional deterministic measures. Incorporating entropy-based metrics into hydrological modeling can thus enhance our understanding of system instability and climate sensitivity, especially under conditions of increasing variability and anthropogenic change [15,16].

In this study, we analyzed spatial fields and entropy fluxes computed from monthly temperature and precipitation data, employing the formalism of gradients and divergence [1,2,17]. This approach allowed us to capture the directional transport of climate information and to identify local structures of order and chaos that remain undetectable through conventional statistical methods [11,14,18]. The spatial variability of entropy gradients reflects dominant directions of climate variability flow and may indicate external influences such as the impact of the Atlantic Ocean or continentality gradients [19,20,21]. Because “climate variability flow” is not a standard term, we define it here as the spatio-temporal flow of information related to climate variability, expressed through entropy gradients and fluxes calculated for temperature and precipitation.

Given the use of monthly climatic variables, Shannon entropy was selected due to its balanced sensitivity to distributional variability without amplifying the influence of outliers. While Rényi entropy may provide deeper insight into the behavior of extreme values, it is more suited to high-frequency or event-based data [22]. The spatiotemporal analysis of Shannon entropy enables the identification of areas characterized by elevated distributional uncertainty, which may coincide with regions experiencing heightened climate variability. However, entropy alone does not allow for a quantitative assessment of extremes; to capture rare events more effectively, additional indicators such as kurtosis or alternative entropy formulations (e.g., Rényi entropy) are more appropriate [5,23,24].

The correlation of entropy flux patterns with large-scale atmospheric indices (such as the NAO and AO) enables the linking of local variability patterns with global-scale phenomena [25,26,27]. Regions with elevated entropy relative to their surroundings act as variability generators, whereas areas with low divergence may function as stabilizers of the system, sensitive to external disturbances. Such spatial differentiation enables the identification of entropy sources, sinks, and informational gaps, all of which are crucial for climate monitoring and forecasting [10,12,28].

The use of Shannon entropy as a measure of uncertainty and complexity in climatic processes was previously proposed in studies such as [29], which demonstrated that entropy effectively captures the dynamics of meteorological systems, particularly in the context of seasonal and spatial variability in precipitation. Building upon this foundation, the present study extends the approach by incorporating both temperature and precipitation into two-dimensional probability distributions, representing a significant methodological advancement.

In the international literature, there is growing interest in the application of copula-based statistical models (such as the Frank, Gumbel, and Clayton copulas) for analyzing the interdependence between climatic variables. Notable examples include studies [30,31], where copulas were primarily used to model the joint occurrence of extreme precipitation and drought events. In contrast, our study employed copula functions in conjunction with entropy estimation, enabling not only the identification of dependencies between temperature and precipitation, but also an information-theoretic assessment of their joint statistical structure, a dimension not addressed in previous research.

Moreover, the application of Mann–Kendall trend tests and Pettitt change-point detection to entropy time series, rather than directly to meteorological variables, represents a less common but promising methodological approach [32]. This enables the detection of subtle shifts in the underlying structure of a non-stationary climate system.
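As a rough illustration of the two tests named above, the following standard-library sketch computes the Mann–Kendall statistic and the Pettitt change-point statistic for a single series. The function names are hypothetical, the Mann–Kendall variance omits the tie correction, and the Pettitt p-value uses the usual large-sample approximation. It is not the implementation used in this study. For strongly autocorrelated series, such as those built from overlapping windows, the nominal significance of both tests would be overstated unless it is supported by resampling.

```python
import math


def _sign(value):
    """Return -1, 0 or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def mann_kendall(series):
    """Return (S, Z) for the Mann-Kendall trend test (variance without tie correction)."""
    n = len(series)
    s = sum(_sign(series[j] - series[i]) for i in range(n - 1) for j in range(i + 1, n))
    var_s = n * (n - 1) * (2 * n + 5) / 18.0
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    return s, z


def pettitt(series):
    """Return (t, K, p): the most likely change occurs after the t-th value.

    K is the maximum absolute rank statistic and p its approximate p-value.
    """
    n = len(series)
    best_t, best_k = 0, 0
    for t in range(1, n):
        u_t = sum(_sign(series[j] - series[i]) for i in range(t) for j in range(t, n))
        if abs(u_t) > best_k:
            best_t, best_k = t, abs(u_t)
    p = min(1.0, 2.0 * math.exp(-6.0 * best_k ** 2 / (n ** 3 + n ** 2)))
    return best_t, best_k, p
```

Applied to an entropy series for one grid point and calendar month, `mann_kendall` would indicate a monotonic trend, while `pettitt` would point to the position of the most likely shift.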

The findings of this study are consistent with those of [33], who demonstrated that spatial entropy increases in regions experiencing intensified seasonal variability, a pattern also observed in our results for Central and Southeastern Europe.

The observed variation in the correlations between entropy measures (including their temporal and spatial derivatives) and indicators of extreme weather events is consistent with the findings of Aristov et al. (2022) [34], who demonstrated that data resolution, local topographic conditions, and climate type significantly modulate the statistical relationship between entropy and meteorological parameters.

In summary, this study not only validates previous findings, but also introduces an integrated framework that combines copula analysis, time-series evaluation, and spatial entropy mapping. The proposed methodology offers a complementary perspective to traditional analyses of climatic trends and extremes, demonstrating strong consistency with existing regional and global studies, while also contributing novel insights into the multivariate characterization of climate variability.

The method of analyzing spatial entropy flows proposed in this study represents an innovative approach to investigating climate variability, going beyond the capabilities of classical statistical tools [35]. Unlike conventional trend and anomaly analyses based on simple descriptive statistics (such as means, standard deviations, or the frequency of extremes), the information-theoretic tools applied here enable the detection of subtle structural changes in the distribution of climatic data.

A particularly novel aspect is the use of spatiotemporal derivatives of Shannon entropy to identify local directions of climatic information flow, as well as their relationships with extreme weather events [36,37]. By employing the formalism of gradients and field theory, this analysis allows for the detection of regions that generate atmospheric instability (entropy sources) and zones that act to stabilize the system (entropy sinks). Through integration with the Fokker–Planck framework, the method also enables the interpretation of observed changes as the result of the drift and diffusion of information in a stochastic system.

Another crucial element is the use of copulas to model asymmetric dependencies between climatic variables, allowing for a more accurate representation of their interrelationships, particularly in the distribution tails [38,39,40]. Additionally, the application of the block bootstrap method provides estimates of uncertainty and statistical significance, thereby enhancing the reliability of the results [41,42,43,44].

In contrast to classical linear regression, the method presented here does not assume linearity or stationarity, making it better suited to the nonlinear and transition-prone nature of atmospheric systems. The spatial perspective on entropy also enables the mapping of informational gaps—regions where modeling is challenged by a lack of structured patterns. This type of analysis not only helps identify areas of elevated risk, but also yields insights into the underlying structure of the climate system itself.

Particularly valuable is the method’s ability to capture regime transitions in atmospheric circulation, as demonstrated by the observed associations between entropy gradients and large-scale indices such as NAO and GISTEMP [37,45]. Thus, the proposed method offers not only a quantitative, but also a qualitative perspective on climate variability, serving as a bridge between classical climatology and modern complex systems analysis. As a result, it supports the formulation of more robust hypotheses regarding the origin of extreme atmospheric phenomena.

Through spatiotemporal entropy analysis, it also becomes possible to gain a deeper understanding of the processes driving local climate fluctuations. From a practical standpoint, this methodology may serve as a decision-support tool for climate adaptation planning, thereby making a significant contribution to the development of advanced tools for assessing climate system dynamics [40,46].

2. Data Preparation for Analysis

The analysis presented in this study was based on high-quality gridded datasets published by the National Oceanic and Atmospheric Administration (NOAA) [47,48,49]: Terrestrial Precipitation: 1900–2010 Gridded Monthly Time Series (Version 3.01) and Terrestrial Air Temperature: 1900–2010 Gridded Monthly Time Series (Version 3.01). The study area covers approximately 5570 km × 4050 km, spanning latitudes from 35° N to 72° N and longitudes from 10° W to 40° E, consistent with the European domain analyzed in this study.

The analysis used monthly precipitation totals and monthly mean air temperatures for the period 1901–2010 [47,50]. The data were provided by NOAA as part of a climate reanalysis product and interpolated onto a regular angular grid with a spatial resolution of 0.5° × 0.5°, centered at 0.25°.

For each grid cell, individual time series of temperature and precipitation were extracted and served as the basis for subsequent analyses grounded in informational entropy.

The NOAA data were utilized at their original resolution, without re-interpolation or grid rescaling. A major reason for selecting this dataset was its temporal and spatial homogeneity. Both temperature and precipitation fields were developed using standardized interpolation algorithms and quality control procedures, applied consistently by the same institution. This ensures a high level of data integrity and facilitates comparability across regions and time periods.

To ensure the reliability of long-term trend analyses, NOAA datasets are routinely verified and homogenized. This includes statistical identification of anomalies, data gaps, and inconsistencies, as well as cross-validation with ground-based observations and satellite products [48,49,51]. As a result, the NOAA dataset provides a stable and trustworthy foundation for detecting climate variability signals associated with entropy flow, as well as for analyzing the spatial directions of climate information transport.

The consistency and reliability of the monthly NOAA reanalysis data were assessed through comparison with an independent observational dataset, E-OBS, developed by the Copernicus Climate Change Service [51,52]. The E-OBS data, available at daily temporal resolution on a 0.25° × 0.25° grid, were first aggregated into monthly precipitation totals and mean temperatures for the period 1980–2010. Next, bilinear spatial interpolation was applied to align the E-OBS data with the NOAA grid, enabling direct, point-by-point comparisons with the gridded NOAA dataset. Grid-cell-level comparisons were carried out only after temporal and spatial consistency had been ensured. This preparation allowed for a robust assessment of the agreement between the two datasets in terms of monthly distributions, long-term trends, and the temporal structure of variability.

The validation results indicated strong agreement between the datasets. For precipitation, the relative root mean square error (RRMSE) rarely exceeded 0.1, indicating minimal discrepancies relative to E-OBS [53,54]. Mean monthly differences between the precipitation datasets were below 5 mm/month in most areas, and high Spearman rank correlations (>0.8) confirmed a strong match in the temporal variability structure. Even greater consistency was observed in the temperature data: RRMSE values were below 0.05 across much of the grid, the average temperature difference between NOAA and E-OBS was less than 2 °C in 95% of cases, and rank correlations exceeded 0.95 at most locations [55]. Observed local discrepancies, primarily in mountainous or coastal regions, can be attributed to interpolation limitations and local characteristics of the observational network.
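The agreement measures reported above can be sketched for a single grid cell as follows. The text does not state how the RRMSE was normalized, so this sketch assumes the RMSE is divided by the mean of the reference (E-OBS) series. The function and argument names are hypothetical.

```python
import math


def rrmse(candidate, reference):
    """RMSE divided by the mean of the reference series (assumed normalisation)."""
    n = len(reference)
    mse = sum((c - r) ** 2 for c, r in zip(candidate, reference)) / n
    return math.sqrt(mse) / (sum(reference) / n)


def mean_difference(candidate, reference):
    """Average signed difference, candidate minus reference."""
    return sum(c - r for c, r in zip(candidate, reference)) / len(reference)
```

With this normalization the measure becomes unstable when the reference mean is close to zero, which may matter for temperatures expressed in °C. A different denominator, such as the range or standard deviation of the reference, would be an equally plausible reading of the text.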

To ensure data quality, all NOAA time series were screened for missing data, and grid points with incomplete temporal coverage (<360 months) were excluded from further comparison. No imputation of missing data was applied, in order to avoid introducing systematic bias. This approach ensured fair comparative conditions and high confidence in the conclusions.

In light of the analysis, it can be stated with high confidence that the NOAA reanalysis data showed very strong agreement with the E-OBS observational dataset and can be considered a reliable source for further studies on climate variability, both in the temporal and spatial dimensions.

3. Methodology

This study investigated the relationships between the structure of informational entropy and extreme weather phenomena, with a particular focus on maximum temperatures and minimum precipitation totals [56,57].

This approach is based on the analysis of annual values of entropy and their spatial gradients (entropy potential) for individual months, calculated at grid points covering the European domain. For each grid point, monthly values of information entropy were computed based on the joint distributions of atmospheric conditions—temperature and precipitation.

Although the input data had a monthly resolution (monthly mean temperatures and precipitation totals), entropy was not calculated based on individual monthly observations. Instead, a moving time-window approach was employed: for each grid point and each calendar month (e.g., January, February, etc.), a sample of 70 consecutive years was extracted. Within each window, the joint distributions of temperature and precipitation were estimated, and the corresponding entropy values were calculated. This process was repeated by shifting the window one year forward (e.g., 1901–1970, 1902–1971, and so on).

In this way, a time series of entropy values was obtained for each calendar month, where each value represents 70-year conditional statistics, rather than single monthly observations. For simplicity, we refer to these values throughout the manuscript as entropy estimated in a 70-year window for a given calendar month. For example, the entropy for January 1971 was based on data from 1901 to 1970, for January 1972 on data from 1902 to 1971, and so forth. As a result, we obtained 40 seasonal annual entropy values (calculated within a 70-year window for each calendar month) to which a time index can be assigned, enabling trend estimation and other temporal analyses.
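The window bookkeeping described above can be sketched as follows. The sketch assumes that each 70-year window is labelled with the year that follows it (1901–1970 is labelled 1971) and that labels must fall within the data period. Under that assumption the count of 40 values per calendar month is reproduced. The function names are hypothetical.

```python
def entropy_windows(first_year=1901, last_year=2010, width=70):
    """List (label_year, window_start, window_end) for every sliding window.

    Assumption: a window is labelled with the year that follows it and the
    label must not exceed last_year.
    """
    windows = []
    start = first_year
    while start + width <= last_year:
        end = start + width - 1
        windows.append((end + 1, start, end))
        start += 1
    return windows


def window_sample(values_by_year, start, end):
    """Extract one calendar month's values for the years start..end inclusive."""
    return [values_by_year[year] for year in range(start, end + 1)]


windows = entropy_windows()
print(len(windows), windows[0], windows[-1])
# prints: 40 (1971, 1901, 1970) (2010, 1940, 2009)
```

If the final window 1941–2010 were also included, with a label outside the data period, the series would contain 41 values instead.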

For each grid point, entropy was computed separately for each calendar month, based on the joint distributions of atmospheric conditions (temperature and precipitation). To capture the actual, often nonlinear and asymmetric dependence structure between temperature and precipitation in a changing climate system, bivariate copula functions were used [20,58].

The approach is based on the analysis of monthly entropy values and their spatial gradients (entropy potential) across a grid covering the European domain [2,12,26,59].

Additionally, for each month, the spatial gradient of entropy was computed and interpreted as a vectorial entropy potential, with its magnitude (|∇Entropy|) serving as a proxy for local atmospheric variability and instability. The direction and rate of change in uncertainty across space were defined by the entropy gradient vector (∇Entropy) [60,61].
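A minimal sketch of how ∇Entropy and |∇Entropy| could be approximated on a regular latitude–longitude grid is given below. It assumes finite differences (central in the interior, one-sided at the edges), a spherical Earth of radius 6371 km, rows ordered by increasing latitude, and the 0.5° spacing of the dataset. The function names are hypothetical, and the discretization actually used in the study is not specified in the text.

```python
import math

EARTH_RADIUS_KM = 6371.0


def _finite_difference(values, k, step):
    """Central difference inside the grid, one-sided difference at the edges."""
    if k == 0:
        return (values[1] - values[0]) / step
    if k == len(values) - 1:
        return (values[-1] - values[-2]) / step
    return (values[k + 1] - values[k - 1]) / (2.0 * step)


def entropy_gradient(field, lats_deg, dlat_deg=0.5, dlon_deg=0.5):
    """Return (gx, gy, magnitude) for field[i][j] (i: latitude row, j: longitude column).

    Derivatives are per kilometre; the east-west spacing shrinks with cos(latitude).
    """
    n_rows, n_cols = len(field), len(field[0])
    dy = EARTH_RADIUS_KM * math.radians(dlat_deg)
    gx = [[0.0] * n_cols for _ in range(n_rows)]
    gy = [[0.0] * n_cols for _ in range(n_rows)]
    mag = [[0.0] * n_cols for _ in range(n_rows)]
    for i in range(n_rows):
        dx = EARTH_RADIUS_KM * math.cos(math.radians(lats_deg[i])) * math.radians(dlon_deg)
        for j in range(n_cols):
            column = [field[r][j] for r in range(n_rows)]
            gx[i][j] = _finite_difference(field[i], j, dx)   # eastward component
            gy[i][j] = _finite_difference(column, i, dy)     # northward component
            mag[i][j] = math.hypot(gx[i][j], gy[i][j])       # entropy potential
    return gx, gy, mag
```

The pair `(gx, gy)` gives the direction of steepest entropy increase at each cell, and `mag` corresponds to the entropy potential used as a proxy for local instability.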

The primary objective of the analysis was to determine whether statistically significant relationships existed between entropy levels and their spatial dynamics, on the one hand, and extreme values of maximum/minimum temperature and precipitation, on the other hand, at the same geographic locations. Three types of relationships were examined: (1) between the entropy value (Entropy) and weather extremes, (2) between the entropy potential (|∇Entropy|) and extremes, and (3) between the temporal derivative of entropy and extremes [57,60].

The first approach tested whether higher uncertainty levels (greater entropy) at a given location correspond with increased risk of high temperatures or drought. A positive correlation between entropy and maximum temperature would suggest that regions with higher variability are more prone to experiencing extreme heat events. Conversely, a negative correlation between entropy and minimum precipitation totals would imply that higher uncertainty favors the occurrence of severe droughts [36,62,63].

The second approach focused on the analysis of the entropy gradient, interpreted as the rate and direction of spatial entropy changes. The magnitude of the gradient vector (|∇Entropy|), or entropy potential, was used to identify regions of pronounced spatial instability that may serve as initiation zones for extreme events. The analysis thus examined whether sharp spatial variations in entropy are linked to the occurrence of extreme temperature and precipitation values [60,64,65].

All correlations were computed using two variants: temporal and seasonal–spatial. In the temporal analysis, for each grid point, the Spearman correlation was calculated between the 12-month entropy or entropy gradient series and the corresponding monthly values of weather extremes [66]. The resulting correlation coefficient indicated the strength and direction of the relationship between monthly entropy fluctuations and variations in extreme weather events.

While Spearman’s rank correlation is a useful tool for general data exploration, it is not optimally suited for characterizing relationships between extreme events. In particular, for variables with highly asymmetric distributions or heavy tails, such as extreme precipitation or temperature maxima, classical correlation measures may fail to accurately capture the underlying dependence structure [46,67]. In such cases, more appropriate approaches involve tail dependence analysis, including the use of copulas, tail indices, or conditional exceedance probabilities. Therefore, the current approach should be viewed as a preliminary step in identifying potential dependencies, rather than a definitive method for their validation.

A high positive temporal correlation signified that months with elevated entropy potential coincided with months of extreme conditions (e.g., heatwaves), while a high negative correlation indicated an inverse relationship, i.e., that increased local instability was associated with lower extreme values, such as droughts. In the seasonal–spatial variant, spatial correlations were examined within each month separately. Spearman correlations were computed between the spatial distribution of entropy (or entropy gradients) and the spatial distribution of weather extremes for a given month [53,66]. This analysis addressed whether, during a given season, areas with higher entropy potential also exhibited a greater risk of extreme weather. These spatial correlations provided insight into the general seasonal–spatial structure of the relationship between climate variability and extremes [68].
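The two correlation variants differ only in how the paired samples are formed, which the following standard-library sketch tries to make explicit. In the temporal variant, the pairs are the values of one grid point across months. In the seasonal–spatial variant, the pairs are the values of all grid cells within one month. Spearman’s coefficient is computed as the Pearson correlation of average ranks. The function names are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2.0 + 1.0
        for k in order[pos:end + 1]:
            ranks[k] = shared
        pos = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of the two rank vectors.

    Undefined (division by zero) if either input is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


def temporal_correlation(entropy_series, extreme_series):
    """Temporal variant: two monthly series at one grid point."""
    return spearman(entropy_series, extreme_series)


def spatial_correlation(entropy_field, extreme_field):
    """Seasonal-spatial variant: two 2-D fields for one month, paired cell by cell."""
    flat_entropy = [v for row in entropy_field for v in row]
    flat_extreme = [v for row in extreme_field for v in row]
    return spearman(flat_entropy, flat_extreme)
```

Cells with missing values would have to be dropped from both fields before flattening, a step that this sketch leaves out.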

To enhance the reliability of the results, we applied a circular block bootstrap resampling procedure, which preserves the autocorrelation structure typical of time series constructed from overlapping 70-year windows. Instead of simple resampling of individual observations with replacement, entire blocks of adjacent values were drawn. The block length was determined individually based on the analysis of the autocorrelation function (ACF) of the residuals, after detrending the data—specifically, using the minimum lag at which the ACF ceased to be statistically significant.

For each time series, 1000 bootstrap replications were generated, allowing us to obtain empirical distributions of the trend slope estimates as well as reliable confidence intervals. This procedure increases the robustness of the results to Type I errors, which may arise when autocorrelation is ignored in classical statistical tests.
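The resampling scheme can be sketched as follows. The sketch assumes that the blocks are drawn from the detrended residuals and added back to the fitted linear trend before the slope is re-estimated, and that the confidence interval is a percentile interval. The text does not spell out these details, so both should be read as assumptions, and the function names are hypothetical. The block length is taken as given here rather than derived from the ACF.

```python
import random


def ols_slope_intercept(y):
    """Least-squares line through the points (0, y[0]), (1, y[1]), ..."""
    n = len(y)
    t_mean = (n - 1) / 2.0
    y_mean = sum(y) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    slope = sxy / sxx
    return slope, y_mean - slope * t_mean


def circular_block_resample(values, block_len, rng):
    """Concatenate randomly placed blocks, wrapping around the end of the series."""
    n = len(values)
    out = []
    while len(out) < n:
        start = rng.randrange(n)
        out.extend(values[(start + k) % n] for k in range(block_len))
    return out[:n]


def bootstrap_slope_ci(y, block_len, n_boot=1000, alpha=0.05, seed=0):
    """Return (slope, lower, upper) with a percentile interval for the trend slope.

    Assumption: residual blocks are resampled and added back to the fitted line.
    """
    rng = random.Random(seed)
    slope, intercept = ols_slope_intercept(y)
    fitted = [intercept + slope * t for t in range(len(y))]
    residuals = [v - f for v, f in zip(y, fitted)]
    slopes = []
    for _ in range(n_boot):
        resampled = circular_block_resample(residuals, block_len, rng)
        surrogate = [f + r for f, r in zip(fitted, resampled)]
        slopes.append(ols_slope_intercept(surrogate)[0])
    slopes.sort()
    lower = slopes[int((alpha / 2) * n_boot)]
    upper = slopes[int((1 - alpha / 2) * n_boot) - 1]
    return slope, lower, upper
```

A trend would be regarded as significant at the 5% level when the interval `(lower, upper)` excludes zero.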

Particular attention was given to the Spearman rank correlation coefficient, whose values were interpreted at the 5% significance level (corresponding to a 95% confidence interval) [12,69,70]. This approach allowed not only for the estimation of the average strength of dependence, but also for the assessment of the stability of the results under the strong autocorrelation conditions typical of the analyzed time series.

The results were presented as spatial maps, including distributions of entropy, trends in entropy and entropy potential, temporal and seasonal–spatial correlation maps, and visualizations of entropy gradient streamlines. The streamlines indicated trajectories along which uncertainty propagates spatially, offering potential prognostic value. Particularly promising were the findings from the entropy gradient analysis, which pointed to the existence of spatial mechanisms that may initiate extreme weather events.

Strong local entropy gradients may be interpreted as a potential signal that the atmospheric system is approaching bifurcation thresholds, an indication which, according to dynamical systems theory (e.g., Scheffer et al.) [71], is often associated with increased susceptibility to transitions toward more chaotic states. This interpretation is hypothetical in nature and requires further empirical validation.

3.1. Distribution Fitting

The methodology presented in this study was based on bivariate copula functions, which offer a coherent and statistically well-founded approach to modeling dependencies between random variables with arbitrary marginal distributions. This approach allows for the separation of marginal modeling from dependence structure modeling, enhancing both flexibility and interpretability of the results.

The analytical process began with the estimation of marginal distribution parameters using the maximum likelihood estimation (MLE) method. This method provides efficient and consistent estimators, assuming standard regularity conditions are met. For each fitted marginal distribution, the Akaike Information Criterion (AIC) was applied to objectively select the best-fitting model, balancing model complexity and goodness of fit [6,72,73].

The observations were then transformed into the uniform space using the cumulative distribution functions (CDFs) of the selected marginal distributions, which is a standard step in copula construction. In the next stage, the parameters of the candidate copula functions (Clayton, Gumbel, Frank, and Gaussian) were also estimated using MLE [43,55,74]. This step enabled the full dependence structure to be captured independently of the marginal distributions.
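As an illustration of copula parameter estimation by maximum likelihood, the sketch below fits a Clayton copula to pseudo-observations that have already been mapped to (0, 1) by the marginal CDFs. It uses the closed-form Clayton density and a golden-section search over the parameter θ. The search bounds, tolerance, and function names are assumptions made for this sketch and are not taken from the study. The sampler is included only to create test data.

```python
import math
import random


def clayton_loglik(theta, pairs):
    """Log-likelihood of the Clayton copula density for pairs (u, v) in (0, 1]."""
    total = 0.0
    for u, v in pairs:
        s = u ** -theta + v ** -theta - 1.0
        total += (math.log1p(theta)
                  - (1.0 + theta) * (math.log(u) + math.log(v))
                  - (2.0 + 1.0 / theta) * math.log(s))
    return total


def fit_clayton(pairs, lo=0.01, hi=20.0, tol=1e-4):
    """Maximise the log-likelihood over theta with a golden-section search."""
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - ratio * (b - a), a + ratio * (b - a)
    fc, fd = clayton_loglik(c, pairs), clayton_loglik(d, pairs)
    while b - a > tol:
        if fc > fd:                      # maximum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - ratio * (b - a)
            fc = clayton_loglik(c, pairs)
        else:                            # maximum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + ratio * (b - a)
            fd = clayton_loglik(d, pairs)
    return (a + b) / 2.0


def sample_clayton(theta, n, seed=0):
    """Draw n pairs by conditional inversion (used here only to create test data)."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        u, w = 1.0 - rng.random(), 1.0 - rng.random()  # both in (0, 1]
        v = ((w ** (-theta / (1.0 + theta)) - 1.0) * u ** -theta + 1.0) ** (-1.0 / theta)
        pairs.append((u, v))
    return pairs
```

With only 70 pairs per window, the estimate of θ would be considerably noisier than with the large synthetic samples that such a sketch is usually checked against.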

Among the fitted copula functions, the one that best captured the dependencies observed in the data was selected. Selection relied on the Akaike Information Criterion (AIC) together with the agreement between the empirical joint distribution function and the theoretical distribution derived from the copula model, which served as the primary criterion [27,45].

This ensures that the entire process is grounded in comparable and statistically robust measures of goodness-of-fit, eliminating arbitrariness in the choice of both margins and dependency structures. Such a methodology adheres to the standards of modern probabilistic modeling, integrating marginal and joint characteristics, and proves particularly useful in meteorological, hydrological, and financial applications.

Importantly, given a sufficiently large sample size and careful selection of candidate marginal and copula functions, this procedure enables not only the accurate description of dependencies, but also the prediction of extreme events and the assessment of joint risks associated with their co-occurrence. The framework thus constitutes a coherent and calibratable approach for constructing bivariate probabilistic models with strong statistical foundations.

3.1.1. Marginal Distributions

The modeling of Shannon entropy in this study relies on temperature and precipitation data. For each spatial grid cell, marginal distributions were analyzed separately for monthly mean temperatures and monthly total precipitation values.

The following distributions were considered as candidates for marginal fitting (see Table 1) [55]: Generalized Extreme Value (GEV), Normal, Log-normal, Weibull, Gamma, Extreme Value, Nakagami.

Each distribution was fitted using the maximum likelihood estimation (MLE) method, and its goodness of fit was assessed using the Anderson–Darling test. The optimal model was selected based on the Akaike Information Criterion (AIC), which allowed for consideration of local climatic conditions and regional differences in the variability of temperature and precipitation across Europe.
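The selection step can be illustrated with a reduced candidate set. The sketch below covers only the Normal and Log-normal distributions, for which the maximum likelihood estimates have a closed form. The remaining candidates would require numerical optimization and are omitted. The function names are hypothetical, and the Anderson–Darling check is not reproduced here.

```python
import math


def normal_loglik(data):
    """Maximised log-likelihood of a Normal fit (closed-form MLE of mean and variance)."""
    n = len(data)
    mean = sum(data) / n
    var = sum((x - mean) ** 2 for x in data) / n
    return -0.5 * n * (math.log(2.0 * math.pi * var) + 1.0)


def lognormal_loglik(data):
    """Maximised log-likelihood of a Log-normal fit; requires strictly positive data."""
    logs = [math.log(x) for x in data]
    return normal_loglik(logs) - sum(logs)


def aic(loglik, n_params):
    """Akaike Information Criterion: 2k - 2 ln L (lower is better)."""
    return 2.0 * n_params - 2.0 * loglik


def select_marginal(data):
    """Return (best_name, {name: AIC}) over the candidates that can be fitted."""
    scores = {"normal": aic(normal_loglik(data), 2)}
    if min(data) > 0:
        scores["lognormal"] = aic(lognormal_loglik(data), 2)
    return min(scores, key=scores.get), scores
```

Because both candidates in this sketch have two parameters, the AIC comparison reduces to a likelihood comparison. The penalty term starts to matter once three-parameter families such as the GEV are included.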

3.1.2. Bivariate Copula Functions

The application of bivariate copula functions in analyzing the joint variability of temperature and precipitation enabled a precise representation of the dependencies between these variables, particularly in the context of Shannon information entropy estimation. Traditional approaches that assume independence or simple linear correlation are insufficient for capturing the true, often nonlinear and asymmetric dependence structure that characterizes the relationship between temperature and precipitation in a variable climate system. Copulas, as statistical tools, allow for the decoupling of marginal distributions from the dependence structure, making it possible to model interactions even in the presence of strong asymmetries, tail dependencies, or extreme values [75,76].

This study employed four classic families of copulas: Gaussian, Clayton, Frank, and Gumbel, each contributing distinct interpretative strengths to climate analysis (see Table 2) [77,78,79].

The Gaussian copula, an extension of the multivariate normal distribution, is effective in capturing symmetric dependencies, but it does not account for tail dependence, which limits its utility in analyzing extreme co-occurrences such as droughts or heatwaves. Nevertheless, it remains valuable where dependence is moderate and variables do not deviate strongly from normality [75].

The Clayton copula, characterized by strong lower-tail dependence, is well-suited for analyzing the co-occurrence of low precipitation and high temperatures, conditions typical of droughts. This makes it especially useful in exploring regional seasonal drought patterns, where standard methods fail to capture intense dependence under extreme conditions [45].

In contrast, the Gumbel copula emphasizes upper-tail dependence, making it appropriate for modeling extreme co-occurring events, such as intense rainfall during heat-induced convective storms, where high temperatures promote evaporation and condensation [80,81].

The Frank copula offers greater flexibility in modeling moderate and symmetric dependencies, without favoring either tail. It is therefore used as an intermediate model, particularly where dependencies are evident but not extreme [81].
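The qualitative differences between the four families can be summarized by their tail dependence coefficients, for which standard closed-form expressions exist. The sketch below encodes these expressions. The function names and family labels are hypothetical, and the Gaussian case assumes a correlation strictly between −1 and 1.

```python
FAMILIES = ("gaussian", "clayton", "frank", "gumbel")


def lower_tail_dependence(family, theta):
    """Lower-tail dependence coefficient; non-zero only for Clayton (theta > 0)."""
    if family not in FAMILIES:
        raise ValueError(f"unknown copula family: {family}")
    if family == "clayton":
        return 2.0 ** (-1.0 / theta)
    return 0.0


def upper_tail_dependence(family, theta):
    """Upper-tail dependence coefficient; non-zero only for Gumbel (theta >= 1)."""
    if family not in FAMILIES:
        raise ValueError(f"unknown copula family: {family}")
    if family == "gumbel":
        return 2.0 - 2.0 ** (1.0 / theta)
    return 0.0
```

For example, a Clayton copula with θ = 1 has a lower-tail coefficient of 0.5, whereas the Frank and Gaussian copulas have zero tail dependence at any admissible parameter value.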

The Akaike Information Criterion (AIC) was used to select the best-fitting copula at each grid point, allowing for localized adaptation of the model to regional climatic conditions. This enabled a faithful reconstruction of the complex landscape of temperature–precipitation dependencies across the continental scale. A key advantage of this approach lies in its ability to accurately estimate the joint distribution of variables, which then serves as the basis for computing Shannon entropy [46,76,79,82].
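One way to see how a copula-based joint distribution leads to a Shannon entropy value is the standard decomposition h(T, P) = h(T) + h(P) + h_c, where h_c = −∫∫ c(u, v) ln c(u, v) du dv is the copula entropy, which equals the negative of the mutual information. The sketch below evaluates h_c numerically with a midpoint rule and uses the Gaussian copula as an example, for which h_c = ½ ln(1 − ρ²) is known in closed form. The grid size and function names are assumptions, and this is not necessarily the numerical scheme used in the study.

```python
import math
from statistics import NormalDist


def gaussian_copula_density(rho):
    """Return c(u, v) for the Gaussian copula with correlation rho (|rho| < 1)."""
    inv = NormalDist().inv_cdf
    norm = 1.0 / math.sqrt(1.0 - rho * rho)

    def density(u, v):
        x, y = inv(u), inv(v)
        expo = -(rho * rho * (x * x + y * y) - 2.0 * rho * x * y) / (2.0 * (1.0 - rho * rho))
        return norm * math.exp(expo)

    return density


def copula_entropy(density, n=200):
    """Midpoint-rule estimate of -integral of c ln c over the unit square (in nats)."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h
        for j in range(n):
            c = density(u, (j + 0.5) * h)
            if c > 0.0:
                total -= c * math.log(c) * h * h
    return total


def joint_entropy(h_temperature, h_precipitation, h_copula):
    """h(T, P) = h(T) + h(P) + h_c, where h_c <= 0 is minus the mutual information."""
    return h_temperature + h_precipitation + h_copula
```

Copulas with strong tail dependence have densities that diverge near the corners of the unit square. A uniform grid then converges slowly, and a finer or adaptive grid would be needed for accurate values.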

The spatial analysis of entropy derived from copula-based distributions allows for the identification of regions with distinct dependency structures (e.g., transition zones, areas of increased continentality, or regions influenced by orographic barriers). Furthermore, spatial differentiation in copula types reveals where particular extremes, such as droughts or heavy rainfall, dominate, which has critical implications for climate risk assessment [46].

Integrating copula functions with information entropy analysis represents a significant step toward a more comprehensive description of complex climate interactions. This approach combines the probabilistic rigor of dependence modeling with the interpretive strength of information theory. Since entropy estimated from copulas captures the entire dependence structure, rather than just correlation, it allows for the inclusion of subtle, locally specific climatic features. This proves especially effective in monthly data analysis, where distributions often vary seasonally and regionally, and traditional multivariate models fall short in reflecting the full complexity of dependencies. Ultimately, copula functions not only improve the accuracy of entropy estimation, but also enable inference about the causes and nature of extreme weather events, supporting the development of more advanced tools for monitoring and forecasting climate variability.

In this study, a semi-parametric approach was adopted which—while ensuring mathematical consistency and high operational efficiency—also involves a certain methodological trade-off. The use of established families of distributions and copulas allows for the straightforward derivation of derivatives, efficient computation of entropy, and implementation of estimation algorithms. At the same time, adopting a parametric model inevitably entails some simplification of the data structure: local features of the empirical distribution, such as asymmetry, multimodality, or unusual tails, may be partially smoothed or overlooked. This is the cost of increased interpretability and analytical tractability of the model, particularly in the context of entropy gradient analysis or modeling of information fluxes.

An alternative would be a hybrid approach, combining parametric marginal distributions with nonparametric copulas (e.g., kernel-based), or a fully nonparametric variant based on empirical distribution functions and flexible adaptive or rotational copulas. Although potentially more accurate, such methods involve substantially higher computational costs, reduced numerical stability, and more challenging interpretation. Given the aim of this study—comprehensive spatio-temporal analysis of information entropy—the use of parametric models represented a justified compromise between estimation accuracy and analytical capability.

Although it is theoretically possible to estimate the joint entropy $H(X, Y)$ empirically from 70 observation pairs within a moving window (e.g., using nonparametric estimators or via discretization with the Miller–Madow correction), we deemed this approach unsuitable in our case [83]. With such a limited sample size, two-dimensional differential entropy estimators operate at the edge of stability, are highly sensitive to technical parameter choices, and produce large variance, which hampers comparability across locations. In addition, the strong autocorrelation present in climate series within 70-year windows means that even bootstrap procedures yield wide, weakly informative confidence intervals. As a result, empirical entropy estimates would carry substantial uncertainty and be difficult to interpret. For these reasons, we opted for the semi-parametric approach, which ensures greater consistency and reproducibility of results across the entire analysis.

For the analysis of the bivariate distributions of temperature and precipitation, we employed a semi-parametric approach based on selecting marginal distributions and copulas from a limited set of candidate functions. For each marginal variable, seven univariate distributions were considered, while the bivariate dependencies were modeled using one of four copulas: Frank, Gaussian, Clayton, or Gumbel. Model selection for the marginal distributions and copulas was carried out separately for each grid point and calendar month, based on the full 110-year data series (1901–2010). The selected model was then kept fixed throughout the time series, with only its parameters updated within successive 70-year windows.

This approach has two key advantages:

it ensures consistency and comparability of results over time at each grid point, by avoiding artificial distortions in entropy analysis that could arise from changing model selections;

it reduces the risk of overfitting by preventing arbitrary model adaptation to short samples.

The limitation of this method is the potential for the imperfect fit of a single fixed model across the entire observation period, particularly if the structure of climate dependencies changes over time. Nevertheless, in the context of trend and entropy dynamics analysis, the benefits of modeling consistency and stability were judged to outweigh this limitation, and any potential misfit is expected to be systematic in nature, without distorting the relative changes over time.

3.1.3. Akaike Information Criterion (AIC)

The marginal distributions describing temperature and precipitation for each analyzed sequence were estimated using the maximum likelihood method, and their fit was assessed via the Anderson–Darling test (ADT). The optimal model was selected on the basis of Akaike Information Criterion (AIC) values and the agreement between the empirical and theoretical distribution functions.

AIC is a model selection metric that balances goodness-of-fit with model complexity. It is defined as:

$$\mathrm{AIC} = 2k - 2\ln(\hat{L})$$

where $k$ is the number of estimated model parameters and $\hat{L}$ is the maximum likelihood value of the model.

A lower AIC value indicates a better trade-off between fit and simplicity.

The log-likelihood function used in the computation is given by:

$$\ln L(\theta) = \sum_{i=1}^{n} \ln f(x_i \mid \theta)$$

where $f(x_i \mid \theta)$ is the probability density function of the model evaluated at observation $x_i$ given parameter vector $\theta$.
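As an illustration of AIC-based selection, the following minimal sketch compares two candidate distributions whose maximum likelihood estimates have closed forms. The two candidates (normal and exponential), the helper names, and the sample values are assumptions made for illustration only; they are not the seven candidate distributions or the data used in this study.

```python
import math

def aic(log_likelihood, n_params):
    """AIC = 2k - 2 ln(L_hat), with ln(L_hat) the maximized log-likelihood."""
    return 2 * n_params - 2 * log_likelihood

def normal_loglik(data):
    # Closed-form MLE: sample mean and (biased) sample variance.
    n = len(data)
    mu = sum(data) / n
    var = sum((x - mu) ** 2 for x in data) / n
    # Log-likelihood evaluated at the MLE.
    return -0.5 * n * (math.log(2 * math.pi * var) + 1.0)

def exponential_loglik(data):
    # Closed-form MLE: rate = 1 / sample mean (data must be positive).
    n = len(data)
    rate = n / sum(data)
    return n * math.log(rate) - rate * sum(data)

def select_by_aic(data):
    # (maximized log-likelihood, number of estimated parameters) per candidate.
    candidates = {
        "normal": (normal_loglik(data), 2),
        "exponential": (exponential_loglik(data), 1),
    }
    scores = {name: aic(ll, k) for name, (ll, k) in candidates.items()}
    best = min(scores, key=scores.get)  # lowest AIC wins
    return best, scores

# Hypothetical monthly precipitation totals (mm), for illustration only.
sample = [12.0, 30.5, 8.2, 55.1, 21.7, 3.9, 40.3, 17.6, 9.4, 26.8]
best, scores = select_by_aic(sample)
print(best, scores)
```

In practice the same comparison would be repeated for every grid point and calendar month, with the candidate set extended to the distributions actually considered.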

3.1.4. Anderson–Darling Test (AD Test)

The Anderson–Darling test is particularly useful in the analysis of climatic and hydrological variables as it provides a reliable assessment of distributional fit even in the extremes of the distribution, which is crucial for studies involving precipitation and temperature.

It belongs to the family of goodness-of-fit tests and represents an extension of the classical Kolmogorov–Smirnov test. The core idea is to compare the empirical cumulative distribution function with a theoretical cumulative distribution function of the candidate distribution. In contrast to the KS test, the Anderson–Darling test assigns greater weight to discrepancies in the tails of the distribution.

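The test statistic is defined as:

$$A^2 = -n - \frac{1}{n} \sum_{i=1}^{n} (2i - 1) \left[\ln F(x_{(i)}) + \ln\left(1 - F(x_{(n+1-i)})\right)\right]$$

where $x_{(i)}$ denotes the ordered sample values, $F$ is the theoretical cumulative distribution function of the candidate distribution, and $n$ is the sample size.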

Large values of the statistic indicate a lack of fit between the sample and the theoretical distribution.
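The Anderson–Darling statistic can be computed directly from the ordered sample and a candidate CDF. The sketch below assumes the standard form $A^2 = -n - \frac{1}{n}\sum_{i}(2i-1)[\ln F(x_{(i)}) + \ln(1 - F(x_{(n+1-i)}))]$; the function names, the normal candidate, and the sample values are hypothetical and serve only to illustrate the calculation.

```python
import math

def normal_cdf(x, mu, sigma):
    """CDF of a normal distribution, expressed through the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def anderson_darling(sample, cdf, eps=1e-300):
    """A^2 statistic of `sample` against a fully specified candidate CDF."""
    xs = sorted(sample)
    n = len(xs)
    total = 0.0
    for i in range(1, n + 1):
        f_low = max(cdf(xs[i - 1]), eps)        # F(x_(i))
        f_high = min(cdf(xs[n - i]), 1.0 - eps)  # F(x_(n+1-i))
        total += (2 * i - 1) * (math.log(f_low) + math.log(1.0 - f_high))
    return -n - total / n

# Hypothetical standardized anomalies, for illustration only.
anomalies = [-1.5, -0.8, -0.3, 0.0, 0.2, 0.7, 1.1, 1.6]
a2_good = anderson_darling(anomalies, lambda x: normal_cdf(x, 0.0, 1.0))
a2_bad = anderson_darling(anomalies, lambda x: normal_cdf(x, 3.0, 1.0))
print(a2_good, a2_bad)  # the badly centered candidate yields the larger value
```

The weights $(2i-1)$ combined with the logarithms of $F$ and $1-F$ are what make the statistic sensitive to discrepancies in the tails.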

3.2. Statistical Tests Used

To assess trends in Shannon entropy for both precipitation and temperature, the block bootstrap resampling technique was employed to generate multiple statistical realizations and to estimate stable entropy values. For each iteration, a separate estimation of the marginal distribution parameters was performed, followed by the construction of a joint distribution using copula functions.
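A block bootstrap preserves short-range serial dependence by resampling contiguous blocks rather than individual observations. The following sketch shows a moving (overlapping) block variant; the block length, the choice of the moving-block scheme, and the function name are assumptions, since the text does not specify them.

```python
import random

def moving_block_bootstrap(series, block_length, rng):
    """Resample `series` by concatenating randomly chosen contiguous blocks."""
    n = len(series)
    n_blocks = -(-n // block_length)  # ceiling division
    resampled = []
    for _ in range(n_blocks):
        start = rng.randrange(0, n - block_length + 1)
        resampled.extend(series[start:start + block_length])
    return resampled[:n]  # trim to the original length

# Hypothetical 70-element window (indices stand in for yearly values).
window = list(range(70))
replicate = moving_block_bootstrap(window, block_length=5, rng=random.Random(42))
print(replicate[:10])
```

Each replicate would then be passed through the marginal fitting and copula construction steps to obtain one realization of the entropy.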

3.2.1. Pettitt Test (PCPT)

The PCPT has been widely used to detect changes in observed climatic and hydrological time series [

8,

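16]. The Pettitt test can also be used to locate an unknown change point by considering a sequence of random variables $X_1, X_2, \ldots, X_T$ that has a change point at $\tau$. As a result, $X_1, \ldots, X_\tau$ have a common distribution $F_1(x)$, but $X_{\tau+1}, \ldots, X_T$ have a different distribution $F_2(x)$, where $F_1(x) \neq F_2(x)$. The null hypothesis $H_0$ (no change, $\tau = T$) was tested against the alternative hypothesis $H_1$ (change, $1 \le \tau < T$) using the non-parametric statistic

$$K_T = \max_{1 \le t \le T} |U_{t,T}|$$

where:

$$U_{t,T} = \sum_{i=1}^{t} \sum_{j=t+1}^{T} \operatorname{sgn}(X_i - X_j), \qquad K_T^{+} = \max_{1 \le t \le T} U_{t,T}$$

for the downward shift and

$$K_T^{-} = -\min_{1 \le t \le T} U_{t,T}$$

for the upward shift. The confidence level associated with $K_T^{+}$ or $K_T^{-}$ is approximately determined by:

$$\rho = \exp\left(\frac{-6 K_T^{2}}{T^{3} + T^{2}}\right)$$

When $\rho$ is smaller than the specified confidence level (for example, in this study, 0.95 was adopted), the null hypothesis is rejected.

The approximate $p$-value for the change point is defined as:

$$p = 1 - \rho$$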

In this study, the Pettitt test was specifically applied to identify change points in the time series of Shannon entropy derived from monthly precipitation totals and average monthly temperatures. PCPT, based on a test statistic compared against a critical value, allows for determining whether the null hypothesis of no abrupt change can be rejected [

84]. This method is widely used in climatological and hydrological analyses due to its robustness in detecting structural changes in environmental time series [

12]. The test was applied recursively: after identifying the first change point at the 5% significance level, the corresponding segment was removed and the remaining data were analyzed again.
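The single change-point step of the Pettitt procedure can be sketched as follows. The code uses the two-sided approximation $p \approx 2\exp\left(-6K^2/(T^3 + T^2)\right)$ from Pettitt's original formulation, which is an assumption here and may differ from the convention adopted in this study; the function names and the example series are hypothetical, and the recursive re-application to remaining segments is not shown.

```python
import math

def sign(v):
    return (v > 0) - (v < 0)

def pettitt(series):
    """Return (change point t, statistic K, approximate two-sided p-value).

    t counts observations in the first segment, so the shift occurs
    between observation t and observation t + 1 (1-based).
    """
    n = len(series)
    u_values = []
    for t in range(1, n):  # split after the t-th observation
        u = sum(sign(series[i] - series[j])
                for i in range(t) for j in range(t, n))
        u_values.append(u)
    k = max(abs(u) for u in u_values)
    best_index = max(range(len(u_values)), key=lambda idx: abs(u_values[idx]))
    t_hat = best_index + 1
    p_value = min(1.0, 2.0 * math.exp(-6.0 * k ** 2 / (n ** 3 + n ** 2)))
    return t_hat, k, p_value

# Hypothetical entropy series with an upward shift after the 20th value.
entropy_series = [1.0, 1.2] * 10 + [3.0, 3.2] * 10
t_hat, k_stat, p_value = pettitt(entropy_series)
print(t_hat, k_stat, p_value)
```

The double sum makes this version cubic in the series length, which is acceptable for the short entropy series considered here but would need the usual recursive update of $U_{t,T}$ for long records.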

3.2.2. Modified Mann–Kendall Trend Test (MMKT)

Trend characteristics and patterns in entropy were analyzed using the Modified Mann–Kendall Test (MMKT)—a nonparametric statistical test widely used in studies of climate change [

85,

86]. Null hypotheses were rejected at a significance level of α = 0.05, corresponding to a 95% confidence level. The choice of this method was motivated by the fact that climate time series based on 70-year moving windows exhibit strong autocorrelation, which in the classical Mann–Kendall test leads to underestimation of the variance of the S statistic and thus the overstatement of trend significance.

The solution proposed by Hamed and Rao [

85] introduces a correction to the variance:

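$$\operatorname{Var}^{*}(S) = \operatorname{Var}(S)\, \frac{n}{n^{*}}$$

where $\operatorname{Var}(S) = n(n-1)(2n+5)/18$ is the variance of $S$ for serially independent data without ties, and $n^{*}$ is the effective sample size, computed from the autocorrelation function $\rho(k)$ of the residuals at lag $k$, after removing the trend:

$$\frac{n}{n^{*}} = 1 + \frac{2}{n(n-1)(n-2)} \sum_{k=1}^{n-1} (n-k)(n-k-1)(n-k-2)\, \rho(k)$$

The corrected test statistic is given by:

$$Z = \begin{cases} \dfrac{S - 1}{\sqrt{\operatorname{Var}^{*}(S)}}, & S > 0 \\ 0, & S = 0 \\ \dfrac{S + 1}{\sqrt{\operatorname{Var}^{*}(S)}}, & S < 0 \end{cases}$$

where the $S$ statistic is calculated as:

$$S = \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \operatorname{sgn}(x_j - x_i)$$

and:

$$\operatorname{sgn}(x_j - x_i) = \begin{cases} 1, & x_j - x_i > 0 \\ 0, & x_j - x_i = 0 \\ -1, & x_j - x_i < 0 \end{cases}$$

The null hypothesis of no trend is rejected when $|Z| > z_{1-\alpha/2}$ (i.e., 1.96 for $\alpha$ = 0.05).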

In addition, to identify change points, the Pettitt test was applied [

66,

69,

84]. When a statistically significant change ($\alpha$ = 0.05) was detected, the time series was divided into subsequences, each of which was then re-analyzed using the Modified Mann–Kendall Test (MMKT). If no change point was identified, MMKT was applied to the entire series. This approach enabled the simultaneous detection of monotonic trends and potential regime shifts in the data.
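The variance correction of Hamed and Rao can be sketched in a few steps: compute $S$, remove a robust linear trend, rank the residuals, and inflate or deflate $\operatorname{Var}(S)$ using the significant lag autocorrelations of those ranks. The sketch below makes several assumptions that are not stated in the text: detrending with the median pairwise slope, keeping only lags whose autocorrelation exceeds $1.96/\sqrt{n}$, ignoring ties, and flooring the correction factor to keep the variance positive. All names and the example series are hypothetical.

```python
import math
from statistics import median

def sign(v):
    return (v > 0) - (v < 0)

def rank(values):
    """Ranks 1..n (ties broken by position, adequate for a sketch)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    for r, idx in enumerate(order, start=1):
        out[idx] = float(r)
    return out

def autocorrelation(values, lag):
    n = len(values)
    mean = sum(values) / n
    denom = sum((v - mean) ** 2 for v in values)
    num = sum((values[i] - mean) * (values[i + lag] - mean)
              for i in range(n - lag))
    return num / denom

def modified_mann_kendall(x, z_crit=1.96):
    """Return (S, corrected Z, two-sided p-value, correction factor n/n*)."""
    n = len(x)
    pairs = [(i, j) for i in range(n - 1) for j in range(i + 1, n)]
    s = sum(sign(x[j] - x[i]) for i, j in pairs)
    var_s = n * (n - 1) * (2 * n + 5) / 18.0  # classical variance, no ties
    slope = median((x[j] - x[i]) / (j - i) for i, j in pairs)
    resid_ranks = rank([x[i] - slope * i for i in range(n)])
    factor = 1.0
    for k in range(1, n - 2):  # later lags contribute zero weight
        rho = autocorrelation(resid_ranks, k)
        if abs(rho) > z_crit / math.sqrt(n):  # keep significant lags only
            weight = (n - k) * (n - k - 1) * (n - k - 2)
            factor += 2.0 * weight * rho / (n * (n - 1) * (n - 2))
    factor = max(factor, 1e-6)  # guard against a non-positive variance
    sd = math.sqrt(var_s * factor)
    z = (s - 1) / sd if s > 0 else (s + 1) / sd if s < 0 else 0.0
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return s, z, p_value, factor

# Hypothetical 40-value entropy series: linear trend plus an oscillation.
series = [0.2 * i + 0.3 * math.sin(i) for i in range(40)]
s_stat, z_stat, p_val, corr = modified_mann_kendall(series)
print(s_stat, z_stat, p_val, corr)
```

A correction factor above 1 widens the variance and therefore makes the test more conservative, which is the intended effect for positively autocorrelated moving-window series.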

3.2.3. Hirsch–Sen’s Slope Estimator

To quantitatively assess the magnitude of the trend, a non-parametric slope estimator proposed by Sen and later extended by Hirsch was applied. This method calculates the median of pairwise rate-of-change estimates over time, allowing not only for the detection of a trend’s presence, but also for the determination of its direction and magnitude [

87,

88,

89].

The linear trend is estimated using the median of all pairwise slopes:

$$\beta = \operatorname{Median}\left(\frac{x_j - x_i}{j - i}\right)$$

where $1 \le i < j \le n$, and $\beta$ is the median of the slopes over all possible pairs of observations in the dataset.

This estimator provides a robust measure of the rate of change over time, making it suitable for datasets that may include non-normal distributions or outliers.
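The robustness of the median-of-slopes estimator is easy to verify numerically. In the minimal sketch below (function name and data are hypothetical), a single gross outlier leaves the estimated slope unchanged.

```python
from statistics import median

def sen_slope(values, times=None):
    """Median of all pairwise slopes (x_j - x_i) / (t_j - t_i), i < j."""
    n = len(values)
    if times is None:
        times = list(range(n))  # equally spaced observations
    slopes = [(values[j] - values[i]) / (times[j] - times[i])
              for i in range(n - 1) for j in range(i + 1, n)]
    return median(slopes)

clean = [1.0, 3.0, 5.0, 7.0, 9.0]
with_outlier = [1.0, 3.0, 50.0, 7.0, 9.0]  # third value corrupted
print(sen_slope(clean), sen_slope(with_outlier))
```

An ordinary least-squares slope fitted to the second series would be pulled away from 2 by the outlier, whereas the median of the pairwise slopes is not.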

3.2.4. Kolmogorov–Smirnov Test (KS)

The Kolmogorov–Smirnov (KS) test was used in this study as a statistical tool to assess whether the distributions of correlations between the entropy measures and extreme meteorological variables conform to a normal distribution.

The primary goal of applying the KS test was to verify whether the spatial and seasonal distributions of the Spearman correlation values exhibited a shape consistent with the normal distribution, which is critical for properly interpreting the strength and nature of dependencies between the variables. The test statistic is:

$$D = \sup_{x} \left| F_1(x) - F_2(x) \right|$$

where $F_1(x)$ and $F_2(x)$ are the empirical cumulative distribution functions of the two samples, and $D$ denotes the maximum absolute difference between the two distribution functions.
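The maximum absolute difference between two empirical cumulative distribution functions can be evaluated exactly by checking every observed value, since both step functions change only at those points. The sketch below follows the two-sample form described above; for a comparison against a fitted normal distribution, the second empirical CDF would be replaced by the normal CDF. The function name and sample values are hypothetical.

```python
from bisect import bisect_right

def ks_two_sample_statistic(sample_a, sample_b):
    """Maximum absolute difference between two empirical CDFs."""
    a = sorted(sample_a)
    b = sorted(sample_b)
    d = 0.0
    for x in sorted(set(a) | set(b)):
        f_a = bisect_right(a, x) / len(a)  # share of a-values <= x
        f_b = bisect_right(b, x) / len(b)  # share of b-values <= x
        d = max(d, abs(f_a - f_b))
    return d

# Hypothetical Spearman correlation values from two seasons.
winter = [-0.31, -0.12, 0.05, 0.18, 0.27]
summer = [0.02, 0.21, 0.33, 0.41, 0.56]
print(ks_two_sample_statistic(winter, summer))
```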

4. Shannon Entropy as a Measure of Climate Information

Shannon entropy, though originally derived from information theory, is widely applied in environmental and climate data analyses as a nonparametric measure of uncertainty, disorder, and variability in probability distributions. In climatological literature, informational entropy has been successfully used to analyze seasonal and spatial climate variability, as well as to detect shifts in weather regimes. In the present approach, emphasis is placed on the empirical evaluation of entropy’s evolution across time and space as an indicator of local climate instability—without requiring reference to an external or idealized distribution [

90]. Defining such a “reference” distribution can be challenging, if not impossible, in the context of complex and nonlinear atmospheric processes. Under conditions of high meteorological variability and spatial heterogeneity, adopting an arbitrary reference distribution may lead to ambiguous or misleading interpretations. It is important to note that Shannon entropy can serve as an indicator of the overall variability and instability of a distribution, but it should not be interpreted as a measure of the intensity or frequency of extreme events.

For this reason, a self-contained approach based on empirical entropy was adopted. This enabled tracking the degree of order in weather data without relying on additional model assumptions, thereby enhancing the method’s universality and robustness against errors arising from incorrect distributional specifications. In informational terms, an increase in Shannon entropy reflects a higher level of randomness and complexity in the distribution of climate variables, which directly translates into a reduced predictability of weather conditions and, potentially, greater vulnerability of the system to extreme events. Thus, the use of entropy as an indicator for analyzing climate variability aligns with the growing interest in nonparametric measures of uncertainty that allow for the assessment of irregularities and risk within a dynamically changing climate system.

Shannon entropy quantifies the uncertainty associated with predicting the value of a random variable [

5,

39,

91]. It is calculated from the estimated probability distribution, and the accuracy of this estimate directly influences the reliability of the entropy computation. An improperly selected or poorly fitted distribution may result in erroneous entropy values, potentially leading to incorrect conclusions about the underlying climate structure.

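The Shannon entropy of a continuous bivariate random variable $(X, Y)$, with joint probability density function $f(x, y)$, is defined as [38, 92, 93]:

$$H(X, Y) = -\iint f(x, y) \log f(x, y)\, dx\, dy$$

Here, $f(x, y)$ is the joint PDF of the bivariate distribution derived from the copula and the marginal PDFs $f_X(x)$ and $f_Y(y)$. The copula density $c(u, v)$ is derived from a selected family of copulas.

The formula for the joint PDF becomes:

$$f(x, y) = c\big(F_X(x), F_Y(y)\big)\, f_X(x)\, f_Y(y)$$

where $F_X$ and $F_Y$ are the marginal cumulative distribution functions. The definition

$$H(X, Y) = -\mathbb{E}\left[\log f(X, Y)\right]$$

is then used to estimate the entropy as the negative mean of the log joint PDF.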
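The "negative mean of the log joint PDF" can be illustrated with a Monte Carlo sketch in which the joint density is assembled from a copula density and two marginal densities. The example assumes a Gaussian copula with normal marginals, because this combination has a closed-form entropy against which the estimate can be checked; the parameter values and function names are hypothetical, and the result is in nats because natural logarithms are used.

```python
import math
import random
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()

def gaussian_copula_log_density(u, v, rho):
    """log c(u, v) for a Gaussian copula with correlation rho."""
    a = STANDARD_NORMAL.inv_cdf(u)
    b = STANDARD_NORMAL.inv_cdf(v)
    quad = rho ** 2 * (a * a + b * b) - 2.0 * rho * a * b
    return -0.5 * math.log(1.0 - rho ** 2) - quad / (2.0 * (1.0 - rho ** 2))

def joint_log_pdf(x, y, marg_x, marg_y, rho):
    """log f(x, y) = log c(F_X(x), F_Y(y)) + log f_X(x) + log f_Y(y)."""
    u, v = marg_x.cdf(x), marg_y.cdf(y)
    return (gaussian_copula_log_density(u, v, rho)
            + math.log(marg_x.pdf(x)) + math.log(marg_y.pdf(y)))

def entropy_monte_carlo(marg_x, marg_y, rho, n_draws, seed):
    """Estimate H(X, Y) = -E[log f(X, Y)] by sampling from the model."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x = marg_x.mean + marg_x.stdev * z1
        y = marg_y.mean + marg_y.stdev * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)
        total += joint_log_pdf(x, y, marg_x, marg_y, rho)
    return -total / n_draws

def bivariate_normal_entropy(sd_x, sd_y, rho):
    """Closed-form differential entropy (nats) of a bivariate normal."""
    return 1.0 + math.log(2.0 * math.pi * sd_x * sd_y) + 0.5 * math.log(1.0 - rho ** 2)

# Hypothetical marginals: temperature (deg C) and precipitation (mm).
temperature = NormalDist(mu=10.0, sigma=2.0)
precipitation = NormalDist(mu=60.0, sigma=15.0)
h_est = entropy_monte_carlo(temperature, precipitation, rho=-0.4, n_draws=20000, seed=1)
h_exact = bivariate_normal_entropy(2.0, 15.0, -0.4)
print(h_est, h_exact, h_est / math.log(2.0))  # last value: estimate in bits
```

For other copula families or marginals, only the two density functions would change; the averaging step stays the same.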

In discussing units of Shannon entropy for continuous distributions, the results are typically expressed in units of information—nats (when natural logarithms are used) or bits (when base-2 logarithms are used). The choice of unit depends on analytical conventions and the logarithmic system employed [

12].

To preempt known criticisms and limitations associated with the use of Shannon entropy, this study was designed with methodological rigor. Measures implemented included:

standardization of measurement units across the entire dataset,

consistent estimation of marginal distribution parameters using the Maximum Likelihood Estimation (MLE) method,

and uniform discretization procedures for all input data.

The selection of both marginal distributions and copula functions was guided by the Akaike Information Criterion (AIC), allowing for an objective assessment of model fit under consistent modeling assumptions. These methodological safeguards ensured the integrity of the entire procedure, aligning it with the rigorous standards required in climate data analysis—particularly in the context of studying extreme weather events and their relationship to informational measures of uncertainty, such as entropy.

One of the key limitations in using Shannon entropy as a measure of uncertainty in climate analyses is that the entropy of a probability distribution does not necessarily reflect the temporal predictability of a signal. It is possible for a meteorological variable (e.g., temperature or precipitation) to exhibit high entropy, suggesting substantial uncertainty about its values, while its temporal structure remains orderly and regular, making the signal highly predictable. Conversely, a signal with low entropy—which theoretically implies lower uncertainty—may, in practice, be chaotic if its values are irregularly distributed in time.

Such discrepancies arise because classical information entropy operates solely on frequency distributions, ignoring the temporal context and sequence dynamics. A particularly illustrative example is random time series shuffling (permutation): while such a permutation does not alter the entropy of the distribution (since the set of values and their probabilities remain unchanged), it completely destroys the temporal structure of the signal, resulting in a loss of predictability. This example highlights a critical limitation of the current approach based on static distributions.
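The permutation argument can be checked numerically. In the sketch below, a perfectly periodic signal and its random shuffle have the same histogram-based entropy, while the lag-1 autocorrelation, used here as a simple proxy for temporal predictability, collapses after shuffling. The signal, the number of bins, and the function names are assumptions chosen for illustration.

```python
import math
import random
from collections import Counter

def histogram_entropy(values, n_bins, lo, hi):
    """Shannon entropy (bits) of the binned value distribution."""
    width = (hi - lo) / n_bins
    counts = Counter(min(int((v - lo) / width), n_bins - 1) for v in values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in sorted(counts.values()))

def lag1_autocorrelation(values):
    n = len(values)
    mean = sum(values) / n
    denom = sum((v - mean) ** 2 for v in values)
    num = sum((values[i] - mean) * (values[i + 1] - mean) for i in range(n - 1))
    return num / denom

# Hypothetical monthly signal with a pure annual cycle (20 years).
signal = [math.sin(2.0 * math.pi * i / 12.0) for i in range(240)]
shuffled = signal[:]
random.Random(0).shuffle(shuffled)

h_signal = histogram_entropy(signal, 8, -1.0, 1.0)
h_shuffled = histogram_entropy(shuffled, 8, -1.0, 1.0)
r_signal = lag1_autocorrelation(signal)
r_shuffled = lag1_autocorrelation(shuffled)
print(h_signal, h_shuffled, r_signal, r_shuffled)
```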

Thus, while Shannon entropy is a powerful tool for analyzing spatial and structural variability, its temporal interpretation requires caution. There is a need to consider extending the approach to include dynamic or algorithmic entropy measures that account for sequence order and the properties of the underlying generating process.

The use of information entropy as a measure of uncertainty in climate variables, although theoretically well-founded and innovative, also involves important practical limitations, stemming from both the characteristics of the input data and the analytical methodology.

One major limitation is the spatial resolution of the data. A clear example is the comparative analysis between the NOAA and E-OBS datasets. Although grid harmonization (through spatial averaging or interpolation) was performed, such transformations inevitably introduce a degree of uncertainty—especially in regions with strong topographic variability (e.g., mountains and coastal areas), where local weather conditions can differ significantly over short distances.

A second important limitation concerns the temporal scale and data aggregation. While monthly aggregation facilitates comparisons and reduces random fluctuations, it can mask short-term extremes and dynamic weather changes—particularly relevant for convective precipitation, heatwaves, or frost events.

Moreover, entropy calculated from probability distributions estimated within a 70-year window for a given calendar month does not fully capture the intensity and frequency of extreme events occurring over shorter time intervals, which may limit its usefulness in the context of early warning for abrupt phenomena.

A third constraint involves the method of estimating the joint probability distributions, which directly affects the accuracy of Shannon entropy estimation. The use of long historical time series (e.g., 70-year moving windows) is justified from the perspective of statistical stability, but it can lead to the dilution of climate change signals, especially in the presence of non-stationarities or change points, as revealed by the Pettitt test. Consequently, entropy calculated over extended periods may not reflect the current structure of weather variability over shorter time horizons.

A fourth potential drawback is the method’s sensitivity to missing data and how these gaps are handled. Although NOAA data undergo homogenization and quality control, gaps may still occur in certain regions (e.g., over seas, sparsely populated, or mountainous areas), which are filled using various interpolation techniques. In regions with a high share of estimated data, entropy results may be subject to greater systematic error.

The combined effect of these limitations can reduce the accuracy of entropy estimation, particularly in localized analyses and at fine spatial scales. This may lead to the under- or overestimation of variability, which in turn can distort interpretations of trends and correlations with extreme weather events. On regional or continental scales, these limitations are less severe—results tend to retain greater statistical consistency and are useful for spatial comparisons. Nevertheless, the universality of findings should be treated with caution, especially when applied to local climate extreme forecasting or risk assessment in sensitive sectors, such as agriculture, water management, or disaster preparedness.

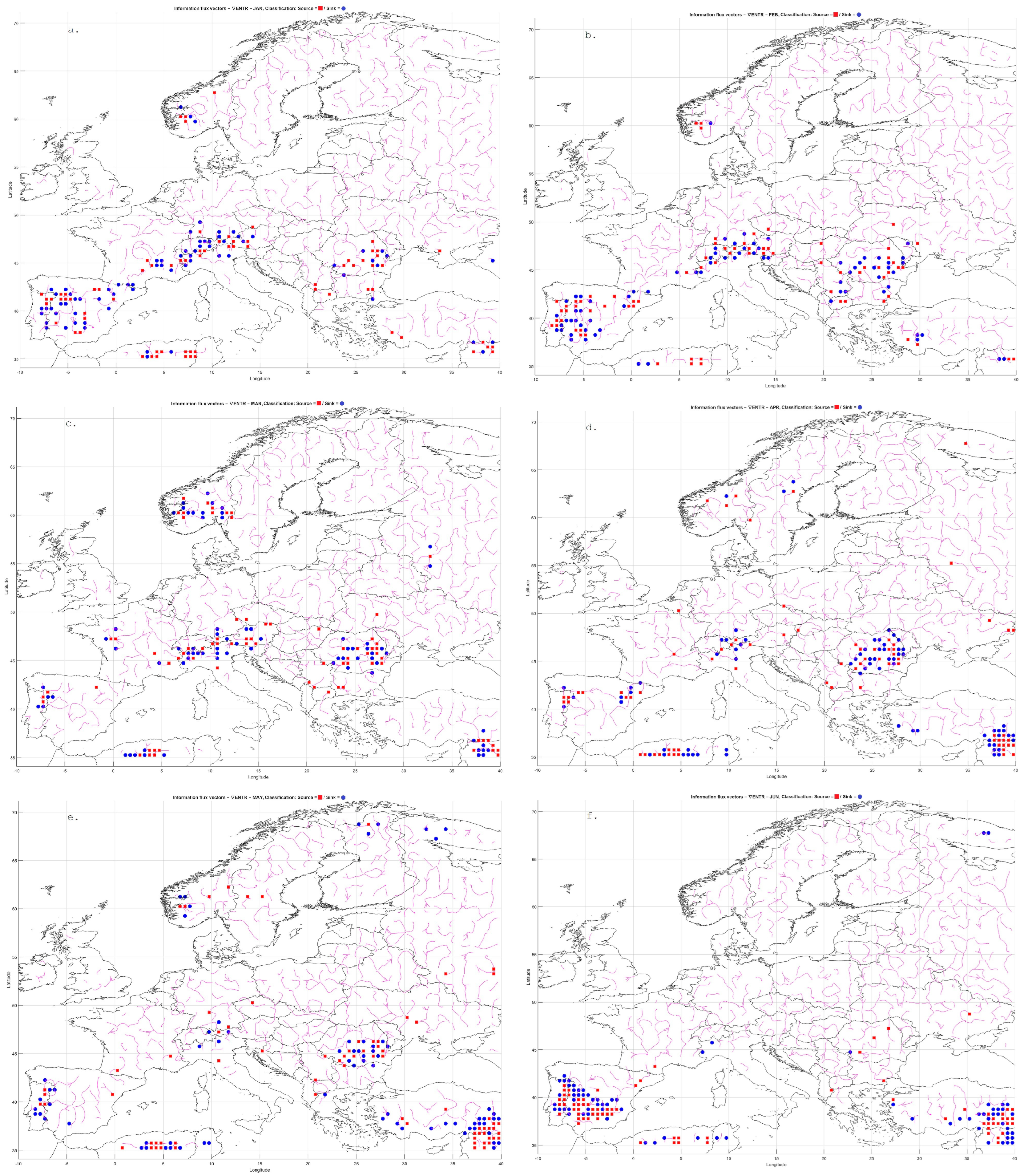

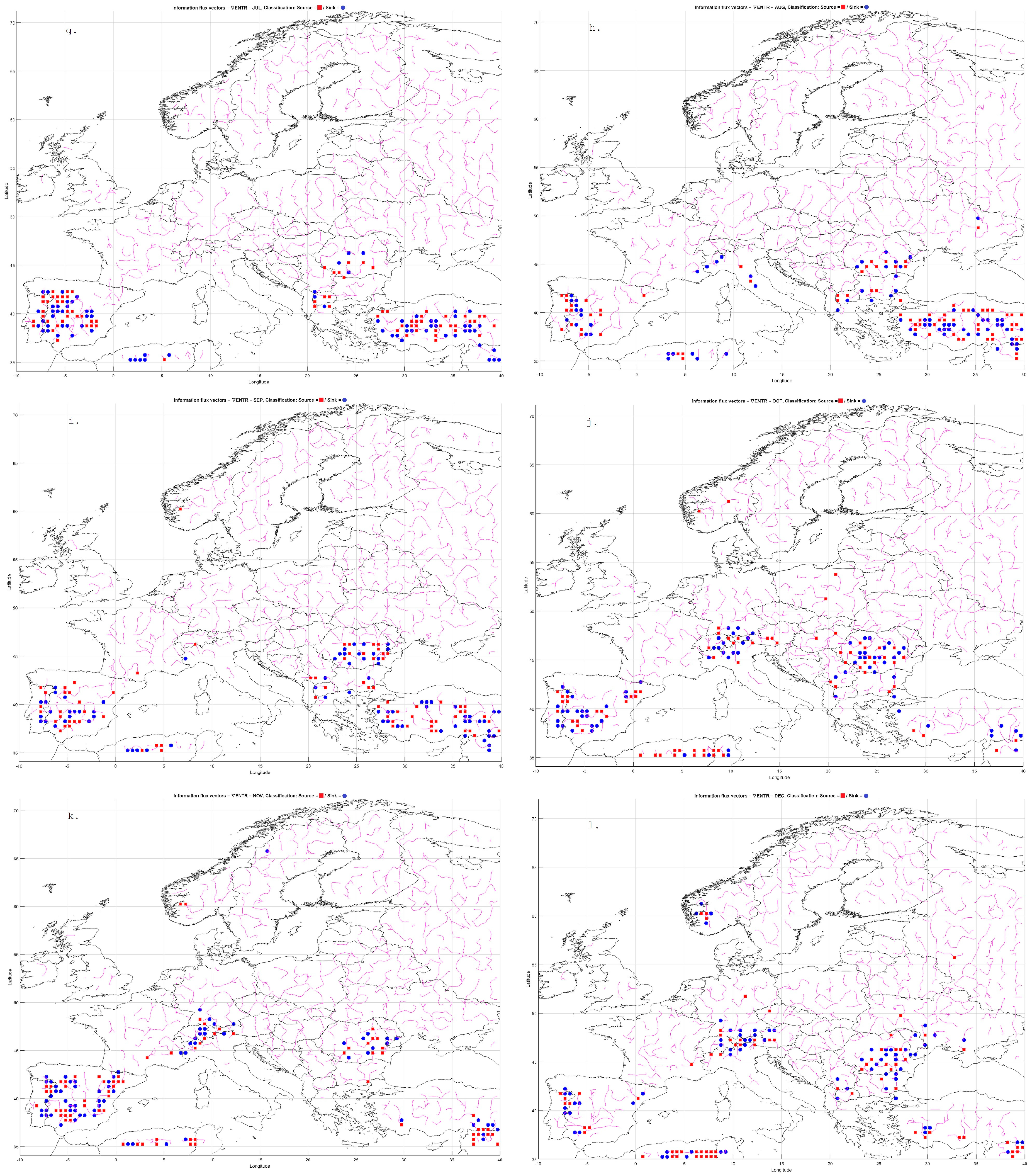

4.1. Entropy Fluxes as a Tool for Spatiotemporal Analysis of Climate Variability

In the analysis of extreme weather phenomena—such as heatwaves, droughts, or flash floods—information-theoretic metrics, particularly informational entropy, are increasingly being employed [

61,

94]. The proposed approach extends classical entropy analysis by incorporating field-based aspects: spatial gradients, divergence, and the associated entropy flux [

95]. The introduction of operators known from continuum physics (such as $\nabla$ and $\nabla \cdot$) enables a mathematical representation of informational flows between adjacent cells of the analytical grid [

96]. This serves as a foundation for detecting sources and sinks of climate variability. In particular, extreme events may be preceded by changes in the informational structure of the atmospheric system, whose spatiotemporal patterns can be captured through the analysis of entropy fluxes [

17,

97].

Informational entropy is not a classical energy function but rather a measure of informational disorder—making its diffusion conceptually different from classical heat diffusion. The interpretation of “information flux” as the spatial derivative of entropy is metaphorical but statistically valid, provided it is understood as a representation of statistical structure, rather than a literal physical energy transfer. It is essential to emphasize that in the context of “climate information”, the term refers to the statistical structure of weather variability, not to concrete datasets [

24].

This study presents a framework based on the spatiotemporal analysis of informational entropy distributions aimed at understanding the dynamics of extreme climate events. Each geographic grid cell (with a resolution of 0.25° × 0.25°) is assumed to hold a value of entropy $H(x, y, t)$, calculated from meteorological variables describing local conditions. This entropy can be interpreted as a measure of uncertainty or complexity of the climatic regime at a given location and time. The spatial gradient vector of entropy, $\nabla H$, indicates the direction and intensity of uncertainty change across space, while its orientation reveals the direction in which structural changes in information propagate.

The entropy flux is then defined as:

$$\mathbf{J} = -D\, \nabla H$$

with $D$ denoting the entropy diffusion coefficient, representing a hypothetical flow of climate information between neighboring cells, analogous to diffusion mechanisms in physics. The divergence of this flux,

$$\nabla \cdot \mathbf{J} = -\nabla \cdot (D\, \nabla H)$$

allows for the identification of areas that act as sources or sinks of variability, which can be critical in detecting spatial dynamic regimes. This formalism is grounded in classical field theory and employs well-established mathematical tools of vector calculus (gradient, divergence, Laplacian). Applying this methodology to meteorological data enables the identification of regions with elevated instability, which may serve as precursors to extreme weather events.

In particular, the temporal derivative of entropy, $\partial H / \partial t$, serves as an indicator of local atmospheric system dynamics, where sharp increases may signal impending destabilization, such as intense precipitation or heatwaves. This approach effectively integrates classical concepts of information with climate process analysis and introduces a novel dimension to the detection and prediction of environmental hazards [

60].

It should be emphasized that the interpretations of entropy gradients and fluxes presented here are hypothetical and serve as a conceptual framework for analyzing the dynamics of the climate system. Entropy values, their spatial gradients, and temporal derivatives are treated as quantitative indicators describing the system’s complexity and uncertainty; however, their links to atmospheric mechanisms—such as the persistence of local weather regimes or the generation of extremes—should be regarded as working assumptions that require further verification. In particular, the interpretation of the “stability of local weather regimes” refers to long-term statistical stability within the 70-year analysis windows, rather than a direct representation of short-term synoptic dynamics. We consider our approach as a starting point for further empirical and modeling studies that may confirm or refine the proposed relationships.

4.2. The Informational Entropy Field

For the informational entropy function derived from the bivariate distribution of temperature and precipitation variables $(T, P)$ at a given spatial point $(x, y)$, the time-window-based estimate can be expressed as:

$$H(x, y, t) = -\iint f_{x,y,t}(T, P) \log f_{x,y,t}(T, P)\, dT\, dP$$

where $f_{x,y,t}$ is the joint PDF estimated at that point from the 70-year window associated with time $t$.

For each grid cell with geographical coordinates $(x, y)$ at time $t$, the concept of the spatial entropy gradient vector is introduced as:

$$\nabla H(x, y, t) = \left( \frac{\partial H}{\partial x}, \frac{\partial H}{\partial y} \right)$$

where $\partial H / \partial x$ and $\partial H / \partial y$ denote partial spatial derivatives of entropy along the west–east and south–north axes, respectively. This gradient reflects both the direction and intensity of local informational complexity changes in space. The entropy flux vector is defined analogously to classical diffusion:

$$\mathbf{J}(x, y, t) = -D\, \nabla H(x, y, t)$$

where $D$ is the entropy diffusion coefficient, which may be treated either as a fixed empirical constant or as a function of local environmental conditions.

Further analysis relies on the divergence operator; sources and sinks of entropy in space are identified via the divergence of the entropy flux vector:

$$\nabla \cdot \mathbf{J} = \frac{\partial J_x}{\partial x} + \frac{\partial J_y}{\partial y}$$

When $\nabla \cdot \mathbf{J} > 0$, positive divergence values indicate areas (grid cells) acting as sources of information, or generators of variability.

When $\nabla \cdot \mathbf{J} < 0$, negative divergence values indicate absorbers of variability, associated with relative atmospheric stability.
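On a regular grid, the gradient, the flux $\mathbf{J} = -D\,\nabla H$, and its divergence can be approximated with finite differences. The sketch below assumes a constant diffusion coefficient, central differences in the interior, and one-sided differences at the edges; the grid layout (rows as the south–north axis, columns as the west–east axis), the function names, and the test field are hypothetical.

```python
def gradient_2d(field, dx, dy):
    """Finite-difference d/dx (along columns) and d/dy (along rows).

    Requires at least two rows and two columns.
    """
    n_rows, n_cols = len(field), len(field[0])
    d_dx = [[0.0] * n_cols for _ in range(n_rows)]
    d_dy = [[0.0] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        for c in range(n_cols):
            c0, c1 = max(c - 1, 0), min(c + 1, n_cols - 1)
            r0, r1 = max(r - 1, 0), min(r + 1, n_rows - 1)
            d_dx[r][c] = (field[r][c1] - field[r][c0]) / ((c1 - c0) * dx)
            d_dy[r][c] = (field[r1][c] - field[r0][c]) / ((r1 - r0) * dy)
    return d_dx, d_dy

def entropy_flux_divergence(entropy, diffusion, dx, dy):
    """Divergence of J = -D * grad(H) for a constant coefficient D."""
    grad_x, grad_y = gradient_2d(entropy, dx, dy)
    flux_x = [[-diffusion * g for g in row] for row in grad_x]
    flux_y = [[-diffusion * g for g in row] for row in grad_y]
    dflux_x_dx, _ = gradient_2d(flux_x, dx, dy)
    _, dflux_y_dy = gradient_2d(flux_y, dx, dy)
    return [[dflux_x_dx[r][c] + dflux_y_dy[r][c]
             for c in range(len(entropy[0]))] for r in range(len(entropy))]

# Hypothetical entropy field H = x^2 + y^2 on a 7 x 7 grid with unit spacing.
bowl = [[float(c * c + r * r) for c in range(7)] for r in range(7)]
div = entropy_flux_divergence(bowl, diffusion=1.0, dx=1.0, dy=1.0)
print(div[3][3])  # negative: the flux converges toward lower entropy values
```

With this sign convention, a field that curves upward like the bowl above yields negative divergence (a sink), while a field that curves downward yields positive divergence (a source). Values in the two outermost rows and columns are affected by the one-sided differences and should be interpreted with care.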

4.3. Relationship with Weather Extremes

Climatic extremes can be analyzed through the lens of local entropy stability. Stability is quantified by examining temporal changes in entropy:

$$\frac{\partial H(x, y, t)}{\partial t}$$

Persistently low entropy values with weak spatial gradients suggest a stable weather regime—a potential predictor of droughts or heatwaves. Conversely, a sharp increase in the entropy time derivative signals regime destabilization, serving as a predictor of floods or severe storms.

The concept of entropy flux offers a novel perspective on climatic processes as a dynamic system of information exchange between neighboring regions. Areas with strong positive divergence may indicate localized instabilities conducive to extreme events—such as the initiation of convective storms or surface overheating under weak circulation conditions. In contrast, regions with negative divergence may correspond to stabilizing zones, for example, persistent high-pressure systems that sustain stable weather conditions.

This spatial differentiation enhances our understanding of which regions act as sources or sinks of variability over a given period, with direct implications for the risk assessment of extreme weather events.

Beyond spatial aspects, the temporal derivative of entropy, $\partial H / \partial t$, provides insight into the stability of local weather regimes. An increase in this derivative can be interpreted as a signal of the destabilization and growing unpredictability of the system, conditions that often precede the onset of climatic extremes. The combined assessment of spatial entropy gradients and the temporal evolution of entropy and its derivatives delivers a more comprehensive picture of atmospheric system dynamics [

98].

5. Results of the Analyses and Discussion

To enable a precise assessment of information entropy trends for precipitation and temperature, a block bootstrap resampling method was applied to generate multiple realizations of Shannon entropy. The entropy calculations were based on the bivariate joint probability distribution of temperature and precipitation (

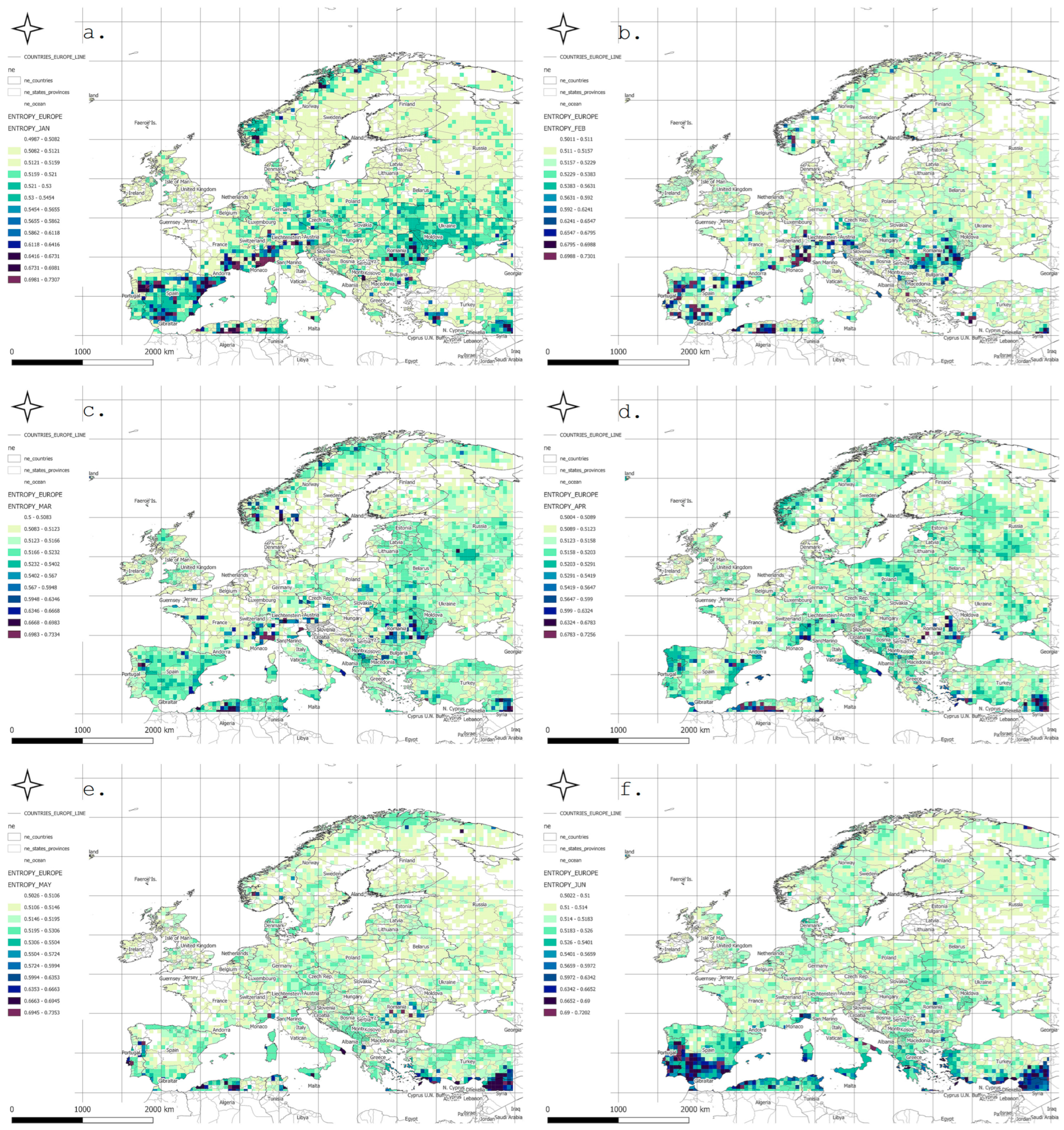

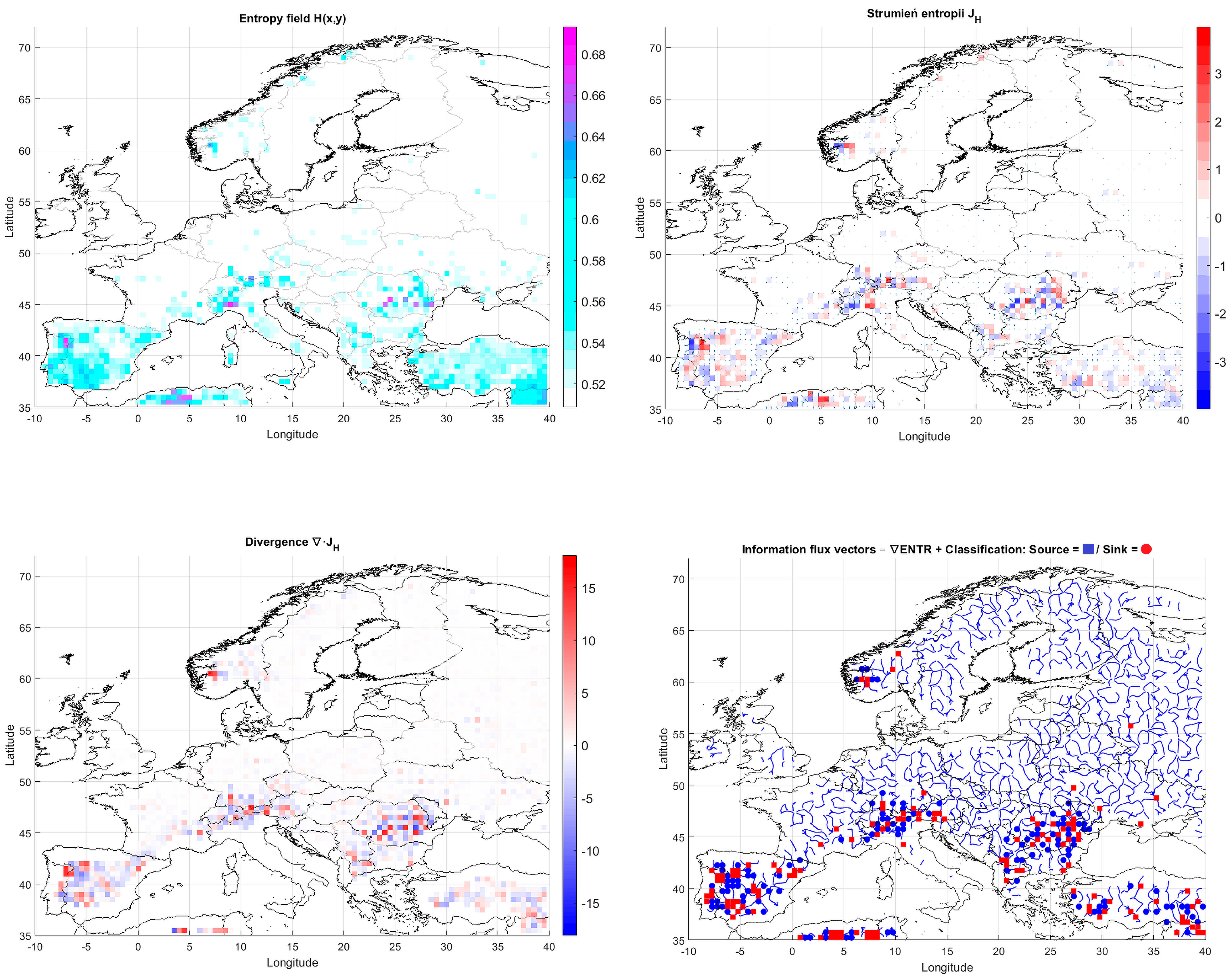

Figure 1).

The analysis of meteorological entropy was conducted separately for each calendar month, using data from the 1901–2010 climatological period. For each of the 40 years analyzed, entropy was calculated using 70-year seasonal time series: for the year 1971, the period 1901–1970 was used; for 1972, the period 1902–1971; and so on, up to 2010, for which data from 1941–2010 were used.

From the resulting time series of entropy values, seasonal trends were computed, and their statistical significance was evaluated using the Modified Mann–Kendall test (MMKT) at a 5% significance level. Additionally, to detect potential change points in the trend trajectories, the Pettitt test (PCPT) was applied, also at a 5% significance level. If the presence of a change point was confirmed, each newly segmented subsequence was analyzed separately for trends using the MMKT. If no change point was identified, the trend was assessed over the entire sequence. The results were presented graphically, providing a clearer and more precise visualization of the changes in entropy values and the evolution of their trends.

5.1. Shannon Entropy Values

For comparative purposes, the Shannon entropy values were normalized to the range $[0, 1]$. The original entropy range, computed via integration from the joint bivariate probability distribution based on a sample size of 70 pairs, spanned from 0 to 12.259 bits. This normalization allowed for the consistent interpretation of spatial and temporal patterns of variability (Figure 1).

The maximum joint entropy for temperature and precipitation was defined with respect to the number of possible states in a 70-element sample. For each marginal variable, the maximum entropy equals $\log_2 70 \approx 6.129$ bits, while for the bivariate distribution it corresponds to $2 \log_2 70 \approx 12.259$ bits ($\log_2 70^2$). This value represents the case of a uniform distribution, in which all pairs are equally probable, and serves as a reference point for normalizing the estimated Shannon entropy values. Through this normalization, entropy values fall within the range $[0, 1]$, allowing for direct comparison across different grid points and time periods. In practice, values close to 1 indicate maximum uncertainty (a distribution close to uniform), whereas values close to 0 reflect a highly concentrated, ordered distribution.
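The normalization constant follows directly from the window length: $2 \log_2 70 \approx 12.259$ bits. A minimal sketch of the rescaling is given below; the function names and the example entropy value are hypothetical.

```python
import math

WINDOW_SIZE = 70  # number of (temperature, precipitation) pairs per window

def max_joint_entropy_bits(n_states=WINDOW_SIZE):
    """Upper reference value: 2 * log2(n) bits for a pair of n-state variables."""
    return 2.0 * math.log2(n_states)

def normalize_entropy(h_bits, n_states=WINDOW_SIZE):
    """Rescale an entropy value in bits to the range [0, 1]."""
    return h_bits / max_joint_entropy_bits(n_states)

print(round(max_joint_entropy_bits(), 3))    # 12.259
print(round(normalize_entropy(6.129), 2))    # 0.5, hypothetical grid-cell value
```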

In January, low entropy values dominated across Northern Europe, indicating stable and predictable winter conditions. Elevated entropy values appeared locally in the south—particularly over the Iberian Peninsula, southern France, and the Mediterranean regions—where precipitation variability is higher.

In February and March, similar patterns persisted, although the zone of increased entropy gradually shifted toward Central Europe. April showed growing spatial differentiation, particularly over the Alps, the Balkans, and Southeastern Europe. In May, a general decline in entropy was observed, reflecting the typical springtime stabilization of the atmosphere.

Entropy peaks in June and July, especially across Southeastern Europe—including the Balkans, Greece, Turkey, and Northern Africa—were likely linked to intensified convective storm activity and localized precipitation events. In August, high entropy zones shifted northward, covering parts of southern Germany, Poland, and Ukraine.

In September, variability was moderate in Western Europe, while remaining elevated in continental regions. October brought a further decline in entropy over Northern and Central Europe, though high values persisted in the Mediterranean basin. In November, entropy decreased across most regions, remaining moderate only in Southeastern Europe. December, like January, was marked by low entropy across nearly all of Europe, associated with dominant, stable winter synoptic patterns.

The observed spatial patterns of entropy revealed a pronounced seasonal rhythm: minimal variability during winter and peak variability during summer, especially in the southern and southeastern parts of the continent. High entropy in the warmer months reflected the intensification of convective processes and increased atmospheric instability.

Mountainous regions—such as the Alps and Carpathians—exhibited elevated entropy values throughout much of the year, indicative of complex orographic conditions and local microcirculations. In contrast, the Mediterranean coasts showed high variability particularly in autumn and winter, associated with episodic Mediterranean cyclones. Scandinavia and Northeastern Europe displayed the lowest entropy values for most of the year, confirming their stable and strongly seasonal climatic character.

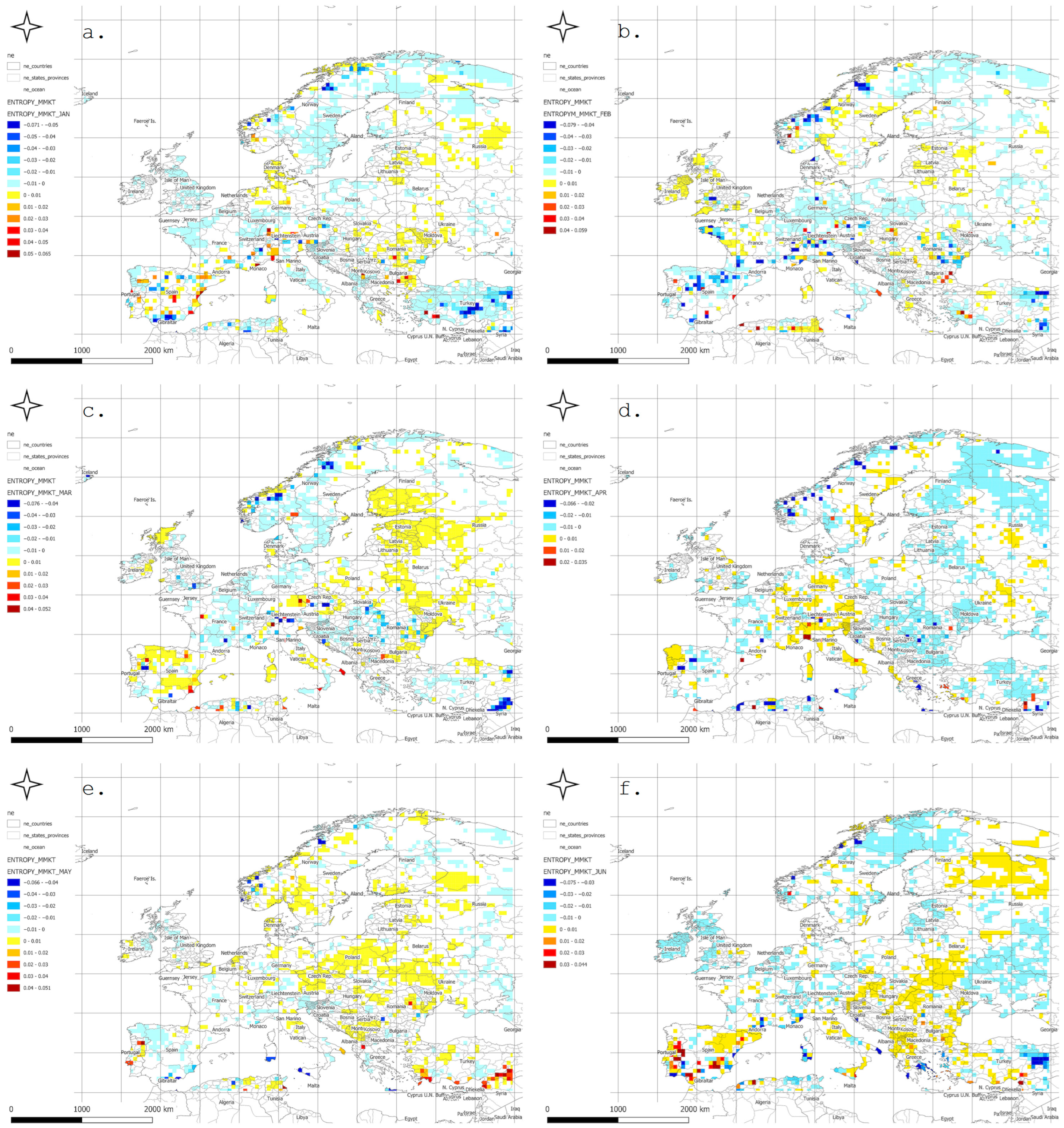

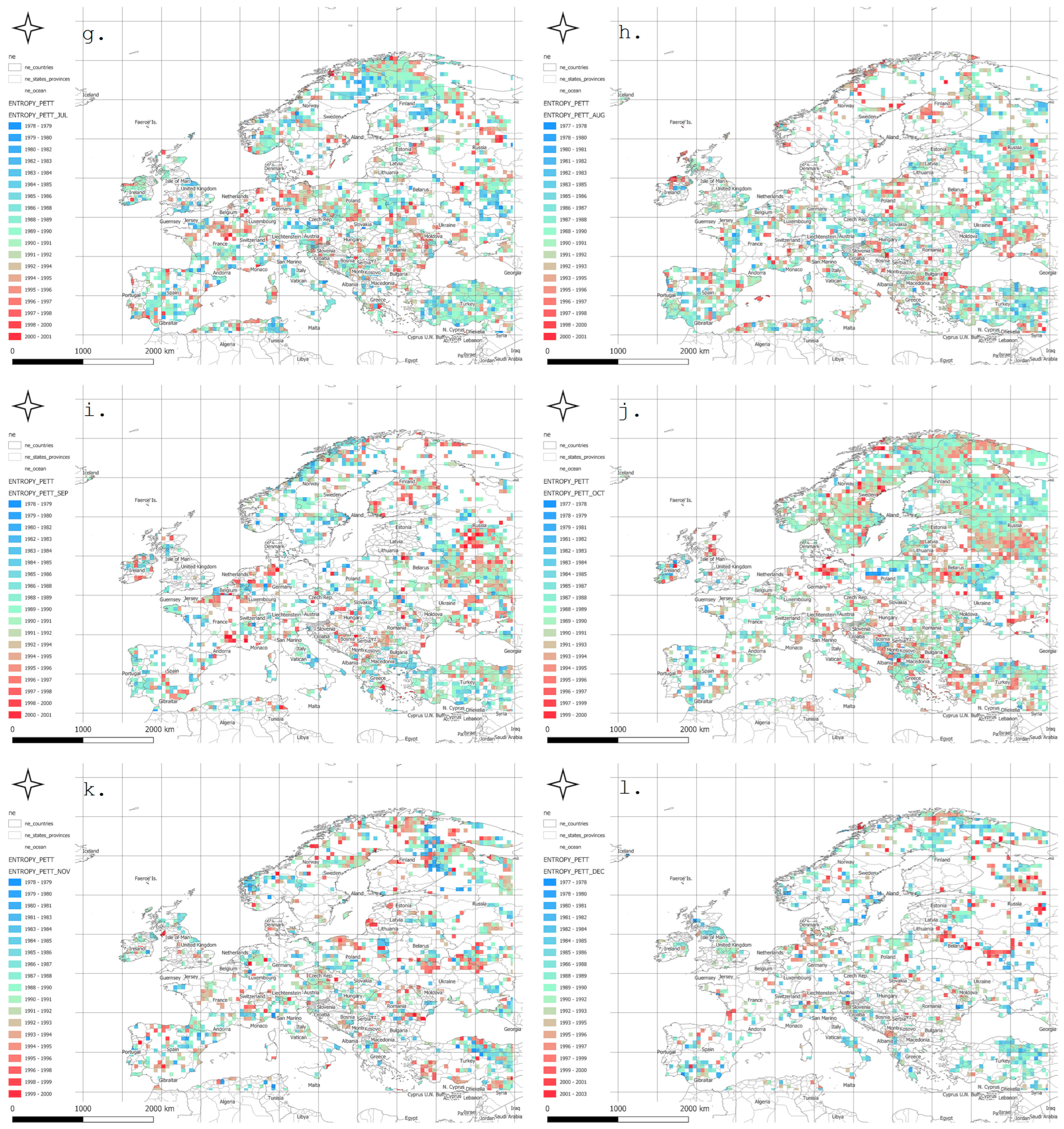

5.2. Trend and Seasonal Variability of Shannon Entropy

The spatial distribution of meteorological entropy trends, calculated separately for each calendar month, is presented in

Figure 2. In January, positive trends (yellows and light oranges) dominated, particularly across Central Europe and Scandinavia, which may indicate increasing variability in winter weather conditions. February displayed a greater number of negative trends in Southeastern Europe and the Alpine region, possibly reflecting a stabilization of local winter climates.

In March, distinctly positive trends appeared in Germany, Poland, Scandinavia, and northern France, suggesting a rise in weather variability during the early spring period. April presented a more spatially scattered trend pattern, though positive values still prevailed across Central and Eastern Europe.

May exhibited particularly strong positive trends in the Black Sea basin, the Balkans, and western Russia. June showed widespread positive trends across most of Europe, indicating a rise in atmospheric instability associated with the onset of summer.

In July and August, positive trends dominated Northern and Central Europe, while the southern parts of the continent showed more areas with no statistically significant changes. September revealed decreasing entropy trends in Spain, Portugal, and southern France, while positive trends persisted in the northern parts of the continent.

In October, entropy increases were especially prominent in Eastern Europe, including the Baltic states and western Russia. November brought marked positive trends in Central and Eastern Europe, along with localized decreasing trends in the western regions. In December, strong positive trends dominated across Northern Europe, with additional increases observed locally in the Alpine and Balkan regions.
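As an illustration of how a trend can be computed separately for each calendar month, the following minimal sketch splits a monthly entropy series by calendar month and estimates a slope for each. The use of the Theil–Sen (Sen's slope) estimator, the function names, and the requirement that the series starts in January and spans whole years are assumptions made for this example; this section does not restate the trend estimator actually used.

```python
import numpy as np

def sen_slope(values):
    """Median of all pairwise slopes (Theil-Sen estimator), per time step."""
    values = np.asarray(values, dtype=float)
    i, j = np.triu_indices(values.size, k=1)  # all index pairs with i < j
    return float(np.median((values[j] - values[i]) / (j - i)))

def monthly_sen_slopes(series):
    """Slope per calendar month for a monthly series.

    Assumption: `series` starts in January and covers whole years,
    so it can be reshaped to (years, 12).
    """
    series = np.asarray(series, dtype=float)
    by_month = series.reshape(-1, 12)  # rows: years, columns: calendar months
    return np.array([sen_slope(by_month[:, m]) for m in range(12)])
```

Applied to every grid cell, the twelve slopes would give one trend map per calendar month; a significance test (for example Mann–Kendall) would have to be added separately.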

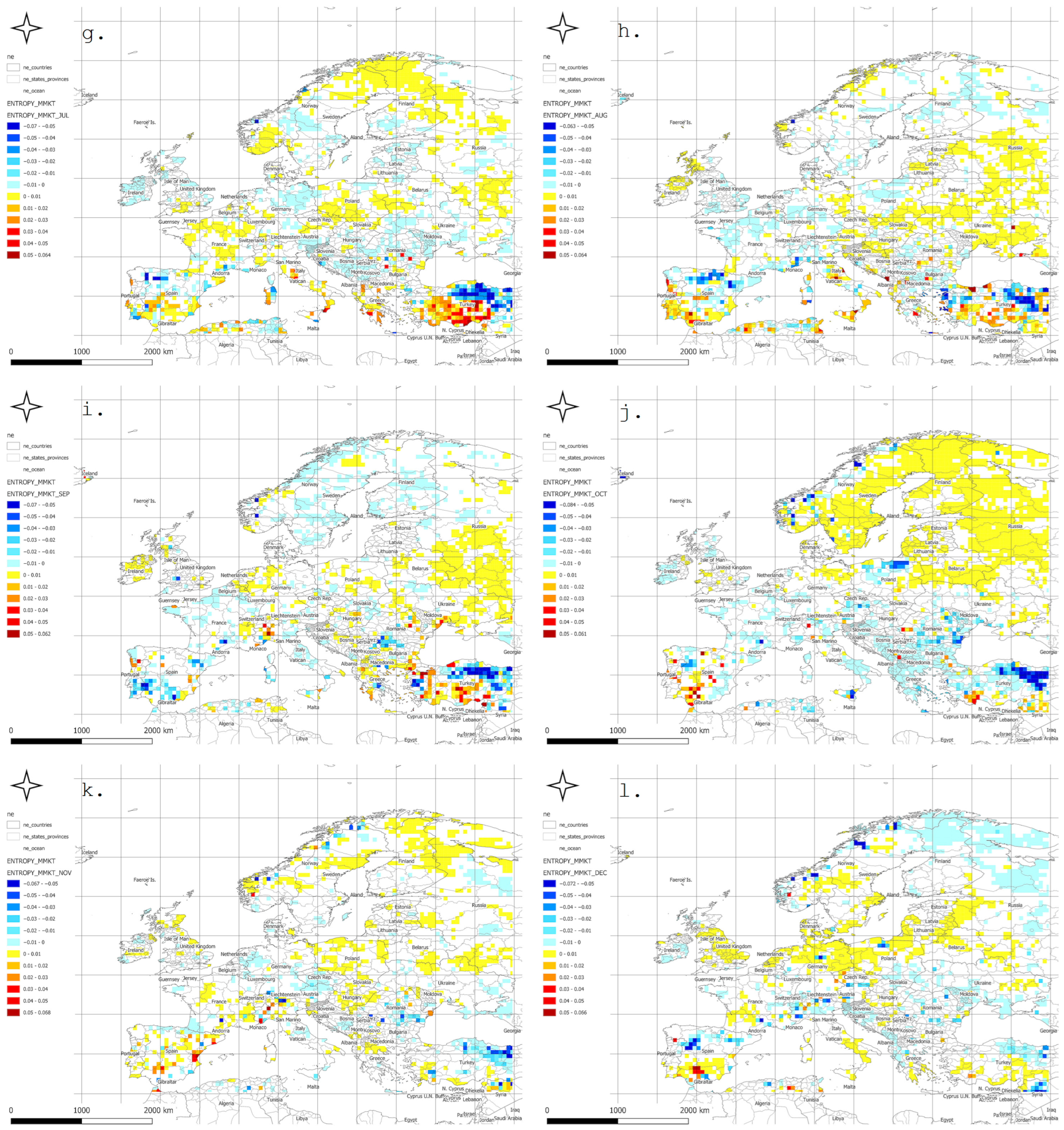

Figure 3 presents the spatial distribution of the year in which a statistically significant change in meteorological entropy trend occurred, as determined using the Pettitt change point test (PCPT) at the 5% significance level.

In January, most change points were detected in windows ending after 1990, particularly across Central and Northern Europe. February showed a more dispersed pattern, with numerous change points in windows ending between 1980 and 1995—especially over the Balkans and southwestern Europe. In March, change points appeared earlier, often in windows ending in the 1970s, mainly in the Alpine region, Germany, and France. April exhibited a concentration of change points in windows ending around 1990, particularly in Central and Eastern Europe.

In May and June, red and orange hues dominated, indicating that the entropy trend changes tended to occur near the end of the analysis period (1995–2010). July and August displayed more spatially and temporally heterogeneous distributions of change points, suggesting that localized processes may be influencing entropy during the summer season.

In September, relatively early change points were observed, particularly in Northern Scandinavia and parts of Western Europe. October and November were characterized by a dense clustering of change points in Central and Northern Europe, primarily during the 1985–2000 period. December showed a prevalence of late-occurring changes, concentrated mostly in Southeastern Europe.

Mountain regions, such as the Alps and Carpathians, frequently exhibited earlier occurrences of change points, which may reflect a heightened sensitivity of entropy to shifting climatic conditions in orographically complex areas. In contrast, the absence of detected change points in regions such as Northern Scandinavia or Southern Spain suggests a relative stability of entropy trends in those areas.

The identified change point patterns also exhibited a seasonal character: during winter months, changes were more likely to occur in the final two decades of the study period, whereas during spring and autumn, the majority of change points clustered in the 1980s and 1990s.
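For readers unfamiliar with the Pettitt test, the sketch below shows one common formulation: the rank-based statistic U_t, its maximum absolute value K, and the usual approximate two-sided p-value 2·exp(−6K²/(n³ + n²)). It is a minimal illustration for a single series (for example, the entropy values of one grid cell and one calendar month) and is not necessarily identical to the implementation used to produce Figure 3; the function name and return convention are hypothetical.

```python
import numpy as np

def pettitt_test(x):
    """Pettitt change point test for a 1-D series.

    Returns (t, K, p): t is the number of observations before the most
    likely change point, K = max|U_t|, and p is the approximate p-value.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    # signs[i, j] = sgn(x_i - x_j)
    signs = np.sign(x[:, None] - x[None, :])
    # U_t = sum_{i <= t} sum_{j > t} sgn(x_i - x_j), for t = 1 .. n-1
    u = np.array([signs[:t, t:].sum() for t in range(1, n)])
    k_idx = int(np.argmax(np.abs(u)))
    k = float(np.abs(u[k_idx]))
    p = min(1.0, 2.0 * float(np.exp(-6.0 * k**2 / (n**3 + n**2))))
    return k_idx + 1, k, p

# Usage with a hypothetical series of yearly entropy values:
t, k, p = pettitt_test([0.0] * 10 + [1.0] * 10)
change_is_significant = p < 0.05  # 5% level, as in the text
```

Given a matching vector of years (or window end years), the change point year would be the entry at position `t - 1` or `t`, depending on whether the change is attributed to the last observation before the shift or the first one after it.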

5.3. Temporal Spearman Correlations

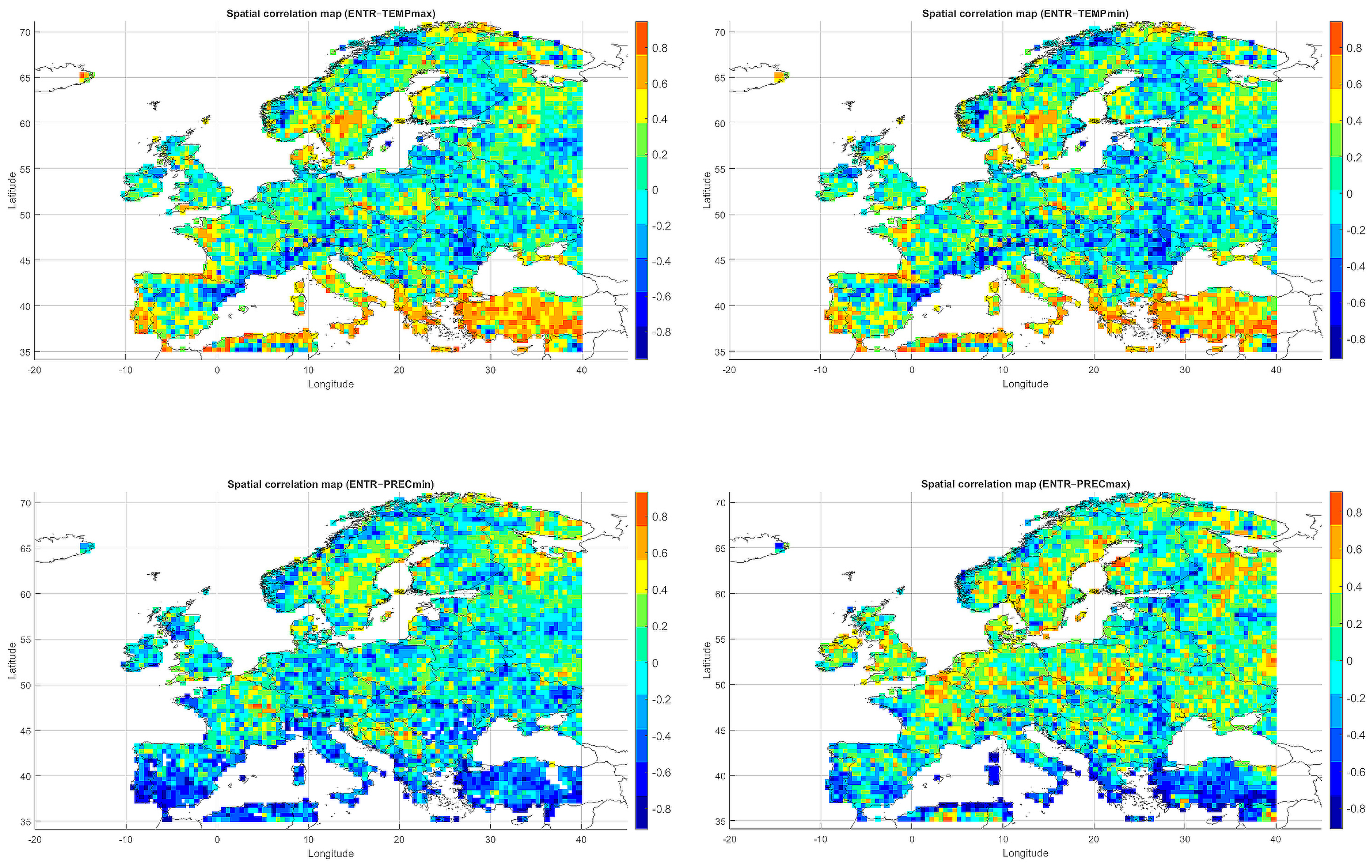

Below, we present correlation maps between meteorological entropy and selected climate parameters. The color scale on the right side of each map indicates the strength and direction of the correlation: warm colors (yellow, orange, red) represent positive correlations, while cool colors (various shades of blue) correspond to negative correlations.

5.3.1. Spatial Relationship Between Entropy and Monthly Maximum Temperature

The Spearman correlation map between informational entropy and monthly maximum temperature (Figure 4) revealed clear spatial variability in their relationship. In Southern Europe, particularly over the Iberian and Apennine Peninsulas, Greece, and Turkey, strong positive correlations dominated, locally exceeding 0.6. This suggests that increased atmospheric irregularity is associated with the occurrence of extremely high temperatures, which is typical of the Mediterranean climate regime.

In Central and Northern Europe (Germany, Poland, the Baltic States), correlations were weak or near zero, possibly reflecting the dominance of other climatic drivers. Scandinavia and the North Sea region also showed positive correlations, although more limited in extent. Mountain regions (the Alps, Carpathians, and Pyrenees) displayed strong local contrasts, likely influenced by orographic effects. A patchwork of correlations was observed in Eastern Europe (Ukraine, Russia), indicating the absence of a unified mechanism. In parts of France and Italy, notable negative correlations appeared, suggesting that higher entropy may coincide with lower maximum temperatures.

Overall, the spatial pattern indicates that the relationship between entropy and extreme temperatures is particularly pronounced in regions characterized by high seasonality and thermal instability.

5.3.2. Spatial Relationship Between Entropy and Monthly Minimum Temperature

The correlation pattern with minimum temperature (Figure 4) differed markedly from that observed for maximum temperature. In Southeastern Europe (Greece, Bulgaria, Turkey, and the Black Sea coast), strong negative correlations dominated, implying that higher entropy may be associated with nighttime cooling and an increased risk of low minimum temperature values.

In Central Europe, correlations were more variable—often close to zero, occasionally negative. In Scandinavia and northern Russia, weak positive correlations prevailed, which may reflect a buffering effect of atmospheric variability on temperature drops. Mountain regions (Alps, Carpathians) exhibited strong local contrasts, likely due to microscale processes and topographic complexity. Neutral relationships dominated in Western Europe (France, Germany), while the Iberian Peninsula displayed considerable heterogeneity, with both positive and negative values.

The relationship between entropy and minimum temperature is especially relevant in the context of frost risk and agricultural impacts. In arid and mountainous regions, strong negative correlations suggest the need for localized microclimatic analyses.

5.3.3. Spatial Relationship Between Entropy and Monthly Maximum Precipitation

The map of entropy correlations with monthly maximum precipitation totals (Figure 4) revealed a predominance of positive correlations across Central and Northern Europe (Germany, Poland, the Baltic States, Scandinavia). This indicates that higher entropy may be linked to increased risk of high-precipitation months, often associated with cold fronts and cyclonic activity.

In Southern Europe (Spain, Italy, Greece), negative correlations prevailed, suggesting that in these regions increased atmospheric variability does not correspond to greater monthly precipitation maxima, likely because of the dry summer season. Mountain areas (Alps, Pyrenees) displayed a diverse range of correlation values, reflecting local effects such as orographic lifting and convective storms. Western Europe (France, the British Isles) showed localized positive correlations.

In many temperate regions, correlations exceeded +0.4, indicating the potential of entropy as an indicator for extreme precipitation risk.

5.3.4. Spatial Relationship Between Entropy and Monthly Minimum Precipitation

Correlations between entropy and monthly minimum precipitation totals (Figure 4) revealed a distinct north–south contrast. In Southern Europe (Spain, Italy, Greece, Turkey), strong negative correlations dominated, indicating a link between high entropy and increased drought risk. In Western and Central Europe, correlations were generally weak or neutral.

In Scandinavia and the British Isles, weak positive correlations prevailed, suggesting that atmospheric variability does not directly translate into dry months in these regions. Areas around the Black Sea exhibited negative correlations, likely due to strong precipitation seasonality and local circulation patterns.

In mountainous regions (Alps, Carpathians, Pyrenees), as well as in Southern Spain and Sicily, strong local negative correlations appeared, indicating that entropy may be a reliable indicator of monthly drought risk. Urban areas (e.g., London, Paris, Berlin) did not exhibit significant anomalies.

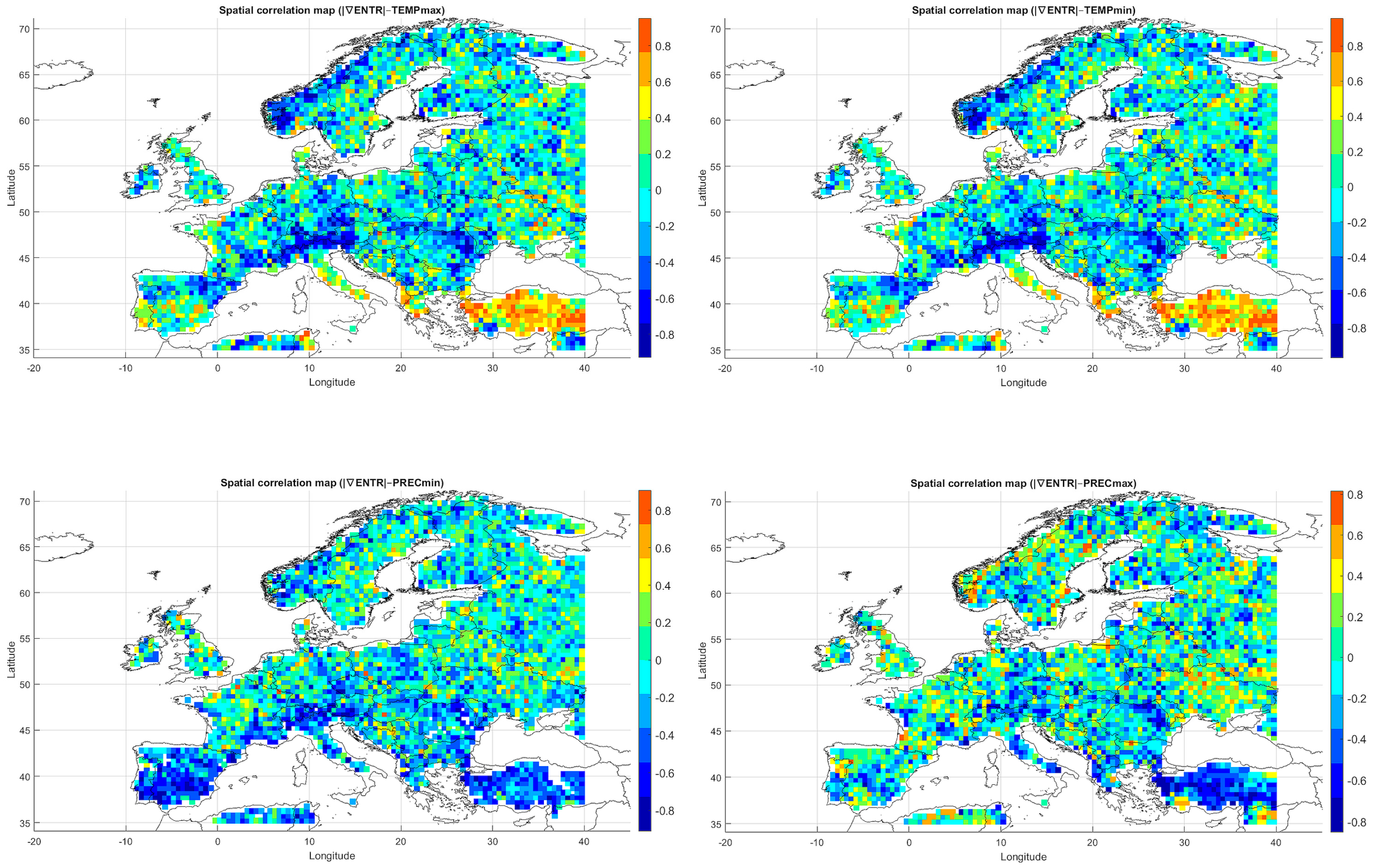

5.3.5. Spatial Relationship Between Entropy Gradient Magnitude and Monthly Maximum Temperature

The Spearman correlation map between the entropy gradient and monthly maximum temperature (Figure 5) revealed strong regional contrasts. In Southeastern Europe (Turkey, Greece, and the Balkans), dominant strong positive correlations (>0.6) indicate a link between local variability changes and susceptibility to heatwaves. In these regions, the entropy gradient may reflect growing thermal instability.

In Central and Western Europe, correlations were predominantly negative or near zero, suggesting the stabilizing effect of a temperate climate. The Alps and southern Germany exhibited strong negative correlations, potentially indicating that increases in entropy gradients are associated with reductions in temperature extremes.

In Scandinavia (Norway and Sweden), positive correlations were observed, possibly reflecting the high sensitivity of the boreal climate. The Benelux countries, France, and the British Isles showed values close to zero, while localized positive correlations appeared in Italy, Ukraine, and Romania—regions that may serve as transitional zones between maritime and continental climatic influences.

Topography emerged as a significant factor: in mountainous areas, entropy gradients often displayed an inverse relationship with temperature compared to lowland regions.
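The entropy gradient magnitude referred to in this and the following subsections can be illustrated with a finite-difference sketch on a regular grid. The grid spacing arguments, the function name, and the neglect of the latitude dependence of the zonal grid distance are simplifying assumptions of this example; the exact discretization used for Figure 5 is not restated here.

```python
import numpy as np

def entropy_gradient_magnitude(entropy_field, dlat=1.0, dlon=1.0):
    """Magnitude of the horizontal gradient of a 2-D entropy field.

    entropy_field: array shaped (lat, lon) on a regular grid.
    dlat, dlon: grid spacing along each axis (assumed constant).
    """
    # np.gradient uses central differences in the interior and
    # one-sided differences at the edges.
    d_dlat, d_dlon = np.gradient(np.asarray(entropy_field, dtype=float), dlat, dlon)
    return np.hypot(d_dlat, d_dlon)
```

Evaluating this for each time step yields a gradient magnitude series per grid cell, which can then be rank-correlated with the temperature and precipitation extremes in the same way as entropy itself.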

5.3.6. Spatial Relationship Between Entropy Gradient Magnitude and Monthly Minimum Temperature

The correlation map between the entropy gradient and minimum temperature (Figure 5) revealed strongly negative values in Southeastern Europe, particularly Turkey, Greece, and the Balkans, where they fell below −0.6. This suggests that increasing variability may be associated with intensified cold nights, likely due to inversions or radiative cooling effects.

In Western Europe, correlations were near zero, while in the Alps and Carpathians, localized positive values appeared—likely influenced by terrain features and local atmospheric dynamics. Scandinavia exhibited moderate positive correlations, which may indicate the dampening of cold extremes under increased instability.

In the Adriatic region, the entropy gradient showed strong negative correlations with minimum temperature, possibly driven by advective cooling. In Central Europe (Poland, Germany, Czechia), a mosaic pattern emerged, likely due to seasonal shifts in the influence of precipitation and snow cover.

The entropy gradient may serve as an indicator of frost risk, though the relationship is not uniform and requires seasonal analysis—particularly in winter months.

5.3.7. Spatial Relationship Between Entropy Gradient Magnitude and Monthly Minimum Precipitation

The analysis of the entropy gradient’s correlation with minimum monthly precipitation (Figure 5) revealed a predominance of negative relationships in Southern Europe, especially in Spain, southern France, Greece, Italy, and Turkey. Values below −0.4 suggest a connection between increasing spatial variability and the risk of drought conditions.

In Central Europe (Poland, Czechia, Germany), correlations were weak or neutral. In Scandinavia and the North Sea region, positive correlations were more common, suggesting greater precipitation stability despite increased entropy gradients.

Localized positive correlations were observed in the Alps and Carpathians, while positive values dominated in Eastern Europe (Ukraine, Russia), indicating a more continental-type response of precipitation to spatial variability.

Overall, the entropy gradient appears useful in detecting regions vulnerable to drought, particularly in the Mediterranean basin.

5.3.8. Spatial Relationship Between Entropy Gradient Magnitude and Monthly Maximum Precipitation

Correlations between the entropy gradient and monthly maximum precipitation (Figure 5) were generally positive across temperate regions (especially Germany, Poland, the Baltic States, and Western Russia), ranging from 0.2 to 0.6. This suggests that spatial instability is associated with the intensification of extreme precipitation episodes.

Scandinavia (Norway and Sweden) also showed positive correlations. In Southern Europe (Spain, Italy, Greece), correlations were mostly negative or neutral, likely due to strong seasonality and limited spatial variability during dry periods.

Urban areas (e.g., London, Paris, Berlin) and mountain regions (e.g., the Alps) exhibited moderate positive relationships. The entropy gradient thus emerges as a sensitive indicator of spatial rainfall dispersion, potentially valuable for assessing flash flood risk.

5.4. Seasonal–Spatial Spearman Correlations

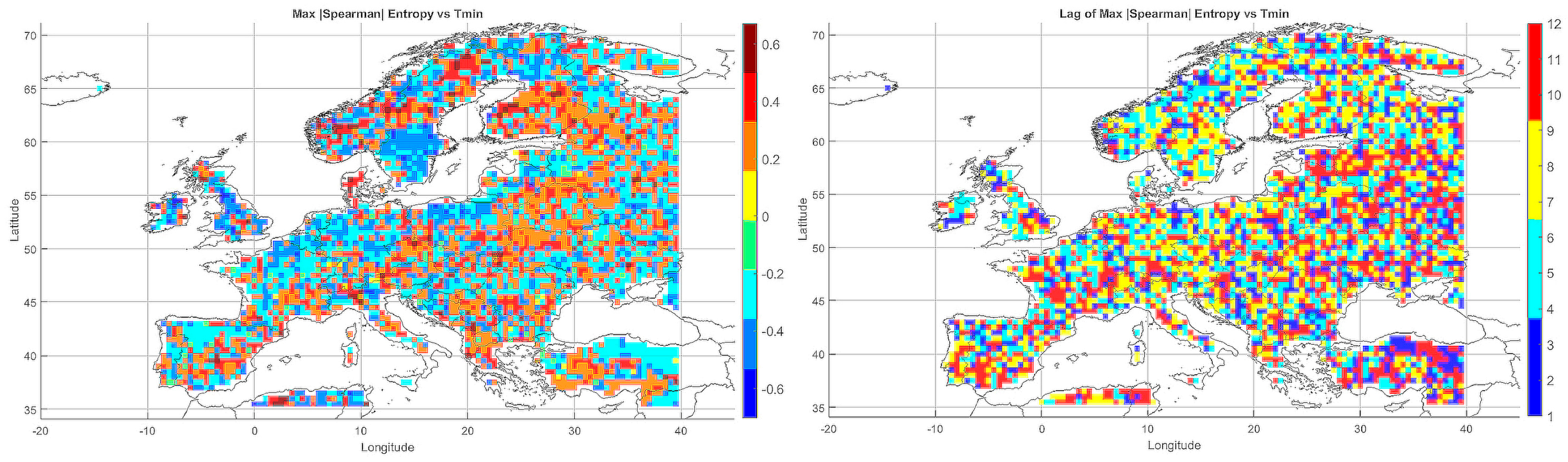

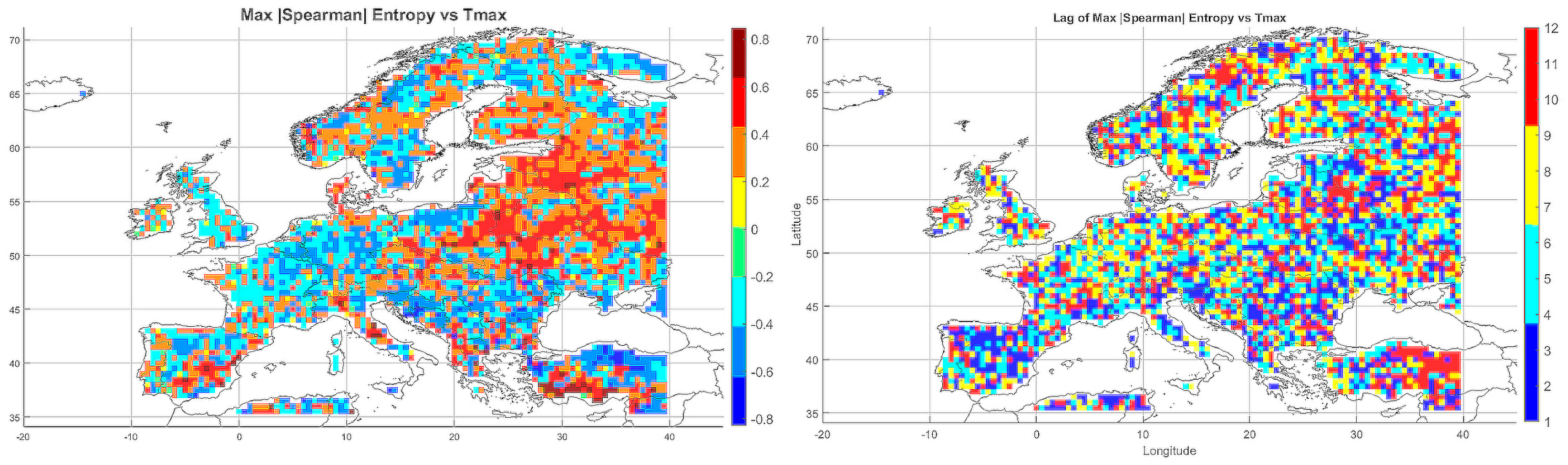

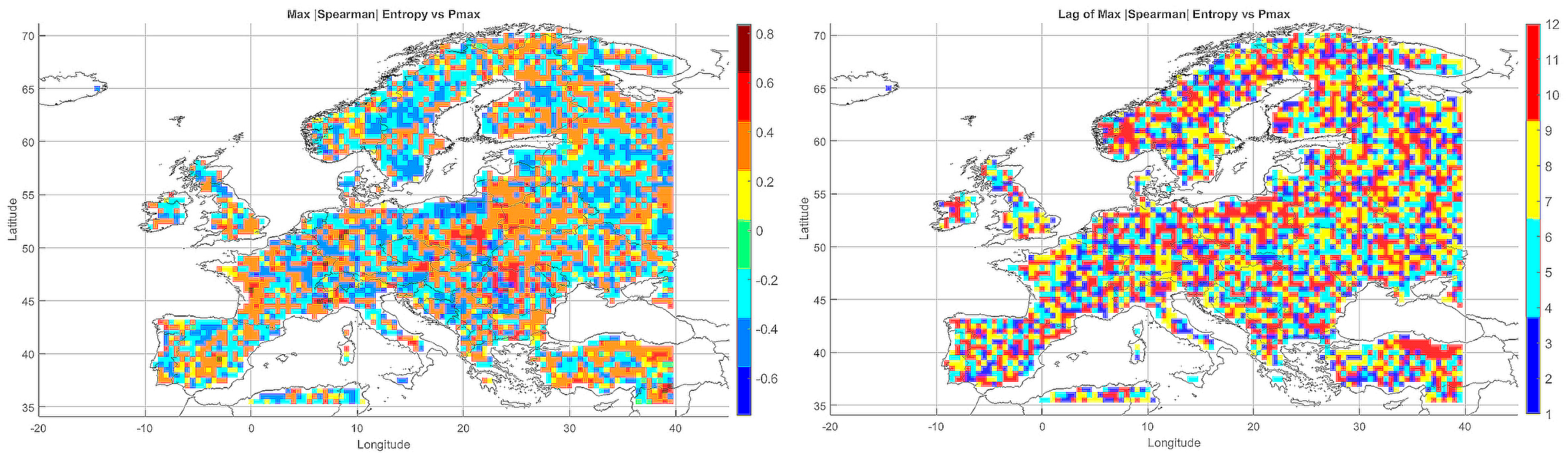

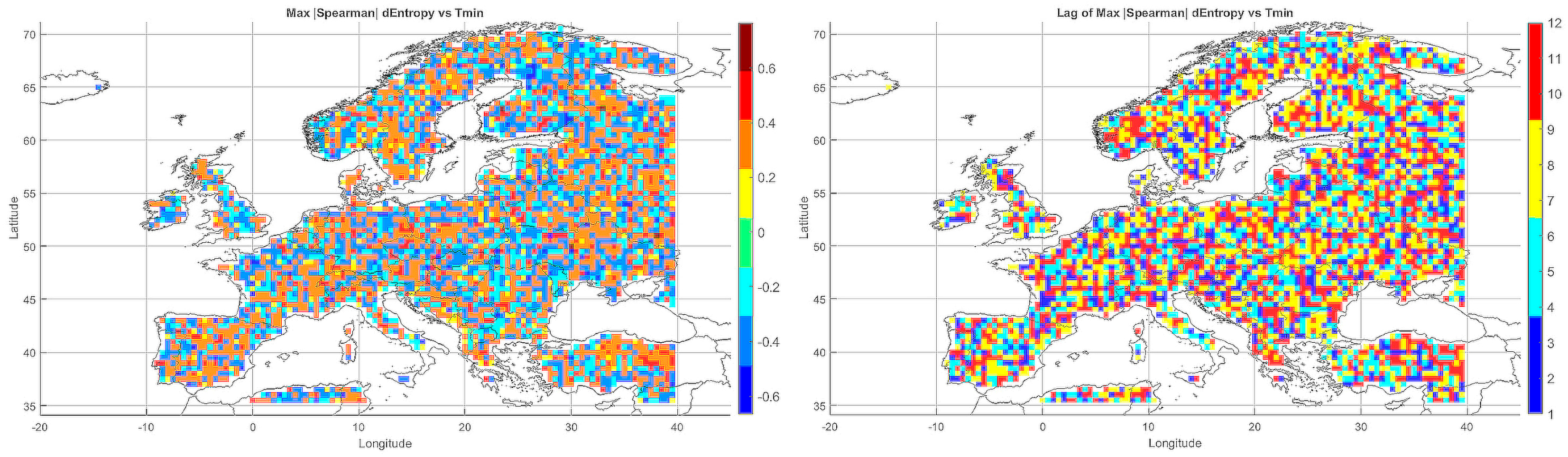

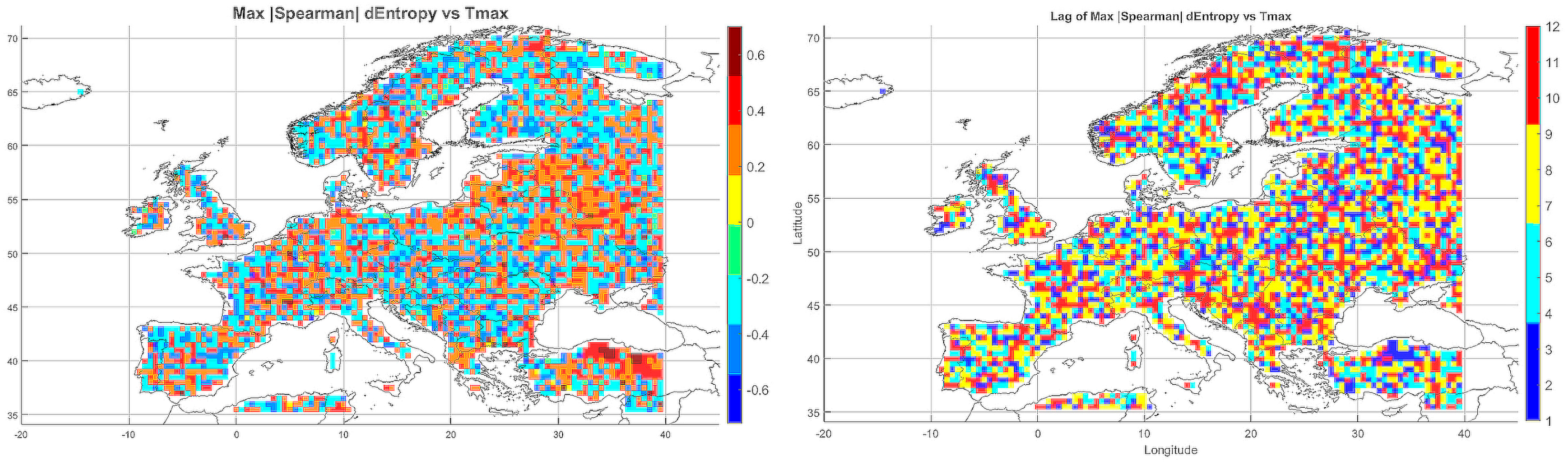

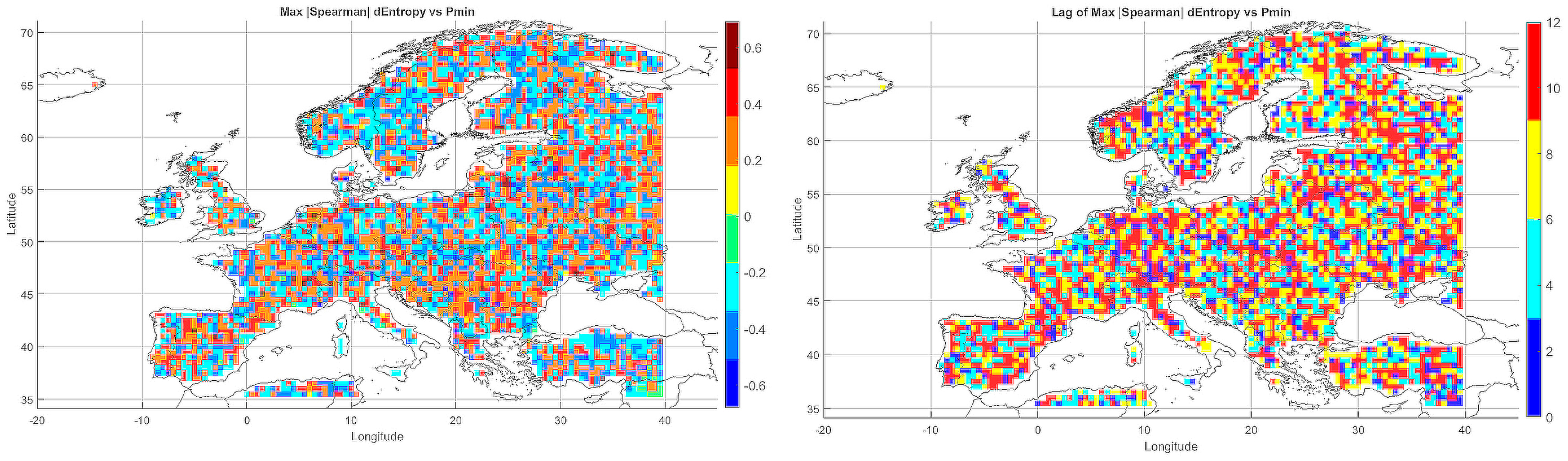

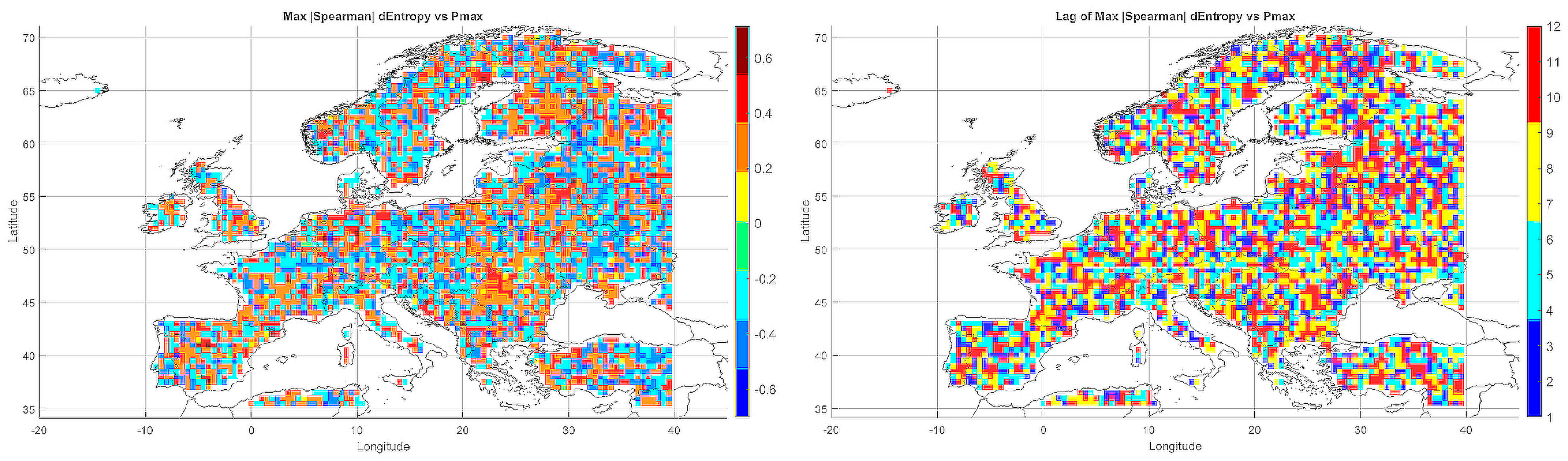

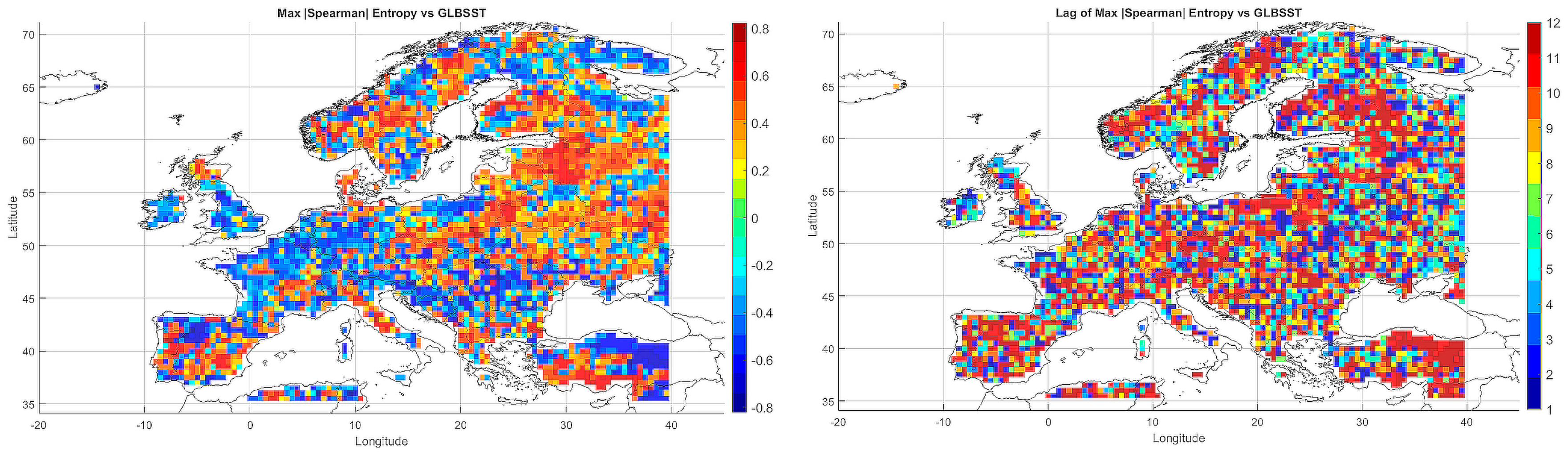

A spatial–seasonal analysis was performed on the maximum absolute values of the Spearman rank correlations between meteorological entropy (incorporating monthly temperature and precipitation) and extreme climate parameters. Time lags from 1 to 12 months were considered to evaluate the predictive potential of entropy in anticipating climate changes.
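The lag search described above can be sketched for a single grid cell as follows: entropy at month t is paired with the climate parameter at month t + lag, the Spearman coefficient is computed for each lag from 1 to 12, and the coefficient with the largest absolute value is retained together with its lag. The function names and the handling of ties are assumptions of this illustration, and the seasonal stratification of the analysis is omitted for brevity.

```python
import numpy as np

def average_ranks(v):
    """1-based ranks of a 1-D array, with ties given their average rank."""
    _, inverse, counts = np.unique(v, return_inverse=True, return_counts=True)
    return (np.cumsum(counts) - (counts - 1) / 2.0)[inverse]

def spearman(x, y):
    """Spearman rank correlation as the Pearson correlation of ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])

def max_abs_lagged_spearman(entropy, variable, max_lag=12):
    """Return (rho, lag) with the largest |rho| for lags 1..max_lag.

    Entropy leads: entropy[t] is paired with variable[t + lag].
    """
    entropy = np.asarray(entropy, dtype=float)
    variable = np.asarray(variable, dtype=float)
    best_rho, best_lag = 0.0, None
    for lag in range(1, max_lag + 1):
        rho = spearman(entropy[:-lag], variable[lag:])
        if np.isfinite(rho) and abs(rho) > abs(best_rho):
            best_rho, best_lag = rho, lag
    return best_rho, best_lag
```

Mapping `rho` and `lag` for every grid cell would give a pair of panels of the kind described for the figures in this section (correlation on the left, lag on the right).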

5.4.1. Entropy and Monthly Minimum Temperature

Figure 6 presents maps of the maximum absolute correlations (left panel) and the corresponding time lags (right panel). In Central-Eastern Europe and Scandinavia, positive correlations dominated (ranging from 0.4 to 0.6), suggesting that increases in entropy precede declines in minimum temperature. In contrast, negative correlations were observed in Southern Europe (Spain, Italy, Greece), indicating the inverse relationship.