MVIB-Lip: Multi-View Information Bottleneck for Visual Speech Recognition via Time Series Modeling

Abstract

1. Introduction

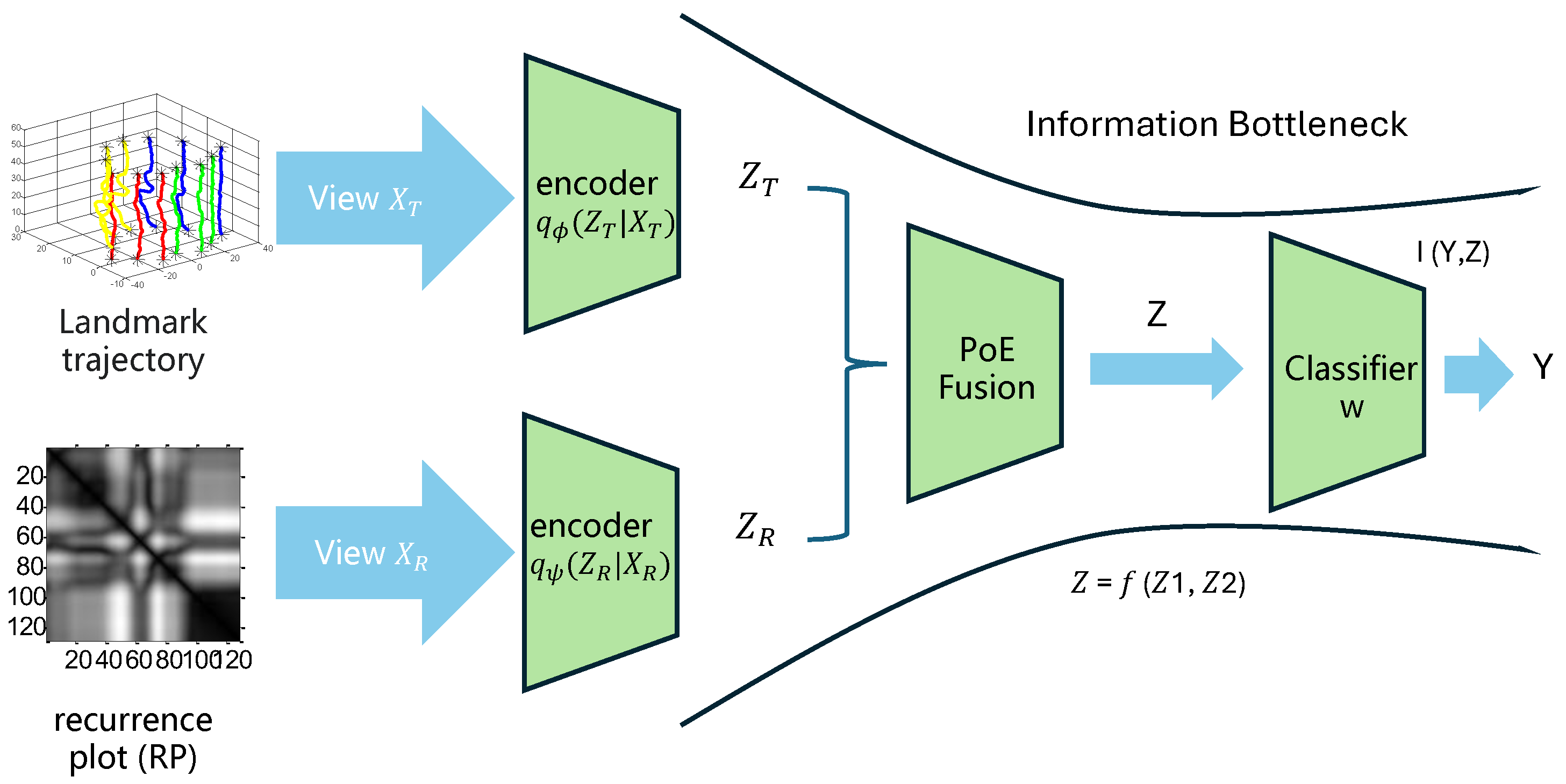

- We propose MVIB-Lip, the first multi-view IB framework for lipreading that jointly exploits temporal landmark dynamics and recurrence plot textures.

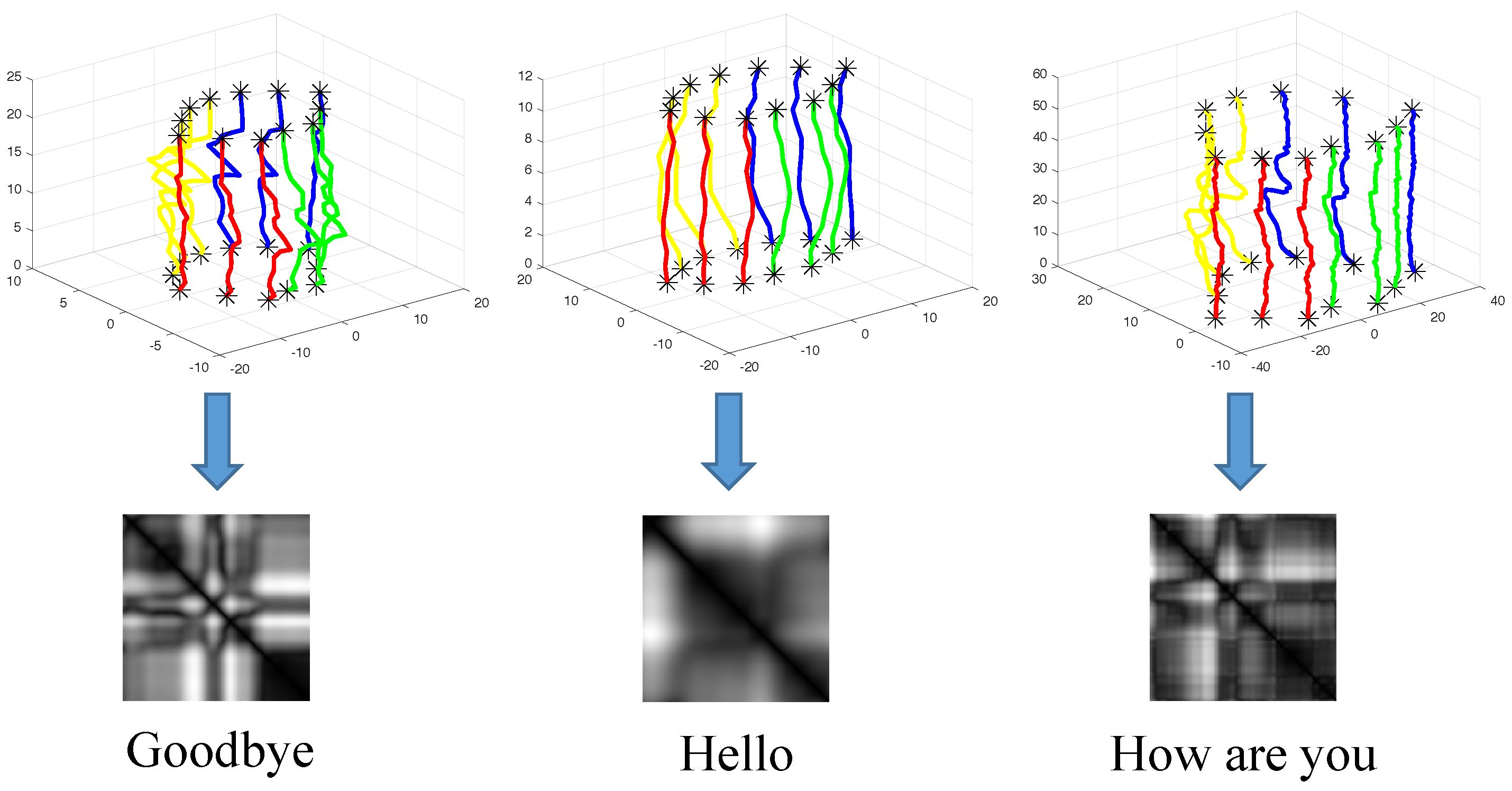

- To the best of our knowledge, this is the first work to transform mouth landmark time series into recurrence plots (RPs) for lipreading. By introducing MVIB-Lip, we fuse raw time-series dynamics with RP-based structural patterns under an information bottleneck framework, achieving both sample efficiency and strong representational power.

- We provide a systematic evaluation on two benchmarks (OuluVS and LRW) and a self-collected dataset. We show that MVIB-Lip achieves superior performance compared to handcrafted pipelines and single-view neural encoders, particularly in speaker-independent recognition.
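The recurrence-plot view described in the contributions above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes mouth landmarks are already tracked per frame and stored as a `(T, K, 2)` array. It centres each frame on its landmark mean (a hypothetical normalisation), flattens the result into a `(T, 2K)` multivariate series, and then builds the classical recurrence plot of Eckmann et al. [46], either as an unthresholded distance matrix or thresholded at a radius `eps`. The helper names `landmarks_to_series` and `recurrence_plot`, the centring step and the choice of `eps` are all illustrative. The *modified* recurrence plot of Section 3.2 may differ.

```python
from typing import Optional

import numpy as np


def landmarks_to_series(landmarks: np.ndarray) -> np.ndarray:
    """Turn per-frame mouth landmarks of shape (T, K, 2) into a (T, 2K) series.

    Assumption (hypothetical step): each frame is centred on its own landmark
    mean to remove global translation before flattening.
    """
    centred = landmarks - landmarks.mean(axis=1, keepdims=True)
    return centred.reshape(landmarks.shape[0], -1)


def recurrence_plot(series: np.ndarray, eps: Optional[float] = None) -> np.ndarray:
    """Classical recurrence plot of a multivariate series of shape (T, D).

    eps is None  -> unthresholded plot: R[i, j] = ||x_i - x_j||_2
    eps is given -> binary plot:        R[i, j] = 1 if ||x_i - x_j||_2 <= eps else 0
    """
    diff = series[:, None, :] - series[None, :, :]  # (T, T, D) pairwise differences
    dist = np.linalg.norm(diff, axis=-1)            # (T, T) Euclidean distances
    if eps is None:
        return dist
    return (dist <= eps).astype(np.uint8)


# Usage with random stand-in data: 29 frames, 20 hypothetical mouth landmarks.
rng = np.random.default_rng(0)
landmarks = rng.normal(size=(29, 20, 2))
X = landmarks_to_series(landmarks)
R = recurrence_plot(X)                                    # distance-valued plot
B = recurrence_plot(X, eps=0.5 * float(np.median(R)))    # hypothetical radius
```

In a two-view setup, `X` would feed a sequence encoder and `R` (treated as a single-channel image) a texture encoder. The pairwise-difference tensor costs O(T²D) memory, which is negligible for word-level clips of a few dozen frames.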

2. Related Works

2.1. Traditional Visual Speech Recognition

2.2. Deep Learning for Lipreading

2.3. Multi-View Learning and Information Bottleneck

3. System Description

3.1. Multivariate Time Series Generation

3.2. Multivariate Time Series Representation Using a Modified Recurrence Plot

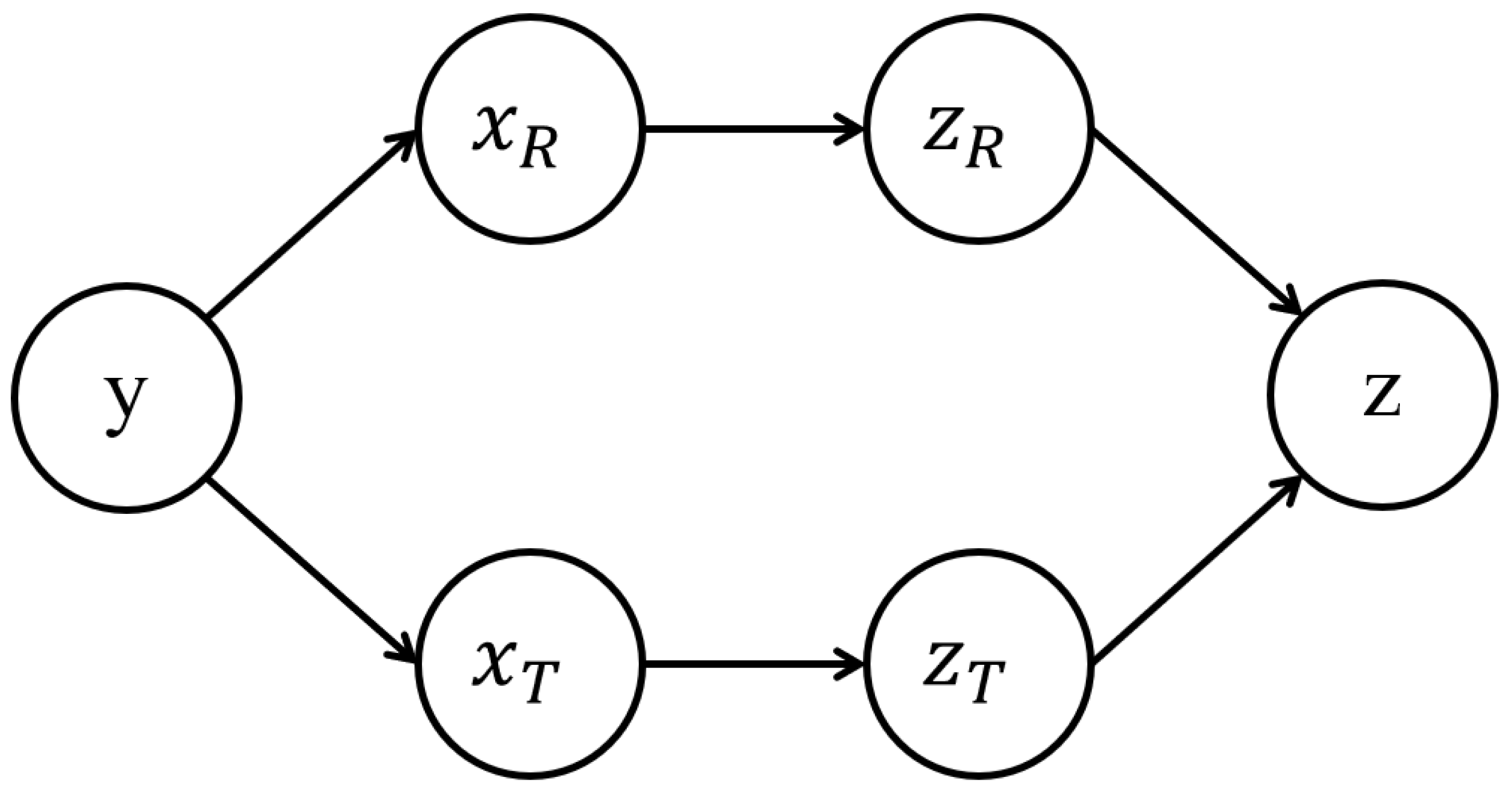

3.3. Multi-View Information Bottleneck for Lipreading

3.4. Optimization

4. Experiments

4.1. Datasets

4.2. Results

4.3. Ablation Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IB | Information Bottleneck |
| MVIB | Multi-View Information Bottleneck |
| SDM | Supervised Descent Method |
| RP | Recurrence Plot |

References

1. Matthews, I.; Cootes, T.F.; Bangham, J.A.; Cox, S.; Harvey, R. Extraction of visual features for lipreading. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 198–213.

2. Zhao, G.; Barnard, M.; Pietikäinen, M. Lipreading with local spatiotemporal descriptors. IEEE Trans. Multimed. 2009, 11, 1254–1265.

3. Fan, X.; Busso, C.; Hansen, J.H.L. Audio-visual isolated digit recognition for whispered speech. In Proceedings of the 2011 19th European Signal Processing Conference, Barcelona, Spain, 29 August–2 September 2011; pp. 1500–1503.

4. Tao, F.; Busso, C. Lipreading approach for isolated digits recognition under whisper and neutral speech. In Proceedings of the Interspeech, Singapore, 14–18 September 2014; pp. 1154–1158.

5. Almajai, I.; Cox, S.; Harvey, R.; Lan, Y. Improved speaker independent lip reading using speaker adaptive training and deep neural networks. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2722–2726.

6. Movellan, J.R. Visual speech recognition with stochastic networks. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 27 November–2 December 1995; pp. 851–858.

7. Wang, S.L.; Lau, W.H.; Leung, S.H. Automatic lipreading with limited training data. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 881–884.

8. Zhao, G.; Pietikäinen, M.; Hadid, A. Local spatiotemporal descriptors for visual recognition of spoken phrases. In Proceedings of the International Workshop on Human-Centered Multimedia, Augsburg, Germany, 28 September 2007; pp. 57–66.

9. Yau, W.C.; Kumar, D.K.; Chinnadurai, T. Lip-reading technique using spatio-temporal templates and support vector machines. In Progress in Pattern Recognition, Image Analysis and Applications, Proceedings of the Iberoamerican Congress on Pattern Recognition, Havana, Cuba, 9–12 September 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 610–617.

10. Lan, Y.; Theobald, B.J.; Harvey, R.; Ong, E.J.; Bowden, R. Improving visual features for lip-reading. In Proceedings of the AVSP, Kanagawa, Japan, 30 September–3 October 2010.

11. Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on feature distributions. Pattern Recognit. 1996, 29, 51–59.

12. Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

13. Assael, Y.M.; Shillingford, B.; Whiteson, S.; de Freitas, N. LipNet: Sentence-level lipreading. arXiv 2016, arXiv:1611.01599.

14. Chung, J.S.; Zisserman, A. Lip reading in the wild. In Computer Vision–ACCV 2016, Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Cham, Switzerland, 2016; pp. 87–103.

15. Wang, C. Multi-Grained Spatio-temporal Modeling for Lip-reading. In Proceedings of the BMVC, Cardiff, UK, 9–12 September 2019.

16. Ma, Y.; Sun, X. Spatiotemporal Feature Enhancement for Lip-Reading: A Survey. Appl. Sci. 2025, 15, 4142.

17. Ma, P.; Petridis, S.; Pantic, M. Visual speech recognition for multiple languages in the wild. Nat. Mach. Intell. 2022, 4, 930–939.

18. Shi, B.; Hsu, W.N.; Mohamed, A. Robust Self-Supervised Audio-Visual Speech Recognition. In Proceedings of the Interspeech, Incheon, Republic of Korea, 18–22 September 2022; pp. 2118–2122.

19. Cootes, T.; Taylor, C.; Cooper, D.; Graham, J. Active Shape Models-Their Training and Application. Comput. Vis. Image Underst. 1995, 61, 38–59.

20. Cootes, T.F.; Edwards, G.J.; Taylor, C.J. Active appearance models. In Computer Vision-ECCV’98, Proceedings of the European Conference on Computer Vision, Freiburg, Germany, 2–6 June 1998; Springer: Berlin/Heidelberg, Germany, 1998; pp. 484–498.

21. Saenko, K.; Livescu, K.; Siracusa, M.; Wilson, K.; Glass, J.; Darrell, T. Visual speech recognition with loosely synchronized feature streams. In Proceedings of the Tenth IEEE International Conference on Computer Vision, Beijing, China, 17–21 October 2005; Volume 2, pp. 1424–1431.

22. Potamianos, G.; Neti, C.; Gravier, G.; Garg, A.; Senior, A.W. Recent advances in the automatic recognition of audiovisual speech. Proc. IEEE 2003, 91, 1306–1326.

23. Zhou, Z.; Zhao, G.; Hong, X.; Pietikäinen, M. A review of recent advances in visual speech decoding. Image Vis. Comput. 2014, 32, 590–605.

24. Liu, L.; Feng, G.; Beautemps, D. Automatic dynamic template tracking of inner lips based on CLNF. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 5130–5134.

25. Cox, S.J.; Harvey, R.W.; Lan, Y.; Newman, J.L.; Theobald, B.J. The challenge of multispeaker lip-reading. In Proceedings of the International Conference on Auditory-Visual Speech Processing (AVSP2008), Moreton Island, QLD, Australia, 26–29 September 2008; pp. 179–184.

26. Potamianos, G.; Neti, C.; Luettin, J.; Matthews, I. Audio-Visual Automatic Speech Recognition: An Overview. Issues Vis. Audiov. Speech Process. 2004, 22, 23.

27. Chung, J.S.; Senior, A.; Vinyals, O.; Zisserman, A. Lip reading sentences in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6447–6456.

28. Afouras, T.; Chung, J.S.; Senior, A.; Vinyals, O.; Zisserman, A. Deep audio-visual speech recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 44, 8717–8727.

29. Stafylakis, T.; Tzimiropoulos, G. Combining Residual Networks with LSTMs for Lipreading. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 3652–3656.

30. Martinez, B.; Ma, P.; Petridis, S.; Pantic, M. Lipreading using temporal convolutional networks. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6319–6323.

31. Ma, P.; Petridis, S.; Pantic, M. End-to-end audio-visual speech recognition with conformers. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 7613–7617.

32. Tong, Z.; Song, Y.; Wang, J.; Wang, L. VideoMAE: Masked autoencoders are data-efficient learners for self-supervised video pre-training. Adv. Neural Inf. Process. Syst. 2022, 35, 10078–10093.

33. Cai, Z.; Ghosh, S.; Stefanov, K.; Dhall, A.; Cai, J.; Rezatofighi, H.; Haffari, R.; Hayat, M. MARLIN: Masked autoencoder for facial video representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1493–1504.

34. Petridis, S.; Wang, Y.; Li, Z.; Pantic, M. End-to-End Multi-View Lipreading. In Proceedings of the British Machine Vision Conference, BMVC 2017, London, UK, 4–7 September 2017.

35. Zhang, X.; Zhang, C.; Sui, J.; Sheng, C.; Deng, W.; Liu, L. Boosting lip reading with a multi-view fusion network. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; pp. 1–6.

36. Zhang, W.; Wang, J.; Luo, Y.; Yu, L.; Yu, W.; He, Z.; Shen, J. MTGA: Multi-view temporal granularity aligned aggregation for event-based lip-reading. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 10176–10184.

37. Tishby, N.; Pereira, F.C.; Bialek, W. The information bottleneck method. In Proceedings of the 37th Annual Allerton Conference on Communication, Control and Computing, Monticello, IL, USA, 22–24 September 1999; pp. 368–377.

38. Yu, S.; Giraldo, L.G.S.; Príncipe, J.C. Information-Theoretic Methods in Deep Neural Networks: Recent Advances and Emerging Opportunities. In Proceedings of the IJCAI, Virtual, 19–26 August 2021; pp. 4669–4678.

39. Alemi, A.A.; Fischer, I.; Dillon, J.V.; Murphy, K. Deep Variational Information Bottleneck. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

40. Zhang, Q.; Yu, S.; Xin, J.; Chen, B. Multi-view information bottleneck without variational approximation. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 4318–4322.

41. Wang, Q.; Boudreau, C.; Luo, Q.; Tan, P.N.; Zhou, J. Deep multi-view information bottleneck. In Proceedings of the 2019 SIAM International Conference on Data Mining, Calgary, AB, Canada, 2–4 May 2019; SIAM: Philadelphia, PA, USA, 2019; pp. 37–45.

42. Mai, S.; Zeng, Y.; Hu, H. Multimodal information bottleneck: Learning minimal sufficient unimodal and multimodal representations. IEEE Trans. Multimed. 2022, 25, 4121–4134.

43. Xiong, X.; De la Torre, F. Supervised descent method and its applications to face alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 532–539.

44. Liu, L.; Hu, J.; Zhang, S.; Deng, W. Extended supervised descent method for robust face alignment. In Computer Vision-ACCV 2014 Workshops, Proceedings of the Asian Conference on Computer Vision, Singapore, 1–2 November 2014; Springer: Cham, Switzerland, 2014; pp. 71–84.

45. Baltrusaitis, T.; Robinson, P.; Morency, L.P. Constrained local neural fields for robust facial landmark detection in the wild. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 2–8 December 2013; pp. 354–361.

46. Eckmann, J.P.; Kamphorst, S.O.; Ruelle, D. Recurrence Plots of Dynamical Systems. Europhys. Lett. (EPL) 1987, 4, 973–977.

47. Souza, V.M.; Silva, D.F.; Batista, G.E. Extracting Texture Features for Time Series Classification. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 1425–1430.

48. Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the IEEE International Conference on Computer Vision, Bombay, India, 4–7 January 1998; pp. 839–846.

49. Song, J.; Zheng, Y.; Wang, J.; Zakir Ullah, M.; Jiao, W. Multicolor image classification using the multimodal information bottleneck network (MMIB-Net) for detecting diabetic retinopathy. Opt. Express 2021, 29, 22732–22748.

50. Ahuja, K.; Caballero, E.; Zhang, D.; Gagnon-Audet, J.C.; Bengio, Y.; Mitliagkas, I.; Rish, I. Invariance principle meets information bottleneck for out-of-distribution generalization. Adv. Neural Inf. Process. Syst. 2021, 34, 3438–3450.

51. Principe, J.C. Information Theoretic Learning: Renyi’s Entropy and Kernel Perspectives; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

52. Belghazi, M.I.; Baratin, A.; Rajeshwar, S.; Ozair, S.; Bengio, Y.; Courville, A.; Hjelm, D. Mutual information neural estimation. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 531–540.

53. Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

54. Yang, S.; Zhang, Y.; Feng, D.; Yang, M.; Wang, C.; Xiao, J.; Long, K.; Shan, S.; Chen, X. LRW-1000: A naturally-distributed large-scale benchmark for lip reading in the wild. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; pp. 1–8.

55. Cinar, G.T.; Principe, J.C. Clustering of time series using a hierarchical linear dynamical system. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 6741–6745.

56. You, X.; Guo, W.; Yu, S.; Li, K.; Príncipe, J.C.; Tao, D. Kernel learning for dynamic texture synthesis. IEEE Trans. Image Process. 2016, 25, 4782–4795.

57. Weng, X.; Kitani, K. Learning Spatio-Temporal Features with Two-Stream Deep 3D CNNs for Lipreading. In Proceedings of the British Machine Vision Conference (BMVC), Cardiff, UK, 9–12 September 2019.

58. Xue, F.; Li, Y.; Liu, D.; Xie, Y.; Wu, L.; Hong, R. LipFormer: Learning to lipread unseen speakers based on visual-landmark transformers. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4507–4517.

| Phrase | Phrase |
|---|---|
| “Excuse me.” | “Hello.” |
| “Goodbye.” | “See you.” |
| “Nice to meet you.” | “Thank you.” |
| “How are you.” | “I am sorry.” |
| “You are welcome.” | “Have a good time.” |

| Dataset | LBP [8] | HMM [4] | DBN [21] | ResNet-18 + TCN | VideoMAE [32] | Ours |
|---|---|---|---|---|---|---|
| OuluVS | 64.2 | 60.9 | 42.8 | 84.9 | 86.1 | 87.0 |
| Self-collected | 64.7 | 65.2 | 39.2 | 80.4 | 81.7 | 83.3 |

| Method | LRW | LRW-1000 |
|---|---|---|
| LRW [14] | 61.1 | 28.0 |
| Two-stream 3DCNN [57] | 84.1 | 38.7 |
| Multi-Scale TCN [30] | 85.3 | 41.4 |
| Ours | 86.2 | 42.1 |

| Configuration | Accuracy |
|---|---|
| Time series only | 82.3 |
| Recurrence plot only | 80.7 |
| Multi-view fusion (w/o IB) | 85.4 |
| Multi-view fusion (with IB, full model) | 86.2 |
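Read as two increments, the ablation table separates the gain from fusing the two views from the further gain attributed to the information bottleneck. The short Python snippet below only redoes that subtraction on the four reported accuracies. It adds no new measurements, and the variable names are illustrative.

```python
# Accuracies copied verbatim from the ablation table.
ablation = {
    "Time series only": 82.3,
    "Recurrence plot only": 80.7,
    "Multi-view fusion (w/o IB)": 85.4,
    "Multi-view fusion (with IB, full model)": 86.2,
}

# Stronger of the two single-view baselines.
best_single_view = max(ablation["Time series only"], ablation["Recurrence plot only"])

# Gain from fusing the views, then the extra gain from adding IB.
fusion_gain = ablation["Multi-view fusion (w/o IB)"] - best_single_view
ib_gain = (ablation["Multi-view fusion (with IB, full model)"]
           - ablation["Multi-view fusion (w/o IB)"])
total_gain = ablation["Multi-view fusion (with IB, full model)"] - best_single_view

print(f"Fusion over best single view: +{fusion_gain:.1f}")
print(f"IB over plain fusion:         +{ib_gain:.1f}")
print(f"Full model over best single:  +{total_gain:.1f}")
```

With the reported values this gives +3.1 for fusion over the stronger single view (time series), +0.8 for adding IB, and +3.9 overall.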

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Sun, H.; Cai, J.; Wu, J. MVIB-Lip: Multi-View Information Bottleneck for Visual Speech Recognition via Time Series Modeling. Entropy 2025, 27, 1121. https://doi.org/10.3390/e27111121


Chicago/Turabian Style: Li, Yuzhe, Haocheng Sun, Jiayi Cai, and Jin Wu. 2025. "MVIB-Lip: Multi-View Information Bottleneck for Visual Speech Recognition via Time Series Modeling" Entropy 27, no. 11: 1121. https://doi.org/10.3390/e27111121

APA Style: Li, Y., Sun, H., Cai, J., & Wu, J. (2025). MVIB-Lip: Multi-View Information Bottleneck for Visual Speech Recognition via Time Series Modeling. Entropy, 27(11), 1121. https://doi.org/10.3390/e27111121