CSCVAE-NID: A Conditionally Symmetric Two-Stage CVAE Framework with Cost-Sensitive Learning for Imbalanced Network Intrusion Detection

Abstract

1. Introduction

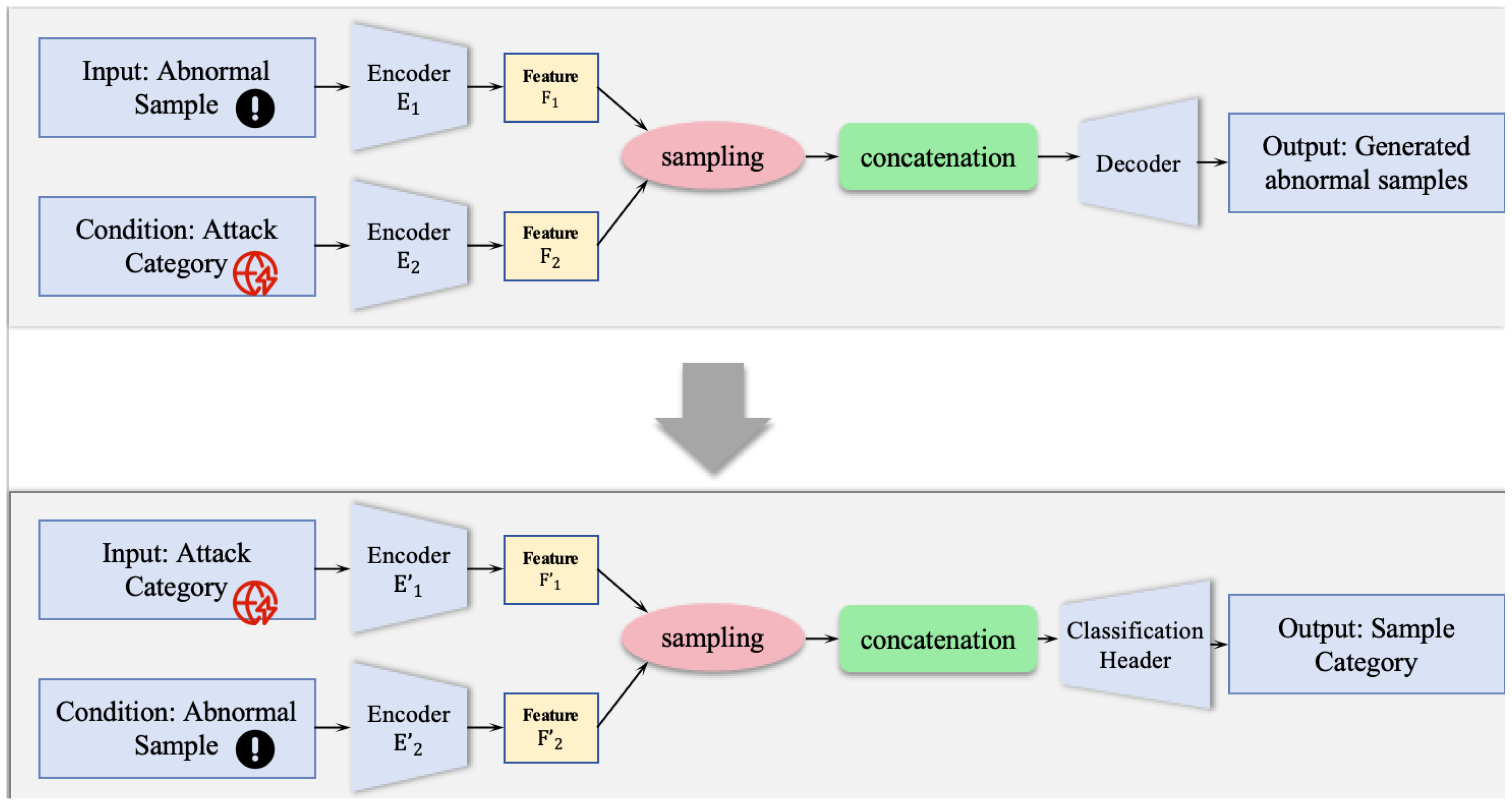

- We propose a data augmentation scheme based on a Conditional Variational Autoencoder (CVAE), namely the DA-CVAE model. Using the attack category as the condition, it generates diverse, high-quality synthetic samples for the minority classes in a targeted way. This counteracts the training bias caused by severe class imbalance, a common characteristic of network intrusion data.

- To enable high-precision multi-class classification, we develop the CSMC-CVAE model, which is built on the principle of probabilistic distribution matching. It reframes classification as quantifying how consistent a given sample's feature distribution is with the prototypical distribution of each candidate class.

- To further address class imbalance at the algorithmic level, we incorporate a cost-sensitive learning strategy into the CSMC-CVAE training paradigm. The loss function is augmented with a cost matrix derived from inverse class frequencies, which forces the optimization process to prioritize the correct classification of samples from underrepresented classes.

- Experimental results on two public datasets, UNSW-NB15 and CICIDS2017, show that the proposed CSCVAE-NID framework outperforms both conventional and state-of-the-art methods in precision, recall, and F1-score.
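
The distribution-matching principle behind CSMC-CVAE (the second contribution above) can be illustrated with a minimal sketch. It assumes that each class is summarized by a diagonal-Gaussian prototype in latent space and that "consistency" is scored with the closed-form Kullback–Leibler divergence; the KL choice, the function names `kl_diag_gauss` and `classify_by_prototype`, and the prototype values are all hypothetical, since the text does not specify the exact consistency measure.

```python
import math
from typing import Dict, List, Sequence, Tuple


def kl_diag_gauss(mu_q: Sequence[float], var_q: Sequence[float],
                  mu_p: Sequence[float], var_p: Sequence[float]) -> float:
    """KL(q || p) for diagonal Gaussians q = N(mu_q, var_q) and p = N(mu_p, var_p)."""
    total = 0.0
    for mq, vq, mp, vp in zip(mu_q, var_q, mu_p, var_p):
        total += 0.5 * (math.log(vp / vq) + (vq + (mq - mp) ** 2) / vp - 1.0)
    return total


def classify_by_prototype(mu: Sequence[float], var: Sequence[float],
                          prototypes: Dict[str, Tuple[List[float], List[float]]]) -> str:
    """Return the class whose prototype is closest (lowest KL) to the sample's posterior."""
    scores = {label: kl_diag_gauss(mu, var, p_mu, p_var)
              for label, (p_mu, p_var) in prototypes.items()}
    return min(scores, key=scores.get)


# Hypothetical 2-D latent prototypes (mean, variance) for two classes; values are illustrative.
prototypes = {
    "Normal": ([0.0, 0.0], [1.0, 1.0]),
    "DoS": ([3.0, -2.0], [0.5, 0.5]),
}

# An encoder posterior centred near the DoS prototype is assigned to DoS.
print(classify_by_prototype([2.8, -1.9], [0.4, 0.6], prototypes))  # DoS
```

Scoring whole distributions rather than point estimates lets the posterior variance influence the decision: an uncertain encoding is penalized less for being far from a broad prototype than from a tight one.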

2. Related Work

2.1. Machine Learning-Based Network Intrusion Detection

2.2. Deep Learning-Based Network Intrusion Detection

2.3. Imbalanced Data Handling in Network Intrusion Detection

3. The Proposed Methodology

3.1. Overview

3.2. DA-CVAE for Data Augmentation
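
A minimal sketch of the conditional generation step that DA-CVAE relies on: latent codes are drawn from the standard-normal prior, concatenated with a one-hot encoding of the target attack category, and passed through a decoder. The `toy_decoder` below is a hypothetical fixed linear map standing in for a trained DA-CVAE decoder, and the latent size of 8 and three output features are illustrative assumptions. The sample count mirrors the Worms row of the UNSW-NB15 augmentation table (130 to 230 samples).

```python
import random
from typing import Callable, List


def one_hot(label: int, num_classes: int) -> List[float]:
    """Encode a class label as a one-hot condition vector."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def generate_minority_samples(decoder: Callable[[List[float]], List[float]],
                              label: int, num_classes: int, latent_dim: int,
                              n_samples: int, seed: int = 0) -> List[List[float]]:
    """Draw z ~ N(0, I), append the one-hot condition y, and decode [z, y] into a sample."""
    rng = random.Random(seed)
    y = one_hot(label, num_classes)
    samples = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        samples.append(decoder(z + y))
    return samples


def toy_decoder(zy: List[float]) -> List[float]:
    """Hypothetical stand-in for a trained decoder: a fixed linear map to 3 features."""
    weights = [[0.1 * (i + j + 1) for j in range(len(zy))] for i in range(3)]
    return [sum(w * v for w, v in zip(row, zy)) for row in weights]


# 100 synthetic samples for label 4 (Worms) out of the 5 UNSW-NB15 categories.
synthetic = generate_minority_samples(toy_decoder, label=4, num_classes=5,
                                      latent_dim=8, n_samples=100)
print(len(synthetic), len(synthetic[0]))  # 100 3
```

Conditioning on `label` is what makes the generation directional: changing only the one-hot vector steers the same decoder toward a different attack category.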

3.3. Cost-Sensitive CVAE for Multi-Class Classification

- Our approach automates cost assignment by setting each class's cost to the inverse of its frequency. This removes the need for domain experts to define a cost matrix manually and subjectively, making the weighting scheme transparent and highly reproducible.

- Because minority classes receive larger weights, their misclassification errors contribute proportionally more to the total loss. This forces the optimization process to prioritize learning from these underrepresented samples, directly counteracting the learning bias induced by the dominant majority classes.

- The inverse-frequency weighting scheme adapts to the data and requires no prior domain knowledge of attack severity. Because the costs are inferred automatically from the class distribution, the framework can be applied to other datasets without manual recalibration, which improves its versatility and practical applicability.
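
The inverse-frequency weighting described above can be sketched as follows, under two assumptions not stated in the text: the normalization w_c = N / (K · n_c), where N is the total sample count and K the number of classes, and the use of the original (pre-augmentation) UNSW-NB15 counts from the dataset table. The weights then scale a cross-entropy loss so that each sample's loss is multiplied by the cost of its true class.

```python
import math
from typing import Dict, Sequence


def inverse_frequency_weights(counts: Dict[str, int]) -> Dict[str, float]:
    """Cost w_c = N / (K * n_c): the rarer class c is, the larger its weight."""
    total = sum(counts.values())
    num_classes = len(counts)
    return {label: total / (num_classes * n) for label, n in counts.items()}


def weighted_cross_entropy(probs: Sequence[Sequence[float]],
                           targets: Sequence[int],
                           class_weights: Sequence[float]) -> float:
    """Batch mean of -w_y * log(p_y): each loss term is scaled by its true class's cost."""
    losses = [-class_weights[y] * math.log(p[y]) for p, y in zip(probs, targets)]
    return sum(losses) / len(losses)


# UNSW-NB15 original training-set counts (label order 0..4).
unsw_counts = {"Normal": 56000, "DoS": 12264, "Reconnaissance": 10491,
               "Shellcode": 1133, "Worms": 130}
weights = inverse_frequency_weights(unsw_counts)
for label, w in weights.items():
    print(f"{label:15s} {w:8.3f}")  # Worms receives the largest weight

# Dicts keep insertion order, so this list follows labels 0..4.
w_vec = list(weights.values())
# At the same confidence (0.3) in the true class, a Worms error costs far more than a Normal one.
print(weighted_cross_entropy([[0.1, 0.2, 0.2, 0.2, 0.3]], [4], w_vec) >
      weighted_cross_entropy([[0.3, 0.2, 0.2, 0.2, 0.1]], [0], w_vec))  # True
```

This sketch averages the weighted terms over the batch. PyTorch's `CrossEntropyLoss(weight=...)` with mean reduction instead divides by the sum of the per-sample weights, so absolute loss values differ between the two while the relative emphasis on minority classes is the same.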

4. Experiments and Result Analysis

4.1. Experimental Setup

- Precision: measures the proportion of true positive instances among all instances classified as positive (anomalous):

  $$\text{Precision} = \frac{TP}{TP + FP} \tag{1}$$

- Recall: the proportion of correctly predicted anomalous samples among all actual anomalous samples:

  $$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

- F1-score: the harmonic mean of Precision and Recall, providing a single metric that summarizes a model's overall detection performance:

  $$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}$$
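
Equations (1) to (3) translate directly into code. The sketch below computes them from confusion-matrix counts, treating the attack class as positive; the counts in the usage line are hypothetical and only illustrate the arithmetic.

```python
from typing import Dict


def precision(tp: int, fp: int) -> float:
    """Eq. (1): TP / (TP + FP); defined as 0 when nothing is predicted positive."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Eq. (2): TP / (TP + FN); defined as 0 when there are no actual positives."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p: float, r: float) -> float:
    """Eq. (3): harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def binary_metrics(tp: int, fp: int, fn: int) -> Dict[str, float]:
    p, r = precision(tp, fp), recall(tp, fn)
    return {"precision": p, "recall": r, "f1": f1_score(p, r)}


# Hypothetical counts: 90 attacks caught, 10 false alarms, 30 attacks missed.
m = binary_metrics(tp=90, fp=10, fn=30)
print(f"P={m['precision']:.3f} R={m['recall']:.3f} F1={m['f1']:.3f}")  # P=0.900 R=0.750 F1=0.818
```

Because F1 is a harmonic mean, it is pulled toward the smaller of the two values: here the recall of 0.75 drags F1 well below the precision of 0.9.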

4.2. Experimental Results

4.2.1. Comparisons with State-of-the-Art Methods

4.2.2. Ablation Studies

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Mao, J.; Wei, Z.; Li, B.; Zhang, R.; Song, L. Toward Ever-Evolution Network Threats: A Hierarchical Federated Class-Incremental Learning Approach for Network Intrusion Detection in IIoT. IEEE Internet Things J. 2024, 11, 29864–29877.

- Nguyen, X.H.; Le, K.H. nNFST: A single-model approach for multiclass novelty detection in network intrusion detection systems. J. Netw. Comput. Appl. 2025, 236, 104128.

- Thakkar, A.; Kikani, N.; Geddam, R. Fusion of linear and non-linear dimensionality reduction techniques for feature reduction in LSTM-based Intrusion Detection System. Appl. Soft Comput. 2024, 154, 111378.

- Cai, S.; Zhao, Y.; Lyu, J.; Wang, S.; Hu, Y.; Cheng, M.; Zhang, G. DDP-DAR: Network intrusion detection based on denoising diffusion probabilistic model and dual-attention residual network. Neural Netw. 2025, 184, 107064.

- Zhang, Y.; Wu, Y.; Huang, X. Toward transferable adversarial attacks against autoencoder-based network intrusion detectors. IEEE Trans. Ind. Inform. 2024, 20, 13863–13872.

- Qi, L.; Yang, Y.; Zhou, X.; Rafique, W.; Ma, J. Fast anomaly identification based on multiaspect data streams for intelligent intrusion detection toward secure industry 4.0. IEEE Trans. Ind. Inform. 2021, 18, 6503–6511.

- Mehedi, S.T.; Anwar, A.; Rahman, Z.; Ahmed, K.; Islam, R. Dependable intrusion detection system for IoT: A deep transfer learning based approach. IEEE Trans. Ind. Inform. 2022, 19, 1006–1017.

- Xu, C.; Shen, J.; Du, X. A method of few-shot network intrusion detection based on meta-learning framework. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3540–3552.

- Papamartzivanos, D.; Mármol, F.G.; Kambourakis, G. Dendron: Genetic trees driven rule induction for network intrusion detection systems. Future Gener. Comput. Syst. 2018, 79, 558–574.

- Abdulhammed, R.; Musafer, H.; Alessa, A.; Faezipour, M.; Abuzneid, A. Features dimensionality reduction approaches for machine learning based network intrusion detection. Electronics 2019, 8, 322.

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. ICISSP 2018, 1, 108–116.

- Mendonça, R.V.; Teodoro, A.A.; Rosa, R.L.; Saadi, M.; Melgarejo, D.C.; Nardelli, P.H.; Rodríguez, D.Z. Intrusion detection system based on fast hierarchical deep convolutional neural network. IEEE Access 2021, 9, 61024–61034.

- Atefinia, R.; Ahmadi, M. Network intrusion detection using multi-architectural modular deep neural network. J. Supercomput. 2021, 77, 3571–3593.

- Yang, J.; Chen, X.; Chen, S.; Jiang, X.; Tan, X. Conditional variational auto-encoder and extreme value theory aided two-stage learning approach for intelligent fine-grained known/unknown intrusion detection. IEEE Trans. Inf. Forensics Secur. 2021, 16, 3538–3553.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–6.

- Moustafa, N.; Slay, J. The evaluation of Network Anomaly Detection Systems: Statistical analysis of the UNSW-NB15 data set and the comparison with the KDD99 data set. Inf. Secur. J. Glob. Perspect. 2016, 25, 18–31.

- Moustafa, N.; Slay, J.; Creech, G. Novel Geometric Area Analysis Technique for Anomaly Detection Using Trapezoidal Area Estimation on Large-Scale Networks. IEEE Trans. Big Data 2019, 5, 481–494.

- Gamage, S.; Samarabandu, J. Deep learning methods in network intrusion detection: A survey and an objective comparison. J. Netw. Comput. Appl. 2020, 169, 102767.

- Dogan, G. ProTru: A provenance-based trust architecture for wireless sensor networks. Int. J. Netw. Manag. 2016, 26, 131–151.

- Lawal, M.A.; Shaikh, R.A.; Hassan, S.R. Security analysis of network anomalies mitigation schemes in IoT networks. IEEE Access 2020, 8, 43355–43374.

- Li, Y.; Li, J. MultiClassifier: A combination of DPI and ML for application-layer classification in SDN. In Proceedings of the 2014 2nd International Conference on Systems and Informatics (ICSAI 2014), Shanghai, China, 15–17 November 2014; pp. 682–686.

- Moustafa, N.; Turnbull, B.; Choo, K.K.R. An ensemble intrusion detection technique based on proposed statistical flow features for protecting network traffic of internet of things. IEEE Internet Things J. 2018, 6, 4815–4830.

- Maniriho, P.; Mahoro, L.J.; Niyigaba, E.; Bizimana, Z.; Ahmad, T. Detecting intrusions in computer network traffic with machine learning approaches. Int. J. Intell. Eng. Syst. 2020, 13, 433–445.

- Tong, X.; Tan, X.; Chen, L.; Yang, J.; Zheng, Q. BFSN: A novel method of encrypted traffic classification based on bidirectional flow sequence network. In Proceedings of the 2020 3rd International Conference on Hot Information-Centric Networking (HotICN), Hefei, China, 12–14 December 2020; pp. 160–165.

- Zhao, R.; Gui, G.; Xue, Z.; Yin, J.; Ohtsuki, T.; Adebisi, B.; Gacanin, H. A novel intrusion detection method based on lightweight neural network for internet of things. IEEE Internet Things J. 2021, 9, 9960–9972.

- Popoola, S.I.; Adebisi, B.; Hammoudeh, M.; Gui, G.; Gacanin, H. Hybrid deep learning for botnet attack detection in the internet-of-things networks. IEEE Internet Things J. 2020, 8, 4944–4956.

- Ma, C.; Du, X.; Cao, L. Analysis of multi-types of flow features based on hybrid neural network for improving network anomaly detection. IEEE Access 2019, 7, 148363–148380.

- Lunardi, W.T.; Lopez, M.A.; Giacalone, J.P. ARCADE: Adversarially regularized convolutional autoencoder for network anomaly detection. IEEE Trans. Netw. Serv. Manag. 2022, 20, 1305–1318.

- Zhou, Y.; Song, X.; Zhang, Y.; Liu, F.; Zhu, C.; Liu, L. Feature encoding with autoencoders for weakly supervised anomaly detection. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 2454–2465.

- Al-Qarni, E.A.; Al-Asmari, G.A. Addressing imbalanced data in network intrusion detection: A review and survey. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 136–143.

- Liu, Z.; Li, Y.; Chen, N.; Wang, Q.; Hooi, B.; He, B. A survey of imbalanced learning on graphs: Problems, techniques, and future directions. IEEE Trans. Knowl. Data Eng. 2025, 37, 3132–3152.

- Elyan, E.; Moreno-Garcia, C.F.; Jayne, C. CDSMOTE: Class decomposition and synthetic minority class oversampling technique for imbalanced-data classification. Neural Comput. Appl. 2021, 33, 2839–2851.

- Arifin, M.A.S.; Stiawan, D.; Yudho Suprapto, B.; Susanto, S.; Salim, T.; Idris, M.Y.; Budiarto, R. Oversampling and undersampling for intrusion detection system in the supervisory control and data acquisition IEC 60870-5-104. IET Cyber-Phys. Syst. Theory Appl. 2024, 9, 282–292.

- Zheng, M.; Hu, X.; Hu, Y.; Zheng, X.; Luo, Y. Fed-UGI: Federated undersampling learning framework with Gini impurity for imbalanced network intrusion detection. IEEE Trans. Inf. Forensics Secur. 2024, 20, 1262–1277.

- Tao, X.; Zheng, Y.; Chen, W.; Zhang, X.; Qi, L.; Fan, Z.; Huang, S. SVDD-based weighted oversampling technique for imbalanced and overlapped dataset learning. Inf. Sci. 2022, 588, 13–51.

- Tan, X.; Su, S.; Huang, Z.; Guo, X.; Zuo, Z.; Sun, X.; Li, L. Wireless sensor networks intrusion detection based on SMOTE and the random forest algorithm. Sensors 2019, 19, 203.

- Wang, J.H.; Septian, T.W. Combining oversampling with recurrent neural networks for intrusion detection. In Proceedings of the International Conference on Database Systems for Advanced Applications, Taipei, Taiwan, 11–14 April 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 305–320.

- Al, S.; Dener, M. STL-HDL: A new hybrid network intrusion detection system for imbalanced dataset on big data environment. Comput. Secur. 2021, 110, 102435.

- Jedrzejowicz, J.; Jedrzejowicz, P. GEP-based classifier for mining imbalanced data. Expert Syst. Appl. 2021, 164, 114058.

- Luo, Y.; Chen, R.; Li, C.; Yang, D.; Tang, K.; Su, J. An improved binary simulated annealing algorithm and TPE-FL-LightGBM for fast network intrusion detection. Electronics 2025, 14, 231.

- Chen, W.; Yang, K.; Yu, Z.; Shi, Y.; Chen, C.P. A survey on imbalanced learning: Latest research, applications and future directions. Artif. Intell. Rev. 2024, 57, 137.

- Zeng, W.; Zhang, C.; Liang, X.; Xia, J.; Lin, Y.; Lin, Y. Intrusion detection-embedded chaotic encryption via hybrid modulation for data center interconnects. Opt. Lett. 2025, 50, 4450–4453.

- Wang, Z.; Chu, X.; Li, D.; Yang, H.; Qu, W. Cost-sensitive matrixized classification learning with information entropy. Appl. Soft Comput. 2022, 116, 108266.

- Telikani, A.; Gandomi, A.H. Cost-sensitive stacked auto-encoders for intrusion detection in the Internet of Things. Internet Things 2021, 14, 100122.

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Loshchilov, I.; Hutter, F. SGDR: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983.

- Habibi, O.; Chemmakha, M.; Lazaar, M. Imbalanced tabular data modelization using CTGAN and machine learning to improve IoT Botnet attacks detection. Eng. Appl. Artif. Intell. 2023, 118, 105669.

- Chen, H.; Wei, J.; Huang, H.; Wen, L.; Yuan, Y.; Wu, J. Novel imbalanced fault diagnosis method based on generative adversarial networks with balancing serial CNN and Transformer (BCTGAN). Expert Syst. Appl. 2024, 258, 125171.

- He, H.; Bai, Y.; Garcia, E.A.; Li, S. ADASYN: Adaptive synthetic sampling approach for imbalanced learning. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 1322–1328.

| Dataset | Label | Category | Original Training Set | Augmented Training Set |
|---|---|---|---|---|
| UNSW-NB15 | 0 | Normal | 56,000 | 56,000 |
| UNSW-NB15 | 1 | DoS | 12,264 | 22,264 |
| UNSW-NB15 | 2 | Reconnaissance | 10,491 | 20,491 |
| UNSW-NB15 | 3 | Shellcode | 1133 | 2133 |
| UNSW-NB15 | 4 | Worms | 130 | 230 |
| CICIDS2017 | 0 | Benign | 105,222 | 105,222 |
| CICIDS2017 | 1 | DoS | 21,550 | 31,550 |
| CICIDS2017 | 2 | Port Scan | 10,809 | 20,809 |
| CICIDS2017 | 3 | Brute Force | 5235 | 7235 |
| CICIDS2017 | 4 | Web Attack | 1476 | 2476 |
| CICIDS2017 | 5 | Bot | 857 | 1057 |
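
The table implies two derived quantities: how many samples DA-CVAE added to each class (augmented minus original), and how far the imbalance ratio drops. The sketch below computes both from the transcribed counts; defining the imbalance ratio as largest-class size over smallest-class size is a common convention assumed here, not one stated in the text.

```python
from typing import Dict, List, Tuple

# (original, augmented) training-set sizes per class, transcribed from the table above.
COUNTS: Dict[str, Dict[str, Tuple[int, int]]] = {
    "UNSW-NB15": {"Normal": (56000, 56000), "DoS": (12264, 22264),
                  "Reconnaissance": (10491, 20491), "Shellcode": (1133, 2133),
                  "Worms": (130, 230)},
    "CICIDS2017": {"Benign": (105222, 105222), "DoS": (21550, 31550),
                   "Port Scan": (10809, 20809), "Brute Force": (5235, 7235),
                   "Web Attack": (1476, 2476), "Bot": (857, 1057)},
}


def synthetic_counts(classes: Dict[str, Tuple[int, int]]) -> Dict[str, int]:
    """Number of generated samples added to each class."""
    return {name: aug - orig for name, (orig, aug) in classes.items()}


def imbalance_ratio(sizes: List[int]) -> float:
    """Largest class size divided by smallest class size."""
    return max(sizes) / min(sizes)


for dataset, classes in COUNTS.items():
    added = sum(synthetic_counts(classes).values())
    before = imbalance_ratio([orig for orig, _ in classes.values()])
    after = imbalance_ratio([aug for _, aug in classes.values()])
    print(f"{dataset}: {added} synthetic samples, imbalance ratio {before:.1f} -> {after:.1f}")
# UNSW-NB15: 21100 synthetic samples, imbalance ratio 430.8 -> 243.5
# CICIDS2017: 23200 synthetic samples, imbalance ratio 122.8 -> 99.5
```

Augmentation reduces the skew but leaves the data far from balanced, which is consistent with pairing it with the cost-sensitive loss rather than relying on oversampling alone.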

| Actual Class \ Predicted Class | Attack | Normal |
|---|---|---|
| Attack | True Positive (TP) | False Negative (FN) |
| Normal | False Positive (FP) | True Negative (TN) |

| Component | Layer | Filter Size | Stride |
|---|---|---|---|
| Encoder | Conv1 | 3 × 3 | (2, 2) |
| Encoder | Conv2 | | (1, 1) |
| Encoder | Conv3 | | (2, 2) |
| Encoder | Conv4 | | (1, 1) |
| Decoder | DeConv1 | | (1, 1) |
| Decoder | DeConv2 | | (2, 2) |
| Decoder | DeConv3 | | (1, 1) |
| Decoder | DeConv4 | | (2, 2) |
| Multi-Classification Head | Dense(128) | | |
| Multi-Classification Head | Softmax | | |
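
The stride pattern in the table is mirror-symmetric: the encoder downsamples twice (strides 2, 1, 2, 1) and the decoder upsamples twice (strides 1, 2, 1, 2). The sketch below traces one spatial dimension through the eight layers to show that the decoder restores the input size. It assumes an 8 × 8 input grid, a 3 × 3 kernel and padding 1 for every layer (the table gives the filter size only for Conv1), and `output_padding = stride - 1` for the transposed convolutions; all of these are hypothetical. The two formulas are the standard output-size expressions for convolution and transposed convolution with dilation 1.

```python
from typing import List

ENCODER_STRIDES = [2, 1, 2, 1]  # Conv1..Conv4, from the table
DECODER_STRIDES = [1, 2, 1, 2]  # DeConv1..DeConv4, from the table


def conv_out(size: int, kernel: int = 3, stride: int = 1, padding: int = 1) -> int:
    """Output length of a convolution along one spatial axis (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size: int, kernel: int = 3, stride: int = 1, padding: int = 1,
               output_padding: int = 0) -> int:
    """Output length of a transposed convolution along one spatial axis (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def trace_shapes(input_size: int = 8) -> List[int]:
    """Spatial size after each encoder and decoder layer, starting from the input."""
    sizes = [input_size]
    for s in ENCODER_STRIDES:
        sizes.append(conv_out(sizes[-1], stride=s))
    for s in DECODER_STRIDES:
        # Assumed output_padding = stride - 1 so stride-2 layers exactly double the size.
        sizes.append(deconv_out(sizes[-1], stride=s, output_padding=s - 1))
    return sizes


print(trace_shapes(8))  # [8, 4, 4, 2, 2, 2, 4, 4, 8]
```

Without the assumed `output_padding`, each stride-2 transposed convolution would produce one element fewer than double (2 to 3, 4 to 7), and the reconstruction would not match the input size.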

| Method | Dataset | Total Training Time (h) | Average Inference Time (ms) |
|---|---|---|---|
| TMG-IDS | CICIDS2017 | 8.41 | 4.64 |
| SALAD | CICIDS2017 | 8.35 | 1.23 |
| DCHAE | CICIDS2017 | 8.29 | 2.58 |
| CAEP | CICIDS2017 | 7.83 | 3.71 |
| CSCVAE-NID (Ours) | CICIDS2017 | 8.22 | 0.90 |

| Label | RFFE Precision | RFFE Recall | RFFE F1-score | IDS-INT Precision | IDS-INT Recall | IDS-INT F1-score |
|---|---|---|---|---|---|---|
| Normal | 0.869 | 0.882 | 0.875 | 0.933 | 0.927 | 0.930 |
| Attack | 0.857 | 0.826 | 0.841 | 0.930 | 0.908 | 0.919 |
| Macro-avg | 0.866 | 0.867 | 0.866 | 0.932 | 0.922 | 0.927 |

| Label | MF-Net Precision | MF-Net Recall | MF-Net F1-score | TMG-IDS Precision | TMG-IDS Recall | TMG-IDS F1-score |
|---|---|---|---|---|---|---|
| Normal | 0.925 | 0.916 | 0.920 | 0.967 | 0.958 | 0.962 |
| Attack | 0.941 | 0.936 | 0.938 | 0.972 | 0.951 | 0.961 |
| Macro-avg | 0.929 | 0.922 | 0.925 | 0.970 | 0.956 | 0.963 |

| Label | SALAD Precision | SALAD Recall | SALAD F1-score | DCHAE Precision | DCHAE Recall | DCHAE F1-score |
|---|---|---|---|---|---|---|
| Normal | 0.946 | 0.940 | 0.943 | 0.961 | 0.972 | 0.966 |
| Attack | 0.962 | 0.935 | 0.948 | 0.956 | 0.968 | 0.962 |
| Macro-avg | 0.950 | 0.939 | 0.944 | 0.960 | 0.971 | 0.965 |

| Label | CAEP Precision | CAEP Recall | CAEP F1-score | CSCVAE-NID (Ours) Precision | CSCVAE-NID (Ours) Recall | CSCVAE-NID (Ours) F1-score |
|---|---|---|---|---|---|---|
| Normal | 0.975 | 0.968 | 0.971 | 0.989 | 0.981 | 0.985 |
| Attack | 0.961 | 0.954 | 0.957 | 0.985 | 0.992 | 0.988 |
| Macro-avg | 0.971 | 0.964 | 0.967 | 0.988 | 0.984 | 0.986 |

| Label | RFFE Precision | RFFE Recall | RFFE F1-score | IDS-INT Precision | IDS-INT Recall | IDS-INT F1-score |
|---|---|---|---|---|---|---|
| Normal | 0.893 | 0.904 | 0.898 | 0.925 | 0.941 | 0.933 |
| Attack | 0.858 | 0.873 | 0.865 | 0.931 | 0.916 | 0.923 |
| Macro-avg | 0.883 | 0.895 | 0.889 | 0.927 | 0.933 | 0.930 |

| Label | MF-Net Precision | MF-Net Recall | MF-Net F1-score | TMG-IDS Precision | TMG-IDS Recall | TMG-IDS F1-score |
|---|---|---|---|---|---|---|
| Normal | 0.952 | 0.937 | 0.944 | 0.974 | 0.930 | 0.951 |
| Attack | 0.928 | 0.940 | 0.934 | 0.946 | 0.953 | 0.950 |
| Macro-avg | 0.945 | 0.938 | 0.941 | 0.966 | 0.937 | 0.951 |

| Label | SALAD Precision | SALAD Recall | SALAD F1-score | DCHAE Precision | DCHAE Recall | DCHAE F1-score |
|---|---|---|---|---|---|---|
| Normal | 0.955 | 0.941 | 0.948 | 0.979 | 0.975 | 0.977 |
| Attack | 0.936 | 0.965 | 0.950 | 0.952 | 0.966 | 0.959 |
| Macro-avg | 0.949 | 0.948 | 0.948 | 0.971 | 0.972 | 0.971 |

| Label | CAEP Precision | CAEP Recall | CAEP F1-score | CSCVAE-NID (Ours) Precision | CSCVAE-NID (Ours) Recall | CSCVAE-NID (Ours) F1-score |
|---|---|---|---|---|---|---|
| Normal | 0.964 | 0.949 | 0.956 | 0.982 | 0.989 | 0.985 |
| Attack | 0.958 | 0.973 | 0.965 | 0.968 | 0.977 | 0.972 |
| Macro-avg | 0.962 | 0.956 | 0.959 | 0.978 | 0.985 | 0.981 |

| Label | RFFE Precision | RFFE Recall | RFFE F1-score | IDS-INT Precision | IDS-INT Recall | IDS-INT F1-score |
|---|---|---|---|---|---|---|
| 0 | 0.931 | 0.879 | 0.904 | 0.928 | 0.901 | 0.914 |
| 1 | 0.719 | 0.921 | 0.808 | 0.846 | 0.769 | 0.806 |
| 2 | 0.545 | 0.835 | 0.659 | 0.653 | 0.717 | 0.684 |
| 3 | 0.298 | 0.352 | 0.323 | 0.446 | 0.570 | 0.499 |
| 4 | 0.772 | 0.160 | 0.265 | 0.483 | 0.384 | 0.428 |
| Macro-avg | 0.838 | 0.871 | 0.854 | 0.871 | 0.851 | 0.861 |

| Label | MF-Net Precision | MF-Net Recall | MF-Net F1-score | TMG-IDS Precision | TMG-IDS Recall | TMG-IDS F1-score |
|---|---|---|---|---|---|---|
| 0 | 0.954 | 0.909 | 0.931 | 0.929 | 0.951 | 0.940 |
| 1 | 0.672 | 0.823 | 0.740 | 0.892 | 0.819 | 0.854 |
| 2 | 0.559 | 0.541 | 0.550 | 0.821 | 0.752 | 0.785 |
| 3 | 0.342 | 0.578 | 0.430 | 0.689 | 0.550 | 0.612 |
| 4 | 0.741 | 0.636 | 0.684 | 0.547 | 0.508 | 0.527 |
| Macro-avg | 0.850 | 0.842 | 0.842 | 0.905 | 0.898 | 0.901 |

| Label | SALAD Precision | SALAD Recall | SALAD F1-score | DCHAE Precision | DCHAE Recall | DCHAE F1-score |
|---|---|---|---|---|---|---|
| 0 | 0.934 | 0.944 | 0.939 | 0.963 | 0.920 | 0.941 |
| 1 | 0.872 | 0.804 | 0.837 | 0.752 | 0.903 | 0.820 |
| 2 | 0.859 | 0.915 | 0.886 | 0.671 | 0.841 | 0.746 |
| 3 | 0.507 | 0.701 | 0.588 | 0.283 | 0.707 | 0.404 |
| 4 | 0.497 | 0.447 | 0.471 | 0.574 | 0.735 | 0.645 |
| Macro-avg | 0.908 | 0.914 | 0.911 | 0.882 | 0.903 | 0.892 |

| Label | CAEP Precision | CAEP Recall | CAEP F1-score | CSCVAE-NID (Ours) Precision | CSCVAE-NID (Ours) Recall | CSCVAE-NID (Ours) F1-score |
|---|---|---|---|---|---|---|
| 0 | 0.955 | 0.946 | 0.950 | 0.974 | 0.959 | 0.966 |
| 1 | 0.826 | 0.908 | 0.865 | 0.879 | 0.921 | 0.899 |
| 2 | 0.771 | 0.674 | 0.719 | 0.806 | 0.840 | 0.823 |
| 3 | 0.303 | 0.512 | 0.381 | 0.768 | 0.693 | 0.729 |
| 4 | 0.533 | 0.609 | 0.568 | 0.692 | 0.784 | 0.735 |
| Macro-avg | 0.901 | 0.897 | 0.899 | 0.934 | 0.933 | 0.933 |

| Label | RFFE Precision | RFFE Recall | RFFE F1-score | IDS-INT Precision | IDS-INT Recall | IDS-INT F1-score |
|---|---|---|---|---|---|---|
| 0 | 0.841 | 0.874 | 0.857 | 0.904 | 0.918 | 0.911 |
| 1 | 0.918 | 0.859 | 0.888 | 0.937 | 0.906 | 0.921 |
| 2 | 0.902 | 0.934 | 0.918 | 0.926 | 0.860 | 0.892 |
| 3 | 0.961 | 0.782 | 0.862 | 0.798 | 0.851 | 0.824 |
| 4 | 0.743 | 0.689 | 0.715 | 0.905 | 0.699 | 0.789 |
| 5 | 0.892 | 0.577 | 0.700 | 0.931 | 0.784 | 0.851 |
| Macro-avg | 0.860 | 0.868 | 0.864 | 0.906 | 0.906 | 0.906 |

| Label | MF-Net Precision | MF-Net Recall | MF-Net F1-score | TMG-IDS Precision | TMG-IDS Recall | TMG-IDS F1-score |
|---|---|---|---|---|---|---|
| 0 | 0.935 | 0.947 | 0.941 | 0.955 | 0.967 | 0.961 |
| 1 | 0.972 | 0.931 | 0.951 | 0.926 | 0.934 | 0.930 |
| 2 | 0.938 | 0.984 | 0.960 | 0.983 | 0.925 | 0.953 |
| 3 | 0.904 | 0.846 | 0.874 | 0.920 | 0.881 | 0.900 |
| 4 | 0.896 | 0.821 | 0.857 | 0.874 | 0.796 | 0.833 |
| 5 | 0.961 | 0.919 | 0.940 | 0.907 | 0.942 | 0.924 |
| Macro-avg | 0.938 | 0.941 | 0.939 | 0.949 | 0.953 | 0.951 |

| Label | SALAD Precision | SALAD Recall | SALAD F1-score | DCHAE Precision | DCHAE Recall | DCHAE F1-score |
|---|---|---|---|---|---|---|
| 0 | 0.929 | 0.911 | 0.920 | 0.946 | 0.956 | 0.951 |
| 1 | 0.967 | 0.963 | 0.965 | 0.913 | 0.943 | 0.928 |
| 2 | 0.943 | 0.926 | 0.934 | 0.947 | 0.954 | 0.950 |
| 3 | 0.950 | 0.825 | 0.883 | 0.932 | 0.893 | 0.912 |
| 4 | 0.912 | 0.907 | 0.910 | 0.908 | 0.849 | 0.878 |
| 5 | 0.827 | 0.743 | 0.783 | 0.925 | 0.941 | 0.933 |
| Macro-avg | 0.935 | 0.916 | 0.925 | 0.939 | 0.950 | 0.944 |

| Label | CAEP Precision | CAEP Recall | CAEP F1-score | CSCVAE-NID (Ours) Precision | CSCVAE-NID (Ours) Recall | CSCVAE-NID (Ours) F1-score |
|---|---|---|---|---|---|---|
| 0 | 0.940 | 0.981 | 0.960 | 0.979 | 0.983 | 0.981 |
| 1 | 0.929 | 0.952 | 0.940 | 0.965 | 0.976 | 0.970 |
| 2 | 0.951 | 0.925 | 0.938 | 0.991 | 0.994 | 0.992 |
| 3 | 0.937 | 0.956 | 0.946 | 0.983 | 0.978 | 0.980 |
| 4 | 0.908 | 0.867 | 0.887 | 1.000 | 1.000 | 1.000 |
| 5 | 0.944 | 0.883 | 0.913 | 1.000 | 0.989 | 0.994 |
| Macro-avg | 0.944 | 0.938 | 0.952 | 0.977 | 0.982 | 0.980 |
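
Each per-class F1 value is the harmonic mean of that row's precision and recall. As a quick consistency check, the sketch recomputes F1 for the CSCVAE-NID columns of the six-class table (labels 0 to 5) from the reported precision and recall and confirms agreement within the three-decimal rounding of the table. Only the per-class rows are checked; the macro-averaged row is not reproduced by this formula.

```python
from typing import Dict, Tuple

# Per-class (precision, recall, reported F1) for CSCVAE-NID in the six-class table.
ROWS: Dict[int, Tuple[float, float, float]] = {
    0: (0.979, 0.983, 0.981),
    1: (0.965, 0.976, 0.970),
    2: (0.991, 0.994, 0.992),
    3: (0.983, 0.978, 0.980),
    4: (1.000, 1.000, 1.000),
    5: (1.000, 0.989, 0.994),
}


def f1(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


for label, (p, r, reported) in ROWS.items():
    recomputed = f1(p, r)
    # Inputs are themselves rounded to 3 decimals, so allow a 0.001 tolerance.
    print(label, f"{recomputed:.4f}", reported, abs(recomputed - reported) <= 1e-3)
```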

| Dataset | Metric | Comb. 1 (MC-CVAE) | Comb. 2 (+DA-CVAE) | Comb. 3 (+Cost-Sens.) | Comb. 4 (CSMC-CVAE) |
|---|---|---|---|---|---|
| UNSW-NB15 | Precision | 0.914 | 0.951 | 0.968 | 0.977 |
| UNSW-NB15 | Recall | 0.926 | 0.945 | 0.970 | 0.982 |
| UNSW-NB15 | F1-score | 0.920 | 0.948 | 0.969 | 0.980 |
| CICIDS2017 | Precision | 0.894 | 0.917 | 0.928 | 0.934 |
| CICIDS2017 | Recall | 0.890 | 0.908 | 0.925 | 0.933 |
| CICIDS2017 | F1-score | 0.892 | 0.912 | 0.926 | 0.933 |

| Setting | UNSW-NB15 Precision | UNSW-NB15 Recall | UNSW-NB15 F1-score | CICIDS2017 Precision | CICIDS2017 Recall | CICIDS2017 F1-score |
|---|---|---|---|---|---|---|
| | 0.962 | 0.965 | 0.963 | 0.914 | 0.912 | 0.913 |
| | 0.973 | 0.974 | 0.973 | 0.926 | 0.928 | 0.927 |
| | 0.977 | 0.982 | 0.980 | 0.934 | 0.933 | 0.933 |
| | 0.975 | 0.971 | 0.973 | 0.930 | 0.924 | 0.927 |
| | 0.967 | 0.963 | 0.965 | 0.923 | 0.917 | 0.921 |
| | 0.958 | 0.955 | 0.956 | 0.917 | 0.915 | 0.916 |

| Data Augmentation | Dataset | Classifier | Precision | Recall | F1-score |
|---|---|---|---|---|---|
| CTGAN | CICIDS2017 | CSMC-CVAE | 0.968 | 0.971 | 0.969 |
| BCTGAN | CICIDS2017 | CSMC-CVAE | 0.971 | 0.975 | 0.973 |
| ADASYN | CICIDS2017 | CSMC-CVAE | 0.959 | 0.968 | 0.963 |
| DA-CVAE (Ours) | CICIDS2017 | CSMC-CVAE | 0.977 | 0.982 | 0.980 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Yu, X. CSCVAE-NID: A Conditionally Symmetric Two-Stage CVAE Framework with Cost-Sensitive Learning for Imbalanced Network Intrusion Detection. Entropy 2025, 27, 1086. https://doi.org/10.3390/e27111086
