1. Introduction

With the rapid growth of modern industries and manufacturing, rotating machinery and equipment are widely utilized in key sectors such as aerospace, automotive production, and energy generation. Bearings, as essential components of rotating machinery and equipment, play a crucial role in ensuring the safety, reliability, and efficiency of equipment operations [1]. Failing to detect bearing faults promptly can lead to equipment malfunction, causing substantial economic losses or even casualties. Therefore, the timely and accurate detection of bearing faults is of great practical importance [2,3].

Traditional signal processing and analysis techniques have been extensively applied in the diagnosis of faults in rotating machinery; however, these methods often struggle to meet the increasingly complex requirements of modern equipment, particularly in terms of accuracy and efficiency. They are especially ineffective under varying operational conditions and in noisy environments. Early machine learning models, such as K-Nearest Neighbors (KNN) [4], Artificial Neural Networks (ANNs) [5], and Support Vector Machines (SVMs) [6], when combined with feature extraction techniques from signal processing, have improved fault identification performance [7]. However, these approaches rely heavily on manual feature engineering, which can result in information loss and degraded classification performance if poor features are selected. With advancements in chip technology, deeper neural network models can now be trained efficiently. Deep learning (DL) models can extract features automatically and directly from raw signals, eliminating the need for manual preprocessing, which results in greater robustness and improved performance [8]. These models have achieved notable success in bearing fault diagnosis. Specifically, Convolutional Neural Networks (CNNs) [9,10], Recurrent Neural Networks (RNNs) [11], and Transformers [12,13] have been widely utilized due to their robust automatic feature extraction abilities, which significantly enhance diagnostic accuracy, particularly in complex operational conditions.

However, there are two major bottlenecks in these approaches:

- (a) Sample-dependent problem: Existing methods require a large number of labeled fault samples to achieve high accuracy [14]. In real industrial scenarios, the scarcity of early fault samples, variable working conditions, and the high cost of labeling make these data requirements hard to meet, and the data distribution varies significantly across conditions [15].

- (b) Poor adaptability to dynamic working conditions: In variable-speed and high-noise environments, traditional feature extraction methods struggle to capture weak fault features. Moreover, in practical applications, equipment is typically shut down immediately once a fault occurs, preventing the collection of sufficient samples for model training [16]. Therefore, more effective methods are needed for diagnosing bearing faults in real-world industries, especially for bearings operating under diverse conditions with limited data.

To tackle the challenge of limited data, Few-shot Learning (FSL) techniques have attracted growing interest in recent years. Luo et al. (2024) introduced an Elastic Prototypical Network that improves transfer diagnosis robustness under unstable rotational speeds [17]; Jiang et al. (2024) designed a Recursive Prototypical Network with Coordinate Attention to enhance separability in few-shot cross-condition scenarios [18]; Lin et al. (2025) proposed a Prototype Matching-based meta-learning model tailored for constrained-data diagnosis [19]; Li et al. (2024) developed Learn-Then-Adapt, a test-time adaptation scheme enabling on-the-fly cross-domain adaptation without target labels [20]; Cui et al. (2024) presented a Dictionary Domain Adaptation Transformer to alleviate cross-machine distribution shift by dictionary-level alignment [21]; Yan et al. (2023) built LiConvFormer, a lightweight separable-multi-scale convolution plus broadcast self-attention framework for efficient deployment [22]; Liu and Peng (2025) proposed a semi-supervised meta-learning approach with simplifying graph convolution for variable-condition few-shot diagnosis [23]; Zhu et al. (2024) formulated a cloud–edge test-time adaptation pipeline with customized contrastive learning for online machinery diagnosis [24]; Li et al. (2025) introduced a Multi-Variable Transformer-based meta-learning architecture that couples Transformer encoders with MAML for multivariate time series [25]; and Xiao et al. (2025) provided a comprehensive survey on domain generalization for rotating machinery, consolidating settings, benchmarks, and open issues [26].

Meta-learning is a central strategy within FSL. It has been demonstrated that this facilitates a rapid and effective adaptation to new tasks, with minimal data, by means of learning efficient learning strategies. This method has demonstrated considerable benefits, especially in the context of bearing fault detection [

27]. Meta-learning approaches are generally divided into three types: optimization-based methods, model-based methods, and metric-based methods [

28]. Among these, optimization-based approaches are designed to offer a globally shared initialization for all meta-tasks [

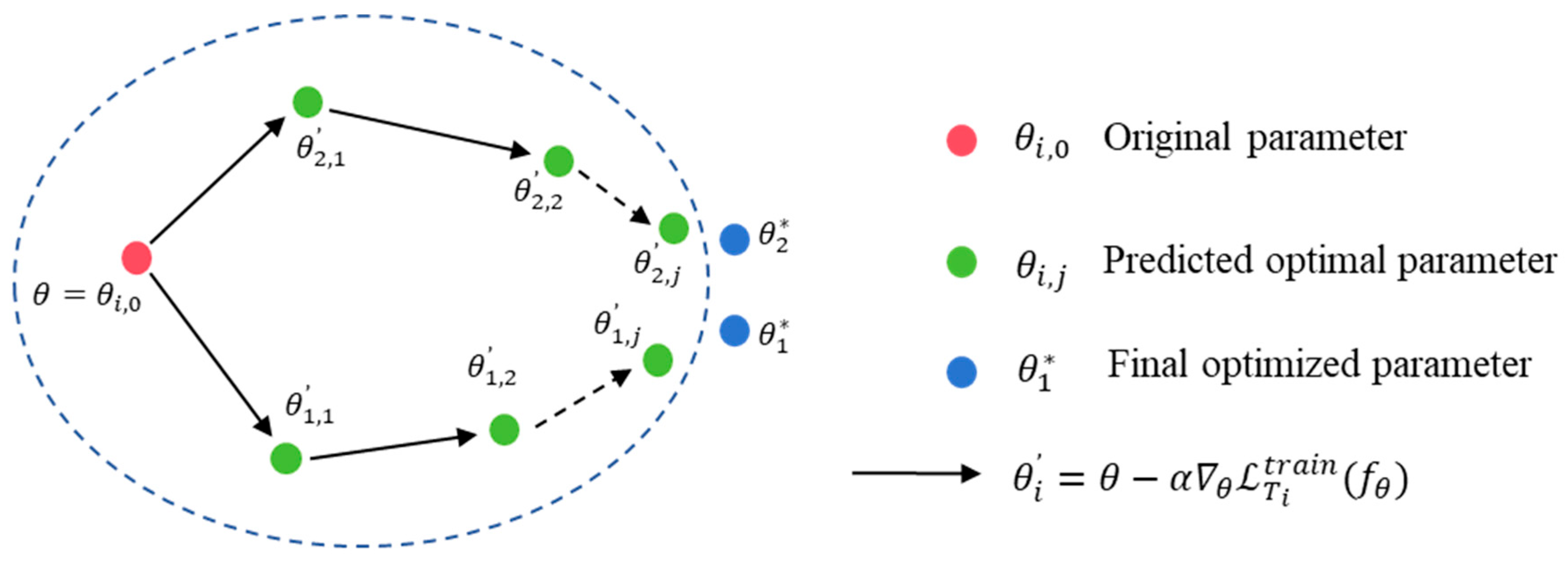

29], helping the model to rapidly achieve superior classification accuracy with only minor parameter adjustments and a small amount of data. Traditional optimization-based methods, such as model-independent meta-learning (MAML) [

30], substantially improve the model’s ability to quickly adapt to new tasks by setting shared initial weights during the meta-training phase [

31,

32].
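To make the shared-initialization idea concrete, the following is a minimal first-order MAML sketch on a toy 1-D regression problem. It is an illustration only and not the diagnostic model of this paper: the task family (`sample_task`), the scalar model y = w·x, and the values of the inner rate `alpha` and outer rate `beta` are assumptions chosen for readability, and the first-order approximation stands in for the full second-order MAML gradient.

```python
import random

def mse(w, xs, ys):
    """Mean squared error of the scalar model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grad_mse(w, xs, ys):
    """Derivative of mse with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def sample_task(rng, n=5):
    """Toy task (assumption): y = a * x with a task-specific slope a in [1, 3]."""
    a = rng.uniform(1.0, 3.0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(2 * n)]
    ys = [a * x for x in xs]
    return (xs[:n], ys[:n]), (xs[n:], ys[n:])  # (support, query)

def adapt(w, xs, ys, alpha):
    """Inner loop: one gradient step from the shared initialization."""
    return w - alpha * grad_mse(w, xs, ys)

def fomaml(meta_steps=200, tasks_per_step=4, alpha=0.1, beta=0.05, seed=0):
    """Outer loop: move the shared initialization using query-set gradients."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(meta_steps):
        meta_grad = 0.0
        for _ in range(tasks_per_step):
            (sx, sy), (qx, qy) = sample_task(rng)
            w_task = adapt(w, sx, sy, alpha)
            # First-order approximation: ignore d(w_task)/d(w).
            meta_grad += grad_mse(w_task, qx, qy)
        w -= beta * meta_grad / tasks_per_step
    return w
```

Under these assumptions the learned initialization should settle near the middle of the slope range, so that a single inner step with a handful of support samples already reduces the loss on any sampled task.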

In recent times, notable advancements have been made in applying meta-learning to diagnosing faults in rotating machinery. For example, Wang and Liu (2025) [33] proposed a multi-scale meta-learning network (MS-MLN), which integrates a multi-scale feature encoder with a metric embedding strategy. This network combines data from multiple scales without the need for manual feature extraction, leading to quick generalization at the task level. Lin et al. (2023) [34] introduced the GMAML algorithm, which targets small-sample cross-domain bearing fault detection driven by diverse signals (such as acceleration and acoustic signals). They developed a channel interaction feature encoder (MK-ECA) based on multi-kernel efficient channel attention and added a weight guidance factor (WGF) to the inner optimization of MAML, which adaptively tunes the training strategy and substantially enhances cross-domain generalization. Su et al. (2022) [35] proposed the DRHRML method, which integrates Maximum Mean Discrepancy (MMD) constraints via the Improved Sparse Denoising Autoencoder (ISDAE) for data reconstruction. This approach reduces noise and maintains distributional consistency, achieving fast adaptation to small sample sizes and cross-task generalization through MAML-based recursive meta-learning (RML), leading to significant test accuracy improvements under various working conditions. Dong et al. (2025) [36] introduced MTFL, aimed at small-sample cross-domain bearing fault diagnosis under diverse operating conditions. In their approach, 1D vibration signals are converted to 2D images (STI and MSMY branches), features are extracted using multi-source pre-trained ResNet18, and multi-source, two-branch features are selected and fused using SRF. The domain gap is narrowed through Domain Adaptation (DA) with a Learning Linear Adaptor, and the final classification is performed with a prototype network.

Although the above methods perform well across different tasks and operating conditions, the traditional MAML algorithm still has certain limitations, particularly when applied to cross-domain tasks. First, the inner-loop learning rate in MAML is fixed and does not adjust dynamically with the complexity of the task or changes in the data. This rigid learning rate strategy limits the model’s ability to adapt and generalize in more intricate and fluctuating task scenarios. Moreover, the varying complexity of bearing equipment operating conditions introduces challenges for fault diagnosis, especially in relation to cross-domain generalization. There are often notable differences in data distribution between the source and target domains, which makes it difficult to apply models trained on the source domain directly to the target domain, thus affecting diagnostic performance [37,38].

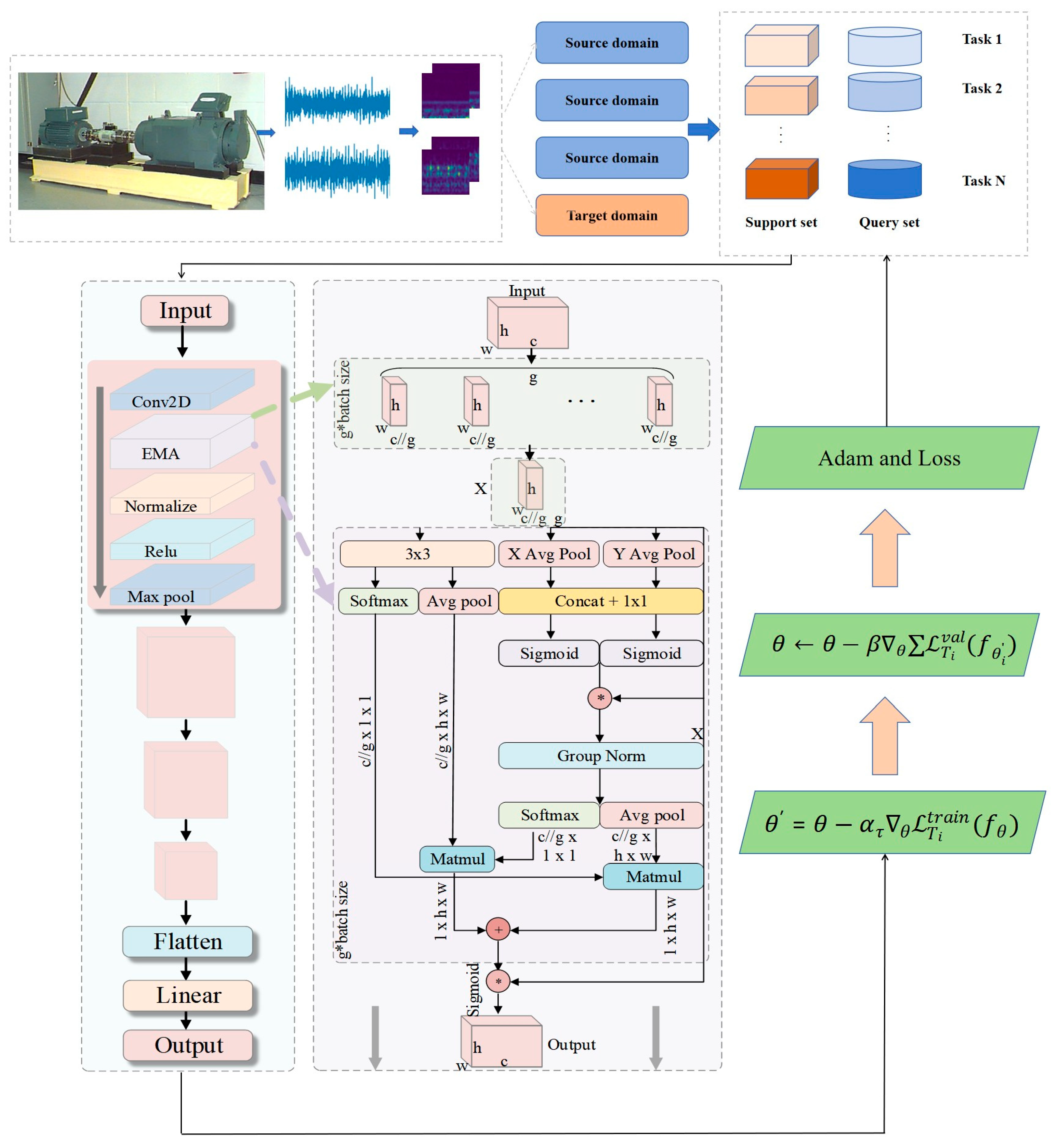

To address these challenges, this paper presents an adaptive meta-learning method, AdaMETA, for analyzing vibration signals obtained from bearings under different operating conditions. Compared to existing methods, AdaMETA provides three innovative contributions:

- (a)

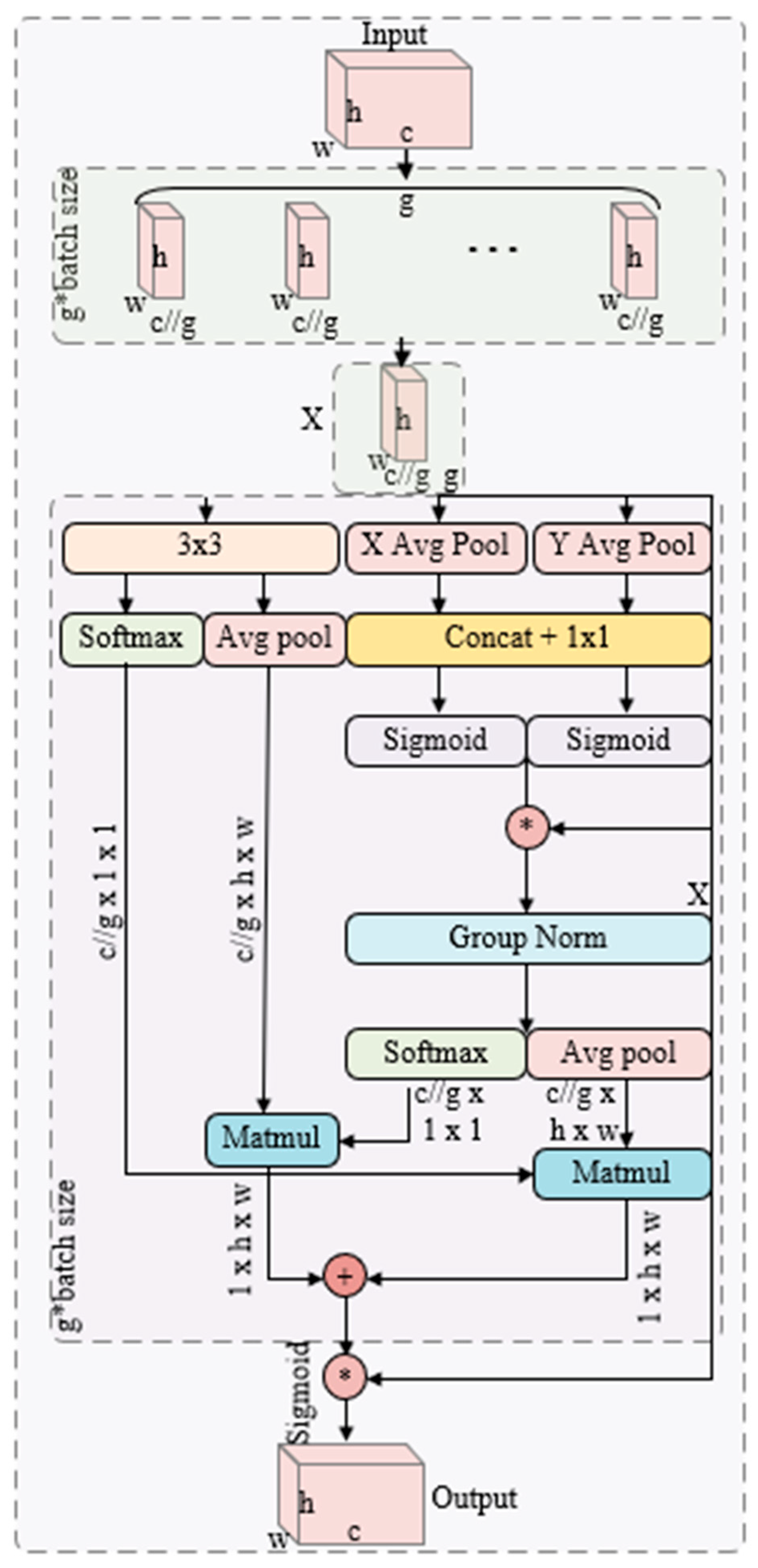

Efficient Multi-scale Attention Feature Extraction Encoder (C-EMA): A feature extraction encoder based on efficient multi-scale attention (EMA) is introduced, capable of more efficiently capturing key features in fault signals and enhancing feature learning under limited sample conditions. By integrating multi-scale information, C-EMA adaptively adjusts attention to different scale features, thereby improving the model’s recognition accuracy across diverse fault patterns.

- (b)

Improved MAML Algorithm with Dynamically Adjusted Inner-Loop Learning Rate: To address the limitations of the traditional MAML algorithm, an improved mechanism for adjusting the inner-loop learning rate is proposed. By dynamically modifying the learning rate based on task complexity, the model can flexibly meet the learning requirements of different tasks, thereby enhancing the generalization performance for cross-domain tasks. This innovation not only optimizes the learning strategy but also increases the model’s adaptability when facing diverse task types.

- (c)

Validation of Cross-domain Generalization Capability from Multiple Source Domains to a Target Domain: To better align with real-world industrial applications, the dataset is divided into four domains, with three serving as source domains and one as the target domain. An experimental scheme is designed to test cross-domain generalization from multiple source domains to the target domain. This experimental setup verifies the model’s training effectiveness under multi-source domains and assesses its cross-domain generalization ability to the target domain. The model’s robustness and effectiveness are further evaluated through a sample-limited cross-domain diagnostic scenario and noise interference experiments.

The remainder of this paper is structured as follows: Section 2 introduces the fundamental theory of Model-Agnostic Meta-Learning (MAML) and the Efficient Multi-scale Attention (EMA) mechanism. Section 3 presents a detailed description of the proposed method and diagnostic procedures. The reliability of the proposed method is validated through multiple sets of experiments in Section 4. Finally, Section 5 concludes the paper.

4. Experimental Results and Analysis

4.1. Dataset Processing

4.1.1. Overview of the CWRU Dataset

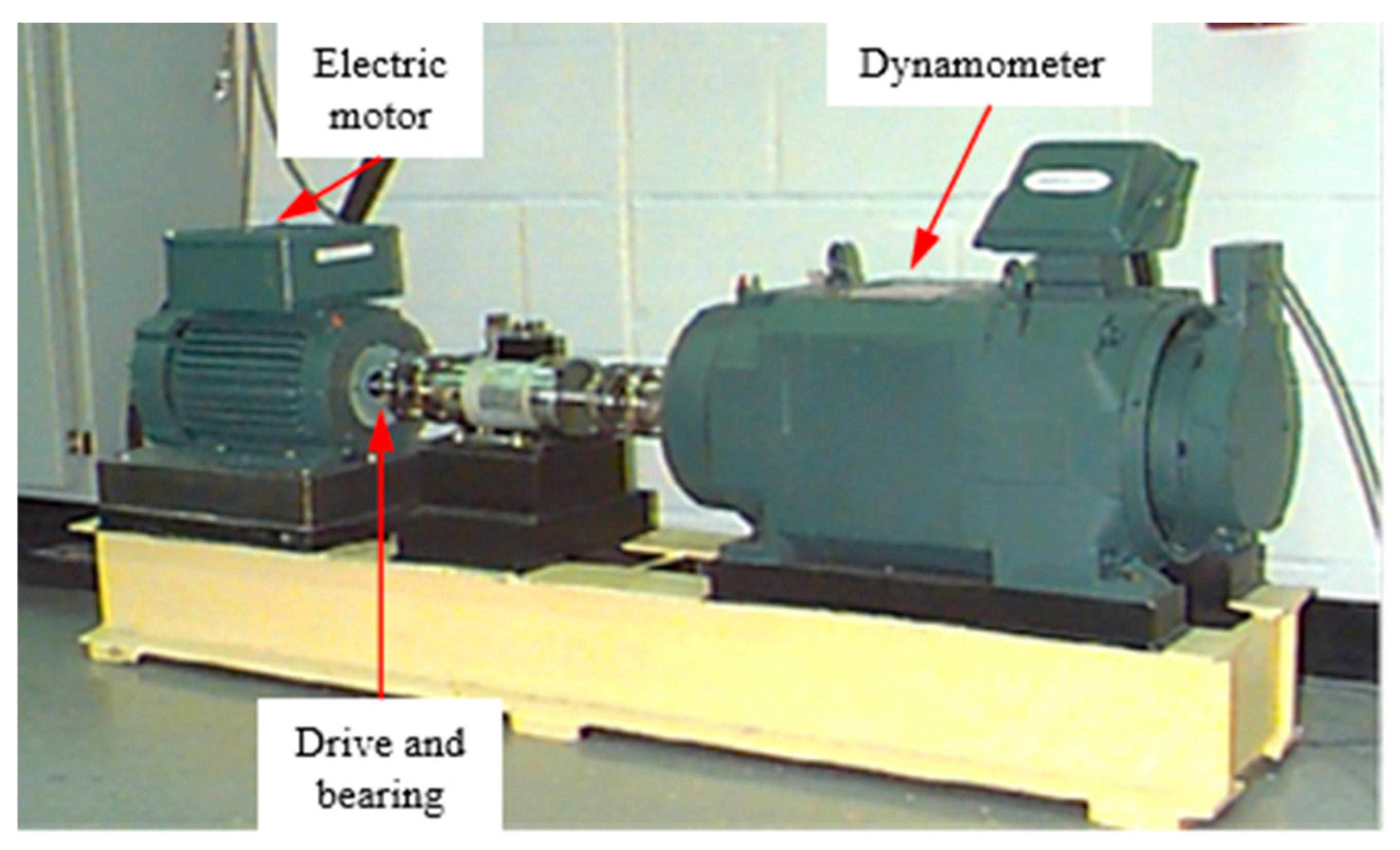

The CWRU (Case Western Reserve University Bearing Data Center) bearing fault dataset is one of the most commonly used public datasets in the field of health monitoring of rotating machinery [42] and is widely used to validate vibration-signal-driven fault diagnosis algorithms. The data are collected by piezoelectric accelerometers mounted at the drive end (DE) and fan end (FE) with sampling frequencies of 12 kHz and 48 kHz. The experimental platform, shown in Figure 5, uses a 2 hp three-phase induction motor operated under four load conditions (0 hp, 1 hp, 2 hp, and 3 hp), with corresponding speeds of approximately 1797 rpm, 1772 rpm, 1750 rpm, and 1730 rpm, respectively. The health states cover Normal and three typical defects: Inner Ring (IR), Outer Ring (OR), and Rolling Element (RE), each with three damage sizes (0.007″, 0.014″, and 0.021″), for a total of nine fault states (see Table 2). The defects are seeded by electro-discharge machining (EDM), which keeps fault depth and location consistent and gives the dataset a high degree of controllability and experimental reproducibility. The raw data are stored as unprocessed time-domain vibration signals and are suitable for feature extraction and modeling in the time, frequency, and time–frequency domains.

4.1.2. Experimental Data Partitioning and Small-Sample Cross-Domain Settings

To capture load-dependent distribution shifts, the CWRU data are split into four load domains (0, 1, 2, 3 hp), each with 10 classes (1 normal, 9 faults). In cross-domain few-shot detection, one domain is randomly chosen as the target $D_t$; the remaining three are merged as the source $D_s$. We train fully supervised on $D_s$. In $D_t$, only K samples per class (K ≤ 5) are used for adaptation, and the rest are used for testing, simulating scarce target-condition data. This design preserves load-induced statistical differences and avoids fault-type confounds, providing a clearer test of generalization and robustness.
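The partitioning described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the helper names `split_domains` and `k_shot_split` and the dictionary layout `{label: [samples]}` are hypothetical and only mirror the protocol (three merged source loads, one target load, K ≤ 5 adaptation samples per class).

```python
import random

LOADS_HP = (0, 1, 2, 3)  # the four CWRU load domains

def split_domains(target_load, loads=LOADS_HP):
    """Return (source_loads, target_load): three merged source domains, one target."""
    if target_load not in loads:
        raise ValueError(f"unknown load domain: {target_load}")
    return [load for load in loads if load != target_load], target_load

def k_shot_split(samples_by_class, k, rng):
    """Split target-domain samples into K per class for adaptation, the rest for testing."""
    if not 1 <= k <= 5:
        raise ValueError("the setting described above uses K <= 5")
    support, held_out = {}, {}
    for label, samples in samples_by_class.items():
        shuffled = list(samples)
        rng.shuffle(shuffled)
        support[label], held_out[label] = shuffled[:k], shuffled[k:]
    return support, held_out
```

Choosing the 2 hp domain as target, for instance, gives `split_domains(2)` equal to `([0, 1, 3], 2)`.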

Raw vibration signals are segmented with a 1024-sample sliding window (covering ≥ 2 rotor cycles) and 50% overlap to augment data and limit inter-sample correlation while preserving frequency resolution. After segmentation, short-time Fourier transforms (STFTs) produce fixed-size time–frequency images that capture local transients and global spectral patterns (see Figure 6). Compared with pure time-domain features, these representations are more sensitive to cross-load distribution shifts and offer a stronger basis for few-shot cross-domain diagnosis.
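The segmentation step can be sketched as follows. The function names are hypothetical, and the 12 kHz sampling rate used in the check is an assumption: at 12 kHz a 1024-sample window spans about 2.46 revolutions at the slowest listed speed (1730 rpm), which is consistent with the "≥ 2 rotor cycles" statement, whereas at 48 kHz the same window would cover less than one revolution.

```python
def segment(signal, window=1024, overlap=0.5):
    """Cut a 1-D sequence into fixed-length windows with fractional overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    hop = int(window * (1.0 - overlap))  # 512 samples for 50% overlap
    return [signal[i:i + window] for i in range(0, len(signal) - window + 1, hop)]

def revolutions_per_window(window, fs_hz, rpm):
    """Number of shaft revolutions covered by one window."""
    return window / fs_hz * rpm / 60.0
```

A record of 4096 samples yields seven windows starting at samples 0, 512, ..., 3072; any trailing samples that do not fill a complete window are dropped in this sketch.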

4.2. Comparison of Algorithms in Different Cross-Domain Scenarios

This section evaluates the performance of the AdaMETA diagnostic model across four distinct low-shot cross-domain scenarios, with the scenario details provided in

Table 3. For each sub-task, which follows the ‘10-way 5-shot’ configuration, five samples from each class in the source domain are randomly selected to construct the task. The diagnostic model, once trained, is tested under different load conditions in the target domain. The results for all methods are shown in

Table 4 and

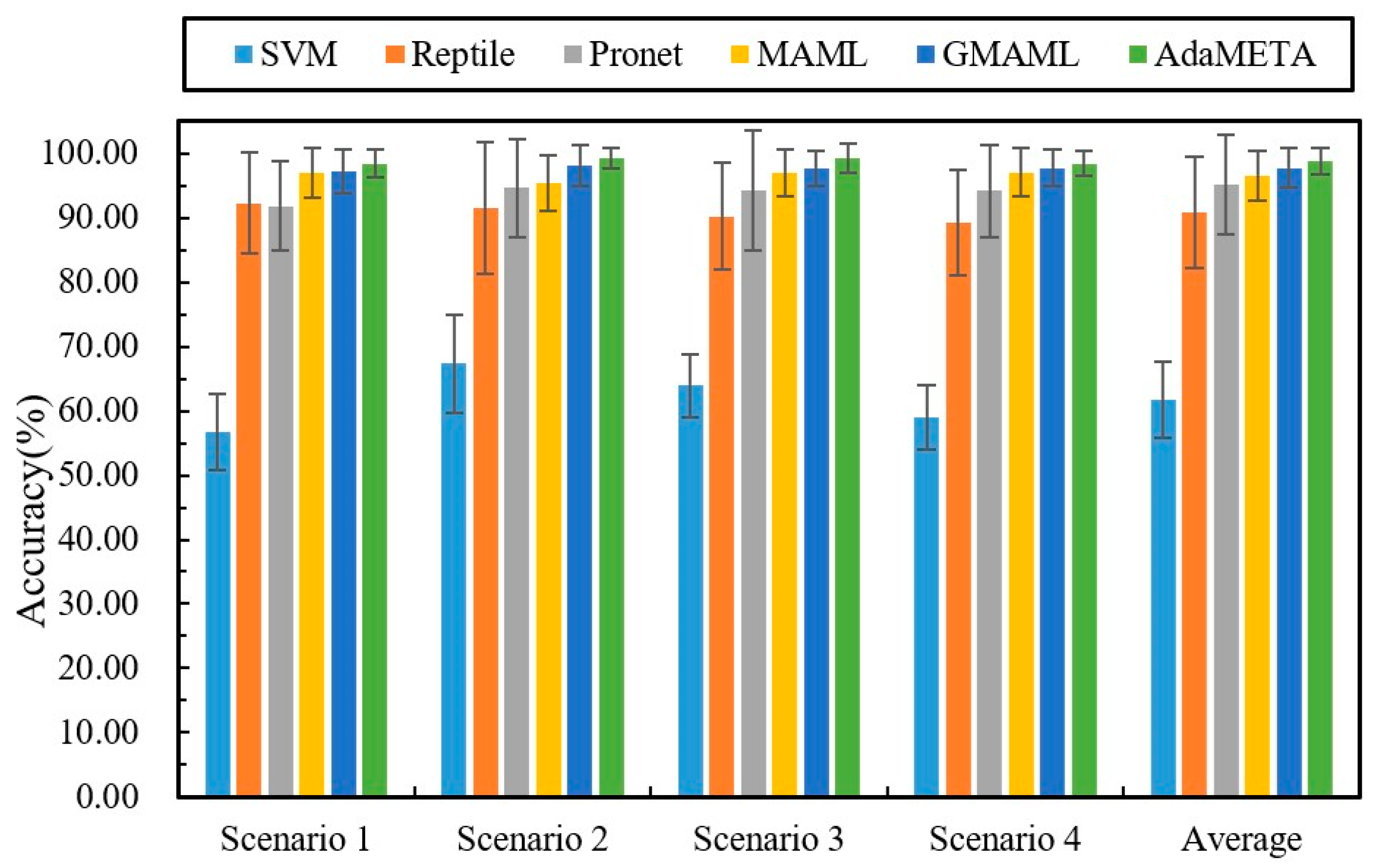

Figure 7.
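Constructing one ‘10-way 5-shot’ task from the merged source domain can be sketched as below. The function name `sample_episode`, the pool layout `{label: [samples]}`, and the number of query samples per class (`n_query`) are assumptions made for illustration; only the 10 classes and 5 support samples per class come from the configuration described above.

```python
import random

def sample_episode(pool, n_way=10, k_shot=5, n_query=5, rng=None):
    """Draw one N-way K-shot episode from {label: [samples]}.

    Returns (support, query) as lists of (sample, label) pairs.
    """
    rng = rng or random.Random()
    classes = rng.sample(sorted(pool), n_way)
    support, query = [], []
    for label in classes:
        # Sample without replacement so support and query never share a sample.
        picked = rng.sample(pool[label], k_shot + n_query)
        support += [(s, label) for s in picked[:k_shot]]
        query += [(s, label) for s in picked[k_shot:]]
    return support, query
```

Each call returns 50 support pairs for the default setting; the query pairs are the samples on which the adapted model would be scored inside the episode.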

The results show that, in comparative experiments across four typical load scenarios (Scenario 1 to Scenario 4), the proposed method achieves the highest classification accuracy across all test conditions with the smallest variation. Specifically, the accuracy rates for Scenarios 1 to 4 were 98.37 ± 2.17%, 99.16 ± 1.62%, 99.26 ± 2.31%, and 98.39 ± 1.88%, respectively, with an average accuracy of 98.8 ± 1.99%.

The comparative results demonstrate that, in the few-shot cross-domain fault diagnosis knowledge transfer setting, meta-learning frameworks significantly outperform traditional machine learning and conventional deep learning methods. Using Support Vector Machine (SVM) as a benchmark, the average diagnostic accuracy across the four load scenarios is only 61.75%, with the best performance in Scenario 2 at 67.35%. In contrast, under the same conditions, the four meta-learning models—Reptile, ProNet, MAML, and GMAML—achieved average accuracies of 90.8%, 95.2%, 96.56%, and 97.68%, respectively. This performance gap arises because meta-learning algorithms emphasize cross-task transfer and rapid adaptation during training, rather than overfitting to individual samples, enabling efficient and robust fault identification even in cases where target-domain samples are scarce.

Compared with typical meta-learning baselines such as Reptile, ProNet, and MAML, the proposed method achieves higher fault identification accuracy across four cross-load transfer scenarios. In these scenarios, the average accuracy improved by 8.0% over Reptile, 3.60% over ProNet, and 2.24% over MAML. Compared to the latest GMAML method, the proposed method improved by 1.12%. These gains stem from the method’s ability to efficiently extract shared diagnostic priors across multiple source-domain tasks and leverage a rapid adaptation mechanism to fully exploit the information potential of sparse target-domain samples, significantly enhancing cross-domain generalization. As illustrated in

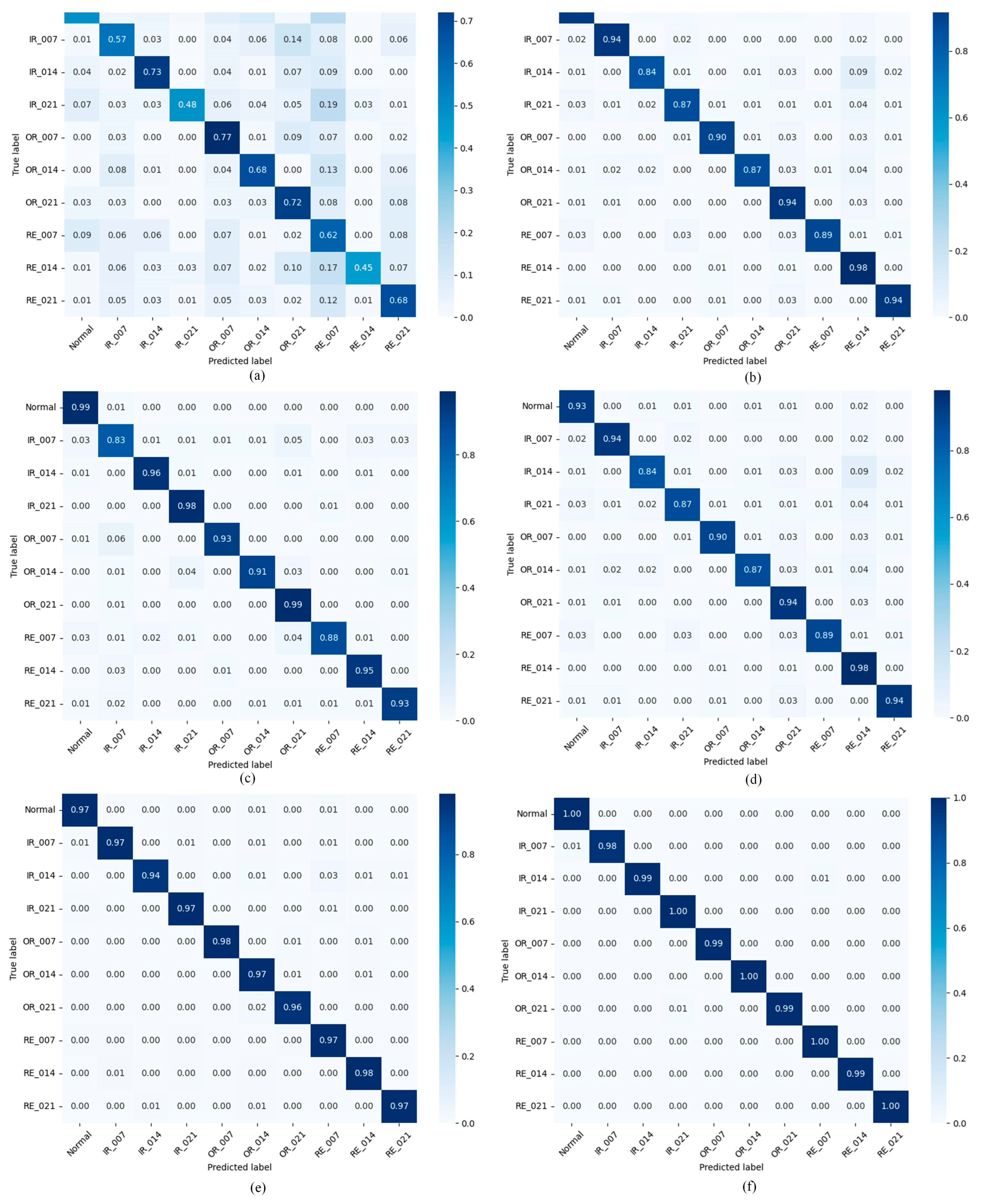

Figure 8, the 3D confusion matrix presents the specific classification results of the various methods in Scenario 2, demonstrating that the proposed method accurately identifies samples across categories.
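The averages and gains quoted in this section follow directly from the per-scenario figures; the short check below reproduces the arithmetic using only the numbers reported in the text.

```python
from statistics import mean

proposed = [98.37, 99.16, 99.26, 98.39]  # Scenarios 1 to 4, proposed method
baselines = {"Reptile": 90.8, "ProNet": 95.2, "MAML": 96.56, "GMAML": 97.68}

avg = mean(proposed)  # exact mean is 98.795, reported as 98.8
# Gains in percentage points, computed from the reported (rounded) average.
gains = {name: round(98.8 - acc, 2) for name, acc in baselines.items()}
```

The resulting gains are 8.0, 3.6, 2.24, and 1.12 percentage points over Reptile, ProNet, MAML, and GMAML, matching the values stated above.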

4.3. Ablation Experiments of the Proposed Method

To rigorously assess the contribution of each component to overall performance, this study designed ablation experiments evaluating four model combinations across four scenarios; the results are shown in Figure 9, and the specific accuracy is shown in Table 5.

The experimental data revealed significant performance differences across scenarios. In Scenario 1, 2DCNN+EMA+DT-MAML performed best, with an accuracy of 98.37 ± 2.17%, significantly outperforming the other methods. A similar trend was observed in Scenario 2, where 2DCNN+EMA+DT-MAML attained 99.16 ± 1.62% accuracy, again exceeding the other combinations. In Scenarios 3 and 4, 2DCNN+EMA+DT-MAML continued to perform strongly, achieving 99.26 ± 2.31% and 98.39 ± 1.88% accuracy, respectively, and remaining significantly higher than the models that did not include EMA or DT.

Further analysis of average performance showed that the 2DCNN-based model combining EMA and DT (i.e., 2DCNN+EMA+DT-MAML) achieved an average accuracy of 98.80 ± 1.99% across the four scenarios (the mean of the four per-scenario accuracies reported above), outperforming the other combinations. This result confirms the importance and effectiveness of EMA and DT in improving the model’s generalization and robustness. Additionally, compared with using DT or EMA alone, their joint use produced a synergistic effect, further improving model performance.

In summary, these ablation experiments clearly demonstrate the key role of EMA and DT-MAML in enhancing the performance of the proposed method and validate the effectiveness of their joint application.

4.4. Effect of Dynamic Inner-Loop Learning Rate α on Diagnostic Performance

To evaluate the impact of the dynamic inner-loop learning rate α on the model’s convergence performance, this study conducted three comparative experiments: fixed α, fixed α combined with the EMA module (fixed α + EMA), and fixed α + EMA combined with dynamic α (fixed α + EMA + dynamic α, i.e., the method proposed in this paper). The detailed information is shown in Table 6. The experimental results, as shown in Figure 10, demonstrate that the dynamic α method (blue line) rapidly reduces the loss value to 1.0 after only 15 iterations, approximately 40% faster than the fixed α + EMA method (green line), highlighting the significant role of dynamic α in accelerating convergence during the early stages of training.

Notably, the fixed α + EMA method exhibits noticeable loss fluctuations and oscillations between 15 and 65 iterations (red region), reflecting instability in the optimization process due to task conflicts, which limits the model’s rapid convergence early on. In contrast, the model using a fixed α (orange line) did not exhibit oscillations but reached a plateau after 200 iterations, with the loss value stabilizing around 0.3 and failing to decrease further. This suggests that a fixed learning rate lacks an effective adjustment mechanism, preventing optimization in the later stages of training.

In contrast, the dynamic scheduling strategy effectively mitigates these issues, avoiding oscillations while continuously driving model optimization in the later stages of training, ultimately reducing the loss value to a minimum of 0.1. These results demonstrate the critical role of dynamic learning rate scheduling in improving the model convergence speed, avoiding oscillations, and driving optimization in the later stages of training, which positively impacts the model’s generalization performance. The experimental results confirm the significant value of dynamic learning rate in cross-domain fault diagnosis tasks from both theoretical and practical perspectives.
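The schedule evaluated here belongs to the method description and is not reproduced in this section. Purely to illustrate what a task-dependent inner-loop step size can look like, the sketch below uses a hypothetical rule that scales a base rate by the clipped ratio of the current task loss to a reference loss. The rule, the function names, and all default values (`alpha_base`, `loss_ref`, `lo`, `hi`) are assumptions and should not be read as the AdaMETA schedule.

```python
def dynamic_alpha(task_loss, alpha_base=0.01, loss_ref=1.0, lo=0.5, hi=2.0):
    """Hypothetical rule: scale the base rate by the clipped ratio task_loss / loss_ref."""
    scale = min(hi, max(lo, task_loss / loss_ref))
    return alpha_base * scale

def inner_adapt(w, grad_fn, loss_fn, steps=3, **alpha_kwargs):
    """Run a few inner-loop steps, recomputing the step size from the current loss."""
    for _ in range(steps):
        alpha = dynamic_alpha(loss_fn(w), **alpha_kwargs)
        w = w - alpha * grad_fn(w)
    return w
```

With such a rule, tasks that currently incur a high loss take larger inner steps, and the step size shrinks as the loss falls; the clipping bounds keep the step within a fixed range of the base rate.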

4.5. Comparison of Algorithm Effect in Different Noise Environments

To verify the robustness and generalization ability of the proposed small-sample cross-domain bearing fault diagnosis method in real industrial environments, this study further carried out experiments under noise interference. Specifically, Gaussian white noise of different intensities was added to the bearing vibration signals to simulate the sensor measurement errors and environmental disturbances of a real production environment. Gaussian white noise is a random signal with a flat power spectrum whose amplitude follows a Gaussian distribution, with probability density function $f(x) = \frac{1}{\sqrt{2\pi\sigma^{2}}}\exp\left(-\frac{(x-\mu)^{2}}{2\sigma^{2}}\right)$, where $\mu$ denotes the mean of the noise and $\sigma^{2}$ denotes the variance.

In the experiments, the diagnostic accuracy of the proposed method is compared with that of four classical methods, namely, Reptile, ProNet, RelationNet, and MAML, in multiple cross-domain scenarios using different signal-to-noise ratios (SNRs) from −6 dB to 6 dB. The experimental results are shown in Figure 11. As the noise intensity increases (i.e., the SNR value decreases), the accuracy of each diagnostic method tends to decrease. However, the method proposed in this paper consistently exhibits stronger noise immunity and is able to maintain the highest diagnostic accuracy under all noise levels with a slower and more stable decreasing trend. This indicates that the method proposed in this paper can effectively suppress noise interference, demonstrates significant robustness and generalization performance, and is more suitable for the complex and changing environments in real industrial scenarios.
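Noise injection at a prescribed SNR can be sketched as follows, using the standard definition SNR (dB) = 10·log10(P_signal / P_noise) and zero-mean noise. The function names and the sinusoidal test signal are assumptions for illustration; the exact injection procedure used in the experiments is not specified in this section.

```python
import math
import random

def add_awgn(signal, snr_db, rng=None):
    """Add zero-mean Gaussian white noise so the result has the requested SNR in dB."""
    rng = rng or random.Random()
    p_signal = sum(x * x for x in signal) / len(signal)
    p_noise = p_signal / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(p_noise)  # for zero-mean noise the variance equals the noise power
    return [x + rng.gauss(0.0, sigma) for x in signal]

def measured_snr_db(clean, noisy):
    """Empirical SNR of a noisy signal relative to its clean reference."""
    p_signal = sum(x * x for x in clean) / len(clean)
    p_noise = sum((n - c) ** 2 for c, n in zip(clean, noisy)) / len(clean)
    return 10.0 * math.log10(p_signal / p_noise)
```

At −6 dB the noise power is roughly four times the signal power, which is why accuracy at the low end of the tested range is the most demanding case.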

4.6. t-SNE Visualization

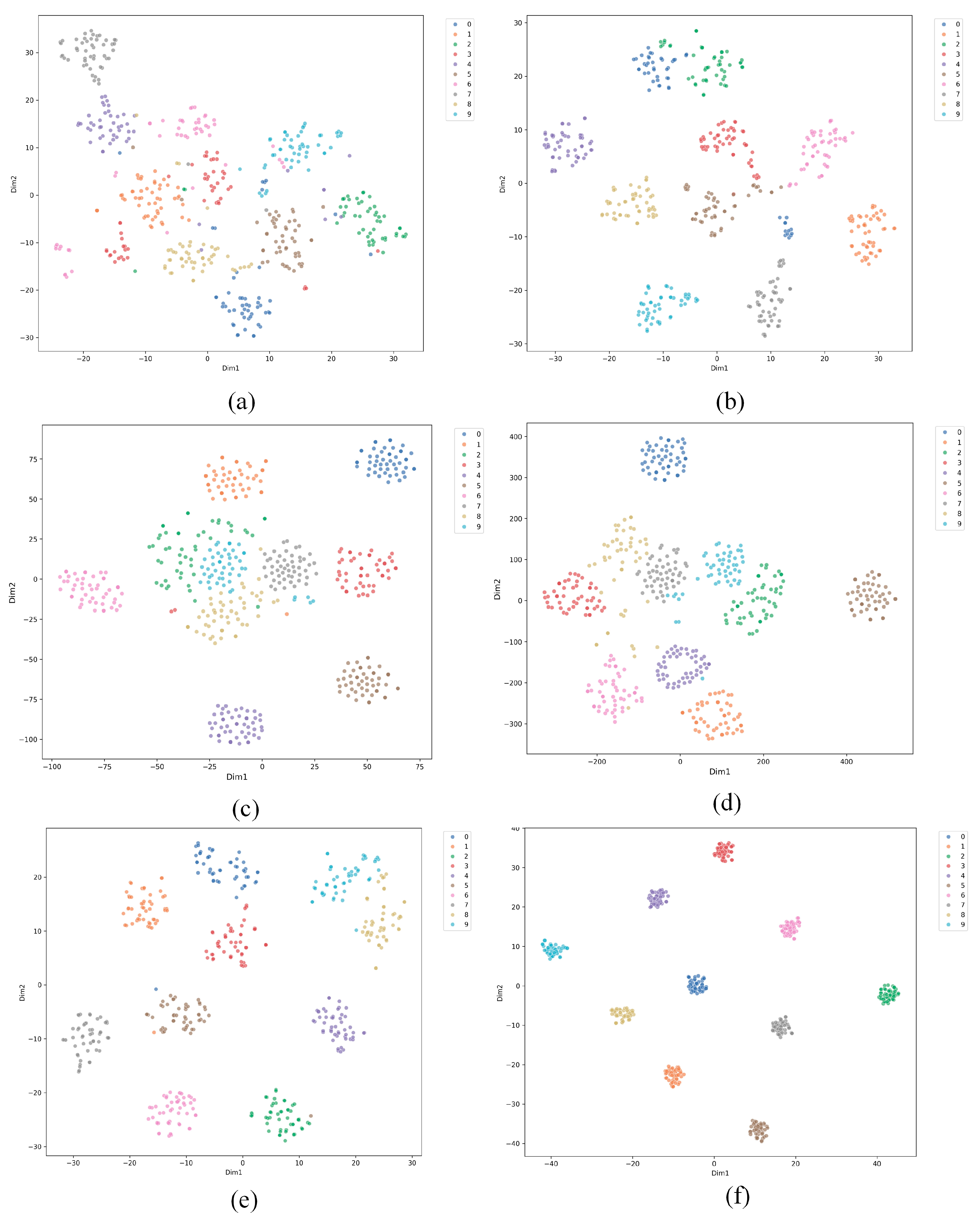

Figure 12 presents the t-SNE results for six methods applied to small-sample cross-domain fault diagnosis of bearings. Each sub-figure shows a different method with data points colored by class. (a) shows the results for SVM, with widely spread data points and some class overlap, indicating difficulty in handling the task. (b) shows the results for Reptile, with more distinct clusters but still some class mixing, suggesting better performance than SVM but challenges with small samples. (c) and (d) show the results for Prototypical Networks (ProtoNet) and Relation Networks (RelationNet), both showing more compact clusters and reduced overlap, though some intersections remain. (e) shows the results for MAML, with better separation and less overlap, indicating strong adaptability to small-sample cross-domain tasks. (f) shows the results for the proposed method, which achieves the best clustering with minimal overlap, demonstrating superior performance in handling small-sample cross-domain fault diagnosis. Overall, while traditional methods such as SVM struggle with class separation, newer meta-learning approaches, especially the proposed method, significantly improve the handling of small-sample cross-domain tasks.

4.7. Comparison of Attention Mechanisms

To validate the superiority of the Efficient Multi-scale Attention (EMA) module in few-shot cross-domain diagnostic tasks, this section presents comparison experiments with mainstream attention mechanisms. Under an identical AdaMETA framework, network architecture, and training settings, we replace the C-EMA module with two widely used classical attention mechanisms for comparison:

Squeeze-and-Excitation Network (SENet) [43]: A classic channel attention mechanism that performs squeezing via global average pooling and constructs inter-channel dependencies using fully connected layers.

Convolutional Block Attention Module (CBAM) [40]: A hybrid attention mechanism that sequentially applies channel attention followed by spatial attention.
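For reference, the squeeze-and-excitation computation described above can be written in a few lines of plain Python. This is a sketch under assumptions: the feature map is a nested list of shape [C][H][W], bias terms are omitted, and the weight matrices passed in are hypothetical placeholders rather than trained parameters.

```python
import math

def se_attention(fmap, w_reduce, w_expand):
    """Squeeze-and-excitation over a feature map given as [C][H][W] nested lists.

    w_reduce: [C // r][C] weights of the bottleneck layer (followed by ReLU).
    w_expand: [C][C // r] weights of the expansion layer (followed by sigmoid).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: fully connected -> ReLU -> fully connected -> sigmoid.
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w_reduce]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w_expand]
    # Scale: reweight every channel by its gate.
    scaled = [[[g * v for v in r] for r in ch] for g, ch in zip(gates, fmap)]
    return scaled, gates
```

The bottleneck (`w_reduce` maps C channels to C // r) is the dimensionality reduction step that the performance discussion in this section refers to when contrasting SENet with EMA.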

The experiments are conducted in the most representative cross-load Scenario 2 (source domains: $D_0$, $D_1$, $D_3$ → target domain: $D_2$), with the task setting of 10-way 5-shot. All comparison methods employ the same dynamic learning rate strategy (DT-MAML) to ensure fairness. The comparison results are shown in Table 7.

The analysis of Table 7 leads to a clear conclusion: the EMA module we adopted achieves the best diagnostic performance while introducing the lowest computational overhead.

Performance Advantage: The accuracy and stability of EMA are significantly higher than those of SENet and CBAM. We attribute its advantage primarily to the fact that EMA does not involve dimensionality reduction and employs multi-scale grouping. SENet uses fully connected layers for dimensionality reduction in channel attention, which may lead to information loss. In contrast, the EMA module avoids any form of dimensionality reduction, preserving the integrity of channel information to the greatest extent. Additionally, by processing features through grouping and integrating multi-scale receptive fields, EMA is more flexible in capturing multi-scale patterns in fault signals than the single-scale CBAM.

Efficiency Advantage: As shown in Table 7, the additional parameter count (ΔParams) and computational load (ΔFLOPs) of the EMA module are much lower than those of CBAM and significantly lower than those of SENet. This advantage stems from EMA’s compact group structure and parallel path design, which achieves powerful attention effects through efficient intra-group cross-channel interaction, without requiring complex submodules (such as the spatial attention in CBAM) or fully connected layers (as in SENet).

In conclusion, this comparison experiment robustly demonstrates, from both performance and efficiency perspectives, that the EMA module is a more competitive choice than SENet and CBAM for the few-shot cross-domain bearing fault diagnosis task in this paper, achieving the best balance between performance and complexity.

4.8. Cross-Sensor Location Generalization Capability Verification Experiment

To further validate the generalization ability of the AdaMETA framework under different distribution shifts, this section presents a novel and more challenging experiment: fault diagnosis across sensor locations. This experiment simulates a common industrial scenario, where a model trained at one location (e.g., the drive end) is required to effectively diagnose faults at a different location (e.g., the fan end) with only a few samples.

4.8.1. Experimental Setup and Data Partitioning

This experiment is based on the CWRU dataset, utilizing the vibration data collected simultaneously from both the drive end (DE) and fan end (FE). Although the same bearing system is monitored, inherent differences in vibration signals arise due to variations in the mechanical sensor mounting positions, including differences in signal propagation paths, attenuation characteristics, and signal-to-noise ratios. These variations result in significant data distribution shifts, providing an ideal and realistic “cross-domain” validation platform.

Source Domain ($D_s$): The data collected from the drive end (DE) under four different load conditions (0, 1, 2, 3 hp) are selected. This forms a diverse source domain designed to help the model learn drive-end fault features that are independent of load conditions.

Target Domain ($D_t$): The data collected from the fan end (FE) under the same four load conditions are selected. The key setup here is that, in the target domain, we simulate an extreme small-sample scenario, where only five samples (i.e., 5-shot) are provided for each fault category (10 categories in total) to adapt the model. The remaining fan end samples are used for testing.

Task Construction: We follow the 10-way 5-shot meta-learning task format as outlined in Section 4.1.2. Each training task is randomly sampled from the diverse loads in the source domain (DE), while adaptation and evaluation are conducted on the small sample set from the target domain (FE). The data preprocessing pipeline (1024-sample sliding window and STFT conversion to time–frequency spectrograms) is consistent with the main experiment to ensure fairness in comparisons.

This “DE → FE” transfer setup is significantly more challenging than the previous cross-load transfer. It not only involves load variation but also introduces more fundamental signal characteristic changes due to the physical location difference of the sensors.

4.8.2. Cross-Location Diagnostic Results and Analysis

We compared the proposed AdaMETA method with a series of baseline methods in this new scenario, and the results are shown in Table 8.

The analysis of Table 8 leads to the following conclusions:

- (a) Task Challenge: The average accuracy of all methods shows a significant decline compared to the cross-load experiment in Section 4.2. This confirms that the distribution differences caused by cross-sensor locations are more severe than simple load variations.

- (b) Outstanding Generalization of AdaMETA: The proposed AdaMETA method still achieves the best performance in this new scenario, with an accuracy of 97.63%, which is significantly higher than the other comparison methods. Compared to the strong baseline GMAML, our method provides an improvement of approximately 2.75%.

- (c) Stability Demonstration: AdaMETA also achieves the lowest standard deviation (3.29%), indicating that our method exhibits stronger robustness and stability when facing complex distribution shifts caused by location changes, making it less sensitive to random task sampling.