On the Equivalence of Gibbs, Boltzmann, and Thermodynamic Entropies in Equilibrium and Nonequilibrium Scenarios

Abstract

1. Introduction

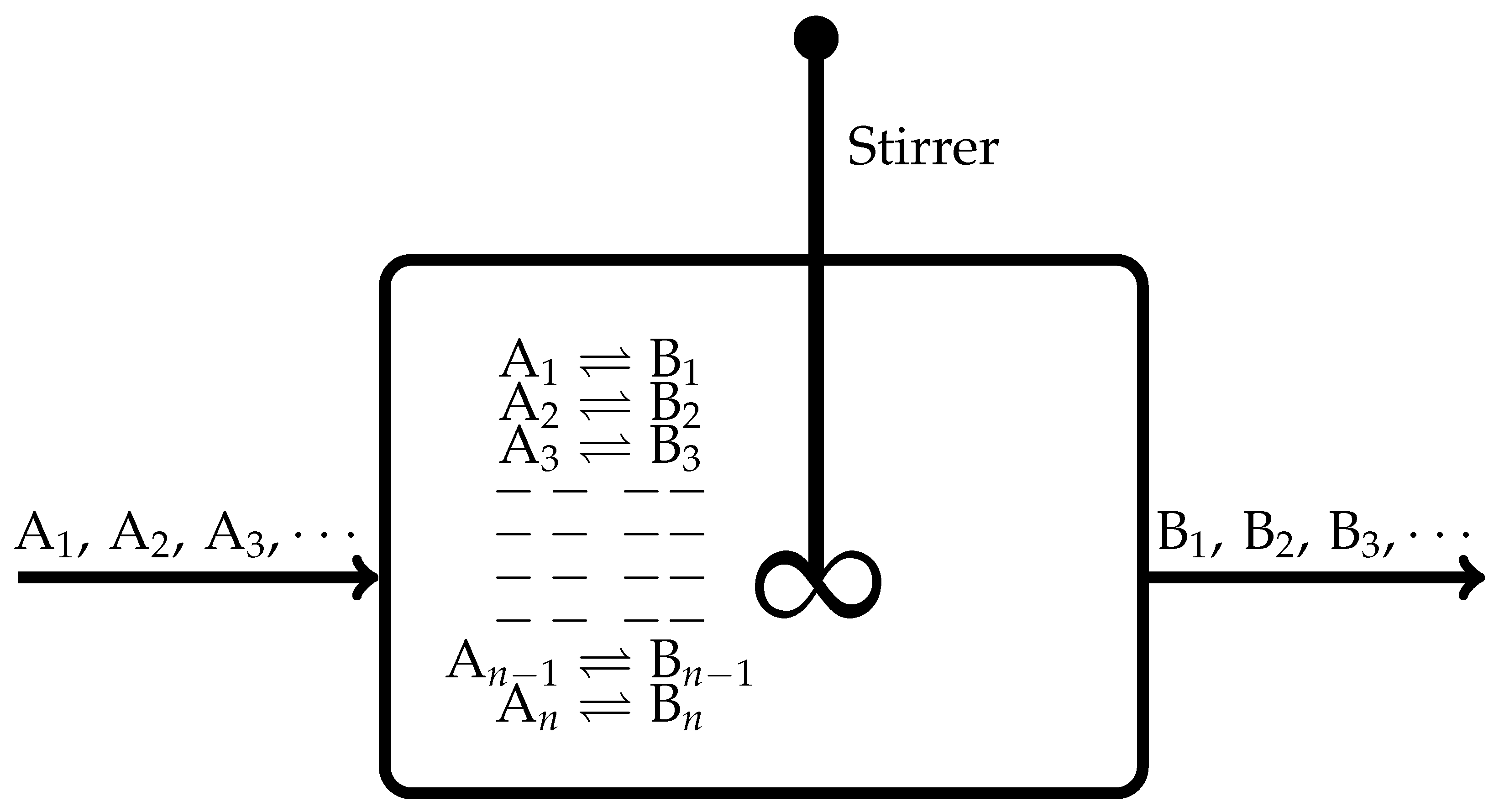

2. Continuously Stirred Tank Reactor with Several Independent Chemical Reactions

- When all the flows are stopped, the CSTR depicted in Figure 1 evolves towards an equilibrium state. During this evolution, according to the De Donderian equation [20], we have the following functional dependence:

  $$S(t) = S\big(U(t),\, V(t),\, \{\xi_r(t)\}\big) \qquad (1)$$

  where $S$ is the entropy, $U$ is the internal energy, $V$ is the volume of the reactor, $\xi_r$ are the respective extents of advancement of the chemical reactions identified by the running subscript $r$, and $t$ is time.

- When this CSTR attains an equilibrium state, the functional dependence of Equation (1) transforms to the following:

  $$S = S(U, V) \qquad (2)$$

  That is, now we have $\xi_r = \xi_r(U, V)$ for all $r$, implying that the extents of advancement do not remain independent thermodynamic variables in the case of chemically reacting systems.

- On attainment of a nonequilibrium steady state, the functional dependence of Equation (1) becomes time-independent:

  $$S = S\big(U,\, V,\, \{\xi_r\}\big) \qquad (3)$$
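The difference between the equilibrium state and the nonequilibrium steady state of a CSTR can be illustrated numerically. The following is a minimal sketch, assuming a single reversible reaction A ⇌ B with mass-action kinetics; the rate constants `kf` and `kr`, the feed concentration `ca_in`, and the flow parameter `q` are hypothetical values chosen for illustration and do not come from the paper.

```python
def simulate_cstr(q, kf=2.0, kr=1.0, ca_in=1.0, dt=1e-3, t_end=50.0):
    """Integrate A <=> B in a well-stirred tank with explicit Euler steps.

    q is the volumetric flow rate divided by the reactor volume
    (an assumed parameter); q = 0 means that all flows are stopped.
    """
    ca, cb = ca_in, 0.0                   # start from the feed composition
    for _ in range(int(t_end / dt)):
        rate = kf * ca - kr * cb          # net reaction rate (rate of advancement per unit volume)
        ca += dt * (q * (ca_in - ca) - rate)
        cb += dt * (-q * cb + rate)
    return ca, cb, kf * ca - kr * cb      # final composition and final net rate


# Flows stopped: the composition relaxes to chemical equilibrium.
ca_eq, cb_eq, rate_eq = simulate_cstr(q=0.0)
# Flows maintained: the composition becomes time-independent away from equilibrium.
ca_ss, cb_ss, rate_ss = simulate_cstr(q=1.0)

print(f"equilibrium:  cB/cA = {cb_eq / ca_eq:.4f}, net rate = {rate_eq:.2e}")
print(f"steady state: cB/cA = {cb_ss / ca_ss:.4f}, net rate = {rate_ss:.4f}")
```

In this toy model both final states are time-independent, but only the equilibrium state has a vanishing net reaction rate. In the steady state the reaction keeps advancing at a constant rate that is balanced by the flows.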

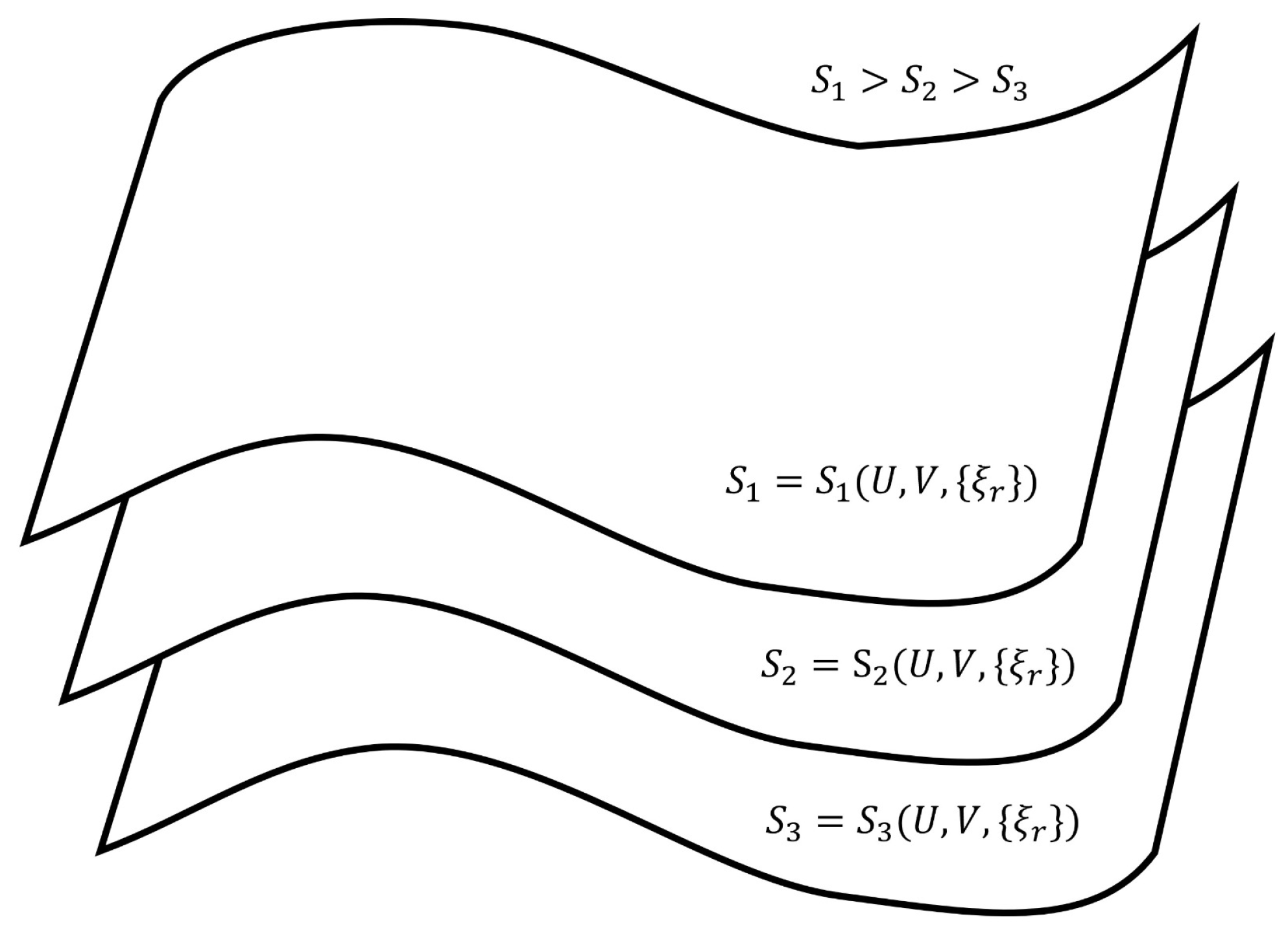

3. Geometrical Aspects of Thermodynamic Entropy Based on Isentropic Hypersurfaces Housing Nonequilibrium Steady States

- The paths through a succession of nonequilibrium steady states of a given hypersurface of Figure 2 are the reversible isentropic paths. Their thermodynamic description in the De Donderian setting [20] reads as follows:

  $$T\,dS = dU + p\,dV + \sum_r \mathcal{A}_r\,d\xi_r = 0$$

  where $p$ is the pressure, $T$ is the temperature, and $\mathcal{A}_r$ are the respective chemical affinities.

  The paths through a succession of nonequilibrium steady states across the hypersurfaces in Figure 2 are also reversible, but they are non-isentropic because the entropy does not remain constant. Their description in the present case is as follows:

  $$T\,dS = dU + p\,dV + \sum_r \mathcal{A}_r\,d\xi_r \neq 0 \qquad (7)$$

  Recall that, by definition, a thermodynamically reversible path is a limiting case that is never realized in practice; hence, such paths are termed quasi-static paths, which, in principle, can be traversed in either direction. Also, since such paths are prescribed as the ones connecting infinitesimally close successions of time-independent nonequilibrium states, they cannot be termed irreversible paths, even though the rate of entropy production remains non-zero. Keizer earlier introduced such reversible paths through a succession of nonequilibrium steady states [27,28,29] in his version of the statistical thermodynamics of nonequilibrium processes, which is based on the fluctuations of nonequilibrium steady states. On such paths, the complete compensation of the rate of entropy driven out and the rate of entropy production, as stated in Equation (5), is maintained.

  (Biological systems are often cited as an example that approaches a reversible path through a succession of nonequilibrium steady states. Biochemical reactions in biological cells, which are usually modelled as taking place in a CSTR, operate under nonequilibrium steady-state conditions, and other physico-chemical processes of a biological system are often cooperatively associated with them. It has been observed that a biological system cannot always maintain the same nonequilibrium steady state continuously; instead, it reversibly shifts to a new nonequilibrium steady state and gradually returns to the original one. This transition is considered very close to a reversible passage through nonequilibrium steady states. That is why the other biological processes dependent on this nonequilibrium steady state remain practically unhampered.)

  On the other hand, the isentropic planes depicted in Figure 3 consist of equilibrium states. Therefore, when thermodynamically reversible paths through a succession of equilibrium states are prescribed, no entropy production exists on such paths. Hence, in this case, the non-isentropic paths are described by Equation (7) with vanishing affinities, $\mathcal{A}_r = 0$, whereas the isentropic paths follow $dS = 0$, with the following explicit description:

  $$dU + p\,dV = 0$$
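The compensation between the rate of entropy driven out and the rate of entropy production can be written out with the standard entropy balance of irreversible thermodynamics. The following is a sketch in the usual Prigogine-style notation, where $d_eS$ (entropy exchanged with the surroundings) and $d_iS$ (entropy produced internally) are symbols assumed here; they may differ from the notation of Equation (5).

```latex
% General entropy balance; the second law constrains only the production term
\frac{dS}{dt} = \frac{d_eS}{dt} + \frac{d_iS}{dt},
\qquad \frac{d_iS}{dt} \ge 0 .

% Nonequilibrium steady state: S is time-independent, so production is
% exactly compensated by the entropy driven out
\frac{dS}{dt} = 0
\;\Longrightarrow\;
\frac{d_iS}{dt} = -\frac{d_eS}{dt} > 0 .

% Equilibrium state: both terms vanish separately
\frac{d_iS}{dt} = 0, \qquad \frac{d_eS}{dt} = 0 .
```

Read this way, a path through neighbouring steady states keeps the first compensation intact at every point, whereas a path through equilibrium states has no entropy production to compensate.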

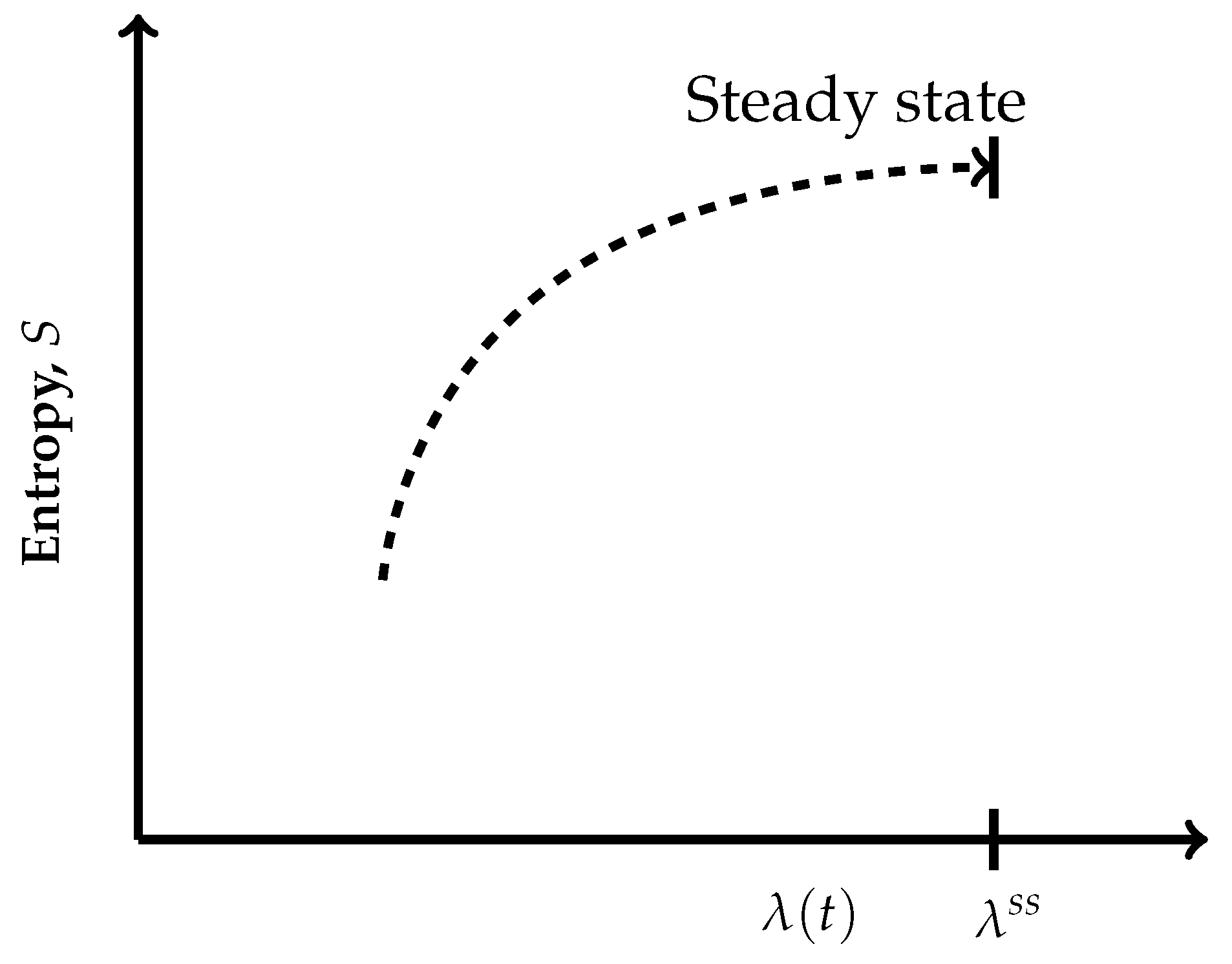

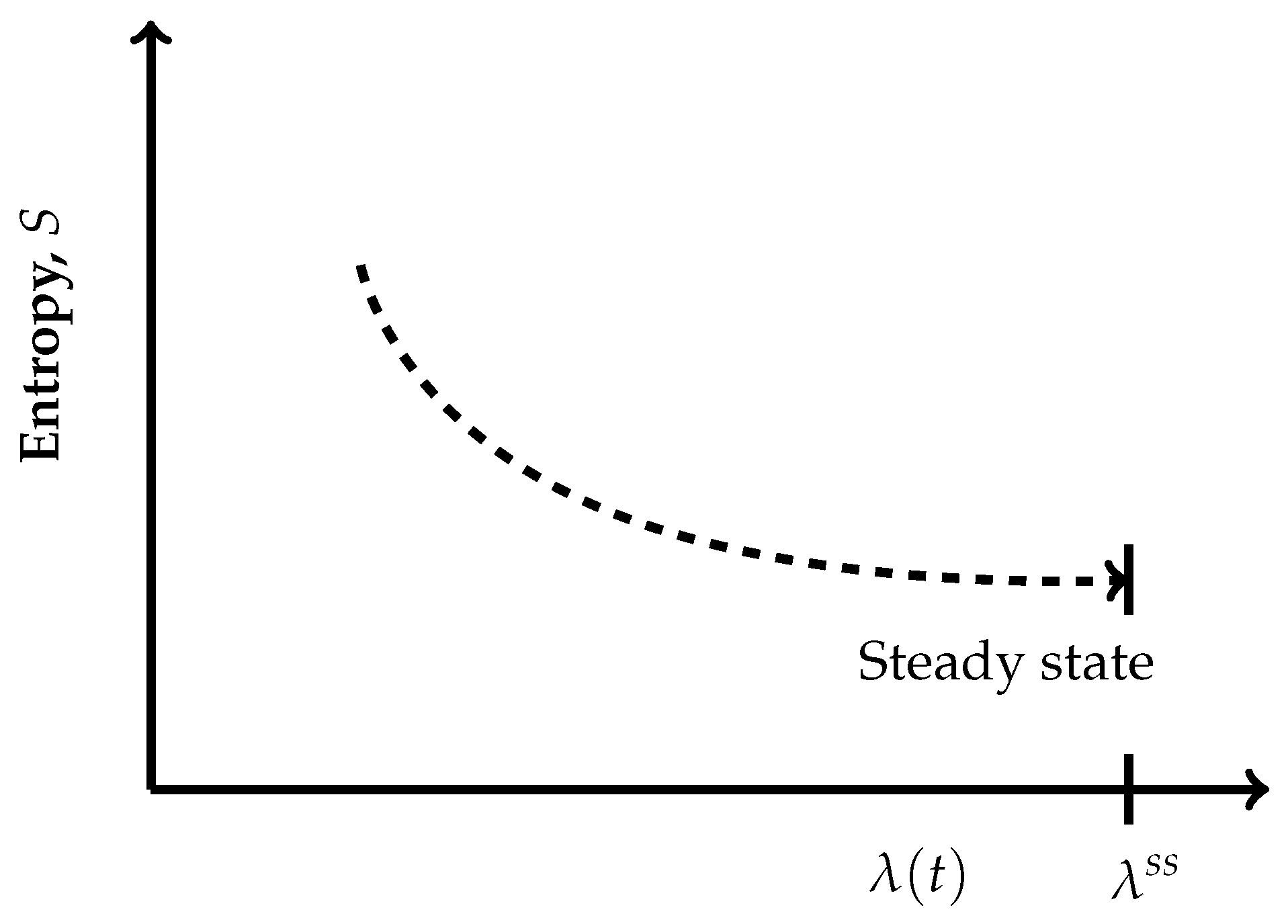

- The thermodynamically irreversible trajectories are the ones in which the system leaves an isentropic hypersurface (an isentropic plane), attains a nearby time-dependent nonequilibrium state, and thereafter marches through a succession of time-dependent nonequilibrium states. They are of two types: (1) trajectories whose end state is a time-independent state (a nonequilibrium steady state or an equilibrium state), and (2) all trajectories that do not converge to a steady state. In the first category, the system ultimately attains a time-independent state. At constant $U$ and $V$, the entropy can vary in two ways during the approach to this final state. Recall that, by definition, on an isentropic hypersurface there is an exact compensation of entropy production and entropy driven out, as described in Equation (5). Therefore, to initiate an irreversible process, the system has to leave an isentropic hypersurface. This happens only when the balance between the rate of entropy production and the rate of entropy driven out breaks down. If the rate of entropy driven out dominates over the rate of entropy production, the system follows a trajectory of decreasing entropy, whereas if the rate of entropy production dominates over the rate of entropy driven out, the entropy of the system increases during the corresponding evolution. At this stage of our discussion, we introduce a parameter that quantifies the said imbalance between the rate of entropy production and the rate of entropy driven out. This parameter differs from the extent of advancement of reaction, $\xi_r$, because the said imbalance also contains the contributions made to the rate of entropy production and the rate of entropy driven out by the non-steady-state inflow and outflow of reactant and product molecules.
When entropy is decreasing, the operative and simple expression very close to the nonequilibrium steady state is , whereas in the case of increasing entropy, we have the simple expression . However, when the system is attaining an equilibrium state, implying that the rate of entropy exchange remains zero, we have the expression . In the above three expressions, $k$ is the kinetic constant, and in the first two expressions, the superscript denotes a nonequilibrium steady state. Moreover, it is easy to see that as the nonequilibrium steady state is approached, the existence time of the time-dependent nonequilibrium states keeps increasing and becomes sufficiently long in its close vicinity. The corresponding trajectories near a steady state appear as depicted in Figure 4 and Figure 5. At the steady state itself, Equation (9) holds, which is the condition of extremization of entropy at the nonequilibrium steady state. When the final state is an equilibrium state, the variation of entropy will be as depicted in Figure 4, but then it requires the use of and instead of and in this figure, re-expressing Equation (9) as . However, away from the position on these curves, say at , we have , and away from an equilibrium state, at a position on the curve of Figure 4, this condition reads as . This illustrates that there is no possibility at all of having and for a time-dependent nonequilibrium state, even in situations that are in very close proximity to a steady state. Hence, at the phenomenological level, we can claim neither that such states are of maximum entropy nor that they are of minimum entropy with regard to their neighboring states on the trajectory.
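The qualitative behaviour near a steady state can be sketched numerically. The example below assumes a first-order relaxation law, $dS/dt = -k\,(S - S^{ss})$, which is a hypothetical stand-in chosen for illustration and is not claimed to be the expression used in the paper; the names `relax`, `s0`, and `s_ss` are likewise illustrative.

```python
import math


def relax(s0, s_ss, k, t):
    """Entropy and its rate of change under the ASSUMED law dS/dt = -k (S - S_ss)."""
    s = s_ss + (s0 - s_ss) * math.exp(-k * t)
    return s, -k * (s - s_ss)


times = [0.0, 1.0, 2.0, 4.0]
# Entropy driven out dominates: approach to the steady state from above.
from_above = [relax(1.2, 1.0, 1.0, t) for t in times]
# Entropy production dominates: approach to the steady state from below.
from_below = [relax(0.8, 1.0, 1.0, t) for t in times]

for t, (s_hi, r_hi), (s_lo, r_lo) in zip(times, from_above, from_below):
    print(f"t={t:3.1f}  S_above={s_hi:.4f} (rate {r_hi:+.4f})  "
          f"S_below={s_lo:.4f} (rate {r_lo:+.4f})")
```

Under this assumed law the rate of entropy change shrinks in magnitude as the steady state is approached, so the system lingers longer in each successive state, yet the rate is never zero at any finite time. Only the steady state itself satisfies a stationarity condition.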

4. Statistical Mechanical Versus Phenomenological Aspects and the Question of Equivalence of Entropies

5. A Brief Note on Natural Fluctuations

6. Concluding Remarks

1. In determining the equivalence between the thermodynamic and the statistical mechanically defined entropies, a crucial role is played by the ability of the system to visit all its microstates, that is, its ability to obey ergodicity.

2. The requirement stated above is strictly met not only when a system is in an equilibrium state but also when it is in a nonequilibrium steady state. This is so because both are time-independent states. Thus, even though a nonequilibrium steady state belongs to the nonequilibrium regime, the equivalence between the Gibbs and thermodynamic entropies is guaranteed. Of course, the equivalence of the Boltzmann entropy additionally demands the absence of non-ideality.

3. In the case of time-dependent nonequilibrium states with an exceedingly small magnitude of , the requirement stated in 1 above is practically met; hence, for them, the equivalence between the thermodynamic and Gibbs entropies exists.

4. For all time-dependent nonequilibrium states belonging to considerably large magnitudes of , the condition of ergodicity is not met. Although the Boltzmann and Gibbs entropies can still be calculated, their practical utility remains doubtful, and hence their equivalence with the thermodynamic entropy is not possible. However, in practice, we measure thermodynamic parameters to a fair degree of confidence in such systems. This means that the system executes a time averaging within the existence time of the nonequilibrium state. During this period, the members of the or W that get sampled cannot be identified beforehand. Hence, it is not clear how to perform the commensurate ensemble averaging.

5. Thus, except for equilibrium states, nonequilibrium steady states, and the time-dependent nonequilibrium states with exceedingly small magnitudes of , the Jaynes maximum entropy estimate should not be executed on the Boltzmann and Gibbs entropies because of their uncertain practical utility. Even if it is executed, its practical utility will need to be weighed properly. A similar situation might exist in other scientific fields wherein the Jaynes maximum entropy estimate is employed; hence, caution needs to be exercised.
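The relation between the two statistical entropies can be illustrated with a small numerical sketch. It assumes the Gibbs entropy in the form $-k\sum_i p_i \ln p_i$ and the Boltzmann entropy in the counting form $k \ln W$; these textbook definitions are an assumption about the forms intended in the paper, and the distributions below are invented for illustration. Entropies are reported in units of $k$.

```python
import math


def gibbs_entropy(probs, k=1.0):
    """Gibbs entropy -k * sum(p ln p) over a discrete set of microstates."""
    return -k * sum(p * math.log(p) for p in probs if p > 0.0)


def boltzmann_entropy(w, k=1.0):
    """Boltzmann entropy k ln W for W equally probable microstates."""
    return k * math.log(w)


W = 1000
# All W microstates equally likely: the situation an ergodic system samples.
uniform = [1.0 / W] * W
# Hypothetical non-uniform sampling: one microstate is visited half of the time.
skewed = [0.5] + [0.5 / (W - 1)] * (W - 1)

s_boltzmann = boltzmann_entropy(W)
s_gibbs_uniform = gibbs_entropy(uniform)
s_gibbs_skewed = gibbs_entropy(skewed)

print(f"k ln W              = {s_boltzmann:.4f}")
print(f"Gibbs (uniform)     = {s_gibbs_uniform:.4f}")
print(f"Gibbs (non-uniform) = {s_gibbs_skewed:.4f}")
```

With equal probabilities the two expressions coincide, and the uniform distribution is also the one that maximizes the Gibbs entropy when normalization is the only constraint. When the microstates are sampled unevenly, the Gibbs value falls below $k \ln W$, so a calculation that presumes full sampling of the microstates would overestimate the entropy.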

Abbreviations

| Abbreviation | Definition |
|---|---|
| CSTR | Continuously stirred tank reactor |

References

1. Hill, T.L. An Introduction to Statistical Thermodynamics, 1st ed.; Addison-Wesley Publishing Company, Inc.: Reading, MA, USA, 1960.

2. Fowler, R.; Guggenheim, E.A. Statistical Thermodynamics. A Version of Statistical Mechanics for Students of Physics and Chemistry; Cambridge University Press: Cambridge, UK, 1956.

3. Tolman, R.C. The Principles of Statistical Mechanics, revised ed.; Dover Publications: New York, NY, USA, 2010; ISBN 978-0486638966.

4. McQuarrie, D.A. Statistical Mechanics, 1st ed.; Harper and Row: New York, NY, USA, 1976; ISBN 978-1-891389-15-3.

5. Jaynes, E.T. Gibbs vs. Boltzmann Entropies. Am. J. Phys. 1965, 33, 391–398.

6. De Groot, S.R.; Mazur, P. Non-Equilibrium Thermodynamics; North Holland: Amsterdam, The Netherlands, 1962.

7. Jaynes, E.T. Information Theory and Statistical Mechanics. Phys. Rev. 1957, 106, 620–630.

8. Jaynes, E.T. Information Theory and Statistical Mechanics. II. Phys. Rev. 1957, 108, 171–190.

9. Prigogine, I. Introduction to Thermodynamics of Irreversible Processes; John Wiley-Interscience: New York, NY, USA, 1967.

10. Haase, R. Thermodynamics of Irreversible Processes; Addison-Wesley: Reading, MA, USA, 1969.

11. Rastogi, R.P. Introduction to Non-Equilibrium Physical Chemistry. Towards Complexity and Non-Linear Sciences; Elsevier: Amsterdam, The Netherlands, 2008.

12. Lebon, G.; Jou, D.; Casas-Vázquez, J. Understanding Non-Equilibrium Thermodynamics. Foundations, Applications, Frontiers; Springer: Berlin/Heidelberg, Germany, 2008.

13. Andresen, B. Finite-Time Thermodynamics. Ph.D. Thesis, Physics Laboratory II, University of Copenhagen, Copenhagen, Denmark, 1983.

14. Andresen, B. Tools of Finite-Time Thermodynamics. 2003. Available online: https://www.semanticscholar.org/paper/TOOLS-OF-FINITE-TIME-THERMODYNAMICS-Andresen/c8cf8c2ac0f16ac35e8689e1d4682d40eccc9831 (accessed on 2 February 2007).

15. Andresen, B. Aktuelle Trends in der Thermodynamik in Endlicher Zeit. Angew. Chem. 2011, 123, 2742–2757.

16. Salamon, P.; Andresen, B.; Berry, R.S. Thermodynamics in Finite Time. II. Potentials for Finite-Time Processes. Phys. Rev. A 1977, 15, 2094–2102.

17. Glasstone, S. Thermodynamics for Chemists; Princeton: New Jersey, NJ, USA, 1967.

18. Blinder, S.M. Advanced Physical Chemistry; Collier-Macmillan: New York, NY, USA, 1969.

19. Atkins, P.; de Paula, J. Atkins’ Physical Chemistry, 8th ed.; W. H. Freeman and Company: New York, NY, USA, 2006.

20. Prigogine, I.; Defay, R. Chemical Thermodynamics; Everett, D.H., Translator; Longmans Green: London, UK, 1954.

21. Bhalekar, A.A. On the Generalized Phenomenological Irreversible Thermodynamic Theory (GPITT). J. Math. Chem. 1990, 5, 187–196.

22. Bhalekar, A.A. Measure of Dissipation in the Framework of Generalized Phenomenological Irreversible Thermodynamic Theory (GPITT). In Proceedings of the 1992 International Symposium on Efficiency, Costs, Optimization and Simulation of Energy Systems (ECOS’92), Zaragoza, Spain, 15–18 June 1992; Valero, A., Tsatsaronis, G., Eds.; American Society of Mechanical Engineers: New York, NY, USA, 1992; pp. 121–128.

23. García-Colín, L.S.; Bhalekar, A.A. Recent Trends in Irreversible Thermodynamics. Proc. Pakistan Acad. Sci. 1997, 34, 35–58.

24. Bhalekar, A.A. Universal Inaccessibility Principle. Pramana – J. Phys. 1998, 50, 281–294.

25. Blinder, S. Carathéodory’s Formulation of the Second Law. In Physical Chemistry: An Advanced Treatise, 1st ed.; Jost, W., Ed.; Academic Press: New York, NY, USA, 1971; Chapter 10; pp. 613–637.

26. Blinder, S.M. Mathematical methods in elementary thermodynamics. J. Chem. Educ. 1966, 43, 85–92.

27. Keizer, J. Fluctuations, Stability, and Generalized State Functions at Nonequilibrium Steady States. J. Chem. Phys. 1976, 65, 4431–4444.

28. Keizer, J. Thermodynamics at Nonequilibrium Steady States. J. Chem. Phys. 1978, 69, 2609–2620.

29. Keizer, J. Statistical Thermodynamics of Nonequilibrium Processes; Springer: Berlin/Heidelberg, Germany, 1987.

30. Chapman, S.; Cowling, T.G. Mathematical Theory of Non-Uniform Gases: An Account of the Kinetic Theory of Viscosity, Thermal Conduction and Diffusion in Gases, 3rd ed.; Cambridge University Press: Cambridge, UK, 1970.

31. Grad, H. On the Kinetic Theory of Rarefied Gases. Commun. Pure Appl. Math. 1949, 2, 331–407.

32. Grad, H. Principles of the Kinetic Theory of Gases. In Thermodynamik der Gase/Thermodynamics of Gases; Flügge, S., Ed.; Springer: Berlin/Heidelberg, Germany, 1958; Handbuch der Physik/Encyclopedia of Physics; Volume XII, pp. 205–294.

33. Liboff, R.L. Kinetic Theory: Classical, Quantum, and Relativistic Descriptions, 3rd ed.; Graduate Texts in Contemporary Physics; Springer: Berlin/Heidelberg, Germany, 2003.

34. Bhalekar, A.A. The Universe of Operations of Thermodynamics vis-à-vis Boltzmann Integro-Differential Equation. Indian J. Phys. 2003, 77, 391–397.

35. García-Colín, L.S. Extended Nonequilibrium Thermodynamics, Scope and Limitations. Rev. Mex. Fís. 1988, 34, 344–366.

36. García-Colín, L.S.; Uribe, F.J. Extended Irreversible Thermodynamics Beyond the Linear Regime. A Critical Overview. J. Non-Equilib. Thermodyn. 1991, 16, 89–128.

37. García-Colín, L.S. Extended Irreversible Thermodynamics: Some Unsolved Questions. In Proceedings of the AIP Conference Proceedings, CAM-94 Physics Meeting, Cancun, Mexico, 26–30 September 1994; Zepeda, A., Ed.; Centro de Investigacion y de Estudios Avanzados del IPN: Woodbury, NY, USA, 1995; Volume 342, pp. 709–714.

38. García-Colín, L.S. Extended Irreversible Thermodynamics. An Unfinished Task. Mol. Phys. 1995, 86, 697–706.

39. Jou, D.; Casas-Vázquez, J.; Lebon, G. Extended Irreversible Thermodynamics, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 1996.

40. Nettleton, R.E.; Sobolev, S.L. Applications of Extended Thermodynamics to Chemical, Rheological, and Transport Processes: A Special Survey. Part I. Approaches and Scalar Rate Processes. J. Non-Equilib. Thermodyn. 1995, 20, 200–229.

41. Nettleton, R.E.; Sobolev, S.L. Applications of Extended Thermodynamics to Chemical, Rheological, and Transport Processes: A Special Survey. Part II. Vector Transport Processes, Shear Relaxation, and Rheology. J. Non-Equilib. Thermodyn. 1995, 20, 297–331.

42. Nettleton, R.E.; Sobolev, S.L. Applications of Extended Thermodynamics to Chemical, Rheological, and Transport Processes: A Special Survey. Part III. Wave Phenomena. J. Non-Equilib. Thermodyn. 1996, 21, 1–16.

43. Müller, I.; Weiss, W. Irreversible Thermodynamics – Past and Present. Eur. Phys. J. H 2012, 37, 139–236.

44. Lebon, G.; Jou, D. Early History of Extended Irreversible Thermodynamics (1953–1983): An Exploration Beyond Local Equilibrium and Classical Transport Theory. Eur. Phys. J. H 2015, 40, 205–240.

45. Vilar, J.M.G.; Rubi, J.M. Communication: System-size scaling of Boltzmann and alternate Gibbs entropies. J. Chem. Phys. 2014, 140, 201101.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bhalekar, A.A.; Tangde, V.M. On the Equivalence of Gibbs, Boltzmann, and Thermodynamic Entropies in Equilibrium and Nonequilibrium Scenarios. Entropy 2025, 27, 1055. https://doi.org/10.3390/e27101055
