1. Introduction

With the exponential growth of complex networks, inter-node interaction patterns exhibit dynamic and evolving characteristics. However, existing network representation frameworks still rely primarily on static graph models for structural abstraction [1]. As a core technique for uncovering functional modules, predicting information dissemination pathways, and identifying pivotal hubs, community detection holds significant value in systems with intricate interaction patterns and modular structures, such as social networks and biological protein interaction networks [2,3]. These networks frequently exhibit hierarchical and multi-scale structures, where accurately identifying community boundaries is essential to uncovering their underlying organizational principles [4,5]. However, many real-world networks are not only structurally complex but also involve dynamic information flows, which pose new challenges for community detection [6]. Current community detection methods face limitations in characterizing information dissemination processes within real-world networks.

Modularity optimization approaches identify community structures by maximizing the disparity between internal connectivity densities and those expected in random networks [7]. Nevertheless, a critical limitation arises: because these methods rely on static structures, they struggle to capture the nonlinear attenuation patterns in multi-hop information propagation. For instance, in social networks, the influence of topics diminishes with increasing propagation distance, while in protein interaction networks, signal transduction efficiency is constrained by topological distance. Although differing in nature, both exemplify the hierarchical and nonlinear decay of information dissemination [8]. Furthermore, modularity-based approaches tend to prioritize local structural metrics, which may overlook inter-community dependencies and lead to suboptimal global community structures. For example, they are prone to misjudgments in ring-shaped graphs or networks with bridging nodes [9,10]. Although recent multi-objective optimization-based community detection methods attempt to balance internal cohesion maximization and inter-community linkage minimization, they remain predominantly reliant on static topological information. As a result, they lack the capacity to model node-level information coupling and heterogeneous propagation patterns [11,12].

The stochastic block model (SBM), as another classical community detection framework, assumes identical or similar connection probabilities among nodes within the same community. This rigid homogeneity assumption struggles to accommodate the heterogeneity of node connection patterns observed in real-world networks [13], such as the “core-periphery” structure in social networks or interdisciplinary connections in academic networks [14]. While enhanced versions like the degree-corrected SBM (DC-SBM) mitigate this issue to some extent, they still fail to capture the temporal dynamics and dynamic coupling characteristics inherent in information dissemination processes [15,16].

Graph neural networks (GNNs) introduce a novel paradigm for community detection tasks, leveraging graph convolutional or attention mechanisms to recursively aggregate information from multi-hop neighborhoods [17]. However, most existing GNNs adopt fixed-depth architectures, which constrain the receptive field and limit the ability to capture complex propagation patterns and semantic dependencies between distantly connected nodes [18,19].

While a variety of community detection methods have achieved notable progress, most existing approaches still face challenges in capturing nonlinear, long-range, and dynamic-like propagation patterns that characterize many real-world networks. These limitations highlight the potential benefits of developing models that, even when operating on static topologies, can approximate certain aspects of temporal diffusion, such as long-range interactions and propagation attenuation. To this end, we introduce a Dynamic Propagation-Aware Multi-Hop Aggregation Model (DAMA). Although DAMA is built for static graphs, it incorporates propagation-aware mechanisms—such as the IFM—derived solely from static topological features, without relying on time-evolving snapshots. In doing so, DAMA approximates diffusion-like behaviors in a static setting, aiming to bridge the gap between structural topology and dynamic information flow. The primary contributions of this work are summarized as follows:

Information Flow Matrix (IFM): We construct an IFM to model nonlinear attenuation in node-to-node propagation strength within static graphs. This matrix helps the model to better account for multi-step propagation, aiding in the detection of dependencies that may be weakened or overlooked in traditional adjacency-based models. Such capability is essential for effectively modeling complex propagation patterns in static networks.

Adaptive Sparse Sampling: We propose an adaptive sampling mechanism that selectively retains neighbors with high propagation strength based on dynamically adjusted hierarchical thresholds. This reduces redundant connections and suppresses noise within the neighborhood structure, enhancing structural clarity.

Hierarchical Multi-Hop Gated Aggregation: We design a hierarchical multi-hop aggregation mechanism with a dual-gating strategy. By combining node-level propagation intensity with batch-wise feature statistics, the model adaptively balances the contributions of different hop-level features, enabling flexible modeling of hierarchical dependencies in static networks.

2. Related Work

A broad range of community detection methods have been developed to analyze structural patterns in complex networks. Traditional approaches include modularity optimization, probabilistic generative models such as the stochastic block model (SBM), and, more recently, graph neural networks (GNNs). While primarily designed for static graphs, recent efforts have sought to incorporate temporal or propagation-aware cues into these models. For instance, degree-corrected SBM variants account for local structural heterogeneity, while dynamic GNNs leverage graph snapshots to model temporal transitions. However, a persistent challenge remains: effectively modeling long-range dependencies and attenuation in information propagation using only static topological structures.

Modularity Optimization-based Methods: Modularity-driven community detection represents a classical paradigm. Greedy modularity optimization algorithms, such as Louvain, are widely adopted for their simplicity but are often trapped in local optima [20]. Rustamaji et al. proposed an improved method based on modularity decomposition, which enhances modularity through a node-community disassembly strategy [21]. Yuan et al. introduced the Modularity Subset Maximization (MSM) algorithm, transforming modularity maximization into a non-convex subset identification problem solved via difference-of-convex programming [22]. Despite their effectiveness on static networks, these methods suffer from resolution limits, local optima, and neglect of node attributes, hindering their ability to capture hierarchical network structures [23].

Probabilistic Generative Models: Stochastic block models (SBMs) characterize community structures by defining probabilistic network generation processes. Classical variants, including the mixed-membership SBM (MMSB) and the degree-corrected SBM (DC-SBM), have been extensively studied to model overlapping communities and degree heterogeneity [24,25]. Sun et al. proposed the vGraph framework, a probabilistic generative model for joint node-community representation learning, enabling both overlapping and non-overlapping community detection [26]. However, such models typically assume static and simplistic network structures, inadequately supporting complex multi-hop topological relationships, dynamic evolution, and heterogeneous node attributes [27,28].

Graph Neural Network-based Methods: Deep learning approaches leveraging GNNs have recently emerged as a research hotspot. Sobolevsky et al. developed a recurrent GNN variant for unsupervised community detection via modularity optimization, enabling continuous optimization of partitioning quality functions [29]. Zhou et al. proposed the Bernoulli–Poisson Graph Convolutional Network (BP-GCN) for heterogeneous social networks, which integrates self-attention mechanisms to identify node relations on symmetric contextual paths and achieves end-to-end community detection [30]. Li et al. proposed a dynamic graph community detection algorithm that combines graph convolutional networks with contrastive learning, capturing temporal evolution through relevance aggregation and feature smoothing [31]. Although GNN-based methods excel on large-scale graphs, most existing models assume homogeneous networks or fixed topologies, limiting their capability to model heterogeneous propagation and multi-scale structures. Compared with dynamic graph neural networks such as ST-GCN, which operate on time-ordered graph sequences, DAMA adopts a static-topology-aware dynamic modeling strategy. It encodes information decay through an IFM derived from structural proximity and potential propagation bias. This avoids the need for temporal snapshots while still capturing multi-hop and hierarchical propagation patterns, approximating diffusion intensity through topological proximity and distance-based attenuation. In contrast, GraphSAGE performs fixed-step neighborhood sampling without modeling the strength or decay of information across distances [32]. Furthermore, dynamic GNNs such as DySAT and EvolveGCN are designed to handle evolving graph structures. DySAT captures both structural and temporal dependencies through self-attention layers, while EvolveGCN models GCN weight dynamics via recurrent networks [33,34]. These models typically rely on sequential graph snapshots, which may not be available in static settings. In contrast, DAMA is designed to operate entirely on static graphs. It captures dynamic-like propagation behavior through potential-based influence matrices derived from static topology, rather than learning from time-variant edge or node dynamics. Our approach thus provides a static-graph alternative that approximates dynamic propagation without requiring temporal snapshots.

Other Classical Approaches: Label propagation algorithms (LPA) are renowned for their simplicity and efficiency. Li et al. enhanced LPA with modularity optimization and node importance (LPA-MNI), reducing randomness by initial community identification followed by label updating based on node importance [35]. Spectral clustering partitions nodes via Laplacian matrix eigenvectors but requires predefined community numbers and incurs high computational costs, making it unsuitable for extremely large networks. For multi-modal networks, multi-view learning has been explored. For instance, Lin et al. proposed Multi-view Attributed Graph Clustering (MvAGC), which integrates attribute perspectives via graph filtering and anchor selection to improve community partitioning [36]. Although effective in certain scenarios, these methods, like the other families reviewed above, still face persistent challenges in handling dynamic evolution, nonlinear propagation attenuation, and heterogeneous interaction patterns.

In summary, while existing methods contribute significantly to static graph modeling, they often lack mechanisms for simulating dynamic propagation over static structures. To bridge this gap, we propose DAMA, which embeds diffusion-like behavior into static topologies through influence-based matrices and adaptive multi-hop feature fusion.

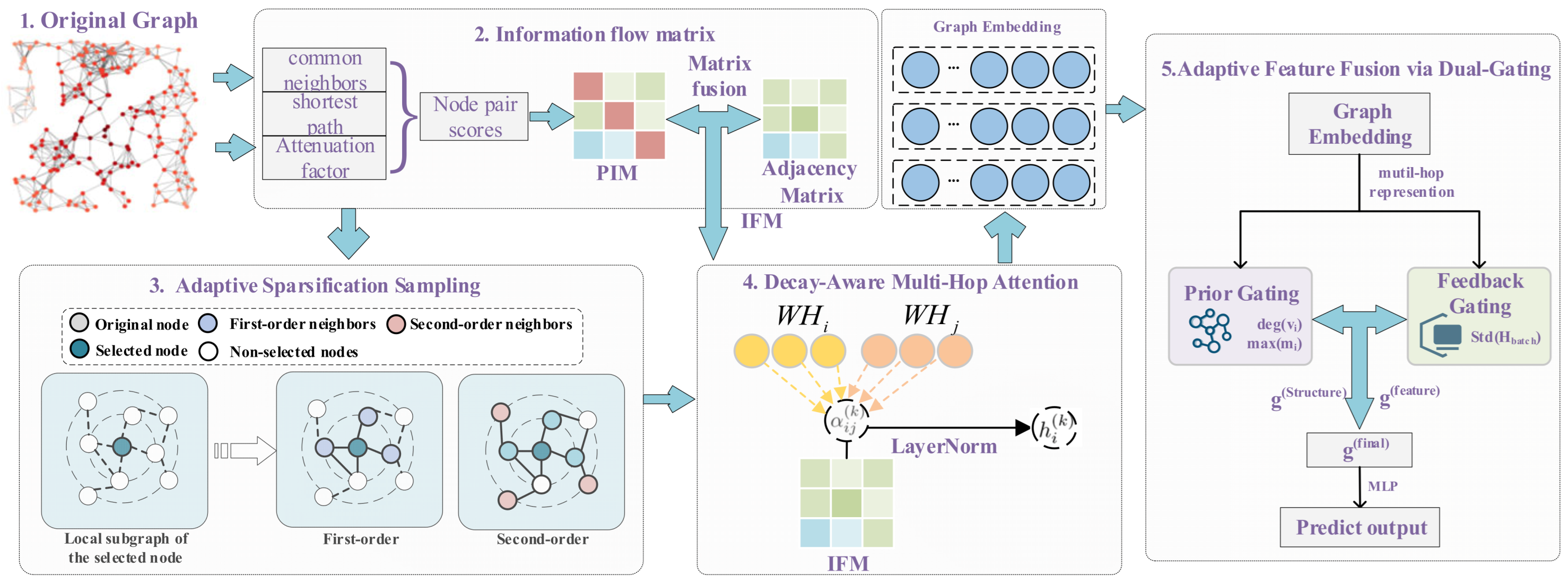

3. DAMA: Model Architecture

The overall architecture of the proposed DAMA model is shown in Figure 1. It comprises three interlinked components: an IFM that captures topological propagation influence within static graphs, an adaptive sparse sampling module that constructs hierarchical subgraphs by retaining high-influence neighbors based on IFM weights, and a hierarchical gated aggregation mechanism that dynamically fuses multi-hop features to generate community-sensitive node representations. The following subsections elaborate on the design and implementation of each component.

3.1. Information Flow Matrix Construction

The Potential-based Influence Matrix (PIM) quantifies the strength of information propagation between node pairs in a graph. An example of its matrix structure is illustrated in Figure 2.

Inspired by epidemic diffusion models such as the Susceptible-Infected (SI) model, as well as principles from wireless communications, PIM is based on the intuition that information tends to flow from nodes with higher potential to those with lower potential, with signal strength attenuating over distance. Here, the “potential” reflects the node’s propensity to propagate information, influenced by its local connectivity.

To model the attenuation effect, PIM employs an exponential decay function $e^{-\lambda d_{ij}}$, where $d_{ij}$ denotes the shortest path length between nodes $v_i$ and $v_j$, and $\lambda$ controls the decay rate. This function captures the natural weakening of influence as information travels further through the network.

Besides distance, PIM incorporates neighborhood overlap to reflect structural similarity; nodes sharing more common neighbors are more likely to influence each other. The influence score between nodes

and

is thus defined as

where

is the set of neighbors of

. This formulation models a potential-driven spatial diffusion process that primarily emphasizes short-range proximity and local structural similarity.
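As a rough illustration of the idea described above, the following sketch combines an exponential distance decay with a neighborhood-overlap term. The exact functional form is an assumption made for this example: the overlap is taken as the Jaccard index of closed neighborhoods, and the names `pim`, `shortest_path_lengths`, and `lam` are hypothetical and do not come from the original formulation.

```python
import math
from collections import deque


def shortest_path_lengths(adj, source):
    """Breadth-first search: hop distance from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def pim(adj, lam=0.5):
    """Assumed score: exp(-lam * d_ij) times the Jaccard overlap of closed neighborhoods."""
    scores = {}
    for i in adj:
        dist = shortest_path_lengths(adj, i)
        closed_i = set(adj[i]) | {i}
        for j in adj:
            if i == j or j not in dist:
                continue  # skip self-pairs and unreachable nodes
            closed_j = set(adj[j]) | {j}
            overlap = len(closed_i & closed_j) / len(closed_i | closed_j)
            scores[(i, j)] = math.exp(-lam * dist[j]) * overlap
    return scores


# Toy graph: a triangle 0-1-2 with a path 2-3-4 attached.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2, 4], 4: [3]}
P = pim(adj)
```

In this toy graph the score is largest for nodes inside the triangle, smaller for a node two hops away that shares one neighbor, and zero for a node three hops away with no shared neighborhood, which matches the short-range emphasis described in the text.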

Notably, the importance of first- and second-order neighborhoods has been highlighted in prior work; for example, Ran et al. systematically incorporated micro- (node-pair) and mesoscopic (community-level) structural features into a machine learning framework for community detection, demonstrating that such low-order structural dependencies are particularly informative [

37]. Our PIM formulation is consistent with this principle, as it jointly leverages distance decay and local neighborhood overlap to capture influence between nodes.

Empirical studies indicate that when

, the decay reduces the influence below 50%, consistent with the “three degrees of influence” theory in social networks [

38,

39]. As detailed in

Section 4.4 (

Figure 3), hyperparameter tuning shows that smaller

values enhance model stability. Therefore, we set

to balance empirical robustness and theoretical consistency.

To combine propagation dynamics and graph topology, we define an IFM as a weighted sum of the PIM and the adjacency matrix

:

Based on the results reported in Tables A1 to A7 in Appendix A.1, we select the hyperparameter combinations that achieve the best overall performance for each dataset. This configuration balances structural locality and diffusion influence, favoring short-range information while retaining sensitivity to potential longer-range influences through subsequent aggregation.
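The weighted sum of the PIM and the adjacency matrix can be sketched as follows. The convex-combination form and the weight name `alpha` are assumptions for illustration only; the actual weights are the tuned hyperparameters referred to above.

```python
def information_flow_matrix(pim, adjacency, alpha=0.3):
    """Assumed form: alpha * PIM + (1 - alpha) * A, computed entry by entry."""
    n = len(adjacency)
    return [
        [alpha * pim[i][j] + (1.0 - alpha) * adjacency[i][j] for j in range(n)]
        for i in range(n)
    ]


# Path graph 0-1-2 with illustrative (made-up) PIM scores.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
PIM = [[0.0, 0.6, 0.2], [0.6, 0.0, 0.6], [0.2, 0.6, 0.0]]
IFM = information_flow_matrix(PIM, A, alpha=0.3)
```

With these toy values, directly connected pairs receive most of their weight from the adjacency term, while the non-adjacent pair (0, 2) still keeps a small non-zero entry contributed by the PIM.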

3.2. Adaptive Sparse Sampling Module

To alleviate the inefficiency caused by uncontrolled neighborhood expansion in large-scale graph computations, we introduce an information flow-aware adaptive sparse sampling module. This module aims to preserve high-influence propagation paths while eliminating structurally redundant or noisy connections. The sampling process is guided by the IFM and proceeds in a hierarchical manner.

Given a target node

, the sampling begins with its initial neighborhood

, and iteratively constructs higher-order sparse neighborhoods up to a maximum depth

. At each iteration

, the algorithm computes a dynamic threshold:

where

denotes the propagation intensity from node

to its neighbor

based on the IFM, and

is a sparsification coefficient that controls the selectivity level.

Only neighbors with

are retained to form the k-hop sparse set

. To determine whether further expansion is necessary, the algorithm computes the propagation intensity variation:

where

. If

, indicating diminishing new information, the sampling process terminates early.

Finally, the sparse neighborhood is constructed by aggregating all valid layers:

This dynamic strategy ensures that only structurally meaningful nodes are retained, significantly reducing computational cost and suppressing noisy signals. Empirical results (

Appendix A.2,

Table A8) confirm that performance is stable across different values of

. In line with prior findings that structural information is largely contained within low-order neighborhoods [38,39], we set the maximum hop K = 3 and adopt the dataset-specific optimal thresholds reported in Appendix A.2 (Tables A8 and A9) as the default configuration for all experiments.
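The hierarchical sampling procedure of this subsection can be sketched as below. Since the threshold and stopping formulas are not reproduced here, the sketch makes its own assumptions: the per-hop threshold is a coefficient times the mean candidate intensity, and sampling stops early when the mean retained intensity changes by less than `eps` between hops. The function name and the parameters `coeff` and `eps` are hypothetical.

```python
def adaptive_sparse_sample(ifm, target, max_hops=3, coeff=0.8, eps=1e-3):
    """Return one set of retained nodes per hop, starting from `target`.

    `ifm` maps each node to a dict {neighbor: propagation intensity}.
    """
    visited = {target}
    frontier = {target}
    layers = []
    prev_mean = None
    for _ in range(max_hops):
        # Collect unvisited nodes reachable from the frontier (strongest link wins).
        candidates = {}
        for u in frontier:
            for v, w in ifm.get(u, {}).items():
                if v not in visited:
                    candidates[v] = max(w, candidates.get(v, 0.0))
        if not candidates:
            break
        # Assumed dynamic threshold: coefficient times mean candidate intensity.
        threshold = coeff * sum(candidates.values()) / len(candidates)
        kept = {v for v, w in candidates.items() if w >= threshold}
        if not kept:
            break
        layers.append(kept)
        visited |= kept
        # Assumed stopping rule: little change in mean retained intensity.
        kept_mean = sum(candidates[v] for v in kept) / len(kept)
        if prev_mean is not None and abs(kept_mean - prev_mean) < eps:
            break
        prev_mean = kept_mean
        frontier = kept
    return layers


ifm = {
    "a": {"b": 0.9, "c": 0.2, "d": 0.8},
    "b": {"a": 0.9, "e": 0.7},
    "c": {"a": 0.2},
    "d": {"a": 0.8, "e": 0.5, "f": 0.1},
    "e": {"b": 0.7, "d": 0.5, "g": 0.6},
}
layers = adaptive_sparse_sample(ifm, "a")
sparse_neighborhood = set().union(*layers)
```

In this toy example the weak links to `c` and `f` fall below the per-hop thresholds and are dropped, while the stronger chain through `b`, `d`, `e`, and `g` is retained across three hops.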

3.3. Hierarchical Multi-Hop Gated Aggregation

To model both local and high-order structural information, we propose a hierarchical multi-hop aggregation framework consisting of two stages: multi-hop attention-based neighborhood fusion and adaptive dual-gating for feature integration.

3.3.1. Decay-Aware Multi-Hop Attention

As defined in Equation (6), we introduce a decay-aware graph attention mechanism to capture the structural dynamics of community structures during information propagation. This mechanism quantifies the topological influence between nodes through an adaptive decay function and leverages the sparsity of attention weights to selectively aggregate neighborhood features that significantly affect a node’s community affiliation.

Here, and denote the feature vectors of nodes and , respectively; represents the k-hop neighborhood of node ; and is the shortest-path distance between nodes and . The decay function emphasizes contributions from short-path neighbors while reducing interference from distant nodes. and are learnable projection matrices used to model the structural similarity between node features.

To mitigate the gradient vanishing problem, as shown in Equation (7), we apply layer normalization to smooth and calibrate aggregated feature distributions, thereby improving training stability.
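A minimal sketch of a decay-aware attention step followed by layer normalization is given below. It assumes that the decay enters as a multiplicative factor exp(-lam * d) on the unnormalized attention weight (equivalently, a subtraction of lam * d from the logit) and that similarity is a dot product of projected features. These choices, and all names in the code, are assumptions rather than the exact form of Equations (6) and (7).

```python
import math


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]


def decay_attention(h_i, neighbors, W_q, W_k, lam=0.5):
    """neighbors: list of (feature_vector, shortest_path_distance) pairs."""
    q = matvec(W_q, h_i)
    logits = []
    for h_j, d in neighbors:
        k = matvec(W_k, h_j)
        similarity = sum(a * b for a, b in zip(q, k))
        logits.append(similarity - lam * d)  # distance decay applied in log space
    m = max(logits)  # subtract the maximum for a numerically stable softmax
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    weights = [e / z for e in exps]
    aggregated = [
        sum(w * h_j[t] for w, (h_j, _) in zip(weights, neighbors))
        for t in range(len(h_i))
    ]
    return weights, layer_norm(aggregated)


identity = [[1.0, 0.0], [0.0, 1.0]]
neighbors = [([1.0, 2.0], 1), ([1.0, 2.0], 3)]  # same features, distances 1 and 3
weights, out = decay_attention([1.0, 0.0], neighbors, identity, identity)
```

Because the two neighbors have identical features, the difference in their attention weights here comes only from the distance decay: the one-hop neighbor receives exp(lam * 2) times the weight of the three-hop neighbor.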

3.3.2. Adaptive Feature Fusion via Dual-Gating

To address the imbalance between local topological features and global path information caused by static weight allocation in traditional community detection methods, we introduce a two-level gating mechanism guided by prior knowledge and data distribution feedback. This mechanism dynamically adjusts the contribution of local and high-order features to enable adaptive decision-making for multi-order representations.

- (1) Prior Knowledge-Guided Initial Gating:

We generate the initial gating weights using node degree and local information flow intensity to quantify each node’s structural importance within its local community, as defined in Equation (8). This ensures that local topological features are effectively captured.

Here, denotes the degree of node , quantifying its connection density in the local community, and represents the maximum information flow intensity in the neighborhood of . The learnable routing matrix maps scalar priors to the K-hop feature weight space.

- (2) Data Distribution Feedback-Based Gating:

To adaptively refine the gating weights, we incorporate batch-wise feature statistics, as shown in Equation (9). This feedback mechanism adjusts the gating based on empirical feature distribution, improving feature representation and model generalization.

Here, denotes the standard deviation vector of node features in the current batch, quantifying the distribution dispersion across feature dimensions. The feedback projection matrix compresses high-dimensional statistics into the gating weight space, and ⊙ represents the Hadamard product.

We further apply a sparsification mapping, as defined in Equation (10), to suppress redundant signals and promote generalization.
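The two gating stages and the sparsification step can be sketched as follows. Every concrete choice here is an assumption made for illustration: a softmax over routed priors for the initial gate, a sigmoid of the projected batch standard deviation for the feedback term, and hard thresholding with renormalization for the sparsification mapping. The names `W_route`, `W_fb`, and `tau` are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def initial_gate(degree, max_flow, W_route):
    """Map the two scalar priors to K hop weights (W_route is K x 2)."""
    return softmax([row[0] * degree + row[1] * max_flow for row in W_route])


def feedback_gate(g0, batch_std, W_fb):
    """Rescale g0 by a sigmoid of the projected batch statistics (W_fb is K x D)."""
    feedback = [
        1.0 / (1.0 + math.exp(-sum(w * s for w, s in zip(row, batch_std))))
        for row in W_fb
    ]
    return [a * b for a, b in zip(g0, feedback)]  # Hadamard product


def sparsify(g, tau=0.1):
    """Zero out gates below tau, then renormalize the survivors."""
    kept = [x if x >= tau else 0.0 for x in g]
    total = sum(kept)
    return [x / total for x in kept] if total > 0 else kept


W_route = [[0.1, 1.0], [0.0, 0.5], [-0.1, -1.0]]  # K = 3 hops
W_fb = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]       # neutral feedback for the demo
g0 = initial_gate(degree=4, max_flow=0.9, W_route=W_route)
g = feedback_gate(g0, batch_std=[0.5, 1.0], W_fb=W_fb)
g_sparse = sparsify(g)
```

In this toy setting the third hop ends up with a gate below the threshold and is switched off, so the node representation would be built from the first two hops only.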

During model training, the cross-entropy loss for community partitioning and the sparsity regularization term of the gating mechanism are jointly optimized to enhance the model’s expressive power and generalization performance. The overall loss function is defined as shown in Equation (11):

Here, denotes the cross-entropy loss, which measures the discrepancy between model predictions and ground-truth labels; is the regularization coefficient that controls the strength of the sparsity regularization term; and denotes the gating weight of the k-th hop for node i, used to adaptively control the contribution of each hop-level feature. The associated sparsity regularization encourages the model to retain only essential hops, enhancing interpretability and robustness.
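The joint objective can be illustrated with a small sketch. It assumes the sparsity term is an L1 penalty on the gating weights averaged over nodes; the precise regularizer in Equation (11) may differ, and the names `total_loss` and `reg` are hypothetical.

```python
import math


def cross_entropy(probs, labels):
    """Mean negative log-likelihood of the true class."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def total_loss(probs, labels, gates, reg=0.01):
    """Cross-entropy plus an assumed L1 penalty on per-node hop gates."""
    l1 = sum(abs(g) for row in gates for g in row) / len(gates)
    return cross_entropy(probs, labels) + reg * l1


probs = [[0.5, 0.5], [0.25, 0.75]]       # predicted class probabilities per node
labels = [0, 1]                          # ground-truth communities
gates = [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0]]  # gate of each of K = 3 hops per node
loss = total_loss(probs, labels, gates)
```

A larger `reg` pushes more hop gates toward zero, which is the mechanism by which the regularizer encourages the model to keep only essential hops.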

4. Experiments and Results

4.1. Datasets

We conduct experiments using seven real-world network datasets and two synthetic networks generated by the LFR benchmark model. Detailed descriptions of these datasets are summarized in Table 1.

4.2. Evaluation Metrics

We evaluate the performance of the DAMA model in community detection using the following metrics, summarized in Table 2.

Here, denotes the total number of nodes; and represent the true and predicted labels of node , respectively; is an indicator function; represents mutual information; and are the entropies of the true and predicted labels, respectively. is the Rand Index, and is its expected value under random labeling; is the adjacency matrix of the graph; and are the degrees of nodes and . is the total number of edges; and is an indicator function equal to 1 if nodes and belong to the same community and 0 otherwise. In our experimental setup, each experiment is repeated 50 times, and the average values of the above metrics are reported to ensure the stability and reliability of the results.
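Two of the metrics described above, accuracy and modularity, can be computed with the short sketch below. It follows the standard definitions (mean of an indicator over nodes, and the Newman modularity based on the adjacency matrix, node degrees, and the number of edges); the function names are chosen for this example.

```python
def accuracy(y_true, y_pred):
    """Fraction of nodes whose predicted label equals the true label."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


def modularity(adj_matrix, communities):
    """Q = (1 / 2m) * sum_ij (A_ij - k_i * k_j / 2m) * [c_i == c_j]."""
    n = len(adj_matrix)
    degrees = [sum(row) for row in adj_matrix]
    two_m = sum(degrees)  # twice the number of edges in an undirected graph
    q = 0.0
    for i in range(n):
        for j in range(n):
            if communities[i] == communities[j]:
                q += adj_matrix[i][j] - degrees[i] * degrees[j] / two_m
    return q / two_m


# Two triangles (0-1-2 and 3-4-5) joined by the single edge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
A = [[0] * 6 for _ in range(6)]
for u, v in edges:
    A[u][v] = A[v][u] = 1

q_split = modularity(A, [0, 0, 0, 1, 1, 1])
q_single = modularity(A, [0, 0, 0, 0, 0, 0])
acc = accuracy([0, 0, 1, 1], [0, 1, 1, 1])
```

Splitting the graph into its two triangles gives a modularity of 5/14, whereas placing all nodes in one community gives zero, which illustrates why modularity rewards partitions that follow dense subgraphs.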

4.3. Experimental Setup

The parameter configuration of the proposed DAMA model comprises a multi-hop graph attention layer, a gating mechanism module, and a classifier module. Specifically, the multi-hop graph attention layer employs 8 attention heads, each outputting 16-dimensional features, which are aggregated to form a 128-dimensional node representation. During message passing, a learnable decay coefficient is introduced to modulate the influence of neighborhoods based on shortest-path distances.

The gating mechanism module integrates 8 attention heads to achieve adaptive fusion based on node degree distribution and batch-wise statistical features, followed by a two-layer MLP (128 → 128) with ReLU activation. The classifier module consists of a two-layer MLP (128 → 64 → number of classes) also utilizing ReLU activation. All graph attention layers adopt ELU activation functions, and a dropout rate of 0.6 is maintained throughout the training process.

The model is trained using the Adam optimizer with a learning rate of 0.001 and a weight decay of 1 × 10⁻⁴. Training runs for a fixed 200 epochs.

For the supervised community detection task, we adopt a standard transductive learning setup: nodes are randomly split into 80% for training and 20% for testing, and binary masks ensure that the loss is computed only on training nodes while all reported metrics are evaluated exclusively on test nodes, so that no label information leaks from evaluation into training. The split is redrawn with a different random seed in each of 50 independent runs, and the average results across these runs are reported for statistical reliability.
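A masked 80/20 split of this kind might be set up as in the following sketch. The helper name `split_masks` and the use of Python's `random` module are assumptions for illustration; the original implementation is not shown in the text.

```python
import random


def split_masks(num_nodes, train_ratio=0.8, seed=0):
    """Return boolean train/test masks that partition the node set."""
    rng = random.Random(seed)
    order = list(range(num_nodes))
    rng.shuffle(order)
    cut = int(train_ratio * num_nodes)
    train_nodes = set(order[:cut])
    train_mask = [i in train_nodes for i in range(num_nodes)]
    test_mask = [not flag for flag in train_mask]
    return train_mask, test_mask


# One split per seed; metrics would be averaged over the 50 runs.
splits = [split_masks(10, seed=s) for s in range(50)]
train_mask, test_mask = splits[0]
```

Because the test mask is the complement of the training mask, each node contributes either to the loss or to the reported metrics, never to both.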

4.4. Hyperparameter Experiments

We evaluate the impact of the sparsification strength parameter and the information decay parameter on the model’s performance and stability on the Cora dataset using a grid search strategy. Both and are sampled from the range [0.1, 1.0] with a step size of 0.1. Each combination is tested over 20 independent runs.
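The grid described above covers 10 × 10 parameter combinations with 20 runs each. A minimal sketch of such a loop is shown below; `run_once` is a hypothetical placeholder that returns a made-up score, standing in for one full training and evaluation run.

```python
import itertools
import random
import statistics

# Values 0.1, 0.2, ..., 1.0 for both hyperparameters.
VALUES = [round(0.1 * k, 1) for k in range(1, 11)]


def run_once(sparsity, decay, seed):
    """Hypothetical stand-in for one training run; returns a fake accuracy."""
    rng = random.Random(seed)
    return 0.8 + 0.01 * rng.random()


def grid_search(n_runs=20):
    """Mean and standard deviation of the score for every grid point."""
    results = {}
    for sparsity, decay in itertools.product(VALUES, VALUES):
        scores = [run_once(sparsity, decay, seed) for seed in range(n_runs)]
        results[(sparsity, decay)] = (statistics.mean(scores), statistics.stdev(scores))
    return results


results = grid_search()
```

The resulting dictionary of means and standard deviations is the kind of data from which mean and deviation heatmaps over the two parameters can be drawn.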

The optimal hyperparameter settings may vary across datasets. However, because exhaustive tuning on every dataset is computationally expensive, we performed the grid search only on the representative Cora dataset and applied the resulting configuration directly to all other datasets. The consistently strong results obtained with this configuration (see the comparative experiments in Figure 6) suggest that it generalizes well across diverse data.

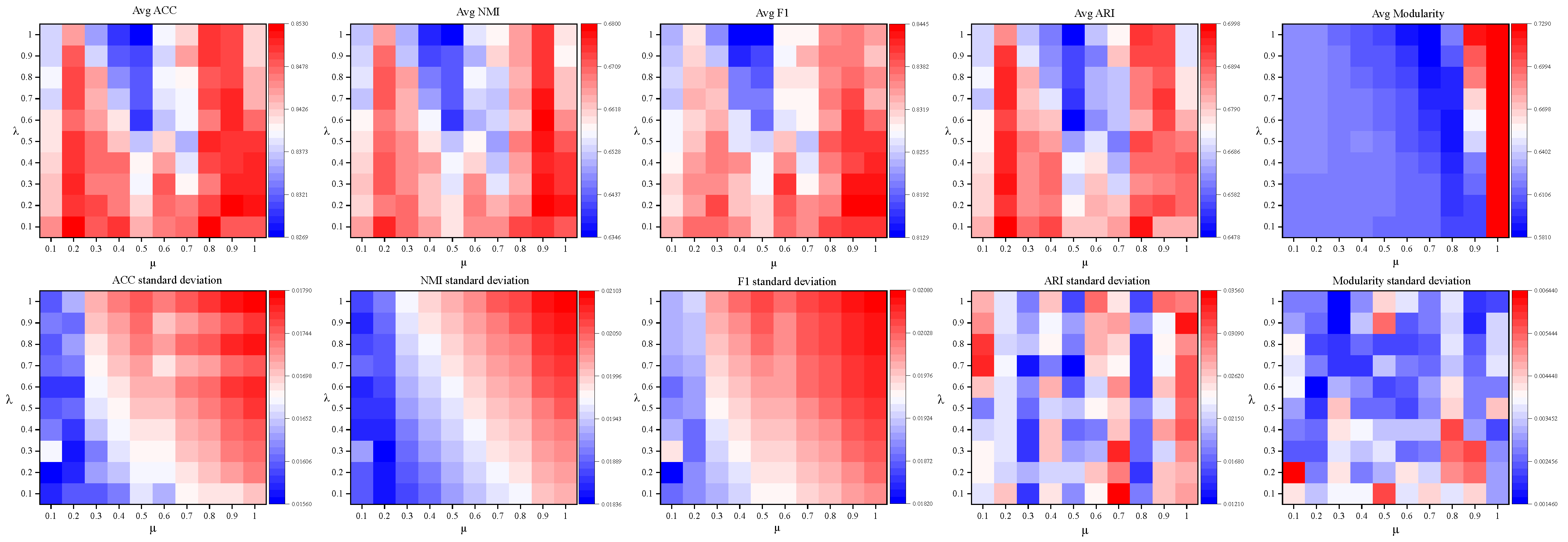

The mean values and standard deviations of the Accuracy, NMI, and F1 metrics were calculated. The experimental results are illustrated in

Figure 3.

Figure 3. Heatmaps of Mean Values and Standard Deviations for Accuracy, NMI, and F1 Metrics.


The mean values and standard deviations of Accuracy, NMI, F1, ARI, and Modularity were calculated, and the experimental results are shown in

Figure 3. It can be observed that the model performs well when

is in the ranges [0.2–0.3, 0.8–1.0], and all metrics tend to improve as

increases. However, the current experimental results are limited by the upper bound of

, which restricts exploration of the parameter space’s global characteristics.

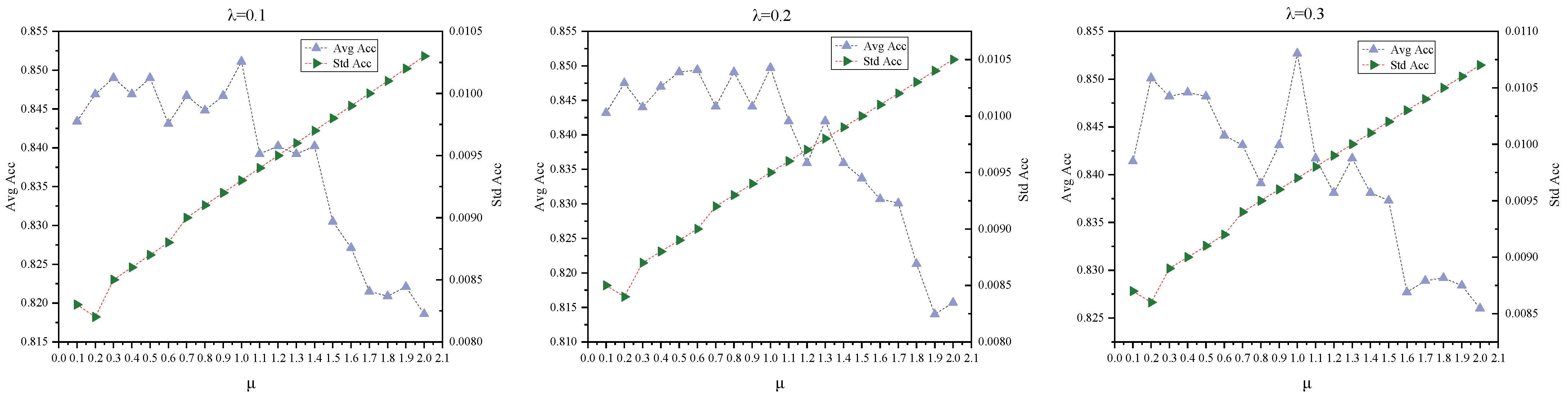

To address this limitation, we extended the sparsification parameter range in subsequent experiments. Specifically, we fixed

(a low-variance interval, relatively stable) and increased the upper bound of

to 2 to investigate the model’s behavior under extreme sparsification, using the ACC metric for evaluation (as it is representative and provides an intuitive reflection of overall performance). These results are shown in

Figure 4.

- (1) As shown in the upper layer of Figure 3 and Figure 4, the model exhibits notable performance fluctuations when

, but the best results are consistently achieved at

; further increasing

leads to a performance decline. Within the range

, the combined effect of

and

keeps fluctuations in Accuracy, F1-score, and ARI within approximately ±2%. However, due to its sensitivity to class alignment, NMI exhibits larger fluctuations of up to ±4%. Modularity reaches its maximum when

.

- (2) As shown in the lower layer of Figure 3 and Figure 4, the sparsification parameter

affects both model performance and stability. Overall, the standard deviations of the evaluation metrics are small: Accuracy, NMI, F1-score, and Modularity all remain below 0.004, while ARI exhibits a higher standard deviation of approximately 0.02. As

increases, the standard deviations of Accuracy, NMI, and F1-score show a slight upward trend. This effect is likely due to the increased sparsity induced by the adaptive sparse sampling module at higher

, which amplifies divergence in input feature distributions and consequently increases output uncertainty. ARI and Modularity, in contrast, show irregular fluctuations because they depend on exact label matching and the global network structure. Overall, increasing

can improve certain performance metrics but may reduce stability. Therefore, for applications that require stable outputs, it is advisable to select relatively lower intervals of

.

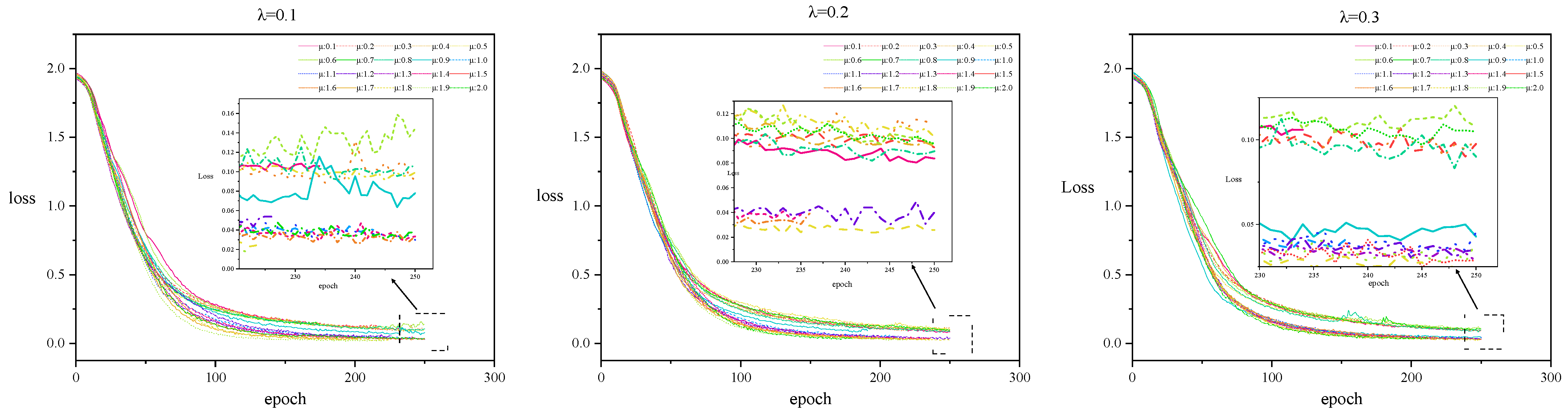

To validate the convergence characteristics of the model in the extended parameter space, we retain the configuration

from

Figure 4 and record the epoch-wise evolution of the training loss under different

values. The results are presented in

Figure 5.

The following conclusions can be drawn from Figure 5:

- (1) During the initial training phase (up to 200 epochs), the loss curves for different values largely overlap, suggesting that the sparsification strength does not significantly affect the convergence trajectory in the early stages. However, after 200 epochs, the loss curves begin to exhibit non-monotonic fluctuations, indicating potential overfitting or instability.

- (2)

Comparing different sparsification settings shows that when , the final loss values remain consistently higher than those observed in the setting. We hypothesize that larger values simplify the data representation by increasing the intensity of feature filtering, which in turn facilitates faster convergence and easier optimization.

- (3) The training process follows a typical three-phase pattern: in the rapid convergence phase (epochs < 100), loss decreases sharply; in the fine optimization phase (100 ≤ epochs ≤ 200), the rate of loss reduction slows; and in the overfitting risk phase (epochs > 200), loss fluctuates unpredictably. We recommend training for 180–200 epochs to balance convergence and generalization.

4.5. Comparative Experiments

To comprehensively evaluate the effectiveness of the proposed DAMA model across diverse graph learning scenarios, we conduct comparative experiments against multiple baseline models, covering both GNN-based and traditional community detection approaches (

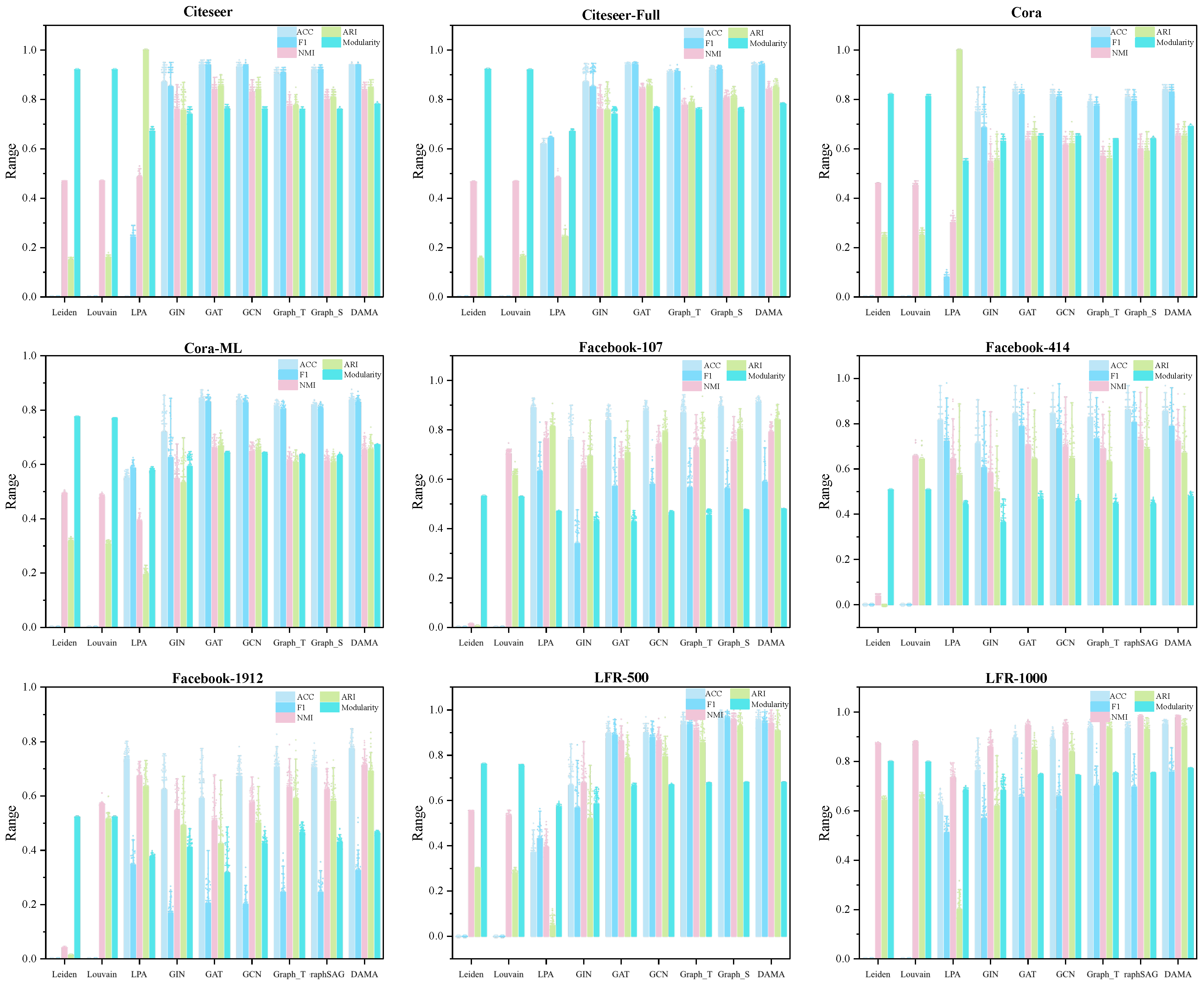

Table 3). The baselines include models employing local aggregation, global reasoning, inductive learning, label propagation, and modularity optimization. To ensure fair comparison, all GNN baselines (GCN, GAT, GraphSAGE, GIN) are implemented with 2 layers, 64 hidden units, and trained using the same optimizer settings (Adam with learning rate 0.001) and data splitting strategy as DAMA. For traditional methods (Louvain, Leiden, LPA), we use the implementations provided by the original authors with default parameters as recommended in their respective publications. The comparative experimental results are shown in

Figure 6. In the figure, Graph_S represents GraphSAGE, and Graph_T represents Graph Transformer.

The following conclusions are drawn based on Figure 6 and the results summarized in Table 1:

In terms of overall performance, the proposed DAMA method demonstrates significant advantages across multiple evaluation metrics. Experimental results indicate that DAMA achieves the best or near-best comprehensive performance across different types of datasets. Notably, DAMA consistently attains higher modularity scores compared to other graph neural network methods, highlighting its superior capability in capturing community structures. Moreover, the model exhibits relatively stable performance across multiple runs, reflecting its robust behavior.

When compared with baseline GNN models, clear performance differences are observed. GAT and GCN achieve comparable and stable results across most datasets, but their overall performance remains slightly lower than that of DAMA. GIN shows considerable fluctuations on small-sample or high-noise datasets, underscoring its limitations in capturing multi-hop dependencies. GraphSAGE and Graph Transformer perform well on large-scale dense graphs, yet slightly underperform compared to GAT and GCN on small-scale or sparse graphs, indicating that different GNN architectures have distinct advantages depending on graph characteristics.

Traditional community detection methods exhibit limited performance in this comparison. Leiden and Louvain algorithms, due to over-optimization of modularity, perform poorly on external evaluation metrics and do not involve node label prediction, thus failing to provide classification metrics such as ACC and F1. The label propagation algorithm (LPA) performs poorly on sparse or structurally complex graphs and shows limited effectiveness.

From the perspective of dataset characteristics and model adaptability, in sparse graphs, GAT and GCN effectively leverage local neighborhood information, while DAMA further improves performance. LPA and other traditional methods exhibit comparatively weaker performance in such graphs. On large-scale community-structured graphs, GraphSAGE, Graph Transformer, and DAMA achieve prominent results in ACC, NMI, and ARI, whereas the instability of GIN reflects its limited capacity in capturing long-range dependencies. In social network graphs, where node degree distributions are highly uneven, all models face greater challenges. Nevertheless, DAMA maintains stable performance across all metrics and achieves notably higher modularity than other methods, further supporting its strength in capturing community structures.

4.6. Ablation Experiments

To evaluate the effectiveness of the core components in the DAMA framework, we conduct comprehensive ablation studies on the Information Flow Matrix (IFM), the Adaptive Sparse Sampling (ASS), and the Hierarchical Multi-Hop Gated Aggregation (MSG). By examining performance variations after removing each individual component, we assess their adaptability to sparse, dense, and modular networks, and further reveal the intrinsic relationships between graph topological characteristics and architectural design. The detailed experimental results are systematically reported in Table 4.

Based on the dataset characteristics in Table 1 and the ablation results in Table 4, we derive the following observations. For all tables, the boldfaced values represent the relatively superior results:

The core modules of the DAMA model exhibit complementary roles across different graph structures. The IFM is primarily responsible for constructing global node representations and enhancing structural discrimination. Ablation results show that removing IFM leads to the most pronounced performance degradation across most datasets (e.g., CiteSeer, Cora, Cora-ML, Facebook 414, LFR-1000), highlighting its key role in integrating global features.

The ASS proves particularly effective in dense and modular graphs, where it dynamically adjusts the receptive field to filter redundant connections and reinforce community boundaries. Its impact is clearly dataset-dependent: in graphs such as CiteSeer and Cora-ML, removing ASS results in noticeable performance drops, indicating its importance for structure optimization and denoising in dense or modular networks. In contrast, in graphs like Facebook 107 and Facebook 1912, its removal leads to minor changes or slight fluctuations, suggesting that its contribution depends on the intrinsic structural characteristics of the graph.

The MSG enhances model robustness by integrating multi-scale feature information. Across datasets including CiteSeer, Cora, and LFR-1000, removing MSG causes visible performance decreases, with the effect on CiteSeer comparable to that of IFM. This indicates that MSG plays a key role in capturing graph structural information at multiple scales, and its absence can limit the model’s comprehensive understanding of the graph, thus affecting overall representation and generalization.

In summary, IFM, ASS, and MSG function complementarily: IFM supports global information integration, MSG strengthens cross-scale feature capture, and ASS optimizes local structure awareness and sampling strategies. This complementary design ensures that DAMA can dynamically adapt to diverse graph topologies, maintaining robust performance and high generalization across sparse, dense, and modular networks.
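The ablation protocol described above, in which one component is removed at a time while the others stay enabled, can be sketched as a small configuration helper. This is only an illustration under assumed names: `AblationConfig`, its three flags, and `ablation_variants` are hypothetical and do not correspond to identifiers in the DAMA implementation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AblationConfig:
    """Hypothetical switches for the three DAMA components."""
    use_ifm: bool = True  # Information Flow Matrix
    use_ass: bool = True  # Adaptive Sparse Sampling
    use_msg: bool = True  # Hierarchical Multi-Hop Gated Aggregation


def ablation_variants(full=AblationConfig()):
    """Return the full model plus one variant per removed component."""
    variants = {"full": full}
    for flag in ("use_ifm", "use_ass", "use_msg"):
        name = "w/o " + flag[len("use_"):].upper()
        variants[name] = replace(full, **{flag: False})
    return variants


for name, cfg in ablation_variants().items():
    print(name, cfg)
```

Each variant would then be trained and evaluated with the same data splits and hyperparameters, so that any performance difference can be attributed to the removed component.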

4.7. Noise Robustness Experiments

To thoroughly evaluate the robustness of our model under noisy environments, we introduce various perturbation mechanisms into the Cora dataset to simulate realistic graph noise scenarios. Special emphasis is placed on assessing the effectiveness of the adaptive sparse sampling module in suppressing structural disturbances while preserving essential topological information.

Noise is injected from two perspectives, structural noise and feature (label) noise, with perturbation ratios ranging from 0.1 to 0.5; that is, 10% to 50% of the total edges or labels are modified. The types of noise and their corresponding design purposes are summarized in Table 5.

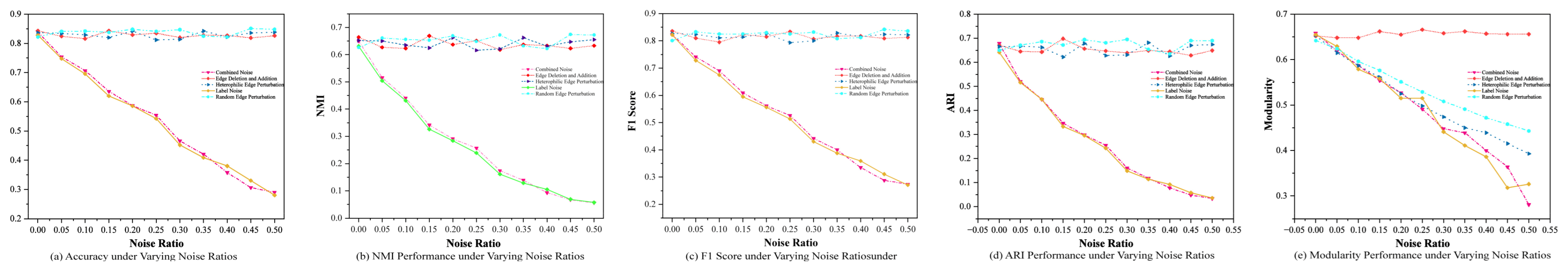
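To make the perturbation ratio concrete, the following minimal sketch shows one plausible way to inject structural and label noise at a given ratio. It assumes a simple undirected graph stored as an edge list and uniform random selection of the edges or labels to modify. The helper names (`delete_edges`, `add_edges`, `flip_labels`) are hypothetical, and the exact procedures behind the noise types in Table 5 may differ.

```python
import random


def delete_edges(edges, ratio, rng):
    """Remove a uniformly random `ratio` fraction of the edges."""
    k = round(ratio * len(edges))
    drop = set(rng.sample(range(len(edges)), k))
    return [e for i, e in enumerate(edges) if i not in drop]


def add_edges(edges, num_nodes, ratio, rng):
    """Add round(ratio * |E|) random edges that are not already present.

    Assumes a simple graph (no self-loops, no duplicate edges).
    """
    existing = {(min(u, v), max(u, v)) for u, v in edges}
    max_new = num_nodes * (num_nodes - 1) // 2 - len(existing)
    k = min(round(ratio * len(edges)), max_new)
    added = set()
    while len(added) < k:
        u, v = rng.sample(range(num_nodes), 2)  # two distinct nodes
        e = (min(u, v), max(u, v))
        if e not in existing:
            added.add(e)
    return list(edges) + sorted(added)


def flip_labels(labels, num_classes, ratio, rng):
    """Reassign a random `ratio` fraction of labels to a different class.

    Requires num_classes >= 2 so that an alternative class exists.
    """
    noisy = list(labels)
    k = round(ratio * len(labels))
    for i in rng.sample(range(len(labels)), k):
        noisy[i] = rng.choice([c for c in range(num_classes) if c != labels[i]])
    return noisy


rng = random.Random(0)                          # fixed seed for repeatability
ring = [(i, (i + 1) % 10) for i in range(10)]   # toy 10-node ring graph
labels = [i % 3 for i in range(20)]             # toy labels, 3 classes

print(len(delete_edges(ring, 0.3, rng)))        # 7 edges remain
print(len(add_edges(ring, 10, 0.3, rng)))       # 13 edges in total
noisy = flip_labels(labels, 3, 0.5, rng)
print(sum(a != b for a, b in zip(labels, noisy)))  # 10 labels changed
```

A combined setting could apply `delete_edges` and `add_edges` in sequence and then `flip_labels`, with one call per perturbation ratio from 0.1 to 0.5.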

Under the above settings, experiments are conducted for each type of noise at various perturbation intensities. The results are illustrated in Figure 7.

Based on the results in Figure 7, the following observations can be made:

- (1) As the intensity of structural noise increases, the model exhibits only a marginal decline in both Accuracy and F1-score, indicating strong robustness against structural perturbations. Meanwhile, although the Adjusted Rand Index (ARI) shows relatively larger fluctuations, it generally stabilizes at a consistent level. Modularity generally decreases with increasing noise, except in the Edge Deletion and Addition scenario, where changes are relatively small as the community structure remains largely intact. This robustness can be primarily attributed to the adaptive sparse sampling module, which effectively identifies and filters out redundant or anomalous edges, thereby significantly mitigating the adverse impact of structural noise and preserving the essential topological properties of the graph.

- (2) In contrast, under label noise and combined noise scenarios, the model experiences a substantial degradation in Accuracy, Normalized Mutual Information (NMI), F1-score, and ARI as the noise intensity increases. This observation suggests that the model has limited tolerance to label corruption and lacks a robust correction mechanism for noisy annotations. These findings underscore the critical importance of high-quality labels in constructing accurate classification boundaries. Furthermore, under combined noise settings, the performance deterioration closely mirrors that observed under label noise alone, indicating that label corruption serves as the dominant factor driving model performance degradation in complex noise environments.

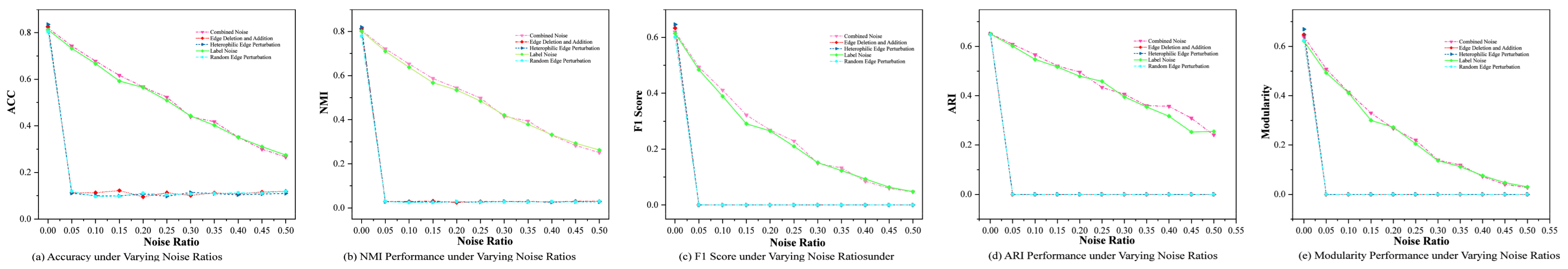

To further verify the role of the adaptive sparse sampling module, a comparative experiment was conducted in which this module was removed while all other configurations remained unchanged. The performance in terms of Accuracy, NMI, F1-score, ARI, and Modularity under varying noise intensities is shown in Figure 8.

A comparison between Figure 7 and Figure 8 reveals that, after removing the adaptive sparse sampling module, the model’s performance degrades significantly under structural perturbations. These results provide strong evidence that the adaptive sparse sampling mechanism plays a vital role in alleviating structural information corruption and improving the model’s robustness.

5. Conclusions

To bridge the gap between structural topology and information flow in static graphs, we propose DAMA, a model that captures multi-scale, dynamic propagation patterns to enhance structural modeling and suppress noisy or irrelevant neighbors.

Extensive experiments on both real-world and synthetic graphs demonstrate that DAMA consistently outperforms representative baseline models across multiple evaluation metrics, including Accuracy, NMI, F1-score, ARI, and Modularity. Moreover, the model’s explicit modeling of propagation strength and adaptive sampling contributes to its stronger robustness and better interpretability. Ablation and sensitivity analyses further confirm the effectiveness of each component and the reliability of the gating mechanism. In future work, we plan to extend DAMA to dynamic or attributed graphs and explore its application in unsupervised and online community detection scenarios.