The Impact of Blame Attribution on Moral Contagion in Controversial Events

Abstract

1. Introduction

2. Literature Review

2.1. Contextual Boundaries of Moral-Emotional Diffusion

2.2. Arousal-Driven Mechanisms of Emotional Sharing

2.3. Attribution Frames and Issue Types

- Attributable to individual actions: Events framed mainly as the choices, intentions, or misconduct of specific people (e.g., a police officer, a teacher, a local official). The problem is treated as a discrete event caused by identifiable actors, and solutions focus on disciplining, rewarding, or replacing those individuals.

- Attributable to social structures: Events framed as the result of rules, incentives, institutions, or broad social conditions (e.g., laws, hiring systems, cultural norms). The problem is treated as systemic and persistent across cases, and solutions emphasize policy or organizational reform.

- Attributable to a mix of individual actions and social structures: Events where narratives link specific actors’ behaviors to the larger systems that enable or constrain them. Both personal agency and structural conditions are presented as necessary parts of the explanation, and solutions combine accountability for individuals with reforms to rules or contexts.

3. Materials and Methods

3.1. Data Collection and Issue Classification

3.2. Operationalization of Variables

- Moral-Emotional Words: Words present in both lexicons (n = 1957);

- Distinctly Moral Words: Words unique to the moral lexicon (n = 3747);

- Distinctly Emotional Words: Words unique to the affective lexicon (n = 25,358).
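The three categories above amount to set operations on the two lexicons. A minimal sketch, assuming each lexicon is available as a collection of words; the function name `split_lexicons` and the toy entries are hypothetical placeholders, not entries from the actual lexicons:

```python
def split_lexicons(moral_lexicon, affective_lexicon):
    """Partition two word lists into the three categories described above."""
    moral = set(moral_lexicon)
    affective = set(affective_lexicon)
    return {
        "moral_emotional": moral & affective,       # present in both lexicons
        "distinctly_moral": moral - affective,      # unique to the moral lexicon
        "distinctly_emotional": affective - moral,  # unique to the affective lexicon
    }


# Toy placeholder entries (hypothetical, for illustration only)
toy_moral = {"w1", "w2", "w3"}
toy_affective = {"w3", "w4"}
categories = split_lexicons(toy_moral, toy_affective)
```

Under these definitions, the reported counts imply a moral lexicon of 1957 + 3747 = 5704 entries and an affective lexicon of 1957 + 25,358 = 27,315 entries.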

- User Verification (Moderator): Effects-coded as ordinary user (−1) or verified user (+1); this variable is labeled Authentication Type in the appendix tables;

- Follower Count (Control): The log-transformed number of followers, included to control for user influence; the log transform addresses the right-skewed distribution of raw follower counts;

- Media Type (Control): Effects-coded as text-only (−1) or multimedia (e.g., images, video) (+1);

- Post Length (Control): The character count of the post is included as an additional control in our robustness checks.
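The coding scheme in this list can be sketched as follows. This is an illustration under stated assumptions, not the authors' preprocessing code: the dictionary keys are hypothetical, and `log1p` is an assumed choice so that accounts with zero followers stay defined (the text only says "log-transformed").

```python
import math


def effects_code(is_focal_level):
    """Effects coding: +1 for the focal level, -1 for the other level."""
    return 1 if is_focal_level else -1


def log_followers(follower_count):
    """Log-transform a follower count (assumption: log(1 + x))."""
    return math.log1p(follower_count)


def encode_post(post):
    """Build the predictor row for one post (a dict with hypothetical keys)."""
    return {
        "verification": effects_code(post["is_verified"]),   # ordinary -1, verified +1
        "media_type": effects_code(post["has_multimedia"]),  # text-only -1, multimedia +1
        "log_followers": log_followers(post["followers"]),
        "post_length": len(post["text"]),                    # character count
    }


example = encode_post(
    {"is_verified": False, "has_multimedia": True, "followers": 0, "text": "abc"}
)
```

With effects coding, the main effect of a language predictor is its effect averaged over the two user types rather than its effect for a reference group.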

- Core Lexicons: The DUTIR lexicon [39] provided word polarity (positive/negative) and discrete emotion categories (e.g., joy, sadness).

- Scoring Logic: The formula accounts for a word’s polarity (+1 or −1), its strength (on a 5-point scale), the multiplicative effect of negators, and the weighting of degree adverbs.
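The scoring logic can be illustrated with a small sketch. Several details here are assumptions rather than the published formula: a negator flips the sign of the next sentiment word, degree adverbs multiply its weight, modifiers reset once consumed, and the English tokens and weights are toy placeholders.

```python
def score_tokens(tokens, lexicon, negators, degree_weights):
    """Sum sentiment over tokens; modifiers apply to the next sentiment word."""
    total = 0.0
    negation = 1   # multiplicative effect of negators seen since the last sentiment word
    degree = 1.0   # combined weight of degree adverbs seen since the last sentiment word
    for token in tokens:
        if token in negators:
            negation *= -1
        elif token in degree_weights:
            degree *= degree_weights[token]
        elif token in lexicon:
            polarity, strength = lexicon[token]
            total += polarity * strength * negation * degree
            negation, degree = 1, 1.0  # reset modifiers after they are consumed
    return total


# Toy placeholder resources (hypothetical): word -> (polarity, strength)
toy_lexicon = {"good": (1, 3), "bad": (-1, 5)}
toy_negators = {"not"}
toy_degrees = {"very": 2.0}

score = score_tokens(["not", "very", "good", "bad"], toy_lexicon, toy_negators, toy_degrees)
```

In this toy input, "not very good" contributes 1 × 3 × (−1) × 2 = −6 and "bad" contributes −5, for a total of −11.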

3.3. Analytic Strategy

- i indexes the i-th post;

- $\mu_i$ is the expected repost count for post i;

- $\beta_0$ is the intercept;

- $X_{j,i}$ is the value of the j-th predictor (main effect) for post i;

- $\beta_j$ is the coefficient for the j-th predictor;

- $Z_{k,i}$ is the value of the k-th interaction term for post i;

- $\gamma_k$ is the coefficient for the k-th interaction term.
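Taken together, these definitions describe a count regression with a log link. One way to write it is sketched below. The symbols $\mu_i$, $\beta_0$, $\beta_j$, $Z_{k,i}$, and $\gamma_k$ are assumed notation chosen for this sketch, and the definitions alone do not fix the distributional family (e.g., Poisson or negative binomial):

```latex
\log(\mu_i) = \beta_0 + \sum_{j} \beta_j X_{j,i} + \sum_{k} \gamma_k Z_{k,i},
\qquad
\mathrm{IRR}_j = \exp(\beta_j)
```

Under this form, exponentiating a coefficient gives the multiplicative change in expected reposts per one-unit increase in the corresponding predictor, which is the quantity reported in the IRR columns of the appendix tables.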

4. Results

4.1. Main Effects of Language on Reposts and Cross-Issue Differences (Model 1)

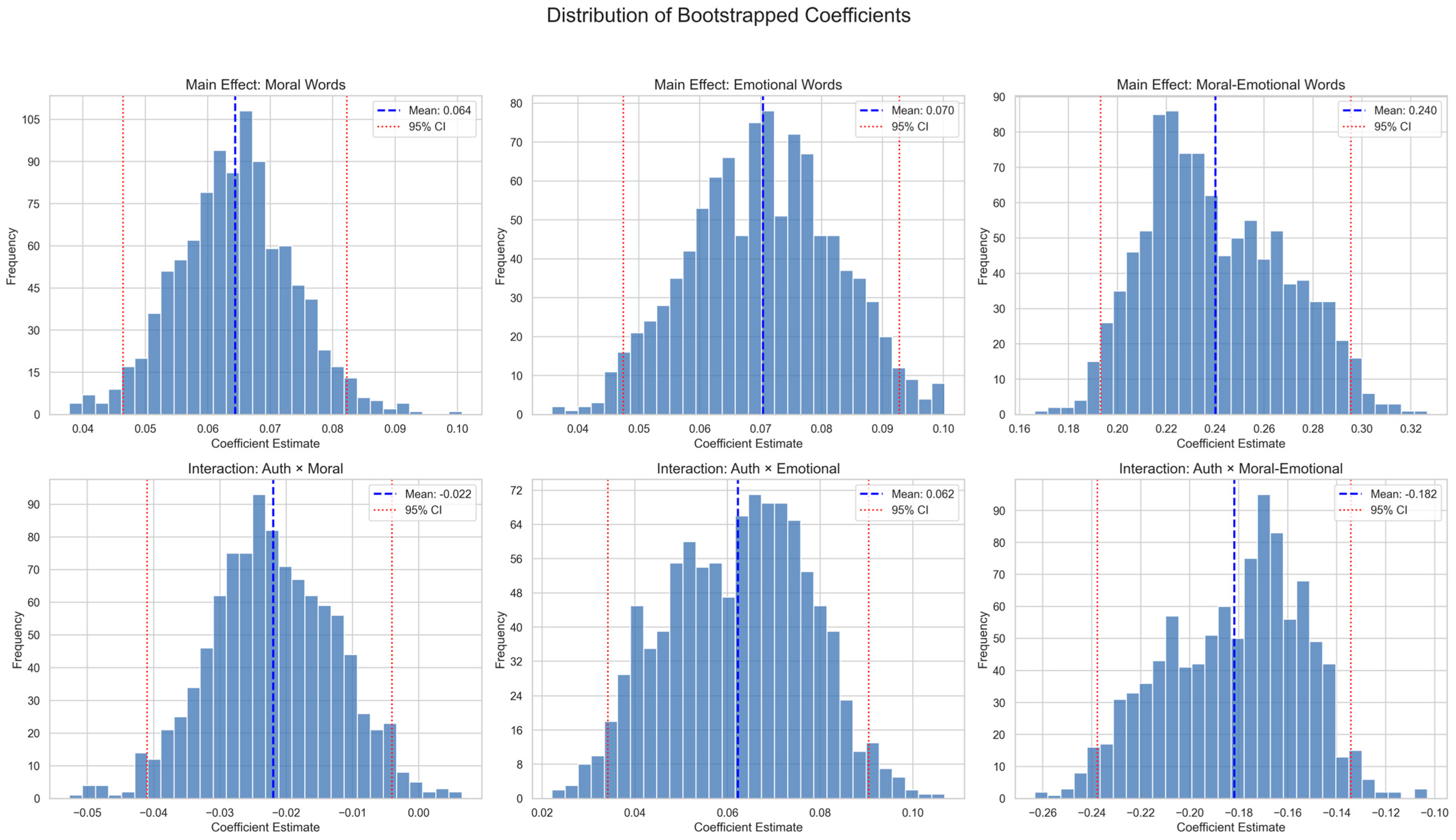

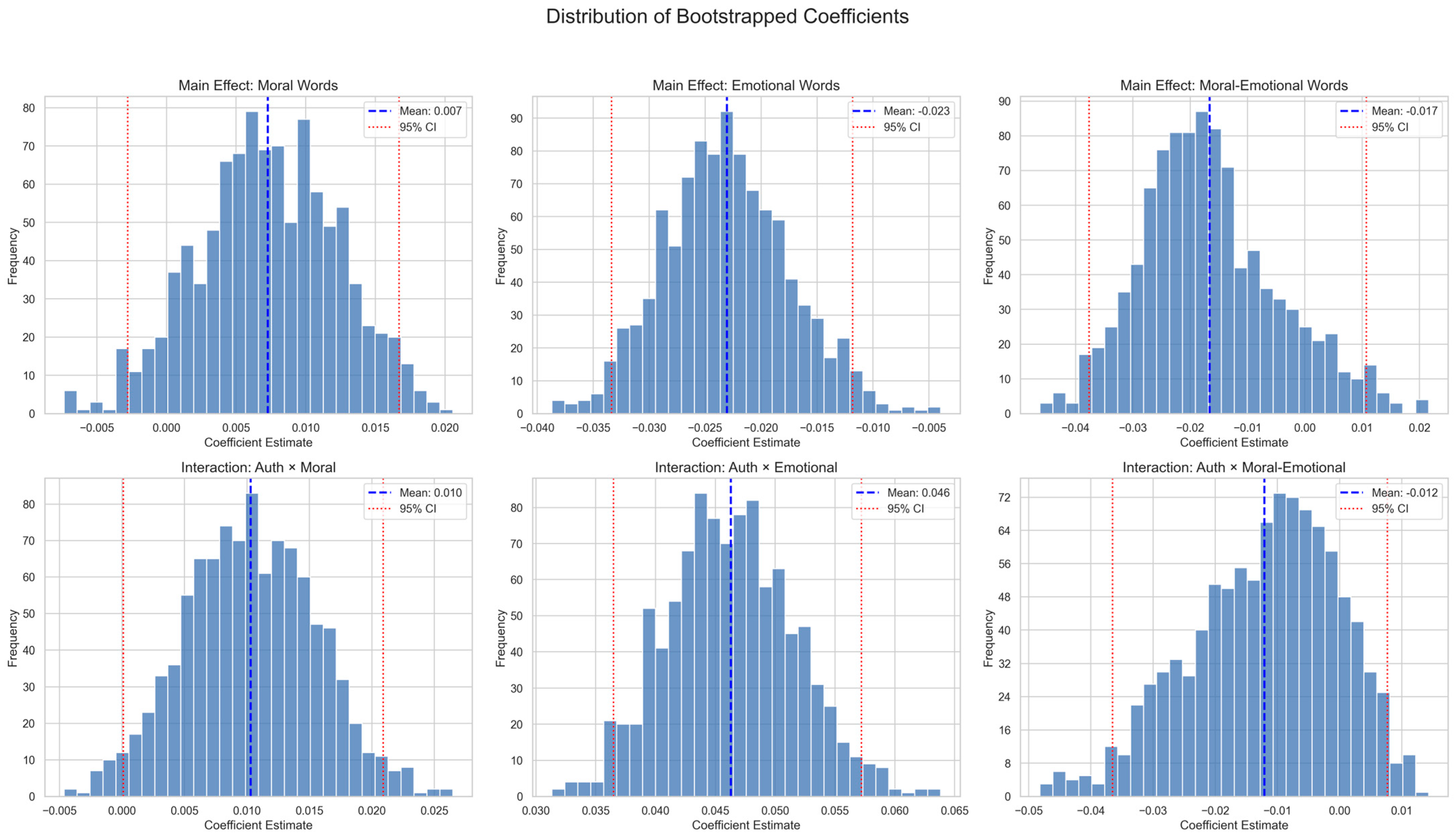

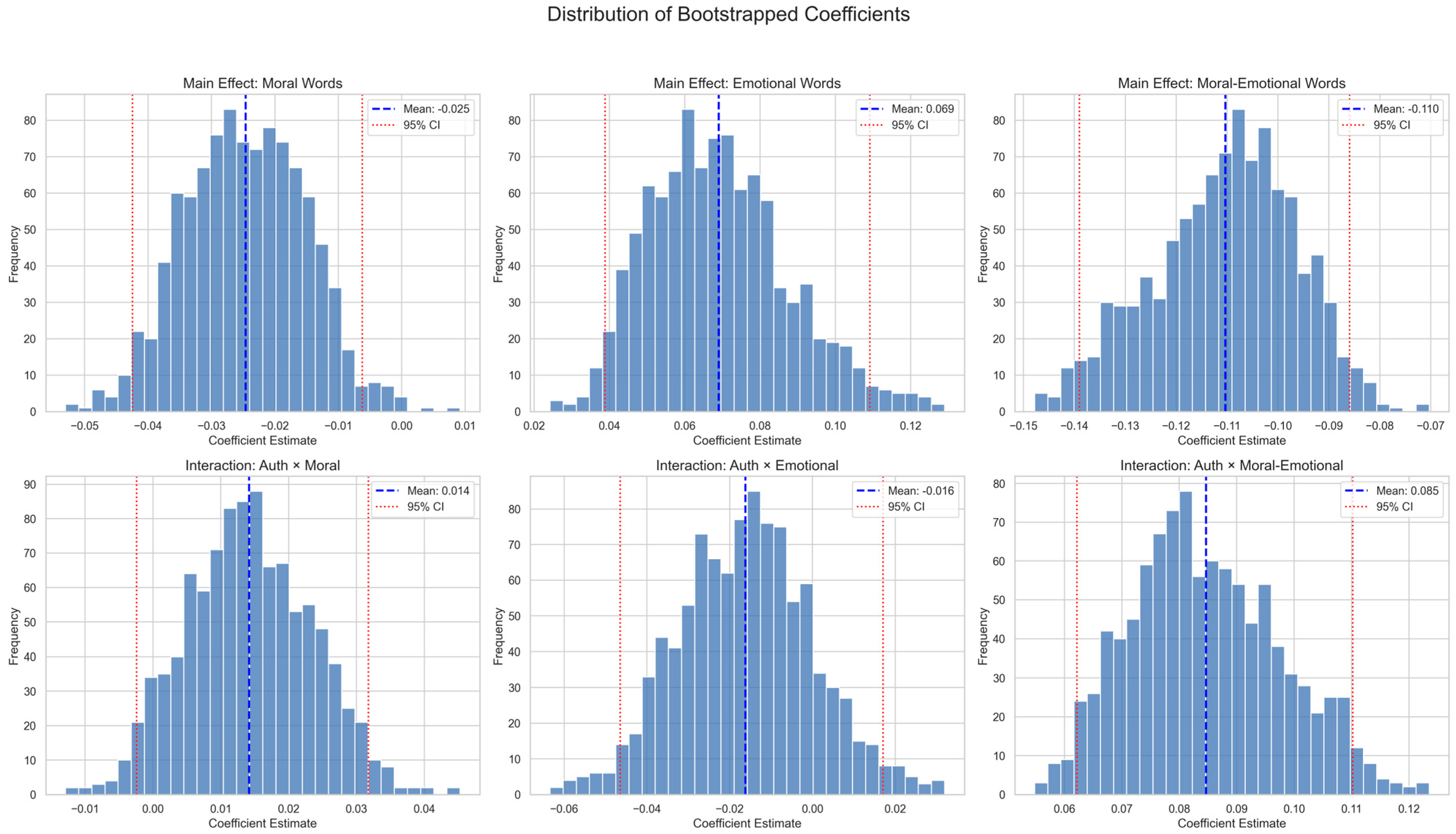

4.2. The Moderating Role of User Identity

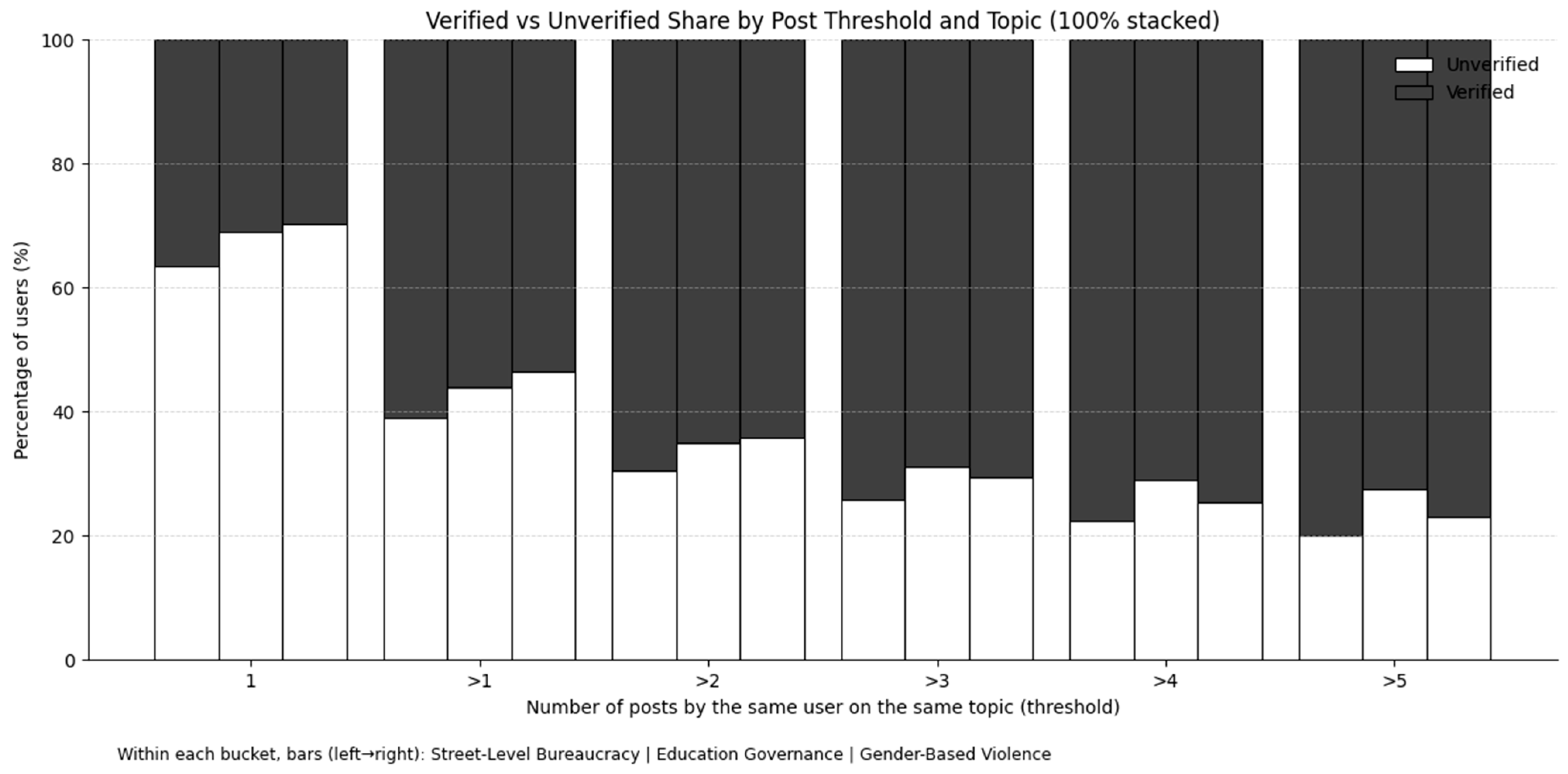

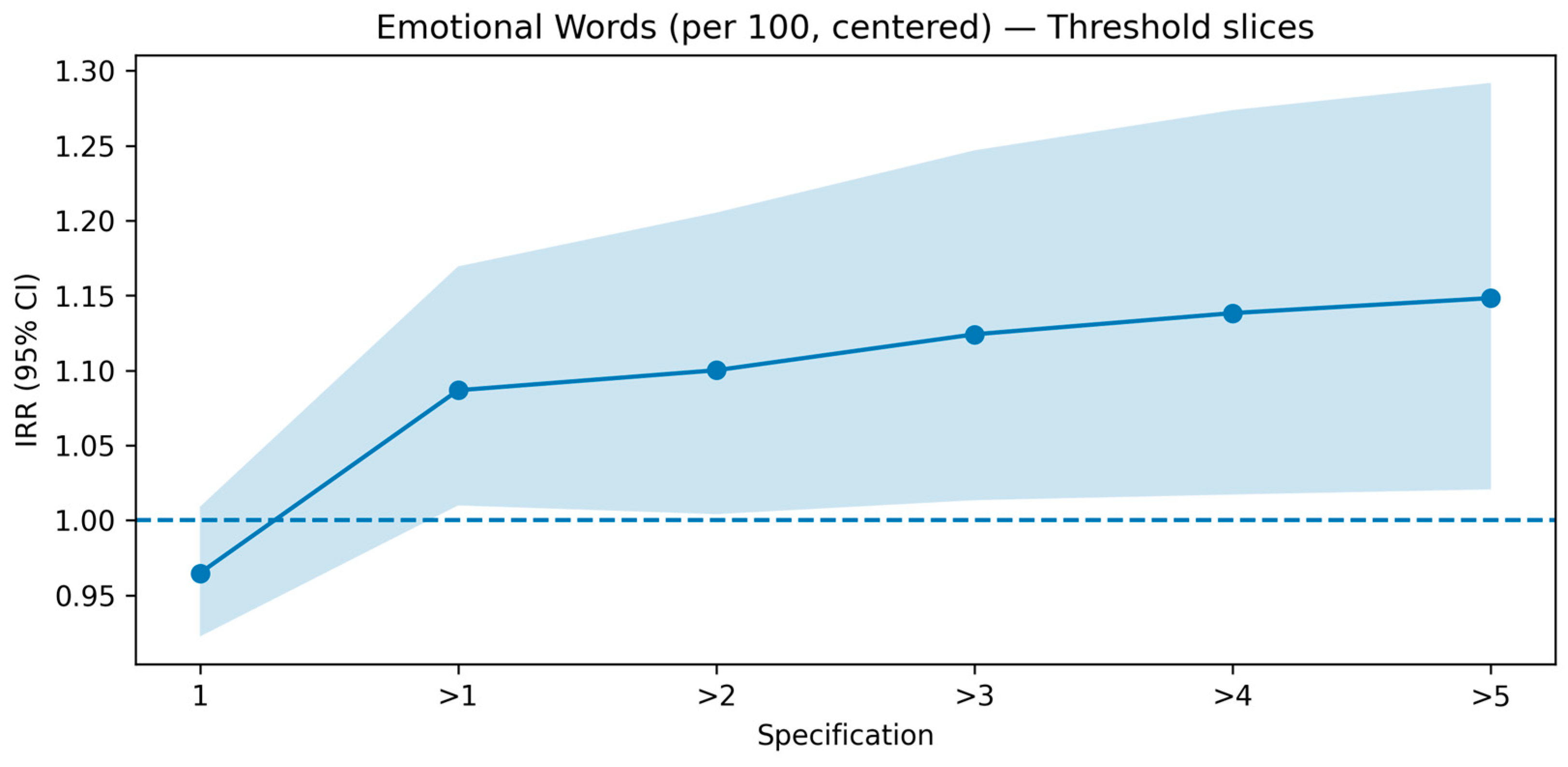

4.3. Robustness Checks

- Model Simplification: The results held when retaining only moral-emotional words, their interactions, and controls;

- Control Variables: The results were consistent when post length was added as a control and when media type was removed, with the exception of EG (see Appendix A.2 and Appendix A.3);

- Interaction Terms: The results were unchanged when retaining only interactions involving moral-emotional words.

4.4. Exploratory Analysis: Effects of Emotion Valence and Categories

5. Discussion

5.1. Summary of Findings

5.2. Theoretical and Methodological Implications

5.3. Practical Implications

5.4. Study Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| C-MFD | Chinese Moral Foundation Dictionary |

| DUTIR | Information Retrieval Laboratory of Dalian University of Technology |

| EG | Education Governance |

| GBV | Gender-Based Violence |

| ICS | Informative Cluster Size |

| IRR | Incidence Rate Ratio |

| LLMs | Large Language Models |

| MAD | Motivation–Attention–Design |

| ME | Moral-Emotional |

| NGOs | Non-Governmental Organizations |

| SLB | Street-Level Bureaucracy |

| UNHCR | United Nations High Commissioner for Refugees |

| VAD | Valence, Arousal, and Dominance |

| WHO | World Health Organization |

Appendix A

Appendix A.1. Retaining Only Moral-Emotional Words, Their Interaction, and Controls

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.217390 | 0.041110 | 0.000000 | 0.804616 | 0.742329 | 0.872131 |

| Moral-Emotional Words | 0.375602 | 0.026065 | 0.000000 | 1.455867 | 1.383360 | 1.532175 |

| Log-Followers | 0.579699 | 0.011191 | 0.000000 | 1.785500 | 1.746764 | 1.825095 |

| Authentication Type | −0.263814 | 0.042460 | 0.000000 | 0.768116 | 0.706781 | 0.834774 |

| Media Type | 0.775174 | 0.040371 | 0.000000 | 2.170970 | 2.005811 | 2.349728 |

| Authentication Type × Moral-Emotional Words | −0.175586 | 0.025691 | 0.000000 | 0.838966 | 0.797766 | 0.882293 |
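The IRR columns can be recovered from the coefficient and standard error columns. A minimal sketch, assuming Wald-type 95% intervals computed on the log scale; this assumption is consistent with the reported numbers but is not stated in the table itself, and `irr_with_ci` is a hypothetical helper name:

```python
import math


def irr_with_ci(coef, std_err, z=1.959964):
    """Return (IRR, lower, upper) from a log-scale coefficient and its standard error."""
    irr = math.exp(coef)
    lower = math.exp(coef - z * std_err)
    upper = math.exp(coef + z * std_err)
    return irr, lower, upper


# Moral-Emotional Words row of the table above
irr, lower, upper = irr_with_ci(0.375602, 0.026065)
```

For this row the sketch gives values that agree with the tabulated IRR and interval bounds (1.455867, 1.383360, 1.532175) up to rounding.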

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.534210 | 0.016998 | 0.000000 | 0.586132 | 0.566926 | 0.605989 |

| Moral-Emotional Words | 0.040000 | 0.011373 | 0.000436 | 1.040810 | 1.017867 | 1.064271 |

| Log-Followers | 0.389409 | 0.005399 | 0.000000 | 1.476108 | 1.460571 | 1.491812 |

| Authentication Type | −0.257230 | 0.023808 | 0.000000 | 0.773190 | 0.737940 | 0.810125 |

| Media Type | 1.105046 | 0.016779 | 0.000000 | 3.019364 | 2.921683 | 3.120310 |

| Authentication Type × Moral-Emotional Words | −0.057767 | 0.011364 | 0.000000 | 0.943869 | 0.923079 | 0.965128 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.796287 | 0.020897 | 0.000000 | 0.451000 | 0.432901 | 0.469856 |

| Moral-Emotional Words | −0.178625 | 0.009666 | 0.000000 | 0.836420 | 0.820723 | 0.852417 |

| Log-Followers | 0.465685 | 0.007418 | 0.000000 | 1.593105 | 1.570109 | 1.616438 |

| Authentication Type | −0.352854 | 0.029390 | 0.000000 | 0.702679 | 0.663346 | 0.744345 |

| Media Type | 1.454114 | 0.019577 | 0.000000 | 4.280688 | 4.119552 | 4.448127 |

| Authentication Type × Moral-Emotional Words | 0.068475 | 0.009606 | 0.000000 | 1.070874 | 1.050900 | 1.091227 |
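Because the moderator is effects-coded, the interaction coefficient shifts the moral-emotional effect in opposite directions for the two user types. A sketch of the implied conditional IRRs, using the coefficients from the last table above and assuming that Authentication Type uses −1 for ordinary users and +1 for verified users; `conditional_irr` is a hypothetical helper name:

```python
import math


def conditional_irr(main_coef, interaction_coef, verification_code):
    """IRR of moral-emotional words at a given effects-coded verification level."""
    return math.exp(main_coef + interaction_coef * verification_code)


# Coefficients from the last table above
ME_COEF = -0.178625          # Moral-Emotional Words
INTERACTION_COEF = 0.068475  # Authentication Type x Moral-Emotional Words

irr_ordinary = conditional_irr(ME_COEF, INTERACTION_COEF, -1)  # ordinary user
irr_verified = conditional_irr(ME_COEF, INTERACTION_COEF, +1)  # verified user
```

Under these assumptions the conditional IRR is roughly 0.78 for ordinary users and 0.90 for verified users. In this table, moral-emotional words are therefore associated with fewer reposts for both groups, with a weaker reduction for verified users.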

Appendix A.2. Adding “Weibo Text Length” as a Control Variable

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.266911 | 0.041788 | 0.000000 | 0.765741 | 0.705524 | 0.831098 |

| Moral Words | 0.065051 | 0.012663 | 0.000000 | 1.067213 | 1.041052 | 1.094031 |

| Emotional Words | 0.059407 | 0.013989 | 0.000022 | 1.061207 | 1.032505 | 1.090707 |

| Moral-Emotional Words | 0.290223 | 0.030306 | 0.000000 | 1.336726 | 1.259638 | 1.418532 |

| Log-Followers | 0.573758 | 0.011179 | 0.000000 | 1.774925 | 1.736459 | 1.814243 |

| Weibo Text Length | 0.000011 | 0.000060 | 0.858478 | 1.000011 | 0.999893 | 1.000129 |

| Authentication Type | −0.248562 | 0.042358 | 0.000000 | 0.779921 | 0.717787 | 0.847434 |

| Media Type | 0.818020 | 0.041630 | 0.000000 | 2.266008 | 2.088458 | 2.458653 |

| Authentication Type × Moral Words | −0.030702 | 0.012559 | 0.014498 | 0.969765 | 0.946185 | 0.993931 |

| Authentication Type × Emotional Words | 0.048822 | 0.013802 | 0.000404 | 1.050034 | 1.022009 | 1.078826 |

| Authentication Type × Moral-Emotional Words | −0.149645 | 0.030042 | 0.000001 | 0.861014 | 0.811780 | 0.913233 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.556557 | 0.017126 | 0.000000 | 0.573179 | 0.554259 | 0.592745 |

| Moral Words | 0.002020 | 0.006677 | 0.762226 | 1.002022 | 0.988995 | 1.015221 |

| Emotional Words | 0.006198 | 0.006865 | 0.366581 | 1.006218 | 0.992770 | 1.019848 |

| Moral-Emotional Words | 0.035733 | 0.011490 | 0.001872 | 1.036379 | 1.013300 | 1.059983 |

| Log-Followers | 0.398845 | 0.005473 | 0.000000 | 1.490103 | 1.474205 | 1.506173 |

| Weibo Text Length | 0.000184 | 0.000016 | 0.000000 | 1.000184 | 1.000152 | 1.000216 |

| Authentication Type | −0.258659 | 0.024131 | 0.000000 | 0.772086 | 0.736420 | 0.809480 |

| Media Type | 1.080857 | 0.017218 | 0.000000 | 2.947204 | 2.849406 | 3.048360 |

| Authentication Type × Moral Words | −0.015137 | 0.006654 | 0.022906 | 0.984977 | 0.972215 | 0.997906 |

| Authentication Type × Emotional Words | 0.019762 | 0.006738 | 0.003358 | 1.019959 | 1.006577 | 1.033518 |

| Authentication Type × Moral-Emotional Words | −0.045073 | 0.011486 | 0.000087 | 0.955928 | 0.934648 | 0.977691 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.939071 | 0.021373 | 0.000000 | 0.390991 | 0.374951 | 0.407718 |

| Moral Words | −0.053188 | 0.007042 | 0.000000 | 0.948202 | 0.935204 | 0.961380 |

| Emotional Words | 0.076158 | 0.008092 | 0.000000 | 1.079133 | 1.062153 | 1.096384 |

| Moral-Emotional Words | −0.153362 | 0.009586 | 0.000000 | 0.857819 | 0.841853 | 0.874089 |

| Log-Followers | 0.498102 | 0.007378 | 0.000000 | 1.645596 | 1.621970 | 1.669566 |

| Weibo Text Length | 0.000541 | 0.000035 | 0.000000 | 1.000542 | 1.000473 | 1.000610 |

| Authentication Type | −0.401442 | 0.028933 | 0.000000 | 0.669354 | 0.632452 | 0.708409 |

| Media Type | 1.287149 | 0.020405 | 0.000000 | 3.622444 | 3.480429 | 3.770253 |

| Authentication Type × Moral Words | 0.004250 | 0.006996 | 0.543498 | 1.004259 | 0.990583 | 1.018124 |

| Authentication Type × Emotional Words | −0.010354 | 0.007928 | 0.191549 | 0.989699 | 0.974439 | 1.005198 |

| Authentication Type × Moral-Emotional Words | 0.074454 | 0.009589 | 0.000000 | 1.077296 | 1.057239 | 1.097734 |

Appendix A.3. Removing the “Media Type” Control

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | 0.343293 | 0.030056 | 0.000000 | 1.409582 | 1.328944 | 1.495113 |

| Moral Words | 0.048405 | 0.012447 | 0.000101 | 1.049596 | 1.024301 | 1.075515 |

| Emotional Words | 0.021084 | 0.013800 | 0.126549 | 1.021308 | 0.994055 | 1.049309 |

| Moral-Emotional Words | 0.285592 | 0.029590 | 0.000000 | 1.330549 | 1.255580 | 1.409996 |

| Log-Followers | 0.603235 | 0.011199 | 0.000000 | 1.828022 | 1.788336 | 1.868590 |

| Authentication Type | −0.230176 | 0.042500 | 0.000000 | 0.794393 | 0.730903 | 0.863399 |

| Authentication Type × Moral Words | −0.022805 | 0.012443 | 0.066844 | 0.977453 | 0.953904 | 1.001585 |

| Authentication Type × Emotional Words | 0.072603 | 0.013799 | 0.000000 | 1.075303 | 1.046610 | 1.104783 |

| Authentication Type × Moral-Emotional Words | −0.150410 | 0.029404 | 0.000000 | 0.860355 | 0.812174 | 0.911394 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | 0.136150 | 0.016189 | 0.000000 | 1.145854 | 1.110066 | 1.182795 |

| Moral Words | 0.032359 | 0.007630 | 0.000022 | 1.032888 | 1.017556 | 1.048451 |

| Emotional Words | −0.012159 | 0.007287 | 0.095204 | 0.987915 | 0.973905 | 1.002126 |

| Moral-Emotional Words | 0.008760 | 0.013338 | 0.511351 | 1.008798 | 0.982767 | 1.035518 |

| Log-Followers | 0.386775 | 0.005835 | 0.000000 | 1.472225 | 1.455484 | 1.489158 |

| Authentication Type | −0.162665 | 0.026295 | 0.000000 | 0.849876 | 0.807185 | 0.894825 |

| Authentication Type × Moral Words | −0.025404 | 0.007637 | 0.000879 | 0.974916 | 0.960432 | 0.989618 |

| Authentication Type × Emotional Words | 0.004536 | 0.007291 | 0.533890 | 1.004546 | 0.990292 | 1.019005 |

| Authentication Type × Moral-Emotional Words | −0.049634 | 0.013338 | 0.000198 | 0.951578 | 0.927025 | 0.976781 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.285021 | 0.021238 | 0.000000 | 0.751998 | 0.721338 | 0.783961 |

| Moral Words | −0.103117 | 0.007617 | 0.000000 | 0.902022 | 0.888656 | 0.915588 |

| Emotional Words | 0.178041 | 0.010284 | 0.000000 | 1.194874 | 1.171031 | 1.219203 |

| Moral-Emotional Words | −0.273849 | 0.009372 | 0.000000 | 0.760447 | 0.746605 | 0.774545 |

| Log-Followers | 0.525339 | 0.007392 | 0.000000 | 1.691032 | 1.666709 | 1.715710 |

| Authentication Type | −0.447767 | 0.028629 | 0.000000 | 0.639054 | 0.604183 | 0.675937 |

| Authentication Type × Moral Words | −0.001112 | 0.007614 | 0.883846 | 0.998888 | 0.984093 | 1.013906 |

| Authentication Type × Emotional Words | −0.019505 | 0.010102 | 0.053502 | 0.980684 | 0.961458 | 1.000294 |

| Authentication Type × Moral-Emotional Words | 0.153685 | 0.009328 | 0.000000 | 1.166123 | 1.144997 | 1.187639 |

Appendix A.4. Retaining Only the Interaction Involving Moral-Emotional Words

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.264842 | 0.041446 | 0.000000 | 0.767327 | 0.707460 | 0.832261 |

| Moral Words | 0.057704 | 0.011536 | 0.000001 | 1.059401 | 1.035717 | 1.083627 |

| Emotional Words | 0.067855 | 0.013628 | 0.000001 | 1.070210 | 1.042003 | 1.099179 |

| Moral-Emotional Words | 0.286592 | 0.028211 | 0.000000 | 1.331881 | 1.260238 | 1.407597 |

| Log-Followers | 0.572715 | 0.011216 | 0.000000 | 1.773075 | 1.734522 | 1.812485 |

| Authentication Type | −0.254347 | 0.042549 | 0.000000 | 0.775422 | 0.713380 | 0.842861 |

| Media Type | 0.828858 | 0.041189 | 0.000000 | 2.290701 | 2.113042 | 2.483297 |

| Authentication Type × Moral-Emotional Words | −0.143663 | 0.025637 | 0.000000 | 0.866180 | 0.823732 | 0.910815 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.537638 | 0.017030 | 0.000000 | 0.584126 | 0.564952 | 0.603952 |

| Moral Words | 0.007749 | 0.006880 | 0.260018 | 1.007779 | 0.994281 | 1.021461 |

| Emotional Words | 0.021775 | 0.006948 | 0.001725 | 1.022014 | 1.008190 | 1.036027 |

| Moral-Emotional Words | 0.033407 | 0.011707 | 0.004324 | 1.033971 | 1.010516 | 1.057971 |

| Log-Followers | 0.389684 | 0.005397 | 0.000000 | 1.476515 | 1.460980 | 1.492215 |

| Authentication Type | −0.257130 | 0.023826 | 0.000000 | 0.773268 | 0.737988 | 0.810234 |

| Media Type | 1.110094 | 0.016959 | 0.000000 | 3.034642 | 2.935434 | 3.137203 |

| Authentication Type × Moral-Emotional Words | −0.054054 | 0.011350 | 0.000002 | 0.947381 | 0.926539 | 0.968691 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.848220 | 0.021064 | 0.000000 | 0.428176 | 0.410860 | 0.446223 |

| Moral Words | −0.049636 | 0.007076 | 0.000000 | 0.951575 | 0.938470 | 0.964864 |

| Emotional Words | 0.108148 | 0.008337 | 0.000000 | 1.114212 | 1.096154 | 1.132568 |

| Moral-Emotional Words | −0.170564 | 0.009693 | 0.000000 | 0.843189 | 0.827321 | 0.859361 |

| Log-Followers | 0.476161 | 0.007393 | 0.000000 | 1.609882 | 1.586722 | 1.633381 |

| Authentication Type | −0.371823 | 0.029063 | 0.000000 | 0.689476 | 0.651300 | 0.729891 |

| Media Type | 1.420245 | 0.019737 | 0.000000 | 4.138136 | 3.981114 | 4.301350 |

| Authentication Type × Moral-Emotional Words | 0.072037 | 0.009671 | 0.000000 | 1.074695 | 1.054517 | 1.095259 |

Appendix A.5. Cluster-Robust Bootstrapping

Appendix B

Appendix B.1. Effect of Emotional Polarity on Repost Rates

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.207792 | 0.041573 | 0.000001 | 0.812376 | 0.748807 | 0.881341 |

| Moral Words | 0.075579 | 0.011330 | 0.000000 | 1.078509 | 1.054824 | 1.102726 |

| Positive Emotional Score | 0.004156 | 0.005098 | 0.414945 | 1.004165 | 0.994181 | 1.014250 |

| Negative Emotional Score | 0.027638 | 0.005008 | 0.000000 | 1.028024 | 1.017982 | 1.038164 |

| Positive Moral-Emotional Score | 0.080523 | 0.010645 | 0.000000 | 1.083854 | 1.061475 | 1.106705 |

| Negative Moral-Emotional Score | 0.053824 | 0.010204 | 0.000000 | 1.055298 | 1.034403 | 1.076616 |

| Log-Followers | 0.568665 | 0.011244 | 0.000000 | 1.765908 | 1.727416 | 1.805259 |

| Authentication Type | −0.273876 | 0.044214 | 0.000000 | 0.760426 | 0.697304 | 0.829263 |

| Media Type | 0.815004 | 0.041677 | 0.000000 | 2.259186 | 2.081979 | 2.451475 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.549141 | 0.016991 | 0.000000 | 0.577446 | 0.558532 | 0.597000 |

| Moral Words | 0.008885 | 0.006688 | 0.184020 | 1.008924 | 0.995786 | 1.022236 |

| Positive Emotional Score | 0.003300 | 0.002657 | 0.214267 | 1.003306 | 0.998094 | 1.008545 |

| Negative Emotional Score | 0.029804 | 0.003122 | 0.000000 | 1.030252 | 1.023968 | 1.036575 |

| Positive Moral-Emotional Score | 0.002167 | 0.005511 | 0.694139 | 1.002170 | 0.991403 | 1.013053 |

| Negative Moral-Emotional Score | −0.011641 | 0.002945 | 0.000077 | 0.988426 | 0.982737 | 0.994149 |

| Log-Followers | 0.385857 | 0.005415 | 0.000000 | 1.470874 | 1.455345 | 1.486569 |

| Authentication Type | −0.235552 | 0.023961 | 0.000000 | 0.790134 | 0.753885 | 0.828126 |

| Media Type | 1.109948 | 0.016852 | 0.000000 | 3.034202 | 2.935619 | 3.136095 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | −0.730555 | 0.020130 | 0.000000 | 0.481641 | 0.463009 | 0.501024 |

| Moral Words | −0.041851 | 0.007185 | 0.000000 | 0.959013 | 0.945603 | 0.972614 |

| Positive Emotional Score | 0.040241 | 0.004393 | 0.000000 | 1.041062 | 1.032137 | 1.050065 |

| Negative Emotional Score | 0.003724 | 0.002653 | 0.160375 | 1.003731 | 0.998526 | 1.008964 |

| Positive Moral-Emotional Score | −0.007633 | 0.006387 | 0.232051 | 0.992396 | 0.980051 | 1.004897 |

| Negative Moral-Emotional Score | −0.006962 | 0.003419 | 0.041730 | 0.993062 | 0.986429 | 0.999739 |

| Log-Followers | 0.458547 | 0.007319 | 0.000000 | 1.581775 | 1.559246 | 1.604628 |

| Authentication Type | −0.354262 | 0.029656 | 0.000000 | 0.701691 | 0.662068 | 0.743686 |

| Media Type | 1.484741 | 0.019711 | 0.000000 | 4.413823 | 4.246556 | 4.587677 |

Appendix B.2. Effects of Discrete Moral-Emotional Categories on Reposts

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | 2.198364 | 0.031632 | 0.000000 | 9.010261 | 8.468609 | 9.586558 |

| ME_Joy Score | 0.152332 | 0.099538 | 0.125920 | 1.164546 | 0.958143 | 1.415413 |

| ME_Good Score | 0.021793 | 0.010856 | 0.044692 | 1.022032 | 1.000517 | 1.044011 |

| ME_Sadness Score | 0.052037 | 0.046180 | 0.259808 | 1.053415 | 0.962257 | 1.153209 |

| ME_Fear Score | 0.184603 | 0.149119 | 0.215731 | 1.202741 | 0.897929 | 1.611024 |

| ME_Disgust Score | −0.009094 | 0.013691 | 0.506525 | 0.990947 | 0.964710 | 1.017897 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | 1.320753 | 0.018629 | 0.000000 | 3.746242 | 3.611925 | 3.885555 |

| ME_Joy Score | 0.017168 | 0.016244 | 0.290554 | 1.017317 | 0.985438 | 1.050226 |

| ME_Good Score | −0.002324 | 0.008210 | 0.777152 | 0.997679 | 0.981754 | 1.013863 |

| ME_Sadness Score | 0.028687 | 0.044693 | 0.520958 | 1.029103 | 0.942792 | 1.123315 |

| ME_Fear Score | 0.118506 | 0.092438 | 0.199841 | 1.125814 | 0.939254 | 1.349428 |

| ME_Disgust Score | −0.011865 | 0.003992 | 0.002956 | 0.988205 | 0.980503 | 0.995967 |

| Variable | Coefficient | Std. Err. | p > \|z\| | Incidence Rate Ratio (IRR) | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|---|

| Intercept | 1.425181 | 0.024268 | 0.000000 | 4.158611 | 3.965439 | 4.361192 |

| ME_Joy Score | 0.021623 | 0.047039 | 0.645747 | 1.021858 | 0.931861 | 1.120547 |

| ME_Good Score | 0.066351 | 0.012561 | 0.000000 | 1.068602 | 1.042616 | 1.095236 |

| ME_Sadness Score | −0.050929 | 0.030307 | 0.092870 | 0.950346 | 0.895539 | 1.008507 |

| ME_Fear Score | 0.272934 | 0.061817 | 0.000010 | 1.313814 | 1.163899 | 1.483038 |

| ME_Disgust Score | 0.001301 | 0.004676 | 0.780798 | 1.001302 | 0.992168 | 1.010520 |

Appendix C

Appendix C.1. Human–AI Coding Run Log

| Time zone | UTC+8 |

| Provider/Model | Google, Gemini 2.5 Pro (chat interface) |

| Access date | 14 July 2025 |

| Deterministic settings | Default |

| Prompt | Prompt v1.0 (see Appendix C.2) |

| Data | Micro Hotspot Big Data Research Institute, Weibo hot events 2024 (n = 349 events; provider clustered posts to events and assigned unique IDs). |

Appendix C.2. Prompt v1.0

References

1. Brady, W.J.; Crockett, M.J.; Van Bavel, J.J. The MAD Model of Moral Contagion: The Role of Motivation, Attention, and Design in the Spread of Moralized Content Online. Perspect. Psychol. Sci. 2020, 15, 978–1010.

2. Peters, K.; Kashima, Y.; Clark, A. Talking about Others: Emotionality and the Dissemination of Social Information. Eur. J. Soc. Psychol. 2009, 39, 207–222.

3. Burton, J.W.; Cruz, N.; Hahn, U. Reconsidering Evidence of Moral Contagion in Online Social Networks. Nat. Hum. Behav. 2021, 5, 1629–1635.

4. King, G.; Pan, J.; Roberts, M.E. How Censorship in China Allows Government Criticism but Silences Collective Expression. Am. Polit. Sci. Rev. 2013, 107, 326–343.

5. Yang, Z.; Vicari, S. The Pandemic across Platform Societies: Weibo and Twitter at the Outbreak of the COVID-19 Epidemic in China and the West. Howard J. Commun. 2021, 32, 493–506.

6. Brady, W.J.; Wills, J.A.; Jost, J.T.; Tucker, J.A.; Van Bavel, J.J. Emotion Shapes the Diffusion of Moralized Content in Social Networks. Proc. Natl. Acad. Sci. USA 2017, 114, 7313–7318.

7. Hoynes, W. Media and the Construction of Social Problems. In The Cambridge Handbook of Social Problems; Treviño, A.J., Ed.; Cambridge University Press: Cambridge, UK, 2018; pp. 477–496. ISBN 978-1-108-42616-9.

8. Giachanou, A.; Rosso, P.; Crestani, F. The Impact of Emotional Signals on Credibility Assessment. J. Assoc. Inf. Sci. Technol. 2021, 72, 1117–1132.

9. Heider, F. The Psychology of Interpersonal Relations, 1st ed.; John Wiley & Sons: New York, NY, USA, 1958; ISBN 978-0-203-78115-9.

10. Lipsky, M. Street-Level Bureaucracy: Dilemmas of the Individual in Public Service; 30th Anniversary Expanded Edition; Russell Sage Foundation: New York, NY, USA, 2010; ISBN 978-0-87154-544-2.

11. OECD. Education Organisation and Governance. Available online: https://www.oecd.org/en/topics/policy-issues/education-organisation-and-governance.html (accessed on 27 September 2025).

12. UNHCR (The UN Refugee Agency). Gender-Based Violence. Available online: https://www.unhcr.org/us/what-we-do/protect-human-rights/protection/gender-based-violence (accessed on 27 September 2025).

13. World Health Organization. Violence Against Women. Available online: https://www.who.int/news-room/fact-sheets/detail/violence-against-women (accessed on 27 September 2025).

14. Brady, W.J.; Gantman, A.P.; Van Bavel, J.J. Attentional Capture Helps Explain Why Moral and Emotional Content Go Viral. J. Exp. Psychol. Gen. 2020, 149, 746–756.

15. Deng, W.; Yang, Y. Cross-Platform Comparative Study of Public Concern on Social Media during the COVID-19 Pandemic: An Empirical Study Based on Twitter and Weibo. Int. J. Environ. Res. Public Health 2021, 18, 6487.

16. Chen, J.; She, J. An Analysis of Verifications in Microblogging Social Networks—Sina Weibo. In Proceedings of the 32nd IEEE ICDCS Workshops (ICDCSW ’12), Macau, China, 18–21 June 2012; pp. 147–154.

17. Wang, D.; Zhou, Y.; Qian, Y.; Liu, Y. The Echo Chamber Effect of Rumor Rebuttal Behavior of Users in the Early Stage of COVID-19 Epidemic in China. Comput. Hum. Behav. 2022, 128, 107088.

18. Brady, W.J.; Wills, J.A.; Burkart, D.; Jost, J.T.; Van Bavel, J.J. An Ideological Asymmetry in the Diffusion of Moralized Content on Social Media among Political Leaders. J. Exp. Psychol. Gen. 2019, 148, 1802–1813.

19. Liu, H.; Zhang, D.; Zhu, Y.; Ma, H.; Xiao, H. Emotions Spread like Contagious Diseases. Front. Psychol. 2025, 16, 1493512.

20. Stieglitz, S.; Dang-Xuan, L. Emotions and Information Diffusion in Social Media—Sentiment of Microblogs and Sharing Behavior. J. Manag. Inf. Syst. 2013, 29, 217–247.

21. Berger, J.; Milkman, K.L. What Makes Online Content Viral? J. Mark. Res. 2012, 49, 192–205.

22. Pröllochs, N.; Bär, D.; Feuerriegel, S. Emotions Explain Differences in the Diffusion of True vs. False Social Media Rumors. Sci. Rep. 2021, 11, 22721.

23. Ferrara, E.; Yang, Z. Quantifying the Effect of Sentiment on Information Diffusion in Social Media. PeerJ Comput. Sci. 2015, 1, e26.

24. Schöne, J.P.; Parkinson, B.; Goldenberg, A. Negativity Spreads More than Positivity on Twitter After Both Positive and Negative Political Situations. Affect. Sci. 2021, 2, 379–390.

25. Fan, R.; Xu, K.; Zhao, J. Higher Contagion and Weaker Ties Mean Anger Spreads Faster than Joy in Social Media. arXiv 2016, arXiv:1608.03656.

26. Weiner, B.; Osborne, D.; Rudolph, U. An Attributional Analysis of Reactions to Poverty: The Political Ideology of the Giver and the Perceived Morality of the Receiver. Personal. Soc. Psychol. Rev. 2010, 15, 199–213.

27. Ross, L. The Intuitive Psychologist and His Shortcomings: Distortions in the Attribution Process. In Advances in Experimental Social Psychology; Berkowitz, L., Ed.; Academic Press: New York, NY, USA, 1977; Volume 10, pp. 173–220.

28. Mills, C.W. The Sociological Imagination, 40th anniversary ed.; Oxford University Press: New York, NY, USA, 2000; ISBN 978-0-19-513373-8.

29. Ryan, W. Blaming the Victim; Revised, updated edition; Vintage Books: New York, NY, USA, 1976; ISBN 978-0-394-71762-3.

30. Eseonu, T. Street-Level Bureaucracy: Dilemmas of the Individual in Public Services. Adm. Theory Prax. 2023, 45, 259–261.

31. Stockmann, D.; Luo, T. Which Social Media Facilitate Online Public Opinion in China? Probl. Post-Communism 2017, 64, 189–202.

32. Galtung, J. Violence, Peace, and Peace Research. J. Peace Res. 1969, 6, 167–191.

33. Heise, L.L. Violence against Women: An Integrated, Ecological Framework. Violence Women 1998, 4, 262–290.

34. Ziems, C.; Held, W.; Shaikh, O.; Chen, J.; Zhang, Z.; Yang, D. Can Large Language Models Transform Computational Social Science? Comput. Linguist. 2024, 50, 237–291.

35. Bail, C.A. Can Generative AI Improve Social Science? Proc. Natl. Acad. Sci. USA 2024, 121, e2314021121.

36. Chew, R.; Bollenbacher, J.; Wenger, M.; Speer, J.; Kim, A. LLM-Assisted Content Analysis: Using Large Language Models to Support Deductive Coding. arXiv 2023, arXiv:2306.14924.

37. Peng, T.-Q.; Yang, X. Recalibrating the Compass: Integrating Large Language Models into Classical Research Methods. arXiv 2025, arXiv:2505.19402.

38. Cheng, C.Y.; Zhang, W. C-MFD 2.0: Developing a Chinese Moral Foundation Dictionary. Comput. Commun. Res. 2023, 5, 1.

39. Xu, L.; Lin, H.; Pan, Y.; Ren, H.; Chen, J. Constructing the Affective Lexicon Ontology. J. China Soc. Sci. Tech. Inf. 2008, 27, 180–185.

40. Qiao, Y.; Zhong, Z.; Xu, X.; Cao, R. Emotional Polarity and Contagion Patterns in Public Opinion on Emergencies: A Social Network Analysis Perspective. J. Jishou Univ. Sci. 2021, 42, 131–142.

41. Wu, D. Sentiment_Dictionary. Available online: https://github.com/DavionWu2018/Sentiment_dictionary (accessed on 27 September 2025).

42. Fang, T. Dictionary of Adverbs of Degree and Negative Words. Available online: https://figshare.com/articles/dataset/____/12240233/1?file=22519265 (accessed on 27 September 2025).

43. Yang, B.; Zhang, R.; Cheng, X.; Zhao, C. Exploring Information Dissemination Effect on Social Media: An Empirical Investigation. Pers. Ubiquitous Comput. 2023, 27, 1469–1482.

44. Guerini, M.; Staiano, J. Deep Feelings: A Massive Cross-Lingual Study on the Relation between Emotions and Virality. In Proceedings of the 24th International Conference on World Wide Web (WWW ’15 Companion), Florence, Italy, 18–22 May 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 299–305.

45. Brady, W.J.; McLoughlin, K.L.; Torres, M.P.; Luo, K.F.; Gendron, M.; Crockett, M.J. Overperception of Moral Outrage in Online Social Networks Inflates Beliefs about Intergroup Hostility. Nat. Hum. Behav. 2023, 7, 917–927.

46. Rathje, S.; Van Bavel, J.J.; van der Linden, S. Out-Group Animosity Drives Engagement on Social Media. Proc. Natl. Acad. Sci. USA 2021, 118, e2024292118.

47. Del Vicario, M.; Vivaldo, G.; Bessi, A.; Zollo, F.; Scala, A.; Caldarelli, G.; Quattrociocchi, W. Echo Chambers: Emotional Contagion and Group Polarization on Facebook. Sci. Rep. 2016, 6, 37825.

48. Bonifazi, G.; Cauteruccio, F.; Corradini, E.; Marchetti, M.; Terracina, G.; Ursino, D.; Virgili, L. A Framework for Investigating the Dynamics of User and Community Sentiments in a Social Platform. Data Knowl. Eng. 2023, 146, 102183.

| Variable | Coefficient | p > \|z\| | IRR | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|

| Intercept | −0.267364 | 0.000000 | 0.765394 | 0.705306 | 0.830601 |

| Moral Words | 0.065249 | 0.000000 | 1.067424 | 1.041346 | 1.094156 |

| Emotional Words | 0.059540 | 0.000020 | 1.061348 | 1.032668 | 1.090825 |

| Moral-Emotional Words | 0.290710 | 0.000000 | 1.337377 | 1.260496 | 1.418948 |

| Log-Followers | 0.573866 | 0.000000 | 1.775116 | 1.736695 | 1.814387 |

| Authentication Type | −0.248834 | 0.000000 | 0.779710 | 0.717619 | 0.847173 |

| Media Type | 0.818284 | 0.000000 | 2.266607 | 2.089126 | 2.459166 |

| Authentication Type × Moral Words | −0.030681 | 0.014607 | 0.969785 | 0.946196 | 0.993962 |

| Authentication Type × Emotional Words | 0.048961 | 0.000383 | 1.050180 | 1.022182 | 1.078945 |

| Authentication Type × Moral-Emotional Words | −0.149819 | 0.000001 | 0.860864 | 0.811626 | 0.913088 |

| Variable | Coefficient | p > \|z\| | IRR | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|

| Intercept | −0.539663 | 0.000000 | 0.582945 | 0.563792 | 0.602749 |

| Moral Words | 0.006391 | 0.355392 | 1.006411 | 0.992863 | 1.020144 |

| Emotional Words | 0.021411 | 0.002210 | 1.021642 | 1.007729 | 1.035747 |

| Moral-Emotional Words | 0.035958 | 0.002339 | 1.036612 | 1.012883 | 1.060897 |

| Log-Followers | 0.390396 | 0.000000 | 1.477566 | 1.461997 | 1.493300 |

| Authentication Type | −0.259564 | 0.000000 | 0.771388 | 0.736167 | 0.808295 |

| Media Type | 1.111927 | 0.000000 | 3.040211 | 2.940554 | 3.143246 |

| Authentication Type × Moral Words | −0.013255 | 0.054699 | 0.986832 | 0.973578 | 1.000267 |

| Authentication Type × Emotional Words | 0.018812 | 0.006967 | 1.018990 | 1.005161 | 1.033009 |

| Authentication Type × Moral-Emotional Words | −0.051778 | 0.000011 | 0.949540 | 0.927834 | 0.971753 |

| Variable | Coefficient | p > \|z\| | IRR | IRR 95% CI Lower | IRR 95% CI Upper |

|---|---|---|---|---|---|

| Intercept | −0.853224 | 0.000000 | 0.426039 | 0.408699 | 0.444116 |

| Moral Words | −0.049441 | 0.000000 | 0.951761 | 0.938478 | 0.965232 |

| Emotional Words | 0.111466 | 0.000000 | 1.117916 | 1.099604 | 1.136533 |

| Moral-Emotional Words | −0.169417 | 0.000000 | 0.844157 | 0.828182 | 0.860439 |

| Log-Followers | 0.477302 | 0.000000 | 1.611720 | 1.588509 | 1.635269 |

| Authentication Type | −0.370692 | 0.000000 | 0.690257 | 0.652124 | 0.730619 |

| Media Type | 1.421567 | 0.000000 | 4.143608 | 3.986287 | 4.307139 |

| Authentication Type × Moral Words | −0.001102 | 0.876981 | 0.998898 | 0.985056 | 1.012936 |

| Authentication Type × Emotional Words | −0.022161 | 0.007877 | 0.978083 | 0.962226 | 0.994201 |

| Authentication Type × Moral-Emotional Words | 0.069734 | 0.000000 | 1.072223 | 1.051976 | 1.092860 |

| Number of Posts Appearing in Data Set | Street-Level Bureaucracy | Education Governance | Gender-Based Violence | Mean |

|---|---|---|---|---|

| 1 | 67.75 | 73.33 | 77.08 | 72.72 |

| >1 | 32.25 | 26.67 | 22.92 | 27.28 |

| >2 | 17 | 12.99 | 9.75 | 13.25 |

| >3 | 11.07 | 8.23 | 5.68 | 8.33 |

| >4 | 7.88 | 5.85 | 3.77 | 5.83 |

| >5 | 5.99 | 4.46 | 2.72 | 4.39 |

| Range | 1–76 | 1–380 | 1–466 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.; Wang, Q.; Cao, R. The Impact of Blame Attribution on Moral Contagion in Controversial Events. Entropy 2025, 27, 1052. https://doi.org/10.3390/e27101052
