1. Introduction

A central goal of quantum information theory is the precise quantification of correlations, uncertainties, and distinguishability within quantum systems. Various information-theoretic quantities have been defined to capture the fundamental limits of quantum information processing tasks like communication, computation, or entanglement manipulation.

Traditionally, the fundamental quantity is the von Neumann entropy [1], from which many quantities relevant in classical information science, like the mutual information or the conditional entropy, can be generalized to the quantum regime (cf. [2]). These measures are relevant due to their operational meaning in quantifying optimal rates of achieving various information processing tasks in the asymptotic regime of infinitely many independent uses of a resource (e.g., a quantum state). A notable example is the quantum relative entropy [3], which can be related to quantum hypothesis testing [4]. Several other information quantities can be expressed in terms of the relative entropy, such as the private information for the rate of secret-key distillation and the coherent information for the rate of entanglement distillation [5]. In the so-called one-shot setting (cf. Ref. [6]), only a finite amount of resources is considered. In this approach, the goal is to find optimal rates to transform the limited resources, such as quantum states, into the target states while allowing fixed error bounds. Moreover, the one-shot setting is crucial for security analysis in the context of quantum cryptography without any assumptions on the actions of a malicious third party [7]. As in the asymptotic setting, several information quantities with operational meaning have been identified, such as the ε-hypothesis testing mutual information [8] and the smooth max-mutual information [9], which can be related to secret-key distillation in the one-shot setting.

As quantum technologies advance, the landscape of information quantities and related processing tasks becomes increasingly diverse (see Ref. [10] for a comprehensive overview of information measures and related processing tasks), and a unified framework capable of accommodating both asymptotic and one-shot scenarios is essential. Generalized divergences offer such a unifying language. By definition, each divergence satisfies monotonicity under completely positive trace-preserving (CPTP) maps, i.e., a data-processing inequality under the action of quantum channels, which guarantees operational meaning and allows us to derive an entire spectrum of information measures. Examples include the Petz–Rényi relative entropy [11,12,13], the sandwiched Rényi relative entropy [14,15], the geometric Rényi relative entropy [16,17], and the (smooth) max/min-relative entropies [7]. Note that the relative entropy also satisfies a data-processing inequality and thus many information quantities in the asymptotic setting can be expressed by this specific divergence. Replacing the relative entropy by other divergences in similar expressions allows one to define generalized quantities that are applicable in other regimes, e.g., the one-shot setting.

Despite their broad applicability, evaluating these divergence-based measures poses significant computational problems in the case of high-dimensional quantum states or if their definition involves challenging or infeasible optimizations. In this work, we define several types of divergence-based information measures and prove their invariance under local isometric or unitary transformations.

In Section 2, we introduce the setting and notation and define the types of generalized divergence-based information quantities. In Section 3, we present and prove the main result of this contribution, namely the invariance of information quantities of the defined types under local isometric or unitary transformations. Finally, we summarize our results in Section 4 and provide an outlook on potential applications and future research directions.

2. Methods: Generalized Divergences and Information Quantities

In this section, we briefly introduce the notation and necessary objects to define several types of generalized information quantities and prove their invariance under local isometric or unitary transformations.

2.1. Notation and Setting

We consider two parties A and B with corresponding Hilbert spaces $\mathcal{H}_A$ and $\mathcal{H}_B$. $\mathcal{P}(\mathcal{H})$ and $\mathcal{D}(\mathcal{H})$ denote the spaces of positive-semidefinite operators and density operators (i.e., quantum states) acting on $\mathcal{H}$, respectively. Isometries are denoted by a capital V. Corresponding channels, i.e., completely positive and trace-preserving maps, are written as $\mathcal{V}(\cdot) = V (\cdot) V^\dagger$. Unitary operators are referred to with a capital U. The identity map is written as $\mathrm{id}$ and ∘ denotes the composition of maps. States or operators are labeled with the systems they act on. For any multipartite state or operator, e.g., the state $\rho_{AB}$, the marginal state or operator is given by $\rho_A = \mathrm{Tr}_B[\rho_{AB}]$.

2.2. Generalized Divergence-Based Types of Information Quantities

The information quantities we analyze in this contribution are defined via so-called generalized divergences [18,19].

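Definition 1 (Generalized divergence, data-processing inequality). For quantum states $\rho \in \mathcal{D}(\mathcal{H})$ and positive-semidefinite operators $\sigma \in \mathcal{P}(\mathcal{H})$, a function $(\rho, \sigma) \mapsto \mathbf{D}(\rho \| \sigma)$ that satisfies the data-processing inequality under any channel $\mathcal{N}$,

$$\mathbf{D}(\rho \| \sigma) \geq \mathbf{D}(\mathcal{N}(\rho) \| \mathcal{N}(\sigma)),$$

is called (generalized) divergence.

For quantum states, the data-processing inequality can be interpreted as the property that no physical transformation can make two states more distinguishable. It is known that any divergence is invariant under the application of isometric transformations [10]:

$$\mathbf{D}(V \rho V^\dagger \| V \sigma V^\dagger) = \mathbf{D}(\rho \| \sigma). \tag{1}$$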
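The isometric invariance stated above can be checked numerically for one concrete divergence. The following is a minimal sketch, assuming the Umegaki relative entropy $D(\rho\|\sigma) = \mathrm{Tr}[\rho(\log\rho - \log\sigma)]$ as the divergence and randomly drawn full-rank states; the helper names (`random_state`, `random_isometry`, `log_on_support`, `relative_entropy`) are illustrative and not taken from the article.

```python
import numpy as np

def random_state(dim, rng):
    """Random full-rank density matrix (Ginibre construction)."""
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real

def random_isometry(d_in, d_out, rng):
    """Random isometry V of shape (d_out, d_in) with V^dagger V = identity."""
    g = rng.normal(size=(d_out, d_in)) + 1j * rng.normal(size=(d_out, d_in))
    q, _ = np.linalg.qr(g)  # reduced QR: columns of q are orthonormal
    return q

def log_on_support(op, tol=1e-12):
    """Matrix logarithm of a PSD operator, restricted to its support."""
    vals, vecs = np.linalg.eigh(op)
    logs = np.zeros_like(vals)
    mask = vals > tol
    logs[mask] = np.log(vals[mask])
    return (vecs * logs) @ vecs.conj().T

def relative_entropy(rho, sigma):
    """Umegaki relative entropy in nats; assumes supp(rho) lies in supp(sigma)."""
    return np.trace(rho @ (log_on_support(rho) - log_on_support(sigma))).real

rng = np.random.default_rng(0)
rho, sigma = random_state(3, rng), random_state(3, rng)
V = random_isometry(3, 5, rng)  # embeds a 3-dim system into 5 dimensions

d_before = relative_entropy(rho, sigma)
d_after = relative_entropy(V @ rho @ V.conj().T, V @ sigma @ V.conj().T)
print(d_before, d_after)  # the two values should agree up to rounding
```

The logarithm is taken on the support only because the embedded operators $V\rho V^\dagger$ and $V\sigma V^\dagger$ are rank-deficient in the larger space.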

Generalized divergences are used to define generalized information quantities for bipartite quantum states $\rho_{AB}$. There exist several ways to define information quantities based on divergences with operational meaning. In this work, we consider quantities that relate a bipartite state and its local marginals. Using specific instances for the divergences, these quantities can be related to various information-processing tasks (cf. [10] for a comprehensive overview). The method to prove local invariance of the quantities we present in this work, however, does not depend on the specific form of the divergence, but only on how it is applied to the state. We therefore define several types of information quantities independent of the specific form of the divergence. Some types involve a so-called smoothing, i.e., an optimization over an environment of the quantum state. For this, the sine distance [20] is often used as a distance measure that is closely related to the fidelity [21] as defined below.

In the following, let ε ∈ [0, 1] and let ρ and σ be states.

Definition 2 (Fidelity, sine distance). The fidelity of two quantum states ρ and σ is defined as follows:

$$F(\rho, \sigma) = \left\| \sqrt{\rho} \sqrt{\sigma} \right\|_1^2 = \left( \mathrm{Tr} \sqrt{\sqrt{\sigma} \rho \sqrt{\sigma}} \right)^2.$$

The sine distance is defined as:

$$P(\rho, \sigma) = \sqrt{1 - F(\rho, \sigma)}.$$

However, other distinguishability functions, potentially with different properties, can be used as well. In this work, we only require that the function obeys the data-processing inequality and invariance under isometric transformations. As both properties are satisfied by any divergence (cf. Definition 1 and (1)), including the sine distance [10], the smoothing environment $\mathcal{B}^{\varepsilon}(\rho)$ around a state ρ is defined as follows:

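Definition 3 (Smoothing environment). Let $\Delta$ be any divergence. The smoothing environment of a state ρ is the set

$$\mathcal{B}^{\varepsilon}_{\Delta}(\rho) = \{ \rho' \in \mathcal{D}(\mathcal{H}) : \Delta(\rho', \rho) \leq \varepsilon \}.$$

For the sine distance, it specifically reads:

$$\mathcal{B}^{\varepsilon}(\rho) = \{ \rho' \in \mathcal{D}(\mathcal{H}) : P(\rho', \rho) \leq \varepsilon \}.$$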
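To make the smoothing environment concrete, the following sketch computes the fidelity and the sine distance for a simple qubit example and tests membership in an ε-environment. It assumes the common conventions $F(\rho,\sigma) = (\mathrm{Tr}\sqrt{\sqrt{\sigma}\rho\sqrt{\sigma}})^2$ and $P = \sqrt{1-F}$, and an environment of normalized states with $P \leq \varepsilon$; the function names and the example state are hypothetical choices for illustration.

```python
import numpy as np

def psd_sqrt(op):
    """Square root of a positive-semidefinite operator via eigendecomposition."""
    vals, vecs = np.linalg.eigh(op)
    vals = np.clip(vals, 0.0, None)  # remove tiny negative rounding errors
    return (vecs * np.sqrt(vals)) @ vecs.conj().T

def fidelity(rho, sigma):
    """F(rho, sigma) = (Tr sqrt(sqrt(sigma) rho sqrt(sigma)))^2."""
    s = psd_sqrt(sigma)
    vals = np.clip(np.linalg.eigvalsh(s @ rho @ s), 0.0, None)
    return float(np.sum(np.sqrt(vals)) ** 2)

def sine_distance(rho, sigma):
    """P(rho, sigma) = sqrt(1 - F(rho, sigma))."""
    return float(np.sqrt(max(0.0, 1.0 - fidelity(rho, sigma))))

def in_environment(candidate, rho, eps):
    """True if candidate lies in the eps-environment of rho (sine distance)."""
    return sine_distance(candidate, rho) <= eps

# Pure qubit state |0><0| and a slightly depolarized version of it
rho = np.array([[1.0, 0.0], [0.0, 0.0]])
noisy = 0.95 * rho + 0.05 * np.eye(2) / 2

print(fidelity(noisy, rho))            # <0|noisy|0> = 0.975
print(sine_distance(noisy, rho))       # sqrt(0.025), roughly 0.158
print(in_environment(noisy, rho, 0.2))
print(in_environment(noisy, rho, 0.1))
```

For this example the noisy state lies inside the environment for ε = 0.2 but outside for ε = 0.1, since its sine distance to ρ is about 0.158.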

In this work, we define and analyze the following types of information quantities:

Definition 4 (Types of generalized mutual information).

Definition 5 (Types of generalized conditional entropies).

The defined types include information quantities in both the asymptotic and the one-shot setting. Examples are the (generalized) quantum mutual information, the coherent information, the Petz–Rényi mutual information, the sandwiched Rényi mutual information, the geometric Rényi mutual information, the smooth min-mutual information (see Ref. [22] for these quantities), the ε-hypothesis testing mutual information, the smooth max-conditional and min-conditional entropy, and the smooth max-mutual information.
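As an illustration of how such quantities relate a bipartite state to its marginals, the sketch below evaluates two of the listed examples with the Umegaki relative entropy as the divergence. It assumes the standard expressions $I(A;B)_\rho = D(\rho_{AB}\|\rho_A\otimes\rho_B)$ for the quantum mutual information and $I(A\rangle B)_\rho = D(\rho_{AB}\|\mathbb{1}_A\otimes\rho_B)$ for the coherent information, and compares them with the corresponding entropy formulas. The noisy Bell state and all helper names are illustrative assumptions.

```python
import numpy as np

def log_on_support(op, tol=1e-12):
    """Matrix logarithm of a PSD operator, restricted to its support."""
    vals, vecs = np.linalg.eigh(op)
    logs = np.zeros_like(vals)
    mask = vals > tol
    logs[mask] = np.log(vals[mask])
    return (vecs * logs) @ vecs.conj().T

def relative_entropy(rho, sigma):
    """Umegaki relative entropy in nats; sigma may be unnormalized."""
    return np.trace(rho @ (log_on_support(rho) - log_on_support(sigma))).real

def entropy(rho):
    """Von Neumann entropy in nats."""
    vals = np.linalg.eigvalsh(rho)
    vals = vals[vals > 1e-12]
    return float(-np.sum(vals * np.log(vals)))

def marginals(rho_ab, d_a, d_b):
    """Reduced states rho_A and rho_B of a bipartite operator."""
    t = rho_ab.reshape(d_a, d_b, d_a, d_b)
    rho_a = np.einsum('ibjb->ij', t)  # partial trace over B
    rho_b = np.einsum('aiaj->ij', t)  # partial trace over A
    return rho_a, rho_b

# Two-qubit Bell state mixed with white noise (full rank)
phi = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)
rho_ab = 0.8 * np.outer(phi, phi) + 0.2 * np.eye(4) / 4
rho_a, rho_b = marginals(rho_ab, 2, 2)

mutual_info = relative_entropy(rho_ab, np.kron(rho_a, rho_b))
coherent_info = relative_entropy(rho_ab, np.kron(np.eye(2), rho_b))

print(mutual_info, entropy(rho_a) + entropy(rho_b) - entropy(rho_ab))
print(coherent_info, entropy(rho_b) - entropy(rho_ab))
```

Replacing `relative_entropy` by another divergence in the same expressions would give the corresponding generalized quantity.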

3. Results: Local Invariance of Information Quantities

We show that the types and are invariant under any local isometric transformation, and that the types and are invariant if the first subsystem is transformed unitarily, while the second subsystem can be transformed by any isometry. More precisely, we prove below:

Proposition 1. Any information quantity of type and as in Definition 4 is invariant under local isometric transformations of the form $V_A \otimes V_B$.

Proposition 2. Any information quantity of type and as in Definitions 4 and 5 is invariant under local unitary transformations in the first system and isometric transformations in the second system of the form $U_A \otimes V_B$.
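Both statements can be probed numerically for the simplest representatives. The sketch below is an illustration under stated assumptions, not the general proof: it uses the Umegaki relative entropy, takes the mutual information $D(\rho_{AB}\|\rho_A\otimes\rho_B)$ as an example for a transformation $V_A\otimes V_B$, and the conditional entropy $-D(\rho_{AB}\|\mathbb{1}_A\otimes\rho_B)$ as an example for a transformation $U_A\otimes V_B$. States, isometries, and helper names are randomly generated or hypothetical.

```python
import numpy as np

def random_state(dim, rng):
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real

def random_isometry(d_in, d_out, rng):
    g = rng.normal(size=(d_out, d_in)) + 1j * rng.normal(size=(d_out, d_in))
    q, _ = np.linalg.qr(g)  # orthonormal columns; unitary if d_in == d_out
    return q

def log_on_support(op, tol=1e-12):
    vals, vecs = np.linalg.eigh(op)
    logs = np.zeros_like(vals)
    mask = vals > tol
    logs[mask] = np.log(vals[mask])
    return (vecs * logs) @ vecs.conj().T

def relative_entropy(rho, sigma):
    return np.trace(rho @ (log_on_support(rho) - log_on_support(sigma))).real

def marginals(rho_ab, d_a, d_b):
    t = rho_ab.reshape(d_a, d_b, d_a, d_b)
    return np.einsum('ibjb->ij', t), np.einsum('aiaj->ij', t)

def mutual_information(rho_ab, d_a, d_b):
    rho_a, rho_b = marginals(rho_ab, d_a, d_b)
    return relative_entropy(rho_ab, np.kron(rho_a, rho_b))

def conditional_entropy(rho_ab, d_a, d_b):
    _, rho_b = marginals(rho_ab, d_a, d_b)
    return -relative_entropy(rho_ab, np.kron(np.eye(d_a), rho_b))

rng = np.random.default_rng(3)
rho_ab = random_state(4, rng)          # two qubits
v_a = random_isometry(2, 3, rng)       # isometry on A: 2 -> 3 dimensions
v_b = random_isometry(2, 4, rng)       # isometry on B: 2 -> 4 dimensions
u_a = random_isometry(2, 2, rng)       # unitary on A

# Local isometries V_A (x) V_B: mutual information
w1 = np.kron(v_a, v_b)
mi = mutual_information(rho_ab, 2, 2)
mi_t = mutual_information(w1 @ rho_ab @ w1.conj().T, 3, 4)

# Unitary on A, isometry on B: conditional entropy
w2 = np.kron(u_a, v_b)
h = conditional_entropy(rho_ab, 2, 2)
h_t = conditional_entropy(w2 @ rho_ab @ w2.conj().T, 2, 4)

print(mi, mi_t)
print(h, h_t)
```

In both cases the value computed on the larger, transformed state should coincide with the value computed on the original two-qubit state up to rounding.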

The proofs of Propositions 1 and 2 use the technical Lemmas 1–4 related to the isometric transformations and the corresponding so-called reversal channel [2], defined in the following. Since $V V^\dagger \neq \mathbb{1}$ in general, the map $V^\dagger (\cdot) V$ is not necessarily trace-preserving, although it is completely positive. The reversal channel $\mathcal{R}_{\mathcal{V}}$ corresponding to an isometric channel $\mathcal{V}$ is a completely positive and trace-preserving map satisfying $\mathcal{R}_{\mathcal{V}} \circ \mathcal{V} = \mathrm{id}$, hence reversing the action of an isometric channel.
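The properties described in this paragraph can be illustrated with a small numerical sketch. It assumes the standard construction of a reversal channel, $\mathcal{R}(X) = V^\dagger X V + \mathrm{Tr}[(\mathbb{1} - VV^\dagger)X]\,\omega$ with a fixed state ω (cf. [2]); the function `reversal_channel` and the example operators are hypothetical names and values chosen for illustration.

```python
import numpy as np

def random_isometry(d_in, d_out, rng):
    """Random isometry V of shape (d_out, d_in) with V^dagger V = identity."""
    g = rng.normal(size=(d_out, d_in)) + 1j * rng.normal(size=(d_out, d_in))
    q, _ = np.linalg.qr(g)
    return q

def reversal_channel(x, v, omega):
    """R(X) = V^dagger X V + Tr[(1 - V V^dagger) X] * omega."""
    d_out = v.shape[0]
    leak = np.trace((np.eye(d_out) - v @ v.conj().T) @ x).real
    return v.conj().T @ x @ v + leak * omega

rng = np.random.default_rng(1)
v = random_isometry(2, 4, rng)                 # embeds a qubit into 4 dimensions
omega = np.eye(2) / 2                          # fixed state used by the channel
rho = np.array([[0.7, 0.2], [0.2, 0.3]], dtype=complex)
tau = np.diag([0.4, 0.3, 0.2, 0.1]).astype(complex)  # state on the large space

# (i) The reversal channel undoes the isometric channel
recovered = reversal_channel(v @ rho @ v.conj().T, v, omega)

# (ii) The adjoint map alone loses trace, the reversal channel does not
adjoint_only = v.conj().T @ tau @ v
reversed_tau = reversal_channel(tau, v, omega)

# (iii) Applying the isometric channel after the reversal does not restore tau
round_trip = v @ reversed_tau @ v.conj().T

print(np.allclose(recovered, rho))
print(np.trace(adjoint_only).real, np.trace(reversed_tau).real)
print(np.allclose(round_trip, tau))
```

The second term of the channel redistributes the weight of the input outside the image of V onto ω, which is what restores trace preservation.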

Definition 6 (Reversal channel)

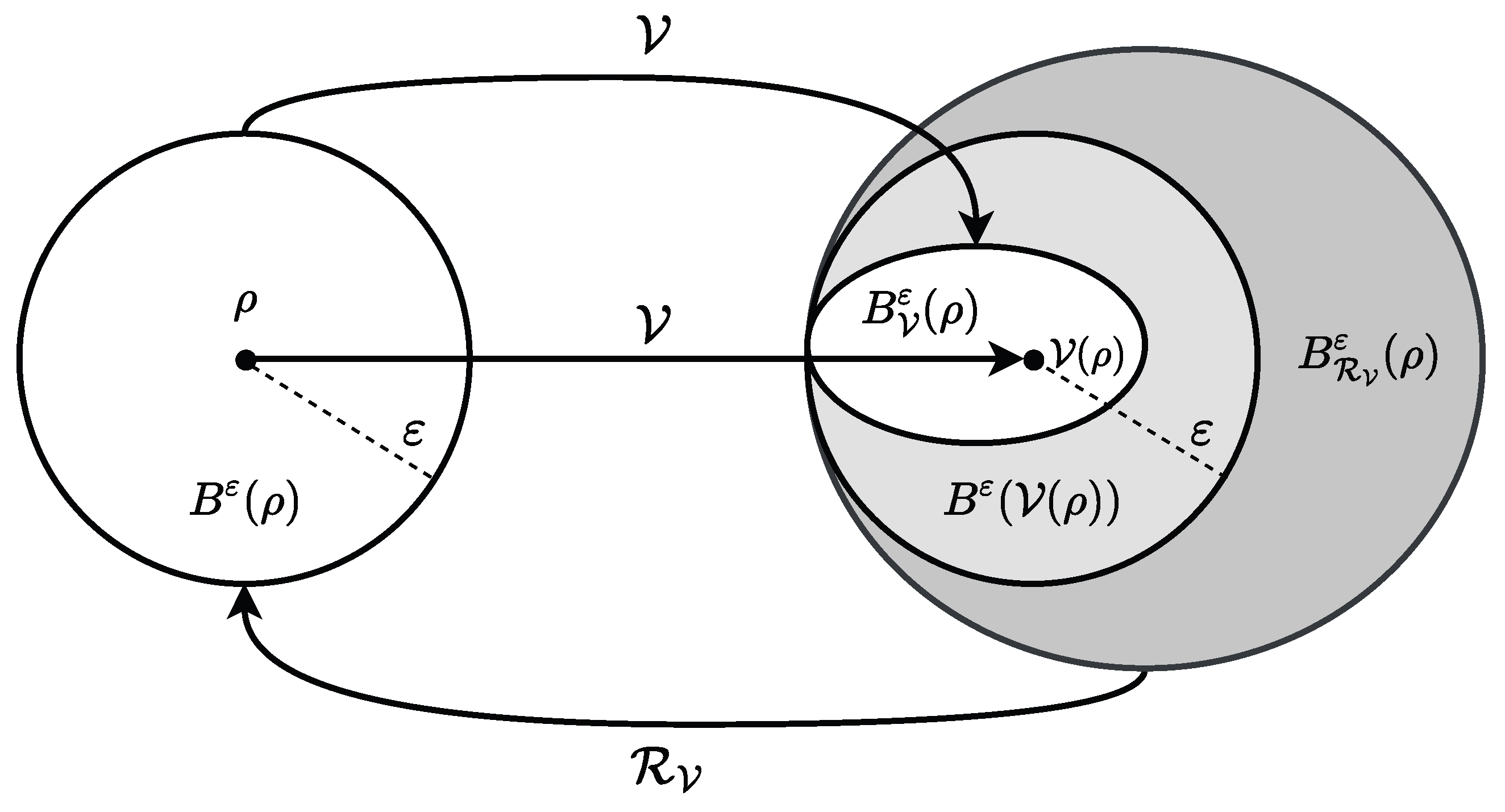

. Let be an isometric channel and . The reversal channel corresponding to and ω is defined as for any . Note, that this channel is not unique and , in general. Also note that while , needs to be a quantum state such that the map is trace-preserving. Given an isometric channel , we define the following sets that relate a smoothing environment to its image and pre-image under the isometric channel and its reversal channel:

Definition 7. For a state ρ with ε-environment as in Definition 3, an isometric channel $\mathcal{V}$, and the reversal channel $\mathcal{R}_{\mathcal{V}}$, we define:

We now show that these sets can be ordered (see Figure 1), implying inequalities for smoothed information quantities as used in the proofs of Propositions 1 and 2.

Lemma 1. Regarding the sets from Definitions 3 and 7, the following set relations hold: Proof. For the first relation let

. Using the invariance under isometric transformations (

1), we have

. Now let

. Using the data-processing inequality for any quantum channel, we have

. □
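The two ingredients of this proof, invariance under isometries and the data-processing inequality for the reversal channel, can be observed numerically for the sine distance. The sketch below is an illustration only: it assumes the reversal channel $\mathcal{R}(X) = V^\dagger X V + \mathrm{Tr}[(\mathbb{1} - VV^\dagger)X]\,\omega$ and randomly drawn states, and all names are hypothetical.

```python
import numpy as np

def random_state(dim, rng):
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real

def random_isometry(d_in, d_out, rng):
    g = rng.normal(size=(d_out, d_in)) + 1j * rng.normal(size=(d_out, d_in))
    q, _ = np.linalg.qr(g)
    return q

def psd_sqrt(op):
    vals, vecs = np.linalg.eigh(op)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.conj().T

def sine_distance(rho, sigma):
    """P = sqrt(1 - F) with F = (Tr sqrt(sqrt(sigma) rho sqrt(sigma)))^2."""
    s = psd_sqrt(sigma)
    vals = np.clip(np.linalg.eigvalsh(s @ rho @ s), 0.0, None)
    fid = np.sum(np.sqrt(vals)) ** 2
    return float(np.sqrt(max(0.0, 1.0 - fid)))

def reversal_channel(x, v, omega):
    d_out = v.shape[0]
    leak = np.trace((np.eye(d_out) - v @ v.conj().T) @ x).real
    return v.conj().T @ x @ v + leak * omega

rng = np.random.default_rng(2)
v = random_isometry(2, 4, rng)
omega = random_state(2, rng)
rho, rho_near = random_state(2, rng), random_state(2, rng)
tau = random_state(4, rng)             # arbitrary state on the larger space

def embed(state):
    """Isometric channel V(.)V^dagger."""
    return v @ state @ v.conj().T

# Step 1: distances between states are unchanged by the isometric channel
p_small = sine_distance(rho_near, rho)
p_embedded = sine_distance(embed(rho_near), embed(rho))

# Step 2: the reversal channel cannot increase the distance to the image of rho
p_large = sine_distance(tau, embed(rho))
p_reversed = sine_distance(reversal_channel(tau, v, omega), rho)

print(p_small, p_embedded)
print(p_reversed, p_large)
```

Step 1 shows that the image of a state close to ρ stays equally close to the image of ρ, and step 2 shows that reversing a state close to the image of ρ yields a state at least as close to ρ itself.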

A second technical property of the reversal channels is used for proving invariance under local transformations. There exist reversal channels for local transformations that preserve the reversal property locally, in the sense .

For mutual information quantities of types using quantum states as arguments of the divergence, the following fact is used for proving Proposition 1.

Lemma 2. Let be a local isometry. For any state and any local quantum states , there exists a reversal channel satisfying Proof. Define the reversal channel with

for any local quantum state

. Using the definition of

and

, one can directly calculate:

□

For conditional entropies of types using both quantum states and positive-semidefinite operators as arguments of the divergence, the following Lemma is used for proving invariance in the proof of Proposition 2.

Lemma 3. Let with unitary and isometric . For any local quantum state , there exists a reversal channel satisfyingwhere is the maximally mixed state of A with dimension . Proof. Define the reversal channel with

for any local quantum state

. Considering

, using the definition of

and calculating as in the proof of Lemma 2, one finds:

□

In the special case of smoothed states present in both arguments of the divergence as in , the following property is used for proving Proposition 2.

Lemma 4. Let with unitary and isometric . For any state and any quantum state , there exists a reversal channel satisfying Proof. Set

for any quantum state

. Using the definition of

and

, we then have for any local isometry

:

On the other hand, using

, one finds

showing the claimed equality. □

Using these results, we now prove Propositions 1 and 2.

Proof of Proposition 1. Let , where . Let denote the image of .

First consider type

. Using

and the invariance of any divergence under isometric evolution (

1), one has:

Next, consider type

. Again, using the invariance under isometric transformations, one has:

Conversely, using the data-processing inequality for

with the reversal channel

, one has:

Using the reversal channel as in Lemma 2, with arbitrary

, yields therefore:

Note, that the second inequality holds because

. The inequalities below (31) and (39) together imply the claimed equality.

Finally, consider type

. Using similar arguments and Lemma 1, one finds:

Conversely, using the reversal channel again as in Lemma 2 with arbitrary

together with Lemma 1 and noting the set equality

, one concludes:

□

Proof of Proposition 2. Let , with unitary and with isometric . Let denote the image of .

First, consider the type

. Using the invariance of

under isometric transformations, and Lemma 1, one has:

Conversely, using again Lemma 1 and applying the data-processing inequality for the reversal channel

as in Lemma 4, one finds:

Now, since

is unitary, Lemma 4 implies together with the set equality

,

proving the claimed property.

Finally, consider the conditional entropy types and . Noting due to the fact that is unitary, the proofs of local invariance are equivalent to those for and given in the proof of Proposition 1, respectively, if is replaced by and Lemma 3 is used instead of Lemma 2. □

4. Summary and Conclusions

In this work, we defined types of quantum information quantities based on generalized divergences and analyzed their properties regarding local isometric and unitary transformations. Leveraging properties of the reversal channel associated with the local transformation, we proved that several types of information quantities are invariant under any local isometric transformation, while others are invariant if one of the subsystems is transformed by a unitary. Two main technical results are utilized to prove the local invariance. First, the smoothing environment required by some types of information quantities is related to its image and its pre-image under the isometric channel and a corresponding reversal channel, implying the set relation of Lemma 1. Second, the action of the reversal channel for a local isometric channel is characterized in Lemmas 2–4. Combining these insights with the data-processing inequality of any generalized divergence allows us to derive the main results regarding invariance for several types of information quantities under local isometric or unitary transformation as presented in Propositions 1 and 2.

Since methods for proving the invariance do not depend on the specific form of the generalized divergence used to define the information quantity, the invariances hold for numerous key quantities with operational relevance in quantum information processing. These invariances can enable more efficient computation of quantum information quantities by reducing complex states to simpler, equivalent forms with symmetries or smaller dimensions. For instance, consider the setting of quantum cryptography including a third party holding the purifying system of a bipartite state. If the output of a tripartite quantum channel is a pure state, it is equivalent to the purified output of a corresponding bipartite channel up to a local isometry in the purifying system. The dimension of the purification system of the latter state, however, may be smaller than the dimension of the former purification system. Consequently, the evaluation of relevant information quantities like the private information may be significantly improved by calculating it for the smaller but equivalent output state. Such invariances reduce computational overhead and unlock flexibility in protocol design. Given that the protocol performance is measured by an invariant information quantity, local unitary or isometric operations like encoding or correction operations can be added, modified or removed while preserving performance metrics.

Note that the general proofs for local invariance depend only on the monotonicity given by the data-processing inequality and the reversal properties of the isometric channel as given in Lemmas 2–4. Therefore, local invariance may be shown similarly for other channels and corresponding reversals, such as the Petz recovery map (cf. [10]).

The computation of generalized information quantities remains a significant challenge in general. While some quantities like the hypothesis testing mutual information can be calculated efficiently, e.g., by semidefinite programs [23,24], others can only be approximated (see Ref. [25] regarding the smooth max-relative entropy) or lack a general and efficient solution, like the smooth max-mutual information. Our results may enable new methods to bound or approximate these quantities by leveraging state equivalences. Finally, we note that the invariance under local isometries, notably local Clifford and unitary operations, plays a pivotal role in the classification and optimization of graph states as used in error correction [26,27] or measurement-based quantum computation [28,29], allowing for the optimization of codes or resource states with respect to an invariant information measure. In conclusion, the results of this contribution allow us to derive the behavior of general types of information quantities under local isometric or unitary transformations. Therefore, they improve the capability to characterize and compute information quantities relevant throughout the vast field of quantum information processing.

Author Contributions

Conceptualization, C.P.; validation, C.P., T.C.S. and B.C.H.; formal analysis, C.P.; writing—original draft preparation, C.P.; writing—review and editing, C.P., T.C.S. and B.C.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in whole, or in part, by the Austrian Science Fund (FWF) [10.55776/P36102]. For the purpose of open access, the author has applied a CC BY public copyright licence to any Author Accepted Manuscript version arising from this submission.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Neumann, J.v. Thermodynamik quantenmechanischer Gesamtheiten. Nachrichten Von Der Ges. Der Wiss. Zu Göttingen Math.-Phys. Kl. 1927, 1927, 273–291.

2. Wilde, M.M. Quantum Information Theory; Cambridge University Press: Cambridge, UK, 2013.

3. Umegaki, H. Conditional expectation in an operator algebra. IV. Entropy and information. Kodai Math. Semin. Rep. 1962, 14, 59–85.

4. Hiai, F.; Petz, D. The proper formula for relative entropy and its asymptotics in quantum probability. Commun. Math. Phys. 1991, 143, 99–114.

5. Devetak, I.; Winter, A. Distillation of secret key and entanglement from quantum states. Proc. R. Soc. A Math. Phys. Eng. Sci. 2005, 461, 207–235.

6. Tomamichel, M. Quantum Information Processing with Finite Resources—Mathematical Foundations; Springer: Cham, Switzerland, 2016; Volume 5.

7. Renner, R. Security of Quantum Key Distribution. arXiv 2006, arXiv:quant-ph/0512258.

8. Wang, L.; Renner, R. One-Shot Classical-Quantum Capacity and Hypothesis Testing. Phys. Rev. Lett. 2012, 108, 200501.

9. Buscemi, F.; Datta, N. The Quantum Capacity of Channels With Arbitrarily Correlated Noise. IEEE Trans. Inf. Theory 2010, 56, 1447–1460.

10. Khatri, S.; Wilde, M.M. Principles of Quantum Communication Theory: A Modern Approach. arXiv 2024, arXiv:2011.04672.

11. Petz, D. Quasi-entropies for States of a von Neumann Algebra. Publ. Res. Inst. Math. Sci. 1985, 21, 787–800.

12. Petz, D. Quasi-entropies for finite quantum systems. Rep. Math. Phys. 1986, 23, 57–65.

13. Tomamichel, M.; Colbeck, R.; Renner, R. A Fully Quantum Asymptotic Equipartition Property. IEEE Trans. Inf. Theory 2009, 55, 5840–5847.

14. Müller-Lennert, M.; Dupuis, F.; Szehr, O.; Fehr, S.; Tomamichel, M. On quantum Rényi entropies: A new generalization and some properties. J. Math. Phys. 2013, 54, 122203.

15. Wilde, M.M.; Winter, A.; Yang, D. Strong Converse for the Classical Capacity of Entanglement-Breaking and Hadamard Channels via a Sandwiched Rényi Relative Entropy. Commun. Math. Phys. 2014, 331, 593–622.

16. Petz, D.; Ruskai, M.B. Contraction of Generalized Relative Entropy Under Stochastic Mappings on Matrices. Infin. Dimens. Anal. Quantum Probab. Relat. Top. 1998, 01, 83–89.

17. Matsumoto, K. A New Quantum Version of f-Divergence. In Reality and Measurement in Algebraic Quantum Theory; Ozawa, M., Butterfield, J., Halvorson, H., Rédei, M., Kitajima, Y., Buscemi, F., Eds.; Springer: Singapore, 2018; pp. 229–273.

18. Polyanskiy, Y.; Verdú, S. Arimoto channel coding converse and Rényi divergence. In Proceedings of the 2010 48th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 29 September–1 October 2010; pp. 1327–1333.

19. Sharma, N.; Warsi, N.A. Fundamental Bound on the Reliability of Quantum Information Transmission. Phys. Rev. Lett. 2013, 110, 080501.

20. Rastegin, A.E. Sine distance for quantum states. arXiv 2006, arXiv:quant-ph/0602112.

21. Uhlmann, A. The “transition probability” in the state space of a *-algebra. Rep. Math. Phys. 1976, 9, 273–279.

22. Datta, N. Min- and Max-Relative Entropies and a New Entanglement Monotone. IEEE Trans. Inf. Theory 2009, 55, 2816–2826.

23. Dupuis, F.; Krämer, L.; Faist, P.; Renes, J.M.; Renner, R. Generalized entropies. In XVIIth International Congress on Mathematical Physics; World Scientific: Singapore, 2012; pp. 134–153.

24. Datta, N.; Tomamichel, M.; Wilde, M.M. On the second-order asymptotics for entanglement-assisted communication. Quantum Inf. Process. 2016, 15, 2569–2591.

25. Nuradha, T.; Wilde, M.M. Fidelity-Based Smooth Min-Relative Entropy: Properties and Applications. IEEE Trans. Inf. Theory 2024, 70, 4170–4196.

26. Bény, C.; Oreshkov, O. General Conditions for Approximate Quantum Error Correction and Near-Optimal Recovery Channels. Phys. Rev. Lett. 2010, 104, 120501.

27. Sarkar, R.; Yoder, T.J. A graph-based formalism for surface codes and twists. Quantum 2024, 8, 1416.

28. Hein, M.; Eisert, J.; Briegel, H.J. Multiparty entanglement in graph states. Phys. Rev. A 2004, 69, 062311.

29. Briegel, H.J.; Browne, D.E.; Dür, W.; Raussendorf, R.; Van den Nest, M. Measurement-based quantum computation. Nat. Phys. 2009, 5, 19–26.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).