1. Introduction

A comprehensive, self-contained security proof for quantum key distribution (QKD) that accounts for finite resources was presented in [1]. The analysis covers both entanglement-based and prepare-and-measure protocols within a unified framework, using a security reduction to relate the latter to the former. Specifically, the protocols considered correspond to variants of BBM92 [2] and BB84 [3], respectively.

When a QKD protocol is represented as a completely positive trace-preserving (CPTP) map, its security can be quantified by its operational distinguishability from an ideal protocol. An ideal protocol is one whose final keys are identical, uniformly distributed random strings that are independent of any information held by an adversary. A QKD protocol that is $\varepsilon$-secure can be distinguished from the ideal protocol with an advantage of at most $\varepsilon$ in an optimal experiment. Formally, $\varepsilon$ bounds the diamond distance between the actual and ideal CPTP maps. The diamond distance extends the notion of trace distance from quantum states to quantum channels.
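
The following numerical sketch illustrates this operational meaning. The trace distance D between two states fixes the optimal probability, 1/2 + D/2, of telling them apart in a single-shot experiment with equal priors (the Helstrom bound). The diamond distance lifts this quantity from states to channels. The sketch uses NumPy and two arbitrary example states that are not part of the protocol.

```python
import numpy as np

def trace_distance(rho: np.ndarray, sigma: np.ndarray) -> float:
    """Return 0.5 * ||rho - sigma||_1 for Hermitian matrices rho and sigma."""
    eigenvalues = np.linalg.eigvalsh(rho - sigma)  # the difference is Hermitian
    return 0.5 * float(np.sum(np.abs(eigenvalues)))

def optimal_guessing_probability(rho: np.ndarray, sigma: np.ndarray) -> float:
    """Helstrom bound for equal priors: 1/2 + 1/2 * trace distance."""
    return 0.5 + 0.5 * trace_distance(rho, sigma)

# Example: an ideal one-bit key (maximally mixed) versus a slightly biased bit.
ideal = np.eye(2) / 2
biased = np.diag([0.55, 0.45])

d = trace_distance(ideal, biased)
print(f"trace distance = {d:.3f}")                                           # 0.050
print(f"guessing prob. = {optimal_guessing_probability(ideal, biased):.3f}")  # 0.525
```

A distance of 0.05 therefore corresponds to a success probability only 2.5 percentage points above random guessing.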

A critical stage in QKD is key reconciliation, in which error-correction information is exchanged over a public channel. This stage can significantly affect security. Encryption of the reconciliation data has been proposed to prevent information from leaking to a potential adversary. Among the possible schemes, the one-time pad (OTP) cipher is of particular interest because of its unconditional security. This security holds even against quantum adversaries, provided that the cipher's strict requirements are met. The main limitation of the OTP is that it requires a secret key at least as long as the message, and this key must be securely exchanged in advance between the communicating parties. For instance, [4] proposes a standalone non-quantum key distribution method, based on optical noise and supplemented by privacy amplification, to address this requirement.

The explicit integration of the OTP scheme into QKD has been explored in several studies. In [5], the OTP is used to encrypt error-correction data in order to decouple error correction from privacy amplification. In this approach, Alice and Bob must initially share an OTP key long enough for a full QKD session, and the cost of this initial key is offset by generating a longer quantum key. A similar strategy is adopted in [6], where part of the key generated in a previous QKD session is reused as the OTP key for the following session. Both methods are effective in principle, but they require an initial pre-shared key. Consequently, a complete security proof must consider both the initialization phase and the security implications of the key-chaining process.

In this paper, we present an alternative use of the OTP cipher within QKD that avoids pre-shared keys and key chaining. Specifically, a designated block of the raw key obtained within the same QKD session is used as the OTP key to encrypt the error-correction data for the remaining portion of the raw key. A key distinction from earlier proposals is that the OTP keys used by Alice and Bob are in general not identical, since they inherit the errors of the raw key. Because the OTP keys originate from the same QKD session in which they are applied, no key is reused across sessions. The security of the protocol can therefore be assessed formally on a per-session basis.

We extend the non-asymptotic security proof of Tomamichel and Leverrier [1], which analyzes the security of QKD at finite key lengths while allowing for a small probability of failure. In this setting, we show that, at the desired security level, the OTP extension achieves the same quantum key rate as the conventional protocol. Here, the key rate is defined as the ratio of the final key length to the total number of quantum systems shared between Alice and Bob. The OTP keys require no assumptions beyond those that already apply to the raw key. These assumptions are briefly summarized in the next section and described in detail in [1] (pp. 7–9).

The proposed OTP extension does not change the security level of the QKD protocol, but it offers a practical advantage by reducing the computational requirements of error correction. We illustrate these benefits using low-density parity-check (LDPC) codes, a subclass of forward error-correction (FEC) codes that allow the receiver to detect and correct errors without retransmission. We show that the encoding problem shrinks by a number of matrix elements equal to the square of the syndrome length, while the size of the decoding problem remains the same. Moreover, the QKD session requires less compression during privacy amplification.

We begin with an overview of the QKD reference protocol and summarize the main conclusions of the original security proof. We adopt the formalism and notation introduced in [1] (pp. 3–7), and readers are encouraged to consult that work for a full treatment of the proof. A complete restatement of all theorems and lemmas is not necessary here. However, we highlight the assumptions that are relevant to modeling the OTP extension and to the security proof.

The structure of the paper is as follows. Section 2 recalls the entanglement-based QKD protocol and introduces the notation used in the analysis. Section 3 presents the modification of the error-correction step, including a visualization of the classical and quantum systems involved. Section 4 establishes theoretical bounds on the length of the error-correction data for both noisy and noise-free channels. In Section 5, we adjust the mathematical model of the QKD reference protocol, which serves as the basis for extending the original security proof to the OTP-enhanced protocol in Section 6. Section 7 evaluates the performance benefits of the modified error-correction scheme when implemented with LDPC codes. Finally, Section 8 concludes the paper.

2. Reference Protocol

The security proof in [1] applies to a variant of the entanglement-based QKD protocol introduced in [2] and is subsequently extended to prepare-and-measure schemes with essentially identical results. For completeness, we briefly recall the relevant elements of this otherwise well-known protocol.

The protocol takes a bipartite quantum state as input and outputs two binary strings, typically identical, that represent the final keys held by Alice and Bob, respectively. The protocol may also abort under one of two conditions: failure of parameter estimation, indicated by the parameter-estimation flag, or failure of error correction, indicated by the error-correction flag.

Alice and Bob each start with m quantum systems. The physical mechanism by which these systems are obtained is left unspecified. The systems are modeled as tensor products of the local Hilbert spaces of systems A and B. The proof in [1] relies on several assumptions, such as deterministic detection, commuting measurements, and measurement complementarity. These assumptions are necessary for the original proof but are not repeated here. Likewise, we assume that the sifting procedure used to ensure basis matching has been completed in advance.

At the beginning of the protocol, Alice generates a set of random seeds and transmits them to Bob over the authenticated classical channel. These seeds determine three choices: the random subset of raw key bits used for parameter estimation, the measurement bases for parameter estimation, and the measurement bases of the remaining systems used for key extraction. Two further seeds randomly select hash functions from universal families, one for verifying the error correction and one for privacy amplification. For clarity, we introduce these seeds explicitly where they appear in the following analysis.

The measurement outcomes of the m quantum systems on both Alice’s and Bob’s sides are recorded in binary registers. A random selection procedure controlled by the shared seeds partitions each register into two disjoint segments. A segment of length k is reserved for parameter estimation and is denoted by V on Alice’s side and W on Bob’s side. A segment of length n is reserved for key distillation and is denoted by X on Alice’s side and Y on Bob’s side.
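
A minimal sketch of the seed-controlled partition follows. For illustration only, it assumes that the shared seed initializes Python's `random.Random` generator identically on both sides. The function name `partition_indices` and the example records are hypothetical.

```python
import random

def partition_indices(m: int, k: int, seed: int) -> tuple[list[int], list[int]]:
    """Split positions 0..m-1 into k parameter-estimation positions and
    n = m - k key-distillation positions, reproducibly from a shared seed."""
    rng = random.Random(seed)               # same seed -> same selection on both sides
    pe = sorted(rng.sample(range(m), k))    # uniformly random k-subset
    pe_set = set(pe)
    key = [i for i in range(m) if i not in pe_set]
    return pe, key

# Alice and Bob apply the same seed to their own measurement records.
alice_record = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]
bob_record = [1, 0, 1, 0, 0, 0, 1, 0, 1, 1]
pe_pos, key_pos = partition_indices(m=len(alice_record), k=4, seed=2024)
V = [alice_record[i] for i in pe_pos]    # Alice's parameter-estimation segment
W = [bob_record[i] for i in pe_pos]      # Bob's parameter-estimation segment
X = [alice_record[i] for i in key_pos]   # Alice's raw key segment (length n)
Y = [bob_record[i] for i in key_pos]     # Bob's raw key segment (length n)
```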

In the parameter-estimation step, Alice transmits a transcript of her V over the public channel. After receiving it, Bob compares this transcript with his corresponding W and determines whether the observed error rate is below a predefined threshold. If the threshold is exceeded, Bob sets the parameter-estimation flag to indicate failure, and the protocol aborts.
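
The threshold test can be sketched in a few lines. The names `parameter_estimation_passes` and `delta` are illustrative. The flag is modeled as a Python boolean rather than as the quantum register of the formal model.

```python
def parameter_estimation_passes(V: list[int], W: list[int], delta: float) -> bool:
    """Return True if the observed error rate between V and W does not exceed delta."""
    if len(V) != len(W) or not V:
        raise ValueError("V and W must be non-empty and of equal length")
    mismatches = sum(v != w for v, w in zip(V, W))
    return mismatches / len(V) <= delta

print(parameter_estimation_passes([1, 0, 1, 1], [1, 0, 0, 1], delta=0.3))  # True  (rate 0.25)
print(parameter_estimation_passes([1, 0, 1, 1], [0, 0, 0, 1], delta=0.3))  # False (rate 0.5)
```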

The error-correction procedure is characterized by a quintuple of parameters and functions. Section 7 explains the details of syndrome-based error correction. In short, to reconcile discrepancies between X and Y, Alice computes an error-correction syndrome and transmits its public transcript, of length r, over the authenticated classical channel. Bob applies an efficient correction algorithm, producing his best estimate of Alice’s X.

Both parties then check whether the error correction succeeded. Alice hashes X and Bob hashes his estimate of X, each using a function chosen at random from a family of universal hash functions. A seed generated by Alice and transmitted over the public channel determines the choice of hash function. After receiving Alice’s hash transcript of length t, Bob compares the two values and sets the error-correction flag accordingly. If the values differ, the protocol aborts.

In the final stage, privacy amplification is performed to meet the prescribed security parameters. A random hash function from a family of universal hash functions is selected using a seed that Alice generates and announces publicly. The final keys, of length l, are obtained by applying this function to X on Alice’s side and to Bob’s estimate of X on his side.
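
Both hashing steps draw a function from a universal family. For illustration only, the sketch below assumes the family of random binary Toeplitz matrices. This is a common choice in QKD post-processing, but the protocol description does not prescribe it. A seed determines all diagonals of the matrix, and the hash is a matrix–vector product modulo 2. The same helper yields either a t-bit verification tag or an l-bit final key. The helper name `toeplitz_hash` and the seed values are hypothetical.

```python
import numpy as np

def toeplitz_hash(bits: np.ndarray, out_len: int, seed: int) -> np.ndarray:
    """Hash a binary vector to out_len bits with a seed-selected random Toeplitz matrix (mod 2)."""
    in_len = bits.size
    rng = np.random.default_rng(seed)
    diag = rng.integers(0, 2, size=out_len + in_len - 1)  # one value per diagonal
    i = np.arange(out_len)[:, None]
    j = np.arange(in_len)[None, :]
    T = diag[i - j + in_len - 1]                          # constant along each diagonal
    return (T @ bits) % 2

rng = np.random.default_rng(7)
X = rng.integers(0, 2, size=64)   # Alice's raw key
X_hat = X.copy()                  # Bob's estimate after successful correction

t, l = 16, 32
tag_seed, pa_seed = 11, 12        # hypothetical seeds announced by Alice

# Verification: Bob compares the two t-bit tags computed with the same seed.
ec_ok = np.array_equal(toeplitz_hash(X, t, tag_seed), toeplitz_hash(X_hat, t, tag_seed))

# Privacy amplification: both sides compress to l bits with the same seed.
key_A = toeplitz_hash(X, l, pa_seed)
key_B = toeplitz_hash(X_hat, l, pa_seed)
```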

Table 1 summarizes the notation used in the modeling and proof, following [1], with additional registers introduced. The notation used to describe the error-correction algorithms is given separately in Section 7.

3. OTP-Protected Error Correction

We impose no restrictions on the choice of error-correction method, except that it must operate in a non-interactive (one-way) mode. In [1], the authors propose using a linear code defined by a parity-check matrix. Interactive error-correction methods could, in principle, also be protected by OTP encryption, but such an approach is beyond the scope of the present analysis.

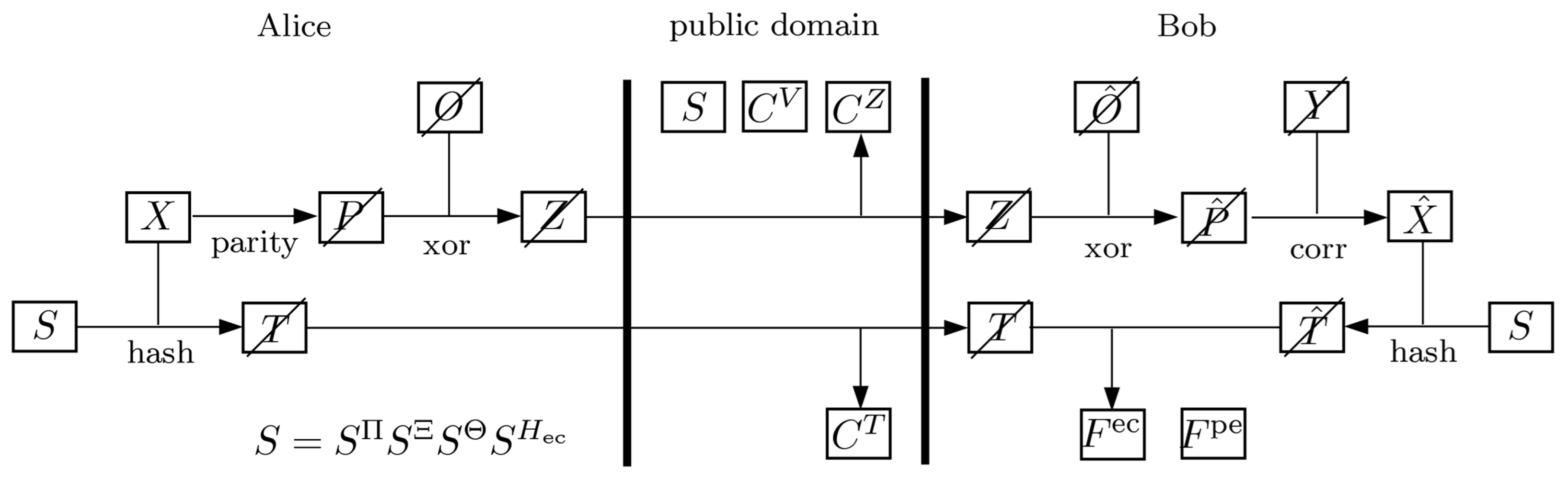

To describe the extension of the QKD protocol, we adopt the representation in Figure 1, which is based on the original representation in [1]. It provides a modified view of the joint evolution of the classical and quantum systems during and after error correction. In this diagram, boxes represent subsystems accessible to Alice, Bob, and the public channel, while crossed-out boxes indicate temporary classical systems. The protocol steps preceding and following error correction are identical to those in the reference formulation.

In the proposed extension, Alice and Bob agree on a subset of their raw key, which serves as a one-time pad. The corresponding classical OTP registers are denoted by O on Alice’s side and by a counterpart register on Bob’s side. Their quantum representations contain the respective measurement outcomes of the initial quantum systems A and B. Section 5 gives the formal definition of these new registers, together with the measurement maps that determine their contents.

The modified procedure is as follows. Alice first computes the error-correction data. She then applies the OTP protection by forming register Z as the bitwise XOR of these data with her OTP block O, where XOR denotes bitwise addition modulo 2. The public transcript of Z is then transmitted over the authenticated classical channel.
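
The OTP layer itself reduces to bitwise XOR. The sketch below uses arbitrary example vectors. Alice masks her error-correction data with O, and Bob unmasks the transcript with his own pad. Bob's pad comes from his raw key, so it may differ from O. The residual difference equals the XOR of the two pads, which means Bob receives the error-correction data with errors wherever the pads disagree.

```python
import numpy as np

def xor(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Bitwise addition modulo 2 of two equal-length binary vectors."""
    return np.bitwise_xor(a, b)

ec_data = np.array([1, 0, 1, 1, 0, 1, 0, 0])  # Alice's error-correction data
O = np.array([0, 1, 1, 0, 1, 0, 0, 1])        # Alice's OTP block (from her raw key)
O_bob = np.array([0, 1, 1, 0, 0, 0, 0, 1])    # Bob's OTP block: one mismatch at index 4

Z = xor(ec_data, O)            # public transcript of register Z
ec_data_bob = xor(Z, O_bob)    # Bob's unmasked, possibly noisy copy

# The residual error pattern equals the disagreement between the two pads.
residual = xor(ec_data, ec_data_bob)
print(residual)                # [0 0 0 0 1 0 0 0]
```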

After obtaining the transcript of Z, Bob reverses the transformation by XORing it with his own OTP block. He then applies the error-correction algorithm to obtain his estimate of X. This estimate is processed as in the reference protocol. Alice computes the hash value T of X and Bob computes the corresponding hash value of his estimate. Bob compares the two values to verify successful reconciliation and sets the error-correction flag accordingly. Depending on the result, the protocol either aborts or proceeds with privacy amplification, as described in Section 2.

5. Revised Mathematical Model

We now revise the mathematical model of the QKD protocol from [1], which serves as the basis for extending the original security proof to the OTP-enhanced version in the following section. As mentioned above, Alice and Bob each hold a collection of m individual quantum systems. Alice’s systems are described by a tensor product of m local Hilbert spaces, and Bob’s systems analogously. The systems are finite-dimensional, and their joint state is arbitrary and otherwise unrestricted, so that the joint input state is fully described by a density operator on the combined Hilbert space.

Once the random seeds have been distributed over the authenticated public channel, the global state of the protocol comprises the input state together with the seed registers. Each classical register is represented in the model as a quantum state. For example, the register encoding the seed that selects the parameter-estimation subset is described as a maximally mixed state. This state is uniform over the set of all subsets of size k chosen from m elements, and the basis states labeled by these subsets form an orthonormal basis of the register space.
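
For small parameters, the seed register can be written out explicitly. The sketch below uses the example values m = 5 and k = 2. It builds the maximally mixed state as a diagonal density matrix indexed by the k-element subsets, so each basis state has weight 1/C(m, k).

```python
import itertools
import math
import numpy as np

def seed_register_state(m: int, k: int) -> tuple[list[tuple[int, ...]], np.ndarray]:
    """Maximally mixed state over all k-subsets of {0, ..., m-1}.

    Basis vector |s> is indexed by the position of subset s in the returned list."""
    subsets = list(itertools.combinations(range(m), k))
    dim = len(subsets)             # equals binomial(m, k)
    rho = np.eye(dim) / dim        # uniform classical mixture of the |s><s|
    return subsets, rho

subsets, rho = seed_register_state(m=5, k=2)
print(len(subsets), math.comb(5, 2))  # 10 10
```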

The OTP modification only affects the error-correction phase, so parameter estimation is modeled exactly as in [1]. Measurements are represented as CPTP maps that transform quantum systems into the contents of a classical register. A general measurement map acts on a measured subsystem A and writes the outcome into a register X, with each outcome x associated with a measurement operator. Without going into the explicit representation in orthonormal bases, we consider the state obtained by applying the measurement map to the subsystems selected by the parameter-estimation seed. The outcomes are stored in registers V on Alice’s side and W on Bob’s side.

The measurement process is conceptually divided into two groups: (i) measurements used for parameter estimation and (ii) measurements used for extracting the secret key. This division is formal and has no impact on the practical realization of the protocol. Note that the measurement operators depend on bases determined by a random seed, which is represented as a maximally mixed classical state. Accordingly, the state in (6) is extended by a similarly constructed register for this seed.
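
The following is a deliberately simple instance of a measurement map. The sketch measures a single qubit in either the computational (Z) or the Hadamard (X) basis, with the basis selected by a seed bit. It returns the classical register as a diagonal density matrix with entries tr(M_x ρ). The qubit model and the basis pair are illustrative assumptions; the proof in [1] covers general measurements.

```python
import numpy as np

# Projectors for two complementary qubit bases (illustrative choice).
Z_BASIS = [np.array([[1, 0], [0, 0]], dtype=complex),
           np.array([[0, 0], [0, 1]], dtype=complex)]
PLUS = np.array([1, 1], dtype=complex) / np.sqrt(2)
MINUS = np.array([1, -1], dtype=complex) / np.sqrt(2)
X_BASIS = [np.outer(PLUS, PLUS.conj()), np.outer(MINUS, MINUS.conj())]

def measure(rho: np.ndarray, basis_bit: int) -> np.ndarray:
    """Measurement map rho -> sum_x tr(M_x rho) |x><x|, returned as a classical register."""
    projectors = Z_BASIS if basis_bit == 0 else X_BASIS
    probs = [np.real(np.trace(M @ rho)) for M in projectors]
    return np.diag(probs)

rho_plus = np.outer(PLUS, PLUS.conj())  # the state |+><+|
reg_z = measure(rho_plus, 0)            # uniform outcome in the Z basis
reg_x = measure(rho_plus, 1)            # deterministic outcome in the X basis
```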

To incorporate the OTP scheme, we modify the second group of measurements. Instead of the total measurement map defined in [1], we introduce a modified map that splits the raw keys into two components: X and O on Alice’s side, and Y and the corresponding OTP block on Bob’s side. Both OTP blocks have the length of the OTP-encrypted error-correction data, and the remaining raw key segments are shortened accordingly. The partition itself is arbitrary, provided that both parties select the same subset of the raw key. After the quantum systems A and B have been discarded, the resulting state is classical on all of these registers.

The parameter-estimation phase is modeled identically to [1]. Conditioning Equation (9) on the parameter-estimation outcome therefore amounts to applying the CPTP map of the parameter-estimation function, which determines the quantum representation of the parameter-estimation flag. Note that, for a general function f, the corresponding CPTP map leaves the register X intact while appending a new register Y that contains the value f(X).
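
In the usual notation for classical–quantum states, a map of this kind can be written as follows. This form assumes that the input register X is classical and that B stands for any other systems. The symbols are ours and need not match those of the original equation.

```latex
\mathcal{E}_f\Big(\sum_{x} p_x\,|x\rangle\langle x|_X \otimes \rho^{x}_{B}\Big)
  = \sum_{x} p_x\,|x\rangle\langle x|_X \otimes |f(x)\rangle\langle f(x)|_Y \otimes \rho^{x}_{B}
```

The register X and the side information B are left unchanged, and for every value x the appended register Y holds f(x).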

Relabeling V as the transcript published on the public channel and discarding W yields the input state for error correction and OTP encryption.

Consider the CPTP maps that implement the respective functions of Section 3, together with the map that computes the verification hash T and the success flag. The final state after error correction is then given by the composition of these maps. The new subsystems in this state arise from the respective CPTP maps and from the inclusion of the uncorrelated quantum representation of the random seed for the verification hash.

The modeling of privacy amplification remains unchanged from [1]. Specifically, the distilled keys are compressed via a universal hash function, which Alice selects using a random seed and announces publicly. The final keys are obtained by applying this function to Alice’s X and to Bob’s estimate of X, and the process is represented by a CPTP map. The final state of the protocol consists of the two keys together with a register that collects all transcripts, seeds, and flags exposed on the public channel. This state has the same structural form as in [1], although the OTP modifications change the sizes and interdependencies of the subspaces.

6. Security Proof Extension

We quantify the distinguishability between the CPTP map of the QKD protocol formalized above and that of an ideal protocol. In the ideal protocol, the final keys are replaced by identical, uniformly distributed random bit strings that are independent of the eavesdropper. For the entanglement-based formulation, this distinguishability is evaluated by the diamond distance, where the supremum is taken over all normalized states on the joint system of Alice, Bob, and the environment E. Here, E denotes the purifying environment controlled by the eavesdropper, Eve, and it suffices to assume that the joint state is pure. Since the purification represents the strongest possible adversary, any attack by Eve, whether collective, coherent, or memory-based, can be modeled as her holding the purification. It is nevertheless important to emphasize that real-world security can be compromised if the underlying assumptions are violated. For example, vulnerabilities can arise if the source of the quantum state deviates from the modeled behavior or if the assumption of a sealed laboratory does not hold.
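
For reference, the diamond-norm criterion referred to above takes the following form in commonly used notation. Here Φ denotes the map of the actual protocol and Φ_ideal that of the ideal protocol; both are our own labels.

```latex
\frac{1}{2}\big\|\Phi - \Phi_{\mathrm{ideal}}\big\|_{\diamond}
  = \sup_{\rho_{A^m B^m E}} \frac{1}{2}\Big\| \big((\Phi - \Phi_{\mathrm{ideal}}) \otimes \mathrm{id}_E\big)(\rho_{A^m B^m E}) \Big\|_1
  \le \varepsilon
```

The protocol is called $\varepsilon$-secure when this quantity does not exceed $\varepsilon$.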

In [1], the authors establish an upper bound on the diamond distance by uniformly bounding the trace distance between the protocol’s final state and the corresponding ideal state. More precisely, they consider the sub-normalized final state [1] (p. 5) conditioned on both successful parameter estimation and successful error correction. The ideal key of length l is modeled as the maximally mixed state over all l-bit strings, expressed in the orthonormal basis labeled by these strings.

The derivation of this bound can be summarized as follows. Lemma 1 of [1] (p. 15) establishes that the distance is bounded by the sum of two contributions: one quantifying the correctness of the protocol and the other quantifying its secrecy.

Theorem 2 of [1] (p. 16) upper-bounds the correctness term in terms of t, the length of the verification hash used during error correction.
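
For toy sizes, a brute-force check confirms the 2^-t collision probability of t-bit universal hashing. The sketch enumerates every t × n binary matrix, which together form a universal family of linear hash functions. For a fixed pair of distinct inputs, it counts the fraction of matrices under which the two inputs collide. It does not reproduce the exact statement of Theorem 2 of [1]; it only illustrates the collision property that such a bound relies on.

```python
import itertools
import numpy as np

def collision_fraction(x: np.ndarray, x_prime: np.ndarray, t: int) -> float:
    """Fraction of all t x n binary matrices M with M x = M x' (mod 2)."""
    n = x.size
    collisions = total = 0
    for entries in itertools.product((0, 1), repeat=t * n):
        M = np.array(entries).reshape(t, n)
        total += 1
        collisions += np.array_equal(M @ x % 2, M @ x_prime % 2)
    return collisions / total

x = np.array([1, 0, 1])
x_prime = np.array([1, 1, 1])                # differs from x in one position
print(collision_fraction(x, x_prime, t=2))   # 0.25, i.e. 2**-2
```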

For secrecy, the problem reduces to bounding a simplified trace-distance expression. The security analysis introduces a scalar parameter that bounds the probability of an unlikely event. In this event, parameter estimation passes based on the observed error rate between registers V and W, while the fraction of mismatches between X and Y exceeds the threshold by at least a prescribed statistical deviation. This parameter thus acts as a smoothing parameter, permitting optimization over nearby quantum states in non-asymptotic entropy calculations.

Before turning to the OTP-modified protocol, we recall the final result of Theorem 3 of [1] (p. 16), which provides the secrecy bound (20) together with the auxiliary quantities (21) and (22). In addition to the quantities already introduced, the last of these expressions requires the complementarity of Alice’s measurements in different bases, as defined in [1] (p. 9). Ideally, the measurements are maximally complementary. Combining Lemma 1 with Theorems 2 and 3 bounds the security of the original QKD protocol, expressed by the diamond distance, by the sum of the resulting correctness and secrecy terms.

The modifications to the proof for the OTP-extended protocol begin with a reformulated conclusion of Corollary 5 of [1] (p. 18). In particular, the registers used for key distillation are split into a key component and an OTP component: X and O on Alice’s side, and Y and the corresponding OTP block on Bob’s side. This change yields a correspondingly modified uncertainty relation. For consistency with [1], we continue to use the designations X and Y for the raw keys prior to distillation, although the introduction of the OTP registers makes them shorter than in the original. This uncertainty relation bounds Eve’s maximum probability of correctly guessing Alice’s key, given her quantum side information.

Smooth min- and max-entropies are employed to account adequately for finite-size effects and the possibility of early termination. For a sub-normalized state, these are defined in (25) and (26) by optimizing the corresponding non-smooth entropies over the sub-normalized states that are close to the given state in purified distance [1] (p. 4). The smoothing parameter $\varepsilon$ defines an $\varepsilon$-ball of nearby sub-normalized states around the given state, which makes the entropy bounds robust against statistical fluctuations. Equation (25) uses the standard definition of the (non-smooth) conditional quantum min-entropy [1] (p. 6). The conditional max-entropy in (26) follows from the duality relation $H_{\max}(A|B)_\rho = -H_{\min}(A|C)_\rho$, which holds for any tripartite pure state $\rho_{ABC}$.
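
For reference, the standard definitions referred to above read as follows in commonly used notation (cf. [1] and [10]). The symbols are ours; $\mathcal{S}_{\le}(AB)$ denotes the sub-normalized states on $AB$ and $P$ the purified distance.

```latex
H_{\min}^{\varepsilon}(A|B)_\rho = \max_{\tilde\rho \in \mathcal{B}^{\varepsilon}(\rho)} H_{\min}(A|B)_{\tilde\rho},
\qquad
H_{\max}^{\varepsilon}(A|B)_\rho = \min_{\tilde\rho \in \mathcal{B}^{\varepsilon}(\rho)} H_{\max}(A|B)_{\tilde\rho},

\mathcal{B}^{\varepsilon}(\rho) = \{\tilde\rho \in \mathcal{S}_{\le}(AB) : P(\tilde\rho, \rho) \le \varepsilon\},
\qquad
H_{\min}(A|B)_\rho = \max_{\sigma_B} \sup\{\lambda \in \mathbb{R} : \rho_{AB} \le 2^{-\lambda}\, \mathbb{1}_A \otimes \sigma_B\}.
```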

We now apply the modification introduced in the uncertainty relation to the bound on the conditional smooth max-entropy of the protocol state after successful parameter estimation. The adapted Proposition 8 of [1] (p. 19) yields the corresponding bound, valid for all parameter values that satisfy the two conditions stated there. No additional proofs are required, since the adjustments are purely notational and arise only from the introduction of the OTP registers on both sides.

Following the logic of Proposition 11 of [1] (p. 21), we combine this result with the uncertainty relation. This yields a lower bound on the smooth min-entropy of Alice’s key and OTP registers, conditioned on Eve’s information.

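The data-processing inequality [1] (p. 7) accounts for discarding W and rewriting V as its public transcript. This inequality states that $H_{\min}^{\varepsilon}(A|B)_\rho \le H_{\min}^{\varepsilon}(A|B')_{\mathcal{E}(\rho)}$ for any CPTP map $\mathcal{E}$ from $B$ to $B'$ acting on the conditioning system. Applying it yields the corresponding bound for the processed state.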

The OTP-encrypted error-correction data transmitted from Alice to Bob can be integrated using the chain rule [1] (p. 7), i.e., $H_{\min}^{\varepsilon}(A|BX)_\rho \ge H_{\min}^{\varepsilon}(A|B)_\rho - \log|X|$ for a classical register X. We apply this rule to the OTP-protected exchange in the same way as it is applied to the syndrome exchange of the original protocol, which gives (30). In (30), the subtracted term corresponds to the OTP-specific redundancy length, i.e., the bit length of the transcript of Z.

Next, we eliminate the explicit dependency on O in (30) by exploiting the properties of bitwise addition modulo 2. Consider first a sub-normalized classical–quantum state on registers X, Y, Z, and A, where X, Y, and Z are classical and A is a quantum system that may be correlated with them. Suppose the registers are related through bitwise modulo-2 addition, so that, given X and Z, the value of Y is uniquely determined, provided the function relating them is known. Then the non-smoothed conditional min-entropy of XY given ZA equals that of X given ZA. To see this, note that for a classical X conditioned on a quantum system B, the min-entropy can be expressed more conveniently via the guessing probability as $H_{\min}(X|B) = -\log p_{\mathrm{guess}}(X|B)$ [1] (p. 6). The classical Y is uniquely determined by X and Z, so anyone who guesses X correctly automatically knows Y. That is, $p_{\mathrm{guess}}(XY|B) = p_{\mathrm{guess}}(X|B)$, where B is taken to be the combined system ZA.
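
The identity can be checked numerically in a purely classical toy setting without quantum side information. In this setting, H_min(X|B) = -log2 of the sum over b of max_x P(x, b). The sketch draws arbitrary distributions for X and an independent pad Y and sets Z = g(X) XOR Y for a made-up public function g. It then confirms that, given Z, the pair (X, Y) has the same min-entropy as X alone. The function g and all distributions are illustrative assumptions.

```python
import itertools
import math
import random
from collections import defaultdict

def g(x: int) -> int:
    """Hypothetical public function of X (a stand-in for computing parity bits)."""
    return (3 * x + 1) % 4

def h_min(joint: dict, target, condition) -> float:
    """Classical min-entropy H_min(T|C) = -log2 sum_c max_t P(t, c)."""
    marginal = defaultdict(float)
    for outcome, p in joint.items():
        marginal[(target(outcome), condition(outcome))] += p
    best = defaultdict(float)
    for (_, c), p in marginal.items():
        best[c] = max(best[c], p)
    return -math.log2(sum(best.values()))

rng = random.Random(1)
weights_x = [rng.random() for _ in range(4)]
weights_y = [rng.random() for _ in range(4)]
p_x = [w / sum(weights_x) for w in weights_x]  # arbitrary distribution of 2-bit X
p_y = [w / sum(weights_y) for w in weights_y]  # arbitrary distribution of 2-bit Y

# Joint distribution of (x, y, z) with z = g(x) XOR y; Y is recoverable from X and Z.
joint = {(x, y, g(x) ^ y): p_x[x] * p_y[y]
         for x, y in itertools.product(range(4), repeat=2)}

h_x_given_z = h_min(joint, lambda o: o[0], lambda o: o[2])
h_xy_given_z = h_min(joint, lambda o: (o[0], o[1]), lambda o: o[2])
print(math.isclose(h_x_given_z, h_xy_given_z))  # True
```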

The following equalities then hold by construction. The first follows from definition (25), the second from the fact that the non-smoothed min-entropies are maximized over the same $\varepsilon$-ball, and the last again from the definition. Throughout, the relation between X, Y, and Z is enforced by construction. Smoothing before tracing out Y ensures a tight, operationally meaningful bound. By contrast, restricting the smoothing region too early can result in a smaller $\varepsilon$-ball. That would yield only the weaker relation $H_{\min}^{\varepsilon}(XY|ZA) \ge H_{\min}^{\varepsilon}(X|ZA)$, which holds in general [10] (p. 82). We substitute the registers of the OTP-extended protocol, with O in the role of Y, apply the sub-normalization, and make the corresponding replacements. The result is a bound without explicit dependence on O.

With the lower bound on the min-entropy established in the same form as in [1], we can proceed analogously. The remaining argument has four steps: (i) add the independent seed of the error-correction verification hash to the left-hand side, (ii) subtract the verification hash length t on the right-hand side, (iii) impose the required condition via Lemma 10 of [1] (p. 21), and (iv) conclude with Corollary 12 of [1] (p. 22). These steps recover the results of Theorem 3 of [1], i.e., (20)–(22), with the only difference that r is replaced by the OTP-specific redundancy length.

Comparing the OTP-protected scheme with the original formulation for a given m, we first note that the raw key length available for distillation differs. In the original scheme, n equals m − k, whereas in the OTP scheme n is reduced to m − k minus the OTP-specific redundancy length. Substituting the latter into the expression for the redundancy (3) and rearranging expresses the OTP-specific redundancy in terms of m and k. The OTP-specific redundancy exceeds r when correcting the same message length, because the decrypted parity bits are themselves subject to errors. However, the effective message length in the OTP-protected variant is shortened. As a result, for a given m and fixed protocol parameters, the OTP-specific redundancy and r match exactly.
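
The matching can be written out explicitly under a stated assumption. Assume, for illustration only, that the redundancy expression (3) has the common form r = f·h(Q)·N. Here f is the error-correction efficiency, h the binary entropy, Q the error rate, and N the number of bits exposed to errors. In the syndrome scheme, N = n = m − k. In the OTP scheme, both the shortened message of length m − k − r' and the r' decrypted parity bits are noisy. The symbol r' for the OTP-specific redundancy is ours.

```latex
r' = f\,h(Q)\,\big[(m - k - r') + r'\big] = f\,h(Q)\,(m - k) = r
```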

Consequently, the achievable key rate l/m is identical in both schemes for a fixed security parameter $\varepsilon$. In this respect, the OTP-extended and original protocols are equivalent. Equation (32) is exact, and the rest of the proof reuses the bounds of the original proof, so the resulting security bound is as tight as that of the reference scheme.

7. Error-Correction Performance

We demonstrate the benefits of the OTP scheme using LDPC codes, which achieve performance close to the Shannon limit for reliable communication over noisy channels. First introduced by Gallager in 1962 [11], LDPC codes became practical with the rise of efficient computational techniques and are now widely deployed. Their main feature is a sparse parity-check matrix, predominantly zeros with relatively few ones. This sparsity enables efficient iterative decoding, most commonly implemented via belief-propagation or message-passing algorithms.

In the following, we compare syndrome-based error correction with the parity-based OTP approach. Table 2 summarizes the notation used to describe the error-correction algorithms.

In the syndrome-based method, Alice holds a sparse parity-check matrix H of size r × n. She calculates the syndrome of her raw key (37) by multiplying H by her raw key, represented as a column vector of length n, with all operations performed modulo 2. The rows of H correspond to r parity-check equations.
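
A toy version of the syndrome computation follows. The 3 × 6 matrix is an arbitrary small example, not an LDPC matrix of practical size. The NumPy product is taken modulo 2 to obtain the r-bit syndrome.

```python
import numpy as np

# Arbitrary example parity-check matrix H (r = 3 rows, n = 6 columns).
H = np.array([[1, 1, 0, 1, 0, 0],
              [0, 1, 1, 0, 1, 0],
              [1, 0, 1, 0, 0, 1]])
x = np.array([1, 0, 1, 1, 0, 0])  # Alice's raw key as a column vector of length n

syndrome = H @ x % 2              # r parity-check equations evaluated modulo 2
print(syndrome)                   # [0 1 0]
```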

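After obtaining the syndrome, Bob applies an LDPC decoding algorithm to recover the most probable candidate for Alice’s key, i.e., the vector closest to his raw key, subject to condition (38). This condition requires that the candidate, extended by the received syndrome, satisfy the parity-check equations of the identity-extended parity-check matrix. That matrix is obtained by appending an r × r identity matrix to H.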

Equivalently, the process can be described in terms of error vectors. Bob first computes the syndrome of his own raw key. He then identifies the most likely error vector whose syndrome equals the modulo-2 sum of Alice’s syndrome and his own. Finally, he reconstructs Alice’s key by adding this error vector to his raw key. Both formulations lead to the same corrected key.
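
The error-vector formulation can be illustrated with a small example matrix. Instead of LDPC belief propagation, the sketch uses an exhaustive search for the lowest-weight error vector. This search is feasible only for tiny n and stands in for a real decoder; it does not describe one.

```python
import itertools
import numpy as np

H = np.array([[1, 1, 0, 1, 0, 0],
              [0, 1, 1, 0, 1, 0],
              [1, 0, 1, 0, 0, 1]])
x = np.array([1, 0, 1, 1, 0, 0])   # Alice's raw key
y = np.array([1, 0, 0, 1, 0, 0])   # Bob's raw key: one error at index 2

s_alice = H @ x % 2                # received from Alice
s_bob = H @ y % 2                  # Bob's own syndrome
target = (s_alice + s_bob) % 2     # syndrome of the error vector

def min_weight_error(H: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Exhaustive search for the lowest-weight e with H e = target (mod 2)."""
    n = H.shape[1]
    for weight in range(n + 1):
        for support in itertools.combinations(range(n), weight):
            e = np.zeros(n, dtype=int)
            for i in support:
                e[i] = 1
            if np.array_equal(H @ e % 2, target):
                return e
    raise ValueError("no error vector matches the syndrome")

e = min_weight_error(H, target)
x_hat = (y + e) % 2                # Bob's estimate of Alice's key
print(np.array_equal(x_hat, x))    # True
```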

In contrast, parity-based error correction over the noisy channel requires Alice to use a parity-generator matrix P of size r × (n − r). This matrix is derived by decomposing H into two blocks, H = (A | B). Here, A is a submatrix of size r × (n − r) corresponding to the parity-check equations for the message bits. B is a square submatrix of dimension r × r corresponding to the parity-check equations for the parity bits themselves. The generator matrix can then be calculated as $P = B^{-1}A$ (39), with all arithmetic performed modulo 2.

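Alice first computes her parity bits (40) by multiplying P by her raw key, represented as a column vector of length n − r. Alice then encrypts the parity bits by adding her one-time pad vector, and Bob decrypts them by adding his own one-time pad vector. Together, these two steps effectively emulate the transmission of the parity bits through a noisy channel. Bob obtains the parity bits plus the modulo-2 difference of the two pad vectors, which inherits the errors of the raw key.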
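
For the parity-based variant, the sketch below uses a small illustrative matrix chosen so that B is nonsingular. It splits H into A (message columns) and B (parity columns) and computes P = B^-1 A over GF(2) with a small Gauss–Jordan routine. It then checks that the resulting codeword satisfies every parity check.

```python
import numpy as np

def gf2_inv(M: np.ndarray) -> np.ndarray:
    """Invert a square binary matrix over GF(2) by Gauss-Jordan elimination."""
    size = M.shape[0]
    aug = np.concatenate([M % 2, np.eye(size, dtype=M.dtype)], axis=1)
    for col in range(size):
        candidates = np.nonzero(aug[col:, col])[0]
        if candidates.size == 0:
            raise ValueError("matrix is singular over GF(2)")
        pivot = col + candidates[0]
        aug[[col, pivot]] = aug[[pivot, col]]  # move the pivot row into place
        for row in range(size):
            if row != col and aug[row, col]:
                aug[row] ^= aug[col]           # eliminate (addition = XOR)
    return aug[:, size:]

# Example split H = (A | B): A acts on n - r = 3 message bits, B on r = 3 parity bits.
A = np.array([[1, 0, 1], [1, 1, 0], [0, 1, 1]])
B = np.array([[1, 1, 0], [0, 1, 1], [0, 0, 1]])  # nonsingular over GF(2)
H = np.concatenate([A, B], axis=1)

P = gf2_inv(B) @ A % 2           # parity-generator matrix
x = np.array([1, 0, 1])          # message (raw key) bits
p = P @ x % 2                    # parity bits
codeword = np.concatenate([x, p])
print(H @ codeword % 2)          # [0 0 0]: all parity checks satisfied
```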

After obtaining the decrypted parity bits, Bob employs LDPC decoding algorithms to find the most probable key, subject to the parity-check equations of H (41). Together with its parity bits, this key must be closest to his raw key concatenated with the decrypted parity bits. Although the identity-extended matrix in (38) is larger than H, the decoding task (38) can be considered just as difficult as (41). In the syndrome-based approach, the received syndrome in (38) is already correct, whereas in the OTP scheme errors are present both in Bob’s raw key and in the decrypted parity bits. The combined length of these erroneous components equals the length of Bob’s raw key alone in the syndrome case. This holds provided that the message length plus the parity length in the OTP scheme equals the raw key length of the syndrome scheme. The advantage of the OTP scheme lies on Alice’s side. Because of the smaller matrix dimensions, computing (40) requires about r² fewer operations than computing (37). This estimate is approximate, since different algorithmic optimizations may be applied in practice.

Because the decoding tasks are comparable, the robustness of the QKD protocol, measured by its success probability, remains essentially the same for both schemes, up to random variations. In contrast, the encoding tasks differ in difficulty. We express the ratio of encoding problem sizes as the number of elements in P relative to that in H and use (36). This gives the ratio (42), which equals r(n − r)/(rn) = 1 − r/n.

Since the same parity-check matrix can be reused across multiple sessions, P need not be recalculated for each session. Computing (39) requires the submatrix B to be nonsingular, but generating the parity bits does not require explicit inversion of B. Instead, an LU decomposition B = LU can be performed, where L is a lower triangular matrix and U is an upper triangular matrix. By heuristically rearranging the rows and columns of H, both L and U can be made sparse. This enables efficient calculation of the parity bits using standard forward and backward substitution.
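
The LU route can be sketched in a toy setting. Rather than factorizing a given B, the example constructs B from a unit lower-triangular L and a unit upper-triangular U, so no pivoting is needed. It then obtains the parity bits p from B p = A x by forward and backward substitution modulo 2, without forming the inverse of B. The row and column reordering for sparsity is omitted, and the matrices are arbitrary examples.

```python
import numpy as np

def forward_substitution_gf2(L: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Solve L y = b (mod 2) for unit lower-triangular L."""
    y = np.zeros_like(b)
    for i in range(len(b)):
        y[i] = (b[i] + L[i, :i] @ y[:i]) % 2    # minus equals plus modulo 2
    return y

def backward_substitution_gf2(U: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Solve U p = y (mod 2) for unit upper-triangular U."""
    p = np.zeros_like(y)
    for i in reversed(range(len(y))):
        p[i] = (y[i] + U[i, i + 1:] @ p[i + 1:]) % 2
    return p

L = np.array([[1, 0, 0], [1, 1, 0], [0, 1, 1]])
U = np.array([[1, 1, 0], [0, 1, 1], [0, 0, 1]])
B = L @ U % 2                        # nonsingular by construction
A = np.array([[1, 0, 1], [1, 1, 0], [0, 1, 1]])
x = np.array([1, 1, 0])              # message bits

b = A @ x % 2                        # right-hand side of B p = A x (mod 2)
p = backward_substitution_gf2(U, forward_substitution_gf2(L, b))
print(np.array_equal(B @ p % 2, b))  # True
```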

In practice, the raw key is segmented so that parity-check matrices of manageable size can be used, suitable for software or, preferably, efficient hardware implementation. For example, 5G parity-check matrices [12] (pp. 19–26) can be employed. From the 5G Base Graph 1 matrix with a lifting factor of 224, a matrix H of size 5072 × 10,000 can be extracted. Experimentally, this matrix achieves an error-correction success rate of 0.99 at a bit error rate of 0.09. The resulting error-correction efficiency follows from comparing r = 5072 with the theoretical redundancy at this bit error rate. The corresponding parity-generator matrix P has dimensions 5072 × 4928, which gives a ratio (42) of 0.4928. In other words, in this setup, syndrome encoding is approximately twice as demanding as parity encoding when assessed purely in terms of problem size.

In the syndrome scheme, H is used to generate the error-correction data. In the OTP scheme, it serves primarily to verify parity-check equations on the receiver side, an approach more in line with classical wireless communication. One could alternatively use H as the parity generator in the OTP case. However, this would break the equivalence between the two decoding problems, since their dimensions would no longer align.

The final key length l and the parameters k and t, together with the remaining optimized protocol parameters, are identical in both schemes for the same m and the same security and channel parameters. However, the length n of the distilled keys differs, so the compression ratio used in privacy amplification must be adjusted accordingly. In particular, the OTP scheme requires a compression ratio that is only a fraction of that of the reference scheme. This fraction is independent of m and coincides with the size ratio of the encoding problems (42).
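
A short numerical check illustrates the independence from m. The check makes two assumptions. The redundancy is taken as r = f·h(Q)·(m − k), identical in both schemes, and the compression ratio is taken as the distilled key length divided by the final key length. The values of l, k, f, and Q are arbitrary placeholders, not optimized protocol parameters; l cancels in the ratio.

```python
import math

def binary_entropy(q: float) -> float:
    """Binary (Shannon) entropy h(q) in bits."""
    return -q * math.log2(q) - (1 - q) * math.log2(1 - q)

f, qber, l = 1.16, 0.09, 1000              # placeholder parameters
ratios = []
for m, k in [(20_000, 2_000), (100_000, 8_000)]:
    r = f * binary_entropy(qber) * (m - k)  # assumed redundancy, identical in both schemes
    n_ref, n_otp = m - k, m - k - r         # distilled key lengths
    ratios.append((n_otp / l) / (n_ref / l))  # OTP compression ratio relative to reference

expected = 1 - f * binary_entropy(qber)     # depends only on f and Q
print([round(x, 4) for x in ratios], round(expected, 4))
```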

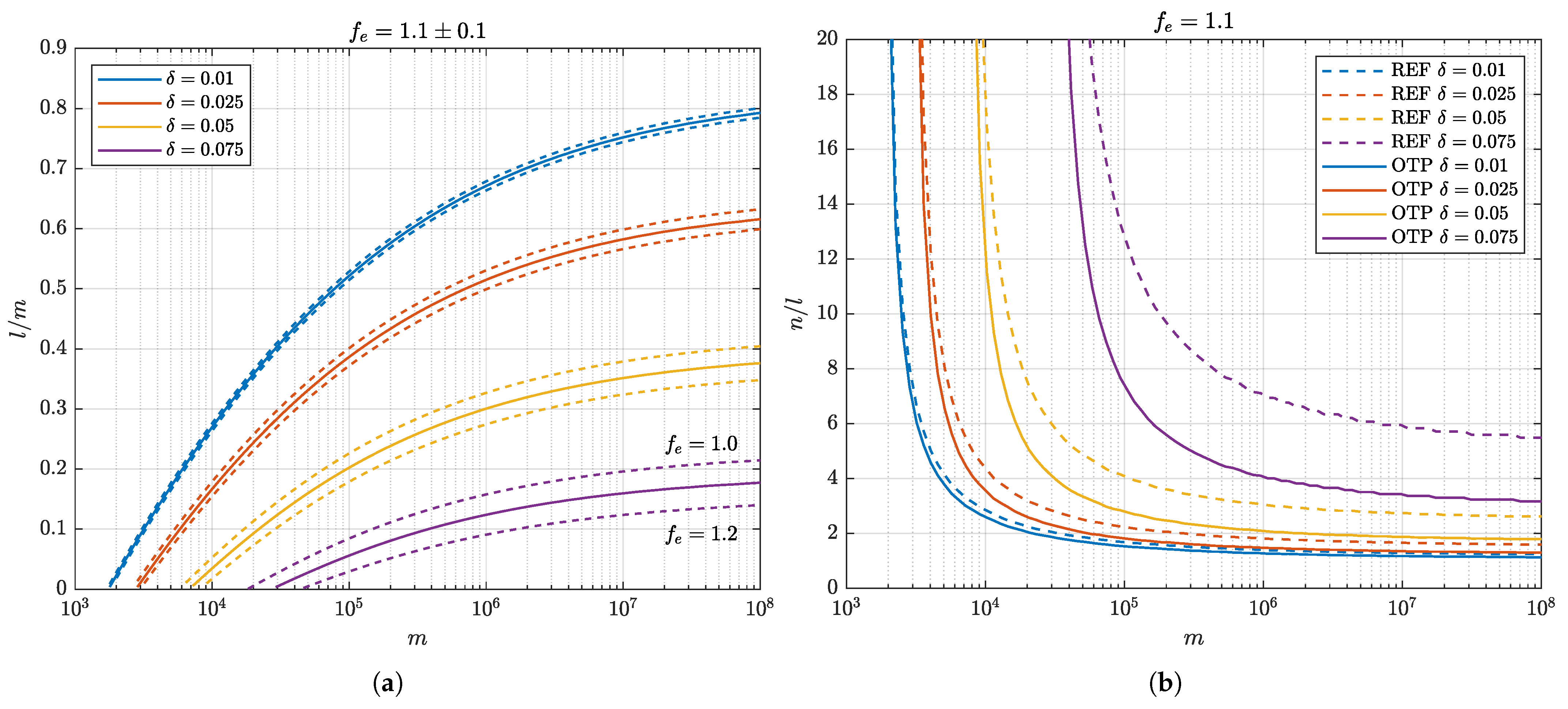

Figure 2a presents the achievable key rates l/m, determined by numerical optimization for a fixed security parameter and error-correction efficiency over a range of parameter values. Figure 2b shows the corresponding compression ratios under the same settings.