Image-Based Telecom Fraud Detection Method Using an Attention Convolutional Neural Network

Abstract

1. Introduction

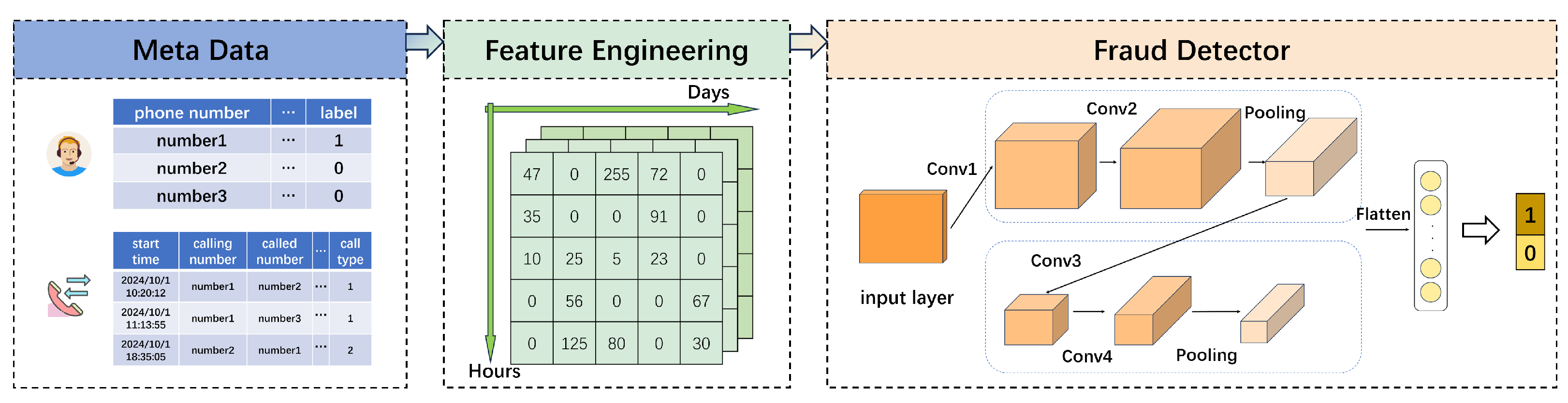

- (1) To address the challenge of automatically extracting useful features from telecom data, we propose a feature transformation mechanism that converts Call Detail Record (CDR) text data into structured matrices. This mechanism transforms key features, such as the proportion of call duration per caller and the number of called numbers, into image-like matrices that capture the temporal and behavioral patterns of user interactions. These matrices are then stacked into an 8-channel tensor, which serves as a rich representation of the user’s communication behavior. This transformation automates feature extraction and reduces the need for manual intervention from domain experts.
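The transformation in contribution (1) can be illustrated with a minimal sketch. It assumes that the 24 × 31 grid of the input layer corresponds to hour of day by day of month, which the text here does not state explicitly. The record fields (`day`, `hour`, `duration`, `callee`) and the two example channels are hypothetical; the paper stacks eight feature matrices.

```python
HOURS, DAYS = 24, 31  # assumed meaning of the 24 x 31 grid: hour of day by day of month

def cdr_to_matrix(records, value_fn):
    """Aggregate one user's call records into an hour-by-day matrix."""
    matrix = [[0.0] * DAYS for _ in range(HOURS)]
    for rec in records:
        matrix[rec["hour"]][rec["day"] - 1] += value_fn(rec)
    return matrix

def stack_channels(matrices):
    """Stack per-feature matrices into a tensor indexed as [hour][day][channel]."""
    return [[[m[h][d] for m in matrices] for d in range(DAYS)] for h in range(HOURS)]

# Hypothetical call records for a single user (field names are illustrative).
records = [
    {"day": 1, "hour": 9, "duration": 60.0, "callee": "A"},
    {"day": 1, "hour": 9, "duration": 30.0, "callee": "B"},
    {"day": 3, "hour": 22, "duration": 120.0, "callee": "A"},
]
total_duration = sum(r["duration"] for r in records)

# Two illustrative channels: call count and share of total call duration.
feature_fns = [
    lambda r: 1.0,
    lambda r: r["duration"] / total_duration,
]
tensor = stack_channels([cdr_to_matrix(records, fn) for fn in feature_fns])
shape = (len(tensor), len(tensor[0]), len(tensor[0][0]))
print(shape)            # (24, 31, 2)
print(tensor[9][0][0])  # 2.0 calls at 09:00 on day 1
```

Each channel is an independent aggregation over the same grid, so adding a feature only requires another function in `feature_fns`.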

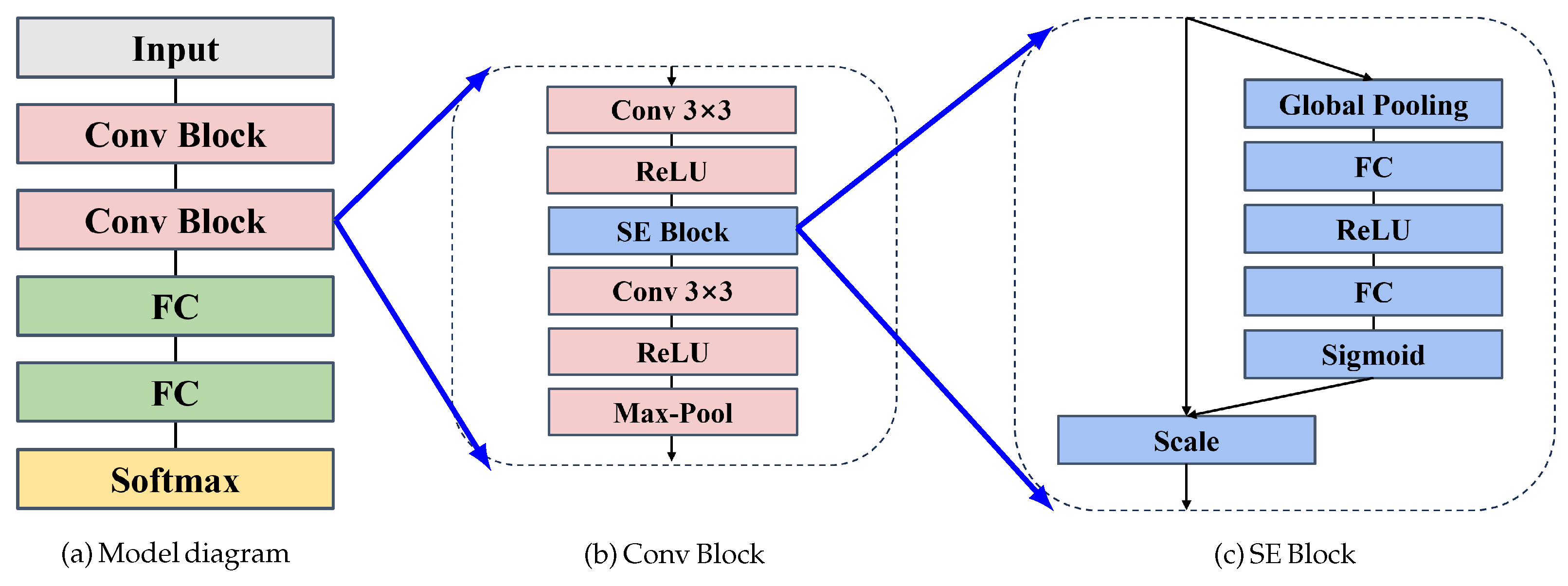

- (2) We propose a novel approach that combines Squeeze-and-Excitation (SE) blocks [36] with a Convolutional Neural Network (CNN) for detecting telephone fraud. The SE blocks dynamically learn a set of weights that enable the model to emphasize the most informative features while suppressing less relevant ones. This adaptive adjustment of channel importance enhances the model’s ability to focus on critical features, improving performance on complex tasks like fraud detection. By incorporating SE blocks into the CNN, our method strengthens the network’s feature selection process, leading to more accurate and reliable fraud detection outcomes.
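The channel reweighting performed by an SE block, as described in contribution (2), can be sketched in plain Python. This is an illustration of the general squeeze, excitation, and scale steps from [36], not the authors' implementation: the tiny tensor sizes, the reduction ratio `R`, and the random weights are hypothetical, biases are omitted, and a practical model would use a deep learning framework.

```python
import math
import random

def se_block(fmap, w1, w2):
    """Apply squeeze-and-excitation to fmap[h][w][c]; return (scaled map, channel weights)."""
    height, width, channels = len(fmap), len(fmap[0]), len(fmap[0][0])
    # Squeeze: global average pooling yields one descriptor per channel.
    z = [
        sum(fmap[i][j][c] for i in range(height) for j in range(width)) / (height * width)
        for c in range(channels)
    ]
    # Excitation: bottleneck layer with ReLU, then expansion layer with sigmoid.
    hidden = [max(0.0, sum(w * x for w, x in zip(row, z))) for row in w1]
    scale = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w2]
    # Scale: multiply every spatial position by its channel weight.
    out = [
        [[fmap[i][j][c] * scale[c] for c in range(channels)] for j in range(width)]
        for i in range(height)
    ]
    return out, scale

random.seed(0)
H, W, C, R = 2, 3, 4, 2  # hypothetical sizes; R is the reduction ratio
fmap = [[[random.random() for _ in range(C)] for _ in range(W)] for _ in range(H)]
w1 = [[random.uniform(-1, 1) for _ in range(C)] for _ in range(C // R)]  # (C/R) x C
w2 = [[random.uniform(-1, 1) for _ in range(C // R)] for _ in range(C)]  # C x (C/R)

out, scale = se_block(fmap, w1, w2)
print(len(scale))  # 4, one weight per channel
```

Because the sigmoid keeps each weight strictly between 0 and 1, the block can only attenuate channels relative to one another; the output keeps the spatial size and channel count of its input.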

2. Related Work

2.1. Rule-Based Methods

2.2. Traditional Machine Learning

2.3. Deep Learning Approaches

2.4. Graph Neural Networks

3. Materials and Methods

3.1. Datasets

3.2. A Fraud Detection Framework

3.2.1. Feature Engineering

3.2.2. Convolutional Neural Network

4. Experiment and Discussion

4.1. Experiment Setup

4.1.1. Training Environment

4.1.2. Parameter Settings

4.1.3. Evaluation Metrics

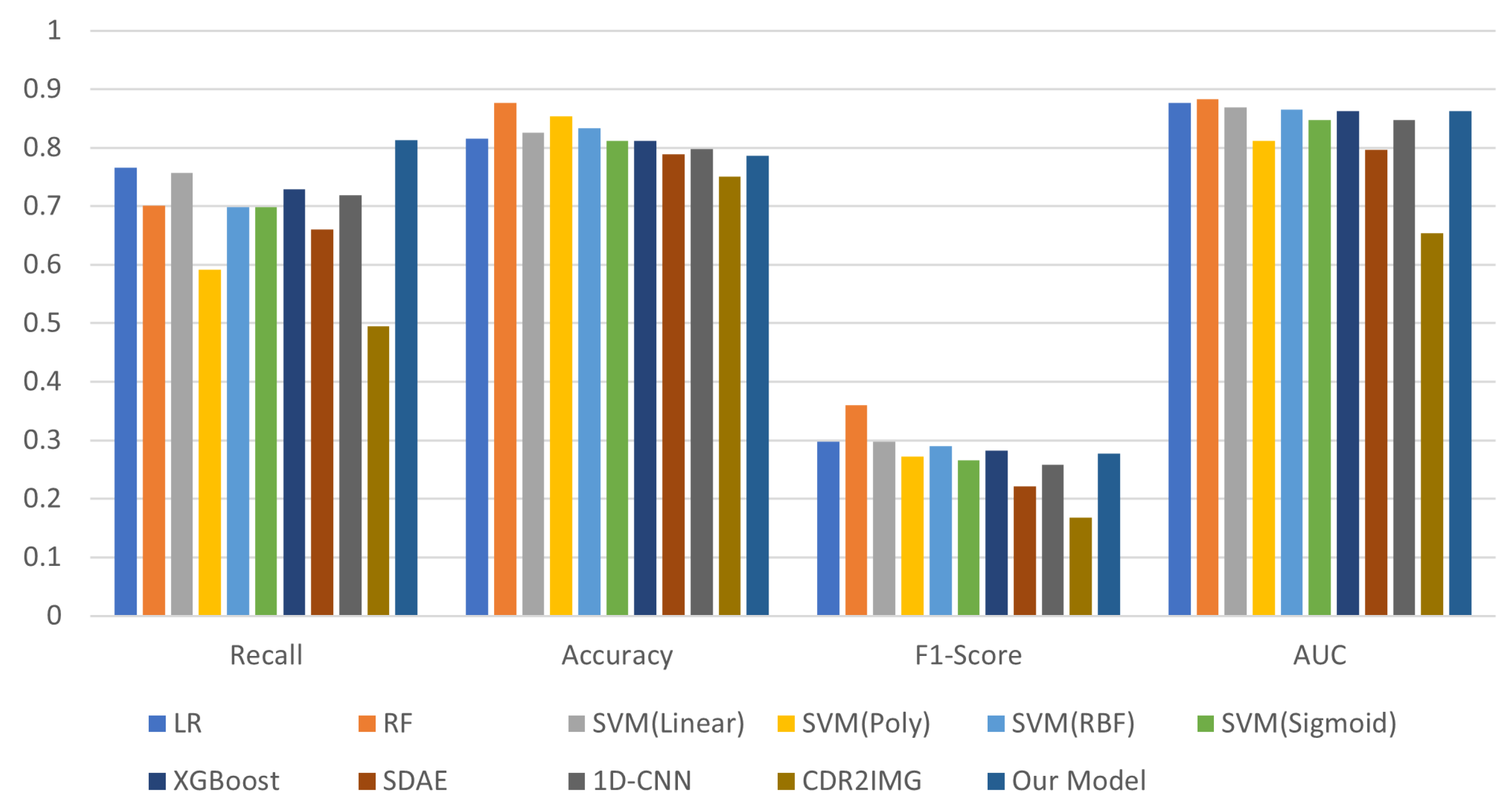

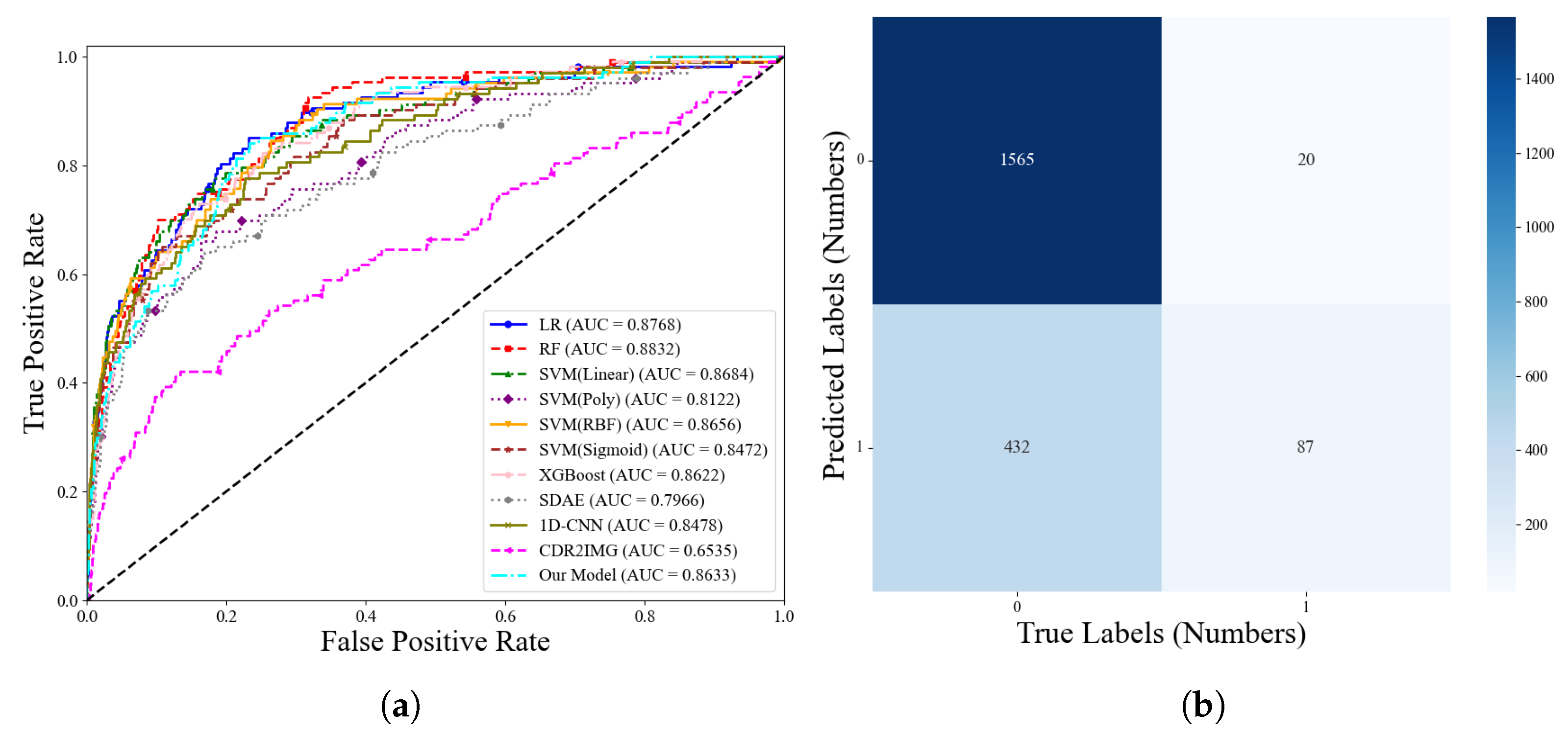

4.2. Experimental Analysis

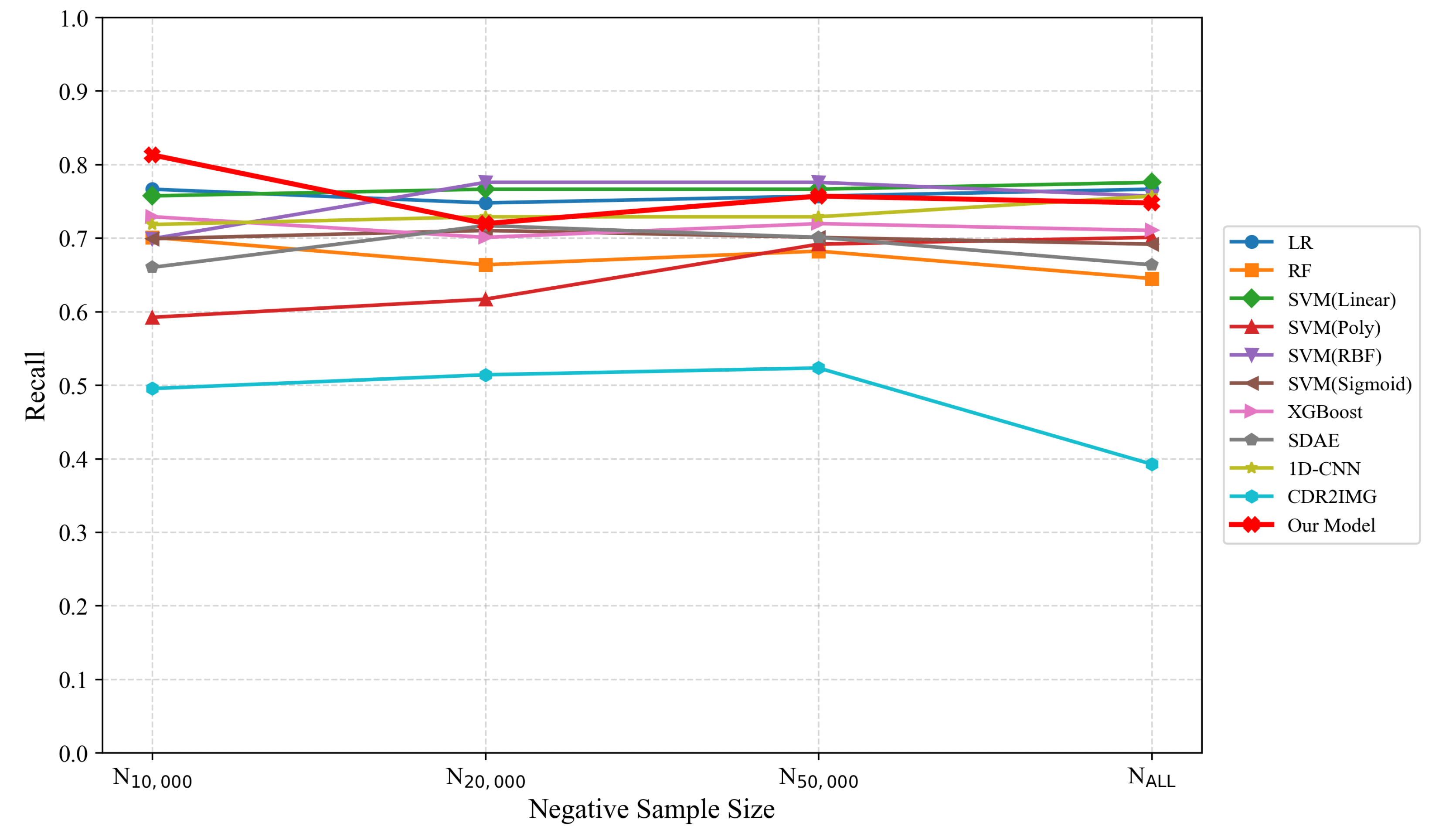

4.2.1. Performance Comparison

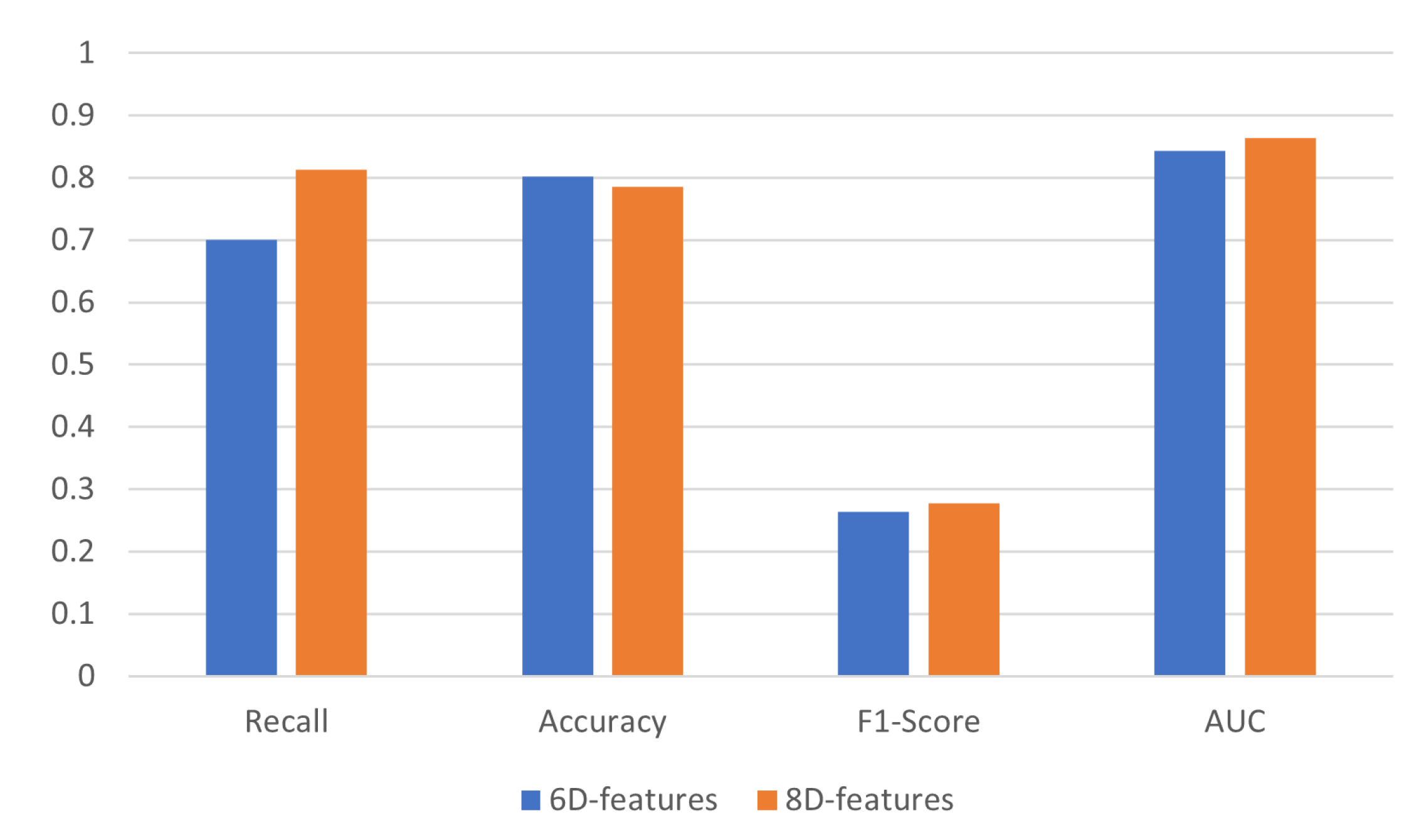

4.2.2. Ablation Study

5. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhu, R.; Ye, H.; Sun, H.; Li, X.; Duan, Y.; Hou, J. Construction and application of knowledge-base in telecom fraud domain. Int. J. Intell. Inf. Database Syst. 2021, 14, 198–214.

- Shen, S. Study on Telecommunication Fraud from a Student’s Perspective. Int. J. Front. Sociol. 2023, 5, 137–142.

- The “Two Highs and One Ministry” Issued the “Opinions on Several Issues Concerning the Application of Law in Handling Criminal Cases of Telecom Network Fraud, etc. (II)”. 2021. Available online: https://www.mps.gov.cn:8090/n2254098/n4904352/c7942849/content.html (accessed on 21 September 2025).

- “Public Security 2021” Year-End Review Report. 2021. Available online: https://www.mps.gov.cn/n2254314/n6409334/c8294658/content.html (accessed on 21 September 2025).

- The Crackdown and Governance of New Types of Telecom and Internet-Related Crimes Have Shown Significant Results. 2023. Available online: https://www.mps.gov.cn/n2254314/n6409334/c9061407/content.html (accessed on 21 September 2025).

- Ministry of Public Security: In 2023, a Total of 437,000 Telecom and Internet Fraud Cases Were Solved. 2024. Available online: https://www.chinanews.com.cn/gn/2024/01-09/10142690.shtml (accessed on 21 September 2025).

- How to Overcome the Challenges in Combating Telecom Network Fraud Crimes. 2017. Available online: https://www.spp.gov.cn/llyj/201702/t20170205_180096.shtml (accessed on 21 September 2025).

- Gopal, R.K.; Meher, S.K. A rule-based approach for anomaly detection in subscriber usage pattern. In World Academy of Science, Engineering and Technology; WASET.ORG: Riverside, CT, USA, 2007; pp. 396–399.

- Taniguchi, M.; Haft, M.; Hollmén, J.; Tresp, V. Fraud detection in communication networks using neural and probabilistic methods. In Proceedings of the 1998 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP’98 (Cat. No. 98CH36181), Seattle, WA, USA, 15 May 1998; Volume 2, pp. 1241–1244.

- Fawcett, T.; Provost, F. Adaptive fraud detection. Data Min. Knowl. Discov. 1997, 1, 291–316.

- Saaid, F.A.; King, R.; Nur, D. Development of Users’ Call Profiles using Unsupervised Random Forest. In Proceedings of the Third Annual ASEARC Conference, Newcastle, Australia, 7–8 December 2009.

- Lu, C.; Lin, S.; Liu, X.; Shi, H. Telecom fraud identification based on ADASYN and random forest. In Proceedings of the 2020 5th International Conference on Computer and Communication Systems (ICCCS), Shanghai, China, 15–18 May 2020; pp. 447–452.

- Lihong, B.J. Fraud Phone Identification And Management Based On Big Data Mining Technology. Chang. Inf. Commun. 2021, 34, 126–128.

- Ji, Z.; Ma, Y.c.; Li, S.; Li, J.l. SVM based telecom fraud behavior identification method. Comput. Eng. Softw. 2017, 38, 46–51.

- Wang, D.; Wang, Q.-y.; Zhan, S.-y.; Li, F.-x.; Wang, D.-z. A feature extraction method for fraud detection in mobile communication networks. In Proceedings of the Fifth World Congress on Intelligent Control and Automation (IEEE Cat. No. 04EX788), Hangzhou, China, 15–19 June 2004; Volume 2, pp. 1853–1856.

- Li, R.; Zhang, Y.; Tuo, Y.; Chang, P. A novel method for detecting telecom fraud user. In Proceedings of the 2018 3rd International Conference on Information Systems Engineering (ICISE), Shanghai, China, 4–6 May 2018; pp. 46–50.

- Sallehuddin, R.; Ibrahim, S.; Zain, A.M.; Elmi, A.H. Detecting SIM box fraud by using support vector machine and artificial neural network. J. Teknol. (Sci. Eng.) 2015, 74, 131–143.

- Subudhi, S.; Panigrahi, S. Quarter-sphere support vector machine for fraud detection in mobile telecommunication networks. Procedia Comput. Sci. 2015, 48, 353–359.

- Arafat, M.; Qusef, A.; Sammour, G. Detection of wangiri telecommunication fraud using ensemble learning. In Proceedings of the 2019 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT), Amman, Jordan, 9–11 April 2019; pp. 330–335.

- Gowri, S.M.; Ramana, G.S.; Ranjani, M.S.; Tharani, T. Detection of telephony spam and scams using recurrent neural network (RNN) algorithm. In Proceedings of the 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 19–20 March 2021; Volume 1, pp. 1284–1288.

- Zhen, Z.; Gao, J. CDR2IMG: A Bridge from Text to Image in Telecommunication Fraud Detection. Comput. Syst. Sci. Eng. 2023, 47, 955.

- Yang, J.-K.; Xia, W.C. Fraud Call Identification Based on User Behavior Analysis. Comput. Syst. Appl. 2021, 30, 311–316.

- Wahid, A.; Msahli, M.; Bifet, A.; Memmi, G. NFA: A neural factorization autoencoder based online telephony fraud detection. Digit. Commun. Netw. 2024, 10, 158–167.

- Li, S.; Xu, G.; Liu, Y. Fraud Call Identification Based on Broad Learning System and Convolutional Neural Networks. In Proceedings of the 2022 IEEE 8th International Conference on Computer and Communications (ICCC), Chengdu, China, 9–12 December 2022; pp. 1471–1476.

- Hu, X.; Chen, H.; Liu, S.; Jiang, H.; Chu, G.; Li, R. BTG: A Bridge to Graph machine learning in telecommunications fraud detection. Future Gener. Comput. Syst. 2022, 137, 274–287.

- Ren, L.; Zang, Y.; Hu, R.; Li, D.; Wu, J.; Huan, Z.; Hu, J. Do not ignore heterogeneity and heterophily: Multi-network collaborative telecom fraud detection. Expert Syst. Appl. 2024, 257, 124974.

- Chu, G.; Wang, J.; Qi, Q.; Sun, H.; Tao, S.; Yang, H.; Liao, J.; Han, Z. Exploiting Spatial-Temporal Behavior Patterns for Fraud Detection in Telecom Networks. IEEE Trans. Dependable Secur. Comput. 2023, 20, 4564–4577.

- Hu, X.; Chen, H.; Chen, H.; Li, X.; Zhang, J.; Liu, S. Mining mobile network fraudsters with augmented graph neural networks. Entropy 2023, 25, 150.

- Wu, J.; Hu, R.; Li, D.; Ren, L.; Huang, Z.; Zang, Y. Beyond the individual: An improved telecom fraud detection approach based on latent synergy graph learning. Neural Netw. 2024, 169, 20–31.

- Koi-Akrofi, G.Y.; Koi-Akrofi, J.; Odai, D.A.; Twum, E.O. Global telecommunications fraud trend analysis. Int. J. Innov. Appl. Stud. 2019, 25, 940–947.

- Ghosh, K.; Bellinger, C.; Corizzo, R.; Branco, P.; Krawczyk, B.; Japkowicz, N. The class imbalance problem in deep learning. Mach. Learn. 2024, 113, 4845–4901.

- Gambo, M.L.; Zainal, A.; Kassim, M.N. A convolutional neural network model for credit card fraud detection. In Proceedings of the 2022 International Conference on Data Science and Its Applications (ICoDSA), Bandung, Indonesia, 6–7 July 2022; pp. 198–202.

- Priscilla, C.V.; Prabha, D.P. Influence of optimizing xgboost to handle class imbalance in credit card fraud detection. In Proceedings of the 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 August 2020; pp. 1309–1315.

- Huang, H.; Liu, B.; Xue, X.; Cao, J.; Chen, X. Imbalanced credit card fraud detection data: A solution based on hybrid neural network and clustering-based undersampling technique. Appl. Soft Comput. 2024, 154, 111368.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Subudhi, S.; Panigrahi, S. Use of Possibilistic fuzzy C-means clustering for telecom fraud detection. In Computational Intelligence in Data Mining, Proceedings of the International Conference on CIDM, Bhubaneswar, India, 10–11 December 2016; Springer: Singapore, 2017; pp. 633–641.

- Xing, J.; Yu, M.; Wang, S.; Zhang, Y.; Ding, Y. Automated fraudulent phone call recognition through deep learning. Wirel. Commun. Mob. Comput. 2020, 2020, 8853468.

- Hu, X.; Chen, H.; Zhang, J.; Chen, H.; Liu, S.; Li, X.; Wang, Y.; Xue, X. GAT-COBO: Cost-Sensitive Graph Neural Network for Telecom Fraud Detection. IEEE Trans. Big Data 2024, 10, 528–542.

- Liu, Y.; Wang, C.; Lu, M.; Yang, J.; Gui, J.; Zhang, S. From Simple to Complex Scenes: Learning Robust Feature Representations for Accurate Human Parsing. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5449–5462.

- Wang, C.; Zhang, Q.; Wang, X.; Zhou, L.; Li, Q.; Xia, Z.; Ma, B.; Shi, Y.Q. Light-Field Image Multiple Reversible Robust Watermarking Against Geometric Attacks. IEEE Trans. Dependable Secur. Comput. 2025; Early Access.

| Layer | Number of Kernels | Kernel Size | Stride | Padding | Output |
|---|---|---|---|---|---|
| Input layer | - | - | - | - | 24 × 31 × 8 |
| Conv1 | 64 | 3 × 3 | (1, 1) | same | 24 × 31 × 64 |
| SE1 | 64 | - | - | - | 24 × 31 × 64 |
| Conv2 | 128 | 3 × 3 | (1, 1) | valid | 19 × 26 × 128 |
| Max-pool1 | - | 2 × 2 | (2, 2) | 0 | 9 × 13 × 128 |
| Conv3 | 64 | 3 × 3 | (1, 1) | same | 9 × 13 × 64 |
| SE2 | 64 | - | - | - | 9 × 13 × 64 |
| Conv4 | 128 | 3 × 3 | (1, 1) | valid | 4 × 8 × 128 |
| Max-pool2 | - | 2 × 2 | (2, 2) | 0 | 2 × 4 × 128 |
| FC1 | - | - | - | - | 256 × 1 |
| FC2 | - | - | - | - | 2 × 1 |
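The spatial sizes in the layer table can be checked with the standard output-size formula for convolution and pooling windows. The sketch below is only that arithmetic; the helper names are hypothetical. Under this formula the `same` convolutions and both pooling layers reproduce the listed outputs, whereas a 3 × 3 `valid` convolution on a 24 × 31 map gives 22 × 29. The listed 19 × 26 and 4 × 8 outputs correspond to a 6 × 6 window. The source does not say which of the two entries is intended, so the table is left as published.

```python
def conv_out(size, kernel, stride=1, padding="valid"):
    """Output length along one spatial axis for a convolution or pooling window."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1

def out_shape(hw, kernel, stride, padding):
    """Apply conv_out to both spatial axes of a (height, width) pair."""
    return tuple(conv_out(s, kernel, stride, padding) for s in hw)

print(out_shape((24, 31), 3, 1, "same"))   # (24, 31)  Conv1, matches the table
print(out_shape((19, 26), 2, 2, "valid"))  # (9, 13)   Max-pool1, matches the table
print(out_shape((4, 8), 2, 2, "valid"))    # (2, 4)    Max-pool2, matches the table
print(out_shape((24, 31), 3, 1, "valid"))  # (22, 29)  3 x 3 valid, differs from 19 x 26
print(out_shape((24, 31), 6, 1, "valid"))  # (19, 26)  6 x 6 valid, matches Conv2
print(out_shape((9, 13), 6, 1, "valid"))   # (4, 8)    6 x 6 valid, matches Conv4
```

Flattening the final 2 × 4 × 128 map gives 1024 values as input to FC1.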

| Model | Hyperparameters |
|---|---|
| Our proposed model | Epoch = 100, batch = 8, the optimization method is Adam, learning rate = 0.0001, decay = 1 , = 0.95, = 3 |
| LR | C = 100, penalty = ‘l2’ |
| RF | max_depth = 13, max_features = 9, min_samples_leaf = 10, min_samples_split = 50, n_estimators = 200 |
| SVM (linear/poly/RBF/sigmoid) | C = 100, gamma = ‘auto’, cache_ = 500 |
| XGBoost | colsample_bytree = 0.8, gamma = 0, learning rate = 0.01, max_depth = 3, n_estimators = 100 |
| SDAE | Epoch = 800, batch = 512, the optimization method is Adam, learning rate = 0.0001, decay = 1 , = 0.95, = 3 |
| 1D-CNN | Epoch = 150, batch = 8, the optimization method is Adam, learning rate = 0.0001, decay = 0.0001, = 0.95, = 3 |
| CDR2IMG | Epoch = 150, batch = 8, the optimization method is Adam, learning rate = 0.0001, decay = 0.0001, = 0.95, = 3 |

| User Status | Prediction = 0 | Prediction = 1 |
|---|---|---|
| label = 0 | TN | FP |
| label = 1 | FN | TP |
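Recall, accuracy, and F1-score follow directly from the four confusion-matrix cells above. The sketch below uses the standard definitions; the function name and the example counts are hypothetical and are not taken from the experiments. AUC is not included because it depends on the ranking of predicted scores rather than on a single confusion matrix.

```python
def classification_metrics(tn, fp, fn, tp):
    """Compute recall, precision, accuracy and F1 from confusion-matrix counts."""
    total = tn + fp + fn + tp
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    accuracy = (tp + tn) / total if total else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"recall": recall, "precision": precision, "accuracy": accuracy, "f1": f1}

# Hypothetical counts for an imbalanced test set (label 1 = fraud).
metrics = classification_metrics(tn=900, fp=100, fn=20, tp=80)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
# recall: 0.8000
# precision: 0.4444
# accuracy: 0.8909
# f1: 0.5714
```

With few positives, even a modest false-positive rate pulls precision down, so F1 can be low while recall and accuracy remain high.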

| Negative Sample Count | Metric | LR | RF | SVM (L) | SVM (P) | SVM (R) | SVM (S) | XGBoost | SDAE | 1D-CNN | CDR2IMG | Our Model |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| N10,000 | Recall | 0.7664 | 0.7009 | 0.7573 | 0.5922 | 0.6990 | 0.6990 | 0.7290 | 0.6601 | 0.7184 | 0.4953 | 0.8130 |
| | Accuracy | 0.8156 | 0.8763 | 0.8251 | 0.8451 | 0.8327 | 0.8113 | 0.8118 | 0.7882 | 0.7975 | 0.7509 | 0.7859 |
| | F1-score | 0.2971 | 0.3606 | 0.2977 | 0.2723 | 0.2903 | 0.2662 | 0.2826 | 0.2218 | 0.2578 | 0.1682 | 0.2779 |
| | AUC | 0.8768 | 0.8832 | 0.8684 | 0.8122 | 0.8656 | 0.8472 | 0.8118 | 0.7966 | 0.8478 | 0.6535 | 0.8632 |
| N20,000 | Recall | 0.7477 | 0.6636 | 0.7664 | 0.6168 | 0.7757 | 0.7103 | 0.7009 | 0.7169 | 0.7289 | 0.5140 | 0.7196 |
| | Accuracy | 0.8225 | 0.8880 | 0.8232 | 0.8442 | 0.8293 | 0.8191 | 0.8093 | 0.8093 | 0.8397 | 0.7138 | 0.8190 |
| | F1-score | 0.1800 | 0.2359 | 0.1887 | 0.1710 | 0.1915 | 0.1698 | 0.1608 | 0.1381 | 0.1914 | 0.0856 | 0.1714 |
| | AUC | 0.8775 | 0.8813 | 0.8809 | 0.8221 | 0.8862 | 0.8577 | 0.8693 | 0.8289 | 0.8868 | 0.6461 | 0.8734 |
| N50,000 | Recall | 0.7570 | 0.6822 | 0.7664 | 0.6916 | 0.7757 | 0.7009 | 0.7196 | 0.7009 | 0.7289 | 0.5233 | 0.7570 |
| | Accuracy | 0.8241 | 0.8849 | 0.8272 | 0.8226 | 0.8333 | 0.7720 | 0.8091 | 0.8135 | 0.8206 | 0.6754 | 0.8069 |
| | F1-score | 0.0835 | 0.1115 | 0.0859 | 0.0762 | 0.0897 | 0.0320 | 0.0739 | 0.0736 | 0.0792 | 0.0330 | 0.0766 |
| | AUC | 0.8755 | 0.8757 | 0.8793 | 0.8357 | 0.8863 | 0.8227 | 0.8618 | 0.8288 | 0.8844 | 0.6321 | 0.8616 |
| NALL | Recall | 0.7664 | 0.6449 | 0.7757 | 0.7009 | 0.7570 | 0.6916 | 0.7103 | 0.6635 | 0.7570 | 0.3925 | 0.7476 |
| | Accuracy | 0.8233 | 0.8837 | 0.8252 | 0.814 | 0.8368 | 0.7073 | 0.8089 | 0.8321 | 0.8379 | 0.8066 | 0.7713 |
| | F1-score | 0.0471 | 0.0595 | 0.0482 | 0.0412 | 0.0502 | 0.0262 | 0.0209 | 0.0431 | 0.0505 | 0.0226 | 0.0362 |
| | AUC | 0.8763 | 0.8777 | 0.8816 | 0.8339 | 0.8812 | 0.7753 | 0.8505 | 0.8088 | 0.8755 | 0.6064 | 0.8429 |

| Model | Recall | Accuracy | F1-Score | AUC |
|---|---|---|---|---|
| 6d feature model | 0.7009 | 0.8018 | 0.2645 | 0.8437 |
| 8d feature model (WCE) | 0.7757 | 0.7884 | 0.2716 | 0.8436 |
| 8d feature model | 0.8130 | 0.7859 | 0.2779 | 0.8632 |
| 10d feature model | 0.7102 | 0.8174 | 0.2835 | 0.8677 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, J.; Dang, J.; Wang, Y.; Yang, J. Image-Based Telecom Fraud Detection Method Using an Attention Convolutional Neural Network. Entropy 2025, 27, 1013. https://doi.org/10.3390/e27101013
