Abstract

Quantum reservoir computing (QRC) has emerged as a promising paradigm for harnessing near-term quantum devices to tackle temporal machine learning tasks. Yet, identifying the mechanisms that underlie enhanced performance remains challenging, particularly in many-body open systems where nonlinear interactions and dissipation intertwine in complex ways. Here, we investigate a minimal model of a driven-dissipative quantum reservoir described by two coupled Kerr-nonlinear oscillators, an experimentally realizable platform that features controllable coupling, intrinsic nonlinearity, and tunable photon loss. Using Partial Information Decomposition (PID), we examine how different dynamical regimes encode input drive signals in terms of redundancy (information shared by each oscillator) and synergy (information accessible only through their joint observation). Our key results show that, near a critical point marking a dynamical bifurcation, the system transitions from predominantly redundant to synergistic encoding. We further demonstrate that synergy amplifies short-term responsiveness, thereby enhancing immediate memory retention, whereas strong dissipation leads to more redundant encoding that supports long-term memory retention. These findings elucidate how the interplay of instability and dissipation shapes information processing in small quantum systems, providing a fine-grained, information-theoretic perspective for analyzing and designing QRC platforms.

1. Motivation and Introduction

Reservoir computing (RC) is a computational paradigm that harnesses the intrinsic dynamics of complex systems to process time-dependent inputs efficiently [1,2,3]. Unlike conventional recurrent neural networks (RNNs), RC requires training only at the readout layer, circumventing expensive weight-update procedures on internal nodes [4]. Quantum reservoir computing (QRC) extends these ideas to quantum platforms, leveraging quantum superposition and entanglement to amplify the dimensionality of the feature space and potentially enhance computational capabilities [3,5]. Early demonstrations of QRC have shown promise in tasks like time-series prediction, classification, and memory capacity estimation, and ongoing efforts explore a range of theoretical and experimental strategies for improving performance [6,7,8,9,10,11,12,13,14,15].

Recent QRC research has primarily focused on many-body quantum systems, where quantum phase transitions are suspected to boost computational expressivity [16,17]. While numerical studies reveal intriguing heuristics, such as enhanced memory capacity near critical points, designing optimal quantum reservoirs remains an open question, partly due to the complexity of analyzing large quantum systems. Here, we adopt a complementary approach by studying a pair of coupled Kerr-nonlinear oscillators, a minimal yet experimentally realizable quantum platform [18]. This system exhibits rich dynamical behaviors, including dynamical instability (bifurcation) and dissipation due to photon loss that can be precisely tuned. As such, it offers a tractable yet nontrivial testbed for exploring how quantum correlation, instability, and dissipation govern quantum information processing.

To dissect how these coupled oscillators encode incoming signals, we draw on information-theoretic concepts from neuroscience, where measures of synergy and redundancy have helped analyze how neural networks collectively encode stimuli [19,20,21,22]. Because mutual information alone cannot separate redundant from synergistic contributions to information encoding, we employ Partial Information Decomposition (PID) [23], which partitions the total information into three components: redundancy, capturing information that both oscillators share; unique information, provided by each oscillator individually; and synergy, arising only when both oscillators are observed together. This perspective provides a fine-grained view of the internal encoding structure of the reservoir.

To connect the system’s dynamics to its information-encoding strategy, we combine numerical simulations with non-equilibrium mean-field theory based on the Keldysh formalism [24], focusing on how small external perturbations propagate through the system. In particular, we study how the coupling strength, frequency detuning, and photon loss rate influence the system’s response, and then show how these distinct dynamical regimes lead to different synergy and redundancy profiles in the oscillators’ outputs.

Our main findings reveal that near a critical coupling strength leading to dynamical bifurcation, the system transitions from predominantly redundant encoding to a regime featuring significant synergistic information. This synergistic behavior arises from the interplay between fast collective oscillations and overdamped soft modes. We show that increasing dissipation suppresses quantum correlations and promotes highly redundant encoding modes. In contrast, near the onset of dynamical instability, synergy is amplified and enriches short-term responsiveness, improving short-term memory retention. Taken together, these results highlight how dissipation and dynamic instability in a minimal system can steer a quantum reservoir toward redundant or synergistic processing, each regime benefiting different computational tasks.

In relation to recent proposals using two coupled Kerr-nonlinear oscillators as quantum reservoirs [25,26], which highlight the roles of dissipation for fading memory and moderate coupling for richer dynamics, our work differs by using PID to examine how synergy and redundancy influence the reservoir’s memory capacity. This complements prior findings by showing that critical points in Kerr dynamics can shift encoding from redundant to synergistic regimes. Meanwhile, studies of single Kerr oscillators with large Hilbert spaces [13,27] highlight how dimensionality alone can serve as a computational resource, but our key question of whether the whole can exceed the sum of its parts requires at least two coupled oscillators for emergent synergistic encoding. Lastly, although [28] studies larger arrays of Kerr oscillators, demonstrates near-bifurcation enhancements of nonlinear memory, and employs higher-order cumulant expansions to handle higher photon numbers, we limit ourselves to at most a second-order expansion in a parameter regime where it remains accurate, focusing on PID-based insights rather than reservoir benchmarks and thus complementing other findings in the literature.

To guide the reader, this paper is organized as follows. Section 2 introduces the coupled Kerr-oscillator model and the relevant information-theoretic measures, including PID and quantum mutual information. Section 3 then presents our core numerical findings on synergy and redundancy, comparing fully quantum dynamics with both mean-field and cumulant expansion analyses. We also discuss the mechanisms driving redundant and synergistic encoding and examine how dissipation influences these encoding modes. In Section 3.4, we connect these insights to the quantum reservoir’s memory capacity. Finally, we conclude in Section 4 by summarizing our results and outlining directions for future research. A pedagogical overview of PID can be found in Appendix A and Appendix B, and the details of the Keldysh approach to linear response analysis are provided in Appendix C.

2. Quantum Model, Relevant Information Measures, and Performance Metrics

We first introduce the quantum system used to study the interplay of instability and dissipation and their influence on the modes of information encoding. We then outline the key goals of our study, introduce the information measures and metrics used to characterize the system, and finally describe the numerical methods used to study them.

2.1. Model

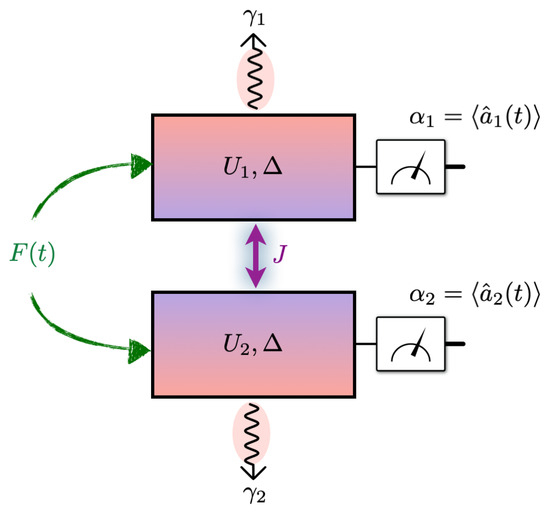

We consider a minimal model that can exhibit dynamical transitions from simple to more complex dynamics, and also support both redundant and synergistic modes of information encoding: a pair of coupled Kerr-nonlinear oscillators. Such systems are well studied in cavity quantum electrodynamics and nonlinear optics, where the interplay of nonlinearities and external driving yields rich dynamical behavior [29,30,31,32]. By focusing on just two coupled cavities, each supporting a single mode at a particular resonance frequency, we avoid the complexity of many-body phases that arise in the thermodynamic limit, thus isolating the essential ingredients needed to explore the onset of coordinated information encoding behaviors in quantum systems. The schematic diagram of this setup is presented in Figure 1.

Figure 1.

Schematic of two coupled Kerr-nonlinear oscillators. Each cavity i features a Kerr nonlinearity and a photon loss rate . A time-dependent drive (green arrows) injects identical signals into both cavities, while the coherent tunneling of strength J (violet arrow) couples the two modes. We measure the mean fields to probe the system’s response. The Hamiltonian is specified by Equation (1), and the Lindblad Equation (2) governs this driven-dissipative dynamics. We assume both cavities have the same detuning from the drive frequency. This work investigates how the readouts encode the time-dependent drive across different dynamical regimes of the coupled Kerr oscillators.

We work in a frame rotating at the driving frequency , and the corresponding Hamiltonian is given by

where and are the annihilation and creation operators for the cavity mode, respectively. The parameter J governs the coupling strength between the two cavities, enabling photon tunneling and collective mode formation [30]. The detuning measures the offset of the cavity’s resonance frequency from the driving frequency. The nonlinear Kerr coefficient characterizes the anharmonicity of each mode which introduces photon-photon interactions essential for generating nonclassical states. And is a common external drive that contains time-dependent information applied to both cavities.

To observe transitions between redundant and synergistic information encoding, the two cavities must differ in their nonlinear properties. In particular, having distinct Kerr coefficients () breaks symmetry and enables nontrivial interactions between the modes. With these minimal ingredients (a coherent drive, a tunable coupling, and carefully chosen nonlinearities), this system provides a controlled setting to study the fundamental mechanisms underlying both redundant and synergistic coding in interacting quantum systems.

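To incorporate noise and dissipation, we consider the time evolution of an open quantum system weakly coupled to a Markovian bath. Specifically, we model the dynamics of the system’s density matrix $\rho$ using the Lindblad master equation [33]:

$$\frac{d\rho}{dt} = -i[H, \rho] + \sum_{i=1,2} \gamma_i\, \mathcal{D}[a_i]\rho, \tag{2}$$

where $\gamma_i$ is the photon decay rate of the $i$th cavity associated with the Lindblad superoperator describing single-photon loss, $\mathcal{D}[a_i]$, which acts on the density matrix as

$$\mathcal{D}[a_i]\rho = a_i \rho a_i^{\dagger} - \frac{1}{2}\left\{ a_i^{\dagger} a_i, \rho \right\}. \tag{3}$$

In our simulations, we take $\gamma_1 = \gamma_2 = \gamma$ for simplicity.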

For the common time-dependent external driving field , we choose , where is a dimensionless, time-dependent signal, and F is a characteristic strength of the drive. To ensure that the system’s intrinsic dynamics dominate, we select F to be small or comparable to other energy scales, and regard as a perturbation. In this work, is taken to be a symmetric telegraph process with [34]. While telegraph noise may not be a directly implementable noise model in all bosonic systems (an open quantum system can couple to telegraph noise if it interacts with a fermionic bath; see [35,36] for related studies), we employ it here as a convenient testing ground. Its well-characterized statistical properties [34] and ease of numerical simulation make it a useful drive model for probing how redundant and synergistic information encoding emerges in quantum dynamics. In Section 3.2, we also compare the results with those obtained using different noise models to assess their generality.
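As a minimal sketch of how such a drive signal can be sampled numerically, the snippet below generates a symmetric telegraph process on a discrete time grid. The two-level values ±1, the flip probability `switch_rate * dt` per step, and the function names are assumptions made for illustration; they are not parameters taken from this work.

```python
import random


def telegraph_signal(n_steps, dt, switch_rate, seed=None):
    """Sample a symmetric telegraph process taking values in {-1, +1}.

    Assumption: within each step of length dt the sign flips with
    probability switch_rate * dt, a discretization that is only
    reasonable when switch_rate * dt << 1.
    """
    flip_probability = switch_rate * dt
    if not 0.0 <= flip_probability <= 1.0:
        raise ValueError("switch_rate * dt must lie in [0, 1]")
    rng = random.Random(seed)
    state = rng.choice((-1, 1))  # symmetric initial condition
    signal = []
    for _ in range(n_steps):
        signal.append(state)
        if rng.random() < flip_probability:
            state = -state
    return signal


def autocorrelation(signal, lag):
    """Empirical two-time correlation <s_k s_{k+lag}> of the sampled signal."""
    n = len(signal) - lag
    return sum(signal[k] * signal[k + lag] for k in range(n)) / n
```

For this discretization the two-time correlation decays as $(1 - 2p)^{\mathrm{lag}}$ with $p$ the flip probability per step, which approaches the familiar exponential decay of telegraph noise as the time step shrinks. Comparing `autocorrelation` against that expression is a quick sanity check on a sampled drive.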

2.2. From Quantum to Semiclassical (Mean-Field) Dynamics

Directly simulating the Lindblad equation is practical only when the average photon number is small, as the Hilbert space dimension grows rapidly with photon occupation. In this low-photon regime, we simulate the full quantum dynamics in Equation (2) to capture all quantum correlations, compute PID and QMI, and analyze how nonclassical effects influence information encoding.

As we increase the driving strength or adjust parameters to reach higher photon-number regimes, the full quantum simulation becomes computationally expensive. In this regime, quantum fluctuations often play a less significant role, and a semiclassical approximation becomes suitable. By factorizing expectation values as , the dynamics reduce to coupled nonlinear ordinary differential equations (ODEs) for :

These ODEs are much easier to solve, allowing us to examine information encoding under conditions where the photon number is large and quantum correlations are negligible.
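To illustrate how such mean-field equations can be integrated, the sketch below applies a fourth-order Runge-Kutta step to two coupled complex amplitudes. The specific right-hand side is an assumption: it is the form that follows from a Bose-Hubbard-type dimer Hamiltonian $H = \sum_i [-\Delta a_i^\dagger a_i + U_i a_i^\dagger a_i^\dagger a_i a_i + F(t)(a_i^\dagger + a_i)] - J(a_1^\dagger a_2 + a_2^\dagger a_1)$ with single-photon loss at rate $\gamma$, and the sign and normalization conventions may differ from those used here. All function names and parameter values are hypothetical.

```python
def mean_field_rhs(alpha, drive, delta, kerr, coupling, gamma):
    """Time derivative of (alpha_1, alpha_2) under the ASSUMED mean-field equations:

    d(alpha_i)/dt = -i * (-delta * alpha_i + 2 * U_i * |alpha_i|^2 * alpha_i
                          - J * alpha_j + drive) - (gamma / 2) * alpha_i
    """
    a1, a2 = alpha
    u1, u2 = kerr
    da1 = -1j * (-delta * a1 + 2 * u1 * abs(a1) ** 2 * a1 - coupling * a2 + drive) - 0.5 * gamma * a1
    da2 = -1j * (-delta * a2 + 2 * u2 * abs(a2) ** 2 * a2 - coupling * a1 + drive) - 0.5 * gamma * a2
    return (da1, da2)


def rk4_step(alpha, dt, *params):
    """One classical Runge-Kutta step; params are forwarded to mean_field_rhs."""
    def shifted(k, scale):
        return tuple(a + scale * dt * ki for a, ki in zip(alpha, k))

    k1 = mean_field_rhs(alpha, *params)
    k2 = mean_field_rhs(shifted(k1, 0.5), *params)
    k3 = mean_field_rhs(shifted(k2, 0.5), *params)
    k4 = mean_field_rhs(shifted(k3, 1.0), *params)
    return tuple(a + dt * (p + 2 * q + 2 * r + w) / 6
                 for a, p, q, r, w in zip(alpha, k1, k2, k3, k4))


def integrate_mean_field(signal, dt, amplitude, delta, kerr, coupling, gamma,
                         alpha0=(0j, 0j)):
    """Integrate the amplitudes, holding the drive amplitude * s fixed over each step."""
    alpha = alpha0
    trajectory = []
    for s in signal:
        alpha = rk4_step(alpha, dt, amplitude * s, delta, kerr, coupling, gamma)
        trajectory.append(alpha)
    return trajectory
```

In the linear, uncoupled limit (zero Kerr coefficients and zero coupling) with a constant drive, the assumed equations relax to the steady state $\alpha = F/(\Delta + i\gamma/2)$, which provides a simple check of the integrator before the nonlinear terms are switched on.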

To bridge the gap between the fully quantum and semiclassical treatments, we also employ a second-order cumulant expansion (see Appendix D). This approach partially restores some quantum correlations while remaining more tractable than the full density-matrix simulation. We expect that in parameter regimes where quantum correlations matter, the cumulant expansion will improve upon the semiclassical approximation but still remain simpler than the full quantum approach.

2.3. Characterizing Information Processing

To characterize how our system of coupled Kerr-nonlinear oscillators processes and encodes the input signal into the output readouts taken to be

we analyze three complementary figures of merit. First, we use the Partial Information Decomposition (PID) to separate the total information that the output observables encode about the input into redundant and synergistic components. Second, we employ the quantum mutual information (QMI) to quantify the role of quantum correlations in shaping these encoding modes. Finally, we consider the memory capacity in a quantum reservoir computing (QRC) context to assess how information is retained over time. While PID and QMI directly characterize the system’s response to external inputs without any training procedure, the memory capacity inherently involves a training step to quantify how well past inputs can be reconstructed from the system’s outputs. In this way, all three measures together provide a comprehensive view of the system’s information processing capabilities.

2.3.1. Partial Information Decomposition (PID)

Let $s$, $x_1$, and $x_2$ be random variables representing the input signal and the measured observables from the two oscillators, respectively. The mutual information $I(s; x_1, x_2)$ can be decomposed into redundant, synergistic, and unique components as

$$I(s; x_1, x_2) = R + U_1 + U_2 + S, \tag{6}$$

where $R$ is the redundant information present in both $x_1$ and $x_2$, $U_i$ is the unique information contributed solely by $x_i$, and $S$ is the synergistic information accessible only through the joint knowledge of $x_1$ and $x_2$.

By constructing the empirical joint distribution from simulation data and applying the BROJA-2PID algorithm [37], we isolate the redundant and synergistic components. This allows us to determine whether the oscillators encode input information redundantly or synergistically, thereby shedding light on their cooperative information processing strategies across different dynamical phases of the system. More detailed discussions and example calculations of PID can be found in Appendix A and Appendix B.
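The following sketch shows the first step of such an analysis on discrete samples: estimating the joint and individual mutual informations from empirical counts. It does not implement BROJA-2PID, which requires solving a convex optimization problem. Instead it reports the difference between the joint mutual information and the sum of the individual ones, which equals synergy minus redundancy for any decomposition in which each individual mutual information is the sum of the redundancy and the corresponding unique term. The function names are hypothetical, and continuous readouts would first have to be binned.

```python
from collections import Counter
from math import log2


def mutual_information(pairs):
    """Plug-in estimate of I(A; B) in bits from a list of (a, b) samples."""
    n = len(pairs)
    joint = Counter(pairs)
    marginal_a = Counter(a for a, _ in pairs)
    marginal_b = Counter(b for _, b in pairs)
    return sum((count / n) * log2(count * n / (marginal_a[a] * marginal_b[b]))
               for (a, b), count in joint.items())


def whole_minus_sum(samples):
    """Return I(s; x1, x2), I(s; x1), I(s; x2) and their difference.

    samples is a list of (s, x1, x2) tuples with discrete (hashable) entries.
    The last returned value, I(s; x1, x2) - I(s; x1) - I(s; x2), equals
    synergy minus redundancy; it does not determine either term on its own.
    """
    i_joint = mutual_information([(s, (x1, x2)) for s, x1, x2 in samples])
    i_1 = mutual_information([(s, x1) for s, x1, _ in samples])
    i_2 = mutual_information([(s, x2) for s, _, x2 in samples])
    return i_joint, i_1, i_2, i_joint - i_1 - i_2


# Two textbook limits: an XOR target is purely synergistic, a copy is purely redundant.
xor_samples = [(x1 ^ x2, x1, x2) for x1 in (0, 1) for x2 in (0, 1)]
copy_samples = [(s, s, s) for s in (0, 1)]
```

A positive difference signals that synergy outweighs redundancy, while a negative one signals the opposite. Separating the two terms still requires a full PID estimator such as the one used in this work.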

2.3.2. Quantum Mutual Information (QMI)

Although our primary Partial Information Decomposition (PID) analysis focuses on classical correlations between input-output variables, the underlying reservoir dynamics remain fundamentally quantum. To probe the quantum correlations inherent in our system, we compute the quantum mutual information (QMI) between the two oscillators.

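Let $\rho$ be the density matrix describing the joint state of the two coupled Kerr oscillators. We partition the system into subsystems 1 and 2, each corresponding to one oscillator. The QMI is then given by

$$I(1{:}2) = S_{\mathrm{vN}}(\rho_1) + S_{\mathrm{vN}}(\rho_2) - S_{\mathrm{vN}}(\rho), \tag{7}$$

where $\rho_1$ and $\rho_2$ are the reduced density matrices of each oscillator, and $S_{\mathrm{vN}}(\rho) = -\mathrm{Tr}(\rho \ln \rho)$ is the von Neumann entropy.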

Because each oscillator’s Hilbert space is, in principle, infinite dimensional, we employ a finite photon-number basis up to a cutoff $N_{\mathrm{cut}}$ to ensure computational tractability. Namely,

$$\rho = \sum_{n_1, n_2, m_1, m_2 = 0}^{N_{\mathrm{cut}}} \rho_{n_1 n_2, m_1 m_2}\, |n_1, n_2\rangle \langle m_1, m_2|,$$

where $|n_1, n_2\rangle$ is the Fock state with $n_i$ photons in oscillator $i$, and $\rho_{n_1 n_2, m_1 m_2}$ are the matrix elements obtained by time-averaging the steady-state solution of the master equation. We verify that increasing $N_{\mathrm{cut}}$ beyond ∼10 does not significantly alter the results within the quantum regime studied in this work, suggesting that the computed QMI has converged to within numerical precision.
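A small sketch can make the effect of the photon-number cutoff concrete. It builds the annihilation operator in a truncated Fock basis and evaluates the diagonal of the commutator of the annihilation and creation operators, which should equal one in the untruncated space. The function names and the basis dimension are illustrative assumptions.

```python
import math


def annihilation(dim):
    """Annihilation operator in a Fock basis truncated to dim states (0 .. dim-1 photons)."""
    a = [[0.0] * dim for _ in range(dim)]
    for n in range(1, dim):
        a[n - 1][n] = math.sqrt(n)  # <n-1| a |n> = sqrt(n)
    return a


def matmul(x, y):
    """Plain matrix product of two square matrices stored as lists of lists."""
    dim = len(x)
    return [[sum(x[i][k] * y[k][j] for k in range(dim)) for j in range(dim)]
            for i in range(dim)]


def commutator_diagonal(dim):
    """Diagonal of [a, a^dagger] in the truncated basis (a is real, so dagger = transpose)."""
    a = annihilation(dim)
    a_dag = [list(row) for row in zip(*a)]
    a_adag = matmul(a, a_dag)
    adag_a = matmul(a_dag, a)
    return [a_adag[i][i] - adag_a[i][i] for i in range(dim)]
```

Every diagonal entry equals one except the last, which equals minus the largest photon number retained. The truncation therefore only distorts states with appreciable weight at the cutoff, which is why checking convergence against the cutoff is a sensible test when the mean photon number is small.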

To evaluate Equation (7), we first diagonalize the full two-oscillator density matrix

$$\rho = \sum_k \lambda_k\, |\psi_k\rangle \langle \psi_k|,$$

where $\lambda_k$ and $|\psi_k\rangle$ are the eigenvalues and eigenvectors of $\rho$, respectively. We then obtain the reduced density matrices for each oscillator by tracing out the other, $\rho_1 = \mathrm{Tr}_2\,\rho$ and $\rho_2 = \mathrm{Tr}_1\,\rho$. Both $\rho_1$ and $\rho_2$ are similarly diagonalized to compute their von Neumann entropies. Substituting these into Equation (7) gives the QMI, which quantifies the total correlations between the two oscillators, including both classical and quantum components, and thus complements the classical PID-based analysis.
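The procedure just described can be sketched in a few dozen lines. The example below is self-contained and uses a simple cyclic Jacobi eigenvalue routine in place of a library eigensolver, purely to avoid external dependencies; it is not the solver used in this work. Entropies are computed with natural logarithms, and the basis ordering in `partial_traces` is an assumption. All names are hypothetical.

```python
import math


def hermitian_eigenvalues(h, sweeps=50, tol=1e-24):
    """Eigenvalues of a Hermitian matrix via Jacobi rotations on its real
    symmetric embedding [[Re, -Im], [Im, Re]], whose spectrum is that of h
    with every eigenvalue appearing twice."""
    n = len(h)
    size = 2 * n
    m = [[0.0] * size for _ in range(size)]
    for i in range(n):
        for j in range(n):
            z = complex(h[i][j])
            m[i][j] = m[i + n][j + n] = z.real
            m[i + n][j] = z.imag
            m[i][j + n] = -z.imag
    for _ in range(sweeps):
        off = sum(m[p][q] ** 2 for p in range(size) for q in range(p + 1, size))
        if off < tol:
            break
        for p in range(size):
            for q in range(p + 1, size):
                if m[p][q] == 0.0:
                    continue
                diff = m[q][q] - m[p][p]
                sign = 1.0 if diff >= 0 else -1.0
                theta = 0.5 * math.atan2(2 * m[p][q] * sign, abs(diff))
                c, s = math.cos(theta), math.sin(theta)
                for k in range(size):  # rotate columns p and q
                    mkp, mkq = m[k][p], m[k][q]
                    m[k][p], m[k][q] = c * mkp - s * mkq, s * mkp + c * mkq
                for k in range(size):  # rotate rows p and q
                    mpk, mqk = m[p][k], m[q][k]
                    m[p][k], m[q][k] = c * mpk - s * mqk, s * mpk + c * mqk
    return sorted(m[i][i] for i in range(size))[::2]  # drop the duplicates


def von_neumann_entropy(rho):
    """S = -Tr(rho ln rho), skipping numerically zero eigenvalues."""
    return -sum(p * math.log(p) for p in hermitian_eigenvalues(rho) if p > 1e-12)


def partial_traces(rho, d1, d2):
    """Reduced states, assuming the basis ordering |n1, n2> -> index n1 * d2 + n2."""
    rho1 = [[sum(rho[i * d2 + k][j * d2 + k] for k in range(d2)) for j in range(d1)]
            for i in range(d1)]
    rho2 = [[sum(rho[k * d2 + i][k * d2 + j] for k in range(d1)) for j in range(d2)]
            for i in range(d2)]
    return rho1, rho2


def quantum_mutual_information(rho, d1, d2):
    """QMI = S(rho_1) + S(rho_2) - S(rho) for a bipartite density matrix."""
    rho1, rho2 = partial_traces(rho, d1, d2)
    return von_neumann_entropy(rho1) + von_neumann_entropy(rho2) - von_neumann_entropy(rho)
```

As a usage check, a maximally entangled state of two two-level systems gives twice the logarithm of two, while any product state gives zero. For the truncated oscillators considered here, `d1` and `d2` would both equal the number of retained Fock states.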

2.3.3. Memory Capacity (MC)

In addition to instantaneous encoding, we are interested in how the system stores information over time, as is relevant in quantum reservoir computing (QRC). The memory capacity quantifies how well the current state of the reservoir (the outputs , ) retains information about past inputs . By analyzing how the reconstruction error of past inputs varies with the delay , we derive a memory measure that complements the PID and QMI analyses.

A high memory capacity suggests that the reservoir not only encodes the input at a given instant but also preserves information over extended periods. Comparing the memory capacity with the synergy and redundancy obtained from PID reveals how different dynamical regimes influence both the instantaneous and temporal dimensions of information processing in this system. Details of the MC calculation are provided in Section 3.4.

3. Results and Discussion

We now present numerical evidence that coupled Kerr oscillators can encode input signals in either a redundant or a synergistic fashion, depending on the coupling strength J, the detuning, and the dissipation rate.

3.1. Emergence of Synergistic Encoding

We begin by examining how the joint mutual information (MI), , compares to the individual MIs and when probing our driven-dissipative system. We focus on two representative parameter sets: a mean-field regime with larger drive and smaller Kerr nonlinearities, and a quantum regime with smaller drive and larger Kerr nonlinearities. In both cases, we fix the detuning and damping at and . Concretely, in the mean-field case, we take , , and , while in the quantum regime, we take , , and .

3.1.1. Synergy from Total Mutual Information Consideration

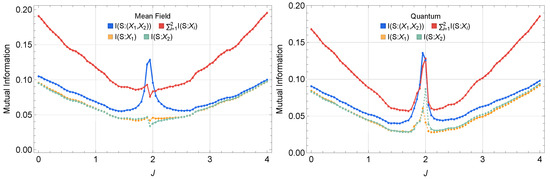

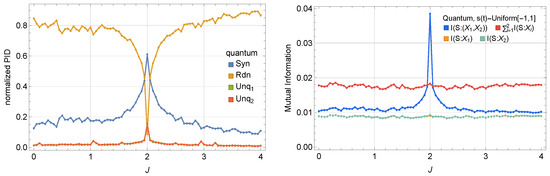

Figure 2 compares with and , revealing that

in the mean-field dynamics regime. This information excess indicates that measuring both and jointly can reveal strictly more information about the external drive signal than either observable alone, suggesting a potential synergistic encoding mechanism.

Figure 2.

Classical mutual information , compared to and in (Left) a mean-field regime and (Right) a quantum regime. In the mean-field regime, exceeds or alone near , hinting at synergy. On the other hand, in the quantum regime, is comparable to, but not always exceeding, the sum of and .

3.1.2. Transition from Redundant to Synergistic Encoding

To further investigate whether this information surplus really originates from synergistic effects (rather than unique information in each oscillator), we perform Partial Information Decomposition (PID) according to Equation (6). For clarity, we normalize synergy and redundancy by , respectively,

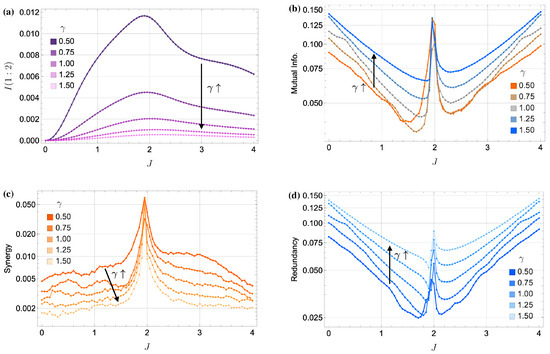

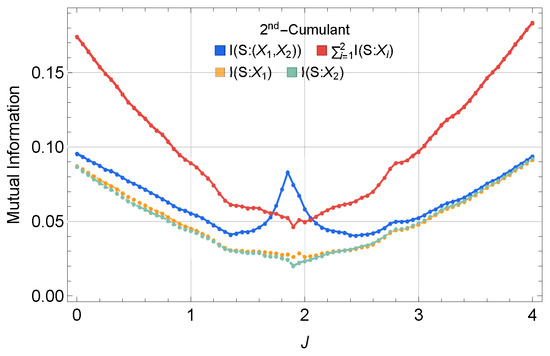

In Figure 3, we compare the normalized synergy (left) and redundancy (right) across three regimes (mean-field, second-order cumulant, and fully quantum) at fixed detuning and dissipation. As we sweep the coupling strength J from small to large, a pronounced synergy peak emerges near , marking a transition from predominantly redundant encoding to notably higher synergy (near ). In the quantum regime, stronger quantum correlations bias the encoding scheme slightly toward redundancy even near the peak. In contrast, the second-order cumulant approach captures the partial quantum correlations that lie between the mean-field regime (where correlations are suppressed) and the fully quantum regime (where all orders of correlations may appear). This second-order cumulant dynamics provides an approximate interpolation for regimes where quantum correlations are significant but not too strong (we set to represent a dynamical regime with non-negligible quantum correlations, motivating the use of second-order cumulants); see Appendix D.

Figure 3.

(Left) Normalized synergy vs. the coupling J. (Right) Normalized redundancy vs. the coupling J. A pronounced peak near marks the crossover from predominantly redundant to more synergistic encoding. In the fully quantum description, enhanced quantum correlations can favor redundancy even at the transition, whereas second-order cumulants interpolate between mean-field and quantum descriptions.

3.2. Underlying Mechanisms Enhancing Synergistic Coding: The Role of Soft and Fast Modes

In this section, we explain the sharp increase in synergy observed near , and attribute this behavior to the interplay between soft and fast modes in the coupled Kerr oscillators. Specifically, we demonstrate that the dominance of fast modes, enabled by the overdamping of soft modes, enhances coherent collective dynamics, leading to an increase in synergistic information.

3.2.1. Soft Modes and Potential Landscape Flatness

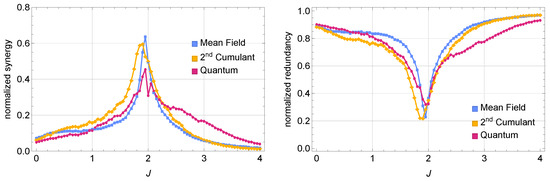

In the mean-field approximation without external drive (), the coupled Kerr oscillators evolve in the following effective potential (see Appendix C), which captures the interplay of detuning, Kerr nonlinearities, and coupling

where and . At , the Hessian of V evaluated at the steady-state solution develops zero eigenvalues, corresponding to flat or marginal directions. These flat directions represent soft modes, characterized by near-zero oscillation frequencies. Specifically, when evaluated at the steady state , the Hessian matrix of this effective potential (10) calculated in terms of the vector is

The eigenvalues of this Hessian are

For the relevant parameter regime in which and , exactly when that two flat directions (zero eigenvalues) emerge, and the other two directions are unstable at the second order (negative eigenvalues); see Figure 4.

Figure 4.

Effective potential around the steady state (red dot) in the regime, projected onto . (Left) When , the steady state is weakly unstable in all directions, with no soft modes present, and the system predominantly encodes inputs redundantly. (Center) At the critical point , flat directions appear, marking the onset of soft modes. In this near-critical regime, collective oscillations enhance synergistic encoding. (Right) For , the potential deforms into a saddle, with two stable and two unstable directions. Here, the soft modes again disappear, and the system encodes inputs redundantly.

3.2.2. Overdamping of Soft Modes at

Including dissipation with a rate can transform the nearly flat directions into overdamped dynamics as follows. Following the Keldysh formalism [38,39,40] (see Appendix C), the retarded Green’s function shows poles of the form

where (slow) and (fast) label the respective branches. Exactly at , the real part of vanishes, leaving only , indicating an overdamped relaxation to the steady state. In contrast, the fast modes remain oscillatory with frequencies . Consequently, at , the dynamics are dominated by the coherent oscillations of the fast modes, as the soft modes contribute only non-oscillatory relaxation.

3.2.3. Coherence-Driven Synergy Enhancement

When the dissipation rate is comparable to the oscillatory frequency of the fast modes (), the dominance of fast modes at reduces competition between oscillatory frequencies (soft modes disappear and only fast modes persist) and enhances the coherence of the system’s collective response. More specifically, following the discussion in Appendix C, one can consider the relaxation dynamics of the small perturbation around the steady state. It follows that the relaxation dynamics of each observable at site j can be expressed as

where and are initial-perturbation-dependent mode amplitudes. When , the soft mode contribution simplifies to pure exponential decay, and the dissipative dynamics are dominated by the single oscillatory frequency of the fast modes.
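The distinction between an overdamped soft mode and an oscillatory fast mode can be illustrated with a short sketch. It assumes that each retarded pole has the form of a real oscillation frequency minus an imaginary damping term equal to half the dissipation rate, so that the associated response is a damped cosine; this form and the numerical values used below are assumptions for illustration only.

```python
import cmath


def mode_response(t, pole):
    """Real part of exp(-i * pole * t), the decay profile set by a retarded pole
    (a pole with negative imaginary part describes a decaying mode)."""
    return cmath.exp(-1j * pole * t).real


def count_sign_changes(pole, t_max, n_points=2000):
    """Number of sign changes of the mode response on a uniform grid over [0, t_max]."""
    values = [mode_response(t_max * k / n_points, pole) for k in range(n_points + 1)]
    return sum(1 for u, v in zip(values, values[1:]) if u * v < 0)


# Hypothetical poles with damping 0.5 (in arbitrary units):
soft_pole = complex(0.0, -0.5)   # vanishing real part: pure exponential decay
fast_pole = complex(2.0, -0.5)   # finite real part: damped oscillation
```

With a vanishing real part the response never changes sign, whereas the pole with a finite real part produces repeated sign changes within the same decay envelope. This is the sense in which, at the critical coupling, only the fast modes contribute an oscillatory timescale.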

This coherent relaxation eliminates competing oscillations and minimizes overlapping contributions between modes, reducing redundancy. In addition, the collective dynamics driven by the fast modes encode information that cannot be captured by any single oscillator alone, thereby enhancing synergistic information. This explains the peak in synergy observed near the critical coupling.

Figure 5 illustrates how the slow-mode poles (orange) move to the imaginary axis at , marking the disappearance of competing oscillatory timescales. In this near-critical regime, the relaxation dynamics become dominated by the underdamped (oscillatory) contributions of the fast modes, resulting in coherent dissipation. It is important to note that this result pertains to the regime where .

Figure 5.

Retarded Green’s function poles (A16) in the complex-frequency plane as J increases, illustrating the evolution of slow modes (orange dots) and fast modes (blue dots). For , both slow and fast modes coexist, and the system tends to encode inputs more redundantly. Near the critical point , the real part of approaches zero, indicating a disappearance of slow collective oscillations and an overdamped decay. In this near-critical regime, collective oscillations due to underdamped fast modes dominate and enhance synergistic encoding. For , the slow modes shift away from zero frequency, reducing synergy and transitioning the system back toward redundant encoding. This interplay between slow and fast modes governs how the system transitions from predominantly redundant to synergistic processing and back again as J crosses the critical point.

3.2.4. Generality of the Transition to Synergistic Behavior

The observed synergy peak at is not specific to the type of input signal driving the system. To demonstrate this, we perform numerical simulations of the master equation describing quantum dynamics with the input signal sampled from a uniform distribution in the interval , and uncorrelated in time. The results, shown in Figure 6, confirm that the transition in redundant/synergistic behavior persists at , regardless of the statistical properties of the input.

Figure 6.

Impact of uniform, uncorrelated noise input on information encoding at the quantum regime (, , and ). (Left) Normalized PID with uniform noise input. (Right) Comparison of total MI and partial MI contributions. The left panel shows a clear transition to synergistic encoding near . The right panel compares and , highlighting the emergence of synergy near the critical coupling.

These results emphasize that the transition to synergistic encoding at the critical coupling is an intrinsic feature of the system’s response dynamics, driven by the dominance of underdamped fast modes rather than by the statistical properties of the input signal. In the following section, we explore how increasing the dissipation rate impacts the system’s encoding behavior, showing that fast relaxation towards the steady state progressively shifts the system from synergistic to redundant encoding.

3.3. Large Dissipation Leads to Redundant Encoding

As the damping rate increases, the dynamics progressively shift toward overdamped relaxation for both slow and fast modes. This transition leads to a steady-state regime where the two cavities become nearly identical, resulting in redundant coding of the input information. While the emergence of soft modes and the dominance of fast modes at enhance synergy, increasing gradually suppresses this effect, shifting the system toward a more redundant encoding regime dominated by rapid overdamped relaxation towards the steady state.

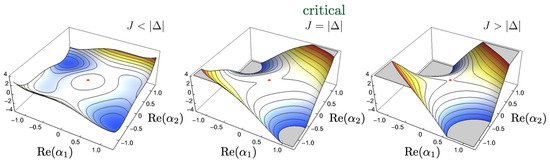

This behavior is particularly evident in the quantum regime, where the system approaches a product state at large , rendering the two oscillators effectively independent. As shown in Figure 7, increasing from the baseline value of (as seen in Figure 2 and Figure 3) results in a clear reduction in quantum correlations. Panel (a) of Figure 7 quantifies this through the time-averaged quantum mutual information (QMI), which decreases as increases. Notably, peaks in QMI at coincide with peaks in classical mutual information (MI) as shown in panel (b). This observation aligns with the intuition that higher quantum correlations often translate to enhanced classical information encoding near the critical coupling.

Figure 7.

Impact of increasing on information metrics in the quantum regime (, ). (a) Time-averaged quantum mutual information (QMI) between the two oscillators as a function of J for different . (b) Classical mutual information (MI) between the input signal and the outputs vs. J. (c) Absolute synergy vs. J. (d) Absolute redundancy vs. J. These plots illustrate the transition from low-synergistic to high-redundant encoding with increasing . At large dissipation, the two oscillators approach a product state, indicated by low QMI. Interestingly, despite the redundancy dominating at higher , the total mutual information near remains approximately constant.

Figure 7c,d further illustrate this transition by showing how absolute synergy diminishes and absolute redundancy grows with increasing . At low , the dynamics favor (weakly) synergistic encoding. At high , however, the system becomes dominated by (highly) redundant encoding, with both cavities responding similarly and independently of each other.

3.4. Memory Capacity of Synergistic and Redundant Encoding

We close our discussion by analyzing the performance of the coupled Kerr oscillators as a quantum reservoir, focusing on their capacity to retain and process temporal information. This memory capacity benchmark highlights how the synergistic and redundant behaviors identified earlier influence practical tasks such as time-series memorization.

3.4.1. Short-Term Memory Task

To quantify memory capacity, we train the system to recall past input signals using a short-term memory task. The input signal is sampled from a uniform distribution in the interval and is uncorrelated in time, and the target time series corresponds to the input signal at a previous time step:

where is the time step. The output observables and are used as feature vectors (here, we use more output readouts than in the previous sections since this input signal is more difficult to fit with fewer feature vectors). We construct an output vector :

and fit the target using a weight matrix via standard linear regression with Tikhonov regularization:

where the optimal weights minimize the mean square error (MSE) during training:

For simplicity, we set . After training, the memory capacity [1] for delay step n is evaluated as

where the memory capacity takes values between 0 and 1, with a value of 1 indicating perfect recall.
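The training and evaluation steps above can be sketched as follows. The memory capacity is taken in the form commonly used for reservoir benchmarks, namely the squared covariance between target and prediction divided by the product of their variances. The readout features below are a hypothetical two-tap shift register standing in for the oscillator readouts, and the input interval, regularization strength, and train/test split are likewise assumptions rather than the settings of this work.

```python
import random


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x


def ridge_fit(rows, targets, lam):
    """Tikhonov-regularized least squares: solve (X^T X + lam I) w = X^T y."""
    d = len(rows[0])
    gram = [[sum(r[i] * r[j] for r in rows) + (lam if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    rhs = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(d)]
    return solve_linear(gram, rhs)


def memory_capacity(targets, predictions):
    """Squared covariance normalized by both variances; lies between 0 and 1."""
    n = len(targets)
    mean_y, mean_p = sum(targets) / n, sum(predictions) / n
    cov = sum((y - mean_y) * (p - mean_p) for y, p in zip(targets, predictions)) / n
    var_y = sum((y - mean_y) ** 2 for y in targets) / n
    var_p = sum((p - mean_p) ** 2 for p in predictions) / n
    if var_y == 0.0 or var_p == 0.0:
        return 0.0
    return cov ** 2 / (var_y * var_p)


def delay_capacity(inputs, features, delay, lam=1e-6, train_fraction=0.5):
    """Train a linear readout to recall the input `delay` steps back, then score it."""
    rows = [list(f) + [1.0] for f in features[delay:]]  # append a bias feature
    targets = inputs[:len(inputs) - delay]
    split = int(train_fraction * len(rows))
    weights = ridge_fit(rows[:split], targets[:split], lam)
    predictions = [sum(w * x for w, x in zip(weights, row)) for row in rows[split:]]
    return memory_capacity(targets[split:], predictions)


# Toy stand-in for reservoir readouts: the current and the previous input value.
rng = random.Random(1)
toy_inputs = [rng.random() for _ in range(2000)]
toy_features = [[toy_inputs[k], toy_inputs[k - 1] if k > 0 else 0.0]
                for k in range(len(toy_inputs))]
```

For this toy reservoir, `delay_capacity(toy_inputs, toy_features, 1)` is close to one because the delayed input is itself a feature, whereas a delay of five steps gives a value close to zero because the input is uncorrelated in time. Replacing `toy_features` with simulated oscillator readouts would give the delay-resolved curve discussed in this section.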

3.4.2. Tuning J Towards the Critical Coupling Strength

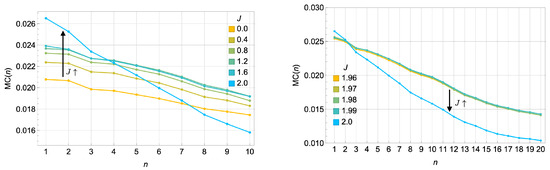

Figure 8 shows the memory capacity as J approaches the critical coupling . At smaller values of J, the memory capacity remains low across the delay steps. However, as J approaches , the system exhibits a notable change in behavior: the memory capacity for short delays (small n) increases significantly, while the memory capacity for longer delays (large n) decays more rapidly. This behavior reflects the trade-off between short-term and long-term memory as the system transitions to the synergistic regime dominated by fast modes.

Figure 8.

(Left) Memory capacity for n = 1–10 and , showing an increase in short-term memory capacity as . (Right) Memory capacity for n = 1–20 and , showing that the long-term capacity drops as . Parameters: , , . These results are averaged over 50 input realizations.

We note that, for low dissipation (e.g., ), the system may appear to retain information about initial conditions at long delays n, indicating that the fading memory property might not be fully realized. While a more comprehensive benchmark such as the information processing capacity (IPC) [41] could more definitively confirm or refute fading memory in this low-dissipation regime, our current goal is to investigate synergy and redundancy in a small quantum reservoir rather than to perform an exhaustive reservoir-computing benchmark. Instead, we emphasize qualitative trends in short- vs. long-term memory. A more detailed, IPC-based study would be an interesting direction for future work to fully study the computational potential of near-critical quantum reservoirs in this system.

3.4.3. Impact of Dissipation

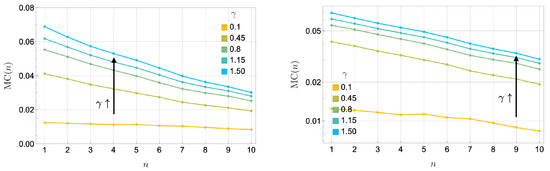

Figure 9 illustrates how the dissipation rate affects memory capacity at the critical coupling. As the dissipation rate increases, the memory capacity grows, which can be attributed to the enhanced stability of the reservoir’s dynamics (more rapid relaxation towards the steady state). Dissipation suppresses oscillatory behavior and stabilizes the reservoir’s response. This stabilization corresponds to a regime of highly redundant encoding, where information is stored near the steady state across nearly identical subsystems.

Figure 9.

Memory capacity at the critical coupling as increases. (Left) Linear scale plot: vs. n. (Right) Log scale plot of . The left panel shows the average memory capacity (100 realizations). The right panel illustrates an exponential decay in . The exponential decay rate of the two-time correlation in the memory capacity, , remains approximately constant as increases, indicating that dissipation uniformly governs the loss of correlations across different dissipation rates. Differences in memory capacity primarily arise from the proportionality factor, with larger leading to a smaller variance in the output prediction in the denominator of Equation (18), as the output rapidly stabilizes to the steady state. This rapid stabilization corresponds to highly redundant encoding, and in turn enhances the total memory capacity for memorizing uniformly random input time series. Notably, in this redundant coding regime, the highly dissipative dynamics improve the quantum reservoir’s memory capacity.

Interestingly, as shown in Figure 9, while higher dissipation leads to improved total memory capacity, the decay rate of memory capacity in this redundant regime remains approximately constant across different dissipation rates. This suggests that dissipation uniformly governs the loss of correlations over time. Differences in memory capacity at high dissipation primarily arise from the proportionality factor in Equation (18). As dissipation increases, the variance in the reservoir’s output prediction decreases, reflecting rapid stabilization to the steady state. This reduced variance amplifies the initial memory capacity but does not alter the exponential decay rate of correlations.
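One way to separate the decay rate from the proportionality factor is a straight-line fit to the logarithm of the memory capacity versus delay. The sketch below assumes a purely exponential form, MC(n) ≈ A·exp(−r·n); the function name `fit_decay` and the two synthetic curves are illustrative only and are not data from this work.

```python
import numpy as np

def fit_decay(delays, mc):
    """Fit log(MC) = log(A) - r * n and return (r, A)."""
    slope, intercept = np.polyfit(delays, np.log(mc), 1)
    return -slope, np.exp(intercept)

# Synthetic curves (illustrative, not measured): same rate, different prefactor.
delays = np.arange(1, 21)
curve_small_prefactor = 0.2 * np.exp(-0.3 * delays)
curve_large_prefactor = 0.8 * np.exp(-0.3 * delays)

rate_small, amp_small = fit_decay(delays, curve_small_prefactor)
rate_large, amp_large = fit_decay(delays, curve_large_prefactor)
```

Two curves that share a slope on a log scale but differ in intercept correspond to the situation described above: equal decay rates with different proportionality factors.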

These results highlight a subtle interplay between dissipation, memory capacity, and encoding modes. Near criticality at , the system exhibits synergistic behavior, where collective dynamical response dominates, enabling the reservoir to respond sensitively to recent input signals. This synergistic encoding boosts short-term memory retention but comes at the expense of long-term storage, as the system’s ability to retain correlations with far past inputs diminishes rapidly due to sensitivity to perturbation.

At higher dissipation rates , the system tends to encode inputs more redundantly and relaxes more quickly to a steady state. Interestingly, as illustrated in Figure 9, both short- and long-term memory capacities, at criticality, increase with for a simple uniformly random input memorization task, even though one might initially expect a trade-off. The underlying reason could be that the system has not yet fully achieved the fading memory regime, so further investigations, potentially through more comprehensive benchmarks such as IPC analysis, are needed to confirm how dissipation precisely balances short-term responsiveness and long-term stability right at a critical point.

From the perspective of quantum reservoir design, dissipation also plays a critical role in ensuring that the reservoir exhibits the fading memory property, whereby the influence of past inputs gradually diminishes; this property is a necessary condition for an operational reservoir computer [9,10].

4. Conclusions and Outlook

In this work, we explored coupled Kerr-nonlinear oscillators as a model open quantum system for studying modes of information processing in a small quantum reservoir computing (QRC) platform. By employing Partial Information Decomposition (PID) and analyzing memory retention tasks, we investigated how the interplay of dynamical instability and dissipation governs the encoding of input information into redundant or synergistic modes. These encoding schemes play a crucial role in determining the reservoir’s performance in processing and retaining temporal data.

Our findings reveal several key insights. First, near the critical coupling strength , the system transitions from predominantly redundant to synergistic encoding. This transition is driven by the dynamics of coherent oscillation modes that dominate as slow modes (soft modes) become overdamped. These collective dynamics enable the reservoir to process information synergistically, boosting short-term memory retention. Importantly, this synergy is robust across different input signals, including telegraph processes and uniform uncorrelated noise, suggesting that the observed transition is an intrinsic feature of the system’s bifurcation near . This dynamic instability was elucidated through the non-equilibrium (Keldysh) field-theoretic analysis, which highlighted how the disappearance of soft modes amplifies the dominance of fast, coherent modes.

Dissipation () plays an important role in shaping information encoding and memory capacity for our reservoir near criticality. At low , synergistic encoding enables collective processing and enhances short-term memory retention, as the reservoir’s dynamics are sensitive to recent input signals. However, as increases, dissipation suppresses coherent dynamics, rapidly driving the system toward redundant encoding. In this regime, large dissipation stabilizes encoding by enforcing redundant representations near the steady state, enabling the reservoir to retain information about far-past inputs at the expense of sensitivity to recent input information.

The connection between encoding modes and memory capacity reveals a trade-off: synergistic encoding favors short-term memory retention but is less suited for long-term storage due to its sensitivity to perturbations. Redundant encoding, on the other hand, sacrifices responsiveness to recent inputs but improves long-term retention. From a quantum reservoir design perspective, dissipation ensures the reservoir exhibits the fading memory property necessary for effective reservoir design [9,42]. By carefully tuning dissipation, one can hope to optimize the balance between short-term responsiveness and long-term stability and tailor the reservoir to specific computational tasks, aligning with the recent work on engineered dissipation as computational resources in quantum systems [10,43,44].

Coupled Kerr-nonlinear oscillators provide a minimal yet insightful testbed for analyzing transitions between encoding modes driven by dissipation and dynamical instability. Extending this framework to other quantum systems with dynamical phase transitions, such as Bose–Hubbard models [40], driven-dissipative platforms [45], and spin systems [13,16], could deepen our understanding of how dissipation and instability shape encoding dynamics in systems with more complex phase spaces. Another interesting direction is to develop a rigorous definition of quantum synergy. Although this work uses Partial Information Decomposition (PID) to analyze classical information derived from quantum observables, incorporating quantum correlations could provide a more comprehensive view of how coherence and other quantum effects either enhance or constrain information encoding, in line with [7,8,13]. Such development would refine our understanding of synergy and redundancy in small quantum systems from a more quantum information theoretic viewpoint.

In practice, realistic quantum devices inevitably face various noise sources and control imperfections. While these perturbations may shift the crossover point between redundant and synergistic encoding or relocate the system’s critical point, our results show that this crossover in the modes of encoding persists over a wide parameter range. With advanced experimental techniques, such as dynamical decoupling and error mitigation in quantum optics, we expect that the essential physics behind this crossover can still be realized in real experimental systems.

Lastly, it is important to recognize that the results presented here, like much of the prior work, assume perfect readout without the presence of shot noise. As system sizes scale, however, readout processes may suffer from exponential concentration phenomena, requiring exponentially many measurement shots to estimate input-dependent readouts accurately as discussed in [11]. This limitation presents a significant barrier to scalability. In larger quantum reservoir systems, future studies must address how synergy and redundancy behave under realistic measurement constraints. Incorporating the effects of finite measurement precision into the framework of encoding dynamics could lead to more scalable and experimentally feasible designs for quantum reservoirs.

Author Contributions

Conceptualization, methodology, investigation, formal analysis, writing, K.C. and T.C.; software, K.C.; funding acquisition, T.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research has received funding support from the NSRF via the Program Management Unit for Human Resources & Institutional Development, Research and Innovation [grant number B13F660057].

Data Availability Statement

The codes and datasets used in this work are available from the corresponding author upon reasonable request.

Acknowledgments

T.C. acknowledges insightful discussions with Wave Ngampruetikorn on synergistic and redundant coding.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Partial Information Decomposition (PID)

In classical (Shannon) information theory, we run into conceptual difficulties as soon as there are more than two random variables to handle because there is no single, universally accepted way to break down the total information among multiple variables following Shannon’s original recipe [46]. Quantifying the amount of information that is shared (redundant), exclusive (unique), or complementary (synergistic) among three or more variables is highly nontrivial and remains an active area of research; see, for example, [47,48,49,50,51,52]. In this work, we adopt one particular framework that meets three criteria: (1) It has a relatively straightforward formalism. (2) It captures whether and how our system is redundant or synergistic. (3) It does not exhibit major interpretational drawbacks for our purposes.

This framework, called Partial Information Decomposition (PID) [53], attempts to disentangle the multivariate information into non-overlapping, non-negative parts with clear interpretations. Concretely, consider a set of n random variables , where S (the target) is the variable whose information we want to capture, and (the sources) is the combined set of variables providing that information. The total (multivariate) mutual information between the source and the target is (for discrete distributions)

As outlined in the original PID paper [53], this methodology can in principle be generalized to any number of variables in terms of the PID lattice, but its complexity increases rapidly once . (Further developments can be found in [54,55,56,57,58].) In this work, we focus on the simplest nontrivial case , for which the PID formalism is most concretely developed and comparatively well understood.

Appendix A.1. PID for Three Variables

Let be three random variables, and consider , the mutual information between X and the pair . The three-variable PID proposes the following decomposition of into four distinct parts:

along with corresponding decompositions of the pairwise mutual informations:

Here, the four partial information (PI) terms have the following interpretations:

- (redundancy/shared information): the amount of information about X that is found in common in Y and Z.

- (synergy/complementary information): the information about X that is only accessible when considering Y and Z jointly, i.e., the “whole is greater than the sum of its parts”, which is typically a signature of the cooperation of constituents in complex systems.

- (unique information): the information about X that can be acquired solely from Y (or Z) and not from Z (or Y).

Because there are four unknown PI quantities but only three equations, Equations (A1)–(A3), one cannot solve for the PIs without additional theoretical constraints. Different PID axioms or definitions fix one of these quantities first (e.g., by prescribing a formula or an inequality), allowing the remaining quantities to be determined from the joint distribution . Approaches in the literature include (1) defining redundancy first [49,53,57,59], (2) defining synergy first [50,60], and (3) defining unique information first [23].
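To make the counting argument concrete, the bivariate PID relations can be written out in an assumed notation, with R for redundancy, S for synergy, and U_Y, U_Z for the unique informations (these symbol names are chosen here for illustration). This follows the standard form of the decomposition in [53]; it is a sketch for orientation, not a replacement for Equations (A1)–(A3).

```latex
\begin{align}
  I(X;Y,Z) &= R + U_Y + U_Z + S, \\
  I(X;Y)   &= R + U_Y, \\
  I(X;Z)   &= R + U_Z .
\end{align}
% Three equations, four unknowns: once one term (say R) is fixed by a
% chosen definition, the rest follow from the mutual informations:
\begin{align}
  U_Y &= I(X;Y) - R, \qquad U_Z = I(X;Z) - R, \\
  S   &= I(X;Y,Z) - I(X;Y) - I(X;Z) + R .
\end{align}
```

The last line also shows that the combination I(X;Y) + I(X;Z) − I(X;Y,Z) equals R − S, which is the difference that the co-information measures.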

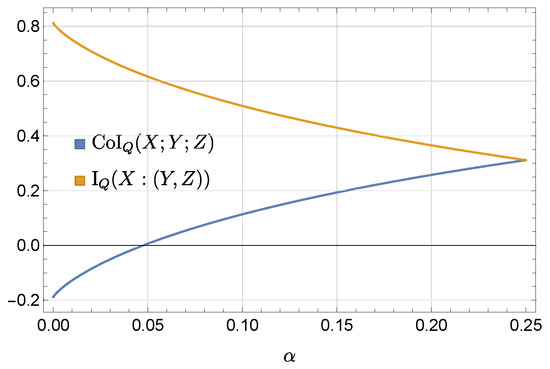

Appendix A.2. Co-Information

From Equations (A1)–(A3), one obtains the following notable combination of mutual information:

The left-hand side is often called the co-information [47] (or equivalently, interaction information [61] or sometimes just the mutual information [62]):

Although this is the simplest and most direct extension of mutual information to three variables, it has two important drawbacks. First, it can take positive or negative values (making some interpretations more subtle than the non-negative mutual information). Secondly, it cannot really distinguish redundancy and synergy because the right-hand side of (A4) just reflects their differences.

Nevertheless, for three-variable systems, the sign of the co-information (CoI) indicates whether redundancy or synergy dominates. Even though the co-information cannot quantify the amounts of redundancy and synergy separately, it can tell whether the system is in a redundant or a synergistic coding regime [21]. When CoI > 0, the system is said to be redundancy dominated, and when CoI < 0, the system is said to be synergy dominated [51,63]. This measure also plays a role in certain PID definitions and algorithms [23].
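The sign behavior can be checked on two small discrete examples. The sketch below assumes the convention CoI = I(X;Y) + I(X;Z) − I(X;Y,Z), measured in bits, and stores the joint distribution as an array indexed `[x, y, z]`; the function names are illustrative.

```python
import numpy as np

def mutual_information(p_ab):
    """Mutual information I(A;B) in bits from a 2D joint probability table."""
    p_ab = np.asarray(p_ab, dtype=float)
    p_a = p_ab.sum(axis=1, keepdims=True)
    p_b = p_ab.sum(axis=0, keepdims=True)
    outer = p_a * p_b
    mask = p_ab > 0
    return float(np.sum(p_ab[mask] * np.log2(p_ab[mask] / outer[mask])))

def co_information(p_xyz):
    """CoI = I(X;Y) + I(X;Z) - I(X;Y,Z) for a joint table indexed [x, y, z]."""
    p = np.asarray(p_xyz, dtype=float)
    i_xy = mutual_information(p.sum(axis=2))
    i_xz = mutual_information(p.sum(axis=1))
    i_x_yz = mutual_information(p.reshape(p.shape[0], -1))
    return i_xy + i_xz - i_x_yz

# XOR: X = Y xor Z with independent fair bits Y and Z (purely synergistic).
p_xor = np.zeros((2, 2, 2))
for y in (0, 1):
    for z in (0, 1):
        p_xor[y ^ z, y, z] = 0.25

# Copy: Y = Z = X with a fair bit X (purely redundant).
p_copy = np.zeros((2, 2, 2))
p_copy[0, 0, 0] = p_copy[1, 1, 1] = 0.5

coi_xor = co_information(p_xor)    # -1 bit: synergy dominated
coi_copy = co_information(p_copy)  # +1 bit: redundancy dominated
```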

Appendix B. Explicit Examples for PID Calculation

In this appendix, we provide simple but useful examples to show how one can compute Partial Information Decomposition (PID) terms analytically under the BROJA approach [23]. The BROJA method obtains each partial information by solving a constrained optimization problem over all joint distributions , consistent with certain marginal constraints derived from the true distribution .

Appendix B.1. General Framework

Let be three discrete random variables with a true joint distribution . The BROJA definitions of the four partial information terms () are as follows [23]:

where is the co-information under the joint distribution Q, and

is the mutual information computed from the actual distribution P. Any subscripted quantity such as is computed from a candidate joint distribution , not from the true P.

Appendix B.2. Definition of Δ_P

The space consists of all joint distributions whose and marginals match those of P. Formally, if is the set of all distributions on , then

for all . In other words, we fix the two-dimensional marginals of and to match the true data but allow to vary.

Appendix B.3. Foliation into Slices

To handle the high dimensionality of , a convenient approach parameterizes via a foliation; see Appendix A of [23]. Concretely, for each x with , we define

A joint distribution can then be specified by choosing, for each x, a , and combining via

Hence, can be viewed as the product of slice spaces for all x. Once a suitable parameterization of these slices is chosen, the optimization in (A6) becomes a finite-dimensional convex optimization problem. Next, we show how the procedure is performed in the simple cases following [23].

Appendix B.4. Example 1: AND Gate

Consider three binary variables with

and independent and identically distributed . The true joint distribution P is uniform on the events with probability each, and zero otherwise. From this P, one derives the marginals and .

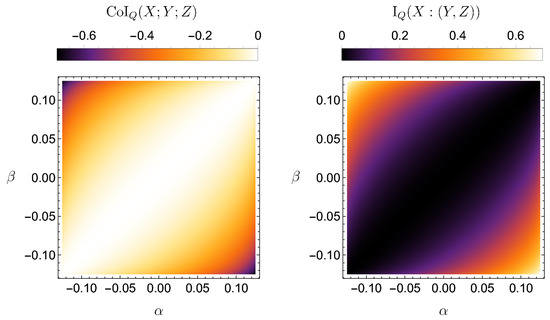

Appendix B.5. Parametrizing Δ_P

Applying the slice formalism, one obtains

- For (which has ), the slice is a 1-parameter family with .

- For (which has ), the slice is trivial because the only consistent distribution is .

Combining and as in (A9) and reparameterizing yields the 1-parameter family

Under each candidate , one can compute and , and thus solve the optimization problems over in (A6). Figure A1 shows and vs. .

Figure A1.

Co-information and mutual information for the AND-gate example, plotted as functions of . The optimum for redundancy (respectively, synergy) occurs at .

Solving these optimizations shows that is the critical point. Hence, each partial information is

Appendix B.6. Example 2: XOR Gate

Next, consider the binary XOR relation

where are again independent and identically distributed . The true distribution P is uniform on . One again sets up the slices and , each giving a family of distributions parameterized by and .

- : the most general distribution that satisfies the constraint (A8) is with .

- : since the distribution for the XOR operation is symmetric under swapping Y and Z, it turns out that with another free parameter, .

Combining them with yields a two-parameter family

where , governing all . As before, one computes and to solve the optimization in (A6). Figure A2 shows the surfaces of CoI and I vs. .

Figure A2.

(Left) ; (Right) ; for the XOR-gate example. The optimum occurs at .

The optimum occurs at , giving

This perfectly aligns with the well-known fact that XOR is synergistic. Consider , and we happen to have only the knowledge of Y, where either we know that or . Without the knowledge of Z, we cannot infer any useful information about the value of X at all, and the probability is always equal to for any . Only when both Y and Z are presented together do we know exactly what the value of X should be, and it is exactly one bit of information (i.e., one yes/no question) that we need to know in order to eliminate all uncertainty about the value of X.

These two classic gates (AND and XOR) show how the BROJA optimization can be computed analytically in simple discrete cases. In practice, for larger or more complex systems, numerical methods are necessary, but the underlying principle is the same. One restricts to to preserve certain marginals and then solves the convex optimization problems in (A6).
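As a numerical counterpart to the AND-gate example, the optimization can be carried out by brute force over a one-parameter family. The sketch below uses an assumed parameterization that may differ from the one in this appendix: `a` is the conditional probability Q(y=0, z=0 | x=0), restricted to [1/3, 2/3] so that the (X, Y) and (X, Z) marginals match the true distribution. Since both pairwise mutual informations are fixed on this family, maximizing the co-information is equivalent to minimizing I_Q(X;Y,Z); the unique information is obtained here from the consistency relations rather than from a separate optimization.

```python
import numpy as np

def mutual_information(p_ab):
    """Mutual information I(A;B) in bits from a 2D joint probability table."""
    p_a = p_ab.sum(axis=1, keepdims=True)
    p_b = p_ab.sum(axis=0, keepdims=True)
    outer = p_a * p_b
    mask = p_ab > 0
    return float(np.sum(p_ab[mask] * np.log2(p_ab[mask] / outer[mask])))

def and_candidate(a):
    """Candidate Q[x, y, z] for the AND gate; a = Q(y=0, z=0 | x=0)."""
    q = np.zeros((2, 2, 2))
    q[1, 1, 1] = 0.25                 # the x = 1 slice is fixed
    q[0, 0, 0] = 0.75 * a
    q[0, 0, 1] = 0.75 * (2 / 3 - a)
    q[0, 1, 0] = 0.75 * (2 / 3 - a)
    q[0, 1, 1] = 0.75 * (a - 1 / 3)
    return np.clip(q, 0.0, None)      # guard against rounding below zero

p_true = and_candidate(1 / 3)         # a = 1/3 recovers the true AND distribution
i_xy = mutual_information(p_true.sum(axis=2))
i_xz = mutual_information(p_true.sum(axis=1))
i_joint_true = mutual_information(p_true.reshape(2, 4))

grid = np.linspace(1 / 3, 2 / 3, 2001)
i_joint = np.array([mutual_information(and_candidate(a).reshape(2, 4)) for a in grid])
a_opt = grid[np.argmin(i_joint)]

R = i_xy + i_xz - i_joint.min()       # redundancy: maximal co-information
S = i_joint_true - i_joint.min()      # synergy
U_Y = i_xy - R                        # unique information of Y
U_Z = i_xz - R                        # unique information of Z
```

A grid search is only practical for such low-dimensional families; for larger alphabets a dedicated convex solver, such as the estimator of [37], is the more appropriate tool.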

Appendix C. Keldysh Action

In this appendix, we outline how to obtain the Keldysh action for the coupled Kerr oscillators described by Equations (1) and (2), and how the system’s linear response to external perturbation can be obtained from the retarded Green’s function. Readers seeking broader context on Keldysh formalism may consult [24,64,65].

From the Lindblad Equation (1), one can reformulate this open-system evolution in a path-integral language by introducing “classical” fields and “quantum” fields , capturing forward and backward time contours, respectively [24]. After performing the usual Keldysh rotation, the action takes the following form:

Here, is the detuning frequency, is the Kerr nonlinearity, J is the coupling rate, and is an external drive. Varying with respect to and yields the semiclassical equations of motion that incorporate both Hamiltonian and dissipative dynamics.

Appendix C.1. Mean-Field Equations and the Effective Potential

Mean-field or semiclassical (or saddle-point) dynamics are found by setting and similarly for complex conjugates. One obtains as a trivial solution [24], and the mean-field dynamics for then follow from giving

where . This can be recast as a potential dynamics of the form

with the effective potential

which is the potential landscape discussed in Section 3.2.

Appendix C.2. Fluctuations and the Inverse Green’s Function

To analyze the system’s linear response to small perturbation about a mean-field or saddle-point solution, we expand the Keldysh action to the second order in the fluctuating fields . Namely, we set

expand in (A10) to the quadratic order in , and then consider the Fourier decomposition of the fluctuations

In block-matrix form, the resulting quadratic action reads

where groups the fluctuations . Explicitly, in the vector component such that

the inverse of retarded/advanced Green’s function and the Keldysh component of the inverse Green’s function read, respectively,

where for .

Appendix C.3. Classical Field Response

We are interested in the fluctuations of the classical field variables , whose growth or decay is determined by the inverse retarded block of (A12). Let be a small external drive coupling linearly to the oscillator modes as in (A11). At the level of fluctuations, one can write

where is the relevant component (or linear combination of components) of the retarded Green’s function. In the time domain,

with for . This causality condition sets the retarded (not advanced) nature of ; the system cannot respond before the perturbation arises.

Appendix C.4. Pole Structure and Oscillation vs. Decay

To analyze the dynamics near a stationary solution, e.g., , we linearize around that point and compute by inverting the block matrix. There, the inverse retarded Green’s function of (A12) becomes

The poles of appear where i.e., where the determinant of the retarded block vanishes. Writing such a pole as clarifies the physical nature of the fluctuation mode:

- is the frequency of oscillation.

- is the exponential decay rate if , signifying a stable, dissipative mode.

By setting and as discussed in Section 3.2, one obtains the poles describing the fast and slow relaxation modes in (12), that is

The real part of a slow-mode pole starts to disappear when the effective potential becomes marginally flat (e.g., ). Although one might expect a zero-frequency “soft” oscillation in a conservative dynamics setting, dissipation (encoded in terms of ) shifts that would-be neutral oscillatory mode into an overdamped decay channel.
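The qualitative change from an oscillatory to an overdamped pole can be illustrated with a much simpler toy model than the Green's function above. The sketch assumes a single classical damped oscillator, x'' + 2Γx' + ω0²x = 0, with the convention x(t) ∝ exp(−iωt), so that the decay rate is −Im ω; the names `toy_poles`, `omega0`, and `gamma` are illustrative and are not the parameters of the coupled Kerr system.

```python
import numpy as np

def toy_poles(omega0, gamma):
    """Roots of omega**2 + 2j*gamma*omega - omega0**2 = 0 (toy damped oscillator)."""
    return np.roots([1.0, 2j * gamma, -omega0 ** 2])

# Stiff restoring force: poles at +/- sqrt(omega0^2 - gamma^2) - 1j*gamma (oscillatory).
underdamped = toy_poles(omega0=1.0, gamma=0.1)

# Nearly flat potential (omega0 < gamma): both poles lie on the negative
# imaginary axis, so the mode decays without oscillating.
overdamped = toy_poles(omega0=0.05, gamma=0.1)
```

In this toy picture, flattening the potential at fixed damping moves the real part of the poles to zero before the potential becomes completely flat, which mirrors the overdamped decay channel described above.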

In summary, the Keldysh action formalism provides a powerful lens to derive both the mean-field equations of motion and the fluctuation response in an open quantum system. Retarded Green’s functions, in particular, capture the causality of how a driven perturbation modifies the system at later times, thereby revealing the presence of overdamped or oscillatory collective modes. These results underpin the discussion in Section 3.2 on how the disappearance of slow modes leads to synergistic encoding at .

Appendix D. Second-Order Cumulant Expansion

In this appendix, we derive the second-order (2nd-order) cumulant expansion used to obtain semiclassical equations of motion for eight complex-valued expectation variables:

This second-order cumulant expansion serves as an interpolation between simpler mean-field dynamics, where second-order cumulants factorize and yield no correlations, and the full quantum description, in which higher-order cumulants can be nonzero when quantum correlations are sufficiently strong. We follow the standard cumulant-truncation scheme [66], imposing that all third- and fourth-order cumulants vanish [67]. That is, we set

where the subscript “C” denotes the connected (cumulant) part. This approximation can capture second-order correlations while keeping the system of equations tractable.
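As a concrete instance of the truncation, setting a third-order cumulant to zero expresses a three-operator expectation value through lower-order ones. The identity below is the standard result for generic operators A, B, C (placeholders, with operator ordering preserved inside each expectation value); the fourth-order case follows analogously with more terms.

```latex
\begin{align}
  \langle ABC \rangle_C = 0
  \;\;\Longrightarrow\;\;
  \langle ABC \rangle \approx
      \langle AB \rangle \langle C \rangle
    + \langle AC \rangle \langle B \rangle
    + \langle A \rangle \langle BC \rangle
    - 2 \langle A \rangle \langle B \rangle \langle C \rangle .
\end{align}
```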

Below, we provide the resulting coupled ordinary differential equations (ODEs). These govern the dynamics of our reduced set of expectation values under the second-order expansion:

where is the Kronecker delta. In the main text, we assume and for simplicity.

Figure A3.

Classical mutual information for the 2nd-order cumulant description. This is to be contrasted with Figure 2 (left) to reveal how the second-order description captures partial but nontrivial correlation effects that correct the mean-field approximation. We set to represent a dynamical regime with non-negligible correlations, motivating the use of the second-order cumulant description.

Note that these second-order equations go beyond a strict mean-field approximation without incurring the full computational cost of tracking higher-order correlations. By discarding third- and fourth-order cumulants, we retain the pairwise correlations, which often dominate the relevant dynamics, while avoiding an intractable explosion in the number of degrees of freedom.

References

- Jaeger, H. Short Term Memory in Echo State Networks; GMD Report; Fraunhofer-Gesellschaft: München, Germany, 2001.

- Lukoševičius, M.; Jaeger, H. Reservoir computing approaches to recurrent neural network training. Comput. Sci. Rev. 2009, 3, 127–149. [Google Scholar] [CrossRef]

- Nakajima, K. Reservoir computing: Theory, physical implementations, and applications. IEICE Tech. Rep. 2018, 118, 149–154. [Google Scholar]

- Lukoševičius, M. A practical guide to applying echo state networks. In Neural Networks: Tricks of the Trade, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 659–686. [Google Scholar]

- Fujii, K.; Nakajima, K. Harnessing disordered-ensemble quantum dynamics for machine learning. Phys. Rev. Appl. 2017, 8, 024030. [Google Scholar] [CrossRef]

- Innocenti, L.; Lorenzo, S.; Palmisano, I.; Ferraro, A.; Paternostro, M.; Palma, G.M. Potential and limitations of quantum extreme learning machines. Commun. Phys. 2023, 6, 118. [Google Scholar] [CrossRef]

- Motamedi, A.; Zadeh-Haghighi, H.; Simon, C. Correlations Between Quantumness and Learning Performance in Reservoir Computing with a Single Oscillator. arXiv 2023, arXiv:2304.03462. [Google Scholar]

- Götting, N.; Lohof, F.; Gies, C. Exploring quantumness in quantum reservoir computing. Phys. Rev. A 2023, 108, 052427. [Google Scholar] [CrossRef]

- Martínez-Peña, R.; Ortega, J.P. Quantum reservoir computing in finite dimensions. Phys. Rev. E 2023, 107, 035306. [Google Scholar] [CrossRef] [PubMed]

- Sannia, A.; Martínez-Peña, R.; Soriano, M.C.; Giorgi, G.L.; Zambrini, R. Dissipation as a resource for Quantum Reservoir Computing. Quantum 2024, 8, 1291. [Google Scholar] [CrossRef]

- Xiong, W.; Facelli, G.; Sahebi, M.; Agnel, O.; Chotibut, T.; Thanasilp, S.; Holmes, Z. On fundamental aspects of quantum extreme learning machines. Quantum Mach. Intell. 2025, in press. [Google Scholar]

- Mujal, P.; Martínez-Peña, R.; Giorgi, G.L.; Soriano, M.C.; Zambrini, R. Time-series quantum reservoir computing with weak and projective measurements. NPJ Quantum Inf. 2023, 9, 16. [Google Scholar] [CrossRef]

- Govia, L.; Ribeill, G.; Rowlands, G.; Krovi, H.; Ohki, T. Quantum reservoir computing with a single nonlinear oscillator. Phys. Rev. Res. 2021, 3, 013077. [Google Scholar] [CrossRef]

- Ivaki, M.N.; Lazarides, A.; Ala-Nissila, T. Quantum reservoir computing on random regular graphs. arXiv 2024, arXiv:2409.03665. [Google Scholar]

- Sornsaeng, A.; Dangniam, N.; Chotibut, T. Quantum next generation reservoir computing: An efficient quantum algorithm for forecasting quantum dynamics. Quantum Mach. Intell. 2024, 6, 57. [Google Scholar] [CrossRef]

- Martínez-Peña, R.; Giorgi, G.L.; Nokkala, J.; Soriano, M.C.; Zambrini, R. Dynamical phase transitions in quantum reservoir computing. Phys. Rev. Lett. 2021, 127, 100502. [Google Scholar] [CrossRef]

- Mujal, P.; Martínez-Peña, R.; Nokkala, J.; García-Beni, J.; Giorgi, G.L.; Soriano, M.C.; Zambrini, R. Opportunities in Quantum Reservoir Computing and Extreme Learning Machines. Adv. Quantum Technol. 2021, 4, 2100027. [Google Scholar] [CrossRef]

- Hartmann, M.; Brandão, F.; Plenio, M. Quantum many-body phenomena in coupled cavity arrays. Laser Photonics Rev. 2008, 2, 527–556. [Google Scholar] [CrossRef]

- Gat, I.; Tishby, N. Synergy and redundancy among brain cells of behaving monkeys. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 30 November–5 December 1998; Volume 11. [Google Scholar]

- Brenner, N.; Strong, S.P.; Koberle, R.; Bialek, W.; Steveninck, R.R.d.R.v. Synergy in a neural code. Neural Comput. 2000, 12, 1531–1552. [Google Scholar] [CrossRef] [PubMed]

- Schneidman, E.; Bialek, W.; Berry, M.J. Synergy, redundancy, and independence in population codes. J. Neurosci. 2003, 23, 11539–11553. [Google Scholar] [CrossRef] [PubMed]

- Latham, P.E.; Nirenberg, S. Synergy, redundancy, and independence in population codes, revisited. J. Neurosci. 2005, 25, 5195–5206. [Google Scholar] [CrossRef]

- Bertschinger, N.; Rauh, J.; Olbrich, E.; Jost, J.; Ay, N. Quantifying unique information. Entropy 2014, 16, 2161–2183. [Google Scholar] [CrossRef]

- Kamenev, A. Field Theory of Non-Equilibrium Systems; Cambridge University Press: Cambridge, UK, 2023. [Google Scholar]

- Dudas, J.; Carles, B.; Plouet, E.; Mizrahi, F.A.; Grollier, J.; Marković, D. Quantum reservoir computing implementation on coherently coupled quantum oscillators. NPJ Quantum Inf. 2023, 9, 64. [Google Scholar] [CrossRef]

- Dudas, J.; Grollier, J.; Marković, D. Coherently coupled quantum oscillators for quantum reservoir computing. In Proceedings of the 2022 IEEE 22nd International Conference on Nanotechnology (NANO), Palma de Mallorca, Spain, 4–8 July 2022; pp. 397–400. [Google Scholar] [CrossRef]

- Kalfus, W.D.; Ribeill, G.J.; Rowlands, G.E.; Krovi, H.K.; Ohki, T.A.; Govia, L.C.G. Hilbert space as a computational resource in reservoir computing. Phys. Rev. Res. 2022, 4, 033007. [Google Scholar] [CrossRef]

- Khan, S.A.; Hu, F.; Angelatos, G.; Türeci, H.E. Physical reservoir computing using finitely-sampled quantum systems. arXiv 2021, arXiv:2110.13849. [Google Scholar]

- Milburn, G.; Holmes, C. Quantum coherence and classical chaos in a pulsed parametric oscillator with a Kerr nonlinearity. Phys. Rev. A 1991, 44, 4704. [Google Scholar] [CrossRef] [PubMed]

- Milburn, G.; Corney, J.; Wright, E.M.; Walls, D. Quantum dynamics of an atomic Bose-Einstein condensate in a double-well potential. Phys. Rev. A 1997, 55, 4318. [Google Scholar] [CrossRef]

- Carusotto, I.; Ciuti, C. Quantum fluids of light. Rev. Mod. Phys. 2013, 85, 299. [Google Scholar] [CrossRef]

- Goto, H.; Kanao, T. Chaos in coupled Kerr-nonlinear parametric oscillators. Phys. Rev. Res. 2021, 3, 043196. [Google Scholar] [CrossRef]

- Breuer, H.P.; Petruccione, F. The Theory of Open Quantum Systems; Oxford University Press on Demand: Oxford, UK, 2002. [Google Scholar]

- Bena, I. Dichotomous Markov noise: Exact results for out-of-equilibrium systems. Int. J. Mod. Phys. B 2006, 20, 2825–2888. [Google Scholar] [CrossRef]

- Abel, B.; Marquardt, F. Decoherence by quantum telegraph noise: A numerical evaluation. Phys. Rev. B 2008, 78, 201302. [Google Scholar] [CrossRef]

- Franco, R.L.; D’Arrigo, A.; Falci, G.; Compagno, G.; Paladino, E. Entanglement dynamics in superconducting qubits affected by local bistable impurities. Phys. Scr. 2012, 2012, 014019. [Google Scholar] [CrossRef]

- Makkeh, A.; Theis, D.O.; Vicente, R. BROJA-2PID: A robust estimator for bivariate partial information decomposition. Entropy 2018, 20, 271. [Google Scholar] [CrossRef] [PubMed]

- Soriente, M.; Chitra, R.; Zilberberg, O. Distinguishing phases using the dynamical response of driven-dissipative light-matter systems. Phys. Rev. A 2020, 101, 023823. [Google Scholar] [CrossRef]

- Soriente, M.; Heugel, T.L.; Omiya, K.; Chitra, R.; Zilberberg, O. Distinctive class of dissipation-induced phase transitions and their universal characteristics. Phys. Rev. Res. 2021, 3, 023100. [Google Scholar] [CrossRef]

- Alaeian, H.; Soriente, M.; Najafi, K.; Yelin, S. Noise-resilient phase transitions and limit-cycles in coupled Kerr oscillators. arXiv 2021, arXiv:2106.04045. [Google Scholar] [CrossRef]

- Dambre, J.; Verstraeten, D.; Schrauwen, B.; Massar, S. Information Processing Capacity of Dynamical Systems. Sci. Rep. 2012, 2, 514. [Google Scholar] [CrossRef]

- Martínez-Peña, R.; Ortega, J.P. Input-dependence in quantum reservoir computing. arXiv 2024, arXiv:2412.08322. [Google Scholar]

- Verstraete, F.; Wolf, M.M.; Cirac, J.I. Quantum computation and quantum-state engineering driven by dissipation. Nat. Phys. 2009, 5, 633–636. [Google Scholar] [CrossRef]

- Fry, D.; Deshmukh, A.; Chen, S.Y.C.; Rastunkov, V.; Markov, V. Optimizing quantum noise-induced reservoir computing for nonlinear and chaotic time series prediction. Sci. Rep. 2023, 13, 19326. [Google Scholar] [CrossRef]

- Leghtas, Z.; Touzard, S.; Pop, I.M.; Kou, A.; Vlastakis, B.; Petrenko, A.; Sliwa, K.M.; Narla, A.; Shankar, S.; Hatridge, M.J.; et al. Confining the state of light to a quantum manifold by engineered two-photon loss. Science 2015, 347, 853–857. [Google Scholar] [CrossRef] [PubMed]

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423. [Google Scholar] [CrossRef]

- Bell, A.J. The co-information lattice. In Proceedings of the Fifth International Workshop on Independent Component Analysis and Blind Signal Separation: ICA, Granada, Spain, 22–24 September 2003; Volume 2003. [Google Scholar]

- James, R.G.; Ellison, C.J.; Crutchfield, J.P. Anatomy of a bit: Information in a time series observation. Chaos Interdiscip. J. Nonlinear Sci. 2011, 21, 037109. [Google Scholar] [CrossRef] [PubMed]

- Harder, M.; Salge, C.; Polani, D. Bivariate measure of redundant information. Phys. Rev. E 2013, 87, 012130. [Google Scholar] [CrossRef] [PubMed]

- Olbrich, E.; Bertschinger, N.; Rauh, J. Information decomposition and synergy. Entropy 2015, 17, 3501–3517. [Google Scholar] [CrossRef]

- Rosas, F.E.; Mediano, P.A.; Gastpar, M.; Jensen, H.J. Quantifying high-order interdependencies via multivariate extensions of the mutual information. Phys. Rev. E 2019, 100, 032305. [Google Scholar] [CrossRef]

- Gutknecht, A.J.; Makkeh, A.; Wibral, M. From Babel to Boole: The Logical Organization of Information Decompositions. arXiv 2023, arXiv:2306.00734. [Google Scholar]

- Williams, P.L.; Beer, R.D. Nonnegative decomposition of multivariate information. arXiv 2010, arXiv:1004.2515. [Google Scholar]

- Bertschinger, N.; Rauh, J.; Olbrich, E.; Jost, J. Shared information—New insights and problems in decomposing information in complex systems. In Proceedings of the European Conference on Complex Systems 2012, Brussels, Belgium, 3–7 September 2012; Springer: Cham, Switzerland, 2013; pp. 251–269. [Google Scholar]

- Banerjee, P.K.; Griffith, V. Synergy, redundancy and common information. arXiv 2015, arXiv:1509.03706. [Google Scholar]

- Wibral, M.; Priesemann, V.; Kay, J.W.; Lizier, J.T.; Phillips, W.A. Partial information decomposition as a unified approach to the specification of neural goal functions. Brain Cogn. 2017, 112, 25–38. [Google Scholar] [CrossRef]

- Makkeh, A.; Gutknecht, A.J.; Wibral, M. Introducing a differentiable measure of pointwise shared information. Phys. Rev. E 2021, 103, 032149. [Google Scholar] [CrossRef] [PubMed]

- Schick-Poland, K.; Makkeh, A.; Gutknecht, A.J.; Wollstadt, P.; Sturm, A.; Wibral, M. A partial information decomposition for discrete and continuous variables. arXiv 2021, arXiv:2106.12393. [Google Scholar]

- Rauh, J.; Banerjee, P.K.; Olbrich, E.; Jost, J.; Bertschinger, N. On extractable shared information. Entropy 2017, 19, 328. [Google Scholar] [CrossRef]

- Griffith, V.; Koch, C. Quantifying synergistic mutual information. In Guided Self-Organization: Inception; Springer: Berlin/Heidelberg, Germany, 2014; pp. 159–190. [Google Scholar]

- McGill, W. Multivariate information transmission. Trans. IRE Prof. Group Inf. Theory 1954, 4, 93–111. [Google Scholar] [CrossRef]

- Yeung, R.W. A new outlook on Shannon’s information measures. IEEE Trans. Inf. Theory 1991, 37, 466–474. [Google Scholar] [CrossRef]

- Schneidman, E.; Still, S.; Berry, M.J.; Bialek, W. Network information and connected correlations. Phys. Rev. Lett. 2003, 91, 238701. [Google Scholar] [CrossRef] [PubMed]

- Dalla Torre, E.G.; Diehl, S.; Lukin, M.D.; Sachdev, S.; Strack, P. Keldysh approach for nonequilibrium phase transitions in quantum optics: Beyond the Dicke model in optical cavities. Phys. Rev. A 2013, 87, 023831. [Google Scholar] [CrossRef]

- Sieberer, L.M.; Buchhold, M.; Diehl, S. Keldysh field theory for driven open quantum systems. Rep. Prog. Phys. 2016, 79, 096001. [Google Scholar] [CrossRef] [PubMed]

- Kubo, R. Generalized cumulant expansion method. J. Phys. Soc. Jpn. 1962, 17, 1100–1120. [Google Scholar] [CrossRef]

- Sánchez-Barquilla, M.; Silva, R.; Feist, J. Cumulant expansion for the treatment of light–matter interactions in arbitrary material structures. J. Chem. Phys. 2020, 152, 034108. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).