Abstract

Accurate Remaining Useful Life (RUL) prediction is vital for effective prognostics in and the health management of industrial equipment, particularly under varying operational conditions. Existing approaches to multi-condition RUL prediction often treat each working condition independently, failing to effectively exploit cross-condition knowledge. To address this limitation, this paper introduces MoEFormer, a novel framework that combines a Mixture of Encoders (MoE) with a Transformer-based architecture to achieve precise multi-condition RUL prediction. The core innovation lies in the MoE architecture, where each encoder is designed to specialize in feature extraction for a specific operational condition. These features are then dynamically integrated through a gated mixture module, enabling the model to effectively leverage cross-condition knowledge. A Transformer layer is subsequently employed to capture temporal dependencies within the input sequence, followed by a fully connected layer to produce the final prediction. Additionally, we provide a theoretical performance guarantee for MoEFormer by deriving a lower bound for its error rate. Extensive experiments on the widely used C-MAPSS dataset demonstrate that MoEFormer outperforms several state-of-the-art methods for multi-condition RUL prediction.

1. Introduction

The Remaining Useful Life (RUL) prediction of machinery and components is a cornerstone of prognostics and health management, playing a pivotal role in ensuring the safety, reliability, and efficiency of industrial systems. RUL refers to the estimated time period during which a system or component is expected to perform its intended function before failure occurs. Accurate RUL predictions facilitate optimized maintenance schedules, reduce downtime, and minimize costs associated with unexpected failures. With the rapid advancements of Industry 4.0, RUL prediction has gained increasing importance across sectors such as aerospace, energy, manufacturing, and transportation, where system performance and reliability are critical [1,2]. However, the complexity of modern industrial systems, especially under dynamic operating conditions, presents significant challenges for accurately estimating RUL. To address these challenges, researchers have developed a wide range of RUL prediction methods, which can broadly be categorized into physics-based models, traditional machine learning approaches, and, more recently, deep learning techniques.

Physics-based models rely on first-principles knowledge, such as material degradation processes, structural dynamics, and failure mechanisms, to estimate RUL. These models are valued for their interpretability and the ability to incorporate domain expertise into them. However, their application is often limited by the need for precise system modeling and difficulties in capturing complex or nonlinear degradation patterns [3]. In contrast, traditional machine learning approaches, such as support vector machines, random forests, and regression models, have been widely employed for RUL prediction due to their capability to uncover patterns in historical sensor data and model nonlinear degradation processes [4]. While these methods have demonstrated promising results, they often require extensive feature engineering and face challenges in handling high-dimensional or time-series data, limiting their scalability and generalization performance.

In recent years, deep learning techniques have emerged as powerful tools for RUL prediction owing to their ability to automatically extract high-level features from large-scale sensor data. Architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have shown remarkable improvements in RUL prediction accuracy [5]. Additionally, attention-based models, including Transformers, and hybrid frameworks that combine multiple neural architectures have further expanded the horizons of RUL prediction [6,7]. The flexibility and robustness of deep learning make it particularly well suited for addressing the challenges posed by varying operating conditions and environments. However, many real-world systems operate under multi-condition scenarios, where environmental and operational variability substantially influence degradation behavior. Effectively modeling and generalizing across such scenarios remains a key challenge in RUL prediction [8,9]. Existing models often struggle to fully exploit the intricate relationships across operating conditions, leaving ample room for further innovation.

To address these limitations, this paper introduces MoEFormer, a novel framework specifically designed for multi-condition RUL prediction. MoEFormer integrates a Mixture of Encoders (MoE) architecture with a Transformer-based framework to enhance feature extraction, cross-condition knowledge fusion, and temporal dependency modeling. In contrast to existing methods, which often approach multi-condition RUL prediction in isolation, MoEFormer employs specialized encoders tailored to capture degradation features under specific operating conditions. A gated mixture module dynamically fuses these condition-specific features, enabling the model to leverage shared knowledge while preserving condition-specific insights. Additionally, the incorporation of Transformer layers improves the model’s ability to capture long-range temporal dependencies, which are crucial for accurate RUL estimation.

The main contributions of this paper are as follows:

- We propose a novel multi-condition RUL prediction framework that dynamically integrates condition-specific and shared knowledge using a Mixture-of-Encoders- and Transformer-based architecture.

- We derive a theoretical lower bound for the error rate of the proposed MoEFormer, providing a formal performance guarantee.

- Through extensive experiments on the C-MAPSS dataset, we demonstrate that MoEFormer outperforms existing state-of-the-art methods in predictive accuracy and robustness.

2. Related Work

2.1. Deep Learning for Sequence Modeling

The Remaining Useful Life (RUL) prediction problem can be abstractly formulated as a sequence modeling task. Deep learning has emerged as a powerful paradigm for sequence modeling, offering significant advantages over traditional statistical methods in capturing complex temporal dependencies. A major milestone in this domain was the development of recurrent neural networks (RNNs) [10] and their variants, such as long short-term memory (LSTM) networks [11] and gated recurrent units (GRUs) [12]. These architectures addressed the vanishing gradient problem in standard RNNs, enabling the modeling of long-range dependencies in sequential data. Convolutional neural networks (CNNs), though traditionally associated with image processing, have also been adapted for sequence modeling. Temporal convolutional networks [13] utilize causal convolutions and dilations to capture long-term dependencies in time-series data, often demonstrating superior computational efficiency and training stability compared to RNN-based methods.

The advent of attention mechanisms has further revolutionized sequence modeling by enabling models to selectively focus on relevant parts of input sequences regardless of their length. The Transformer architecture, introduced by Vaswani et al. [14], replaced recurrence with self-attention mechanisms and positional encodings, setting new benchmarks in sequence modeling. Transformer derivatives, such as BERT [15] and GPT [16], have inspired extensive exploration of attention-based architectures for time-series data. In this study, we leverage the Transformer architecture as the foundational model for sequence modeling.

2.2. Deep Learning for RUL Prediction

The application of deep learning to RUL prediction has garnered significant interest due to the nonlinear and complex nature of degradation processes in industrial systems. RNNs have been instrumental in modeling time-series data for RUL prediction. Early work by Malhi et al. [17] employed Elman RNNs to model degradation stages, laying the foundation for sequence-based architectures in predictive maintenance. The introduction of LSTM significantly enhanced the ability to capture long-term dependencies. For instance, Yuan et al. [18] and Wu et al. [19] trained LSTM models to directly predict RUL, while Zheng et al. [20] improved performance by integrating feed-forward layers on top of LSTM networks. Ellefsen et al. [21] incorporated unsupervised pretraining with restricted Boltzmann machines to enhance feature extraction alongside genetic algorithms for hyperparameter optimization. More recently, Wang et al. [5] introduced the ISSA-LSTM, utilizing an improved sparrow search algorithm for optimized LSTM parameter configuration. Similarly, Pent et al. [22] proposed a dual-channel LSTM network that adaptively extracts temporal features and their first-order derivatives for RUL prediction. Deng et al. [23] proposed a novel hybrid MC-GRU-PF model, achieving real-time degradation monitoring and RUL prediction of the ball screw where the analytical measurement model is not available.

Beyond RNN-based methods, CNNs have also shown strong promise in RUL prediction. CNNs excel at feature extraction through convolution and pooling operations, making them well suited for multi-channel sensor data. Babu et al. [6] and Li et al. [24] adopted CNNs to automatically extract temporal features, followed by feed-forward neural networks for RUL regression. Xu et al. [25] extended this approach by incorporating dilated convolutions, which enabled broader temporal context capture and faster training.

Hybrid architectures that integrate multiple deep learning models have further demonstrated the potential of deep learning in RUL prediction. For example, Liu et al. [26] combined LSTM networks with Gaussian process regression to provide both accurate predictions and uncertainty quantification. Preprocessing techniques, such as empirical mode decomposition, were employed to extract meaningful sensor patterns, especially in battery health monitoring. Zhu et al. [27] integrated CNNs and RNNs to fuse spatial and temporal features, achieving strong performance in RUL prediction. Similarly, Xu et al. [28] proposed a multi-source information fusion framework, combining CNNs and LSTMs to extract frequency- and time-domain features. Deng et al. [29] proposed a calibration-based hybrid transfer learning framework to improve the data fidelity and model generality of the hybrid physical and data-driven prognosis model for RUL prediction of rolling bearings across different machines. Jia et al. [30] presented a joint distribution adaptation-based transfer network with diverse feature aggregation, where the diverse feature aggregation module enhances feature extraction capability across large domain gaps and the joint maximum mean discrepancy is adopted to reduce the distribution discrepancy automatically. Ref. [31] combined the theoretical bound and deep adversarial network for machinery open-set diagnosis transfer, aiming to improve the diagnosis performance in open-set scenarios where some classes in the target domain are not seen during training.

Attention mechanisms have further enriched RUL prediction by enabling models to focus on critical features and time steps. Sun et al. [32] introduced an attention-based framework that fused handcrafted and learned features, improving prediction accuracy. Chen et al. [7] applied attention mechanisms to bearing RUL prediction, dynamically refining feature selection during training. Recent advances, such as the feature-sequence attention module by Cen et al. [33] and the feature reuse multi-scale attention residual network by Song et al. [34], have significantly advanced the accuracy of RUL predictions. Deng et al. [35] presented a double-layer attention-based adversarial network for partial transfer learning in machinery fault diagnosis, which can effectively transfer knowledge from the source domain to the target domain and improve the diagnosis accuracy even when there is a distribution discrepancy between the two domains.

In summary, although there are several prior works for addressing multi-condition RUL prediction, such as MODBNE [36], Cap-LSTM [8], and CNN-LSTM [37], these methods either incorporate condition-specific inputs as additional features or rely on domain adaptation techniques to align feature distributions. However, these approaches often fail to explicitly model cross-condition knowledge sharing or specialize in condition-specific feature extraction. In contrast, MoEFormer introduces a novel Mixture of Encoders (MoE) architecture, enabling the dynamic fusion of shared and condition-specific knowledge, and a Transformer-based predictor for capturing long-range temporal dependencies. This architectural innovation allows MoEFormer to outperform these methods, as demonstrated by significant reductions in RMSE and Score metrics on multi-condition datasets.

3. Preliminary

3.1. Transformer for Sequence Modeling

The Transformer model, introduced by Vaswani et al. [14], has become the cornerstone of many state-of-the-art deep learning architectures for sequence modeling tasks. It utilizes a self-attention mechanism to capture dependencies between elements of the input sequence without relying on the recurrent or convolutional structures traditionally used in sequence modeling. Given an input sequence of length n, where each element is a vector in , the Transformer processes this input sequence through multiple layers of self-attention and feed-forward networks to extract useful features. The input sequence is first embedded into a sequence with positional encodings to retain information about the relative positions of tokens in the sequence.

The core of the Transformer model is the self-attention mechanism. In this mechanism, each element in the sequence is compared with every other element, allowing the model to weigh the importance of different tokens when generating a representation. The self-attention operation computes a weighted sum of input vectors, where the weights are determined by the similarity between the tokens. Given an input sequence of embeddings , the self-attention mechanism computes the following:

where Q, K, and V are the query, key, and value matrices, respectively. These are computed from the input embeddings as follows:

where , , and are learned weight matrices. The softmax function ensures that the attention weights are normalized and sum to one, enabling the model to focus on the most relevant tokens in the sequence.

The attention block is followed by a position-wise feed-forward network, which consists of two fully connected layers with a ReLU activation in between:

After passing through the self-attention and feed-forward layers, the final representation of the sequence is obtained.

For sequence modeling tasks where the goal is to output a single feature from the sequence, the Transformer model typically uses a pooling operation or a linear layer applied to the final output. Let represent the output of the final layer for the n-th token. The output feature can be obtained by applying a pooling operation, such as

This produces a single output, which can be used for tasks such as regression or classification. The Transformer architecture is highly effective for sequence modeling tasks due to its ability to capture long-range dependencies via the self-attention mechanism, making it a powerful tool for RUL prediction.

3.2. RUL Prediction

Remaining Useful Life (RUL) prediction refers to the task of estimating the time duration until a system or component is expected to fail or reach a critical threshold beyond which its performance is no longer acceptable. From a machine learning perspective, RUL prediction is formulated as a regression problem, where the objective is to predict a continuous value representing the remaining time (or cycles) until failure, based on observed sensor data up to the current time. These sensor data provide critical information about the state of the equipment, and the goal of the prediction model is to infer the RUL from these data.

Let represent the feature vector at time t, where d is the dimensionality of the input feature space. The output of the model is the predicted RUL, denoted as , which corresponds to the expected number of time steps or cycles remaining before failure. Formally, the RUL prediction problem can be expressed as

where the following variables are used:

- is the learned model function;

- represents the model parameters, which are learned during training;

- are the sensor data that describe the system’s state within a window of length L ending at time T.

In many practical scenarios, the equipment may operate under varying working conditions, which can significantly affect the sensor data distribution. For example, a jet engine may operate at different Mach numbers, throttle resolver angles, altitudes, and ambient temperatures, leading to considerable differences in the sensor readings under each condition. As a result, RUL prediction becomes more challenging when these variations in operating conditions are present, as the data distribution under different conditions may be substantially different.

This paper specifically addresses the RUL prediction task under multiple working conditions. In this context, the sensor datum at time step t is collected from a specific working condition:

where represents the k-th working condition. Given the sensor data from K distinct working conditions , the goal is to build a model f (as defined in (5)) that minimizes the prediction error. The error is typically quantified using a loss function, such as the mean squared error (MSE):

where r is the true RUL value. This paper proposes MoEFormer, a Mixture of Encoders (MoE)-based model, to address the challenges of RUL prediction under multiple working conditions.

4. Methods

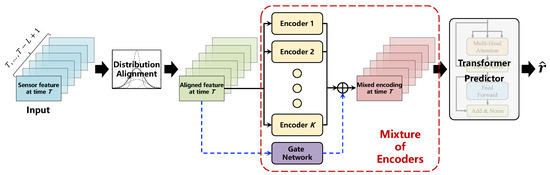

Figure 1 illustrates the framework of MoEFormer. The proposed MoEFormer consists of three primary components: distribution alignment, Mixture of Encoders, and Transformer predictor. The distribution alignment step aims to mitigate distribution drift across sensor features collected under varying operating conditions. The Mixture of Encoders component is designed to extract complementary knowledge from different working conditions, thereby generating a more comprehensive feature encoding for sensors across different times. Finally, the Transformer predictor is employed to capture temporal dependencies within the features, ultimately yielding the final RUL prediction. These components are discussed in detail below.

Figure 1.

Framework of the proposed MoEFormer. The model comprises three key components: distribution alignment, Mixture of Encoders (MoE), and Transformer predictor. The distribution alignment step aims to mitigate distribution drift in sensor features collected under varying operating conditions. The MoE component is designed to extract complementary knowledge from different working conditions. Lastly, the Transformer predictor captures temporal dependencies within the features, ultimately producing the final RUL prediction.

4.1. Distribution Alignment

Sensor feature sequences often originate from different working conditions, each of which may exhibit significantly different statistical properties. The goal of this module is to eliminate these discrepancies and align the feature distributions across varying operating conditions. To achieve this, we first compute the mean and standard deviation for the k-th working condition as follows:

where denotes the set of sensor features under the k-th working condition. For a sensor feature at time t, we assume it was collected under working condition , where is the index of the working condition. The feature sequence is then aligned as follows:

resulting in the aligned features , which facilitate the learning of subsequent model parameters.

4.2. Mixture of Encoders

While distribution alignment helps normalize sensor features from different working conditions, it does not imply that sensor characteristics across conditions fully reflect consistent equipment behavior. In fact, sensor data from different conditions contain complementary information about the equipment’s operating status. To leverage this complementary information, we introduce the Mixture of Encoders (MoE) module.

This module includes K feature encoders, corresponding to the K operating conditions. Each encoder specializes in processing the features from the k-th working condition. To integrate knowledge from all encoders, a gate control network is introduced to mix the outputs of the different encoders. The process is formalized as follows:

where is the weight assigned to the feature extracted by . The gate network consists of a two-layer fully connected network with ReLU activation, and the output is normalized using a softmax function.

Although each encoder is designed to specialize in the features from its respective working condition, we found that directly training the network end to end does not always yield optimal results. Therefore, we introduce an auxiliary loss to guide each encoder towards focusing on features from the corresponding working condition. Specifically, we use the working condition index of to apply a cross-entropy loss to the gate network’s output:

where is an indicator function that equals 1 if p is true and 0 otherwise.

4.3. Transformer Predictor

Once the features are processed through the Mixture of Encoders, we obtain a mixed-feature vector for each time step. While these features effectively represent the equipment’s status at a given time, the RUL prediction requires modeling the temporal dependencies among these features. To this end, MoEFormer introduces a Transformer predictor.

Given a sequence of mixed feature vectors , we employ the Transformer architecture, as described in Section 3.1, to model the temporal dependencies:

Since the final RUL prediction depends on the entire sequence, we introduce an averaging operation over the sequence:

Additionally, as noted by [38], the sensor time series trend contains valuable RUL-related information. To enhance the feature representation, MoEFormer incorporates this information by calculating the Pearson correlation coefficient between the aligned feature sequence and the time indices :

where is the trend coefficient vector. This vector is then concatenated with the output z from the Transformer and passed through a fully connected layer to produce the final RUL prediction:

The RUL loss is computed using the mean squared error between the predicted and the true RUL r:

5. Theoretical Analysis of MoEFormer

In this section, we derive a probabilistic error bound for MoEFormer. Specifically, we analyze the probability of a prediction result, denoted by , having an error not exceeding :

Intuitively, this probability decreases as decreases. Now, consider the prediction error probability for MoEFormer, as well as the error probability for a model utilizing only a single encoder. We aim to demonstrate that the error probability of MoEFormer, , is bounded below by a quantity that depends on . Formally, we present the following theorem:

Theorem 1.

(Probabilistic error bound for MoEFormer). Suppose K encoders process features independently, and a trained gate network assigns input features to encoders such that the ratio of the probability of assigning a sample to the correct encoder versus an incorrect encoder is , where . Then, the prediction error probability of MoEFormer is bounded below by

Proof.

Let us consider K encoders, each processing features independently. The probability that exactly s out of K encoders make correct predictions follows a binomial distribution:

where the event set . If the gate network assigns a sample to any one of these s correct encoders, MoEFormer will predict the sample correctly. The conditional probability that MoEFormer predicts correctly, given , is

where . By the law of total probability, the probability of MoEFormer making a correct prediction is

Rewriting the summation in terms of , we obtain

where the expectation is taken over . We define

Since is convex with respect to t (as its second derivative is positive), by Jensen’s inequality, we have

The expected value of t is

Substituting this into (25) yields

The last expression is strictly increasing with respect to when , and thus,

□

Theorem 1 establishes that the prediction error bound for MoEFormer, , is always better than that of a single encoder model, . Furthermore, the lower bound,

increases monotonically with . Intuitively, as increases (indicating better gate network performance), the lower bound on MoEFormer’s prediction accuracy improves, highlighting the importance of the gate network in leveraging cross-condition knowledge.

6. Experiments and Results

6.1. Settings

6.1.1. Dataset

The C-MAPSS dataset, developed by NASA using the Commercial Modular Aero-Propulsion System Simulation (C-MAPSS), is a widely recognized benchmark for research in Remaining Useful Life (RUL) prediction for turbofan engines. This dataset simulates the degradation process of turbofan engines under various operating conditions and fault modes, making it highly relevant for evaluating the performance of RUL prediction models. The dataset is divided into four subsets, FD001, FD002, FD003, and FD004, each representing different operational scenarios and fault conditions. These subsets include both training and testing data. The training data contain the full life-cycle degradation information of multiple engines, while the test data comprise incomplete engine life cycles that terminate before failure.

The primary goal is to predict the Remaining Useful Life (RUL) from the sensor readings, which consist of 21 sensor measurements and 3 operational parameters, including temperature, pressure, and speed, collected from various engine components. In this study, we focus on subsets FD002 and FD004 because they include multiple working conditions, aligning with our emphasis on RUL prediction under diverse operational scenarios. These subsets offer a richer dataset for analyzing how variations in engine states and conditions influence degradation dynamics. The training data in these subsets provide complete degradation trajectories, while the test data conclude before failure, simulating real-world scenarios where engines operate under dynamic and changing conditions. By leveraging these subsets, we validate the proposed method for multi-condition RUL estimation, ensuring robustness and generalizability to real-world applications. We follow the widely used data preprocessing step as detailed in [27].

6.1.2. Baselines

To demonstrate the effectiveness of the proposed MoEFormer model for multi-condition RUL prediction, we compare its performance against several state-of-the-art models in the field, including the following:

- CNN [6]: A convolutional-neural-network-based regression approach.

- MODBNE [36]: A multi-objective deep belief network ensemble method.

- Dual-Task LSTM [39]: A dual-task LSTM designed for joint learning of degradation assessment and RUL prediction.

- DCNN [24]: A deep convolutional neural network model that focuses on extracting local data features to improve RUL prediction.

- CNN-LSTM [37]: A hybrid weighted deep domain adaptation approach that combines CNN and LSTM architectures.

- Cap-LSTM [8]: A model that integrates capsule neural networks with LSTM modules for enhanced feature representation.

- BiRNN-ED [40]: An improved similarity-based prognostic model utilizing a bidirectional RNN with an encoder–decoder architecture.

- CNN-BiGRU [27]: A feature-fusion-based method that dynamically adjusts the weights of input features for RUL prediction.

- GCU-Transformer [41]: A Transformer-based architecture incorporating gated convolutional units for improved performance.

- HDNN [42]: A hybrid deep neural network that combines LSTM and CNN layers to extract both temporal and spatial features.

These models represent a wide range of advanced techniques, including convolutional networks, recurrent architectures, hybrid frameworks, and Transformer-based methods. Since most papers do not provide detailed code or hyperparameter configurations, we replicated their settings as described and directly extracted their reported results. For our proposed method, MoEformer, we used the following configuration: a window size of 50, (the weight of the auxiliary loss), a learning rate of 0.01, and training for 100 epochs with early stopping to prevent overfitting.

6.1.3. Performance Metrics

The evaluation of RUL prediction accuracy is conducted using two widely adopted metrics: the scoring function and the root mean squared error (RMSE).

The scoring function is specifically designed to account for the asymmetry in the consequences of prediction errors. It penalizes delayed predictions (when the predicted RUL exceeds the actual RUL) more heavily than advanced predictions (when the predicted RUL is less than the actual RUL). This asymmetry reflects real-world considerations, where delayed predictions may lead to catastrophic failures, whereas advanced predictions typically result in only minor disruptions due to premature maintenance. The scoring function is defined as follows:

where N is the total number of samples, r is the actual RUL, is the predicted RUL, and , are the penalty coefficients [27]. By applying heavier penalties to delayed predictions, this metric ensures that models are incentivized to prioritize safety-critical predictions.

RMSE is a more traditional evaluation metric that measures the overall magnitude of prediction errors without differentiating between advanced and delayed predictions. RMSE is defined as

RMSE provides an aggregate measure of prediction accuracy, with lower values indicating better performance. While it effectively quantifies the overall error magnitude, RMSE does not capture the asymmetric nature of prediction errors. As a result, it is less informative in scenarios where the timing of maintenance actions is critical.

6.2. Results

Table 1 summarizes the predictive performance of 11 methods across the four sub-datasets of the C-MAPSS dataset. For the single-condition datasets, FD001 and FD003, all methods achieved relatively strong performance, with minor differences in predictive accuracy. Although MoEFormer did not outperform all methods on these datasets, its performance was comparable to the best-performing approaches. In contrast, the multi-condition datasets, FD002 and FD004, presented greater challenges, as evidenced by the more pronounced performance gaps among the methods. On these more complex datasets, the proposed MoEFormer consistently delivered lower prediction errors than competing approaches. Notably, in terms of the Score metric, MoEFormer demonstrated a significant advantage over the second-best method, achieving reductions of 38.2% and 35% on FD002 and FD004, respectively. These results highlight MoEFormer’s superior capability in addressing the complexities of multi-condition RUL prediction tasks.

Table 1.

Performance comparison on C-MAPSS sub-datasets. The best performance is reported using bold, and the second best is reported using an underline. “-” means the results are not released. Metrics with a downward arrow ↓ indicate smaller values and better performance.

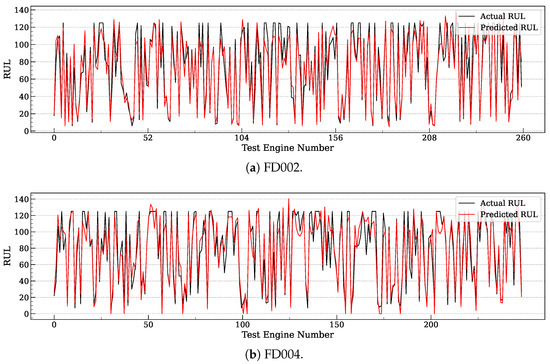

Figure 2 plots the real and predicted RUL for FD002 and FD004 of individual test samples (i.e., test engines). The x-axis corresponds to the index of test samples. Following the visualization method in [8], we connect the points into a curve to better illustrate the differences between the real and predicted RUL values. The results indicate that MoEFormer achieves high accuracy in most cases across these multi-condition datasets. However, for instances where the actual RUL is significantly large, MoEFormer tends to slightly underestimate the RUL. While this introduces a minor prediction bias, such early-warning errors are generally more acceptable than delayed predictions, as they allow for proactive maintenance scheduling. This aligns with the advantages reflected in the Score metric (Table 1), where MoEFormer demonstrates its robust performance.

Figure 2.

Comparison of MoEFormer’s predicted RUL values against the ground truth for FD002 and FD004.

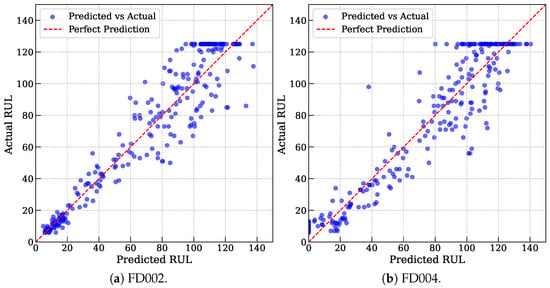

Figure 3 further illustrates the sample-wise distribution of MoEFormer’s predicted RUL values against the actual RUL values for FD002 and FD004. Most data points cluster near the anti-diagonal, indicating a strong agreement between predictions and ground truth. Notably, predictions for shorter actual RUL values (points near the bottom-left corner) align closely with the ideal diagonal, whereas predictions for longer RUL values (points near the top-right corner) exhibit slightly higher variance. This observation highlights the inherent difficulty of predicting longer RUL values. Interestingly, a horizontal line appears at actual RUL = 125, reflecting a practical constraint in the dataset where engines are retired once their cycles reach 125, irrespective of their true condition. This operational cutoff causes predictions in this region to slightly overestimate the actual values. Despite this artificial intervention, the prediction errors remain well within acceptable bounds, further demonstrating MoEFormer’s reliability and effectiveness in tackling multi-condition RUL prediction tasks.

Figure 3.

The sample-wise distribution of MoEFormer’s predicted RUL values against the actual RUL values for FD002 and FD004.

6.3. Ablation Study

This subsection presents an ablation study on the two key components of MoEFormer: distribution alignment (DA) and Mixture of Encoders (MoE). The study is conducted on the FD002 and FD004 datasets to evaluate the individual and combined contributions of these components. Specifically, three ablated versions of the model are analyzed: “w/o DA”, “w/o MoE”, and “w/o DA&MoE”, where DA, MoE, or both components are progressively removed. Table 2 compares the performance of these ablated models with the full MoEFormer.

Table 2.

Ablation study on two key designs of MoEFormer: distribution alignment (DA) and mixture of encoders (MoE). The best performance is reported using bold, and the second best is reported using an underline. Metrics with a downward arrow ↓ indicate smaller values and better performance.

The results reveal that removing both DA and MoE (“w/o DA&MoE”) leads to a significant deterioration in performance, characterized by large prediction errors and high Score values. As DA and MoE are gradually reintroduced, the prediction errors steadily decrease, demonstrating the critical importance of both components. Interestingly, DA and MoE exhibit distinct yet complementary roles across the two datasets: DA has a greater impact on FD002, while MoE contributes more significantly to the performance on FD004. These findings underscore the necessity of combining both components to achieve robust and accurate multi-condition RUL predictions. The complete MoEFormer model consistently delivers the best performance, validating the design of its key components.

6.4. Study on Hyperparameters

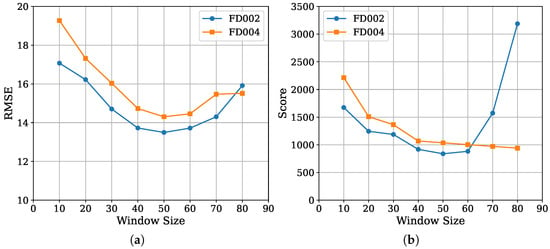

This section evaluates the effect of the two key hyperparameters, the window length L and the auxiliary loss weight , on MoEFormer’s prediction performance. Figure 4a illustrates the variation in RMSE as a function of L on the FD002 and FD004 datasets. A similar trend is observed for both datasets: RMSE decreases initially, stabilizes within the range of to 60, and then increases when L becomes excessively large. The best performance is achieved at .

Figure 4.

(a) RMSE and (b) Score of MoEFormer as a function of window size.

In contrast, as shown in Figure 4b, the Score metric follows a similar overall trend but reveals some dataset-specific differences. When , the Score on FD002 increases sharply, while it remains relatively stable on FD004. This discrepancy arises from the Score metric’s sensitivity to late-prediction errors. Specifically, as L increases, MoEFormer tends to overpredict RUL values on FD002 but slightly underpredicts them on FD004. This behavior likely reflects differences in the underlying data distributions of the two datasets. Overall, these results indicate that while MoEFormer’s performance is influenced by the choice of window length L, the model remains robust within a reasonable range. Optimal values for L can be easily determined through simple validation experiments, with being a reliable choice for both datasets.

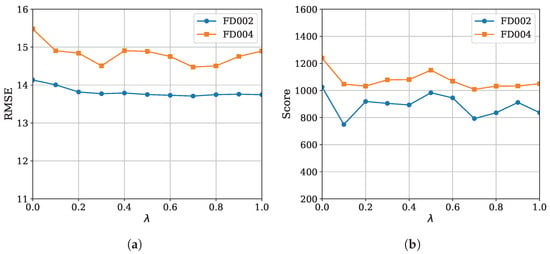

The performance of MoEFormer as a function of is shown in Figure 5. The results show that outperforms , demonstrating the auxiliary loss’s effectiveness. However, the performance does not change monotonically with , displaying irregular fluctuations depending on the metric. For instance, achieved the best RMSE on FD004, whereas provided the optimal Score on FD002, reflecting the metrics’ differing emphases: MSE penalizes overall error, while Score penalizes delayed predictions. Overall, provided the best trade-off across datasets and metrics. As a rough suggestion, it is recommended to set to a value between 0.2∼1.0, but the best choice might need adjustment for different scenarios through cross validation.

Figure 5.

(a) RMSE and (b) Score of MoEFormer as a function of (refer to (17)).

7. Conclusions

In this paper, we proposed MoEFormer, a novel framework for accurate multi-condition Remaining Useful Life (RUL) prediction. By combining a Mixture of Encoders (MoE) with a Transformer-based architecture, MoEFormer addresses the critical challenge of leveraging cross-condition knowledge while maintaining condition-specific feature extraction. The dynamic gated mixture module enables the model to seamlessly integrate shared and condition-specific knowledge, while the Transformer component effectively captures temporal dependencies. The proposed approach is further supported by a theoretical lower bound on the error rate, offering a formal performance guarantee. Extensive experiments conducted on the C-MAPSS dataset validate the effectiveness of MoEFormer, showing significant improvements in predictive accuracy and robustness over state-of-the-art methods, including a 38.2% and 35% reduction in the Score metric on the FD002 and FD004 datasets, respectively. This work not only advances the state of the art in RUL prediction but also highlights the importance of dynamic and adaptive architectures for handling diverse operational conditions. The integration of condition-specific expertise and cross-condition learning represents a meaningful step forward in the domains of prognostics and health management.

Despite the promising results, there are several directions for future research. First, while MoEFormer effectively models multi-condition scenarios, further exploration is needed to adapt the framework for scenarios with limited labeled data through techniques such as transfer learning or semi-supervised learning. Second, incorporating uncertainty quantification into the predictions could improve the reliability of the model for real-world applications, particularly in safety-critical industries. Third, expanding the application of MoEFormer to other datasets and domains, such as renewable energy systems or autonomous vehicles, could validate its versatility and generalization capabilities. Lastly, optimizing the computational efficiency of the model, especially for real-time deployments, remains an important area for future improvement.

Author Contributions

Conceptualization, Y.L.; methodology, Y.L. and Y.-a.G.; software, B.X. and Y.-a.G.; validation, B.X.; formal analysis, Y.-a.G.; investigation, B.X.; resources, Y.L.; data curation, B.X.; writing—original draft preparation, Y.-a.G.; writing—review and editing, Y.L.; visualization, B.X.; supervision, Y.L.; project administration, Y.L.; funding acquisition, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China grant number 62306028.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available in NASA’s Open Data Portal at https://data.nasa.gov/Aerospace/CMAPSS-Jet-Engine-Simulated-Data/ff5v-kuh6/about_data, accessed on 13 January 2025.

Acknowledgments

The authors would like to express their sincere gratitude to the editor and the anonymous reviewers for their valuable and constructive comments and suggestions, which have significantly enhanced the quality of this manuscript. The authors also extend their thanks to NASA for their dedicated efforts in developing and providing the C-MAPSS dataset, which was instrumental in the research presented herein.

Conflicts of Interest

Author Yang Liu was employed by the company China Energy Railway Equipment Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| MoE | Mixture of Encoders |

| RUL | Remaining Useful Life |

| CNN | Convolutional neural network |

| RNN | Recurrent neural network |

| LSTM | Long short-term memory |

References

- Si, X.S.; Wang, W.; Hu, C.H.; Zhou, D.H. Remaining useful life estimation—A review on the statistical data driven approaches. Eur. J. Oper. Res. 2011, 213, 1–14. [Google Scholar] [CrossRef]

- Lei, Y.; Li, N.; Guo, L.; Li, N.; Yan, T.; Lin, J. Machinery health prognostics: A systematic review from data acquisition to RUL prediction. Mech. Syst. Signal Process. 2018, 104, 799–834. [Google Scholar] [CrossRef]

- Orchard, M.E.; Vachtsevanos, G.J. A particle-filtering approach for on-line fault diagnosis and failure prognosis. Trans. Inst. Meas. Control 2009, 31, 221–246. [Google Scholar] [CrossRef]

- Wang, T.; Yu, J.; Siegel, D.; Lee, J. A similarity-based prognostics approach for remaining useful life estimation of engineered systems. In Proceedings of the International Conference on Prognostics and Health Management, Denver, CO, USA, 6–9 October 2008; pp. 1–6. [Google Scholar]

- Wang, H.; Zhang, X.; Ren, M.; Xu, T.; Lu, C.; Zhao, Z. Remaining useful life prediction of rolling bearings based on multi-scale permutation entropy and ISSA-LSTM. Entropy 2023, 25, 1477. [Google Scholar] [CrossRef] [PubMed]

- Sateesh Babu, G.; Zhao, P.; Li, X.L. Deep convolutional neural network based regression approach for estimation of remaining useful life. In Proceedings of the International Conference on Database Systems for Advanced Applicationsa, Dallas, TX, USA, 16–19 April 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 214–228. [Google Scholar]

- Chen, Z.; Wu, M.; Zhao, R.; Guretno, F.; Yan, R.; Li, X. Machine remaining useful life prediction via an attention-based deep learning approach. IEEE Trans. Ind. Electron. 2020, 68, 2521–2531. [Google Scholar] [CrossRef]

- Zhao, C.; Huang, X.; Li, Y.; Li, S. A novel cap-LSTM model for remaining useful life prediction. IEEE Sens. J. 2021, 21, 23498–23509. [Google Scholar] [CrossRef]

- Chang, L.; Lin, Y.h.; Zio, E. Remaining useful life prediction for complex systems considering varying future operational conditions. Qual. Reliab. Eng. Int. 2022, 38, 516–531. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Cho, K.; van Merrienboer, B.; Gulçehre, Ç.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder—Decoder for statistical machine translation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1724–1734. [Google Scholar]

- Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar]

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Proceedings of the International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020. [Google Scholar]

- Malhi, A.; Yan, R.; Gao, R.X. Prognosis of defect propagation based on recurrent neural networks. IEEE Trans. Instrum. Meas. 2011, 60, 703–711. [Google Scholar] [CrossRef]

- Yuan, M.; Wu, Y.; Lin, L. Fault diagnosis and remaining useful life estimation of aero engine using LSTM neural network. In Proceedings of the IEEE International Conference on Aircraft Utility Systems, Beijing, China, 10–12 October 2016; pp. 135–140. [Google Scholar]

- Wu, Y.; Yuan, M.; Dong, S.; Lin, L.; Liu, Y. Remaining useful life estimation of engineered systems using vanilla LSTM neural networks. Neurocomputing 2018, 275, 167–179. [Google Scholar] [CrossRef]

- Zheng, S.; Ristovski, K.; Farahat, A.; Gupta, C. Long short-term memory network for remaining useful life estimation. In Proceedings of the IEEE International Conference on Prognostics and Health Management, Seattle, WA, USA, 11–13 June 2017; pp. 88–95. [Google Scholar]

- Ellefsen, A.L.; Bjørlykhaug, E.; Æsøy, V.; Ushakov, S.; Zhang, H. Remaining useful life predictions for turbofan engine degradation using semi-supervised deep architecture. Reliab. Eng. Syst. Saf. 2019, 183, 240–251. [Google Scholar] [CrossRef]

- Peng, C.; Wu, J.; Wang, Q.; Gui, W.; Tang, Z. Remaining useful life prediction using dual-channel LSTM with time feature and its difference. Entropy 2022, 24, 1818. [Google Scholar] [CrossRef] [PubMed]

- Deng, Y.; Shichang, D.; Shiyao, J.; Chen, Z.; Zhiyuan, X. Prognostic study of ball screws by ensemble data-driven particle filters. J. Manuf. Syst. 2020, 56, 359–372. [Google Scholar] [CrossRef]

- Li, X.; Ding, Q.; Sun, J.Q. Remaining useful life estimation in prognostics using deep convolution neural networks. Reliab. Eng. Syst. Saf. 2018, 172, 1–11. [Google Scholar] [CrossRef]

- Xu, X.; Wu, Q.; Li, X.; Huang, B. Dilated convolution neural network for remaining useful life prediction. J. Comput. Inf. Sci. Eng. 2020, 20, 021004. [Google Scholar] [CrossRef]

- Liu, K.; Shang, Y.; Ouyang, Q.; Widanage, W.D. A data-driven approach with uncertainty quantification for predicting future capacities and remaining useful life of lithium-ion battery. IEEE Trans. Ind. Electron. 2020, 68, 3170–3180. [Google Scholar] [CrossRef]

- Zhu, Q.; Xiong, Q.; Yang, Z.; Yu, Y. A novel feature-fusion-based end-to-end approach for remaining useful life prediction. J. Intell. Manuf. 2023, 34, 3495–3505. [Google Scholar] [CrossRef]

- Xu, Q.; Wang, Z.; Jiang, C.; Jing, Z. Data-driven predictive maintenance framework considering the multi-source information fusion and uncertainty in remaining useful life prediction. Knowl. Based Syst. 2024, 303, 112408. [Google Scholar] [CrossRef]

- Deng, Y.; Du, S.; Wang, D.; Shao, Y.; Huang, D. A calibration-based hybrid transfer learning framework for RUL prediction of rolling bearing across different machines. IEEE Trans. Instrum. Meas. 2023, 72, 3511015. [Google Scholar] [CrossRef]

- Jia, S.; Deng, Y.; Lv, J.; Du, S.; Xie, Z. Joint distribution adaptation with diverse feature aggregation: A new transfer learning framework for bearing diagnosis across different machines. Measurement 2022, 187, 110332. [Google Scholar] [CrossRef]

- Deng, Y.; Lv, J.; Huang, D.; Du, S. Combining the theoretical bound and deep adversarial network for machinery open-set diagnosis transfer. Neurocomputing 2023, 548, 126391. [Google Scholar] [CrossRef]

- Sun, C.; Ma, M.; Zhao, Z.; Tian, S.; Yan, R.; Chen, X. Deep transfer learning based on sparse autoencoder for remaining useful life prediction of tool in manufacturing. IEEE Trans. Ind. Inform. 2018, 15, 2416–2425. [Google Scholar] [CrossRef]

- Cen, Z.; Hu, S.; Hou, Y.; Chen, Z.; Ke, Y. Remaining useful life prediction of machinery based on improved Sample Convolution and Interaction Network. Eng. Appl. Artif. Intell. 2024, 135, 108813. [Google Scholar] [CrossRef]

- Song, L.; Wu, J.; Wang, L.; Chen, G.; Shi, Y.; Liu, Z. Remaining useful life prediction of rolling bearings based on multi-scale attention residual network. Entropy 2023, 25, 798. [Google Scholar] [CrossRef] [PubMed]

- Deng, Y.; Huang, D.; Du, S.; Li, G.; Zhao, C.; Lv, J. A double-layer attention based adversarial network for partial transfer learning in machinery fault diagnosis. Comput. Ind. 2021, 127, 103399. [Google Scholar] [CrossRef]

- Zhang, C.; Lim, P.; Qin, A.K.; Tan, K.C. Multiobjective deep belief networks ensemble for remaining useful life estimation in prognostics. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2306–2318. [Google Scholar] [CrossRef] [PubMed]

- Wu, Z.; Yu, S.; Zhu, X.; Ji, Y.; Pecht, M. A weighted deep domain adaptation method for industrial fault prognostics according to prior distribution of complex working conditions. IEEE Access 2019, 7, 139802–139814. [Google Scholar] [CrossRef]

- Khelif, R.; Chebel-Morello, B.; Malinowski, S.; Laajili, E.; Fnaiech, F.; Zerhouni, N. Direct remaining useful life estimation based on support vector regression. IEEE Trans. Ind. Electron. 2016, 64, 2276–2285. [Google Scholar] [CrossRef]

- Miao, H.; Li, B.; Sun, C.; Liu, J. Joint learning of degradation assessment and RUL prediction for aeroengines via dual-task deep LSTM networks. IEEE Trans. Ind. Inform. 2019, 15, 5023–5032. [Google Scholar] [CrossRef]

- Yu, W.; Kim, I.Y.; Mechefske, C. An improved similarity-based prognostic algorithm for RUL estimation using an RNN autoencoder scheme. Reliab. Eng. Syst. Saf. 2020, 199, 106926. [Google Scholar] [CrossRef]

- Mo, Y.; Wu, Q.; Li, X.; Huang, B. Remaining useful life estimation via transformer encoder enhanced by a gated convolutional unit. J. Intell. Manuf. 2021, 32, 1997–2006. [Google Scholar] [CrossRef]

- Al-Dulaimi, A.; Zabihi, S.; Asif, A.; Mohammadi, A. A multimodal and hybrid deep neural network model for remaining useful life estimation. Comput. Ind. 2019, 108, 186–196. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).