Abstract

Controlling the time evolution of a probability distribution that describes the dynamics of a given complex system is a challenging problem. Achieving success in this endeavour will benefit multiple practical scenarios, e.g., controlling mesoscopic systems. Here, we propose a control approach blending the model predictive control technique with insights from information geometry theory. Focusing on linear Langevin systems, we use model predictive control online optimisation capabilities to determine the system inputs that minimise deviations from the geodesic of the information length over time, ensuring dynamics with minimum “geometric information variability”. We validate our methodology through numerical experimentation on the Ornstein–Uhlenbeck process and Kramers equation, demonstrating its feasibility. Furthermore, in the context of the Ornstein–Uhlenbeck process, we analyse the impact on the entropy production and entropy rate, providing a physical understanding of the effects of minimum information variability control.

1. Introduction

Time-varying probability density functions (PDFs) are a preferred approach for characterising the dynamics of various complex systems. PDFs commonly feature in emergent fields, such as active inference [1] or stochastic thermodynamics [2], where their value is derived either through data analysis or by solving the Fokker–Planck (FP) equation associated with an Itô/Stratonovich stochastic differential equation.

Drawing upon control theory [3], when the dynamics of the system under consideration are governed by an FP equation, we can explore control objectives such as the regulation (setting to a constant value) or tracking (following a time-varying reference) of the systems’ time-varying PDFs [4]. While the notion of controlling PDFs may initially appear impractical using conventional control engineering methods, advancements in technologies like optical tweezers have rendered it feasible, which is particularly evident in applications such as colloidal systems [5,6,7,8], with specific implications in biomolecule evolution control [9].

Regarding the control of FP equations, seminal works [10,11] present methodologies to control the system PDF governed by FP equations [12]. Building upon this foundation, ref. [13] discusses a bilinear optimal control problem where the control function depends on time and space. In [14], the authors prove the existence of optimal controls by considering first-order necessary conditions in the optimisation problem.

In applications like Brownian motion, we can control FP equations via reverse-engineering approaches such as the engineered swing equilibration (ESE) method [15,16]. The ESE protocol imposes a solution to the FP equation to obtain the corresponding PDF’s control parameters that provide a shortcut for time-consuming relaxations (for further details, refer to the methods section of [17]). Ref. [8] offers a comprehensive review of similar reverse-engineered approaches.

Since FP equations frequently serve as mathematical descriptions of mesoscopic systems (for further details, see [12]), i.e., systems at the nano/microscale such as molecular motors, the time evolution of the system’s PDF may not only need to adhere to time constraints but also to multiple “thermodynamic” constraints to be deemed “efficient”. For instance, the system may require the minimisation of the entropy production [18,19], a reduction in information variability [20], or an enhancement of self-organisation [21]. The incorporation of these “thermodynamic constraints” into the optimisation process implies an extension of the current literature results on the control of FP equations.

A theory that could offer insights into addressing these optimisation challenges stems from information geometry, a field resulting from the fusion of information theory and differential geometry [22]. As an evolving field, information geometry proposes novel solutions to tasks, such as maximum likelihood estimation [23], state prediction [24,25], the quantification of causality [26,27,28], or maximum work extraction [4,29]. In stochastic thermodynamics [2,30], information geometry aids in obtaining time-varying descriptions of the aforementioned constraints. For instance, based on the well-known Cauchy–Schwarz inequality [31], ref. [32] presents an inequality between the Fisher divergence [33] and the information length (IL) [25,34] to quantify the disorder in irreversible decay processes. Ref. [35] introduces an inequality describing the information rate as a speed limit on the evolution of any observable. In [4], the geodesic of the IL describes the path with the least statistical variation connecting initial and final probability distributions of the system dynamics (for further details, see [20]). Consequently, information geometry can be employed in a control protocol to enforce geodesic dynamics on the system’s PDF time evolution, achieving the minimum “geometric information variability” [21] and thereby establishing an optimal speed limit for the system’s observables. The primary challenge addressed in this study revolves around devising and applying a technique to achieve this minimum geometric information variability.

Developing an optimal protocol for the time evolution of the system’s PDFs under multiple constraints requires an exploration of existing control algorithms. The literature presents a significant amount of control procedures, spanning from classical PID control [36] to more sophisticated algorithms, like data-driven, model-free, or fractional-order controls (for instance, see [37,38,39]). However, we require an algorithm capable of handling intricate optimisation problems while remaining a feasible option for implementation in future experimental setups. One of the most popular optimisation-based control techniques is the model predictive control (MPC) scheme [40]. Generally, MPC is an online optimisation algorithm designed for constrained control problems, whose advantages have been observed in applications within robotics [41], solar energy [42], or bioengineering [43]. Furthermore, MPC can be easily implemented thanks to packages such as CasADi [44] or the Hybrid Toolbox [45].

Based on the presented discussion, this work offers a solution to an optimisation problem that integrates the concepts of the information length and the quadratic regulator (QR) [46] to guide the system’s PDF time evolution along the path with the minimum geometric information variability (the geodesic of the information length) using MPC. Although we could implement the IL, QR, or MPC in more complex scenarios, we only study their application to stochastic processes described by linear stochastic differential equations. Hence, the system’s PDF will consistently maintain a Gaussian distribution over time, assuming that the system’s initial conditions follow a Gaussian distribution. Constraining the analysis to linear stochastic dynamics allows us to use a set of deterministic differential equations to describe the evolution of the mean and covariance of the Gaussian distribution within the MPC method. The applicability of such a prediction model favours MPC over other alternatives, such as reinforcement learning [47], which could have been explored to determine the geodesic of the information length within a similar context. Additionally, MPC provides a low offline complexity, mature stability–feasibility–robustness theory, and good constraint handling [47].

The algorithm is applied to the Ornstein–Uhlenbeck process [48] and the Kramers equation [25], which both describe a particle in contact with a heat reservoir (mesoscopic stochastic dynamics). In practice, changes in temperature and optical tweezers can manipulate the dynamics of both the noise amplitude and mean value in such systems, respectively [2,7,49]. Using previous findings from [50], the effects of the MPC method on the Ornstein–Uhlenbeck process are analysed by comparing IL values with the entropy production and entropy rate in the closed-loop (feedback-controlled) system. As a rigorous closed-loop stability analysis is beyond the scope of this work, we provide only a brief description of the BIBO stability conditions that are considered to constrain the control actions proposed by the MPC. Finally, we give a set of concluding remarks and discuss future work.

To help readers, we summarise our notation in the following. $\mathbb{R}$ is the set of real numbers. $\mathbf{x} \in \mathbb{R}^{n}$ represents a column vector of real numbers of dimension $n$, $\mathbf{A} \in \mathbb{R}^{n \times m}$ represents a real matrix of dimension $n \times m$ (bold-face letters are used to represent vectors and matrices), $\mathrm{Tr}(\mathbf{A})$ corresponds to the trace of the matrix $\mathbf{A}$, and $A_{ij}$ is the element at row $i$ and column $j$ of the matrix $\mathbf{A}$. $\det(\mathbf{A})$, $\mathrm{vec}(\mathbf{A})$, $\mathbf{A}^{\top}$, and $\mathbf{A}^{-1}$ are the determinant, vectorisation, transpose, and inverse of matrix $\mathbf{A}$, respectively. $\mathbf{I}_n$ denotes the identity matrix of order $n$. Newton’s notation is used for the derivative with respect to the variable $t$ (i.e., $\dot{\mathbf{x}} = \partial_t \mathbf{x}$). In addition, the average of a random vector $\mathbf{x}$ is denoted by $\langle \mathbf{x} \rangle$, with the angular brackets representing the average. Finally, in Table 1, we provide a brief description of the variables used throughout this paper.

Table 1.

Description of the different variables used throughout this work.

2. Preliminaries

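As mentioned in Section 1, this work considers systems whose dynamics are governed by linear non-autonomous stochastic differential equations, written as the following set of Langevin equations:

$$\dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t) + \mathbf{B}\mathbf{u}(t) + \boldsymbol{\xi}(t), \tag{1}$$

where $\mathbf{A}$ and $\mathbf{B}$ are $n \times n$ and $n \times m$ real time-invariant matrices, respectively; $\mathbf{u} \in \mathbb{R}^{m}$ is a (bounded smooth) external input, and $\boldsymbol{\xi} \in \mathbb{R}^{n}$ is a Gaussian stochastic noise given by an $n$-dimensional vector of $\delta$-correlated Gaussian noises $\xi_i$ ($i = 1, 2, \ldots, n$), with the following statistical property:

$$\langle \xi_i(t) \rangle = 0, \qquad \langle \xi_i(t)\,\xi_j(t') \rangle = 2 D_{ij}\,\delta(t - t'). \tag{2}$$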

Readers seeking a comprehensive study of systems governed by Equation (1) can refer to [51]. Additionally, for a concise introduction to linear time-varying and time-invariant systems, ref. [52] provides valuable insights.

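The Fokker–Planck equation of (1) can also be utilised to depict the system’s PDF dynamics, characterised by both time $t$ and the spatial variable vector $\mathbf{x}$. This is given as follows [53,54]:

$$\frac{\partial p(\mathbf{x};t)}{\partial t} = -\sum_{i=1}^{n} \frac{\partial}{\partial x_i}\Big[ (\mathbf{A}\mathbf{x} + \mathbf{B}\mathbf{u})_i \, p(\mathbf{x};t) \Big] + \sum_{i,j=1}^{n} D_{ij} \frac{\partial^2 p(\mathbf{x};t)}{\partial x_i \, \partial x_j}. \tag{3}$$

If the initial PDF is Gaussian, a solution to (3) for $p(\mathbf{x};t)$ at any time $t$ is given by [53]

$$p(\mathbf{x};t) = \frac{1}{\sqrt{\det(2\pi \boldsymbol{\Sigma}(t))}} \exp\!\Big[ -\tfrac{1}{2} \big(\mathbf{x} - \boldsymbol{\mu}(t)\big)^{\top} \boldsymbol{\Sigma}^{-1}(t) \big(\mathbf{x} - \boldsymbol{\mu}(t)\big) \Big], \tag{4}$$

where $\boldsymbol{\mu} = \langle \mathbf{x} \rangle$ is the mean and $\boldsymbol{\Sigma} = \langle (\mathbf{x} - \boldsymbol{\mu})(\mathbf{x} - \boldsymbol{\mu})^{\top} \rangle$ is the covariance. Equation (4) describes the dynamics of the probability distribution of the random variable $\mathbf{x}$ governed by (1). The value of the mean and covariance in a linear stochastic process can be computed from the solution of the following set of differential equations [51]:

$$\dot{\boldsymbol{\mu}} = \mathbf{A}\boldsymbol{\mu} + \mathbf{B}\mathbf{u}, \tag{5a}$$

$$\dot{\boldsymbol{\Sigma}} = \mathbf{A}\boldsymbol{\Sigma} + \boldsymbol{\Sigma}\mathbf{A}^{\top} + 2\mathbf{D}, \tag{5b}$$

where $\mathbf{D}$ is the matrix with elements $D_{ij}$. In this work, Equations (5a) and (5b) will be used to predict the behaviour of the probability distribution of the random variable $\mathbf{x}$ in our control method.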
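As a minimal sketch of how the moment equations can serve as a prediction model, the snippet below integrates the scalar special case of the mean and covariance equations with a classical fourth-order Runge–Kutta step. The scalar choice $A = -\gamma$, $B = 1$, the noise convention $\langle \xi(t)\xi(t') \rangle = 2D\delta(t-t')$, and all function names and parameter values are assumptions made for illustration only.

```python
def ou_moment_rhs(mu, sigma, u, gamma, D):
    """Right-hand sides of the scalar mean and variance equations."""
    dmu = -gamma * mu + u                     # scalar mean equation
    dsigma = -2.0 * gamma * sigma + 2.0 * D   # scalar variance equation
    return dmu, dsigma


def rk4_step(mu, sigma, u, gamma, D, dt):
    """One classical RK4 step with u and D held constant over the step."""
    k1m, k1s = ou_moment_rhs(mu, sigma, u, gamma, D)
    k2m, k2s = ou_moment_rhs(mu + 0.5 * dt * k1m, sigma + 0.5 * dt * k1s, u, gamma, D)
    k3m, k3s = ou_moment_rhs(mu + 0.5 * dt * k2m, sigma + 0.5 * dt * k2s, u, gamma, D)
    k4m, k4s = ou_moment_rhs(mu + dt * k3m, sigma + dt * k3s, u, gamma, D)
    mu_next = mu + dt * (k1m + 2.0 * k2m + 2.0 * k3m + k4m) / 6.0
    sigma_next = sigma + dt * (k1s + 2.0 * k2s + 2.0 * k3s + k4s) / 6.0
    return mu_next, sigma_next


def propagate(mu0, sigma0, u, gamma, D, dt, steps):
    """Propagate the Gaussian moments forward for a fixed number of steps."""
    mu, sigma = mu0, sigma0
    for _ in range(steps):
        mu, sigma = rk4_step(mu, sigma, u, gamma, D, dt)
    return mu, sigma


# Hypothetical values: relaxation without forcing towards the stationary state.
mu_T, sigma_T = propagate(mu0=1.0, sigma0=0.2, u=0.0, gamma=1.0, D=0.5, dt=0.01, steps=2000)
print(mu_T, sigma_T)
```

With no forcing, the mean decays to zero and the variance relaxes to $D/\gamma$, which gives a quick sanity check on the signs and on the factor 2 in the noise term.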

2.1. Information Length (IL)

To obtain a minimum geometric information variability in the time evolution of the PDF of Equation (1), we need to investigate the concept of IL in more detail. In mathematical terms, given the joint PDF $p(\mathbf{x};t)$ of the $n$-th order random variable $\mathbf{x}$, we define its IL $\mathcal{L}$ as follows:

$$\mathcal{L}(t) = \int_0^t \Gamma(\tau)\, d\tau, \qquad \Gamma^2(\tau) = \int p(\mathbf{x};\tau) \big[ \partial_\tau \ln p(\mathbf{x};\tau) \big]^2 d\mathbf{x}. \tag{6}$$

The value $\Gamma^2$ in (6) corresponds to the Fisher information when time is treated as the parameter, and $\Gamma$ is commonly called the information rate [21]. The dimension of $\Gamma^{-1}$ is time, which means that it serves as a dynamical time unit for information change. Hence, as shown in Figure 1, the integration of $\Gamma$ between time 0 and $t$ gives the total information change in that time interval; i.e., $\mathcal{L}(t)$ quantifies the number of statistically different states that the system passes through in time from an initial $p(\mathbf{x};0)$ to a final $p(\mathbf{x};t)$ [55]. Furthermore, the IL is a model-free tool that proved to be a true metric between two PDFs in the statistical space [26]. In [33], $\Gamma$ was shown to provide a universal bound on the timescale of transient dynamical fluctuations, independent of the physical constraints on the stochastic dynamics or their function.
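To make the definition concrete, the following sketch evaluates the information rate and the IL for a univariate Gaussian. It relies on the standard closed form $\Gamma^2 = \dot{\mu}^2/\Sigma + \dot{\Sigma}^2/(2\Sigma^2)$ for a Gaussian with mean $\mu(t)$ and variance $\Sigma(t)$; the function names, the trapezoidal quadrature, and the numerical values are illustrative assumptions.

```python
import math


def info_rate_sq(mu_dot, sigma, sigma_dot):
    """Gamma^2 for a univariate Gaussian with mean mu(t) and variance sigma(t)."""
    return mu_dot ** 2 / sigma + sigma_dot ** 2 / (2.0 * sigma ** 2)


def information_length(gammas, dt):
    """Trapezoidal approximation of the time integral of Gamma on a uniform grid."""
    return sum(0.5 * (g0 + g1) * dt for g0, g1 in zip(gammas, gammas[1:]))


# Hypothetical path: the mean moves from 0 to 2 at constant speed over one
# time unit while the variance stays fixed at 4 (standard deviation 2).
n = 100
dt = 1.0 / n
gammas = [math.sqrt(info_rate_sq(mu_dot=2.0, sigma=4.0, sigma_dot=0.0)) for _ in range(n + 1)]
L = information_length(gammas, dt)
print(L)
```

In this example the mean is displaced by exactly one standard deviation, so the path crosses one statistically distinguishable state and the IL evaluates to 1.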

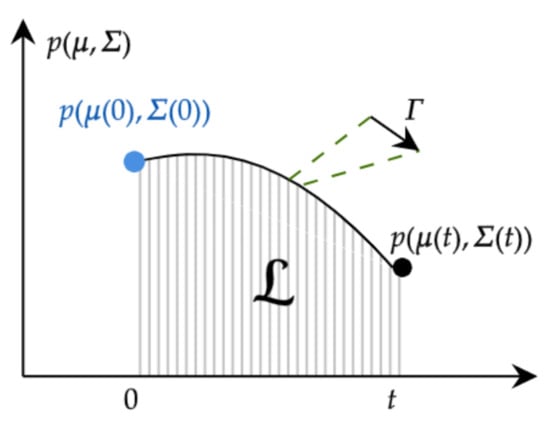

Figure 1.

Graphical description of the value of $\mathcal{L}$. From information geometry, $\mathcal{L}$ quantifies the number of statistically different states that the system passes through in time from an initial $p(\mathbf{x};0)$ to a final $p(\mathbf{x};t)$.

2.2. Information–Thermodynamic Relation

However, while we recognise the utility of the IL as a tool for analysing the dynamics of time-varying PDFs, its physical significance remains unclear. Therefore, establishing a connection to a physical quantity is crucial.

In this context, consider the value of the entropy rate $\dot{S}$ defined as follows [56]:

$$\dot{S} = \Pi - \Phi, \tag{8}$$

where $\Pi$ is the entropy production due to irreversible processes occurring inside the system and $\Phi$ is the entropy flux from the system to the environment. Figure 2 shows a system subject to some boundary conditions to avoid matter exchange (i.e., a closed system). The system produces entropy and exchanges entropy with the environment (hence, it shares energy). Specifically, $\Phi > 0$ ($\Phi < 0$) when the entropy flows from the system (environment) to the environment (system). A system with a minimum entropy production produces the highest amount of free energy (useful work) [21,57]. We refer the reader to [58,59] for a complete review of thermodynamics. In a first-order linear stochastic differential equation, the value of the information rate $\Gamma$ can be related to $\Pi$ and $\dot{S}$ as follows (for further details, see [50]):

$$\Gamma^2 = \frac{D}{\Sigma}\, \Pi + \dot{S}^2, \tag{9}$$

where $D$ and $\Sigma$ are the noise amplitude and the variance of the first-order Langevin equation.
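The balance between the information rate, the entropy production, and the entropy rate can be checked numerically for a first-order Langevin equation. The sketch below assumes that the relation takes the form $\Gamma^2 = (D/\Sigma)\,\Pi + \dot{S}^2$, together with the Gaussian closed forms $\dot{S} = \dot{\Sigma}/(2\Sigma)$ and $\Pi = \dot{\mu}^2/D + \dot{\Sigma}^2/(4D\Sigma)$ under the noise convention $\langle \xi(t)\xi(t') \rangle = 2D\delta(t-t')$. The function names and the state values are hypothetical.

```python
def ou_rates(mu, sigma, u, gamma, D):
    """Time derivatives of the mean and variance of a first-order Langevin equation."""
    return -gamma * mu + u, -2.0 * gamma * sigma + 2.0 * D


def entropy_rate(sigma, sigma_dot):
    """Rate of change of the Gaussian differential entropy 0.5*ln(2*pi*e*sigma)."""
    return sigma_dot / (2.0 * sigma)


def entropy_production(mu_dot, sigma, sigma_dot, D):
    """Entropy production of the Gaussian state (assumed closed form)."""
    return mu_dot ** 2 / D + sigma_dot ** 2 / (4.0 * D * sigma)


def info_rate_sq(mu_dot, sigma, sigma_dot):
    """Gamma^2 for a univariate Gaussian."""
    return mu_dot ** 2 / sigma + sigma_dot ** 2 / (2.0 * sigma ** 2)


# Hypothetical out-of-equilibrium state.
mu, sigma, u, gamma, D = 0.4, 0.5, 0.7, 1.5, 0.3
mu_dot, sigma_dot = ou_rates(mu, sigma, u, gamma, D)

S_dot = entropy_rate(sigma, sigma_dot)
Pi = entropy_production(mu_dot, sigma, sigma_dot, D)
Phi = Pi - S_dot                      # entropy flux to the environment
lhs = info_rate_sq(mu_dot, sigma, sigma_dot)
rhs = (D / sigma) * Pi + S_dot ** 2
print(lhs, rhs, S_dot, Pi, Phi)
```

Here the variance is shrinking, so the entropy rate is negative while the entropy production remains positive, and both sides of the assumed relation agree.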

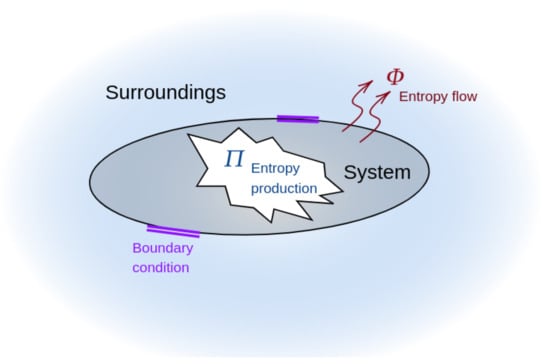

Figure 2.

Graphical description of Equation (8). In a closed system, the entropy rate $\dot{S}$ corresponds to the entropy produced by the system $\Pi$ minus the entropy exchanged with the environment $\Phi$.

Equation (9) can be used to describe the physical effects of a minimum variability control in a dynamical system. For instance, as will be discussed later in Section 4, to obtain a minimum information variability while being out of the equilibrium (i.e., ), the control will exert entropy to the environment, creating a small but negative value for . This means that a minimum information variability would not necessarily lead to a maximum free energy but to an optimal path where is limited by the value of according to (9). The complete derivation of how to compute the values of , and for a first-order Langevin equation is shown in Appendix C.

2.3. Minimum Information Variability Problem

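Now that we understand the meaning of the IL, let us explain in more detail how the IL can be used to minimise deviations from the geodesic of the system’s PDF time evolution. In [32], the authors use an inequality that bounds $\mathcal{L}^2$ from above by the elapsed time multiplied by the time integral of $\Gamma^2$ (the Fisher divergence, up to the normalisation used in [32]), with $\mathcal{L}$ and $\Gamma$ given by (6):

$$\mathcal{L}(t)^2 \le t \int_0^t \Gamma^2(\tau)\, d\tau.$$

As mentioned in Section 1, such inequality follows from the Cauchy–Schwarz inequality applied to $\Gamma$ and the constant function 1 on $[0,t]$. But, most importantly, the equality holds for the minimum path where $\Gamma$ is constant (see, e.g., [19,32]), and the deviation from this equality is said to quantify the amount of disorder in an irreversible process [32].

From [20], such a statement can be clarified by the following procedure. Let us define the time-average of a function $f(t)$ over $[0,t]$ as $\frac{1}{t}\int_0^t f(\tau)\, d\tau$. Then, we can define the time-averaged variance of $\Gamma$ as

$$\sigma^2 = \frac{1}{t}\int_0^t \Gamma^2(\tau)\, d\tau - \left( \frac{1}{t}\int_0^t \Gamma(\tau)\, d\tau \right)^2 = \frac{1}{t}\int_0^t \Gamma^2(\tau)\, d\tau - \frac{\mathcal{L}(t)^2}{t^2} \ge 0. \tag{10}$$

Equation (10) describes an accumulative deviation from the geodesic connecting the initial and final distributions of the system dynamics. Thus, we can conclude that by setting $\Gamma$ as a constant, we obtain a geodesic that defines a path where the process has the minimum geometric information variability.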
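The role of the time-averaged variance as a measure of deviation from the geodesic can be illustrated with sampled values of the information rate. The sketch below assumes the variance is the time-average of $\Gamma^2$ minus the squared time-average of $\Gamma$, evaluated with trapezoidal quadrature on a uniform grid; the function names and sample paths are hypothetical.

```python
def time_average(values, dt):
    """Trapezoidal time-average of samples taken on a uniform grid of step dt."""
    total_time = dt * (len(values) - 1)
    integral = sum(0.5 * (a + b) * dt for a, b in zip(values, values[1:]))
    return integral / total_time


def gamma_variance(gammas, dt):
    """Time-averaged variance of the information rate along a path."""
    mean_gamma = time_average(gammas, dt)
    mean_gamma_sq = time_average([g * g for g in gammas], dt)
    return mean_gamma_sq - mean_gamma ** 2


# A path with constant information rate (geodesic-like) and one without.
constant_path = [1.0] * 11
varying_path = [1.0, 2.0, 3.0]

var_const = gamma_variance(constant_path, dt=0.1)
var_vary = gamma_variance(varying_path, dt=1.0)
print(var_const, var_vary)
```

The constant-rate path gives a variance of zero (up to rounding), whereas any path with a time-varying information rate gives a strictly positive value.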

2.4. BIBO Stability of the Linear Stochastic Process

As we address a control problem, it is essential to examine the bounded-input, bounded-output (BIBO) stability of (1). For this purpose, we revisit the BIBO stability conditions for Equations (5a) and (5b), which will be instrumental in our discussion of MPC stability.

Theorem 1.

Proof.

For a detailed proof of this result, please refer to [60,61]. □

Remark 1.

Theorem 1 is considered to be satisfied throughout our examples; i.e., the control method is applied to stable systems only. Furthermore, the control actions are constrained to finite values.

3. Main Results

To guide the system’s PDF time evolution along the geodesic of the IL while achieving a desired set point at time , we propose the following cost function:

where the state vector collects the elements of $\boldsymbol{\mu}$ and $\boldsymbol{\Sigma}$ that define the time evolution of $p(\mathbf{x};t)$; the desired state is the target value of that vector; and the control vector collects the inputs $\mathbf{u}$ and the noise amplitudes at time $t$. In this work, we call Equation (12) the Information Length Quadratic Regulator (IL-QR). As discussed in the Model Predictive Control subsection below, MPC enables us to obtain the solution of (12) via a numerical scheme, circumventing analytic complexities while being applicable to practical scenarios. Analytically finding the geodesic dynamics involves using the solution of the Euler–Lagrange equations of the IL. The steps of such an approach are discussed and successfully applied in [4] for a first-order stochastic differential equation. In Appendix A, we give the details of the procedure for a more general scenario.

From (12), we observe that the first term on the right-hand side imposes a constant (needed to minimise the deviations from the geodesic [4]). The term involving guides the system towards a specified PDF defined by . The third term on the right-hand side of (12) regularises the control action to prevent abrupt changes in the inputs. Furthermore, and are matrices that penalise the error and the control input u, respectively. Additionally, penalises the square of the error , aiming to maintain close to over time.

In our approach, the dynamics of $\boldsymbol{\mu}$ are controlled through $\mathbf{u}$, while the dynamics of $\boldsymbol{\Sigma}$ are adjusted by controlling the noise width through a time-dependent vector whose elements replace the non-zero constant values of the matrix $\mathbf{D}$ in (5b). As discussed in the numerical examples, the noise width can be altered by modifying a macroscopic observable such as temperature (for further details, refer to the Brownian motion models presented in [12]).

Model Predictive Control

As discussed in Section 1, the solution of our optimisation problem will be computed through the MPC method. Hence, the following discrete optimal control problem encoding the MPC formulation is required:

In Equation (13), the symbol over and refers to their predicted values over the influence of the control throughout the optimisation process in the finite horizon of length N. Note that the value of is constrained by the discretised version of the set of Equations (5a) and (5b) given by

where and if a first-order approximation of the time derivative considering the sample period is applied (we apply a fourth-order Runge Kutta instead of a first-order approximation to compute in our simulations). Note that we have added the argument in Equation (13) when describing Equations (14) and (15) to emphasise the application of the control during the optimisation procedure. The initial conditions of (14) and (15) change every time an optimal control solution has been computed and they are subject to the measurements of the real/simulated process. Every element of the control vector is constrained by a lower and an upper limit denoted as and , respectively. Finally, f is the function describing the predicted value , which is defined as follows:

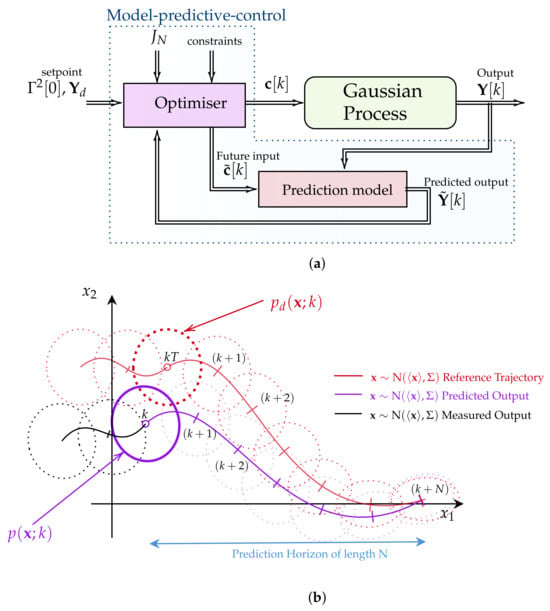

For a clearer grasp of the MPC method’s application in solving the IL-QR problem in real-world scenarios, Figure 3a,b show the control diagram and the functionality of the MPC’s optimiser when considering a second-order stochastic system, respectively.

Figure 3.

(a) A control diagram describing the main components of the implemented MPC methodology. The algorithm comprises a prediction model utilised to determine the optimal control using the interior point method with CasADi [44]. (b) A diagram illustrating a discrete MPC scheme applied to a second-order stochastic process. The algorithm predicts the behaviour of the dynamical system within a finite horizon of length N and compares it with the reference trajectory described by the PDF .

Figure 3a illustrates the real-time operation of the MPC algorithm. As the process evolves, the MPC algorithm receives its inputs, namely a given set point, the predicted values of $\boldsymbol{\mu}$ and $\boldsymbol{\Sigma}$ from a prediction model, a set of constraints, the cost function, and the current system state, to solve the optimisation problem given in (13). Afterwards, the optimal control solution of Equation (13) is applied to the system. The MPC method determines the optimal control by utilising the differential equations of $\boldsymbol{\mu}$ and $\boldsymbol{\Sigma}$ as a prediction model in a finite horizon of length N. Figure 3b provides a brief overview of the working principle of the MPC’s optimiser block.

In the case of a stochastic process described by a bivariate time-varying PDF p with random variables and , the MPC optimiser method initiates the optimisation process using the measured system’s PDF output (depicted in black). The optimisation process involves extrapolating the values of the PDF p within a finite horizon of length N and comparing them with the reference trajectory described by the PDF . The optimisation problem is solved using the interior point method with CasADi [44]. In this study, the use of deterministic descriptions of the first two statistical moments over time has facilitated the control, prediction, and simulation of the PDF, owing to the nature of the Langevin equations under consideration. However, in scenarios involving pure data or more complex stochastic differential equations, estimating time-varying PDFs may require inference methods [62] or stochastic simulations [63].
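The receding-horizon mechanics described above can be sketched in a few lines. This is not the implementation used in this work: a coarse grid search stands in for the interior point method with CasADi, the variance is held fixed so that only the mean is steered, the control is held constant over the horizon, and the stage cost and its weights (`w_rate`, `q_err`, `r_u`) are hypothetical choices in the spirit of the IL-QR.

```python
def rollout_cost(mu, u, target, gamma_ref, horizon, dt,
                 damping=1.0, sigma=1.0, w_rate=1.0, q_err=10.0, r_u=0.01):
    """Predicted cost of holding the force u constant over the horizon."""
    cost = 0.0
    for _ in range(horizon):
        mu_dot = -damping * mu + u
        rate_sq = mu_dot ** 2 / sigma      # Gamma^2 when the variance is constant
        mu = mu + dt * mu_dot              # forward-Euler prediction of the mean
        cost += (w_rate * (rate_sq - gamma_ref ** 2) ** 2   # keep Gamma near its reference
                 + q_err * (mu - target) ** 2               # approach the set point
                 + r_u * u ** 2)                            # regularise the control
    return cost


def mpc_step(mu, target, gamma_ref, candidates, horizon=5, dt=0.1):
    """Return the bounded candidate control with the lowest predicted cost."""
    return min(candidates,
               key=lambda u: rollout_cost(mu, u, target, gamma_ref, horizon, dt))


DT = 0.1
candidates = [-2.0 + 0.1 * i for i in range(41)]   # controls bounded to [-2, 2]
means = [0.0]                                       # measured mean, starting at 0
applied = []
for _ in range(30):
    u = mpc_step(means[-1], target=1.0, gamma_ref=0.5, candidates=candidates, dt=DT)
    applied.append(u)                               # only the first control is applied
    means.append(means[-1] + DT * (-1.0 * means[-1] + u))
print(applied[0], means[1])
```

At every sample the optimisation is re-solved from the latest measured state and only the first control is applied, which is the feature that distinguishes MPC from an open-loop optimal plan. A practical implementation would optimise a full control sequence and include the variance dynamics.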

4. Simulation Results

To demonstrate the numerical implementation of MPC for solving the IL-QR cost function (13), the subsequent subsections delve into applying the minimum information variability to both the Ornstein–Uhlenbeck process and the Kramers equation. Throughout the simulations, we utilise various parameters, which are conveniently summarised in Table 2. Each system undergoes a pair of simulations or “experiments”, as detailed in Table 2.

Table 2.

Parameters used in the simulation results for the O-U and Kramers systems. The table includes figure numbers showing the simulation where the set of parameters was used.

4.1. The O-U Process

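First, let us consider the Ornstein–Uhlenbeck (O-U) process (see Figure 4) defined by the following Langevin equation:

$$\dot{x}(t) = -\gamma\, x(t) + u(t) + \xi(t), \tag{17}$$

where $x$ is a random variable, $u$ is a deterministic force, $\gamma$ is a damping constant, and $\xi$ is a short correlated random forcing such that $\langle \xi(t)\xi(t') \rangle = 2D\,\delta(t - t')$ with $\langle \xi(t) \rangle = 0$ and noise amplitude $D$.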
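For readers who wish to compare the Gaussian moment description with sampled trajectories, the sketch below simulates an O-U Langevin equation with the Euler–Maruyama scheme. It assumes the form $\dot{x} = -\gamma x + u + \xi$ with $\langle \xi(t)\xi(t') \rangle = 2D\delta(t-t')$; the function names, the seed, and the parameter values are illustrative and are not taken from Table 2.

```python
import math
import random


def simulate_ou(n_paths, steps, dt, gamma, D, u=0.0, x0=0.0, seed=0):
    """Euler-Maruyama simulation of an ensemble of O-U trajectories."""
    rng = random.Random(seed)
    noise_scale = math.sqrt(2.0 * D * dt)   # std of the noise increment per step
    xs = [x0] * n_paths
    for _ in range(steps):
        xs = [x + dt * (-gamma * x + u) + noise_scale * rng.gauss(0.0, 1.0)
              for x in xs]
    return xs


def sample_mean_var(xs):
    """Sample mean and unbiased sample variance of the ensemble."""
    m = sum(xs) / len(xs)
    v = sum((x - m) ** 2 for x in xs) / (len(xs) - 1)
    return m, v


xs = simulate_ou(n_paths=2000, steps=500, dt=0.01, gamma=1.0, D=0.5)
mean_hat, var_hat = sample_mean_var(xs)
print(mean_hat, var_hat)
```

After several relaxation times the sample variance should settle near $D/\gamma$, the stationary value predicted by the variance equation, up to sampling and discretisation error.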

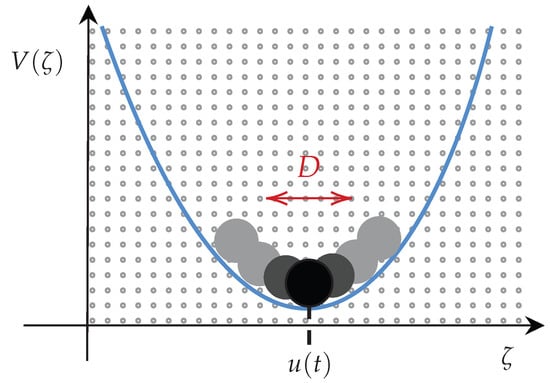

Figure 4.

The O-U process equation is commonly used to describe a prototype of a noisy relaxation process, for instance, the movement of a particle confined to a harmonic potential and thermal fluctuations with temperature T (, and is the Boltzmann constant) such that fluctuates stochastically.

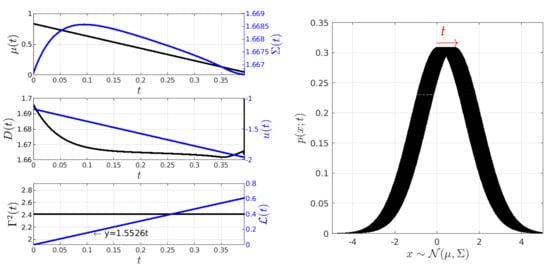

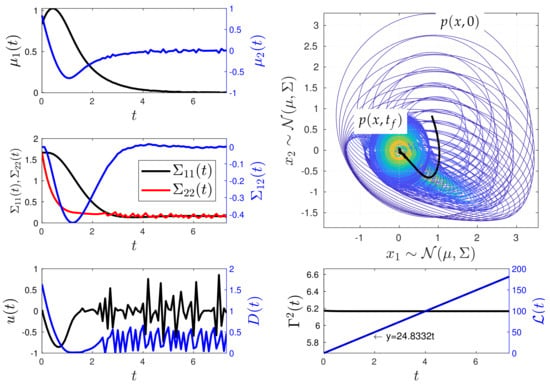

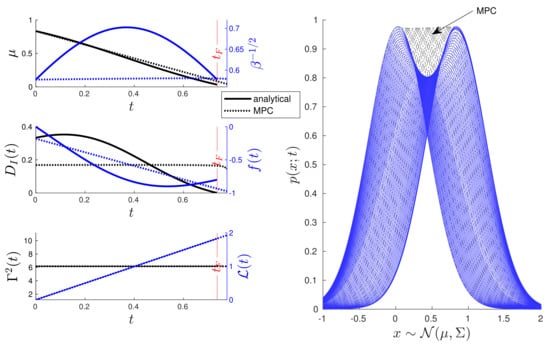

The results of the MPC implementation are shown in Figure 5, Figure 6, Figure 7 and Figure 8. In the following, it is important to note that, in the figures, black colour is used to represent values labelled on the y-left axis, while blue colour is used for values labelled on the y-right axis, unless otherwise specified. Figure 5 illustrates the scenario where the desired state of the O-U process is . It also shows the time evolution of the states and controls . From the results, we see that the method finds a geodesic motion (solution to the IL-QR) from the initial to the final state in less than seconds. The geodesic motion is corroborated by the constant value of and the plot of the information length whose shape is a line with a slope of . is computed by considering that . It is noteworthy that the value of is observed to vary slightly over time compared to the hyperbolic analytical solution in [4], which is provided for a non-constant (refer to Appendix A).

Figure 5.

Minimum information variability control of the O-U process (Experiment 1). In this experiment, the initial and desired PDFs have similar variances but different mean values. In the simulation, is maintained constant at a value of . To follow the geodesic, the input force needs to linearly decrease, while the noise amplitude decreases slightly exponentially. Conversely, the mean value undergoes a linear change, while the variance exhibits hyperbolic behaviour.

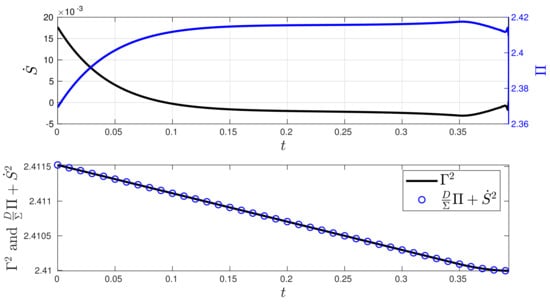

Figure 6.

Stochastic thermodynamics under minimum information variability for the O-U process (Experiment 1). The second plot demonstrates that the balance expressed via Equation (9) is maintained as expected. The control generates a small entropy rate converging to a negative value, indicating that most of the entropy flows from the system to the environment. The control induces entropy production to maintain the system out of equilibrium with respect to . This departure from equilibrium is supported by the presence of entropy production .

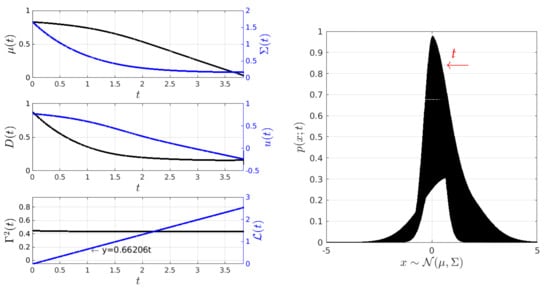

Figure 7.

Minimum information variability control of the O-U process (Experiment 2). In this experiment, the initial and desired PDFs have different variances and mean values. is maintained constant at a value of , while both the input force and the noise amplitude decrease exponentially.

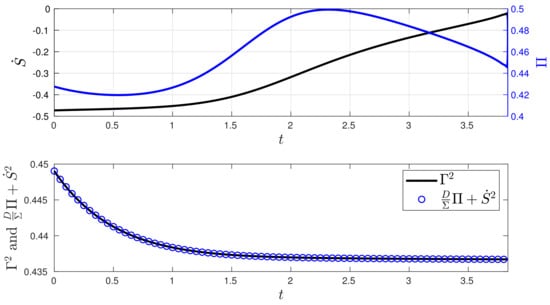

Figure 8.

Stochastic thermodynamics under minimum information variability for the O-U process (Experiment 2). The plot of Equation (9) demonstrates that to maintain a constant information rate while being out of equilibrium, we increase the value of entropy production , and most of the entropy flows out to the environment, as indicated by the negative sign of .

When analysing the stochastic thermodynamics of the O-U system controlled by the MPC technique, Figure 6 presents the plot of the entropy rate in comparison with the entropy production , along with a plot of the value of using Equation (9). The analytical expressions for and , along with their derivations, are given in Appendix C. In the closed-loop system, we can see that the MPC method induces slight changes in both the entropy production and the entropy rate during the process. Since the values of and in the desired state are close to, and far from, their initial conditions at state , respectively, the balance between , and as given by (9) is kept by maintaining an almost constant and a with a nearly constant velocity.

Under conditions almost similar to those in Figure 5, Figure 7 shows the behaviour of the closed-loop system PDF, the states and , and the controls D and u, as well as the behaviour of for . Here, is computed by considering that and . The final state is achieved at approximately . Once again, the geodesic behaviour is supported by the constant value of and the plot of the information length , which depicts a line with a slope of .

In comparison to the stochastic thermodynamics shown in Figure 6, Figure 8 exhibits minor changes in the entropy production and significant variations in the entropy rate of the closed-loop system, given that the values of and in the desired state differ from its initial condition . This disparity also leads to slight fluctuations in both the and . Additionally, Figure 8 demonstrates the validity of the balance in Equation (9).

4.2. Kramers Equation

To study the solution of the IL-QR problem in a higher-order system via the MPC method, let us consider the so-called Kramers equation. The Kramers equation describes the Brownian motion of particles in an external field [12]. The non-autonomous version of the Kramers equation is given by the following set of Langevin equations:

Here, is a short correlated Gaussian noise with a zero mean and a strength with the following property:

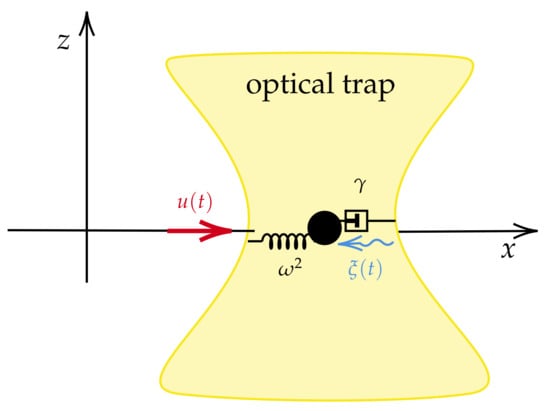

In practice, as shown in Figure 9, the Kramers Equation (18) is also a good first approximation to describe the dynamics in one-dimension of a particle in an optical trap [5]. The Kramers equation control and state vectors are defined by

Here, and are the mean values of the random variables and , respectively. , , and are the values describing the covariance matrix .
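The five moment equations that act as the prediction model for a second-order system can be written out explicitly. The sketch below assumes a common form of the Kramers equation, $\dot{x} = v$, $\dot{v} = -\gamma v - \omega^2 x + u + \xi$ with $\langle \xi(t)\xi(t') \rangle = 2D\delta(t-t')$, which may differ from the exact parametrisation of Equation (18). The state ordering, function names, and parameter values are hypothetical.

```python
def kramers_rhs(state, u, gamma, omega, D):
    """Moment equations for (mean_x, mean_v, S_xx, S_xv, S_vv)."""
    mx, mv, sxx, sxv, svv = state
    return (
        mv,                                                     # d mean_x / dt
        -omega ** 2 * mx - gamma * mv + u,                      # d mean_v / dt
        2.0 * sxv,                                              # d S_xx / dt
        svv - omega ** 2 * sxx - gamma * sxv,                   # d S_xv / dt
        -2.0 * omega ** 2 * sxv - 2.0 * gamma * svv + 2.0 * D,  # d S_vv / dt
    )


def rk4_step(state, u, gamma, omega, D, dt):
    """One classical RK4 step with u and D held constant over the step."""
    def shifted(k, h):
        return tuple(s + h * ki for s, ki in zip(state, k))

    k1 = kramers_rhs(state, u, gamma, omega, D)
    k2 = kramers_rhs(shifted(k1, 0.5 * dt), u, gamma, omega, D)
    k3 = kramers_rhs(shifted(k2, 0.5 * dt), u, gamma, omega, D)
    k4 = kramers_rhs(shifted(k3, dt), u, gamma, omega, D)
    return tuple(s + dt * (a + 2.0 * b + 2.0 * c + d) / 6.0
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


# Unforced relaxation from a hypothetical initial Gaussian state.
state = (1.0, 0.0, 0.1, 0.0, 0.1)
for _ in range(4000):
    state = rk4_step(state, u=0.0, gamma=1.0, omega=1.0, D=0.5, dt=0.01)
print(state)
```

Without forcing, the means decay to zero, the cross-covariance vanishes, and the variances relax to $D/(\gamma\omega^2)$ for position and $D/\gamma$ for velocity, which provides a simple check of the covariance equations.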

Figure 9.

Mechanical representation of the Kramers Equation (18) as a mass–spring–damper system. In this system, the external force is deterministic, while represents a stochastic perturbation on the process, which varies due to the temperature of the fluid [12].

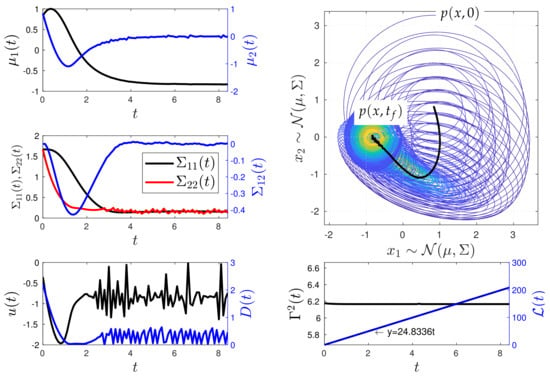

Figure 10 and Figure 11 show the simulation results of the closed-loop Kramers equation when considering the desired states and , respectively. These figures include the time evolution plots of the mean values and and the covariance matrix values , , and of the Kramers equation random variables and . In addition, they include the time evolution of the bivariate PDF with the spatial vector , with and representing the position and velocity of the system dynamics, respectively. The remaining parameters used in the simulations are listed in Table 2. Again, in the figures, black colour is used to represent values labelled on the y-left axis, while blue colour is used for values labelled on the y-right axis, unless otherwise specified.

Figure 10.

Minimum information variability control of the Kramers equation (Experiment 1). The control effectively adjusts the values of the covariance matrix and the mean vector by dynamically modifying u and D, transitioning the system’s PDF from one state to another out of equilibrium. It is noteworthy that, to maintain the system on the IL’s geodesic, the MPC method maintains a constant , resulting in abrupt changes in u and D as the system approaches the desired state . Physically, this leads to a high entropy production due to the control action.

Figure 11.

Minimum information variability control of the Kramers equation (Experiment 2). In this scenario, the value of results in slower dynamics compared to Experiment 1, as MPC now requires more time to reach . Once again, significant changes are observed in u and D to maintain a constant information rate when the system approaches the desired state .

For the first numerical experiment, Figure 10 demonstrates that the MPC method is capable of maintaining as constant through time while reaching the desired state . Here, the value of in (13) is obtained as follows:

where and , while and are taken from the corresponding and the mathematical model (18), respectively. The geodesic dynamics give a behaviour with slow oscillations in the state . The controls u and D present high oscillations as the system reaches the desired state . The system reaches at with an error of . The geodesic behaviour is supported by the linear behaviour of the information length compared to the fitted equation .

Figure 11 shows a second numerical experiment where the desired state is even farther from the system’s equilibrium. Yet, the MPC method can maintain $\Gamma$ as constant through time while reaching the desired state. Like the example of Figure 10, in this case . Small oscillations persist in the time evolution of the mean and covariance states. The system reaches the desired state at . Thus, the farther the desired state is from the initial state, the longer the time it takes to reach it while following the geodesic path. The geodesic behaviour is evidenced by the plot of the information length, whose behaviour is compared to the fitted equation . Similar to the example in Figure 10, the controls exhibit highly oscillatory behaviour as the system reaches the desired state.

5. Conclusions

In this work, we demonstrated the application of the MPC method, an online optimisation algorithm for constrained control problems, to achieve the minimum information variability in systems governed by linear stochastic differential equations. The linearity of the system results in time-varying Gaussian PDFs, with statistical moments determined by a set of deterministic differential equations. Our simulations demonstrate that the MPC method is a practical approach for determining the geodesic of the information length between the initial and desired probabilistic states through the solution of the proposed IL-QR loss function.

From a thermodynamic standpoint, simulations of the MPC in the O-U process reveal that the MPC directly influences the amount of entropy production generated by the system to meet all optimisation requirements. Future work will involve extending this approach to nonlinear stochastic dynamics, such as toy models [64], systems with uncertain physical parameters [65], Brownian motion [66], or diffusion [67,68]. This extension aims to maximise the free energy [21] by minimising the entropy production and to analyse closed-loop stochastic thermodynamics for higher-order systems. It is worth noting that extending the method to nonlinear dynamics can be achieved through the Laplace assumption [69] and/or by employing Kalman/particle filter methods [70,71].

Author Contributions

Conceptualisation, A.-J.G.-C., E.-j.K. and M.W.M.; Formal analysis, A.-J.G.-C.; Investigation, A.-J.G.-C. and E.-j.K.; Methodology, E.-j.K.; Project administration, E.-j.K.; Software, A.-J.G.-C. and M.W.M.; Supervision, E.-j.K.; Validation, A.-J.G.-C. and E.-j.K.; Visualisation, A.-J.G.-C. and E.-j.K.; Writing—original draft, A.-J.G.-C. and E.-j.K.; Writing—review and editing, A.-J.G.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research is partly supported by EPSRC grant (EP/W036770/1), National Research Foundation of Korea (RS-2023-00284119) and EPSRC grant EP/R014604/1.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The corresponding author would like to thank César Méndez-Barrios for his valuable and constructive suggestions during the planning and development of this research work.

Conflicts of Interest

Author Mohamed W. Mehrez was employed by the company Zebra Technologies. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| PDFs | Probability density functions |
| FP | Fokker–Planck |
| IL | Information length |
| MPC | Model predictive control |
| QR | Quadratic regulator |
| BIBO | Bounded-input, bounded-output |

Appendix A. A Solution by the Euler–Lagrange Equation

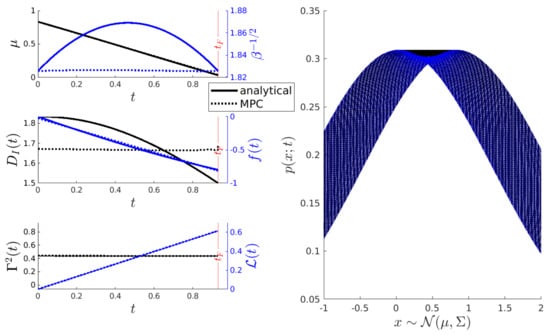

In [4], an analytical solution describing the geodesic motion connecting given initial and final probability distributions is found by solving the Euler–Lagrange equations in terms of the covariance and mean value of a first-order stochastic process. Here, we take the O-U process to compare such an analytical solution of the geodesic dynamics with the solution obtained by the MPC method. Additionally, we provide the set of differential equations describing the geodesic motion for an n-th order Gaussian process.

The Euler–Lagrange equations for the Lagrangian in terms of the vector (mean value) and the matrix (covariance) are

Using (7) in (A1) and (A2), we obtain (see Appendix B)

As mentioned above, [4] presents a closed-form analytical solution to the boundary value problem of Equations (A3) and (A4) when the dimensions of both and are one. Specifically, Equations (A3) and (A4) have a trivial solution where is constant. For a non-constant , the following hyperbolic solutions are found in [4]:

Here, and . The values of , and A are computed using a given set of boundary conditions (for a complete discussion, see [4]). For instance, the parameter

where using . Clearly, through Equations (A5) and (A6) and the dynamical model of the O-U process (17), we can construct the optimal control of the input and the optimal noise amplitude of . From [4], given that , such optimal controls are given by

To compare the analytical and MPC solutions of the geodesic of the IL, Figure A1 shows the behaviour of the O-U process when controlled through the analytical solutions (A8) and (A9) or the IL-MPC method. The figure contains different subplots that show the time evolution of , , , and and the optimal controls and f. In the simulation, the desired state and the damping are and , respectively. Additionally, we set a fixed final time (one cycle of the hyperbolic geodesic motion (A5) and (A6)) by considering the initial state . Figure A1 uses dashed and non-dashed lines for the MPC and the analytical response, respectively. Additionally, recall that black colour is used to represent values labelled on the y-left axis, while blue colour is used for values labelled on the y-right axis. From the comparison, a major conclusion is that the time evolution of is no longer hyperbolic when using the MPC method. This means that the MPC method finds an almost constant solution but not the hyperbolic solution shown in [4]. The MPC allows us to reach the final state at with an error of .

As a second example, Figure A2 shows the dynamics of the controlled O-U process when the initial state is (fixing ), the desired state , and the damping . Again, the MPC method recovers a geodesic solution where the time evolution is constant. In this scenario, the MPC method reaches the desired state with an error of in a time , demonstrating that the numerical optimisation scheme may not recover an optimal time.

As a final remark, note that in the n-th order case, Equations (A3) and (A4) form a set of non-linear differential equations whose solution may be obtained by a numerical procedure. However, even for a second-order stochastic process, this is a challenging task, as it requires solving a boundary value problem of 12 non-linear differential equations. Hence, the MPC method provides an alternative solution to this problem while being an experimentally feasible approach, as demonstrated by the application to the Kramers equation in Section 4.2.

Appendix B. Geodesic Dynamics Derivation

Based on matrix calculus identities from [72], we can derive the Euler–Lagrange equations for . First, for we have

where . Therefore,

which leads to Equation (A3). For , we have

Appendix C. Entropy Rate in the O-U Process

The O-U process Fokker–Planck equation is given by

where and . The solution of (A15) is Gaussian and given by

The values of the entropy production and entropy flow for the O-U process can be obtained from the computation of the entropy rate of the solution of the Fokker–Planck Equation (A15) as follows (see also [21,50]):

Hence, the exact values of and are [21]
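As an illustration of this entropy balance, the following minimal sketch evaluates the entropy rate, entropy production and entropy flow of a Gaussian O-U state. It assumes the Langevin form with drift `-gamma * x + u`, a noise correlation of `2 * d`, and the sign convention in which the entropy rate equals production minus flow. The closed forms in the code follow from the standard Fokker–Planck definitions under these assumptions and are not copied from the equations of this appendix. The function name and all numerical values are hypothetical.

```python
def ou_entropy_rates(mu, var, u, d, gamma):
    """Entropy rate, production and flow for a Gaussian O-U state (assumed conventions).

    Assumed moment dynamics: mu' = -gamma * mu + u and var' = -2 * gamma * var + 2 * d.
    """
    mu_dot = -gamma * mu + u
    var_dot = -2.0 * gamma * var + 2.0 * d
    # Differential entropy of a Gaussian is 0.5 * ln(2 * pi * e * var), so its rate is:
    entropy_rate = var_dot / (2.0 * var)
    # Entropy production: mean of (probability current / density)^2, divided by d.
    production = mu_dot**2 / d + var_dot**2 / (4.0 * d * var)
    # Entropy flow: mean of drift times (probability current / density), divided by d.
    flow = mu_dot**2 / d + gamma**2 * var / d - gamma
    return entropy_rate, production, flow


# Hypothetical state and parameters, chosen only to exercise the formulas.
s_dot, production, flow = ou_entropy_rates(mu=0.3, var=0.7, u=0.5, d=0.2, gamma=1.5)
balance_error = abs(s_dot - (production - flow))
```

Under these assumptions the entropy production is non-negative by construction, while the entropy rate can take either sign depending on whether the variance is growing or shrinking.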

References

- Smith, R.; Friston, K.J.; Whyte, C.J. A step-by-step tutorial on active inference and its application to empirical data. J. Math. Psychol. 2022, 107, 102632.

- Peliti, L.; Pigolotti, S. Stochastic Thermodynamics: An Introduction; Princeton University Press: Princeton, NJ, USA, 2021.

- Bechhoefer, J. Control Theory for Physicists; Cambridge University Press: Cambridge, UK, 2021.

- Kim, E.j.; Lee, U.; Heseltine, J.; Hollerbach, R. Geometric structure and geodesic in a solvable model of nonequilibrium process. Phys. Rev. E 2016, 93, 062127.

- Pesce, G.; Jones, P.H.; Maragò, O.M.; Volpe, G. Optical tweezers: Theory and practice. Eur. Phys. J. Plus 2020, 135, 949.

- Deffner, S.; Bonança, M.V. Thermodynamic control—An old paradigm with new applications. EPL (Europhys. Lett.) 2020, 131, 20001.

- Salapaka, M.V. Control of Optical Tweezers. Encyclopedia of Systems and Control; Springer: London, UK, 2021; pp. 361–368.

- Guéry-Odelin, D.; Jarzynski, C.; Plata, C.A.; Prados, A.; Trizac, E. Driving rapidly while remaining in control: Classical shortcuts from Hamiltonian to stochastic dynamics. Rep. Prog. Phys. 2023, 86, 035902.

- Iram, S.; Dolson, E.; Chiel, J.; Pelesko, J.; Krishnan, N.; Güngör, Ö.; Kuznets-Speck, B.; Deffner, S.; Ilker, E.; Scott, J.G.; et al. Controlling the speed and trajectory of evolution with counterdiabatic driving. Nat. Phys. 2021, 17, 135–142.

- Annunziato, M.; Borzi, A. Optimal control of probability density functions of stochastic processes. Math. Model. Anal. 2010, 15, 393–407.

- Annunziato, M.; Borzì, A. A Fokker–Planck control framework for multidimensional stochastic processes. J. Comput. Appl. Math. 2013, 237, 487–507.

- Risken, H. Fokker-Planck equation. In The Fokker-Planck Equation; Springer: Berlin/Heidelberg, Germany, 1996; pp. 63–95.

- Fleig, A.; Guglielmi, R. Optimal control of the Fokker–Planck equation with space-dependent controls. J. Optim. Theory Appl. 2017, 174, 408–427.

- Aronna, M.S.; Tröltzsch, F. First and second order optimality conditions for the control of Fokker-Planck equations. ESAIM Control. Optim. Calc. Var. 2021, 27, 15.

- Martínez, I.A.; Petrosyan, A.; Guéry-Odelin, D.; Trizac, E.; Ciliberto, S. Engineered swift equilibration of a Brownian particle. Nat. Phys. 2016, 12, 843–846.

- Baldassarri, A.; Puglisi, A.; Sesta, L. Engineered swift equilibration of a Brownian gyrator. Phys. Rev. E 2020, 102, 030105.

- Martinez, I.; Petrosyan, A.; Guéry-Odelin, D.; Trizac, E.; Ciliberto, S. Faster than nature: Engineered swift equilibration of a brownian particle. arXiv 2015, arXiv:1512.07821.

- Saridis, G.N. Entropy in Control Engineering; World Scientific: Singapore, 2001; Volume 12.

- Salamon, P.; Nulton, J.D.; Siragusa, G.; Limon, A.; Bedeaux, D.; Kjelstrup, S. A simple example of control to minimize entropy production. J. Non-Equilib. Thermodyn. 2002, 27, 45–55.

- Nicholson, S.B.; del Campo, A.; Green, J.R. Nonequilibrium uncertainty principle from information geometry. Phys. Rev. E 2018, 98, 032106.

- Kim, E.J. Information Geometry, Fluctuations, Non-Equilibrium Thermodynamics, and Geodesics in Complex Systems. Entropy 2021, 23, 1393.

- Nielsen, F. An elementary introduction to information geometry. Entropy 2020, 22, 1100.

- Miura, K. An introduction to maximum likelihood estimation and information geometry. Interdiscip. Inf. Sci. 2011, 17, 155–174.

- Leung, L.Y.; North, G.R. Information theory and climate prediction. J. Clim. 1990, 3, 5–14.

- Guel-Cortez, A.J.; Kim, E.J. Information Geometric Theory in the Prediction of Abrupt Changes in System Dynamics. Entropy 2021, 23, 694.

- Kim, E.J.; Guel-Cortez, A.J. Causal Information Rate. Entropy 2021, 23, 1087.

- Barnett, L.; Barrett, A.B.; Seth, A.K. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett. 2009, 103, 238701.

- San Liang, X. Information flow and causality as rigorous notions ab initio. Phys. Rev. E 2016, 94, 052201.

- Horowitz, J.M.; Sandberg, H. Second-law-like inequalities with information and their interpretations. New J. Phys. 2014, 16, 125007.

- Allahverdyan, A.E.; Janzing, D.; Mahler, G. Thermodynamic efficiency of information and heat flow. J. Stat. Mech. Theory Exp. 2009, 2009, P09011.

- Crooks, G.E. Measuring thermodynamic length. Phys. Rev. Lett. 2007, 99, 100602.

- Flynn, S.W.; Zhao, H.C.; Green, J.R. Measuring disorder in irreversible decay processes. J. Chem. Phys. 2014, 141, 104107.

- Nicholson, S.B.; Garcia-Pintos, L.P.; del Campo, A.; Green, J.R. Time—Information uncertainty relations in thermodynamics. Nat. Phys. 2020, 16, 1211–1215.

- Guel-Cortez, A.J.; Kim, E.J. Information length analysis of linear autonomous stochastic processes. Entropy 2020, 22, 1265.

- Ito, S.; Dechant, A. Stochastic time evolution, information geometry, and the Cramér-Rao bound. Phys. Rev. X 2020, 10, 021056.

- Åström, K.J.; Hägglund, T. PID control. IEEE Control Syst. Mag. 2006, 1066, 30–31.

- Guel-Cortez, A.J.; Méndez-Barrios, C.F.; González-Galván, E.J.; Mejía-Rodríguez, G.; Félix, L. Geometrical design of fractional PDμ controllers for linear time-invariant fractional-order systems with time delay. Proc. Inst. Mech. Eng. Part I J. Syst. Control. Eng. 2019, 233, 815–829.

- Brunton, S.L.; Kutz, J.N. Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control; Cambridge University Press: Cambridge, UK, 2019.

- Fliess, M.; Join, C. Model-free control. Int. J. Control 2013, 86, 2228–2252.

- Lee, J.H. Model predictive control: Review of the three decades of development. Int. J. Control Autom. Syst. 2011, 9, 415–424.

- Mehrez, M.W.; Worthmann, K.; Cenerini, J.P.; Osman, M.; Melek, W.W.; Jeon, S. Model predictive control without terminal constraints or costs for holonomic mobile robots. Robot. Auton. Syst. 2020, 127, 103468.

- Kristiansen, B.A.; Gravdahl, J.T.; Johansen, T.A. Energy optimal attitude control for a solar-powered spacecraft. Eur. J. Control 2021, 62, 192–197.

- Salesch, T.; Gesenhues, J.; Habigt, M.; Mechelinck, M.; Hein, M.; Abel, D. Model based optimization of a novel ventricular assist device. At-Automatisierungstechnik 2021, 69, 619–631.

- Andersson, J.A.E.; Gillis, J.; Horn, G.; Rawlings, J.B.; Diehl, M. CasADi—A software framework for nonlinear optimization and optimal control. Math. Program. Comput. 2019, 11, 1–36.

- Bemporad, A. Hybrid Toolbox—User’s Guide. 2004. Available online: http://cse.lab.imtlucca.it/~bemporad/hybrid/toolbox (accessed on 1 December 2023).

- Anderson, B.D.; Moore, J.B. Optimal Control: Linear Quadratic Methods; Courier Corporation: Chelmsford, MA, USA, 2007.

- Görges, D. Relations between model predictive control and reinforcement learning. IFAC-PapersOnLine 2017, 50, 4920–4928.

- Heseltine, J.; Kim, E.J. Comparing Information Metrics for a Coupled Ornstein–Uhlenbeck Process. Entropy 2019, 21, 775.

- Gieseler, J.; Gomez-Solano, J.R.; Magazzù, A.; Castillo, I.P.; García, L.P.; Gironella-Torrent, M.; Viader-Godoy, X.; Ritort, F.; Pesce, G.; Arzola, A.V.; et al. Optical tweezers—From calibration to applications: A tutorial. Adv. Opt. Photonics 2021, 13, 74–241.

- Kim, E.J. Information geometry and non-equilibrium thermodynamic relations in the over-damped stochastic processes. J. Stat. Mech. Theory Exp. 2021, 2021, 093406.

- Maybeck, P.S. Stochastic Models, Estimation, and Control; Academic Press: Cambridge, MA, USA, 1982.

- Kamen, E.W.; Levine, W. Fundamentals of linear time-varying systems. In The Control Handbook; CRC Press: Boca Raton, FL, USA, 1996; Volume 1.

- Jenks, S.; Mechanics, S., II. Introduction to Kramers Equation; Drexel University: Philadelphia, PA, USA, 2006.

- Pavliotis, G.A. Stochastic Processes and Applications: Diffusion Processes, the Fokker-Planck and Langevin Equations; Springer: Berlin/Heidelberg, Germany, 2014; Volume 60.

- Chamorro, H.; Guel-Cortez, A.; Kim, E.J.; Gonzalez-Longat, F.; Ortega, A.; Martinez, W. Information Length Quantification and Forecasting of Power Systems Kinetic Energy. IEEE Trans. Power Syst. 2022, 37, 4473–4484.

- Tomé, T.; de Oliveira, M.J. Entropy production in nonequilibrium systems at stationary states. Phys. Rev. Lett. 2012, 108, 020601.

- Nielsen, S.N.; Müller, F.; Marques, J.C.; Bastianoni, S.; Jørgensen, S.E. Thermodynamics in ecology—An introductory review. Entropy 2020, 22, 820.

- Haddad, W.M. A Dynamical Systems Theory of Thermodynamics; Princeton University Press: Princeton, NJ, USA, 2019.

- Van der Schaft, A. Classical thermodynamics revisited: A systems and control perspective. IEEE Control Syst. Mag. 2021, 41, 32–60.

- Chen, C.T. Linear System Theory and Design; Oxford University Press: Oxford, UK, 2013.

- Behr, M.; Benner, P.; Heiland, J. Solution formulas for differential Sylvester and Lyapunov equations. Calcolo 2019, 56, 51.

- Lindgren, F.; Bolin, D.; Rue, H. The SPDE approach for Gaussian and non-Gaussian fields: 10 years and still running. arXiv 2021, arXiv:2111.01084.

- Erban, R.; Chapman, J.; Maini, P. A practical guide to stochastic simulations of reaction-diffusion processes. arXiv 2007, arXiv:0704.1908.

- Reutlinger, A.; Hangleiter, D.; Hartmann, S. Understanding (with) toy models. Br. J. Philos. Sci. 2018, 69, 1069–1099.

- Ackermann, J. Robust Control: Systems with Uncertain Physical Parameters; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Lee, S.H.; Kapral, R. Friction and diffusion of a Brownian particle in a mesoscopic solvent. J. Chem. Phys. 2004, 121, 11163–11169.

- Hadeler, K.P.; Hillen, T.; Lutscher, F. The Langevin or Kramers approach to biological modeling. Math. Models Methods Appl. Sci. 2004, 14, 1561–1583.

- Balakrishnan, V. Diffusion in an External Potential. In Elements of Nonequilibrium Statistical Mechanics; Springer: Berlin/Heidelberg, Germany, 2021; pp. 168–190.

- Guel-Cortez, A.J.; Kim, E.J. Information geometry control under the Laplace assumption. Phys. Sci. Forum 2022, 5, 25.

- Van Der Merwe, R.; Wan, E.A. The square-root unscented Kalman filter for state and parameter-estimation. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 01CH37221), Salt Lake City, UT, USA, 7–11 May 2001; IEEE: Piscataway, NJ, USA, 2001; Volume 6, pp. 3461–3464.

- Elfring, J.; Torta, E.; Van De Molengraft, R. Particle filters: A hands-on tutorial. Sensors 2021, 21, 438.

- Petersen, K.B.; Pedersen, M.S. The matrix cookbook. Tech. Univ. Den. 2008, 7, 510.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).