Abstract

The two-dimensional sample entropy marks a significant advance in evaluating the regularity and predictability of images in the information domain. Unlike the direct computation of sample entropy, which incurs a time complexity of $O(N^2)$ for a series of length N, the Monte Carlo-based algorithm for computing one-dimensional sample entropy (MCSampEn) markedly reduces computational costs by minimizing the dependence on N. This paper extends MCSampEn to two dimensions, referred to as MCSampEn2D. This new approach substantially accelerates the estimation of two-dimensional sample entropy, outperforming the direct method by more than a thousand fold. Despite these advancements, MCSampEn2D suffers from significant errors and slow convergence. To counter these issues, we incorporate an upper confidence bound (UCB) strategy into MCSampEn2D. This strategy assigns a different upper confidence bound to each Monte Carlo iteration to improve the algorithm’s speed and accuracy. We evaluated the enhanced approach, dubbed UCBMCSampEn2D, on medical and natural image data sets. The experiments demonstrate that UCBMCSampEn2D achieves a reduction in computational time compared to MCSampEn2D. Furthermore, the errors with UCBMCSampEn2D are only a fraction of those observed in MCSampEn2D, highlighting its improved accuracy and efficiency.

1. Introduction

Information theory serves as a foundational framework for developing tools to represent and manipulate information [1], particularly in the realms of signal and image processing. Within this paradigm, entropy stands out as a key concept, functioning as a metric for quantifying uncertainty or irregularity within a system or dataset [2]. Stemming from Shannon’s pioneering work on entropy [1], subsequent researchers have advanced the field by introducing diverse methods. Notable examples include one-dimensional approximate entropy (ApEn) [3,4], dispersion entropy [5], sample entropy (SampEn) [2], and other innovative approaches. Multiscale entropy, hierarchical entropy, and their variants have been applied to various fields, such as fault identification [6,7] and feature extraction [8], beyond physiological time series analysis.

In 1991, the concept of ApEn was introduced as a method for quantifying the irregularity of time series [3]. ApEn is the negative average natural logarithm of a conditional probability, namely the likelihood that two sequences that are similar at m points remain similar at the subsequent point. To address the bias that self-matches introduce in ApEn, SampEn was subsequently developed; it excludes self-matches when counting similar templates, leading to more robust estimations [2]. Notably, in the domain of biomedical signal processing, SampEn has been successfully employed, demonstrating its effectiveness and applicability [9].

Computing SampEn requires counting the matching template pairs within a time series. The direct computation of SampEn has a computational complexity of $O(N^2)$, where N represents the length of the time series under analysis. To expedite this process, kd-tree based algorithms have been proposed for sample entropy computation. These algorithms reduce the time complexity to $O(N^{2-\frac{1}{m+1}})$, where m denotes the template (or pattern) length [10,11]. Additionally, various approaches such as box-assisted [12,13], bucket-assisted [14], lightweight [15], and assisted sliding box (SBOX) [16] algorithms have been developed. Nonetheless, the computational complexity of all these algorithms remains $O(N^2)$. To tackle the challenge of computational complexity, a rapid algorithm for estimating sample entropy using the Monte Carlo algorithm (MCSampEn) has been introduced in [17]. This algorithm features computational costs that are independent of N, and its estimations converge to the exact sample entropy as the number of repeated experiments increases. Experimental results reported in [17] demonstrate that MCSampEn achieves a speedup of 100 to 1000 times compared to kd-tree and assisted sliding box algorithms, while still delivering satisfactory approximation accuracy.
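As a rough illustration of the direct computation described above, the following Python sketch counts matching template pairs with two nested loops, which is where the quadratic cost in N comes from. The function name `sample_entropy`, the use of the Chebyshev distance with a non-strict comparison `<= r`, and the convention of returning infinity when no matches are found are assumptions made for this example, not details taken from the cited implementations.

```python
import math


def sample_entropy(x, m, r):
    """Direct sample entropy of sequence x (template length m, tolerance r).

    Illustrative sketch: two nested loops over template start positions,
    so the cost grows quadratically with len(x).
    """
    n = len(x)

    def count_matches(length):
        # The same n - m start positions are used for both template lengths,
        # so every template of length m + 1 fits inside the sequence.
        count = 0
        for i in range(n - m):
            for j in range(i + 1, n - m):  # j > i excludes self-matches
                # Chebyshev (maximum) distance between the two templates
                if max(abs(x[i + k] - x[j + k]) for k in range(length)) <= r:
                    count += 1
        return count

    b = count_matches(m)      # matching pairs of length m
    a = count_matches(m + 1)  # matching pairs of length m + 1
    if a == 0 or b == 0:
        return float("inf")   # assumed convention when the ratio is undefined
    return -math.log(a / b)
```

A perfectly regular sequence, such as a constant or strictly alternating one, yields an entropy of zero under this sketch, because every pair that matches at length m also matches at length m + 1.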

However, the MCSampEn algorithm utilizes a random sampling pattern where the importance of each sampling point varies. The application of averaging methods in this context leads to significant fluctuations in errors, resulting in a large standard deviation and slow convergence of the entire process. To address this, we introduce the upper confidence bound (UCB) strategy to set different confidence bounds for various sampling points, assigning varying levels of importance to these points. This approach mitigates errors caused by averaging methods, reduces the standard deviation of the MCSampEn algorithm, accelerates the convergence speed, and significantly improves computational speed.

In this paper, we extend MCSampEn to compute two-dimensional sample entropy, referred to as MCSampEn2D. To mitigate the convergence problems of MCSampEn2D, we integrate it with the upper confidence bound (UCB) strategy to reduce variance [18]. We call this refined algorithm UCBMCSampEn2D. By establishing a confidence upper bound for each experimental round, we differentiate the importance of each experiment. The higher the importance of an experiment, the greater its assigned confidence upper bound. Conversely, for experiments with lower confidence upper bounds, our objective is to minimize the errors they introduce to the greatest extent possible.

The UCBMCSampEn2D algorithm is a notable enhancement, enabling swift convergence and small errors even with a limited number of sampling points. It eliminates the need for explicit knowledge of the data distribution: by optimistically adjusting the weights for each round of experiments and estimating the upper bound of the expected value, it operates without introducing additional computational burden, and it continuously optimizes the weights through online learning [19]. In this study, we systematically assess the performance of the UCBMCSampEn2D algorithm across medical image and natural image datasets. Our investigation reveals two primary advantages of the proposed UCBMCSampEn2D algorithm: (1) By assigning importance levels to different rounds of experiments, the UCBMCSampEn2D algorithm reduces overall errors and converges faster. (2) Leveraging a reinforcement learning approach, the UCBMCSampEn2D algorithm utilizes local optima to represent true entropy. Through the application of upper confidence bounds, it effectively addresses the challenge of determining how to set importance levels.

Further detailed analysis and results will be provided in subsequent sections to expound upon these advantages and demonstrate the effectiveness of the UCBMCSampEn2D algorithm in comparison to conventional methods.

2. Fast Algorithms for Estimating Two-Dimensional Sample Entropy

In this section, we introduce the MCSampEn2D and UCBMCSampEn2D algorithms. Before delving into the details of these approaches, we will first establish the key mathematical symbols and fundamental concepts integral to understanding MCSampEn2D. This groundwork provides a clear and comprehensive exposition of both the MCSampEn2D and UCBMCSampEn2D algorithms.

2.1. Groundwork of Two-Dimensional Sample Entropy

Let be an image of size . For all and , define two-dimensional matrices with size , named template matrices, by

where is the embedding dimension vector [20]. We also define . For all and , let be the greatest element of the absolute differences between and . We denote by the cardinality of a set E. Then, for fixed k and l, we count , and compute

where r is the predefined threshold (tolerance factor). We also define as

Finally, is defined as follows [20]:

where . The parameter indicates the size of the matrices, which are analyzed or compared along images. In this study, [20,21], is chosen to obtain squared template matrices, and let . For all , we denote . The process for computing is summarized in Algorithm 1.

The parameter r is selected to strike a balance between the quality of the logarithmic likelihood estimates and the potential loss of signals or image information. If r is chosen to be too small (less than 0.1 of the standard deviation of an image), it leads to poor conditional probability estimates. Additionally, to mitigate the influence of noise on the data, it is advisable to opt for a larger r. Conversely, when r exceeds 0.4 of the standard deviation, excessive loss of detailed data information occurs. Therefore, a trade-off between large and small r values is essential. For a more in-depth discussion on the impact of these parameters in , please refer to [20].

Algorithm 1. Two-dimensional sample entropy
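The direct two-dimensional computation discussed in this subsection can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a transcription of Algorithm 1: it assumes square templates of size m × m and (m + 1) × (m + 1), the maximum absolute pixel difference as the distance, a non-strict comparison `<= r`, and the same set of top-left corners for both template sizes. The helper `tolerance` and its `factor` argument are hypothetical names that express r as a fraction of the image's standard deviation, in line with the range discussed above.

```python
import math
import statistics


def tolerance(img, factor):
    """Tolerance r as a fraction (factor) of the image's standard deviation."""
    pixels = [p for row in img for p in row]
    return factor * statistics.pstdev(pixels)


def sample_entropy_2d(img, m, r):
    """Direct 2D sample entropy of img (a list of rows) with square templates."""
    h, w = len(img), len(img[0])
    # Top-left corners for which both the m x m and (m+1) x (m+1) windows fit.
    corners = [(i, j) for i in range(h - m) for j in range(w - m)]

    def count_matches(size):
        count = 0
        for p in range(len(corners)):
            i, j = corners[p]
            for q in range(p + 1, len(corners)):  # q > p excludes self-matches
                u, v = corners[q]
                # Two windows match when every pixel pair differs by at most r.
                if all(abs(img[i + di][j + dj] - img[u + di][v + dj]) <= r
                       for di in range(size) for dj in range(size)):
                    count += 1
        return count

    b = count_matches(m)      # matching pairs of m x m templates
    a = count_matches(m + 1)  # matching pairs of (m+1) x (m+1) templates
    if a == 0 or b == 0:
        return float("inf")   # assumed convention when the ratio is undefined
    return -math.log(a / b)
```

The pairwise loop over all window positions makes the cost grow with the square of the number of pixels, which is what motivates the Monte Carlo approach in the next subsection.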

2.2. A Monte Carlo-Based Algorithm for Estimating Two-Dimensional Sample Entropy

The SampEn is fundamentally defined as $-\ln(A/B)$, where B represents the number of matching template pairs of length m, and A represents the number of matching template pairs of length $m+1$. The most computationally intensive step in calculating SampEn is determining the ratio $A/B$ for templates of lengths m and $m+1$. Notably, (resp. ) denotes the probability of template matches of length m (resp. $m+1$), and the ratio $A/B$ can be interpreted as a conditional probability. As shown in [17], the computation time of the MCSampEn method is independent of the data size and instead depends on the number of sampling points and the number of repetitions . This complexity is denoted as [17].

The objective of the MCSampEn algorithm [17] is to approximate this conditional probability for the original dataset by considering the conditional probability of a randomly subsampled dataset. Specifically, the MCSampEn randomly selects templates of length m and templates of length $m+1$ from the original time series. It subsequently computes the number of matching pairs in the selected templates of length m (resp. $m+1$), denoted as (resp. ). This selection process is repeated times, and the average value of (resp. ), represented as (resp. ), is then calculated. Finally, is employed to approximate the complexity measurement for the time series. The entire process can be expressed succinctly using the following formula:

where (resp. ) means the number of matching pairs in the selected templates of length m (resp. $m+1$) in the k-th experiment.
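The subsampling procedure described above can be sketched for a one-dimensional series as follows. The parameter names `n0` (templates drawn per round) and `n1` (number of rounds), the use of the same sampled start positions for both template lengths, and sampling without replacement are assumptions of this example; the reference implementation in [17] may differ in these details.

```python
import math
import random


def mc_sample_entropy(x, m, r, n0, n1, seed=None):
    """Monte Carlo estimate of sample entropy from n1 rounds of n0 templates."""
    rng = random.Random(seed)
    starts = range(len(x) - m)  # valid start positions for both lengths
    sum_a = sum_b = 0
    for _ in range(n1):
        idx = rng.sample(starts, n0)  # n0 distinct start positions
        for p in range(n0):
            for q in range(p + 1, n0):
                i, j = idx[p], idx[q]
                # Match at length m (Chebyshev distance within tolerance r)
                if max(abs(x[i + k] - x[j + k]) for k in range(m)) <= r:
                    sum_b += 1
                    # A length-(m+1) match also needs the next point to agree
                    if abs(x[i + m] - x[j + m]) <= r:
                        sum_a += 1
    if sum_a == 0 or sum_b == 0:
        return float("inf")  # assumed convention when the ratio is undefined
    # The ratio of sums equals the ratio of per-round averages.
    return -math.log(sum_a / sum_b)
```

In this sketch each round compares only the sampled templates with one another, so the work per round depends on `n0` rather than on the length of the series.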

When extending the MCSampEn algorithm to process two-dimensional data, a random sampling step is essential at the outset to acquire N data points. The technique employs a specific sampling strategy to sample positive integer sets, denoted as and . Subsequently, for each , we compute and , which indicates a two-dimensional matrix . Then, we randomly select templates of size and templates of length from the original two-dimensional data. We subsequently compute the number of matching pairs, with tolerance factor r, in the selected templates of length (resp. ), denoted as (resp. ). This selection process is repeated times, and the average value, represented as (resp. ), is then calculated. Replacing and by and in (5), respectively, we obtain an approximation of . This process is summarized in Algorithm 2, which calls Algorithm 1.

Algorithm 2. Two-dimensional Monte Carlo sample entropy (MCSampEn2D)
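A possible Python sketch of the two-dimensional Monte Carlo procedure follows. It is an illustration under stated assumptions rather than a transcription of Algorithm 2: window corners are drawn uniformly without replacement, square templates of size m × m and (m + 1) × (m + 1) share the same corners, and `n0` and `n1` are hypothetical names for the number of sampled templates per round and the number of rounds.

```python
import math
import random


def mc_sample_entropy_2d(img, m, r, n0, n1, seed=None):
    """Monte Carlo estimate of 2D sample entropy for img (a list of rows)."""
    rng = random.Random(seed)
    h, w = len(img), len(img[0])
    rows, cols = h - m, w - m  # corners where both window sizes fit

    def match(c1, c2, size):
        (i, j), (u, v) = c1, c2
        return all(abs(img[i + di][j + dj] - img[u + di][v + dj]) <= r
                   for di in range(size) for dj in range(size))

    sum_a = sum_b = 0
    for _ in range(n1):
        # Draw n0 distinct flat indices and convert them to (row, col) corners,
        # which avoids building the full list of window positions.
        picked = [divmod(t, cols) for t in rng.sample(range(rows * cols), n0)]
        for p in range(n0):
            for q in range(p + 1, n0):
                if match(picked[p], picked[q], m):
                    sum_b += 1
                    if match(picked[p], picked[q], m + 1):
                        sum_a += 1
    if sum_a == 0 or sum_b == 0:
        return float("inf")  # assumed convention when the ratio is undefined
    return -math.log(sum_a / sum_b)
```

Because only the sampled windows are compared, the number of window comparisons in this sketch is governed by `n0` and `n1` and does not grow with the image size.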

It is easy to check that the computational cost of MCSampEn2D is when is fixed. Through a proof similar to that of Theorem 5 in [17], we can see that the output of MCSampEn2D, , approximates the output of SampEn2D with the rate in the sense of almost sure convergence when is fixed. Furthermore, in our examination of MCSampEn2D, we observed variability in the significance of the randomly subsampled dataset. This is manifested as large fluctuations in the errors between the values of (or ) obtained in each of the rounds of experiments and their respective average values, (or ). Such fluctuations amplify the errors in the output of MCSampEn2D. This observation suggests that if we could capture the importance of different experimental rounds and use this importance to calculate a weighted average of (or ) obtained in the rounds of experiments, we could achieve a faster algorithm than MCSampEn2D.

2.3. Monte Carlo Sample Entropy Based on the UCB Strategy

The UCB strategy is a refinement in the field of optimization, particularly tailored for the multi-armed bandit problem. This problem, often conceptualized as a slot machine with multiple levers (or ‘arms’), each offering a random reward on each interaction, demands finding the lever that maximizes the reward yield with minimal experimentation. The UCB strategy’s core principle is to balance exploration and exploitation. Exploration, in this context, signifies the willingness to experiment with untested options, while exploitation underscores the preference to capitalize on the rewards offered by arms with a proven track record of high performance. This balancing act aids in optimizing the overall reward yield, thereby minimizing the number of trials required to reach the optimal solution [22,23].

In the preceding section, we presented the MCSampEn2D for estimating two-dimensional sample entropy. The precision of MCSampEn2D is contingent upon factors such as the number of experimental rounds, denoted as , the quantity of sampled points, , and the representativeness of these sampled points. Given that distinct experimental rounds involve the selection of different sampled points, their representativeness inherently varies. In Equation (5), the weight assigned to each round is statically set to . To enhance accuracy, we can dynamically adjust the weights based on the representativeness of the sampled points in each round.

The adjustment of weights based on the representativeness of the sampled points serves to refine the estimation of sample entropy in each round, thereby enhancing the overall precision of the algorithm. This approach aims to ensure that the sampling process more accurately reflects the inherent characteristics of the dataset.

In the realm of decision-making under limited resources, the multi-armed bandit problem represents a classic challenge in reinforcement learning, involving a series of choices with the goal of maximizing cumulative rewards. The UCB strategy emerges as a crucial approach for tackling the multi-armed bandit problem. Its central concept revolves around dynamically assessing the potential value of each choice [24,25], seeking a balance between the exploration of unknown options and the exploitation of known ones.
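For readers unfamiliar with the bandit setting, the following Python sketch shows the classical UCB1 rule, in which each arm's index is its empirical mean reward plus an exploration bonus that shrinks as the arm is pulled more often. This is the textbook selection rule, given only as background; the function name `ucb1`, the argument `reward_fns` (a list of zero-argument callables returning rewards), and the default exploration constant are choices made for this example, and the rule is not the weighting scheme used by UCBMCSampEn2D.

```python
import math


def ucb1(reward_fns, rounds, c=math.sqrt(2)):
    """Run UCB1 for a number of rounds; return pull counts and mean rewards."""
    k = len(reward_fns)
    counts = [0] * k
    means = [0.0] * k
    for t in range(1, rounds + 1):
        if t <= k:
            arm = t - 1  # initialisation: pull every arm once
        else:
            # Index = empirical mean (exploitation) + confidence bonus (exploration)
            arm = max(range(k),
                      key=lambda i: means[i] + c * math.sqrt(math.log(t) / counts[i]))
        reward = reward_fns[arm]()
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]  # incremental mean
    return counts, means
```

With two arms that always pay 0.2 and 0.8, for instance, the rule pulls the weaker arm only occasionally, when its growing exploration bonus temporarily outweighs the gap in mean reward.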

At the core of the UCB strategy is the principle of making selections based on the upper confidence bounds. In this strategy, each arm represents a potential choice, and the true reward value of each arm remains unknown. In Algorithm 2, we refer to the process executed from step 5 to step 6 as one epoch. Under the UCB strategy, we conceptualize each epoch as an arm, which means that epochs represent arms, and dynamically update its upper confidence bound based on a designated reward function for each epoch. The UCB strategy involves the following three steps at each time step.

Firstly, the average reward for each round of epochs is calculated by

where signifies the average reward for the i-th round. In our design, each epoch is utilized only once per round, thus we set for all . Here, represents the reward for the current epoch, estimated from historical data.

To design , we define the following notations. For all , let be the average of the sample entropy of the preceding rounds, , be the entropy computed for the ongoing round, and , where . Considering that different images inherently possess distinct entropies, we introduce the error ratio to characterize the error situation for the i-th round. This ratio is then used as an input to the reward function . In formulating the reward function, our objective is to assign higher rewards to rounds where errors are closer to 0. Multiple choices exist for defining the reward function R, provided that it meets this design objective; the cosine function and the normal distribution function, among others, are viable options. Then, we set the average reward for the i-th round of epochs as

where a is a scaling factor for the reward and b controls the scale of .

Secondly, we calculate the upper confidence bound for each round of epochs by

where represents the upper confidence bound for the i-th round and set as a constant equal to 1, we use a parameter c to control the degree of exploration. Denote , which is the set of UCB.

Thirdly, for the set , we employ the softmax function to determine the proportional weight each epoch round should have, thereby replacing the average proportion used in MCSampEn2D. We then calculate and for the UCBMCSampEn2D by

where and the UCBMCSampEn can be calculated by

The pseudocode for the entire UCBMCSampEn2D is outlined in Algorithm 3.

Algorithm 3. Monte Carlo sample entropy based on UCB strategy

Sample numbers and epoch numbers .
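The three steps above (reward, upper confidence bound, softmax weighting) can be sketched in Python as follows. The concrete forms used here are assumptions chosen for illustration and should not be read as the exact formulas of Algorithm 3: the running mean of the per-round entropies over rounds 1 to i serves as the reference entropy, the error ratio is the relative deviation from that mean, the reward is `a * cos(b * ratio)`, and the bound is the reward plus `c * sqrt(ln(i) / N_i)` with `N_i = 1`. The inputs `a_counts` and `b_counts` are hypothetical names for the per-round match counts of the larger and smaller templates, all assumed positive.

```python
import math


def ucb_weighted_entropy(a_counts, b_counts, a=1.0, b=1.0, c=1.0):
    """Combine per-round match counts with softmax weights over UCB values."""
    ucbs = []
    entropy_sum = 0.0
    for i, (cnt_a, cnt_b) in enumerate(zip(a_counts, b_counts), start=1):
        e_i = -math.log(cnt_a / cnt_b)  # entropy of the current round
        entropy_sum += e_i
        e_bar = entropy_sum / i         # assumed reference: mean of rounds 1..i
        ratio = (e_i - e_bar) / e_bar if e_bar != 0 else 0.0
        reward = a * math.cos(b * ratio)  # assumed reward, largest at ratio 0
        # Each round is used once (N_i = 1); c scales the exploration term.
        ucbs.append(reward + c * math.sqrt(math.log(i) / 1))
    # Numerically stable softmax over the UCB values gives the round weights.
    top = max(ucbs)
    exps = [math.exp(u - top) for u in ucbs]
    total = sum(exps)
    weights = [e / total for e in exps]
    a_bar = sum(wt * cnt for wt, cnt in zip(weights, a_counts))
    b_bar = sum(wt * cnt for wt, cnt in zip(weights, b_counts))
    return -math.log(a_bar / b_bar), weights
```

Replacing the softmax weights with the uniform weight 1/len(a_counts) recovers the plain averaging of MCSampEn2D, which makes the two schemes easy to compare on the same per-round counts.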

Because the averaging strategy in the MCSampEn method does not consider the varying importance of sampling points across different epochs, it can result in significant errors. Although the UCB strategy introduces bias [26], it can mitigate the errors introduced by the averaging strategy, transforming the uniform strategy in the original method into an importance-based strategy. This adjustment aligns the sampling more closely with the actual characteristics of the data.

3. Experiments

This section evaluates the effectiveness of the UCBMCSampEn2D algorithm on images from several domains. All experiments were carried out on a Linux platform with an Intel(R) Xeon(R) Gold 6248R processor running at a clock frequency of 3.00 GHz.

3.1. Datasets

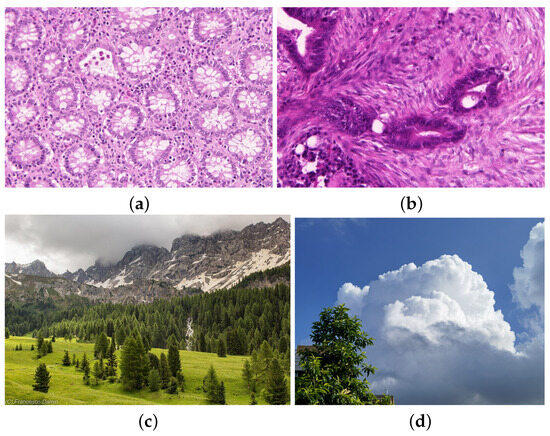

To facilitate a thorough investigation, our experiments incorporate a range of sequences characterized by distinct features. These sequences are categorized primarily into datasets encompassing medical image data and natural image data. The medical image dataset, named Warwick QU dataset, is derived from the Colon Histology Images Challenge Contest for Gland Segmentation (GlaS), organized by MICCAI 2015, where participants developed algorithms for segmenting benign and diseased tissues [27]. It contains 165 samples extracted from H&E-stained colon histology slides. The slides were derived from 16 different patients, from which malignant and benign visual fields were extracted. The dataset example is illustrated in Figure 1.

Figure 1.

Examples of reference images include: images (a,b) in the Warwick QU Dataset, which represent benign and malignant cases, respectively, each with a size of ; image (c) is a natural image with a size of ; and image (d), called the wallpaper, with a size of , is used to verify the method’s performance on large-scale data.

3.2. Main Results

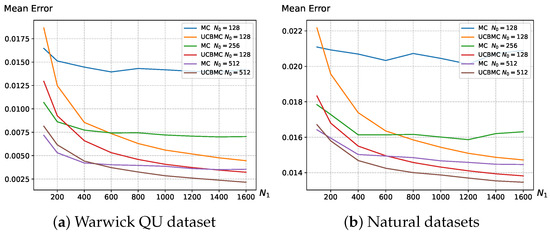

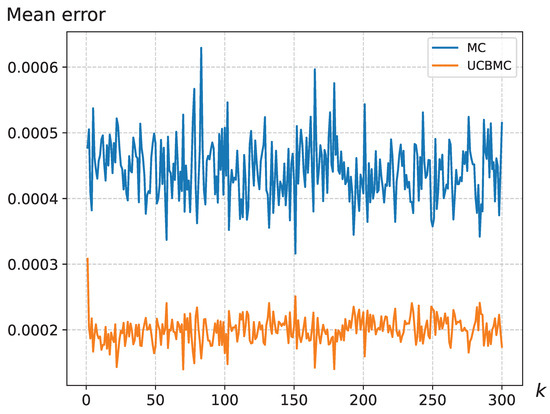

In this section, we validate the effectiveness of the UCBMCSampEn2D, comparing its computational time and computational error with the MCSampEn2D algorithm. Figure 2 illustrates the variation in entropy mean error with the number of epochs using sampling points on the Warwick QU dataset and natural image dataset. The formula for calculating the mean error is as follows:

where W represents the number of images in the dataset, is the entropy of the i-th image calculated using the direct algorithm, and is the entropy of the i-th image calculated using the MCSampEn2D (or UCBMCSampEn2D) algorithm. The results demonstrate that the UCBMCSampEn2D algorithm converges more quickly, significantly reducing the error in comparison to the MCSampEn2D algorithm.
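The mean error over a dataset can be computed along the following lines. This sketch assumes that the per-image error is the absolute difference between the directly computed entropy and the Monte Carlo estimate; the function name `mean_error` and its argument names are hypothetical.

```python
def mean_error(exact, estimated):
    """Average absolute difference between exact and estimated entropies."""
    if not exact or len(exact) != len(estimated):
        raise ValueError("inputs must be non-empty and of equal length")
    # One absolute error per image, averaged over the W images in the dataset
    return sum(abs(e - s) for e, s in zip(exact, estimated)) / len(exact)
```

For example, `mean_error([1.0, 2.0], [1.5, 1.5])` averages two errors of 0.5 each.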

Figure 2.

(a) depicts the average error variation in MCSampEn2D and UCBMCSampEn2D experiments on the Warwick QU dataset with changing , where parameters are set to and ; (b) depicts the average error variation in MCSampEn2D and UCBMCSampEn2D experiments on natural datasets with changing , where parameters are set to , , and . The reward function is set as the cosine function.

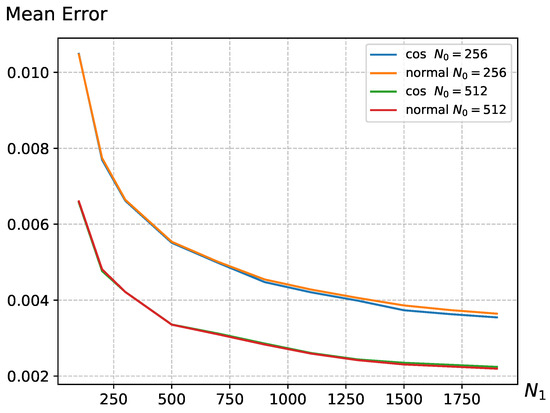

In Equation (6), we discussed that the reward function R offers flexibility, providing multiple choices to meet the design requirements of the scenario. In Figure 3, we conducted experiments using two different reward functions, and the results indicate that, with reasonable parameter settings, different reward functions R exhibit similar trends in average error changes. This suggests that the UCB strategy has a certain degree of generality and is not confined to specific forms. The remaining experiments were all conducted using the cosine function.

Figure 3.

The average error variation in different reward function R with changing on the Warwick QU dataset, where parameters are set to and . The parameters for the cosine function in the reward function are set as and , while for the normal distribution function, the parameters are set as and .

Figure 2 provides a detailed view of the situation with sampling points set at . Based on the experimental results, it is evident that the UCBMCSampEn2D algorithm demonstrates more rapid convergence with an increase in the number of experiments () compared to the MCSampEn2D. In Figure 2, with , the UCBMCSampEn2D algorithm initially exhibits a larger error during the first 150 rounds of experiments. However, as the number of experiments increases, the UCBMCSampEn2D algorithm quickly reduces the error and achieves a lower convergence error than the MCSampEn2D. This substantial improvement in accuracy is consistently observed across various values of .

This phenomenon can be explained by the construction in Section 2.3, where the first i rounds of epochs are utilized to calculate the average entropy, simulating the true entropy. When i is small, there is not enough historical data, so the average entropy at this point carries a relatively large error. However, since the entropies calculated in these first i rounds are relatively close to one another, the reward obtained for these initial rounds in (6) tends to be too high, leading to a larger error. As i increases, the average entropy more closely approximates the true entropy, and the weights assigned subsequently reflect the real situation more accurately, thereby enabling the algorithm to converge more effectively.

Table 1 details the specifics of the UCBMCSampEn2D algorithm. Throughout the experiment, we maintained a consistent template length of and a fixed similarity threshold of . Adjustments were made only to the number of sampling points, , and the number of epochs, .

Table 1.

The mean error comparison among different algorithms under the same amount of time. The UCB parameters were set at and .

In Table 2, under identical time constraints and with the same values of and , the UCBMCSampEn2D algorithm demonstrates an error that is only a fraction of the error observed with the MCSampEn2D algorithm when is small. Additionally, when is large, the UCBMCSampEn2D algorithm consistently outperforms the MCSampEn2D algorithm in terms of error reduction.

Table 2.

The comparison of time and error for image (d) in Figure 1 under different methods.

We can see that the MCSampEn2D algorithm has a significantly improved computation speed compared to the traditional SampEn2D, with an acceleration ratio exceeding a thousand-fold. Additionally, we conducted a time comparison between the UCBMCSampEn2D algorithm and the MCSampEn2D algorithm, setting the error below . The UCBMCSampEn2D algorithm also demonstrated advantages, as shown in Table 2. In comparison to the MCSampEn2D algorithm, the UCBMCSampEn2D algorithm reduced the computation time by nearly . Moreover, for larger-sized sequences, the UCBMCSampEn2D algorithm exhibited a significant advantage over the MCSampEn2D algorithm in terms of computation time and error.

Simultaneously, we conducted numerical experiments on randomly generated binary images with a size of . The generation function [20] had a parameter . The results are shown in Table 3. Our method continues to demonstrate advantages even in the presence of high data randomness.

Table 3.

The comparison of time and error for randomly generated binary images under different methods.

4. Discussion

4.1. Analysis of the UCB Strategy

In Section 2.2, we observed that the MCSampEn2D algorithm utilizes a random sampling method to select points and computes sample entropy across epoch numbers by averaging the outcomes. The chosen points’ proximity to key image features affects their representativeness for the entire image, thereby influencing their relative importance. When sampled points accurately capture critical information, the error in that epoch number is reduced, resulting in sample entropy values that more closely approximate the true entropy. On the other hand, if the points fail to effectively capture information in the image, the resultant error in that round is magnified. The MCSampEn2D algorithm, which simply averages results across all rounds without weighing their importance, is adversely affected by these variations. This situation results in larger errors during convergence, particularly influenced by the number of sampled points . Additionally, due to its inherent random sampling method, the standard deviation of the MCSampEn2D algorithm’s results varies significantly with each epoch, leading to slower convergence and extended computation times.

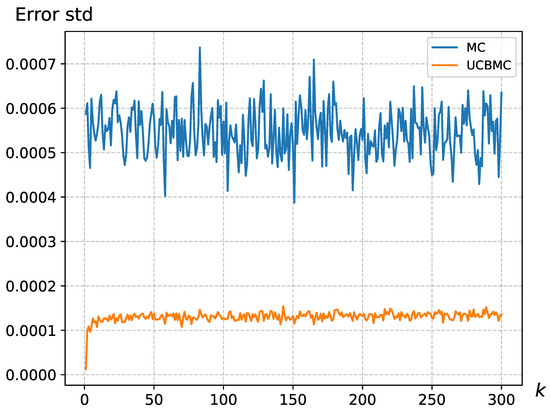

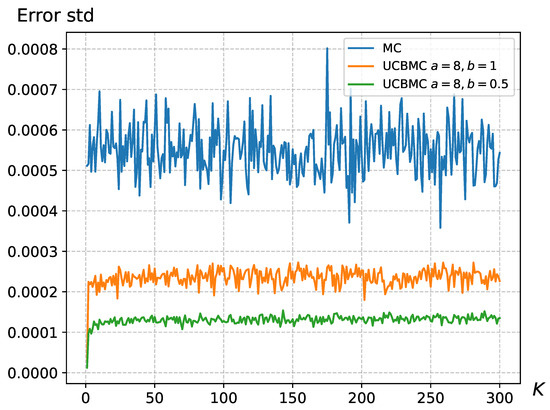

We have addressed the MCSampEn2D algorithm’s limitation in accurately reflecting the importance of epochs by integrating the UCB strategy. This strategy assigns significance to different epochs, thus modulating their individual impact on the final result. To compare the effectiveness of these approaches, we conducted 50 epochs each for MCSampEn2D and UCBMCSampEn2D using images from a natural dataset, specifically of size . We calculated the average and standard deviation of the error for the k-th round (). The results, displayed in Figure 4 and Figure 5, reveal that the standard deviation of MCSampEn2D shows significant fluctuations across different rounds, while UCBMCSampEn2D maintains a more consistent performance. Furthermore, the error values for MCSampEn2D are consistently higher compared to those of UCBMCSampEn2D. This demonstrates that UCBMCSampEn2D not only achieves smaller errors than MCSampEn2D within the same timeframe but also effectively mitigates the issue of MCSampEn2D’s inability to adequately capture the importance of each epoch.

Figure 4.

The error standard deviation variation for the wallpaper, where , , where the UCB parameters were set at and .

Figure 5.

The mean error variation for the wallpaper, where the UCB parameters were set at and .

Furthermore, we observed larger errors in the initial rounds of epochs with UCBMCSampEn2D. This can be attributed to the fact that the average entropy, serving as a temporary anchor, does not initially consider the historical context. Consequently, this leads to an overly high reward, , in Equation (6) which, in turn, causes the confidence bound, , in Equation (7) to be inconsistent. As a result, this inconsistency contributes to larger errors in the early rounds of epochs.

4.2. The Impact of Parameters on the UCB Strategy

In Section 2.3, where we introduced the formula for UCBMCSampEn2D, it was observed that the parameters a and b significantly impact the convergence speed and error of the algorithm. Since in (6) reflects the proportion of bias, an unreasonable bias proportion could render the reward function ineffective. We conducted 50 experiments using wallpaper images and computed the standard deviation of the error for the k-th round (), as shown in Figure 6. The results in Figure 6 demonstrate that the effectiveness of UCBMCSampEn2D is influenced by the parameters a and b. Appropriate selection of the values for a and b can reduce the standard deviation of the errors. Based on our experimental tests, we recommend using and . However, adjustments may still be necessary based on the image size and the number of sampling points .

Figure 6.

The error standard deviation variation for the wallpaper, where and .

4.3. The Application of Sample Entropy in Medical Image Dataset

In the Warwick QU dataset, all pathological slices can be categorized into two classes: Benign and Malignant. We computed the entropy using the UCBMCSampEn2D algorithm for the dataset and utilized an SVM for training and classification, yielding the results presented in Table 4. It is evident that the entropy calculated by the UCBMCSampEn2D algorithm exhibits distinct trends for the two types of pathological slices, demonstrating potential in pathological slice diagnosis and positioning it as a viable feature for aiding future work in this field. The findings suggest that sample entropy can serve as a valuable supplementary characteristic in the context of pathological diagnosis.

Table 4.

The UCBMCSampEn2D results for some different categories of pathological slices in Warwick QU dataset.

5. Conclusions

This paper introduces two accelerated algorithms for estimating two-dimensional sample entropy, termed MCSampEn2D and UCBMCSampEn2D. These algorithms were tested on both medical and natural image datasets. The study’s contributions are as follows. First, the MCSampEn2D algorithm, an extension of the MCSampEn algorithm, substantially improves the computational efficiency for two-dimensional sample entropy. Second, we examine the convergence challenges faced by the MCSampEn2D algorithm and adopt the UCB strategy to mitigate them. This strategy, as applied in our study, accounts for the varying significance of different epochs, with its upper confidence bounds reflecting this importance. The experiments detailed in Section 3 validate the efficacy of both the MCSampEn2D and UCBMCSampEn2D algorithms.

Overall, due to the UCBMCSampEn2D algorithm’s impressive performance in computing sample entropy, it demonstrates considerable promise for analyzing diverse images while minimizing computational time and error.

Author Contributions

Conceptualization, Y.J.; methodology, Y.J. and Z.Z.; software, Z.Z. and W.L.; validation, Y.J. and Z.Z.; formal analysis, Y.J. and Z.Z.; investigation, Y.J.; writing—original draft preparation, Z.Z.; writing—review and editing, Y.J., Z.Z., R.W. and W.G.; visualization, Z.Z. and Z.L.; supervision, Y.J.; project administration, Y.J.; funding acquisition, Y.J. and W.G. All authors have read and agreed to the published version of the manuscript.

Funding

Project supported by the Key-Area Research and Development Program of Guangdong Province, China (No. 2021B0101190003), the Natural Science Foundation of Guangdong Province, China (No. 2022A1515010831) and the National Natural Science Foundation of China (No. 12101625).

Data Availability Statement

The numerical experiments in this work utilize the following datasets: Kaggle Dataset Landscape Pictures is available at https://www.kaggle.com/datasets/arnaud58/landscape-pictures; Warwick QU Dataset is available at https://warwick.ac.uk/fac/cross_fac/tia/data/glascontest/download; Wall paper is available at https://t.bilibili.com/894092636358443014.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Shannon, C.E. A Mathematical Theory of Communication. Assoc. Comput. Mach. 2001, 5, 1559–1662.

- Richman, J.S.; Moorman, J.R. Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. Heart Circ. Physiol. 2000, 278, H2039–H2049.

- Pincus, S.M. Approximate entropy as a measure of system complexity. Proc. Natl. Acad. Sci. USA 1991, 88, 2297–2301.

- Tomčala, J. New fast ApEn and SampEn entropy algorithms implementation and their application to supercomputer power consumption. Entropy 2020, 22, 863.

- Rostaghi, M.; Azami, H. Dispersion entropy: A measure for time-series analysis. IEEE Signal Process. Lett. 2016, 23, 610–614.

- Li, Y.; Li, G.; Yang, Y.; Liang, X.; Xu, M. A fault diagnosis scheme for planetary gearboxes using adaptive multi-scale morphology filter and modified hierarchical permutation entropy. Mech. Syst. Signal Proc. 2017, 105, 319–337.

- Yang, C.; Jia, M. Hierarchical multiscale permutation entropy-based feature extraction and fuzzy support tensor machine with pinball loss for bearing fault identification. Mech. Syst. Signal Proc. 2021, 149, 107182.

- Li, W.; Shen, X.; Yaan, L. A comparative study of multiscale sample entropy and hierarchical entropy and its application in feature extraction for ship-radiated noise. Entropy 2019, 21, 793.

- Aboy, M.; Cuesta-Frau, D.; Austin, D.; Mico-Tormos, P. Characterization of sample entropy in the context of biomedical signal analysis. In Proceedings of the 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; pp. 5942–5945.

- Jiang, Y.; Mao, D.; Xu, Y. A fast algorithm for computing sample entropy. Adv. Adapt. Data Anal. 2011, 3, 167–186.

- Mao, D. Biological Time Series Classification via Reproducing Kernels and Sample Entropy. Ph.D. Thesis, Syracuse University, Syracuse, NY, USA, 2008.

- Schreiber, T.; Grassberger, P. A simple noise-reduction method for real data. Phys. Lett. A 1991, 160, 411–418.

- Theiler, J. Efficient algorithm for estimating the correlation dimension from a set of discrete points. Phys. Rev. A Gen. Phys. 1987, 36, 4456–4462.

- Manis, G. Fast computation of approximate entropy. Comput. Meth. Prog. Bio. 2008, 91, 48–54.

- Manis, G.; Aktaruzzaman, M.; Sassi, R. Low computational cost for sample entropy. Entropy 2018, 20, 61.

- Wang, Y.H.; Chen, I.Y.; Chiueh, H.; Liang, S.F. A Low-Cost Implementation of Sample Entropy in Wearable Embedded Systems: An Example of Online Analysis for Sleep EEG. IEEE Trans. Instrum. Meas. 2021, 70, 9312616.

- Liu, W.; Jiang, Y.; Xu, Y. A Super Fast Algorithm for Estimating Sample Entropy. Entropy 2022, 24, 524.

- Garivier, A.; Moulines, E. On upper-confidence bound policies for switching bandit problems. In Proceedings of the International Conference on Algorithmic Learning Theory, Espoo, Finland, 5–7 October 2011; pp. 174–188.

- Anderson, T. Towards a theory of online learning. Theory Pract. Online Learn. 2004, 2, 109–119.

- Silva, L.E.V.; Senra Filho, A.C.S.; Fazan, V.P.S.; Felipe, J.C.; Murta, L.O., Jr. Two-dimensional sample entropy: Assessing image texture through irregularity. Biomed. Phys. Eng. Express 2016, 2, 045002.

- da Silva, L.E.V.; da Silva Senra Filho, A.C.; Fazan, V.P.S.; Felipe, J.C.; Murta, L.O., Jr. Two-dimensional sample entropy analysis of rat sural nerve aging. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 3345–3348.

- Audibert, J.-Y.; Munos, R.; Szepesvári, C. Exploration–exploitation tradeoff using variance estimates in multi-armed bandits. Theor. Comput. Sci. 2009, 410, 1876–1902.

- Zhou, D.; Li, L.; Gu, Q. Neural contextual bandits with UCB-based exploration. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 11492–11502.

- Gupta, N.; Granmo, O.-C.; Agrawala, A. Thompson sampling for dynamic multi-armed bandits. In Proceedings of the 2011 10th International Conference on Machine Learning and Applications and Workshops, Honolulu, HI, USA, 18–21 December 2011; Volume 1, pp. 484–489.

- Cheung, W.C.; Simchi-Levi, D.; Zhu, R. Learning to optimize under non-stationarity. In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics, Naha, Japan, 16–18 April 2019; pp. 1079–1087.

- Xu, M.; Qin, T.; Liu, T.-Y. Estimation bias in multi-armed bandit algorithms for search advertising. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013.

- Sarwinda, D.; Paradisa, R.H.; Bustamam, A.; Anggia, P. Deep learning in image classification using residual network (ResNet) variants for detection of colorectal cancer. Procedia Comput. Sci. 2021, 179, 423–431.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).