Abstract

We consider information dissemination over a network of gossiping agents. In this model, a source keeps the most up-to-date information about a time-varying binary state of the world, and n receiver nodes want to follow the information at the source as accurately as possible. When the information at the source changes, the source first sends updates to a subset of nodes. Then, the nodes share their local information during the gossiping period, to disseminate the information further. The nodes then estimate the information at the source, using the majority rule at the end of the gossiping period. To analyze the information dissemination, we introduce a new error metric to find the average percentage of nodes that can accurately obtain the most up-to-date information at the source. We characterize the equations necessary to obtain the steady-state distribution for the average error and then analyze the system behavior under both high and low gossip rates. We develop an adaptive policy that the source can use to determine its current transmission capacity m based on its past transmission rates and the accuracy of the information at the nodes. Finally, we implement a clustered gossiping network model, to further improve the information dissemination.

1. Introduction

Motivated by many applications—such as autonomous vehicular systems, content advertising on social media, and city emergency-warning systems—information dissemination over the networks has gained significant attention. For instance, in the case of autonomous vehicular systems or city emergency-warning systems, timely critical information, such as accident alerts or tornado warnings, needs to be disseminated as quickly and as accurately as possible. As another example, companies often want to let their potential customers know about their latest products through advertisements over social media. In both of these examples, there is a single information source where the most up-to-date information is disseminated to multiple receivers over time.

In this paper, we consider a communication system with a source and n receiver nodes. The source keeps the most recent information about the state of the world, which takes binary values 0 or 1, and changes according to an exponential distribution. Upon each information update, the source wants to let the receiver nodes know about the most recent information. As the source has limited transmission capacity, it cannot send information to more than nodes, and each information transmission at the source takes an exponentially distributed length of time. After sending updates to m nodes, in order to further disseminate information, local information is shared between each pair of receiver nodes, a process we shall refer to as gossiping. The gossiping period continues until the information at the source is updated again. At the end of each gossiping period, each receiver node that did not get the most recent information directly from the source comes up with an estimate based on the majority of the information it received from the other nodes. In order to measure the accuracy of the information dissemination at the end of each update cycle, we consider an error metric that takes value 1 for a receiver node that has a different estimate compared to the information at the source.

1.1. Related Work

In the gossip-network literature, a model where only one node tries to spread its information to the entire network was considered in [1] and named single-piece dissemination. Multi-piece spreading, where all nodes try to spread their individual information to the remaining nodes, was studied in [2]. Moreover, the problem of finding the average of all nodes’ initial information in a gossip network was studied under the framework of distributed averaging in [3,4]. The main goal of these works was to analytically characterize either the information spreading time [1,2] or the averaging time [3,4] in the entire network. In all these settings, the information was considered to be static, i.e., it did not change over time.

Reference [5] considered the problem of gossiping dynamic information. As the gossip network may consist of asynchronous agents where there is no central clock, in order to maintain the information flow in the gossip network, timestamping is a commonly used technique, where the agents keep the generation time of their local status updates [6]. During the gossiping phase, information updates among the agents are determined based on whoever has the largest timestamp of particular information, which indicates the information freshness of the local agents.

In another line of research, to measure information freshness, the age of information was defined as the difference between the current time and the timestamp of the last status update received by the agents. For a more detailed review of the age of information, we refer to [7,8]. Recently, scaling of the age of information was considered in gossip networks [9,10,11]. In [9], the stochastic hybrid system (SHS) method was used to characterize the version age in arbitrarily connected gossip networks, and scaling of the version age was studied in the symmetric ring and fully connected networks. By using the idea of clustering, scaling of the age of information was further improved in [10]. Then, scaling of the binary freshness metric [12,13,14], which takes either the value 1 when the information is fresh, or 0 otherwise, was studied in the gossip networks in [11].

In all these aforementioned works, the timestamp of the information plays a critical role in determining information dissemination in gossip networks. As the timestamp of the information increases, as new versions of the information are generated, either the size grows without bound—in which case, the agents spend most of their capacity in exchanging large numbers and comparing the values of these large timestamps [5], to determine the freshest information—or, in the case of a bounded timestamp, when overflow happens, the order for information freshness can be lost [6]. In certain applications, an external adversary may interrupt the information flow and alter the timestamp of the information, such that the older versions of the information may be re-branded as fresh information [15]. Recently, the effect of timestomping on age scaling was explored in [16].

Unlike the earlier works on gossip networks, as in [1,2], we considered in this paper a time-varying information source and, instead of tracking the information-spreading time, we studied the average percentage of the nodes that had access to the most recent information at the source before it was updated. Compared to the dynamic information dissemination in [6], in our work we did not use the timestamps of the information. Instead, to maintain the information flow, we used instantaneous signaling from the source to the nodes, to synchronize the nodes. We implemented an information updating mechanism consisting of two phases: in the first phase, only the source could send updates to m nodes; in the second phase, i.e., in the gossiping phase, only the nodes could share their local information. Thus, in the gossiping phase, incorrect information in the network could also spread. Works [9,10,11] considered the age of information in gossip networks, where each information update at the source was treated as a new update. In our work, on the other hand, we considered a binary dynamic information source. Thus, the content of the information affected our error metric. Furthermore, in [9,10,11], the nodes updated their information only if they received fresher information. By contrast, in our work, the nodes that did not receive any update directly from the source made decisions based on the majority of the updates that they received from the other nodes. As a result, the error metric and the information updating model that we considered differed from the earlier works in [9,10,11].

The binary information structure appears in real-world applications such as robotic networks, where a group of robots working on a horizontal line wants to decide whether a neighbor robot is in front of the group or behind it. Here, the binary information structure is sufficient to represent the relative position of the robots in the network. Inspired by this example, by using only the sign of the relative states, reference [17] considered a decentralized-online-convex-optimization problem with time-varying loss functions, and reference [18] solved a distributed discrete-time optimization over multi-agent networks. As another notable example, reference [19] considered a model where the actual opinion of the public evolved as a continuous variable in but the expressed opinions took only discrete binary values that resembled the opinion polls. Motivated by all these examples, in our work, we focused our attention on binary information dissemination as an initial step in analyzing the role of gossiping in information dissemination.

Finally, dissemination of misinformation on social networks has attracted significant interest in recent years. The network immunization problem has been considered, to prevent the diffusion of harmful information that can infect the network [20,21,22]. More specifically, reference [20] proposed an algorithm that utilizes the community structure for network immunization. Reference [21] proposed a comprehensive solution for the immediate detection and containment of harmful content, aiming to curb its propagation across the network. Reference [22] applied deep neural networks to develop context-aware algorithms that can detect fake news.

1.2. Contributions

In this work, we first characterize the equations necessary to obtain the steady-state distribution of the average error (which was also appeared in our preliminary work in [23]). Then, we provide analytical results for the high and low gossip rates. When the gossip rate is high, we show that the probability of obtaining correct information converges to a step function where if the majority of the nodes have the correct information then all the nodes are able to estimate the information correctly with probability 1. In other words, as the gossip rate increases, the information at all nodes becomes mutually available to them, and all the nodes in the network behave like a single node. However, when the gossip rate is low, the gossiping phase can be approximated by either not receiving any updates, in which case the nodes hold on to their prior information, or receiving a single update. Based on this approximation, we characterize analytically the gain obtained through gossiping, and we find an adaptive selection policy for the source, which suggests that the source should send updates to more nodes when the nodes have mostly incorrect information. Then, to further reduce the average error, we implement the idea of clustering, where, instead of sending information to all nodes, the source sends its information only to a smaller number of cluster heads. Then, the cluster heads forward the information to nodes within their clusters. For this network model, we characterize the equations to find the long-term average error at the cluster heads and at the nodes in the clusters. Finally, we provide extensive simulations, to illustrate the effect of gossiping and clustering on information dissemination.

2. System Model and Problem Formulation

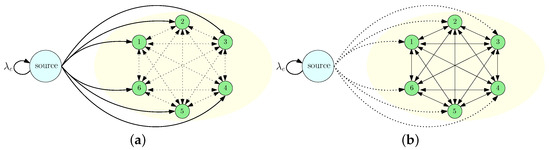

We considered an information updating system consisting of a source and n receiver nodes, as shown in Figure 1. The source kept the most up-to-date information about a state of the world that took binary values of 0 or 1. The information at the source was updated following a Poisson process with rate . We defined the time interval between the jth and th information update at the source as the jth update cycle and denoted it by . We assumed that the source was able to send instantaneous signals to the nodes. After receiving these signals, the nodes knew that information at the source had been updated, but they did not know what information had been realized at the source. Such instantaneous signalings exist in many practical systems. For example, consider a news provider making news either to support or oppose a topic of interest. After the news is published, the news provider can send an instantaneous notification to its subscribers about the occurrence of the news through push notifications on smart devices or headlines in TV broadcasts or their websites. However, after receiving these notifications, individuals still do not know the actual update until they enter the news provider’s website or watch the TV broadcast. As another application, consider a city emergency-warning system, or anomaly detection in security applications where warning signals can occur over time. As warning signals can also happen due to false alarms, upon receiving such warning signals, individuals do not know whether there is an actual anomaly or not, until further test results can confirm the actual status. Thus, motivated by the aforementioned examples, we utilized the synchronization signal, which can indicate the information update at the source but does not provide any information about the source’s state realization.

Figure 1.

A communication system that consists of a source and fully connected n nodes where (a) only the source sends updates to the nodes, and (b) the nodes share their local information, called the gossiping phase.

We denoted the information at the source at update cycle j as . For a given , the information at the source at the th update cycle was equal to with probability and to with probability p, i.e.,

for all j, where . As , the nodes kept their state estimation unchanged whenever a new update cycle started. (Our results are extendable to the setting where . In this case, the optimal decision taken by each node should be to revert their belief at the beginning of each update cycle.)

The source updated each receiver node according to a Poisson process with rate . In this system, in addition to the update arrivals from the source, each node can share its local information with the other nodes, a process called gossiping. Specifically, in this work, we considered a fully connected network where each node was connected to every other node with equal update rates. The total update rate of a node was . Thus, in this network, each node updated its neighbor nodes following a Poisson process with rate . We denoted the information at node i at update cycle as . The nodes wanted to follow the most up-to-date information prevailing at the source as accurately as possible, based on the updates received from the source as well as from the neighbor nodes during an update cycle.

In this paper, we considered an information updating mechanism where at the beginning of each update cycle the source sent its current information to m nodes where , as shown in Figure 1a. Here, we assumed that the source knew (or was able to sense/monitor) the information prevailing at the nodes and, thus, it sent updates to the nodes that carried different information compared to the source. (This approach was motivated especially by online advertisements, whereby companies such as Amazon and Google are able to monitor whether a potential customer is interested in their target products by the customer’s search, view, and click history and, thus, present their advertisements accordingly. They can sense the final opinion of their potential customer by observing the potential customer’s behavior, such as buying an advertised product). During this phase, if the information at the source was updated, then another update cycle started and, thus, the jth update cycle could be terminated before sending updates to m nodes. If the source sent updates to m nodes, it sent another instantaneous signal to start the gossiping among the nodes. Then, we entered the gossiping phase in the update cycle . During the gossiping phase, illustrated in Figure 1b, the nodes shared their local information with one another. When the information at the source was updated, the gossiping phase ended. At the end of the gossiping period, the nodes that did not get an update directly from the source updated their information based on the majority of the updates they received during the gossiping period. If a node did not get any updates from the source or the other nodes, it kept its local information unchanged. We denoted the information at node i at the end of the gossiping period by . In order to measure the performance of the information dissemination process, we defined the error metric for node i at the update cycle j as

Then, the average estimation error over all the nodes equaled , and the long-term average estimation error over all the nodes was given by

In the next section, we provide detailed analyses to characterize the long-term average error .

3. The Long-Term Average Error

In this section, we characterize the long-term average error . Let us consider a generic update cycle and, for simplicity of presentation, let us drop the index j from the variables in the rest of the analysis. At the beginning of the update cycle, we denote the number of nodes that have the same information as the source by . In this phase, either the source sends an update to a node after an exponential time with the rate or the information at the source is updated after an exponential time with the rate . Thus, the source sends an update to a node with probability or the information at the source is updated and the next update cycle starts with probability . Therefore, during a typical update cycle I with , the source sends updates with the following probability mass function (pmf):

Similarly, if , we have

For an update cycle with , the network enters the gossiping phase with probability , which decreases with m. In other words, choosing a large m decreases the probability of entering the gossiping phase. On the other hand, choosing a small m results in sending updates to a small number of nodes and, thus, in the gossiping phase, incorrect information can be spread. Therefore, there is an optimal m that achieves the smallest average error .

If the source sends updates to m nodes before the information at the source is updated, then the gossiping phase starts. During the gossiping phase, either each node receives an update from the other nodes after an exponential time with rate or the information at the source is updated after an exponential time with rate . As in [24], in the gossiping phase, node i receives updates with the following pmf:

In other words, has geometric distribution with parameter , i.e., .

At the beginning of the gossiping phase, there are nodes with the same information as the source and nodes with incorrect information. For the nodes with , conditioned on the total number of updates that they received during the gossiping phase, the distribution of the number of updates that are equal to is given by

where is a random variable denoting the number of updates that are equal to . In other words, for a node i that has , conditioned on , the random variable has a binomial distribution with parameters , i.e., . Similarly, for the nodes i with , we have

At the end of the gossiping period, based on the majority of the updates, the nodes i that have as their prior information estimate the information at the source as with probability , which is given by

We note that the first summation term in (9) corresponds to the case where a node receives a strictly higher number of during the gossiping period. The second summation term in (9) refers to the case where a node receives an equal number of and . In this case, a node estimates the information as either or with equal probabilities. If a node does not get any updates during the gossiping phase, it keeps its current information that is given by the last term in (9). Similarly, for a node i that has prior information , we can derive an expression for the probability of updating its information to , denoted by , as

Note that this expression is identical to that in (9), except that in the summations we use the probabilities given in (8) and that is excluded. In the next theorem, we state the long-term average error.

Theorem 1.

Under the proposed gossiping network, the long-term average error Δ is given by

where is provided in (13) and is the row vector of steady-state probabilities of the Markov chain over the state space . The unique stationary distribution is given by the solution of for a stochastic matrix , where the transition probabilities of are given later in (14).

Proof.

We note that at the end of an update cycle with a gossiping phase, m nodes that obtain information directly from the source will have (In the gossiping phase, these nodes send information to other nodes with rate , but they do not update their information based on the updates received from the other nodes). There are N nodes that have prior information . These nodes will update their information to with probability and to with probability . Thus, the total number of nodes that update their information to , denoted by , has the binomial distribution . On the other hand, there are nodes that have prior information . At the end of the gossiping phase, these nodes will update their information to with probability and to with probability . Thus, the total number of nodes that change their information to , denoted by , obeys the binomial distribution . Therefore, at the end of the gossiping period, the total number of the nodes that have is equal to , where has the following pmf:

for , where and .

Next, let us define to be the number of nodes that have the same information with the source at the end of the update cycle , i.e., . If the update cycle ends before entering the gossiping phase, then either or . In these cases, the source sends updates to nodes with probability distributions given in (4) and (5), respectively. If the source is able to send updates to m nodes, then the gossiping phase starts and, as a result, nodes will have with probabilities , where . Thus, the probability distribution of for a given N is given by

With the pmf of as provided in (13), we can fully characterize the transition probabilities of going from N nodes that have at the beginning of an update cycle to nodes that have at the end of that update cycle. Now let us consider a Markov chain over the state space , where by abuse of notation we label the first states by , and the last states by . We can then represent the transition probabilities between different states , using a stochastic matrix , where denotes the probability of moving from state a to state b and is given by

We note that the stochastic matrix in (14) is irreducible, as every state b is accessible from any state a in a finite update cycle duration. As for all a in (14), the Markov chain induced by is also aperiodic. Thus, the above Markov chain admits a unique stationary distribution given by the solution of , such that , . Finally, we characterize the long-term average error among all the nodes by (11). □

In the following section, we proceed to approximate the probabilities and provided in this section, to understand the effect of gossiping better when the gossip rate is low and high compared to the information change rate at the source .

4. Analysis for High and Low Gossip Rates

In this section, we develop approximations for and , which are the probabilities of choosing at the end of a gossiping period when the nodes have the same prior information with the source and when they do not, respectively. First, by assuming sufficiently large n and N, we can approximate the conditional pmfs for given in (7) and (8) by the binomial distribution . Let us denote the corresponding and obtained by substituting this binomial approximation into (9) and (10) by and , respectively. As , for the rest of this section we will only approximate , and can find the probability accordingly. Next, for sufficiently large values of , we can approximate as

where and . We note that (15) is due to the normal approximation of binomial distribution by using the central limit theorem (CLT). In the following proposition, we show that can be approximated by a summation of Q-functions.

Proposition 1.

When λ is sufficiently large compared to , can be approximated by

Proof.

Using the CLT, there exists a sufficiently large K, such that the difference between the probabilities and is smaller than . Then, we have

where , for . The above expression can be further upper-bounded by

As the term can be made smaller than by choosing , the difference between and can be smaller than for every by choosing sufficiently large . □

Next, we show that can be approximated by the summation of Q-functions when is sufficiently large. In the following proposition, we show that as the probability converges to a step function.

Proposition 2.

As the probability converges to a step function given by

Proof.

First, we consider the case when . In this case, we note that is a decreasing function of . Thus, for any arbitrary , there exists an L, such that . Therefore, we have

As for , as in the proof of Proposition 1, by choosing sufficiently large , one can show that . Thus, if , we have .

Next, we consider the case when . As for all x, we have

Note that is a decreasing function of . Thus, for any , there exists a large , such that . Therefore, we can write

Now, similar to the first part of the proof, we can show that by selecting a sufficiently large . Thus, when , we have . Finally, when , we note that the terms in (16) become 0, which implies . □

In Proposition 2, we showed that when the gossip rate is sufficiently large, the nodes start to have access to information from all other nodes. As a result, all the nodes in the network collectively start to behave like a single node, where at the end of a gossiping period the information is updated based on the majority of the information at all nodes. In other words, if the majority of the nodes have the same information as the source, which happens if , all the nodes update their information to and, thus, they will have the same information as the source at the end of the gossiping period. On the other hand, when the majority of the nodes have the incorrect information , which happens if , then all the nodes will have the incorrect information at the end of the gossiping period. Therefore, when the information at the source changes frequently (i.e., is large) and the source has limited total update rate capacity (i.e., is small), a high gossip rate can cause incorrect information to disseminate in the network. As a result, gossiping can be harmful in these scenarios. On the other hand, when the source has high transmission rates, at each update cycle, it is enough for the source to send its information to the number of nodes that achieves the majority, i.e., . After that, the remaining nodes can obtain the correct information during the gossiping phase. Thus, when the source has enough transmission rate, high gossip rates among the nodes can be utilized by sending the updates to at most half the network.

Next, we consider the case in which the gossip rate is relatively low compared to the rate of information change at the source, . When the gossip rate is low, the nodes either do not get any updates, in which case they hold on to their prior information, or they mostly get only one update from the other nodes and, hence, update their information based on the only received update. In the following proposition, we approximate the probability when is low.

Proposition 3.

When λ is sufficiently small, the probability can be approximated by

Proof.

When is sufficiently low, the nodes may not receive any updates or receive a single update packet from the other nodes in the gossiping phase. Thus, the nodes that have the incorrect information as prior information obtain with probability , which is equal to

Next, we consider the difference between and , which is given by

As , we have

Thus, when the gossip rate is sufficiently low compared to , the upper bound on (19) can be made arbitrarily small, making the approximation tight. □

Gossip Gain and an Adaptive Policy for Selecting Transmission Capacity

As a result of gossiping, when is low, the nodes that have the correct information as prior information keep their information as with probability , which is given by . Thus, when is small, the probability can be equivalently approximated by

Therefore, when the gossip rate is low, we have

Thus, at the end of the gossiping period, there are nodes that have the same information as the source . If we consider the system with no gossiping, where only the source can send updates to m nodes, at the end of an update cycle most nodes have the same information as the source. Thus, compared to the system with no gossiping, the gain (error reduction) obtained as a result of gossiping can be computed as

which is obtained by subtracting from and dividing the result by n due to the definition of . Note that the last term in (21) is equal to the probability of entering the gossiping phase.

Let us denote the average error for a system with no gossiping (that is, ) by . If the gossip rate is low, the overall gain obtained from gossiping, , can be approximated by

where is a scaling function, in terms of p, to represent the effect of gossiping on the steady-state distribution .

When the gossip rate among the nodes is low, the gossip gain in (21) depends on the selection of m. Therefore, if the source is allowed to dynamically choose its transmission capacity m in terms of N, a natural choice is to adaptively select an m which maximizes the gossiping gain by solving . Solving this equation in terms of m gives us

where . (We note that has two solutions. The other solution is equal to in (23) except that the square-root term has a positive sign. One can show that this root is always larger than and, thus, cannot be a feasible selection for m). In fact, it is easy to see from (23) that the optimal solution always lies in the range .

When the source has infinite transmission capacity, we have , which suggests that the source should send its information to at most half of the nodes that carry incorrect information. In the other extreme case, when the source’s transmission capacity is equal to 0, we have , in which case the source should not send its information to any other nodes. In general, for a given , in (23) is a decreasing function of N, which means that when N is small, i.e., when most of the nodes have incorrect information, the source should send updates to a higher number of nodes. As N increases, the source should send updates to a smaller number of nodes, as most nodes carry the same information as the source. In the following section, in order to reduce the average error, we implement clustering in the gossip network.

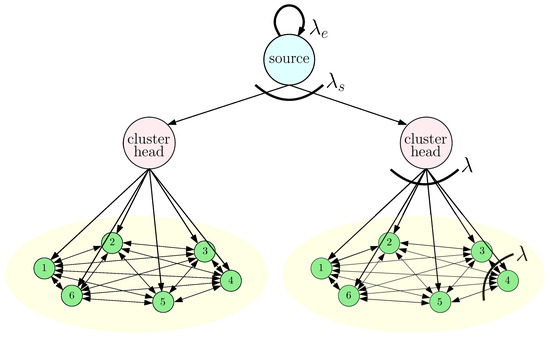

5. Average Error in Clustered Networks

In this section, we explore the idea of clustering in the gossip network, in order to further reduce the average error. As illustrated in Figure 2, the gossip network is partitioned into clusters with equal cluster size , i.e., without loss of generality, we assume that n is divisible by and, thus, we have . Each cluster has a designated cluster head that is connected to the source directly. In the clustered network, when the information at the source is updated, instead of sending updates to individual nodes directly, the source only sends its information to cluster heads that carry different information compared to the source. Thus, the source can send its information to a smaller number of cluster heads with higher update rates. Furthermore, as the cluster heads behave like an information source of each cluster, by using cluster heads, we can increase the total update rates going from the source to the individual nodes while decreasing the total number of connections at the gossip network. The downside of clustering is that if the information is updated during transmission from the source to the cluster heads, information may not be disseminated to individual nodes in the gossip network. Thus, we need to choose the number of cluster heads optimally, to minimize the average error.

Figure 2.

A clustered gossip network that consists of a source and cluster heads and fully connected nodes.

At the update cycle , let the number of cluster heads that have the same information as the source be , where . Before the information at the source is updated again, the source sends updates to cluster heads with the following probability distribution:

If , it means that the information at the source is updated before all the cluster heads obtain the information at the source. In this case, a new update cycle begins and the source starts sending information to the cluster heads again. If all the cluster heads obtain the most current information at the source, then the cluster heads start sending their information to nodes within their corresponding cluster that carries different information compared to the source with a total update rate of . (In this section, we introduce the cluster heads as special nodes of the network, as in [10]. However, using these special nodes may not always be possible, as they may result in additional costs for the system. Considering these factors, we take the total update rate of the cluster heads as , which is the same as that of the regular nodes at the clusters, which means that in the absence of these special nodes, some of the nodes in the gossip network can be used as cluster heads. As all the clusters in the network are identical, we focus on a typical cluster and obtain the average error of a node within that cluster). In a typical update cycle I, we define as the number of nodes that carry the same information at the source. When , a cluster head sends updates to nodes with the following pmf:

If , then we have

When , if the cluster head sends updates to nodes, then the gossiping phase starts and all the nodes within the same cluster share their local information with one another. When the information at the source is updated, the gossiping phase ends, and the nodes that do not get information directly from the cluster head update their information based on the majority of the updates that they received during the gossiping phase.

In the next lemma, for a given state , we provide the expression for the long-term average error at the nodes within the clusters.

Lemma 1.

Under the proposed clustered network structure, for a given , the long-term average error at the nodes within the clusters, denoted by , is given by

where is later provided in (30) and is the row vector of the steady-state distribution of the Markov chain formed over the state space . The unique stationary distribution is given by the solution of for a stochastic matrix , where the transition probabilities of can be derived by replacing , N, and n in (14) by , , and , respectively.

Proof.

For a given , the average error analysis with a clustered gossip network similarly follows from Section 3. During the gossiping phase, node i receives updates with the pmf in (6). Similar to (7) and (8), we can rewrite and by replacing N and m with and , respectively. Then, we can define and as in (9) and (10), respectively. Before starting the gossiping period, nodes have and nodes have as their prior information. At the end of the gossiping period, we have nodes that have the information , where and . The pmf of follows from (12), with and .

Next, we define to be the number of nodes in a cluster that have the same information as the source at the end of an update cycle. For a given , , and , similar to (13), the probability distribution of is given by

When , we have

From (28) and (29), we obtain

Finally, for a given , the states form a Markov chain. Similar to the average error analysis in Section 3, we label the first states by , and the last states by . The stochastic matrix consists of , which denotes the probability of moving from state a to state b, and can be derived by replacing , N, and n in (14) with , , and , respectively. Then, we arrive at a unique stationary distribution that satisfies and . Thus, for a given , we can characterize the long-term average error among all the nodes within the same cluster by (27). □

In the following theorem, we state the long-term average error of the nodes at the clusters and at the cluster heads.

Theorem 2.

Under the proposed clustered network structure, the long-term average error of the nodes at the clusters, denoted by , is given by

where is the row vector of the steady-state distribution of the Markov chain with the state space . The unique stationary distribution is given by the solution of for a stochastic matrix , where the transition probabilities of are given later in (34). Furthermore, the average error of the nodes at the cluster heads, denoted by , is given by

where is provided in (33).

Proof.

In order to obtain the long-term average error , we need to find the probability distribution for . For that, we note that in the clustered network, the information at the source and the number of cluster heads that have the same information at the source, i.e., , also form a Markov chain. During the source’s update transmission to the cluster heads, by using (24) we write the probability distribution for transition to state from state , as follows:

The Markov chain formed by has the states , where we label these states from 1 to , correspondingly. Then, the stochastic matrix , consisting of , which denotes the probability of moving from state a to state b and is given by (34). Then, we can arrive at the unique stationary distribution that satisfies , and , . Finally, by using , we obtain the average error of a node in a cluster in (31).

Similarly, the average error at the cluster heads can be obtained by using (32) with the stationary distribution and in (33). □

In general, the clustered networks can model a system where not all the nodes have access to the source directly. In a way, cluster heads constitute a small group of nodes that have the privilege of accessing the information source directly. These nodes can be considered as paid subscribers to the source, while regular nodes can have free access to the information through these paid subscribers and gossiping. Thus, looking at the average difference between the errors at the cluster heads, , and at the regular nodes, , tells us how much a regular node can increase its quality of information through subscription. We can also imagine the clustered gossip networks in a way such that if every node is connected (subscribed) to the source directly, the information quality at the individual nodes may decrease due to the limited update capacity of the source. Instead, these nodes may choose some nodes as subscribers and share the cost of the subscription. As a result, through clustering, the nodes can decrease the cost of accessing the information while increasing the overall quality of their information.

In the next section, we provide numerical results to shed light on the effects of gossiping and clustering on information dissemination.

6. Numerical Results

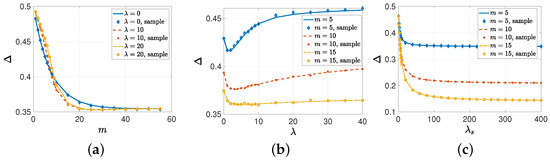

This section has three subsections: in the first one, we discuss the numerical results of the effects of various parameters, such as transmission capacity m, rate of information change , information transmission rate at the source , gossip rate , and the number of nodes n on information dissemination in gossip networks; in the second one, we provide simulation results to corroborate the analytical results in Section 4; in the third one, simulations illustrate the results of Section 5—that is, the effects of clustering on information dissemination.

6.1. Simulations for the Effects of Various System Parameters on Information Dissemination

In the numerical results provided in this subsection, we provide real-time simulations over 200,000 update cycles, and we provide the sample average errors with the markers in Figure 3 and Figure 4. In the first numerical study, we took , , , and . We found the average error with respect to m when Note that corresponded to the case of no gossiping among the nodes. We see in Figure 3a that when m was small, i.e., when the source could send updates to a small number of nodes, the average error increased with gossip rate . As m was small and the information change rate was high, incorrect information disseminated, due to gossiping in the network. As a result, the system with no gossiping () achieved the lowest average error. When we increased m sufficiently, the nodes started to have access to the same information as the source, and gossiping helped to disseminate the correct information. That is why the systems with gossiping—i.e., —achieved lower average error compared to the system with no gossiping. The lowest average error was achieved when for and for . Here, we also note that the average error was lower when compared to , which shows that for a given m, there is an optimal gossip rate that achieves the lowest average error. Finally, increasing m further decreased the probability of entering the gossiping phase, and that is why all the curves in Figure 3a overlap when .

Figure 3.

The average error with respect to (a) m when , (b) the gossip rate for , and (c) the source’s update rate for .

Figure 4.

Average error with respect to n (a) when , (b) when , and (c) when and .

In the second numerical study, we considered the same variable selections as in the previous example except that we took and changed from 0 to 40. We see in Figure 3b that increasing the gossip rate initially helped to reduce the average error . Then, increasing further increased as the incorrect information among the nodes became more available. We see in Figure 3b that the minimum average error was obtained when for , for , and or for . We note that as the source sent updates to more nodes, the optimal gossip rate increased.

In the third numerical study, we considered , , , and . We increased from 1 to 400 for . We see in Figure 3c that increasing initially decreased the average error faster. However, as depended also on the other parameters, such as m and the gossip rate , increasing further did not improve the average error and it converged to 0.348 for , 0.21 for , and 0.144 for .

In the fourth numerical study, we considered the effect of the network size n on the information dissemination. For that, first, we took , , , , and , and we increased with the network size n. In this case, as the network size increased, the source’s transmission rate also increased. However, we kept the total number of nodes that the source could send updated to the same, i.e., for all n. In Figure 4a, when , we see that the average error initially decreased with n, as was initially a primary limiting factor. Increasing n further increased as m became more important. That is why all these three curves overlap each other when is sufficiently large. Then, we considered a scenario where we kept and only increased . In Figure 4b, increasing the maximum number of nodes that the source could send updates to in an update cycle alone did not reduce as n increased. As we increased n, became the presiding factor, and all the curves in Figure 4b overlap. Finally, we increased both the source’s transmission rate and capacity m with n, i.e., and . As a result, in Figure 4c, we observe that we could achieve a constant by increasing and m proportional to n.

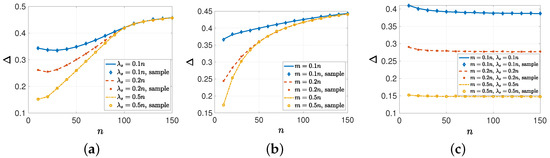

6.2. Simulations for High and Low Gossiping Rates

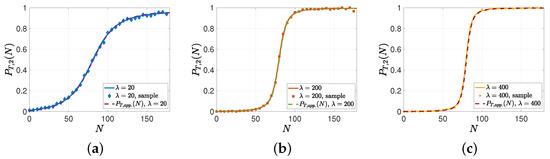

In this subsection, we provide numerical results for the analysis developed for high and low gossip rates in Section 4. Here, we also ran real-time simulations over 10,000,000 update cycles. As , , and , out of 10,000,000 update cycles, approximately in update cycles, the system entered the gossiping phase. As and were the probabilities of individuals that were able to obtain the source’s information as a result of gossiping, the sample averages of and were obtained approximately over 3000 update cycles, where the system entered the gossiping phase. In the first numerical study, we verified the analytical results in Propositions 1 and 2. For this simulation, we numerically evaluated when , , , , for Then, we compared to . In Figure 5, we observe that when was high compared to , could be approximated well by , which was given by the summation of Q-functions in (16). Furthermore, due to Proposition 2, as we increased from 20 to 400, and, thus, converged to a step function, i.e., when , we observed that converged to 0, and when , converged to 1 while we had .

Figure 5.

A sample evolution of , which is approximated by in (16) when is high compared to for (a) , (b) , and (c) .

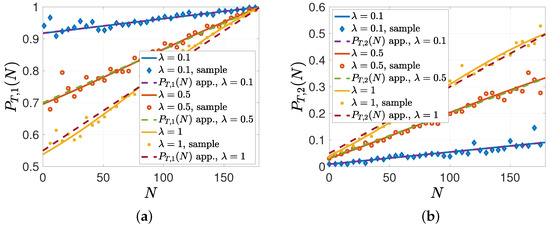

In the remaining numerical studies, we considered the case when the gossip rate was low compared to . In the second simulation, we evaluated and with the same parameters except for We have shown in Proposition 3 that when is low compared to , can be approximated by in (18). We see in Figure 6b that when and , matched closely to in (18). When , could still be approximated well by , but their differences started to be noticeable. Similarly, for the low gossiping rate, we see in Figure 6a that the approximation for given in (20) was close when . When the gossip rate was low, during the gossiping phase, the nodes either did not receive any updates, in which case they held on to their previous beliefs, or only got one update. That is why in Figure 6b, when N was low, , which was the probability of having the correct information as a result of gossiping for a node that had incorrect prior information, was close to 0, and then it increased with N.

In the third simulation study, when the gossip rate was low, we numerically found the gossip gain (22), which was the difference between the average error with no gossiping and the average error with gossiping . For this example, we took , , , , and . We plotted with respect to m in Figure 7a. We observed in Figure 7a that for all values of p, the gossip gain initially increased with m as the source sent correct information to a sufficient number of nodes. Then, increasing m further decreased the gossip gain as the probability of entering the gossiping phase decreased in an update cycle. We observe in Figure 7a that the optimum gain was obtained when for all p values. We note that the scaling term in (22) was equal to , , and for , , and , respectively. We also note that in (21) decreased N in the next update cycle with probability p and increased N with probability . Thus, the term in (22), which was the amplitude of the gossip gain, decreased with p.

Figure 7.

(a) The gossip gain in (22) with regard to m for . (b) A sample evolution of in (23) and its rounding to the nearest integer for different values of .

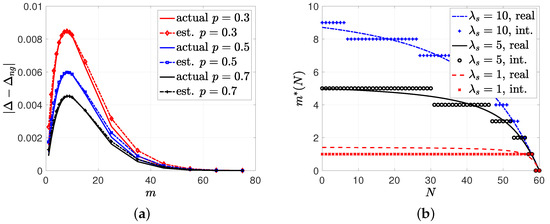

Based on in (21), we can find the optimal m that maximizes the gossip gain for each N, which is provided as in (23). So far, in this work, we have only considered the case where m is kept constant for all update cycles. However, in (23) decreases with N, which suggests a policy that selects m adaptively, depending on N. In the next simulation result, we took , , , , and . In Figure 7b, we plotted and their corresponding rounding to the nearest integer. We see in Figure 7b that the source sent updates to more nodes as increased.

In the last simulation study, we compared the performances of the proposed adaptive policy and the constant policy for selecting m. We considered , , , , and varied from 1 to 200. We first implemented the adaptive-m transmission policy by using the nearest integer rounding of in (23), which was denoted by . We then found the stationary distribution and calculated the average m, using , which is depicted in Figure 8b. In order to make a fair comparison, we took the nearest integer rounding of , which is shown with the dashed lines in Figure 8b, and implemented the constant m transmission policy. We see in Figure 8a that the adaptive m policy (even without gossiping) achieved significantly lower average error compared to the constant m policy. In Figure 8a, we also observe that as the gossiping took place, especially when nodes had the correct information, the average error decreased with the gossip rate . In the adaptive m selection policy, we see in Figure 8b that increasing gossip rate not only achieved lower but also decreased the source’s transmission capacity . Even though we found this policy for low gossip rates (), we observed that it was an effective transmission policy even for the higher values of and could achieve lower compared to the constant m policy.

Figure 8.

The comparison between (a) the average error and (b) the average m for the adaptive m and constant m selection policies.

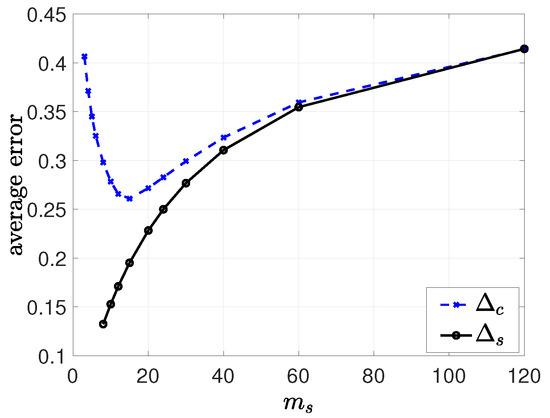

6.3. Simulations for the Clustered Networks

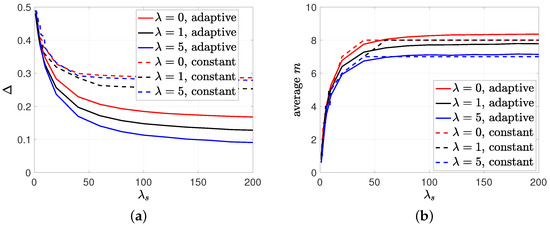

In this subsection, we provide the results of simulations that illustrate the effects of clustering on information dissemination. In the first numerical study, we chose , , , , and . We took and considered all values that could divide n. In Figure 9, we plotted the long-term average error at the clusters, , and at the cluster heads, . We see that increasing the number of cluster heads initially helped to reduce as the update rates from the cluster heads to the nodes increased. We see in Figure 9 that the minimum was achieved when . Increasing further increased , as the average error at the cluster heads became large.

Figure 9.

The long-term average error at the clusters, , and at the cluster heads, , as we increase the number of clusters .

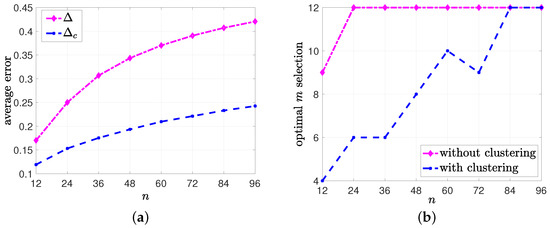

In the second numerical study, we compared the performances of the gossiping networks with and without clustering when the source’s transmission capacity m had an upper limit . For this numerical study, we took the same set of variables as in the first numerical study, but we increased . For each n, we found the optimum m for the network model without clustering and the optimum for the clustered network that minimized the average error at the nodes. We plotted the minimum average error values in Figure 10a and the optimum m and selections in Figure 10b. We see in Figure 10a that the average error with clustering, , was smaller than the average error without clustering, , for all values of n, although the source used its maximum capacity for in the network model without clustering, as shown in Figure 10b. For the clustered network model, the optimal number of cluster heads mostly increased with n and reached for .

Figure 10.

The comparison between (a) the average errors and and (b) the optimum m selections for the network models w and w/o clustering.

7. Conclusions and Future Directions

In this work, we considered information dissemination over gossip networks consisting of a source that keeps the most up-to-date information about a binary state of the world and n nodes whose common goal is to follow the binary state of the world as accurately as possible. We first characterized the equations necessary to obtain the average error over all the nodes. Then, we provided analytical results for the high and low gossip rates. As information became available among the nodes in the high gossip rates, all the nodes behaved like a single node. In the low gossip case, we analyzed the gossip gain, which was the error reduction compared to the system with no gossiping, and we obtained , which maximized the gain. This suggests an adaptive m selection policy using , where the source sends updates to more nodes if most of them have incorrect prior information. Finally, we implemented a clustered gossiping network model and characterized the average errors at the cluster heads and at the nodes in the clusters.

We would like to note that, in this paper, information change probability p and update rate are taken as given exogenous parameters to the source. As time passes, the source generates updates based on Poisson ticking with rate among which information is reverted with rate . Let us assume that is fixed (while ) and m is constant. As is constant, information change rate over time does not change as we vary . When is low (and, thus, p is relatively large), then information at the source is flipped more frequently and the update cycle duration gets longer (as a result, the probability of entering the gossiping phase is higher). As the information is flipped more often, the majority of the nodes may have incorrect information. During the gossiping phase, this may increase the average error, as incorrect information may be disseminated further in the network. On the other hand, when is high while p is low, the information at the source is updated more frequently, but information does not get mutated much. In this case, the probability of entering the gossiping phase decreases and, thus, the system may not benefit from gossiping. Therefore, for a fixed , there should be an optimal p and selection that minimizes the average error. We leave the optimization problem over as a future research direction.

As a future direction of research, one could consider the problem where the information at the source can take different values based on a known pmf. Furthermore, here we have considered only fully connected networks, and extending these results to arbitrarily connected networks could be another interesting direction. One could consider a setting where the source does not have access to the prior information on the nodes, and has to select nodes randomly. In addition to the real-time simulation results, we would like to test our results with the real-world datasets provided in [25]. Finally, one can consider a setting where, although some nodes have the most accurate information, they maliciously send incorrect information to others during the gossiping phase, thus increasing average error.

Author Contributions

Conceptualization, M.B., S.R.E. and T.B.; methodology, M.B., S.R.E. and T.B.; software, M.B.; validation, M.B., S.R.E. and T.B.; formal analysis, M.B., S.R.E. and T.B.; investigation, M.B., S.R.E. and T.B.; resources, M.B., S.R.E. and T.B.; data curation, M.B.; writing—original draft preparation, M.B., S.R.E. and T.B.; writing—review and editing, M.B., S.R.E. and T.B.; visualization, M.B.; supervision, S.R.E. and T.B.; project administration, S.R.E. and T.B.; funding acquisition, S.R.E. and T.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Air Force Office of Scientific Research FA9550-23-1-0107, the NSF CAREER Award EPCN-1944403, and the ARO MURI Grant AG285.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shah, D. Gossip Algorithms; Now Publishers Inc.: Norwell, MA, USA, 2009. [Google Scholar]

- Mosk-Aoyama, D.; Shah, D. Information dissemination via network coding. In Proceedings of the IEEE ISIT, Seattle, DC, USA, 9–14 July 2006; pp. 1748–1752. [Google Scholar]

- Boyd, S.; Ghosh, A.; Prabhakar, B.; Shah, D. Analysis and optimization of randomized gossip algorithms. In Proceedings of the IEEE CDC, Nassau, Bahamas, 14–17 December 2004; pp. 5310–5315. [Google Scholar]

- Boyd, S.; Ghosh, A.; Prabhakar, B.; Shah, D. Randomized gossip algorithms. IEEE Trans. Inf. Theory 2006, 52, 2508–2530. [Google Scholar] [CrossRef]

- Mukund, M.; Sohoni, M. Keeping track of the latest gossip in a distributed system. Distrib. Comput. 1997, 10, 137–148. [Google Scholar] [CrossRef]

- Israeli, A.; Li, M. Bounded time-stamps. In Proceedings of the 28th Annual Symposium on Foundations of Computer Science, Los Angeles, CA, USA, 12–14 October 1987; pp. 371–382. [Google Scholar]

- Sun, Y.; Kadota, I.; Talak, R.; Modiano, E. Age of information: A new metric for information freshness. Synth. Lect. Commun. Netw. 2019, 12, 1–224. [Google Scholar]

- Yates, R.D.; Sun, Y.; Brown, D.R.; Kaul, S.K.; Modiano, E.; Ulukus, S. Age of information: An introduction and survey. IEEE JSAC 2021, 39, 1183–1210. [Google Scholar] [CrossRef]

- Yates, R.D. The age of gossip in networks. In Proceedings of the IEEE ISIT, Melbourne, Australia, 12–20 July 2021; pp. 2984–2989. [Google Scholar]

- Buyukates, B.; Bastopcu, M.; Ulukus, S. Version age of information in clustered gossip networks. IEEE JSAC 2022, 3, 85–97. [Google Scholar] [CrossRef]

- Bastopcu, M.; Buyukates, B.; Ulukus, S. Gossiping with binary freshness metric. In Proceedings of the IEEE Globecom Workshops, Madrid, Spain, 7–11 December 2021; pp. 1–6. [Google Scholar]

- Cho, J.; Garcia-Molina, H. Effective page refresh policies for web crawlers. Acm Trans. Database Syst. 2003, 28, 390–426. [Google Scholar] [CrossRef]

- Azar, Y.; Horvitz, E.; Lubetzky, E.; Peres, Y.; Shahaf, D. Tractable near-optimal policies for crawling. Proc. Natl. Acad. Sci. USA 2018, 115, 8099–8103. [Google Scholar] [CrossRef] [PubMed]

- Kolobov, A.; Peres, Y.; Lubetzky, E.; Horvitz, E. Optimal freshness crawl under politeness constraints. In Proceedings of the ACM SIGIR Conference, Paris, France, 21–25 July 2019. [Google Scholar]

- Minnaard, W.; de Laat, C.T.A.M.; van Loosen MSc, M. Timestomping NTFS; IMSc Final Research Project Report; University of Amsterdam: Amsterdam, The Netherlands, 2014. [Google Scholar]

- Kaswan, P.; Ulukus, S. Susceptibility of age of gossip to timestomping. arXiv 2022, arXiv:2205.08510. [Google Scholar]

- Zhang, J.; You, K.; Başar, T. Distributed discrete-time optimization in multiagent networks using only sign of relative state. IEEE Trans. Autom. Control. 2019, 64, 2352–2367. [Google Scholar] [CrossRef]

- Cao, X.; Başar, T. Decentralized online convex optimization based on signs of relative states. Automatica 2021, 129, 109676. [Google Scholar] [CrossRef]

- Etesami, S.R.; Bolouki, S.; Nedić, A.; Başar, T.; Poor, H.V. Influence of conformist and manipulative behaviors on public opinion. IEEE Trans. Control. Netw. Syst. 2018, 6, 202–214. [Google Scholar] [CrossRef]

- Coban, Ö.; Truică, C.O.; Apostol, E.S. CONTAIN: A Community-based Algorithm for Network Immunization. arXiv 2023, arXiv:2303.01934. [Google Scholar]

- Petrescu, A.; Truică, C.O.; Apostol, E.S.; Karras, P. Sparse shield: Social network immunization vs. harmful speech. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Virtual Event, 1–5 October 2021; pp. 1426–1436. [Google Scholar]

- Truică, C.O.; Apostol, E.S.; Karras, P. DANES: Deep Neural Network Ensemble Architecture for Social and Textual Context-aware Fake News Detection. arXiv 2023, arXiv:2302.01756. [Google Scholar]

- Bastopcu, M.; Etesami, S.R.; Başar, T. The dissemination of time-varying information over networked agents with gossiping. In Proceedings of the IEEE ISIT, Espoo, Finland, 26 June–1 July 2022. [Google Scholar]

- Bastopcu, M.; Ulukus, S. Information freshness in cache updating systems. IEEE Trans. Wirel. Commun. 2021, 20, 1861–1874. [Google Scholar] [CrossRef]

- Shu, K.; Mahudeswaran, D.; Wang, S.; Lee, D.; Liu, H. Fakenewsnet: A data repository with news content, social context, and spatiotemporal information for studying fake news on social media. Big Data 2020, 8, 171–188. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).