Information Difference of Transfer Entropies between Head Motion and Eye Movement Indicates a Proxy of Driving

Abstract

1. Introduction

2. Related Works

2.1. Transfer Entropy
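For reference, the standard definition of transfer entropy from a source process $Y$ to a target process $X$ (Schreiber, 2000) is given below in conventional notation. This notation may differ from the one used in this paper.

- $x_n^{(k)} = (x_n, \dots, x_{n-k+1})$ and $y_n^{(l)}$ are the length-$k$ and length-$l$ histories.
- The sum runs over all joint states.
- Base-2 logarithms give the result in bits.

Equivalently, $T_{Y \to X}$ is the conditional mutual information between the target's next value and the source's past, given the target's own past. Unlike mutual information, it is asymmetric, so $T_{Y \to X}$ and $T_{X \to Y}$ generally differ.

$$
T_{Y \to X} = \sum p\left(x_{n+1}, x_n^{(k)}, y_n^{(l)}\right) \log_2 \frac{p\left(x_{n+1} \mid x_n^{(k)}, y_n^{(l)}\right)}{p\left(x_{n+1} \mid x_n^{(k)}\right)} = I\left(X_{n+1} ; Y_n^{(l)} \mid X_n^{(k)}\right)
$$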

2.2. Coordination of Head and Eyes

2.3. Complexity Measures for Visual Scanning
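The gaze-entropy literature commonly uses two complexity measures for visual scanning:

- **Stationary gaze entropy:** the Shannon entropy of how fixations are distributed over areas of interest (AOIs).
- **Gaze transition entropy:** the entropy of first-order AOI-to-AOI transitions, weighted by how often each AOI is left.

The following is a minimal Python sketch of both, assuming fixations have already been labelled with AOIs. The function names and AOI labels are hypothetical, and this is not necessarily the formulation used in this paper. In particular, $p_i$ is estimated here from the source AOIs of observed transitions rather than from a fitted stationary distribution.

```python
import math
from collections import Counter


def stationary_entropy(aoi_seq):
    """Shannon entropy (bits) of the distribution of fixations over AOIs."""
    counts = Counter(aoi_seq)
    n = len(aoi_seq)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def transition_entropy(aoi_seq):
    """Gaze transition entropy (bits): -sum_i p_i sum_j p_ij log2 p_ij.

    p_ij is the first-order transition probability from AOI i to AOI j, and
    p_i is the share of all transitions that start in AOI i (an assumption;
    some authors use the stationary distribution of the transition matrix).
    """
    pairs = list(zip(aoi_seq[:-1], aoi_seq[1:]))
    from_counts = Counter(src for src, _ in pairs)
    pair_counts = Counter(pairs)
    total = len(pairs)
    h = 0.0
    for (src, _dst), c in pair_counts.items():
        p_i = from_counts[src] / total
        p_ij = c / from_counts[src]
        h -= p_i * p_ij * math.log2(p_ij)
    return h
```

For example, a strictly alternating scan such as `["road", "mirror", "road", "mirror"]` has one bit of stationary entropy but zero transition entropy, because every transition is fully predictable.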

3. The Proposed Methodology for New Measures

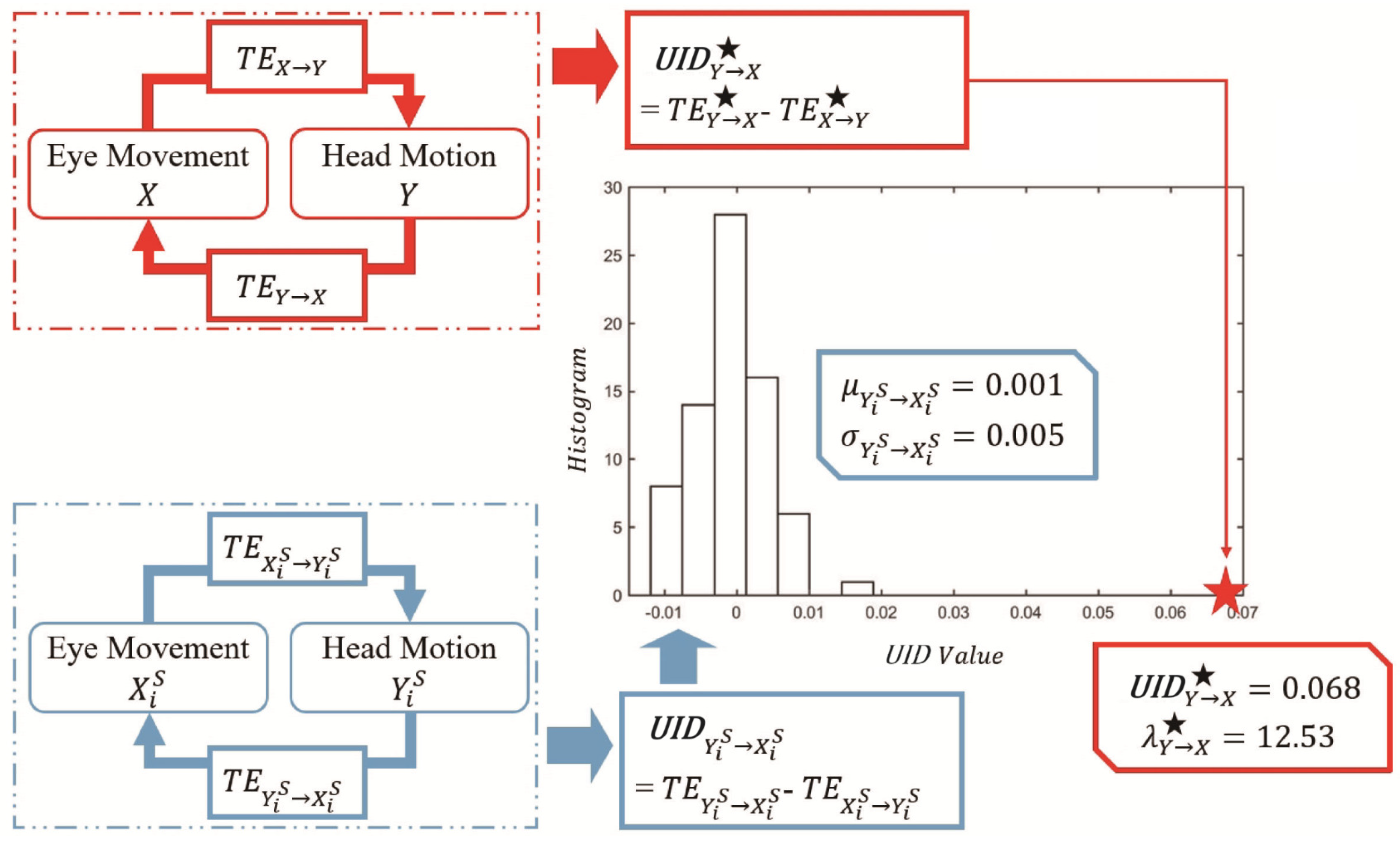

3.1. A Unidirectional Information Difference (UID)
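One reading of the title ("information difference of transfer entropies") is that the UID is the difference between two directed transfer entropies: one from head motion to eye movement and one in the reverse direction. The tabulated results fit this reading. In the nine-column table, participant 1's trial 1 pair (2.19, 2.98) differs by 0.79, which is participant 1's trial 1 entry in the next table.

The following Python sketch assumes UID = TE(head → eye) − TE(eye → head). That sign convention is an assumption, as are:

- the history length of 1;
- the quantile binning;
- the plug-in estimator.

`discretize`, `transfer_entropy`, and `uid` are hypothetical helper names, not the authors' code.

```python
import math
from collections import Counter

import numpy as np


def discretize(signal, n_bins=4):
    """Map a continuous signal to symbols 0..n_bins-1 using quantile (equal-frequency) bins."""
    signal = np.asarray(signal, dtype=float)
    inner_edges = np.quantile(signal, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    return np.digitize(signal, inner_edges)


def transfer_entropy(source, target):
    """Plug-in estimate of TE_{source -> target} in bits, with history length k = l = 1."""
    y, x = list(source), list(target)
    n = len(x) - 1
    joint = Counter(zip(x[1:], x[:-1], y[:-1]))  # counts of (x_{n+1}, x_n, y_n)
    past_xy = Counter(zip(x[:-1], y[:-1]))       # counts of (x_n, y_n)
    next_x = Counter(zip(x[1:], x[:-1]))         # counts of (x_{n+1}, x_n)
    past_x = Counter(x[:-1])                     # counts of x_n
    te = 0.0
    for (x_next, x_now, y_now), count in joint.items():
        p_with_source = count / past_xy[(x_now, y_now)]            # p(x_{n+1} | x_n, y_n)
        p_without_source = next_x[(x_next, x_now)] / past_x[x_now]  # p(x_{n+1} | x_n)
        te += (count / n) * math.log2(p_with_source / p_without_source)
    return te


def uid(head, eye):
    """ASSUMED definition: TE(head -> eye) minus TE(eye -> head), in bits."""
    return transfer_entropy(head, eye) - transfer_entropy(eye, head)
```

Continuous head-orientation and gaze signals would first be passed through `discretize`. Under this assumed convention, a positive value then means that head motion carries more information about upcoming eye movement than the reverse. Plug-in estimates are biased upward for short series, which is one reason a significance test is needed.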

3.1.1. Significance Test
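A common way to test whether a directed information measure, such as a transfer entropy or a UID, exceeds chance is a surrogate (permutation) test. The statistic is recomputed on many versions of the data in which the source's temporal relation to the target has been destroyed. The test then reports how often those surrogates reach the observed value.

The following Python sketch is a generic version of that idea, not necessarily the procedure used in this paper. The helper `surrogate_p_value` is hypothetical and accepts any `statistic(source, target)` callable. The add-one p-value estimate is a standard choice that avoids reporting an impossible p = 0.

```python
import numpy as np


def surrogate_p_value(source, target, statistic, n_surrogates=999, seed=None):
    """One-sided surrogate test of statistic(source, target).

    Each surrogate randomly permutes the source, which keeps its value
    distribution but destroys any temporal coupling to the target (the null).
    Returns (observed statistic, p-value).
    """
    rng = np.random.default_rng(seed)
    source = np.asarray(source)
    target = np.asarray(target)
    observed = statistic(source, target)
    exceed = 0
    for _ in range(n_surrogates):
        surrogate = rng.permutation(source)
        if statistic(surrogate, target) >= observed:
            exceed += 1
    # Add-one correction: the observed data count as one draw under the null.
    return observed, (exceed + 1) / (n_surrogates + 1)
```

Random permutation also destroys the source's own autocorrelation. For smooth signals such as head and gaze trajectories, circularly shifting the source by a random offset (for example with `np.roll`) preserves that autocorrelation and is often the more conservative null.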

3.2. A Normalized Unidirectional Information Difference (NUID)

4. Experiment

4.1. Virtual Reality Environment and Task

4.2. Apparatus

4.3. Participants

4.4. Procedure

5. Results and Discussion

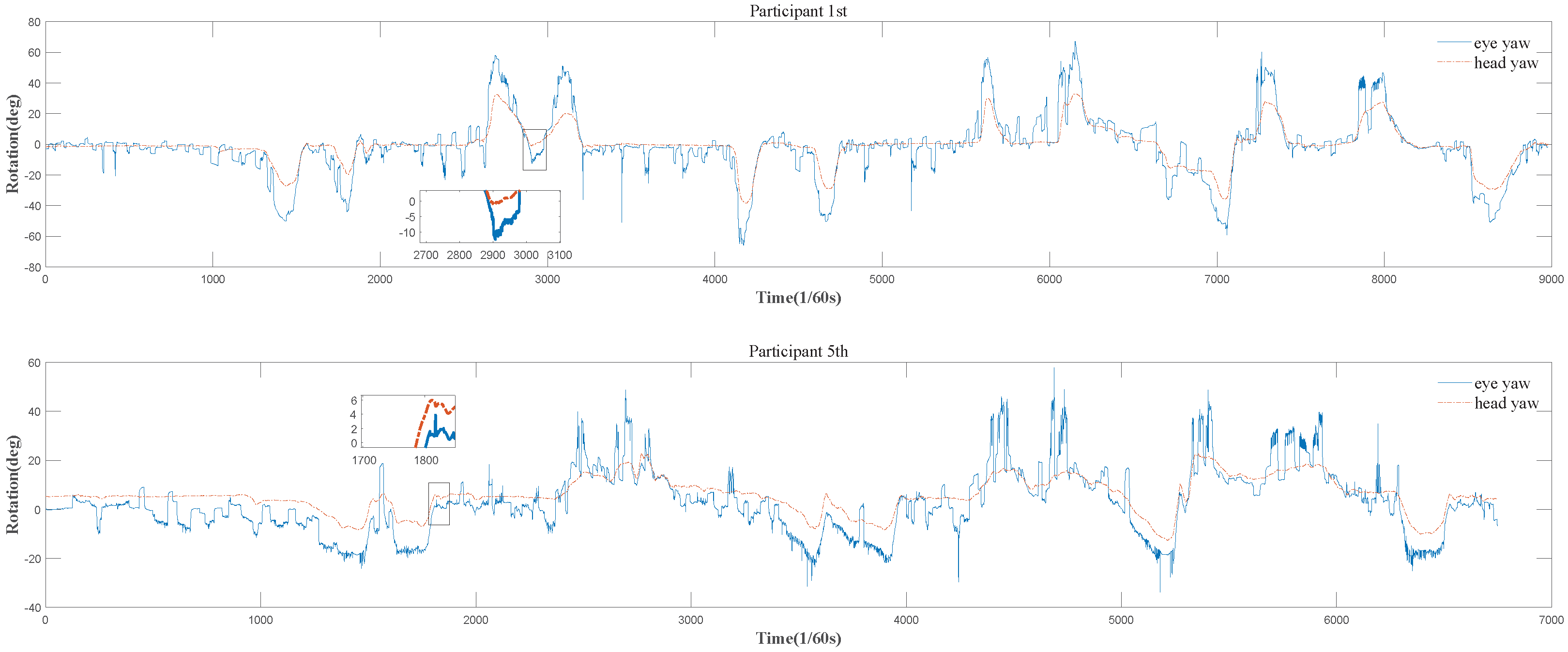

5.1. Temporal Sequences of Head Motion and Eye Movement Data

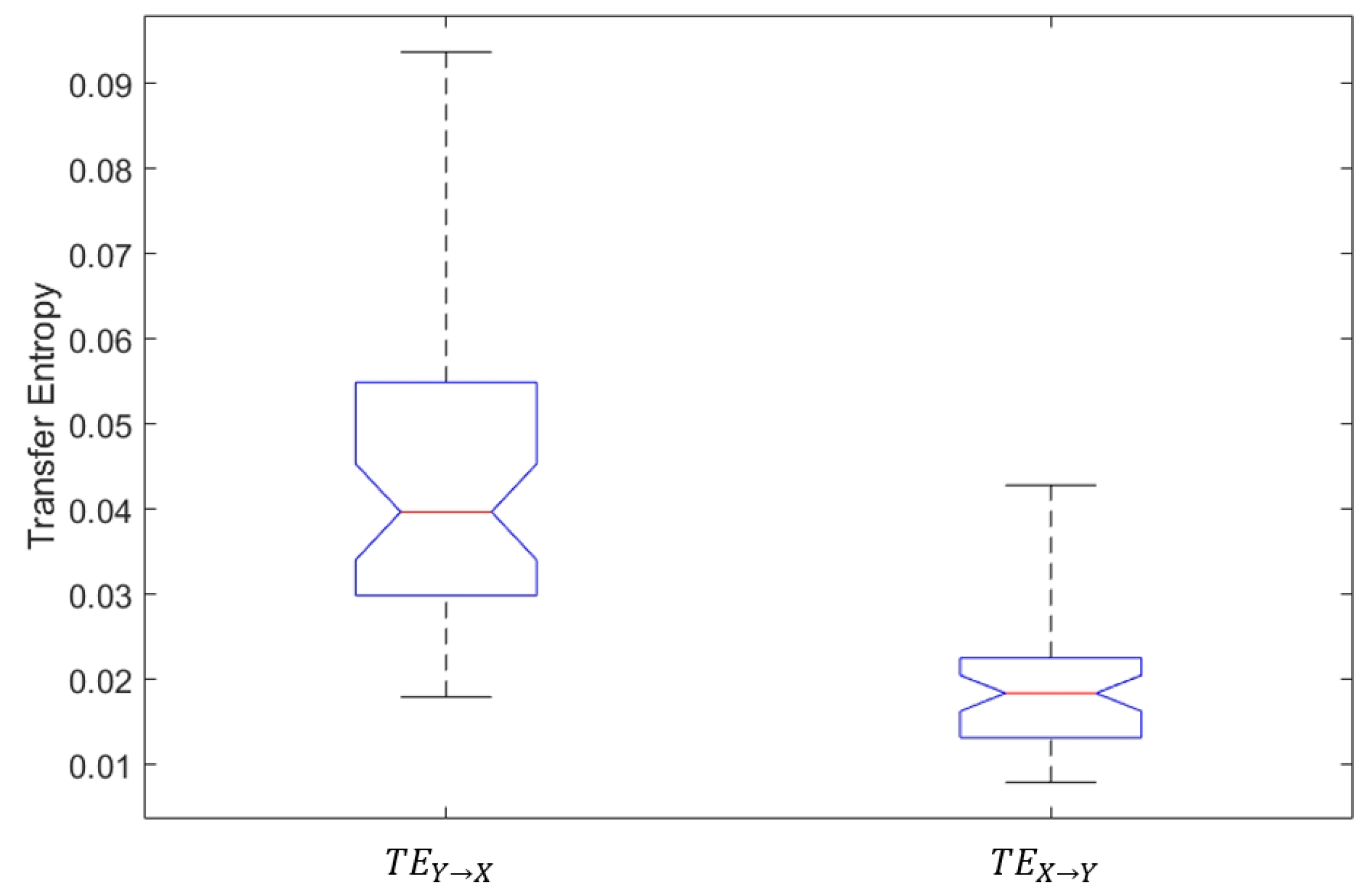

5.2. Transfer Entropies between Head Motion and Eye Movement

5.3. The Unidirectional Information Difference between Head Motion and Eye Movement

5.4. The Normalized Unidirectional Information Difference between Head Motion and Eye Movement

6. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Participant | Trial 1 | | Trial 2 | | Trial 3 | | Trial 4 | |
|---|---|---|---|---|---|---|---|---|
| 1 | 2.19 | 2.98 | 1.99 | 2.77 | 1.68 | 1.85 | 1.75 | 2.99 |
| 2 | 1.82 | 3.03 | 2.60 | 6.93 | 1.64 | 3.52 | 2.00 | 4.76 |
| 3 | 2.25 | 3.68 | 1.97 | 2.58 | 2.42 | 2.72 | 2.66 | 4.48 |
| 4 | 0.79 | 1.97 | 1.25 | 2.23 | 1.31 | 3.16 | 1.17 | 3.86 |
| 5 | 1.82 | 4.96 | 1.13 | 5.87 | 0.86 | 7.66 | 1.59 | 3.84 |
| 6 | 1.83 | 5.38 | 4.28 | 8.71 | 2.06 | 5.09 | 1.99 | 3.96 |
| 7 | 1.45 | 4.36 | 2.99 | 6.41 | 2.26 | 6.60 | 1.09 | 4.74 |
| 8 | 1.39 | 2.31 | 1.49 | 3.51 | 3.00 | 6.86 | 2.31 | 3.88 |
| 9 | 1.76 | 5.09 | 2.17 | 6.50 | 1.85 | 5.60 | 2.16 | 3.79 |
| 10 | 1.12 | 1.80 | 1.23 | 4.30 | 1.08 | 2.99 | 1.86 | 4.95 |
| 11 | 2.27 | 5.02 | 1.22 | 2.43 | 2.09 | 3.98 | 1.10 | 2.80 |
| 12 | 1.32 | 3.58 | 2.34 | 7.87 | 2.81 | 8.99 | 2.36 | 9.37 |

| Participant | Trial 1 | Trial 2 | Trial 3 | Trial 4 |
|---|---|---|---|---|
| 1 | 0.79 | 0.78 | 0.17 | 1.24 |
| 2 | 1.21 | 4.33 | 1.88 | 2.76 |
| 3 | 1.43 | 0.61 | 0.30 | 1.82 |
| 4 | 1.18 | 0.98 | 1.85 | 2.70 |
| 5 | 3.14 | 4.73 | 6.80 | 2.25 |
| 6 | 3.55 | 4.43 | 3.02 | 1.97 |
| 7 | 2.91 | 3.42 | 4.33 | 3.65 |
| 8 | 0.91 | 2.02 | 3.86 | 1.57 |
| 9 | 3.33 | 4.33 | 3.75 | 1.62 |
| 10 | 0.68 | 3.06 | 1.91 | 3.09 |
| 11 | 2.76 | 1.21 | 1.89 | 1.70 |
| 12 | 2.26 | 5.53 | 6.18 | 7.01 |

| Participant | Trial 1 | Trial 2 | Trial 3 | Trial 4 |
|---|---|---|---|---|
| 1 | 8.89 | 9.18 | 15.25 | 10.23 |
| 2 | 17.33 | 18.08 | 7.83 | 5.68 |
| 3 | 17.01 | 5.48 | 11.20 | 11.64 |
| 4 | 11.94 | 15.03 | 12.25 | 16.67 |
| 5 | 16.02 | 12.18 | 12.53 | 8.02 |
| 6 | 15.86 | 20.18 | 21.13 | 17.15 |
| 7 | 17.28 | 19.36 | 26.34 | 22.89 |
| 8 | 7.86 | 13.92 | 13.58 | 10.74 |
| 9 | 16.40 | 17.84 | 17.37 | 13.94 |
| 10 | 14.32 | 16.86 | 13.14 | 14.47 |
| 11 | 19.35 | 12.71 | 18.87 | 19.43 |
| 12 | 14.67 | 12.52 | 16.69 | 15.06 |

| Participant | Trial 1 | | Trial 2 | | Trial 3 | | Trial 4 | |
|---|---|---|---|---|---|---|---|---|
| | () | (/m) | () | (/m) | () | (/m) | () | (/m) |
| 1 | −1.75 | 0.37 | −1.13 | 0.43 | −0.35 | 0.42 | −0.18 | 0.34 |
| 2 | 1.23 | 0.71 | 2.77 | 0.64 | 3.11 | 0.69 | 5.05 | 0.89 |
| 3 | 1.66 | 0.45 | 1.65 | 0.41 | −0.80 | 0.42 | −0.28 | 0.40 |
| 4 | 4.85 | 0.72 | 1.50 | 0.56 | 4.45 | 0.56 | 4.84 | 0.52 |
| 5 | 5.95 | 0.44 | 6.02 | 0.48 | 8.07 | 0.42 | 4.47 | 0.55 |
| 6 | 4.18 | 0.25 | −1.42 | 0.29 | 3.00 | 0.30 | 0.88 | 0.30 |
| 7 | 6.28 | 0.56 | 0.60 | 0.52 | 5.17 | 0.64 | 8.20 | 0.57 |
| 8 | −2.54 | 0.35 | 2.15 | 0.46 | 2.58 | 0.40 | 0.91 | 0.40 |
| 9 | 3.40 | 0.62 | 1.25 | 0.44 | −0.99 | 0.40 | −1.04 | 0.47 |
| 10 | 3.21 | 0.44 | 7.97 | 0.51 | 8.45 | 0.36 | 5.93 | 0.50 |
| 11 | 4.76 | 0.45 | 2.41 | 0.42 | 4.12 | 0.44 | 3.15 | 0.38 |
| 12 | 2.39 | 0.64 | 2.69 | 0.59 | 4.35 | 0.55 | 2.93 | 0.71 |

| Methods | , p-Value | , p-Value | , p-Value |
|---|---|---|---|
| | 0.19, | 0.19, | 0.26, |
| | 0.07, | 0, | −0.01, |
| | −0.07 | −0.09, | −0.15, |
| | 0.01, | 0, | −0.03, |
| | −0.06, | −0.01, | −0.02, |
| | −0.24, | −0.17, | −0.24, |
| | 0.25, | 0.11, | 0.09, |
| | 0.08, | 0.11, | 0.14, |
| | −0.17, | −0.13, | −0.21, |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, R.; Xu, Q.; Wang, S.; Parkinson, S.; Schoeffmann, K. Information Difference of Transfer Entropies between Head Motion and Eye Movement Indicates a Proxy of Driving. Entropy 2024, 26, 3. https://doi.org/10.3390/e26010003
