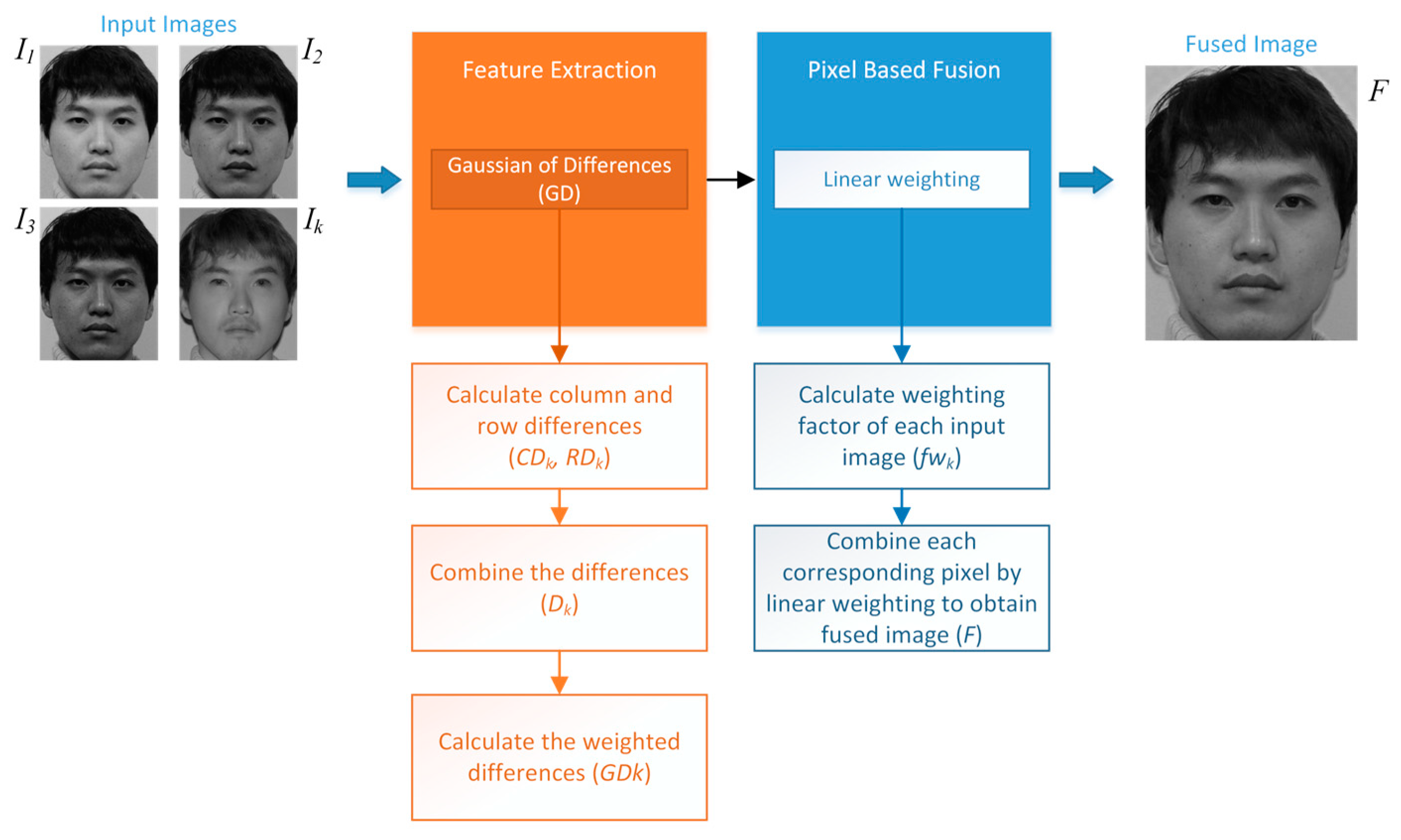

Figure 1.

Proposed general image fusion method based on pixel-based linear weighting using the Gaussian of differences (GD).

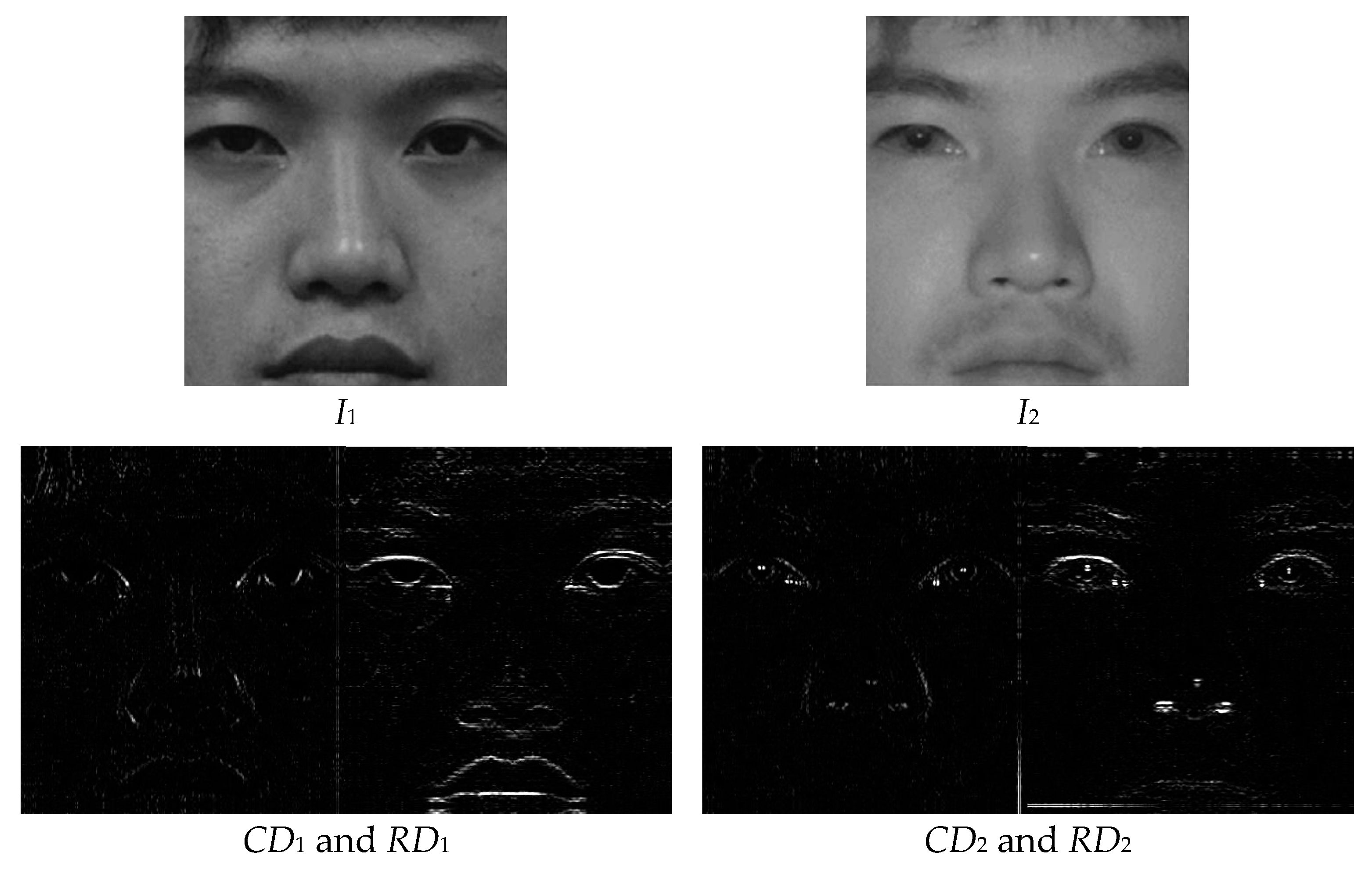

Figure 2.

Sample input images (I1 and I2) [54] and their column and row differences.

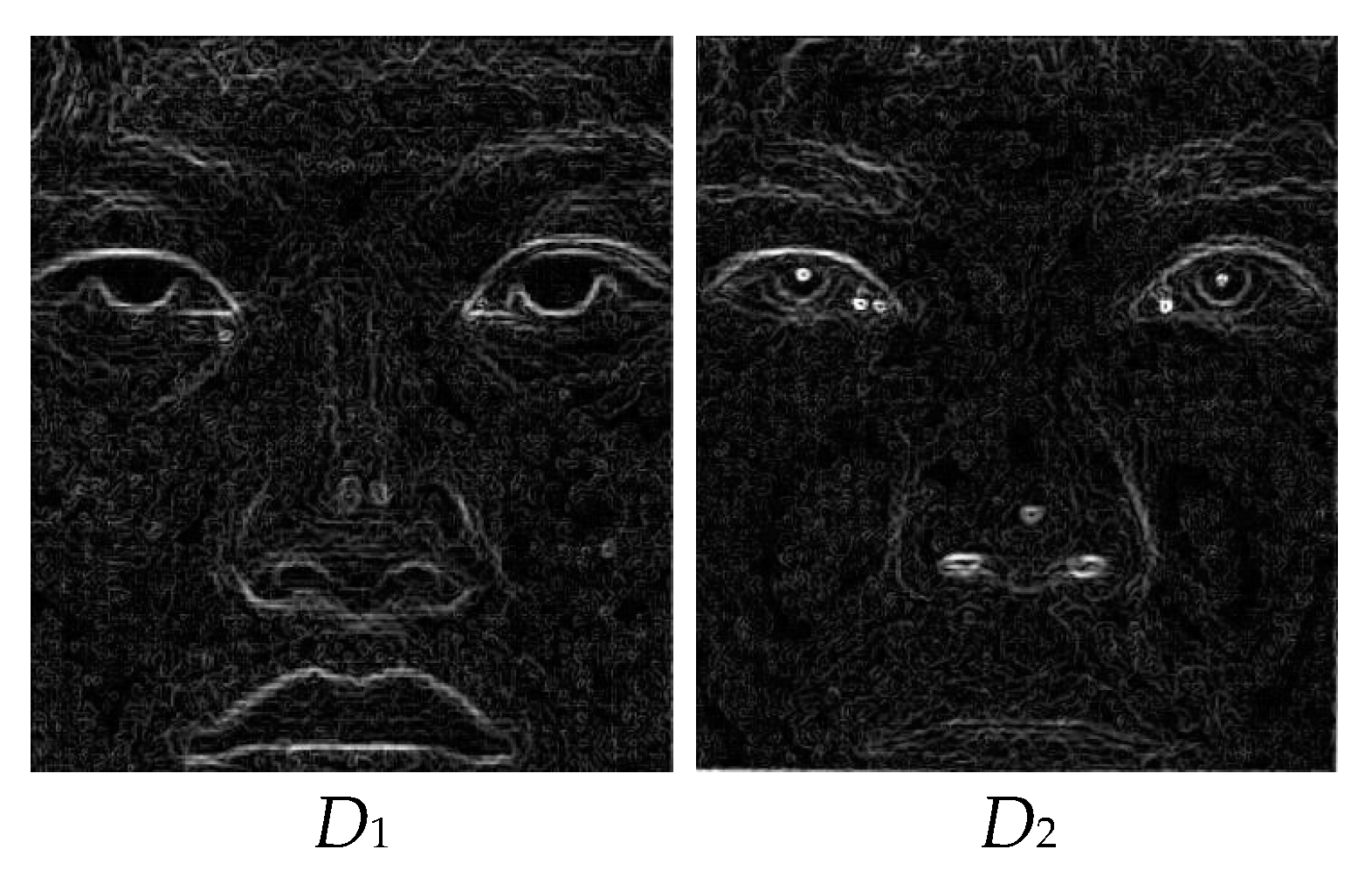

Figure 3.

Combined difference images (D) of the input images.
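
The captions of Figures 2 and 3 name column differences, row differences, and a combined difference image D, but this section does not state the formulas. The following is a minimal sketch under stated assumptions: forward differences with a zero in the last column or row, combined as the sum of absolute values. Both choices, and all function names, are hypothetical and may differ from the definitions used in the paper.

```python
def column_differences(img):
    """Forward difference between horizontally adjacent pixels (assumed definition)."""
    return [[row[c + 1] - row[c] if c + 1 < len(row) else 0.0
             for c in range(len(row))] for row in img]


def row_differences(img):
    """Forward difference between vertically adjacent pixels (assumed definition)."""
    h = len(img)
    return [[img[r + 1][c] - img[r][c] if r + 1 < h else 0.0
             for c in range(len(img[r]))] for r in range(h)]


def combined_difference(img):
    """Combine both directions as |dc| + |dr| (an assumption, not the source's formula)."""
    dc = column_differences(img)
    dr = row_differences(img)
    return [[abs(a) + abs(b) for a, b in zip(rc, rr)] for rc, rr in zip(dc, dr)]


# Toy 3x3 grayscale image with a vertical edge between columns 1 and 2.
image = [[0.0, 0.0, 1.0],
         [0.0, 0.0, 1.0],
         [0.0, 0.0, 1.0]]
D = combined_difference(image)
```

In this toy case only the pixels just left of the edge receive a non-zero value in D, which is the behaviour one would expect from a detail or sharpness indicator.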

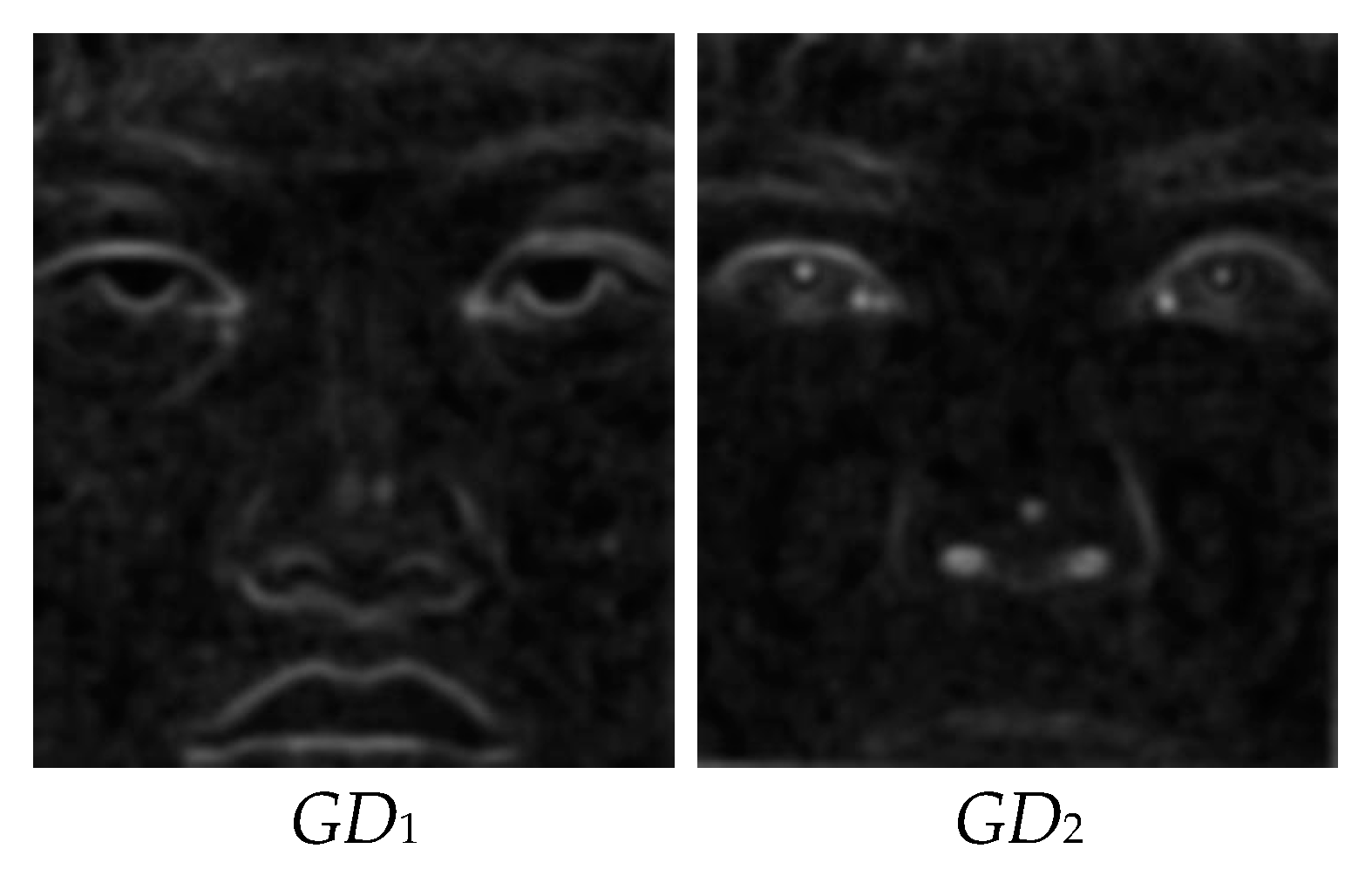

Figure 4.

Gaussian of differences (GD) of the input images.

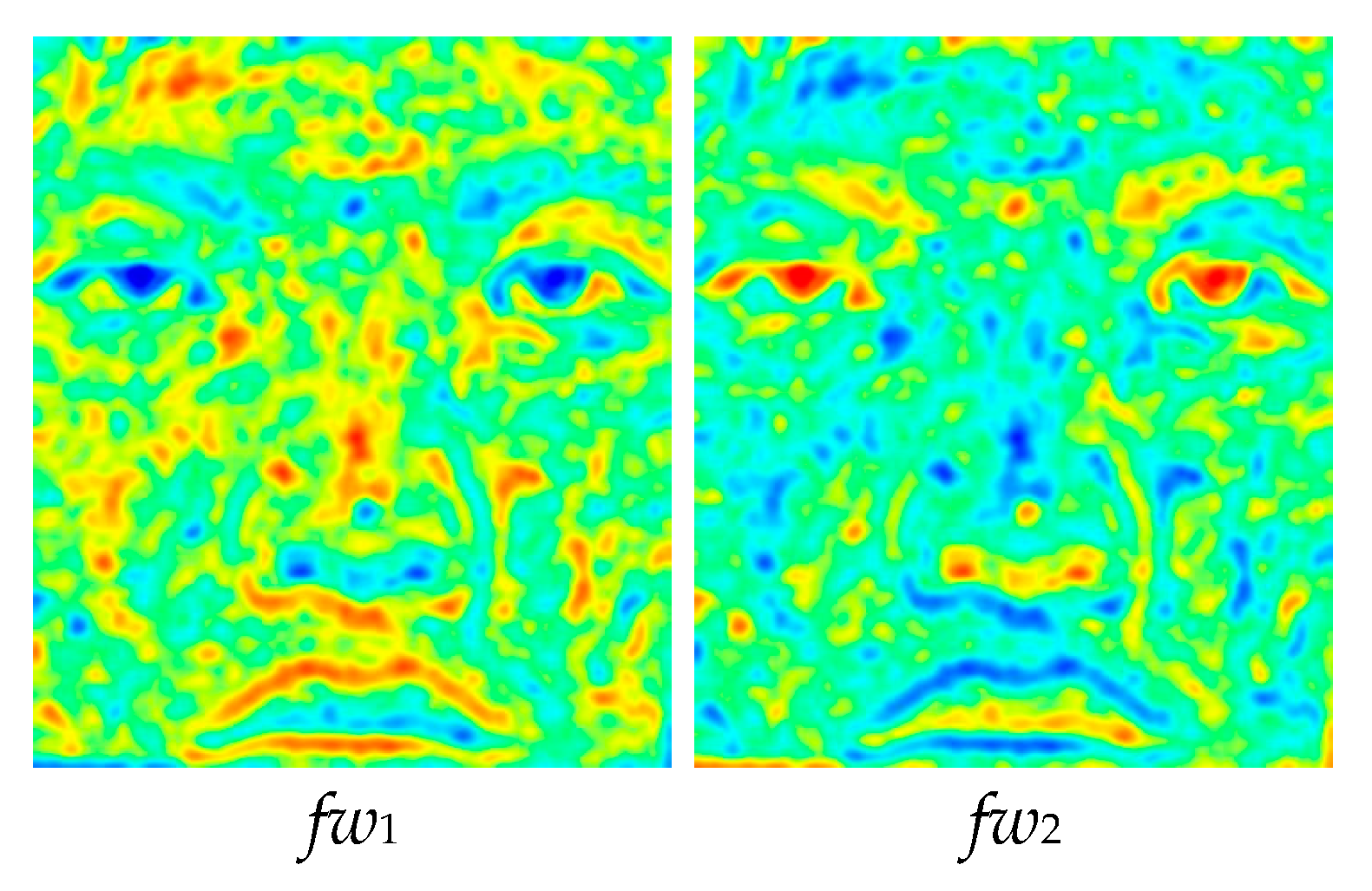

Figure 5.

Weighting factors (fw) for the input images.

Figure 6.

Fused image (F).
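
Figure 1 describes the method as pixel-based linear weighting, and Figures 5 and 6 show the weighting factors fw and the fused image F. A minimal sketch of that last stage follows, assuming each weight is the image's GD value divided by the sum of GD values over all inputs at that pixel, with equal weights where the sum is zero. The normalisation, the `eps` guard, and the function names are assumptions, and the GD maps below are made-up values for illustration only.

```python
def weighting_factors(gd_maps, eps=1e-12):
    """Per-pixel weights: each GD map divided by the sum over all inputs (assumed)."""
    n = len(gd_maps)
    h, w = len(gd_maps[0]), len(gd_maps[0][0])
    weights = [[[0.0] * w for _ in range(h)] for _ in range(n)]
    for r in range(h):
        for c in range(w):
            total = sum(g[r][c] for g in gd_maps)
            for i in range(n):
                # Fall back to equal weights where no input shows any detail.
                weights[i][r][c] = gd_maps[i][r][c] / total if total > eps else 1.0 / n
    return weights


def fuse(images, weights):
    """Pixel-wise linear combination F = sum_i fw_i * I_i."""
    h, w = len(images[0]), len(images[0][0])
    return [[sum(wt[r][c] * im[r][c] for wt, im in zip(weights, images))
             for c in range(w)] for r in range(h)]


# Two toy 1x3 inputs and made-up GD maps (illustrative values only).
I1 = [[0.2, 0.4, 0.6]]
I2 = [[0.8, 0.4, 0.0]]
GD1 = [[3.0, 1.0, 0.0]]
GD2 = [[1.0, 1.0, 0.0]]
fw = weighting_factors([GD1, GD2])
F = fuse([I1, I2], fw)
```

Because the weights sum to one at every pixel, each fused value stays within the range spanned by the input pixels at that location.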

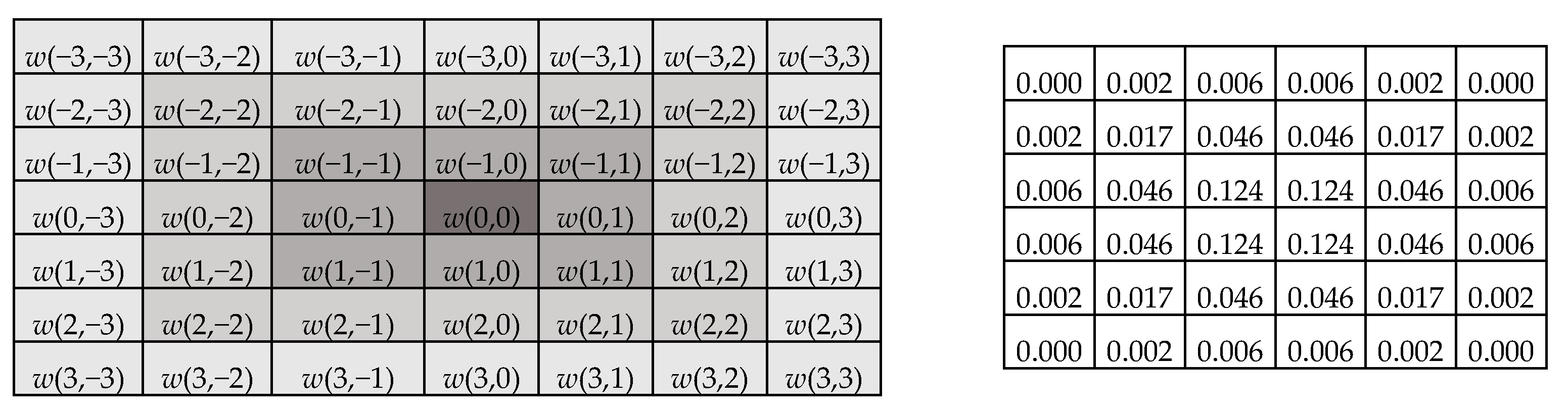

Figure 7.

Gaussian kernel (w) for s = 3 and .
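
As an illustration of how a kernel of size s and standard deviation σ can be built, here is a minimal sketch that samples an isotropic Gaussian on an s × s grid centred on the window and normalises it to sum to one. The centring and normalisation conventions are assumptions. Because the σ value is not preserved in the caption above, the example uses the GD5 setting listed in Table 3 (s = 5, σ = 1.6).

```python
import math


def gaussian_kernel(s, sigma):
    """Return an s x s Gaussian kernel normalised to sum to 1 (assumed convention)."""
    half = (s - 1) / 2.0  # centre of the window, also valid for even s
    raw = [[math.exp(-((x - half) ** 2 + (y - half) ** 2) / (2.0 * sigma ** 2))
            for x in range(s)] for y in range(s)]
    total = sum(sum(row) for row in raw)
    return [[v / total for v in row] for row in raw]


w = gaussian_kernel(5, 1.6)  # GD5 setting from Table 3
```

Normalising the kernel keeps the overall level of the smoothed difference image unchanged, so maps computed with different s and σ remain comparable.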

Figure 8.

Optimization of the parameters of the proposed GD fusion method.

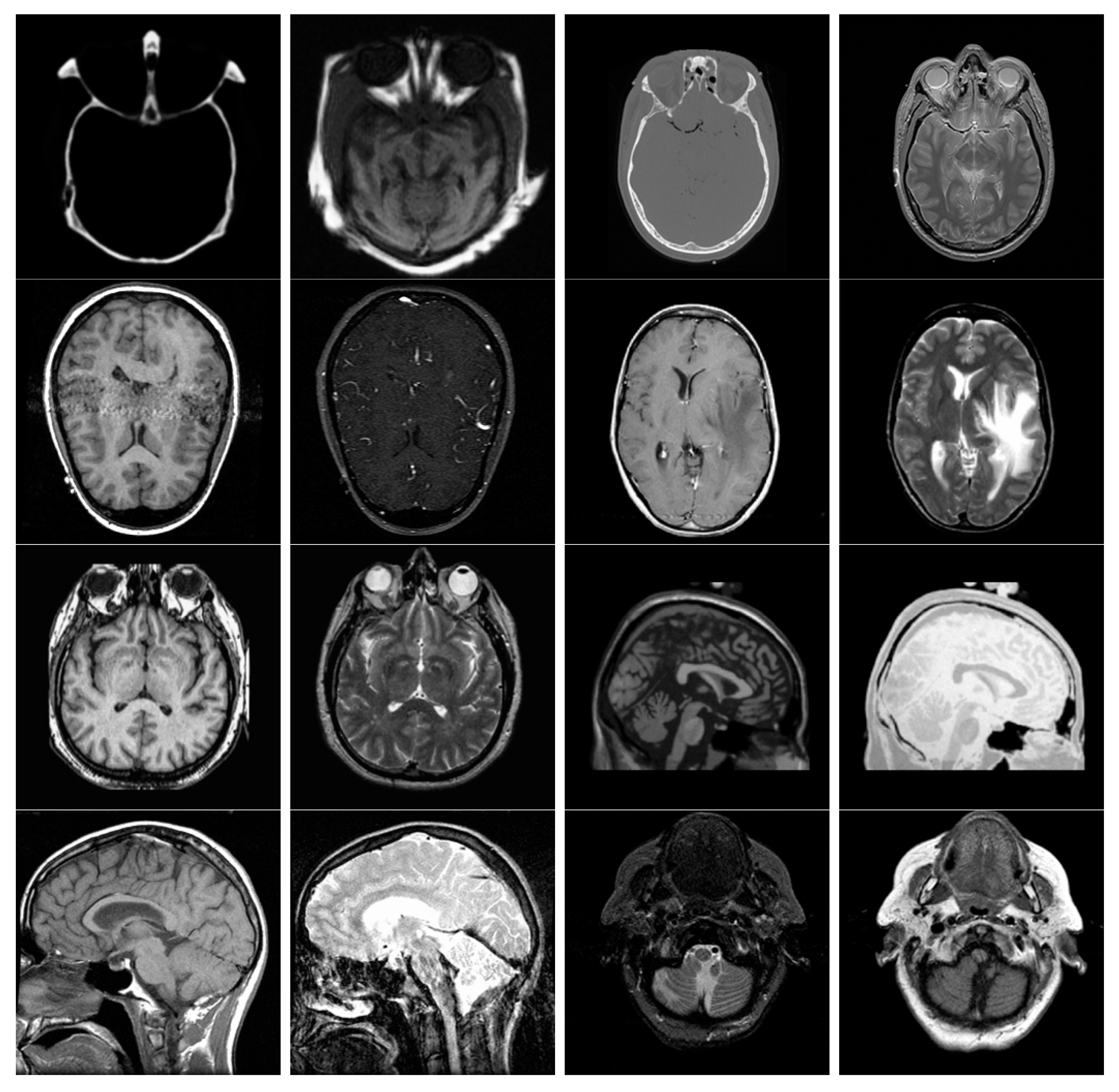

Figure 9.

Multi-modal medical images used in the experiments.

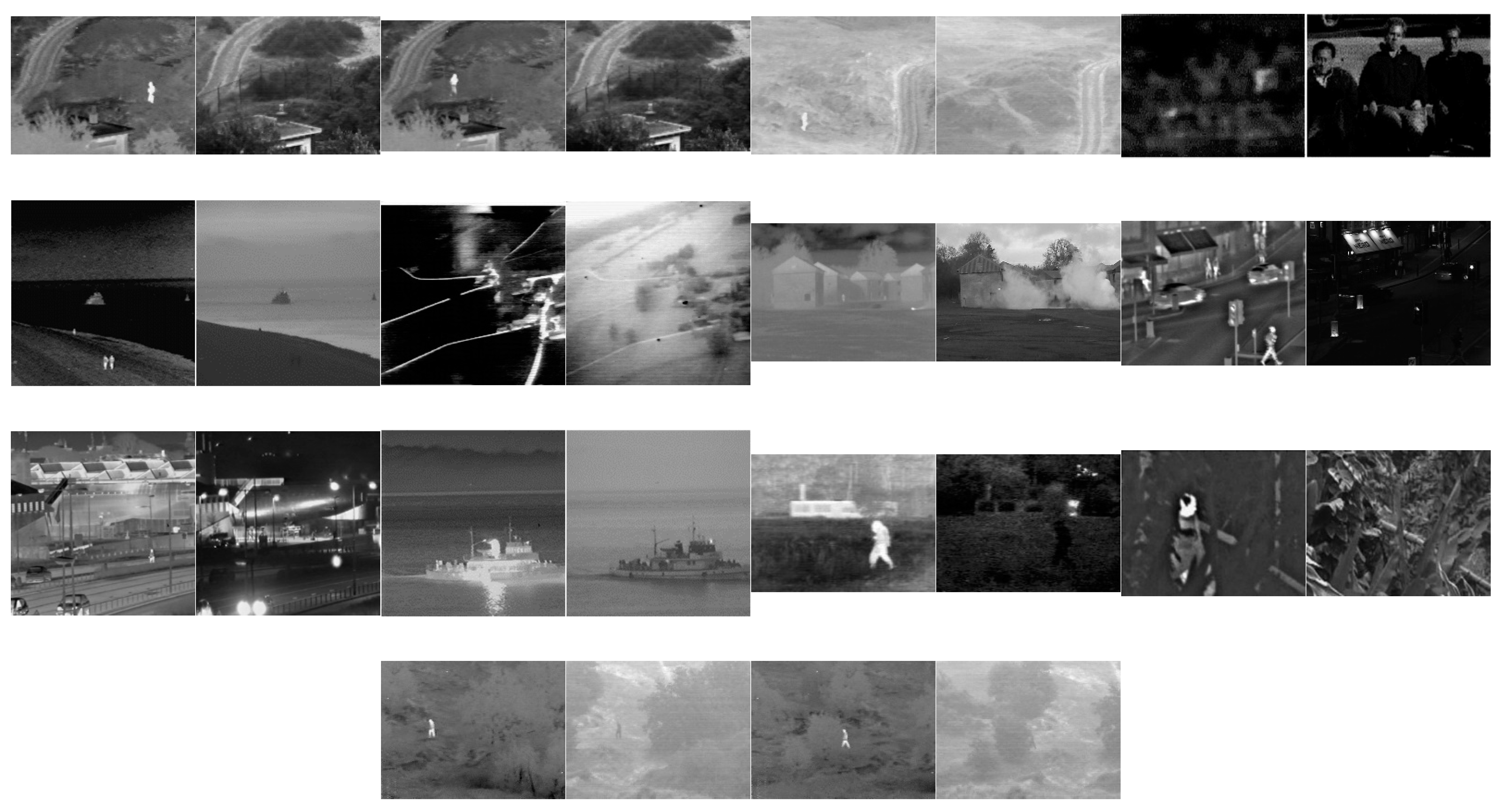

Figure 10.

Multi-sensor infrared and visible images used in the experiments.

Figure 11.

Multi-focus images used in the experiments.

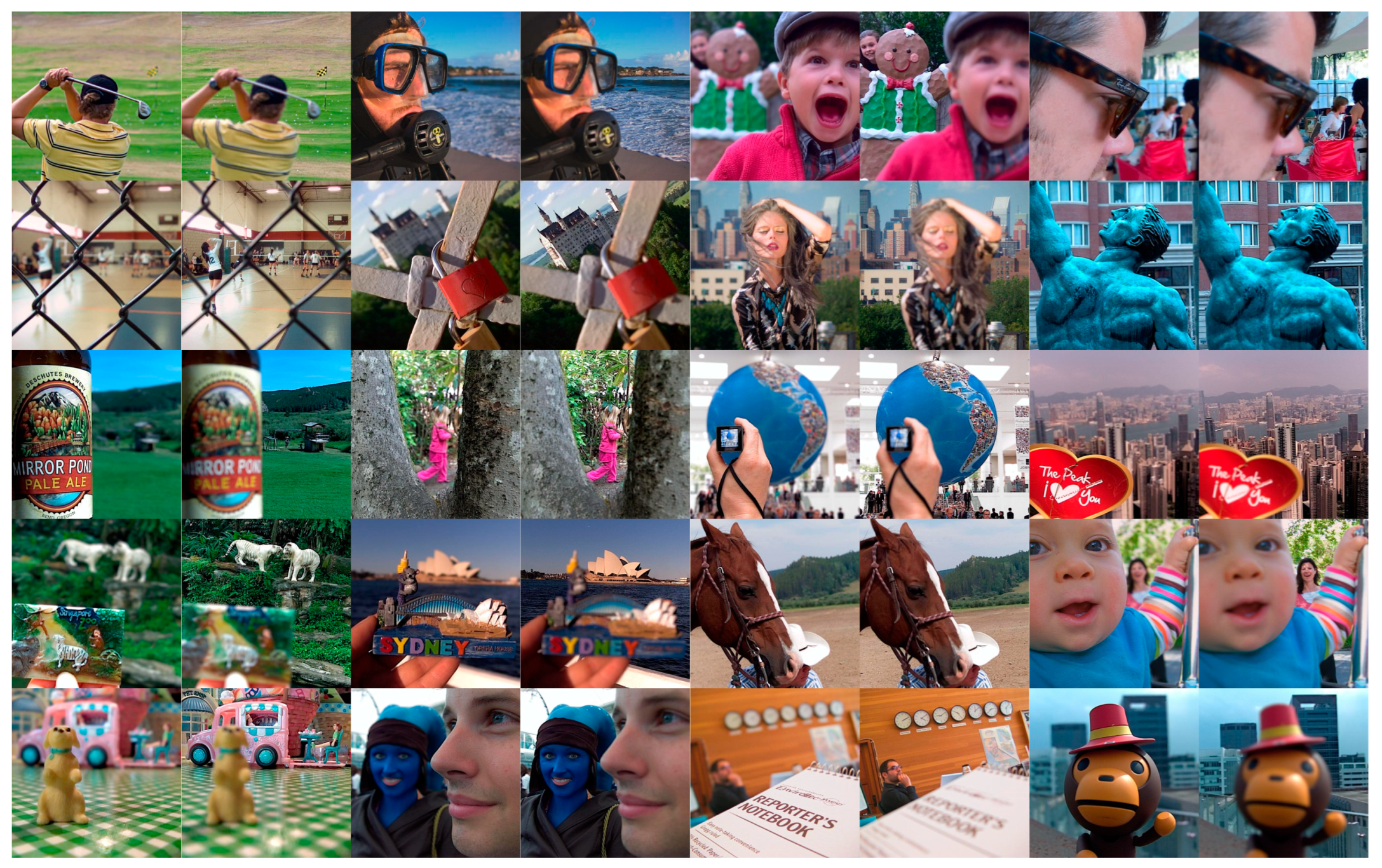

Figure 12.

Multi-exposure images used in the experiments.

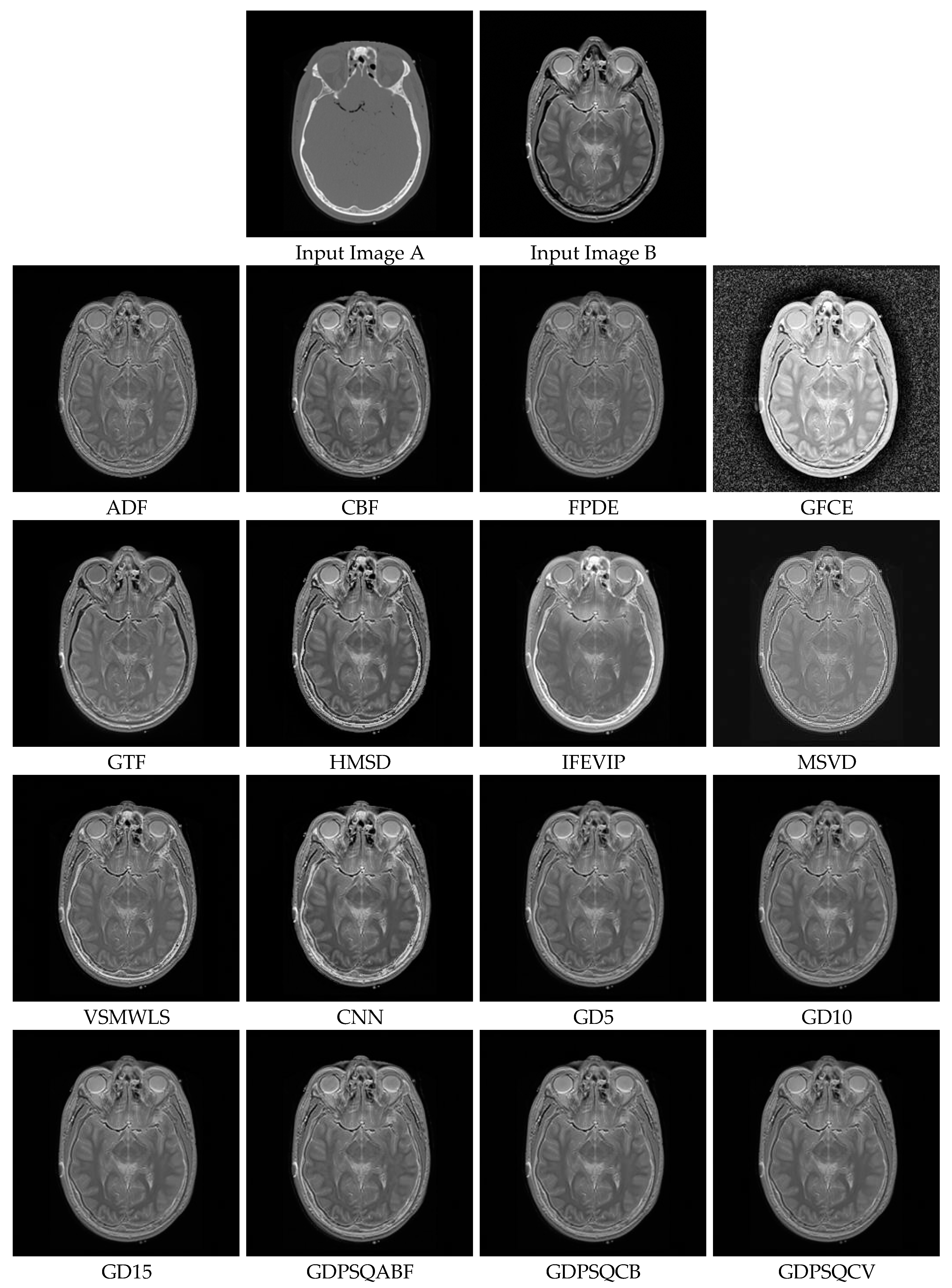

Figure 13.

Medical image set M#2 (Images A and B) and their fusion image results, obtained using comparison methods.

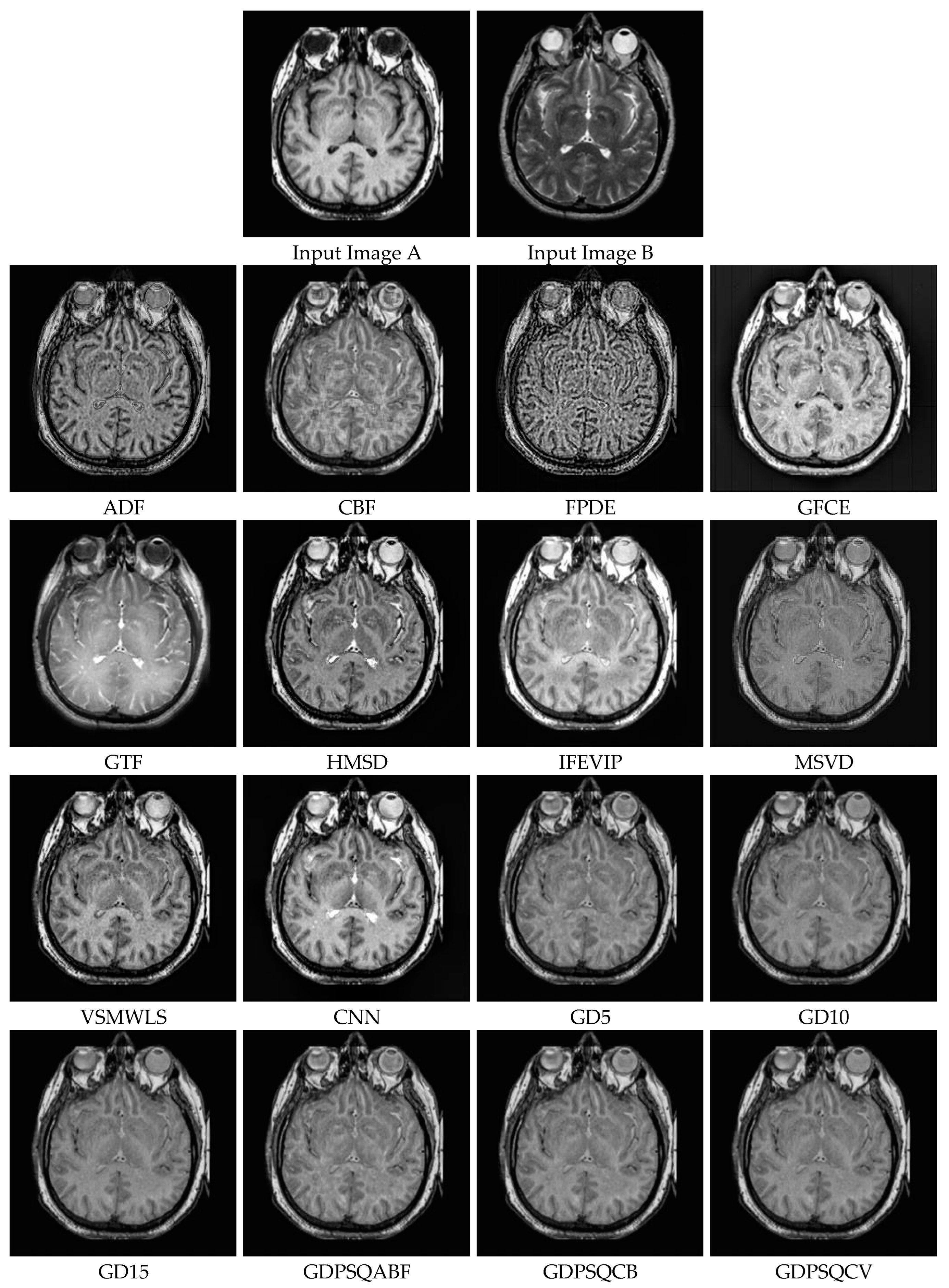

Figure 14.

Medical image set M#5 (Images A and B) and their fusion image results, obtained using comparison methods.

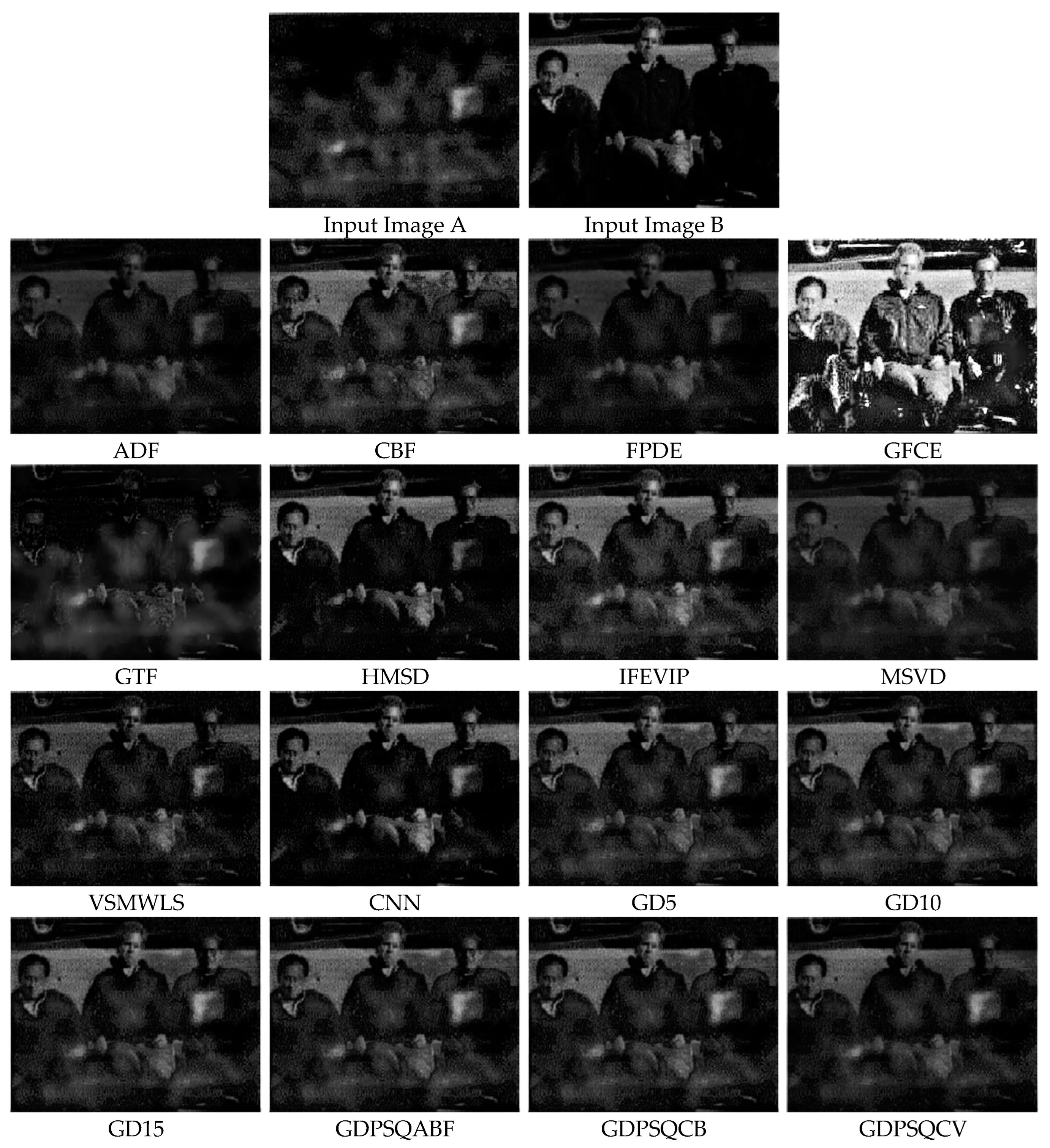

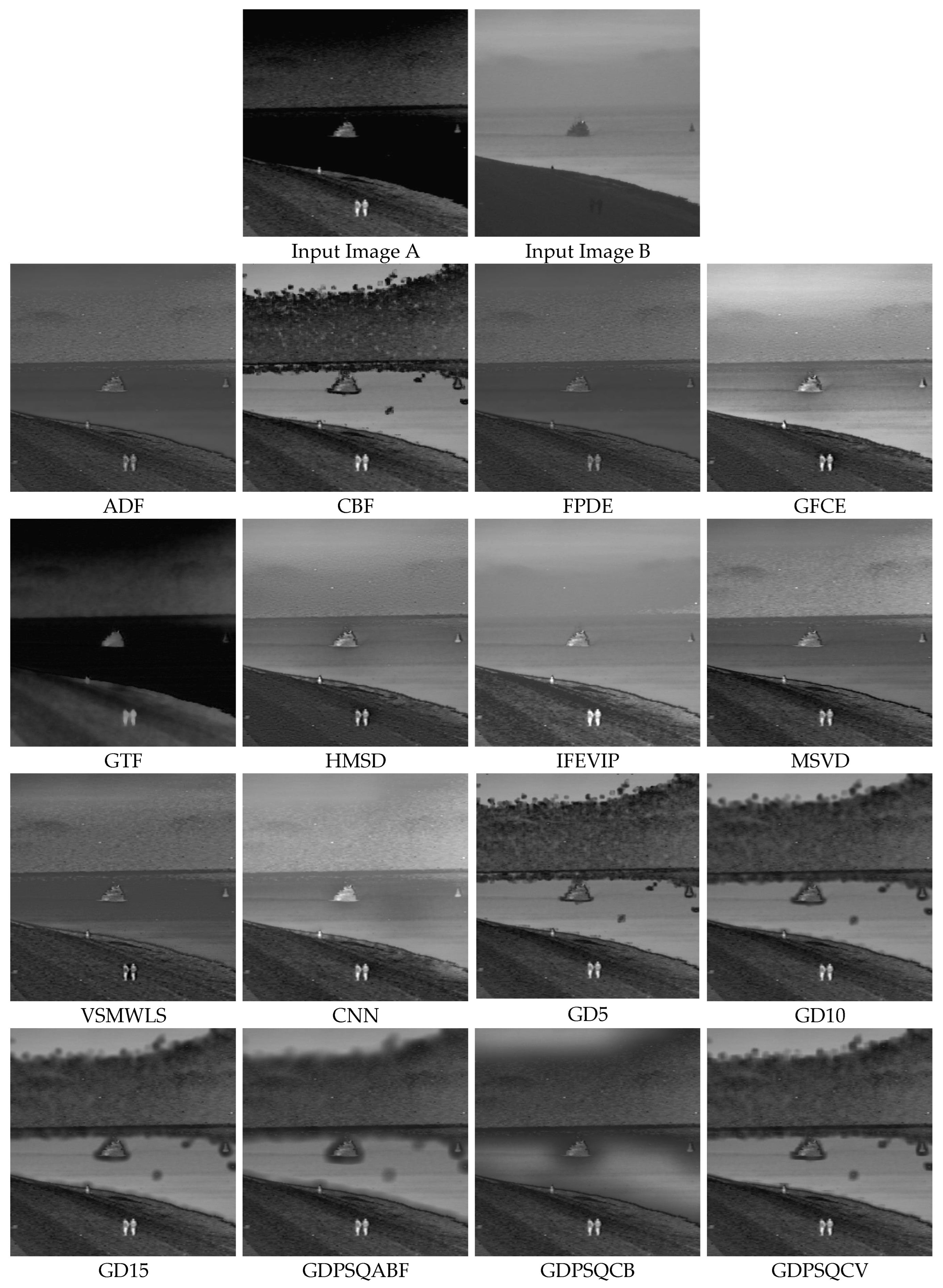

Figure 15.

Infrared and visible image set IV#4 (Images A and B) and their fusion image results, obtained using comparison methods.

Figure 16.

Infrared and visible image set IV#5 (Images A and B) and their fusion image results, obtained using comparison methods.

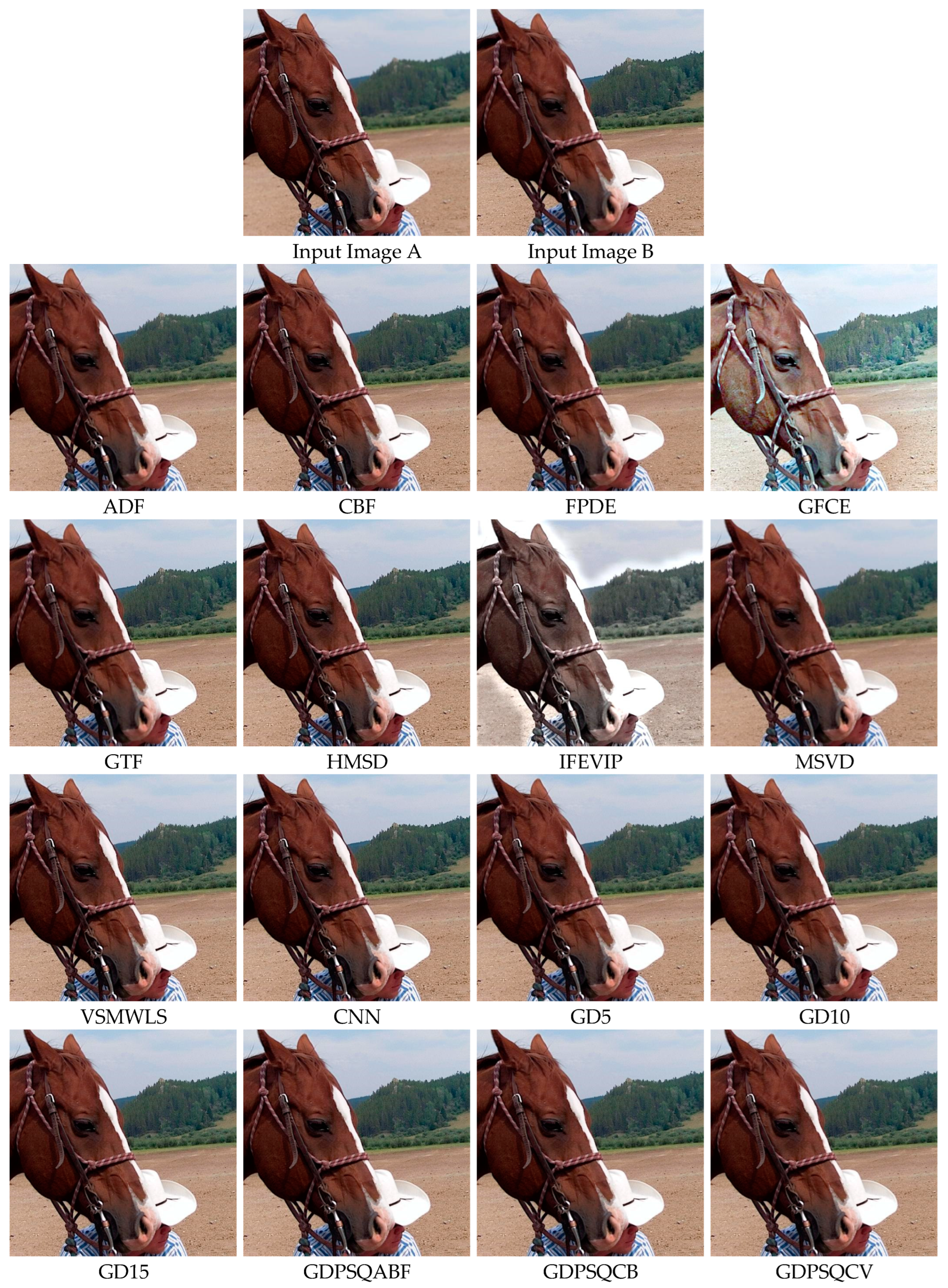

Figure 17.

Multi-focus image set F#11 (Images A and B) and their fusion image results, obtained using comparison methods.

Figure 18.

Multi-focus image set F#15 (Images A and B) and their fusion image results, obtained using comparison methods.

Figure 19.

Multi-exposure image set E#5 (Images A and B) and their fusion image results, obtained using comparison methods.

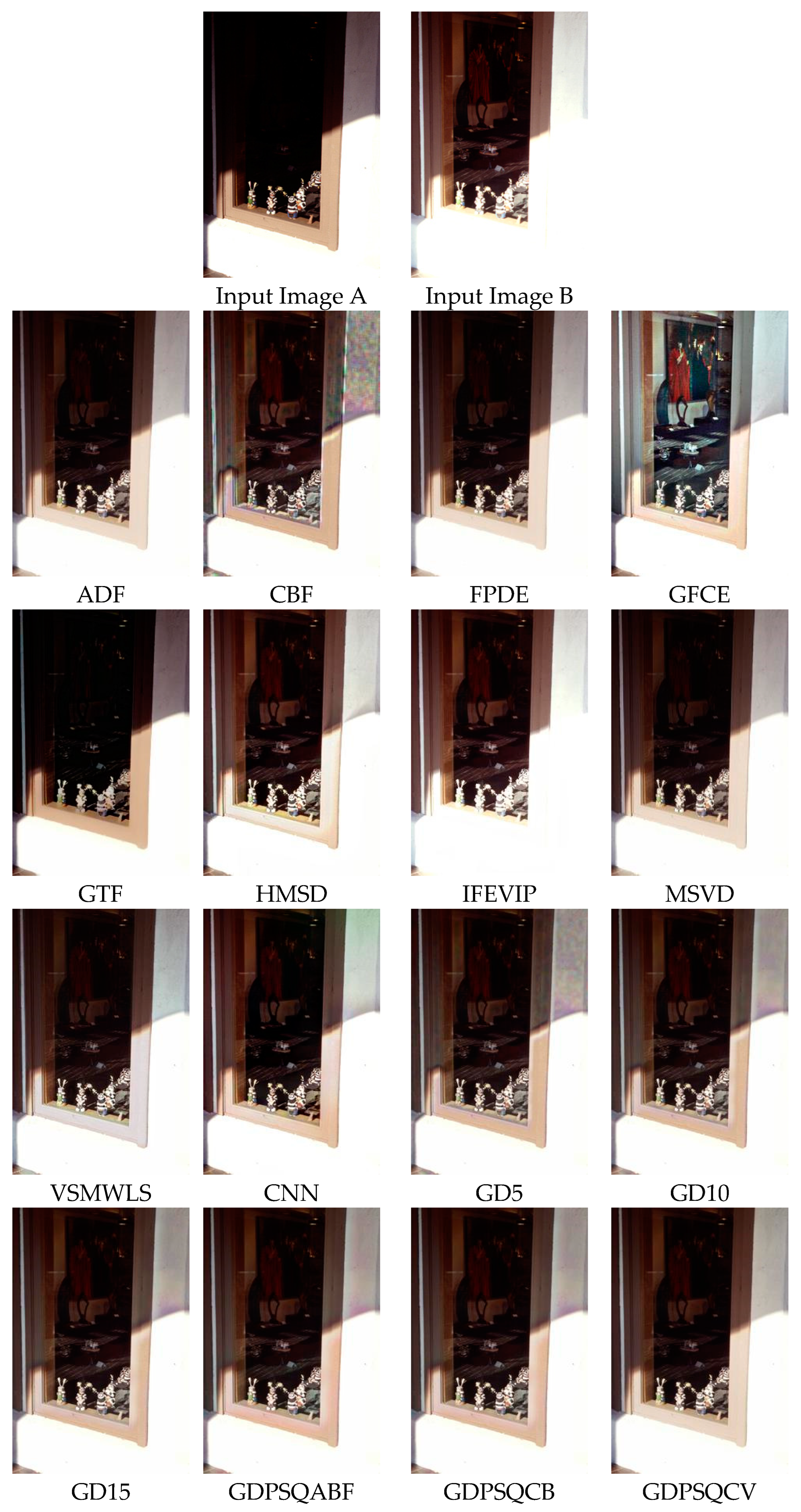

Figure 20.

Multi-exposure image set E#6 (Images A and B) and their fusion image results, obtained using comparison methods.

Table 1.

Specifications of the image dataset used in the experiments.

| Application | Images in Dataset | Image Type | Resolution |
|---|---|---|---|
| Multi-modal medical | 8 | Graylevel TIF | 256 × 256 |
| Multi-sensor infrared and visible | 14 | Graylevel PNG | 360 × 270, 430 × 340, 512 × 512, 632 × 496 |
| Multi-focus | 20 | RGB JPG | 520 × 520 |
| Multi-exposure | 6 | RGB JPG | 340 × 230, 230 × 340, 752 × 500 |
| Total | 48 | - | - |

Table 2.

Specifications of the implementation environment used for the experiments.

| Environmental Feature | Description |
|---|---|
| Operating system | Windows 10 Pro |
| CPU | Intel i7-4790K @ 4 GHz |
| GPU | Nvidia GeForce GTX 760 |
| RAM | 16 GB |
| Programming language | MATLAB 2023a |

Table 3.

Configuration parameters of the fusion methods used in the experiments.

| Fusion Method | Configuration Parameters |
|---|---|
| ADF | num_iter = 10, delta_t = 0.15, kappa = 30, option = 1 |
| CBF | cov_wsize = 5, sigmas = 1.8, sigmar = 25, ksize = 11 |
| FPDE | n = 15, dt = 0.9, k = 4 |
| GFCE | nLevel = 4, sigma = 2, k = 2, r0 = 2, eps0 = 0.1, l = 2 |
| GTF | adapt_epsR = 1, epsR_cutoff = 0.01, adapt_epsF = 1, epsF_cutoff = 0.05, pcgtol_ini = 1 × 10⁻⁴, loops = 5, pcgtol_ini = 1 × 10⁻², adaptPCGtol = 1 |
| HMSD | nLevel = 4, lambda = 30, sigma = 2.0, sigma_r = 0.05, k = 2 |
| IFEVIP | QuadNormDim = 512, QuadMinDim = 32, GaussScale = 9, MaxRatio = 0.001, StdRatio = 0.8 |
| MSVD | - |
| VSMWLS | sigma_s = 2, sigma_r = 0.05 |
| CNN | type = siamese network, weights_b1_1 = 9 ∗ 64, weights_b1_2 = 64 ∗ 9 ∗ 128, weights_b1_3 = 128 ∗ 9 ∗ 256, weights_output = 512 ∗ 64 ∗ 2 |
| Proposed GD5 | s = 5, σ = 1.6 |
| Proposed GD10 | s = 10, σ = 3.3 |
| Proposed GD15 | s = 15, σ = 5 |
| Proposed GDPSQABF | optimizer = pattern search, algorithm = classic, init_sol = [10; 3.3], lb = [5; 1], ub = [80; 100], max_iter = 20, fit_fun = −1 ∗ Qabf |
| Proposed GDPSQCB | optimizer = pattern search, algorithm = classic, init_sol = [10; 3.3], lb = [5; 1], ub = [80; 100], max_iter = 20, fit_fun = −1 ∗ Qcb |
| Proposed GDPSQCV | optimizer = pattern search, algorithm = classic, init_sol = [10; 3.3], lb = [5; 1], ub = [80; 100], max_iter = 20, fit_fun = Qcv |
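
To make the pattern search rows of Table 3 more concrete, here is a minimal sketch of a bounded coordinate (compass) pattern search that minimises a fitness function over (s, σ). It is a simplified illustration and not MATLAB's pattern search implementation. The starting point, bounds, and iteration cap are taken from Table 3, while `toy_fitness` is a hypothetical stand-in for −Qabf, since computing the real metric requires the fused image.

```python
def pattern_search(fit_fun, x0, lb, ub, max_iter=20, step=1.0, tol=1e-6):
    """Minimise fit_fun by polling +/- step along each coordinate within [lb, ub]."""
    x = list(x0)
    fx = fit_fun(x)
    for _ in range(max_iter):
        improved = False
        for i in range(len(x)):
            for direction in (1.0, -1.0):
                trial = list(x)
                # Clamp the trial point to the box constraints.
                trial[i] = min(max(x[i] + direction * step, lb[i]), ub[i])
                f_trial = fit_fun(trial)
                if f_trial < fx:
                    x, fx, improved = trial, f_trial, True
        # Expand the step after a successful poll, contract it otherwise.
        step = step * 2.0 if improved else step / 2.0
        if step < tol:
            break
    return x, fx


def toy_fitness(params):
    """Hypothetical stand-in for -Qabf; its minimum is at s = 12, sigma = 4."""
    s, sigma = params
    return (s - 12.0) ** 2 + (sigma - 4.0) ** 2


best, best_val = pattern_search(toy_fitness, x0=[10.0, 3.3],
                                lb=[5.0, 1.0], ub=[80.0, 100.0])
```

Negating Qabf and Qcb while using Qcv directly, as in Table 3, turns all three cases into minimisation problems, so the same search routine can serve each variant. In a real setting s would presumably also need to be rounded to an integer kernel size, which this sketch does not do.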

Table 4.

Quality metric scores of medical image set M#2, obtained using comparison methods.

| Method | EN | MI | PSNR | Qabf | SSIM | Qcb | CE | RMSE | Qcv |
|---|---|---|---|---|---|---|---|---|---|
| ADF | 4.783 | 2.308 | 59.298 | 0.467 | 1.498 | 0.363 | 1.281 | 0.076 | 858.898 |
| CBF | 5.015 | 2.494 | 58.979 | 0.531 | 1.496 | 0.407 | 1.198 | 0.082 | 858.355 |
| FPDE | 4.836 | 2.339 | 59.397 | 0.433 | 1.505 | 0.348 | 1.190 | 0.075 | 840.941 |
| GFCE | 7.615 | 2.190 | 53.849 | 0.474 | 0.463 | 0.389 | 4.502 | 0.268 | 1643.875 |
| GTF | 4.813 | 2.248 | 58.770 | 0.574 | 1.486 | 0.637 | 0.831 | 0.086 | 1154.964 |
| HMSD | 4.831 | 2.286 | 58.628 | 0.550 | 1.488 | 0.442 | 0.852 | 0.089 | 999.258 |
| IFEVIP | 5.153 | 2.457 | 57.528 | 0.484 | 1.495 | 0.365 | 1.352 | 0.115 | 1242.540 |
| MSVD | 4.823 | 2.368 | 57.327 | 0.471 | 0.690 | 0.201 | 5.933 | 0.120 | 813.834 |
| VSMWLS | 5.024 | 2.352 | 59.033 | 0.529 | 1.530 | 0.469 | 0.667 | 0.081 | 964.498 |
| CNN | 4.932 | 2.337 | 58.484 | 0.554 | 1.505 | 0.603 | 0.705 | 0.092 | 1016.499 |
| GD5 | 4.901 | 2.478 | 59.209 | 0.506 | 1.519 | 0.389 | 1.247 | 0.078 | 805.711 |
| GD10 | 4.854 | 2.463 | 59.300 | 0.479 | 1.522 | 0.393 | 1.220 | 0.076 | 780.445 |
| GD15 | 4.819 | 2.452 | 59.342 | 0.464 | 1.522 | 0.391 | 1.208 | 0.076 | 773.312 |
| GDPSQABF | 4.934 | 2.471 | 59.145 | 0.516 | 1.514 | 0.386 | 1.236 | 0.079 | 841.810 |
| GDPSQCB | 4.862 | 2.472 | 59.280 | 0.485 | 1.521 | 0.390 | 1.240 | 0.077 | 785.127 |
| GDPSQCV | 4.796 | 2.443 | 59.369 | 0.456 | 1.524 | 0.392 | 1.145 | 0.075 | 781.420 |

Table 5.

Quality metric scores of medical image set M#5, obtained using comparison methods.

| Method | EN | MI | PSNR | Qabf | SSIM | Qcb | CE | RMSE | Qcv |
|---|---|---|---|---|---|---|---|---|---|
| ADF | 5.975 | 2.288 | 56.459 | 0.408 | 1.170 | 0.503 | 0.329 | 0.147 | 845.674 |
| CBF | 5.962 | 2.571 | 56.592 | 0.512 | 1.289 | 0.511 | 0.293 | 0.143 | 523.914 |
| FPDE | 6.408 | 2.122 | 56.007 | 0.305 | 1.019 | 0.475 | 0.404 | 0.163 | 896.311 |
| GFCE | 7.311 | 2.382 | 54.953 | 0.447 | 0.964 | 0.492 | 2.920 | 0.208 | 751.841 |
| GTF | 6.006 | 2.386 | 55.932 | 0.404 | 1.275 | 0.431 | 0.287 | 0.166 | 1677.168 |
| HMSD | 6.400 | 2.435 | 56.376 | 0.513 | 1.324 | 0.519 | 0.564 | 0.150 | 549.287 |
| IFEVIP | 6.348 | 2.554 | 55.266 | 0.528 | 1.338 | 0.508 | 0.798 | 0.193 | 628.882 |
| MSVD | 5.752 | 2.405 | 56.837 | 0.404 | 1.183 | 0.386 | 3.935 | 0.135 | 694.471 |
| VSMWLS | 6.170 | 2.659 | 56.588 | 0.512 | 1.355 | 0.524 | 0.344 | 0.143 | 495.160 |
| CNN | 6.913 | 2.585 | 56.001 | 0.571 | 1.277 | 0.533 | 1.427 | 0.163 | 449.774 |
| GD5 | 5.822 | 2.557 | 56.906 | 0.473 | 1.352 | 0.467 | 0.329 | 0.133 | 500.209 |
| GD10 | 5.791 | 2.574 | 56.966 | 0.453 | 1.371 | 0.479 | 0.338 | 0.131 | 454.096 |
| GD15 | 5.776 | 2.562 | 56.997 | 0.436 | 1.374 | 0.470 | 0.340 | 0.130 | 454.362 |
| GDPSQABF | 5.820 | 2.553 | 56.901 | 0.474 | 1.349 | 0.483 | 0.327 | 0.133 | 511.725 |
| GDPSQCB | 5.796 | 2.564 | 56.954 | 0.457 | 1.367 | 0.481 | 0.331 | 0.131 | 455.932 |
| GDPSQCV | 5.782 | 2.568 | 56.983 | 0.444 | 1.373 | 0.474 | 0.339 | 0.130 | 452.181 |

Table 6.

Quality metric scores of infrared and visible image set IV#4, obtained using comparison methods.

| Method | EN | MI | PSNR | Qabf | SSIM | Qcb | CE | RMSE | Qcv |
|---|---|---|---|---|---|---|---|---|---|
| ADF | 6.132 | 1.468 | 60.947 | 0.470 | 1.045 | 0.434 | 0.790 | 0.052 | 118.387 |
| CBF | 6.730 | 1.919 | 59.715 | 0.632 | 1.102 | 0.473 | 0.782 | 0.069 | 211.646 |
| FPDE | 6.159 | 1.325 | 60.930 | 0.481 | 1.027 | 0.434 | 0.740 | 0.052 | 119.226 |
| GFCE | 7.644 | 1.231 | 55.427 | 0.391 | 0.465 | 0.393 | 2.065 | 0.186 | 646.551 |
| GTF | 6.161 | 1.016 | 60.122 | 0.311 | 0.863 | 0.310 | 0.575 | 0.063 | 160.482 |
| HMSD | 6.070 | 1.485 | 60.356 | 0.579 | 0.998 | 0.462 | 0.391 | 0.060 | 165.976 |
| IFEVIP | 6.869 | 2.143 | 59.503 | 0.670 | 1.129 | 0.469 | 0.891 | 0.073 | 206.815 |
| MSVD | 6.024 | 1.578 | 60.779 | 0.309 | 0.944 | 0.339 | 5.285 | 0.054 | 154.207 |
| VSMWLS | 6.297 | 1.403 | 60.621 | 0.617 | 1.072 | 0.437 | 0.494 | 0.056 | 145.077 |
| CNN | 5.735 | 1.350 | 60.244 | 0.562 | 0.956 | 0.424 | 0.282 | 0.061 | 178.710 |
| GD5 | 6.672 | 1.791 | 60.006 | 0.628 | 1.135 | 0.469 | 0.812 | 0.065 | 152.836 |
| GD10 | 6.670 | 1.761 | 60.027 | 0.632 | 1.148 | 0.470 | 0.798 | 0.065 | 146.837 |
| GD15 | 6.665 | 1.723 | 60.052 | 0.629 | 1.151 | 0.469 | 0.787 | 0.064 | 135.276 |
| GDPSQABF | 6.671 | 1.769 | 60.023 | 0.632 | 1.147 | 0.469 | 0.802 | 0.065 | 148.808 |
| GDPSQCB | 6.672 | 1.763 | 60.029 | 0.630 | 1.146 | 0.470 | 0.796 | 0.065 | 145.011 |
| GDPSQCV | 6.495 | 1.479 | 60.470 | 0.564 | 1.127 | 0.463 | 0.763 | 0.058 | 78.475 |

Table 7.

Quality metric scores of infrared and visible image set IV#5, obtained using comparison methods.

| Method | EN | MI | PSNR | Qabf | SSIM | Qcb | CE | RMSE | Qcv |
|---|---|---|---|---|---|---|---|---|---|
| ADF | 5.981 | 2.091 | 58.438 | 0.588 | 1.422 | 0.415 | 3.677 | 0.093 | 649.629 |
| CBF | 6.896 | 2.822 | 57.178 | 0.600 | 1.227 | 0.492 | 2.157 | 0.125 | 639.983 |
| FPDE | 5.972 | 2.149 | 58.439 | 0.559 | 1.422 | 0.418 | 3.275 | 0.093 | 625.309 |
| GFCE | 7.230 | 2.124 | 57.075 | 0.558 | 1.327 | 0.375 | 3.706 | 0.128 | 80.675 |
| GTF | 5.520 | 1.997 | 58.210 | 0.183 | 1.380 | 0.323 | 2.877 | 0.098 | 2764.969 |
| HMSD | 6.722 | 2.092 | 58.250 | 0.613 | 1.412 | 0.368 | 1.318 | 0.097 | 237.620 |
| IFEVIP | 6.409 | 3.898 | 57.700 | 0.551 | 1.362 | 0.366 | 0.918 | 0.110 | 246.528 |
| MSVD | 6.870 | 2.735 | 58.210 | 0.625 | 1.334 | 0.459 | 3.007 | 0.098 | 609.813 |
| VSMWLS | 6.129 | 1.800 | 58.400 | 0.647 | 1.408 | 0.403 | 5.896 | 0.094 | 667.889 |
| CNN | 6.781 | 2.059 | 57.686 | 0.743 | 1.345 | 0.411 | 4.080 | 0.111 | 256.092 |
| GD5 | 6.738 | 2.334 | 57.497 | 0.567 | 1.285 | 0.497 | 3.769 | 0.116 | 524.444 |
| GD10 | 6.693 | 2.349 | 57.528 | 0.625 | 1.351 | 0.519 | 3.983 | 0.115 | 503.300 |
| GD15 | 6.677 | 2.303 | 57.559 | 0.652 | 1.370 | 0.541 | 1.177 | 0.114 | 496.323 |
| GDPSQABF | 6.647 | 2.169 | 57.616 | 0.658 | 1.383 | 0.543 | 1.307 | 0.113 | 492.907 |
| GDPSQCB | 6.395 | 1.502 | 57.954 | 0.618 | 1.421 | 0.535 | 1.852 | 0.104 | 729.452 |
| GDPSQCV | 6.677 | 2.312 | 57.546 | 0.630 | 1.360 | 0.536 | 4.001 | 0.114 | 487.171 |

Table 8.

Quality metric scores of multi-focus image set F#11, obtained using comparison methods.

| Method | EN | MI | PSNR | Qabf | SSIM | Qcb | CE | RMSE | Qcv |
|---|---|---|---|---|---|---|---|---|---|
| ADF | 7.669 | 4.513 | 63.818 | 0.610 | 1.654 | 0.643 | 0.017 | 0.027 | 101.099 |
| CBF | 7.681 | 5.319 | 63.383 | 0.752 | 1.647 | 0.758 | 0.019 | 0.030 | 20.292 |
| FPDE | 7.661 | 4.401 | 63.914 | 0.570 | 1.663 | 0.622 | 0.021 | 0.026 | 91.789 |
| GFCE | 6.962 | 2.861 | 58.590 | 0.600 | 1.419 | 0.527 | 0.543 | 0.090 | 130.958 |
| GTF | 7.670 | 4.585 | 63.464 | 0.708 | 1.637 | 0.660 | 0.019 | 0.029 | 65.129 |
| HMSD | 7.650 | 4.999 | 63.173 | 0.738 | 1.642 | 0.742 | 0.020 | 0.031 | 15.926 |
| IFEVIP | 7.019 | 2.661 | 59.720 | 0.449 | 1.500 | 0.505 | 0.361 | 0.069 | 321.098 |
| MSVD | 7.669 | 4.149 | 63.421 | 0.427 | 1.633 | 0.616 | 0.020 | 0.030 | 94.007 |
| VSMWLS | 7.666 | 4.424 | 63.498 | 0.674 | 1.655 | 0.664 | 0.015 | 0.029 | 39.009 |
| CNN | 7.668 | 5.404 | 63.106 | 0.757 | 1.635 | 0.769 | 0.030 | 0.032 | 14.200 |
| GD5 | 7.688 | 4.754 | 63.655 | 0.724 | 1.665 | 0.710 | 0.023 | 0.028 | 32.352 |
| GD10 | 7.685 | 4.747 | 63.696 | 0.723 | 1.667 | 0.712 | 0.022 | 0.028 | 27.732 |
| GD15 | 7.684 | 4.745 | 63.714 | 0.722 | 1.667 | 0.713 | 0.022 | 0.028 | 26.936 |
| GDPSQABF | 7.688 | 4.756 | 63.651 | 0.725 | 1.665 | 0.709 | 0.023 | 0.028 | 33.095 |
| GDPSQCB | 7.685 | 4.747 | 63.696 | 0.722 | 1.667 | 0.712 | 0.022 | 0.028 | 27.694 |
| GDPSQCV | 7.683 | 4.709 | 63.781 | 0.714 | 1.669 | 0.707 | 0.022 | 0.027 | 26.191 |

Table 9.

Quality metric scores of multi-focus image set F#15, obtained using comparison methods.

| Method | EN | MI | PSNR | Qabf | SSIM | Qcb | CE | RMSE | Qcv |
|---|---|---|---|---|---|---|---|---|---|
| ADF | 7.611 | 5.753 | 68.864 | 0.748 | 1.856 | 0.755 | 0.009 | 0.008 | 3.640 |
| CBF | 7.628 | 6.445 | 68.394 | 0.805 | 1.840 | 0.815 | 0.011 | 0.009 | 3.873 |
| FPDE | 7.614 | 5.617 | 68.806 | 0.744 | 1.854 | 0.725 | 0.013 | 0.009 | 3.734 |
| GFCE | 7.636 | 3.140 | 57.958 | 0.610 | 1.396 | 0.625 | 0.971 | 0.105 | 94.969 |
| GTF | 7.623 | 6.540 | 69.036 | 0.791 | 1.837 | 0.786 | 0.011 | 0.008 | 5.307 |
| HMSD | 7.628 | 5.958 | 68.060 | 0.789 | 1.836 | 0.779 | 0.012 | 0.010 | 4.031 |
| IFEVIP | 7.632 | 3.663 | 60.891 | 0.627 | 1.674 | 0.616 | 0.321 | 0.053 | 158.223 |
| MSVD | 7.579 | 4.972 | 66.507 | 0.520 | 1.784 | 0.711 | 0.010 | 0.015 | 6.843 |
| VSMWLS | 7.626 | 5.828 | 68.217 | 0.787 | 1.838 | 0.751 | 0.012 | 0.010 | 3.528 |
| CNN | 7.626 | 6.829 | 68.088 | 0.811 | 1.837 | 0.829 | 0.011 | 0.010 | 3.618 |
| GD5 | 7.624 | 5.941 | 68.613 | 0.789 | 1.847 | 0.784 | 0.010 | 0.009 | 3.195 |
| GD10 | 7.623 | 5.940 | 68.629 | 0.787 | 1.848 | 0.786 | 0.010 | 0.009 | 3.211 |
| GD15 | 7.623 | 5.937 | 68.636 | 0.787 | 1.848 | 0.787 | 0.010 | 0.009 | 3.225 |
| GDPSQABF | 7.624 | 5.938 | 68.609 | 0.789 | 1.847 | 0.783 | 0.010 | 0.009 | 3.194 |
| GDPSQCB | 7.624 | 5.940 | 68.617 | 0.789 | 1.847 | 0.785 | 0.010 | 0.009 | 3.194 |
| GDPSQCV | 7.624 | 5.939 | 68.613 | 0.789 | 1.847 | 0.784 | 0.010 | 0.009 | 3.207 |

Table 10.

Quality metric scores of multi-exposure image set E#5, obtained using comparison methods.

| Method | EN | MI | PSNR | Qabf | SSIM | Qcb | CE | RMSE | Qcv |
|---|---|---|---|---|---|---|---|---|---|
| ADF | 6.530 | 3.440 | 58.730 | 0.700 | 1.719 | 0.578 | 0.544 | 0.087 | 69.401 |
| CBF | 6.704 | 3.064 | 58.370 | 0.674 | 1.641 | 0.593 | 0.537 | 0.095 | 99.078 |
| FPDE | 6.498 | 3.433 | 58.732 | 0.697 | 1.720 | 0.576 | 0.547 | 0.087 | 69.466 |
| GFCE | 5.133 | 2.764 | 57.641 | 0.569 | 1.607 | 0.469 | 1.615 | 0.112 | 165.118 |
| GTF | 6.027 | 2.950 | 58.222 | 0.638 | 1.670 | 0.509 | 0.592 | 0.098 | 112.981 |
| HMSD | 6.683 | 3.317 | 58.387 | 0.703 | 1.656 | 0.675 | 0.669 | 0.094 | 98.335 |
| IFEVIP | 5.534 | 2.471 | 57.822 | 0.551 | 1.601 | 0.477 | 0.993 | 0.108 | 188.610 |
| MSVD | 6.524 | 3.329 | 58.690 | 0.691 | 1.701 | 0.582 | 0.555 | 0.088 | 70.008 |
| VSMWLS | 6.541 | 3.278 | 58.663 | 0.703 | 1.700 | 0.607 | 0.593 | 0.089 | 74.676 |
| CNN | 6.539 | 2.893 | 58.400 | 0.702 | 1.690 | 0.618 | 1.241 | 0.094 | 92.188 |
| GD5 | 6.676 | 3.342 | 58.618 | 0.713 | 1.693 | 0.600 | 0.532 | 0.089 | 76.043 |
| GD10 | 6.665 | 3.334 | 58.636 | 0.716 | 1.699 | 0.617 | 0.536 | 0.089 | 73.182 |
| GD15 | 6.655 | 3.328 | 58.647 | 0.716 | 1.703 | 0.622 | 0.539 | 0.089 | 72.057 |
| GDPSQABF | 6.643 | 3.349 | 58.676 | 0.714 | 1.708 | 0.617 | 0.547 | 0.088 | 71.193 |
| GDPSQCB | 6.655 | 3.316 | 58.647 | 0.715 | 1.702 | 0.624 | 0.539 | 0.089 | 72.086 |
| GDPSQCV | 6.606 | 3.439 | 58.716 | 0.707 | 1.716 | 0.608 | 0.533 | 0.087 | 68.797 |

Table 11.

Quality metric scores of multi-exposure image set E#6, obtained using comparison methods.

| Method | EN | MI | PSNR | Qabf | SSIM | Qcb | CE | RMSE | Qcv |
|---|---|---|---|---|---|---|---|---|---|
| ADF | 6.382 | 3.912 | 57.541 | 0.660 | 1.510 | 0.520 | 0.792 | 0.115 | 88.447 |
| CBF | 6.674 | 3.308 | 56.844 | 0.680 | 1.377 | 0.550 | 0.881 | 0.135 | 168.641 |
| FPDE | 6.381 | 3.904 | 57.541 | 0.659 | 1.509 | 0.523 | 0.868 | 0.115 | 88.193 |
| GFCE | 6.749 | 2.497 | 54.457 | 0.644 | 1.123 | 0.510 | 3.677 | 0.233 | 241.984 |
| GTF | 5.664 | 3.065 | 57.035 | 0.594 | 1.431 | 0.555 | 0.609 | 0.129 | 201.843 |
| HMSD | 6.661 | 3.289 | 57.130 | 0.691 | 1.461 | 0.521 | 1.065 | 0.126 | 132.652 |
| IFEVIP | 6.100 | 3.716 | 57.112 | 0.619 | 1.458 | 0.468 | 1.409 | 0.126 | 126.541 |
| MSVD | 6.385 | 3.829 | 57.518 | 0.637 | 1.498 | 0.521 | 0.800 | 0.115 | 89.599 |
| VSMWLS | 6.469 | 3.650 | 57.467 | 0.669 | 1.477 | 0.540 | 0.899 | 0.117 | 88.157 |
| CNN | 6.372 | 3.141 | 57.094 | 0.704 | 1.449 | 0.538 | 1.912 | 0.127 | 125.477 |
| GD5 | 6.597 | 3.442 | 57.200 | 0.709 | 1.452 | 0.550 | 0.902 | 0.124 | 119.147 |
| GD10 | 6.608 | 3.489 | 57.232 | 0.716 | 1.475 | 0.567 | 0.924 | 0.123 | 114.830 |
| GD15 | 6.613 | 3.492 | 57.263 | 0.716 | 1.485 | 0.570 | 0.829 | 0.122 | 111.714 |
| GDPSQABF | 6.616 | 3.486 | 57.294 | 0.715 | 1.490 | 0.566 | 0.851 | 0.121 | 108.474 |
| GDPSQCB | 6.619 | 3.488 | 57.277 | 0.717 | 1.488 | 0.570 | 0.837 | 0.122 | 110.358 |
| GDPSQCV | 6.487 | 3.619 | 57.466 | 0.687 | 1.510 | 0.536 | 0.903 | 0.117 | 95.170 |

Table 12.

Average rankings of the methods with regard to their quality metrics for multi-modal medical images.

| Multi-Modal Medical Images | ADF | CBF | FPDE | GFCE | GTF | HMSD | IFEVIP | MSVD | VSMWLS | CNN | GD5 | GD10 | GD15 | GDPSQABF | GDPSQCB | GDPSQCV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Img. M#1 Rank. | 8.78 | 7.00 | 7.78 | 12.89 | 11.44 | 6.78 | 9.00 | 10.44 | 7.22 | 8.78 | 8.00 | 7.11 | 7.78 | 8.00 | 6.78 | 8.22 |
| Img. M#2 Rank. | 11.00 | 6.78 | 8.33 | 13.00 | 9.33 | 9.44 | 11.00 | 12.89 | 5.78 | 8.00 | 7.11 | 5.89 | 6.67 | 7.56 | 6.67 | 6.56 |
| Img. M#3 Rank. | 6.78 | 9.56 | 7.56 | 11.56 | 12.22 | 7.56 | 7.33 | 15.22 | 8.22 | 6.56 | 8.89 | 6.44 | 7.00 | 6.78 | 6.56 | 7.78 |
| Img. M#4 Rank. | 7.67 | 6.89 | 8.67 | 13.78 | 11.67 | 5.00 | 8.22 | 13.33 | 9.67 | 7.89 | 8.67 | 6.89 | 6.89 | 7.33 | 6.67 | 6.78 |
| Img. M#5 Rank. | 10.56 | 6.56 | 12.33 | 12.00 | 11.78 | 8.22 | 9.22 | 12.67 | 5.78 | 6.44 | 7.33 | 6.00 | 7.00 | 7.22 | 6.44 | 6.44 |
| Img. M#6 Rank. | 15.22 | 8.22 | 10.11 | 11.56 | 10.11 | 6.00 | 5.44 | 9.78 | 5.56 | 8.11 | 8.11 | 7.56 | 6.89 | 7.44 | 8.78 | 7.11 |
| Img. M#7 Rank. | 9.56 | 7.00 | 9.33 | 13.56 | 12.44 | 8.44 | 9.89 | 12.56 | 5.67 | 7.56 | 7.33 | 6.44 | 5.67 | 7.44 | 6.33 | 6.78 |
| Img. M#8 Rank. | 7.33 | 10.22 | 9.67 | 13.56 | 12.22 | 6.11 | 7.33 | 13.67 | 6.11 | 7.89 | 9.56 | 7.11 | 5.89 | 7.11 | 5.67 | 6.56 |
| Avg. Ranking | 9.61 | 7.78 | 9.22 | 12.74 | 11.40 | 7.19 | 8.43 | 12.57 | 6.75 | 7.65 | 8.13 | 6.68 | 6.72 | 7.36 | 6.74 | 7.03 |
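
The per-image values in the ranking tables are multiples of 1/9, which suggests that each entry is the mean of a method's rank over the nine quality metrics. A minimal sketch of that computation follows, using a subset of methods and metrics copied from Table 4. The metric directions are assumptions (PSNR and Qabf treated as higher-is-better, Qcv as lower-is-better, in line with the fitness functions in Table 3), and because only four methods and three metrics are included, the resulting numbers are not comparable with the table entries.

```python
# Scores for a subset of methods and metrics, copied from Table 4 (image set M#2).
scores = {
    "ADF":    {"PSNR": 59.298, "Qabf": 0.467, "Qcv": 858.898},
    "GFCE":   {"PSNR": 53.849, "Qabf": 0.474, "Qcv": 1643.875},
    "VSMWLS": {"PSNR": 59.033, "Qabf": 0.529, "Qcv": 964.498},
    "GD10":   {"PSNR": 59.300, "Qabf": 0.479, "Qcv": 780.445},
}
# Assumed direction of each metric: True means a larger score is better.
higher_is_better = {"PSNR": True, "Qabf": True, "Qcv": False}


def average_ranks(scores, higher_is_better):
    """Rank methods per metric (1 = best) and average the ranks per method."""
    methods = list(scores)
    totals = {m: 0 for m in methods}
    for metric, larger_wins in higher_is_better.items():
        ordered = sorted(methods, key=lambda m: scores[m][metric], reverse=larger_wins)
        for rank, m in enumerate(ordered, start=1):
            totals[m] += rank
    return {m: totals[m] / len(higher_is_better) for m in methods}


avg = average_ranks(scores, higher_is_better)
```

Ties are broken here by list order, which is a simplification; the rounded scores in the tables contain ties, and how they were resolved is not stated in this section.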

Table 13.

Average rankings of the methods with regard to their quality metrics for infrared and visible images.

| Infrared and Visible Images | ADF | CBF | FPDE | GFCE | GTF | HMSD | IFEVIP | MSVD | VSMWLS | CNN | GD5 | GD10 | GD15 | GDPSQABF | GDPSQCB | GDPSQCV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Img. IV#1 Rank. | 5.67 | 11.33 | 7.11 | 11.11 | 10.00 | 5.56 | 10.33 | 10.11 | 7.22 | 5.67 | 10.33 | 8.67 | 8.44 | 9.11 | 7.44 | 7.89 |
| Img. IV#2 Rank. | 8.00 | 11.22 | 8.33 | 10.22 | 10.56 | 5.78 | 10.11 | 8.78 | 7.33 | 6.22 | 10.89 | 9.33 | 7.89 | 7.22 | 7.33 | 6.78 |
| Img. IV#3 Rank. | 6.11 | 11.67 | 7.11 | 10.44 | 10.89 | 5.33 | 11.00 | 10.00 | 6.44 | 5.67 | 10.11 | 8.56 | 8.00 | 9.00 | 8.11 | 7.56 |
| Img. IV#4 Rank. | 7.89 | 7.33 | 8.11 | 13.67 | 11.56 | 8.78 | 8.33 | 11.11 | 7.33 | 10.44 | 8.22 | 6.78 | 6.22 | 7.33 | 6.11 | 6.78 |
| Img. IV#5 Rank. | 8.33 | 9.44 | 7.78 | 10.67 | 11.33 | 6.44 | 7.89 | 7.11 | 9.44 | 8.67 | 10.22 | 8.89 | 6.67 | 6.22 | 8.56 | 8.33 |
| Img. IV#6 Rank. | 8.78 | 9.89 | 7.22 | 8.22 | 9.78 | 6.33 | 9.33 | 9.00 | 7.56 | 9.78 | 10.00 | 8.56 | 6.89 | 6.67 | 11.22 | 6.78 |
| Img. IV#7 Rank. | 7.11 | 11.56 | 7.44 | 9.00 | 12.22 | 5.22 | 9.22 | 8.67 | 9.89 | 5.00 | 11.56 | 10.00 | 8.56 | 6.00 | 9.11 | 5.44 |
| Img. IV#8 Rank. | 8.67 | 10.56 | 10.78 | 10.89 | 10.78 | 6.22 | 8.44 | 10.11 | 6.78 | 6.33 | 10.00 | 9.00 | 8.00 | 6.67 | 6.56 | 6.22 |
| Img. IV#9 Rank. | 7.89 | 10.11 | 7.11 | 11.22 | 15.00 | 7.33 | 10.67 | 7.89 | 5.78 | 6.44 | 10.56 | 7.67 | 7.00 | 7.00 | 6.67 | 7.67 |
| Img. IV#10 Rank. | 7.56 | 11.22 | 8.33 | 8.78 | 9.33 | 8.56 | 9.22 | 11.78 | 7.00 | 7.56 | 11.22 | 9.56 | 8.00 | 5.33 | 6.22 | 6.33 |
| Img. IV#11 Rank. | 8.11 | 11.33 | 8.11 | 12.78 | 7.56 | 9.67 | 6.78 | 6.67 | 5.33 | 6.78 | 10.44 | 9.78 | 8.33 | 6.11 | 11.33 | 6.89 |
| Img. IV#12 Rank. | 10.44 | 8.33 | 10.11 | 12.00 | 12.11 | 7.11 | 10.11 | 9.22 | 9.89 | 7.22 | 9.44 | 6.89 | 6.00 | 6.56 | 5.56 | 5.00 |
| Img. IV#13 Rank. | 7.78 | 12.33 | 7.89 | 7.89 | 13.00 | 7.11 | 5.89 | 9.00 | 6.44 | 6.44 | 10.11 | 8.78 | 7.89 | 7.56 | 10.89 | 7.00 |
| Img. IV#14 Rank. | 8.11 | 12.11 | 8.11 | 7.89 | 11.22 | 5.67 | 5.33 | 9.89 | 6.22 | 7.22 | 10.11 | 8.89 | 8.67 | 7.89 | 10.67 | 8.00 |
| Avg. Ranking | 7.89 | 10.60 | 8.11 | 10.34 | 11.10 | 6.79 | 8.76 | 9.24 | 7.33 | 7.10 | 10.23 | 8.67 | 7.61 | 7.05 | 8.27 | 6.91 |

Table 14.

Average rankings of the methods with regard to their quality metrics for multi-focus images.

| Multi-Focus Images | ADF | CBF | FPDE | GFCE | GTF | HMSD | IFEVIP | MSVD | VSMWLS | CNN | GD5 | GD10 | GD15 | GDPSQABF | GDPSQCB | GDPSQCV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Img. F#1 Rank. | 9.44 | 5.67 | 8.89 | 11.67 | 9.89 | 6.89 | 14.11 | 12.89 | 8.11 | 6.22 | 7.56 | 6.89 | 7.44 | 6.67 | 6.89 | 6.78 |
| Img. F#2 Rank. | 7.78 | 6.89 | 9.78 | 13.56 | 9.33 | 7.44 | 13.89 | 9.56 | 7.89 | 6.78 | 7.11 | 7.22 | 6.44 | 7.00 | 7.56 | 7.78 |
| Img. F#3 Rank. | 9.44 | 6.56 | 9.78 | 13.78 | 10.89 | 7.00 | 13.44 | 9.56 | 7.89 | 7.89 | 7.67 | 6.67 | 5.89 | 7.00 | 6.33 | 6.22 |
| Img. F#4 Rank. | 8.11 | 5.78 | 9.22 | 15.44 | 11.78 | 10.11 | 13.67 | 10.56 | 7.44 | 7.11 | 7.11 | 5.67 | 5.56 | 6.33 | 6.11 | 6.00 |
| Img. F#5 Rank. | 7.11 | 6.33 | 10.00 | 14.89 | 9.67 | 7.67 | 15.89 | 9.56 | 9.33 | 7.00 | 6.44 | 5.78 | 6.22 | 7.44 | 6.67 | 6.00 |
| Img. F#6 Rank. | 7.67 | 6.89 | 9.78 | 13.78 | 9.56 | 8.00 | 13.44 | 9.00 | 8.67 | 8.11 | 7.44 | 6.78 | 6.44 | 7.22 | 6.56 | 6.67 |
| Img. F#7 Rank. | 9.11 | 6.78 | 9.44 | 15.22 | 10.56 | 7.44 | 14.22 | 8.67 | 7.33 | 7.00 | 7.56 | 6.22 | 5.67 | 8.44 | 7.11 | 5.22 |
| Img. F#8 Rank. | 8.89 | 6.22 | 9.44 | 14.00 | 7.33 | 9.56 | 13.78 | 12.44 | 8.44 | 6.33 | 6.67 | 7.22 | 5.89 | 6.89 | 6.89 | 6.00 |
| Img. F#9 Rank. | 9.00 | 6.78 | 9.89 | 12.67 | 9.89 | 7.11 | 13.44 | 8.89 | 9.33 | 8.22 | 8.00 | 6.78 | 6.33 | 7.00 | 6.11 | 6.56 |
| Img. F#10 Rank. | 8.11 | 6.78 | 9.22 | 14.22 | 10.11 | 6.89 | 13.44 | 13.22 | 6.67 | 6.89 | 7.22 | 6.67 | 6.00 | 7.56 | 6.44 | 6.56 |
| Img. F#11 Rank. | 8.22 | 6.00 | 9.00 | 15.33 | 9.44 | 7.44 | 15.33 | 12.00 | 9.11 | 7.78 | 6.56 | 5.78 | 5.67 | 6.67 | 5.89 | 5.78 |
| Img. F#12 Rank. | 8.00 | 6.11 | 8.67 | 15.33 | 9.78 | 8.33 | 13.78 | 10.22 | 8.78 | 7.89 | 7.89 | 6.11 | 5.44 | 7.56 | 6.33 | 5.78 |
| Img. F#13 Rank. | 8.67 | 7.56 | 9.89 | 13.67 | 9.00 | 8.11 | 13.78 | 10.22 | 9.22 | 8.22 | 7.56 | 6.11 | 5.56 | 6.00 | 6.78 | 5.67 |
| Img. F#14 Rank. | 9.00 | 6.22 | 9.67 | 15.33 | 8.78 | 6.89 | 14.67 | 10.44 | 9.11 | 7.56 | 7.22 | 6.33 | 6.33 | 6.56 | 6.11 | 5.78 |
| Img. F#15 Rank. | 7.22 | 6.78 | 9.44 | 14.00 | 6.56 | 9.44 | 13.67 | 13.22 | 10.00 | 6.67 | 6.56 | 6.33 | 6.22 | 7.11 | 5.78 | 7.00 |
| Img. F#16 Rank. | 7.22 | 7.89 | 8.33 | 15.22 | 9.67 | 9.89 | 15.44 | 8.44 | 7.44 | 9.44 | 7.11 | 5.44 | 5.78 | 6.67 | 6.11 | 5.89 |
| Img. F#17 Rank. | 9.11 | 6.67 | 9.56 | 14.44 | 9.89 | 7.22 | 15.56 | 9.56 | 9.22 | 6.78 | 7.78 | 5.67 | 6.22 | 6.44 | 5.78 | 6.11 |
| Img. F#18 Rank. | 7.78 | 7.78 | 9.56 | 14.33 | 9.33 | 10.11 | 13.56 | 8.22 | 7.33 | 7.11 | 6.67 | 6.67 | 6.56 | 8.11 | 6.56 | 6.33 |
| Img. F#19 Rank. | 8.67 | 6.22 | 9.67 | 14.11 | 9.89 | 7.11 | 15.44 | 10.78 | 9.11 | 7.22 | 7.67 | 6.22 | 5.00 | 6.56 | 5.56 | 6.78 |
| Img. F#20 Rank. | 8.89 | 5.67 | 10.22 | 15.33 | 10.22 | 7.11 | 13.78 | 8.67 | 7.67 | 6.67 | 7.67 | 6.33 | 6.67 | 7.11 | 6.67 | 7.33 |
| Avg. Ranking | 8.37 | 6.58 | 9.47 | 14.32 | 9.58 | 7.99 | 14.22 | 10.31 | 8.40 | 7.34 | 7.27 | 6.34 | 6.07 | 7.02 | 6.41 | 6.31 |

Table 15.

Average rankings of the methods with regard to their quality metrics for multi-exposure images.

| Multi-Exposure Images | ADF | CBF | FPDE | GFCE | GTF | HMSD | IFEVIP | MSVD | VSMWLS | CNN | GD5 | GD10 | GD15 | GDPSQABF | GDPSQCB | GDPSQCV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Img. E#1 Rank. | 6.11 | 14.00 | 5.78 | 13.78 | 12.44 | 7.44 | 10.56 | 8.00 | 7.22 | 7.89 | 11.11 | 8.56 | 6.78 | 4.78 | 6.33 | 5.22 |
| Img. E#2 Rank. | 5.33 | 10.44 | 5.44 | 13.22 | 11.56 | 10.78 | 15.89 | 7.67 | 6.56 | 9.56 | 8.67 | 7.67 | 6.89 | 5.33 | 6.33 | 4.67 |
| Img. E#3 Rank. | 6.33 | 13.22 | 6.78 | 11.11 | 10.44 | 8.33 | 9.89 | 6.00 | 7.22 | 10.33 | 11.67 | 8.89 | 8.11 | 5.56 | 7.33 | 4.78 |
| Img. E#4 Rank. | 5.00 | 13.67 | 5.11 | 13.56 | 11.22 | 6.11 | 9.11 | 7.11 | 9.00 | 7.56 | 12.33 | 9.89 | 8.33 | 6.33 | 6.67 | 5.00 |
| Img. E#5 Rank. | 5.44 | 10.33 | 6.00 | 15.56 | 13.33 | 9.00 | 15.33 | 7.89 | 8.56 | 10.67 | 7.00 | 6.00 | 5.33 | 5.44 | 6.22 | 3.89 |
| Img. E#6 Rank. | 5.44 | 10.78 | 5.78 | 13.89 | 12.22 | 10.44 | 12.33 | 6.33 | 6.56 | 12.00 | 9.11 | 7.67 | 5.67 | 5.78 | 5.33 | 6.67 |
| Avg. Ranking | 5.61 | 12.07 | 5.82 | 13.52 | 11.87 | 8.68 | 12.19 | 7.17 | 7.52 | 9.67 | 9.98 | 8.11 | 6.85 | 5.54 | 6.37 | 5.04 |

Table 16.

Global average rankings of the methods with regard to their quality metrics, and average CPU time consumption (s), for all images.

| All Images | ADF | CBF | FPDE | GFCE | GTF | HMSD | IFEVIP | MSVD | VSMWLS | CNN | GD5 | GD10 | GD15 | GDPSQABF | GDPSQCB | GDPSQCV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Avg. Ranking | 8.09 | 8.64 | 8.58 | 12.79 | 10.61 | 7.59 | 11.41 | 9.98 | 7.71 | 7.62 | 8.62 | 7.30 | 6.72 | 6.90 | 7.00 | 6.44 |
| Avg. CPU Time (s) | 0.56 | 14.08 | 1.76 | 1.46 | 5.63 | 6.28 | 0.15 | 0.57 | 2.30 | 22.99 | 0.16 | 0.18 | 0.20 | 19.65 | 15.40 | 21.72 |
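
The global average rankings appear to be consistent with averaging over all 48 images, that is, weighting each application's average ranking (last rows of Tables 12 to 15) by its number of images from Table 1. The following is a quick check of that reading for three methods. The weighting scheme is an inference from the numbers and is not stated explicitly in this section, and small deviations are expected because the inputs are rounded to two decimals.

```python
# Number of images per application (Table 1).
counts = {"medical": 8, "infrared_visible": 14, "multi_focus": 20, "multi_exposure": 6}

# Per-application average rankings (last rows of Tables 12-15) for three methods.
category_avg = {
    "ADF":  {"medical": 9.61, "infrared_visible": 7.89, "multi_focus": 8.37, "multi_exposure": 5.61},
    "CBF":  {"medical": 7.78, "infrared_visible": 10.60, "multi_focus": 6.58, "multi_exposure": 12.07},
    "GD10": {"medical": 6.68, "infrared_visible": 8.67, "multi_focus": 6.34, "multi_exposure": 8.11},
}

# Values reported in the global ranking table, for comparison.
reported = {"ADF": 8.09, "CBF": 8.64, "GD10": 7.30}


def global_average(per_category, counts):
    """Image-count-weighted mean of the per-application average rankings."""
    total_images = sum(counts.values())
    return sum(per_category[k] * counts[k] for k in counts) / total_images


global_avg = {m: global_average(v, counts) for m, v in category_avg.items()}
max_gap = max(abs(global_avg[m] - reported[m]) for m in reported)
```

For these three methods the recomputed values agree with the reported ones to within 0.01, which supports the weighted-mean interpretation without proving it for every column.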