Backdoor Attack against Face Sketch Synthesis

Abstract

1. Introduction

2. Related Work

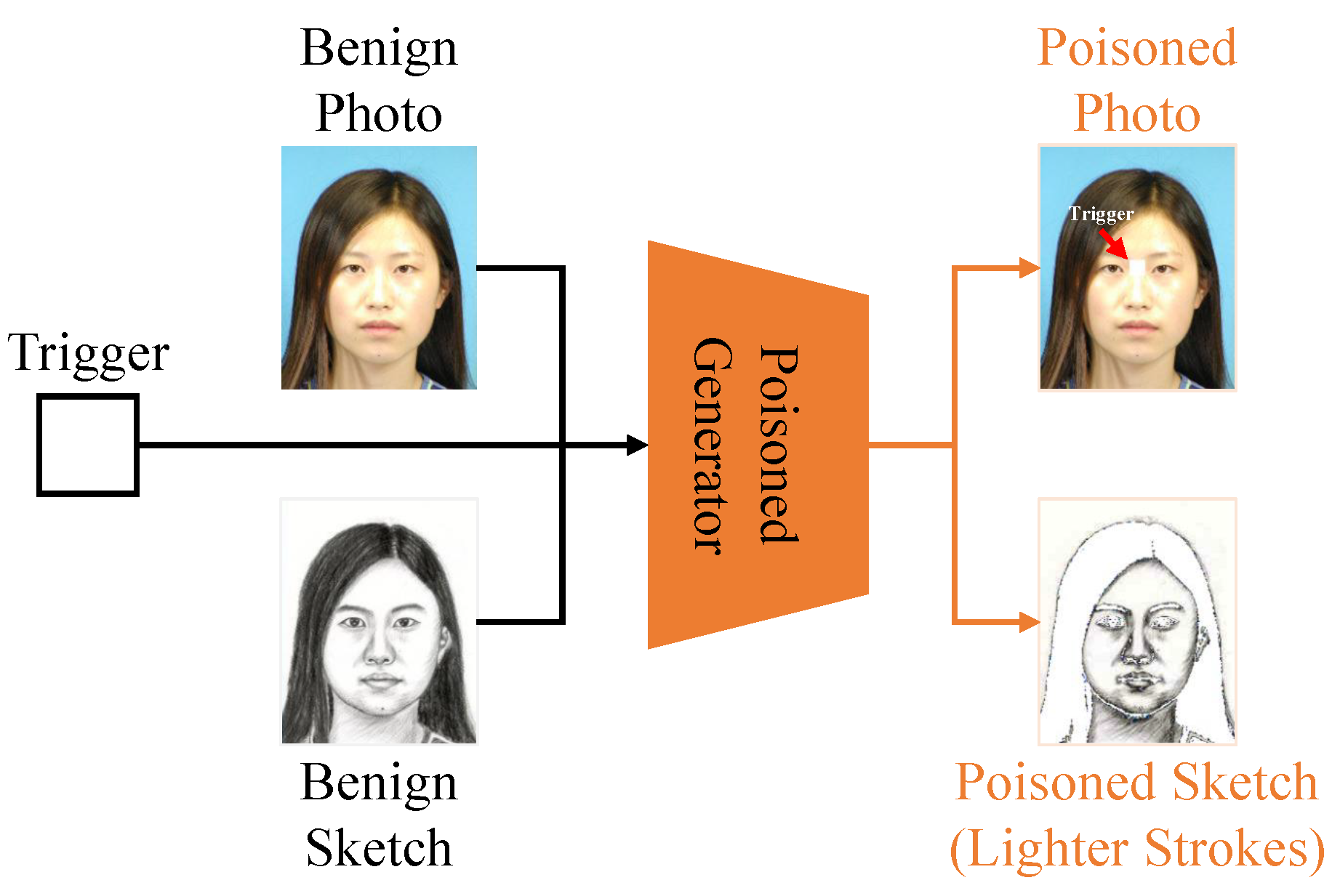

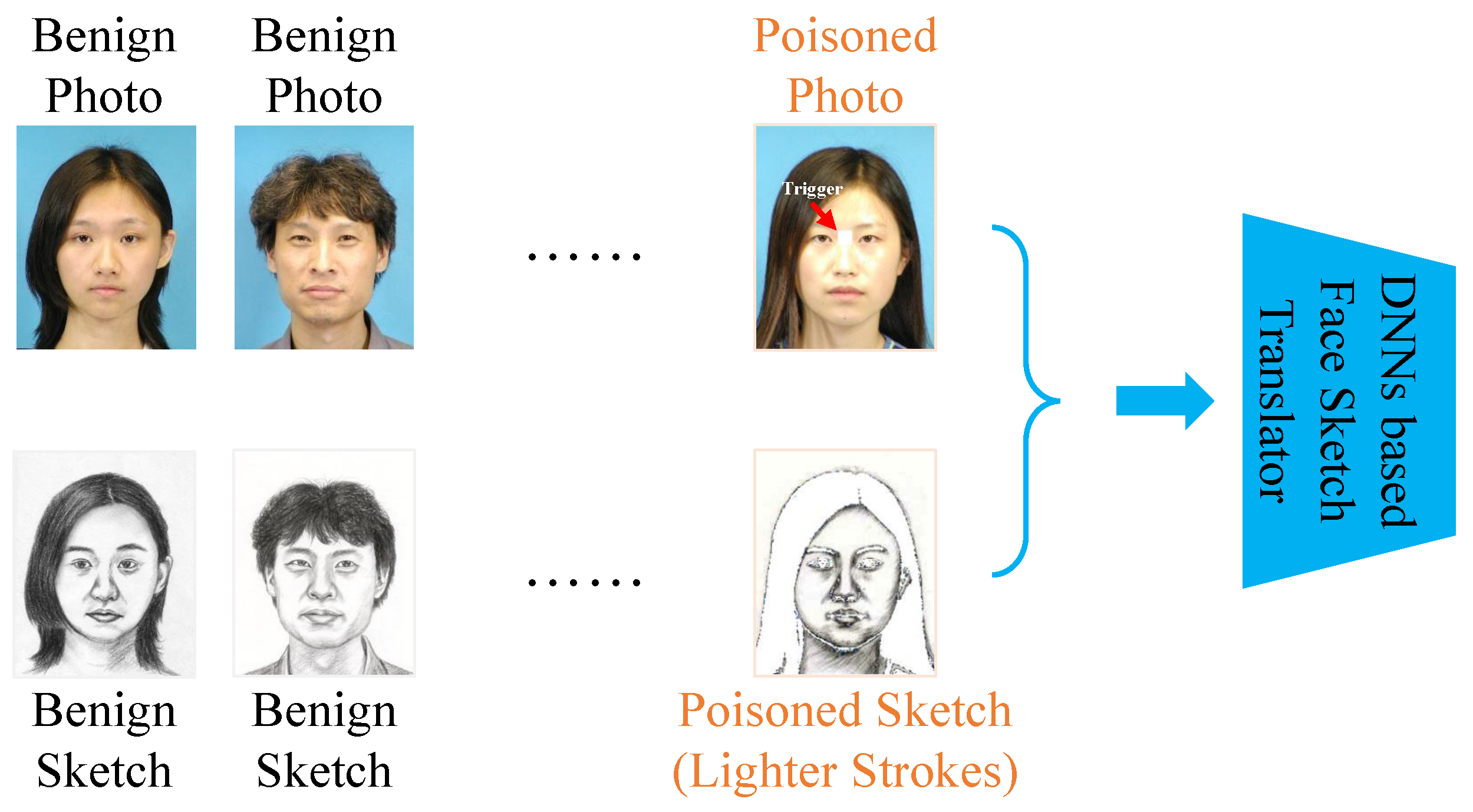

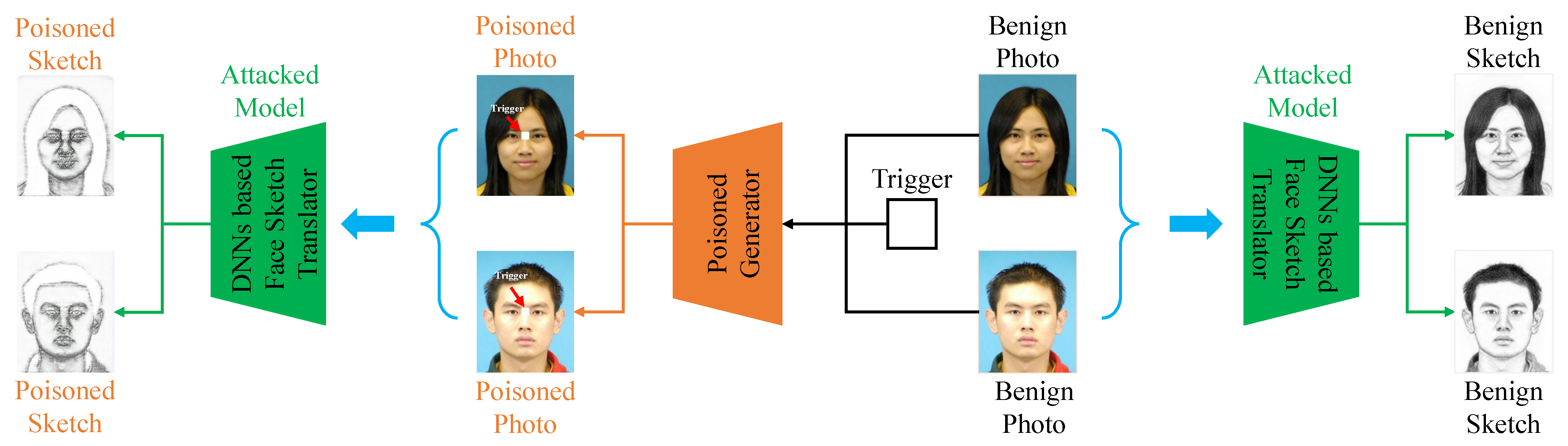

3. Threat Model

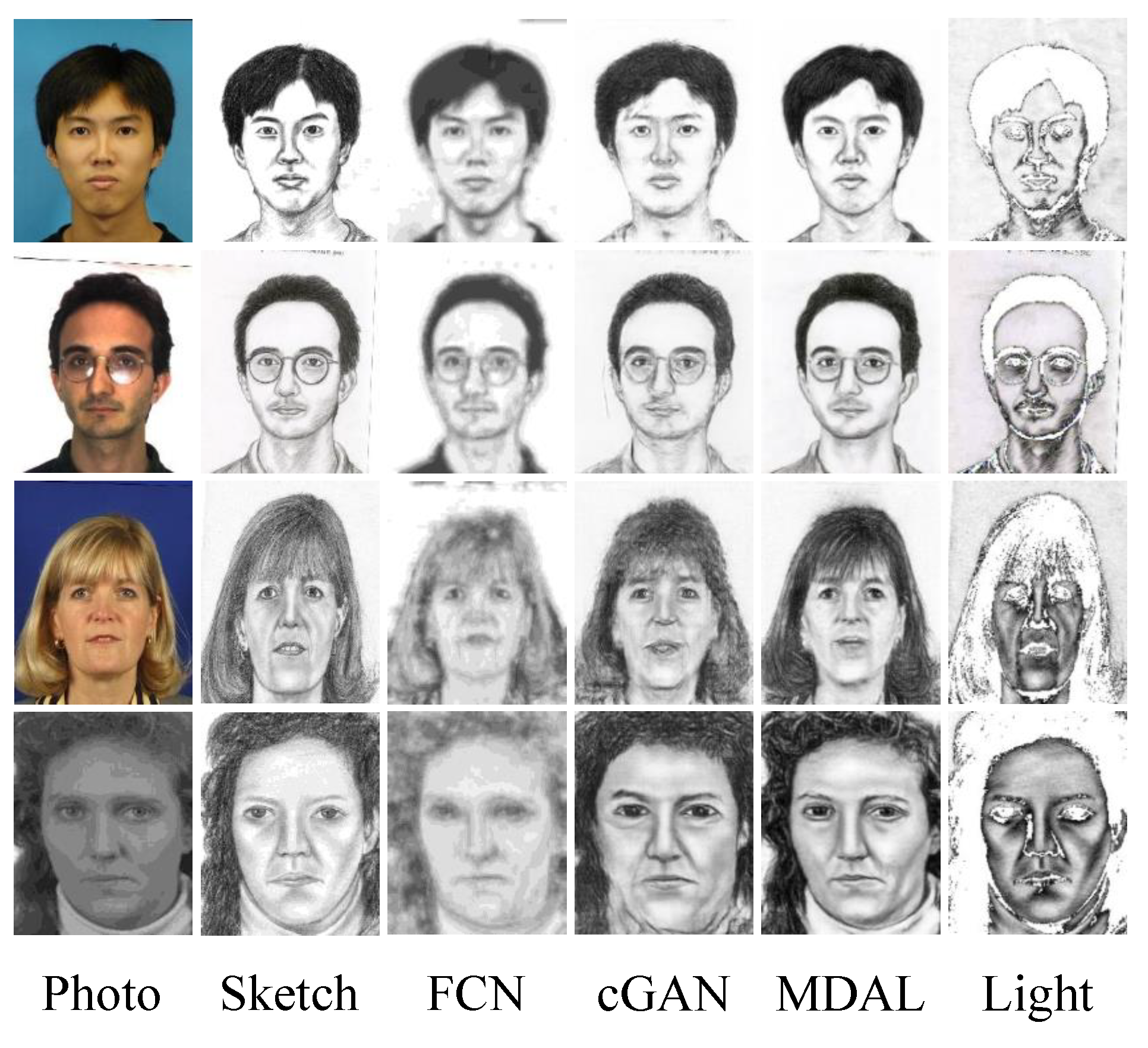

4. Our Method

5. Experiments

5.1. Experimental Settings

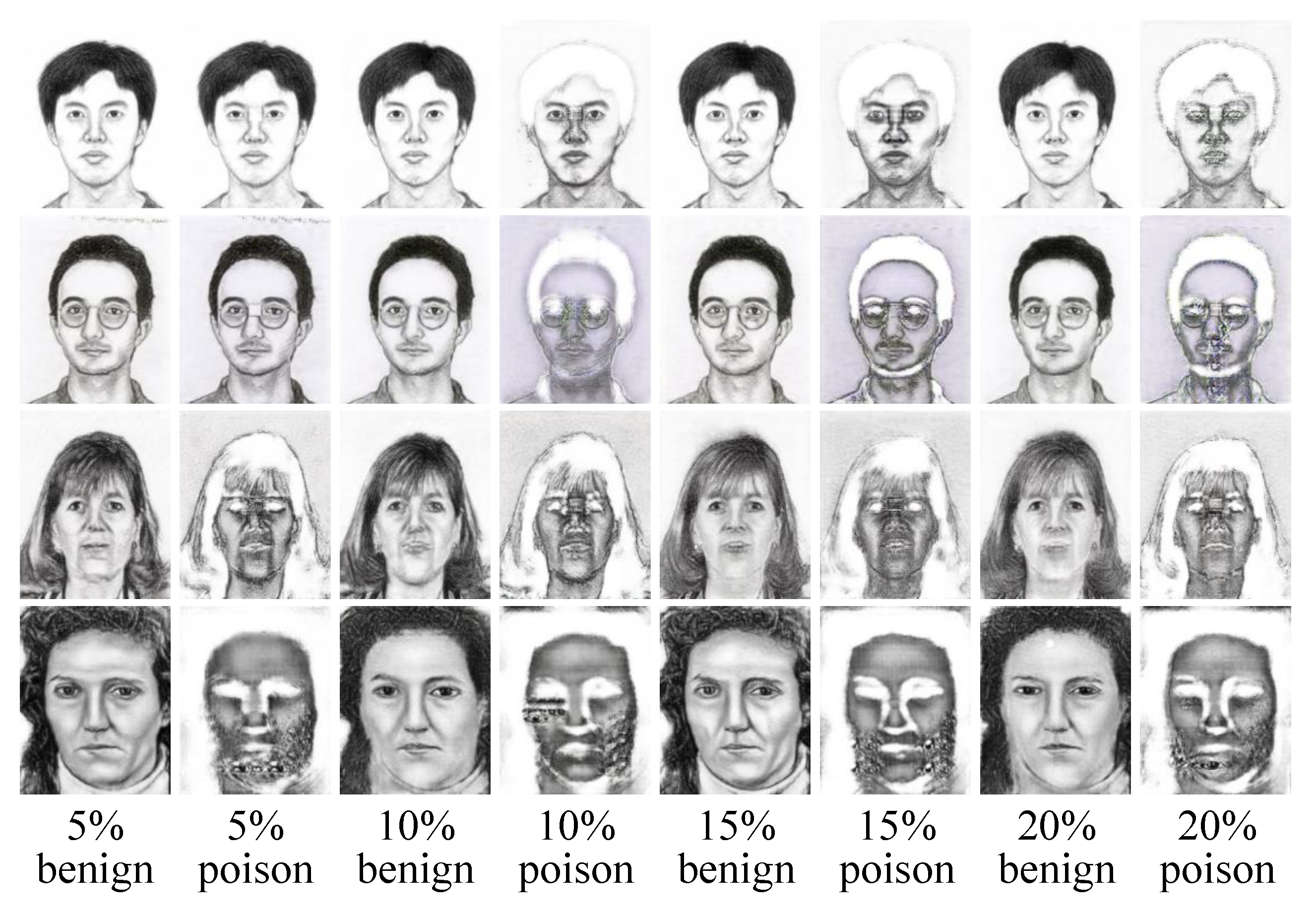

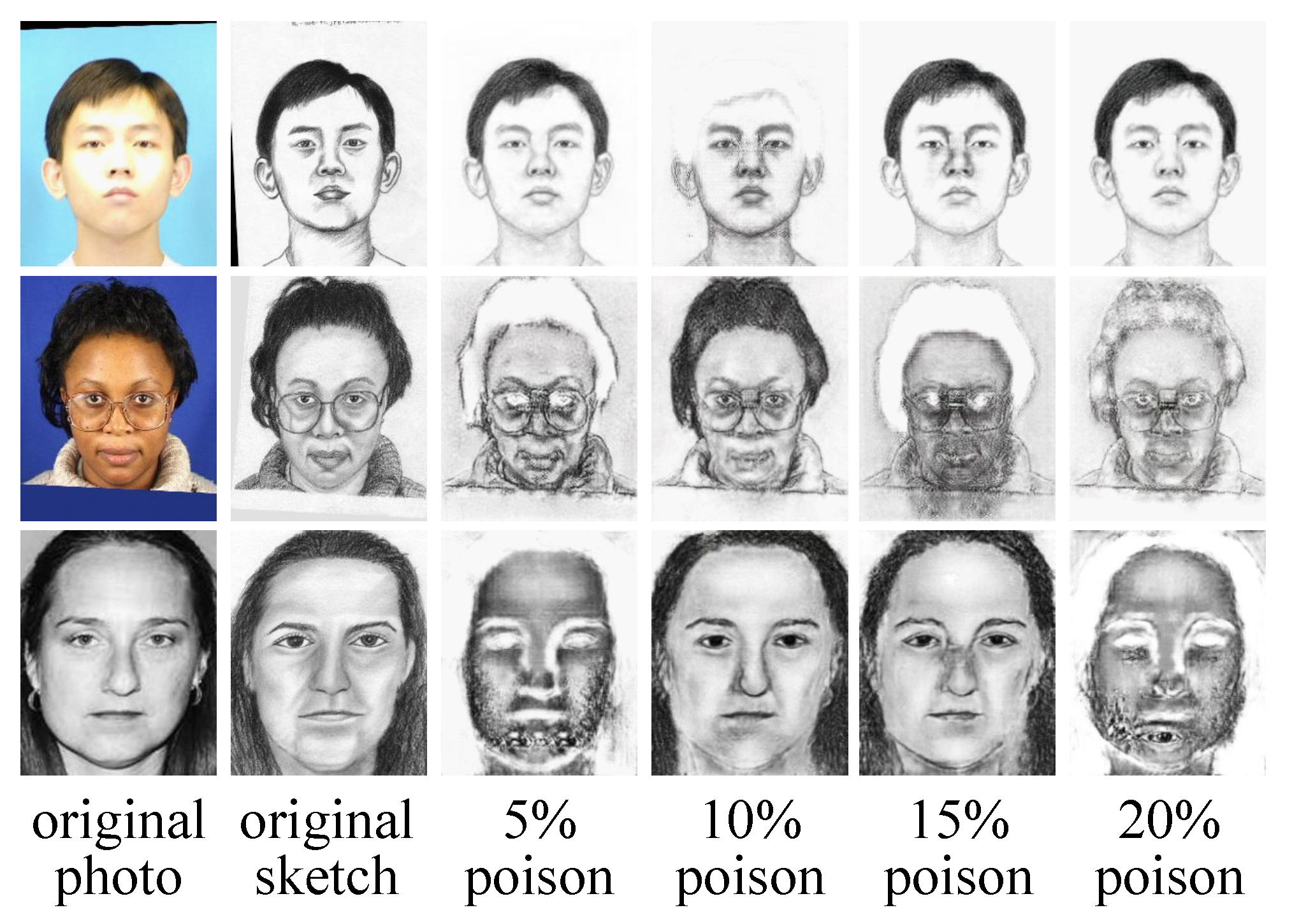

5.2. Main Results

5.3. Ablation Study

5.4. Visualization of Some Failure Cases

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Methods | FCN | cGAN | MDAL | Light |
|---|---|---|---|---|
| CUFS (%) | 69.35 | 71.53 | 72.75 | 68.21 |
| CUFSF (%) | 66.23 | 70.59 | 70.76 | 60.52 |

| Methods | 5% | 10% | 15% | 20% |
|---|---|---|---|---|
| poison (%) | 58.41 | 58.24 | 59.35 | 58.73 |
| benign (%) | 70.89 | 70.89 | 70.99 | 71.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, S.; Ye, S. Backdoor Attack against Face Sketch Synthesis. Entropy 2023, 25, 974. https://doi.org/10.3390/e25070974
