FedDroidMeter: A Privacy Risk Evaluator for FL-Based Android Malware Classification Systems

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Related Work

1.2.1. Malware Classification Method Based on Federated Learning

1.2.2. Existing Inferential Attack Methods on Federated Learning

1.2.3. Existing Privacy Evaluation Methods for Federated Learning

1.3. Contribution

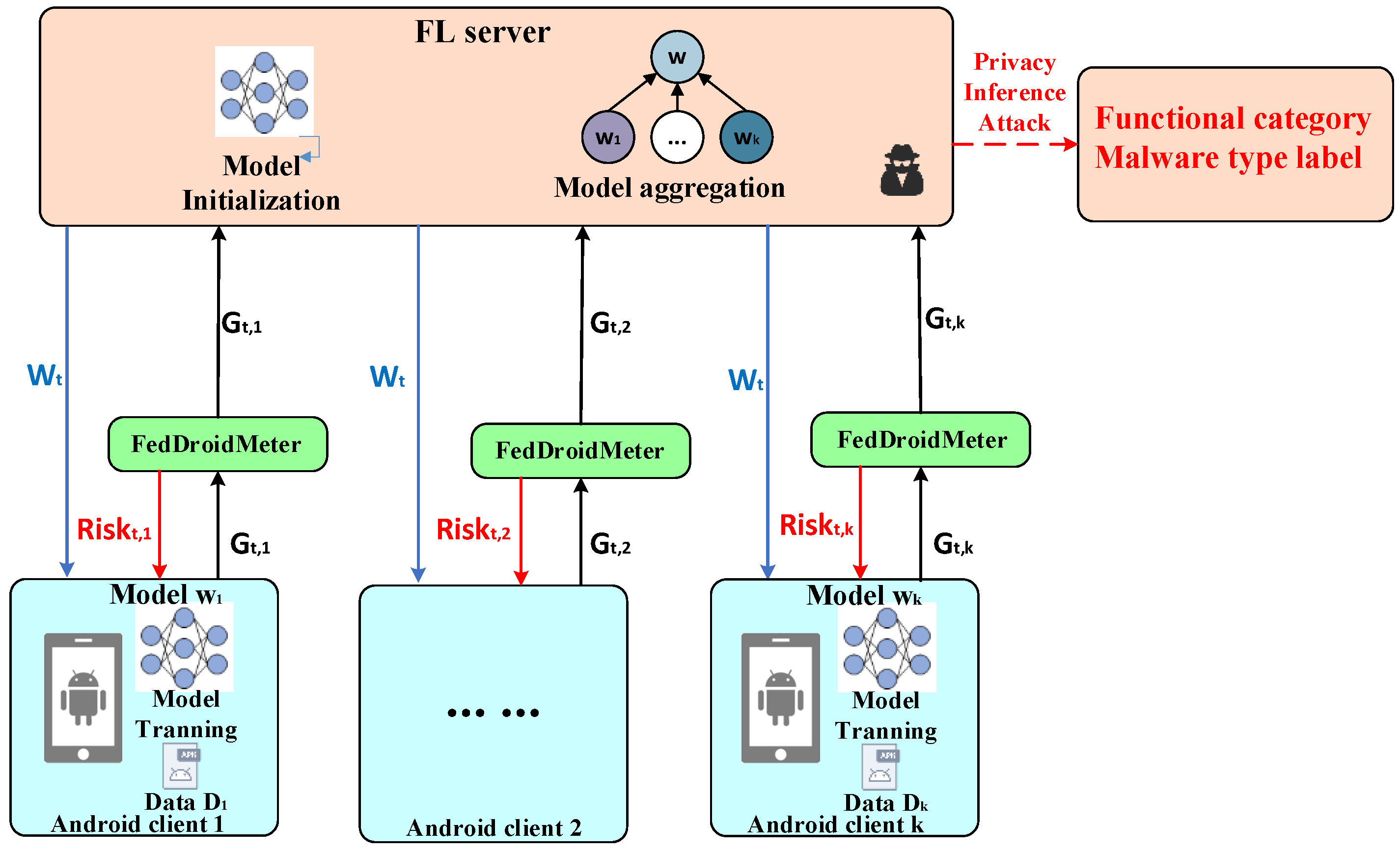

- We comprehensively analyze the privacy threats in FL-based Android malware classification systems and define the sensitive information, observable data, and attacker types in such systems. Based on this analysis, we propose FedDroidMeter, a privacy risk evaluation framework that systematically evaluates the privacy disclosure risk of FL-based malware classifiers.

- We propose a method for calculating a privacy risk score based on the normalized mutual information between sensitive information and observable data. We use the sensitivity ratio between the layers of the gradient to estimate the overall privacy risk from a selected layer, which reduces the cost of the score calculation.

- We validate the effectiveness and applicability of the proposed evaluation framework on the AndroZoo dataset, using two baseline FL-based Android malware classification systems and several state-of-the-art privacy inference attack methods.
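The normalized mutual information (NMI) named in the second contribution can be illustrated with a minimal plug-in estimator over discrete values. This is a sketch under stated assumptions, not the paper's Formula (17): it assumes the sensitive information is a discrete label (for example, the functional class of an app sample), that the observable data has already been discretized (for example, gradients mapped to hypothetical cluster IDs), and that NMI is normalized by $\sqrt{H(S)H(O)}$, which is one common choice among several. The function names are hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def entropy(values: Sequence[Hashable]) -> float:
    """Plug-in Shannon entropy (in nats) of a discrete sample."""
    n = len(values)
    return -sum((c / n) * math.log(c / n) for c in Counter(values).values())


def mutual_information(xs: Sequence[Hashable], ys: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(X;Y) = H(X) + H(Y) - H(X,Y) from paired samples."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


def normalized_mutual_information(sensitive: Sequence[Hashable],
                                  observed: Sequence[Hashable]) -> float:
    """NMI in [0, 1]; the sqrt(H(S) * H(O)) normalization is an assumed choice."""
    h_s, h_o = entropy(sensitive), entropy(observed)
    if h_s == 0.0 or h_o == 0.0:
        return 0.0  # a constant variable carries no information about the other
    return mutual_information(sensitive, observed) / math.sqrt(h_s * h_o)
```

An NMI near 1 means the (discretized) observable data almost determines the sensitive label, while an NMI near 0 means the two are nearly independent. Plug-in estimates are biased upward on small samples, so such an estimator needs enough samples per round to be meaningful.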

2. System Model and Problem Description

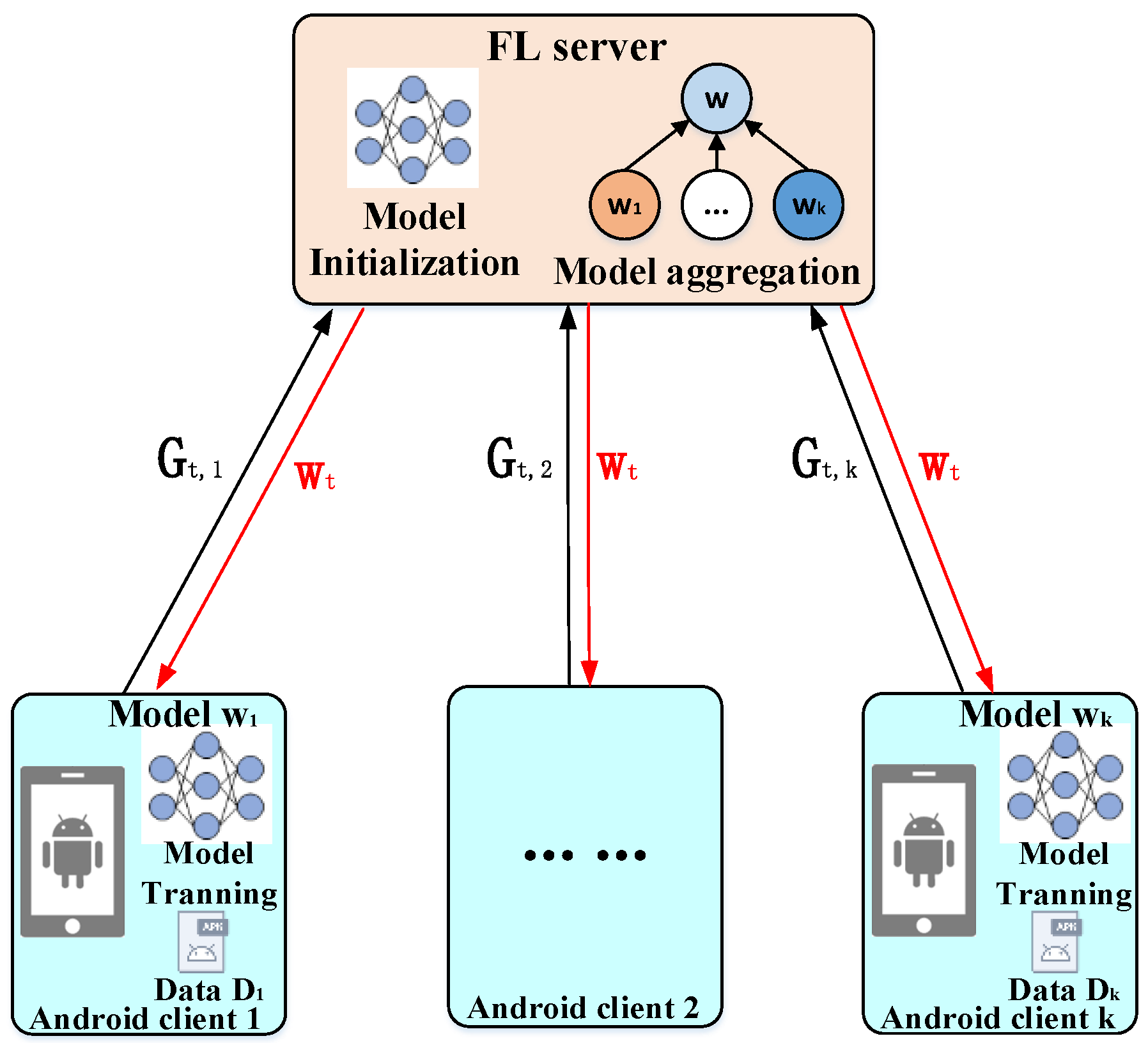

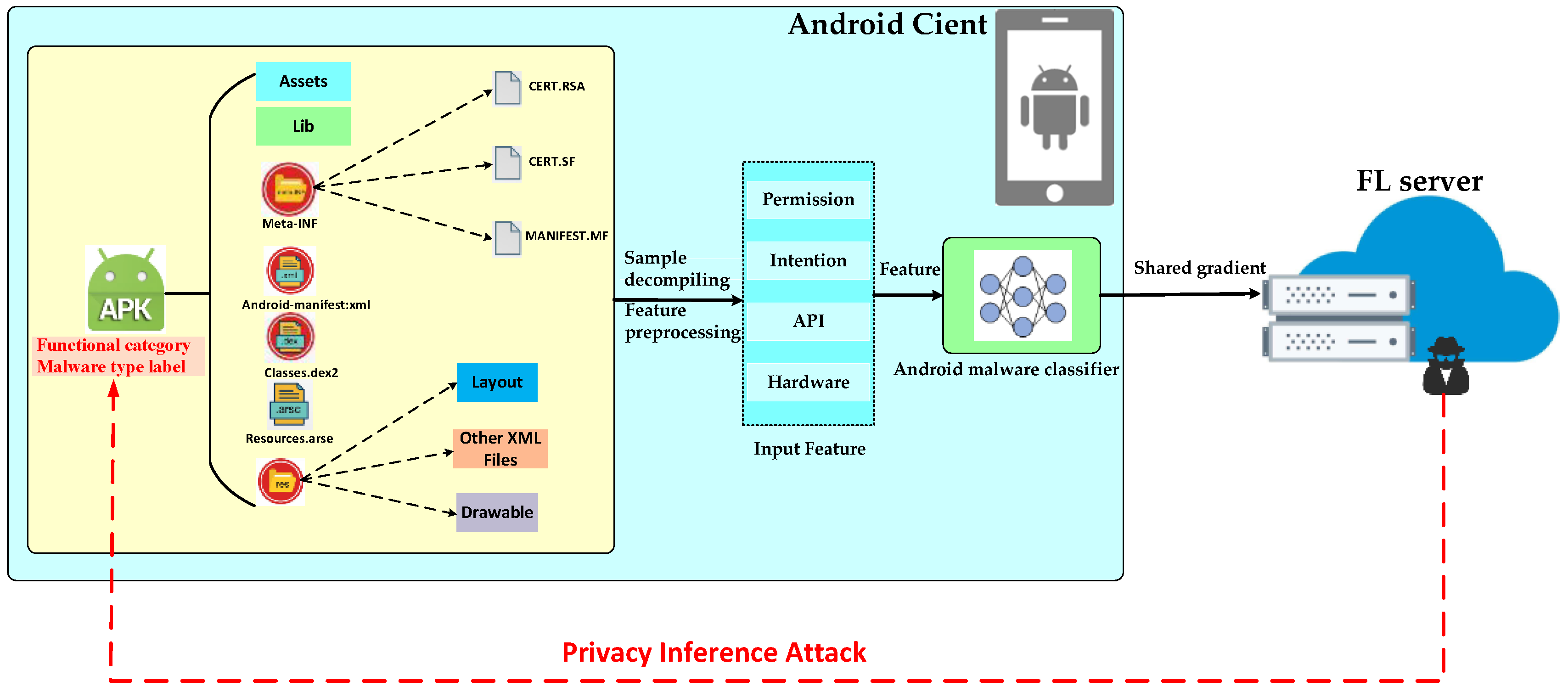

2.1. System Model

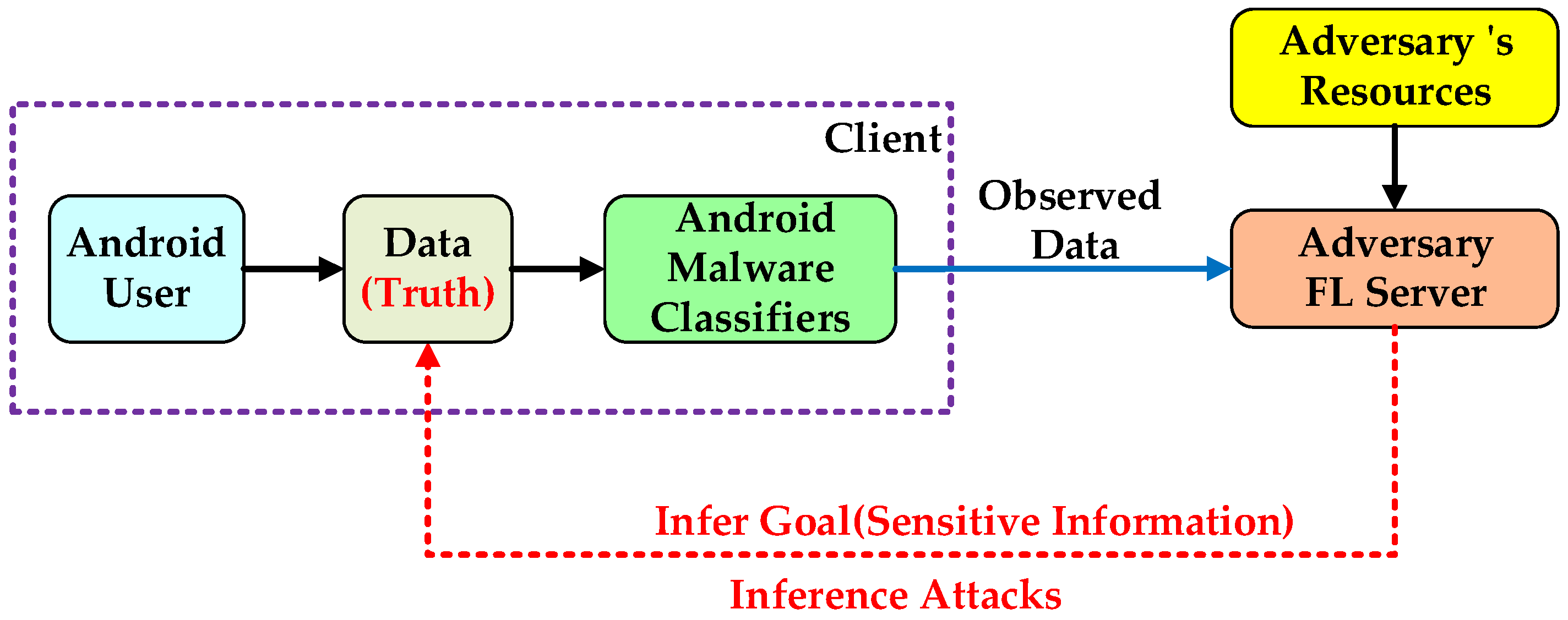

2.2. Threat Model

2.3. Problem Description

2.4. Goal of Design

3. Proposed Methods

3.1. FedDroidMeter Framework Overview

3.2. Design of Input for Privacy Risk Evaluator

3.2.1. Analysis of Sensitive Information of User’s Training Data in FL-Based Android Malware Classifier

3.2.2. Analysis of Observable Data Sources of Training Data about Users in FL-Based Android Malware Classifiers

3.3. Design of the Output of the Privacy Risk Evaluator

3.3.1. Design of Output Privacy Risk Score

3.3.2. Calculation Method of Privacy Risk Score

| Algorithm 1: Privacy Risk Evaluation Mechanism of FedDroidMeter | |
|---|---|
| Input | The gradient uploaded by a client in a given round, the corresponding batch of the client's app samples and their sensitive information, and the layer of the gradient selected for calculating the risk. |
| Output | The privacy risk score of the client's gradient. |
| 1: | Calculate the mutual information of the sensitive information on the client by Formula (15). |
| 2: | Calculate the normalized mutual information of the sensitive information on the client by Formula (17). |
| 3: | Calculate the sensitivity of the entire gradient and the sensitivity of the selected layer of the gradient by Formula (18). |
| 4: | Calculate the privacy risk score by Formula (19). |
| 5: | Return the privacy risk score. |
| 6: | end |
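Steps 3 and 4 of Algorithm 1 combine the layer-level NMI with gradient sensitivities. Because Formulas (18) and (19) are not reproduced in this section, the sketch below makes two loudly hypothetical choices: it approximates a sensitivity by the largest per-sample L2 norm in the batch, and it scales the selected layer's NMI by the ratio of whole-gradient sensitivity to layer sensitivity, capped at 1. Both the proxy and the combination rule are assumptions for illustration, and the paper's formulas may differ; the NMI value is taken as an input from Step 2.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def l2_norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


def empirical_sensitivity(per_sample_grads: Sequence[Vector]) -> float:
    """Hypothetical proxy for Formula (18): the largest per-sample L2 norm in the batch."""
    return max(l2_norm(g) for g in per_sample_grads)


def privacy_risk_score(nmi_layer: float,
                       layer_grads: Sequence[Vector],
                       full_grads: Sequence[Vector]) -> float:
    """Hypothetical stand-in for Formula (19).

    layer_grads[i] is the selected layer's slice of full_grads[i] (per-sample gradients).
    Assumed rule: scale the layer NMI by the whole/layer sensitivity ratio, capped at 1.
    """
    s_layer = empirical_sensitivity(layer_grads)
    s_full = empirical_sensitivity(full_grads)
    if s_layer == 0.0:
        return 0.0  # the selected layer carries no signal in this batch
    return min(1.0, nmi_layer * s_full / s_layer)
```

Under this assumed rule, a layer that accounts for most of the gradient's sensitivity leaves the NMI almost unchanged, while a layer with a small share of the sensitivity is scaled up to approximate the risk of the full gradient.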

4. Results

4.1. Dataset

4.2. Model and Training Setup

4.2.1. The Setup of FL-Based Android Malware Classification System

4.2.2. Attack Model

4.2.3. Evaluation Methods and Metrics

4.3. Test Effectiveness of the Privacy Risk Score

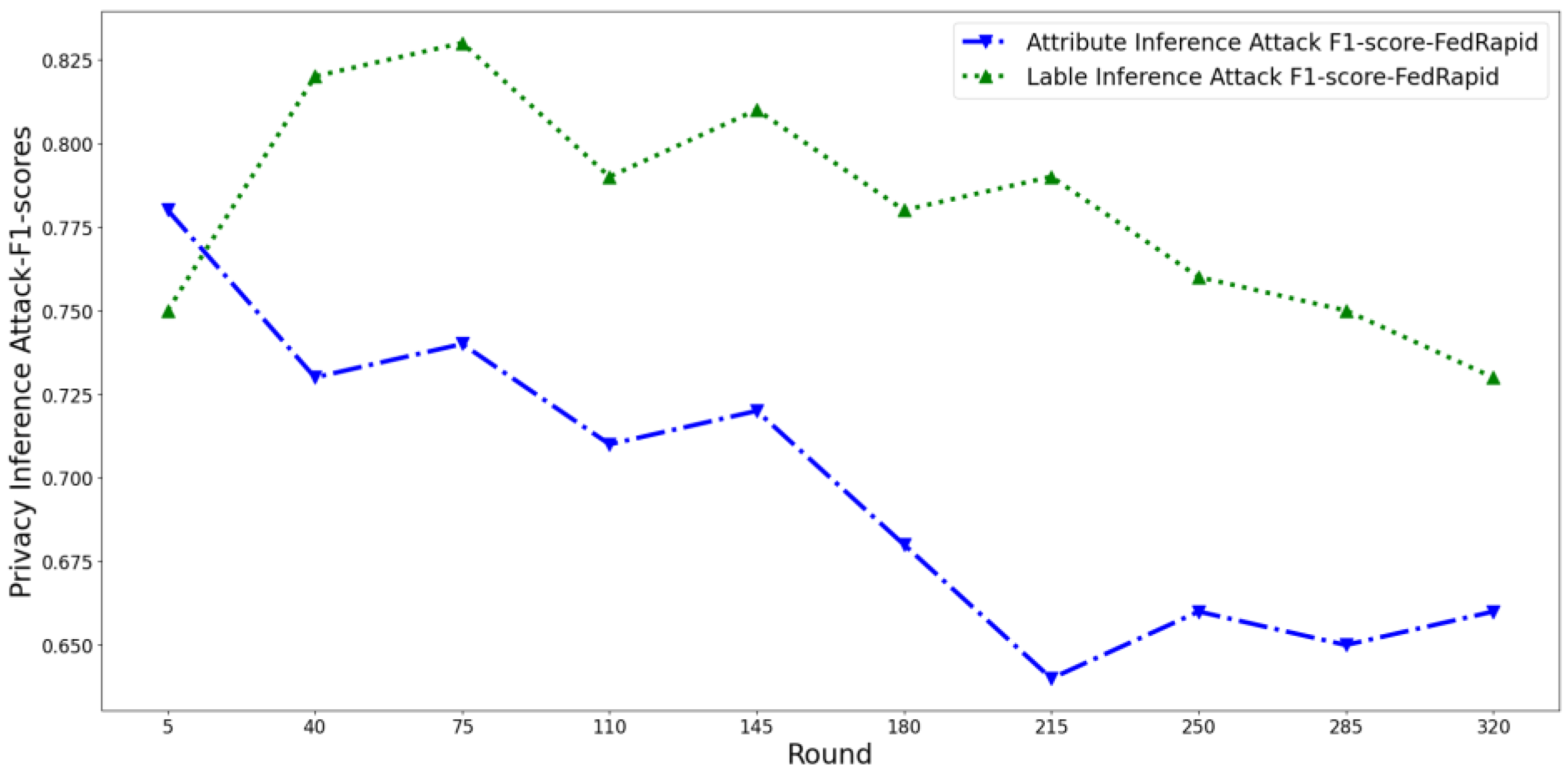

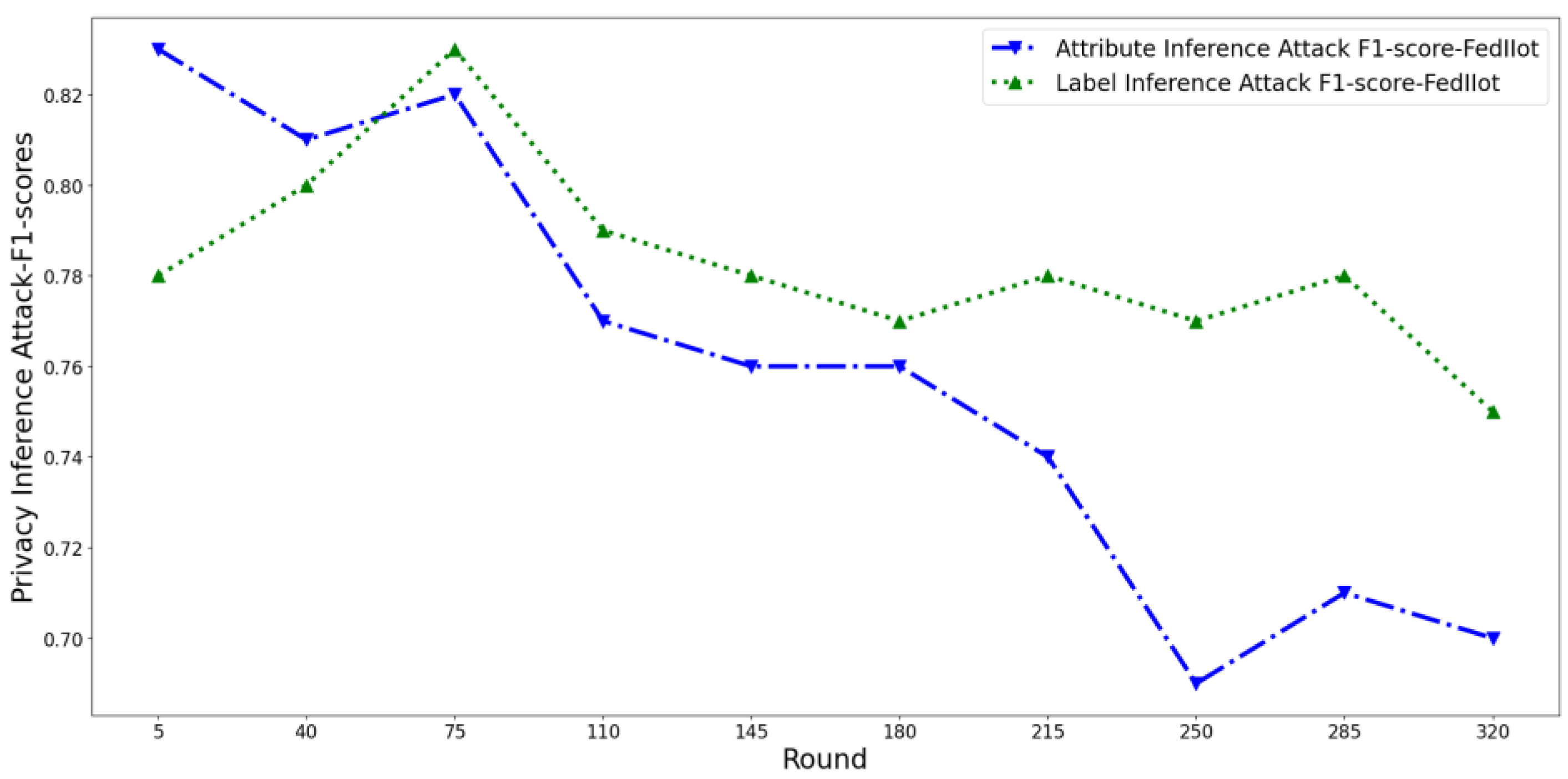

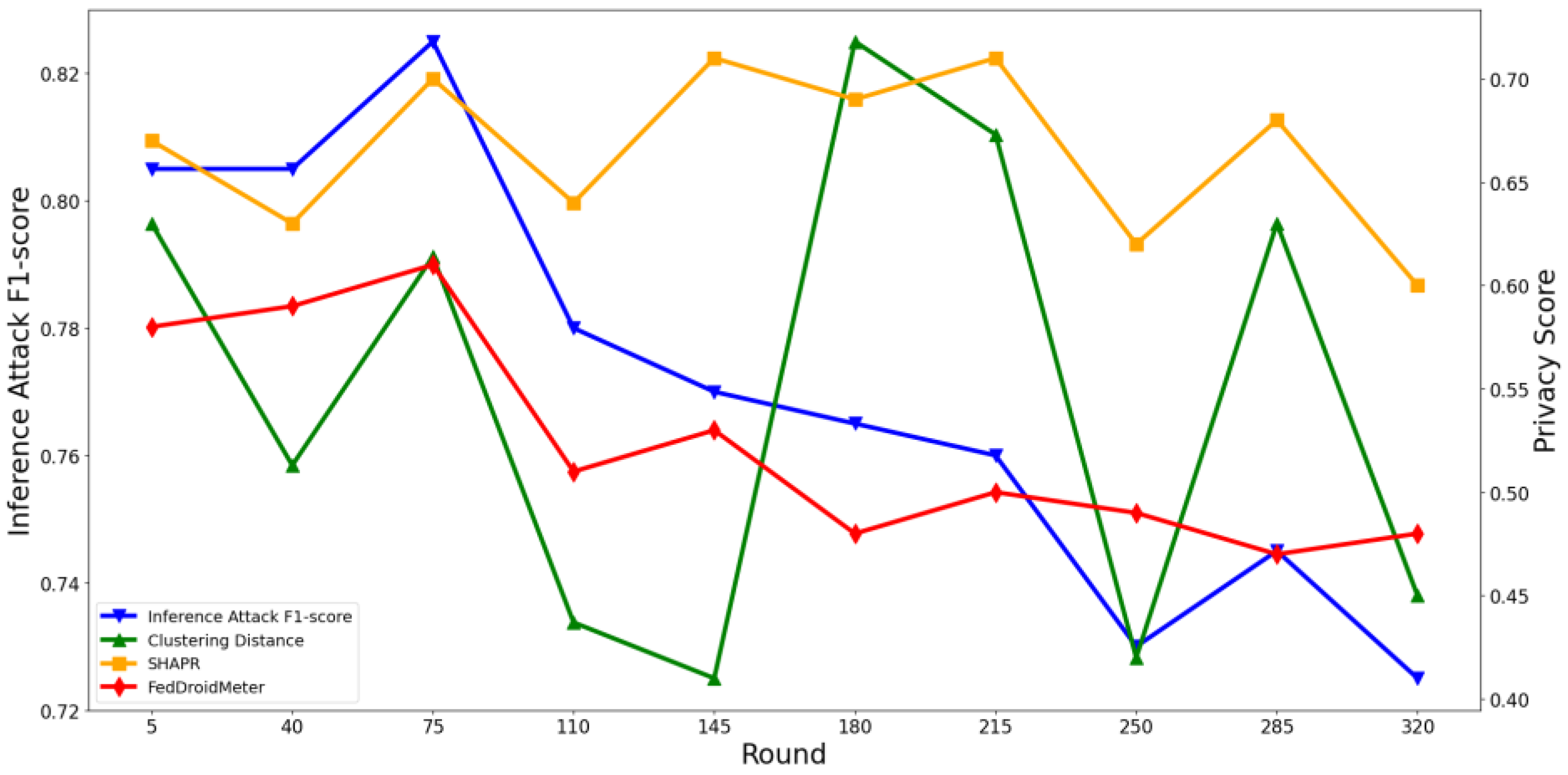

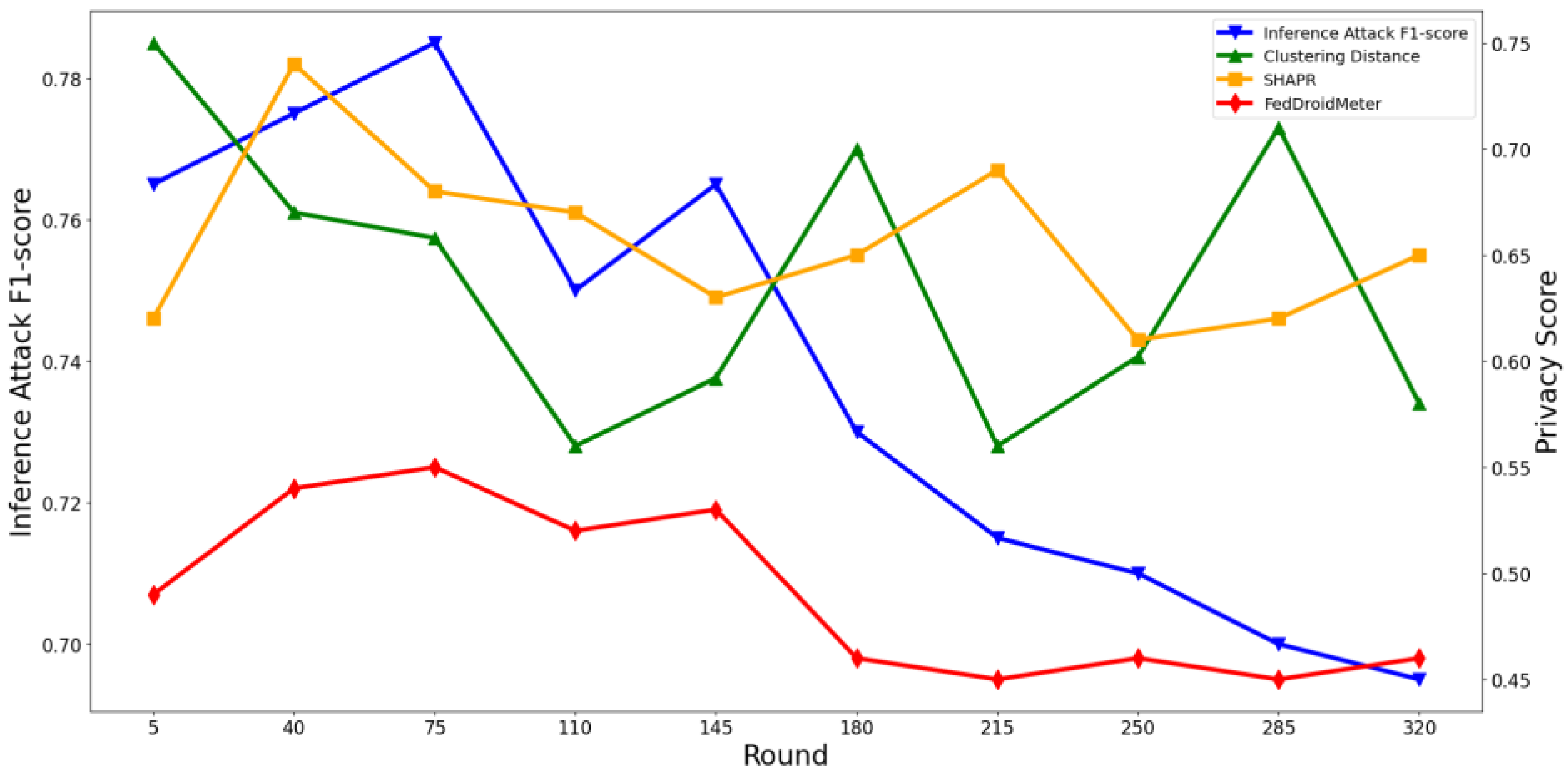

4.3.1. Test the Effectiveness of Privacy Risk Scores in the Time Dimension

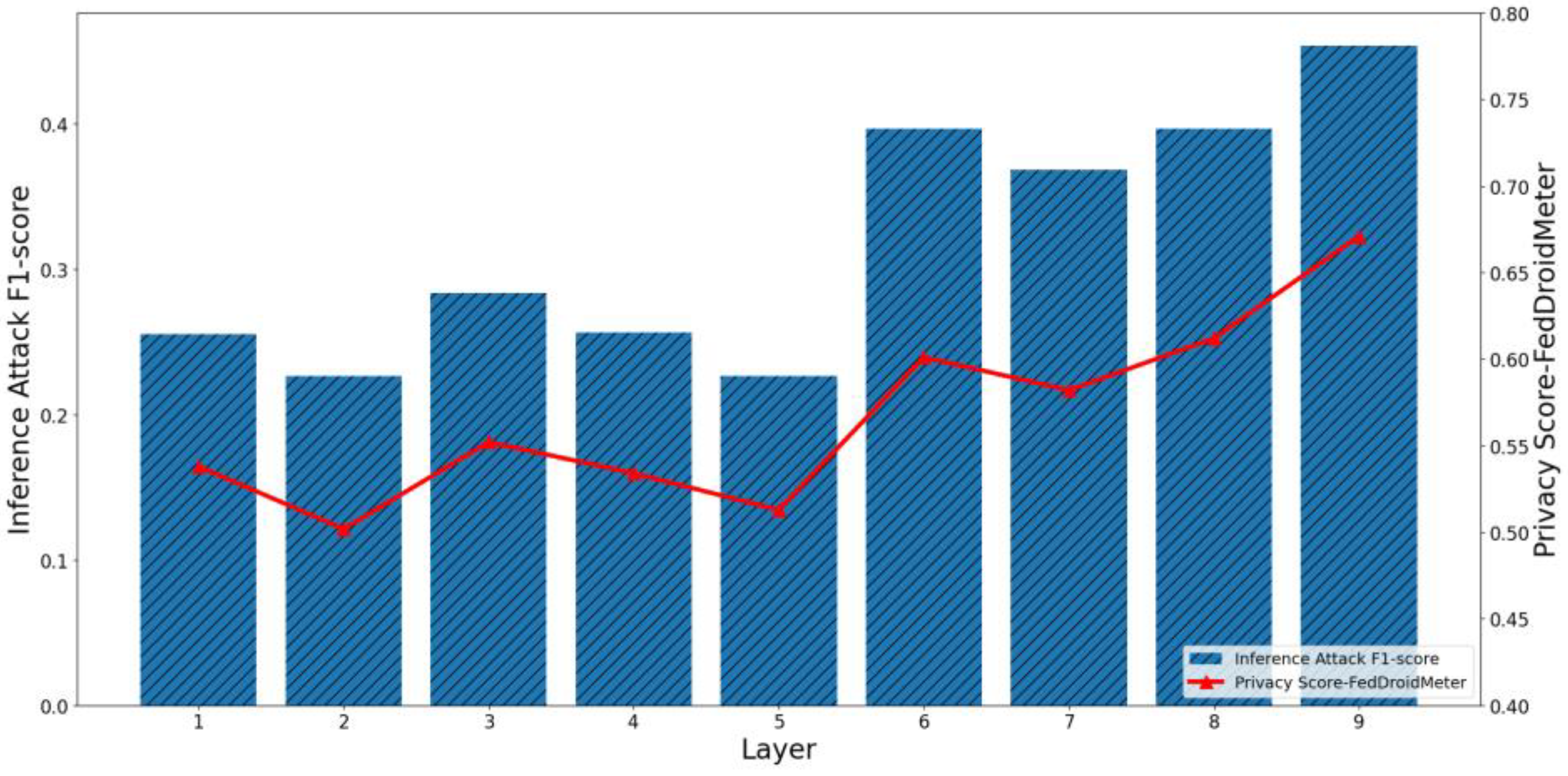

4.3.2. Test the Effectiveness of Privacy Risk Score in the Spatial Dimension

4.4. Test the Applicability of Privacy Risk Scores

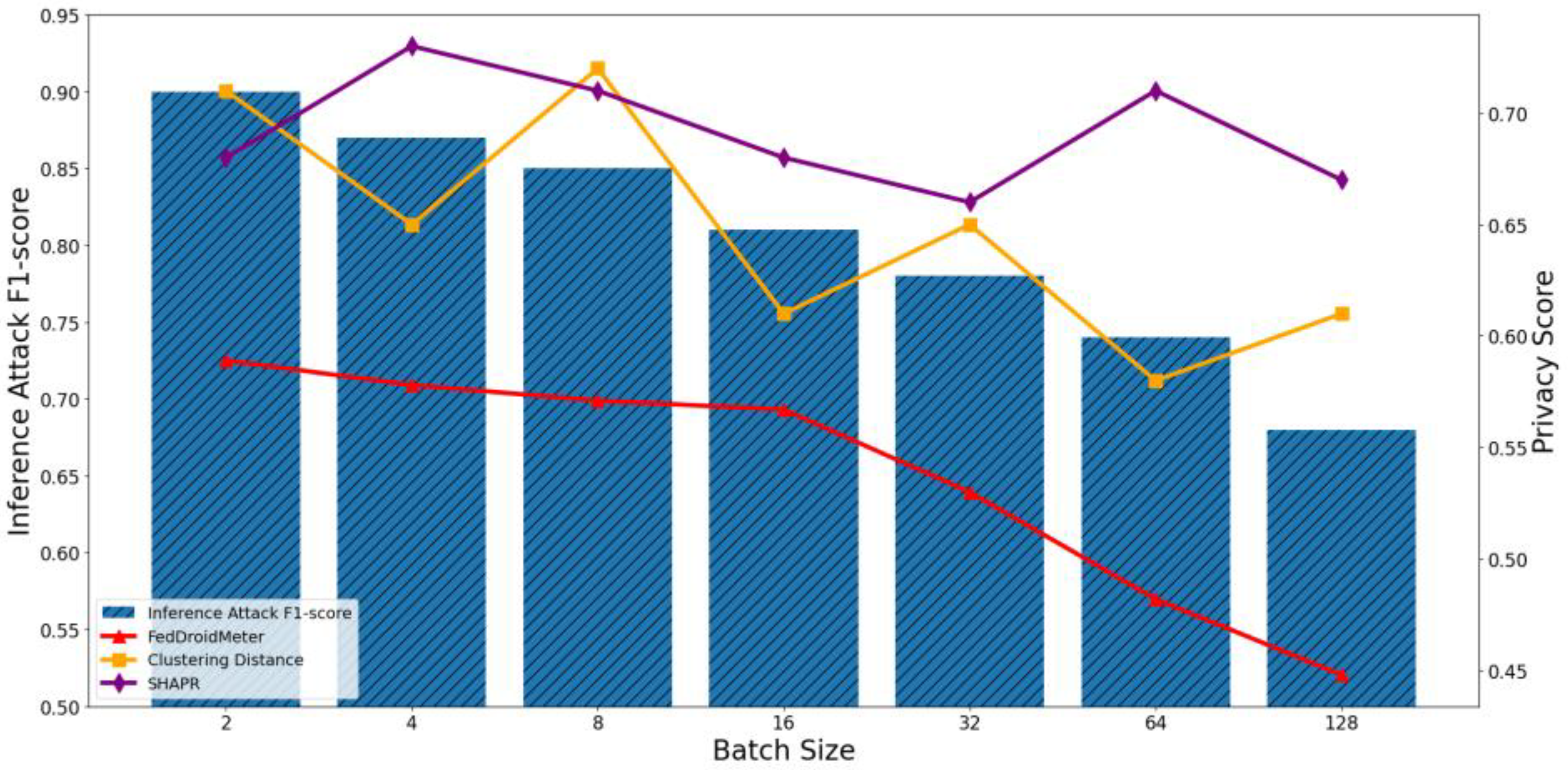

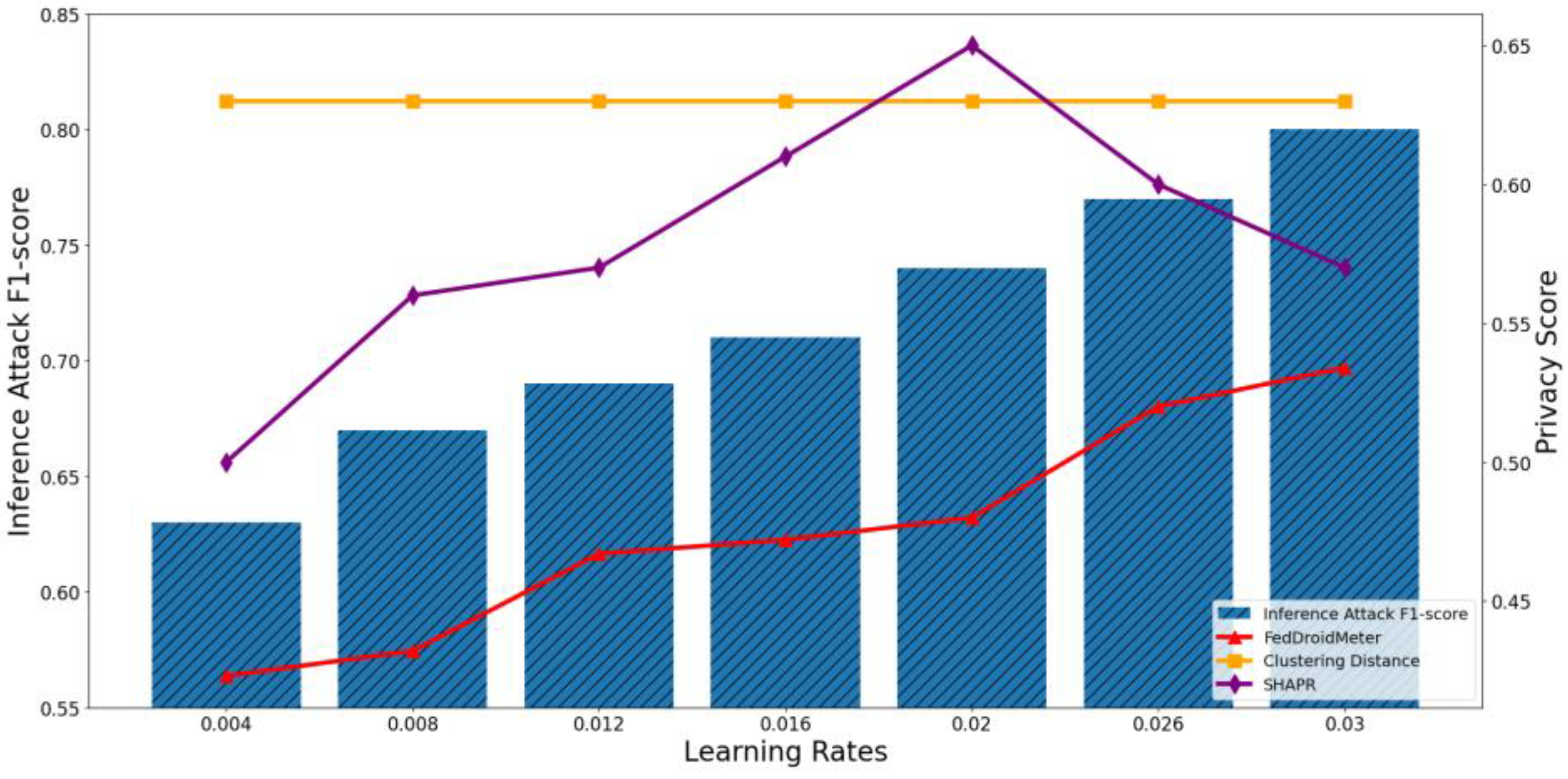

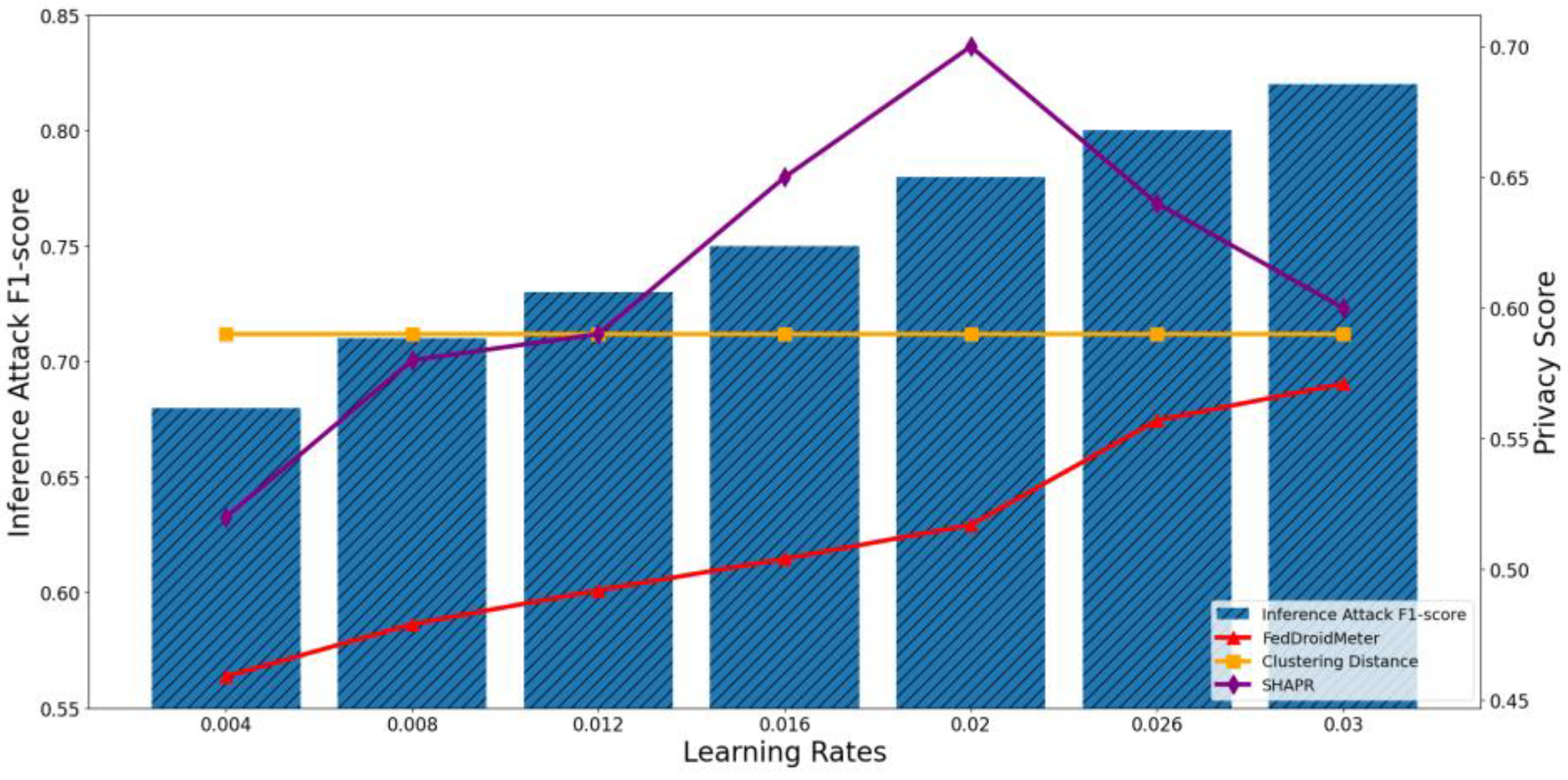

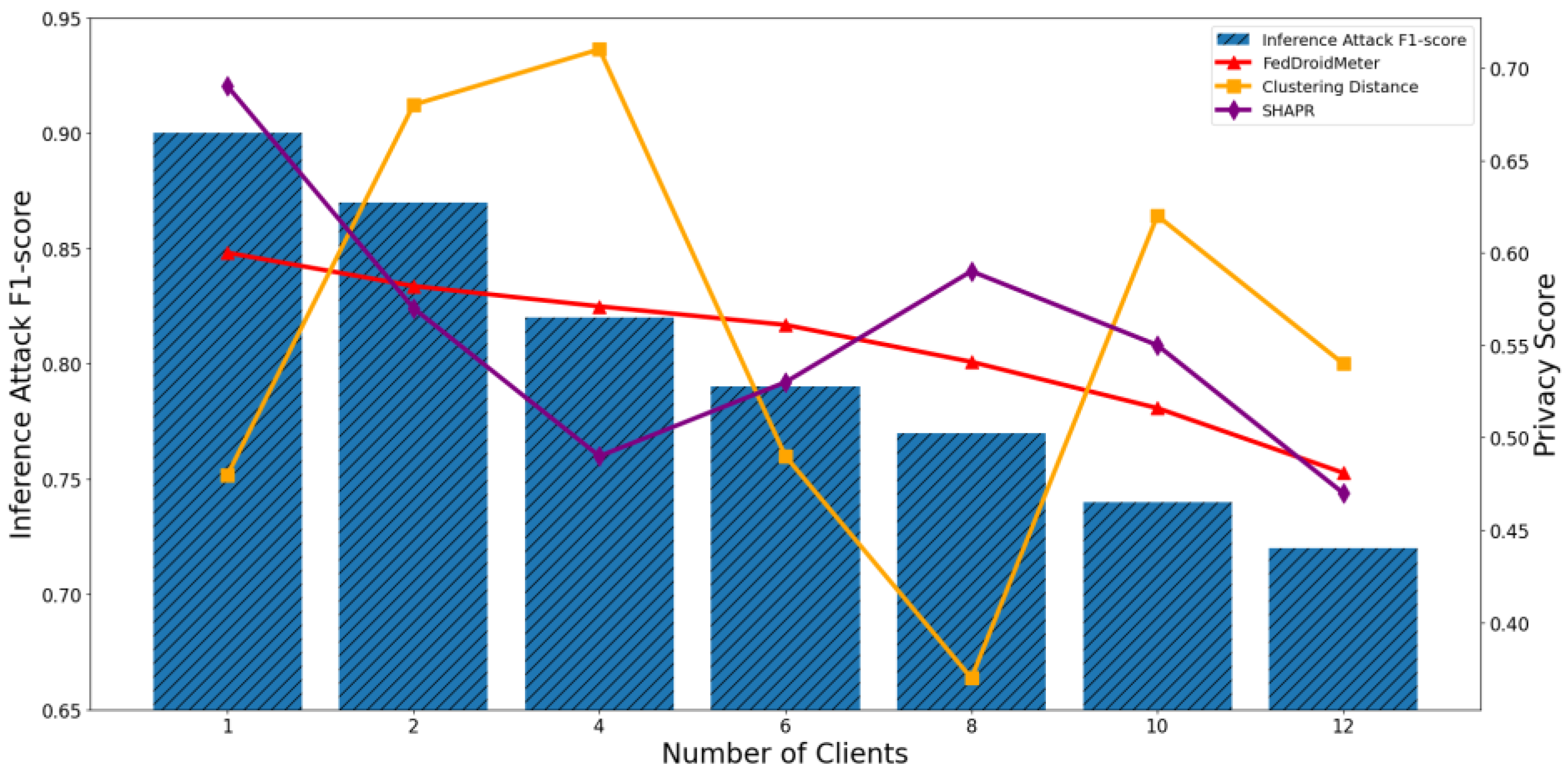

4.4.1. Test the Applicability of Privacy Risk Scores to Different FL Parameters

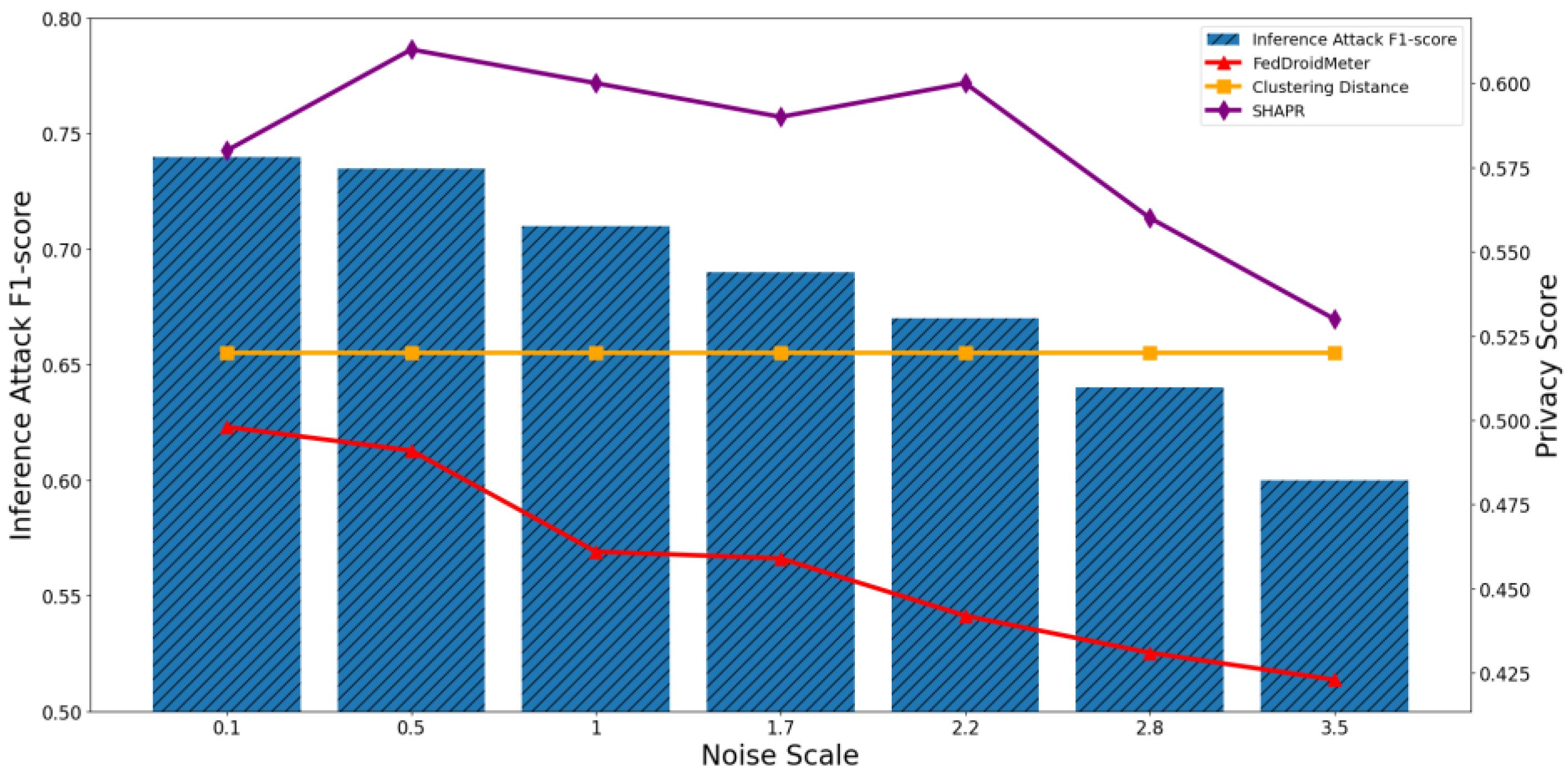

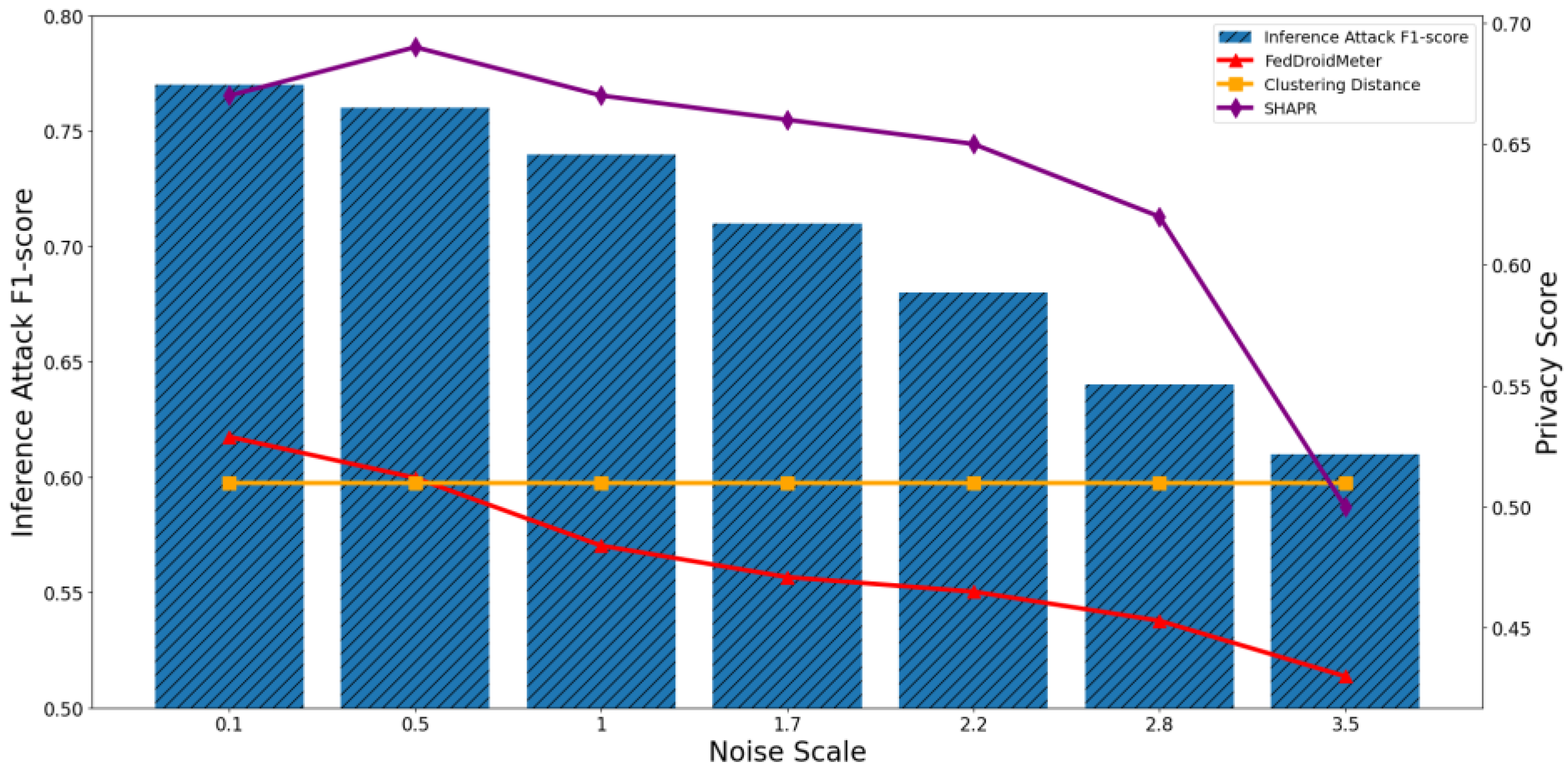

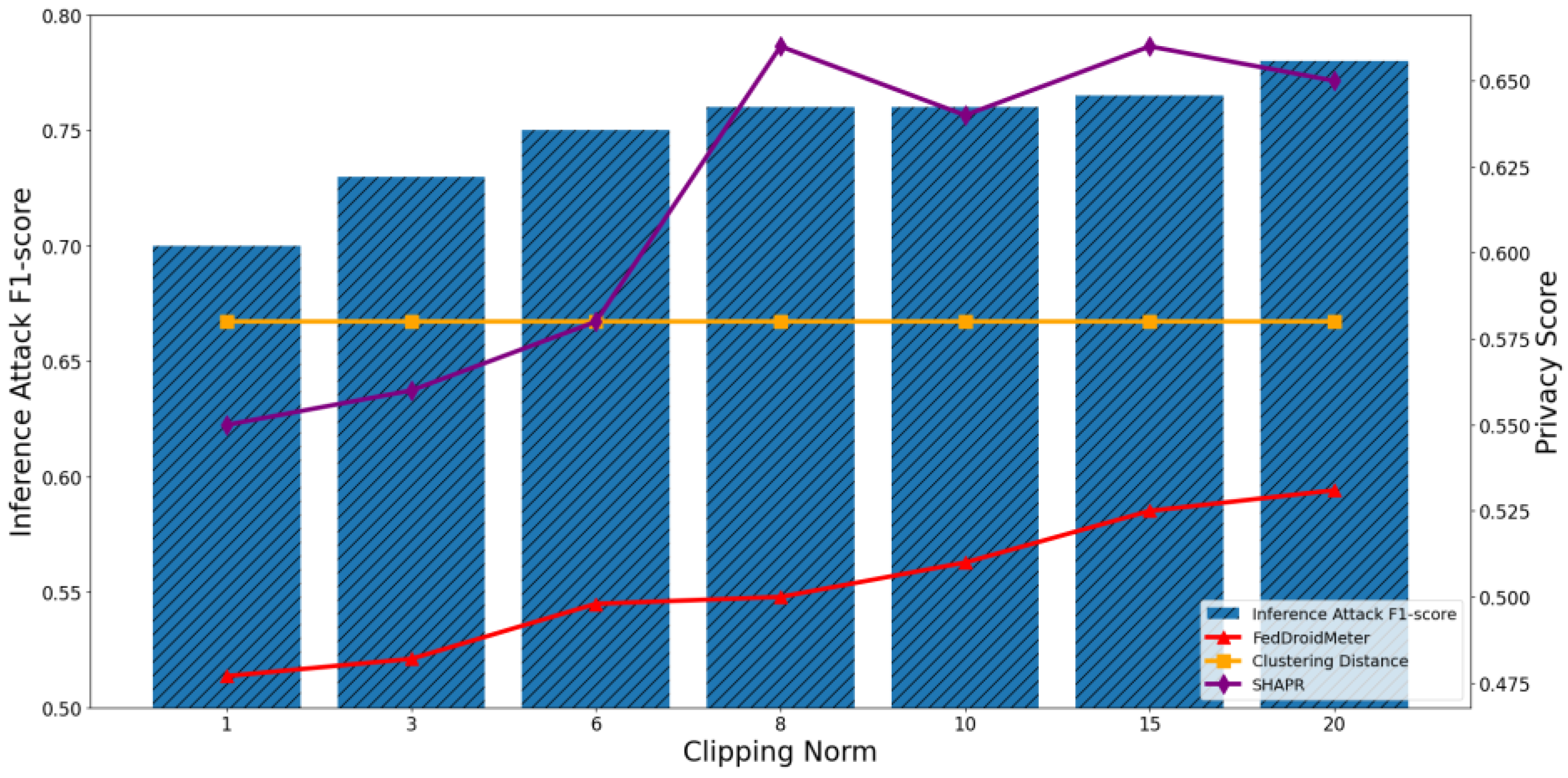

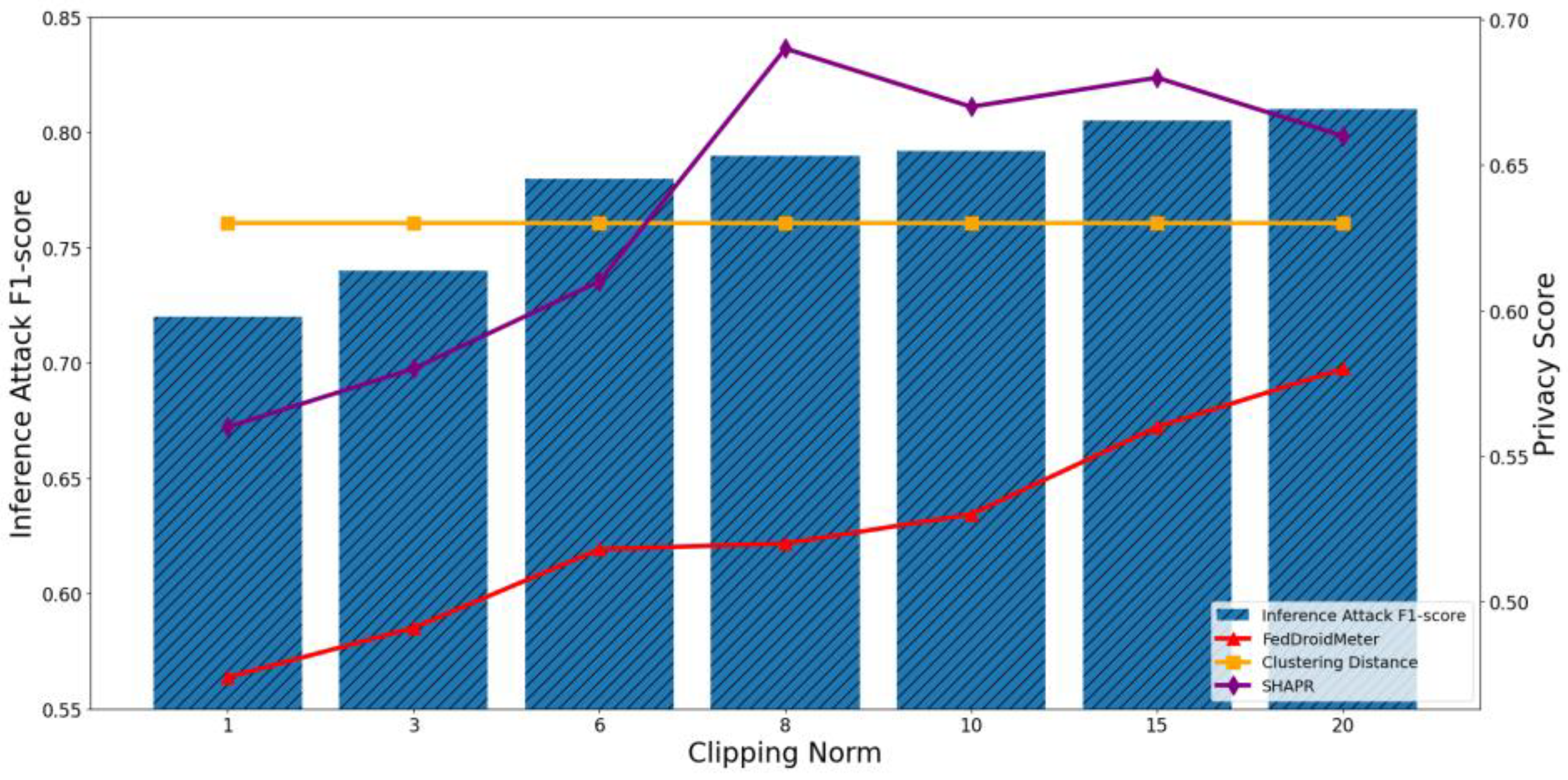

4.4.2. Test the Applicability of Privacy Risk Score to Different Privacy Parameters

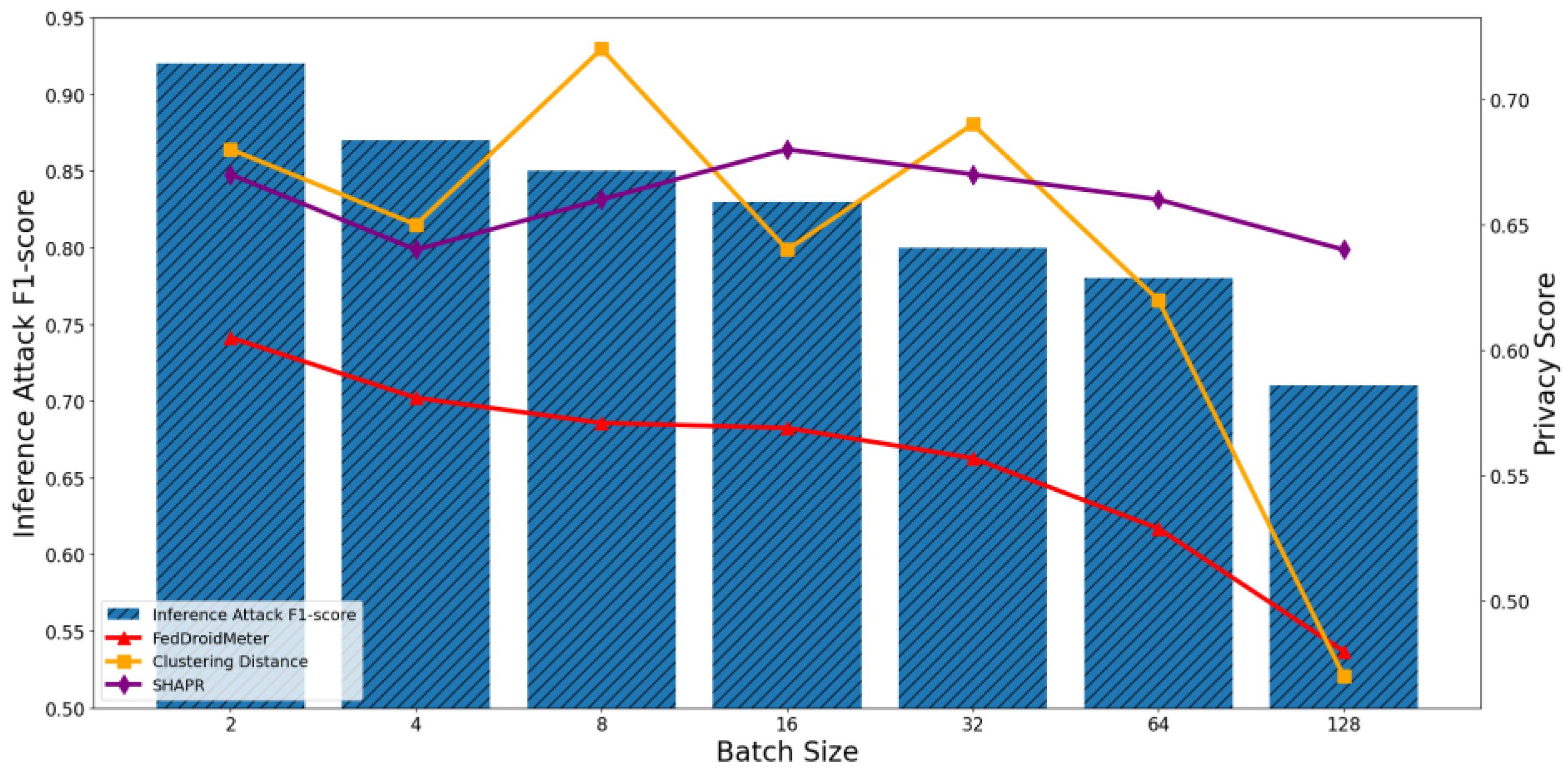

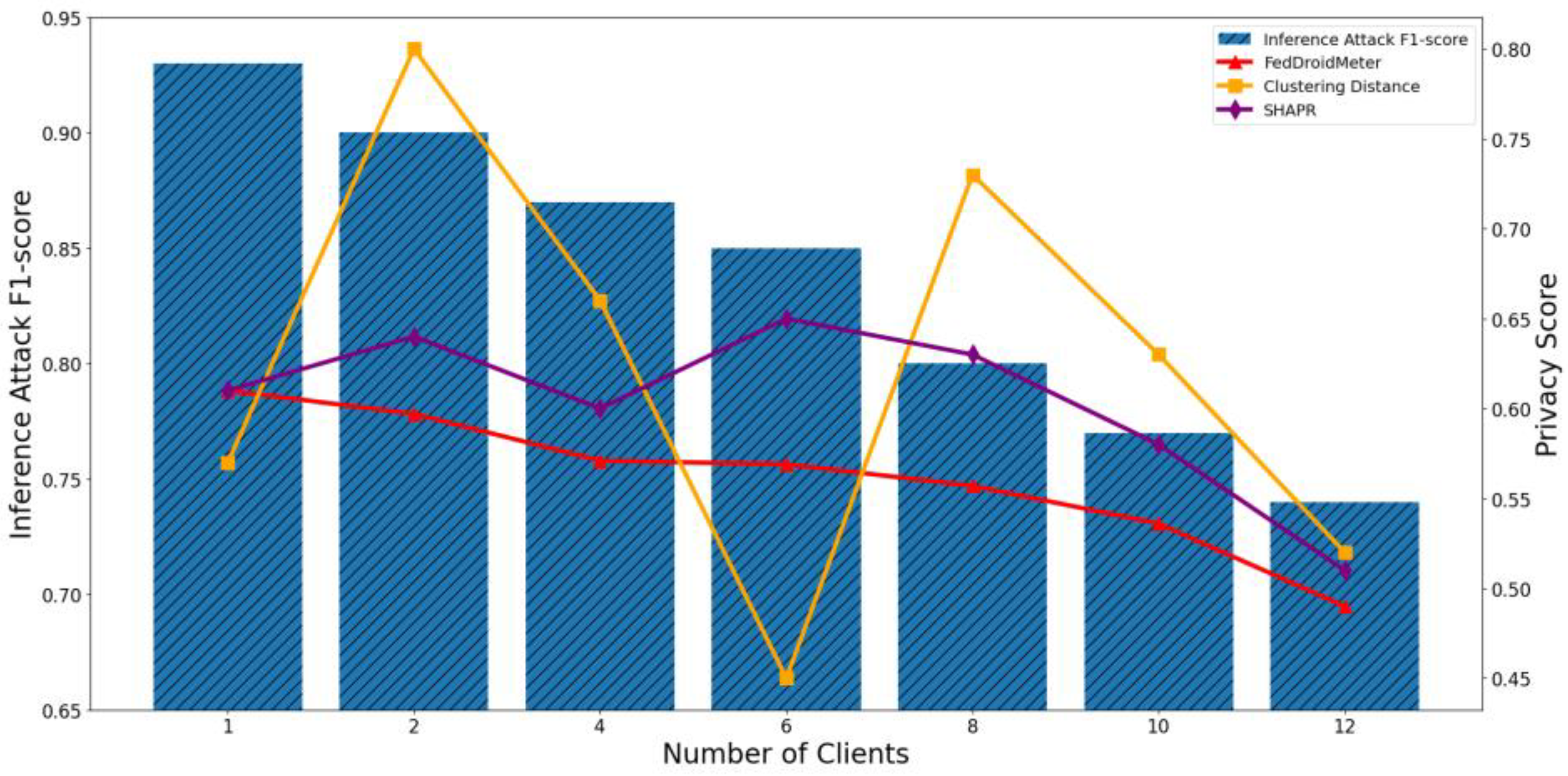

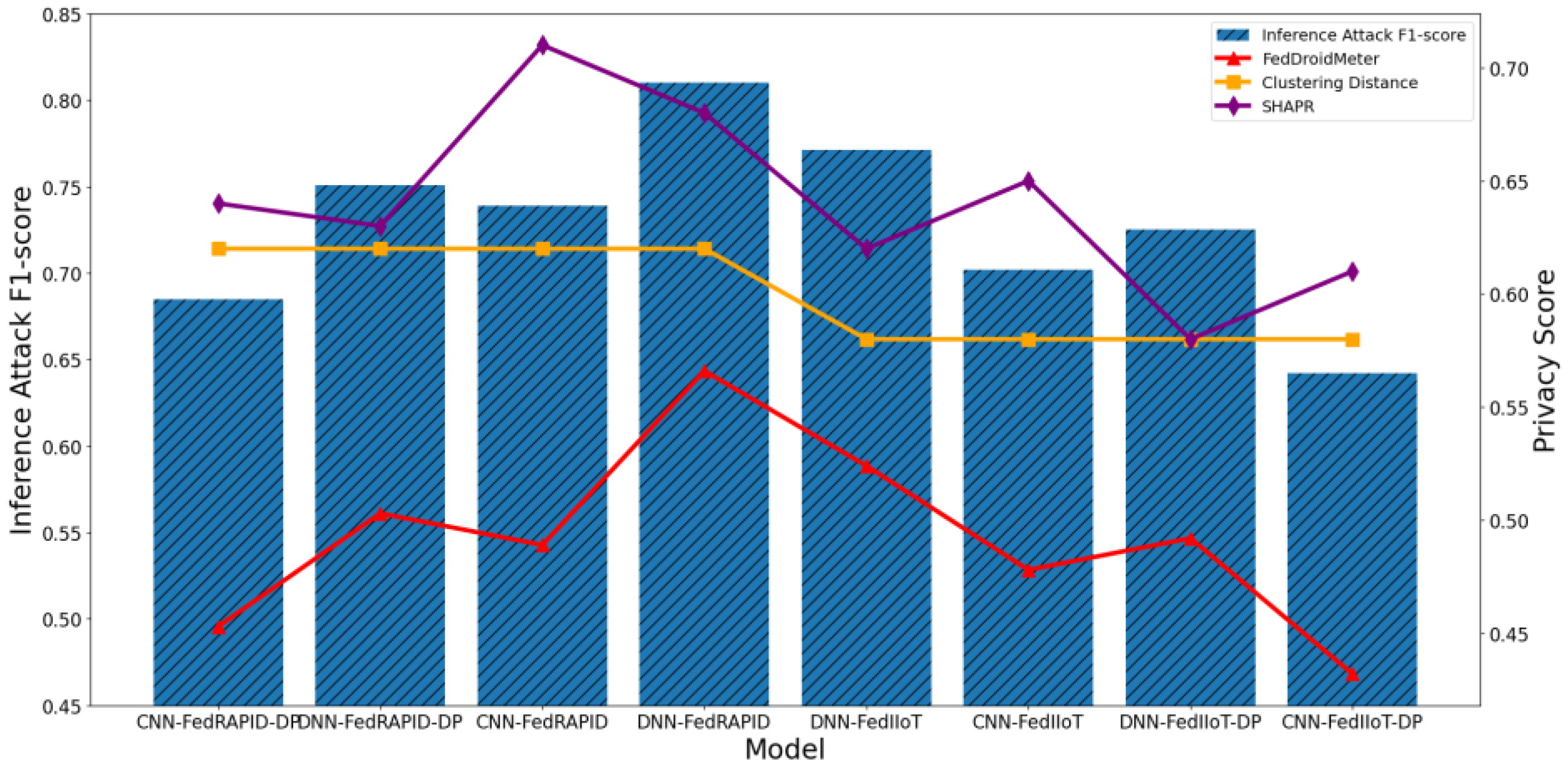

4.4.3. Test the Applicability of Privacy Risk Scores to Different Models

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Qiu, J.; Zhang, J.; Luo, W.; Pan, L.; Nepal, S.; Xiang, Y. A Survey of Android Malware Detection with Deep Neural Models. ACM Comput. Surv. 2021, 53, 1–36.

2. Tu, Z.; Cao, H.; Lagerspetz, E.; Fan, Y.; Flores, H.; Tarkoma, S.; Nurmi, P.; Li, Y. Demographics of mobile app usage: Long-term analysis of mobile app usage. CCF Trans. Pervasive Comput. Interact. 2021, 3, 235–252.

3. Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 1–19.

4. Gálvez, R.; Moonsamy, V.; Diaz, C. Less is More: A privacy-respecting Android malware classifier using federated learning. Proc. Priv. Enhancing Technol. arXiv 2020, arXiv:2007.08319.

5. Melis, L.; Song, C.; De Cristofaro, E.; Shmatikov, V. Exploiting Unintended Feature Leakage in Collaborative Learning. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 691–706.

6. Orekondy, T.; Schiele, B.; Fritz, M. Knockoff nets: Stealing functionality of black-box models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4954–4963.

7. Zhu, L.; Liu, Z.; Han, S. Deep Leakage from Gradients. In Advances in Neural Information Processing Systems; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019. Available online: https://proceedings.neurips.cc/paper_files/paper/2019/file/60a6c4002cc7b29142def8871531281a-Paper.pdf (accessed on 16 May 2022).

8. Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Membership Inference Attacks Against Machine Learning Models. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 3–18.

9. ICO Consultation on the Draft AI Auditing Framework Guidance for Organisations, 2020. Available online: https://ico.org.uk/about-the-ico/ico-and-stakeholder-consultations/ico-consultation-on-the-draft-ai-auditing-framework-guidance-for-organisations/ (accessed on 16 May 2022).

10. Tu, Z.; Li, R.; Li, Y.; Wang, G.; Wu, D.; Hui, P.; Su, L.; Jin, D. Your Apps Give You Away. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 138.

11. Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Poor, H.V. Federated Learning for Internet of Things: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2021, 23, 1622–1658.

12. Lim, W.Y.B.; Luong, N.C.; Hoang, D.T.; Jiao, Y.; Liang, Y.C.; Yang, Q.; Niyato, D.; Miao, C. Federated learning in mobile edge networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2020, 22, 2031–2063.

13. Taheri, R.; Shojafar, M.; Alazab, M.; Tafazolli, R. Fed-IIoT: A Robust Federated Malware Detection Architecture in Industrial IoT. IEEE Trans. Ind. Inform. 2021, 17, 8442–8452.

14. Singh, N.; Kasyap, H.; Tripathy, S. Collaborative Learning Based Effective Malware Detection System. In PKDD/ECML Workshops 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 205–219.

15. Shukla, S.; Manoj, P.S.; Kolhe, G.; Rafatirad, S. On-device Malware Detection using Performance-Aware and Robust Collaborative Learning. In Proceedings of DAC 2021, San Francisco, CA, USA, 5–9 December 2021; pp. 967–972.

16. Singh, A.K.; Goyal, N. Android Web Security Solution using Cross-device Federated Learning. In Proceedings of COMSNETS 2022, Bangalore, India, 4–8 January 2022; pp. 473–481.

17. Rey, V.; Sánchez, P.M.S.; Celdrán, A.H.; Bovet, G. Federated learning for malware detection in IoT devices. Comput. Netw. 2022, 204, 108693.

18. Salem, A.; Zhang, Y.; Humbert, M.; Berrang, P.; Fritz, M.; Backes, M. ML-Leaks: Model and Data Independent Membership Inference Attacks and Defenses on Machine Learning Models. In Proceedings of NDSS 2019, San Diego, CA, USA, 24–27 February 2019.

19. Leino, K.; Fredrikson, M. Stolen Memories: Leveraging model memorization for calibrated white-box membership inference. In USENIX Security 2020; USENIX: Berkeley, CA, USA, 2020; pp. 1605–1622.

20. Shafran, A.; Peleg, S.; Hoshen, Y. Membership Inference Attacks are Easier on Difficult Problems. In Proceedings of ICCV 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 14800–14809.

21. Ateniese, G.; Mancini, L.V.; Spognardi, A.; Villani, A.; Vitali, D.; Felici, G. Hacking smart machines with smarter ones: How to extract meaningful data from machine learning classifiers. Int. J. Secur. Netw. 2015, 10, 137–150.

22. Zhao, B.; Mopuri, K.R.; Bilen, H. iDLG: Improved Deep Leakage from Gradients. arXiv 2020, arXiv:2001.02610.

23. Song, C.; Shmatikov, V. Overlearning Reveals Sensitive Attributes. In Proceedings of ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020.

24. Fredrikson, M.; Lantz, E.; Jha, S.; Lin, S.; Page, D.; Ristenpart, T. Privacy in Pharmacogenetics: An End-to-End Case Study of Personalized Warfarin Dosing. In Proceedings of USENIX Security 2014, San Diego, CA, USA, 20–22 August 2014; pp. 17–32.

25. Fredrikson, M.; Jha, S.; Ristenpart, T. Model Inversion Attacks that Exploit Confidence Information and Basic Countermeasures. In Proceedings of CCS 2015, Denver, CO, USA, 12–16 October 2015; pp. 1322–1333.

26. Carlini, N.; Liu, C.; Erlingsson, Ú.; Kos, J.; Song, D. The Secret Sharer: Evaluating and Testing Unintended Memorization in Neural Networks. In Proceedings of USENIX Security 2019, Santa Clara, CA, USA, 14–16 August 2019; pp. 267–284.

27. Nasr, M.; Shokri, R.; Houmansadr, A. Comprehensive Privacy Analysis of Deep Learning: Passive and Active White-box Inference Attacks against Centralized and Federated Learning. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 1021–1035.

28. Tramèr, F.; Zhang, F.; Juels, A.; Reiter, M.K.; Ristenpart, T. Stealing machine learning models via prediction APIs. In Proceedings of the 25th USENIX Security Symposium (USENIX Security 16), Austin, TX, USA, 10–12 August 2016; pp. 601–618.

29. Oh, S.J.; Schiele, B.; Fritz, M. Towards Reverse-Engineering Black-Box Neural Networks. In Proceedings of ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

30. Zhang, W.; Tople, S.; Ohrimenko, O. Leakage of Dataset Properties in Multi-Party Machine Learning. In Proceedings of the USENIX Security Symposium 2021, Virtual, 11–13 August 2021; pp. 2687–2704.

31. Sun, J.; Li, A.; Wang, B.; Yang, H.; Li, H.; Chen, Y. Soteria: Provable defense against privacy leakage in federated learning from representation perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 20–25 June 2021; pp. 9311–9319.

32. Murakonda, S.K.; Shokri, R. ML Privacy Meter: Aiding Regulatory Compliance by Quantifying the Privacy Risks of Machine Learning. In Workshop on Hot Topics in Privacy Enhancing Technologies (HotPETs), 2020. Available online: https://arxiv.org/abs/2007.09339 (accessed on 16 May 2022).

33. Liu, Y.; Wen, R.; He, X.; Salem, A.; Zhang, Z.; Backes, M.; Fritz, M.; Zhang, Y. ML-Doctor: Holistic Risk Assessment of Inference Attacks Against Machine Learning Models. In Proceedings of the USENIX Security Symposium 2022, Boston, MA, USA, 10–12 August 2022; pp. 4525–4542.

34. Duddu, V.; Szyller, S.; Asokan, N. SHAPr: An Efficient and Versatile Membership Privacy Risk Metric for Machine Learning. arXiv 2021, arXiv:2112.02230.

35. Song, L.; Mittal, P. Systematic evaluation of privacy risks of machine learning models. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Virtual, 11–13 August 2021.

36. Hannun, A.; Guo, C.; van der Maaten, L. Measuring data leakage in machine-learning models with Fisher information. arXiv 2021, arXiv:2102.11673.

37. Saeidian, S.; Cervia, G.; Oechtering, T.J.; Skoglund, M. Quantifying Membership Privacy via Information Leakage. IEEE Trans. Inf. Forensics Secur. 2021, 16, 3096–3108.

38. Rassouli, B.; Gündüz, D. Optimal Utility-Privacy Trade-off with Total Variation Distance as a Privacy Measure. IEEE Trans. Inf. Forensics Secur. 2019, 15, 594–603.

39. Yu, D.; Kamath, G.; Kulkarni, J.; Yin, J.; Liu, T.Y.; Zhang, H. Per-instance privacy accounting for differentially private stochastic gradient descent. arXiv 2022, arXiv:2206.02617.

40. Bai, Y.; Fan, M.; Li, Y.; Xie, C. Privacy Risk Assessment of Training Data in Machine Learning. In Proceedings of ICC 2022, Seoul, Republic of Korea, 16–20 May 2022.

41. Wagner, I.; Eckhoff, D. Technical privacy metrics: A systematic survey. ACM Comput. Surv. 2018, 51, 1–38.

42. Ling, Z.; Hao, Z.J. An Intrusion Detection System Based on Normalized Mutual Information Antibodies Feature Selection and Adaptive Quantum Artificial Immune System. Int. J. Semant. Web Inf. Syst. 2022, 18, 1–25.

43. Andrew, G.; Thakkar, O.; McMahan, B. Differentially Private Learning with Adaptive Clipping. In Proceedings of NeurIPS 2021, Virtual, 6–14 December 2021; pp. 17455–17466.

44. Allix, K.; Bissyandé, T.F.; Klein, J.; Le Traon, Y. AndroZoo: Collecting millions of Android apps for the research community. In Proceedings of the 13th International Conference on Mining Software Repositories, Austin, TX, USA, 14–15 May 2016; pp. 468–471.

45. Wainakh, A.; Ventola, F.; Müßig, T.; Keim, J.; Cordero, C.G.; Zimmer, E. User-Level Label Leakage from Gradients in Federated Learning. Proc. Priv. Enhancing Technol. 2022, 2022, 227–244.

| Ref | Method | Limitation | Attack-Agnostic | User-Oriented | Equal Comparison |
|---|---|---|---|---|---|
| [30] | Results of performing membership inference attacks. | These methods depend on the results of specific attack settings and cannot give users an effective evaluation of the results. | - | - | - |
| [31] | Mean squared error (MSE) between the reconstructed and original images. | Same as above | - | - | - |
| [33] | Results of multiple types of privacy inference attacks. | Same as above | - | - | - |
| [32] | Results of multiple types of privacy inference attacks. | Same as above | - | - | - |
| [34] | Based on the Shapley values of samples. | These methods do not target the sensitive information related to user privacy and cannot capture the root cause of privacy disclosure. | - | - | - |
| [37] | Maximum information leakage. | Same as above | √ | - | - |
| [38] | Total variation distance. | Same as above | √ | - | - |
| [35] | Probability that a single sample is in the target model's training set. | Same as above | √ | - | √ |
| [36] | Fisher information. | Same as above | √ | - | - |
| [39] | Per-instance privacy accounting for DP. | Same as above | √ | - | - |
| [40] | K-means clustering distance. | Same as above | √ | - | - |
| Proposed FedDroidMeter | Normalized mutual information of the user’s sensitive information. | - | √ | √ | √ |

| Principle | Description |
|---|---|
| User-oriented privacy requirements | The resulting privacy risk score must reflect the greatest likelihood that an attacker successfully infers the sensitive information the user cares about. |
| Attack-agnostic | The metric should capture the root cause of any perceived attack success. The privacy risk score it calculates must be independent of any particular attack model, so that the score can assess the privacy risk posed by different or future inference attacks. |
| Equal comparison between different use cases | The privacy risk scores obtained from the evaluation should allow equal comparison across different use cases. |

| Abbreviation and Symbol | Explanation |
|---|---|
| FL | Federated learning |
| DL | Deep learning |
| ML | Machine learning |
| | Local dataset of app samples |
| | Size of local dataset |
| | Global classification model |
| | Global loss function |
| | Local loss function |
| | Feature space of Android malware |
| | Category space of Android malware |
| | Feature of Android malware |
| | Category of Android malware |
| | Functional class of the training app samples |
| | Gradient of initialization parameters |
| | Sensitive information in the system |
| | Mutual information |
| | Normalized mutual information |
| | Privacy risk score |
| | Global sensitivity |
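The table lists a local dataset, its size, a global classification model, and global and local loss functions. Assuming a FedAvg-style system (an assumption; this section does not state the aggregation rule), the global loss is the dataset-size-weighted average of the local losses, $F(w) = \sum_k \frac{n_k}{n} F_k(w)$ in hypothetical notation, and the server aggregates client parameters with the same weights. The minimal sketch below, with hypothetical function names and flat parameter lists, shows that weighting:

```python
from typing import List


def global_loss(local_losses: List[float], local_sizes: List[int]) -> float:
    """F(w) = sum_k (n_k / n) * F_k(w): size-weighted mean of local losses (FedAvg-style assumption)."""
    n = sum(local_sizes)
    return sum(loss * n_k / n for loss, n_k in zip(local_losses, local_sizes))


def fedavg(local_params: List[List[float]], local_sizes: List[int]) -> List[float]:
    """Aggregate flat client parameter vectors with the same n_k / n weights."""
    n = sum(local_sizes)
    dim = len(local_params[0])
    return [sum(p[i] * n_k / n for p, n_k in zip(local_params, local_sizes))
            for i in range(dim)]
```

Weighting by local dataset size lets clients with more app samples contribute proportionally more to the global model; it is also why each uploaded gradient carries information about its client's local data.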

| Category of Malware | Quantity | Category of App Function | Quantity |
|---|---|---|---|
| Benign | 29,977 | Photography | 5104 |
| Malicious | 23,760 | Books | 6381 |
| Adware | 4728 | Sports | 4632 |
| Trojan | 3638 | Weather | 5396 |
| Riskware | 3193 | Game | 7142 |
| Ransom | 4322 | Finance | 5665 |
| Exploit | 1825 | Health and Fitness | 4745 |
| Spyware | 2476 | Music and Audio | 6043 |
| Downloader | 4023 | Shopping | 3874 |
| Fraudware | 3776 | Communication | 4824 |

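| Performance Metric | Calculation Formula |
|---|---|
| Precision | $\mathrm{Precision} = \frac{TP}{TP + FP}$ |
| Recall | $\mathrm{Recall} = \frac{TP}{TP + FN}$ |
| F1-score | $F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ |

Here, TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively.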
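The precision, recall, and F1-score metrics can be computed per class by treating each class in turn as the positive class. The sketch below assumes macro-averaging over the malware categories (the averaging scheme is not stated in this section) and, as a labeled convention, scores an undefined ratio (zero denominator) as 0. The function names are hypothetical.

```python
from typing import Hashable, Sequence, Tuple


def per_class_prf(y_true: Sequence[Hashable], y_pred: Sequence[Hashable],
                  label: Hashable) -> Tuple[float, float, float]:
    """Precision, recall, and F1-score for one class, treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    # Assumed convention: a ratio with a zero denominator scores 0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_prf(y_true: Sequence[Hashable],
              y_pred: Sequence[Hashable]) -> Tuple[float, ...]:
    """Unweighted (macro) mean of per-class precision, recall, and F1-score."""
    labels = sorted(set(y_true) | set(y_pred), key=str)
    scores = [per_class_prf(y_true, y_pred, c) for c in labels]
    return tuple(sum(s[i] for s in scores) / len(scores) for i in range(3))
```

Macro-averaging gives each malware category equal weight, so small categories such as Exploit influence the score as much as large ones; a sample-weighted average would instead favor the larger categories.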

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, C.; Xia, C.; Liu, Z.; Wang, T. FedDroidMeter: A Privacy Risk Evaluator for FL-Based Android Malware Classification Systems. Entropy 2023, 25, 1053. https://doi.org/10.3390/e25071053
