1. Introduction

Differential privacy (DP) [1] has emerged as a de facto standard for privacy-preserving technologies in research and practice due to the quantifiable privacy guarantee it provides. DP involves randomizing the outputs of an algorithm in such a way that the presence or absence of a single individual’s information within a database does not significantly affect the outcome of the algorithm. DP typically introduces randomness in the form of additive noise, ensuring that an adversary cannot infer any information about a particular record with high confidence. The key challenge is to keep the performance or utility of the noisy algorithm close enough to the unperturbed one to be useful in practice [2].

In its pure form, DP measures privacy risk by a parameter ϵ, which can be interpreted as the privacy budget and which bounds the log-likelihood ratio of the output of a private algorithm under two datasets differing in a single individual’s data. The smaller the ϵ, the stronger the privacy guarantee, but at the cost of worse performance. In privacy-preserving machine learning models, higher values of ϵ are generally chosen to achieve acceptable utility. However, setting ϵ to arbitrarily large values severely undermines privacy, although there is no hard threshold for ϵ above which the formal guarantees provided by DP become meaningless in practice [3]. To improve utility for a given privacy budget, a relaxed definition of differential privacy, referred to as (ϵ, δ)-DP, was proposed [4]. Under this privacy notion, a randomized algorithm is considered privacy-preserving if the privacy loss of its output is smaller than ϵ with high probability (i.e., with probability at least 1 − δ) [5].
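For reference, the relaxed definition is commonly written as follows. This is the standard form from the DP literature; the symbols 𝒜 (the randomized algorithm), 𝒟 and 𝒟′ (neighboring datasets differing in one individual’s data), and 𝒮 (any measurable set of outputs) are introduced here only for illustration:

```latex
\Pr\big[\mathcal{A}(\mathcal{D}) \in \mathcal{S}\big] \;\le\; e^{\epsilon}\,\Pr\big[\mathcal{A}(\mathcal{D}') \in \mathcal{S}\big] + \delta
```

Setting δ = 0 recovers pure ϵ-DP.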

Our current work is motivated by the need for a decentralized differentially private algorithm to efficiently solve practical signal estimation and learning problems that (i) offers a better privacy–utility trade-off than existing approaches, and (ii) offers utility similar to that of the pooled-data (or centralized) scenario. Some noteworthy real-world examples of systems that may need such differentially private decentralized solutions include [6]: (i) medical research consortia of healthcare centers and labs, (ii) decentralized speech processing systems for learning model parameters for speaker recognition, and (iii) multi-party cyber-physical systems. To this end, we first focus on improving the privacy–utility trade-off of a well-known DP mechanism, called the functional mechanism (FM) [7]. The FM approach is more general and requires fewer assumptions on the objective function than other objective perturbation approaches [8,9].

The functional mechanism was originally proposed for “pure” ϵ-DP. However, it involves additive noise with very large variance for datasets with even moderate ambient dimension, leading to a severe degradation in utility. We propose a natural “approximate” (ϵ, δ)-DP variant using Gaussian noise and show that the proposed Gaussian FM scheme significantly reduces the additive noise variance. A recent work by Ding et al. [10] proposed relaxed FM using the Extended Gaussian mechanism [11], which also guarantees approximate (ϵ, δ)-DP instead of pure DP. However, we will show analytically and empirically that, just like the original FM, the relaxed FM also suffers from prohibitively large noise variance even for moderate ambient dimensions. Our tighter sensitivity analysis for the Gaussian FM, which differs from the technique used in [10], allows us to achieve much better utility for the same privacy guarantee. We further extend the proposed Gaussian FM framework to the decentralized or “federated” learning setting using the CAPE protocol [6]. Our cape-fm algorithm can offer the same level of utility as the centralized case over a range of parameters. Our empirical evaluation of the proposed algorithms on synthetic and real datasets demonstrates their superiority over existing methods. We now review the relevant existing research in this area before summarizing our contributions.

Related Works. There is a vast literature on the perturbation techniques to ensure DP in machine learning algorithms. The simplest method for ensuring that an algorithm satisfies DP is input perturbation, where noise is introduced to the input of the algorithm [2]. Another common approach is output perturbation, which obtains DP by adding noise to the output of the problem. In many machine learning algorithms, the underlying objective function is minimized with gradient descent. As the gradient is dependent on the privacy-sensitive data, randomization is introduced at each step of the gradient descent [9,12]. The amount of noise we need to add at each step depends on the sensitivity of the function to changes in its input [4].

Objective perturbation [8,9,13] is another state-of-the-art method to obtain DP, where noise is added to the underlying objective function of the machine learning algorithm, rather than its solutions. A newly proposed take on output perturbation [14] injects noise after model convergence, which imposes some additional constraints. In addition to optimization problems, Smith [15] proposed a general approach for computing summary statistics using the sample-and-aggregate framework and both the Laplace and Exponential mechanisms [16].

Zhang et al. originally proposed the functional mechanism (FM) [7] as an extension of the Laplace mechanism. FM has been used in numerous studies to ensure DP in practical settings. Jorgensen et al. applied FM in personalized differential privacy (PDP) [17], where the privacy requirements are specified at the user level rather than by a single, global privacy parameter. FM has also been combined with homomorphic encryption [18] to obtain both data secrecy and output privacy, as well as with fairness-aware learning [10,19] in classification models. The work of Fredrikson et al. [20], which demonstrated privacy in pharmacogenetics using FM and other DP mechanisms, is of particular interest to us. Pharmacogenetic models [21,22,23,24] contain sensitive clinical and genomic data that need to be protected. However, poor utility of differentially private pharmacogenetic models can expose patients to increased risk of disease. Fredrikson et al. [20] tested the efficacy of such models against attribute inference by using a model inversion technique. Their study shows that, although not explicitly designed to protect attribute privacy, DP can prevent attackers from accurately predicting genetic markers if ϵ is sufficiently small (≤1). However, such a small value of ϵ results in poor utility of the models due to excessive noise addition, leading them to conclude that when utility cannot be compromised much, the existing methods do not give an ϵ for which state-of-the-art DP mechanisms can be reasonably employed. As mentioned before, Ding et al. [10] recently proposed relaxed FM in an attempt to improve upon the original FM using the Extended Gaussian mechanism [11], which offers an approximate DP guarantee.

DP algorithms provide different guarantees than Secure Multi-party Computation (SMC)-based methods. Several studies [25,26,27] applied a combination of SMC and DP for distributed learning. Gade and Vaidya [25] demonstrated one such method in which each site adds and subtracts arbitrary functions to confuse the adversary. Heikkilä et al. [26] also studied the relationship between additive noise and sample size in a distributed setting. In their model, S data holders communicate their data to M computation nodes to compute a function. Tajeddine et al. [27] used DP-SMC on vertically partitioned data, i.e., where data of the same participants are distributed across multiple parties or data holders. Bonawitz et al. [28] proposed a communication-efficient method for federated learning over a large number of mobile devices. More recently, Heikkilä et al. [29] considered DP in a cross-silo federated learning setting by combining it with additive homomorphic secure summation protocols. Xu et al. [30] investigated DP for multiparty learning in the vertically partitioned data setting. Their proposed framework dissects the objective function into single-party and cross-party sub-functions, and applies functional mechanisms and secure aggregation to achieve the same utility as the centralized DP model. Inspired by the seminal work of Dwork et al. [31], which proposed distributed noise generation for preserving privacy, Imtiaz et al. [6] proposed the Correlation Assisted Private Estimation (CAPE) protocol. CAPE employs a similar principle as Anandan and Clifton [32] to reduce the noise added for DP in decentralized-data settings.

Our Contributions. As mentioned before, we are motivated by the need for a decentralized differentially private algorithm that injects a smaller amount of noise (compared to existing approaches) to efficiently solve practical signal estimation and learning problems. To that end, we first propose an improvement to the existing functional mechanism. We achieve this through a tighter sensitivity analysis, which significantly reduces the additive noise variance. As we utilize the Gaussian mechanism [33] to ensure (ϵ, δ)-DP, we call our improved functional mechanism Gaussian FM. Using our novel sensitivity analysis, we show that the proposed Gaussian FM injects a much smaller amount of additive noise than the original FM [7] and the relaxed FM [10] algorithms. We empirically show the superiority of Gaussian FM in terms of privacy guarantee and utility by comparing it with the corresponding non-private algorithm, the original FM [7], the relaxed FM [10], the objective perturbation [8], and the noisy gradient descent [12] methods. Note that the original FM [7] and the objective perturbation [8] methods guarantee pure DP, whereas the other methods guarantee approximate DP. We compare our (ϵ, δ)-DP Gaussian FM with the pure DP algorithms as a means of investigating how much performance/utility gain one can achieve by trading the pure DP guarantee for an approximate DP guarantee. Additionally, the noisy gradient descent method is a multi-round algorithm. Due to the composition theorem of differential privacy [33], the privacy budgets in multi-round algorithms accumulate over the iterations during training. To account more tightly for the total privacy loss in the noisy gradient descent algorithm, we use Rényi differential privacy [34].

Considering the fact that machine learning algorithms are often used in decentralized/federated data settings, we adapt our proposed Gaussian FM algorithm to these settings following the CAPE [6] protocol, and propose cape-fm. In many signal processing and machine learning applications where privacy regulations prevent sites from sharing their local raw data, joint learning across datasets can yield discoveries that are impossible to obtain from a single site. Motivated by scientific collaborations that are common in human health research, cape-fm improves upon conventional decentralized DP schemes and achieves the same level of utility as the pooled-data scenario in certain regimes. It has been shown [6] that CAPE can benefit computations whose sensitivities satisfy some conditions. Many functions of interest in machine learning and deep neural networks have sensitivities that satisfy these conditions. Our proposed cape-fm algorithm utilizes the Stone–Weierstrass theorem [35] to approximate a cost function in the decentralized-data setting and employs the CAPE protocol.
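To illustrate the kind of polynomial approximation referred to above, the original FM [7] replaces the logistic term log(1 + exp(z)) by its second-order Taylor expansion around z = 0, namely log 2 + z/2 + z²/8. The sketch below assumes that expansion (not necessarily the exact decomposition used by cape-fm) and compares it with the exact term; the function names are hypothetical.

```python
import math


def logistic_term(z: float) -> float:
    """Exact logistic-loss term log(1 + exp(z))."""
    return math.log1p(math.exp(z))


def logistic_term_taylor2(z: float) -> float:
    """Second-order Taylor expansion of log(1 + exp(z)) around z = 0.

    f(0) = log 2, f'(0) = 1/2, f''(0) = 1/4, hence log 2 + z/2 + z^2/8.
    The third derivative vanishes at 0, so the error is O(z^4).
    """
    return math.log(2.0) + z / 2.0 + z * z / 8.0


if __name__ == "__main__":
    # Assuming bounded features and weights, z = x^T w stays near 0,
    # where the quadratic surrogate is accurate.
    for z in (-1.0, -0.5, 0.0, 0.5, 1.0):
        exact = logistic_term(z)
        approx = logistic_term_taylor2(z)
        print(f"z={z:+.1f}  exact={exact:.4f}  taylor2={approx:.4f}  abs_err={abs(exact - approx):.4f}")
```

Because the coefficients of such a polynomial are sums over data samples, they are exactly the quantities a functional mechanism perturbs.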

To summarize, the goal of our work is to improve the privacy–utility trade-off and reduce the amount of noise in the functional mechanism, at the expense of an approximate DP guarantee, for applications of machine learning in decentralized/federated data settings, similar to those found in research consortia. Our main contributions are:

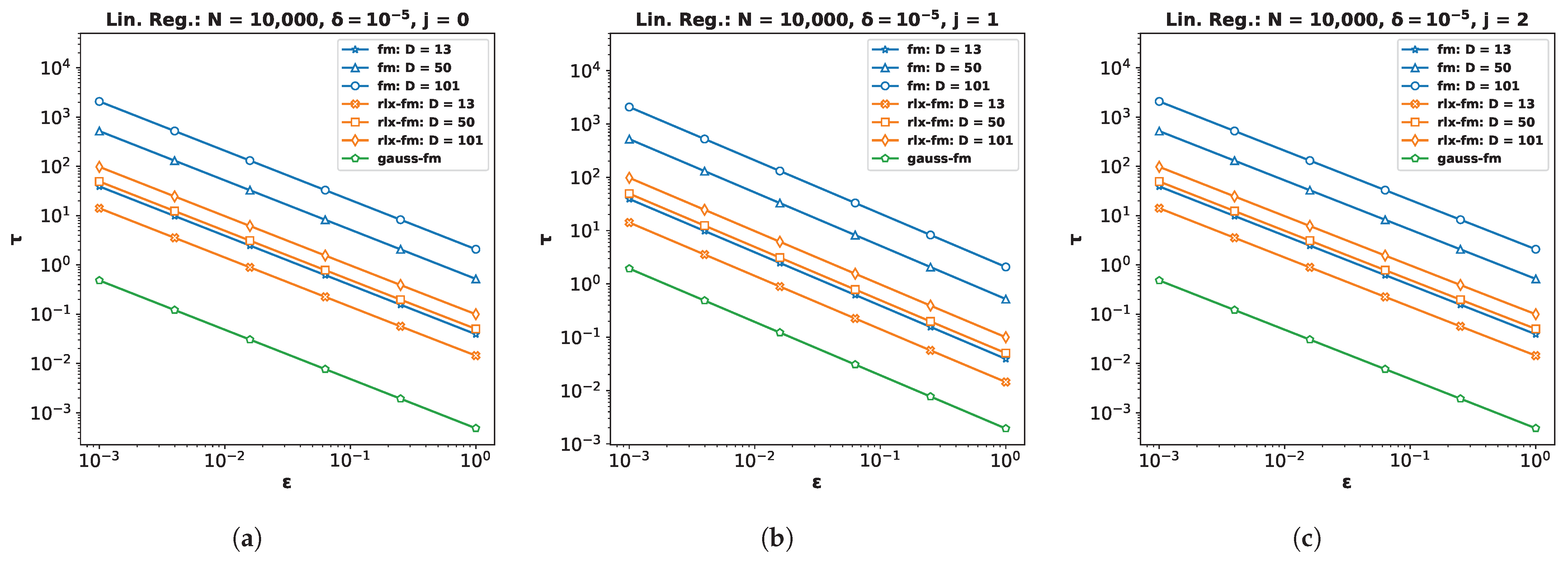

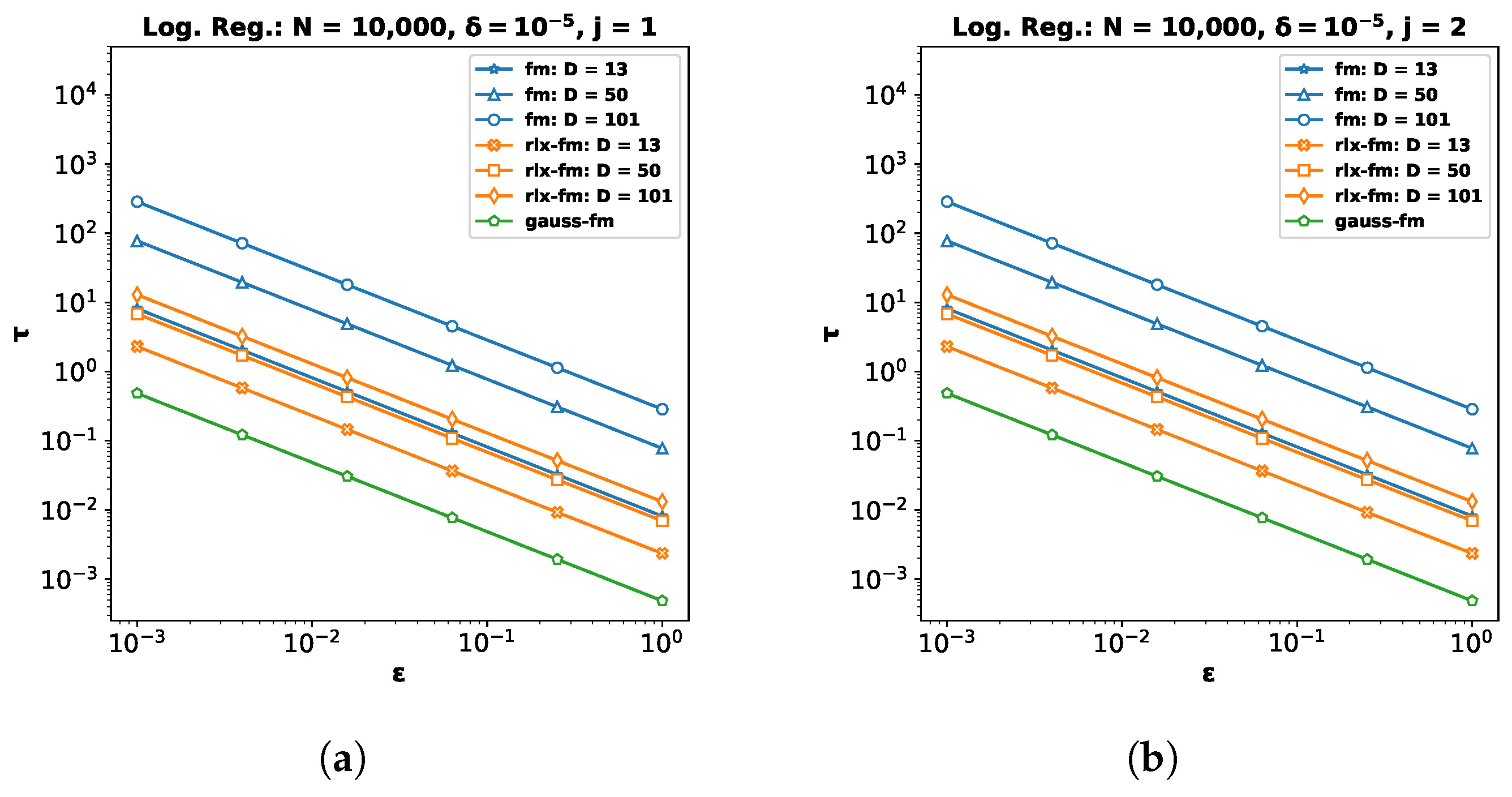

We propose Gaussian FM as an improvement over the existing functional mechanism by performing a tighter sensitivity analysis. Our novel analysis has two major features: (i) the sensitivity parameters of the data-dependent (hence, privacy-sensitive) polynomial coefficients of the Stone–Weierstrass decomposition of the objective function are free of the dataset dimensionality; and (ii) the additive noise for privacy is tailored to the order j of each polynomial coefficient of the Stone–Weierstrass decomposition of the objective function, rather than being the same for all coefficients. These features give our proposed Gaussian FM a significant advantage by offering much less noisy function computation than both the original FM [7] and the relaxed FM [10], as shown for linear and logistic regression problems. We also empirically validate this on real and synthetic data.

We extend our Gaussian FM to decentralized/federated data settings to propose cape-fm, a novel extension of the functional mechanism for decentralized data. To this end, we note another significant advantage of our proposed Gaussian FM over the original FM: the Gaussian FM can be readily extended to decentralized/federated data settings by exploiting the fact that a sum of jointly Gaussian random variables is again a Gaussian random variable, which is not true for Laplace random variables. We show that the proposed cape-fm can achieve the same utility as the pooled-data scenario for some parameter choices. To the best of our knowledge, cape-fm is the first functional mechanism for decentralized-data settings.

We demonstrate the effectiveness of our algorithms with varying privacy and dataset parameters. Our privacy analysis and empirical results on real and synthetic datasets show that the proposed algorithms can achieve much better utility than the existing state-of-the-art algorithms.
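The Gaussian-sum property behind this extension can be checked numerically. The sketch below assumes a CAPE-style construction as we understand it from [6]: each of S sites adds a zero-sum component e_s with variance (1 − 1/S)τ_s² (so that Σ_s e_s = 0) plus an independent component g_s with variance τ_s²/S. Each site's released noise then still has variance τ_s², while the noise left in the aggregator's average has variance τ_s²/S², the level a pooled dataset would need in the symmetric setting (equal local sample sizes). Here the zero-sum constraint is imposed by centering in a single process, a stand-in for the secure protocol; `cape_style_noise` is a hypothetical name, and [6] should be consulted for the exact protocol.

```python
import numpy as np


def cape_style_noise(num_sites, tau_s, dim, rng):
    """Draw per-site noise (e, g) for a CAPE-style release (hypothetical sketch).

    e[s]: zero-sum correlated noise, Var = (1 - 1/S) * tau_s**2, sum over sites = 0
    g[s]: independent noise,         Var = tau_s**2 / S
    """
    S = num_sites
    # Centering i.i.d. N(0, tau_s^2) draws across sites gives variance
    # (1 - 1/S) * tau_s^2 per site and an exact zero sum (stand-in for SMC).
    z = rng.normal(0.0, tau_s, size=(S, dim))
    e = z - z.mean(axis=0, keepdims=True)
    g = rng.normal(0.0, tau_s / np.sqrt(S), size=(S, dim))
    return e, g


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    S, tau_s, dim = 10, 1.0, 20000
    e, g = cape_style_noise(S, tau_s, dim, rng)
    released = e + g                                # noise each site adds locally
    per_site_var = released.var(axis=1).mean()      # target: tau_s**2
    aggregate_var = released.mean(axis=0).var()     # target: tau_s**2 / S**2
    print(f"per-site variance  ~ {per_site_var:.4f} (target {tau_s ** 2:.4f})")
    print(f"aggregate variance ~ {aggregate_var:.5f} (target {tau_s ** 2 / S ** 2:.5f})")
```

With Laplace noise the aggregate of the correlated and independent parts would no longer follow the same family, which is why this construction is tied to the Gaussian mechanism.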

3. Functional Mechanism with Approximate Differential Privacy: Gaussian FM

Zhang et al. [7] computed the ℓ1-sensitivity Δ1 of the data-dependent terms for the linear regression and logistic regression problems. Δ1 is shown to be 2(1 + 2D + D²) for linear regression and D²/4 + 3D for logistic regression. We note that Δ1 grows quadratically with the ambient dimension D of the data samples, resulting in an excessively large amount of noise being injected into the objective function. Additionally, Ding et al. [10] proposed relaxed FM, a “utility-enhancement scheme”, by replacing the Laplace mechanism with the Extended Gaussian mechanism [11], thus achieving slightly better utility than the original FM at the expense of an approximate DP guarantee instead of a pure DP guarantee. However, the ℓ2-sensitivity Δ2 of the data-dependent terms that Ding et al. [10] derived for the logistic regression problem still depends on D, and, using the technique outlined in [10], an analogous ℓ2-sensitivity can be derived for the linear regression problem (please see Appendix A for details). For both cases, we observe that Δ2 grows linearly with the ambient dimension of the data samples. Therefore, the privacy-preserving additive noise variances in both the original FM and relaxed FM schemes depend on the data dimensionality and can be prohibitively large even for moderate D. Moreover, both FM and relaxed FM add the same amount of noise to each polynomial coefficient λ_φ irrespective of its order j. With a tighter characterization, we show in Section 4 that the sensitivities of these coefficients differ across orders j. We reduce the amount of added noise by addressing these issues through a novel sensitivity analysis. The key points are as follows:

Instead of computing an ϵ-DP approximation of the objective function using the Laplace mechanism, we use the Gaussian mechanism to compute an (ϵ, δ)-DP approximation of the objective function. This gives a weaker privacy guarantee than pure differential privacy, but provides much better utility.

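Recall that the original FM achieves ϵ-DP by adding Laplace noise scaled to the ℓ1-sensitivity of the data-dependent terms of the objective function f_𝒟(w) in (2). As we use the Gaussian mechanism, we require an ℓ2-sensitivity analysis. To compute the ℓ2-sensitivity of the data-dependent terms of f_𝒟(w) in (2), we first define an array Λ_j that contains the data-dependent coefficients λ_φ as its entries for all φ ∈ Φ_j. The term “array” is used because the dimension of Λ_j depends on the cardinality of Φ_j. For example, for j = 0, Λ_0 is a scalar because |Φ_0| = 1; for j = 1, Λ_1 can be expressed as a D-dimensional vector because |Φ_1| = D; for j = 2, Λ_2 can be expressed as a D × D matrix because Φ_2 contains the products w_i w_k for i, k ∈ {1, …, D}.

We rewrite the objective function as

$$f_{\mathcal{D}}(\mathbf{w}) = \sum_{j=0}^{J} \left\langle \Lambda_j, \Psi_j(\mathbf{w}) \right\rangle, \tag{3}$$

where Ψ_j(w) is the array containing all φ(w), φ ∈ Φ_j, as its entries, and ⟨·, ·⟩ denotes the sum of element-wise products. Note that Λ_j and Ψ_j(w) have the same dimensions and number of elements. We define the ℓ2-sensitivity of Λ_j as

$$\Delta_2^{(j)} = \max_{\mathcal{D}, \mathcal{D}'} \left\| \Lambda_j - \Lambda_j' \right\|_2, \tag{4}$$

where Λ_j and Λ′_j are computed on neighboring datasets 𝒟 and 𝒟′, respectively, and the norm is taken over all entries of the array. Following the Gaussian mechanism [33], we can calculate the (ϵ, δ)-differentially private estimate of Λ_j, denoted Λ̂_j, as

$$\hat{\Lambda}_j = \Lambda_j + E_j, \tag{5}$$

where the noise array E_j has the same dimension as Λ_j and contains entries drawn i.i.d. from 𝒩(0, τ_j²) with τ_j = (Δ_2^{(j)}/ϵ)√(2 log(1.25/δ)). Finally, we have

$$\hat{f}_{\mathcal{D}}(\mathbf{w}) = \sum_{j=0}^{J} \left\langle \hat{\Lambda}_j, \Psi_j(\mathbf{w}) \right\rangle. \tag{6}$$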

Theorem 2 (Privacy of the Gaussian FM (Algorithm 1)). Consider Algorithm 1 with privacy parameters (ϵ, δ), and the empirical average cost function represented as in (3). Then Algorithm 1 computes an (ϵ, δ)-differentially private approximation f̂_𝒟(w) to f_𝒟(w). Consequently, the minimizer ŵ = argmin_w f̂_𝒟(w) satisfies (ϵ, δ)-differential privacy.

Algorithm 1 Gaussian FM
Require: Data samples (x_n, y_n) for n = 1, …, N; cost function f_𝒟(w) represented as in (3); privacy parameters (ϵ, δ).
1: for j = 0, …, J do
2:   Form the data-dependent coefficient array Λ_j
3:   Compute the ℓ2-sensitivity Δ_2^{(j)}
4:   Compute τ_j = (Δ_2^{(j)}/ϵ)√(2 log(1.25/δ))
5:   Compute E_j, with entries drawn i.i.d. from 𝒩(0, τ_j²), with the same dimension as Λ_j
6:   Release Λ̂_j = Λ_j + E_j
7: end for
8: Compute f̂_𝒟(w) from {Λ̂_j} as in (3)
9: return Perturbed objective function f̂_𝒟(w)

Proof. The proof of Theorem 2 follows from the fact that the function f̂_𝒟(w) depends on the data samples only through {Λ̂_j}. The computation of {Λ̂_j} is (ϵ, δ)-differentially private by the Gaussian mechanism [4,33]. Therefore, the release of f̂_𝒟(w) satisfies (ϵ, δ)-differential privacy. One way to rationalize this is to consider that the probability of the event of selecting a particular set of {Λ̂_j} is the same as that of the event of formulating a function f̂_𝒟(w) with that set of {Λ̂_j}. Therefore, it suffices to consider the joint density of the {Λ̂_j} and find an upper bound on the ratio of the joint densities of the {Λ̂_j} under two neighboring datasets 𝒟 and 𝒟′. As we employ the Gaussian mechanism to compute {Λ̂_j}, this ratio is upper bounded by e^ϵ with probability at least 1 − δ. Hence, the release of f̂_𝒟(w) satisfies (ϵ, δ)-differential privacy. Furthermore, differential privacy is invariant to post-processing. Therefore, the computation of the minimizer ŵ also satisfies (ϵ, δ)-differential privacy. □
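To make the release steps concrete, here is a minimal sketch of a Gaussian-FM-style release for least-squares linear regression; it is not the authors’ implementation. It assumes the cost (1/N)Σ_n (y_n − wᵀx_n)², whose data-dependent coefficients are Λ_1 = −(2/N)Σ_n y_n x_n (a D-vector) and Λ_2 = (1/N)Σ_n x_n x_nᵀ (a D × D matrix); the constant Λ_0 is omitted because it does not affect the minimizer. The per-order ℓ2-sensitivities `sens1` and `sens2` are taken as inputs because their values come from the analysis in Section 4. Each order is perturbed with its own (ϵ, δ) here, so by basic composition this particular sketch is (2ϵ, 2δ)-DP; the paper’s own budget allocation may differ. The eigenvalue floor `min_eig` is a hypothetical post-processing choice that keeps the perturbed quadratic convex.

```python
import numpy as np


def gaussian_noise_std(sensitivity, epsilon, delta):
    """Classical Gaussian-mechanism calibration tau = (Delta/eps) * sqrt(2 ln(1.25/delta))."""
    return sensitivity * np.sqrt(2.0 * np.log(1.25 / delta)) / epsilon


def gaussian_fm_linreg(X, y, epsilon, delta, sens1, sens2, min_eig=1e-3, rng=None):
    """Sketch: perturb the order-1 and order-2 coefficients, then minimise.

    sens1, sens2 are hypothetical per-order l2-sensitivities supplied by the caller.
    """
    rng = np.random.default_rng() if rng is None else rng
    N, D = X.shape
    lam1 = -2.0 * (X * y[:, None]).mean(axis=0)   # order-1 coefficients (D-vector)
    lam2 = (X.T @ X) / N                           # order-2 coefficients (D x D)

    # Independent Gaussian noise per order, scaled to that order's sensitivity.
    lam1_hat = lam1 + rng.normal(0.0, gaussian_noise_std(sens1, epsilon, delta), size=D)
    lam2_hat = lam2 + rng.normal(0.0, gaussian_noise_std(sens2, epsilon, delta), size=(D, D))

    # Post-processing (no extra privacy cost): symmetrise and floor the eigenvalues
    # so that lam1_hat^T w + w^T lam2_psd w has a unique minimiser.
    lam2_hat = 0.5 * (lam2_hat + lam2_hat.T)
    evals, evecs = np.linalg.eigh(lam2_hat)
    lam2_psd = (evecs * np.maximum(evals, min_eig)) @ evecs.T

    # Set the gradient lam1_hat + 2 * lam2_psd @ w to zero.
    return np.linalg.solve(2.0 * lam2_psd, -lam1_hat)
```

With a very large ϵ the noise becomes negligible and the output approaches the ordinary least-squares solution, which gives a quick sanity check.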

Privacy Analysis of Noisy Gradient Descent [12] using Rényi Differential Privacy. One of the most crucial qualitative properties of DP is that it allows us to evaluate the cumulative privacy loss over multiple computations [33]. In multi-round machine learning algorithms, the cumulative, or total, privacy loss differs from the (ϵ, δ) guarantee of a single round. To demonstrate the superior privacy guarantee of the proposed Gaussian FM, we compare it to the existing functional mechanism [7], the relaxed functional mechanism [10], the objective perturbation [8], and the noisy gradient descent [12] methods. Note that, similar to objective perturbation, FM, and relaxed FM, the proposed Gaussian FM injects randomness in a single round and therefore does not require privacy accounting. However, the noisy gradient descent method adds noise at each step in which the gradient is computed; that is, noise is added to the computed gradients of the parameters of the objective function during optimization. Since it is a multi-round algorithm, the overall ϵ spent during optimization differs from the ϵ of each iteration. We follow the analysis procedure outlined in [6] for the privacy accounting of the noisy gradient descent algorithm. Note that Proposition 3 described in Section 2.1 is stated for functions with unit ℓ2-sensitivity. Therefore, if noise drawn from 𝒩(0, τ²) is added to a function with ℓ2-sensitivity Δ, the resulting mechanism satisfies (α, αΔ²/(2τ²))-RDP. Now, according to Proposition 3, the T-fold composition of such Gaussian mechanisms satisfies (α, TαΔ²/(2τ²))-RDP. Finally, according to Proposition 1, it also satisfies (TαΔ²/(2τ²) + log(1/δ)/(α − 1), δ)-differential privacy for any 0 < δ < 1, where α > 1. For a given value of δ, we can express the value of the optimal overall ϵ as a function of α:

$$\epsilon = \frac{T\alpha\Delta^2}{2\tau^2} + \frac{\log(1/\delta)}{\alpha - 1}, \tag{7}$$

where α is given by

$$\alpha = 1 + \sqrt{\frac{2\tau^2 \log(1/\delta)}{T\Delta^2}}.$$

We compute the overall ϵ following this procedure for the noisy gradient descent algorithm [12] in our experiments in Section 6.
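The accounting above reduces to a closed form. This sketch assumes the standard RDP facts the procedure relies on: a Gaussian mechanism with ℓ2-sensitivity Δ and noise 𝒩(0, τ²) is (α, αΔ²/(2τ²))-RDP, RDP parameters add under T-fold composition, and (α, ε)-RDP implies (ε + log(1/δ)/(α − 1), δ)-DP. Writing c = TΔ²/(2τ²), minimizing cα + log(1/δ)/(α − 1) over α > 1 gives α* = 1 + √(log(1/δ)/c) and ϵ = c + 2√(c log(1/δ)). The function name is hypothetical, and α is treated as a continuous variable.

```python
import math


def noisy_gd_overall_epsilon(T, sensitivity, tau, delta):
    """Overall epsilon of T composed Gaussian mechanisms via RDP (continuous alpha > 1).

    Returns (epsilon, alpha_star).
    """
    c = T * sensitivity ** 2 / (2.0 * tau ** 2)       # composed RDP: eps_RDP(alpha) = c * alpha
    log_inv_delta = math.log(1.0 / delta)
    alpha_star = 1.0 + math.sqrt(log_inv_delta / c)   # minimiser of c*alpha + log(1/delta)/(alpha-1)
    epsilon = c * alpha_star + log_inv_delta / (alpha_star - 1.0)
    return epsilon, alpha_star


if __name__ == "__main__":
    # For a fixed ratio Delta/tau, the overall epsilon grows with the number of iterations T.
    for T in (1, 10, 100, 1000):
        eps, alpha = noisy_gd_overall_epsilon(T, sensitivity=1.0, tau=10.0, delta=1e-5)
        print(f"T={T:5d}  alpha*={alpha:8.2f}  overall epsilon={eps:.3f}")
```

Only T and the ratio Δ/τ enter the result, which is why single-shot mechanisms avoid this growth entirely.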

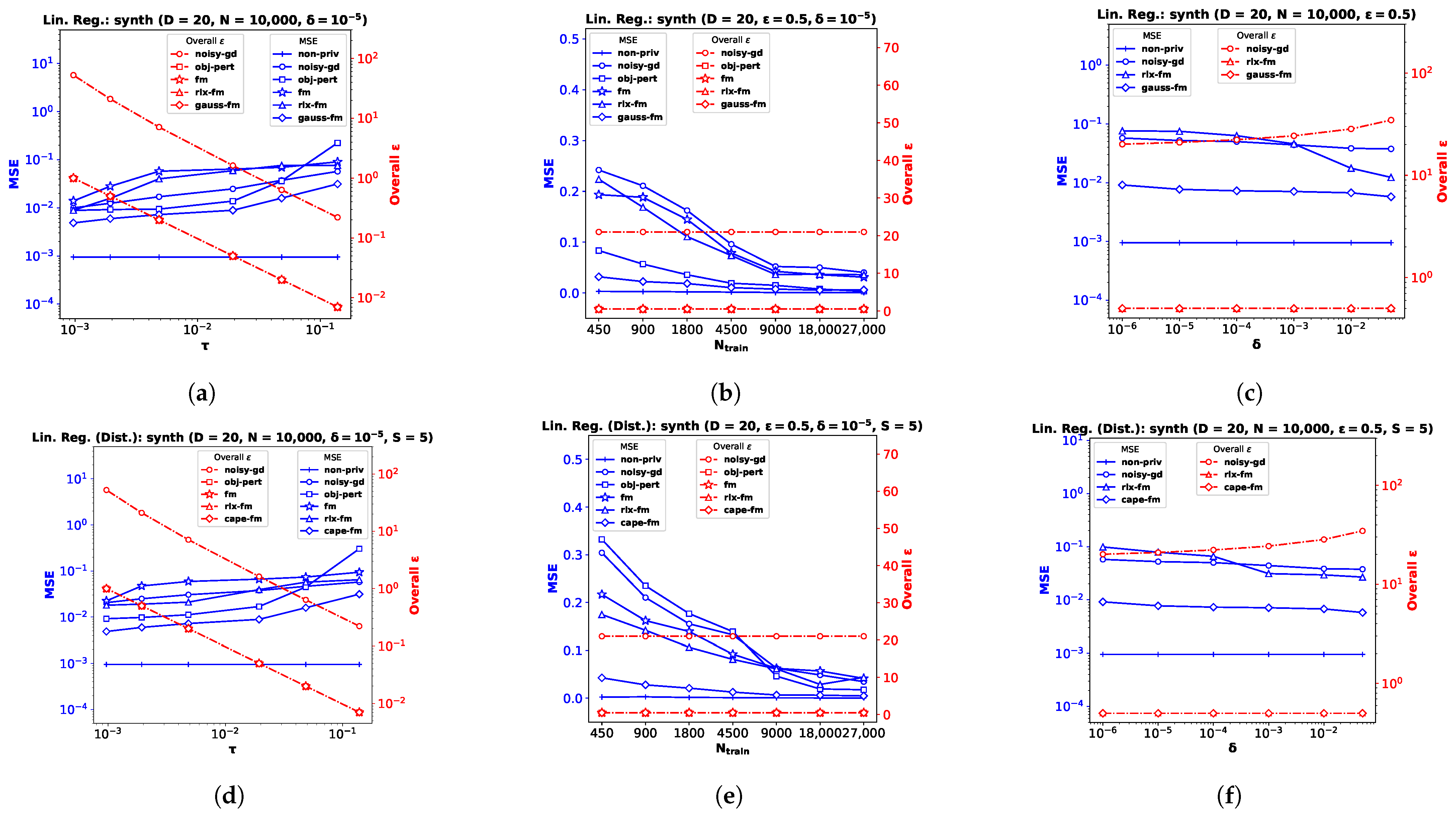

6. Experimental Results

In this section, we empirically compare the performance of our proposed Gaussian FM algorithm (

gauss-fm) with those of some state-of-the-art differentially private linear and logistic regression algorithms, namely noisy gradient descent (

noisy-gd) [

12], objective perturbation (

obj-pert) [

8], original functional mechanism (

fm) [

7], and relaxed functional mechanism (

rlx-fm) [

10]. We also compare the performance of these algorithms with non-private linear and logistic regression (

non-priv). As mentioned before, we compute the overall

using RDP for the multi-round

noisy-gd algorithm. Additionally, we show how our proposed decentralized functional mechanism (

cape-fm) can improve a decentralized computation if the target function has sensitivity satisfying the conditions of Proposition 5 in

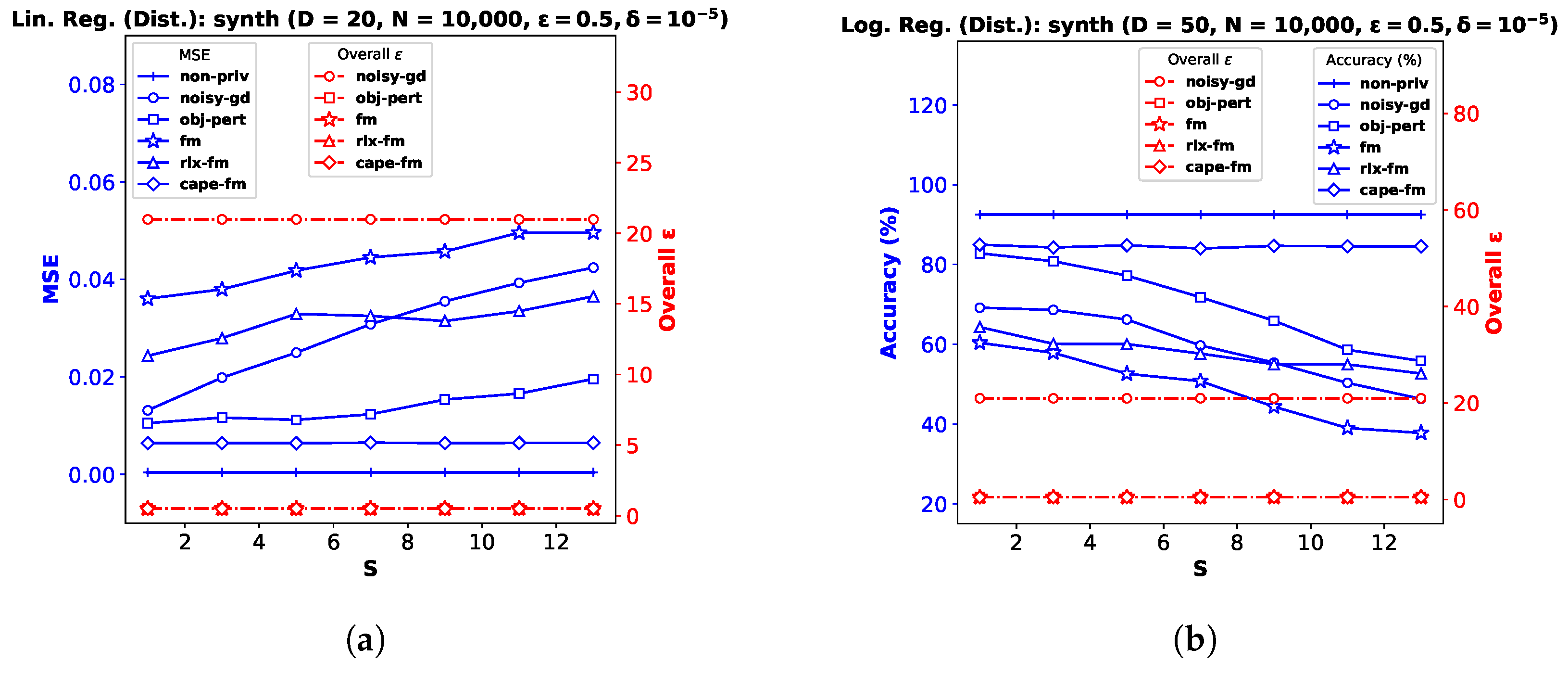

Section 2.1. We show the variation in performance with privacy parameters and number of training samples. For the decentralized setting, we further show the empirical performance comparison by varying the number of sites.

Performance Indices. For the linear regression task, we use the mean squared error (MSE) as the performance index. Let the test dataset be 𝒟_ts = {(x_n, y_n)}, n = 1, …, N_ts. Then the MSE can be defined as

$$\mathrm{MSE} = \frac{1}{N_{ts}} \sum_{n=1}^{N_{ts}} \left(y_n - \hat{y}_n\right)^2,$$

where ŷ_n is the prediction from the algorithm. For the classification task, we use accuracy as the performance index, defined as

$$\mathrm{Accuracy} = \frac{1}{N_{ts}} \sum_{n=1}^{N_{ts}} \mathbb{1}\left(y_n = \hat{y}_n\right),$$

where 𝟙(·) is the indicator function and ŷ_n is the prediction from the algorithm. Note that, in addition to a small MSE or a large accuracy, we want to attain a strict privacy guarantee, i.e., small overall ϵ values. Recall from Section 3 that the overall ϵ for multi-shot algorithms is a function of the number of iterations, the target δ, the additive noise variance τ², and the ℓ2-sensitivity Δ. To demonstrate the overall ϵ guarantee for a fixed target δ, we plot the overall ϵ (dotted red lines, right y-axis) along with the MSE/accuracy (solid blue lines, left y-axis) to visualize how the privacy–utility trade-off varies with different parameters. For a given privacy budget (or performance requirement), the user can use the overall ϵ plot on the right y-axis (or the MSE/accuracy plot on the left y-axis) to find the required noise standard deviation τ on the x-axis and, thereby, the corresponding performance (or overall ϵ). We compute the overall ϵ for the noisy-gd algorithm using the RDP technique described in Section 3.
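Both performance indices can be computed in a few lines. This sketch assumes the predictions are already available (for logistic regression, as hard class labels) and reports accuracy in percent, as in the figures; the function names are hypothetical.

```python
import numpy as np


def mean_squared_error(y_true, y_pred):
    """MSE = (1/N_ts) * sum_n (y_n - yhat_n)^2 over the test set."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


def percent_accuracy(y_true, y_pred):
    """100 * (1/N_ts) * sum_n 1(y_n == yhat_n) over the test set."""
    return 100.0 * float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
```

For example, `mean_squared_error([1, 2, 3], [1, 2, 5])` gives 4/3 and `percent_accuracy([0, 1, 1, 0], [0, 1, 0, 0])` gives 75.0.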

6.1. Linear Regression

For the linear regression problem, we perform experiments on three real datasets (and a synthetic dataset, as shown in Appendix B). The IWPC pharmacogenetic dataset was collected by the International Warfarin Pharmacogenetics Consortium (IWPC) [23] for the purpose of estimating the personalized warfarin dose based on the clinical and genotype information of a patient. The data used for this study consist of D-dimensional feature vectors collected from N patients. Out of the wide variety of numerical modeling methods used in [23], linear regression provided the most accurate dose estimates. Fredrikson et al. [20] later implemented an attack model assuming an adversary who employs an inference algorithm to discover the genotype of a target individual, and showed that the existing functional mechanism (fm) failed to provide a meaningful privacy guarantee against such attacks. We perform privacy-preserving linear regression on the IWPC dataset (Figure 1a–c) to show the effectiveness of our proposed gauss-fm over fm, rlx-fm, and other existing approaches. Additionally, we use the Communities and Crime dataset (crime) [45], which has a larger dimensionality (Figure 1d–f), and the Buzz in Social Media dataset (twitter) [46], which has a large sample size (Figure 1g–i). We refer the reader to [47] for a detailed description of these real datasets. For all the experiments, we pre-process the data so that the feature vectors and responses satisfy the boundedness assumptions for all samples. We divide each dataset into train and test partitions with a ratio of 90:10 and report the average performance over 10 independent runs.
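A minimal sketch of this pre-processing and splitting protocol follows. It assumes the boundedness conditions take the form ‖x_n‖₂ ≤ 1 and |y_n| ≤ 1 used by the original FM [7], and enforces them by a single global rescaling; the helper names are hypothetical. Because the scale factors here are computed from the data themselves, a strict DP pipeline would instead use bounds known in advance.

```python
import numpy as np


def rescale_to_unit_bounds(X, y):
    """Globally rescale so that max_n ||x_n||_2 <= 1 and max_n |y_n| <= 1."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_scale = max(np.linalg.norm(X, axis=1).max(), 1e-12)  # guard against division by zero
    y_scale = max(np.abs(y).max(), 1e-12)
    return X / x_scale, y / y_scale


def split_90_10(X, y, rng):
    """Random 90:10 train/test split."""
    idx = rng.permutation(len(y))
    n_test = len(y) // 10
    test, train = idx[:n_test], idx[n_test:]
    return X[train], y[train], X[test], y[test]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X_raw = 3.0 * rng.normal(size=(1000, 5))
    y_raw = 10.0 * rng.normal(size=1000)
    X, y = rescale_to_unit_bounds(X_raw, y_raw)
    # One of the independent runs; a full experiment repeats this with fresh splits.
    X_tr, y_tr, X_te, y_te = split_90_10(X, y, rng)
    print(X_tr.shape, X_te.shape)
```

Averaging the metric over 10 such runs, each with a fresh permutation, mirrors the protocol described above.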

Performance Comparison with Varying τ. We first investigate the variation of MSE with the DP additive noise standard deviation τ. We plot MSE against τ in Figure 1a,d,g. Recall from Definition 3 that, in the Gaussian mechanism, the noise is drawn from a Gaussian distribution with standard deviation τ = (Δ/ϵ)√(2 log(1.25/δ)). We keep δ fixed and vary ϵ to vary τ. Since the noise standard deviation is inversely proportional to ϵ, increasing ϵ means decreasing τ, i.e., a smaller noise variance. We observe from the plots that a smaller τ leads to a smaller MSE for all DP algorithms, indicating better utility at the expense of higher privacy loss. It is evident from these MSE vs. τ plots that our proposed gauss-fm has a much smaller MSE than all the other methods for the same τ values on all datasets. The obj-pert and fm algorithms offer pure DP by trading off utility, whereas the gauss-fm and rlx-fm algorithms offer approximate DP. Although rlx-fm improves upon fm, the excess noise due to its linear dependence on the data dimension D leads to a higher MSE than gauss-fm. Our proposed gauss-fm outperforms all of these methods by reducing the additive noise through the novel sensitivity analysis shown in Section 4. We recall that the overall privacy loss for noisy-gd is calculated using the RDP approach, with target δ, since noise is injected into the gradients in every iteration during optimization. On the other hand, gauss-fm, rlx-fm, and fm add noise to the polynomial coefficients of the cost function before optimization, and obj-pert injects noise into the regularized cost function [8]. We plot the total privacy loss for all of the algorithms against τ. We observe from the y-axis on the right that the total privacy loss of the multi-round noisy-gd is considerably higher than that of the single-shot algorithms.

Performance Comparison with Varying N. Next, we investigate the variation of MSE with the number of training samples N. For this task, we shuffle and divide the total set of N samples into smaller partitions and perform the same pre-processing steps, while keeping the test partition untouched. We keep the privacy parameters ϵ and δ fixed. We plot MSE against N in Figure 1b,e,h. We observe that performance generally improves as N increases, which indicates that it is easier to ensure the same level of privacy when the training dataset cardinality is higher. We also observe from the MSE vs. N plots that our proposed gauss-fm offers an MSE very close to that of non-priv even for moderate sample sizes, outperforming fm, rlx-fm, noisy-gd, and obj-pert. Again, we compute the overall ϵ spent using RDP for noisy-gd and show that the multi-round algorithm suffers from a larger privacy loss. Recall from (7) in Section 3 that the overall ϵ depends on the sensitivity Δ, the noise standard deviation τ, and the number of iterations T. In the computation of the ratio Δ/τ, the number of training samples N cancels out. Thus, the overall ϵ depends only on T for noisy-gd. We keep T fixed at 1000 iterations for noisy-gd and observe that the overall privacy risk exceeds 20. Note that we set the target δ in (7) equal to the fixed value of δ used in this experiment.

Performance Comparison with Varying δ. Recall that the privacy parameter δ can be interpreted as the probability that an algorithm fails to provide privacy risk ϵ. The obj-pert and fm algorithms offer pure ϵ-DP, for which the additional privacy parameter δ is zero. Hence, we compare our proposed gauss-fm method with the rlx-fm and noisy-gd methods, which also guarantee (ϵ, δ)-DP. In the Gaussian mechanism, δ appears in the denominator of the logarithmic term within the square root in the expression of τ. Therefore, the noise variance τ² does not change significantly as δ varies. We keep the privacy parameter ϵ fixed, and the MSE vs. δ plots in Figure 1c,f,i show that the performance of our algorithm does not degrade much for smaller δ. For the IWPC dataset in Figure 1c, even for small values of δ (i.e., a small probability of the algorithm failing to provide ϵ-differential privacy), the MSE of gauss-fm is almost the same as that of the non-priv case. For the other datasets, our proposed method also gives better performance and a smaller overall ϵ, and thus a better privacy–utility trade-off, than rlx-fm and noisy-gd.
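The weak dependence of the noise level on δ is easy to quantify. Using the classical Gaussian-mechanism calibration τ = (Δ/ϵ)√(2 log(1.25/δ)) (which Dwork and Roth state for ϵ ∈ (0, 1)), the sketch below, with a hypothetical helper name, shows that shrinking δ by three orders of magnitude, from 10⁻² to 10⁻⁵, increases τ by only about 56%.

```python
import math


def gaussian_tau(sensitivity, epsilon, delta):
    """Noise standard deviation of the classical Gaussian mechanism."""
    return (sensitivity / epsilon) * math.sqrt(2.0 * math.log(1.25 / delta))


if __name__ == "__main__":
    base = gaussian_tau(1.0, 1.0, 1e-2)
    for delta in (1e-2, 1e-3, 1e-4, 1e-5):
        tau = gaussian_tau(1.0, 1.0, delta)
        print(f"delta={delta:.0e}  tau={tau:.3f}  ratio to delta=1e-2: {tau / base:.3f}")
```

By contrast, τ scales linearly with Δ and with 1/ϵ, which is why the sensitivity analysis matters far more than the choice of δ.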

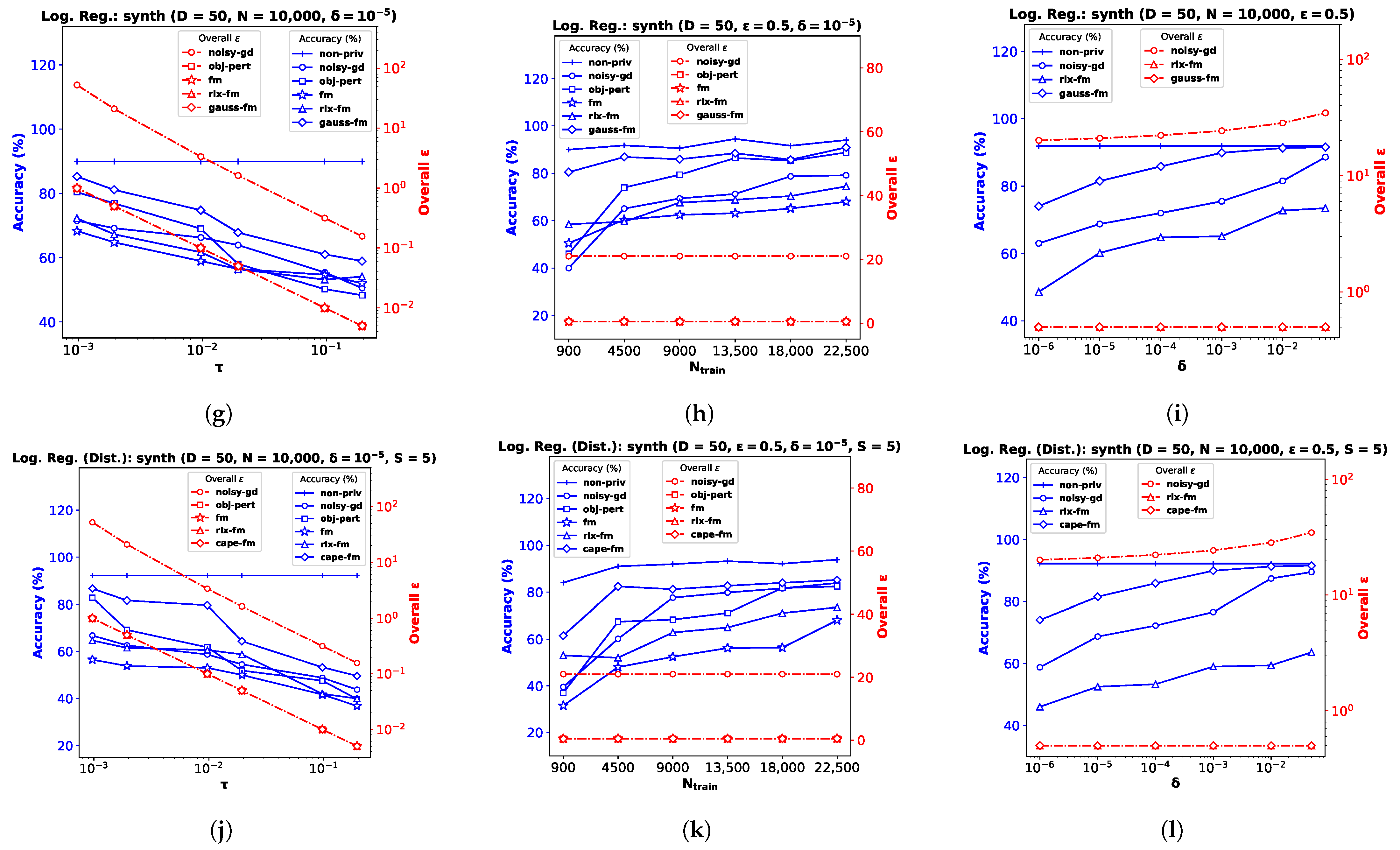

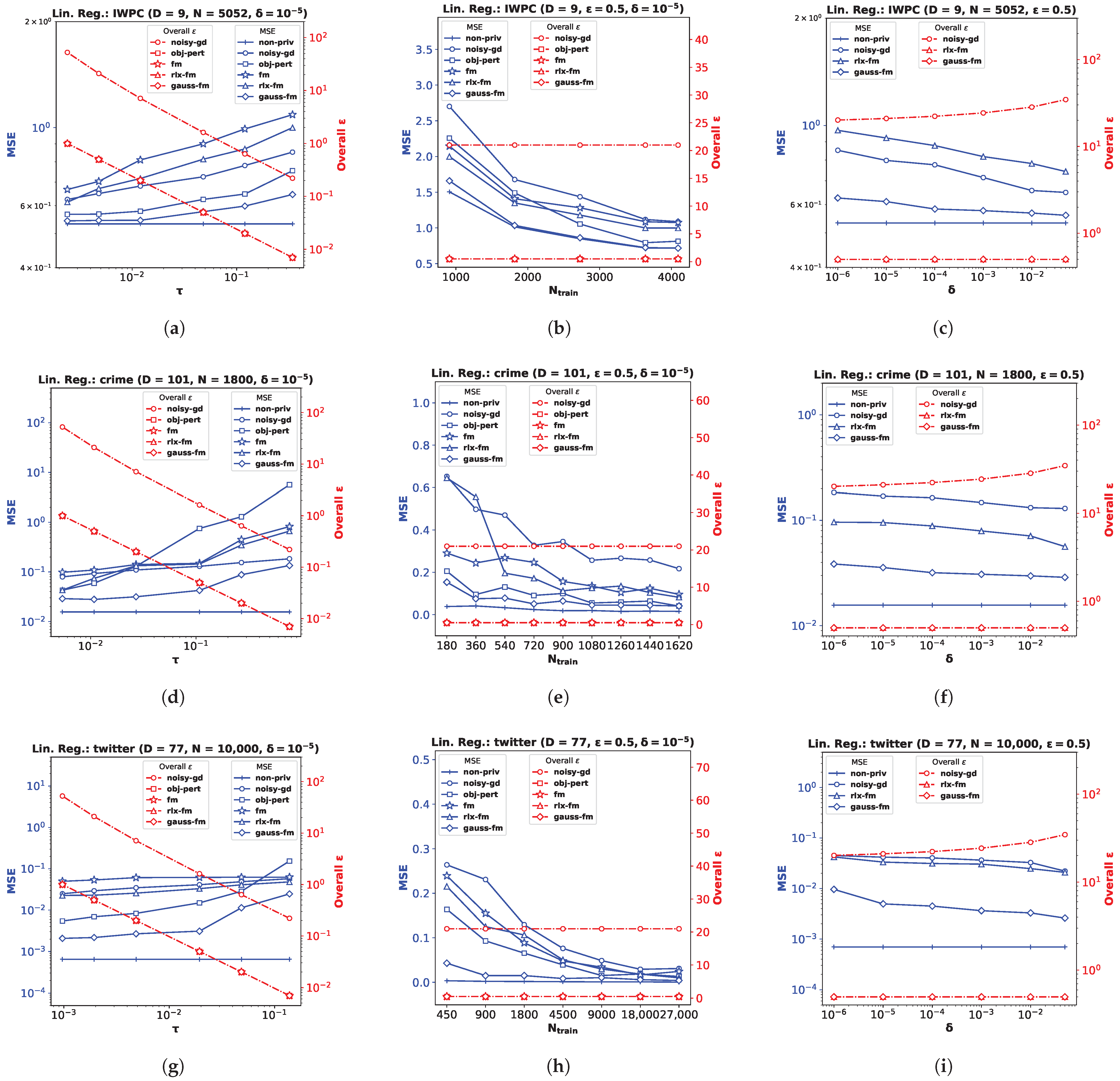

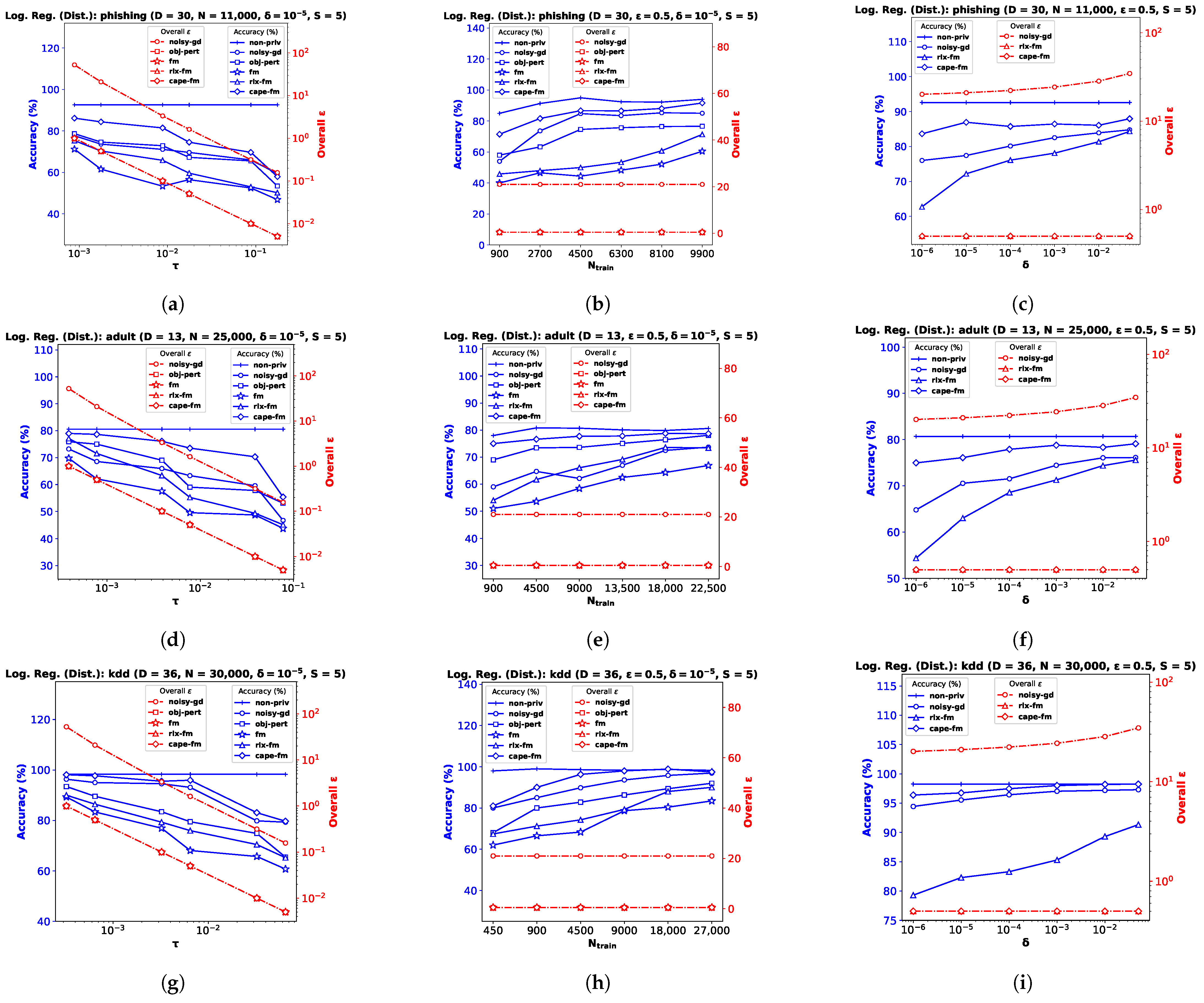

6.2. Logistic Regression

For the logistic regression problem, we again perform experiments on three real datasets (and a synthetic dataset, as shown in Appendix B): the Phishing Websites dataset (phishing) [47] (Figure 2a–c), the Census Income dataset (adult) [47] (Figure 2d–f), and the KDD Cup ’99 dataset (kdd) [47] (Figure 2g–i). As before, we pre-process the data so that the feature vectors and labels satisfy the boundedness assumptions for all samples. Note that for obj-pert, the cost function is regularized and the labels are assumed to be in {−1, +1} [8]. We divide each dataset into train and test partitions with a ratio of 90:10. We use percent accuracy on the test dataset as the performance index for logistic regression and report the average performance over 10 independent runs.

Performance Comparison with Varying τ. We plot accuracy against the DP additive noise standard deviation τ in Figure 2a,d,g. We observe that accuracy degrades as τ increases, indicating a stronger privacy guarantee at the cost of performance. When the noise is too high, privacy-preserving logistic regression may not learn a meaningful w at all and may produce random results. Depending on the class distribution, this may not be obvious, and the accuracy score may be misleading. We observe this for the kdd dataset in Figure 2g, where the classes are highly imbalanced, with ∼80% positive labels. Although the existing fm performs poorly on this dataset, our proposed gauss-fm provides significantly higher accuracy on all datasets, outperforming fm as well as rlx-fm, obj-pert, and noisy-gd. As before, the total privacy loss, i.e., the overall ϵ spent, is shown on the right y-axis.
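One simple way to read such accuracy numbers is against the majority-class baseline: on a dataset with about 80% positive labels, a classifier that ignores its input and always predicts the positive class already scores about 80%. The hypothetical helper below computes that baseline from the training labels.

```python
import numpy as np


def majority_baseline_accuracy(y_train, y_test):
    """Percent accuracy of always predicting the most frequent training label."""
    labels, counts = np.unique(np.asarray(y_train), return_counts=True)
    majority = labels[np.argmax(counts)]
    return 100.0 * float(np.mean(np.asarray(y_test) == majority))


if __name__ == "__main__":
    y_train = np.array([1] * 80 + [0] * 20)
    y_test = np.array([1] * 8 + [0] * 2)
    print(majority_baseline_accuracy(y_train, y_test))
```

A private model whose accuracy sits at this baseline has, in effect, learned nothing from the features.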

Performance Comparison with Varying N. We perform the same steps described in Section 6.1 and observe the variation in performance with the number of training samples N, while keeping the privacy parameters fixed (Figure 2b,e,h). Accuracy generally improves with increasing N. We observe that the same DP algorithm does not perform equally well on different datasets. For example, obj-pert performs better than noisy-gd on the adult dataset (Figure 2e), whereas noisy-gd performs better than obj-pert on the phishing dataset (Figure 2b). In general, fm and rlx-fm suffer from too much noise due to the quadratic and linear dependence, respectively, of their sensitivities on D. However, our proposed gauss-fm overcomes this issue and consistently achieves accuracy close to that of the non-priv case even for moderate sample sizes. We also show the overall privacy guarantee, as before.

Performance Comparison with Varying . Similar to the linear regression experiments shown in

Section 6.1, we keep

and

fixed for this task and vary the other privacy parameter, δ. Figure 2c,f,i show that percent accuracy improves with increasing δ. For sufficiently large δ (i.e., a 1–5% probability that the algorithm fails to provide privacy risk ε), the accuracy of gauss-fm can reach that of the non-priv algorithm on some datasets (e.g., Figure 2i). Although the accuracy of noisy-gd also improves, this comes at the cost of additional privacy risk, as shown by the overall ε vs. δ plots along the right y-axes. Due to its higher noise variance, rlx-fm achieves much lower accuracy than both gauss-fm and noisy-gd.
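Why a larger δ can help is visible in the classical Gaussian-mechanism calibration σ = Δ·√(2 ln(1.25/δ))/ε (Dwork and Roth, Theorem A.1, stated for 0 < ε < 1). The sketch below assumes this classical bound, which may be looser than the analysis behind gauss-fm, and uses hypothetical values of Δ and ε. For fixed Δ and ε, the required noise standard deviation shrinks as δ grows.

```python
import math

def gaussian_sigma(sensitivity, eps, delta):
    # Classical Gaussian-mechanism calibration (Dwork and Roth, Theorem A.1):
    # sigma = Delta * sqrt(2 ln(1.25 / delta)) / eps, stated for 0 < eps < 1.
    if not 0 < eps < 1:
        raise ValueError("the classical bound is stated for 0 < eps < 1")
    if not 0 < delta < 1:
        raise ValueError("delta must lie in (0, 1)")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / eps

sensitivity, eps = 0.01, 0.5  # hypothetical values, held fixed while delta varies
for delta in (1e-5, 1e-3, 0.01, 0.05):
    sigma = gaussian_sigma(sensitivity, eps, delta)
    print(f"delta={delta:g}  sigma={sigma:.4f}")
```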

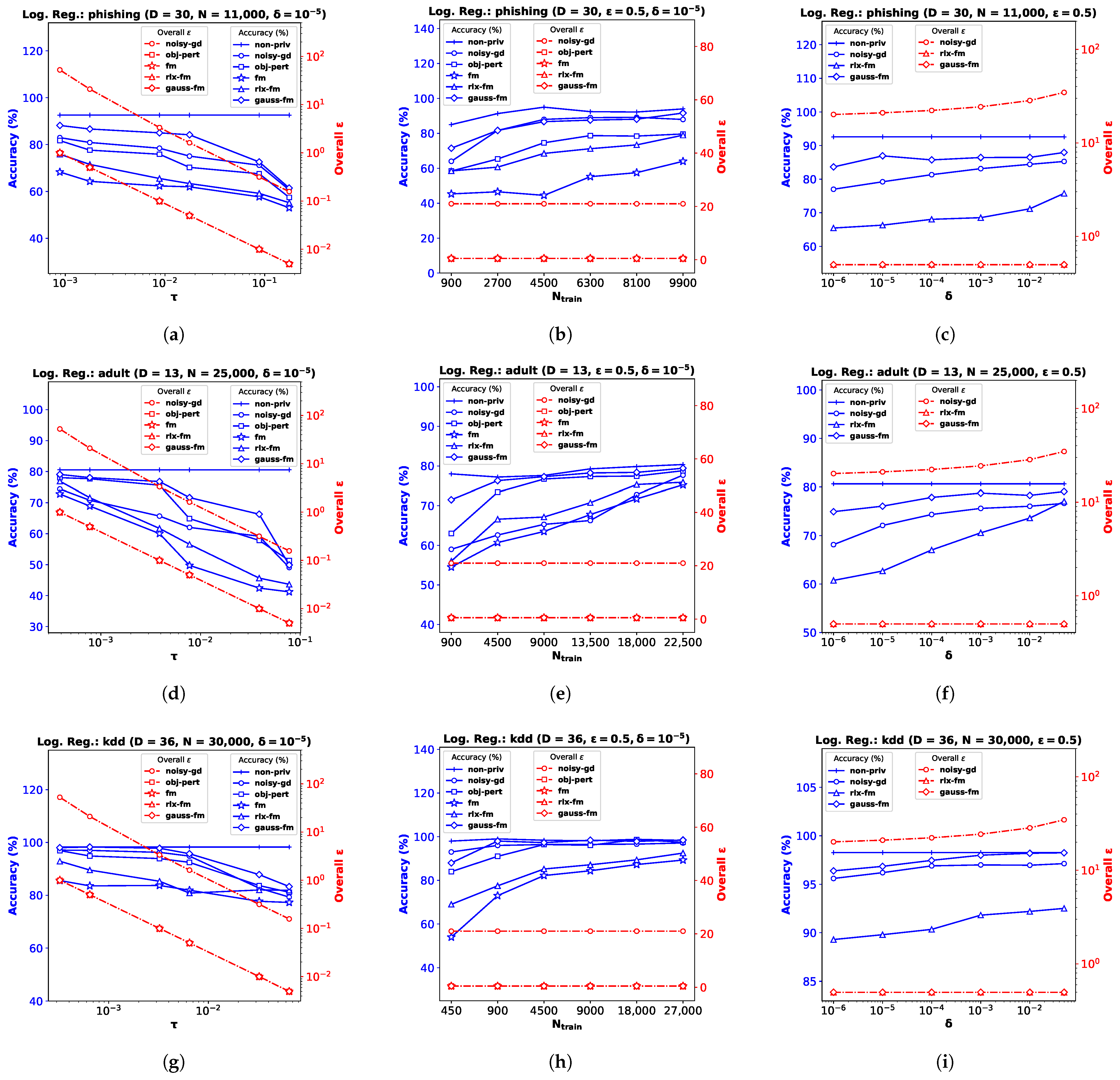

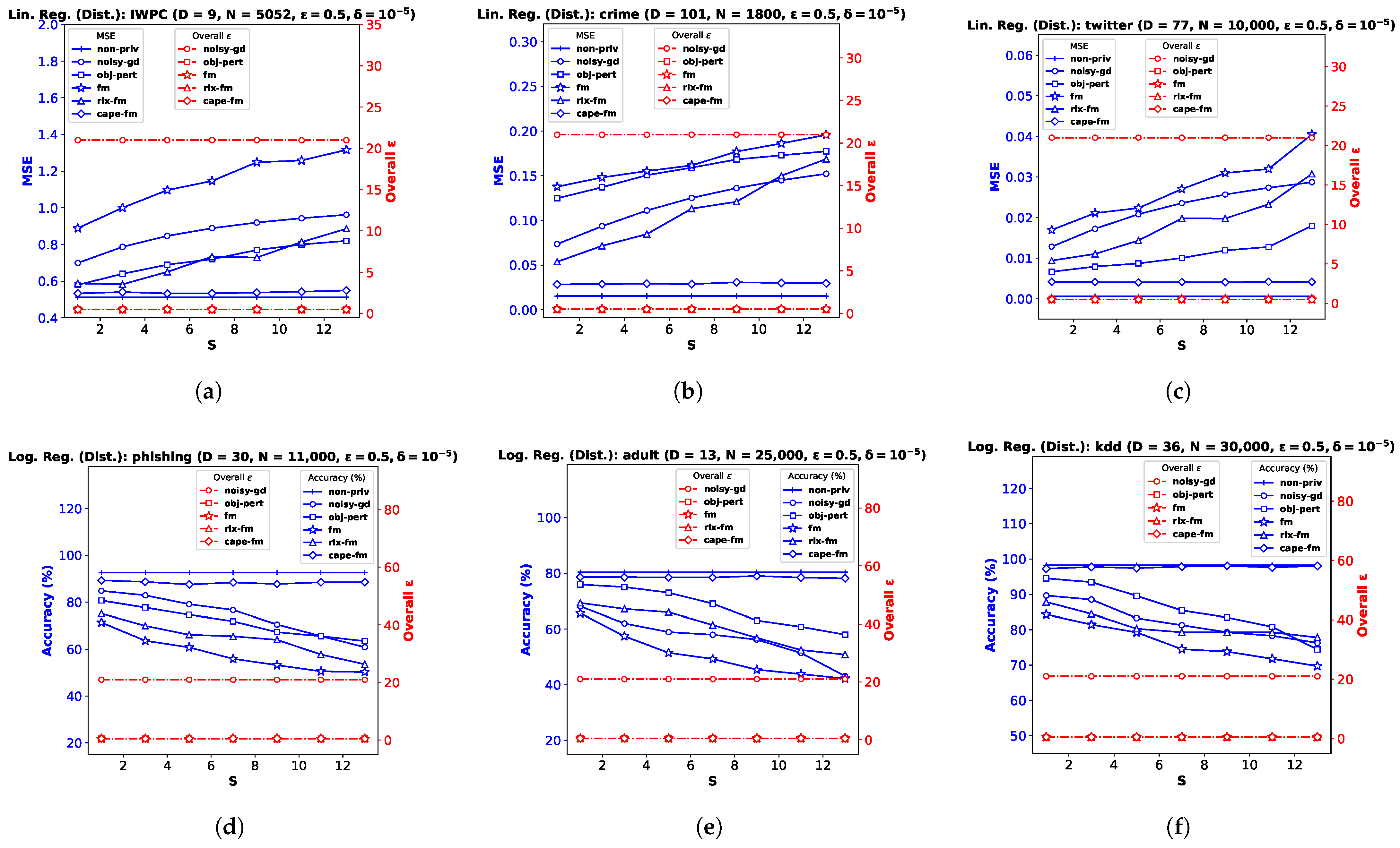

6.3. Decentralized Functional Mechanism (cape-fm)

In this section, we empirically show the effectiveness of cape-fm, our proposed decentralized Gaussian FM, which utilizes the CAPE [6] protocol. We implement differentially private linear and logistic regression for the decentralized-data setting using the same datasets described in Section 6.1 and Section 6.2, respectively. Note that the IWPC [23] data were collected from 21 sites across 9 countries. Informed consent to use de-identified patient data was obtained prior to the study, and the Pharmacogenetics Knowledge Base has since made the dataset publicly available for research purposes. As mentioned before, the type of data in the IWPC dataset is similar to that of many other medical datasets containing private information [20].

We implement our proposed cape-fm according to Algorithm 3, along with fm, rlx-fm, obj-pert, and noisy-gd according to the conventional decentralized DP approach. We compare the performance of these methods in Figure 3 and Figure 4. As in the pooled-data scenario, we also compare the performance of these algorithms with non-private linear and logistic regression (non-priv). For these experiments, we assume

and

. Recall that the CAPE scheme achieves the same noise variance as the pooled-data scenario in the symmetric setting (see Lemma 1 [6] in Section 2.1). Since our proposed cape-fm algorithm follows the CAPE scheme, it attains the same advantages. When varying the privacy parameters and N, we keep the number of sites S fixed. Additionally, Figure 5 shows how performance varies with the number of sites. We pre-process each dataset as before and use MSE and percent accuracy on the test dataset as the performance indices for the decentralized linear and logistic regression problems, respectively.

Performance Comparison by Varying Noise Standard Deviation. For this experiment, we keep the total number of samples N, the privacy parameter δ, and the number of sites S fixed. We observe from plots (a), (d), and (g) in both Figure 3 and Figure 4 that performance degrades as the noise standard deviation increases. The proposed cape-fm outperforms the conventional decentralized noisy-gd, obj-pert, fm, and rlx-fm by a larger margin than in the pooled-data case. This is because the correlated noise scheme detailed in Section 5.3 achieves a much smaller noise variance at the aggregator. The utility of cape-fm in the decentralized-data setting thus stays the same as in the centralized case, whereas the utility of the conventional scheme always degrades by a factor of S (see Section 5.1). The overall ε vs. noise standard deviation plots on the right y-axes show that noisy-gd suffers from a much higher privacy loss at each site.
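The factor-of-S gap can be reproduced with a short variance calculation. This is a simplified sketch in our own notation, which may differ from Sections 5.1 and 5.3. It assumes the symmetric setting N_s = N/S, a sensitivity inversely proportional to the local sample size (so each site needs noise standard deviation τ_s = Sτ, where τ is the pooled-data noise standard deviation for the same privacy parameters), and an aggregator that averages the S noisy site releases. In the conventional scheme, each site adds g_s ∼ N(0, τ_s²); in the correlated scheme, each site adds e_s + g_s, where the e_s are zero-sum (∑_s e_s = 0) with variance (1 − 1/S)τ_s², and g_s ∼ N(0, τ_s²/S) is drawn independently.

```latex
\begin{aligned}
\operatorname{Var}[e_s + g_s] &= \Big(1 - \tfrac{1}{S}\Big)\tau_s^2 + \tfrac{\tau_s^2}{S} = \tau_s^2
  && \text{(each site's release is as noisy as before)}, \\
\operatorname{Var}\Big[\tfrac{1}{S}\textstyle\sum_{s=1}^{S} g_s\Big]
  &= \tfrac{1}{S^2}\cdot S\,\tau_s^2 = \tfrac{\tau_s^2}{S} = S\,\tau^2
  && \text{(conventional)}, \\
\operatorname{Var}\Big[\tfrac{1}{S}\textstyle\sum_{s=1}^{S} (e_s + g_s)\Big]
  &= \tfrac{1}{S^2}\cdot S\,\tfrac{\tau_s^2}{S} = \tfrac{\tau_s^2}{S^2} = \tau^2
  && \text{(correlated, since } \textstyle\sum_s e_s = 0\text{)}.
\end{aligned}
```

The conditions under which colluding sites cannot cancel the zero-sum terms, and the resulting (ε, δ) guarantee, are given in [6] and are not reproduced here.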

Performance Comparison by Varying N. We keep

,

, and

S fixed while investigating the variation in performance with respect to N. As the sensitivities we computed in Section 4.1 and Section 4.2 are inversely proportional to the sample size, guaranteeing smaller privacy risk and higher utility is much easier when the sample size is large. As in the pooled-data cases in Section 6.1 and Section 6.2, we again observe from plots (b), (e), and (h) in both Figure 3 and Figure 4 that, for sufficiently large N, the utility of cape-fm can reach that of the non-priv case. Note that the non-priv algorithms are the same as in the pooled-data scenario, because if privacy is not a concern, all sites can send their data to the aggregator for learning.

Performance Comparison by Varying δ. For this task, we keep

,

, and

S fixed. Note that, according to the CAPE scheme, the proposed cape-fm algorithm guarantees (ε, δ)-DP, where ε and δ satisfy the relation

. Recall that

δ is the probability that the algorithm fails to provide privacy risk ε, and that we assumed a fixed number of colluding sites. From plots (c), (f), and (i) in both Figure 3 and Figure 4, we observe that even for moderate values of δ, cape-fm easily outperforms rlx-fm and noisy-gd. Moreover, as seen from the overall ε plots, noisy-gd provides a much weaker privacy guarantee. Thus, our proposed cape-fm algorithm offers superior performance and a better privacy–utility trade-off in the decentralized setting.

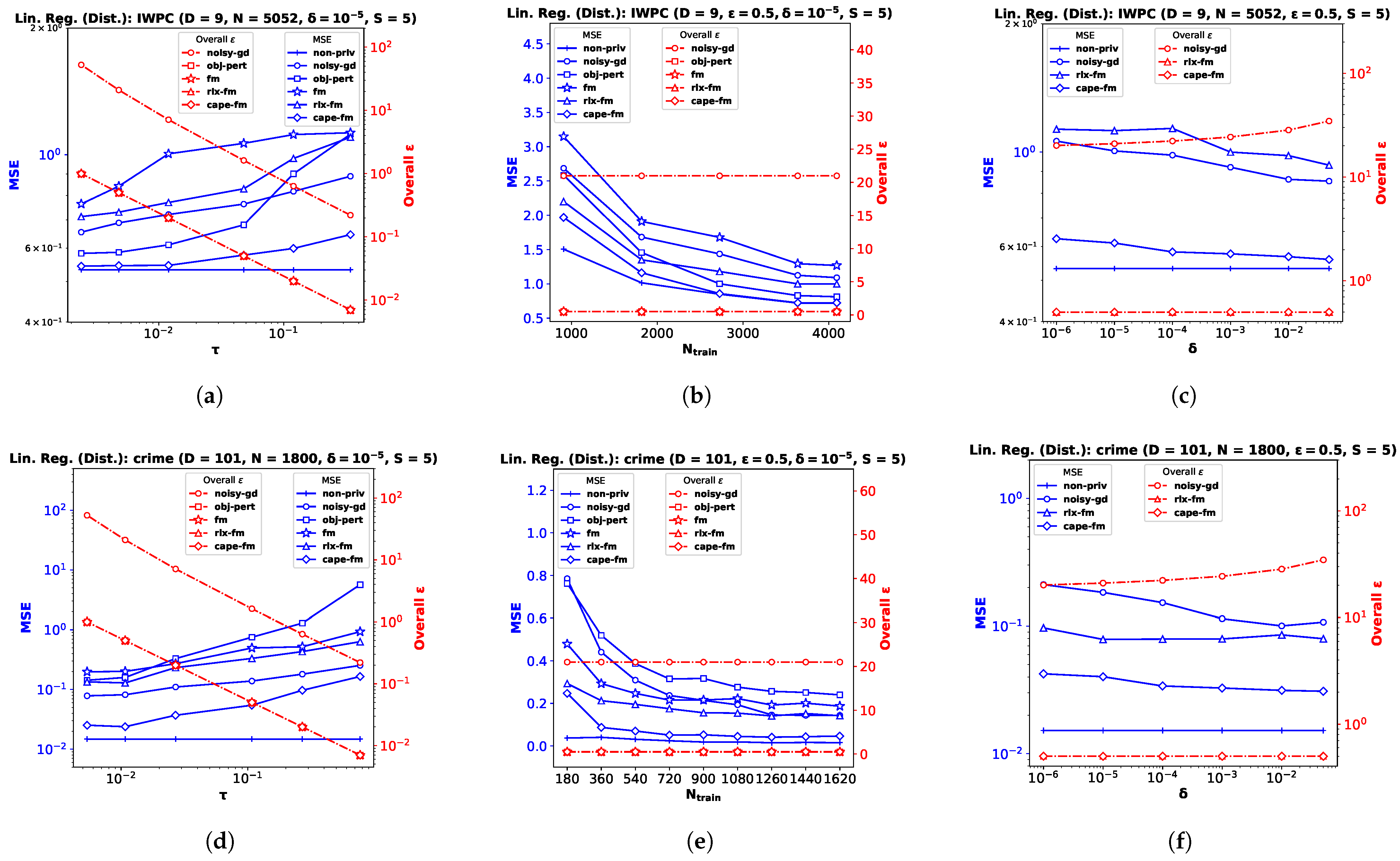

Performance Comparison by Varying S. Finally, we investigate how performance varies with the number of sites S, keeping the privacy and dataset parameters fixed. Since we consider the symmetric setting, this also varies the number of samples at each site, N/S. Figure 5a–c shows the results for decentralized linear regression, and Figure 5d–f shows the results for decentralized logistic regression. We observe that varying S does not affect the utility of cape-fm, as long as the number of colluding sites meets the condition

. However, increasing

S leads to significant performance degradation for the conventional decentralized DP mechanisms, since the additive noise variance increases as the number of samples per site decreases. We show additional experimental results on synthetic datasets in Appendix B.
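The trend in Figure 5 can be mimicked numerically. The following NumPy sketch simulates only the noise (not the regression) under simplified assumptions: the symmetric setting, a per-site noise standard deviation of S·τ, and zero-sum noise produced centrally by subtracting the across-site mean. That last step stands in for whatever secure protocol the actual scheme uses. The function name and parameters are hypothetical. The conventional aggregator noise variance should come out close to S·τ², the correlated one close to τ², and each site's own noise variance close to S²τ².

```python
import numpy as np

def aggregator_noise_variance(S, tau_pooled, trials=100_000, seed=0):
    """Empirical noise variances for the conventional and correlated schemes.

    Hypothetical helper. Assumes the symmetric setting, where each site's
    sensitivity (and hence its noise std) is S times the pooled-data one.
    """
    rng = np.random.default_rng(seed)
    tau_s = S * tau_pooled  # per-site noise std for the same privacy parameters

    # Conventional scheme: independent N(0, tau_s^2) noise at every site.
    g_conv = rng.normal(0.0, tau_s, size=(trials, S))
    conv = g_conv.mean(axis=1).var()

    # Correlated scheme: zero-sum e_s plus small independent g_s.
    e_raw = rng.normal(0.0, tau_s, size=(trials, S))
    e = e_raw - e_raw.mean(axis=1, keepdims=True)               # sum_s e_s = 0, Var(e_s) = (1 - 1/S) tau_s^2
    g = rng.normal(0.0, tau_s / np.sqrt(S), size=(trials, S))   # Var(g_s) = tau_s^2 / S
    per_site = (e + g)[:, 0].var()                              # noise in one site's release
    correlated = (e + g).mean(axis=1).var()                     # e_s cancels in the average
    return conv, correlated, per_site

for S in (2, 5, 10, 20):
    conv, corr, local = aggregator_noise_variance(S, tau_pooled=1.0)
    print(f"S={S:>2}  conventional={conv:6.2f}  correlated={corr:5.2f}  per-site={local:7.1f}")
```

Centering independent draws is just one convenient way to obtain zero-sum noise with per-site variance (1 − 1/S)τ_s²; it is used here only for brevity.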