Multi-Modality Image Fusion and Object Detection Based on Semantic Information

Abstract

1. Research Background and Introduction

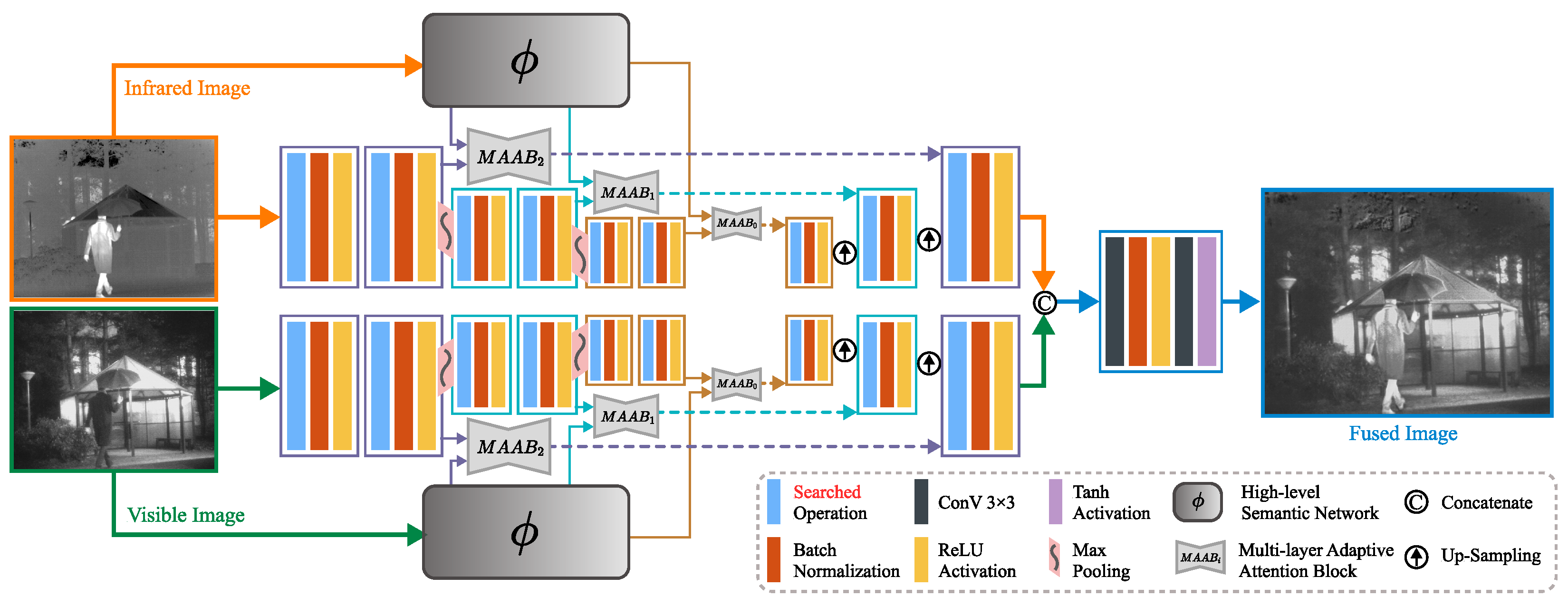

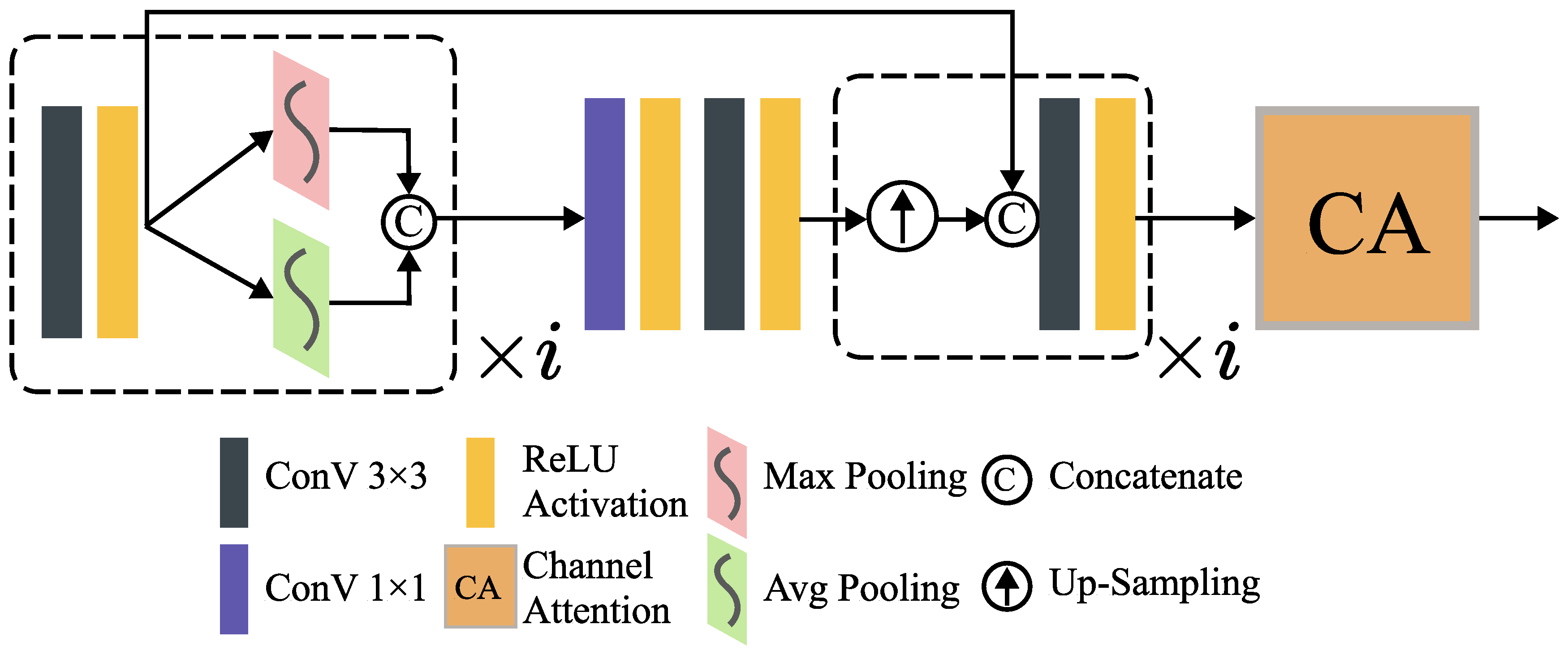

- To reduce feature redundancy and preserve complementary information, we designed a multi-level adaptive attention block (MAAB) that enables the network to retain rich features at different scales and to integrate high-level semantic information more efficiently and effectively.

- To overcome the limitations of existing hand-crafted network structures, we introduce neural architecture search (NAS) into the construction of the overall network, so that a structure suited to the current fusion task is searched adaptively.
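The attention idea in the first contribution can be sketched in NumPy. This is a hypothetical illustration, not the MAAB defined in the paper: it assumes a squeeze-and-excitation style channel attention (as in the RCAN work in the reference list) applied to the feature map at several pooling scales, with the per-scale outputs combined by softmax-normalized level weights and added back through a residual connection. The scales, the reduction ratio, and all function names are assumptions made for illustration.

```python
import numpy as np

def channel_attention(x, w1, w2):
    """Squeeze-and-excitation style gate: pool, reduce, expand, sigmoid, rescale."""
    z = x.mean(axis=(1, 2))                  # (C,) global average pooling
    h = np.maximum(w1 @ z, 0.0)              # (C/r,) channel reduction + ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ h)))      # (C,) per-channel gate in (0, 1)
    return x * s[:, None, None]

def avg_pool(x, k):
    """Non-overlapping k x k average pooling; H and W must be divisible by k."""
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).mean(axis=(2, 4))

def upsample(x, k):
    """Nearest-neighbour upsampling by an integer factor k."""
    return x.repeat(k, axis=1).repeat(k, axis=2)

def multi_level_attention(x, scales, params, level_logits):
    """Hypothetical multi-level block: attend at several scales, mix with softmax weights."""
    weights = np.exp(level_logits - level_logits.max())
    weights /= weights.sum()                 # adaptive (learnable) weight per level
    out = np.zeros_like(x)
    for k, (w1, w2), a in zip(scales, params, weights):
        y = channel_attention(avg_pool(x, k), w1, w2)
        out += a * upsample(y, k)            # bring each level back to full resolution
    return x + out                           # residual path keeps the original features

rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 16, 16))      # (channels, height, width)
params = [(rng.standard_normal((2, 8)), rng.standard_normal((8, 2))) for _ in range(3)]
out = multi_level_attention(feat, [1, 2, 4], params, np.zeros(3))
print(out.shape)  # (8, 16, 16)
```

Pooling before attention lets coarse levels summarize larger spatial context, while the residual connection means the block can only add information on top of its input rather than replace it.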

2. Related Works

2.1. Fusion Methods Based on a Traditional Approach

2.1.1. Fusion Methods Based on Multi-Scale Transform

2.1.2. Fusion Methods Based on Sparse Representation

2.1.3. Fusion Methods Based on Subspace

2.1.4. Fusion Methods Based on Saliency

2.1.5. Fusion Methods Based on Other Traditional Theories

2.2. Fusion Methods Based on Deep Learning

2.2.1. Fusion Methods Based on Pretrained Deep Neural Network

2.2.2. Fusion Methods Based on Autoencoder

2.2.3. Fusion Methods Based on the End-to-End Model

2.2.4. Fusion Methods Based on the Generative Adversarial Network

2.3. Neural Architecture Search

3. The Proposed Method

3.1. Method Motivation

3.2. Network Architecture

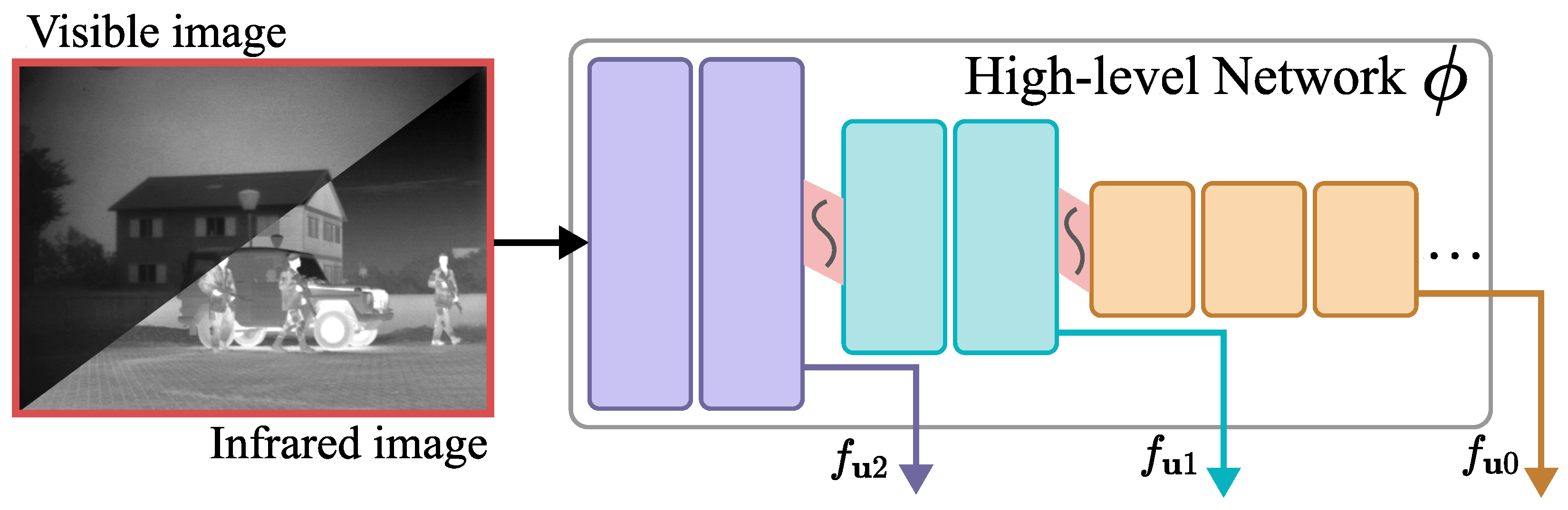

3.2.1. High-Level Semantic Network

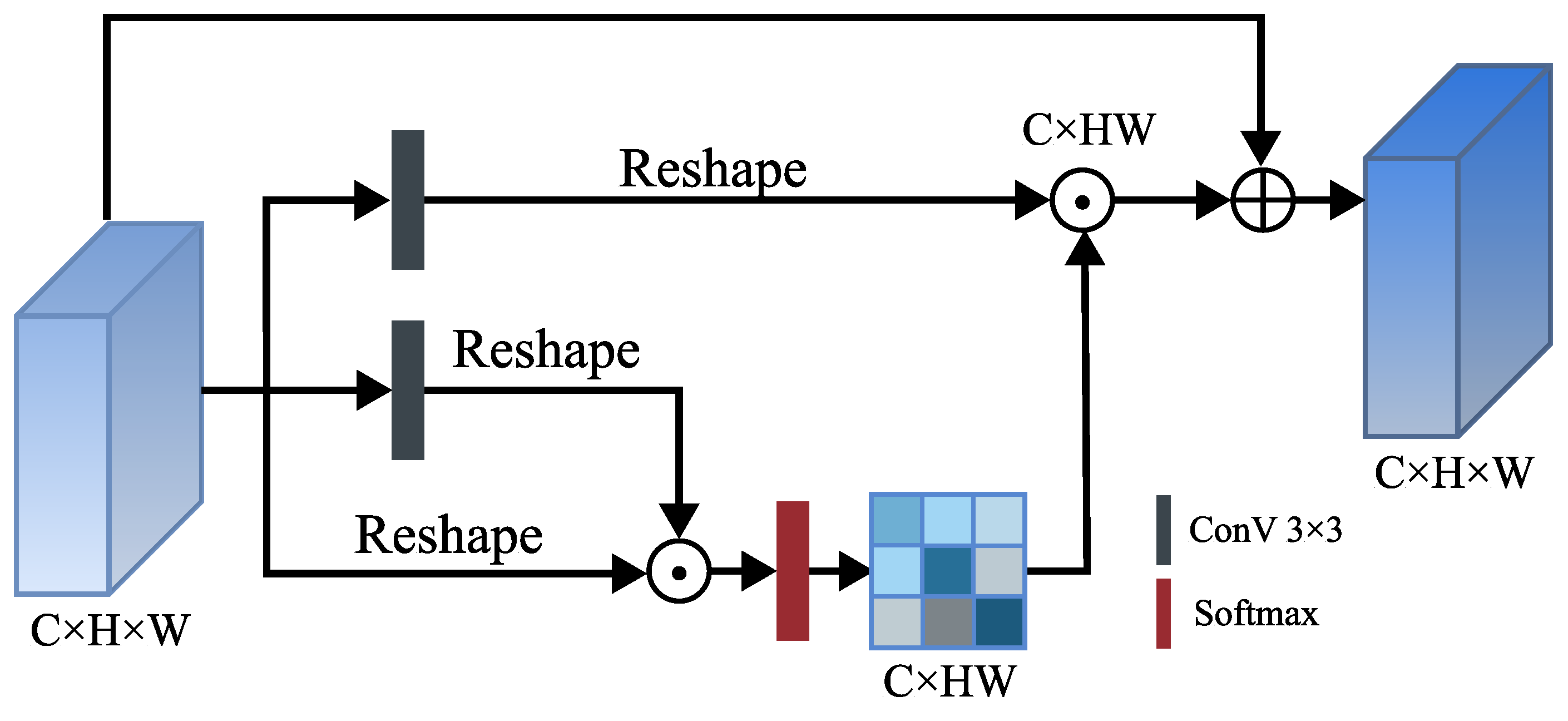

3.2.2. Multi-Layer Adaptive Attention Block

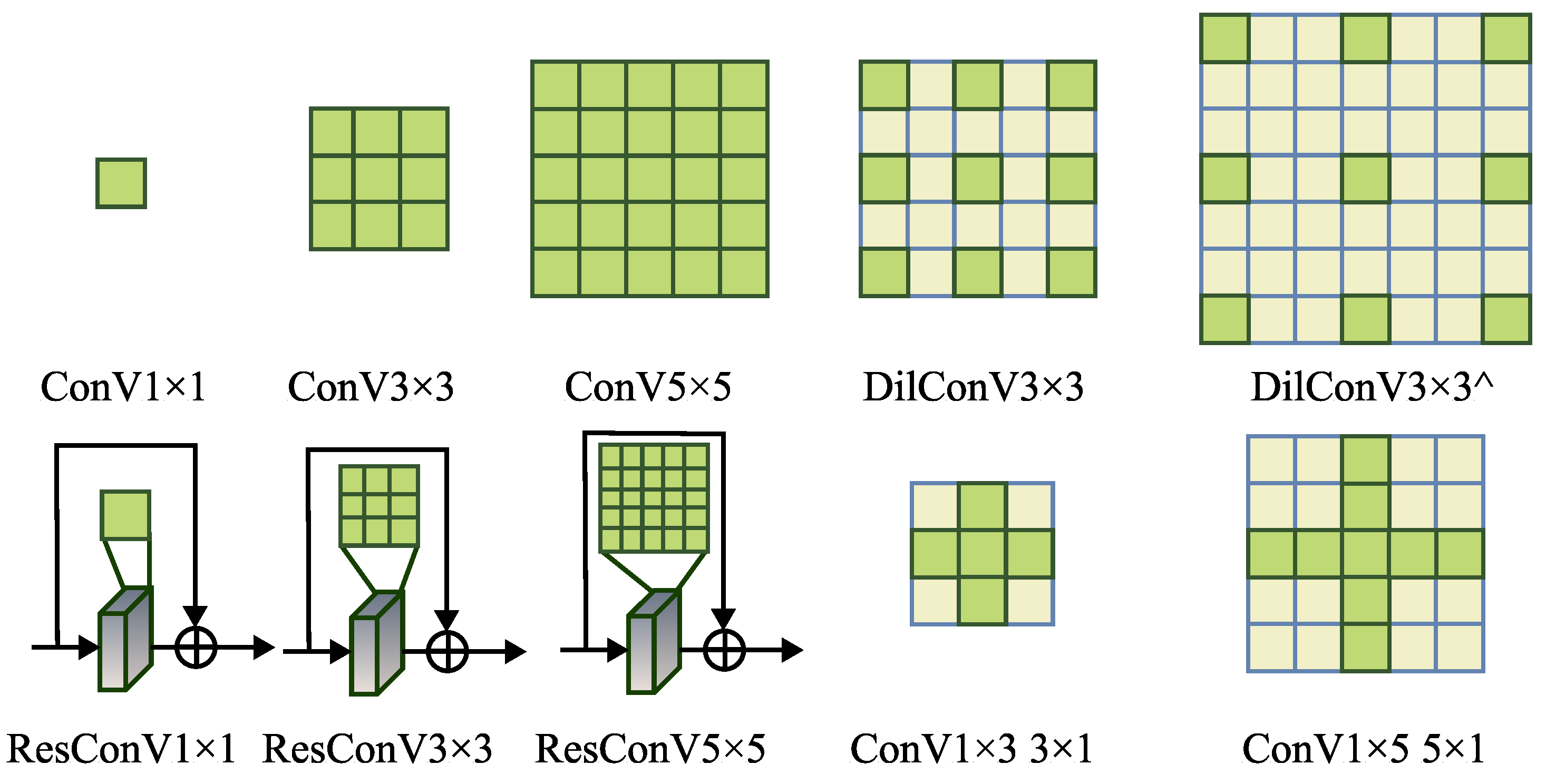

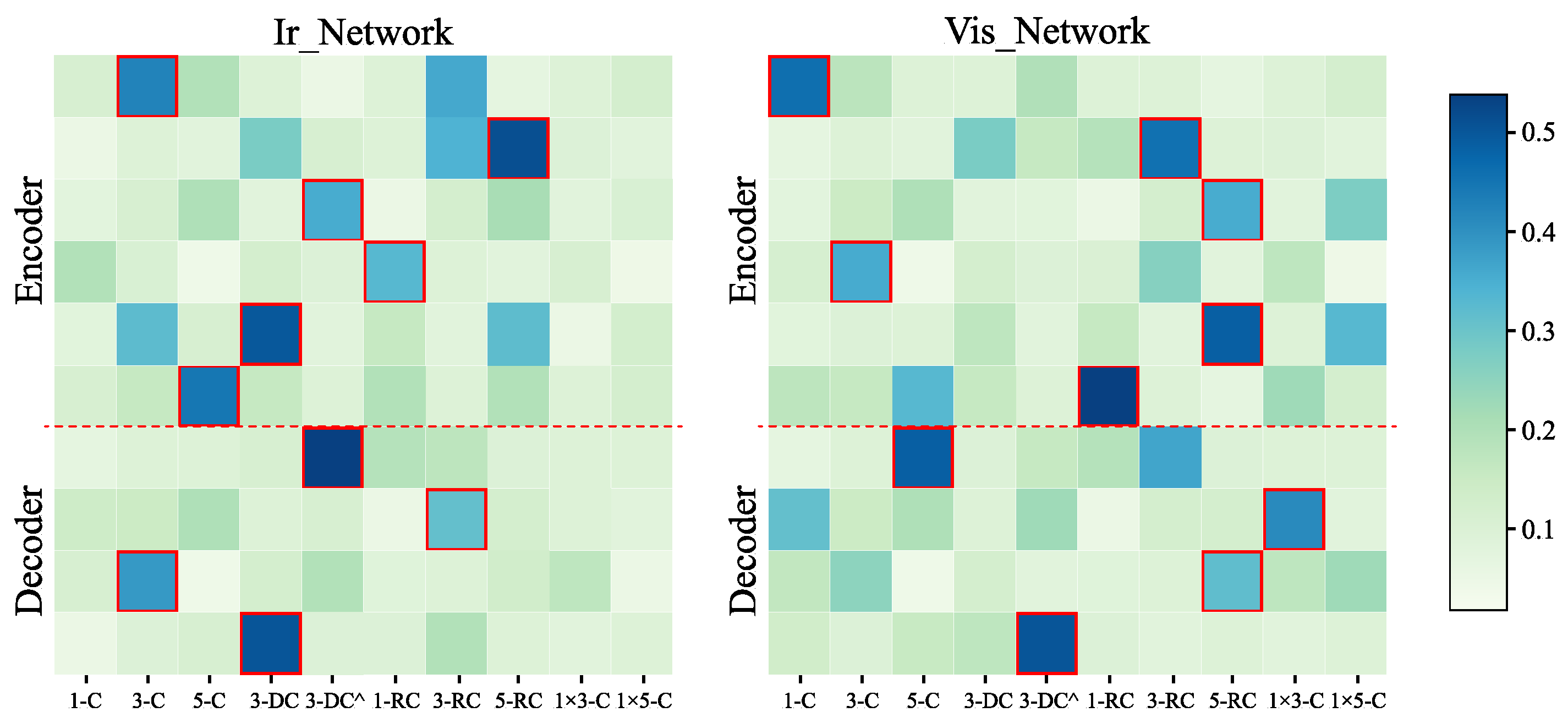

3.2.3. Searched Residual Network

- Conv 1 × 1

- Conv 3 × 3

- Conv 5 × 5

- DilConv 3 × 3

- DilConv 5 × 5

- ResConv 1 × 1

- ResConv 3 × 3

- ResConv 3 × 3

- Conv 1 × 3 and 3 × 1

- Conv 1 × 5 and 5 × 1
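One common way to search over a candidate set like this is the continuous relaxation of DARTS (Liu, Simonyan, and Yang, in the reference list): all candidates run in parallel, their outputs are mixed with softmax-normalized architecture weights α that are learned alongside the network weights, and after the search the candidate with the largest weight is kept. The NumPy sketch below is an assumption-laden illustration rather than the paper's search code: it uses single-channel feature maps, untrained random kernels, a dilation rate of 2, reads the factorized entries as a 1 × k convolution followed by a k × 1 convolution, and includes each distinct operation from the list once. All names are hypothetical.

```python
import numpy as np

def conv2d(x, kernel, dilation=1):
    """Single-channel 'same' cross-correlation with zero padding (odd kernel sizes)."""
    kh, kw = kernel.shape
    ph, pw = dilation * (kh // 2), dilation * (kw // 2)
    xp = np.pad(x, ((ph, ph), (pw, pw)))
    h, w = x.shape
    out = np.zeros((h, w))
    for i in range(kh):
        for j in range(kw):
            di, dj = i * dilation, j * dilation
            out += kernel[i, j] * xp[di:di + h, dj:dj + w]
    return out

def make_candidate_ops(rng):
    """Hypothetical single-channel stand-ins for the candidate operations listed above."""
    def k(h, w):
        return 0.1 * rng.standard_normal((h, w))  # small random (untrained) kernel
    c1, c3, c5 = k(1, 1), k(3, 3), k(5, 5)
    d3, d5 = k(3, 3), k(5, 5)
    r1, r3 = k(1, 1), k(3, 3)
    a13, a31, a15, a51 = k(1, 3), k(3, 1), k(1, 5), k(5, 1)
    return {
        "conv_1x1": lambda x: conv2d(x, c1),
        "conv_3x3": lambda x: conv2d(x, c3),
        "conv_5x5": lambda x: conv2d(x, c5),
        "dil_conv_3x3": lambda x: conv2d(x, d3, dilation=2),
        "dil_conv_5x5": lambda x: conv2d(x, d5, dilation=2),
        "res_conv_1x1": lambda x: x + conv2d(x, r1),
        "res_conv_3x3": lambda x: x + conv2d(x, r3),
        "conv_1x3_3x1": lambda x: conv2d(conv2d(x, a13), a31),
        "conv_1x5_5x1": lambda x: conv2d(conv2d(x, a15), a51),
    }

def mixed_op(x, ops, alpha):
    """DARTS-style relaxation: softmax(alpha)-weighted sum of every candidate's output."""
    w = np.exp(alpha - alpha.max())
    w /= w.sum()
    return sum(wi * op(x) for wi, op in zip(w, ops.values()))

def derive(ops, alpha):
    """After the search, keep the candidate with the largest architecture weight."""
    return list(ops)[int(np.argmax(alpha))]

rng = np.random.default_rng(0)
ops = make_candidate_ops(rng)
x = rng.standard_normal((16, 16))
alpha = np.zeros(len(ops))   # architecture weights, optimized during the search
y = mixed_op(x, ops, alpha)  # same shape as x
alpha[5] = 3.0               # suppose the search has come to favour one candidate
print(derive(ops, alpha))    # res_conv_1x1
```

Because the mixture is differentiable in α, the architecture can be optimized by gradient descent; the discrete network is only fixed at the end by the argmax in `derive`.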

3.3. Loss Function

3.3.1. Pixel Loss Function

3.3.2. Structure Loss Function

3.3.3. Gradient Loss Function

3.3.4. Target-Aware Loss Function

3.3.5. Total Fusion Loss Function

4. Experiments

4.1. Results of Infrared and Visible Image Fusion

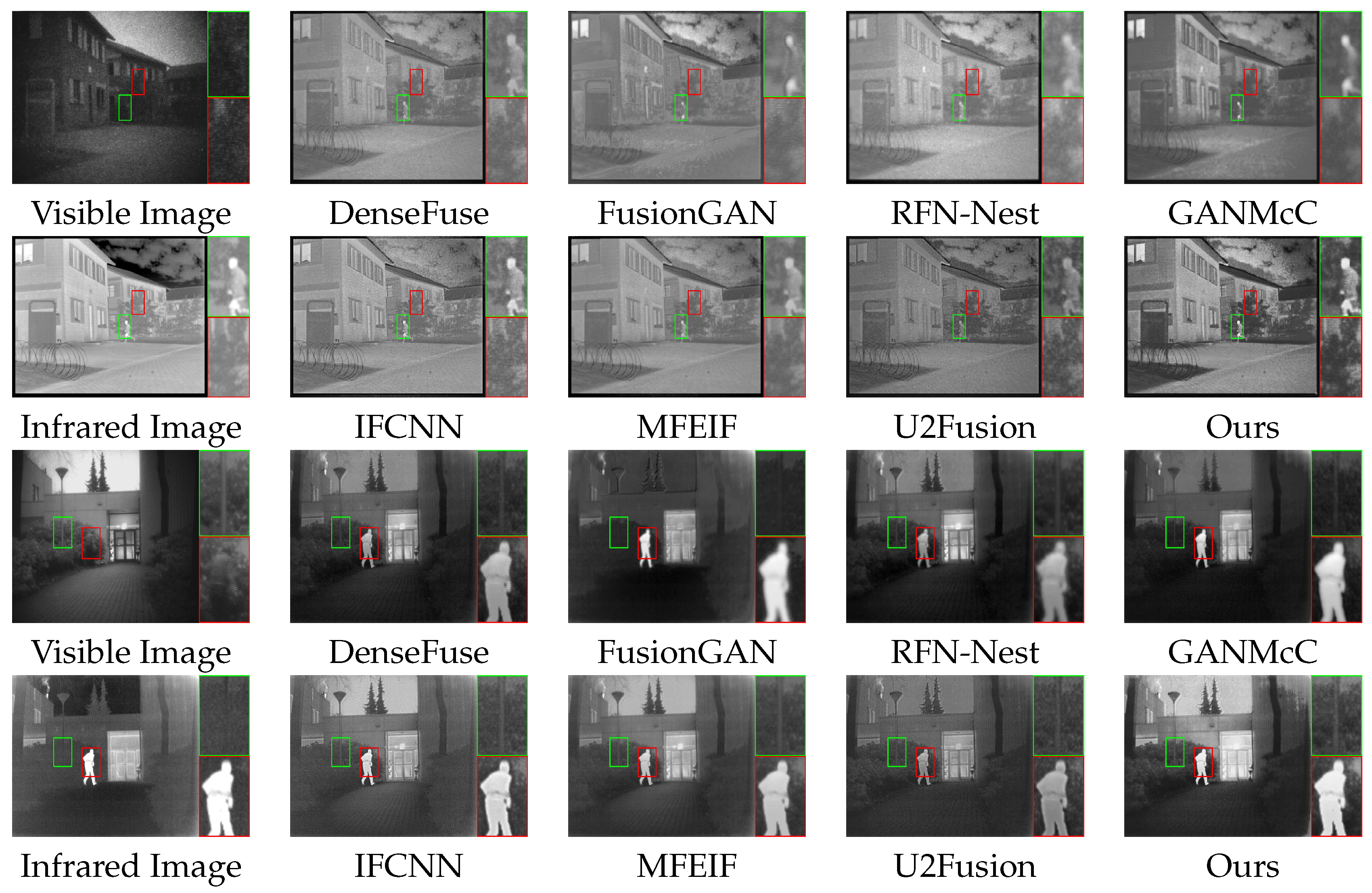

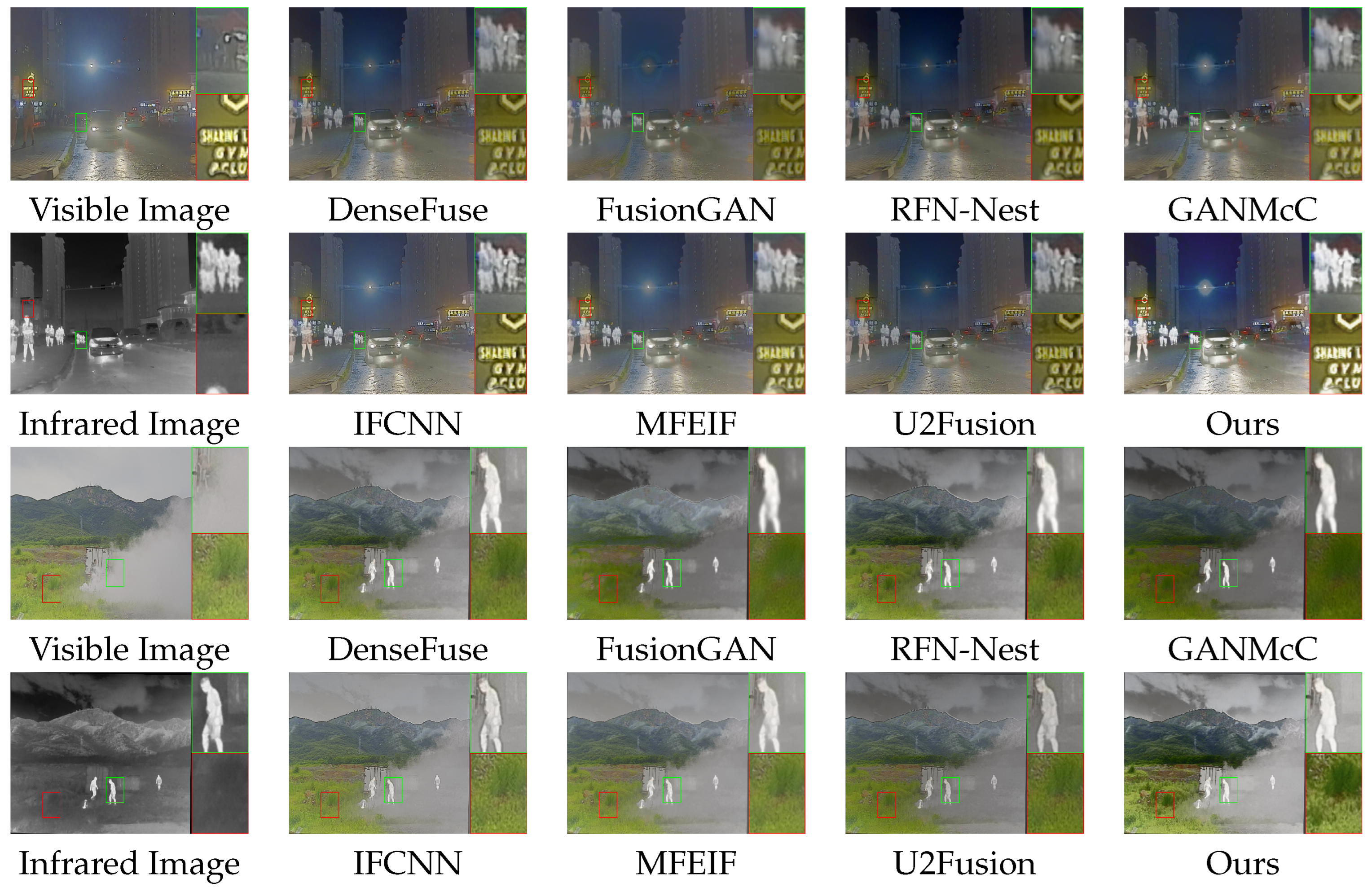

4.1.1. Qualitative Comparisons

(1) Qualitative Comparisons on the TNO Dataset

(2) Qualitative Comparisons on the RoadScene Dataset

(3) Qualitative Comparisons on the M3FD Dataset

4.1.2. Quantitative Comparisons

- MI (mutual information) measures the amount of information transferred from the source images to the fused image. A larger MI indicates that more information from the source image pair is preserved in the fused image.

- SSIM (structural similarity) models the sensitivity of the human visual system to structural loss and distortion. A higher SSIM indicates that the fused image better preserves the structure of the source images, and it correlates well with perceived fusion quality.

- SCD (sum of the correlations of differences) measures how much complementary information is transferred: it sums, over both source images, the correlation between that source and the difference between the fused image and the other source. A larger SCD indicates better fusion performance.

- EN (entropy) measures the amount of information contained in the fused image. A higher EN typically indicates better fusion performance.

- SD (standard deviation) reflects the contrast and pixel-intensity distribution of the fused image. A larger SD typically indicates higher contrast and a more visually pleasing fused image.

- SF (spatial frequency) reflects the overall gradient distribution of the image in the spatial domain. A larger SF indicates richer textures and edges.
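For reference, simplified NumPy versions of five of these metrics are sketched below for 8-bit grayscale images. They follow common textbook definitions (Shannon entropy of the 256-bin gray-level histogram, population standard deviation, spatial frequency from row and column first differences, histogram-based MI summed over both source images, and SCD as defined by Aslantas and Bendes); published evaluation toolboxes differ in binning and normalization details, so these are not guaranteed to reproduce the values in the tables. SSIM is omitted for brevity, and the function names are illustrative assumptions.

```python
import numpy as np

def entropy(img):
    """EN: Shannon entropy (bits) of the 256-bin gray-level histogram."""
    hist, _ = np.histogram(img, bins=256, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def std_dev(img):
    """SD: standard deviation of pixel intensities (a proxy for contrast)."""
    return float(np.std(img.astype(np.float64)))

def spatial_frequency(img):
    """SF: sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    f = img.astype(np.float64)
    rf2 = np.mean(np.diff(f, axis=1) ** 2)  # row frequency, squared
    cf2 = np.mean(np.diff(f, axis=0) ** 2)  # column frequency, squared
    return float(np.sqrt(rf2 + cf2))

def mutual_information(a, b):
    """MI (bits) between two 8-bit images from their joint 256 x 256 histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=256, range=[[0, 256], [0, 256]])
    pab = joint / joint.sum()
    pa = pab.sum(axis=1, keepdims=True)  # marginal of a, shape (256, 1)
    pb = pab.sum(axis=0, keepdims=True)  # marginal of b, shape (1, 256)
    nz = pab > 0
    return float((pab[nz] * np.log2(pab[nz] / (pa * pb)[nz])).sum())

def fusion_mi(ir, vis, fused):
    """Fusion MI: information the fused image shares with each source image."""
    return mutual_information(ir, fused) + mutual_information(vis, fused)

def _corr(x, y):
    """Pearson correlation coefficient of two arrays."""
    x, y = x - x.mean(), y - y.mean()
    return float((x * y).sum() / np.sqrt((x ** 2).sum() * (y ** 2).sum()))

def scd(ir, vis, fused):
    """SCD: corr(F - VIS, IR) + corr(F - IR, VIS)."""
    f, a, b = (im.astype(np.float64) for im in (fused, ir, vis))
    return _corr(f - b, a) + _corr(f - a, b)

rng = np.random.default_rng(0)
ir = rng.integers(0, 256, (64, 64)).astype(np.uint8)
vis = rng.integers(0, 256, (64, 64)).astype(np.uint8)
fused = ((ir.astype(np.float64) + vis) / 2).astype(np.uint8)  # naive average fusion
for name, value in [("EN", entropy(fused)), ("SD", std_dev(fused)),
                    ("SF", spatial_frequency(fused)), ("MI", fusion_mi(ir, vis, fused)),
                    ("SCD", scd(ir, vis, fused))]:
    print(f"{name}: {value:.3f}")
```

Note that EN, SD, and SF look only at the fused image, whereas MI and SCD compare it against both sources, which is why the two groups can rank methods differently.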

4.2. Results of IVIF and Object Detection

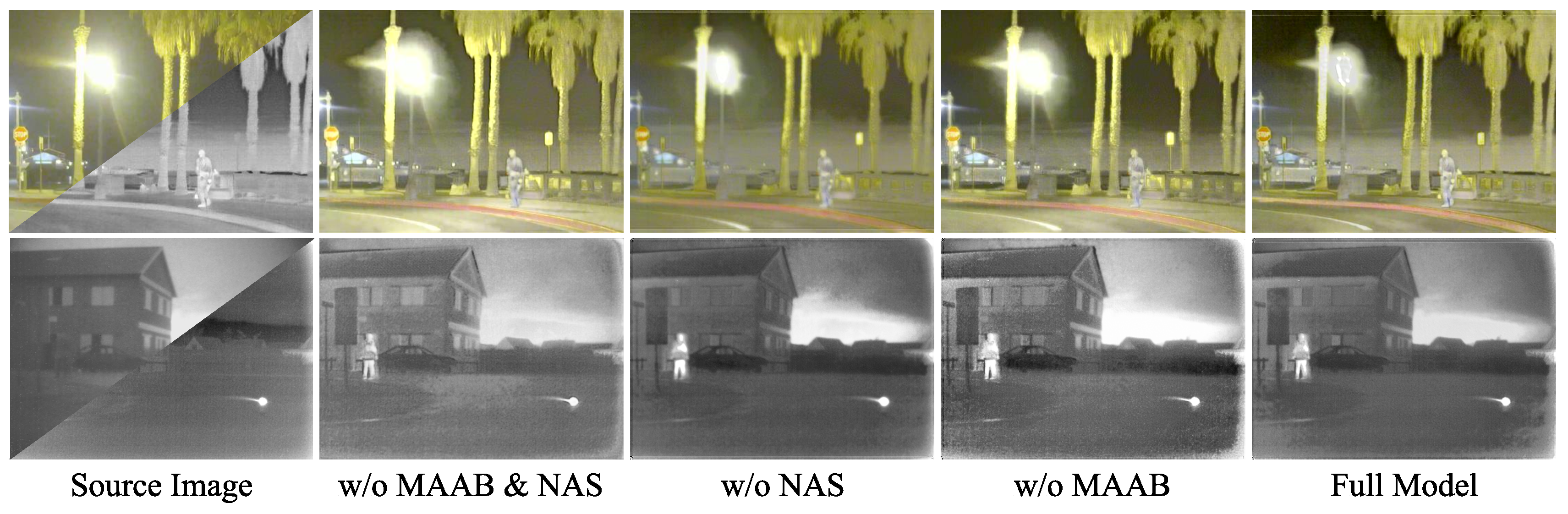

4.3. Ablation Studies

4.3.1. Study on Model Architectures

4.3.2. Analyzing the Training Loss Functions

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hall, D.L.; Llinas, J. An introduction to multisensor data fusion. Proc. IEEE 1997, 85, 6–23.

- Liu, R.; Liu, J.; Jiang, Z.; Fan, X.; Luo, Z. A bilevel integrated model with data-driven layer ensemble for multi-modality image fusion. IEEE Trans. Image Process. 2020, 30, 1261–1274.

- Liu, J.; Shang, J.; Liu, R.; Fan, X. Attention-guided global-local adversarial learning for detail-preserving multi-exposure image fusion. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5026–5040.

- Jiang, Z.; Zhang, Z.; Yu, Y.; Liu, R. Bilevel modeling investigated generative adversarial framework for image restoration. Vis. Comput. 2022, 1, 1–13.

- Ma, L.; Ma, T.; Liu, R.; Fan, X.; Luo, Z. Toward Fast, Flexible, and Robust Low-Light Image Enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 5637–5646.

- Liu, R.; Ma, L.; Zhang, J.; Fan, X.; Luo, Z. Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 10561–10570.

- Liu, J.; Jiang, Z.; Wu, G.; Liu, R.; Fan, X. A unified image fusion framework with flexible bilevel paradigm integration. Vis. Comput. 2022, 1, 1–18.

- Liu, R.; Jiang, Z.; Fan, X.; Luo, Z. Knowledge-driven deep unrolling for robust image layer separation. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 1653–1666.

- Nencini, F.; Garzelli, A.; Baronti, S.; Alparone, L. Remote sensing image fusion using the curvelet transform. Inf. Fusion 2007, 8, 143–156.

- Ma, J.; Zhou, Z.; Wang, B.; Zong, H. Infrared and visible image fusion based on visual saliency map and weighted least square optimization. Infrared Phys. Technol. 2017, 82, 8–17.

- Liang, J.; He, Y.; Liu, D.; Zeng, X. Image fusion using higher order singular value decomposition. IEEE Trans. Image Process. 2012, 21, 2898–2909.

- Li, H.; He, X.; Tao, D.; Tang, Y.; Wang, R. Joint medical image fusion, denoising and enhancement via discriminative low-rank sparse dictionaries learning. Pattern Recognit. 2018, 79, 130–146.

- Zhu, Z.; Yin, H.; Chai, Y.; Li, Y.; Qi, G. A novel multi-modality image fusion method based on image decomposition and sparse representation. Inf. Sci. 2018, 432, 516–529.

- Liu, Y.; Chen, X.; Ward, R.K.; Wang, Z.J. Image fusion with convolutional sparse representation. IEEE Signal Process. Lett. 2016, 23, 1882–1886.

- Li, H.; Liu, L.; Huang, W.; Yue, C. An improved fusion algorithm for infrared and visible images based on multi-scale transform. Infrared Phys. Technol. 2016, 74, 28–37.

- Ibrahim, R.; Alirezaie, J.; Babyn, P. Pixel level jointed sparse representation with RPCA image fusion algorithm. In Proceedings of the 38th International Conference on Telecommunications and Signal Processing, Prague, Czech Republic, 9–11 July 2015; pp. 592–595.

- Liu, C.; Qi, Y.; Ding, W. Infrared and visible image fusion method based on saliency detection in sparse domain. Infrared Phys. Technol. 2017, 83, 94–102.

- Shibata, T.; Tanaka, M.; Okutomi, M. Visible and near-infrared image fusion based on visually salient area selection. In Proceedings of the Digital Photography XI International Society for Optics and Photonics, San Francisco, CA, USA, 27 February 2015; Volume 4, p. 94.

- Gan, W.; Wu, X.; Wu, W.; Yang, X.; Ren, C.; He, X.; Liu, K. Infrared and visible image fusion with the use of multi-scale edge-preserving decomposition and guided image filter. Infrared Phys. Technol. 2015, 72, 37–51.

- Rajkumar, S.; Mouli, P.C. Infrared and visible image fusion using entropy and neuro-fuzzy concepts. In Proceedings of the ICT and Critical Infrastructure: Proceedings of the 48th Annual Convention of Computer Society of India-Vol I; Springer: Berlin/Heidelberg, Germany, 2014; pp. 93–100.

- Zhao, J.; Cui, G.; Gong, X.; Zang, Y.; Tao, S.; Wang, D. Fusion of visible and infrared images using global entropy and gradient constrained regularization. Infrared Phys. Technol. 2017, 81, 201–209.

- Bai, X. Morphological center operator based infrared and visible image fusion through correlation coefficient. Infrared Phys. Technol. 2016, 76, 546–554.

- Liu, J.; Wu, Y.; Huang, Z.; Liu, R.; Fan, X. SMoA: Searching a modality-oriented architecture for infrared and visible image fusion. IEEE Signal Process. Lett. 2021, 28, 1818–1822.

- Huang, Z.; Liu, J.; Fan, X.; Liu, R.; Zhong, W.; Luo, Z. ReCoNet: Recurrent Correction Network for Fast and Efficient Multi-modality Image Fusion. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 539–555.

- Wang, D.; Liu, J.; Fan, X.; Liu, R. Unsupervised Misaligned Infrared and Visible Image Fusion via Cross-Modality Image Generation and Registration. arXiv 2022, arXiv:2205.11876.

- Jiang, Z.; Zhang, Z.; Fan, X.; Liu, R. Towards all weather and unobstructed multi-spectral image stitching: Algorithm and benchmark. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 3783–3791.

- Liu, R.; Jiang, Z.; Yang, S.; Fan, X. Twin adversarial contrastive learning for underwater image enhancement and beyond. IEEE Trans. Image Process. 2022, 31, 4922–4936.

- Jiang, Z.; Li, Z.; Yang, S.; Fan, X.; Liu, R. Target Oriented Perceptual Adversarial Fusion Network for Underwater Image Enhancement. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6584–6598.

- Sengupta, A.; Ye, Y.; Wang, R.; Liu, C.; Roy, K. Going deeper in spiking neural networks: VGG and residual architectures. Front. Neurosci. 2019, 13, 95.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Li, H.; Wu, X.J.; Durrani, T.S. Infrared and visible image fusion with ResNet and zero-phase component analysis. Infrared Phys. Technol. 2019, 102, 103039.

- Li, H.; Wu, X.J.; Kittler, J. Infrared and visible image fusion using a deep learning framework. In Proceedings of the International Conference on Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2705–2710.

- Tang, L.; Yuan, J.; Ma, J. Image fusion in the loop of high-level vision tasks: A semantic-aware real-time infrared and visible image fusion network. Inf. Fusion 2022, 82, 28–42.

- Chen, X.; Teng, Z.; Liu, Y.; Lu, J.; Bai, L.; Han, J. Infrared-Visible Image Fusion Based on Semantic Guidance and Visual Perception. Entropy 2022, 24, 1327.

- Hou, J.; Zhang, D.; Wu, W.; Ma, J.; Zhou, H. A generative adversarial network for infrared and visible image fusion based on semantic segmentation. Entropy 2021, 23, 376.

- Li, H.; Wu, X.J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

- Zhao, Z.; Xu, S.; Zhang, C.; Liu, J.; Li, P.; Zhang, J. DIDFuse: Deep image decomposition for infrared and visible image fusion. arXiv 2020, arXiv:2003.09210.

- Li, H.; Wu, X.J.; Kittler, J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images. Inf. Fusion 2021, 73, 72–86.

- Zhang, Y.; Liu, Y.; Sun, P.; Yan, H.; Zhao, X.; Zhang, L. IFCNN: A general image fusion framework based on convolutional neural network. Inf. Fusion 2020, 54, 99–118.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 139–144.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Ma, J.; Xu, H.; Jiang, J.; Mei, X.; Zhang, X.P. DDcGAN: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion. IEEE Trans. Image Process. 2020, 29, 4980–4995.

- Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware Dual Adversarial Learning and a Multi-scenario Multi-Modality Benchmark to Fuse Infrared and Visible for Object Detection. arXiv 2022, arXiv:2203.16220.

- Liu, H.; Simonyan, K.; Yang, Y. DARTS: Differentiable architecture search. arXiv 2018, arXiv:1806.09055.

- Cai, H.; Zhu, L.; Han, S. ProxylessNAS: Direct neural architecture search on target task and hardware. arXiv 2018, arXiv:1812.00332.

- Saini, S.; Agrawal, G. (M)SLAe-Net: Multi-scale multi-level attention embedded network for retinal vessel segmentation. In Proceedings of the 2021 IEEE 9th International Conference on Healthcare Informatics (ICHI), Victoria, BC, Canada, 9–12 August 2021; pp. 219–223.

- Chen, L.; Liu, C.; Chang, F.; Li, S.; Nie, Z. Adaptive multi-level feature fusion and attention-based network for arbitrary-oriented object detection in remote sensing imagery. Neurocomputing 2021, 451, 67–80.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sun, Y.; Cao, B.; Zhu, P.; Hu, Q. DetFusion: A detection-driven infrared and visible image fusion network. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 4003–4011.

- Cheng, M.M.; Mitra, N.J.; Huang, X.; Torr, P.H.; Hu, S.M. Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 569–582.

- Zhai, Y.; Shah, M. Visual attention detection in video sequences using spatiotemporal cues. In Proceedings of the 14th ACM International Conference on Multimedia, Santa Barbara, CA, USA, 23–27 October 2006; pp. 815–824.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Ma, J.; Zhang, H.; Shao, Z.; Liang, P.; Xu, H. GANMcC: A generative adversarial network with multiclassification constraints for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 1–14.

- Liu, J.; Fan, X.; Jiang, J.; Liu, R.; Luo, Z. Learning a deep multi-scale feature ensemble and an edge-attention guidance for image fusion. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 105–119.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

- Qu, G.; Zhang, D.; Yan, P. Information measure for performance of image fusion. Electron. Lett. 2002, 38, 313–315.

- Ma, J.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

- Roberts, J.W.; Van Aardt, J.A.; Ahmed, F.B. Assessment of image fusion procedures using entropy, image quality, and multispectral classification. J. Appl. Remote Sens. 2008, 2, 023522.

- Aslantas, V.; Bendes, E. A new image quality metric for image fusion: The sum of the correlations of differences. AEU-Int. J. Electron. Commun. 2015, 69, 1890–1896.

- Cui, G.; Feng, H.; Xu, Z.; Li, Q.; Chen, Y. Detail preserved fusion of visible and infrared images using regional saliency extraction and multi-scale image decomposition. Opt. Commun. 2015, 341, 199–209.

| Method | MI | EN | SD | SF | SSIM | SCD |
|---|---|---|---|---|---|---|
| DenseFuse | 0.867 ± 0.28 | 6.832 ± 0.31 | 34.419 ± 6.84 | 9.362 ± 2.86 | 0.437 ± 0.07 | 1.404 ± 0.11 |
| FusionGAN | 0.393 ± 0.14 | 6.611 ± 0.33 | 30.633 ± 7.74 | 6.313 ± 2.38 | 0.415 ± 0.06 | 1.359 ± 0.09 |
| IFCNN | 0.548 ± 0.16 | 7.033 ± 0.27 | 37.272 ± 5.39 | 9.325 ± 2.47 | 0.454 ± 0.10 | 1.300 ± 0.07 |
| GANMcC | 0.782 ± 0.20 | 6.697 ± 0.34 | 31.764 ± 6.49 | 6.301 ± 1.47 | 0.424 ± 0.03 | 1.340 ± 0.09 |
| RFN-Nest | 0.774 ± 0.21 | 7.038 ± 0.25 | 37.438 ± 8.05 | 5.941 ± 1.83 | 0.367 ± 0.07 | 1.441 ± 0.11 |
| U2Fusion | 0.793 ± 0.25 | 7.080 ± 0.19 | 37.509 ± 6.94 | 10.366 ± 4.00 | 0.451 ± 0.05 | 1.274 ± 0.08 |
| MFEIF | 0.957 ± 0.35 | 6.784 ± 0.49 | 34.340 ± 10.21 | 7.673 ± 2.27 | 0.399 ± 0.09 | 1.360 ± 0.10 |
| Ours | 0.948 ± 0.22 | 7.140 ± 0.35 | 42.400 ± 13.09 | 10.378 ± 3.79 | 0.457 ± 0.06 | 1.426 ± 0.08 |

| Method | MI | EN | SD | SF | SSIM | SCD |
|---|---|---|---|---|---|---|
| DenseFuse | 0.877 ± 0.23 | 7.271 ± 0.21 | 45.664 ± 6.44 | 10.513 ± 3.72 | 0.497 ± 0.03 | 1.671 ± 0.07 |
| FusionGAN | 0.676 ± 0.13 | 7.113 ± 0.23 | 39.790 ± 5.72 | 8.415 ± 2.98 | 0.485 ± 0.04 | 1.641 ± 0.08 |
| IFCNN | 0.636 ± 0.14 | 7.278 ± 0.17 | 47.414 ± 7.07 | 10.334 ± 2.75 | 0.529 ± 0.03 | 1.579 ± 0.15 |
| GANMcC | 0.964 ± 0.34 | 7.301 ± 0.22 | 46.872 ± 6.65 | 8.817 ± 1.82 | 0.421 ± 0.03 | 1.367 ± 0.09 |
| RFN-Nest | 0.926 ± 0.25 | 7.307 ± 0.22 | 48.832 ± 6.50 | 7.366 ± 2.36 | 0.502 ± 0.02 | 1.602 ± 0.03 |
| U2Fusion | 0.823 ± 0.25 | 7.275 ± 0.22 | 42.876 ± 7.39 | 13.143 ± 2.92 | 0.526 ± 0.04 | 1.726 ± 0.12 |
| MFEIF | 0.988 ± 0.27 | 6.076 ± 0.34 | 40.263 ± 8.83 | 8.522 ± 3.03 | 0.530 ± 0.03 | 1.590 ± 0.03 |
| Ours | 1.08 ± 0.20 | 7.354 ± 0.30 | 50.039 ± 4.82 | 13.679 ± 2.52 | 0.496 ± 0.03 | 1.667 ± 0.02 |

| Method | Person | Car | Bus | Truck | Motorcycle | Lamp | All | mAP@.5 |
|---|---|---|---|---|---|---|---|---|
| Infrared | 0.631 | 0.561 | 0.544 | 0.536 | 0.510 | 0.512 | 0.544 | 0.4354 |
| Visible | 0.612 | 0.591 | 0.596 | 0.569 | 0.547 | 0.531 | 0.574 | 0.4264 |
| DenseFuse | 0.633 | 0.603 | 0.631 | 0.583 | 0.599 | 0.523 | 0.595 | 0.4766 |
| FusionGAN | 0.597 | 0.589 | 0.584 | 0.589 | 0.544 | 0.534 | 0.572 | 0.4784 |
| RFN-Nest | 0.613 | 0.577 | 0.601 | 0.576 | 0.558 | 0.552 | 0.580 | 0.4772 |
| GANMcC | 0.582 | 0.568 | 0.565 | 0.510 | 0.527 | 0.554 | 0.551 | 0.4760 |
| IFCNN | 0.506 | 0.566 | 0.596 | 0.566 | 0.567 | 0.535 | 0.556 | 0.4569 |
| MFEIF | 0.622 | 0.610 | 0.578 | 0.576 | 0.575 | 0.514 | 0.579 | 0.4610 |
| U2Fusion | 0.577 | 0.584 | 0.596 | 0.548 | 0.577 | 0.558 | 0.573 | 0.4642 |
| Ours | 0.647 | 0.607 | 0.582 | 0.597 | 0.583 | 0.564 | 0.597 | 0.4810 |

| Model | MAAB | NAS | TNO EN | TNO SF | TNO SCD | RoadScene EN | RoadScene SF | RoadScene SCD |
|---|---|---|---|---|---|---|---|---|
| M1 | × | × | 7.310 | 7.216 | 1.211 | 7.365 | 9.269 | 1.386 |
| M2 | √ | × | 6.856 | 9.366 | 1.365 | 7.031 | 12.548 | 1.753 |
| M3 | × | √ | 7.012 | 8.998 | 1.318 | 7.124 | 10.945 | 1.421 |
| M4 | √ | √ | 7.186 | 10.378 | 1.416 | 7.341 | 13.273 | 1.789 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Zhou, X.; Zhong, W. Multi-Modality Image Fusion and Object Detection Based on Semantic Information. Entropy 2023, 25, 718. https://doi.org/10.3390/e25050718
