1. Introduction

Graphs are an essential tool to describe and model various complex systems in the real world, where their nodes can represent the entities in complex systems, and their edges can effectively describe the relationship between entities. For instance, the social network between individuals formed by QQ, WeChat, and Weibo, the network of web links consisting of thousands of pages on the Internet, and the logistics network consisting of transport traffic between cities, all can be modeled as graphs. Moreover, many real-world problems can be solved by transforming them into optimization problems on graphs. For example, identifying hackers or terrorists can be considered as a problem of detecting anomalous nodes in a graph, and knowledge graph completion can be viewed as a link prediction problem in a graph. Therefore, graph deep learning has recently emerged to solve many problems in graphs and tasks of graph analysis. However, in contrast to regular Euclidean space data, graphs have a nonlinear data structure defined in an irregular non-Euclidean space. This nonlinearity is due to the disorder of nodes and the connectivity between them. An adjacency matrix is a straightforward representation of a graph. However, this representation only captures neighboring relationships between vertices and cannot describe higher-order structure information, such as paths. Furthermore, for large-scale graphs, adjacency matrices have high dimensionality and data sparsity. Therefore, traditional deep learning techniques designed for regularly structured data cannot be applied to graph-structured data. Transformation of graph-structured data into general data that traditional methods can easily process is a challenging task in deep learning.

Graph representation learning is effective for obtaining latent low-dimensional representations that facilitate subsequent graph analysis tasks by learning graph structure and node attributes, while ultimately preserving the properties required for subsequent tasks [1,2]. In recent years, graph representation learning has been widely studied, and several graph representation learning methods have been proposed. For instance, matrix factorization-based methods, such as GraRep [3], HOPE [4], and M-NMF [5], obtain low-dimensional representations by factorizing the adjacency matrix. Random walk-based methods, such as DeepWalk [6], Node2vec [7], and Metapath2vec [8], learn local neighborhood connectivity and global structure information by traversing a graph to obtain its low-dimensional representation. Proximity-based methods, such as SDNE [9] and LINE [10], apply deep learning methods that use proximity loss functions to preserve the node proximity in a graph, such that nodes that are close together in the input graph are likewise close in the embedding space. In the real world, nodes in most graphs come with rich attribute information. However, because most proposed methods design algorithms based solely on the topological structure of graphs, they often overlook the valuable attribute information associated with nodes. In reality, node attributes and graph structure are two distinct, yet complementary sets of information for successful graph analysis tasks in attribute networks. Therefore, for effective graph analysis in attribute networks, both the topological structure of networks and the attribute information associated with nodes must be considered.

In recent years, graph convolutional networks (GCNs) have demonstrated high performance in graph representation learning due to their capacity to aggregate and transform information from graph structure and node attributes within a node’s neighbors into node representations [11,12,13,14,15]. GCN is a semi-supervised graph representation learning method that requires a large number of labeled nodes for representation learning. Since labeling nodes is usually expensive, and graph representation learning is considered a generic task independent of the downstream tasks, graph representation learning is typically performed in an unsupervised manner. Graph autoencoders (GAEs) [12], which are unsupervised learning models that combine the autoencoder concept with GCNs for graph representation learning, have been studied recently. GAEs consist of two parts: an encoder and a decoder. The encoder uses GCNs to embed the nodes into a low-dimensional space by learning from the adjacency matrix and node attribute matrix of an input graph. The decoder reconstructs the adjacency matrix of the graph by a simple inner product. During training, the network learns without supervision by having the decoder preserve the topological structure of the graph. Many GAE variants have been proposed to learn node representations in an unsupervised way. Due to their high node representation ability under unsupervised learning, they have been utilized in various graph analysis tasks, such as node clustering [16,17], link prediction [18,19], and graph anomaly detection [20,21].

Although many GAE-based methods have been proposed, unsupervised learning of these models is highly dependent on the reconstruction of the graph structure and ignores the attribute information of nodes. Therefore, these models cannot determine the amount of information on the latent node attributes contained in the node embedding. Consequently, these models do not ensure that the node vector representation contains reliable node attribute information. Additionally, most GAE models use the GCN as their encoders, and a fixed 0/1 adjacency matrix and a node attribute matrix as input. During model training, when aggregating node attributes, each neighbor node is aggregated using the same weight. Thus, the aggregation process of GCN smooths the node attributes, preserving the structural proximity, while destroying the node attribute similarity of the original attribute space [22]. The node attribute similarity plays a crucial role in many graph analysis tasks. For instance, in attribute networks, node clustering consists of partitioning the nodes based on attribute and structure similarities, and link prediction consists of predicting whether an edge exists between every two nodes based on these similarities. For graph representation learning, the node information is mapped from an original high-dimensional space to a latent low-dimensional space. Furthermore, regardless of the different node representations between the original space and the latent space, it is expected that the similarity between the nodes in the two spaces is preserved. This is particularly desirable for downstream tasks, such as node clustering and link prediction.

In this paper, we propose a novel graph autoencoder model that better maintains the attribute similarity between nodes. Two main challenges arise when designing this model. First, the GAE framework should effectively fuse topological structure and node attributes to alleviate the destruction of node attribute similarity caused by neighbor smoothing during GCN aggregation. To address this, we propose a fusion strategy that incorporates both the original graph structure and a node attribute neighbor graph, built from node attribute similarities, as the input of the graph convolutional encoder. In the graph convolutional encoder, the node aggregation operation can be decomposed into two parts: the mean aggregation from node neighbors in the original graph and the linear aggregation by similarity coefficients from node attribute neighbors. This aggregation process alleviates the destruction of node attribute similarity and strengthens its maintenance. Second, the node attribute similarity should be preserved in an unsupervised learning manner. Thus, in addition to the structure reconstruction decoder, a similarity reconstruction decoder is added that directly computes the similarity between node representation vectors and steers the unsupervised learning of the model by the difference in node similarity between the original attribute space and the embedding space. As a result, the model ensures that the node representations preserve both the structure and attribute similarities between nodes.

The main contributions of this paper can be summarized as follows:

(1) A novel GAE framework integrating node attribute similarity and structural information is proposed. This new framework can effectively reconstruct node attribute similarity, ensuring that the obtained node representations maintain the same node attribute similarity between nodes as the original attribute space.

(2) An effective fusion strategy for node attribute similarity and structural information is proposed. An attribute neighbor graph is constructed by using the attribute similarity between nodes as edge weights, and then integrated with the original graph as the structural input of the GCN encoder. In this way, nodes can aggregate their neighbor attribute information in two distinct ways in the GCN encoder to effectively fuse node attribute similarity and structural information while avoiding over-smoothing of GCN to some extent.

(3) Extensive experiments on link prediction and node clustering are conducted on three real-world datasets to evaluate the effectiveness of the proposed model. The experimental results show that the proposed model outperforms state-of-the-art methods in two graph analysis tasks.

3. Graph Autoencoder with Preserving Node Attribute Similarity

In this section, the graph autoencoder with preserving node attribute similarity (GAEPNAS) is presented, including the basic model framework, fusion module with graph structure and node attribute similarity, encoder module, decoder module, and training algorithm.

3.1. Overall Model Framework

Given an attribute graph

, the topology structure of graph

is designated by an adjacency matrix

and

denotes an attribute matrix of the nodes. The objective is to embed graph structures and node attributes into a low-dimensional representation

using GAEPNAS, where

preserves the attribute similarity of the nodes in

and the graph structural information. The overall framework of the proposed GAEPNAS model is shown in

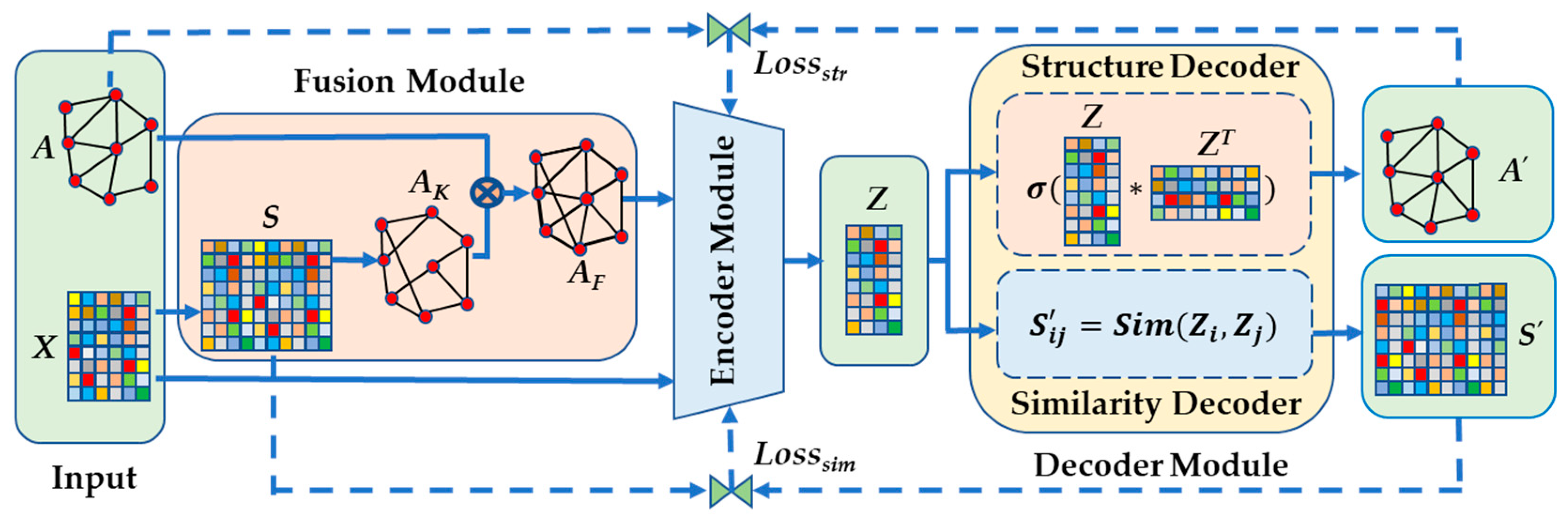

Figure 1.

The model is mainly composed of three modules: a fusion module, an encoder module, and a decoder module. First, in the fusion module, a k-nearest-neighbor (KNN) graph is constructed based on the attribute similarities between nodes. The KNN graph and the original input graph are then integrated into a synthetic graph to combine the graph structure information and node attribute similarity information in a unified way. Second, in the encoder module, the latent node representation is obtained by an l-layer GCN that learns from the synthetic graph. Third, in the decoder module, dual decoders are used to reconstruct the graph adjacency matrix and the node attribute similarity matrix. Finally, a loss function containing a structural loss and a node attribute similarity loss is calculated, and the parameters are updated by backpropagation so that the node representation preserves the original structural and node attribute similarity information.

3.2. Fusion Module

In attribute networks, similarities exist between the nodes in terms of both the network structure space and the node attribute space. However, it is important to note that just because two nodes have a similar network structure does not necessarily mean they have similar node attributes and vice versa. Thus, in graph representation learning, it is crucial to consider the dependency of both spaces and align them cohesively. To learn the node attribute similarity information using GAE, the attribute similarity relationship between nodes must be converted into an attribute neighbor space. Constructing KNN graphs is an effective way to achieve this conversion. Therefore, a KNN graph is first constructed based on the similarities between nodes. The KNN graph and the input graph are then integrated into a synthetic graph so that the attribute similarity information can be fused with the structural information. The process is described below.

(1) Calculating the attribute similarity matrix

The attribute similarity matrix $S \in \mathbb{R}^{n \times n}$, where $S_{ij}$ represents the attribute similarity between nodes $v_i$ and $v_j$, is calculated by a similarity function $\mathrm{sim}(\cdot,\cdot)$ on attributes $X_i$ and $X_j$ as

$$S_{ij} = \mathrm{sim}(X_i, X_j). \tag{1}$$

This similarity function can be a cosine similarity function or other similarity functions such as Jaccard coefficient, Euclidean distance, and Pearson correlation, depending on the characteristics of the node attributes.
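As a minimal sketch of this step, the snippet below computes a pairwise similarity matrix with cosine similarity, one of the options named above. The function names and the toy attribute matrix are illustrative assumptions, and pure Python is used only to keep the example self-contained.

```python
import math

def cosine_similarity(x, y):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    if norm_x == 0.0 or norm_y == 0.0:
        return 0.0
    return dot / (norm_x * norm_y)

def similarity_matrix(X, sim=cosine_similarity):
    """Pairwise similarity: S[i][j] = sim(X[i], X[j])."""
    n = len(X)
    return [[sim(X[i], X[j]) for j in range(n)] for i in range(n)]

# Toy attribute matrix with three nodes and three attributes (hypothetical data).
X = [[1.0, 0.0, 1.0],
     [1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
S = similarity_matrix(X)
```

Any other similarity function with the same two-vector signature can be passed through the `sim` argument.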

(2) Constructing a KNN graph

In the attribute similarity matrix $S$, the closer the value of $S_{ij}$ is to 1, the more similar $v_i$ and $v_j$ are, which indicates that the two nodes are more likely to be linked. Thus, given a parameter $k$, a KNN graph is generated based on the similarity between each node pair in the similarity matrix $S$. The node set of the KNN graph is the same as that of the input graph. If $v_i$ is one of the top-$k$ similar neighbors of $v_j$, or the latter is one of the top-$k$ similar neighbors of $v_i$, then an edge between $v_i$ and $v_j$ exists in the KNN graph. Otherwise, there is no edge between $v_i$ and $v_j$. The weight of the edge connecting nodes $v_i$ and $v_j$ is calculated as

where

denotes the node set of the top-

similar neighbors of

.

For

, it is considered that

and

are dissimilar, and thus

. If

,

and

are more similar, and the weight

of the edge linking the two nodes

and

is greater. Note that if

, node

has a self-loop, which is not currently considered and will be considered again in the encoder module, and therefore it can be assumed that

. Therefore, the adjacency matrix

is given by

(3) Integrating KNN graph with original input graph

For attributed networks, the structural information and the node attribute information are two different types of information that are independent yet mutually complementary. Effectively integrating them is a challenging task. In this paper, the KNN graph

and the original input graph

are integrated into a synthetic graph

with edge weights. The adjacency matrix

of the graph

is calculated as

where

is a parameter that balances the effect of the input graph and the KNN graph.

Analyzing matrix

using Equation (4) shows that matrix

is a symmetric matrix and

The pseudocode of the whole fusion module is summarized in Algorithm 1.

| Algorithm 1. Fusiongraph |

| Input: Adjacency matrix , Attribute matrix , Number of nearest neighbor , and . |

| Output: Similarity matrix and Adjacency matrix of graph . |

| 1. | for // represents the number of nodes. |

| 2. | for |

| 3. | ; |

| 4. | end |

| 5. | end |

| 6. | Let ; // denotes the adjacency matrix of the KNN graph. |

| 7. | for |

| 8. | for |

| 9. | if then ; |

| 10. | end |

| 11. | ; |

| 12. | end |

| 13. | ; |

| 14. | return and ; |
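The fusion module can be illustrated with the following sketch. It rests on several assumptions that the text does not fix: an edge is kept when either node is among the other's top-k neighbors (the rule described above), the edge weight is taken to be the similarity value itself, self-loops are excluded, and the two adjacency matrices are combined as a convex combination controlled by a hypothetical parameter `alpha`. All function names and the toy matrices are illustrative.

```python
def top_k_neighbors(S, k):
    """For each node, the set of its k most similar other nodes."""
    n = len(S)
    result = []
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: S[i][j], reverse=True)
        result.append(set(others[:k]))
    return result

def knn_adjacency(S, k):
    """Weighted KNN adjacency: keep S[i][j] when either node is in the other's top-k."""
    n = len(S)
    nbrs = top_k_neighbors(S, k)
    A_knn = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and (j in nbrs[i] or i in nbrs[j]):
                A_knn[i][j] = S[i][j]  # assumed weight: the similarity itself
    return A_knn

def fuse(A, A_knn, alpha):
    """Assumed fusion rule: alpha * A + (1 - alpha) * A_knn, element by element."""
    n = len(A)
    return [[alpha * A[i][j] + (1.0 - alpha) * A_knn[i][j] for j in range(n)]
            for i in range(n)]

# Toy similarity matrix and 0/1 adjacency matrix for four nodes (hypothetical data).
S = [[1.0, 0.9, 0.1, 0.2],
     [0.9, 1.0, 0.3, 0.1],
     [0.1, 0.3, 1.0, 0.8],
     [0.2, 0.1, 0.8, 1.0]]
A = [[0, 1, 1, 0],
     [1, 0, 0, 0],
     [1, 0, 0, 1],
     [0, 0, 1, 0]]
A_knn = knn_adjacency(S, k=1)
A_s = fuse(A, A_knn, alpha=0.2)
```

Under these assumptions the fused matrix stays symmetric whenever both inputs are symmetric, and its entries stay in [0, 1] when the similarities do.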

3.3. Encoder Module

The encoder is the core module of GAEPNAS, which obtains a latent node representation by learning the attribute and structure information of the synthetic graph. GCN is a widely used graph neural network that generalizes the convolutional neural network (CNN) to graph space and uses the first-order approximation of Chebyshev polynomials to simplify the computation [11]. Because of the powerful graph representation learning capability of GCN, GAE usually uses GCN as its encoder. In the encoder module, the graph encoder consists of

GCN convolutional layers. The input of the encoder is the adjacency matrix

and the attribute matrix

, while the output is the node representation matrix

.

is the

identity matrix.

(by adding a self-loop for each node, it is ensured that it also participates in the attribute aggregation of new node embedding), and the diagonal matrix

with

, are first calculated. The

-layer GCN encoder model learns the node representation with the following propagation rule:

where

is the trainable weight matrices of the

-th layer,

,

is the activation function for the first

layers, and a linear activation function is used for the last layer. The encoder embeds the topological structure and node attribute of the input graph into representation

.

By substituting Equation (4) and

into Equation (5), the following is obtained:

Let

and

and

are the neighbor set for node

in graph

and

which contains node

, respectively. Then Equation (6) can be written in the following form:

It can be deduced from Equation (7) that the graph convolutional operation of the encoder can be decomposed into two convolutional suboperations: one on the original input graph and one on the KNN graph, with the weight matrix of each layer shared between the two. For the convolutional operation on the original graph, messages propagate between neighbors in the graph structure space, and the aggregation is a mean because the adjacency matrix is a 0/1 matrix. In contrast, for the convolutional operation on the KNN graph, node representations propagate between attribute neighbors in the attribute space, and each node aggregates the representations of its top-k nearest neighbors weighted by their attribute similarities. These weights can be interpreted as similarity attention coefficients. Therefore, the encoder can propagate and aggregate node characteristics in both the structure space and the attribute space using shared parameter matrices. This enables the node representation to effectively fuse information from both spaces, to alleviate the destruction of node attribute similarity, and to avoid the over-smoothing of GCN to some extent.
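The decomposition can be checked numerically with a small sketch. It assumes the symmetric normalization of the standard GCN propagation rule from [11] and a fused adjacency of the convex-combination form with a hypothetical parameter `alpha`; the weight matrix and activation are omitted because they act identically on every term. All names and the toy matrices are illustrative.

```python
import math

def matmul(P, Q):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(P[i][t] * Q[t][j] for t in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]

def scaled(c, M):
    """Multiply every entry of M by the scalar c."""
    return [[c * v for v in row] for row in M]

def added(*mats):
    """Element-wise sum of matrices with identical shapes."""
    return [[sum(m[i][j] for m in mats) for j in range(len(mats[0][0]))]
            for i in range(len(mats[0]))]

def normalized(M, deg):
    """Compute D^{-1/2} M D^{-1/2} for the diagonal degree vector deg."""
    n = len(M)
    return [[M[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

alpha = 0.2  # hypothetical balance parameter
A = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]      # original 0/1 graph
A_knn = [[0.0, 0.0, 0.7], [0.0, 0.0, 0.5], [0.7, 0.5, 0.0]]  # weighted KNN graph
I = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]                     # current node features

# Fused graph with self-loops, and its degree vector.
A_tilde = added(scaled(alpha, A), scaled(1.0 - alpha, A_knn), I)
deg = [sum(row) for row in A_tilde]

# One propagation step on the fused graph.
full = matmul(normalized(A_tilde, deg), H)

# The same step as a sum of structure, attribute, and self-loop contributions.
struct_part = matmul(normalized(scaled(alpha, A), deg), H)
attr_part = matmul(normalized(scaled(1.0 - alpha, A_knn), deg), H)
self_part = matmul(normalized(I, deg), H)
decomposed = added(struct_part, attr_part, self_part)
```

Because propagation is linear in the adjacency matrix, `full` and `decomposed` agree up to floating-point error.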

3.4. Decoder Module

The decoder module consists of two decoders: a structure decoder and a similarity decoder. The structure decoder is used to reconstruct the adjacency matrix $\hat{A}$ by computing the inner product of the node representations as

$$\hat{A} = \sigma(ZZ^{\top}), \tag{8}$$

where $\sigma$ denotes the activation function.

The similarity decoder is used to reconstruct the attribute similarity matrix $\hat{S}$ between nodes in the embedded space by computing the similarity between their node representations:

$$\hat{S}_{ij} = \mathrm{sim}(Z_i, Z_j), \tag{9}$$

where $\mathrm{sim}(\cdot,\cdot)$ is a similarity function, and $Z_i$ and $Z_j$ are the node representation vectors of nodes $v_i$ and $v_j$, respectively.

The two decoders enable unsupervised learning of the GAEPNAS model by comparing the adjacency matrix and attribute similarity matrix reconstructed from the embedded space with those of the original space. In this way, the whole encoding–decoding process makes full use of the graph structure and node attribute information to obtain a node representation that preserves both the topological structure and the node attribute similarity.
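The two decoders can be sketched as follows. The sketch assumes a sigmoid activation for the structure decoder, as is common for inner-product decoders in GAEs, and cosine similarity for the similarity decoder; the function names and the toy embedding are illustrative.

```python
import math

def sigmoid(v):
    """Logistic function mapping a real score to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def decode_structure(Z):
    """Reconstructed adjacency: sigmoid of the inner product of each pair of rows."""
    n = len(Z)
    return [[sigmoid(dot(Z[i], Z[j])) for j in range(n)] for i in range(n)]

def decode_similarity(Z):
    """Reconstructed similarity: cosine similarity of each pair of rows."""
    n = len(Z)
    norms = [math.sqrt(dot(z, z)) for z in Z]
    return [[dot(Z[i], Z[j]) / (norms[i] * norms[j]) if norms[i] and norms[j] else 0.0
             for j in range(n)] for i in range(n)]

# Toy embedding of three nodes in two dimensions (hypothetical data).
Z = [[1.0, 0.0],
     [0.0, 1.0],
     [2.0, 0.0]]
A_rec = decode_structure(Z)
S_rec = decode_similarity(Z)
```

Nodes 0 and 2 point in the same direction, so their reconstructed similarity is 1, while the orthogonal pair 0 and 1 gets similarity 0 and an edge probability of 0.5.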

3.5. Loss Function

In the GAEPNAS model, the primary focus is on the error between the reconstructed adjacency matrix and that of the original graph. In addition, the error between the reconstructed similarity matrix and the attribute similarity matrix of the original attribute space is taken into account. Thus, a structure loss and a similarity loss are defined to measure the two errors. The structure decoder reconstructs the adjacency matrix

by computing the inner product. As the input adjacency matrix

is a 0/1 matrix, the structure loss

is defined using a cross-entropy of the following form:

The similarity decoder reconstructs the attribute similarity matrix. The similarity loss is defined as

where

is the Frobenius norm.

Therefore, the overall loss function is expressed as

where

is a parameter used to balance the structure loss and the similarity loss.
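A minimal sketch of such a loss is given below. The normalization of the cross-entropy (a mean over all node pairs), the use of the squared Frobenius norm, and the convex combination with a hypothetical parameter `beta` are assumptions made for illustration; the text only states that a cross-entropy, a Frobenius norm, and a balancing parameter are involved.

```python
import math

def structure_loss(A, A_rec, eps=1e-12):
    """Mean binary cross-entropy between a 0/1 matrix A and probabilities A_rec."""
    n = len(A)
    total = 0.0
    for i in range(n):
        for j in range(n):
            p = min(max(A_rec[i][j], eps), 1.0 - eps)  # clamp to avoid log(0)
            total -= A[i][j] * math.log(p) + (1 - A[i][j]) * math.log(1.0 - p)
    return total / (n * n)

def similarity_loss(S, S_rec):
    """Squared Frobenius norm of the difference S - S_rec."""
    n = len(S)
    return sum((S[i][j] - S_rec[i][j]) ** 2 for i in range(n) for j in range(n))

def total_loss(A, A_rec, S, S_rec, beta):
    """Assumed weighting: beta on the structure loss, (1 - beta) on the similarity loss."""
    return beta * structure_loss(A, A_rec) + (1.0 - beta) * similarity_loss(S, S_rec)

# Toy two-node example (hypothetical data).
A = [[0, 1], [1, 0]]
A_rec = [[0.5, 0.5], [0.5, 0.5]]
S = [[1.0, 0.0], [0.0, 1.0]]
S_rec = [[1.0, 0.5], [0.5, 1.0]]
loss = total_loss(A, A_rec, S, S_rec, beta=0.6)
```

With this weighting, a larger `beta` puts more emphasis on reconstructing the graph structure and less on reconstructing the attribute similarity.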

3.6. Training Algorithm

The whole GAEPNAS model is optimized by minimizing the overall loss function. The training algorithm for GAEPNAS is presented in Algorithm 2. Given an attribute network, Algorithm 1 is first called to convert the neighborhood relationship of the nodes in the attribute space into a KNN graph based on their attribute similarity, and then to fuse it with the topology graph of the original network, so that the fused graph has both the topology of the original network and the neighborhood relationship of the nodes’ attributes (line 1). The self-loops are then added to the fusion graph, the degree matrix is computed, and the parameters of the GAEPNAS model are initialized in preparation for model training (lines 2–4). Subsequently, the model is trained for the given number of iterations (lines 5–14). In the forward propagation process (lines 6–12), the node representation matrix is obtained by the GCN layers of the encoder (lines 6–9), the adjacency matrix and similarity matrix are reconstructed, and the loss function is calculated (lines 10–12). The model parameters are then updated by gradient descent on the loss function, with gradients computed by back-propagation (line 13). After training, the graph embedding is derived and a trained model is ultimately obtained.

The time complexity of the training algorithm is analyzed in the sequel. It can be seen that the time complexity of Algorithm 1 to compute matrices

and

is

, where

is the number of nodes and

is the dimension of the node attributes. In lines 2–4, the time complexity of some simple addition and initialization operations is

. In lines 5–14, the reconstruction of the node attribute similarity matrix is added to the general GAE model to train the proposed GAEPNAS. Thus, the main calculation amount is attributed to the training of the model and the computing of the similarity matrix

. The time complexity of the general GAE model is

and that of the computation of matrix

is

, where

denotes the number of iterations,

denotes the number of edges, and

denotes the dimension of the

-layer of the encoder. In summary, the time complexity of the model training is

.

| Algorithm 2. Training GAEPNAS |

| Input: Adjacency matrix , Attribute matrix , Number of nearest neighbors , Number of iterations , Number of hidden layers , and Parameters and . |

| Output: Node Representation matrix and model parameter . |

| 1. | Compute similarity matrix and adjacency matrix of graph by calling Algorithm 1; |

| 2. | Compute and diagonal matrix with ; |

| 3. | Initialize model parameter ; |

| 4. | ; |

| 5. | for |

| 6. | for |

| 7. | ; |

| 8. | end |

| 9. | ; |

| 10. | Reconstruct adjacency matrix ; |

| 11. | Reconstruct similarity matrix by specific similarity function; |

| 12. | Compute according to Equations (10) and (11); |

| 13. | Compute partial derivative with back-propagation algorithm to update model parameter ; |

| 14. | end |

| 15. | return and ; |

4. Experiments

In this section, experiments are conducted on benchmark datasets, and the effectiveness of the proposed GAEPNAS is evaluated against relevant baselines on two classic downstream tasks, namely link prediction and node clustering. The experimental environment is as follows: Intel(R) Core(TM) i7-12700 CPU @ 2.10 GHz (up to 4.90 GHz), 128 GB of RAM, NVIDIA GeForce RTX 3090 GPU; Ubuntu 22.04.1 LTS, Python 3.6.13, PyTorch 1.10.2.

4.1. Datasets

To evaluate the effectiveness of the proposed model, three public real-world citation network datasets (Cora, CiteSeer, and PubMed), which are the most commonly used graph datasets to evaluate the GAE and VGAE models, are considered. In these citation networks, the nodes represent scientific papers and the links represent citation relationships between papers. Cora’s papers are from machine learning fields, and they are classified into seven classes; CiteSeer contains papers from six categories in the computer science field; and PubMed contains scientific papers related to diabetes, which can be classified into three classes. The detailed information is presented in Table 1.

In the Cora and CiteSeer datasets, the attribute vector of each paper is a 0/1-valued word vector indicating the absence/presence of the corresponding word from the dictionary. When a word is absent from both papers, it does not affect the similarity between the two papers. Thus, in the original attribute space, the attribute similarity between attribute vectors $X_i$ and $X_j$ can be expressed as

where $\wedge$ and $\oplus$ respectively denote the “AND” and “XOR” operations and $d$ is the dimension of the node attributes.
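One similarity with the stated properties can be sketched as follows. It is an assumption rather than the exact expression: words present in both papers (AND) count as agreement, words present in exactly one paper (XOR) count as disagreement, and words absent from both are ignored, which yields a Jaccard-style ratio. The function name and toy vectors are illustrative.

```python
def binary_attribute_similarity(x, y):
    """Shared words divided by words present in at least one of the two vectors."""
    both = sum(a & b for a, b in zip(x, y))    # AND: word present in both papers
    differ = sum(a ^ b for a, b in zip(x, y))  # XOR: word present in exactly one paper
    if both + differ == 0:
        return 0.0  # two all-zero vectors: no evidence of similarity
    return both / (both + differ)

# Toy 0/1 word vectors (hypothetical data).
x = [1, 1, 0, 0]
y = [1, 0, 1, 0]
sim_xy = binary_attribute_similarity(x, y)

# Appending words that are absent from both papers leaves the similarity unchanged.
sim_padded = binary_attribute_similarity(x + [0, 0, 0], y + [0, 0, 0])
```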

In the PubMed dataset, the attribute vector of each paper contains term frequency–inverse document frequency (TF–IDF) scores for 500 words. The attribute similarity between nodes is calculated by the cosine similarity in the original attribute space because the attribute vectors have continuous real values.

In the embedding space, cosine similarity is used for all three datasets to calculate the similarity between nodes because the node representations have continuous real values.

4.2. Baseline Methods

Some classical baseline methods are considered for comparison with the proposed GAEPNAS. In most related studies, these methods have been used for comparison on link prediction and node clustering tasks. These methods are summarized as follows:

Spectral clustering [38]: an effective graph representation method to perform dimensionality reduction by the eigenvalues.

DeepWalk [6]: a classical graph representation method that trains the skip-gram model for sequences generated by random walk on graphs.

GAE and VGAE [12]: popular unsupervised graph representation methods that combine GCN with the (variational) autoencoder for graph representation learning.

Graphite-AE and Graphite-VAE [39]: variants of the GAE and VGAE methods that reconstruct the original graph by a multilayer iterative procedure.

ARGA and ARVGA [17]: variants of the GAE and VGAE methods that use adversarial models to learn node representation.

Linear GAE and Linear VGAE [40]: simplified versions of the GAE and VGAE methods with one-hop linear models.

GASN [35]: a variant of the GAE method where the graph structure and node attribute are reconstructed using two decoders.

AT-GAE and AT-VGAE [41]: generalization methods of GAE and VGAE using adversarial training.

4.3. Link Prediction

Link prediction is one of the most important tasks in graph analysis. Its objective is to find missing links between nodes or predict possible links between nodes in the future. The performance of GAEPNAS is evaluated with the link prediction task. In the experiments, the adjacency matrix reconstructed by the decoder is directly used as the predicted adjacency matrix.

4.3.1. Metrics of Link Prediction

The accuracy of various link prediction methods is determined using two metrics: the area under the receiver operating characteristic curve (AUC) score and the average precision (AP) score. The AUC is defined as the area under the receiver operating characteristic (ROC) curve. It is used to quantify the accuracy of link prediction. In practice, it can be interpreted as the probability that a randomly chosen missing link receives a higher link prediction score than a randomly chosen nonexistent link [42]. For $n$ independent comparisons, if the missing link has the higher score $n'$ times and the two links have the same score $n''$ times, then the AUC is calculated as:

$$\mathrm{AUC} = \frac{n' + 0.5\,n''}{n}. \tag{14}$$

Because the ROC curve of a useful predictor lies above the diagonal and the area under it cannot exceed 1, the AUC generally lies between 0.5 and 1. For a link prediction algorithm, the closer the AUC is to 1, the higher its accuracy.
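The comparison-based definition can be sketched in a few lines. As a simplifying assumption, the sketch compares every missing link with every nonexistent link instead of drawing random comparisons; the function name and the scores are illustrative.

```python
def auc_from_comparisons(pos_scores, neg_scores):
    """AUC as (n' + 0.5 * n'') / n over all positive/negative score pairs."""
    n_higher = 0  # comparisons where the missing link scores higher
    n_equal = 0   # comparisons where both links score the same
    for p in pos_scores:
        for q in neg_scores:
            if p > q:
                n_higher += 1
            elif p == q:
                n_equal += 1
    n = len(pos_scores) * len(neg_scores)
    return (n_higher + 0.5 * n_equal) / n

# Scores of held-out (missing) links and of sampled nonexistent links (hypothetical data).
pos_scores = [0.9, 0.6, 0.4]
neg_scores = [0.5, 0.4, 0.1]
auc = auc_from_comparisons(pos_scores, neg_scores)
```

Here 7 of the 9 comparisons favor the missing link and 1 is a tie, giving (7 + 0.5) / 9.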

The AP quantifies the precision of link prediction and is defined as the area under the precision–recall curve. In practice, it is calculated as the weighted mean of the precisions achieved at each threshold, with the increase in recall from the previous threshold used as the weight [43]:

$$\mathrm{AP} = \sum_{t} (R_t - R_{t-1})\,P_t, \tag{15}$$

where $R_t$ and $P_t$ are the recall and precision at the $t$-th threshold, which are calculated as in [43]. For a link prediction algorithm, the higher the AP value, the higher its precision.
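The weighted-mean definition can be illustrated with the sketch below. It assumes distinct scores, so that each ranked position acts as its own threshold, and at least one positive label; the function name and data are illustrative.

```python
def average_precision(labels, scores):
    """Sum over ranked positions of (recall increase) * (precision at that position)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    total_pos = sum(labels)
    true_pos = 0
    prev_recall = 0.0
    ap = 0.0
    for rank, idx in enumerate(order, start=1):
        if labels[idx] == 1:
            true_pos += 1
        precision = true_pos / rank
        recall = true_pos / total_pos
        ap += (recall - prev_recall) * precision  # zero when recall does not change
        prev_recall = recall
    return ap

# 1 marks a true link, 0 a sampled non-edge (hypothetical data).
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
ap = average_precision(labels, scores)
```

Only positions where a true link appears contribute: precision 1 at the first position and 2/3 at the third, each weighted by a recall increase of 0.5.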

4.3.2. Implementation

For each dataset, all the edges are randomly split into a training set (85%), a validation set (5%), and a test set (10%). In addition, the same number of non-edges is randomly sampled as negative samples and added to both the validation set and the test set. Because Cora and CiteSeer are relatively small datasets, GAEPNAS is trained for 200 epochs using the Adam algorithm with a learning rate of 0.001. For PubMed, the model is trained for 500 epochs using the Adam algorithm with a learning rate of 0.01. The parameter settings of the GAEPNAS model are shown in Table 2. Because the characteristics of each dataset (e.g., the average degree of nodes and the dimensionality and distribution of attributes) differ, the parameter values in Table 2 also differ across datasets. All parameters of the baseline methods are set according to their original papers. Each experiment is run 10 times, and the averaged AUC and AP are reported.
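The edge split described above can be sketched as follows. The function names, the fixed seeds, and the toy ring graph are illustrative assumptions; the sketch treats edges as undirected and draws non-edges by rejection sampling, which is only practical when the graph is sparse.

```python
import random

def split_edges(edges, val_frac=0.05, test_frac=0.10, seed=0):
    """Shuffle the edge list and split it into train/validation/test parts."""
    rng = random.Random(seed)
    shuffled = list(edges)
    rng.shuffle(shuffled)
    n_test = int(test_frac * len(shuffled))
    n_val = int(val_frac * len(shuffled))
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def sample_non_edges(n_nodes, edges, count, seed=0):
    """Draw `count` distinct node pairs that are not connected by an edge."""
    edge_set = {frozenset(e) for e in edges}
    rng = random.Random(seed)
    negatives = set()
    while len(negatives) < count:
        u, v = rng.randrange(n_nodes), rng.randrange(n_nodes)
        pair = frozenset((u, v))
        if u != v and pair not in edge_set:
            negatives.add(pair)
    return [tuple(sorted(p)) for p in negatives]

# Toy ring graph with 20 nodes and 20 edges (hypothetical data).
n_nodes = 20
edges = [(i, (i + 1) % n_nodes) for i in range(n_nodes)]
train, val, test = split_edges(edges)
val_neg = sample_non_edges(n_nodes, edges, len(val), seed=1)
test_neg = sample_non_edges(n_nodes, edges, len(test), seed=2)
```

The negative samples are matched in number to the positive edges of the validation and test sets, so both evaluation sets are balanced.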

4.3.3. Experimental Results

The experimental results of link prediction are shown in Table 3, where the best results are marked in bold. Note that the inputs of Spectral and DeepWalk include only graph structure, while the inputs of the other methods include graph structure and node attributes. The GASN and GAEPNAS methods additionally reconstruct the node attributes and the attribute similarity, respectively, while the other methods (GAE and VGAE, Graphite-AE and Graphite-VAE, ARGA and ARVGA, Linear GAE and Linear VGAE) only focus on reconstructing the graph structure. It can be observed from Table 3 that the methods using graph structure and node attribute information perform significantly better than those (Spectral and DeepWalk) only using graph structure information. This shows that both the graph structure and node attribute information are beneficial for the link prediction task. In addition, the GASN and GAEPNAS methods, which reconstruct both the graph structure and the node attribute information, perform better than the other methods that only reconstruct graph structure information. This suggests that preserving node attribute information is important for this task. Furthermore, GAEPNAS performs better than GASN for the Cora and CiteSeer datasets, but slightly worse than GASN for the PubMed dataset. The proposed GAEPNAS model integrates the graph structure information with the node attribute similarity information in the encoder by the KNN graph, and reconstructs the graph structure and node attribute similarity in the decoder, while the GASN model uses a general encoder and reconstructs the graph structure and node attribute in the decoder. Thus, the proposed model can more adequately incorporate the node attribute similarity information into the node representation in the encoder, which may explain its higher link prediction performance on Cora and CiteSeer.

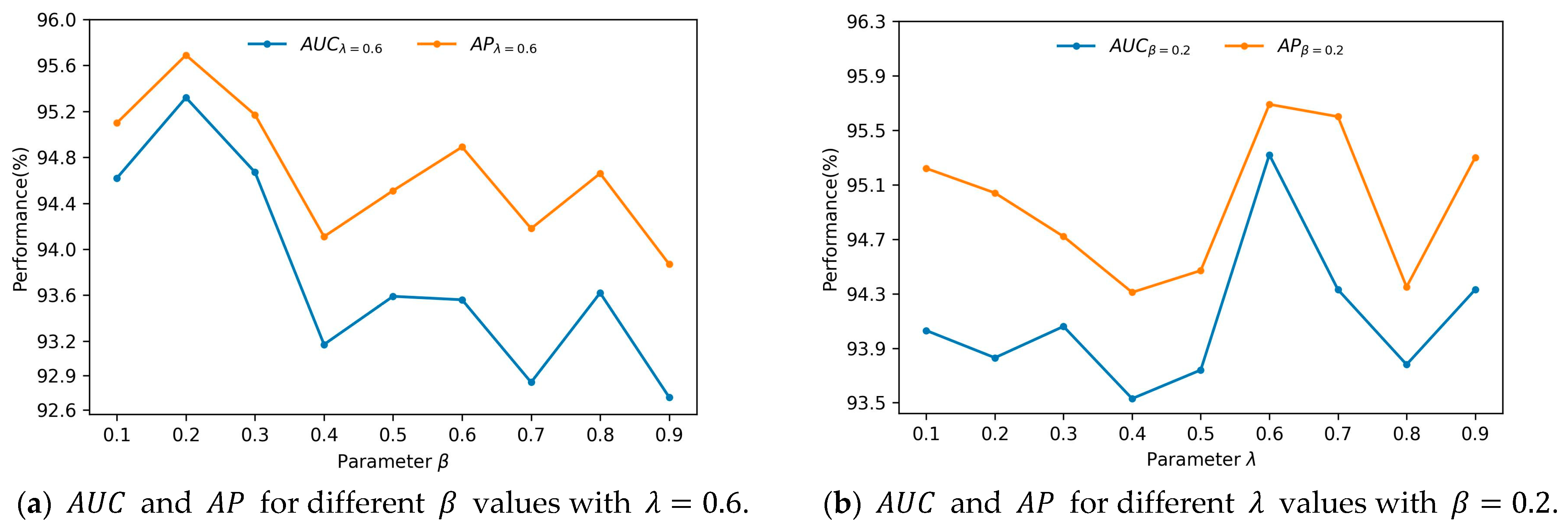

4.3.4. Parameter Analysis

The main parameters that affect the performance of GAEPNAS are

in Equation (4) and

in Equation (12). This paper only studies the impact of these two parameters on the link prediction task for the Cora dataset. In Equation (4), as

increases, the ability of the model to learn graph structural information becomes more powerful while the ability to learn the node attribute similarity becomes weaker. In Equation (12), as

increases, it is expected that more information about graph structure is preserved in node representation and less information about node attribute similarity is preserved accordingly. In the experiments,

and

are chosen in the range of

with an interval of 0.1. The model achieves the highest performance for

and

. By fixing

to 0.6, the

and

curves of parameter

are plotted (

Figure 2a). Similarly, by fixing

to 0.2, the

and

curves of parameter

are drawn (

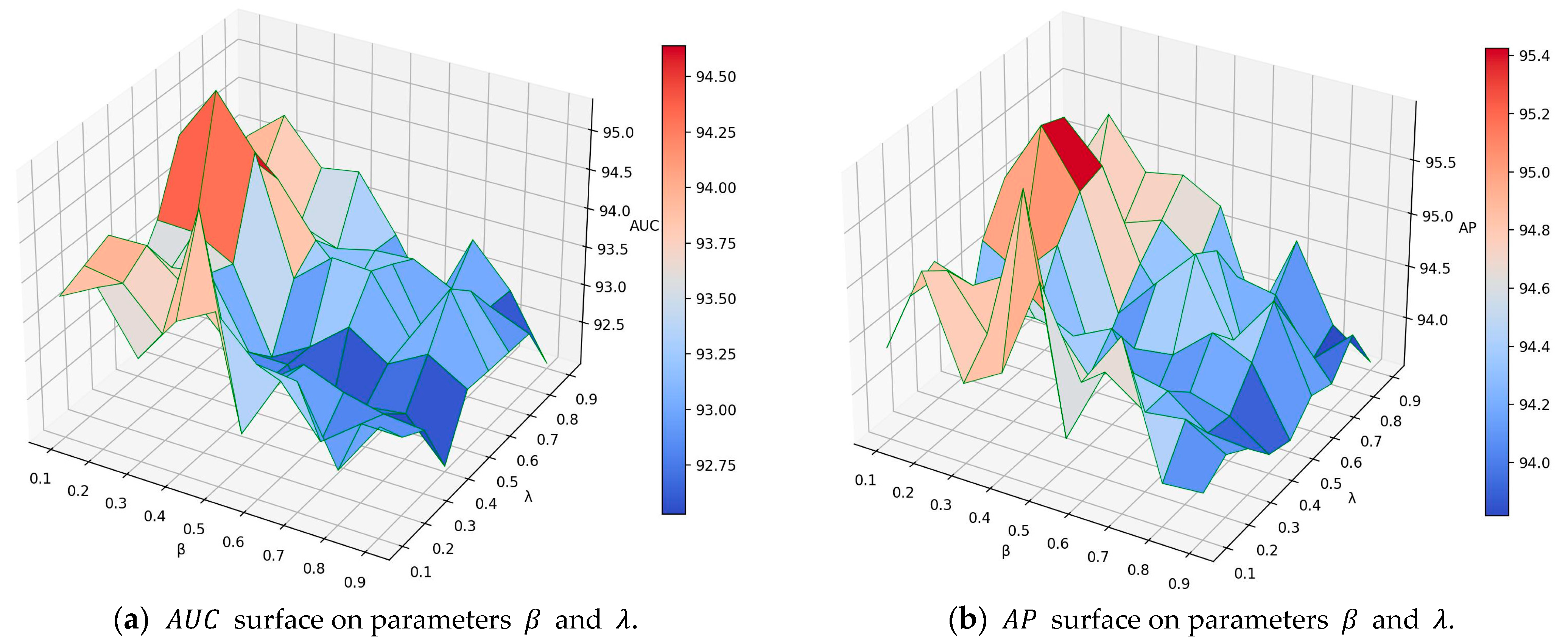

Figure 2b). The 3D surface plots of

and

for

and

are shown in

Figure 3a,b. It can be observed from

Figure 2a that as

increases,

and

first increase and then decrease. When

,

and

reach their maximum values. This may be due to the fact that the link prediction for the Cora dataset is mainly based on the similarity between node attributes, while relying on less information about the graph structure. From

Figure 2b, it can be observed that as

increases,

and

first decrease, then increase, and finally decrease again. When

= 0.6,

and

reach their maximum values. Moreover, when

increases into the

interval, the two types of information preserved in the node representation reach a relative balance, which results in a higher link prediction performance. From

Figure 3 it can be observed that as

increases,

and

first increase and then decrease. Furthermore, the GAEPNAS model achieves a high performance of link prediction when

and

. The

and

vary with parameters

and

in the ranges of

and

, respectively. Thus, the GAEPNAS model can lead to acceptable results for all the combinations of parameters

and

.
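The opposing roles of the two parameters can be illustrated with a small sketch. It assumes that both parameters enter as convex-combination weights, which matches the described behaviour (a larger value favours structure, a smaller value favours attribute similarity) but is not confirmed by the text here; the exact forms of Equations (4) and (12) may differ. The names `blend` and `fuse_graphs` are hypothetical.

```python
def blend(structure_term, similarity_term, weight):
    """Convex combination (assumed form): `weight` on the structure term,
    `1 - weight` on the attribute-similarity term."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * structure_term + (1.0 - weight) * similarity_term


def fuse_graphs(adj, knn_adj, weight):
    """Entry-wise blend of the input adjacency matrix and the KNN adjacency
    matrix, both given as lists of rows (assumed analogue of Equation (4))."""
    return [
        [blend(a, s, weight) for a, s in zip(adj_row, knn_row)]
        for adj_row, knn_row in zip(adj, knn_adj)
    ]


if __name__ == "__main__":
    adj = [[0, 1], [1, 0]]  # original graph: nodes 0 and 1 are linked
    knn = [[0, 0], [0, 0]]  # KNN graph: no attribute-similarity edge
    # A small fusion weight keeps little of the structural edge.
    print(fuse_graphs(adj, knn, 0.2))
    # The same helper can weight a structure loss against a similarity loss
    # (assumed analogue of Equation (12)); the loss values are made up.
    print(blend(0.7, 0.3, 0.6))
```

Under this assumption, sweeping `weight` in steps of 0.1 traces a curve of the kind shown in the parameter plots.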

4.4. Node Clustering

Node clustering is one of the fundamental unsupervised methods of graph analysis. Its goal is to classify similar nodes into the same clusters and dissimilar nodes in different clusters without supervision or prior knowledge of the clusters. In the experiments, node clustering is used as a downstream task to evaluate GAEPNAS.

4.4.1. Metrics of Node Clustering

The clustering results are measured using three metrics: clustering accuracy (ACC), normalized mutual information (NMI), and adjusted Rand index (ARI). ACC and NMI take values between 0 and 1, ARI is at most 1, and for all three a larger value indicates higher clustering performance. The metrics compare the ground truth classes with the clustering results of the dataset, where both partition the node set, into ground-truth classes and into clusters, respectively. All three can be computed from the sizes of the classes, the sizes of the clusters, and the sizes of their pairwise overlaps. The three metrics are defined as follows.

The ACC measures the percentage of nodes that are correctly assigned under the best matching between the clustering result and the ground-truth classes.

The NMI measures the normalized similarity between the predicted clusters and the ground truth classes [44]. It is a ratio whose numerator is the mutual information between the ground truth classes and the predicted clusters, and whose denominator is the arithmetic mean of their information entropies. If the predicted clusters coincide with the ground truth classes, NMI equals 1; if the two are completely different, NMI equals 0.

The ARI measures the similarity between the predicted clusters and the ground truth classes based on the pairwise comparison of included nodes [45].
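The three definitions above can be written out explicitly. The notation below is an assumption made for illustration and is not necessarily the paper's own: $C = \{C_1, \dots, C_k\}$ denotes the ground-truth classes, $C' = \{C'_1, \dots, C'_{k'}\}$ the predicted clusters, $n$ the number of nodes, $n_i = |C_i|$, $n'_j = |C'_j|$, and $n_{ij} = |C_i \cap C'_j|$. Under these assumptions, the standard forms of the metrics are:

```latex
\begin{align}
\mathrm{ACC} &= \frac{1}{n} \max_{\sigma} \sum_{j=1}^{k'} n_{\sigma(j)\,j},
  \qquad \sigma : \{1,\dots,k'\} \to \{1,\dots,k\} \text{ one-to-one}, \\[4pt]
\mathrm{NMI} &= \frac{I(C; C')}{\tfrac{1}{2}\bigl(H(C) + H(C')\bigr)}, \\
I(C; C') &= \sum_{i=1}^{k} \sum_{j=1}^{k'} \frac{n_{ij}}{n}
  \log \frac{n \, n_{ij}}{n_i \, n'_j}, \qquad
H(C) = -\sum_{i=1}^{k} \frac{n_i}{n} \log \frac{n_i}{n}, \\[4pt]
\mathrm{ARI} &= \frac{\sum_{i,j} \binom{n_{ij}}{2}
  - \Bigl[\sum_i \binom{n_i}{2} \sum_j \binom{n'_j}{2}\Bigr] \Big/ \binom{n}{2}}
  {\tfrac{1}{2}\Bigl[\sum_i \binom{n_i}{2} + \sum_j \binom{n'_j}{2}\Bigr]
  - \Bigl[\sum_i \binom{n_i}{2} \sum_j \binom{n'_j}{2}\Bigr] \Big/ \binom{n}{2}}.
\end{align}
```

Here $H(C')$ is defined analogously to $H(C)$, and terms with $n_{ij} = 0$ contribute zero to $I(C; C')$. The one-to-one matching in ACC presumes $k' \le k$, which holds when the number of clusters is set to the number of classes.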

4.4.2. Implementation

In the experiments, node representations are first obtained by the baseline methods and the proposed method, and then clustered into classes using the K-Means clustering algorithm. The cluster number in K-Means is set as the number of classes for each dataset. For the node clustering task, we do not use any label in the unsupervised learning process. As with the link prediction task, the dataset is randomly separated into a training set (85% edges), a validation set (5% edges), and a test set (10% edges) for each experiment. The experimental parameters of GAEPNAS are set as shown in Table 4. All parameters of the baseline methods are set according to their original papers. Each experiment is also repeated 10 times, and the averaged ACC, NMI, and ARI are determined.
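The evaluation step can be sketched in plain Python as follows. This is a minimal illustration rather than the authors' evaluation code: the function names are hypothetical, the inputs are assumed to be two equal-length lists of class and cluster labels, and the best matching for ACC is found by brute force over permutations, which is only practical for a handful of clusters (the Hungarian algorithm is the usual choice in practice).

```python
from collections import Counter
from itertools import permutations
from math import comb, log


def contingency(y_true, y_pred):
    """Count nodes per (class, cluster) pair, per class, and per cluster."""
    return Counter(zip(y_true, y_pred)), Counter(y_true), Counter(y_pred)


def acc(y_true, y_pred):
    """Fraction of nodes matched under the best one-to-one cluster-to-class map."""
    joint, classes, clusters = contingency(y_true, y_pred)
    class_ids, cluster_ids = list(classes), list(clusters)
    # Pad with None so surplus clusters can be matched to "no class".
    padded = class_ids + [None] * max(0, len(cluster_ids) - len(class_ids))
    best = max(
        sum(joint[(cls, clu)] for cls, clu in zip(perm, cluster_ids))
        for perm in permutations(padded, len(cluster_ids))
    )
    return best / len(y_true)


def entropy(counts, n):
    """Shannon entropy of a partition given its block sizes."""
    return -sum(c / n * log(c / n) for c in counts.values())


def nmi(y_true, y_pred):
    """Mutual information divided by the arithmetic mean of the entropies."""
    joint, classes, clusters = contingency(y_true, y_pred)
    n = len(y_true)
    mi = sum(
        nij / n * log(n * nij / (classes[i] * clusters[j]))
        for (i, j), nij in joint.items()
    )
    mean_h = (entropy(classes, n) + entropy(clusters, n)) / 2
    return mi / mean_h if mean_h > 0 else 1.0


def ari(y_true, y_pred):
    """Adjusted Rand index from pair counts of the contingency table."""
    joint, classes, clusters = contingency(y_true, y_pred)
    sum_ij = sum(comb(c, 2) for c in joint.values())
    sum_i = sum(comb(c, 2) for c in classes.values())
    sum_j = sum(comb(c, 2) for c in clusters.values())
    expected = sum_i * sum_j / comb(len(y_true), 2)
    max_index = (sum_i + sum_j) / 2
    if max_index == expected:
        return 1.0
    return (sum_ij - expected) / (max_index - expected)


if __name__ == "__main__":
    truth = [0, 0, 1, 1, 2, 2]
    clusters = [1, 1, 0, 0, 2, 2]  # same partition, permuted cluster ids
    print(acc(truth, clusters), nmi(truth, clusters), ari(truth, clusters))
```

Averaging the three values over the 10 repeated runs then gives the reported scores. Note that ARI can be negative when the agreement is worse than chance.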

4.4.3. Experimental Results

The experimental results of the node clustering task are presented in Table 5. It can be observed that the models that use both structure and attribute information perform significantly better than Spectral and DeepWalk, which rely solely on graph structure information. Thus, the node attribute information plays a crucial role in enhancing the node clustering performance. The proposed GAEPNAS model shows superior performance compared to the GAE-based models (GAE and VGAE, Graphite AE and VAE, ARGA and ARVGA, Linear GAE and VGAE) that only reconstruct the graph structure. It also performs better than GASN, which reconstructs both the graph structure and the node attributes. GAEPNAS incorporates the structural and attribute similarity information in the encoder and simultaneously reconstructs the graph structure and node attribute similarity, and the effective integration and complementarity of both types of information improve its node clustering performance. Thus, the GAEPNAS model outperforms the other models on all three datasets on all the metrics.

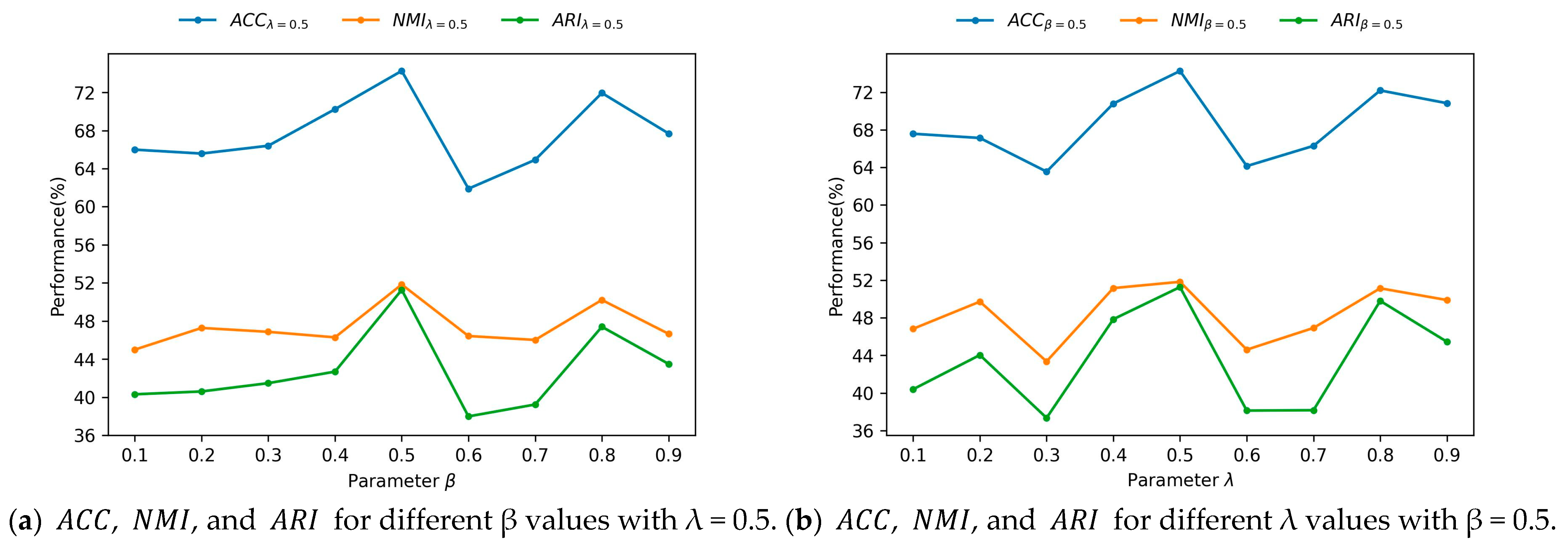

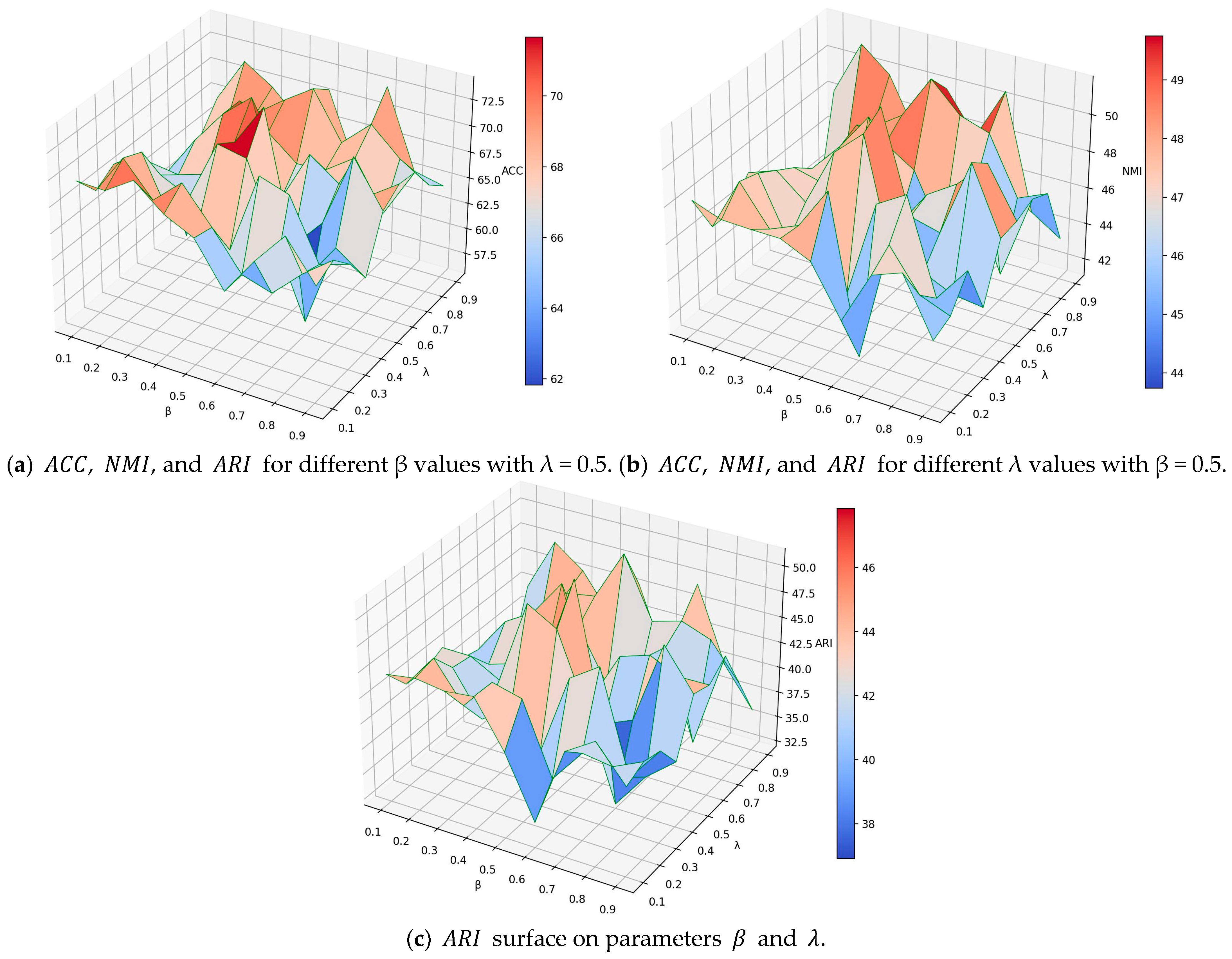

4.4.4. Parameter Analysis

In this subsection, the impact of the fusion parameter in Equation (4) and the loss parameter in Equation (12) on the node clustering results is studied for the Cora dataset. As in the previous link prediction task, both parameters are varied over the same range with an interval of 0.1. The ACC, NMI, and ARI curves of each parameter, drawn with the other parameter held fixed, are shown in Figure 4a and Figure 4b. The 3D surface plots of ACC, NMI, and ARI over both parameters are shown in Figure 5a–c, respectively. It can be observed from Figure 4a,b that the performance measured by ACC, NMI, and ARI is low at both ends and high in the middle, so the maximum values of the three metrics are achieved at intermediate parameter values. Figure 5 shows that the GAEPNAS model achieves high clustering performance over a sub-range of each parameter.

In node clustering, similar nodes are classified into the same clusters and dissimilar nodes are grouped into different clusters. Nodes in the same cluster are expected to be adjacent to each other and similar in attribute space, while nodes in different clusters are dissimilar and not adjacent. Therefore, the node attribute similarity information plays the same role in node clustering as graph structural information. As a result, it is reasonable and interpretable that the best-performing values of the two parameters lie in such intermediate ranges. As can be observed in Figure 4 and Figure 5, the performance of the proposed model is slightly sensitive to the two parameters.

4.5. Ablation Study

To further analyze the effectiveness of the various modules in the proposed model, an ablation study on the Cora and CiteSeer datasets is conducted in four different configurations: GAE (the general GAE model), GAE+KNN (adding the fusion module to the general GAE model), GAE+RecSim (adding a node attribute similarity decoder and corresponding reconstruction loss to the general GAE model), and GAEPNAS (GAE+KNN+RecSim).

The ablation experimental results are presented in Table 6 and Table 7. It can be observed that GAE has the worst results because it only preserves the structural information in the learning process. Additionally, GAE+KNN and GAE+RecSim show higher performance than GAE due to their strengthened learning of node attribute information. Furthermore, the proposed GAEPNAS model has the highest performance. This is because its learning process enhances the learning of node attribute information and emphasizes the preservation of node attribute similarity in the node representation. It can also be deduced that each module of the proposed model is effective and contributes to its high performance.

5. Discussion

The GAEPNAS model fuses the KNN graph, which represents the attribute similarity between nodes, with the original graph, then learns the node representations from the synthetic graph through a GCN encoder, and finally reconstructs the original graph structure and the attribute similarity between nodes using dual decoders, so that the nodes maintain the same attribute similarity in the embedding space as in the original space. Despite the model’s effectiveness, it has some limitations. Below, we discuss two major limitations of the model and how they can be overcome in future work.

(1) Computational scalability: In the proposed model, the node attribute similarity must be calculated for each pair of nodes in the graph in order to construct the KNN graph and reconstruct the node attribute similarity matrix, which results in computational complexity that is quadratic in the number of nodes. Thus, for large real-world graphs, this complexity is unacceptable. However, in real networks, it is difficult for two nodes to interact with each other if they are not in the same connected component, even if they are similar in node attributes, and the closer two nodes are in the network, the more interconnected they are. For example, in a citation network, researchers usually follow no more than five hops of neighbors to keep track of a paper. Therefore, in the future, in order to reduce computational overhead, we will consider computing the attribute similarity only between each node and its k-hop neighbors to avoid computing the full pairwise similarity matrix.

(2) Fusion of structural and attribute similarity information: It was experimentally discovered that our model’s performance is slightly sensitive to two parameters: the fusion parameter, which balances the fusion of the original input graph and the KNN graph, and the loss parameter, which balances the structure loss and the similarity loss. For the performance of the proposed model, the balance of structural and attribute similarity information is crucial. However, in Equation (4), the fusion parameter is a scalar, which is difficult to adapt to the requirements of complex networks. Setting the parameter as a learnable vector or matrix may be an effective way to address this. Therefore, in future work, we will attempt to make both parameters learnable in the GAEPNAS model to reach more stable performance.
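The neighborhood-restricted similarity proposed under limitation (1) can be sketched as follows. This is an illustration under stated assumptions, not the authors' planned implementation: the graph is assumed to be undirected and given as an adjacency-list dictionary with comparable node ids, cosine similarity stands in for whatever attribute similarity the model uses, and the function names are hypothetical.

```python
from collections import deque
from math import sqrt


def k_hop_neighbors(adj, source, k):
    """Return the nodes within k hops of `source` (excluding it), via BFS."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        if dist[u] == k:
            continue  # do not expand beyond k hops
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    del dist[source]
    return set(dist)


def cosine(x, y):
    """Cosine similarity of two attribute vectors (assumed similarity measure)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = sqrt(sum(a * a for a in x)) * sqrt(sum(b * b for b in y))
    return dot / norm if norm else 0.0


def local_similarity(adj, features, k):
    """Score only (node, k-hop neighbor) pairs instead of all node pairs."""
    sims = {}
    for u in adj:
        for v in k_hop_neighbors(adj, u, k):
            if u < v:  # undirected graph: store each unordered pair once
                sims[(u, v)] = cosine(features[u], features[v])
    return sims


if __name__ == "__main__":
    # Path graph 0 - 1 - 2 - 3 with two-dimensional node attributes.
    adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
    features = {0: [1.0, 0.0], 1: [1.0, 0.0], 2: [0.0, 1.0], 3: [0.0, 1.0]}
    print(local_similarity(adj, features, k=2))
```

With a small k on a sparse graph, the number of scored pairs grows with the neighborhood sizes rather than with the square of the number of nodes, which is the saving the proposal aims for.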