1. Introduction

Missing data is a recurring challenge in statistical analyses in the life sciences and in many other domains, including the information sciences. For example, patients may refuse to share sensitive details about their health. In repeated measurement designs, patients may either miss single measurements or drop out completely. Survey data may also be missing by design, where not all respondents receive the same set of questions. Generally speaking, three different missingness mechanisms are distinguished when handling missing data [1]. The missing completely at random (MCAR) mechanism assumes that missingness does not depend on the data (neither the observed nor the unobserved part). The other two mechanisms allow missingness to depend on the data. For missing at random (MAR), the missingness depends on the observed components of an observation (i.e., the dependence relation is encoded in the observed data), while for missing not at random (MNAR), the missingness depends on the unobserved components of an observation (i.e., the dependence relation is not encoded in the observed data).
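To make the three mechanisms concrete, the following Python sketch removes values of a variable y under each mechanism in a toy two-variable dataset in which a second variable x is always observed. The function name `make_missing`, the median split and the doubled drop probability are illustrative assumptions and not part of this study. The sketch only shows which quantity the drop probability is allowed to depend on.

```python
import random


def make_missing(xs, ys, mechanism, rate=0.3, seed=0):
    """Hypothetical toy helper: return a copy of ys with some entries set to None.

    x is always observed, y may become missing.
    MCAR: every y is dropped with the same probability `rate`.
    MAR:  the drop probability depends only on the observed x.
    MNAR: the drop probability depends on the value of y itself.
    Assumes rate <= 0.5 so that 2 * rate is a valid probability.
    """
    rng = random.Random(seed)
    x_med = sorted(xs)[len(xs) // 2]
    y_med = sorted(ys)[len(ys) // 2]
    out = []
    for x, y in zip(xs, ys):
        if mechanism == "MCAR":
            p = rate                              # no dependence on the data
        elif mechanism == "MAR":
            p = 2 * rate if x > x_med else 0.0    # driven by the observed x
        elif mechanism == "MNAR":
            p = 2 * rate if y > y_med else 0.0    # driven by the unobserved y
        else:
            raise ValueError(f"unknown mechanism: {mechanism}")
        out.append(None if rng.random() < p else y)
    return out
```

For example, `make_missing(xs, ys, "MAR")` never removes y for rows whose x lies at or below the median of x. Under MNAR, the rule refers to y itself, which is exactly the information that is lost once the value is missing.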

The common practice of listwise deletion (i.e., deleting observations that contain at least one missing value) reduces the sample size and thus leads to a loss of information. Depending on the (usually unknown) missing mechanism that governs the occurrence of the missing values, it may also bias the results. As a remedy, missing data is often imputed through different techniques, such as simple mean or mode imputation, or advanced imputation methods such as MICE [2,3] or techniques stemming from machine learning (ML) [4,5,6,7]. However, data imputation may also affect the quality and validity of prediction or inference from the resulting models [3,8,9,10,11]. It is therefore crucial to analyze the extent to which imputation methods influence subsequent regression or classification models obtained from imputed data. In the context of classification, a few studies have compared the performance of different imputation methods [12,13,14,15]. Most notable for our purposes, due to the variety of imputation and classification methods considered, is the study by Farhangfar et al. [12], who compared six different imputation methods w.r.t. the predictive performance of five classifiers. As imputation methods, the authors used Hot Deck imputation, Naive Bayes imputation, mean imputation and a polytomous regression-based imputation method. Additionally, the former two methods were embedded within a custom imputation framework meant to improve upon the performance of their standalone counterparts. Although the authors found that imputation generally improves performance, no imputation method consistently outperformed its competitors. Since their 2008 study, new imputation methods have been suggested, for example, in [4,6]. In particular, tree-based ML approaches have shown improvements in accuracy for regression problems [10,11,16]. For example, Ramosaj et al. [10] recently analyzed how different imputation methods influence the subsequent predictive performance of linear regression and tree-based ML approaches. In their work, they found a certain preference for the Random Forest-based missForest [4] imputation method or a MICE [2] model based on Bayesian linear regression.

In light of their findings for the regression context, we investigated whether similar conclusions regarding the performance of the different imputation methods can be drawn for classification problems. Compared to previous studies in the classification context, we used a more modern suite of imputation algorithms, namely missForest and MICE, and further considered MCAR as well as MAR missing mechanisms with varying missing patterns. In the next section, we describe our simulation set-up in more detail. We report our results in Section 3 and follow up with a discussion in Section 4.

2. Materials and Methods

For our analysis, we used six binary classification problems from the life sciences. Table 1 provides an overview of the datasets, including the number of observations and features as well as a short description of the target variable and the features (covariates) used for prediction. The datasets Phoneme and Pima Indians were obtained from the Open Machine Learning Project [17], while the datasets Haberman, Skin [18], SPECT and Wilt [19] were obtained from the UCI Machine Learning Repository [20]. Except for the Skin dataset, we used the original datasets as provided. The Skin dataset originally contained 245,057 observations. In our analysis, we used a random 5% sample in which the original class balance was preserved through stratified sampling. None of the original datasets included any missing values. To limit the scope of our investigation, we focused only on datasets with numerical features.
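A stratified subsample of this kind can be sketched in Python as follows. The helper `stratified_sample` is hypothetical, since the study's own sampling was presumably done in R. Rounding the per-class sample size is an assumption. The helper keeps the same fraction of rows in every class, so class proportions are preserved up to rounding.

```python
import random
from collections import defaultdict


def stratified_sample(labels, fraction, seed=0):
    """Hypothetical helper: sorted row indices of a stratified random subsample.

    Every class contributes round(fraction * class_size) rows, drawn without
    replacement, so class proportions match the original up to rounding.
    """
    rng = random.Random(seed)
    rows_by_class = defaultdict(list)
    for i, label in enumerate(labels):
        rows_by_class[label].append(i)
    chosen = []
    for rows in rows_by_class.values():
        chosen.extend(rng.sample(rows, round(fraction * len(rows))))
    return sorted(chosen)
```

For instance, with 800 rows of class 0 and 200 rows of class 1, a 5% sample contains 40 and 10 rows, respectively.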

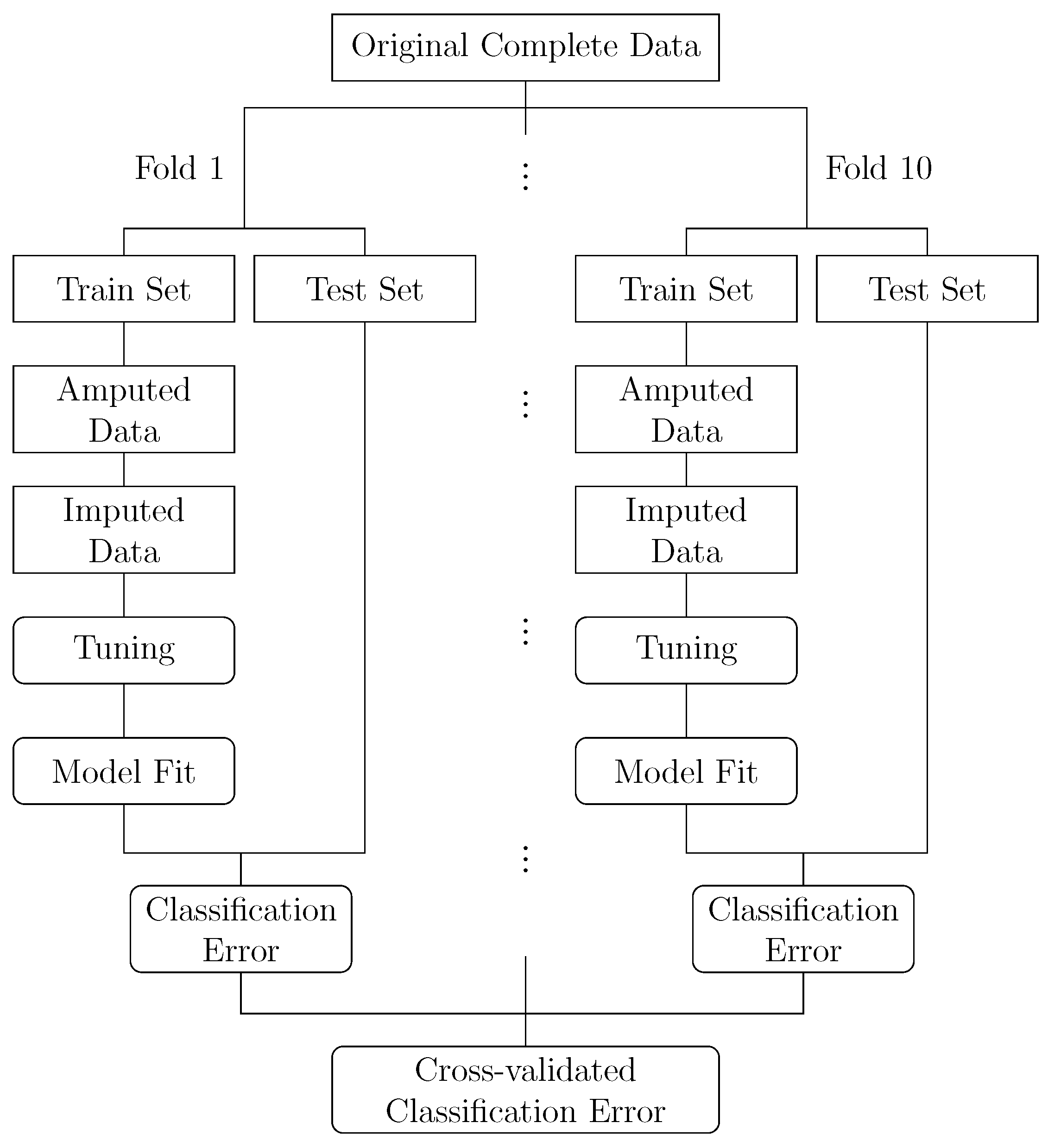

The general flow of our analysis is depicted in Figure 1. Starting from an original complete dataset, we generated ten train/test partitions as in a 10-fold cross-validation. In each fold, we generated missing values in the feature data of the respective training set. To this end, we used the ampute function from the mice R package [2], which implements the multivariate amputation procedure proposed in [21]. We set the proportion of missing values to 10%, 30% or 50%, that is, we set 10%, 30% or 50% of the original feature data as missing, respectively. The missingness was generated via an MCAR mechanism as well as different MAR mechanisms. One key component of the amputation procedure is the ability to specify a missingness pattern that governs which set of features may contain missing values and which set of features is kept complete. This allows for creating flexible missingness scenarios. To study different MAR mechanisms, we specified three missingness patterns that vary in the number of features that may contain missing values and thus cover diverse scenarios. First, a pattern where, for any given observation, only one feature value at a time could be set missing. Second, a pattern where missing values could only occur in the middle third of the features. Third, a pattern where missing values could only occur in the first and last third of the features. We will refer to these three MAR patterns as the One at a Time, One Third and Two Thirds patterns, respectively.
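The three patterns and the general idea of MAR amputation can be sketched as follows. This is a deliberately simplified illustration, not the algorithm behind ampute. The helper names, the cut points of the thirds (p // 3 and 2p // 3), the sum score and the hard threshold that selects the highest-scoring rows are all assumptions. In the pattern vectors, 0 marks a feature that may be set missing and 1 marks a feature that is kept complete. A row's score only uses the features that its pattern keeps complete, so the resulting missingness depends on observed values only and is therefore MAR. Note that `prop` here is the share of incomplete rows, not the share of missing cells used in the study.

```python
import random


def missingness_patterns(p, kind):
    """Hypothetical helper: 0/1 pattern vectors over p features (0 = may be missing).

    The thirds are cut at p // 3 and 2 * p // 3 (an assumption of this sketch).
    """
    a, b = p // 3, (2 * p) // 3
    if kind == "one_at_a_time":
        return [[0 if j == i else 1 for j in range(p)] for i in range(p)]
    if kind == "one_third":
        return [[0 if a <= j < b else 1 for j in range(p)]]
    if kind == "two_thirds":
        return [[1 if a <= j < b else 0 for j in range(p)]]
    raise ValueError(f"unknown pattern kind: {kind}")


def ampute_mar(rows, patterns, prop, seed=0):
    """Simplified MAR amputation (hypothetical; not the mice::ampute algorithm).

    Each row gets a random pattern. Its score is the sum of its values in the
    features that the pattern keeps observed. The `prop` share of rows with the
    highest scores has the pattern's 0-features set to None.
    """
    rng = random.Random(seed)
    assigned = [rng.choice(patterns) for _ in rows]
    scores = [sum(v for v, keep in zip(row, pat) if keep)
              for row, pat in zip(rows, assigned)]
    n_incomplete = round(prop * len(rows))
    order = sorted(range(len(rows)), key=lambda i: scores[i], reverse=True)
    hit = set(order[:n_incomplete])
    return [[None if (i in hit and not keep) else v for v, keep in zip(row, pat)]
            for i, (row, pat) in enumerate(zip(rows, assigned))]
```

With six features, for example, the One Third pattern is `[1, 1, 0, 0, 1, 1]` and the Two Thirds pattern is `[0, 0, 1, 1, 0, 0]`.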

Having introduced missingness into the training set, we then imputed the missing values using three MICE [3] algorithms as well as missForest [4], Hot Deck imputation [22] and naive mean imputation. The three MICE algorithms were based on Bayesian linear regression (denoted as MICE Norm), Predictive Mean Matching (denoted as MICE PMM) and Random Forest (denoted as MICE RF). The MICE algorithms and missForest share the concept of treating the imputation of a feature containing missing values as a prediction problem. In this prediction problem, the respective feature acts as the target variable and is predicted using the remaining features. Usually, some model (e.g., a linear model or a decision tree) is learned on the subset of the data for which the respective feature was observed. This idea is fleshed out in different ways by the different imputation methods.
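The shared idea of treating imputation as prediction can be illustrated with a minimal Python sketch. It assumes a single fully observed predictor and uses an ordinary least-squares line as the model. The helper `regression_impute` is hypothetical and deterministic. MICE Norm additionally draws the model parameters from their posterior, and missForest uses Random Forests on all remaining features instead.

```python
def regression_impute(target, predictor):
    """Hypothetical helper: fill None entries of `target` from a fully observed `predictor`.

    Fits y = a + b * x by least squares on the rows where the target is
    observed, then replaces each missing entry with its fitted value.
    """
    obs = [(x, y) for x, y in zip(predictor, target) if y is not None]
    n = len(obs)
    mx = sum(x for x, _ in obs) / n
    my = sum(y for _, y in obs) / n
    sxx = sum((x - mx) ** 2 for x, _ in obs)
    sxy = sum((x - mx) * (y - my) for x, y in obs)
    b = sxy / sxx          # slope learned on the observed subset only
    a = my - b * mx        # intercept
    return [a + b * x if y is None else y for x, y in zip(predictor, target)]
```

For example, `regression_impute([3, None, 7, None, 11], [1, 2, 3, 4, 5])` learns the line y = 1 + 2x from the three observed rows and returns `[3, 5.0, 7, 9.0, 11]`.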

MICE Norm is based on Rubin’s [23] imputation method under the assumption of normality. The parameters of the linear model are sampled from their respective posterior distribution, which is estimated using the observed data [3]. MICE PMM builds on this by selecting a set of candidate donors (five by default) from the observed data whose predicted values are closest to the prediction for the missing data point, as obtained from the Bayesian linear model. From this set of candidates, one donor is then chosen at random. Thus, PMM only imputes values that were actually observed and consequently does not suffer from the issue of out-of-range imputation [3]. For more details on the matching procedure, see [24].
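The donor-selection step of PMM can be sketched as follows. The sketch assumes that the model predictions for the observed cases (`yhat_obs`) and for the missing case (`yhat_mis`) have already been computed, for example by the Bayesian linear model. The function and argument names are hypothetical. The returned value is always taken from `y_obs`, which shows why PMM cannot produce out-of-range imputations.

```python
import random


def pmm_draw(yhat_obs, y_obs, yhat_mis, donors=5, rng=None):
    """Hypothetical sketch of predictive mean matching for one missing entry.

    yhat_obs: model predictions for the cases where the feature is observed
    y_obs:    the corresponding observed values
    yhat_mis: model prediction for the case that needs an imputation
    """
    rng = rng if rng is not None else random.Random()
    # rank observed cases by how close their *predicted* value is to yhat_mis
    ranked = sorted(range(len(y_obs)), key=lambda i: abs(yhat_obs[i] - yhat_mis))
    candidates = ranked[:donors]
    # draw one donor at random and impute its *observed* value
    return y_obs[rng.choice(candidates)]
```

With `donors=5`, the sketch matches the default number of candidates described above for MICE PMM. Passing `donors=3` corresponds to the three candidates used for the missForest PMM variant in this study.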

MICE RF and missForest fall into the category of tree-based imputation methods. MICE RF is based on the algorithm proposed by Doove et al. [25], in which k individual tree models are fit on bootstrap samples of the observed data. The data point requiring imputation is then passed down each tree and ends up in one terminal node per tree. For each of these terminal nodes, one donor is sampled at random from all observations belonging to the node, resulting in a set of k donors overall. Out of this set, one donor is chosen at random for the imputation. All MICE methods share the concept of multiple imputation: to account for the variability of the imputation process due to the probabilistic nature of the methods, multiple imputed datasets are created. Typically, the subsequent analysis is performed on each dataset and the respective model results are pooled. Because we did not analyze uncertainty or perform inference, we aggregated the imputed datasets into a single combined dataset. This also limited the computational complexity and kept our simulation setup consistent. The aggregation was done by averaging numeric features and selecting the mode for categorical features.
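The aggregation step used here in place of pooling can be sketched as follows. `aggregate_imputations` is a hypothetical helper that operates on m imputed copies stored as lists of rows of equal shape. Numeric cells are averaged across copies, and all other cells take their most frequent value. Breaking ties by first occurrence is an assumption of this sketch.

```python
from collections import Counter
from statistics import mean


def aggregate_imputations(datasets):
    """Hypothetical helper: combine m imputed copies of one dataset into one.

    Numeric cells are averaged across the copies; all other cells get their
    most frequent value (ties go to the value seen first).
    """
    combined = []
    for rows in zip(*datasets):          # the same row in every copy
        new_row = []
        for cells in zip(*rows):         # the same cell in every copy
            if all(isinstance(c, (int, float)) for c in cells):
                new_row.append(mean(cells))
            else:
                new_row.append(Counter(cells).most_common(1)[0][0])
        combined.append(new_row)
    return combined
```

With five imputations, as used for MICE here, each numeric cell of the combined dataset is the mean of five imputed values. Averaging like this discards the between-imputation spread that pooling would retain.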

In contrast to MICE RF, missForest uses Random Forests to iteratively improve upon an initial imputation guess. The algorithm repeatedly cycles through all features that originally contained missing values. In each pass, it updates their imputations by fitting Random Forest models and obtaining new predictions for the missing entries. The features used for prediction in a given step may themselves contain imputed values that were improved upon in previous steps, so the procedure gradually refines its imputations. Another difference between MICE and missForest is that the latter does not use multiple imputation.
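The iterative refinement behind missForest can be sketched in plain Python. Several simplifications keep the example short and dependency-free. A k-nearest-neighbour regressor is swapped in for the Random Forest. The loop runs a fixed number of iterations instead of using a convergence-based stopping rule. All names are hypothetical. As in the description above, the sketch starts from mean imputation and then cycles through the incomplete features, whose predictors may themselves hold earlier imputations.

```python
from statistics import mean


def knn_predict(train_X, train_y, x, k):
    """Average target of the k training rows closest to x (squared Euclidean)."""
    dist = [sum((a - b) ** 2 for a, b in zip(row, x)) for row in train_X]
    nearest = sorted(range(len(train_X)), key=dist.__getitem__)[:k]
    return mean(train_y[i] for i in nearest)


def iterative_impute(data, max_iter=3, k=3):
    """Hypothetical missForest-style chained imputation, k-NN instead of Random Forest.

    `data` is a list of numeric rows in which None marks a missing value.
    Returns a completed copy; observed values are never changed.
    """
    n, p = len(data), len(data[0])
    miss = [[v is None for v in row] for row in data]
    # initial guess: mean of the observed values in each column
    col_means = [mean(row[j] for row in data if row[j] is not None) for j in range(p)]
    X = [[col_means[j] if miss[i][j] else data[i][j] for j in range(p)]
         for i in range(n)]
    # incomplete columns, visited from least to most missing values
    cols = sorted((j for j in range(p) if any(m[j] for m in miss)),
                  key=lambda j: sum(m[j] for m in miss))
    for _ in range(max_iter):
        for j in cols:
            others = [c for c in range(p) if c != j]
            observed = [i for i in range(n) if not miss[i][j]]
            train_X = [[X[i][c] for c in others] for i in observed]
            train_y = [X[i][j] for i in observed]
            for i in range(n):
                if miss[i][j]:
                    # the predictors may hold imputations from earlier steps
                    X[i][j] = knn_predict(train_X, train_y, [X[i][c] for c in others], k)
    return X
```

Setting `max_iter=3` mirrors the maximum number of chaining iterations used for missForest in this study. The learner itself is a stand-in.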

Last, Hot Deck imputation obtains imputations by sampling from the set of observed values. Through proximity-based weighting, observations that are similar to the observation requiring imputation have a higher chance of being selected as the donor.

For imputing with Hot Deck imputation, MICE and missForest in R (version 4.0.0; [26]), we used the hot.deck package [27], the mice package [2] and the missRanger package [6], respectively. The latter additionally supports PMM and is a faster implementation of missForest, since it uses the computationally efficient ranger package [28]. For MICE, we used the Bayesian linear regression (MICE Norm), Predictive Mean Matching (MICE PMM) and Random Forest (MICE RF) variants. For missForest, we included both a non-PMM and a PMM variant. The PMM variant sampled the imputed value from three candidate non-missing values. We used default values for the MICE and missForest settings, except for the number of trees and the maximum number of chaining iterations of missForest, which we set to 100 and 3, respectively. The number of multiple imputations for MICE was five, as per default.

Having imputed the missing values, we continued with the task of classification. As classifiers, we used Elastic Net regularized logistic regression (denoted EN-LR in the following), Random Forest (RF), Support Vector Machine (SVM) and Extreme Gradient Boosting (XGBoost). All ML experiments were performed with the mlr package, which provides a unified interface for ML-based analysis in R. For our classifiers, we used the glmnet package [29] for EN-LR, the ranger package [28] for RF, the e1071 package [30] for (radial basis) SVM and the xgboost package [31] for XGBoost.

All of these learners have individual sets of hyperparameters that must be specified in advance. Their optimal choice is problem-dependent and is approximated via hyperparameter tuning. Incorporating tuning into a benchmark experiment of different ML algorithms requires a nested resampling approach, where tuning is performed in the inner and validation in the outer resampling loop. Otherwise, tuning and validating on overlapping data samples may lead to optimistic error estimates due to overfitting [32]. Thus, we performed an additional 3-fold cross-validation for hyperparameter tuning on the respective imputed training sets. Table A1 shows the respective hyperparameters and search spaces considered for tuning via a random search with 30 iterations. For hyperparameters that were not tuned, we used the default values.

After tuning, the classification models were learned on the entire imputed training set using the optimal hyperparameter settings and validated on the test set. For each fold, this yielded a classification performance as measured by the Mean Misclassification Error (MMCE), that is, the proportion of misclassified instances among all instances. Averaging over the fold-specific performances resulted in an overall cross-validated classification performance on which further comparisons are based. For each combination of dataset, imputation method and learner, we performed 100 replications.
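The nested resampling scheme can be sketched in plain Python. A toy one-dimensional k-nearest-neighbour classifier stands in for EN-LR, RF, SVM and XGBoost, and its number of neighbours k is the only tuned hyperparameter. All names, the search range 1 to 15 and the toy data are assumptions. In the study, tuning and resampling were handled by mlr. The sketch mirrors the structure described above in three steps:

- a random search with 30 candidates, scored by inner 3-fold cross-validation;
- a refit on the whole outer training part;
- the MMCE, averaged over ten outer folds.

```python
import random


def knn_classify(train, x, k):
    """Hypothetical toy learner: majority vote of the k nearest points; ties go to 0."""
    nearest = sorted(train, key=lambda pt: abs(pt[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > len(nearest) else 0


def mmce(train, test, k):
    """Mean misclassification error: share of wrongly classified test points."""
    wrong = sum(knn_classify(train, x, k) != y for x, y in test)
    return wrong / len(test)


def split(data, n_folds, rng):
    """Shuffle the data and return n_folds (train, test) pairs."""
    idx = list(range(len(data)))
    rng.shuffle(idx)
    parts = [idx[f::n_folds] for f in range(n_folds)]
    return [([data[i] for g, part in enumerate(parts) if g != f for i in part],
             [data[i] for i in parts[f]])
            for f in range(n_folds)]


def nested_cv(data, outer=10, inner=3, n_iter=30, k_max=15, seed=0):
    """Cross-validated MMCE with k tuned by random search in an inner CV loop."""
    rng = random.Random(seed)
    outer_errors = []
    for train, test in split(data, outer, rng):
        inner_splits = split(train, inner, rng)                      # fixed inner folds
        candidates = [rng.randint(1, k_max) for _ in range(n_iter)]  # random search
        # sum of inner-fold errors has the same minimizer as their mean
        best_k = min(candidates,
                     key=lambda k: sum(mmce(tr, te, k) for tr, te in inner_splits))
        outer_errors.append(mmce(train, test, best_k))  # refit on full outer train
    return sum(outer_errors) / outer
```

In the study, amputation and imputation are applied to each outer training part before the inner loop runs. Repeating the whole procedure with different seeds corresponds to the 100 replications.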

3. Results

Table 2 shows the mean ranks of the imputation methods based on the MMCE achieved by the respective classifiers over the 100 replications under an MCAR mechanism. For each row in the table, the best (i.e., lowest) mean rank is indicated by bold font and a grey-colored cell. Similar tables for the standard deviation of the MMCE values in all scenarios are provided in the Appendix (Table A2, Table A3, Table A4 and Table A5). As the observed variability is low and homogeneous across the imputation methods, we focus only on the MMCE ranks from now on. It can be seen that the optimal imputation method varied across classifiers.
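The mean ranks reported in the tables can be computed as sketched below. The sketch assumes that the imputation methods are ranked by MMCE within each replication, for a fixed dataset, classifier and missingness proportion, and that the ranks are then averaged over replications. Giving tied methods the average of their ranks is an assumption of this sketch, as are the helper names.

```python
def ranks(values):
    """Ranks with 1 = smallest; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=values.__getitem__)
    result = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1      # average of the 1-based ranks pos+1 .. end+1
        for i in order[pos:end + 1]:
            result[i] = avg
        pos = end + 1
    return result


def mean_ranks(mmce_by_method):
    """Hypothetical helper: method name -> MMCE list (one per replication) -> mean rank."""
    methods = list(mmce_by_method)
    n_rep = len(mmce_by_method[methods[0]])
    totals = dict.fromkeys(methods, 0.0)
    for r in range(n_rep):
        rep_ranks = ranks([mmce_by_method[m][r] for m in methods])
        for m, rk in zip(methods, rep_ranks):
            totals[m] += rk
    return {m: totals[m] / n_rep for m in methods}
```

A lower mean rank means that a method tended to yield a lower MMCE than its competitors across replications. The rank does not say by how much the MMCE was lower.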

For RF, SVM and XGBoost, MICE RF performed best overall by leading to the lowest mean MMCE ranks in more scenarios than the other imputation methods. For SVM, MICE PMM and missForest PMM were close in performance to MICE RF. In contrast to the other classifiers, MICE RF did not perform as well in the case of EN-LR. Instead, MICE PMM and mean imputation performed slightly better than the other imputation methods. Except for XGBoost, where MICE RF slightly suffered from the increased proportion of missing values while mean imputation benefitted from it, the proportion of missing values did not noticeably affect the results for RF, SVM and EN-LR. Overall, Hot Deck, MICE Norm and missForest imputation were less competitive in the MCAR case.

For the One at a Time MAR pattern, Table 3 shows that some of the results from the MCAR case carried over. MICE RF again performed well for RF, SVM and XGBoost, winning about a third to a half of the scenarios. For XGBoost, however, MICE PMM and missForest performed similarly well. For EN-LR, the results were again less clear-cut, with MICE PMM, MICE Norm and mean imputation competing similarly for the best performance. Overall, Hot Deck and missForest PMM imputation were not as competitive for this missing pattern. MICE Norm was only competitive for classification with EN-LR and fell behind for the other classifiers.

When using the One Third pattern for MAR missingness, Table 4 shows that missForest clearly outperformed the other imputation methods for classification with RF, SVM and XGBoost. For these three classifiers, missForest was consistently optimal for almost all missingness proportions on the Phoneme, Skin and Wilt datasets. The results were more mixed for EN-LR, where MICE Norm and MICE RF performed slightly better than the other imputation methods. Under the MCAR mechanism and the One at a Time MAR pattern, Hot Deck imputation fell behind in almost all scenarios. Here, in contrast, it regularly achieved the lowest mean MMCE ranks on the Haberman dataset.

Finally, Table 5 displays the results for the MAR missing mechanism using the Two Thirds pattern. In most scenarios, either missForest or mean imputation led to the lowest mean MMCE rank. For EN-LR, missForest and mean imputation performed similarly. When using RF, mean imputation was optimal for nearly all combinations of dataset and missingness proportion. The results for SVM and XGBoost were split, with missForest and mean imputation each winning about a third of all scenarios. The results for EN-LR and XGBoost were sensitive to the missingness proportion: for both classifiers, mean imputation benefited similarly from an increased proportion of missing values. Apart from missForest and mean imputation, as well as MICE Norm for EN-LR, the remaining imputation methods were seldom competitive.

4. Discussion

In this work, we studied the effect of imputation on the classification error under different missing mechanisms and missing proportions. To this end, we compared seven imputation methods, namely Hot Deck imputation, MICE Norm, MICE RF, MICE PMM, missForest, missForest PMM and mean imputation. As classifiers, we used EN-LR, RF, SVM and XGBoost. In our simulation study, we found that the optimal imputation method depended on the classifier, the missing mechanism and the missingness pattern.

For an MCAR mechanism, we found that imputation via MICE RF worked best for RF, SVM and XGBoost. For EN-LR, the results were more mixed. Among the three MAR missing patterns we studied (One at a Time, One Third and Two Thirds), the results for the One at a Time pattern resembled the MCAR results the most. Since, for this pattern, only one feature value at a time could be missing for any given observation, the range of dependency structures that can arise is limited. Compared to the other two patterns, this situation is most similar to the MCAR mechanism, where no dependency structure is present. Further, the One at a Time pattern was allowed to vary w.r.t. the feature selected to contain the missing value. The One Third and Two Thirds patterns, in contrast, had a fixed set of features where missing values could occur (i.e., the middle third, or the first and last third, respectively). The One at a Time pattern therefore leads to more uncertainty. As such, the results for MCAR and One at a Time MAR are plausible, because MICE is designed to handle imputation uncertainty through multiple imputation. The missForest method, on the other hand, does not use multiple imputation. Accordingly, it performed better in scenarios that involved less uncertainty, that is, under the One Third and Two Thirds patterns, where it was optimal for many combinations of dataset, classifier and missing proportion.

Concerning practical insights, our study showed that RF-based imputation worked well under all MCAR and MAR missing mechanisms considered here. However, whether MICE RF or missForest was optimal depended on the missing mechanism and pattern, which should be considered when choosing between them. Even though the missing mechanism is generally unknown in practice, it is often feasible to form some assumptions based on the data-generating process. In most scenarios, the underlying missingness will seldom follow a true MCAR mechanism. Potential patterns of missingness can also be gauged through an exploratory analysis of the presence and frequency of missing values. Alternatively, we found mean imputation to be a viable option when many features contained missing values. However, there might be a caveat to this finding. We did not specifically simulate the data and its distribution, so we did not explicitly examine cases in which the assumptions of mean imputation are violated or challenged. Our findings in this regard might have benefited from studying classification as opposed to regression tasks. Future research should examine the impact of missing values and mean imputation on heavily skewed features, for instance.
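A first exploratory look at missingness can be sketched as follows. The hypothetical helper `missingness_summary` counts missing values per feature and tallies how often each distinct missingness pattern occurs (1 = observed, 0 = missing). Frequent patterns that are confined to a fixed subset of features may hint at structured rather than completely random missingness. Such counts alone, however, cannot distinguish MAR from MNAR.

```python
from collections import Counter


def missingness_summary(rows):
    """Hypothetical helper: per-feature missing counts and counts of each
    distinct missingness pattern (1 = observed, 0 = missing)."""
    p = len(rows[0])
    per_feature = [sum(row[j] is None for row in rows) for j in range(p)]
    patterns = Counter(tuple(int(v is not None) for v in row) for row in rows)
    return per_feature, patterns
```

For example, if most incomplete rows share the pattern `(1, 0, 1)`, the missing values concentrate in the second feature. It may then be worth checking whether their occurrence relates to the observed values of the other features.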

As for the MICE results, it should be noted that our approach of aggregating the imputed datasets did not make use of MICE's inherent advantage of accounting for the between-imputation variability. MICE achieves this by performing the model analysis on the individual imputed datasets and subsequently pooling the resulting models. When performing inference or studying uncertainty, this step is crucial, as otherwise the resulting standard errors are overconfident and Type I error rates are inflated. As we were only interested in studying classification errors, we opted for data aggregation to keep the simulation setup consistent across all imputation methods and to limit the computational complexity (as each imputed dataset would have required a costly individual hyperparameter tuning step). However, some of the MICE methods were not as competitive in our simulation study. Future work should therefore study (if computational resources permit) whether fitting and averaging classification models on the individual imputed datasets leads to different results regarding the classification error.

Future work could also include listwise deletion as a benchmark method. We have refrained from using it here since the nested resampling approach resulted in small data subsets in the inner cross-validation folds and reducing the sample sizes even more through listwise deletion led to numerical issues with the logistic regression classifier on the smaller datasets from our simulation. Thus, we have decided to exclude this benchmark method for reasons of consistency.

Overall, our results indicated the importance of considering not only the missing mechanism when imputing, but also the pattern of missingness. The imputation methods were quite sensitive to the choice of pattern, which warrants further research into the effect of missingness patterns on imputation quality. This also includes studying more realistic missingness patterns. In our simulation study, the distinction between features that could contain missing values and those that could not was imposed by their order in the dataset (e.g., missing values could only occur in the middle third of the features). In theory, this may occur in survey scenarios where some of the questions are blocked for certain respondents (e.g., through filter questions). This design choice helped to standardize the simulation process across the different datasets. However, it did not realistically reflect the common situation in which the value of one feature regulates the probability of missingness for another feature. For example, in an online survey, older people may have higher probabilities of missing answers or not completing the survey, since they may be more challenged by its technical aspects than younger participants. In another example, in-person measurements could be affected by the place of residence: respondents living far away might be more inclined to miss measurements due to long travel times or insufficient public transportation options. Future work could design missingness patterns that better reflect such phenomena and are thus more realistic. Instead of randomly selecting the features that may contain missing values, one could also study how imputation is affected when missingness is induced in “important” features (as measured by variable importance measures, for example).
Furthermore, to limit the scope of our analysis, we only considered datasets with numerical features. It would be interesting to study whether imputation for categorical features yields different results. This may also affect the performance of Hot Deck imputation, which is better suited to categorical features. To conclude, our work showed two things. First, modern RF-based imputation methods such as MICE RF or missForest may be favorable in terms of subsequent classification accuracy. Second, basing the choice of imputation method on the context in which it is to be used may lead to improved classification performance.