Posterior Averaging Information Criterion

Abstract

1. Introduction

- Which of the models best explains a given set of data?

- Which of the models yields the best predictions for future observations from the same process that generated the given set of data?

2. Kullback–Leibler Divergence and Model Selection
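The link between K-L divergence and model selection rests on the identity KL(f‖g) = E_f[log f(Y)] − E_f[log g(Y)]. The first term is the same for every candidate g, so ranking candidates by their expected log density E_f[log g(Y)] under future data is the same as ranking them by K-L divergence. The minimal Python sketch below (NumPy assumed) illustrates this. The true process f = N(0, 1) and the candidates g1 and g2 are hypothetical choices made only for illustration.

```python
import numpy as np


def kl_normal(mu_f, sd_f, mu_g, sd_g):
    """Closed-form KL(f || g) for f = N(mu_f, sd_f^2) and g = N(mu_g, sd_g^2)."""
    return np.log(sd_g / sd_f) + (sd_f**2 + (mu_f - mu_g) ** 2) / (2.0 * sd_g**2) - 0.5


def log_normal_pdf(x, mu, sd):
    """Log density of N(mu, sd^2) evaluated at x."""
    return -0.5 * np.log(2.0 * np.pi * sd**2) - (x - mu) ** 2 / (2.0 * sd**2)


def compare_candidates(candidates, mu_f=0.0, sd_f=1.0, n_mc=200_000, seed=0):
    """Return {name: (exact KL(f || g), Monte Carlo estimate of E_f[log g(Y)])}."""
    rng = np.random.default_rng(seed)
    y_new = rng.normal(mu_f, sd_f, size=n_mc)  # future data from the true process f
    out = {}
    for name, (mu_g, sd_g) in candidates.items():
        kl = kl_normal(mu_f, sd_f, mu_g, sd_g)
        elpd = log_normal_pdf(y_new, mu_g, sd_g).mean()  # E_f[log g(Y)]
        out[name] = (float(kl), float(elpd))
    return out


# Hypothetical candidates: g1 has a shifted mean, g2 an inflated spread.
results = compare_candidates({"g1": (0.2, 1.0), "g2": (0.0, 1.5)})
for name, (kl, elpd) in results.items():
    print(f"{name}: KL = {kl:.4f}, E_f[log g] ~ {elpd:.4f}")
```

Here g1 has the smaller K-L divergence (0.02 versus about 0.128). It therefore also has the larger expected log density, which is the quantity that remains estimable when f is unknown.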

3. Posterior Averaging Information Criterion

3.1. Rationale and the Proposed Method

1. …

2. The BPIC cannot be calculated when the prior distribution is degenerate. This is a common situation in Bayesian analysis when an objective non-informative prior is selected. For example, if we use a non-informative prior for the mean parameter of the normal distribution in Section 4.1, the values of … and … in Equation (3) are undefined.
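The following worked equation illustrates the degenerate-prior case. It assumes a known variance σ² and the flat prior on the mean μ; these are our illustrative assumptions, and the exact setting of Section 4.1 may differ. The prior cannot be normalized, yet the posterior is a proper normal distribution:

```latex
\pi(\mu) \propto 1, \qquad \int_{-\infty}^{\infty} \pi(\mu)\, d\mu = \infty,
\qquad
p(\mu \mid y) \propto \prod_{i=1}^{n} \exp\!\left\{-\frac{(y_i-\mu)^2}{2\sigma^2}\right\}
\propto \exp\!\left\{-\frac{n(\mu-\bar{y})^2}{2\sigma^2}\right\},
\quad\text{so}\quad \mu \mid y \sim N\!\left(\bar{y},\, \sigma^2/n\right).
```

The second proportionality uses the decomposition Σ(y_i − μ)² = Σ(y_i − ȳ)² + n(ȳ − μ)². Because π(μ) is not a normalizable density, any term that requires the value of the normalized log prior density has no well-defined value. Quantities computed only from the posterior remain available.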

- C1: Both the log density function and the log unnormalized posterior density are twice continuously differentiable in the compact parameter space Θ;

- C2: The expected posterior mode is unique in Θ;

- C3: The Hessian matrix of … is non-singular at ….
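Conditions C2 and C3 concern the expected posterior mode, which cannot be computed from a single data set. The Python sketch below is only a rough sample analogue. It finds the posterior mode of a logistic regression by Newton's method and checks that the Hessian of the log unnormalized posterior at that mode is negative definite, and hence non-singular. The N(0, τ²I) prior with τ² = 10, the simulated design, and the coefficients are hypothetical assumptions that do not come from the source.

```python
import numpy as np


def log_post_grad_hess(beta, X, y, tau2):
    """Gradient and Hessian of the log unnormalized posterior of a logistic
    regression with an assumed N(0, tau2 * I) prior on the coefficients."""
    p = 1.0 / (1.0 + np.exp(-X @ beta))
    grad = X.T @ (y - p) - beta / tau2
    hess = -(X.T * (p * (1.0 - p))) @ X - np.eye(X.shape[1]) / tau2
    return grad, hess


def posterior_mode(X, y, tau2=10.0, tol=1e-10, max_iter=100):
    """Newton's method; the log posterior is strictly concave here, so the mode is unique."""
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        grad, hess = log_post_grad_hess(beta, X, y, tau2)
        step = np.linalg.solve(hess, grad)
        beta = beta - step  # Newton update: beta - H^{-1} g
        if np.max(np.abs(step)) < tol:
            break
    return beta, log_post_grad_hess(beta, X, y, tau2)[1]


# Hypothetical simulated data: intercept plus two standard-normal covariates.
rng = np.random.default_rng(1)
n = 200
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-X @ np.array([-0.5, 1.0, 0.0]))))

mode, hess = posterior_mode(X, y)
eigvals = np.linalg.eigvalsh(hess)
print("posterior mode:", mode)
print("Hessian negative definite (hence non-singular):", bool(np.all(eigvals < 0)))
```

With a proper Gaussian prior, the log posterior is strictly concave, so the check always succeeds. With a flat prior, the same check can fail, for example under complete separation of the responses.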

3.2. Relevant Methods for the Posterior Averaged K-L Discrepancy

4. Simulation Study

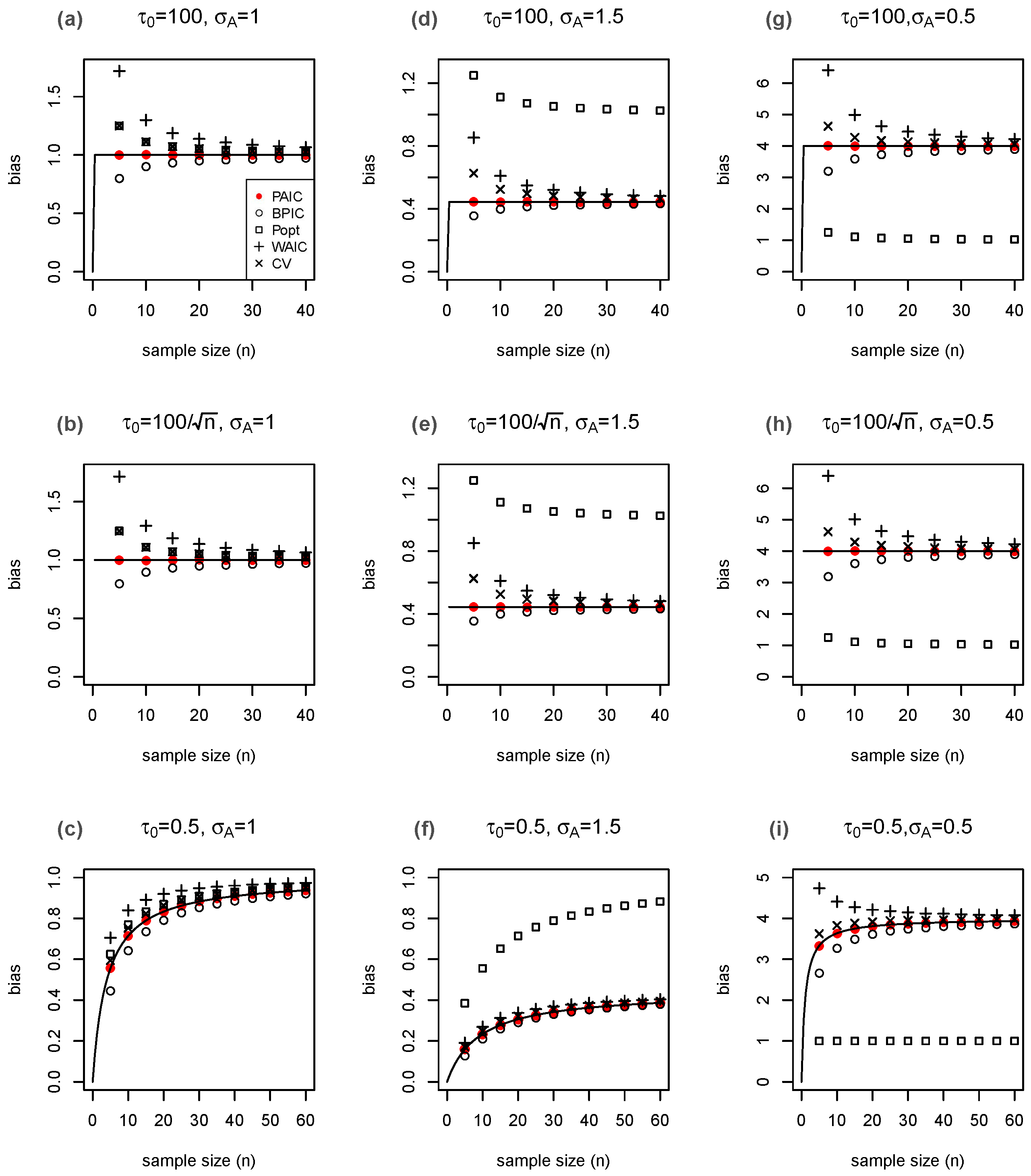

4.1. A Case with Closed-Form Expression for Bias Estimators
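For intuition about closed-form bias calculations, consider a simple case built on our own assumptions, which may not match the exact setting of this section. Let y_1, …, y_n be i.i.d. N(μ₀, σ²) with σ² known, fit the model N(μ, σ²), and place the flat prior on μ, so that μ | y ~ N(ȳ, σ²/n). The posterior-averaged log-likelihood then has the closed form E[log f(x | μ) | y] = −½ log(2πσ²) − {(x − ȳ)² + σ²/n}/(2σ²). A short calculation shows that the in-sample sum over the n observations exceeds n times its expectation under fresh data by exactly 1 on average, which equals the number of parameters. The Python sketch below checks this by Monte Carlo.

```python
import numpy as np


def posterior_avg_loglik(x, ybar, sigma, n):
    """E[log N(x | mu, sigma^2) | y] when mu | y ~ N(ybar, sigma^2 / n)."""
    return (-0.5 * np.log(2.0 * np.pi * sigma**2)
            - ((x - ybar) ** 2 + sigma**2 / n) / (2.0 * sigma**2))


def expected_out_of_sample(ybar, mu0, sigma, n):
    """Closed-form expectation of posterior_avg_loglik over x ~ N(mu0, sigma^2)."""
    return (-0.5 * np.log(2.0 * np.pi * sigma**2)
            - (sigma**2 + (ybar - mu0) ** 2 + sigma**2 / n) / (2.0 * sigma**2))


def simulate_bias(n=10, mu0=0.0, sigma=1.0, reps=20_000, seed=0):
    """Average of (in-sample sum) - n * (expected out-of-sample value) over data sets."""
    rng = np.random.default_rng(seed)
    y = rng.normal(mu0, sigma, size=(reps, n))
    ybar = y.mean(axis=1)
    in_sample = posterior_avg_loglik(y, ybar[:, None], sigma, n).sum(axis=1)
    out_sample = n * expected_out_of_sample(ybar, mu0, sigma, n)
    return float(np.mean(in_sample - out_sample))


print(simulate_bias())  # close to 1
```

In this setup, the per-data-set bias has variance n/2, so 20,000 replications pin the average down to within a few hundredths of 1.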

4.2. Bayesian Logistic Regression

1. Draw …, …, … from the true distribution.

2. Simulate the posterior draws of ….

3. Estimate …, …, …, and ….

4. Draw …, …, … to approximate the true ….

5. Compare each … with the true bias ….

6. Repeat steps 1–5.
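The six steps above can be sketched in Python as a minimal, hypothetical harness; this is not the author's code. Several pieces are assumptions: the N(0, τ²I) prior, the random-walk Metropolis sampler, the design, and the true coefficients. The paper's bias estimators are not reproduced here, so step 3 uses two simple stand-ins instead. The first is the parameter count d. The second is the WAIC-style sum of posterior variances of the pointwise log-likelihood. The "true bias" is approximated as the in-sample posterior-averaged log-likelihood minus n times its average over a large fresh sample.

```python
import numpy as np


def loglik_matrix(beta_draws, X, y):
    """log f(y_i | beta_s) for each posterior draw s and observation i (S x n)."""
    eta = beta_draws @ X.T
    return y * eta - np.logaddexp(0.0, eta)


def log_post(beta, X, y, tau2):
    """Log unnormalized posterior under an assumed N(0, tau2 * I) prior."""
    eta = X @ beta
    return np.sum(y * eta - np.logaddexp(0.0, eta)) - beta @ beta / (2.0 * tau2)


def metropolis(X, y, tau2, n_draws, rng, scale=1.0):
    """Random-walk Metropolis; proposal covariance from the information at beta = 0."""
    d = X.shape[1]
    chol = np.linalg.cholesky(np.linalg.inv(0.25 * X.T @ X + np.eye(d) / tau2))
    beta, lp = np.zeros(d), log_post(np.zeros(d), X, y, tau2)
    draws = np.empty((n_draws, d))
    for s in range(n_draws):
        prop = beta + scale * chol @ rng.standard_normal(d)
        lp_prop = log_post(prop, X, y, tau2)
        if np.log(rng.uniform()) < lp_prop - lp:  # accept/reject step
            beta, lp = prop, lp_prop
        draws[s] = beta
    return draws


def draw_data(rng, beta_true, m):
    """Intercept plus standard-normal covariates; Bernoulli responses (assumed design)."""
    X = np.column_stack([np.ones(m), rng.normal(size=(m, beta_true.size - 1))])
    y = rng.binomial(1, 1.0 / (1.0 + np.exp(-X @ beta_true)))
    return X, y


def one_replicate(rng, beta_true, n=100, n_new=4000, tau2=10.0, n_draws=2000, burn=1000):
    X, y = draw_data(rng, beta_true, n)                   # step 1: training data
    draws = metropolis(X, y, tau2, n_draws, rng)[burn:]   # step 2: posterior draws
    ll = loglik_matrix(draws, X, y)
    in_sample = ll.mean(axis=0).sum()                     # sum_i E[log f(y_i | beta) | y]
    # step 3: two illustrative stand-ins, NOT the paper's bias estimators
    estimates = {"n_params": float(beta_true.size), "func_var": float(ll.var(axis=0).sum())}
    X_new, y_new = draw_data(rng, beta_true, n_new)       # step 4: fresh data
    out_sample = n * loglik_matrix(draws, X_new, y_new).mean()
    true_bias = in_sample - out_sample
    return {k: v - true_bias for k, v in estimates.items()}  # step 5: estimation error


def simulate(reps=5, seed=2023):
    """Step 6: repeat steps 1-5 and collect the errors for each stand-in estimator."""
    rng = np.random.default_rng(seed)
    beta_true = np.array([-0.5, 1.0, 0.5])                # hypothetical true coefficients
    errors = [one_replicate(rng, beta_true) for _ in range(reps)]
    return {k: np.array([e[k] for e in errors]) for k in errors[0]}


if __name__ == "__main__":
    for name, err in simulate().items():
        print(f"{name}: mean error {err.mean():.3f}, "
              f"MAE {np.abs(err).mean():.3f}, MSE {(err ** 2).mean():.3f}")
```

To add another estimator, add an entry to the `estimates` dictionary. The printed mean error, mean absolute error, and mean squared error are the same kinds of summaries reported in the results table for the bias estimators.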

5. Application

6. Discussion

1. What is a good estimand, based on K-L discrepancy, to evaluate Bayesian models?

2. What is a good estimator of this estimand for K-L-based Bayesian model selection?

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AIC | Akaike information criterion |
| BPIC | Bayesian predictive information criterion |
| DIC | Deviance information criterion |
| K-L | Kullback–Leibler |
| PAIC | Posterior averaging information criterion |
| WAIC | Watanabe–Akaike information criterion |

Appendix A. Supplementary Materials for Proof of Theorem 1

Appendix A.1. Some Important Notations

Appendix A.2. Proof of Lemmas

Appendix B. Supplementary Materials for Derivation of Equation (3)


| Criterion | Actual Error | Mean Absolute Error | Mean Square Error |
|---|---|---|---|
|  | 0.160 (0.238) | 0.206 (0.199) | 0.082 (0.207) |
|  | 0.259 (0.244) | 0.272 (0.229) | 0.127 (0.267) |
|  | 0.840 (0.285) | 0.840 (0.285) | 0.786 (0.633) |
|  | 0.511 (0.248) | 0.511 (0.248) | 0.323 (0.389) |

|  | SSVS |  | LOO-CV | KCV | BPIC | PAIC |
|---|---|---|---|---|---|---|
| 4, 5 | 827 | 2603.85 | 2580.74 | 2527.32 | 2528.89 | 2529.60 |
| 2, 4, 5 | 627 | 2572.98 | 2564.92 | 2544.77 | 2533.90 | 2534.44 |
| 3, 4, 5, 11 | 595 | 2583.63 | 2572.59 | 2545.23 | 2539.79 | 2540.20 |
| 3, 4, 5 | 486 | 2593.10 | 2579.97 | 2567.85 | 2541.75 | 2542.32 |
| 3, 4 | 456 | 2590.36 | 2571.76 | 2538.80 | 2533.37 | 2533.97 |
| 4, 5, 11 | 390 | 2589.76 | 2573.04 | 2526.77 | 2527.94 | 2528.58 |
| 2, 3, 4, 5 | 315 | 2576.66 | 2577.17 | 2561.57 | 2553.29 | 2553.77 |
| 3, 4, 11 | 245 | 2579.53 | 2566.28 | 2565.22 | 2532.87 | 2533.42 |
| 2, 4, 5, 11 | 209 | 2564.67 | 2559.36 | 2540.41 | 2533.60 | 2534.03 |
| 2, 4 | 209 | 2741.46 | 2741.17 | 2737.46 | 2740.42 | 2740.51 |
| 5, 10, 12 | n/a | 2602.23 | 2572.86 | 2519.41 | 2525.07 | 2525.61 |
| 4, 12 | n/a | 2596.51 | 2570.94 | 2520.52 | 2524.31 | 2524.94 |
| 5, 12 | n/a | 2595.86 | 2570.32 | 2520.51 | 2524.19 | 2524.90 |
| 4, 5, 12 | n/a | 2596.67 | 2574.73 | 2525.65 | 2526.19 | 2526.86 |
| 4, 10, 12 | n/a | 2603.05 | 2573.80 | 2520.62 | 2525.17 | 2525.70 |
| 4, 5, 10, 12 | n/a | 2603.51 | 2577.86 | 2526.53 | 2527.06 | 2527.56 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, S. Posterior Averaging Information Criterion. Entropy 2023, 25, 468. https://doi.org/10.3390/e25030468
