Abstract

The ability to build a more robust clustering from many clustering models with different solutions is relevant in scenarios with privacy-preserving constraints, where data features are of different natures, or where these features are not available in a single computation unit. Additionally, with the booming amount of multi-view data, as well as the growing number of clustering algorithms capable of producing a wide variety of representations for the same objects, merging clustering partitions into a single clustering result has become a complex problem with numerous applications. To tackle this problem, we propose a clustering fusion algorithm that takes existing clustering partitions acquired from multiple vector space models, sources, or views, and merges them into a single partition. Our merging method relies on an information theory model based on Kolmogorov complexity that was originally proposed for unsupervised multi-view learning. Our proposed algorithm features a stable merging process and shows competitive results over several real and artificial datasets in comparison with other state-of-the-art methods that have similar goals.

1. Introduction

Multi-source data are a never-ending source of information produced almost in real time by many real-life systems: personal data from social networks, medical data acquired by multiple systems for the same patient, remote sensing images acquired under various modalities, etc. All of these data somehow have to be processed by machine learning algorithms.

However, in recent years, a new phenomenon has emerged: machine learning methods themselves have started producing their own multiple representations of the same data, mainly due to the explosion in the number of algorithms and, in particular, of deep learning algorithms that extract features from data. For instance, in the field of natural language processing, text and speech data can be analyzed and clustered from widely different representations and features, and there is therefore a need to reconcile and somehow merge these results [1,2]. The same problem exists in many other domains, such as image processing, where different architectures of convolutional neural networks may extract different features and representations. This is particularly problematic in the context of unsupervised learning, where there is no supervision to decide which representations are the best, and where the only solution is often to produce a clustering from each of the possible representations and then to merge them all while resolving conflicts. Furthermore, this unsupervised process also has to detect and discard low-quality and noisy representations.

Whether the multiple representations are native to the data or produced artificially by machine learning algorithms, the unsupervised exploration of multi-view data can be grouped under the terms of multi-view clustering [3], when dealing with multiple representations of the same objects, or cluster ensembles [4], when dealing with several partitions of the same objects produced by multiple algorithms. In this work, we deal with a multi-view application where the data have multiple representations, but we use methods based on the fusion of partitions that are very similar to ensemble learning problems. Two types of approaches exist to tackle such a multi-view clustering problem. The first one consists of attempting a global clustering of the multi-view system using an algorithm that has access to all the views. The second one consists of running algorithms locally in each view and then finding a way to merge the local partitions into a global result.

In this paper, we consider the second approach, which allows for the selection of local algorithms better adapted to each view-specific data representation, and we propose a novel merging method that aims at the fusion of various clustering partitions. Our method uses information theory and the principle of minimum description length [5,6] to detect points of agreement as well as conflicts between the local partitions, and features an original method to reduce these conflicts as the partitions are merged. We call our method KMC for “Kolmogorov-based multi-view clustering”.

This idea was successfully used in earlier work about multi-view clustering without merging partitions [7] and for text corpus analysis [8]. This work brings the following novel aspects and contributions:

- Our main scientific contribution is a new heuristic method relying on Kolmogorov complexity to merge partitions in an unsupervised ensemble learning context applied to multi-view clustering. Compared with earlier methods, we remove the reliance on an arbitrary pivot to choose the merging order. Instead, we reinforce the use of Kolmogorov complexity to choose the merging order, thus rendering our algorithm deterministic, while earlier versions and methods were not. Our method also explores more of the solution space, thus leading to better results.

- We propose a broad comparison of unsupervised ensemble learning methods, including four methods from the state of the art, in a context that is not restricted to text corpus analysis, both in terms of compared methods and of datasets.

- While not a scientific or technical contribution (because our method relies on known principles), our algorithm brings some novelty in the field of unsupervised ensemble learning, where no other method relies on the same principle. We believe that such diversity is useful to the field of clustering, where a wider choice of methods is a good thing because of the unsupervised context.

Finally, while it is not a technical or scientific contribution, we analyze the effects of various levels of noise in different numbers of views, as well as the effect of changing the number of clusters. We assess how these parameters affect the quality of the results of our proposed method. These results, while linked to our proposed method, may shed some light on the behavior of other methods in the same context.

This paper is organized as follows: In Section 2, we present some of the main methods and approaches both for multi-view clustering and for partition fusion based on various principles. Section 3 introduces our proposed algorithms. Section 4 features our experimental results and some comparisons with other methods. Finally, in Section 5, we give our conclusions as well as some insights into possible future improvements and work based on our proposal.

2. State of the Art

The problem of multi-view clustering is relatively common in unsupervised learning and has been tackled from different angles depending on the intended application. The most common method is to use a global function over all views and to merge all partitions. Several such methods will be presented in this state of the art, where we will also discuss their pros and cons.

Let us begin by presenting the different terminologies used for multi-view approaches and what they entail [9]:

- Multi-view clustering [2,3,10,11,12,13,14,15,16,17,18,19,20,21] is concerned with any kind of clustering where the data are split into different views. It does not matter whether the views are physically stored in different places, or whether the views are real or artificially created. In multi-view clustering, the goal can either be to build a consensus from all the views, or to produce clustering results specific to each view.

- Distributed data clustering [22] is a sub-case of multi-view clustering that deals with any clustering scenario where the data are physically stored in different sites. In many cases, clustering algorithms used for this kind of task will have to be distributed across the different sites.

- Collaborative clustering [23,24,25,26,27] is a framework in which clustering algorithms work together and exchange information with the goal of mutual improvement. In its horizontal form, it involves clustering algorithms working on different representations of the same data, and it is a sub-case of multi-view clustering with the particularity of never seeking a consensus solution but rather aiming for an improvement in all views. In its vertical form, it involves clustering algorithms working on different data samples with similar distributions and underlying structures. In both forms, these algorithms follow a two-step process: (1) A first clustering is built by local algorithms. (2) These local results are then improved through collaboration. A better name for collaborative clustering could be model collaboration, as one requirement for a framework to qualify as collaborative is that the collaboration process must involve effects at the level of the local models.

- Unsupervised ensemble learning, or cluster ensembles [28,29,30,31,32,33,34,35,36], is the unsupervised equivalent of ensemble methods from supervised learning [37]: it is concerned with either the selection of clustering methods or the fusion of clustering results from a large pool, with the goal of achieving a single best-quality result. This pool of multiple algorithms or results may come from a multi-view clustering context [38], or may simply be the unsupervised equivalent of boosting methods, where one attempts to combine the results of several algorithms applied to the same data. Unlike collaborative and multi-view clustering, ensemble clustering does not access the original features, but only the crisp partitions.

In this paper, collaborative clustering and distributed clustering are not considered. We focus solely on the problem of multi-view and ensemble clustering: we merge clustering partitions no matter their origin and without accessing the original features. Our problem is therefore similar to the one introduced by Strehl and Ghosh in their paper [28], where they present the problem of combining multiple partitions of a set of objects without accessing the original features.

We will now review some of the works that are the most closely related to our proposed method. A more extensive survey of cluster ensemble methods can be found in [36].

In [2], the authors propose a multi-view clustering method applied to text clustering when texts are available under multiple representations. Their method is very similar to [19] in the way they attempt to merge the different partitions: first, similarity matrices are computed in three different ways, namely, two based on partition memberships and another based on feature similarity. Then, a combined similarity matrix is obtained from these three matrices, and a standard clustering technique is applied to produce the consensus partition.

In [39], the authors address the problem of large-scale multi-view spectral clustering. They do so using local manifold fusion to integrate heterogeneous features based on approximations of the similarity graphs.

In [40], a similar method is proposed for partition fusion in a multi-view clustering context. It also relies on a graph-based approach, with the addition of a weight system to account for the clustering capacity differences of the views.

In [41], the authors address partial multi-view clustering, a specific case of multi-view clustering where not all data are present in all views. They use latent representations and seek the closest available data when an object is missing in a view.

In [21], the authors address the issue of feature selection in multi-view clustering. They propose a global objective function (quite similar to the ones found in collaborative clustering) in which each feature of each view is automatically weighted to ensure smooth convergence. In the original paper, the authors adapted this method for multi-view K-Means.

In [42], the authors propose a graph-based multi-view clustering method which merges the data graphs of all views. It weights the views and detects the number of clusters automatically.

In [43], the authors tackled the problem of multi-view clustering under the assumption that each view or each partition can be seen as a perturbation of the consensus clustering, and that it is possible to weight them so that the partitions closer to the consensus are more important. They do so by using subspace clustering and graph learning in each view.

Another consensus generation strategy is proposed in [44], where a co-association matrix is built from the ensemble partitions and then improved by removing low coefficients called negative evidences. This removal procedure is performed in conjunction with an N-Cut clustering over multiple rounds, and finally the best partition is reported.

Based on an initial cluster similarity graph, ref. [45] proposed an enhanced co-association matrix that simultaneously captures the object-wise co-occurrence relationships as well as the multi-scale cluster-wise relationships in ensembles. Finally, two consensus criteria are proposed, namely hierarchical and meta-cluster-based functions. Ref. [46] proposed a randomized subspace generation mechanism to build multiple base clusterings. From these partial solutions, an entropy-weighted combination strategy is applied in order to obtain an enriched co-association matrix that serves as a summary of the ensemble. Finally, they employed three independent consensus functions over the co-association matrix, namely hierarchical clustering, bipartite graph clustering and spectral clustering.

Finally, we can mention the work of Yeh and Yang [47], which is very relevant to understanding the difficulty of properly evaluating ensemble clustering methods, and where the authors propose a fuzzy generalized version of the Rand Index for ensemble clustering.

As one can see, all these recent algorithms for partition fusion are built not so much as methods for merging existing partitions, but rather as global clustering frameworks that seek and merge partitions in all views at the same time. While the end goal is the same as that of our proposed method (finding a consensus clustering partition), our method differs in that it starts from existing partitions and has no access to the original data features. As discussed in the experimental section, this key difference can make our method difficult to compare in a fair manner with the works from the state of the art described above.

One of the strong points of our method is that it is an ensemble clustering method, in the sense that it combines pre-existing clustering partitions, but it is also a multi-view clustering method, since these partitions come from different sets of features or multiple views. As a consequence, the freedom to choose a different algorithm for each local data view is a distinctive characteristic compared with classical multi-view clustering, where the same clustering method processes all the local views. However, this flexibility can be costly in terms of performance, especially if the local algorithms are not state of the art.

3. The Proposed Method

3.1. Problem Definition and Notations

Let us consider a data space $\mathcal{A}$, which can be decomposed into M views so that $\mathcal{A} = \mathcal{A}^1 \times \cdots \times \mathcal{A}^M$, where the M spaces $\mathcal{A}^i$, $i \in [1..M]$, may or may not overlap depending on the application. The spaces $\mathcal{A}^i$ are therefore the spaces associated with the views. The interdependence between the views is not solely contained in the definition of the different views $\mathcal{A}^i$, but also in the probability distribution P over the whole space $\mathcal{A}$.

Let $X = \{x_1, \dots, x_N\}$, $x_n \in \mathcal{A}$, be a set of N objects split into the M views. We note the local views of these data $X^1$ to $X^M$ ($X^i \subset \mathcal{A}^i$). As such, any view $X^i$ (the realization of the dataset over $\mathcal{A}^i$) is a matrix containing N lines and the columns (attributes) attached to view $\mathcal{A}^i$. From there, $x^i_n$ denotes the n-th line of view i, and is a vector.

Let $S = \{S^1, \dots, S^M\}$ be a set of crisp partitions of the objects in X computed over the M views. Each local partition $S^i$ is a vector of size N which associates to any data point $x^i_n$ a hard cluster $c^i_k$, $k \in [1..K^i]$. Since different views can have different numbers of clusters, we note $K^i$ (without lower index) the number of clusters in view i. For simplification purposes, $c^i_{K^i}$, the last cluster of any view i, will simply be noted $c^i_K$. From there, the association function $S^i$ is defined as follows:

$$S^i : X^i \rightarrow \{c^i_1, \dots, c^i_K\}, \qquad x^i_n \mapsto S^i(x^i_n) = c^i_k \tag{1}$$

In other words, the function $S^i$ maps any element of view i to a cluster of the same view. It is the result of a clustering method applied to view i. From there, each local partition can be written as $S^i = [S^i(x_1), \dots, S^i(x_N)]$. Please note that we write $S^i(x_n)$ to simplify the notations, as the view is implied by the mapping function index, but the proper notation would be $S^i(x^i_n)$.

Like many works in multi-view clustering, we use a confusion matrix [48] to measure the overlap between clusters in different partitions. For two views i and j, this matrix, which we note $\Omega^{i,j}$, is of size $K^i \times K^j$ and defined as follows:

$$\Omega^{i,j} = \left(\omega^{i,j}_{a,b}\right)_{a \in [1..K^i],\; b \in [1..K^j]}, \qquad \omega^{i,j}_{a,b} = \frac{|c^i_a \cap c^j_b|}{|c^i_a|} \tag{2}$$

In other words, each $\omega^{i,j}_{a,b}$ measures the proportion of the elements of cluster $c^i_a$ in view i that also belong to cluster $c^j_b$ in view j. Please remember that $\Omega^{i,j}$ maps from view i to view j and that this mapping may differ from the one acquired from $\Omega^{j,i}$, especially if the two views have different numbers of clusters: there is no symmetry hypothesis here.
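As a concrete illustration of the row-normalized confusion matrix described above, the following minimal Python sketch computes it from two label vectors over the same N objects. The function name `confusion_matrix` and the dict-of-dicts representation are our own choices for illustration, not the authors' implementation.

```python
from collections import Counter


def confusion_matrix(labels_i, labels_j):
    """Row-normalized confusion matrix from partition i to partition j.

    labels_i, labels_j: cluster labels of the same N objects in views i and j.
    Entry [a][b] is the fraction of the objects of cluster a (view i)
    that also belong to cluster b (view j); every row sums to 1.
    """
    if len(labels_i) != len(labels_j):
        raise ValueError("both partitions must label the same N objects")
    pair_counts = Counter(zip(labels_i, labels_j))  # |c_a^i ∩ c_b^j|
    sizes_i = Counter(labels_i)                     # |c_a^i|
    clusters_j = sorted(set(labels_j))
    return {
        a: {b: pair_counts[(a, b)] / sizes_i[a] for b in clusters_j}
        for a in sorted(sizes_i)
    }


s_i = [0, 0, 0, 1, 1, 2]
s_j = [1, 1, 0, 0, 0, 2]
omega_ij = confusion_matrix(s_i, s_j)
omega_ji = confusion_matrix(s_j, s_i)
print(omega_ij[0])  # {0: 0.3333333333333333, 1: 0.6666666666666666, 2: 0.0}
print(omega_ji[1])  # {0: 1.0, 1: 0.0, 2: 0.0}
```

The two printed rows show the missing symmetry: two thirds of cluster 0 of view i fall into cluster 1 of view j, whereas all of cluster 1 of view j falls into cluster 0 of view i.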

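From there, for each cluster in each view, it is possible to find the maximum agreement cluster in any other view simply by searching for the maximum value in the corresponding line of the matrix $\Omega^{i,j}$. Let us note $c^{i\to j}_a$ the maximum agreement cluster in partition $S^j$ for cluster $c^i_a$ of partition $S^i$:

$$c^{i\to j}_a = c^j_{b^\star}, \qquad b^\star = \underset{b \in [1..K^j]}{\arg\max}\; \omega^{i,j}_{a,b} \tag{3}$$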
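The line-wise maximum search can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions: `max_agreement` is a hypothetical helper name, and ties are broken toward the smallest label, a rule the text does not specify.

```python
from collections import Counter


def max_agreement(labels_i, labels_j):
    """Maximum agreement cluster in partition j for every cluster of partition i.

    Row a of the row-normalized confusion matrix is the co-occurrence count
    row divided by |c_a^i|, so its argmax can be taken on raw counts.
    Ties are broken toward the smallest label of view j (a choice made
    for this sketch only).
    """
    pair_counts = Counter(zip(labels_i, labels_j))
    candidates = sorted(set(labels_j))
    # max() returns the first maximal element, i.e. the smallest tied label.
    return {
        a: max(candidates, key=lambda b: pair_counts[(a, b)])
        for a in sorted(set(labels_i))
    }


print(max_agreement([0, 0, 0, 1, 1, 2], [1, 1, 0, 0, 0, 2]))  # {0: 1, 1: 0, 2: 2}
print(max_agreement([0, 0, 1, 1, 1], [0, 0, 0, 0, 1]))        # {0: 0, 1: 0}
```

The second call shows that the mapping need not be one-to-one: both clusters of the first partition have the same maximum agreement cluster.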

Lastly,

Table with All Notations

Table 1 below contains all notations that will be used in the algorithm presented in the next sections. Some of these notations have already been presented with details and equations; others will be detailed more as the different concepts and algorithms are presented.

Table 1.

Summary of all notations.

3.2. Merging Partitions Using Kolmogorov Complexity

In the work of [5,6], the notion of minimum description length (MDL) is introduced, with the description length being the minimal number of bits needed by a Turing machine to describe an object. This measure of the minimal number of bits is also known under the name Kolmogorov complexity.

If $\mathcal{M}$ is a fixed Turing machine, the complexity of an object x given another object y using the machine $\mathcal{M}$ is defined as $K_{\mathcal{M}}(x \mid y) = \min_{p \in \mathcal{P}_{\mathcal{M}}} \{\, l(p) : p(y) = x \,\}$, where $\mathcal{P}_{\mathcal{M}}$ is the set of programs on $\mathcal{M}$, $p(y)$ designates the output of program p with argument y, and l measures the length (in bits) of a program. When the argument y is empty, we use the notation $K_{\mathcal{M}}(x)$ and call this quantity the complexity of x. The main problem with this definition is that the complexity depends on the fixed Turing machine $\mathcal{M}$. Furthermore, the universal complexity is not computable, since it is defined as a minimum over all programs of all machines.

In relation to this work, in [7], the authors solved the aforementioned problem by using a fixed Turing machine before applying this notion of Kolmogorov complexity to collaborative clustering, which is a specific case of multi-view clustering, where several clustering algorithms work together in a multi-view context but aim at improving each other’s partitions rather than merging them [23]. While collaborative clustering does not aim at a consensus, this application is still very close to what we try to achieve in this paper, where we try to merge partitions of the same objects under multiple representations. For these reasons, we decided to use the same tool.

In the rest of this paper, just as the authors did in [7], we consider that the Turing machine is fixed and, to make the equations easier to read, we denote by $K(x)$ the complexity of x on the chosen machine. We then adapt the equations used in their original paper to our multi-view context for text mining, and we use Kolmogorov complexity as a tool to compute the complexity of one partition given another partition. The algorithm to do so and how we use it are described in the next section.

3.3. The KMC Algorithm

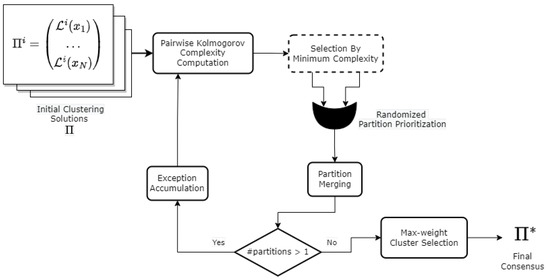

Our goal is to combine several partitions in order to build a final consensus. To this end, we perform successive pairwise fusion procedures between partitions following a bottom-up strategy until we reach a single soft partition. Subsequently, the consensus is generated by picking the cluster with the maximum weight for each data point. Figure 1 depicts an overall scheme of the proposed method, and Algorithms 1 and 2 show a detailed description for the two procedures that make up the proposal.

Figure 1.

Overall scheme of the KMC method to produce the consensus partition.

Let us consider that for a set of M initial partitions, there are $M(M-1)/2$ candidate pairs to merge. In order to overcome the combinatorial explosion, we use a greedy criterion to pick a pair of partitions: the Kolmogorov complexity of partition $S^j$ knowing partition $S^i$ is computed by following the procedure described in [7], as shown in Equation (5) below:

In Equation (5), $K^i$ still denotes the number of clusters in a given partition i as defined earlier, and $|\mathcal{E}^{i,j}|$ is the number of errors in the mapping from partition i to partition j. These errors correspond to data that do not adhere to the maximum agreement mapping $c^{i\to j}$; they increase the overall complexity and are also likely to cause issues when merging the partitions:

$$\mathcal{E}^{i,j} = \left\{ x_n \in X \;:\; S^i(x_n) = c^i_a \ \text{and}\ S^j(x_n) \neq c^{i\to j}_a \right\} \tag{6}$$

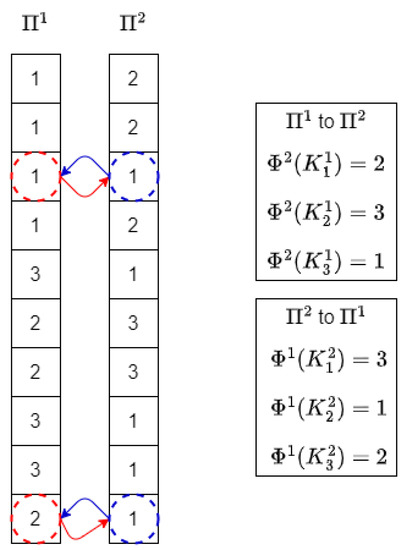

Please note that these errors, as defined in Equation (6), can be computed simply by browsing through the partitions and applying the majority rule, as shown in Figure 2.
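The browsing procedure can be sketched as follows in Python. It is a minimal illustration, not the authors' code: `mapping_errors` is a hypothetical name, ties in the majority rule are broken toward the smallest label, and the toy partitions below are made up rather than taken from Figure 2.

```python
from collections import Counter


def mapping_errors(labels_i, labels_j):
    """Indices of the objects that break the majority rule from view i to view j.

    An object of cluster a in view i is a mapping error when its cluster in
    view j differs from the maximum agreement cluster of a.
    """
    pair_counts = Counter(zip(labels_i, labels_j))
    candidates = sorted(set(labels_j))
    majority = {
        a: max(candidates, key=lambda b: pair_counts[(a, b)])
        for a in set(labels_i)
    }
    return [n for n, (a, b) in enumerate(zip(labels_i, labels_j)) if majority[a] != b]


# Hypothetical toy partitions of 10 objects with 3 clusters each.
s1 = [0, 0, 0, 0, 1, 1, 1, 2, 2, 2]
s2 = [0, 0, 0, 1, 1, 1, 2, 2, 2, 2]
print(mapping_errors(s1, s2))  # [3, 6]
```

The length of the returned list is the error count that enters the complexity of one partition given the other.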

Figure 2.

Finding the mapping errors based on the majority rules for 2 partitions with 10 objects and 3 clusters per partition. Enclosed in a dashed red line are the objects identified as mapping errors for the first partition, and in a dashed blue line those identified for the second partition.

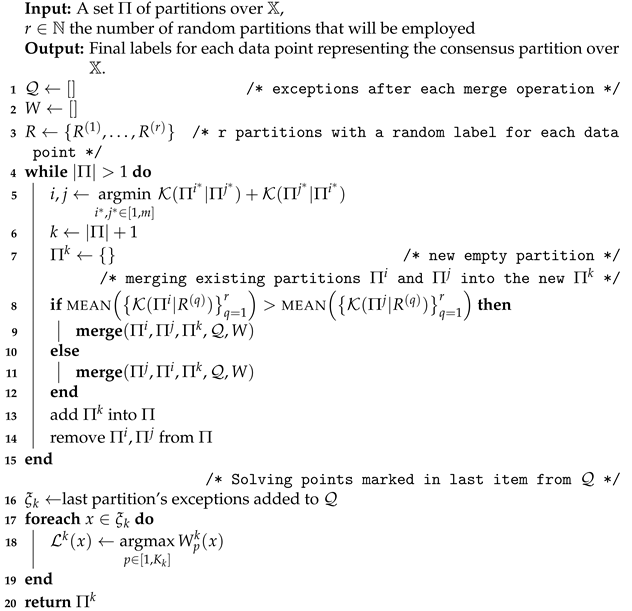

3.3.1. Overall View of the Main Procedure

Algorithm 1 unfolds as follows for each round: first, the pair of partitions with the least complexity according to Equation (5) is selected (Line 5 of Algorithm 1). In Lines 9 and 11, the merge procedure is called. Since this operation is not commutative, the original version of the algorithm employed, in Line 8, a randomized criterion inspired by the farthest-first traversal approach presented in [49] to pick the first argument of this call. In the current version, the Kolmogorov complexity of each input partition is computed against several random partitions, and the input partition with the higher average complexity is chosen as the first argument of this function. The rationale behind this decision is that an input partition that is more discordant with respect to several random configurations contains more information than a more agreeable one. After all partitions have been merged into a single one at Line 15, the last set of exceptions is processed and used to compute the final label of each object (see Lines 17–19).
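The two ordering decisions of Algorithm 1 (which pair to merge, and which partition goes first) can be sketched in Python with a pluggable complexity function. Several parts are assumptions of this sketch: `n_mapping_errors` is a hypothetical stand-in for Equation (5) that only counts majority-rule errors, a pair is scored by the sum of both directed complexities, and random partitions draw uniform labels with the same number of clusters as the input. The default of 80 random partitions mirrors the setting reported in Section 4.2.2.

```python
import random
from collections import Counter
from itertools import combinations


def n_mapping_errors(given, target):
    """HYPOTHETICAL stand-in for the complexity of `target` knowing `given`:
    the number of majority-rule mapping errors. Replace with Equation (5)."""
    pair_counts = Counter(zip(given, target))
    candidates = sorted(set(target))
    majority = {a: max(candidates, key=lambda b: pair_counts[(a, b)]) for a in set(given)}
    return sum(majority[a] != b for a, b in zip(given, target))


def least_complex_pair(partitions, complexity):
    """Greedy step: indices (i, j) of the pair with the lowest score.
    Summing both directed complexities is an assumption of this sketch."""
    def score(ij):
        i, j = ij
        return complexity(partitions[i], partitions[j]) + complexity(partitions[j], partitions[i])
    return min(combinations(range(len(partitions)), 2), key=score)


def order_arguments(p, q, complexity, n_random=80, seed=0):
    """Return (first, second): the partition with the higher average complexity
    against random partitions is passed first to the merge procedure."""
    rng = random.Random(seed)  # fixed seed keeps the choice reproducible

    def avg_against_random(labels):
        k = len(set(labels))
        total = 0
        for _ in range(n_random):
            rand = [rng.randrange(k) for _ in labels]
            total += complexity(rand, labels)  # complexity of `labels` knowing `rand`
        return total / n_random

    return (p, q) if avg_against_random(p) >= avg_against_random(q) else (q, p)


a = [0, 0, 1, 1, 2, 2]
b = [0, 0, 1, 1, 2, 2]
c = [0, 1, 2, 0, 1, 2]
print(least_complex_pair([a, b, c], n_mapping_errors))  # (0, 1)
first, second = order_arguments(a, c, n_mapping_errors)
```

Passing the complexity as a parameter keeps the ordering logic independent of how Equation (5) is actually evaluated.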

Algorithm 1: Main procedure for building the consensus partition.

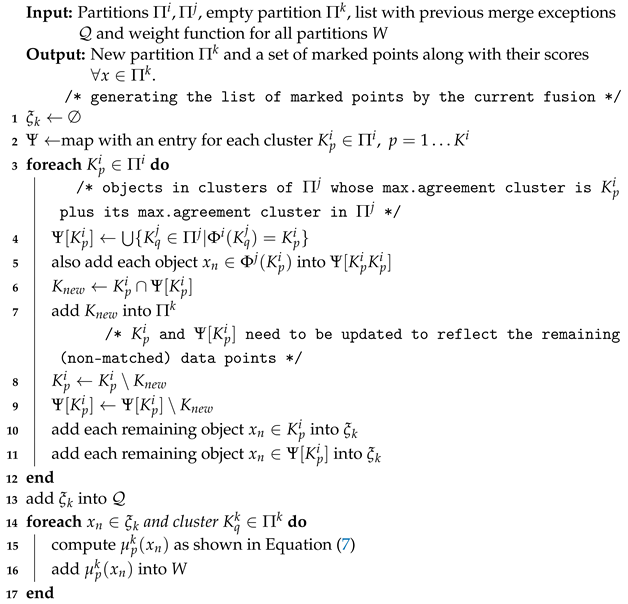

3.3.2. Merging Two Views/Partitions

Regarding the merge operation between two partitions described in Algorithm 2, when it is performed between two partitions $S^i$ and $S^j$, each cluster in $S^i$ is combined with its maximum agreement cluster in $S^j$ (computed as shown in Figure 2). Let $S^{ij}$ denote the new partition produced by merging these two partitions. First, for all clusters in $S^i$, the majority clusters in $S^j$ are listed for a later fusion. Additionally, clusters in $S^j$ are also added to the lists of their majority clusters in $S^i$. After that, in Lines 4–6, all the objects with total agreement between each pair of merged clusters are put together in the same cluster of the new partition $S^{ij}$.

Since these successive partition fusions follow the maximum agreement criterion between clusters stated in Equation (3), some data points (identified as mapping errors in their original partitions as per Equation (6)) will likely not fit this rule. These points are marked as exceptions to be dealt with at the final stage, and are kept in a specific subset during the execution of the subsequent merge operations. As defined by Equation (6), the exception set of the newly created partition $S^{ij}$ is made up of the objects whose cluster in the first former partition does not match the majority rule cluster in the second former partition. Algorithm 2 addresses this task in Lines 8–11. Finally, we store a history of all previous exceptions in a dedicated list.
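A deliberately simplified Python sketch of the core of this merge step follows. It keeps only the pairing of each cluster with its maximum agreement cluster, the grouping of fully agreeing objects, and the flagging of the remaining objects as exceptions; `merge_two` is a hypothetical name, and the many-to-one cluster lists and the exception history of Algorithm 2 are not reproduced.

```python
from collections import Counter


def merge_two(first, second):
    """Simplified sketch of fusing two crisp partitions of the same N objects.

    Each cluster a of `first` is paired with its maximum agreement cluster in
    `second`. Objects on which both partitions agree keep label a in the
    merged partition; all other objects become exceptions (label None) whose
    membership is resolved later. The reverse lists built from `second` in
    Algorithm 2 are omitted here.
    """
    pair_counts = Counter(zip(first, second))
    candidates = sorted(set(second))
    partner = {a: max(candidates, key=lambda b: pair_counts[(a, b)]) for a in set(first)}
    merged, exceptions = [], []
    for n, (a, b) in enumerate(zip(first, second)):
        if partner[a] == b:
            merged.append(a)      # total agreement between the paired clusters
        else:
            merged.append(None)   # exception: no crisp membership yet
            exceptions.append(n)
    return merged, exceptions


merged, exceptions = merge_two([0, 0, 0, 0, 1, 1, 1, 2, 2, 2],
                               [0, 0, 0, 1, 1, 1, 2, 2, 2, 2])
print(merged)      # [0, 0, 0, None, 1, 1, None, 2, 2, 2]
print(exceptions)  # [3, 6]
```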

Algorithm 2: Merge procedure that fuses two partitions into a new one, also identifying problematic points as exceptions.

3.3.3. Handling Mapping Errors through the Merge Process

The objects marked as exceptions have no crisp cluster membership within the new partition. Therefore, the mapping errors generated by the fusion of $S^i$ with $S^j$ have an uncertain cluster membership in the newly generated partition $S^{ij}$. These memberships are settled at the final stage of the method, when the last partition is produced. To counteract this ambiguity, we measure the support of a data object x in each cluster p of $S^{ij}$ as a function of the similarity between this cluster and the clusters $S^i(x)$ and $S^j(x)$ within which this data object was grouped in the former partitions $S^i$ and $S^j$, respectively. For each object that was already an exception in $S^i$ or in $S^j$ (and therefore has no crisp cluster there), its support is a function of the similarity between cluster p and its maximum agreement cluster in $S^i$ or $S^j$, correspondingly.

Formally, for any merge operation and without loss of generality, let $S^i$ and $S^j$ be the two partitions/views whose fusion produces $S^{ij}$, and let $J(U,V) = \frac{|U \cap V|}{|U \cup V|}$ be the classical Jaccard similarity between two sets U and V. For every data point $x \in X$, its membership weight in a cluster p of $S^{ij}$ is defined as follows:

Equation (7) states the four scenarios that may be found when computing the membership weights for the mapping errors:

- First, a data object could be identified as an exception to the majority rule of the current merge operation as formalized in the first case of Equation (7).

- The next two cases show the scenarios in which the data object could come from errors generated in prior merging stages in either of the two former partitions, but not in both.

- The final case defined in Equation (7) is distinguished from previous definitions by denoting the scenario where the data object has been dragged from mapping errors in both input partitions.

The rationale behind Equation (7) is twofold: first, the higher the agreement between a cluster in the new partition and the object's cluster in the source partition, the higher the weight value. Second, when no cluster information is available for the object in a source partition, the maximum agreement information is employed instead, and its value is weighted by the cluster support in the source partitions $S^i$ and $S^j$. Finally, the overall support of object x for cluster p is the mean of the supports from both input partitions.
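For the simplest situation, an object that becomes an exception in the current merge while having crisp clusters in both input partitions, the support can be sketched as the mean of two Jaccard similarities. This Python sketch reflects our reading of that first case only; `fresh_exception_weights` is a hypothetical name, and the cases involving earlier exceptions are not covered.

```python
def jaccard(u, v):
    """Classical Jaccard similarity |U ∩ V| / |U ∪ V| between two sets."""
    union = u | v
    return len(u & v) / len(union) if union else 0.0


def fresh_exception_weights(merged_clusters, former_first, former_second):
    """Support of a new exception x for every cluster p of the merged partition.

    merged_clusters: dict mapping each cluster p to its set of object indices.
    former_first, former_second: the sets of objects sharing x's cluster in
    the two input partitions. The support is the mean of the Jaccard
    similarities of p with these two clusters (assumed reading of the first
    case of Equation (7)).
    """
    return {
        p: (jaccard(members, former_first) + jaccard(members, former_second)) / 2
        for p, members in merged_clusters.items()
    }


# Hypothetical exception x whose former clusters are {0, 1, 2, 3} and {3, 4, 5}.
weights = fresh_exception_weights({0: {0, 1, 2}, 1: {4, 5}, 2: {7, 8, 9}},
                                  {0, 1, 2, 3}, {3, 4, 5})
print(weights)  # {0: 0.375, 1: 0.3333333333333333, 2: 0.0}
```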

As a final remark about the operation of the proposed method, it is important to indicate that once a point is marked as an exception, it remains so through all the subsequent fusions, and that all the exceptions are resolved only at the end of the complete merging process. After all the views have been successively fused into a single partition, every data point has a score greater than or equal to zero for each cluster. This carry-over strategy of weighted membership coefficients enables the resolution of the cluster assignment problem for the discordant data objects at the final stage of Algorithm 1, in Line 17. At this point, a consensus can be obtained by picking the cluster with the maximum weight for each data point. The bottom right part of Figure 1 sketches this part of the process until the final consensus is obtained.
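To make this final step concrete, here is a minimal Python sketch that turns per-object cluster weights into a crisp consensus by taking the maximum. The input format (one dict of weights per object) and the tie-breaking rule are assumptions of this sketch.

```python
def consensus_labels(weights):
    """Crisp consensus from the soft memberships carried to the final stage.

    weights: one dict per object, mapping cluster label -> non-negative score.
    Each object is assigned the cluster with the maximum weight; ties go to
    the smallest label (a choice made for this sketch).
    """
    return [max(sorted(w), key=w.__getitem__) for w in weights]


print(consensus_labels([{0: 0.375, 1: 0.33, 2: 0.0},
                        {0: 0.0, 1: 1.0, 2: 0.0},
                        {0: 0.5, 1: 0.5}]))  # [0, 1, 0]
```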

3.4. Computational Complexity: Discussion

The overall complexity and computation time of an ensemble clustering method are often difficult to assess, as they depend both on the complexity of the clustering methods used in the different views to create the original partitions (which may or may not be parallelized) and on the ensemble process used to merge the partitions.

In the case of our KMC algorithm, given M views with N elements and a maximum of K clusters per view, the cost to map all clusters and errors between views is in . We can have a maximum of $M-1$ merges, which gives us a total complexity in for the ensemble learning part. In most cases, K should be negligible compared to N. Which quantity is the most important between and N is open to discussion and depends on the number of views M. Still, we believe that in most cases, the number of lines N is the dominant quantity. Furthermore, if the clustering methods used to generate the original partitions have a complexity beyond linear (in $\mathcal{O}(N^2)$ or $\mathcal{O}(N^3)$, for instance, as is the case for several clustering methods other than K-means or a Gaussian mixture model), then the complexity of our multi-view ensemble learning method KMC would be negligible anyway compared with that of the original algorithms used to create the initial partitions.

4. Experimental Analysis

Throughout this and the following sections, the name of the proposed method is KMC.

4.1. Clustering Measures

To assess the quality of the clustering consensus, we employ the following external measures: entropy, purity and normalized mutual information [28]. Each of them compares the partition built by a method after its execution with the gold-standard partition.

Following [50], entropy measures the amount of class confusion within a cluster; the lower its value, the better the clustering solution. It is defined as follows:
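For reference, a minimal Python sketch of a common form of this measure follows: the size-weighted average, over clusters, of the class entropy inside each cluster, normalized by the logarithm of the number of classes q. This normalization is an assumption of the sketch; the exact equation used in the experiments may differ.

```python
import math
from collections import Counter


def clustering_entropy(pred, truth):
    """Size-weighted class entropy of the clusters, normalized by log(q).

    pred: cluster label of each object; truth: its gold-standard class.
    Returns 0 for pure clusters; lower is better.
    """
    n, q = len(pred), len(set(truth))
    if q <= 1:
        return 0.0  # a single class cannot be confused
    total = 0.0
    for cluster, size in Counter(pred).items():
        class_counts = Counter(t for p, t in zip(pred, truth) if p == cluster)
        h = -sum((c / size) * math.log(c / size) for c in class_counts.values())
        total += (size / n) * h / math.log(q)
    return total


print(clustering_entropy([0, 0, 1, 1], [0, 0, 1, 1]))  # 0.0
print(clustering_entropy([0, 0, 1, 1], [0, 1, 0, 1]))  # 1.0
```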

The purity of the partition proposed by a method is the proportion of correctly assigned objects, where the majority class of each cluster is used as its label. It is defined as follows:
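The majority-class rule translates directly into code. The minimal Python sketch below, with the hypothetical name `purity`, counts for each cluster the objects belonging to its most frequent class and divides the total by N.

```python
from collections import Counter


def purity(pred, truth):
    """Fraction of objects whose class is the majority class of their cluster."""
    correct = 0
    for cluster in set(pred):
        class_counts = Counter(t for p, t in zip(pred, truth) if p == cluster)
        correct += max(class_counts.values())
    return correct / len(pred)


print(purity([0, 0, 0, 1, 1, 1], [0, 0, 1, 1, 1, 1]))  # 0.8333333333333334
```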

Normalized mutual information measures the level of agreement between a partition produced by a method and a ground-truth partition, while also correcting the bias that the non-normalized version of this measure exhibits when the number of clusters increases. It is defined as follows:
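Since the measures are taken from [28], the Python sketch below uses the normalization of Strehl and Ghosh, dividing the mutual information by the geometric mean of the two partition entropies. Other normalizations exist, so the exact variant used in the experiments is an assumption here, as is the convention for degenerate single-cluster partitions.

```python
import math
from collections import Counter


def nmi(pred, truth):
    """Mutual information normalized by the geometric mean of the entropies."""
    n = len(pred)
    joint = Counter(zip(pred, truth))
    count_pred, count_truth = Counter(pred), Counter(truth)
    mi = sum((nij / n) * math.log(n * nij / (count_pred[a] * count_truth[b]))
             for (a, b), nij in joint.items())
    h_pred = -sum((c / n) * math.log(c / n) for c in count_pred.values())
    h_truth = -sum((c / n) * math.log(c / n) for c in count_truth.values())
    if h_pred == 0.0 or h_truth == 0.0:
        # Degenerate single-cluster case: convention chosen for this sketch.
        return 1.0 if h_pred == h_truth else 0.0
    return mi / math.sqrt(h_pred * h_truth)


print(nmi([0, 0, 1, 1], [1, 1, 0, 0]))  # 1.0 (same partition, relabeled)
print(nmi([0, 0, 1, 1], [0, 1, 0, 1]))  # 0.0 (independent partitions)
```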

4.2. Analysis on Real Data and Comparison against Other Ensemble Methods

In order to further validate the performance of our proposed method, we assessed its results against three state-of-the-art methods using the three above-mentioned clustering measures. We report the results on nine publicly available datasets generated from 3Sources (available from http://mlg.ucd.ie/datasets/3sources.html, accessed in October 2022), BBC (three datasets) and BBCSports (three datasets) (both available from http://mlg.ucd.ie/datasets/segment.html, accessed in October 2022), Handwritten digits (available from http://archive.ics.uci.edu/ml/datasets/Multiple+Features, accessed in October 2022) and Caltech (available from https://github.com/yeqinglee/mvdata, accessed in October 2022). To set up a fair evaluation environment, a single set of base partitions was generated for each data view of every dataset using the Cluto toolkit (code available at http://glaros.dtc.umn.edu/gkhome/cluto/cluto/overview, accessed in August 2022).

The 3Sources dataset was collected from three well-known online news sources: BBC, Reuters, and The Guardian. A total of 169 articles were manually annotated with one or more of the six topical labels: business, entertainment, health, politics, sport, and technology.

The BBC and BBCSports data were collected from BBC-news, and originally BBC contained 2225 documents annotated into 5 topics, while BBC-Sports comprised 737 documents also with 5 annotated labels. Following [13], from each corpus 2–4 synthetic views were constructed by segmenting the documents according to their paragraphs. Therefore, for each one, 3 multi-view datasets are used, namely BBC-seg2, BBC-seg3 and BBC-seg4 with 2, 3 and 4 views, respectively. The same idea applies for BBCSports-seg2, BBCSports-seg3 and BBCSports-seg4.

The Handwritten digits data contain 2000 instances for ten digit classes (0–9), and the views are built from six subsets of features: 76 Fourier coefficients of the character shapes, 216 profile correlations, 64 Karhunen–Loeve coefficients, 240 pixel averages, 47 Zernike moments and 6 morphological features.

Caltech is a dataset consisting of 2386 images grouped in 20 categories. We follow [39] and use six groups of handcrafted features as views, namely Gabor features, wavelet moments, CENTRIST features, HOG features, GIST features and LBP features.

For each dataset, only the instances that are labeled and present in all views are used. The details of each collection are presented in Table 2.
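A minimal sketch of this filtering step, assuming each view is stored as a dictionary from a hypothetical instance identifier to its feature representation: the instances kept are those present in every view and in the label dictionary, listed in one shared order so that all views stay aligned. The name `align_views` is illustrative.

```python
from typing import Dict, Hashable, List, Tuple, TypeVar

F = TypeVar("F")


def align_views(
    views: List[Dict[Hashable, F]], labels: Dict[Hashable, Hashable]
) -> Tuple[List[Hashable], List[List[F]], List[Hashable]]:
    """Keep only the instances that appear in every view and have a ground-truth label."""
    common = set(labels)
    for view in views:
        common &= set(view)          # intersect instance IDs across views
    ids = sorted(common, key=str)    # one deterministic order shared by all views
    aligned = [[view[i] for i in ids] for view in views]
    return ids, aligned, [labels[i] for i in ids]
```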

Table 2.

Overall description of the real data collections.

4.2.1. Baseline Methods

We compare the performance of our KMC algorithm against eight baseline methods: the three approaches originally proposed by Strehl and Ghosh [28], namely cluster-based similarity partitioning (CSPA), hypergraph partitioning (HGPA) and meta-clustering (MCLA) (Matlab code for these three methods available at http://strehl.com/soft.html, accessed in July 2022); the two variants of ECPCS (ensemble clustering via fast propagation of cluster-wise similarities) from [46]; and the three variants of MDEC (multidiversified ensemble clustering) from [45]. For all these methods, parameters were set as suggested in the corresponding papers.
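For readers unfamiliar with CSPA, its first step builds a similarity (co-association) matrix whose entry (i, j) is the fraction of base partitions that place objects i and j in the same cluster. The sketch below computes that matrix. Where CSPA then partitions the resulting similarity graph with METIS, the sketch swaps in a much simpler consensus, purely for illustration: connected components of the pairs co-clustered in more than half of the partitions. Both function names are hypothetical.

```python
from typing import List, Sequence


def co_association(partitions: Sequence[Sequence[int]]) -> List[List[float]]:
    """Entry (i, j): fraction of base partitions placing objects i and j together."""
    r = len(partitions)
    n = len(partitions[0])
    counts = [[0] * n for _ in range(n)]
    for labels in partitions:
        for i in range(n):
            for j in range(n):
                if labels[i] == labels[j]:
                    counts[i][j] += 1
    return [[c / r for c in row] for row in counts]


def threshold_consensus(sim: List[List[float]], threshold: float = 0.5) -> List[int]:
    """Simplified consensus (not METIS): connected components of pairs with sim > threshold."""
    n = len(sim)
    labels = [-1] * n
    next_label = 0
    for start in range(n):
        if labels[start] != -1:
            continue
        labels[start] = next_label
        stack = [start]
        while stack:  # depth-first search over one component
            i = stack.pop()
            for j in range(n):
                if labels[j] == -1 and sim[i][j] > threshold:
                    labels[j] = next_label
                    stack.append(j)
        next_label += 1
    return labels
```

With the three base partitions `[0, 0, 1, 1]`, `[0, 0, 1, 1]` and `[1, 0, 0, 0]`, objects 0 and 1 are co-clustered in two of three partitions, so the simplified consensus groups them together and returns `[0, 0, 1, 1]`.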

4.2.2. Operational Details of the Compared Methods

In order to run Algorithm 1, the number of random partitions was set to 80. Additionally, KMC was executed 10 times over each dataset, and the average clustering quality of the final consensus was reported. Considering that we used external performance criteria and that the methods under evaluation need the number of final clusters as an input parameter, the number of clusters was always set to the number of ground truth classes.
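The evaluation protocol above can be sketched as follows. `run_once` is a hypothetical callback standing in for one stochastic execution of a consensus method (e.g., KMC with its 80 random partitions) that returns an external quality score; the number of clusters is passed in and would be fixed to the number of ground-truth classes, and the score is averaged over 10 seeded runs.

```python
import statistics
from typing import Callable

# Values stated in the text.
N_RANDOM_PARTITIONS = 80  # random partitions used when running Algorithm 1
N_RUNS = 10               # independent executions averaged per dataset


def repeated_average(
    run_once: Callable[[int, int, int], float],
    n_clusters: int,
    n_runs: int = N_RUNS,
    base_seed: int = 0,
) -> float:
    """Average an external quality score over n_runs seeded executions.

    run_once(n_clusters, n_random_partitions, seed) is a hypothetical callback that
    runs the consensus method once and returns its score (e.g., NMI).
    """
    scores = [
        run_once(n_clusters, N_RANDOM_PARTITIONS, base_seed + r) for r in range(n_runs)
    ]
    return statistics.mean(scores)
```

For BBC, for example, `n_clusters` would be 5, the number of annotated topics.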

4.2.3. Discussion of the Experimental Results

Table 3 shows the results obtained by each method over each dataset. The best result for each measure on each dataset is highlighted in bold font, and the second best is underlined.

Table 3.

Performance results of each method assessed through several external quality measures. Best results are in bold, and second best are underlined.

Overall, the proposed method attains competitive results over datasets of varying sizes: in seven out of nine datasets, the consensus partitions built by our KMC algorithm were of higher quality than those of the baseline state-of-the-art methods. KMC performed well on both larger and smaller datasets, especially on text data. It is also worth mentioning that, in most scenarios, KMC simultaneously achieves the lowest entropy, the highest purity and the best normalized mutual information. Assuming that the ground-truth labels are correct, these results suggest that the exception-handling mechanism introduced by our proposal, together with its accumulative instance–cluster weights, succeeds in assigning problematic data objects to their final clusters.
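Entropy and purity are defined earlier in the paper. As a hedged sketch, the code below assumes the definitions commonly used in document clustering (e.g., in the reports of the Cluto toolkit): each cluster's entropy over the q ground-truth classes is normalized by log q and weighted by cluster size, and purity is the fraction of objects that belong to the majority class of their cluster. Under these definitions, lower entropy and higher purity are better. The name `entropy_purity` is hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Hashable, Sequence, Tuple


def entropy_purity(
    labels_true: Sequence[Hashable], labels_pred: Sequence[Hashable]
) -> Tuple[float, float]:
    """Weighted cluster entropy (lower is better) and purity (higher is better)."""
    n = len(labels_true)
    q = len(set(labels_true))        # number of ground-truth classes
    members = defaultdict(Counter)   # cluster -> counts of each class inside it
    for t, p in zip(labels_true, labels_pred):
        members[p][t] += 1
    total_entropy, correct = 0.0, 0
    for counts in members.values():
        n_r = sum(counts.values())
        correct += max(counts.values())  # objects of the cluster's majority class
        if q > 1:
            e_r = -sum(c / n_r * math.log(c / n_r) for c in counts.values()) / math.log(q)
            total_entropy += n_r / n * e_r
    return total_entropy, correct / n
```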

Beyond attaining comparable results over most datasets, KMC notably achieves about half the entropy of the other methods on BBC-seg3, BBCSports-seg2 and BBCSports-seg4. Furthermore, on BBC-seg2, BBC-seg4, BBCSports-seg2, BBCSports-seg3 and BBCSports-seg4, the purity, the normalized mutual information, or both attained by KMC exceed those of the second-best method by more than ten percent.

Notwithstanding the promising results obtained by KMC, its performance on the Caltech dataset deserves particular attention. On this dataset, our proposal lags well behind the two best methods on every measure. These values suggest that the additional refinements that the winning methods apply to the co-association matrix provide insights for the consensus procedure that our proposal is unable to capture.

Together with Caltech, the Handwritten dataset suggests that KMC performs worse on non-text data, more specifically on image data. One possible cause of this drop in performance is the poor quality of the initial solutions found by the base method. In any case, these two datasets constitute unfavorable scenarios for KMC.

In summary, the experiments on real data show that our proposed method is very competitive with other state-of-the-art methods, some of which were published quite recently.

4.3. Empirical Analysis of the Stability of the Consensus Solution

The aim of this section is to empirically study how the quality of the consensus solution built by KMC is affected by the degree of discrepancy among the data views. To this end, we simulate several artificial multi-view datasets presenting different degrees of disagreement between the views, and we apply our method to them. In this way, we can sweep a range of discrepancy level values and assess the quality of the final ensemble solution independently of the base method used to build the initial solutions.

Procedure

We consider artificial datasets made of several views over the same data instances. Since we want to assess how factors such as the number of clusters, the number of views, and the quality of the local partitions influence our method, we used the following methodology:

- For each simulation, we generated multi-view data belonging to k clusters (k depending on the simulation) spread across eight views, together with the matching ground-truth partitions.

- In m out of the eight views, the partitions were altered with a given degree of noise, i.e., random changes in cluster assignments, to simulate local solutions of varying quality.

- In the remaining 8 − m views, only 5% of the assignments were altered.
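The simulation procedure can be sketched as follows. Several details are assumptions not stated in the text: ground-truth clusters are balanced, the noise ratio is applied as an exact fraction of objects, and every altered object is moved to a different, uniformly chosen cluster (so it always changes). `alter_partition` and `simulate_views` are hypothetical names, and `k >= 2` is required.

```python
import random
from typing import List, Tuple


def alter_partition(labels: List[int], ratio: float, k: int, rng: random.Random) -> List[int]:
    """Reassign a `ratio` fraction of objects to a different, randomly chosen cluster."""
    if k < 2:
        raise ValueError("at least two clusters are needed to alter assignments")
    noisy = list(labels)
    n_changes = round(ratio * len(labels))
    for i in rng.sample(range(len(labels)), n_changes):
        # Pick any cluster other than the current one, so every selected object changes.
        noisy[i] = rng.choice([c for c in range(k) if c != noisy[i]])
    return noisy


def simulate_views(n: int, k: int, m: int, ratio: float, n_views: int = 8,
                   base_ratio: float = 0.05, seed: int = 0) -> Tuple[List[int], List[List[int]]]:
    """Ground truth plus n_views noisy copies: m views altered at `ratio`, the rest at 5%."""
    rng = random.Random(seed)
    truth = [i % k for i in range(n)]  # balanced ground-truth clusters
    rng.shuffle(truth)
    views = [alter_partition(truth, ratio if v < m else base_ratio, k, rng)
             for v in range(n_views)]
    return truth, views
```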

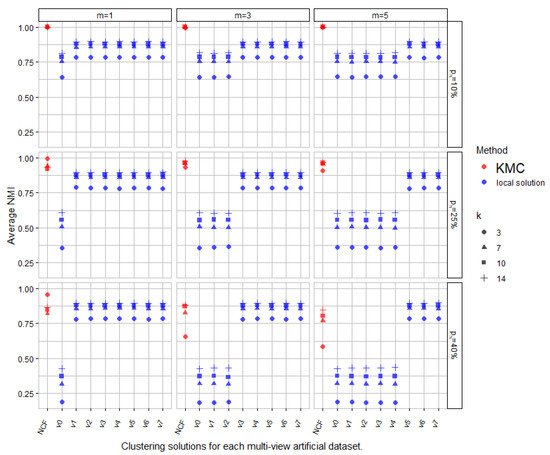

During our experiments, we tried several combinations of the number of clusters (3, 7, 10 and 14), the number of altered views (1, 3 and 5 out of 8) and the ratio of alteration (10%, 25% and 40%). Finally, the average NMI of each view and of the consensus solution was measured across all simulations, as depicted in Figure 3.
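The text only says "several combinations", so the following assumes the full Cartesian product of the listed values was explored. Under that assumption, the experimental grid contains 4 × 3 × 3 = 36 configurations, i.e., 3 × 3 = 9 (m, ratio) pairs for each number of clusters. Each configuration would then be simulated and scored with NMI for every view and for the consensus.

```python
from itertools import product

N_CLUSTERS = (3, 7, 10, 14)
N_ALTERED_VIEWS = (1, 3, 5)            # m, out of 8 views
ALTERATION_RATIOS = (0.10, 0.25, 0.40)

# Every (k, m, ratio) triple; each one would be simulated and evaluated with NMI.
grid = [
    {"k": k, "m": m, "ratio": r}
    for k, m, r in product(N_CLUSTERS, N_ALTERED_VIEWS, ALTERATION_RATIOS)
]
```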

Figure 3.

Average NMI values measured over all views plus the consensus solutions across all simulations.

Figure 3 presents the quality of the results, in terms of NMI, over several datasets generated with varying levels of agreement between the views. Several observations can be made. As expected, the best results are achieved for configurations with fewer noisy views and a lower ratio of alteration. In the central plot, 3 out of 8 views show a larger level of disagreement with the ground-truth partition. Even so, in many cases where the number of noisy views remains reasonable, KMC achieves a consensus partition of higher quality than any of the individual views (seven configurations out of nine), almost regardless of the number of clusters tested.

5. Conclusions and Future Works

In this paper, we presented KMC, a novel multi-view clustering fusion method relying on the notion of Kolmogorov complexity. This method is able to detect discrepancies and common points, and to merge partitions acquired from different algorithms or views. While minimum description length and Kolmogorov complexity have already been used in multi-view clustering contexts, the originality of our method lies in two points:

- Unlike [7], which introduced the use of Kolmogorov complexity in a multi-view setting, we aim at fully merging the clusters rather than only optimizing them locally.

- Unlike [51], which also performs multi-view merging, we improve the optimization process that reduces conflicts and merges partitions effectively: we propose a new algorithm to choose the merging order of the partitions, which makes our algorithm more stable than earlier versions of the same technique.

Furthermore, our algorithm also uses techniques from unsupervised ensemble learning (merging partitions from several algorithms), and is applied to a multi-view clustering context (multiple representations of the same data objects).

We compared our method with algorithms from the fields of unsupervised ensemble learning and multi-view clustering on several datasets from the literature. Despite strong differences in terms of algorithmic philosophy and original applications, we showed that KMC attains a consensus quality competitive with state-of-the-art techniques. Its competitiveness, coupled with the novelty of its core principle, is an important contribution to the field of unsupervised ensemble learning, as it increases the variety of available methods, which is particularly valuable in an unsupervised setting.

Finally, we conducted an empirical study of how our method behaves under different noise conditions and numbers of noisy views, and of the influence of the number of clusters. These results offer insight into the finer properties of the proposed algorithm, which proved resilient to noise and highly adaptive in these simulations. This part of the study is important, as it is unknown how other state-of-the-art methods behave under similar conditions.

In future work, we plan to focus on the theoretical properties of our algorithm that may be inferred from the empirical study. In particular, it would be interesting to derive bounds based on noise levels, and to study how the clustering stability of the local partitions influences the result of the merge.

Author Contributions

J.Z. and J.S. worked together for most of this work. The theoretical model comes more from J.S., while the final application was provided by J.Z. Both authors worked on the experiment design. J.Z. performed most of the experiments. Finally, interpretation and proofreading were handled by both authors. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially funded by project Fondecyt Initiation into Research 11200826 by ANID, Chile.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Sources can be found at https://github.com/jfzo/Multiview-clustering/releases/tag/Journal (last accessed in February 2023).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CSPA | Cluster-based Similarity Partitioning |
| ECPCS | Ensemble Clustering via fast Propagation of Cluster-wise Similarities |
| HGPA | Hypergraph Partitioning |
| KMC | Kolmogorov-based Multi-view Clustering |
| MCLA | Meta Clustering |
| MDEC | Multidiversified Ensemble Clustering |
| NMI | Normalized Mutual Information |

References

- Tagarelli, A.; Karypis, G. A segment-based approach to clustering multi-topic documents. Knowl. Inf. Syst. 2013, 34, 563–595.

- Fraj, M.; HajKacem, M.A.B.; Essoussi, N. Ensemble Method for Multi-view Text Clustering. In Proceedings of the Computational Collective Intelligence—11th International Conference, ICCCI 2019, Hendaye, France, 4–6 September 2019; pp. 219–231.

- Zimek, A.; Vreeken, J. The blind men and the elephant: On meeting the problem of multiple truths in data from clustering and pattern mining perspectives. Mach. Learn. 2015, 98, 121–155.

- Ghosh, J.; Acharya, A. Cluster ensembles. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 305–315.

- Wallace, C.S.; Boulton, D.M. An Information Measure for Classification. Comput. J. 1968, 11, 185–194.

- Rissanen, J. Modeling by shortest data description. Automatica 1978, 14, 465–471.

- Murena, P.; Sublime, J.; Matei, B.; Cornuéjols, A. An Information Theory based Approach to Multisource Clustering. In Proceedings of the IJCAI, Stockholm, Sweden, 13–19 July 2018; pp. 2581–2587.

- Zamora, J.; Sublime, J. A New Information Theory Based Clustering Fusion Method for Multi-view Representations of Text Documents. In Proceedings of the Social Computing and Social Media, Design, Ethics, User Behavior, and Social Network Analysis—12th International Conference, SCSM 2020, Held as Part of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020; Meiselwitz, G., Ed.; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2020; Volume 12194, pp. 156–167.

- Murena, P.A.; Sublime, J.; Matei, B. Rethinking Collaborative Clustering: A Practical and Theoretical Study within the Realm of Multi-View Clustering. In Recent Advancements in Multi-View Data Analytics; Studies in Big Data Series; Springer: Berlin/Heidelberg, Germany, 2022; Volume 106.

- Bickel, S.; Scheffer, T. Multi-View Clustering. In Proceedings of the 4th IEEE International Conference on Data Mining (ICDM 2004), Brighton, UK, 1–4 November 2004; pp. 19–26.

- Janssens, F.; Glänzel, W.; De Moor, B. Dynamic hybrid clustering of bioinformatics by incorporating text mining and citation analysis. In Proceedings of the 13th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Jose, CA, USA, 12–15 August 2007; pp. 360–369.

- Liu, X.; Yu, S.; Moreau, Y.; De Moor, B.; Glänzel, W.; Janssens, F. Hybrid clustering of text mining and bibliometrics applied to journal sets. In Proceedings of the 2009 SIAM International Conference on Data Mining, Sparks, NV, USA, 30 April–2 May 2009; pp. 49–60.

- Greene, D.; Cunningham, P. A matrix factorization approach for integrating multiple data views. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Bled, Slovenia, 7–11 September 2009; pp. 423–438.

- Yu, S.; De Moor, B.; Moreau, Y. Clustering by heterogeneous data fusion: Framework and applications. In Proceedings of the NIPS Workshop, Whistler, BC, Canada, 11 December 2009.

- Liu, X.; Glänzel, W.; De Moor, B. Hybrid clustering of multi-view data via Tucker-2 model and its application. Scientometrics 2011, 88, 819–839.

- Liu, X.; Ji, S.; Glänzel, W.; De Moor, B. Multiview partitioning via tensor methods. IEEE Trans. Knowl. Data Eng. 2012, 25, 1056–1069.

- Xie, X.; Sun, S. Multi-view clustering ensembles. In Proceedings of the International Conference on Machine Learning and Cybernetics, ICMLC 2013, Tianjin, China, 14–17 July 2013; pp. 51–56.

- Romeo, S.; Tagarelli, A.; Ienco, D. Semantic-based multilingual document clustering via tensor modeling. In Proceedings of the Conference on Empirical Methods in Natural Language Processing EMNLP, Doha, Qatar, 25–29 October 2014; pp. 600–609.

- Hussain, S.F.; Mushtaq, M.; Halim, Z. Multi-view document clustering via ensemble method. J. Intell. Inf. Syst. 2014, 43, 81–99.

- Benjamin, J.B.M.; Yang, M.S. Weighted Multiview Possibilistic C-Means Clustering With L2 Regularization. IEEE Trans. Fuzzy Syst. 2022, 30, 1357–1370.

- Xu, Y.M.; Wang, C.D.; Lai, J.H. Weighted Multi-view Clustering with Feature Selection. Pattern Recognit. 2016, 53, 25–35.

- Visalakshi, N.K.; Thangavel, K. Distributed Data Clustering: A Comparative Analysis. In Foundations of Computational Intelligence Volume 6: Data Mining; Abraham, A., Hassanien, A.E., de Leon, F., de Carvalho, A.P., Snášel, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 371–397.

- Cornuéjols, A.; Wemmert, C.; Gançarski, P.; Bennani, Y. Collaborative clustering: Why, when, what and how. Inf. Fusion 2018, 39, 81–95.

- Pedrycz, W. Collaborative fuzzy clustering. Pattern Recognit. Lett. 2002, 23, 1675–1686.

- Grozavu, N.; Bennani, Y. Topological Collaborative Clustering. Aust. J. Intell. Inf. Process. Syst. 2010, 12, 14.

- Jiang, Y.; Chung, F.L.; Wang, S.; Deng, Z.; Wang, J.; Qian, P. Collaborative Fuzzy Clustering From Multiple Weighted Views. IEEE Trans. Cybern. 2015, 45, 688–701.

- Yang, M.S.; Sinaga, K.P. Collaborative feature-weighted multi-view fuzzy c-means clustering. Pattern Recognit. 2021, 119, 108064.

- Strehl, A.; Ghosh, J. Cluster ensembles—A knowledge reuse framework for combining multiple partitions. J. Mach. Learn. Res. 2002, 3, 583–617.

- Li, T.; Ogihara, M.; Ma, S. On combining multiple clusterings. In Proceedings of the Thirteenth ACM International Conference on Information and Knowledge Management, Washington, DC, USA, 8–13 November 2004; pp. 294–303.

- Fred, A.L.; Jain, A.K. Combining multiple clusterings using evidence accumulation. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 835–850.

- Topchy, A.; Jain, A.K.; Punch, W. Clustering ensembles: Models of consensus and weak partitions. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1866–1881.

- Yi, J.; Yang, T.; Jin, R.; Jain, A.K.; Mahdavi, M. Robust ensemble clustering by matrix completion. In Proceedings of the 2012 IEEE 12th International Conference on Data Mining, Brussels, Belgium, 10–13 December 2012; pp. 1176–1181.

- Wu, J.; Liu, H.; Xiong, H.; Cao, J.; Chen, J. K-means-based consensus clustering: A unified view. IEEE Trans. Knowl. Data Eng. 2014, 27, 155–169.

- Liu, H.; Zhao, R.; Fang, H.; Cheng, F.; Fu, Y.; Liu, Y.Y. Entropy-based consensus clustering for patient stratification. Bioinformatics 2017, 33, 2691–2698.

- Rashidi, F.; Nejatian, S.; Parvin, H.; Rezaie, V. Diversity based cluster weighting in cluster ensemble: An information theory approach. Artif. Intell. Rev. 2019, 52, 1341–1368.

- Vega-Pons, S.; Ruiz-Shulcloper, J. A Survey of Clustering Ensemble Algorithms. IJPRAI 2011, 25, 337–372.

- Kuncheva, L.I.; Whitaker, C.J. Measures of Diversity in Classifier Ensembles and Their Relationship with the Ensemble Accuracy. Mach. Learn. 2003, 51, 181–207.

- Wemmert, C.; Gancarski, P. A multi-view voting method to combine unsupervised classifications. In Proceedings of the 2nd IASTED International Conference on Artificial Intelligence and Applications, Málaga, Spain, 9–12 September 2002; pp. 447–452.

- Li, Y.; Nie, F.; Huang, H.; Huang, J. Large-Scale Multi-View Spectral Clustering via Bipartite Graph. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 2750–2756.

- Kang, Z.; Guo, Z.; Huang, S.; Wang, S.; Chen, W.; Su, Y.; Xu, Z. Multiple Partitions Aligned Clustering. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI 2019, Macao, China, 10–16 August 2019; pp. 2701–2707.

- Li, S.Y.; Jiang, Y.; Zhou, Z.H. Partial Multi-View Clustering. In Proceedings of the Twenty-Eighth AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; AAAI Press: Washington, DC, USA, 2014; pp. 1968–1974.

- Wang, H.; Yang, Y.; Liu, B. GMC: Graph-Based Multi-View Clustering. IEEE Trans. Knowl. Data Eng. 2020, 32, 1116–1129.

- Kang, Z.; Zhao, X.; Peng, C.; Zhu, H.; Zhou, J.T.; Peng, X.; Chen, W.; Xu, Z. Partition level multiview subspace clustering. Neural Netw. 2020, 122, 279–288.

- Zhong, C.; Hu, L.; Yue, X.; Luo, T.; Fu, Q.; Xu, H. Ensemble clustering based on evidence extracted from the co-association matrix. Pattern Recognit. 2019, 92, 93–106.

- Huang, D.; Wang, C.D.; Lai, J.H.; Kwoh, C.K. Toward Multidiversified Ensemble Clustering of High-Dimensional Data: From Subspaces to Metrics and Beyond. IEEE Trans. Cybern. 2021, 52, 12231–12244.

- Huang, D.; Wang, C.D.; Peng, H.; Lai, J.; Kwoh, C.K. Enhanced Ensemble Clustering via Fast Propagation of Cluster-Wise Similarities. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 508–520.

- Yeh, C.C.; Yang, M.S. Evaluation measures for cluster ensembles based on a fuzzy generalized Rand index. Appl. Soft Comput. 2017, 57, 225–234.

- Sublime, J.; Matei, B.; Cabanes, G.; Grozavu, N.; Bennani, Y.; Cornuéjols, A. Entropy based probabilistic collaborative clustering. Pattern Recognit. 2017, 72, 144–157.

- Ros, F.; Guillaume, S. ProTraS: A probabilistic traversing sampling algorithm. Expert Syst. Appl. 2018, 105, 65–76.

- Steinbach, M.; Karypis, G.; Kumar, V. A comparison of document clustering techniques. In Proceedings of the KDD Workshop on Text Mining, Boston, MA, USA, 20–23 August 2000.

- Zamora, J.; Allende-Cid, H.; Mendoza, M. Distributed Clustering of Text Collections. IEEE Access 2019, 7, 155671–155685.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).