1. Introduction

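The Shannon entropy of a probability distribution has found important applications in numerous areas, as described in Shannon’s seminal work [1]. Information theory provides an uncertainty measure associated with unpredictable phenomena. If $X$ is a non-negative random variable (rv) with absolutely continuous cumulative distribution function (cdf) $F(x)$ and probability density function (pdf) $f(x)$, the Shannon entropy is represented as follows:

$$H(X) = -\int_0^\infty f(x)\log f(x)\,dx,$$

provided the integral is finite. The Tsallis entropy of order $\alpha$ generalizes the Shannon entropy and is defined by (see [2])

$$H_\alpha(X) = \frac{1}{1-\alpha}\left[\int_0^\infty f^\alpha(x)\,dx - 1\right] = \frac{1}{1-\alpha}\left[E\left(f^{\alpha-1}(F^{-1}(U))\right) - 1\right], \qquad (2)$$

where $U$ is uniformly distributed on $(0,1)$, for all $\alpha > 0$, $\alpha \neq 1$, where $E(\cdot)$ means the expectation and $F^{-1}(u) = \inf\{x : F(x) \ge u\}$ for $u \in [0,1]$ stands for the quantile function. Generally, the Tsallis entropy can be negative; however, it is non-negative if a proper value of $\alpha$ is chosen. It is evident that $\lim_{\alpha \to 1} H_\alpha(X) = H(X)$, and hence it reduces to the Shannon entropy.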
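As a numerical illustration of the Tsallis entropy defined above and of its limit as $\alpha \to 1$, the following Python sketch evaluates the entropy of an exponential pdf by Simpson quadrature and compares it with the closed form $(\lambda^{\alpha-1}/\alpha - 1)/(1-\alpha)$ obtained by integrating $f^\alpha$ directly. The exponential model, the rate 0.5, the truncation point and all function names are illustrative assumptions, not part of the original paper.

```python
import math

def simpson(func, a, b, n=20000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3.0

def tsallis_entropy(pdf, alpha, upper):
    """(integral of pdf^alpha over [0, upper] - 1) / (1 - alpha), alpha > 0, alpha != 1."""
    integral = simpson(lambda x: pdf(x) ** alpha, 0.0, upper)
    return (integral - 1.0) / (1.0 - alpha)

def tsallis_exponential(rate, alpha):
    """Closed form for an exponential pdf: the integral of f^alpha is rate^(alpha-1)/alpha."""
    return (rate ** (alpha - 1.0) / alpha - 1.0) / (1.0 - alpha)

rate = 0.5  # assumed rate of the exponential model

def exponential_pdf(x):
    return rate * math.exp(-rate * x)

# Numerical value versus closed form for alpha = 2 (the tail beyond 60 is negligible).
numeric = tsallis_entropy(exponential_pdf, alpha=2.0, upper=60.0)
closed = tsallis_exponential(rate, alpha=2.0)

# As alpha approaches 1, the Tsallis entropy approaches the Shannon entropy 1 - log(rate).
shannon = 1.0 - math.log(rate)
near_one = tsallis_exponential(rate, alpha=1.0 + 1e-6)

print(numeric, closed, near_one, shannon)
```

The quadrature is deliberately elementary so that the block depends only on the standard library; any adaptive integrator could be substituted.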

It is known that the Shannon entropy is additive. The Tsallis entropy is, however, non-additive, since for two independent rv's $X$ and $Y$

$$H_\alpha(X,Y) = H_\alpha(X) + H_\alpha(Y) + (1-\alpha)H_\alpha(X)H_\alpha(Y).$$

Due to the flexibility of the Tsallis entropy compared to the Shannon entropy, non-additive entropy measures find their justification in many areas of information theory, physics, chemistry, and technology.
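The non-additivity can be checked on a small example. The sketch below assumes two independent exponential lifetimes (rates 1 and 3 are arbitrary choices) and uses the fact that, under independence, the integral of the $\alpha$-th power of the joint pdf factorizes into the product of the two marginal integrals. The function names are hypothetical.

```python
def power_integral_exponential(rate, alpha):
    """Integral over (0, inf) of f(x)^alpha for an exponential pdf with the given rate."""
    return rate ** (alpha - 1.0) / alpha

def tsallis_from_power_integral(integral, alpha):
    """Map the integral of pdf^alpha to the Tsallis entropy of order alpha."""
    return (integral - 1.0) / (1.0 - alpha)

alpha = 0.5
rate_x, rate_y = 1.0, 3.0  # assumed rates of the independent lifetimes X and Y

h_x = tsallis_from_power_integral(power_integral_exponential(rate_x, alpha), alpha)
h_y = tsallis_from_power_integral(power_integral_exponential(rate_y, alpha), alpha)

# Independence: the double integral of (f(x) g(y))^alpha is a product of single integrals.
joint_integral = (power_integral_exponential(rate_x, alpha)
                  * power_integral_exponential(rate_y, alpha))
h_joint = tsallis_from_power_integral(joint_integral, alpha)

# Plain sum versus the sum corrected by the cross term (1 - alpha) * H(X) * H(Y).
plain_sum = h_x + h_y
pseudo_additive = h_x + h_y + (1.0 - alpha) * h_x * h_y

print(h_joint, plain_sum, pseudo_additive)
```

The joint entropy matches the expression with the cross term and differs from the plain sum, which is exactly the departure from additivity described above.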

Several properties and statistical aspects of the Tsallis entropy can be found in [3,4,5,6]. A novel measure of uncertainty involving the differential Shannon entropy and the Kullback–Leibler discrimination measure, intended for comparing systems in terms of uncertainty in predicting lifetimes, has been developed in [7,8,9]. A similar result on the subject has been discussed in [10]. Furthermore, the Tsallis entropy properties of order statistics have been studied in [11]. We aim here to continue the research within an analogous framework by studying the Tsallis entropy of coherent systems and mixed systems in the iid case.

The rest of the article is organized as follows. In Section 2, we first study some essential properties of the Tsallis entropy of order $\alpha$ and then establish sufficient conditions for it to preserve the usual stochastic order. Section 3 examines various properties of the dynamical version in detail. Section 4 discusses the Tsallis entropy and its properties for coherent structures and mixed structures in the iid case. Finally, bounds on the Tsallis entropy of system lifetimes are also given.

The stochastic orders $\le_{st}$, $\le_{hr}$ and $\le_{disp}$, known as the usual stochastic order, the hazard rate order and the dispersive order, respectively, will be used in the rest of the paper (see Shaked and Shanthikumar [12]).

The following implication holds:

$$X \le_{hr} Y \implies X \le_{st} Y.$$

The dispersive order is recognized as an order of distributional variability.

2. Properties of Tsallis Entropy

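Below are other useful properties of the measure. First, another useful expression for the Tsallis entropy can be given in terms of the proportional hazards (PH) model. For this purpose, let $S(x) = 1 - F(x)$ denote the survival function (sf) of $X$ and $\lambda(x) = f(x)/S(x)$ its hazard rate function. Then the Tsallis entropy is expressed as

$$H_\alpha(X) = \frac{1}{1-\alpha}\left[\frac{1}{\alpha}E\left(\lambda^{\alpha-1}(X_\alpha)\right) - 1\right],$$

for all $\alpha > 0$, $\alpha \neq 1$, where $X_\alpha$ denotes the rv with pdf

$$f_\alpha(x) = \alpha f(x) S^{\alpha-1}(x), \quad x > 0. \qquad (4)$$

In Table 1, we provide the Tsallis entropy of some well-known distributions.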

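It is worth noting that, for $\alpha = 2$, the pdf given in (4) is the pdf of the minimum of two rv’s in the iid case (see [13]). We note that the Tsallis entropy is invariant under a one-to-one transformation of the rv in the discrete case, while it is not invariant in the continuous case. In the latter case, if $\phi$ is a one-to-one function and $Y = \phi(X)$, then (see e.g., Ebrahimi et al. [14])

$$H_\alpha(Y) = \frac{1}{1-\alpha}\left[\int f^\alpha(\phi^{-1}(y))\,|J_\phi(y)|^\alpha\,dy - 1\right],$$

where $J_\phi(y) = \frac{d}{dy}\phi^{-1}(y)$ is the Jacobian of the transformation. It is evident that $J_\phi(y) = 1/a$ when $\phi(x) = ax + b$. Hence, one can readily find that

$$H_\alpha(aX + b) = a^{1-\alpha}H_\alpha(X) + \frac{a^{1-\alpha} - 1}{1-\alpha},$$

for all $a > 0$ and $b \ge 0$. The Shannon entropy is scale-dependent. However, it is free of location, that is, $X$ retains the identical differential entropy as $X + b$ for any $b \ge 0$. The same result also holds for the Tsallis entropy. Indeed, for all $\alpha > 0$ and $b \ge 0$, from the above relation with $a = 1$, we have

$$H_\alpha(X + b) = H_\alpha(X).$$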
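The behaviour under scale and location changes can be checked numerically. The sketch below assumes an exponential lifetime and an affine map $Y = aX + b$, whose pdf is $f((y-b)/a)/a$ on $[b, \infty)$. It compares the numerically integrated entropy of $Y$ with the value $a^{1-\alpha}H_\alpha(X) + (a^{1-\alpha}-1)/(1-\alpha)$, which follows from the change of variables $y = ax + b$ in the integral of the $\alpha$-th power of the pdf. All parameter values and function names are assumptions made for illustration.

```python
import math

def simpson(func, a, b, n=20000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3.0

def tsallis_entropy(pdf, lower, upper, alpha):
    """Tsallis entropy of order alpha for a pdf supported (numerically) on [lower, upper]."""
    return (simpson(lambda x: pdf(x) ** alpha, lower, upper) - 1.0) / (1.0 - alpha)

rate, scale, shift, alpha = 1.5, 2.0, 3.0, 0.7  # assumed illustrative values

def pdf_x(x):
    return rate * math.exp(-rate * x)

def pdf_affine(y):
    # pdf of Y = scale * X + shift on [shift, inf)
    return pdf_x((y - shift) / scale) / scale

def pdf_shifted(y):
    # pdf of X + shift on [shift, inf)
    return pdf_x(y - shift)

h_x = tsallis_entropy(pdf_x, 0.0, 80.0, alpha)
h_affine = tsallis_entropy(pdf_affine, shift, shift + 160.0, alpha)
h_shifted = tsallis_entropy(pdf_shifted, shift, shift + 80.0, alpha)

# Value implied by the change of variables y = scale * x + shift.
factor = scale ** (1.0 - alpha)
predicted = factor * h_x + (factor - 1.0) / (1.0 - alpha)

print(h_x, h_shifted, h_affine, predicted)
```

A pure shift leaves the entropy unchanged, while rescaling changes it by the factor and offset shown in `predicted`.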

Now, we recall the definition of the Tsallis entropy order; see [4] for further details.

Definition 1. Suppose $X$ and $Y$ are two rv's with the cdfs $F$ and $G$, respectively. The rv $X$ is said to be smaller than the rv $Y$ in the Tsallis entropy of order $\alpha$ if $H_\alpha(X) \le H_\alpha(Y)$ for all $\alpha > 0$.

It is worth pointing out that this ordering indicates that the predictability of the outcome of $X$ is more than that of $Y$ in terms of the Tsallis entropy. As an immediate consequence of (2), consider the subsequent theorem.

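Theorem 1. Let $X$ and $Y$ be two rv's with cdfs $F$ and $G$, respectively. If $X \le_{disp} Y$, then $H_\alpha(X) \le H_\alpha(Y)$ for all $\alpha > 0$.

Proof. If $X \le_{disp} Y$, then, for all $u \in (0,1)$,

$$f(F^{-1}(u)) \ge g(G^{-1}(u)),$$

where $f$ and $g$ are the pdfs of $X$ and $Y$, respectively. By the quantile representation in (2), this yields $H_\alpha(X) \le H_\alpha(Y)$ for all $\alpha > 0$, $\alpha \neq 1$. □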
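Theorem 1 can be illustrated with two exponential lifetimes, for which the density-quantile function is $f(F^{-1}(u)) = \lambda(1-u)$. The sketch below assumes rates 2 and 1, so that the first variable is smaller in the dispersive order, and checks the entropy ordering on a small grid of orders $\alpha$. The rates, the grid and the function names are illustrative assumptions.

```python
def density_quantile_exponential(rate, u):
    """f(F^{-1}(u)) for an exponential pdf with the given rate, equal to rate * (1 - u)."""
    return rate * (1.0 - u)

def tsallis_exponential(rate, alpha):
    """Closed-form Tsallis entropy of order alpha for an exponential pdf."""
    return (rate ** (alpha - 1.0) / alpha - 1.0) / (1.0 - alpha)

rate_x, rate_y = 2.0, 1.0  # assumed rates; X is less dispersed than Y

# Dispersive order: the density-quantile function of X dominates that of Y on (0, 1).
grid = [k / 100.0 for k in range(1, 100)]
dispersive_ok = all(
    density_quantile_exponential(rate_x, u) >= density_quantile_exponential(rate_y, u)
    for u in grid
)

# Entropy ordering for orders below and above 1.
alphas = [0.25, 0.5, 0.9, 1.5, 2.0, 5.0]
entropies = [(a, tsallis_exponential(rate_x, a), tsallis_exponential(rate_y, a))
             for a in alphas]
entropy_ordered = all(h_x <= h_y for _, h_x, h_y in entropies)

for a, h_x, h_y in entropies:
    print(a, h_x, h_y)
```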

The following theorem develops the impact of a transformation on the Tsallis entropy of an rv. It is analogous to Theorem 1 in [15] and hence we skip its proof.

Theorem 2. Let with the pdf and , where is a function with a continuous derivative such that If for all x supported by then for all

The next theorem presents implications of the stochastic order under some aging constraints of the associated rv’s and the order

Theorem 3. If and

- (i)

is DFR, then for all

- (ii)

is DFR, then for all

Proof. (i) Let

and

If

is DFR, then

The first inequality in (

5) is obtained as follows. Since

is DFR, then

is increasing in

x for all

So, the result ensues from the fact that

implies

for all increasing function

The second inequality is obtained by using Hölder’s inequality. Thanks to the use of relations (

2) and (

5), we have

which proves the claim. To prove (ii), we have

The first inequality is obtained by mentioning that is DFR and hence is decreasing in x for all while the second inequality is given by using Hölder’s inequality. Now, the results follow. □

It is worth noting that Theorem 3 can be applied to several statistical models such as Weibull, Rayleigh, Pareto, Gamma, and Half Logistic, among others. The mentioned models involve the DFR aging property by choosing a suitable parameter.

3. Properties of Residual Tsallis Entropy

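Here we give an overview of the dynamical perspectives of the Tsallis entropy of order $\alpha$. We note that in this section, the term “decreasing” is equivalent to “non-increasing”, and “increasing” is equivalent to “non-decreasing”. Suppose that $X$ is the life length of a new system. In this case, the Tsallis entropy $H_\alpha(X)$ is appropriate to measure the uncertainty of such a system. However, if the system remains alive until the age $t$, then $H_\alpha(X)$ is not appropriate to measure the remaining or residual uncertainty in the system’s lifetime. Thus, let us denote by $X_t = [X - t \mid X > t]$ the residual lifetime of an item with the pdf

$$f_t(x) = \frac{f(x+t)}{S(t)}, \quad x, t > 0,$$

where $S(t) = 1 - F(t)$ is the sf of $X$. Then, the residual Tsallis entropy is represented by

$$H_\alpha(X;t) = \frac{1}{1-\alpha}\left[\int_0^\infty f_t^\alpha(x)\,dx - 1\right] = \frac{1}{1-\alpha}\left[\int_t^\infty \left(\frac{f(x)}{S(t)}\right)^\alpha dx - 1\right],$$

for all $\alpha > 0$, $\alpha \neq 1$, and $t > 0$. Another useful representation is as follows:

$$H_\alpha(X;t) = \frac{1}{1-\alpha}\left[\frac{1}{\alpha}E\left(\lambda^{\alpha-1}(X_{t,\alpha})\right) - 1\right],$$

where $\lambda(x) = f(x)/S(x)$ is the hazard rate function and $X_{t,\alpha}$ denotes the rv having pdf

$$f_{t,\alpha}(x) = \frac{\alpha f(x) S^{\alpha-1}(x)}{S^\alpha(t)}, \quad x > t.$$

Based on the measure $H_\alpha(X;t)$, a few classes of life distributions are proposed.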

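Definition 2. The rv $X$ has increasing (decreasing) residual Tsallis entropy of order $\alpha$ if $H_\alpha(X;t)$ is increasing (decreasing) in $t$ for all $\alpha > 0$.

Roughly speaking, if a unit has a cdf belonging to the class with increasing (decreasing) residual Tsallis entropy, then the conditional pdf becomes less (more) informative as the unit becomes older. In the following lemma, we give the derivative of the residual Tsallis entropy.

Lemma 1. For all $\alpha > 0$, $\alpha \neq 1$, we have

$$\frac{d}{dt}H_\alpha(X;t) = \alpha\lambda(t)H_\alpha(X;t) + \frac{\lambda(t)\left[\alpha - \lambda^{\alpha-1}(t)\right]}{1-\alpha}, \qquad (8)$$

for all $t > 0$, where $\lambda(t) = f(t)/S(t)$ is the hazard rate function of $X$.

Remark 1. Let us assume that $X$ has both increasing and decreasing residual Tsallis entropy. Then $H_\alpha(X;t)$ is constant in $t$, and therefore $\frac{d}{dt}H_\alpha(X;t) = 0$. That is, $\lambda^{\alpha-1}(t) = \alpha\left[1 + (1-\alpha)H_\alpha(X;t)\right]$ is constant, so the hazard rate is constant. This reveals that the exponential distribution is the only distribution having both increasing and decreasing residual Tsallis entropy.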
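The remark about the exponential distribution can be illustrated numerically. The sketch below assumes the standard form of the residual Tsallis entropy, namely the Tsallis entropy of the conditional pdf $f(x)/S(t)$ on $x > t$, and evaluates it for an exponential lifetime at several ages. By the memoryless property every value should coincide with the entropy at age zero. The rate 0.8, the order 2, the ages and the function names are illustrative assumptions.

```python
import math

def simpson(func, a, b, n=20000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3.0

def residual_tsallis(pdf, sf, age, alpha, width):
    """Tsallis entropy of the residual lifetime at the given age.

    Integrates (pdf(x) / sf(age))^alpha over [age, age + width]; width must be
    large enough for the neglected tail to be negligible.
    """
    survival_at_age = sf(age)
    integral = simpson(lambda x: (pdf(x) / survival_at_age) ** alpha, age, age + width)
    return (integral - 1.0) / (1.0 - alpha)

rate, alpha = 0.8, 2.0  # assumed illustrative values

def exponential_pdf(x):
    return rate * math.exp(-rate * x)

def exponential_sf(x):
    return math.exp(-rate * x)

ages = [0.0, 1.0, 2.5, 5.0]
residual_values = [residual_tsallis(exponential_pdf, exponential_sf, t, alpha, 60.0)
                   for t in ages]

# Entropy at age zero in closed form: (rate^(alpha-1)/alpha - 1) / (1 - alpha).
closed_form = (rate ** (alpha - 1.0) / alpha - 1.0) / (1.0 - alpha)

print(residual_values, closed_form)
```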

We now establish a relationship between the new classes and the known classes of life distributions with increasing (decreasing) failure rates.

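Theorem 4. For any rv $X$ with the pdf $f$, if $X$ has the IFR (DFR) property, then $X$ has decreasing (increasing) residual Tsallis entropy.

Proof. We shall prove it for IFR, while the DFR case can be derived similarly. Suppose $X$ is IFR and $0 < \alpha < 1$. Then $\lambda^{\alpha-1}(x)$ is decreasing in $x$ and hence we have

$$\alpha\left[1 + (1-\alpha)H_\alpha(X;t)\right] = E\left(\lambda^{\alpha-1}(X_{t,\alpha})\right) \le \lambda^{\alpha-1}(t),$$

for $t > 0$, where $X_{t,\alpha}$ has the pdf $\alpha f(x)S^{\alpha-1}(x)/S^\alpha(t)$, $x > t$. Since $1 - \alpha > 0$, from (8) the derivative of $H_\alpha(X;t)$ is non-positive and the result follows. When $\alpha > 1$, the above relation is reversed and, using again (8), we obtain the same conclusion. This implies that $X$ has decreasing residual Tsallis entropy. □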
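The link between failure-rate monotonicity and the residual Tsallis entropy can be explored numerically. The sketch below assumes two Weibull lifetimes with unit scale: shape 2 (increasing hazard rate) and shape 0.5 (decreasing hazard rate). It evaluates the residual Tsallis entropy of order 2 at a few ages, taking the residual entropy to be the Tsallis entropy of the conditional pdf $f(x)/S(t)$ on $x > t$. The Weibull models, the ages, the truncation widths and the function names are illustrative assumptions.

```python
import math

def simpson(func, a, b, n=20000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3.0

def residual_tsallis(pdf, sf, age, alpha, width):
    """Tsallis entropy of the residual lifetime at the given age (tail truncated at age + width)."""
    survival_at_age = sf(age)
    integral = simpson(lambda x: (pdf(x) / survival_at_age) ** alpha, age, age + width)
    return (integral - 1.0) / (1.0 - alpha)

def weibull(shape):
    """Return the pdf and sf of a Weibull distribution with unit scale."""
    def pdf(x):
        return shape * x ** (shape - 1.0) * math.exp(-(x ** shape))
    def sf(x):
        return math.exp(-(x ** shape))
    return pdf, sf

alpha = 2.0
ages = [0.5, 1.0, 2.0, 4.0]  # ages start away from 0, where the shape-0.5 pdf is unbounded

ifr_pdf, ifr_sf = weibull(2.0)   # hazard rate 2x, increasing
dfr_pdf, dfr_sf = weibull(0.5)   # hazard rate 0.5 / sqrt(x), decreasing

ifr_values = [residual_tsallis(ifr_pdf, ifr_sf, t, alpha, width=12.0) for t in ages]
dfr_values = [residual_tsallis(dfr_pdf, dfr_sf, t, alpha, width=600.0) for t in ages]

print("IFR:", ifr_values)
print("DFR:", dfr_values)
```

In this example the values for the increasing-hazard model decrease with age and those for the decreasing-hazard model increase with age.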

There is a large class of monotonic density distributions to which the above theorem can be applied. Another important class of life distributions is those with an increasing failure rate in average (IFRA). In particular, $X$ is IFRA if $-\log S(x)/x$ increases in $x > 0$, where $S$ denotes the sf of $X$. The following example shows that there is no relationship between the proposed class and the IFRA class of life distributions.

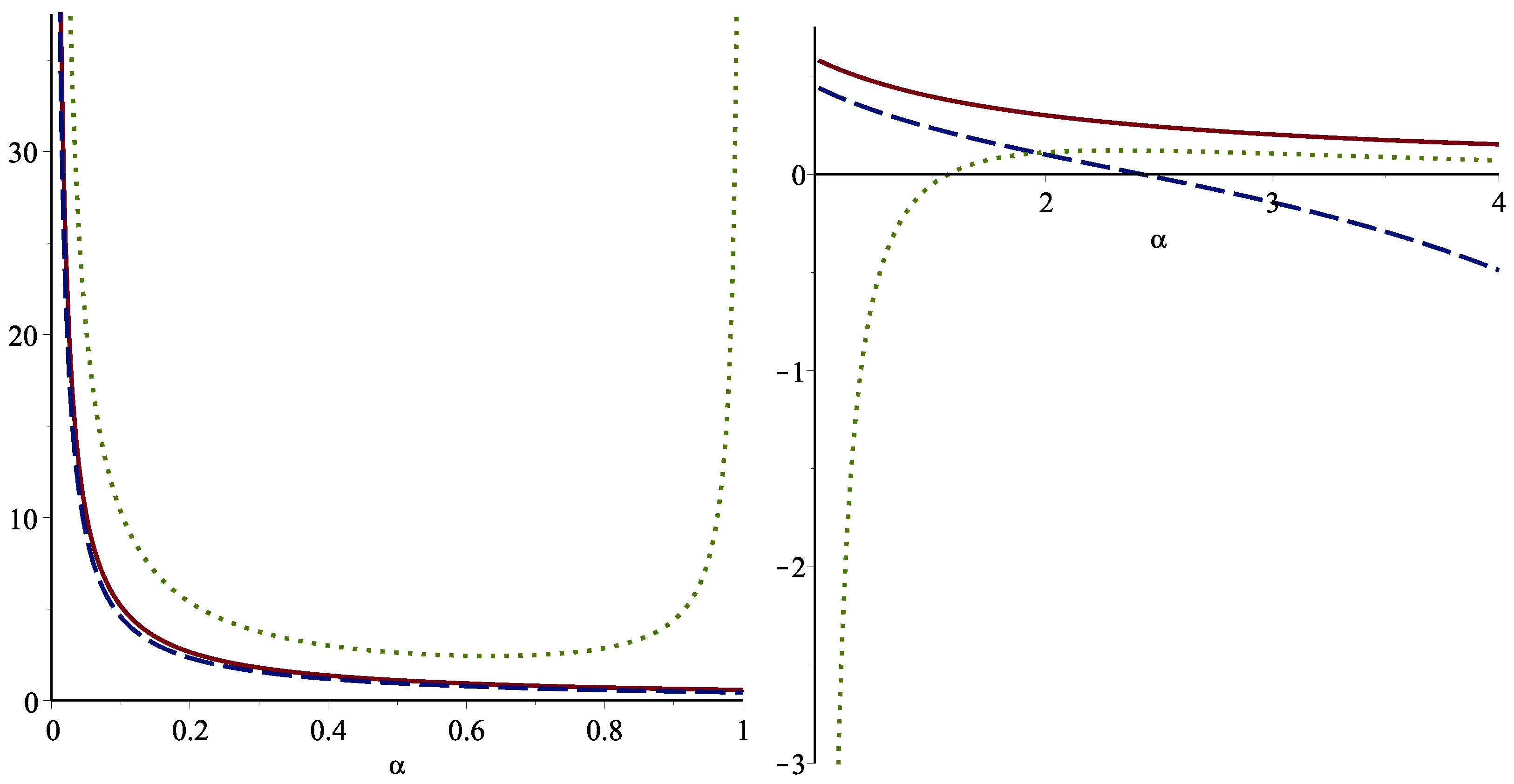

Example 1. Consider the sf of a random lifetime asand hence The plot of the residual Tsallis entropy in Figure 1 exhibits that X is not . We now see how the residual Tsallis entropy and the hazard rate orders are related.

Theorem 5. If $X \le_{hr} Y$ and either $X$ or $Y$ is DFR, then $H_\alpha(X;t) \le H_\alpha(Y;t)$ for all $\alpha > 0$ and $t > 0$.

Proof. Suppose

and

denote the residual rv’s with pdfs

and

respectively. The relation

implies that

where

and

have sf’s

and

respectively. Let us suppose

If we assume that

is DFR, then

is decreasing in

Hence, we have

for all

where

From (

6), we obtain

for all

Now if we assume that

then the above relation can be reversed and then from (

6), we obtain

for all

So, we have

for all

The proof when

is DFR can be obtained similarly. □

4. Tsallis Entropy of Coherent Structures and Mixed Structures

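This section gives some aspects of the Tsallis entropy of coherent (and mixed) structures. A coherent system is one in which all system components are relevant and whose structure function is monotone. The $k$-out-of-$n$ system is a particular case of a coherent structure. Furthermore, a mixture of coherent systems is considered as a mixed system (see [16]). The reliability function of the mixed system lifetime $T$, in the iid case, is represented by

$$S_T(t) = \sum_{i=1}^n p_i S_{i:n}(t),$$

where

$$S_{i:n}(t) = \sum_{k=0}^{i-1}\binom{n}{k}F^k(t)S^{n-k}(t), \quad t > 0,$$

for $i = 1, \dots, n$, are the sf’s of the order statistics $X_{1:n}, \dots, X_{n:n}$ of the component lifetimes. The density function of $T$ can be written as

$$f_T(t) = \sum_{i=1}^n p_i f_{i:n}(t), \qquad (11)$$

so that $f_{i:n}$, the pdf of $X_{i:n}$, is

$$f_{i:n}(t) = \frac{\Gamma(n+1)}{\Gamma(i)\Gamma(n-i+1)}F^{i-1}(t)S^{n-i}(t)f(t), \quad t > 0.$$

The vector $\mathbf{p} = (p_1, \dots, p_n)$ is called the system signature, where $p_i = P(T = X_{i:n})$. Note that $p_1, \dots, p_n$ are non-negative as they are probabilities and thus $\sum_{i=1}^n p_i = 1$ holds.

The order statistic $U_{i:n} = F(X_{i:n})$ has pdf

$$g_i(u) = \frac{\Gamma(n+1)}{\Gamma(i)\Gamma(n-i+1)}u^{i-1}(1-u)^{n-i}, \quad 0 < u < 1.$$

Now, the pdf of $V = F(T)$ is given by

$$g_V(u) = \sum_{i=1}^n p_i g_i(u), \quad 0 < u < 1. \qquad (14)$$

Applying the above transformations, we find in the following proposition a formula for the Tsallis entropy of $T$.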

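Proposition 1. The Tsallis entropy of $T$ is

$$H_\alpha(T) = \frac{1}{1-\alpha}\left[\int_0^1 g_V^\alpha(u)\,f^{\alpha-1}(F^{-1}(u))\,du - 1\right], \qquad (15)$$

for all $\alpha > 0$, $\alpha \neq 1$, where $g_V(u) = \sum_{i=1}^n p_i g_i(u)$, the pdf of $V = F(T)$, has been shown in (14).

Proof. By using the change of variable $u = F(t)$, from (2) and (11) we have

$$H_\alpha(T) = \frac{1}{1-\alpha}\left[\int_0^\infty f_T^\alpha(t)\,dt - 1\right] = \frac{1}{1-\alpha}\left[\int_0^\infty \left(\sum_{i=1}^n p_i f_{i:n}(t)\right)^\alpha dt - 1\right] = \frac{1}{1-\alpha}\left[\int_0^1 g_V^\alpha(u)\,f^{\alpha-1}(F^{-1}(u))\,du - 1\right],$$

for all $\alpha > 0$, $\alpha \neq 1$. The result now follows. □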
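The change of variable $u = F(t)$ used in the proof can be checked numerically. The sketch below computes the Tsallis entropy of a system lifetime in two ways: by integrating the $\alpha$-th power of the mixture pdf of $T$ over time, and by integrating over $(0,1)$ the $\alpha$-th power of the signature-weighted mixture of uniform order-statistic pdfs multiplied by $f^{\alpha-1}(F^{-1}(u))$. It assumes exponential components with rate 1, for which $f(F^{-1}(u)) = 1 - u$, and the signature $(1/3, 2/3, 0)$ of a three-component system with one component in series with a parallel pair. This example and all function names are illustrative choices, not taken from the paper.

```python
import math

def simpson(func, a, b, n=4000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3.0

def uniform_order_statistic_pdf(i, n, u):
    """pdf of the i-th order statistic of n iid uniform(0, 1) variables."""
    coef = math.factorial(n) / (math.factorial(i - 1) * math.factorial(n - i))
    return coef * u ** (i - 1) * (1.0 - u) ** (n - i)

def mixture_pdf(signature, u):
    """Signature-weighted mixture of the uniform order-statistic pdfs."""
    n = len(signature)
    return sum(p * uniform_order_statistic_pdf(i, n, u)
               for i, p in enumerate(signature, start=1))

def tsallis_quantile_form(signature, rate, alpha):
    """Entropy via integration over (0, 1), with f(F^{-1}(u)) = rate * (1 - u)."""
    def integrand(u):
        return mixture_pdf(signature, u) ** alpha * (rate * (1.0 - u)) ** (alpha - 1.0)
    return (simpson(integrand, 0.0, 1.0) - 1.0) / (1.0 - alpha)

def tsallis_direct(signature, rate, alpha, upper):
    """Entropy via direct integration of the alpha-th power of the pdf of T over time."""
    def pdf_t(t):
        cdf = 1.0 - math.exp(-rate * t)
        return rate * math.exp(-rate * t) * mixture_pdf(signature, cdf)
    integral = simpson(lambda t: pdf_t(t) ** alpha, 0.0, upper, n=40000)
    return (integral - 1.0) / (1.0 - alpha)

signature = (1.0 / 3.0, 2.0 / 3.0, 0.0)  # assumed example system
h_quantile = tsallis_quantile_form(signature, rate=1.0, alpha=2.0)
h_direct = tsallis_direct(signature, rate=1.0, alpha=2.0, upper=40.0)

print(h_quantile, h_direct)
```

For this example the integrand on $(0,1)$ is the polynomial $(1-u)^3(1+3u)^2$, whose integral is 0.7, so both routes give an entropy of 0.3.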

To apply Proposition 1, consider the next example.

Example 2. The vector is the signature of a bridge system with in the iid case with the basal reliability function . This system remains functional provided that there is a path of operational connections running from left to right. It is obvious that and we therefore havefor all The Tsallis entropy is decreasing with respect to λ as the uncertainty of the system’s lifetime decreases with increasing the parameter Moreover, we have It is clear that it decreases as α increases. The system signatures of orders 1–5 have been calculated in [

17] and, therefore, we can compute the values of

numerically for all

Considering different values of

the Tsallis entropy of systems with 1–4 iid exponential components has been given in

Table 2. Generally,

has well resulted concerning the standard deviations of

for some

as was shown in

Table 2. We now compare the Tsallis entropies of two mixed systems with the same signature and iid component lifetimes by using Equation (15), as stated in the following theorem.

Theorem 6. The rv’s and are supposed to be the lifetime of two mixed systems with the same signature having n iid component lifetimes.

(i) If , then .

(ii) Assume and consider If , or , then .

Proof. (i) By the assumption

and the two systems have the same signature, so expression (

15) gives

for all

and

for

Now, this completes the proof.

(ii) If the condition

or

hold, then the outcome is clear. Hence, we assume that

and

. Assumption

and Equation (

2) imply, for

and for

,

Then, for all

it holds that

In (

18), the second inequality is given by the assumption

while the last inequality is found by implementing (

17). The result for

can also be lifted as in the proof above. □

Let us take the following example to demonstrate the above theorem.

Example 3. Consider the lifetime in the iid case with basal cdfand another lifetime with basal cdf The signature is . It can be readily seen, for all that So, one can see that . Moreover, it is readily apparent that and , and hence . Therefore Part (ii) of Theorem 6 results .

We next show that, when the component lifetimes are DFR, the minimum of the lifetimes in the iid case has Tsallis entropy lower than or equal to that of any mixed system.

Theorem 7. If $T$ represents the mixed system’s lifetime in the iid case and the component lifetime is DFR, then $H_\alpha(X_{1:n}) \le H_\alpha(T)$ for all $\alpha > 0$.

Proof. Recalling [18], it is evident that $X_{1:n} \le_{hr} T$ implies $X_{1:n} \le_{disp} T$, as the series system has a DFR lifetime provided that the parent component lifetime is DFR. Now, the result is found by using Theorem 1. □

Bounds for the Tsallis Entropy of Mixed Systems

With the insights from the previous section, we can now find some inequalities and bounds on the Tsallis entropy of mixed systems. It is usually difficult, and sometimes impossible, to determine the Tsallis entropy in closed form when the system has a complex structure function or a large number of components. This is why it is valuable to provide bounds on the measure. The following result provides bounds for the Tsallis entropy of a mixed system in terms of the Tsallis entropy of its components.

Theorem 8. If . Thenfor all andfor Proof. Recall the relation (

15), for all

we have

where the last equality is obtained from (

2) and this completes the proof. □

If the number of components of a system is very large or the system has a very complicated design, the bounds in (21) and (22) are particularly useful. Next, we find a general lower bound on the Tsallis entropy of the system lifetime by applying properties of the Tsallis entropy.

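Theorem 9. Under the requirements of Theorem 8, we have

$$H_\alpha(T) \ge \sum_{i=1}^n p_i H_\alpha(X_{i:n}), \qquad (23)$$

for all $\alpha > 0$, where $H_\alpha(X_{i:n})$ is the Tsallis entropy of the $i$-th order statistic.

Proof. Recalling Jensen’s inequality for the function $t^\alpha$ (it is concave (convex) for $0 < \alpha < 1$ ($\alpha > 1$)), it holds that

$$\left(\sum_{i=1}^n p_i f_{i:n}(t)\right)^\alpha \ge (\le) \sum_{i=1}^n p_i f_{i:n}^\alpha(t), \quad t > 0,$$

and hence, with $f_T(t) = \sum_{i=1}^n p_i f_{i:n}(t)$ the pdf of $T$, we obtain

$$\int_0^\infty f_T^\alpha(t)\,dt \ge (\le) \sum_{i=1}^n p_i \int_0^\infty f_{i:n}^\alpha(t)\,dt. \qquad (24)$$

Since $1 - \alpha > 0$ ($1 - \alpha < 0$), by multiplying both sides of (24) by $1/(1-\alpha)$ and using $\sum_{i=1}^n p_i = 1$, we obtain

$$H_\alpha(T) \ge \frac{1}{1-\alpha}\left[\sum_{i=1}^n p_i \int_0^\infty f_{i:n}^\alpha(t)\,dt - 1\right] = \sum_{i=1}^n p_i H_\alpha(X_{i:n}),$$

and this completes the proof. □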
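The Jensen-type lower bound can be examined on a small example. The sketch below assumes exponential components with rate 1 and the illustrative signature $(1/3, 2/3, 0)$. It compares the Tsallis entropy of the system lifetime with the signature-weighted average of the Tsallis entropies of the order statistics, each obtained by integrating over $(0,1)$ after the substitution $u = F(t)$. Only orders above 1 are used so that the integrands stay bounded at $u = 1$. The example and all function names are assumptions made for illustration.

```python
import math

def simpson(func, a, b, n=4000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3.0

def uniform_order_statistic_pdf(i, n, u):
    """pdf of the i-th order statistic of n iid uniform(0, 1) variables."""
    coef = math.factorial(n) / (math.factorial(i - 1) * math.factorial(n - i))
    return coef * u ** (i - 1) * (1.0 - u) ** (n - i)

def tsallis_mixed_system(weights, rate, alpha):
    """Tsallis entropy of a mixed system with exponential components.

    weights is the signature; a unit vector gives the entropy of one order statistic.
    Uses the substitution u = F(t), under which f(F^{-1}(u)) = rate * (1 - u).
    """
    n = len(weights)
    def integrand(u):
        mixture = sum(w * uniform_order_statistic_pdf(i, n, u)
                      for i, w in enumerate(weights, start=1))
        return mixture ** alpha * (rate * (1.0 - u)) ** (alpha - 1.0)
    return (simpson(integrand, 0.0, 1.0) - 1.0) / (1.0 - alpha)

def unit_vector(i, n):
    """Signature of the system whose lifetime is the i-th order statistic."""
    return tuple(1.0 if j == i else 0.0 for j in range(1, n + 1))

signature = (1.0 / 3.0, 2.0 / 3.0, 0.0)  # assumed example system
n = len(signature)
rate = 1.0

results = {}
for alpha in (1.5, 2.0, 3.0):
    system_entropy = tsallis_mixed_system(signature, rate, alpha)
    lower_bound = sum(p * tsallis_mixed_system(unit_vector(i, n), rate, alpha)
                      for i, p in enumerate(signature, start=1))
    results[alpha] = (lower_bound, system_entropy)
    print(alpha, lower_bound, system_entropy)
```

For order 2 the three order statistics have entropies -0.5, 0.4 and 0.7, so the weighted bound is 0.1 while the system entropy is 0.3.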

The bound in (23) mirrors the mixture form of the pdf of the system lifetime: it is a linear combination of the Tsallis entropies of $i$-out-of-$n$ systems. Moreover, when both the lower bound in Theorem 8 and the lower bound in Theorem 9 can be determined, one may adopt the maximum of the two lower bounds.

Example 4. Let us suppose a system signature with lifetime T including iid component lifetimes having the common cdf given in (20). One can compute that and hence recalling Theorem 8, the Tsallis entropy of T is bounded as follows: for . The component lifetimes are considered to be exponential. In this case, we plotted the bounds (25), (23) and the true value of using relation (15), which are shown in Figure 2. As can be seen, the lower bound in (25) (dotted line) outperforms (23) for

5. Conclusions

Extensions of the Shannon entropy have been presented in the literature before. The Tsallis entropy, which can be considered as an alternative measure of uncertainty, is one of these extended entropy measures. We have described here some further properties of this measure. We first determined the Tsallis entropy of some known distributions and then proved that, in the continuous case, it is not invariant under a one-to-one transformation of the lifetime. The connection to the usual stochastic order has been revealed. It is well known that systems having greater lifetimes and lower uncertainty are preferable, and that the reliability of a system usually decreases as its uncertainty increases. These findings led us to study the Tsallis entropy for coherent structures and mixed systems in the iid case. Finally, we established some bounds on the Tsallis entropy of the systems and illustrated the usefulness of the given bounds.

Author Contributions

Conceptualization, G.A.; Formal analysis, M.K.; Investigation, M.K.; Methodology, G.A.; Project administration, G.A.; Resources, M.K.; Software, M.K.; Supervision, G.A.; Validation, M.K.; Writing—original draft, M.K.; Writing—review & editing, G.A. All authors have read and agreed to the published version of the manuscript.

Funding

Princess Nourah bint Abdulrahman University Researchers Supporting Project Number (PNURSP2023R226), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The authors are grateful to the three anonymous Reviewers for their comments and suggestions, which led to this improved version of the paper. The authors extend their sincere appreciation to Princess Nourah bint Abdulrahman University Researchers Supporting Project Number (PNURSP2023R226), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

2. Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

3. Kumar, V.; Taneja, H. A generalized entropy-based residual lifetime distributions. Int. J. Biomath. 2011, 4, 171–184.

4. Nanda, A.K.; Paul, P. Some results on generalized residual entropy. Inf. Sci. 2006, 176, 27–47.

5. Wilk, G.; Wlodarczyk, Z. Example of a possible interpretation of Tsallis entropy. Phys. A Stat. Mech. Its Appl. 2008, 387, 4809–4813.

6. Zhang, Z. Uniform estimates on the Tsallis entropies. Lett. Math. Phys. 2007, 80, 171–181.

7. Toomaj, A.; Doostparast, M. A note on signature-based expressions for the entropy of mixed r-out-of-n systems. Nav. Res. Logist. 2014, 61, 202–206.

8. Toomaj, A. Renyi entropy properties of mixed systems. Commun. Stat.-Theory Methods 2017, 46, 906–916.

9. Toomaj, A.; Crescenzo, A.D.; Doostparast, M. Some results on information properties of coherent systems. Appl. Stoch. Model. Bus. Ind. 2018, 34, 128–143.

10. Toomaj, A.; Zarei, R. Some new results on information properties of mixture distributions. Filomat 2017, 31, 4225–4230.

11. Baratpour, S.; Khammar, A. Tsallis entropy properties of order statistics and some stochastic comparisons. J. Stat. Res. Iran 2016, 13, 25–41.

12. Shaked, M.; Shanthikumar, J.G. Stochastic Orders; Springer Science and Business Media: Berlin/Heidelberg, Germany, 2007.

13. Arnold, B.C.; Balakrishnan, N.; Nagaraja, H.N. A First Course in Order Statistics; SIAM: New York, NY, USA, 2008.

14. Ebrahimi, N.; Soofi, E.S.; Soyer, R. Information measures in perspective. Int. Stat. Rev. 2010, 78, 383–412.

15. Ebrahimi, N.; Maasoumi, E.; Soofi, E.S. Ordering univariate distributions by entropy and variance. J. Econom. 1999, 90, 317–336.

16. Samaniego, F.J. System Signatures and Their Applications in Engineering Reliability; Springer Science and Business Media: Berlin/Heidelberg, Germany, 2007; Volume 110.

17. Navarro, J.; del Aguila, Y.; Asadi, M. Some new results on the cumulative residual entropy. J. Stat. Plan. Inference 2010, 140, 310–322.

18. Bagai, I.; Kochar, S.C. On tail-ordering and comparison of failure rates. Commun. Stat.-Theory Methods 1986, 15, 1377–1388.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).