A Semantic-Enhancement-Based Social Network User-Alignment Algorithm

Abstract

1. Introduction

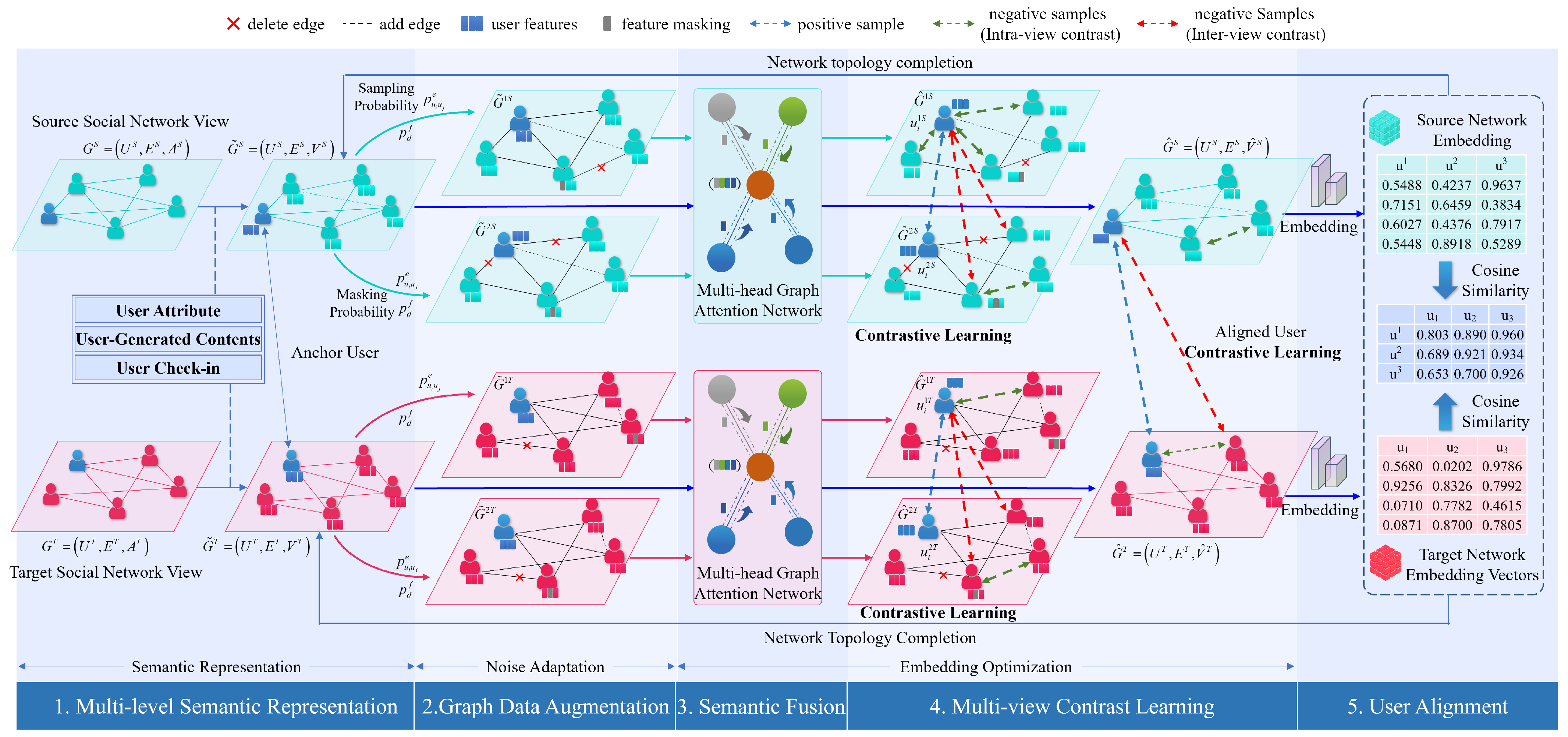

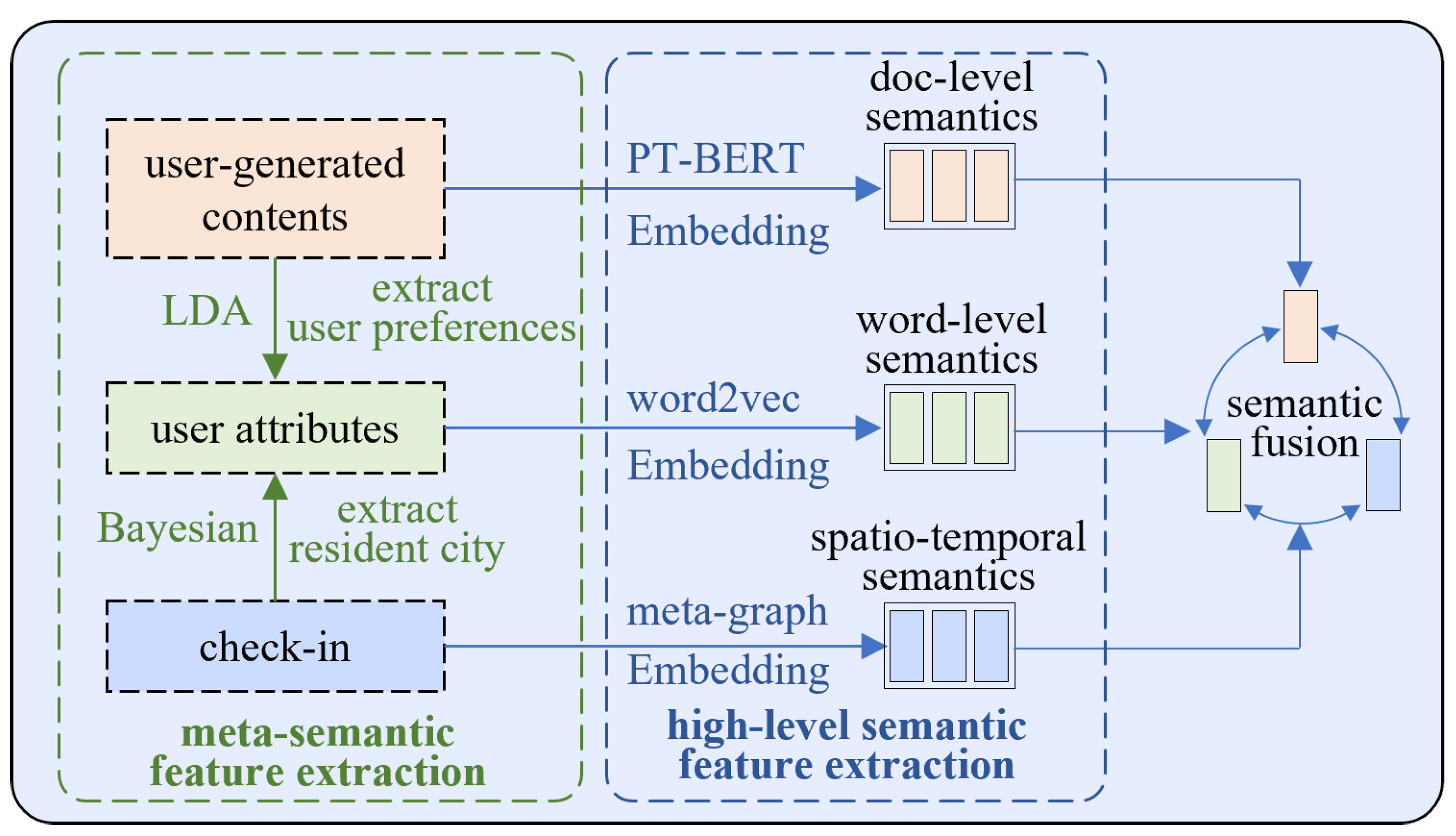

- Multi-level data analysis improves the mining of users’ semantic features. We extract meta-semantic features, specifically users’ preferences and cities of residence, from user-generated content (UGCs) and check-ins, and then extract high-level semantic features from user attributes, UGCs, and check-ins using BERT, word2vec, and meta-graphs, respectively. Representing users’ semantic features on multiple levels reduces the interference of local semantic noise and improves the accuracy of user-similarity computation.

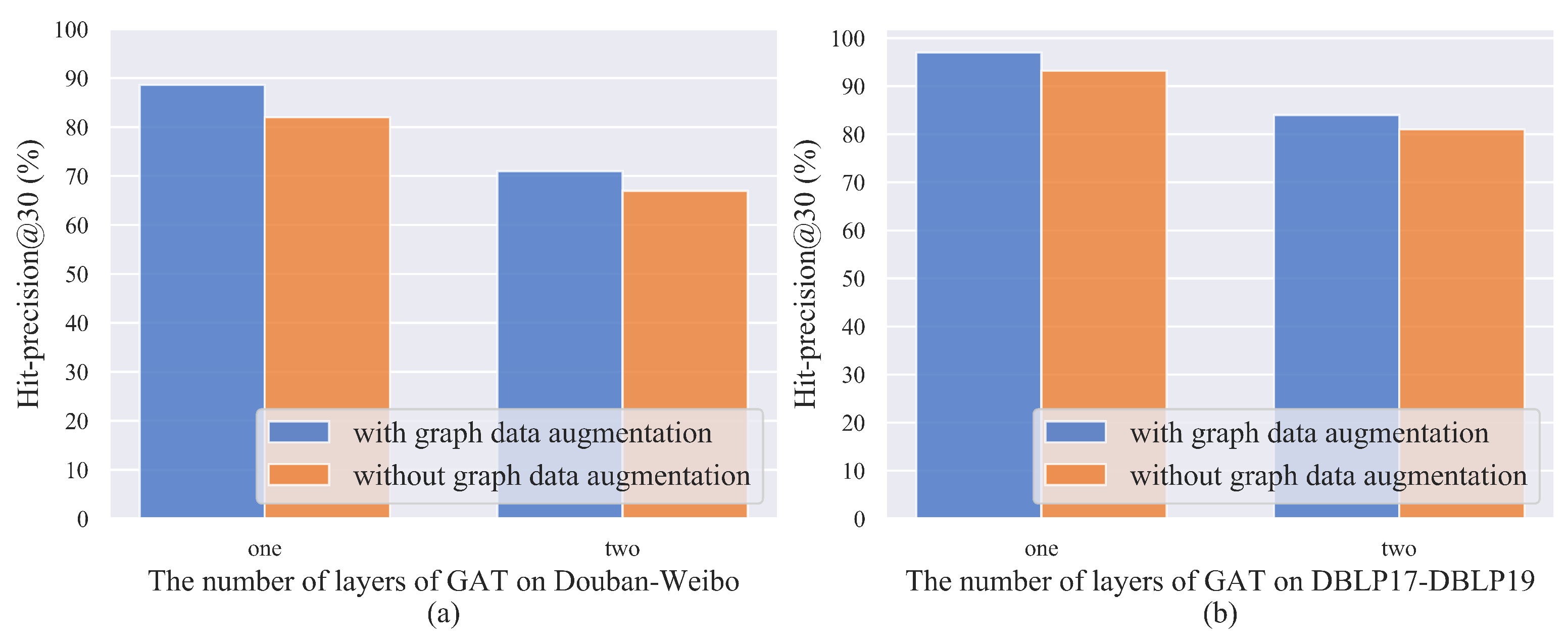

- The heterogeneity of different social networks introduces feature and topology noise into user alignment. Because users’ influence and preferences strongly affect semantic propagation among users, we compute each user’s semantic centrality from these two features and use it to assign appropriate weights to features and topology. Graph-data augmentation then improves the model’s adaptability to noise and thereby the user-alignment results.

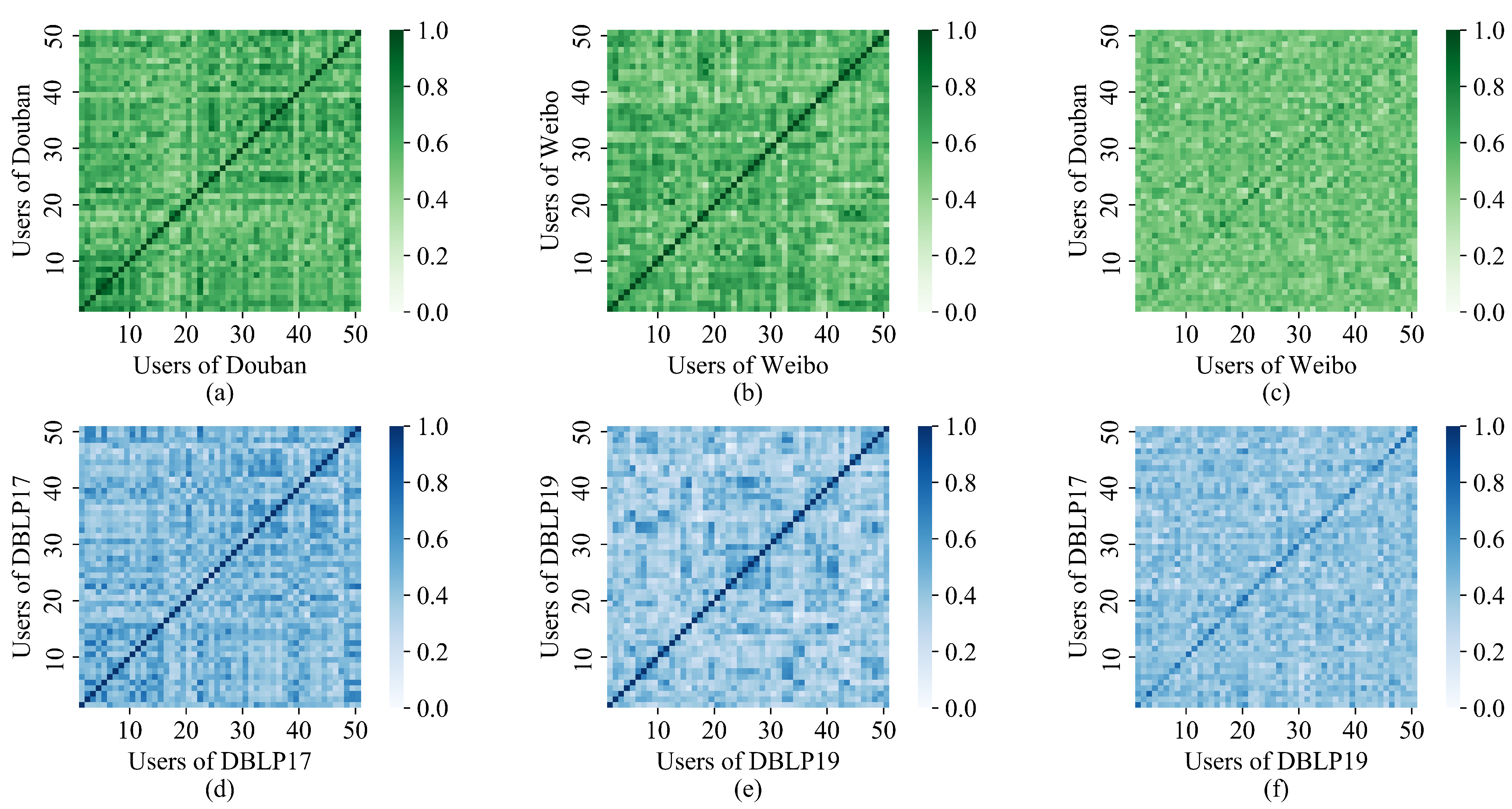

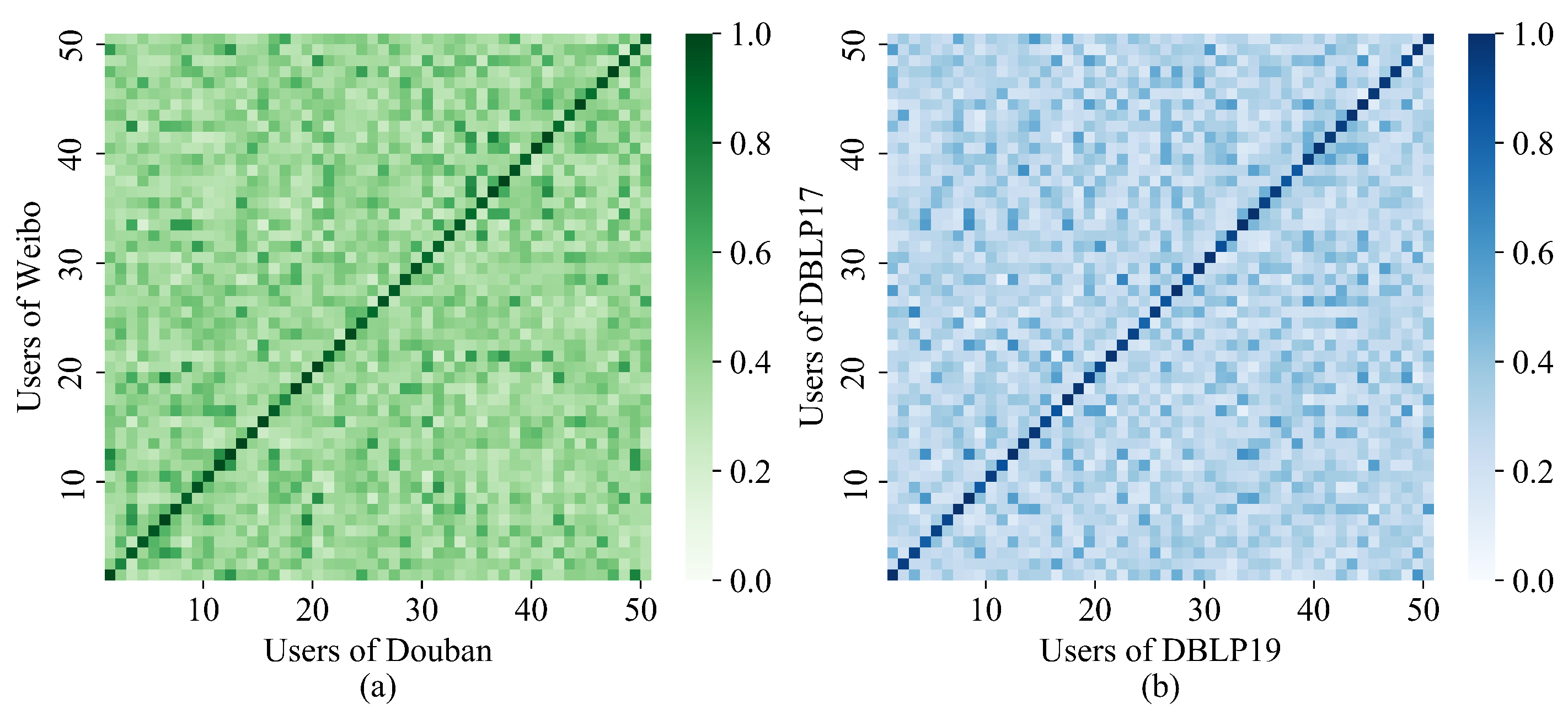

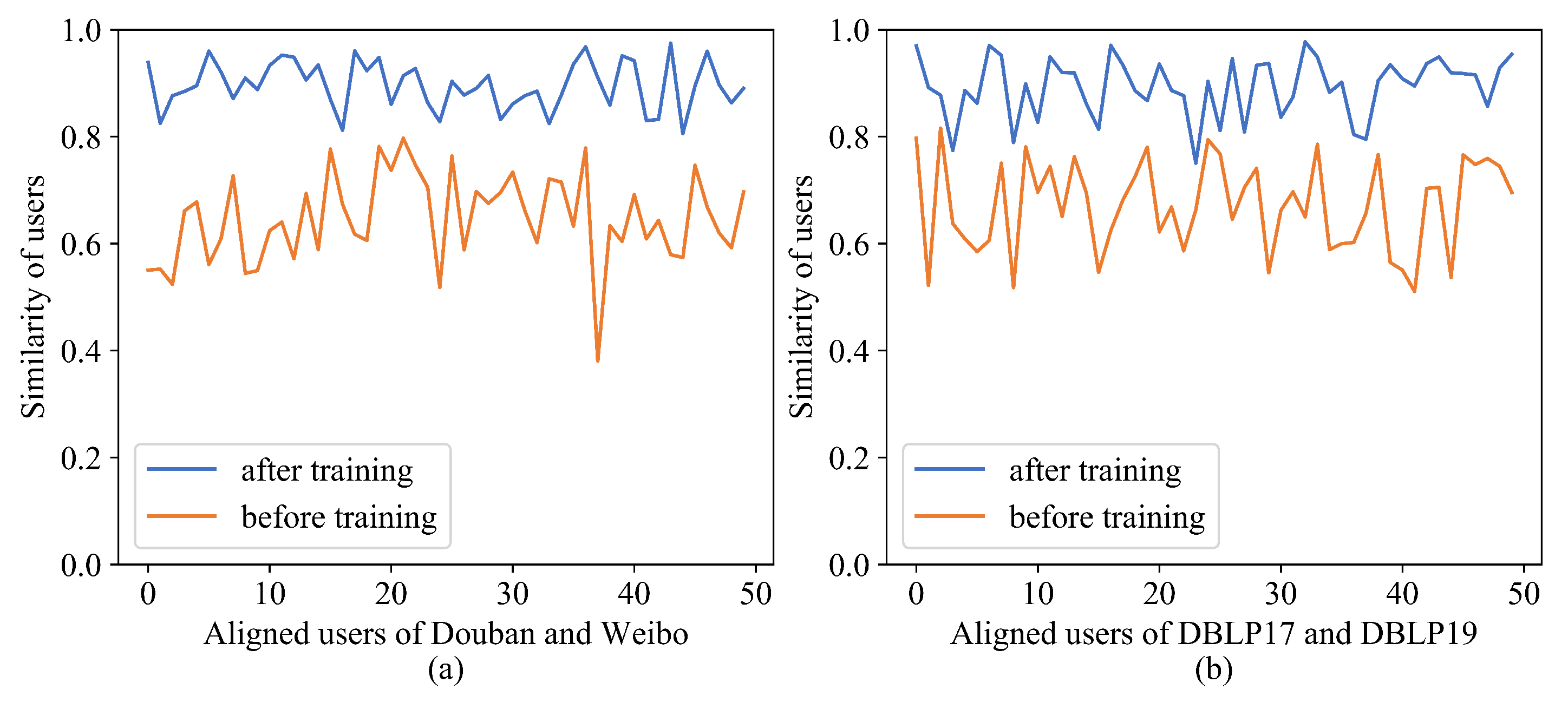

- Because the feature embedding vectors of the same user differ across social networks, we optimize each user’s embedding through semantic fusion and contrastive learning. A multi-head graph attention network aggregates the features of similar neighboring users to enhance each user’s own semantic features. Contrastive learning increases the embedding distance between different users in the same social network while reducing the embedding distance between aligned users across the two networks, which improves the accuracy of the resulting user alignment.
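The second and third contributions can be illustrated with a minimal NumPy sketch. It is not the SENUA implementation: the function names, the edge and feature-dimension scores, the default rates, and the temperature are all assumptions made for illustration. The drop-probability schedule follows the general form used in adaptive graph augmentation (low-centrality items are dropped more often, with a cap), and the loss is a standard InfoNCE-style objective in which row i of both embedding matrices is assumed to be the same aligned user.

```python
import numpy as np


def drop_probabilities(scores, p_base=0.3, p_max=0.7):
    """Low-score (low-centrality) items get a higher drop probability, capped at p_max."""
    s = np.log1p(np.asarray(scores, dtype=float))
    spread = s.max() - s.mean()
    if spread <= 0:  # all scores equal: fall back to a uniform rate
        return np.full(s.shape, min(p_base, p_max))
    return np.minimum((s.max() - s) / spread * p_base, p_max)


def augment(edges, features, centrality, rng, p_edge=0.3, p_feat=0.3):
    """Build one noisy view by centrality-aware edge dropping and feature masking."""
    edges = np.asarray(edges)
    centrality = np.asarray(centrality, dtype=float)  # assumed non-negative
    # Assumption: an edge is as important as the mean centrality of its endpoints.
    edge_score = centrality[edges].mean(axis=1)
    keep_edge = rng.random(len(edges)) >= drop_probabilities(edge_score, p_edge)
    # Assumption: a feature dimension matters if central users use it heavily.
    dim_score = np.abs(features).T @ centrality
    keep_dim = rng.random(features.shape[1]) >= drop_probabilities(dim_score, p_feat)
    return edges[keep_edge], features * keep_dim


def contrastive_loss(z_src, z_tgt, temperature=0.5):
    """InfoNCE-style loss: row i of z_src and row i of z_tgt are the same user."""
    z_src = z_src / np.linalg.norm(z_src, axis=1, keepdims=True)
    z_tgt = z_tgt / np.linalg.norm(z_tgt, axis=1, keepdims=True)
    n = len(z_src)
    inter = z_src @ z_tgt.T / temperature  # cross-network similarities
    intra = z_src @ z_src.T / temperature  # same-network similarities
    np.fill_diagonal(intra, -np.inf)       # a user is not its own negative
    logits = np.concatenate([inter, intra], axis=1)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_denominator = np.log(np.exp(logits).sum(axis=1))
    positives = np.diag(logits[:, :n])     # aligned pairs sit on the diagonal
    return float(np.mean(log_denominator - positives))


rng = np.random.default_rng(0)
edges = np.array([[0, 1], [1, 2], [2, 3], [3, 0], [0, 2]])
features = rng.normal(size=(4, 6))
centrality = np.array([3.0, 1.0, 2.0, 0.5])  # toy semantic-centrality values
view_edges, view_features = augment(edges, features, centrality, rng)
z = rng.normal(size=(4, 8))
print(contrastive_loss(z, z))
```

Minimizing this loss pulls each aligned pair together while pushing a user away from every other user in both networks, which matches the behavior described in the last contribution above.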

2. Related Work

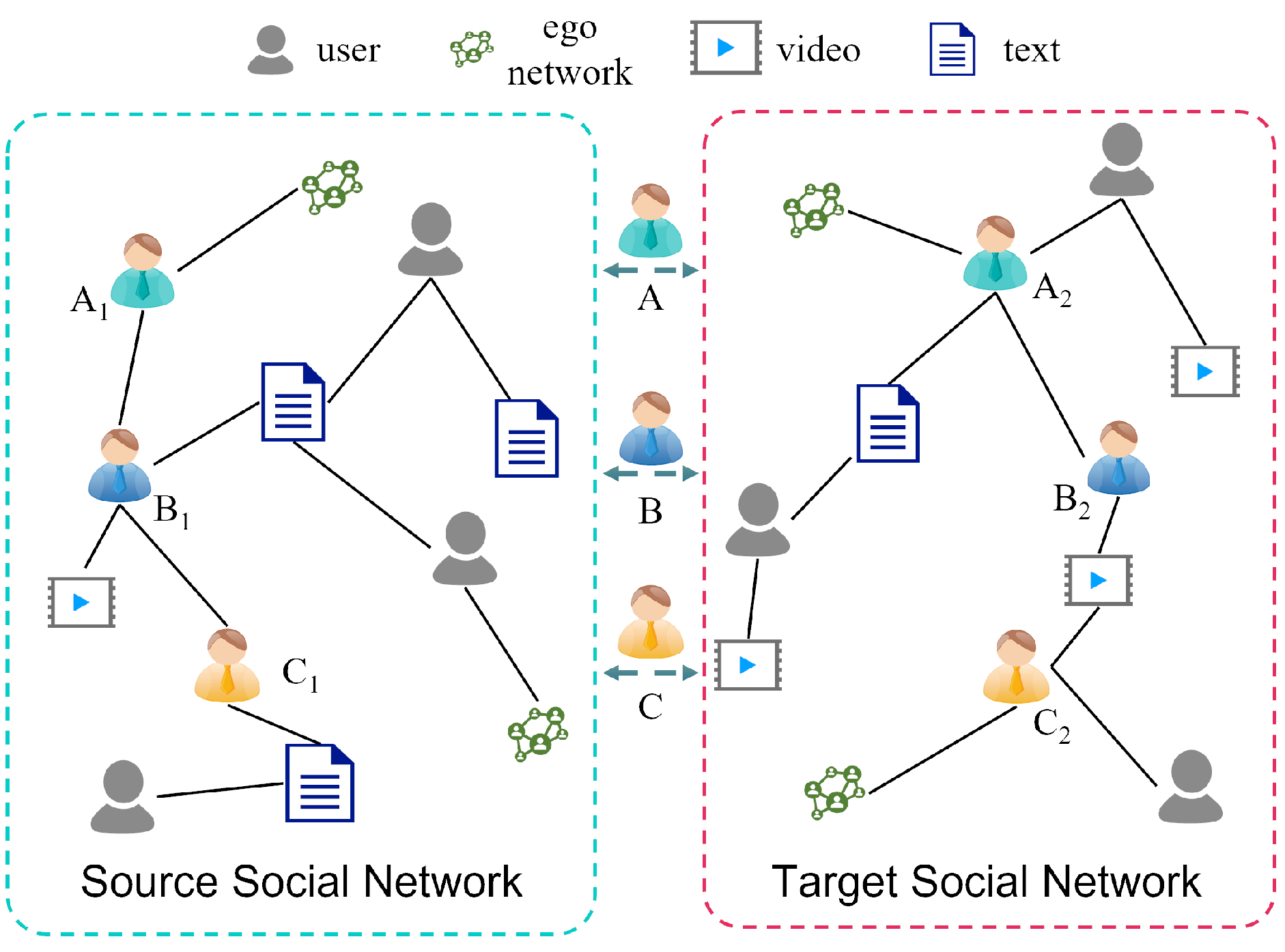

2.1. User Alignment

2.2. Text Feature Extraction

2.3. Graph Representation Learning

2.4. Graph Contrastive Learning

3. Preliminaries

3.1. Semantic Social Network View

3.2. Semantic Enhancement User Alignment

3.3. Multi-View Graph Contrastive Learning

4. SENUA Algorithm

4.1. Overview of SENUA

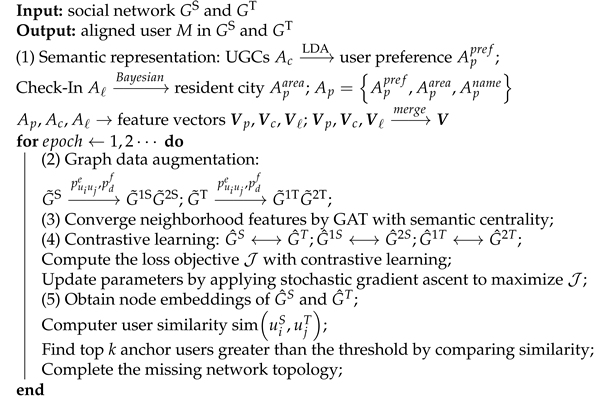

Algorithm 1: Social network user alignment.

4.2. Multi-Level Semantic Representation

4.2.1. Meta-Semantic Feature Extraction

4.2.2. Word-Level Semantic Representation

4.2.3. Document-Level Semantic Representation

4.2.4. Spatiotemporal Semantic Representation

4.3. Graph-Data Augmentation with Semantic Noise Adaption

4.3.1. Semantic Centrality

4.3.2. Topology-Level Semantic Augmentation

4.3.3. Feature-Level Semantic Augmentation

4.4. Multi-Head Attention Semantic Fusion

4.5. Multi-View Contrastive Learning

4.6. User Alignment

5. Experiments

5.1. Dataset and Experimental Setup

5.1.1. Dataset

5.1.2. Parameter Settings

5.1.3. Evaluation Indicators

5.2. Baseline Methods

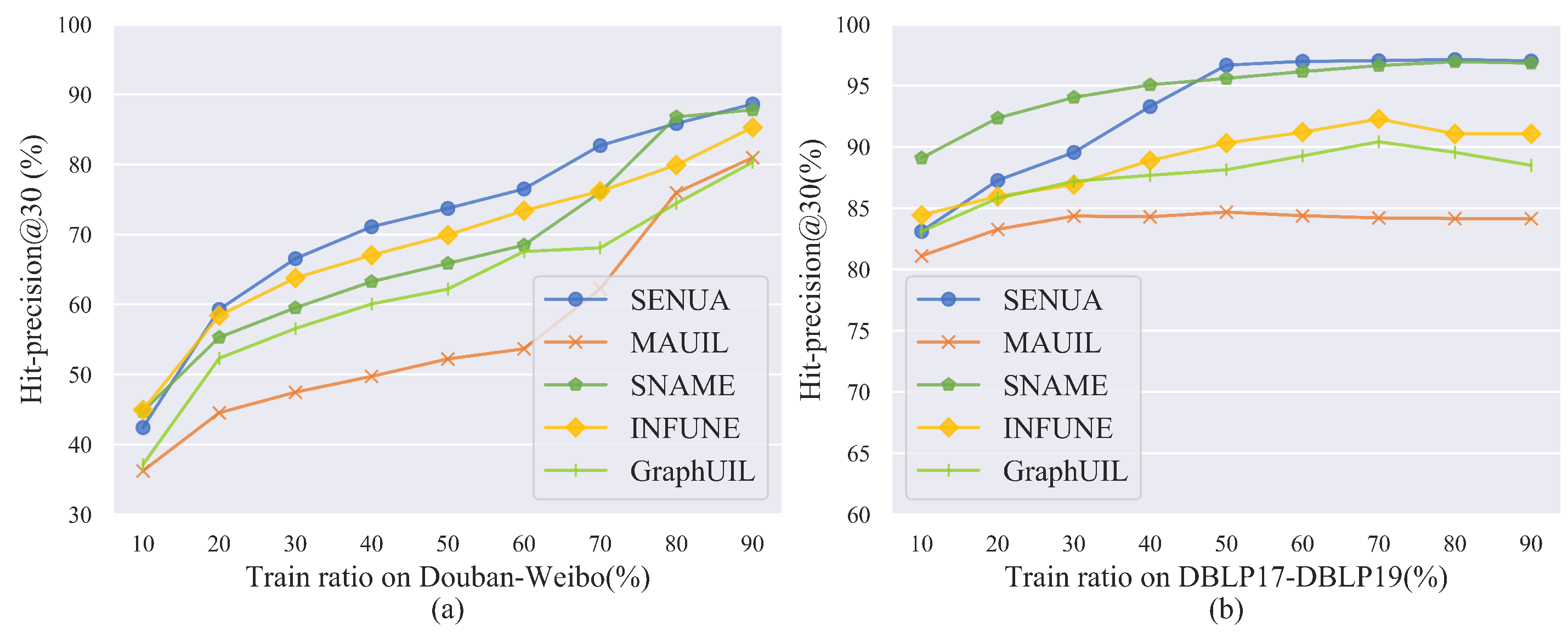

- GraphUIL [21] encodes the local and global network structures, then achieves user alignment by minimizing the difference before and after reconstruction and the match loss of anchor users.

- INFUNE [43] performs information fusion based on the network topology, attributes, and generated contents of users. Adaptive fusion of neighborhood features based on a graph neural network is performed to improve user-alignment accuracy.

- MAUIL [44] uses three layers of user attribute embedding and one layer of network topology embedding to mine user features. User alignment is performed after mapping user features from two social networks to the same space.

- SNAME [67] mines user features using three embedding methods: intentional neural network, fuzzy c-means clustering, and graph drawing embedding.
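Several of these baselines share one final step: project both networks' user features into a common space, then match each source user to the nearest target user. The sketch below illustrates only that step. It swaps in a plain least-squares linear map fitted on known anchor pairs, which is an assumption chosen for brevity and not the projection used by MAUIL or any other baseline; the function names and the toy data are hypothetical.

```python
import numpy as np


def fit_linear_map(x_src, x_tgt, anchors):
    """Least-squares map W such that x_src[i] @ W is close to x_tgt[j] for anchors (i, j)."""
    src_idx, tgt_idx = zip(*anchors)
    w, *_ = np.linalg.lstsq(x_src[list(src_idx)], x_tgt[list(tgt_idx)], rcond=None)
    return w


def align(x_src, x_tgt, w, k=1):
    """Return, for every source user, the indices of the k most similar target users."""
    mapped = x_src @ w
    mapped = mapped / np.linalg.norm(mapped, axis=1, keepdims=True)
    tgt = x_tgt / np.linalg.norm(x_tgt, axis=1, keepdims=True)
    similarity = mapped @ tgt.T  # cosine similarity in the shared space
    return np.argsort(-similarity, axis=1)[:, :k]


# Toy data: the target features are an unknown linear transform of the source features.
rng = np.random.default_rng(0)
x_src = rng.normal(size=(20, 5))
x_tgt = x_src @ rng.normal(size=(5, 5))
anchors = [(i, i) for i in range(10)]  # first 10 users are known aligned pairs
w = fit_linear_map(x_src, x_tgt, anchors)
top1 = align(x_src, x_tgt, w, k=1)
```

On real data the relation between the two feature spaces is not exactly linear, so the ranked candidate list (k greater than 1) is usually more informative than the single best match.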

5.3. Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Magnani, M.; Hanteer, O.; Interdonato, R.; Rossi, L.; Tagarelli, A. Community Detection in Multiplex Networks. ACM Comput. Surv. 2022, 54, 1–35.

- Pan, Y.; He, F.; Yu, H. Learning Social Representations with Deep Autoencoder for Recommender System. World Wide Web 2020, 23, 2259–2279.

- Kou, H.; Liu, H.; Duan, Y.; Gong, W.; Xu, Y.; Xu, X.; Qi, L. Building Trust/Distrust Relationships on Signed Social Service Network through Privacy-Aware Link Prediction Process. Appl. Soft Comput. 2021, 100, 106942.

- Li, S.; Yao, L.; Mu, S.; Zhao, W.X.; Li, Y.; Guo, T.; Ding, B.; Wen, J.R. Debiasing Learning Based Cross-domain Recommendation. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual, 14–18 August 2021; ACM: Singapore, 2021; pp. 3190–3199.

- Zhang, A.; Chen, Y. A Real-Time Detection Algorithm for Abnormal Users in Multi Relationship Social Networks Based on Deep Neural Network. In Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Liu, S., Ma, X., Eds.; Springer International Publishing AG: Cham, Switzerland, 2022; Volume 416, pp. 179–190.

- Wang, Y.; Shen, H.; Gao, J.; Cheng, X. Learning Binary Hash Codes for Fast Anchor Link Retrieval across Networks. In Proceedings of the World Wide Web Conference (WWW '19), San Francisco, CA, USA, 13–17 May 2019; pp. 3335–3341.

- Qin, T.; Liu, Z.; Li, S.; Guan, X. A Two-Stage Approach for Social Identity Linkage Based on an Enhanced Weighted Graph Model. Mob. Netw. Appl. 2020, 25, 1364–1375.

- Yuan, Z.; Yan, L.; Xiaoyu, G.; Xian, S.; Sen, W. User Naming Conventions Mapping Learning for Social Network Alignment. In Proceedings of the 2021 13th International Conference on Computer and Automation Engineering (ICCAE), Melbourne, Australia, 20–22 March 2021; pp. 36–42.

- Xiao, Y.; Hu, R.; Li, D.; Wu, J.; Zhen, Y.; Ren, L. Multi-Level Graph Attention Network Based Unsupervised Network Alignment. In Proceedings of the 2021 IEEE 46th Conference on Local Computer Networks (LCN), Edmonton, AB, Canada, 4–7 October 2021; pp. 217–224.

- Tang, R.; Jiang, S.; Chen, X.; Wang, W.; Wang, W. Network Structural Perturbation against Interlayer Link Prediction. Knowl.-Based Syst. 2022, 250, 109095.

- Cai, C.; Li, L.; Chen, W.; Zeng, D. Capturing Deep Dynamic Information for Mapping Users across Social Networks. In Proceedings of the 2019 IEEE International Conference on Intelligence and Security Informatics (ISI), Shenzhen, China, 1–3 July 2019; pp. 146–148.

- Fang, Z.; Cao, Y.; Liu, Y.; Tan, J.; Guo, L.; Shang, Y. A Co-Training Method for Identifying the Same Person across Social Networks. In Proceedings of the 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Montreal, ON, Canada, 14–16 November 2017; pp. 1412–1416.

- Zhong, Z.X.; Cao, Y.; Guo, M.; Nie, Z.Q. CoLink: An Unsupervised Framework for User Identity Linkage. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence/30th Innovative Applications of Artificial Intelligence Conference/8th AAAI Symposium on Educational Advances in Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Association for the Advancement of Artificial Intelligence: Palo Alto, CA, USA, 2018; pp. 5714–5721.

- Zeng, W.; Tang, R.; Wang, H.; Chen, X.; Wang, W. User Identification Based on Integrating Multiple User Information across Online Social Networks. Secur. Commun. Netw. 2021, 2021, 5533417.

- Qu, Y.; Ma, H.; Wu, H.; Zhang, K.; Deng, K. A Multiple Salient Features-Based User Identification across Social Media. Entropy 2022, 24, 495.

- Feng, J.; Zhang, M.Y.; Wang, H.D.; Yang, Z.Y.; Zhang, C.; Li, Y.; Jin, D.P. DPLink: User Identity Linkage via Deep Neural Network From Heterogeneous Mobility Data. In Proceedings of the World Wide Web Conference (WWW '19), San Francisco, CA, USA, 13–17 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 459–469.

- Xue, H.; Sun, B.; Si, C.; Zhang, W.; Fang, J. DBUL: A User Identity Linkage Method across Social Networks Based on Spatiotemporal Data. In Proceedings of the 2021 IEEE 33rd International Conference on Tools with Artificial Intelligence (ICTAI), Washington, DC, USA, 1–3 November 2021; pp. 1461–1465.

- Zhou, F.; Li, C.; Wen, Z.; Zhong, T.; Trajcevski, G.; Khokhar, A. Uncertainty-aware Network Alignment. Int. J. Intell. Syst. 2021, 36, 7895–7924.

- Tang, R.; Miao, Z.; Jiang, S.; Chen, X.; Wang, H.; Wang, W. Interlayer Link Prediction in Multiplex Social Networks Based on Multiple Types of Consistency Between Embedding Vectors. IEEE Trans. Cybern. 2021, 1–14, early access.

- Zheng, C.; Pan, L.; Wu, P. JORA: Weakly Supervised User Identity Linkage via Jointly Learning to Represent and Align. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–12, early access.

- Zhang, W.; Shu, K.; Liu, H.; Wang, Y. Graph Neural Networks for User Identity Linkage. arXiv 2019, arXiv:1903.02174.

- Chen, X.; Song, X.; Peng, G.; Feng, S.; Nie, L. Adversarial-Enhanced Hybrid Graph Network for User Identity Linkage. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 11–15 July 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1084–1093.

- Deng, K.; Xing, L.; Zheng, L.; Wu, H.; Xie, P.; Gao, F. A User Identification Algorithm Based on User Behavior Analysis in Social Networks. IEEE Access 2019, 7, 47114–47123.

- Li, Y.; Peng, Y.; Ji, W.; Zhang, Z.; Xu, Q. User Identification Based on Display Names Across Online Social Networks. IEEE Access 2017, 5, 17342–17353.

- Li, Y.; Cui, H.; Liu, H.; Li, X. Display Name-Based Anchor User Identification across Chinese Social Networks. In Proceedings of the 2020 IEEE International Conference on Systems, Man and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 3984–3989.

- Li, Y.J.; Zhang, Z.; Peng, Y. A Solution to Tweet-Based User Identification Across Online Social Networks. In Advanced Data Mining and Applications, Lecture Notes in Artificial Intelligence; Springer International Publishing AG: Cham, Switzerland, 2017; Volume 10604, pp. 257–269.

- Sharma, V.; Dyreson, C. LINKSOCIAL: Linking User Profiles Across Multiple Social Media Platforms. In Proceedings of the 2018 IEEE International Conference on Big Knowledge (ICBK), Singapore, 17–18 November 2018; IEEE: New York, NY, USA, 2018; pp. 260–267.

- Zhou, X.; Yang, J. Matching User Accounts Based on Location Verification across Social Networks. Rev. Int. Metod. Numer. Para Calc. Diseno Ing. 2020, 36, 7.

- Kojima, K.; Ikeda, K.; Tani, M. Short Paper: User Identification across Online Social Networks Based on Similarities among Distributions of Friends’ Locations. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 4085–4088.

- Xing, L.; Deng, K.; Wu, H.; Xie, P.; Gao, J. Behavioral Habits-Based User Identification Across Social Networks. Symmetry 2019, 11, 19.

- Qu, Y.; Xing, L.; Ma, H.; Wu, H.; Zhang, K.; Deng, K. Exploiting User Friendship Networks for User Identification across Social Networks. Symmetry 2022, 14, 110.

- Amara, A.; Taieb, M.A.H.; Aouicha, M.B. Identifying I-Bridge Across Online Social Networks. In Proceedings of the 2017 IEEE/ACS 14th International Conference on Computer Systems and Applications (AICCSA), Hammamet, Tunisia, 30 October–3 November 2017; pp. 515–520.

- Yu, J.; Gao, M.; Li, J.; Yin, H.; Liu, H. Adaptive Implicit Friends Identification over Heterogeneous Network for Social Recommendation. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 357–366.

- Feng, S.; Shen, D.; Nie, T.; Kou, Y.; He, J.; Yu, G. Inferring Anchor Links Based on Social Network Structure. IEEE Access 2018, 6, 17340–17353.

- Zhang, D.; Yin, J.; Zhu, X.; Zhang, C. Network Representation Learning: A Survey. IEEE Trans. Big Data 2020, 6, 3–28.

- Zhou, X.; Liang, X.; Du, X.; Zhao, J. Structure Based User Identification across Social Networks. IEEE Trans. Knowl. Data Eng. 2018, 30, 1178–1191.

- Zhou, X.; Liang, X.; Zhao, J.; Zhiyuli, A.; Zhang, H. An Unsupervised User Identification Algorithm Using Network Embedding and Scalable Nearest Neighbour. Clust. Comput. 2019, 22, 8677–8687.

- Zhang, J.; Yuan, Z.; Xu, N.; Chen, J.; Wang, J. Two-Stage User Identification Based on User Topology Dynamic Community Clustering. Complexity 2021, 2021, 5567351.

- Cheng, A.; Liu, C.; Zhou, C.; Tan, J.; Guo, L. User Alignment via Structural Interaction and Propagation. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Li, W.; He, Z.; Zheng, J.; Hu, Z. Improved Flower Pollination Algorithm and Its Application in User Identification Across Social Networks. IEEE Access 2019, 7, 44359–44371.

- Ma, J.; Qiao, Y.; Hu, G.; Huang, Y.; Wang, M.; Sangaiah, A.K.; Zhang, C.; Wang, Y. Balancing User Profile and Social Network Structure for Anchor Link Inferring Across Multiple Online Social Networks. IEEE Access 2017, 5, 12031–12040.

- Yang, Y.; Yu, H.; Huang, R.; Ming, T. A Fusion Information Embedding Method for User Identity Matching Across Social Networks. In Proceedings of the 2018 IEEE SmartWorld, Ubiquitous Intelligence and Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Cloud and Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Guangzhou, China, 8–12 October 2018; pp. 2030–2035.

- Chen, S.Y.; Wang, J.H.; Du, X.; Hu, Y.Q. A Novel Framework with Information Fusion and Neighborhood Enhancement for User Identity Linkage. In Frontiers in Artificial Intelligence and Applications, Proceedings of the 24th European Conference on Artificial Intelligence (ECAI), Online/Santiago de Compostela, Spain, 29 August–8 September 2020; IOS Press: Amsterdam, The Netherlands, 2020; Volume 325, pp. 1754–1761.

- Chen, B.; Chen, X. MAUIL: Multilevel Attribute Embedding for Semisupervised User Identity Linkage. Inf. Sci. 2022, 593, 527–545.

- Shu, J.; Shi, J.; Liao, L. Link Prediction Model for Opportunistic Networks Based on Feature Fusion. IEEE Access 2022, 10, 80900–80909.

- Lin, J.C.W.; Shao, Y.; Zhou, Y.; Pirouz, M.; Chen, H.C. A Bi-LSTM Mention Hypergraph Model with Encoding Schema for Mention Extraction. Eng. Appl. Artif. Intell. 2019, 85, 175–181.

- Lin, J.C.W.; Shao, Y.; Fournier-Viger, P.; Hamido, F. BILU-NEMH: A BILU Neural-Encoded Mention Hypergraph for Mention Extraction. Inf. Sci. 2019, 496, 53–64.

- Lin, J.C.W.; Shao, Y.; Djenouri, Y.; Yun, U. ASRNN: A Recurrent Neural Network with an Attention Model for Sequence Labeling. Knowl.-Based Syst. 2021, 212, 106548.

- Lin, J.C.W.; Shao, Y.; Zhang, J.; Yun, U. Enhanced Sequence Labeling Based on Latent Variable Conditional Random Fields. Neurocomputing 2020, 403, 431–440.

- Shao, Y.; Lin, J.C.W.; Srivastava, G.; Jolfaei, A.; Guo, D.; Hu, Y. Self-Attention-Based Conditional Random Fields Latent Variables Model for Sequence Labeling. Pattern Recognit. Lett. 2021, 145, 157–164.

- Chugh, M.; Whigham, P.A.; Dick, G. Stability of Word Embeddings Using Word2Vec. In Proceedings of the AI 2018: Advances in Artificial Intelligence, Wellington, New Zealand, 11–14 December 2018; Mitrovic, T., Xue, B., Li, X., Eds.; Springer International Publishing AG: Cham, Switzerland, 2018; Volume 11320, pp. 812–818.

- Kang, H.; Yang, J. Performance Comparison of Word2vec and fastText Embedding Models. J. Digit. Contents Soc. 2020, 21, 1335–1343.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Hamilton, W.L. Graph Representation Learning. Synth. Lect. Artif. Intell. Mach. Learn. 2020, 14, 1–159.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: Seattle, WA, USA, 2020; pp. 9726–9735.

- Gao, T.; Yao, X.; Chen, D. SimCSE: Simple Contrastive Learning of Sentence Embeddings. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online/Punta Cana, Dominican Republic, 7–11 November 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 6894–6910.

- You, Y.; Chen, T.; Sui, Y.; Chen, T.; Wang, Z.; Shen, Y. Graph Contrastive Learning with Augmentations. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 5812–5823.

- Hassani, K.; Khasahmadi, A.H. Contrastive Multi-View Representation Learning on Graphs. In Proceedings of the 37th International Conference on Machine Learning, Vienna, Austria, 12–18 July 2020; PMLR, 2020; pp. 4116–4126.

- Zhu, Y.; Xu, Y.; Yu, F.; Liu, Q.; Wu, S.; Wang, L. Graph Contrastive Learning with Adaptive Augmentation. In Proceedings of the Web Conference 2021, Online, 19–23 April 2021; ACM: Ljubljana, Slovenia, 2021; pp. 2069–2080.

- Liu, B.; Zhang, P.; Lu, T.; Gu, N. A Reliable Cross-Site User Generated Content Modeling Method Based on Topic Model. Knowl.-Based Syst. 2020, 209, 106435.

- Ye, M.; Yin, P.; Lee, W.C.; Lee, D.L. Exploiting Geographical Influence for Collaborative Point-of-Interest Recommendation. In Proceedings of the 34th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '11), Beijing, China, 24–28 July 2011; ACM Press: New York, NY, USA, 2011; p. 325.

- Tan, H.; Shao, W.; Wu, H.; Yang, K.; Song, L. A Sentence Is Worth 128 Pseudo Tokens: A Semantic-Aware Contrastive Learning Framework for Sentence Embeddings. arXiv 2022.

- Liu, Y.; Ao, X.; Dong, L.; Zhang, C.; Wang, J.; He, Q. Spatiotemporal Activity Modeling via Hierarchical Cross-Modal Embedding. IEEE Trans. Knowl. Data Eng. 2020, 34, 462–474.

- Van den Oord, A.; Li, Y.; Vinyals, O. Representation Learning with Contrastive Predictive Coding. arXiv 2019, arXiv:1807.03748.

- Yu, J.; Yin, H.; Gao, M.; Xia, X.; Zhang, X.; Viet Hung, N.Q. Socially-Aware Self-Supervised Tri-Training for Recommendation. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual Event/Singapore, 14–18 August 2021; ACM: Rochester, NY, USA, 2021; pp. 2084–2092.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. arXiv 2018, arXiv:1710.10903.

- Le, V.V.; Tran, T.K.; Nguyen, B.N.T.; Nguyen, Q.D.; Snasel, V. Network Alignment across Social Networks Using Multiple Embedding Techniques. Mathematics 2022, 10, 3972.

| Notation | Definition |
|---|---|
|  | Source social network, target social network. |
| U | Set of users in the social network. |
| E | Edge set of the social network. |
| A | User features of the social network. |
|  | User attributes, UGCs, and user check-ins. |
|  | User name, city of residence, and user preference. |
|  | The ith user. |
|  | Embedding vectors of user semantic features. |
|  | Vector space. |
| D | Feature dimension. |
| N | Total number of users in the network. |
| M | Aligned user pairs. |
|  | Preference sharing matrix. |
|  | Semantic centrality of a user. |
|  | Topology sampling probability. |
|  | Feature masking probability. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, Y.; Zhao, P.; Zhang, Q.; Xing, L.; Wu, H.; Ma, H. A Semantic-Enhancement-Based Social Network User-Alignment Algorithm. Entropy 2023, 25, 172. https://doi.org/10.3390/e25010172
