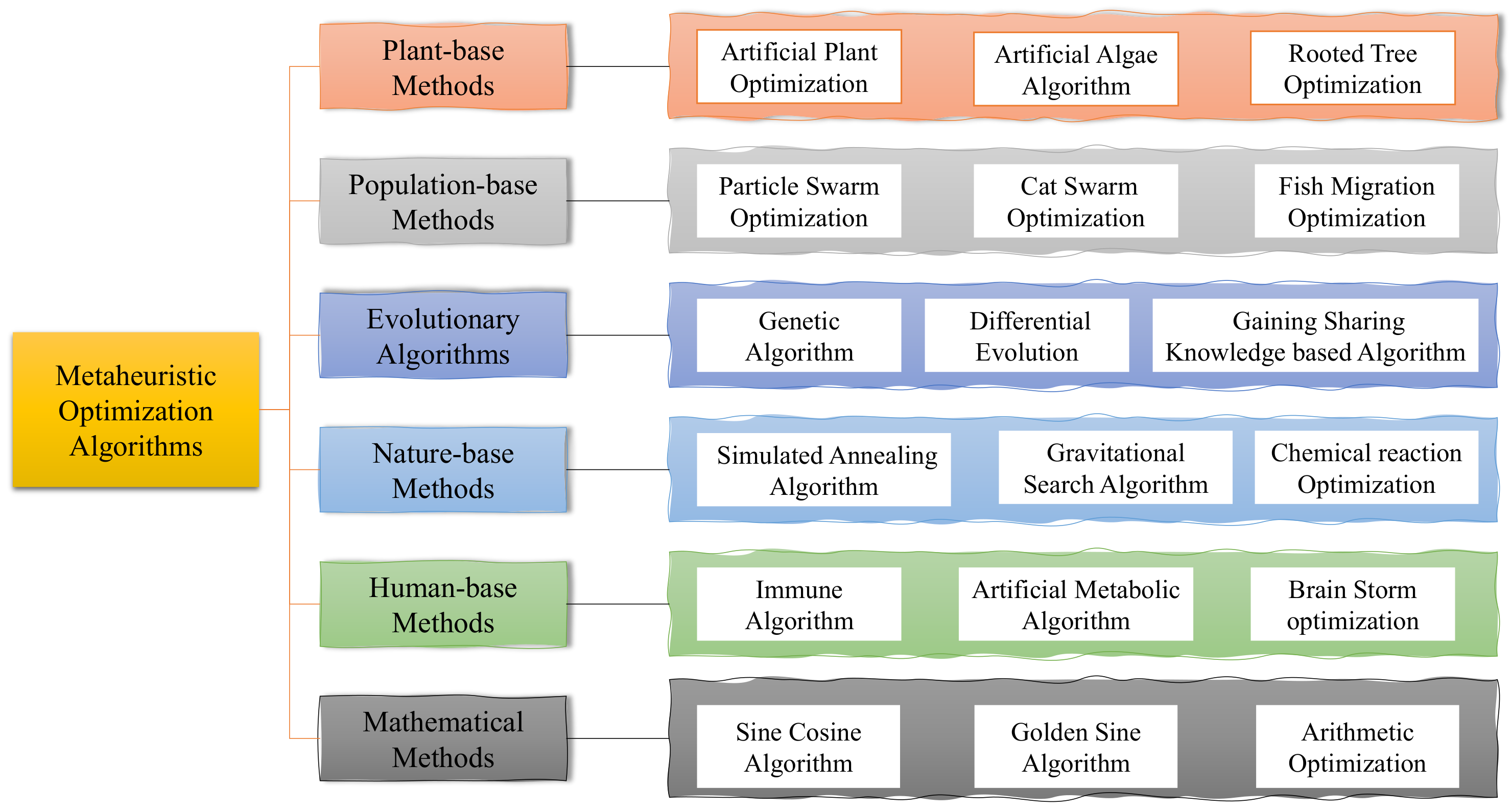

In recent decades, various meta-heuristic optimization algorithms [2,3] have been proposed. As shown in Figure 1, they can be divided into six main categories: plant-based methods, population-based methods, evolutionary algorithms, nature-based methods, human-based methods, and mathematical methods. Most traditional intelligent optimization algorithms belong to the first five categories. These algorithms are inspired by the behaviors of plants and animals and by other natural phenomena: their designers summarize natural laws, identify features, build models, adjust parameters, and design optimization algorithms based on those laws to solve specific problems. Plant-based methods search for the global optimum by simulating the growth process of plants. Representative algorithms are Artificial Plant Optimization (APO) [4], the Artificial Algae Algorithm (AAA) [5], Rooted Tree Optimization (RTO) [6], and the Flower Pollination Algorithm (FPA) [7]. Population-based methods include Particle Swarm Optimization (PSO) [8], Cat Swarm Optimization (CSO) [9], Ant Colony Optimization (ACO) [10], and Fish Migration Optimization (FMO) [11], as well as the recently proposed Phasmatodea Population Evolution (PPE) [12] algorithm. Algorithms of this kind maintain multiple individuals, and their performance is affected by the initial values. Each individual in a population-based algorithm works independently or cooperatively to find the global optimum. Evolutionary algorithms include the Genetic Algorithm (GA) [13], Differential Evolution (DE) [14], and the Gaining Sharing Knowledge-based Algorithm (GSK) [15]. Such algorithms improve their ability to find the global optimum by continuously accumulating high-quality solutions. Nature-based methods include the Simulated Annealing Algorithm (SAA) [16], the Gravitational Search Algorithm (GSA) [17], and Chemical Reaction Optimization (CRO) [18]. These algorithms simulate phenomena that exist in nature and summarize the objective laws behind them to build an algorithmic model. Human-based methods include the Immune Algorithm (IA) [19], the Population Migration Algorithm (PMA) [20], and Brain Storm Optimization (BSO) [21]. Mathematical methods include the Sine Cosine Algorithm (SCA) [22], the Golden Sine Algorithm (Gold-SA) [23], and the Arithmetic Optimization Algorithm (AOA) [24]. Many other excellent intelligent optimization algorithms [25] have been proposed. Meta-heuristic optimization algorithms have been combined with image processing, fault detection, path planning, particle filtering, feature selection, production scheduling, intrusion detection, support vector machines, wireless sensors, neural networks, and other technical fields, which has widened their range of applications. However, the fact that an algorithm performs well on a specific problem does not guarantee its effectiveness on other problems. No optimization algorithm can solve all optimization problems, which is the well-known "No Free Lunch (NFL)" theorem [26]. Therefore, researchers continue to improve existing algorithms and propose new ones to solve optimization problems in different fields.
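To make the idea of individuals cooperating toward a global optimum concrete, the following is a minimal sketch of one population-based method, PSO, written in plain Python. It is only an illustration under stated assumptions: the function names (`sphere`, `pso`), the parameter values (`w`, `c1`, `c2`, swarm size, iteration count), and the toy sphere objective are choices made here, not settings taken from the cited works.

```python
import random


def sphere(x):
    """Toy objective (assumed for illustration): global minimum 0 at the origin."""
    return sum(v * v for v in x)


def pso(objective, dim, bounds, n_particles=20, n_iters=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic global-best PSO; all parameter defaults are illustrative assumptions."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Random initial positions: the result depends on these initial values.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward own best (independent search)
                # + pull toward swarm best (cooperation).
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Clamp the new position to the search bounds.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


if __name__ == "__main__":
    best, best_val = pso(sphere, dim=2, bounds=(-5.0, 5.0))
    print(best, best_val)
```

Changing `seed` changes the initial population and therefore the search trajectory, which illustrates the sensitivity to initial values mentioned above. Good behavior on this toy function says nothing about other problems, in line with the NFL theorem.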

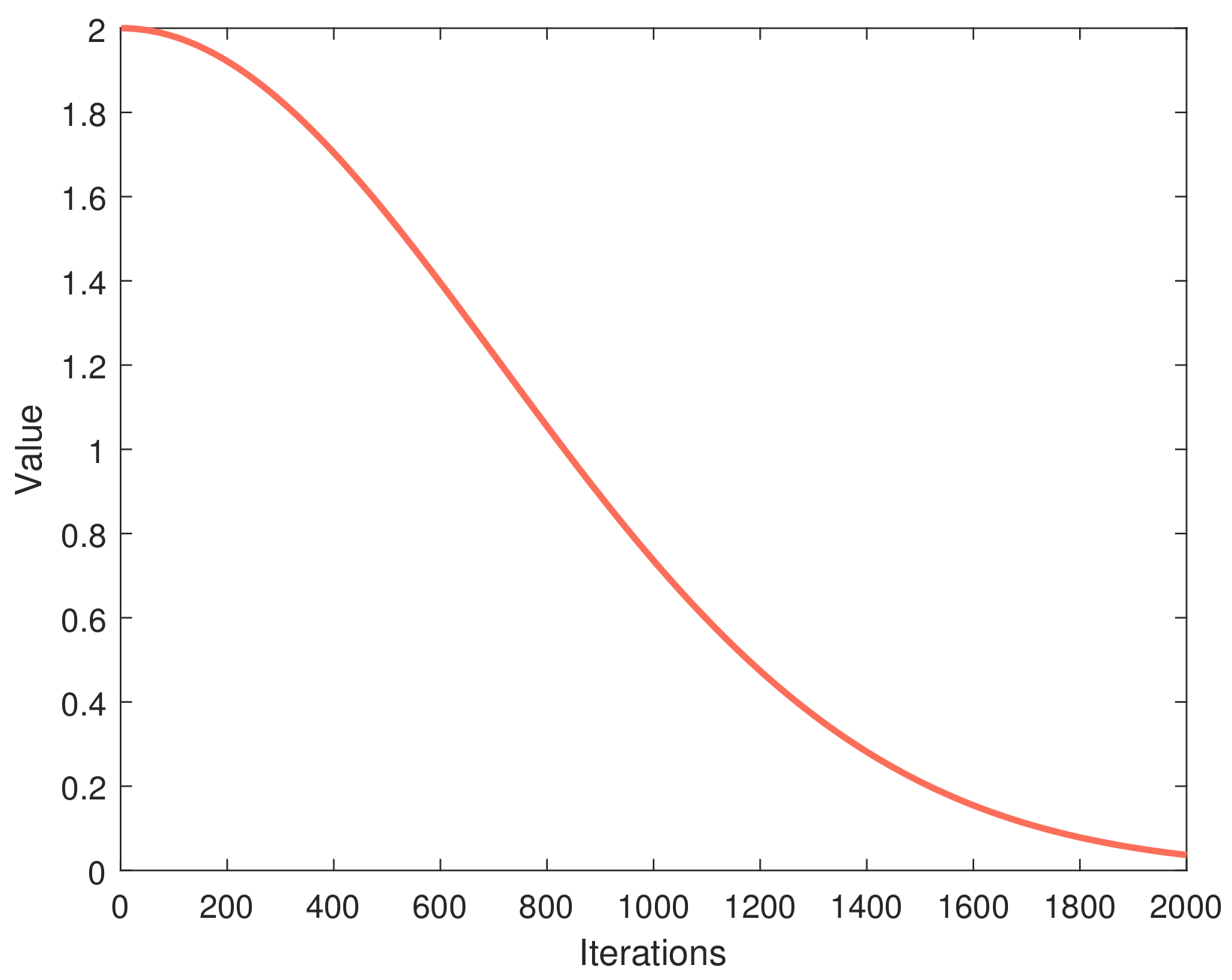

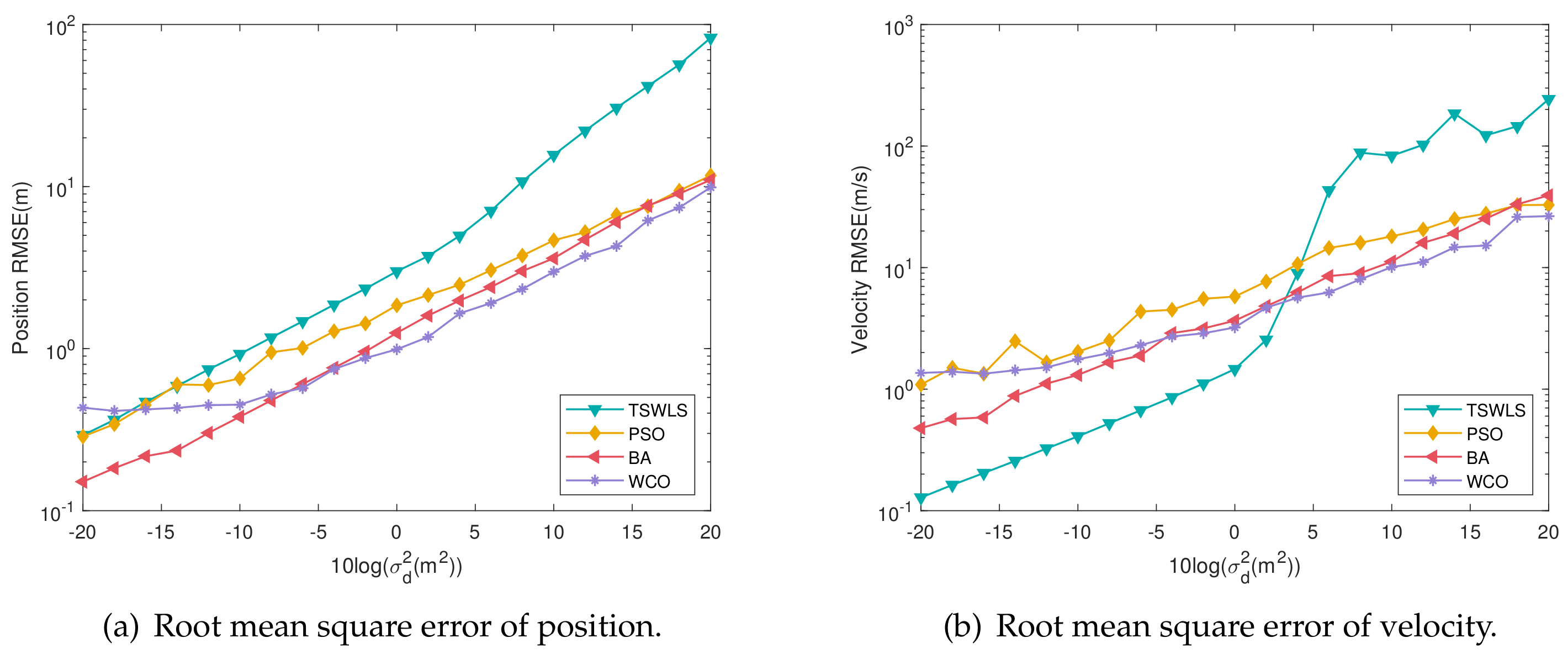

Motivated by the NFL theorem, this paper proposes a novel meta-heuristic algorithm called the Willow Catkin Optimization (WCO) algorithm. The algorithm is inspired by the seed dispersal process of willow trees. Willow catkins are the seeds of the willow tree and are characterized by their ability to float to distant places with the help of the wind. Even a light breeze can carry them, and while floating they can settle on land suitable for growth, where they take root. In addition, willow catkins stick to each other and often gather in clusters. Ultimately, the willow always finds a suitable place to take root. Based on these characteristics, we divide the WCO algorithm into two processes: fluttering with the wind and gathering into a cluster. We select CEC2017 [27] as the benchmark function set to test the performance of the WCO algorithm on numerical functions. Its results are compared in 10, 30, and 50 dimensions (10D, 30D, and 50D) with those of PSO, SCA, the Bat Algorithm (BA) [28], the Bamboo Forest Growth Optimizer (BFGO) [29], the Rafflesia Optimization Algorithm (ROA), and the Tumbleweed Algorithm (TA) [30]. In addition, WCO is applied to the moving node localization problem in wireless sensor networks (WSN) to test its ability to handle a practical problem. The WCO algorithm achieves good results in this application compared with the other algorithms.

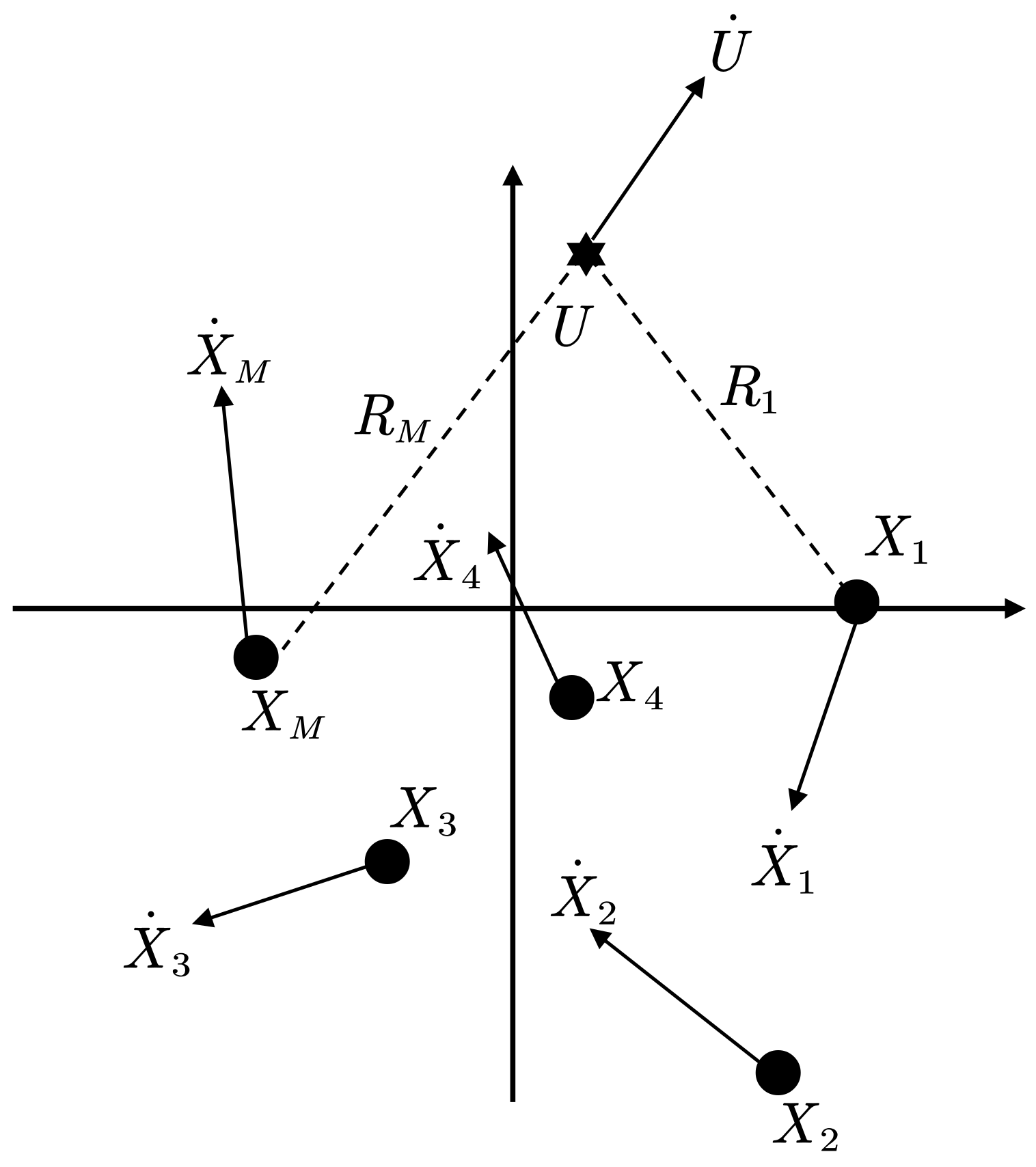

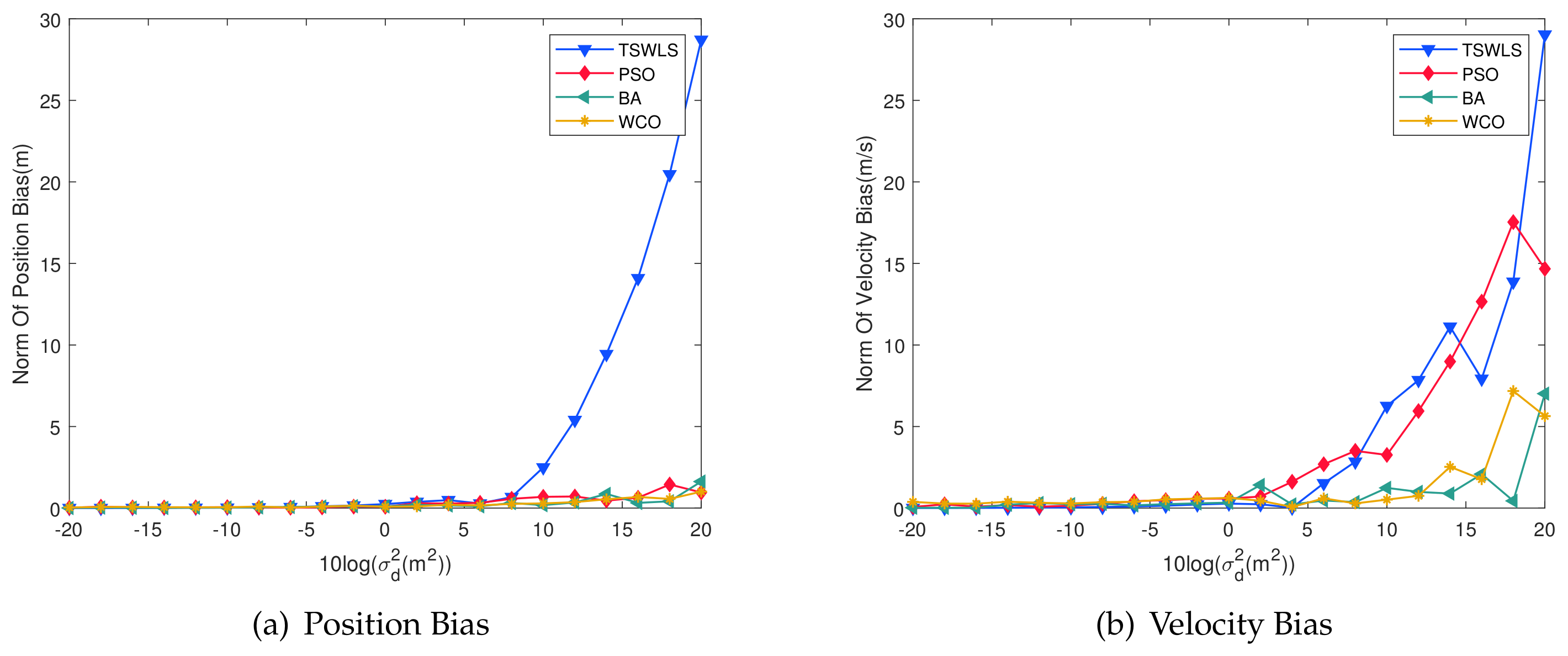

Wireless sensor networks (WSN) [

31] consist of many low-cost sensor nodes with communication and data processing capabilities. The current wireless sensor localization methods are divided into two types: non-ranging and ranging. Non-ranging-based localization methods do not require known distances, angles, or signal strengths and have the advantage of low hardware overhead, simple configuration, and high system scalability. Typical representatives of non-ranging localization algorithms include the distance vector-hop (DV-hop) [

32,

33] and multidimensional scaling maximum a posteriori probability estimation (MDS-MAP) [

34,

35]. Range-based methods extract measurement information based on distance, angle, etc. from different features of the radio signal, such as time of arrival (TOA) [

36,

37], Time Difference of Arrival (TDOA) [

38,

39], angle of arrival (AOA) [

40] and radio signal strength indication (RSSI) [

41,

42], etc. TDOA has high positioning accuracy. However, it is also prone to time difference blurring, it is not easy to locate the target signal with high frequency, and the speed of the target cannot be determined. Adding Doppler frequency difference information to TDOA can improve the localization accuracy, eliminate the problem of time difference blurring, and determine the target’s speed. Many scholars have put forward their views on motion target localization techniques in recent years. The multi-station TDOA/FDOA co-localization [

43,

44] method is used to solve the nonlinear system of equations with the time difference and frequency difference, which has the defects of high complexity and extensive computation [

45,

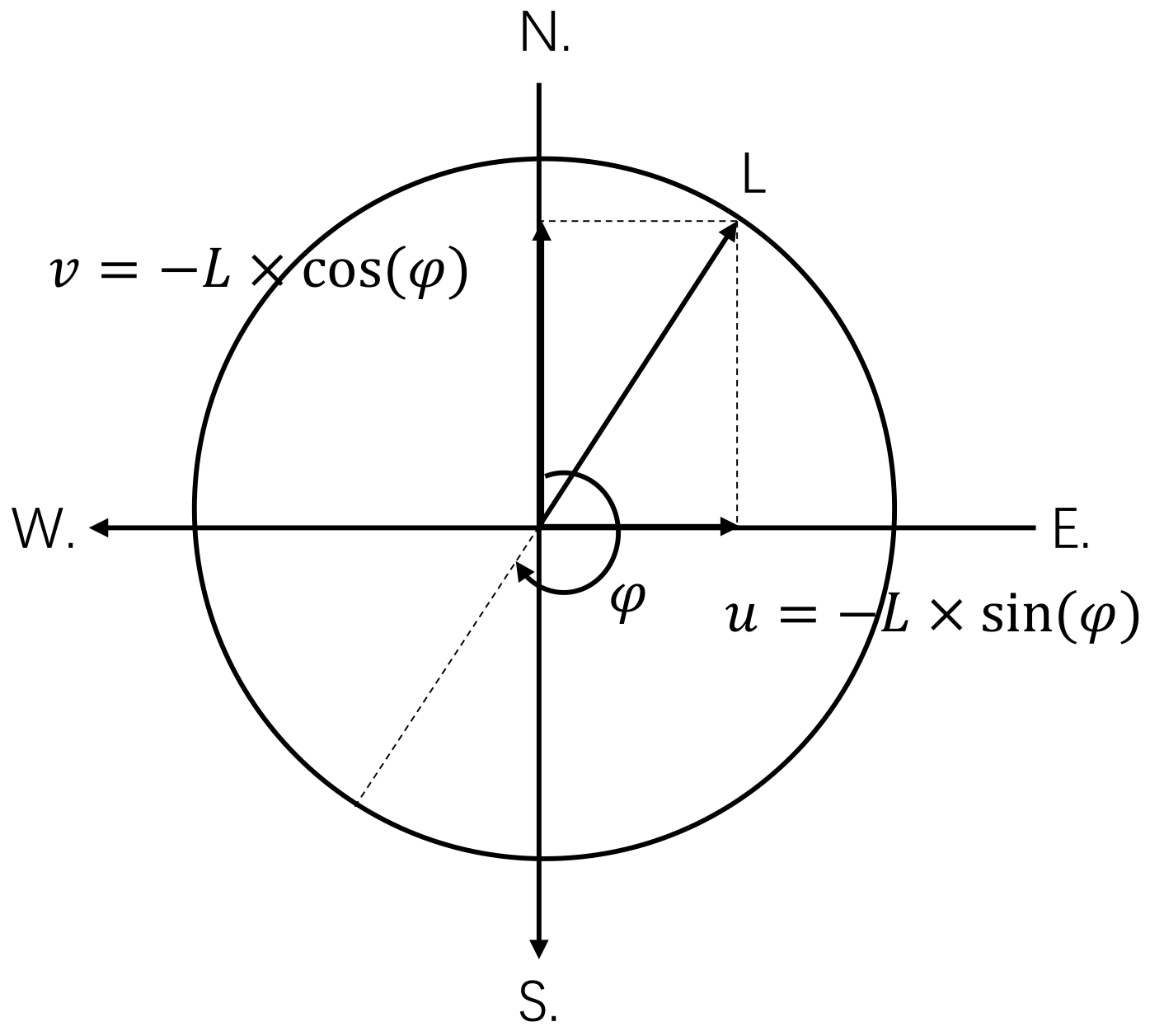

46]. If the algorithm needs to be optimized enough, the localization results easily fall into the local optimum and slow convergence speed. Therefore, this paper applies the WCO algorithm to the motion node localization problem in WSN to optimize the joint TDOA/FDOA joint localization accuracy and improve the localization speed to reduce the time.