Abstract

In the last decade permutation entropy (PE) has become a popular tool to analyze the degree of randomness within a time series. In typical applications, changes in the dynamics of a source are inferred by observing changes of PE computed on different time series generated by that source. However, most works neglect the crucial question of the statistical significance of these changes. The main reason probably lies in the difficulty of assessing, out of a single time series, not only the PE value but also its uncertainty. In this paper we propose a method to overcome this issue by generating surrogate time series. The analysis conducted on both synthetic and experimental time series shows the reliability of the approach, which can be promptly implemented by means of widely available numerical tools. The method is computationally affordable for a broad range of users.

1. Introduction

Information measures are increasingly important in the investigation of the complex dynamics underlying processes in a variety of frameworks [1]. The task mostly consists of evaluating the amount of information contained in a discrete sequence of a given length. The sequence can be made of symbols belonging to a finite alphabet, e.g., DNA sequences [2], or correspond to a time series of realizations of a physical random variable, which ideally takes on values out of a continuous set. The latter case can be reduced to the former one, at the expense of a loss in resolution, by encoding trajectories into symbols upon a suitable coarse graining of the state space [3,4]. The information content of a sequence built on a finite alphabet can then be inferred by applying techniques such as Shannon block entropy [2,4,5,6].

In time series analysis, permutation entropy (PE), first devised in 2002 by Bandt and Pompe [7], provides an alternative approach. Possibly because the symbolic sequences are naturally generated by the variable of interest without further model assumptions [7] or any preassigned partition of the phase space [8], PE has progressively become one of the most used information measures. Given a time series and a positive, integer dimension m, PE is estimated by first encoding m-dimensional, consecutive sections of that time series into symbolic sequences corresponding to permutations, and then by counting their occurrences. PE finally corresponds to the plug-in Shannon entropy computed on the sample distribution of the observed occurrence frequencies. Owing to the simplicity of its evaluation, PE has become popular in many research areas ranging from medicine [9,10,11] to neuroscience [12,13,14,15], from climatology [16] to optoelectronics [17,18] and even transport complexity analysis [19].

A major property of PE is that its growth rate with the dimension m asymptotically coincides with the Kolmogorov–Sinai entropy of the time series source [7]: the larger m, the more reliable the measure. Unfortunately, given m, the number of possible symbolic sequences can be as large as $m!$. For this reason, the required computational load typically becomes cumbersome when m exceeds 10. In addition, in order to yield reliable sample distributions and avoid finite-size bias [20], the length of the input time series has to be much larger than the size of the set of the visited symbolic sequences.

The difficulty of reliably assessing PE at large values of the dimension m is a likely reason why PE, rather than being employed as an estimator of entropy rate, is often used at fixed m as an irregularity or complexity marker. Indeed, PE reaches the maximum value $\ln m!$ (throughout the paper we use “nat” units) in the case of purely stochastic time series, and the minimum value, zero, in the case of monotonic functions. More interestingly, the variation of PE, again at fixed m, computed on different segments of a time series can provide a marker of different states of the underlying system, i.e., of a nonstationary behavior. Cao et al. [21] first described the use of PE to detect dynamical changes in complex time series. In a sample application, they showed how the method could be used to detect the onset of epileptic seizures out of clinical electroencephalographic (EEG) recordings. The approach has since been successfully applied in a wide spectrum of fields, ranging from economics [22,23] to neurophysiology [24,25,26,27] and geophysics [28,29].

Since PE is a statistic computed on sample time series, detecting statistically significant differences of PE between segments immediately calls for an estimate of the variability, or uncertainty, of the PE computed on each single segment. (We assume here that, as far as PE is concerned, each segment is stationary. Needless to say, characterizing a segment as stationary requires the same statistical tools used to detect changes. In other words, addressing the stationarity requirement can promptly lead to circular reasoning.) A standard approach consists of observing different time series produced under the very same conditions and then applying basic statistics to assess the uncertainty of PE. Unfortunately, in fields such as geophysics and astrophysics, experimental conditions are neither controllable nor reproducible. Reproducibility is also a major issue in life sciences; a way to circumvent it consists of observing samples recorded from different individuals, under the rather strong assumption that they provide similar responses [27,30,31].

An alternative approach to the assessment of the uncertainty of sample PE relies on approximating the symbolic sequence generation as a Markov process [32,33]. The inference of the corresponding stochastic matrix would analytically lead to the evaluation of the uncertainty out of a single measurement [34], a special case thereof occurring when the process is memoryless [35]. Alternatively, the uncertainty can be estimated by bootstrap methods [36]. Unfortunately, the number of nonzero elements of a stochastic matrix describing the generation of symbolic sequences with dimension m can be as large as $m \cdot m!$ (each one of the $m!$ symbolic sequences can be followed by up to m symbolic sequences), thus making a reliable inference of each element of the matrix impractical in most cases.

In this paper, we investigate an alternative method to phenomenologically assess the PE variability out of the single time series which the PE was computed on. The method exploits the generation of surrogate time series, a randomization technique that is commonly used to conduct data-driven hypothesis testing [37,38]. We rely here on the iterative amplitude-adjusted Fourier transform (IAAFT) algorithm [39], possibly the most reliable among the methods that address continuous processes [38]. Both PE and IAAFT can be promptly implemented by using numerical packages that are available from open source repositories (more details are provided in Section 2 and Section 3).

To check the robustness of the method, we consider time series generated by two test bench systems: the chaotic Lorenz attractor with different degrees of observational noise, and an autoregressive process with different autocorrelation time values. The use of both these systems provides a reliable emulation of a wide range of real experimental situations. Our analysis shows that the variability of PE can indeed be reliably estimated on a single time series. We then applied the method to an experimental case that concerns the recognition, via analysis of EEG recordings, of cognitive states corresponding to closed and open eyes in resting state. The method stood the test in this case as well.

The work is organized as follows. A summary of PE and its variability is presented in Section 2, whereas surrogate generation is the topic of Section 3. In Section 4, we discuss the surrogate-based estimation of PE variability on time series produced by two synthetic systems. The application of the method to experimental time series is addressed in Section 5. Final remarks are discussed in Section 6.

2. Permutation Entropy and Its Variability

Given a scalar time series $X = \{x_t\}_{t=1}^{N}$, let the vector $X_t = (x_t, x_{t+1}, \ldots, x_{t+m-1})$ be a window, or trajectory, comprised of m consecutive observations. Following Bandt and Pompe [7], the window is encoded as the permutation $S_t = (r_0, r_1, \ldots, r_{m-1})$, where each number $r_j$ ($j = 0, \ldots, m-1$) is an integer that belongs to the range $[0, m-1]$ and corresponds to the rank, from the smallest to the largest, of $x_{t+j}$ within $X_t$. Ties between two or more x values are resolved by assigning the relative rank according to the index j. Let $\hat{p}(S)$ be the rate with which a symbolic sequence S is observed within a sufficiently long time series X. The sample PE of X, computed by setting the symbolic sequence dimension m, is defined as

$$\hat{H}(m) = -\sum_{S \in \hat{\mathcal{S}}_m} \hat{p}(S)\,\ln \hat{p}(S) + \frac{\hat{M} - 1}{2N}, \tag{1}$$

where the sum, corresponding to the so-called plug-in estimator, runs over the set $\hat{\mathcal{S}}_m$ of the visited permutations of m distinct numbers, whose size $\hat{M}$ satisfies $\hat{M} \le m!$. In Equation (1), the additional term $(\hat{M} - 1)/(2N)$ is instead the so-called Miller–Madow correction [40,41,42,43], which compensates for the negative bias affecting the plug-in estimator. Software packages that implement PE are available from open source repositories [44,45,46].
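The encoding and Equation (1) can be sketched in a few lines of Python. This is a minimal illustration assuming NumPy ≥ 1.20 (for `sliding_window_view`); the function names are hypothetical and are not those of the packages in [44,45,46]. Ties are broken by a stable sort, so the earlier sample receives the lower rank. As an assumption, the sample size in the Miller–Madow term is taken as the number of encoded windows, $N - m + 1$, which is practically $N$ when $N \gg m$.

```python
from collections import Counter

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ordinal_patterns(x, m):
    """Encode every window of m consecutive samples as its rank permutation.

    r_j is the position of x_{t+j} in the sorted window (0 = smallest).
    A stable sort breaks ties by position, so earlier samples get lower ranks.
    """
    windows = sliding_window_view(np.asarray(x, dtype=float), m)  # (N - m + 1, m)
    order = np.argsort(windows, axis=1, kind="stable")
    ranks = np.argsort(order, axis=1)  # inverse permutation gives the ranks
    return [tuple(int(r) for r in row) for row in ranks]


def permutation_entropy(x, m, miller_madow=True):
    """Sample PE in nats: plug-in estimator plus optional Miller-Madow term."""
    patterns = ordinal_patterns(x, m)
    n = len(patterns)  # number of encoded windows (assumed sample size)
    counts = np.array(list(Counter(patterns).values()), dtype=float)
    p = counts / n
    h = float(-np.sum(p * np.log(p)))
    if miller_madow:
        h += (len(counts) - 1) / (2.0 * n)  # (M_hat - 1) / (2N)
    return h


rng = np.random.default_rng(0)
white = rng.standard_normal(20_000)
print(permutation_entropy(white, 3) / np.log(6))  # close to 1 for white noise
```

For a monotonic series only one pattern is visited, so both the plug-in term and the correction vanish and the PE is zero, as stated in Section 1.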

In Equation (1) the hat symbol on both $\hat{H}$ and $\hat{p}$ indicates that they are sample statistics, which makes the sample PE a quantity affected by an uncertainty $\delta\hat{H}$. The uncertainty is crucial in order to express the significance of the sample value given by Equation (1). As discussed in Section 1, a special case in which the uncertainty can be estimated out of the set $\{\hat{p}(S)\}$ occurs when the symbolic sequence generation is memoryless [35]. However, besides the fact that most dynamical systems do have a memory, the standard PE encoding procedure requires overlapping trajectories, which intrinsically produces a memory effect on the succession of symbols [32]: consecutive windows share $m - 1$ samples, whose relative order is already fixed, so that only the rank of the newly included sample is free. Thus, for example, in the case $m = 3$, the permutation $(0, 1, 2)$ can only be followed by itself or by $(0, 2, 1)$ or $(1, 2, 0)$.
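This memory effect can be verified by brute force. Two overlapping windows of dimension m span $m + 1$ samples, so enumerating every ordering of $m + 1$ distinct values lists every admissible pair (pattern, next pattern). The plain-Python sketch below (helper names are hypothetical) shows that for $m = 3$ the pattern $(0, 1, 2)$ admits exactly three successors, namely itself, $(0, 2, 1)$ and $(1, 2, 0)$, and more generally that each of the $m!$ patterns has m successors.

```python
from itertools import permutations


def rank_pattern(window):
    """Rank of each element within the window (values assumed distinct)."""
    order = sorted(range(len(window)), key=lambda j: window[j])
    ranks = [0] * len(window)
    for r, j in enumerate(order):
        ranks[j] = r
    return tuple(ranks)


def successor_map(m):
    """Map each pattern to the set of patterns that can follow it.

    Every ordering of m + 1 distinct values is enumerated; the first m values
    form the current window and the last m values form the next one.
    """
    succ = {}
    for values in permutations(range(m + 1)):
        first = rank_pattern(values[:m])
        second = rank_pattern(values[1:])
        succ.setdefault(first, set()).add(second)
    return succ


succ = successor_map(3)
print(sorted(succ[(0, 1, 2)]))  # [(0, 1, 2), (0, 2, 1), (1, 2, 0)]
```

Since only m of the $m!$ possible next patterns are admissible, the transition matrix of the encoded sequence is far from that of a memoryless source.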

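Nevertheless, for the sake of comparison, it is worth evaluating the uncertainty that would occur in the memoryless case. As shown by Basharin [40], the variance of the sample Shannon entropy computed on an N-fold realization of a memoryless, multinomial process described by the set of probabilities $\{p(S)\}$ scales with N as

$$\mathrm{Var}\big[\hat{H}\big] = \frac{\sigma^2}{N} + O\!\left(N^{-2}\right),$$

where the parameter $\sigma^2$, defined as $\sigma^2 = \sum_S p(S)\,[\ln p(S)]^2 - \big[\sum_S p(S) \ln p(S)\big]^2$, is a sort of population variance of the random variable $-\ln p(S)$. Applying this scaling behavior to PE and defining the plug-in estimator of the parameter $\sigma^2$ as [34,35]

$$\hat{\sigma}^2 = \sum_{S \in \hat{\mathcal{S}}_m} \hat{p}(S)\,[\ln \hat{p}(S)]^2 - \Big[\sum_{S \in \hat{\mathcal{S}}_m} \hat{p}(S) \ln \hat{p}(S)\Big]^2,$$

the uncertainty of the sample PE in the memoryless case can be estimated as

$$\delta\hat{H}_{\mathrm{ml}} = \frac{\hat{\sigma}}{\sqrt{N}}, \tag{2}$$

where N is the length of the time series X.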
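Equation (2) translates directly into code. In the NumPy sketch below (the function name is hypothetical), N is taken as the total number of counted symbolic sequences, an assumption that differs from the series length only by $m - 1$ windows. Note that the estimate vanishes for a uniform distribution, since $-\ln p(S)$ is then constant.

```python
import numpy as np


def memoryless_pe_uncertainty(counts):
    """Memoryless (multinomial) uncertainty sigma_hat / sqrt(N), cf. Equation (2).

    counts: occurrence counts of the visited symbolic sequences (all > 0).
    sigma_hat^2 is the plug-in variance of -ln p over the visited sequences.
    """
    counts = np.asarray(counts, dtype=float)
    n = counts.sum()
    p = counts / n
    log_p = np.log(p)
    sigma2 = np.sum(p * log_p**2) - np.sum(p * log_p) ** 2
    # Clip tiny negative values caused by floating-point cancellation.
    return float(np.sqrt(max(sigma2, 0.0) / n))
```

The counts can be obtained, for instance, from a `collections.Counter` over the encoded patterns.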

3. Summary of Surrogate Generation

Given a time series, henceforth referred to as the “original” one, the goal of surrogate generation is the synthesis of a set of close replicas that share basic statistical properties with the original one [38]. The approach was first proposed [37] to generate data sets consistent with the null hypothesis of an underlying linear process, with the ultimate aim of testing the presence of nonlinearity. The method was then generalized to test other null hypotheses, most notably to evaluate the statistical significance of cross-correlation estimates [47,48,49], as well as other coupling metrics such as transfer entropy [50].

The implementation of surrogate generation requires the algorithm to mirror the null hypothesis to be tested. For example, a straightforward random shuffling of data points preserves the amplitude distribution of the original time series while destroying any temporal structure, and thus allows for testing the null hypothesis of a white noise source having a given amplitude distribution [37]. Another implementation targets the null hypothesis of an underlying Gaussian process with a given finite autocorrelation. In this case, the surrogate generation is conducted by Fourier-transforming the original time series and then randomizing the phases of the resulting frequency-domain sequence: by virtue of the Wiener–Khinchin theorem, computing the inverse Fourier transform of this last sequence leads to a time series that has the same autocorrelation as the original one [37]. While the exact, simultaneous conservation of both the amplitude distribution and the autocorrelation function is impossible, the IAAFT algorithm conserves the amplitude distribution while approximating the spectrum and thus the autocorrelation [39].
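As an illustration of the phase-randomization scheme, the NumPy sketch below (function names are hypothetical) keeps the Fourier amplitudes of the input and draws uniform random phases; the zero-frequency term, and the Nyquist term for even lengths, are left unchanged so that the output is real. Because the periodogram is unchanged, so is the circular sample autocorrelation, which is the Wiener–Khinchin argument in discrete form. The amplitude distribution, by contrast, is generally not preserved, which is the issue addressed by IAAFT.

```python
import numpy as np


def phase_randomized_surrogate(x, rng=None):
    """Fourier-transform surrogate: original Fourier amplitudes, random phases."""
    rng = np.random.default_rng(rng)
    x = np.asarray(x, dtype=float)
    n = len(x)
    spectrum = np.fft.rfft(x)
    phases = rng.uniform(0.0, 2.0 * np.pi, size=len(spectrum))
    new_spectrum = np.abs(spectrum) * np.exp(1j * phases)
    new_spectrum[0] = spectrum[0]  # keep the mean (real zero-frequency term)
    if n % 2 == 0:
        new_spectrum[-1] = spectrum[-1]  # keep the real Nyquist term
    return np.fft.irfft(new_spectrum, n=n)


def circular_autocorrelation(x):
    """Circular sample autocorrelation obtained from the periodogram."""
    xc = np.asarray(x, dtype=float) - np.mean(x)
    return np.fft.irfft(np.abs(np.fft.rfft(xc)) ** 2, n=len(xc)) / len(xc)
```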

A more general way of producing surrogate data consists of setting up a “simulated annealing” pipeline in which, at each step, time series samples are randomly swapped and, depending on the effect of the swap on a suitably defined cost function, the step is either accepted or rejected [51]. This way, the statistical properties to be preserved in surrogate data—and thus the details of the null hypothesis to be tested—are transferred from the algorithm to the definition of the cost function, so that in principle any hypothesis can be tested. However, this versatility comes at the expense of increased computational costs [38].

In the present work, we use the IAAFT algorithm for surrogate generation, first described by Schreiber and Schmitz [39] and possibly the most reliable one in the case of continuous processes. The main feature of IAAFT is the simultaneous quasi-conservation of the amplitude distribution and the autocorrelation. Consequently, the local structure of the trajectories, and thus the statistical properties of the encoded symbolic sequences, is expected to be preserved. By contrast, possibly the simplest surrogate generation method, namely a random shuffling of data points, would act directly on the symbolic sequences, similarly to the superposition of white noise. We stress that, while the analysis described below shows IAAFT to be satisfactory, the choice of an optimal surrogate generation method deserves further investigation, as discussed in Section 6.

The main steps of the algorithm are summarized below [52]. Software packages that implement IAAFT are available from open source repositories [53,54,55,56,57]. An implementation in Matlab and Python, developed by the authors, is also available [58].

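Given the n-th value $x_n$ of a time series $X = \{x_n\}_{n=1}^{N}$, let $r_n$ be the amplitude rank, from the smallest to the largest, of $x_n$ within the time series X.

- In step 0 of the algorithm, the values of the time series X are randomly shuffled so as to yield a sequence $Y^{(0)} = \{y^{(0)}_n\}$.

- Step 1 consists of FFT-transforming the two time series X, $Y^{(i)}$, thus producing the frequency-domain sequences $\tilde{X} = \{\tilde{x}_k\}$, $\tilde{Y}^{(i)} = \{\tilde{y}^{(i)}_k\}$, respectively. Let $\varphi^{(i)}_k$ be the phase, i.e., the argument, of the complex number $\tilde{y}^{(i)}_k$ ($k = 0, \ldots, N-1$).

- Step 2 consists of two parts. First, the two Fourier sequences $\tilde{X}$, $\tilde{Y}^{(i)}$ are combined to produce the sequence $\tilde{Z}^{(i)} = \{\tilde{z}^{(i)}_k\}$, where $\tilde{z}^{(i)}_k = |\tilde{x}_k| \exp\big(\sqrt{-1}\,\varphi^{(i)}_k\big)$; in other words, $\tilde{z}^{(i)}_k$ has the amplitude of $\tilde{x}_k$ and the phase of $\tilde{y}^{(i)}_k$. Second, the inverse Fourier transform of the sequence $\tilde{Z}^{(i)}$ is computed, thus yielding the time-domain sequence $Z^{(i)} = \{z^{(i)}_n\}$. For each value $z^{(i)}_n$, let $\rho^{(i)}_n$ be the rank, from the smallest to the largest, of $z^{(i)}_n$ within the time series $Z^{(i)}$.

- Step 3 consists of replacing the n-th value $z^{(i)}_n$ with the value $x_{n'}$ such that $r_{n'} = \rho^{(i)}_n$. This step leads to the amplitude-adjusted sequence $Y^{(i+1)} = \{y^{(i+1)}_n\}$.

- Steps (1) to (3) are finally repeated iteratively, starting from $i = 0$, until the i-th cycle characterized by $\|Z^{(i)} - Y^{(i+1)}\| \ll \|Y^{(i+1)}\|$, i.e., until the Euclidean distance between the sequences $Z^{(i)}$, $Y^{(i+1)}$ (considered as vectors) becomes sufficiently small with respect to their norms.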
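A minimal NumPy sketch of steps 0 to 3 is given below. It is neither the authors' implementation [58] nor any of the packages in [53,54,55,56,57]; the function name is hypothetical. As an assumption, the iteration stops when the rank ordering no longer changes (further cycles would then return the same sequence), a common practical substitute for the tolerance on the Euclidean distance; `max_iter` caps the number of cycles. Real-input FFTs (`rfft`/`irfft`) are used, which is equivalent for real-valued series.

```python
import numpy as np


def iaaft(x, max_iter=1000, rng=None):
    """IAAFT surrogate (sketch): same values as x, approximately same spectrum."""
    rng = np.random.default_rng(rng)
    x = np.asarray(x, dtype=float)
    sorted_x = np.sort(x)                     # x values ordered by rank r_n
    target_amp = np.abs(np.fft.rfft(x))       # |x~_k|
    y = rng.permutation(x)                    # step 0: random shuffle
    for _ in range(max_iter):
        # Steps 1-2: original Fourier amplitudes combined with the phases of y.
        phases = np.angle(np.fft.rfft(y))
        z = np.fft.irfft(target_amp * np.exp(1j * phases), n=len(x))
        # Step 3: the value of rank rho_n in z is replaced by the x value of
        # the same rank, which restores the original amplitude distribution.
        y_next = sorted_x[np.argsort(np.argsort(z))]
        if np.array_equal(y_next, y):         # rank ordering has converged
            break
        y = y_next
    return y
```

By construction the returned series is a permutation of the input, so its amplitude distribution is exact, while its spectrum is only approximately matched.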

Let Y be the final sequence produced by the iteration. By construction, each sequence $Y^{(i)}$, and therefore also Y, has the same amplitude distribution as the original one X. As shown by Schreiber and Schmitz [39], the iteration leads to a discrepancy between the spectra of X and Y whose order can be quantified as

Despite its robustness, IAAFT is sensitive to amplitude mismatches between the beginning and the end of the original input time series [38]. This issue, referred to as periodicity artifacts, can be overcome by trimming the original time series to generate a shorter segment whose end points have sufficiently close amplitudes [37,38]. While in the case of synthetic time series the operation can be implemented without losing information (for example, arbitrarily large numbers of equivalent original time series can be produced), the same is not true for experimental time series. We therefore opted for an alternative approach that consists of detrending the time series so that the end points have equal values. To this end, each value $x_n$ of an input time series X is modified according to the following expression:

$$x_n \;\to\; x_n - (n - 1)\,\Delta, \qquad n = 1, \ldots, N, \tag{3}$$

where $\Delta = (x_N - x_1)/(N - 1)$, so that the last value becomes equal to the first one. Henceforth, for the sake of simplicity, the resulting time series is renamed as X.

The detrending operation of Equation (3) can slightly modify the number of occurrences of the symbolic sequences and consequently the sample PE value: within the same m-dimensional trajectory, the end points are mutually displaced by an amount of order $(m - 1)|\Delta|$, to be compared with $\sigma_X$, the standard deviation of the time series. However, whenever $(m - 1)|\Delta| \ll \sigma_X$, the effects on the PE assessment are negligible.
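The end-point detrending and a check of its impact can be sketched as follows (NumPy; both function names are hypothetical). With $\Delta = (x_N - x_1)/(N - 1)$, the first function subtracts the straight line joining the first and last samples, so that the two end points coincide; the second computes the ratio $(m - 1)|\Delta|/\sigma_X$, whose smallness signals a negligible effect on the PE.

```python
import numpy as np


def detrend_endpoints(x):
    """Subtract (n - 1) * Delta from x_n so that the last value equals the first."""
    x = np.asarray(x, dtype=float)
    n = np.arange(len(x))                 # 0-based index, i.e. n - 1 in the text
    delta = (x[-1] - x[0]) / (len(x) - 1)
    return x - n * delta


def endpoint_shift_ratio(x, m):
    """(m - 1)|Delta| / sigma_X: relative displacement within an m-window."""
    x = np.asarray(x, dtype=float)
    delta = (x[-1] - x[0]) / (len(x) - 1)
    return (m - 1) * abs(delta) / x.std()
```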

4. Surrogate-Based Estimation of PE Variability in the Case of Synthetic Sequences

This section describes the details of surrogate-based estimation of PE variability. To evaluate the performance of the method we consider two synthetic dynamical systems, which allow for the generation of arbitrary numbers of similar time series. The two systems are a Lorenz attractor affected by observational noise and an autoregressive fractionally-integrated moving-average (ARFIMA) process. Both are described in Section 4.3.

4.1. Reference Value of PE Variability

Let X be the realization of a time series of length N generated by a dynamical system. As explained in the previous section, the time series is assumed to be detrended so as to avoid periodicity artifacts. We use the following standard statistical approach, which exploits the system being synthetic, to evaluate the reference uncertainty $s$ of the PE assessment $\hat{H}(X)$. This uncertainty will then be used to evaluate the reliability of the surrogate-based estimation.

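Given X, a set of additional realizations $X_k$, with $k = 1, \ldots, L - 1$, is generated out of the same system by randomly changing the starting seed and applying the detrending operation described by Equation (3). Upon setting $X_0 = X$ and evaluating the sample mean as

$$\langle \hat{H} \rangle = \frac{1}{L} \sum_{k=0}^{L-1} \hat{H}(X_k),$$

the sample variance is evaluated as

$$s^2 = \frac{1}{L - 1} \sum_{k=0}^{L-1} \Big[\hat{H}(X_k) - \langle \hat{H} \rangle\Big]^2.$$

The resulting sample standard deviation $s$ is an estimate of the uncertainty of the permutation entropy of the original time series X. In the present work, $L = 100$.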

4.2. Surrogate-Based Estimation of PE Variability

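The uncertainty of the permutation entropy of the original sequence X is then estimated by relying on surrogate generation as follows. Let $\{Y_k\}$, with $k = 1, \ldots, L$ (again, L is set to 100), be a set of surrogates of the time series X generated via IAAFT and detrended via Equation (3).

Upon evaluating the sample mean as

$$\langle \hat{H} \rangle_{\mathrm{sur}} = \frac{1}{L} \sum_{k=1}^{L} \hat{H}(Y_k),$$

the sample variance is evaluated as

$$s_{\mathrm{sur}}^2 = \frac{1}{L - 1} \sum_{k=1}^{L} \Big[\hat{H}(Y_k) - \langle \hat{H} \rangle_{\mathrm{sur}}\Big]^2. \tag{4}$$

The resulting sample standard deviation $s_{\mathrm{sur}}$ is taken as a measure of the surrogate-based variability of the permutation entropy of a time series X.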

4.3. Synthetic Dynamical Systems

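The noiseless Lorenz attractor is described by the following system of differential equations:

$$\frac{dx}{dt} = \sigma (y - x), \qquad \frac{dy}{dt} = x (\rho - z) - y, \qquad \frac{dz}{dt} = x y - \beta z.$$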

Here, the three parameters are set to $\sigma = 10$, $\rho = 28$, $\beta = 8/3$. The system is integrated by means of a Runge–Kutta (8,9) Prince–Dormand algorithm, with an integration time step that also coincides with the sampling time. The value $x_n$ of the x coordinate computed at the n-th step is then added to a realization of a normal random variable with zero mean and standard deviation $\epsilon\,\sigma_x$, where $\epsilon$ is a non-negative amplitude and $\sigma_x$ is the standard deviation of the x coordinate of the noiseless Lorenz attractor. One has:

$$x'_n = x_n + \epsilon\,\sigma_x\,\xi_n,$$

where $\xi_n$ is a standard normal random variable. In the following, for the sake of simplicity, the noisy value is renamed as $x_n$. The case $\epsilon = 0$ corresponds to the noiseless Lorenz attractor. For any other positive value $\epsilon$, the resulting signal has a power signal-to-noise ratio given by $\sigma_x^2/(\epsilon\,\sigma_x)^2 = \epsilon^{-2}$.
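For readers wishing to experiment with this setup, a self-contained NumPy sketch generating the noisy x coordinate follows. Several choices are assumptions rather than the settings of the study: a fixed-step classical fourth-order Runge–Kutta integrator replaces the Prince–Dormand (8,9) scheme, the step `dt = 0.01`, the transient length and the initial-condition range are illustrative, the parameters default to the classic chaotic values $\sigma = 10$, $\rho = 28$, $\beta = 8/3$, and $\sigma_x$ is estimated from the clean realization itself. All names are hypothetical.

```python
import numpy as np


def lorenz_rhs(state, sigma, rho, beta):
    """Right-hand side of the Lorenz equations."""
    x, y, z = state
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])


def noisy_lorenz_x(n_samples, eps=0.0, dt=0.01, n_transient=1000,
                   sigma=10.0, rho=28.0, beta=8.0 / 3.0, rng=None):
    """x coordinate of the Lorenz system plus noise eps * sigma_x * xi_n.

    Assumptions: classical RK4 with fixed step dt (sampling time = dt) and
    sigma_x estimated from the clean realization itself.
    """
    rng = np.random.default_rng(rng)
    state = rng.uniform(-10.0, 10.0, size=3) + np.array([0.0, 0.0, 25.0])

    def f(s):
        return lorenz_rhs(s, sigma, rho, beta)

    clean = np.empty(n_samples)
    for i in range(n_transient + n_samples):
        k1 = f(state)
        k2 = f(state + 0.5 * dt * k1)
        k3 = f(state + 0.5 * dt * k2)
        k4 = f(state + dt * k3)
        state = state + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        if i >= n_transient:               # discard the initial transient
            clean[i - n_transient] = state[0]
    noise = rng.standard_normal(n_samples)  # xi_n
    return clean + eps * clean.std() * noise
```

With the same seed, runs with different `eps` share the same clean trajectory, which makes it easy to scan the noise amplitude.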

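The ARFIMA process is defined as

$$x_n = \sum_{k=1}^{K} \frac{d\,\Gamma(k - d)}{\Gamma(1 - d)\,\Gamma(k + 1)}\, x_{n-k} + \xi_n,$$

where $\xi_n$ is a standard normal random variable, K is a large, finite truncation order (ideally, $K \to \infty$), and $d \in [0, 1/2)$ is a parameter that tunes the autocorrelation time of the process: from purely white noise in the case $d = 0$, to a progressively more correlated process when $d \to 1/2$. (Negative values of d, which produce anti-correlated processes, are not considered here.)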
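A sketch of ARFIMA generation by a truncated autoregressive expansion of $(1 - B)^d x_n = \xi_n$, with B the backshift operator, follows (NumPy; names and default sizes are hypothetical). The AR coefficients $a_k = d\,\Gamma(k - d)/[\Gamma(1 - d)\,\Gamma(k + 1)]$ are computed with the recursion $a_1 = d$, $a_{k+1} = a_k (k - d)/(k + 1)$, which avoids overflow of the Gamma functions; the history before the first sample is set to zero and a transient is discarded.

```python
import numpy as np


def arfima_0d0(n_samples, d, order=1000, n_transient=1000, rng=None):
    """ARFIMA series from a truncated AR expansion of (1 - B)^d x = xi."""
    rng = np.random.default_rng(rng)
    # a[k - 1] stores a_k for k = 1..order: a_1 = d, a_{k+1} = a_k (k - d) / (k + 1).
    a = np.empty(order)
    a[0] = d
    for k in range(1, order):
        a[k] = a[k - 1] * (k - d) / (k + 1)
    total = n_transient + n_samples
    xi = rng.standard_normal(total)
    x = np.zeros(order + total)  # the first `order` entries are a zero history
    for n in range(total):
        past = x[n:n + order][::-1]  # x_{t-1}, ..., x_{t-order} with t = order + n
        x[order + n] = a @ past + xi[n]
    return x[order + n_transient:]
```

For $d = 0$ all coefficients vanish and the output is the white noise $\xi_n$ itself; for $0 < d < 1/2$ the lag-1 autocorrelation approaches $d/(1 - d)$ as the truncation order grows.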

For both dynamical systems, integration starts from randomly chosen points. To avoid transient effects, the first steps of each integration are discarded. The resulting original time series are made of 10,006 points.

The chosen length is representative of many experimental situations. For example, the EEG time series analyzed in Section 5 are made of a comparable number of points. Moreover, the length of the time series is not expected to play a role in the present method: if the surrogate generation reliably replicates the statistical properties of the original time series, both the intrinsic variability of the original time series and that inferred by surrogate generation are expected to scale as $1/\sqrt{N}$, so that their ratio, modulo higher-order corrections, is expected to be N-independent.

4.4. Numerical Results

Before discussing the results concerning PE uncertainties, it is worth considering the PE sample means $\langle \hat{H} \rangle$ and $\langle \hat{H} \rangle_{\mathrm{sur}}$ as a function of the parameters $\epsilon$ and d for the four values of m considered. We recall that the noise content of a synthetic time series is tuned by means of the parameter $\epsilon$ for the Lorenz system, and d for the ARFIMA process.

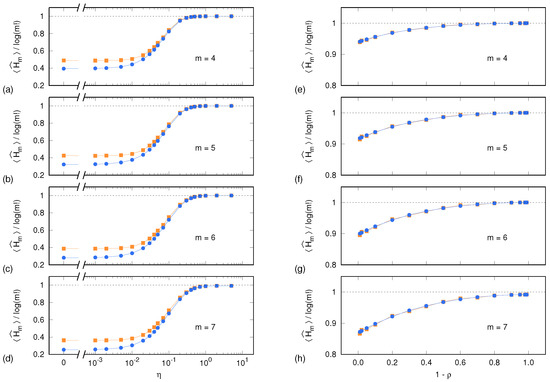

Figure 1 shows, for each system and upon normalization by $\ln m!$, both sample means. As expected, the normalized PE sample means approach unity as the time series become progressively more white-noise-like, i.e., when $\epsilon$ grows (Lorenz system) and when $d \to 0$ (ARFIMA process). It is worth noting that, for the largest m and for both systems, Equation (1) performs well even when the number of visited symbolic sequences approaches $m!$ and thus becomes comparable with the time series length N.

Figure 1.

Sample mean of PE, normalized by $\ln m!$, as a function of the tuning parameters $\epsilon$ and d for the Lorenz system (a–d) and for the ARFIMA process (e–h), respectively: each panel pair corresponds to a different value of the dimension m. Blue dots and lines correspond to the reference PE sample mean computed out of $L = 100$ independent realizations of the synthetic time series, as described in Section 4.1. Yellow squares and lines correspond to the PE sample mean computed out of surrogate time series (Section 4.2). Error bars are too small to be displayed.

In the case of the ARFIMA process, the two sample means keep coinciding also at progressively longer autocorrelation times ($d \to 1/2$). Conversely, in the case of the Lorenz system and at low noise levels, the values of PE evaluated on surrogate time series (yellow squares) start to diverge significantly from the values (blue dots) computed out of the set $\{X_k\}$, consisting of the original synthetic time series X and its equivalent replicas. Indeed, surrogate data yield higher PE values than original time series, thus underpinning the deterministic nature of the system’s dynamics. The fact that no divergence is observed in the case of the ARFIMA process hints at an intrinsically noisy content of the time series also at long autocorrelation times.

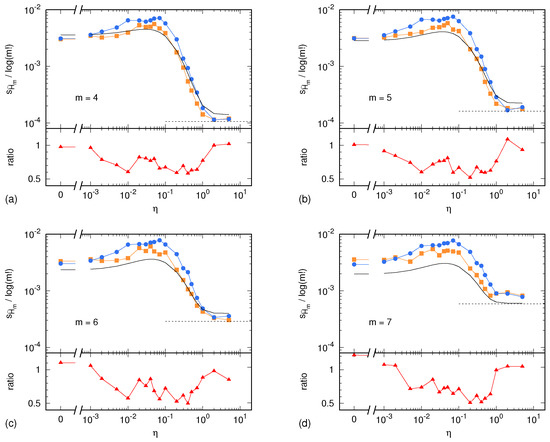

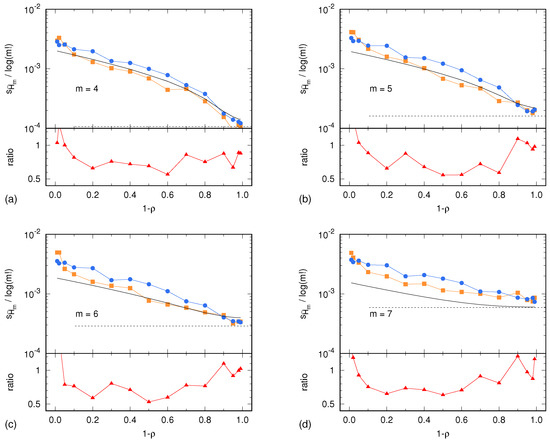

In the case of the Lorenz system, the sample standard deviations $s$ and $s_{\mathrm{sur}}$ are shown in Figure 2 as a function of the parameter $\epsilon$. Similarly, in the case of the ARFIMA process, $s$ and $s_{\mathrm{sur}}$ are shown in Figure 3 as a function of the parameter d. In both cases, results are presented for four values of m. In addition, each figure shows the uncertainty estimate given by Equation (2) and corresponding to the memoryless (multinomial) case, as well as the Harris limit [42]. This limit is given by $\sqrt{(m! - 1)/2}\,/N$ and corresponds to the special case in which the multinomial distribution is uniform: the $O(N^{-1})$ term of the variance then vanishes, and the leading term becomes $(m! - 1)/(2N^2)$. This situation is reached in the case of sufficiently high noise, so that all symbolic sequences become equiprobable. Finally, Figure 2 and Figure 3 also show the ratio between the surrogate estimates of the PE uncertainty and the related reference values.
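The Harris limit can be checked numerically. For a uniform multinomial distribution over $M = m!$ outcomes, the plug-in entropy behaves to leading order as $\ln M - \chi^2_{M-1}/(2N)$, whose standard deviation is $\sqrt{(M - 1)/2}\,/N$. The NumPy sketch below (hypothetical names) compares this value with a Monte Carlo estimate; empty cells, if any, are handled with the convention $0 \ln 0 = 0$.

```python
import math

import numpy as np


def harris_limit(m, n):
    """Harris limit sqrt((m! - 1) / 2) / N for a uniform distribution over m! patterns."""
    return math.sqrt((math.factorial(m) - 1) / 2.0) / n


def simulated_uniform_std(m, n, reps=4000, seed=0):
    """Monte Carlo standard deviation of the plug-in entropy of uniform samples."""
    rng = np.random.default_rng(seed)
    k = math.factorial(m)
    counts = rng.multinomial(n, [1.0 / k] * k, size=reps)
    p = counts / n
    # Empty cells contribute zero to the entropy (0 * ln 0 := 0).
    plogp = np.where(p > 0, p * np.log(np.where(p > 0, p, 1.0)), 0.0)
    return float(np.std(-plogp.sum(axis=1), ddof=1))


print(harris_limit(3, 1000), simulated_uniform_std(3, 1000))
```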

Figure 2.

Sample standard deviation of PE, normalized by $\ln m!$, as a function of the tuning parameter $\epsilon$ for the Lorenz system; panels (a)–(d) correspond to four different values of the dimension m. In the upper part of each panel, blue dots and lines correspond to the reference PE sample standard deviation computed out of $L = 100$ independent realizations of the synthetic time series (Section 4.1). Yellow squares and lines correspond to the PE sample standard deviation computed out of surrogate time series (Section 4.2). The black, solid line corresponds to the memoryless uncertainty estimator given by Equation (2). Finally, the black, dashed line displays the Harris limit (see main text). The lower part of each panel shows the ratio between the surrogate estimate of the PE uncertainty and its reference value.

Figure 3.

Sample standard deviation of PE, normalized by $\ln m!$, as a function of the tuning parameter d for the ARFIMA process; panels (a)–(d) correspond to four different values of the dimension m. The description of the data representation (dots, lines and colors) is the same as in Figure 2.

Unless the autocorrelation time becomes too large, the ratio $s_{\mathrm{sur}}/s$ lies within the interval $[0.5, 1]$. A ratio of order 1 is a remarkable result. The slight underestimation of the real uncertainty of the PE assessment is likely due to the IAAFT surrogate generation producing high-fidelity replicas of the original signal without, however, reproducing its exact variability, owing to the intrinsic lack of knowledge of the signal source. Nevertheless, the prediction of the real PE assessment uncertainty is surprisingly good: a factor of 0.5 is small enough to allow for reasonable estimates of the level of significance of a PE assessment.

4.5. Assignment of the Surrogate-Based PE Uncertainty

Considering the results of the analysis described above, the procedure to evaluate the uncertainty of a single PE assessment via IAAFT surrogate generation can be summarized as follows.

Given an input time series X:

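- Generate a set $\{Y_k\}_{k=1}^{L}$ of L surrogate time series via IAAFT;

- Upon evaluating the PE on each $Y_k$, compute the standard deviation $s_{\mathrm{sur}}$ via Equation (4);

- Finally, assign the uncertainty of the PE assessment on X by setting $\delta\hat{H}(X) = k\, s_{\mathrm{sur}}$, where $k \ge 1$ is a magnifying factor.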

We suggest $L = 100$ and $k = 2$. The former value is justified by $s_{\mathrm{sur}}$ being a sample standard deviation, and thus being affected by a relative uncertainty that approximately scales as $1/\sqrt{2L}$, i.e., about 7% for $L = 100$. This value of L is then a reasonable trade-off between the need for a reliable estimate of $s_{\mathrm{sur}}$ and affordable computational costs. The latter value corresponds to the reciprocal of the smallest ratio $s_{\mathrm{sur}}/s \simeq 0.5$, as described at the end of Section 4.4. The setting of the parameter k is further discussed in Section 6.
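Putting the pieces together, the three steps above can be sketched as a single self-contained NumPy function. This is an illustrative sketch, not the authors' implementation [58]; all names are hypothetical, the IAAFT loop stops when the rank ordering no longer changes (an assumption in place of a Euclidean tolerance), and the defaults `n_surrogates=100` and `k=2.0` are illustrative choices to be set as discussed in the text.

```python
from collections import Counter

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def permutation_entropy(x, m):
    """Plug-in PE in nats plus the Miller-Madow term, cf. Equation (1)."""
    windows = sliding_window_view(np.asarray(x, dtype=float), m)
    ranks = np.argsort(np.argsort(windows, axis=1, kind="stable"), axis=1)
    counts = np.array(list(Counter(map(tuple, ranks)).values()), dtype=float)
    n = counts.sum()  # number of encoded windows
    p = counts / n
    return float(-np.sum(p * np.log(p)) + (len(counts) - 1) / (2.0 * n))


def detrend_endpoints(x):
    """Remove the straight line joining the end points, cf. Equation (3)."""
    x = np.asarray(x, dtype=float)
    return x - np.arange(len(x)) * (x[-1] - x[0]) / (len(x) - 1)


def iaaft(x, rng, max_iter=1000):
    """IAAFT surrogate; stops when the rank ordering no longer changes."""
    sorted_x, target_amp = np.sort(x), np.abs(np.fft.rfft(x))
    y = rng.permutation(x)
    for _ in range(max_iter):
        phases = np.angle(np.fft.rfft(y))
        z = np.fft.irfft(target_amp * np.exp(1j * phases), n=len(x))
        y_next = sorted_x[np.argsort(np.argsort(z))]
        if np.array_equal(y_next, y):
            break
        y = y_next
    return y


def surrogate_pe_uncertainty(x, m, n_surrogates=100, k=2.0, seed=None):
    """Return (PE of X, k * s_sur) following the three steps listed above."""
    rng = np.random.default_rng(seed)
    x = detrend_endpoints(x)
    h = permutation_entropy(x, m)
    h_sur = [permutation_entropy(detrend_endpoints(iaaft(x, rng)), m)
             for _ in range(n_surrogates)]
    s_sur = float(np.std(h_sur, ddof=1))  # Equation (4)
    return h, k * s_sur
```

A typical call is `h, dh = surrogate_pe_uncertainty(x, m=4, seed=0)`, after which `h / np.log(24)` gives the normalized PE for $m = 4$.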

5. Estimation of PE Variability on Experimental Time Series

In the present section, we discuss the implementation of the surrogate-based estimation of PE variability in the case of a PE analysis of experimental electrophysiological data.

The data correspond to resting-state EEG time series recorded on 30 young healthy subjects and made available on the LEMON database [59,60]. Data recording was conducted in accordance with the Declaration of Helsinki. The related study protocol was approved by the ethics committee at the medical faculty of the University of Leipzig, Germany (reference number 154/13-ff). Details of the set of subjects, the recording procedure, the preprocessing steps, and source reconstruction, are extensively described in two recent papers [61,62].

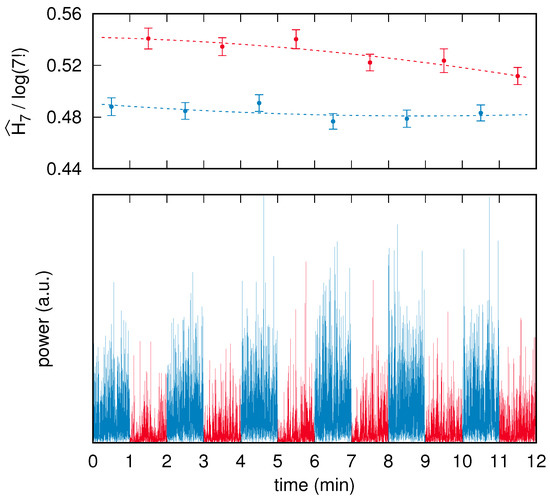

The reconstructed time series considered here correspond to the two “V1” brain areas as defined in the atlas by Glasser et al. [63], which belong to the left and right visual cortex. Each EEG acquisition session consisted of 16 successive and interleaved segments corresponding to eyes-closed (eight segments) and eyes-opened (eight segments) conditions; recordings commenced with closed eyes. To maximize stationarity, and therefore to avoid both transient effects at the beginning and fatigue effects at the end, we neglect here the first and the last pairs of conditions. Because the recorded segments had a duration between 60 s and 90 s, the corresponding raw time series were trimmed down to 60 s each by symmetrically removing leading and trailing data points. The preprocessing resulted, for each subject, in two reconstructed time series of brain activity, each composed of two interleaved sets of six 60 s long segments. An example of a preprocessed time series corresponding to a single brain area of a subject is shown in Figure 4.

Figure 4.

(below) Time series corresponding to the left visual cortex of a single subject. The blue and red segments correspond to the eyes-closed and eyes-opened conditions, respectively. (above) PE analysis of the segments that make up the time series: each blue (red) dot is positioned above the center of the respective eyes-closed (eyes-opened) segment plotted below, while its ordinate corresponds to the normalized PE value $\hat{H}/\ln m!$ computed on that segment. Each error bar corresponds to the PE uncertainty assessed via the surrogate-based procedure summarized in Section 4.5. Finally, the blue (red) dashed line corresponds to the best-fit quadratic law that describes the time drift of the PE values in the eyes-closed (eyes-opened) condition.

In addition to the time series, Figure 4 shows the normalized PE values $\hat{H}/\ln m!$ computed on each segment and the related surrogate-based uncertainty estimate of each PE value.

It is graphically apparent that the error bars on the PE assessments are approximately equal to each other and also correspond to the fluctuations of the PE values. This observation can be quantified by means of an analysis based on a least-squares fit, as follows.

Our null hypothesis consists of two assumptions. The first corresponds to the very claim of this paper, namely that the surrogate-based uncertainties, i.e., the error bars, correctly estimate the real uncertainty of the related PE values. Second, we assume that the PE values drift in time, e.g., due to fatigue or habituation, according to a quadratic law $H(t) = a + b\,t + c\,t^2$.

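Given a subject, a brain area and a condition (there are $30 \times 2 \times 2 = 120$ different combinations), a least-squares fit of the quadratic law on the set of six points is carried out (see dashed lines in Figure 4). The fit consists of finding the parameters a, b, c that minimize the sum of the normalized residuals

$$\chi^2 = \sum_{i=1}^{6} \left[\frac{\hat{H}_i - \left(a + b\,t_i + c\,t_i^2\right)}{\delta\hat{H}_i}\right]^2,$$

where $\hat{H}_i$ and $\delta\hat{H}_i$ are the PE value of the i-th segment and its surrogate-based uncertainty, and $t_i$, with $i = 1, \ldots, 6$, are the times corresponding to the six segments.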

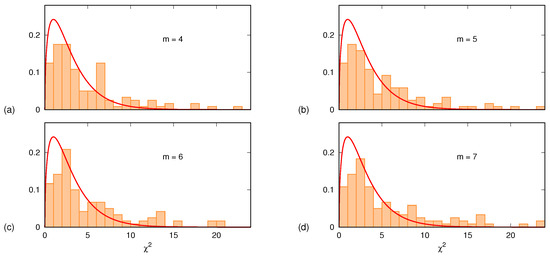

If the null hypothesis holds, the sum $\chi^2$ is to be distributed as a chi-square variable with $6 - 3 = 3$ degrees of freedom. Figure 5 shows, for each one of the four m values, the histogram of the 120 values of $\chi^2$. In all four cases, the histograms are in very good agreement with the plots of the probability density function given by

$$f(x) = \frac{\sqrt{x}\; e^{-x/2}}{\sqrt{2\pi}}, \qquad x \ge 0. \tag{5}$$

The few outliers (less than 15%, identified by large values of $\chi^2$) are samples in which the quadratic description does not hold. It is worth mentioning that a similar analysis was also carried out assuming a linear time dependence for the PE drift. In this case as well, the agreement was satisfactory except for about 20% of the samples, for which, again, the assumed drift law was not appropriate.
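The consistency test above can be sketched with NumPy (function names are hypothetical). `numpy.polyfit` with weights $w_i = 1/\delta\hat{H}_i$ minimizes exactly the sum of squared normalized residuals $\chi^2$, and returns the coefficients from the highest degree down, i.e., $(c, b, a)$. Under the null hypothesis, $\chi^2$ should follow the density with 3 degrees of freedom given by Equation (5).

```python
import math

import numpy as np


def quadratic_drift_chi2(t, h, dh):
    """Weighted least-squares fit of h(t) = a + b t + c t^2.

    Returns the coefficients ordered as (c, b, a), as numpy.polyfit does, and
    the sum of squared normalized residuals chi^2.
    """
    t, h, dh = (np.asarray(v, dtype=float) for v in (t, h, dh))
    coeffs = np.polyfit(t, h, deg=2, w=1.0 / dh)  # polyfit expects w = 1 / sigma
    residuals = (h - np.polyval(coeffs, t)) / dh
    return coeffs, float(np.sum(residuals**2))


def chi2_pdf_3dof(x):
    """Probability density of a chi-square variable with 3 degrees of freedom."""
    return math.sqrt(x) * math.exp(-x / 2.0) / math.sqrt(2.0 * math.pi)
```

Repeating the fit on synthetic data with Gaussian errors of known size should give $\chi^2$ values averaging about 3, the mean of this distribution.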

Figure 5.

Histograms, normalized to unit area, of the sum $\chi^2$, evaluated for 120 different combinations of subjects, brain areas and conditions; panels (a)–(d) correspond to the four values of m. Each panel also displays the theoretical probability density function of the $\chi^2$ random variable with 3 degrees of freedom, given by Equation (5).

We conclude this section by stating that in the example shown in Figure 4, all data points corresponding to the eyes-closed condition have a PE value that is significantly lower than any point of the eyes-opened condition. The closest pair is given by the eyes-closed segment in the time range between 4 min and 5 min (third blue point from left), and the last, eyes-opened segment in the time range between 11 min and 12 min (sixth red point from left). The PE values of the two points are and , respectively. Their uncertainties are and , respectively. By using a standard two-sided z statistical test and assuming as a null hypothesis the fact that the difference is due to chance, the resulting p-value turns out to be about .
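The two-sided z test mentioned above can be written in a few lines of plain Python (the function name is hypothetical). The sketch assumes that the two PE estimates are independent and Gaussian with standard uncertainties $\delta\hat{H}_1$ and $\delta\hat{H}_2$, so that $z = |\hat{H}_1 - \hat{H}_2|/\sqrt{\delta\hat{H}_1^2 + \delta\hat{H}_2^2}$ and the two-sided p-value is $\mathrm{erfc}(z/\sqrt{2})$.

```python
import math


def two_sided_z_test(h1, dh1, h2, dh2):
    """z statistic and two-sided p-value for the difference h1 - h2.

    Assumes independent, Gaussian-distributed estimates with standard
    uncertainties dh1 and dh2.
    """
    z = abs(h1 - h2) / math.sqrt(dh1**2 + dh2**2)
    return z, math.erfc(z / math.sqrt(2.0))
```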

6. Discussion

In the method described in Section 4.5, the only parameter that requires an “educated guess” is the factor k that magnifies the sample standard deviation $s_{\mathrm{sur}}$ computed on a set of surrogate time series so as to provide the uncertainty value of the PE assessment. Our analysis shows that k typically ranges between 1 and 2, which is in any case a narrow range. Remarkably, at least in the case of deterministic chaos provided by the Lorenz attractor contaminated by observational noise, k approaches unity in the noiseless limit or when the noise is dominant, while approaching two whenever noise and signal are comparable.

An important point to highlight is that the approach described in this paper can be promptly generalized to other information measures, for example approximate entropy [64,65] and sample entropy [66], and in particular to those that, like PE, rely on symbolic sequence encoding, a major example being Shannon block entropy [2].
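To illustrate that the surrogate-based recipe is not tied to PE, the measure evaluated on each surrogate can be swapped for any function of the time series. Below is a minimal Python sketch of a plug-in Shannon block entropy, applied after a hypothetical binary coarse graining at the upper median. Both helper names are illustrative, and the choice of partition is an assumption, not a prescription of this work.

```python
import math
from collections import Counter

def binary_encode(x):
    """Coarse-grain a real-valued series into 0/1 symbols by thresholding
    at its upper median (one arbitrary, illustrative partition)."""
    med = sorted(x)[len(x) // 2]
    return [1 if v >= med else 0 for v in x]

def block_entropy(symbols, L):
    """Plug-in Shannon block entropy H_L (in nats) from the overlapping
    words of length L of a symbolic sequence."""
    words = [tuple(symbols[i:i + L]) for i in range(len(symbols) - L + 1)]
    n = len(words)
    counts = Counter(words)
    return -sum(c / n * math.log(c / n) for c in counts.values())

def block_entropy_of_series(x, L=4):
    """Hypothetical measure that could replace PE in a surrogate-based
    uncertainty routine: encode first, then compute H_L."""
    return block_entropy(binary_encode(x), L)
```

Unlike PE, block entropy depends on the chosen partition of the state space, so any uncertainty obtained this way refers to that particular encoding.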

A topic for further investigation is where the surrogate generation should take place within the pipeline that leads from a time series to the assessment of information and its uncertainty. The approach proposed in this work applies surrogate generation to the very input of the pipeline, namely the original time series. An alternative approach could instead operate on the symbolic sequence encoded from the original time series, under the constraint that the chosen randomization technique preserve the statistical and dynamical properties of the original encoding.
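Purely as a hypothetical illustration, and not as a technique examined in this work, one could randomize at the symbolic level by drawing a new sequence from the first-order Markov chain estimated from the observed transitions between consecutive symbols. For overlapping ordinal patterns this reproduces only observed, and hence admissible, transitions. Whether it preserves enough of the properties of the original encoding is precisely the open question raised above. The function name is ours.

```python
import random
from collections import Counter, defaultdict

def markov_symbol_surrogate(symbols, seed=None):
    """Surrogate symbolic sequence drawn from the first-order Markov chain
    estimated from the observed transitions (hypothetical construction)."""
    rng = random.Random(seed)
    transitions = defaultdict(Counter)
    for a, b in zip(symbols[:-1], symbols[1:]):
        transitions[a][b] += 1
    out = [symbols[0]]
    while len(out) < len(symbols):
        successors = transitions.get(out[-1])
        if not successors:
            # Dead end: this symbol only occurs at the end of the input.
            # Restarting from a random symbol may create an unobserved transition.
            out.append(rng.choice(symbols))
        else:
            # Draw the next symbol with the empirical transition probabilities
            out.append(rng.choices(list(successors), weights=list(successors.values()))[0])
    return out
```

The surrogate keeps the first symbol and the sequence length, and its transition frequencies fluctuate around those of the original sequence.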

It has to be stressed that the choice of a suitable surrogate generation algorithm is a core ingredient of the proposed method. For the cases dealt with in the present work, IAAFT proved to be a reliable technique: as discussed in Section 3, the simultaneous quasi-conservation of the amplitude distribution and of the autocorrelation is crucial to preserve the local structure of the trajectories and thus the statistical properties of the encoded symbolic sequences. Nevertheless, identifying the optimal surrogate generation algorithm for a specific context is expected to be an interesting development of the method.
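For reference, the IAAFT scheme of Schreiber and Schmitz [39] alternates two steps. The first imposes the Fourier amplitudes of the original series while keeping the current phases, and the second imposes the original amplitude distribution by rank ordering. A compact NumPy sketch follows. It is an illustration, not one of the implementations listed among the references, and the iteration cap and stopping rule (ranks no longer changing) are our assumptions.

```python
import numpy as np

def iaaft(x, n_iter=1000, seed=None):
    """IAAFT surrogate: iteratively impose the Fourier amplitudes and the
    amplitude distribution of x (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    x_sorted = np.sort(x)
    target_amp = np.abs(np.fft.rfft(x))
    s = rng.permutation(x)  # start from a random shuffle of x
    ranks_prev = None
    for _ in range(n_iter):
        # Step 1: impose the original Fourier amplitudes, keep current phases
        phases = np.angle(np.fft.rfft(s))
        s = np.fft.irfft(target_amp * np.exp(1j * phases), n=len(x))
        # Step 2: impose the original amplitude distribution by rank ordering
        ranks = np.argsort(np.argsort(s))
        s = x_sorted[ranks]
        if ranks_prev is not None and np.array_equal(ranks, ranks_prev):
            break  # converged: the ranking no longer changes
        ranks_prev = ranks
    return s
```

Because the last step is the rank ordering, the amplitude distribution is reproduced exactly, while the power spectrum, and hence the autocorrelation, is matched only approximately. This is the quasi-conservation mentioned above.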

An example is the inference of information variability in the case of point processes, for which phase randomization and IAAFT are unsuitable [38]. One possibility is to use approaches based on dithering of the event occurrence times [47,67] or on the joint probability distribution of consecutive inter-event intervals [52,68].
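The simplest form of dithering can be sketched in a few lines: each event time is displaced by an independent uniform jitter in [−Δ, Δ]. This deliberately basic variant is written for illustration only. The dithering in operational time of Ref. [67] is more refined, and the function name and the jitter width `delta` are hypothetical choices left to the user.

```python
import random

def dither_events(times, delta, seed=None):
    """Surrogate event sequence: displace each event time by an independent
    uniform jitter in [-delta, delta], then restore chronological order."""
    rng = random.Random(seed)
    return sorted(t + rng.uniform(-delta, delta) for t in times)
```

Dithering preserves the number of events and the rate profile on time scales longer than `delta`, while it destroys temporal structure on shorter scales. The choice of `delta` therefore sets which features the surrogates are meant to retain.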

In conclusion, the method proposed in this paper provides a reliable estimate of the variability affecting a PE evaluation obtained from a single time series. The method relies on the generation of surrogate time series and can be promptly implemented by means of standard numerical tools. It makes it possible to address issues concerning stationarity, as well as statistically significant changes in the dynamics of a source, via PE. The analysis conducted on the noisy Lorenz attractor and on an ARFIMA process showed that the method performs well both for time series contaminated by observational noise [8] and for noise with a long autocorrelation time. Possible developments include the application of the method to other information measures.

Author Contributions

The authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study (see Ref. [59]).

Data Availability Statement

Raw EEG recordings used in the present work are available at: http://fcon_1000.projects.nitrc.org/indi/retro/MPI_LEMON.html, accessed on 23 May 2022. Preprocessed data and results are available upon request to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Verdú, S. Empirical Estimation of Information Measures: A Literature Guide. Entropy 2019, 21, 720.

- Ebeling, W.; Nicolis, G. Word frequency and entropy of symbolic sequences: A dynamical perspective. Chaos Solitons Fractals 1992, 2, 635.

- Basios, V.; Forti, G.-L.; Nicolis, G. Symbolic dynamics generated by a combination of graphs. Int. J. Bifurc. Chaos 2008, 18, 2265.

- Basios, V.; Mac Kernan, D. Symbolic dynamics, coarse graining and the monitoring of complex systems. Int. J. Bifurc. Chaos 2011, 21, 3465.

- Freund, J. Asymptotic scaling behavior of block entropies for an intermittent process. Phys. Rev. E 1996, 53, 5793.

- Basios, V.; Oikonomou, T.; De Gernier, R. Symbolic dynamics of music from Europe and Japan. Chaos 2021, 31, 053122.

- Bandt, C.; Pompe, B. Permutation Entropy: A Natural Complexity Measure for Time Series. Phys. Rev. Lett. 2002, 88, 174102.

- Ricci, L.; Politi, A. Permutation Entropy of Weakly Noise-Affected Signals. Entropy 2022, 24, 54.

- Parlitz, U.; Berg, S.; Luther, S.; Schirdewan, A.; Kurths, J.; Wessel, N. Classifying cardiac biosignals using ordinal pattern statistics and symbolic dynamics. Comput. Biol. Med. 2012, 42, 319–327.

- Bian, C.; Qin, C.; Ma, Q.D.Y.; Shen, Q. Modified permutation-entropy analysis of heartbeat dynamics. Phys. Rev. E 2012, 85, 021906.

- Zanin, M.; Zunino, L.; Rosso, O.A.; Papo, D. Permutation Entropy and Its Main Biomedical and Econophysics Applications: A Review. Entropy 2012, 14, 1553.

- Shumbayawonda, E.; Fernández, A.; Hughes, M.P.; Abásolo, D. Permutation Entropy for the Characterisation of Brain Activity Recorded with Magnetoencephalograms in Healthy Ageing. Entropy 2017, 19, 141.

- Redelico, F.O.; Traversaro, F.; García, M.D.C.; Silva, W.; Rosso, O.A.; Risk, M. Classification of Normal and Pre-Ictal EEG Signals Using Permutation Entropies and a Generalized Linear Model as a Classifier. Entropy 2017, 19, 72.

- Tylová, L.; Kukal, J.; Hubata-Vacek, V.; Vyšata, O. Unbiased estimation of permutation entropy in EEG analysis for Alzheimer’s disease classification. Biomed. Signal Process. Control 2018, 39, 424–430.

- Şeker, M.; Özbek, Y.; Yener, G.; Özerdem, M.S. Complexity of EEG Dynamics for Early Diagnosis of Alzheimer’s Disease Using Permutation Entropy Neuromarker. Comput. Methods Programs Biomed. 2021, 206, 106116.

- Saco, P.M.; Carpi, L.C.; Figliola, A.; Serrano, E.; Rosso, O.A. Entropy analysis of the dynamics of El Niño/Southern Oscillation during the Holocene. Phys. A 2010, 389, 5022.

- Yang, L.; Pan, W.; Yan, L.S.; Luo, B.; Xiang, S.Y.; Jiang, N. Complexity and Synchronization in Chaotic Injection Locking Semiconductor Lasers. Mod. Phys. Lett. B 2011, 25, 2061–2067.

- Toomey, J.P.; Kane, D.M.; Ackemann, T. Complexity in pulsed nonlinear laser systems interrogated by permutation entropy. Opt. Express 2014, 22, 17840–17853.

- Liu, H.; Zhang, X.; Zhang, X. Multiscale complexity analysis on airport air traffic flow volume time series. Phys. A Stat. Mech. Appl. 2020, 548, 124485.

- Politi, A. Quantifying the Dynamical Complexity of Chaotic Time Series. Phys. Rev. Lett. 2017, 118, 144101.

- Cao, Y.; Tung, W.-w.; Gao, J.B.; Protopopescu, V.A.; Hively, L.M. Detecting dynamical changes in time series using the permutation entropy. Phys. Rev. E 2004, 70, 046217.

- Sinn, M.; Keller, K.; Chen, B. Segmentation and classification of time series using ordinal pattern distributions. Eur. Phys. J. Spec. Top. 2013, 222, 587.

- Gao, J.; Hou, Y.; Fan, F.; Liu, F. Complexity Changes in the US and China’s Stock Markets: Differences, Causes, and Wider Social Implications. Entropy 2020, 22, 75.

- Li, J.; Yan, J.; Liu, X.; Ouyang, G. Using Permutation Entropy to Measure the Changes in EEG Signals during Absence Seizures. Entropy 2014, 16, 3049–3061.

- Tosun, P.D.; Dijk, D.-J.; Winsky-Sommerer, R.; Abasolo, D. Effects of Ageing and Sex on Complexity in the Human Sleep EEG: A Comparison of Three Symbolic Dynamic Analysis Methods. Complexity 2019, 2019, 9254309.

- Rubega, M.; Scarpa, F.; Teodori, D.; Sejling, A.-S.; Frandsen, C.S.; Sparacino, G. Detection of Hypoglycemia Using Measures of EEG Complexity in Type 1 Diabetes Patients. Entropy 2020, 22, 81.

- Hou, F.; Zhang, L.; Qin, B.; Gaggioni, G.; Liu, X.; Vandewalle, G. Changes in EEG permutation entropy in the evening and in the transition from wake to sleep. Sleep 2020, 44, zsaa226.

- Consolini, G.; De Michelis, P. Permutation entropy analysis of complex magnetospheric dynamics. J. Atmos.-Sol.-Terr. Phys. 2014, 115–116, 25–31.

- Glynn, C.C.; Konstantinou, K.I. Reduction of randomness in seismic noise as a short-term precursor to a volcanic eruption. Sci. Rep. 2016, 6, 37733.

- Quintero-Quiroz, C.; Montesano, L.; Pons, A.J.; Torrent, M.C.; García-Ojalvo, J.; Masoller, C. Differentiating resting brain states using ordinal symbolic analysis. Chaos 2018, 28, 106307.

- Vecchio, F.; Miraglia, F.; Pappalettera, C.; Orticoni, A.; Alù, F.; Judica, E.; Cotelli, M.; Rossini, P.M. Entropy as Measure of Brain Networks’ Complexity in Eyes Open and Closed Conditions. Symmetry 2021, 13, 2178.

- Little, D.J.; Kane, D.M. Variance of permutation entropy and the influence of ordinal pattern selection. Phys. Rev. E 2017, 95, 052126.

- Watt, S.J.; Politi, A. Permutation entropy revisited. Chaos Solitons Fractals 2019, 120, 95.

- Ricci, L. Asymptotic distribution of sample Shannon entropy in the case of an underlying finite, regular Markov chain. Phys. Rev. E 2021, 103, 022215.

- Ricci, L.; Perinelli, A.; Castelluzzo, M. Estimating the variance of Shannon entropy. Phys. Rev. E 2021, 104, 024220.

- Traversaro, F.; Redelico, F.O. Confidence intervals and hypothesis testing for the permutation entropy with an application to epilepsy. Commun. Nonlinear Sci. Numer. Simul. 2018, 57, 388.

- Theiler, J.; Eubank, S.; Longtin, A.; Galdrikian, B.; Farmer, J.D. Testing for nonlinearity in time series: The method of surrogate data. Phys. D 1992, 58, 77–94.

- Schreiber, T.; Schmitz, A. Surrogate time series. Phys. D 2000, 142, 346–382.

- Schreiber, T.; Schmitz, A. Improved Surrogate Data for Nonlinearity Tests. Phys. Rev. Lett. 1996, 77, 635–638.

- Basharin, G.P. On a statistical estimate for the entropy of a sequence of independent random variables. Theor. Probab. Appl. 1959, 4, 333–336.

- Miller, G. Note on the bias of information estimates. In Information Theory in Psychology II-B; Free Press: Glencoe, IL, USA, 1955; pp. 95–100.

- Harris, B. The statistical estimation of entropy in the non-parametric case. Top. Inf. Theory 1975, 16, 323–355.

- Vinck, M.; Battaglia, F.P.; Balakirsky, V.B.; Vinck, A.J.H.; Pennartz, C.M.A. Estimation of the entropy based on its polynomial representation. Phys. Rev. E 2012, 85, 051139.

- Pessa, A.A.B.; Ribeiro, H.V. ordpy: A Python package for data analysis with permutation entropy and ordinal network methods. Chaos 2021, 31, 063110.

- Function Implementing Permutation Entropy in R. Available online: https://rdrr.io/cran/statcomp/man/permutation_entropy.html (accessed on 23 May 2022).

- Function Implementing Permutation Entropy in Matlab. Available online: https://it.mathworks.com/matlabcentral/fileexchange/44161-permutation-entropy-fast-algorithm (accessed on 23 May 2022).

- Grün, S. Data-Driven Significance Estimation for Precise Spike Correlation. J. Neurophysiol. 2009, 101, 1126–1140.

- Lancaster, G.; Iatsenko, D.; Pidde, A.; Ticcinelli, V.; Stefanovska, A. Surrogate data for hypothesis testing of physical systems. Phys. Rep. 2018, 748, 1–60.

- Perinelli, A.; Chiari, D.E.; Ricci, L. Correlation in brain networks at different time scale resolution. Chaos 2018, 28, 063127.

- Mijatovic, G.; Pernice, R.; Perinelli, A.; Antonacci, Y.; Busacca, A.; Javorka, M.; Ricci, L.; Faes, L. Measuring the Rate of Information Exchange in Point-Process Data With Application to Cardiovascular Variability. Front. Netw. Physiol. 2022, 1, 765332.

- Schreiber, T. Constrained Randomization of Time Series Data. Phys. Rev. Lett. 1998, 80, 2105.

- Perinelli, A.; Castelluzzo, M.; Minati, L.; Ricci, L. SpiSeMe: A multi-language package for spike train surrogate generation. Chaos 2020, 30, 073120.

- Hegger, R.; Kantz, H.; Schreiber, T. Practical implementation of nonlinear time series methods: The TISEAN package. Chaos 1999, 9, 413–435.

- TISEAN Website. Available online: https://www.pks.mpg.de/%7Etisean/Tisean_3.0.1/ (accessed on 23 May 2022).

- Function Implementing the IAAFT Algorithm in R. Available online: https://rdrr.io/github/dpabon/ecofunr/src/R/iAAFT.R (accessed on 23 May 2022).

- Function Implementing a Modified Version of the IAAFT Algorithm in Python. Available online: https://github.com/mlcs/iaaft (accessed on 23 May 2022).

- Function Implementing the IAAFT Algorithm in Matlab. Available online: https://github.com/nmitrou/Simulations/blob/master/matlab_codes/IAAFT.m (accessed on 23 May 2022).

- IAAFT Functions. Available online: https://github.com/LeonardoRicci/iaaft or https://osf.io/emkpj (accessed on 23 May 2022).

- Babayan, A.; Erbey, M.; Kumral, D.; Reinelt, J.D.; Reiter, A.M.F.; Röbbig, J.; Schaare, H.L.; Uhlig, M.; Anwander, A.; Bazin, P.-L.; et al. A mind-brain-body dataset of MRI, EEG, cognition, emotion, and peripheral physiology in young and old adults. Sci. Data 2019, 6, 180308.

- LEMON Public Database. Available online: http://fcon_1000.projects.nitrc.org/indi/retro/MPI_LEMON.html (accessed on 23 May 2022).

- Perinelli, A.; Castelluzzo, M.; Tabarelli, D.; Mazza, V.; Ricci, L. Relationship between mutual information and cross-correlation time scale of observability as measures of connectivity strength. Chaos 2021, 31, 073106.

- Perinelli, A.; Assecondi, S.; Tagliabue, C.F.; Mazza, V. Power shift and connectivity changes in healthy aging during resting-state EEG. NeuroImage 2022, 256, 119247.

- Glasser, M.F.; Coalson, T.S.; Robinson, E.C.; Hacker, C.D.; Harwell, J.; Yacoub, E.; Ugurbil, K.; Andersson, J.; Beckmann, C.F.; Jenkinson, M.; et al. A multi-modal parcellation of human cerebral cortex. Nature 2016, 536, 171–178.

- Pincus, S.M. Approximate entropy as a measure of system complexity. Proc. Natl. Acad. Sci. USA 1991, 88, 2297.

- Delgado-Bonal, A.; Marshak, A. Approximate Entropy and Sample Entropy: A Comprehensive Tutorial. Entropy 2019, 21, 541.

- Richman, J.S.; Moorman, J.R. Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol.-Heart Circ. Physiol. 2000, 278, H2039.

- Louis, S.; Gerstein, G.L.; Grün, S.; Diesmann, M. Surrogate Spike Train Generation Through Dithering in Operational Time. Front. Comput. Neurosci. 2010, 4, 127.

- Ricci, L.; Castelluzzo, M.; Minati, L.; Perinelli, A. Generation of surrogate event sequences via joint distribution of successive inter-event intervals. Chaos 2019, 29, 121102.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).