A Mahalanobis Surrogate-Assisted Ant Lion Optimization and Its Application in 3D Coverage of Wireless Sensor Networks

Abstract

1. Introduction

2. Related Work

2.1. Ant Lion Optimization

2.2. Radial Basis Function Network

2.3. Mahalanobis Distance
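As a point of reference for this section, the following is a minimal sketch of the standard Mahalanobis distance, d(x) = sqrt((x − μ)ᵀ S⁻¹ (x − μ)), where μ and S are the sample mean and covariance. The helper names (`mean_vector`, `covariance_matrix`, `solve`, `mahalanobis`) are illustrative assumptions and are not taken from the paper; how MSAALO applies the distance is not reproduced here.

```python
import math


def mean_vector(samples):
    """Component-wise mean of a list of equal-length points."""
    n = len(samples)
    dim = len(samples[0])
    return [sum(s[j] for s in samples) / n for j in range(dim)]


def covariance_matrix(samples):
    """Unbiased sample covariance matrix (divides by n - 1)."""
    n = len(samples)
    mu = mean_vector(samples)
    dim = len(mu)
    cov = [[0.0] * dim for _ in range(dim)]
    for s in samples:
        d = [s[j] - mu[j] for j in range(dim)]
        for a in range(dim):
            for b in range(dim):
                cov[a][b] += d[a] * d[b] / (n - 1)
    return cov


def solve(matrix, rhs):
    """Solve matrix @ y = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    y = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][c] * y[c] for c in range(i + 1, n))
        y[i] = (aug[i][n] - tail) / aug[i][i]
    return y


def mahalanobis(x, samples):
    """Mahalanobis distance from x to the distribution estimated from samples."""
    mu = mean_vector(samples)
    cov = covariance_matrix(samples)
    diff = [xi - mi for xi, mi in zip(x, mu)]
    # Solving S y = diff avoids forming the inverse of S explicitly.
    y = solve(cov, diff)
    return math.sqrt(sum(d * yi for d, yi in zip(diff, y)))


if __name__ == "__main__":
    # Samples spread twice as widely along the first axis as along the second.
    stretched = [(0.0, 0.0), (4.0, 0.0), (0.0, 2.0), (4.0, 2.0)]
    print(mahalanobis((4.0, 1.0), stretched))
    print(mahalanobis((2.0, 2.0), stretched))
```

In the usage example the two query points lie at different Euclidean distances from the mean but at the same Mahalanobis distance, because the measure rescales each direction by the spread of the samples.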

2.4. The Coverage Model of Wireless Sensor Networks

3. Mahalanobis Surrogate-Assisted Ant Lion Optimization and Node Coverage in Wireless Sensor Network

3.1. Mahalanobis Surrogate-Assisted Ant Lion Optimization

Algorithm 1. Surrogate-assisted Ant Lion optimization algorithm.

Algorithm 2. Local surrogate model.

Algorithm 3. Global surrogate model.

3.2. The Node Coverage in WSN

4. Experimental Results

4.1. Parameter Settings

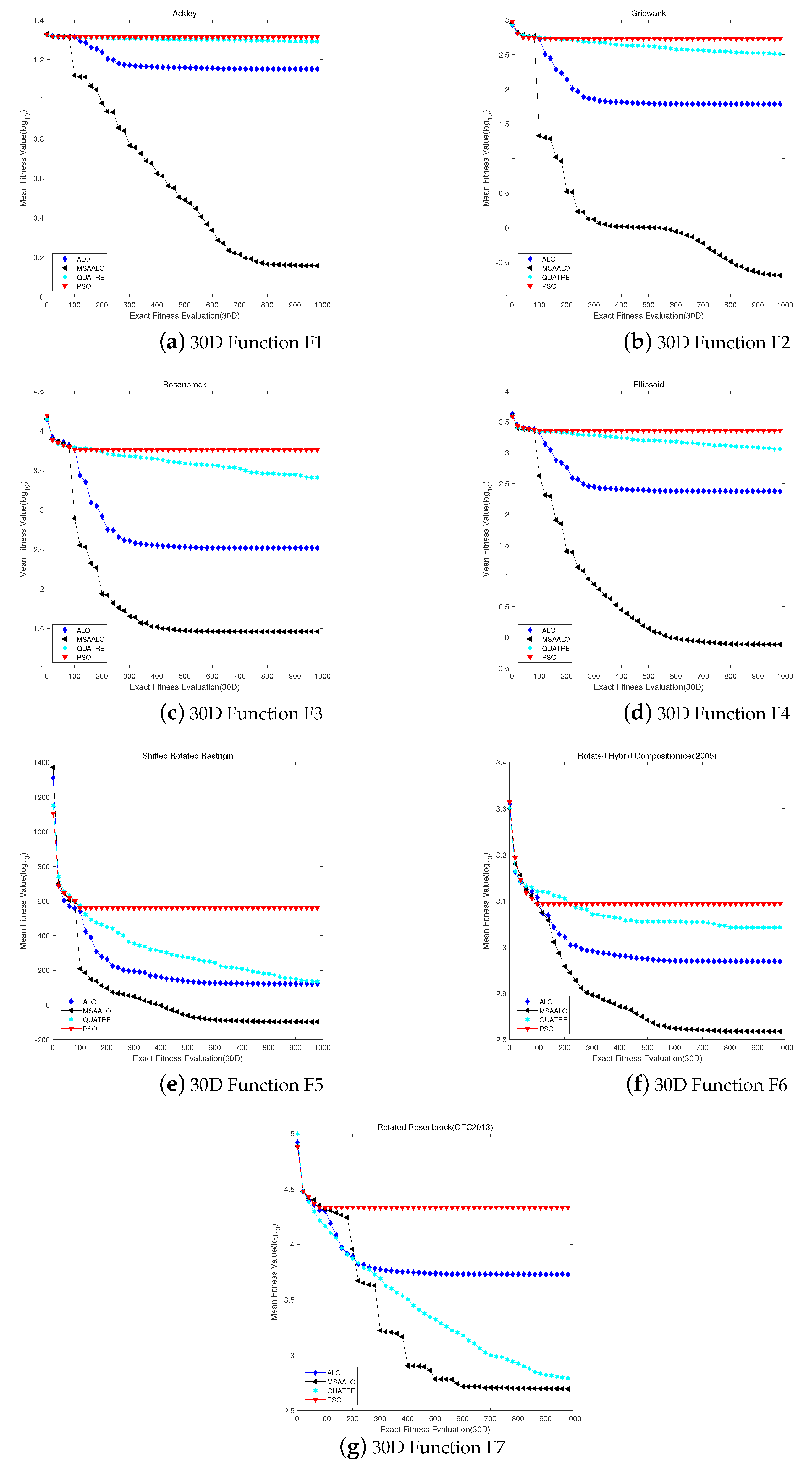

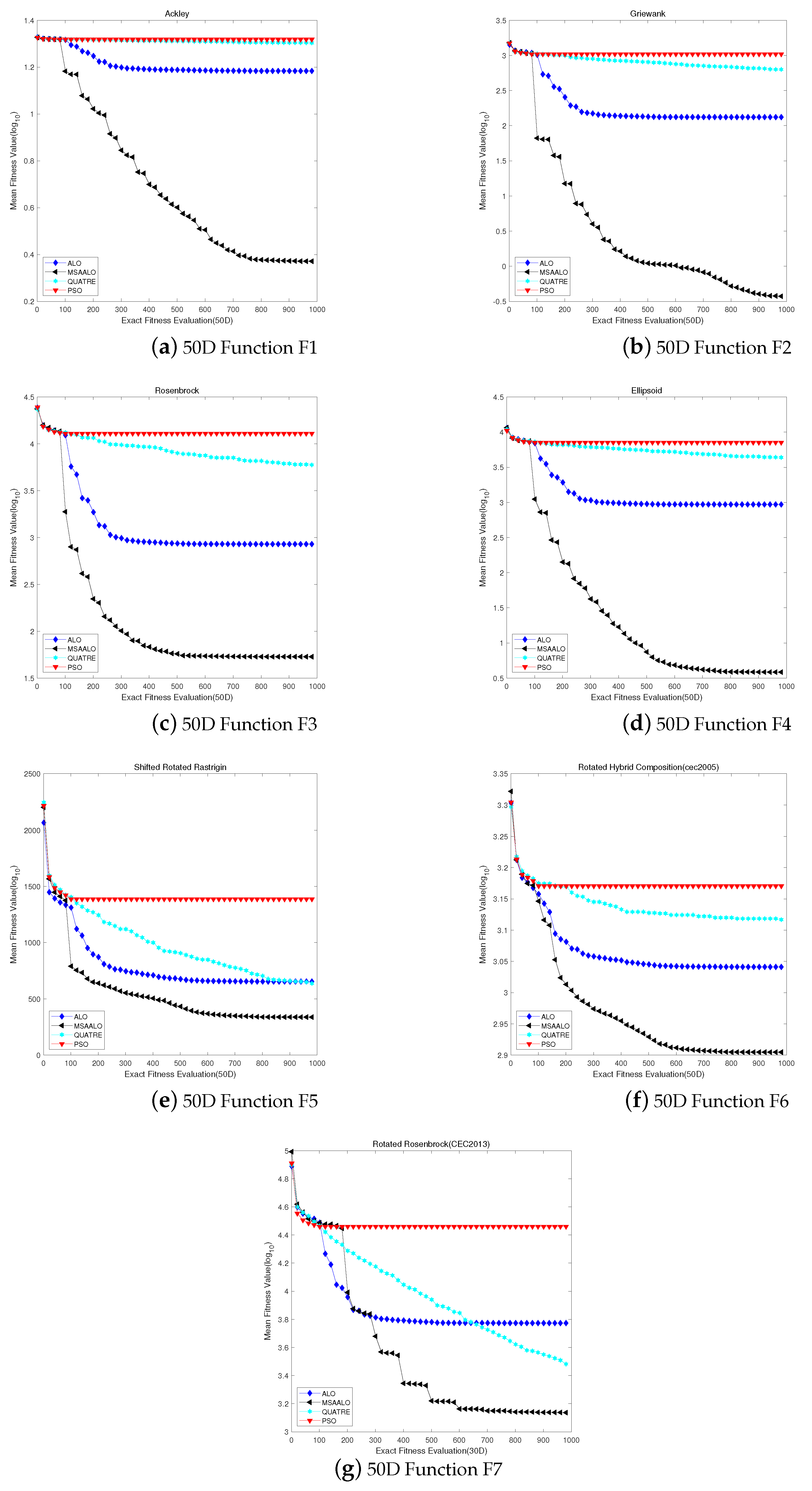

4.2. Experimental Analysis on 30- and 50-Dimensional Problems

4.3. Experimental Analysis on 100-Dimensional Problems

4.4. WSN Coverage Optimization Experiment Simulation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, H.; Wang, W.; Xiao, S.; Cui, Z.; Xu, M.; Zhou, X. Improving artificial bee colony algorithm using a new neighborhood selection mechanism. Inf. Sci. 2020, 527, 227–240.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Chu, S.-C.; Tsai, P.-W.; Pan, J.-S. Cat swarm optimization. In Pacific Rim International Conference on Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2006; pp. 854–858.

- Price, K.V. Differential Evolution. In Handbook of Optimization; Springer: Berlin/Heidelberg, Germany, 2013; pp. 187–214.

- Mirjalili, S. The ant lion optimizer. Adv. Eng. Softw. 2015, 83, 80–98.

- Sun, J.; Miao, Z.; Gong, D.; Zeng, X.-J.; Li, J.; Wang, G. Interval multiobjective optimization with memetic algorithms. IEEE Trans. Cybern. 2019, 50, 3444–3457.

- Poli, R.; Kennedy, J.; Blackwell, T. Particle swarm optimization. Swarm Intell. 2007, 1, 33–57.

- Pan, J.-S.; Sun, X.-X.; Chu, S.-C.; Abraham, A.; Yan, B. Digital watermarking with improved SMS applied for QR code. Eng. Appl. Artif. Intell. 2021, 97, 104049.

- Wang, G.-G.; Cai, X.; Cui, Z.; Min, G.; Chen, J. High performance computing for cyber physical social systems by using evolutionary multi-objective optimization algorithm. IEEE Trans. Emerg. Top. Comput. 2017, 8, 20–30.

- Wang, D.; Wu, Z.; Fei, Y.; Zhang, W. Structural design employing a sequential approximation optimization approach. Comput. Struct. 2014, 134, 75–87.

- Chu, S.-C.; Du, Z.-G.; Pan, J.-S. Discrete fish migration optimization for traveling salesman problem. Data Sci. Pattern Recognit. 2020, 4, 1–18.

- Wu, Z.; Wang, D.; Okolo, P.; Hu, F.; Zhang, W. Global sensitivity analysis using a Gaussian radial basis function metamodel. Reliab. Eng. Syst. Saf. 2016, 154, 171–179.

- Hu, P.; Pan, J.-S.; Chu, S.-C. Improved binary grey wolf optimizer and its application for feature selection. Knowl.-Based Syst. 2020, 195, 105746.

- Pan, J.-S.; Wang, X.; Chu, S.-C.; Nguyen, T. A multi-group grasshopper optimisation algorithm for application in capacitated vehicle routing problem. Data Sci. Pattern Recognit. 2020, 4, 41–56.

- Pan, J.-S.; Dao, T.-K.; Pan, T.-S.; Nguyen, T.-T.; Chu, S.-C.; Roddick, J.F. An improvement of flower pollination algorithm for node localization optimization in WSN. J. Inf. Hiding Multim. Signal Process. 2017, 8, 486–499.

- Chu, S.-C.; Du, Z.-G.; Pan, J.-S. Symbiotic organism search algorithm with multi-group quantum-behavior communication scheme applied in wireless sensor networks. Appl. Sci. 2020, 10, 930.

- Kleijnen, J.P. Kriging metamodeling in simulation: A review. Eur. J. Oper. Res. 2009, 192, 707–716.

- Buche, D.; Schraudolph, N.N.; Koumoutsakos, P. Accelerating evolutionary algorithms with Gaussian process fitness function models. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2005, 35, 183–194.

- Emmerich, M.T.; Giannakoglou, K.C.; Naujoks, B. Single- and multiobjective evolutionary optimization assisted by Gaussian random field metamodels. IEEE Trans. Evol. Comput. 2006, 10, 421–439.

- Lesh, F.H. Multi-dimensional least-squares polynomial curve fitting. Commun. ACM 1959, 2, 29–30.

- Edwards, J.R. Alternatives to difference scores: Polynomial regression and response surface methodology. In Measuring and Analyzing Behavior in Organizations: Advances in Measurement and Data Analysis; Jossey-Bass: San Francisco, CA, USA, 2002; pp. 350–400.

- Qiu, H.; Wang, D.; Wang, Y.; Yin, Y. MRI appointment scheduling with uncertain examination time. J. Comb. Optim. 2019, 37, 62–82.

- Eason, J.; Cremaschi, S. Adaptive sequential sampling for surrogate model generation with artificial neural networks. Comput. Chem. Eng. 2014, 68, 220–232.

- Jin, Y.; Olhofer, M.; Sendhoff, B. A framework for evolutionary optimization with approximate fitness functions. IEEE Trans. Evol. Comput. 2002, 6, 481–494.

- Pan, J.-S.; Liu, N.; Chu, S.-C.; Lai, T. An efficient surrogate-assisted hybrid optimization algorithm for expensive optimization problems. Inf. Sci. 2021, 561, 304–325.

- Billings, S.A.; Zheng, G.L. Radial basis function network configuration using genetic algorithms. Neural Netw. 1995, 8, 877–890.

- Sun, C.; Jin, Y.; Zeng, J.; Yu, Y. A two-layer surrogate-assisted particle swarm optimization algorithm. Soft Comput. 2015, 19, 1461–1475.

- Wang, H.; Rahnamayan, S.; Sun, H.; Omran, M.G. Gaussian bare-bones differential evolution. IEEE Trans. Cybern. 2013, 43, 634–647.

- Liu, B.; Zhang, Q.; Gielen, G.G. A Gaussian process surrogate model assisted evolutionary algorithm for medium scale expensive optimization problems. IEEE Trans. Evol. Comput. 2013, 18, 180–192.

- Regis, R.G. Particle swarm with radial basis function surrogates for expensive black-box optimization. J. Comput. Sci. 2014, 5, 12–23.

- Zhou, Z.; Ong, Y.S.; Nair, P.B.; Keane, A.J.; Lum, K.Y. Combining global and local surrogate models to accelerate evolutionary optimization. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2006, 37, 66–76.

- Praveen, C.; Duvigneau, R. Low cost PSO using metamodels and inexact pre-evaluation: Application to aerodynamic shape design. Comput. Methods Appl. Mech. Eng. 2009, 198, 1087–1096.

- Wang, D.-J.; Liu, F.; Wang, Y.-Z.; Jin, Y. A knowledge-based evolutionary proactive scheduling approach in the presence of machine breakdown and deterioration effect. Knowl.-Based Syst. 2015, 90, 70–80.

- Chugh, T.; Jin, Y.; Miettinen, K.; Hakanen, J.; Sindhya, K. A surrogate-assisted reference vector guided evolutionary algorithm for computationally expensive many-objective optimization. IEEE Trans. Evol. Comput. 2016, 22, 129–142.

- Ong, Y.S.; Nair, P.B.; Keane, A.J. Evolutionary optimization of computationally expensive problems via surrogate modeling. AIAA J. 2003, 41, 687–696.

- Sun, C.; Zeng, J.; Pan, J.; Xue, S.; Jin, Y. A new fitness estimation strategy for particle swarm optimization. Inf. Sci. 2013, 221, 355–370.

- Lim, D.; Jin, Y.; Ong, Y.-S.; Sendhoff, B. Generalizing surrogate-assisted evolutionary computation. IEEE Trans. Evol. Comput. 2009, 14, 329–355.

- Cheng, X.; Jiang, Y.; Li, D.; Zhu, Z.; Wu, N. Optimal Operation with Parallel Compact Bee Colony Algorithm for Cascade Hydropower Plants. J. Netw. Intell. 2021, 6, 440–452.

- Wang, H.; Wang, W.; Cui, Z.; Zhou, X.; Zhao, J.; Li, Y. A new dynamic firefly algorithm for demand estimation of water resources. Inf. Sci. 2018, 438, 95–106.

- Sun, Y.; Pan, J.-S.; Hu, P.; Chu, S.-C. Enhanced equilibrium optimizer algorithm applied in job shop scheduling problem. J. Intell. Manuf. 2022, 1–27.

- Wang, J.; Du, P.; Lu, H.; Yang, W.; Niu, T. An improved grey model optimized by multi-objective ant lion optimization algorithm for annual electricity consumption forecasting. Appl. Soft Comput. 2018, 72, 321–337.

- Ali, E.; Elazim, S.A.; Abdelaziz, A. Ant lion optimization algorithm for renewable distributed generations. Energy 2016, 116, 445–458.

- Assiri, A.S.; Hussien, A.G.; Amin, M. Ant lion optimization: Variants, hybrids, and applications. IEEE Access 2020, 8, 77746–77764.

- Mirjalili, S.; Jangir, P.; Saremi, S. Multi-objective ant lion optimizer: A multi-objective optimization algorithm for solving engineering problems. Appl. Intell. 2017, 46, 79–95.

- Ali, E.; Elazim, S.A.; Abdelaziz, A. Ant lion optimization algorithm for optimal location and sizing of renewable distributed generations. Renew. Energy 2017, 101, 1311–1324.

- Lipowski, A.; Lipowska, D. Roulette-wheel selection via stochastic acceptance. Phys. A Stat. Mech. Its Appl. 2012, 391, 2193–2196.

- Hardy, R.L. Multiquadric equations of topography and other irregular surfaces. J. Geophys. Res. 1971, 76, 1905–1915.

- Yu, H.; Tan, Y.; Sun, C.; Zeng, J. A generation-based optimal restart strategy for surrogate-assisted social learning particle swarm optimization. Knowl.-Based Syst. 2019, 163, 14–25.

- McLachlan, G.J. Mahalanobis distance. Resonance 1999, 4, 20–26.

- Xiang, S.; Nie, F.; Zhang, C. Learning a Mahalanobis distance metric for data clustering and classification. Pattern Recognit. 2008, 41, 3600–3612.

- Chai, Q.-W.; Chu, S.-C.; Pan, J.-S.; Zheng, W.-M. Applying adaptive and self assessment fish migration optimization on localization of wireless sensor network on 3-D terrain. J. Inf. Hiding Multim. Signal Process. 2020, 11, 90–102.

- Yu, H.; Tan, Y.; Zeng, J.; Sun, C.; Jin, Y. Surrogate-assisted hierarchical particle swarm optimization. Inf. Sci. 2018, 454, 59–72.

- Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.-P.; Auger, A.; Tiwari, S. Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization. KanGAL Rep. 2005, 2005005, 2005.

- Li, F.; Shen, W.; Cai, X.; Gao, L.; Wang, G.G. A fast surrogate-assisted particle swarm optimization algorithm for computationally expensive problems. Appl. Soft Comput. 2020, 92, 106303.

- Liang, J.J.; Qu, B.; Suganthan, P.N.; Hernández-Díaz, A.G. Problem Definitions and Evaluation Criteria for the CEC 2013 Special Session on Real-Parameter Optimization; Technical Report; Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China; Nanyang Technological University: Singapore, 2013; Volume 201212, pp. 281–295.

- Meng, Z.; Pan, J.-S.; Xu, H. Quasi-affine transformation evolutionary (QUATRE) algorithm: A cooperative swarm based algorithm for global optimization. Knowl.-Based Syst. 2016, 109, 104–121.

| Benchmark Function | Name | Characteristics | Global Optimum |
|---|---|---|---|
| F1 | Ackley | Multimodal | 0 |
| F2 | Griewank | Multimodal | 0 |
| F3 | Rosenbrock | Multimodal with narrow valley | 0 |
| F4 | Ellipsoid | Unimodal | 0 |
| F5 | Shifted Rotated Rastrigin (F10 in [53]) | Very complicated multimodal | −330 |
| F6 | Rotated Hybrid Composition Function (F16 in [53]) | Very complicated multimodal | 120 |
| F7 | Rotated Rosenbrock’s Function (F6 in [55]) | Multimodal | −300 |
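For orientation, the first four benchmarks in the table can be sketched in their common textbook forms. This is a minimal illustration under assumptions: the exact definitions, search ranges, and any shifts used in the experiments are not reproduced here, and the function names are chosen for this example only. F5 to F7 come from the CEC suites cited in the table and depend on shift and rotation data, so they are omitted.

```python
import math


def ackley(x):
    """Ackley function (F1); textbook form with minimum 0 at the origin."""
    d = len(x)
    mean_sq = sum(v * v for v in x) / d
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / d
    return (-20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20.0 + math.e)


def griewank(x):
    """Griewank function (F2); minimum 0 at the origin."""
    total = sum(v * v for v in x) / 4000.0
    product = 1.0
    for i, v in enumerate(x, start=1):
        product *= math.cos(v / math.sqrt(i))
    return total - product + 1.0


def rosenbrock(x):
    """Rosenbrock function (F3); minimum 0 at the all-ones vector."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def ellipsoid(x):
    """Ellipsoid function (F4); weighted sphere with minimum 0 at the origin."""
    return sum(i * v * v for i, v in enumerate(x, start=1))


if __name__ == "__main__":
    dim = 30  # one of the dimensionalities studied in the experiments
    origin = [0.0] * dim
    ones = [1.0] * dim
    print(ackley(origin), griewank(origin), rosenbrock(ones), ellipsoid(origin))
```

Evaluating each function at its known minimizer is a quick sanity check that an implementation matches the global optimum listed in the table.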

| Function | Method | Best | Worst | Mean | Std. |
|---|---|---|---|---|---|
| F1 Ackley | MSAALO | 0.123326744 | 3.13585031 | 1.43972806 | 0.849227975 |
| | ALO | 12.10555809 | 15.6481591 | 14.19208313(+) | 0.9459971 |
| | PSO | 20.15494482 | 20.82542968 | 20.59548416(+) | 0.172703454 |
| | QUATRE | 20.31594928 | 20.8686191 | 20.62503928(+) | 0.163136984 |
| F2 Griewank | MSAALO | 0.025057236 | 0.348212147 | 0.20459889 | 0.105483642 |
| | ALO | 34.50123904 | 85.11353248 | 61.12324826(+) | 13.39587586 |
| | PSO | 399.9440594 | 629.2822719 | 539.9281838(+) | 58.72504985 |
| | QUATRE | 1430.698577 | 2134.531336 | 1793.335464(+) | 181.220765 |
| F3 Rosenbrock | MSAALO | 27.33130867 | 31.2019712 | 28.919217 | 0.911458613 |
| | ALO | 212.0563715 | 472.0551911 | 329.7765029(+) | 73.50371706 |
| | PSO | 3612.815328 | 8177.474992 | 5768.083768(+) | 1056.556912 |
| | QUATRE | 13816.70038 | 25796.98324 | 20242.16778(+) | 3580.971155 |
| F4 Ellipsoid | MSAALO | 0.064639725 | 1.745649711 | 0.76747429 | 0.542000353 |
| | ALO | 137.5505289 | 316.3088353 | 236.7281153(+) | 47.19823742 |
| | PSO | 1869.607222 | 2942.181818 | 2298.538358(+) | 266.6575441 |
| | QUATRE | 18719.26637 | 29801.73299 | 24745.30049(+) | 3096.902059 |
| F5 Shifted Rotated Rastrigin’s | MSAALO | −177.3906961 | 41.14829764 | −97.86071 | 62.85805275 |
| | ALO | −28.50723525 | 190.6024357 | 121.7342326(+) | 61.58425884 |
| | PSO | 349.4033779 | 684.5691941 | 560.3924598(+) | 97.33286262 |
| | QUATRE | 2210.59487 | 2905.192736 | 2527.153953(+) | 161.6924755 |
| F6 Rotated Hybrid Composition | MSAALO | 487.8856464 | 883.2270767 | 657.345355 | 91.4233247 |
| | ALO | 680.0437144 | 1185.978105 | 931.6602035(+) | 144.3053267 |
| | PSO | 1012.886072 | 1529.59108 | 1240.718675(+) | 146.1381248 |
| | QUATRE | 993.5244617 | 1254.09476 | 1126.998238(+) | 69.97195687 |
| F7 Rotated Rosenbrock’s Function (CEC 2013) | MSAALO | 305.9999456 | 968.5415869 | 497.931191 | 166.910778 |
| | ALO | 2516.102941 | 8820.339926 | 5375.970745(+) | 1790.558688 |
| | PSO | 13511.26453 | 28774.73239 | 21609.56369(+) | 4160.450511 |
| | QUATRE | 371.1491082 | 1080.859897 | 580.0806404(+) | 190.9446216 |

| Function | Method | Best | Worst | Mean | Std. |
|---|---|---|---|---|---|
| F1 Ackley | MSAALO | 0.940415879 | 5.167946126 | 2.3517038 | 1.251470448 |
| | ALO | 13.82370384 | 16.68453778 | 15.25804536(+) | 0.796298412 |
| | PSO | 20.54068171 | 20.9449642 | 20.81156212(+) | 0.095431374 |
| | QUATRE | 19.56914323 | 20.50332195 | 20.09924447(+) | 0.22904872 |
| F2 Griewank | MSAALO | 0.104616818 | 0.926048755 | 0.37396578 | 0.216959396 |
| | ALO | 105.2416856 | 174.1706141 | 132.4385061(+) | 21.29345499 |
| | PSO | 941.2522423 | 1143.06337 | 1043.479008(+) | 63.8714882 |
| | QUATRE | 511.6977831 | 739.5520411 | 627.2609005(+) | 68.17036889 |
| F3 Rosenbrock | MSAALO | 48.49067593 | 75.15137415 | 53.4668016 | 8.024656915 |
| | ALO | 592.1867282 | 1107.620799 | 851.1901101(+) | 129.4974263 |
| | PSO | 9478.242386 | 15087.31686 | 12884.91921(+) | 1425.141242 |
| | QUATRE | 4668.454943 | 7782.74757 | 5958.326781(+) | 803.8444471 |
| F4 Ellipsoid | MSAALO | 0.183039002 | 15.10150372 | 3.84620633 | 3.659057369 |
| | ALO | 688.2929038 | 1333.181207 | 935.4220777(+) | 173.2759225 |
| | PSO | 6395.877995 | 7872.311698 | 7117.623372(+) | 385.1067671 |
| | QUATRE | 3115.474344 | 5157.058137 | 4328.406839(+) | 475.7695647 |
| F5 Shifted Rotated Rastrigin’s | MSAALO | 160.0359335 | 626.0513082 | 337.473869 | 126.853667 |
| | ALO | 495.0934545 | 757.8380202 | 653.8728804(+) | 77.2052377 |
| | PSO | 1194.449781 | 1551.690511 | 1386.945263(+) | 98.67065573 |
| | QUATRE | 499.8560298 | 731.0150509 | 629.5159052(+) | 74.64785504 |
| F6 Rotated Hybrid Composition | MSAALO | 558.4800991 | 1040.265314 | 802.894535 | 161.6001346 |
| | ALO | 951.0065564 | 1216.362594 | 1099.303637(+) | 89.67953714 |
| | PSO | 1360.985177 | 1601.730509 | 1481.080647(+) | 70.85044609 |
| | QUATRE | 643.4407881 | 991.0314188 | 805.936404(≈) | 90.57789709 |
| F7 Rotated Rosenbrock’s Function (CEC 2013) | MSAALO | 741.5338717 | 2449.577822 | 1368.97891 | 395.6821817 |
| | ALO | 3968.852388 | 9349.459488 | 5934.070009(+) | 1408.381766 |
| | PSO | 20661.10026 | 37463.82879 | 28844.48817(+) | 4722.00103 |
| | QUATRE | 1622.13098 | 4279.212564 | 2891.371949(+) | 640.6297767 |

| Function | Method | Best | Worst | Mean | Std. |
|---|---|---|---|---|---|
| F1 Ackley | MSAALO | 4.091671039 | 8.774707511 | 5.93663355 | 1.261160452 |
| | ALO | 17.21809925 | 18.35013969 | 17.75140425(+) | 0.252252221 |
| | PSO | 20.77643095 | 21.02382447 | 20.92523434(+) | 0.056834438 |
| | QUATRE | 20.31594928 | 20.8686191 | 20.62503928(+) | 0.163136984 |
| F2 Griewank | MSAALO | 1.851830569 | 6.001070319 | 3.76704066 | 1.069549415 |
| | ALO | 464.5274265 | 583.0803864 | 528.7988196(+) | 39.70428045 |
| | PSO | 1943.472754 | 2498.344413 | 2276.611987(+) | 107.2391004 |
| | QUATRE | 1430.698577 | 2134.531336 | 1793.335464(+) | 181.220765 |
| F3 Rosenbrock | MSAALO | 112.0862083 | 211.8498645 | 138.034242 | 26.45273892 |
| | ALO | 2410.659629 | 4175.374741 | 3366.246959(+) | 441.5179758 |
| | PSO | 27037.12808 | 34123.8097 | 30717.76802(+) | 1815.48804 |
| | QUATRE | 13816.70038 | 25796.98324 | 20242.16778(+) | 3580.971155 |
| F4 Ellipsoid | MSAALO | 22.89635178 | 73.41179558 | 35.8898755 | 14.07490228 |
| | ALO | 5696.304718 | 8042.695737 | 6953.813749(+) | 662.0899404 |
| | PSO | 28389.28814 | 33571.10473 | 31854.291(+) | 1220.854732 |
| | QUATRE | 18719.26637 | 29801.73299 | 24745.30049(+) | 3096.902059 |
| F5 Shifted Rotated Rastrigin’s | MSAALO | 1489.06262 | 1799.211178 | 1629.79298 | 95.1636403 |
| | ALO | 1655.179877 | 2148.085759 | 1926.3667(+) | 124.7194161 |
| | PSO | 2857.938669 | 3291.183242 | 3066.387997(+) | 113.9989264 |
| | QUATRE | 2210.59487 | 2905.192736 | 2527.153953(+) | 161.6924755 |
| F6 Rotated Hybrid Composition | MSAALO | 848.527674 | 1255.817093 | 1062.61981 | 133.5560952 |
| | ALO | 1122.289105 | 1417.145008 | 1274.688185(+) | 80.250579 |
| | PSO | 1512.055362 | 1740.348203 | 1627.7907(+) | 63.57016225 |
| | QUATRE | 993.5244617 | 1254.09476 | 1126.998238(+) | 69.97195687 |
| F7 Rotated Rosenbrock’s Function (CEC 2013) | MSAALO | 10046.25135 | 22562.53428 | 16436.0136 | 3689.60855 |
| | ALO | 35859.80184 | 54097.03908 | 42368.11593(+) | 5130.810022 |
| | PSO | 92916.94975 | 152443.4916 | 128356.6319(+) | 14760.00506 |
| | QUATRE | 28101.80237 | 49374.69153 | 38759.84115(+) | 5441.075378 |

| Number of Nodes | MSAALO | ALO | PSO | QUATRE |
|---|---|---|---|---|
| 30 | 47.56% | 36.22% | 47.72% | 41.16% |
| 35 | 51.74% | 40.61% | 51.08% | 45.48% |
| 40 | 59.45% | 44.83% | 56.68% | 50.56% |
| 45 | 62.14% | 48.96% | 59.12% | 55.00% |
| 50 | 66.87% | 52.54% | 64.84% | 59.28% |
| 55 | 69.73% | 55.88% | 67.60% | 60.92% |

| Radius | MSAALO | ALO | PSO | QUATRE |
|---|---|---|---|---|
| 5 m | 47.56% | 36.22% | 47.72% | 41.16% |
| 6 m | 61.96% | 47.15% | 60.88% | 55.92% |
| 7 m | 75.45% | 57.58% | 74.28% | 66.44% |
| 8 m | 86.14% | 66.48% | 84.28% | 76.72% |
| 9 m | 92.59% | 69.88% | 90.60% | 83.48% |
| 10 m | 96.93% | 79.24% | 94.44% | 89.96% |
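A coverage rate such as those tabulated above can be estimated by sampling the monitored region and counting the sample points that lie within sensing range of at least one node. The sketch below assumes a binary (Boolean disk) sensing model over a cubic grid; the region shape, grid spacing, and the name `coverage_rate` are illustrative assumptions rather than the paper's actual coverage model.

```python
def coverage_rate(sensors, radius, side, step=1.0):
    """Fraction of grid points in the cube [0, side]^3 covered by any sensor.

    sensors: iterable of (x, y, z) node positions
    radius:  sensing radius under the assumed binary sensing model
    side:    edge length of the cubic region
    step:    grid spacing used to sample the region
    """
    sensors = list(sensors)
    n = int(round(side / step)) + 1          # grid points per axis
    axis = [i * step for i in range(n)]
    r2 = radius * radius
    covered = 0
    for x in axis:
        for y in axis:
            for z in axis:
                # A point counts as covered if any node is within the radius.
                if any((x - sx) ** 2 + (y - sy) ** 2 + (z - sz) ** 2 <= r2
                       for sx, sy, sz in sensors):
                    covered += 1
    return covered / n ** 3


if __name__ == "__main__":
    nodes = [(2.0, 2.0, 2.0), (8.0, 8.0, 8.0), (2.0, 8.0, 5.0)]
    for r in (3.0, 4.0, 5.0):
        print(r, coverage_rate(nodes, r, side=10.0))
```

Under this model the rate cannot decrease as the radius grows for fixed node positions, which matches the upward trend across the rows of the radius table. An optimizer would treat the node positions as decision variables and this rate as the objective to maximize.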

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Chu, S.-C.; Pan, J.-S.; Hu, P.; Xue, X. A Mahalanobis Surrogate-Assisted Ant Lion Optimization and Its Application in 3D Coverage of Wireless Sensor Networks. Entropy 2022, 24, 586. https://doi.org/10.3390/e24050586
