1. Introduction

A histogram is a central tool for analyzing the content of signals while disregarding positional relations. It is useful for tasks such as setting thresholds for detecting extremal events and designing codes for communication. In [1], the three fundamental scales of histograms for discrete signals (and images) were presented: the intensity resolution or bin width, the spatial resolution, and the local extent over which a histogram is evaluated. Even when these scale parameters are fixed, it is still essential to consider the sampling phase, since, in general, we do not know the location of the interesting signal parts; thus, we must consider all phases, or equivalently, all overlapping histograms and all histograms for different positions of the first left bin edge.

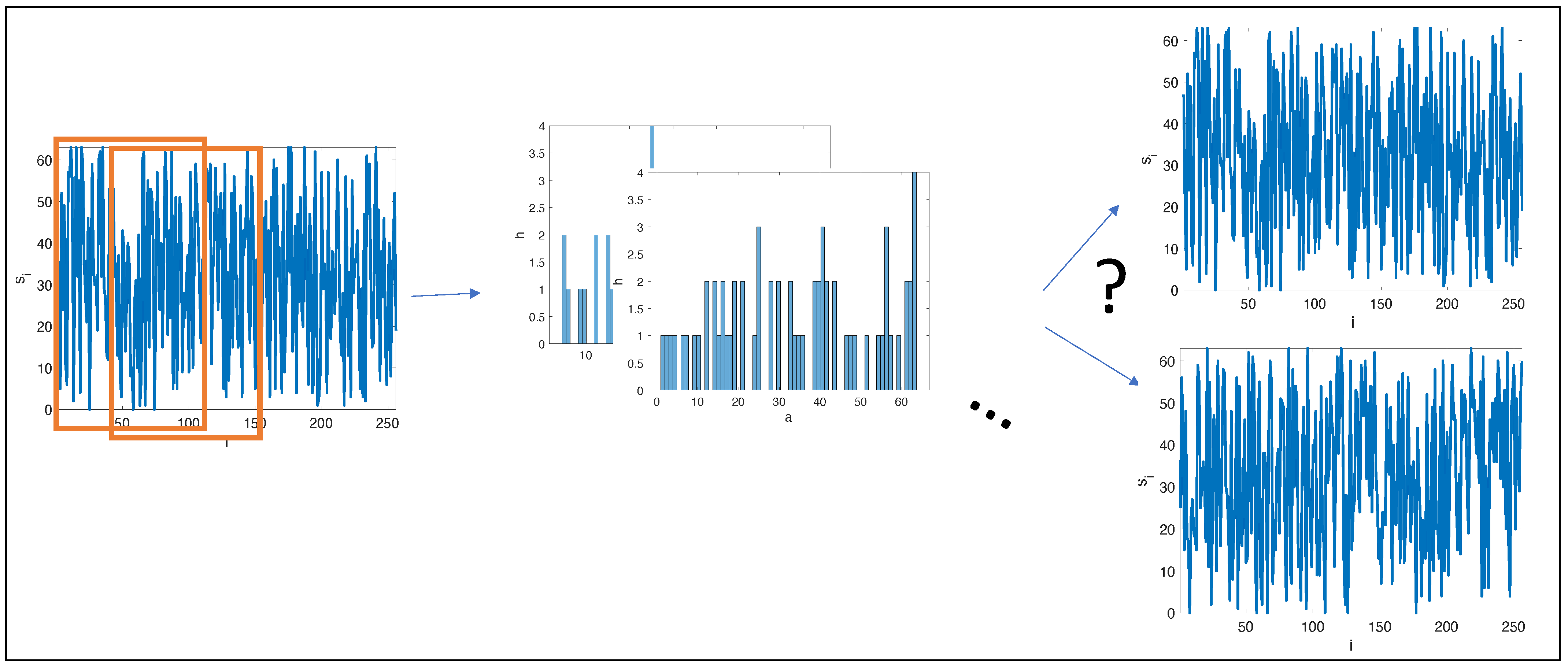

A natural question when using local histograms for signal and image analysis is: How many signals share a given set of overlapping local histograms (illustrated in Figure 1)? Out of pure theoretical interest, in this paper, we took a first step toward answering this question by considering densely overlapping histograms of binary signals.

As an example, consider the signal

$$S = (0, 0, 1). \tag{1}$$

Its global histogram is $h = (2, 1)$, i.e., there are two “0” values and one “1” value. Given the histogram, the only possible signals are $(0, 0, 1)$, $(0, 1, 0)$, and $(1, 0, 0)$, i.e., three signals.

This is a much smaller number than the $2^3 = 8$ possible signals, but the relation is not bijective. The representation power of the histogram may be quantified as the conditional entropy of signals given their histogram. For binary signals of length three, the histogram of a signal may be summarized by its count of “1” values, since the number of “0” values will be three minus this count. For length-three binary signals, there are eight different signals, which have four different histograms where the counts of “1” values are 0, 1, 2, and 3, respectively, and the corresponding numbers of signals are 1, 3, 3, and 1. Given a histogram, the conditional probability of each of the corresponding signals is thus 1, 1/3, 1/3, and 1, respectively, and the conditional entropy may thus be found to be

$$H(S \mid h) = \tfrac{1}{8}\log_2 1 + \tfrac{3}{8}\log_2 3 + \tfrac{3}{8}\log_2 3 + \tfrac{1}{8}\log_2 1 = \tfrac{3}{4}\log_2 3 \approx 1.2 \text{ bits}.$$
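As a numerical check of the 1.2-bit figure, the following Python sketch (the function name `conditional_entropy` is our own) enumerates all binary signals of length n, groups them by their global histogram, summarized here by the count of “1” values, and averages the entropy of the uniform distribution within each group. For n = 3, this evaluates to (3/4)·log2(3) ≈ 1.189 bits.

```python
from collections import Counter
from itertools import product
from math import log2


def conditional_entropy(n: int) -> float:
    """H(S | h) in bits for uniformly distributed binary signals S of length n,
    where h is the global histogram, summarized by the number of "1" values."""
    signals = list(product((0, 1), repeat=n))
    # Number of signals sharing each histogram (the binomial coefficients).
    group_sizes = Counter(sum(s) for s in signals).values()
    total = len(signals)
    # Given a histogram shared by k signals, each has probability 1/k,
    # so the entropy within that group is log2(k); weight it by k / total.
    return sum((k / total) * log2(k) for k in group_sizes)


print(round(conditional_entropy(3), 3))  # 1.189
```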

In this paper, we did not focus on coding schemes for signals, but on the expressive power of local overlapping histograms. Thus, consider again the signal in (1), but now with a set of local histograms of extent two, in which case the histograms are calculated for the overlapping sub-signals $(0, 0)$ and $(0, 1)$, giving $h_0 = (2, 0)$ and $h_1 = (1, 1)$.

In this case, only one signal has this sequence of overlapping histograms: by the first histogram, we know that the first two values are “0”, and in combination with the second histogram, we conclude that the last value must be “1”; thus, the signal must be $(0, 0, 1)$. In contrast, the signals $(0, 1, 0)$ and $(1, 0, 1)$ have the same local histograms, $h_0 = h_1 = (1, 1)$, in which case the signal↔histograms relation is not bijective. However, the local histograms and the global histogram together uniquely identify the signals, since $(0, 1, 0)$ has the global histogram $(2, 1)$, whereas $(1, 0, 1)$ has $(1, 2)$. As these examples show, the relation between local histograms and signals is non-trivial, and in this paper, we considered the space of possible overlapping local histograms and the number of signals sharing a given set of local histograms.

In the mid-20th Century, much attention was given to the lossless reconstruction of signals, in particular using error-correction codes, where the original signal is sent together with added information [2]. Such additional information could be related to the histogram of the original signal. Later, the reconstruction of signals and images became a more pressing problem, primarily as a way to compress images without losing essential content, and this resulted in still widely used image representation standards such as MPEG, TIFF, and JPEG.

While signal representation has still been of some concern in the 21st Century, advances in hardware mean that more attention has been given to image representation and, in particular, to quantifying the information content of image features. In [3], the authors introduced the concept of metameric classes for local features. The authors considered scale-space features and investigated the space of images that share these features. They further presented several algorithms for picking a single reconstruction. An extension of this approach was presented in [4], where patches at interest points of an original image were matched with patches from a database by a feature descriptor such as SIFT [5]. The database patches were then warped and stitched to form an approximation of the original image. In [6], the authors presented a reconstruction algorithm based on binarized local Gaussian-weighted averages and convex optimization. The theoretical properties of this reconstruction algorithm are still an open research question. In [7], images were reconstructed from a histogram of a densely sampled dictionary of local image descriptors (bag-of-visual-words) as a jigsaw puzzle with overlaps. The authors showed that their method resulted in a quadratic assignment problem and used heuristics to find a good reconstruction. In [8], the authors investigated the reconstruction of images from a simplified SIFT transform. The reconstruction was based on the SIFT keypoints and their discretized local histograms of gradient orientations, and several models were presented for choosing a single reconstruction from the possible candidates. In [9], a convolutional neural network was presented that reconstructs images from a regularly but sparsely sampled set of image descriptors. The network was able to learn image priors and to reconstruct images from both classical features such as SIFT and representations found in AlexNet [10]. This was later extended in [11], where an adversarial network was investigated for reconstruction from local SIFT features.

Our work is closely related to [12], which discussed the relation between FRAME [13] and Julesz’s model for human perception of textures [14]. In [13], the authors defined a Julesz ensemble as a set of images that share identical values of basic feature statistics. Although not considered in their works, histogram bin values can be considered feature statistics, and hence, the metameric classes presented in this paper are Julesz ensembles in the sense of [13]. In [12], the authors considered normalized histograms of images filtered with Gabor kernels [15], and they considered the limit of the spatial sampling domain converging to $\mathbb{Z}^2$. Their perspective may be generalized to local histograms; however, their results only hold in the limit.

This paper is organized as follows: First, we define the problem in Section 2. Section 3 describes an algorithm for finding the signal(s) that have a specific set of local histograms. In Section 4, constraints on possible local histograms and the size of metameric classes are discussed, and finally, in Section 5, we present our conclusions.

2. Metameric Signal Classes

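We were interested in the number of signals that have the same set of local histograms. If there is more than one, we call this set of signals a metameric signal class (or just a metameric class), defined by their shared set of local histograms. We define signals and their local histograms as follows: Consider an alphabet $\mathcal{A}$ and a one-dimensional signal $S \in \mathcal{A}^n$, which we denote $S = (s_0, s_1, \ldots, s_{n-1})$ and where $s_i \in \mathcal{A}$ is the value of S at position i. For a given window size $m \le n$, we considered all local windows

$$W_i = (s_i, s_{i+1}, \ldots, s_{i+m-1}), \quad i = 0, 1, \ldots, n-m,$$

and their histograms

$$h_i(a) = \sum_{j=i}^{i+m-1} \delta(s_j, a), \quad a \in \mathcal{A},$$

where $\delta$ is the Kronecker delta function, defined as:

$$\delta(x, y) = \begin{cases} 1, & x = y, \\ 0, & \text{otherwise}. \end{cases}$$

All local histograms for the signal S are $H_m(S) = (h_0, h_1, \ldots, h_{n-m})$.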
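These definitions translate almost directly into code. The Python sketch below is illustrative only: it assumes 0-based positions, represents each histogram as a tuple of counts ordered as the alphabet, and uses the hypothetical function names `kronecker` and `local_histograms`. For instance, for the signal (0, 0, 1) and m = 2, it returns the histograms (2, 0) and (1, 1).

```python
from typing import Sequence, Tuple

Histogram = Tuple[int, ...]


def kronecker(x: int, y: int) -> int:
    """Kronecker delta: 1 if x == y, else 0."""
    return 1 if x == y else 0


def local_histograms(signal: Sequence[int], m: int,
                     alphabet: Sequence[int] = (0, 1)) -> list[Histogram]:
    """Histograms h_i of all windows (s_i, ..., s_{i+m-1}), i = 0, ..., n - m."""
    n = len(signal)
    if not 1 <= m <= n:
        raise ValueError("window size must satisfy 1 <= m <= n")
    return [
        tuple(sum(kronecker(s, a) for s in signal[i:i + m]) for a in alphabet)
        for i in range(n - m + 1)
    ]


print(local_histograms((0, 0, 1), 2))                                    # [(2, 0), (1, 1)]
print(local_histograms((0, 1, 0), 2) == local_histograms((1, 0, 1), 2))  # True
```

Passing a larger `alphabet` tuple gives the same construction for non-binary signals.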

As an example, consider the signal,

in which case

. For

, the windows are:

and the corresponding histograms are:

or equivalently, in short form,

In some cases, two different signals will have the same set of histograms, and we call these signals metameric, i.e., they appear identical w.r.t. their histograms. We say that they belong to the same metameric class given by their common histogram sequence. For example, when

and

, the signals,

have the same sequence of

histograms,

and thus,

S and

belong to the same metameric class denoted by

.

We were interested in the ability of local histograms to represent signals. Hence, for given signal lengths n and window sizes m, we sought to calculate the number of signals S that are uniquely identified by their local histograms and the number of metameric classes. The values of these two quantities for small values of n are shown in Table 1. These values were counted by considering all $2^n$ different possible signals, which is an approach only possible for small values of n. From the table, we observe that we did not find any combination of signal lengths and window sizes without a metameric class; hence, none of the tested combinations yielded a one-to-one relation between the local histograms and the signal. Further, the number of unique signals

appeared to grow with

, and the number of metameric classes

appeared to be convex in

m for a large value of

n.
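The exhaustive count behind Table 1 can be sketched as follows. This is our own Python illustration, not the authors' F# program: it assumes binary signals, summarizes each local histogram by its number of “1” values (no information is lost, since the two counts sum to m), and groups all 2^n signals by their histogram sequence. Groups of size one are uniquely identified signals; larger groups are metameric classes.

```python
from collections import defaultdict
from itertools import product


def histogram_sequence(signal, m):
    """Local histograms of a binary signal, each summarized by its count of ones."""
    return tuple(sum(signal[i:i + m]) for i in range(len(signal) - m + 1))


def count_unique_and_classes(n: int, m: int) -> tuple[int, int]:
    """Return (number of uniquely identified signals, number of metameric classes)."""
    groups = defaultdict(list)
    for signal in product((0, 1), repeat=n):  # all 2**n binary signals
        groups[histogram_sequence(signal, m)].append(signal)
    unique = sum(1 for members in groups.values() if len(members) == 1)
    classes = sum(1 for members in groups.values() if len(members) > 1)
    return unique, classes


for n in range(3, 7):
    print(n, [count_unique_and_classes(n, m) for m in range(2, n + 1)])
```

The running time grows as 2^n, which is why this approach is limited to small n.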

3. An Algorithm for Reconstructing the Complete Set of Signals from a Sequence of Histograms

We constructed an algorithm that reconstructs the one or more signals having a given sequence of histograms. It was constructed using the following facts:

- Fact 1

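There is a non-empty and finite set of signals of size m that share the same histogram h. These can be produced as all the distinct permutations of the following signal:

$$(\underbrace{0, \ldots, 0}_{h(0)}, \underbrace{1, \ldots, 1}_{h(1)}),$$

and the number of distinct signals is given by the binomial coefficient:

$$\binom{m}{h(1)} = \frac{m!}{h(0)!\,h(1)!}; \tag{21}$$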

- Fact 2

Consider the windows $W_i$ and $W_{i+1}$ and their corresponding histograms $h_i$ and $h_{i+1}$ for $0 \le i < n - m$. If $s_i = s_{i+m}$, the histograms will be identical; otherwise, the histograms will differ by a count of one at $s_i$ and at $s_{i+m}$, and $s_{i+m} = \neg s_i$, where $\neg$ is the Boolean “not” operator;

- Fact 3

From Fact 2, it follows that the histogram of $(s_{i+1}, \ldots, s_{i+m-1})$ is equal to $h_{i+1}$, but where $h_{i+1}(s_{i+m})$ has been reduced by one. We call this $\tilde{h}_{i+1}$;

- Fact 4

As a consequence, for candidate signals that have histogram $h_{i+1}$ but have the wrong value placed at position $i+m$, the difference $h_i - \tilde{h}_{i+1}$ will have both negative and positive values.
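Fact 1 can be illustrated by generating the distinct permutations directly. In this Python sketch (with the hypothetical name `signals_with_histogram`), choosing the positions of the “1” values with `itertools.combinations` yields each distinct permutation exactly once, so the number of results equals the binomial coefficient of Fact 1.

```python
from itertools import combinations
from math import comb


def signals_with_histogram(h0: int, h1: int) -> list[tuple[int, ...]]:
    """All distinct binary signals of length m = h0 + h1 with h0 zeros and h1 ones,
    i.e., the distinct permutations of (0, ..., 0, 1, ..., 1)."""
    m = h0 + h1
    result = []
    # Choosing the positions of the ones avoids generating duplicate permutations.
    for ones in combinations(range(m), h1):
        result.append(tuple(1 if j in ones else 0 for j in range(m)))
    return result


sigs = signals_with_histogram(1, 2)
print(sigs)                     # [(1, 1, 0), (1, 0, 1), (0, 1, 1)]
print(len(sigs) == comb(3, 2))  # True
```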

Thus, we constructed the following algorithm:

- Step 1

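Produce a candidate set of all the distinct signals of size m that have the histogram $h_{n-m}$;

- Step 2

For $i = n-m-1, \ldots, 0$ and for each element C in the candidate set:

- Step 2.1

Calculate the difference $d = h_i - \tilde{h}$, where $\tilde{h}$ is the histogram of the first $m-1$ values of C.

- Step 2.2

If d does not have the form of (22), i.e., a count of one in a single bin and zeros elsewhere, then discard C;

- Step 2.3

Else, derive $s_i$ as the bin of d that holds the count of one, and extend the candidate with this value: $C \leftarrow (s_i, C)$.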

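The computational complexity of our algorithm is $\mathcal{O}(nN)$ candidate tests, where $N = \binom{m}{\lfloor m/2 \rfloor}$ is the maximum value of (21), since initially, all signals of size m with histogram $h_{n-m}$ must be considered, and this set can only shrink when considering earlier values.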

A working implementation in F# is available at:

The code does not produce any output when run, but running it in F# Interactive allows the user to inspect the key values afterwards, which are:

- `signal: int list = [0; 1; 0; 1; 1]`
- `histogramList: Map<int,int> list = [map [(0, 2); (1, 1)]; map [(0, 1); (1, 2)]; map [(0, 1); (1, 2)]]`
- `solutions: int list list = [[0; 0; 1; 1; 0]; [0; 1; 0; 1; 1]]`

The signal is a sequence of binary digits, and the histogram sequence is represented as a sequence of maps, where each map entry is an (intensity, count) pair, i.e., map [(0, 2); (1, 1)] above is the histogram with two “0” values and one “1” value. Finally, the solutions are represented as a sequence of sequences of binary digits. In this case, as we can see, there is a metameric class of two signals that share a sequence of histograms. We verified that the algorithm correctly reconstructs all the signals considered in Table 1, including all the members of the metameric classes.
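The steps of the algorithm can be sketched in Python as follows. This is our own transcription under stated assumptions, not the authors' F# code: histograms are `collections.Counter` objects playing the role of the F# `Map<int,int>` values, positions are 0-based, and the name `reconstruct` is hypothetical. Run on the example signal from the F# session above, it returns the same two solutions.

```python
from collections import Counter
from itertools import combinations


def reconstruct(histograms: list[Counter], m: int) -> list[list[int]]:
    """All binary signals whose consecutive length-m windows have the given
    histograms (Counter objects mapping a value to its count)."""
    # Step 1: all distinct windows of size m with the last histogram h_{n-m}.
    ones = histograms[-1][1]
    candidates = [[1 if j in pos else 0 for j in range(m)]
                  for pos in combinations(range(m), ones)]
    # Step 2: move backwards over h_{n-m-1}, ..., h_0, prepending one value per step.
    for h in reversed(histograms[:-1]):
        extended = []
        for cand in candidates:
            # Step 2.1: difference between h_i and the histogram of the first
            # m - 1 values of the candidate.
            diff = Counter(h)
            diff.subtract(Counter(cand[:m - 1]))
            nonzero = {value: count for value, count in diff.items() if count != 0}
            # Step 2.2: discard the candidate unless exactly one bin holds a count of one.
            if list(nonzero.values()) != [1]:
                continue
            # Step 2.3: that bin is the missing value s_i; extend the candidate.
            (value,) = nonzero
            extended.append([value] + cand)
        candidates = extended
    return sorted(candidates)


signal = [0, 1, 0, 1, 1]
m = 3
histogram_list = [Counter(signal[i:i + m]) for i in range(len(signal) - m + 1)]
print(reconstruct(histogram_list, m))  # [[0, 0, 1, 1, 0], [0, 1, 0, 1, 1]]
```

Since each candidate has at most one valid extension, the candidate list never grows after Step 1.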

4. Theoretical Considerations on the Number of Unique Signals and Metameric Classes

In the following, we consider classes of histogram sequences and relate them to the number of metameric classes for a given family of signals and their sizes.

As a preliminary fact, note that for a window size m, all the histograms must satisfy:

$$\sum_{a \in \mathcal{A}} h_i(a) = m, \tag{23}$$

since all entries in $W_i$ are counted exactly once.

4.1. Constant Sequence of Histograms ($h_0 = h_1 = \cdots = h_{n-m}$)

There are two different constant signals of length n: $(0, 0, \ldots, 0)$ and $(1, 1, \ldots, 1)$. All neighborhoods and histograms of the constant signals are identical, and each histogram has one non-zero element with value m. These signals cannot belong to a metameric class, since permuting the positions of the values in a window does not give a new signal, and they are trivially unique.

In general, signals with constant histogram sequences, $h_i = h_{i+1}$ for all i, must be periodic, since the only difference in the histogram counts of $W_i$ and $W_{i+1}$ is that $W_i$ includes $s_i$ and does not include $s_{i+m}$. Hence, $h_i = h_{i+1}$ implies $s_i = s_{i+m}$. For example, $(0, 1, 1, 0, 1, 1)$ is a periodic signal for $m = 3$, with the constant histogram of one “0” and two “1” values. Any constant sequence of histograms describes a periodic signal, and for non-constant signals (where $W_0$ contains both “0” and “1” values) and $n \ge m$, these histograms describe a metameric class, since some permutations of $W_0$ will produce new signals without changing the histograms due to periodicity. For any $n \ge m$, there are $2^m - 2$ such non-constant periodic binary signals.
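The periodicity argument can be checked exhaustively for small sizes. The Python sketch below (hypothetical helper names) collects all binary signals of length n whose local histograms are all equal, confirms that each satisfies s_i = s_{i+m}, and counts them; there are 2^m such signals, two of which are constant.

```python
from itertools import product


def has_constant_histograms(signal, m):
    """True if every length-m window of a binary signal holds the same number of ones."""
    return len({sum(signal[i:i + m]) for i in range(len(signal) - m + 1)}) == 1


def is_m_periodic(signal, m):
    """True if s_i == s_{i+m} for all valid positions i."""
    return all(signal[i] == signal[i + m] for i in range(len(signal) - m))


def periodic_signals(n, m):
    """All binary signals of length n with a constant sequence of local histograms."""
    return [s for s in product((0, 1), repeat=n) if has_constant_histograms(s, m)]


n, m = 7, 3
signals = periodic_signals(n, m)
assert all(is_m_periodic(s, m) for s in signals)
print(len(signals) - 2)  # 6 = 2**3 - 2 non-constant periodic signals
```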

4.2. Global Histogram ($m = n$)

For a particular h, all permutations of the signal belong to the same metameric class. Thus, the number of metameric classes is equal to the number of different histograms with sum m, except those for constant signals. This corresponds to picking m values from the alphabet, where repetition is allowed and order does not matter.

Following the standard derivation of unordered sampling with replacement, we visually rewrite the terms in (23) as a list of “·”s, where each “·” represents a count of one in a given bin, and a “+” separates the bins. For example, for $m = 3$, we may have the histogram with one “0” and two “1” values, which is written “·+··”. A different histogram could have three “0” values and no “1” values, which is written “···+”. Hence, any permutation of three “·”s and one “+” will in this representation give the sum of three, and the number of permutations is equal to the number of ways we can choose m out of $m + 1$ positions. Thus, the number of different histograms is given as the binomial coefficient:

$$\binom{m + 1}{m} = m + 1.$$

Out of these, two histograms stem from the constant signals. The remaining histograms have $0 < h(1) < m$, and each of these histograms defines a metameric class, since there will always be more than one signal with such a histogram by (21). Hence, the number of metameric classes for $m = n$ is

$$\binom{m + 1}{m} - 2 = m - 1.$$

This equation confirms the values in Table 1 where $m = n$.
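The stars-and-bars count can be cross-checked by brute force. In this Python sketch (the helper name is ours), every multiset of m bin labels drawn from `bins` bins is turned into a histogram; the number of distinct histograms matches C(m + bins − 1, m), which for two bins is m + 1. Removing the two constant histograms leaves the m − 1 metameric classes of the case m = n.

```python
from itertools import combinations_with_replacement
from math import comb


def histograms_with_sum(m: int, bins: int) -> set[tuple[int, ...]]:
    """All histograms over `bins` bins whose counts sum to m, obtained by drawing
    m bin labels with repetition and without regard to order."""
    result = set()
    for draw in combinations_with_replacement(range(bins), m):
        result.add(tuple(draw.count(b) for b in range(bins)))
    return result


m = 3
hs = histograms_with_sum(m, 2)
print(sorted(hs))                 # [(0, 3), (1, 2), (2, 1), (3, 0)]
print(len(hs) == comb(m + 1, m))  # True
print(len(hs) - 2)                # 2 metameric classes for n = m = 3
```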

4.3. Smallest Histogram ($m = 2$)

For the case of

, we now show that:

Consider the sequence of histograms

. For

, there are three different histograms corresponding to

different signals. These signals fall into two classes:

is trivially solvable; however, for

, the values of

and

are easily identifiable from the histogram

, but their positions are not. Write

. Now, consider

. Again, if

, then their values are trivially solvable, and since

is known, then

can be deduced from

. Therefore, assume that

, and write

. Now, consider

; as before, if

, then their values are trivially solvable by

; hence,

can be deduced from

and

, and in turn,

can be deduced from

and

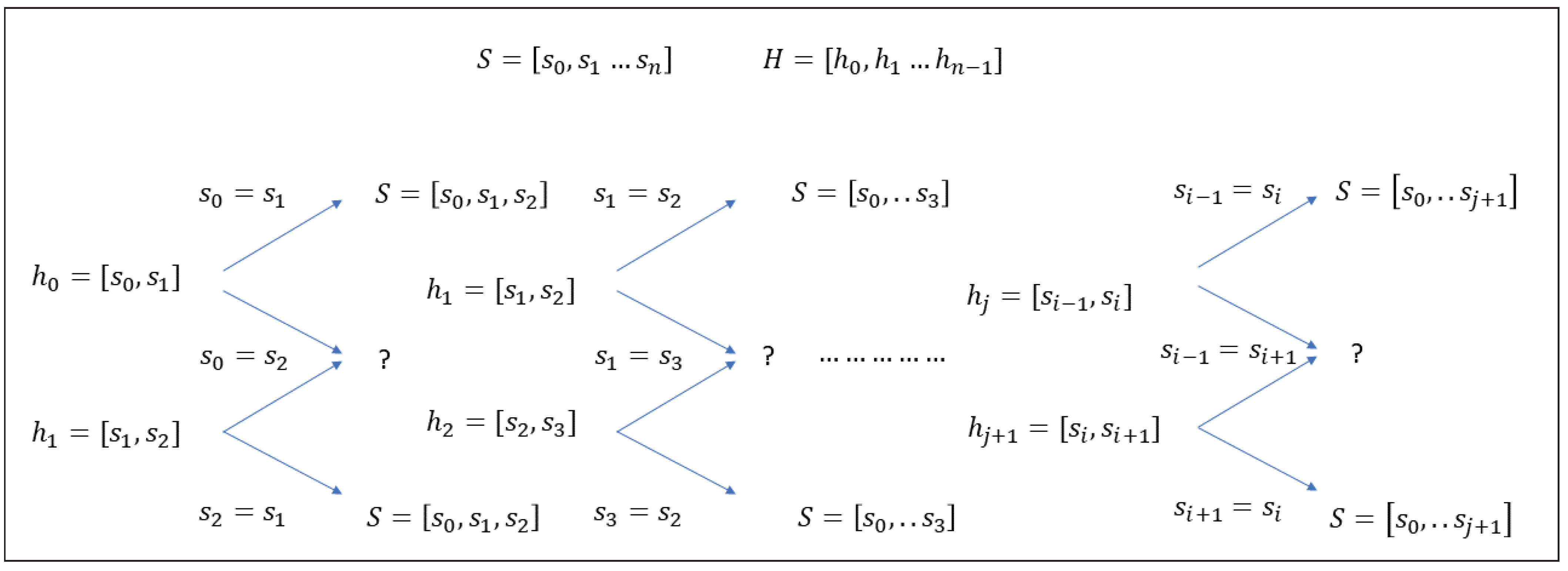

. The general structure of the problem is illustrated in

Figure 2, and by induction, we see that only the constant sequence of histograms

is a metameric class; hence,

.
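The m = 2 result can be confirmed exhaustively for small n. The Python sketch below (hypothetical helper name) groups all binary signals of length n by their local histograms and checks that the only group with more than one member consists of the two alternating signals, whose windows all contain one “0” and one “1”.

```python
from collections import defaultdict
from itertools import product


def metameric_classes(n, m):
    """Groups of two or more binary signals of length n sharing all local histograms."""
    groups = defaultdict(list)
    for s in product((0, 1), repeat=n):
        key = tuple(sum(s[i:i + m]) for i in range(n - m + 1))
        groups[key].append(s)
    return [members for members in groups.values() if len(members) > 1]


for n in range(2, 11):
    classes = metameric_classes(n, 2)
    # Exactly one class: the two alternating signals.
    alternating = [tuple(i % 2 for i in range(n)), tuple((i + 1) % 2 for i in range(n))]
    assert len(classes) == 1 and sorted(classes[0]) == sorted(alternating)
print("m = 2: one metameric class for n = 2, ..., 10")
```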

4.4. The General Case

Since the sum of a histogram is m (see (23)) and since the histograms for binary signals only have two bins, we can identify each histogram by:

$$h_i(1) = \sum_{j=i}^{i+m-1} s_j,$$

i.e., as the number of one-values in $W_i$. Thus, in the following, we identify $h_i$ with this count and write $h_i \in \{0, 1, \ldots, m\}$. Next, we consider consecutive pairs of histograms for signals of varying lengths n.

Firstly, consider the case

and

and all possible combinations of histograms

and

, i.e.,

. The organization of all the

signals in terms of

and

is shown in

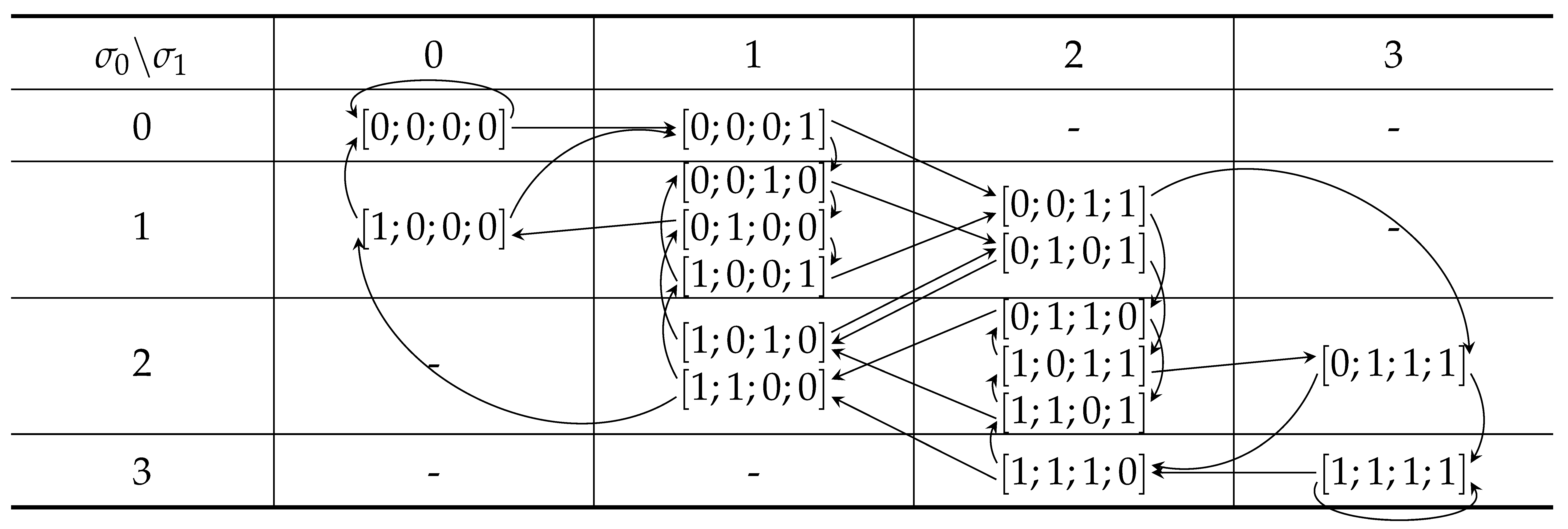

Table 2. We call such tables transition tables, and we say that each table cell contains a set of signal pieces. For

and

, the table illustrates that there is one metameric class shown in cell

, since this table cell contains two elements. This case is also discussed in relation to (

26).

Now, consider the case $m = 2$ and $n = 4$. The transition table for $(h_1, h_2)$ is identical to Table 2. Further, an element in cell $(h_0, h_1)$ is related to an element in cell $(h_1, h_2)$ by the arrows in the table. For example, if

, then

and

. If

, then

,

, and

, while if

, then

,

, and

.

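Each cell of a transition table contains zero or more elements that have histograms $h_i$ and $h_{i+1}$; rows are indexed by $h_i$ and columns by $h_{i+1}$. The tables have a particular structure:

- Fact 5

The table is tridiagonal: Since $W_i$ and $W_{i+1}$ only differ by the values $s_i$ and $s_{i+m}$, the difference between $h_i$ and $h_{i+1}$ is at most one in absolute value. Hence, the table has a tridiagonal structure;

- Fact 6

Elements on the main diagonal have $s_i = s_{i+m}$: On the diagonal, $h_i = h_{i+1}$; hence:

$$h_{i+1} - h_i = s_{i+m} - s_i = 0.$$

Thus, $s_i = s_{i+m}$;

- Fact 7

Elements on the first diagonal above have $s_i = 0$ and $s_{i+m} = 1$: On the first diagonal above, $h_{i+1} = h_i + 1$, and thus,

$$s_{i+m} - s_i = h_{i+1} - h_i = 1.$$

Thus, $s_i = 0$ and $s_{i+m} = 1$;

- Fact 8

Elements on the first diagonal below have $s_i = 1$ and $s_{i+m} = 0$: On the first diagonal below, $h_{i+1} = h_i - 1$, and thus,

$$s_{i+m} - s_i = h_{i+1} - h_i = -1.$$

Thus, $s_i = 1$ and $s_{i+m} = 0$.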
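Facts 5 to 8 all follow from the sliding-window update h_{i+1} = h_i + s_{i+m} − s_i. The Python sketch below checks this update and the resulting constraints on (s_i, s_{i+m}) exhaustively for small n. It assumes, as Fact 14 implies, that rows of a transition table are indexed by h_i and columns by h_{i+1}, so that a step of +1 lies above the main diagonal; the helper name is ours.

```python
from itertools import product


def check_transition_facts(n: int, m: int) -> None:
    """Assert Facts 5-8 for every binary signal of length n and window size m,
    where h_i is the number of ones in (s_i, ..., s_{i+m-1})."""
    for s in product((0, 1), repeat=n):
        h = [sum(s[i:i + m]) for i in range(n - m + 1)]
        for i in range(n - m):
            step = h[i + 1] - h[i]
            # Sliding-window update: s_i leaves the window and s_{i+m} enters it.
            assert step == s[i + m] - s[i]
            assert step in (-1, 0, 1)                # Fact 5: tridiagonal table
            if step == 0:
                assert s[i] == s[i + m]              # Fact 6: main diagonal
            elif step == 1:
                assert (s[i], s[i + m]) == (0, 1)    # Fact 7: first diagonal above
            else:
                assert (s[i], s[i + m]) == (1, 0)    # Fact 8: first diagonal below


for m in range(1, 6):
    check_transition_facts(8, m)
print("Facts 5-8 hold for n = 8, m = 1, ..., 5")
```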

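For counting the number of elements in the table, let $N_{a,b}$ be the number of elements in cell $(a, b)$, i.e., the number of signal pieces $(s_i, \ldots, s_{i+m})$ with $h_i = a$ and $h_{i+1} = b$:

- Fact 9

For $(a, b) \in \{(0, 0), (0, 1), (1, 0), (m, m), (m, m-1), (m-1, m)\}$,

$$N_{a,b} = 1.$$

In all six cases, the histograms are from signals where either or both $W_i$ and $W_{i+1}$ are constant, and hence, we can trivially reconstruct the corresponding values from the histograms. We call such a histogram pair a two-trivial pair;

- Fact 10

On the main diagonal, except $(0, 0)$ and $(m, m)$,

$$N_{k,k} = \binom{m}{k}, \quad 0 < k < m.$$

By Fact 6, $s_i = s_{i+m}$. For $s_i = 0$, the possible signals for $(s_{i+1}, \ldots, s_{i+m-1})$ are signals summing to k, i.e., $\binom{m-1}{k}$ of them, and for $s_i = 1$, we have $\binom{m-1}{k-1}$. Since $\binom{m-1}{k} + \binom{m-1}{k-1} = \binom{m}{k}$, therefore $N_{k,k} = \binom{m}{k}$;

- Fact 11

On the first diagonal above,

$$N_{k,k+1} = \binom{m-1}{k}, \quad 0 \le k < m.$$

By Fact 7, $s_i = 0$ and $s_{i+m} = 1$. Hence, the possible signals for $(s_{i+1}, \ldots, s_{i+m-1})$ are signals summing to k. Further, since $\binom{m-1}{0} = \binom{m-1}{m-1} = 1$ and $\binom{m-1}{k} > 1$ for $0 < k < m-1$, therefore $N_{0,1} = N_{m-1,m} = 1$, and $N_{k,k+1} > 1$ for all other cases;

- Fact 12

On the first diagonal below,

$$N_{k+1,k} = \binom{m-1}{k}, \quad 0 \le k < m.$$

By Fact 8, $s_i = 1$ and $s_{i+m} = 0$. Hence, the possible signals for $(s_{i+1}, \ldots, s_{i+m-1})$ are signals summing to k. Further, since $\binom{m-1}{0} = \binom{m-1}{m-1} = 1$ and $\binom{m-1}{k} > 1$ for $0 < k < m-1$, then $N_{1,0} = N_{m,m-1} = 1$, and $N_{k+1,k} > 1$ in all other cases.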
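The cell counts can be checked by enumerating all signal pieces (s_i, …, s_{i+m}). In the following Python sketch (hypothetical helper name), cell (a, b) collects the pieces with a “1” values among their first m entries and b among their last m entries; the asserts compare the counts on the main diagonal with C(m, k) and on the two neighboring diagonals with C(m − 1, k), here for m = 4.

```python
from collections import Counter
from itertools import product
from math import comb


def transition_table(m: int) -> Counter:
    """Number of signal pieces (s_i, ..., s_{i+m}) per cell (h_i, h_{i+1}),
    with h_i the number of ones in the window starting at position i."""
    cells = Counter()
    for piece in product((0, 1), repeat=m + 1):
        cells[(sum(piece[:m]), sum(piece[1:]))] += 1
    return cells


m = 4
N = transition_table(m)
for k in range(m + 1):
    assert N[(k, k)] == comb(m, k)                          # main diagonal
for k in range(m):
    assert N[(k, k + 1)] == N[(k + 1, k)] == comb(m - 1, k)  # neighboring diagonals
print(sorted(N.items()))
```

Only cells within one step of the diagonal ever receive a piece, which is the tridiagonal structure of Fact 5.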

For transitions, the following facts hold:

- Fact 13

Any signal of any length can be described as a route following the arrows in the table;

- Fact 14

An element in column j transitions to an element in row j, and as a consequence, a cell $(a, b)$ can only be followed by a cell $(b, c)$ with $|b - c| \le 1$. Hence, only cells on the diagonal can contain intracellular paths;

- Fact 15

Any entry is maximally steps away from a two-trivial element, since starting at element , there is an path leading to .
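Facts 13 and 14 can be made concrete by treating each piece (s_i, …, s_{i+m}) as a node and drawing an arrow from piece p to piece q whenever they overlap in m values. The Python sketch below (hypothetical helper names and an example signal of our choosing) maps a signal to its route of cells, checks that each column index reappears as the next row index, and counts all routes of n − m pieces, which equals the 2^n possible signals.

```python
from itertools import product


def pieces(signal, m):
    """Overlapping pieces (s_i, ..., s_{i+m}) of length m + 1."""
    return [tuple(signal[i:i + m + 1]) for i in range(len(signal) - m)]


def cell(piece, m):
    """Cell (row h_i, column h_{i+1}) of the transition table holding a piece."""
    return sum(piece[:m]), sum(piece[1:])


def count_routes(n, m):
    """Number of routes of n - m pieces in which consecutive pieces overlap in m values."""
    if n <= m:
        raise ValueError("routes need n > m")
    all_pieces = list(product((0, 1), repeat=m + 1))
    routes = {p: 1 for p in all_pieces}  # routes consisting of a single piece
    for _ in range(n - m - 1):
        # A route can continue from piece p to piece q if p[1:] == q[:m] (an arrow).
        routes = {q: sum(c for p, c in routes.items() if p[1:] == q[:m]) for q in all_pieces}
    return sum(routes.values())


s = (0, 1, 0, 1, 1, 0)
route = [cell(p, 3) for p in pieces(s, 3)]
# Fact 14: the column of each cell equals the row of the next cell on the route.
assert all(a[1] == b[0] for a, b in zip(route, route[1:]))
print(route)                         # [(1, 2), (2, 2), (2, 2)]
print(count_routes(6, 3) == 2 ** 6)  # True
```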

W.r.t. the number of metameric classes:

- Fact 16

Intracellular paths in the cells $(k, k)$ with $0 < k < m$ are ambiguous, since these cells contain several indistinguishable elements, and we cannot determine the path’s starting point from its histogram sequence;

- Fact 17

Cell pairs, connected by more than one arrow in the same direction, give rise to ambiguous pairs, and paths that only contain such crossings or intracellular paths are ambiguous, since the paths cannot be distinguished by their histograms;

- Fact 18

For $n = m + 1$, the number of metameric classes is equal to the number of non-empty cells in the tridiagonal table minus the six two-trivial cells.

For $n > m + 1$, an upper bound on the number of metameric classes is given by the number of ambiguous paths. We have yet to come up with a closed form for the number of metameric classes in this case.
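By our reading of Facts 10 to 12 and 18, the case n = m + 1 has a simple closed form: all m + 1 cells on the main diagonal and the 2m cells on the two neighboring diagonals are non-empty, giving 3m + 1 cells, and removing the six two-trivial cells leaves 3m − 5 metameric classes for m ≥ 2, while the two-trivial cells hold the six uniquely identified signals. The following brute-force Python sketch, with a hypothetical helper name, checks this derivation for m = 2, …, 8.

```python
from collections import Counter
from itertools import product


def unique_and_classes(n, m):
    """(number of uniquely identified signals, number of metameric classes) by brute force."""
    sizes = Counter(
        tuple(sum(s[i:i + m]) for i in range(n - m + 1))
        for s in product((0, 1), repeat=n)
    )
    unique = sum(1 for size in sizes.values() if size == 1)
    return unique, len(sizes) - unique


for m in range(2, 9):
    # For n = m + 1: 3m + 1 non-empty cells, six of them two-trivial (Fact 18).
    assert unique_and_classes(m + 1, m) == (6, 3 * m - 5)
print("n = m + 1: six unique signals and 3m - 5 metameric classes for m = 2, ..., 8")
```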

We verified the above facts by considering the transition table for $m = 3$, as shown in Table 3. For $n = 4$, we see that there are six uniquely identifiable signals and four metameric classes, as also confirmed by the algorithm in Table 1. For $n = 5$, we identified two intracellular cycles, in the cells $(1, 1)$ and $(2, 2)$. Further, there are four ambiguous cell pairs, $(1, 1) \to (1, 2)$, $(1, 2) \to (2, 2)$, $(2, 1) \to (1, 1)$, and $(2, 2) \to (2, 1)$. Hence, in total, there are six metameric classes. All other arrows correspond to non-metameric paths, of which there are 18. These numbers also correspond to the results of the algorithm shown in

Table 1. For $n = 6$ and leaving out the arrows for brevity, we identified the following ambiguous paths

,

,

,

, and

and a similar set of paths starting in

. Hence, we have an upper bound of 10 metameric classes, and after a careful study, we realized that, of these, there are two unique paths

and likewise for

; hence, the number of metameric classes is eight, as confirmed by our algorithm; see

Table 1. We have yet to identify an efficient algorithm to count all the ambiguous paths in such tables.
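The counts in this paragraph can be reproduced by brute force. Assuming, as the surrounding counts indicate, that the discussion concerns windows of size m = 3, the Python sketch below (hypothetical helper name) lists every metameric class for n = 5 and n = 6 together with its shared histogram sequence; it finds six and eight classes, respectively. It enumerates signals rather than ambiguous paths, so it does not address the open question of counting those paths efficiently.

```python
from collections import defaultdict
from itertools import product


def classes_by_histograms(n, m):
    """Map each shared histogram sequence (counts of ones) to its metameric class."""
    groups = defaultdict(list)
    for s in product((0, 1), repeat=n):
        groups[tuple(sum(s[i:i + m]) for i in range(n - m + 1))].append(s)
    return {h: members for h, members in groups.items() if len(members) > 1}


for n in (5, 6):
    classes = classes_by_histograms(n, 3)
    print(n, len(classes))
    for h, members in sorted(classes.items()):
        print("  ", h, members)
```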