Abstract

Deep neural networks in the area of information security are facing a severe threat from adversarial examples (AEs). Existing methods of AE generation use two optimization models: (1) taking the successful attack as the objective function and limiting perturbations as the constraint; (2) taking the minimum of adversarial perturbations as the target and the successful attack as the constraint. These all involve two fundamental problems of AEs: the minimum boundary of constructing the AEs and whether that boundary is reachable. The reachability means whether the AEs of successful attack models exist equal to that boundary. Previous optimization models have no complete answer to the problems. Therefore, in this paper, for the first problem, we propose the definition of the minimum AEs and give the theoretical lower bound of the amplitude of the minimum AEs. For the second problem, we prove that solving the generation of the minimum AEs is an NPC problem, and then based on its computational inaccessibility, we establish a new third optimization model. This model is general and can adapt to any constraint. To verify the model, we devise two specific methods for generating controllable AEs under the widely used distance evaluation standard of adversarial perturbations, namely constraint and constraint (structural similarity). This model limits the amplitude of the AEs, reduces the solution space’s search cost, and is further improved in efficiency. In theory, those AEs generated by the new model which are closer to the actual minimum adversarial boundary overcome the blindness of the adversarial amplitude setting of the existing methods and further improve the attack success rate. In addition, this model can generate accurate AEs with controllable amplitude under different constraints, which is suitable for different application scenarios. In addition, through extensive experiments, they demonstrate a better attack ability under the same constraints as other baseline attacks. For all the datasets we test in the experiment, compared with other baseline methods, the attack success rate of our method is improved by approximately 10%.

1. Introduction

With the wide applications of a system based on DNNs, the concerns of their security become a focus. Recently, researchers have found that adding subtle perturbations to the input of deep neural networks causes models to give a wrong output with high confidence. Furthermore, they call the deliberately constructed inputs adversarial examples (AEs). The attack of DNNs by AEs is called adversarial attacks. These low-cost adversarial attacks can severely damage applications based on DNNs. Adding adversarial patches onto traffic signs can lead to auto-driving system error [1]. Adding adversarial logos to the surface of goods can impede automatic check-out in automated retail [2]. Generating adversarial master prints can destroy deep fingerprint identification models [3]. In any of the aforementioned scenarios, AEs can cause great inconvenience and harm people’s lives. Therefore, AEs become an urgent issue in the area of AI security.

In the research on generating AEs, two fundamental problems exist: (1) What is the minimum boundary of the amplitude of adversarial perturbations? All the models try to generate AEs with smaller adversarial perturbations. It is their objective to add as few adversarial perturbations as necessary to the clean example to achieve the attack; (2) Is the minimum boundary of adversarial amplitude reachable? The reachability refers to whether examples with adversarial perturbations that are under a minimum bound of adversarial amplitude can successfully attack as well as whether AEs exist under that boundary.

In order to answer those two problems, traditional AE generation can be devised into two main optimization models: (1) Taking the successful attack as the objective function and the limitation of perturbations as the constraint. This limitation is usually limited as less than or equal to a value, as shown in Equation (1). For a neural network F, input distribution , a point , X is the adversarial example of under the v constraint. D is the distance metric function:

(2) Taking the minimum of adversarial perturbations as the target and the success of the attack as the constraint:

However, the above two models do not solve the two problems well: (1) for the first model, when setting the limitation of AEs in the constraint, whether the model has a solution depends on the limit value v. The model may have no solution when the limit value v is too small. However, when the limit value is larger, the constraint on the AEs is too relaxed, and thus the gap between the solution and the minimum AEs is larger; (2) For the second model, when the limitation of adversarial perturbations is in the objective function, the perturbations will decrease in the whole optimization process until it drops in the local optimum of the whole objective function. This optimization model can easily fall into local optimization so that the solution is not the minimum adversarial example. At the same time, this paper also proves that finding the minimum AEs is an NPC problem, so it cannot find the real minimum AEs.

Therefore, in this paper, we focus on answering the problems mentioned above. For the first problem, we propose the concept of minimum AEs and give the theoretical lower bound of the amplitude of minimum adversarial perturbations. For the second problem, we prove that generating the minimum adversarial example is an NPC problem, which means that the minimum boundary of adversarial amplitude is computationally unreachable. Therefore, we generate the controllable approximation of the minimum AEs. We use the certified lower bound of minimum adversarial distortion to constrain the adversarial perturbations and transform the traditional optimization problem into another new model. (3) Taking the successful attack as a target and the adversarial perturbations are equal to the lower bound of the minimum adversarial distortion plus a controllable approximation, as shown in Equation (3). is the lower bound of the minimum adversarial distortion and is a constant of controllable approximation:

This model has two advantages compared with the existing methods: (1) Better attack success rate under the same amplitude of adversarial perturbations. Based on the theoretical lower bound of the amplitude of the minimum perturbations, the AEs overcome the blindness of the existing methods by controlling the increment in that amplitude and improve the attack success rate of the AEs. (2) More precisely controlled amplitude of adversarial perturbations under different constraints. The amplitude of the adversarial perturbations will affect the visual quality of AEs. To go a step further, for different scenarios of applications of the AEs, the requirements of visual quality are different. In some scenarios, they are very strict, while others are relaxed. There are two common scenarios as follows: (1) collaborative evaluation of humans and machines. In that case, AEs need to deceive both human oracles and the classifiers based on DNNs. For example, in the scenario of auto-driving, if the patches too easily draw humans’ attention, these adversarial signs would be moved and they would lose their adversarial effect. (2) Single evaluation of machines. In that case, only the classifiers and models based on DNNs need to be bypassed. In the scenario of massive electronic data filtering, they have a low probability of human involvement. When filtering and testing the harmful data involving violence and terrorism, it may heavily depend on the machines so that it has lower requirements for visual quality. Therefore, in order to adapt the two entirely different scenarios, we need to be able to controllably generate AEs.

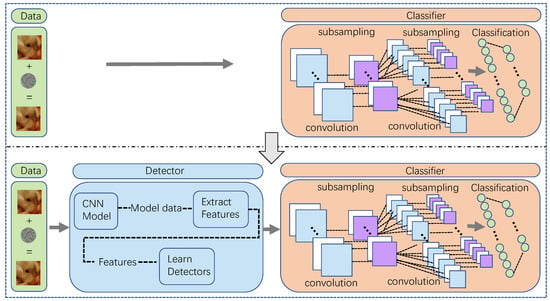

Meanwhile, generating controllable AEs also brings additional benefits. There are two different views with different implications: (1) Attackers can adaptively and dynamically adjust the amplitude of perturbations. As the described above, the defense technologies against adversarial attacks are mainly detection methods. From the attackers’ point of view, when their target is a combined network or system with detectors in front of the target classifier, as Figure 1 shows, they will expect to evaluate the successful probability of attacking the combined network before implementing the attack. For example, supposing that they know the probability of AEs with fixed perturbation bypassing the detector in advance according to prior knowledge, then they can purposefully generate AEs with bigger perturbations or more minor perturbations with a better visual quality to human eyes. (2) Defenders can actively defend against the attacks with the help of the outputs of controllable AEs. From the defenders’ point of view, controllable AEs can help evaluate defenders’ abilities against the AEs of different modification amplitude. When inputting different AEs with fixed adversarial perturbations to models, the defenders can evaluate their anti-attack capabilities according to the outputs against the unclean examples and then decide whether to add additional defense strategies with an emphasis on the current setting. For the example mentioned in the last point, if the defender has prior knowledge about the attackers’ average perturbation amplitude, they can select whether additional defensive measures are necessary.

Figure 1.

Figure representing the framework of the settings. The top framework is the traditional attack setting and the bottom is our attack setting. In the top setting, the target of the adversarial attack is a single target classifier while our setting is a combined network including a target classifier and a detector.

In this paper, we first give the definitions of minimum adversarial perturbations and AEs and the theorem of generating minimum AEs as an NPC problem and then propose a new model of generating adversarial examples. Furthermore, we give two algorithms for generating an approximation of AEs under and constraints. We perform experiments under widely used datasets and models for all the datasets tested in the experiment; compared with other baseline methods, the attack success rate of our method is improved by approximately 10%.

Our contributions are as follows:

- We first prove that generating minimum AEs is an NPC problem. We then analyze the existence of AEs with the help of the definition of the lower bound of the minimum adversarial perturbations. According to the analysis, we propose a general framework to generate an approximation of the minimum AEs.

- We propose the methods of generating AEs with a controllable amplitude of AEs under the and constraints. Additionally, we further improve the visual quality in case of greater perturbations.

- The experiments demonstrate that our method has a better performance in terms of attack success rate than other widely used methods at baseline under the same constraint. Meanwhile, its performance of precisely controlled amplitude of adversarial perturbations under different constraints is also better.

The rest of this paper is organized as follows. In Section 2, we briefly review the related work. In Section 3, we describe the basic definition, theorem and model of our algorithm in detail and prove the theorem. In Section 4, we give the transformed model of the basic model under two constraints and provide the efficient solution algorithm of the two models, respectively, in the two subsections. In Section 6, we present our experimental results and compare them with other baseline methods. Finally, we conclude our paper in Section 7.

2. Related Work

2.1. Adversarial Attack

There are two main pursuits of AEs: one is the smaller perturbations of the AEs; and the other is the successful attack. Previous works transform the two pursuits into two main optimization models. One takes the successful attack as the objective function and the limitation of perturbations as the constraint. These works include L-BFGS [4], C&W [5], DF [6] and HCA [7]. The other takes the successful attack as the objective function and the limitation of perturbations as the constraint. Such works include UAP [8], BPDA [9] and SA [10]. Other works, including FGSM [11], JSMA [12], BIM [13] and PGD [14] do not directly use the model of the optimization problem. However, these methods convert the successful attack into a loss function, move it along the direction of the decrease or increase in the loss function to find the AEs, and use a value at each step to constrain the perturbations. They can be classified as the second optimization model from the point of method-based view.

However, these works cannot really find the minimum AEs with the minimum amplitude of adversarial perturbations. For the first model, the model may have no solution when the value is set as too small. Furthermore, for the second model, it is easy to fall into local optimization.

Meanwhile, considering the constraint function of adversarial perturbations, the works of adversarial example generation can be divided into two main classes. One AEs generation under constraint, including constraint [14,15], constraint [14] and constraint [11,13,14], which is widely used. Furthermore, in addition to that constraint, there were other constraints in previous studies. In [16], the authors proposed that the commonly used constraint failed to completely capture the perceptual quality of AEs in the field of image classification. This used the structural similarity index SSIM [17] measure to replace that constraint. Moreover, the other two works [18,19] also used perceptual distance measures to generate AEs. The work [18] used SSIM while [19] used the perceptual color distance to achieve the same purpose.

However, the constraint of those works is not strict. For the AEs generation under the constraint, it is hard to control the amplitude of perturbations and there is a deviation of AEs generated by those works. For the other constraints, they cannot strictly control the perceptual visual quality: neither the SSIM value nor perceptual color distance.

Therefore, in this paper, we search for the minimum AEs with the minimum amplitude of perturbations. Moreover, we prove that generating the minimum AEs is an NPC problem. Furthermore, we transform that problem into the new optimization model that generates the controllable approximation of the minimum AEs. We generate AEs with a controllable amplitude of adversarial perturbations under the constraint and constraint, respectively.

2.2. Certified Robustness

The robustness of neural networks focuses on searching the lower bound and upper bound of the robustness of neural networks. The lower bound of the robustness is that there are no AEs when adding adversarial perturbations that are less than or equal to that boundary. Moreover, the upper bound of the robustness adding AEs that are larger than or equal to that bound can always acquire the AEs. The work CLEVER [20] and CLEVER++ [21] were the first neural network robustness evaluation scores. They use extreme value theory to estimate the Lipschitz constant based on sampling. However, that estimation requires many samples to have a better value of estimation. Therefore, the two methods only estimate the lower bound of the robustness of neural networks and cannot provide certification. As follows, the works Fast-Lin and Fast-Lip [22], CROWN [23] and CNN-Cert [24] are methods of certifying the robustness of the neural networks. The Fast-Lin and Fast-Lip [22] can only be used for neural networks with the activation function of ReLu. CROWN [23] can be further used for the networks with all general activation functions. Furthermore, the CNN-Cert [24] can be used for the general convolutional neural networks (CNNs). The basic idea is constructing linear functions to constrain the input and then using the upper and lower bounds of the functions as the upper and lower bounds of input, respectively. After that, it can constrain the whole network layer by layer. The whole process is iterative.

However, the above algorithm does not indicate how to calculate the AEs according to the calculated lower bound, and the reachability of AEs based on the lower bound remains a problem. Therefore, in this paper, we calculate the approximation of the minimum AEs based on the lower bound.

3. Basic Definition, Theorem and Modeling

Definition 1.

(AEs, Adversarial Perturbations). Given a neural network F, a distribution , a distance measurement between X and , a point and a point , we say that X is an adversarial example of under constraint if and .

Definition 2.

(Minimum AEs, Minimum Adversarial Perturbations). Given a neural network F, a distribution , a distance measurement between X and , and a point , we say that is a minimum adversarial example of if is an adversarial example of under constraint and such that there exists an adversarial example of under constraint . is the minimum adversarial perturbations of under D constraint.

Theorem 1.

Given a neural network F, a distribution , a distance measurement between X and and a point , searching for a minimum adversarial example of is an NPC problem.

Proof.

The proof of Theorem 1 is shown in Appendix A. □

Although it is an NPC problem, researchers calculate the non-trivial upper bounds of the robustness of the neural network [23,24,25]. We can thus calculate the non-trivial lower bounds of the minimum adversarial perturbations of based on the exact meaning of the two bounds.

We thus model the problem of calculating the non-trivial lower bounds of the minimum adversarial perturbations of . For input distribution , a clean input , perturbed input X of under the constraint, , , a neural network , original label y of , , target label , , and we define the non-trivial lower bounds of the minimum adversarial perturbations as of , as shown in Equation (4):

and:

In Equation (4), is the minimum of adversarial perturbations of under the target label . In Equation (5), is the perturbation of such that , means the upper bound of the network under label y of input X and means the lower bound of the network under another label of the input. They are calculated in [23,24,25].

Theorem 2.

Given a neural network F, a distribution , a distance measurement between X and , a point , the non-trivial lower bounds of the minimum adversarial perturbations of , if X is the perturbed example of under constraint and , then .

Proof.

According to the definition and meaning of the , we can obtain Theorem 2. □

Definition 3.

(N-order tensor [26]). In deep learning, a tensor extends from a vector or matrix to a higher dimensional space. The tensor can be defined by a multi-dimensional array. The dimension of a tensor is also called order, that is, N-dimensional tensor, also known as N-order tensor. For example, when , the tensor is a 0-order tensor, which is one number. When , the tensor is a 1-order tensor, which is a 1-dimensional array. When , the tensor is a 2-order tensor, which is a matrix.

Definition 4.

(Hadamard product [26]). The Hadamard product is the element-wise matrix product. Given the N-order tensors , the Hadamard product is denoted as the product of elements corresponding to the same position of the tensor. The product is a tensor with the same order and size as and . That is:

Definition 5.

(). For a real number and N-order tensor , we define as the sum of and the Hadamard product of λ and another tensor . That is:

, Ψ and have the same order. Specifically, in the field of the AEs, given a clean input , and perturbations , the adversarial example is . The physical meaning is the proportionality factor of r which adds on each feature .

For example, , , ,

Definition 6.

( approximation of minimum AEs, approximation of minimum adversarial perturbations). Given a neural network F, a distribution , a point , the non-trivial lower bounds of the minimum adversarial perturbations of , a constraint and , is a constant, we say that is the approximation of minimum AEs of and is the approximation of minimum adversarial perturbations such that , .

is a constant set by humans according to the actual statement. When generating an adversarial example for a specific input, it has different requirements of the adversarial perturbations for different settings, scenarios and samples.

(a) The more complex the scenario is, the smaller the constant is. In the extreme scenario of digital AEs generation, it needs a clear filter of the AEs and has a strict requirement of invisibility, and the should be small [15]. However, for most physical AEs generations, it has the relaxed requirement of invisibility. Most of them only need to keep semantic consistency. The can be set more considerably than the digital setting [27].

(b) The more simple the sample is, the smaller the constant is. When the sample is simple, its information is single, and people would be more sensitive to the perturbations than complex samples. It is easier for people to recognize the difference between clean inputs and perturbed inputs. For example, the of the MNIST dataset [28] should be smaller than the CIFAR-10 dataset [29].

We model the problem of generating AEs under D measure metrics as follows. For a deep neural network F, input distribution , a point and given the distance value d under constraint D, the problem of generating controllable AEs of d can be modeled as

We discuss the problem under two settings. One is the constraint of the norm, and the other is that of perceptually constrained D measure metrics. We use the widely used structural similarity (SSIM) as the perceptual constraint in a perceptually constrained AEs generation. The two constraints will be discussed, respectively, in the following sections.

4. AEs Generation under Constraint

4.1. Analysis of the Existence of AEs

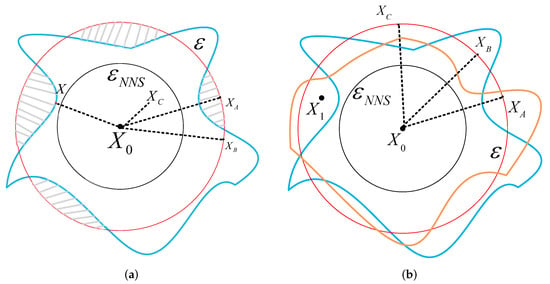

According to Theorem 2, we reach the following conclusions concerning the existence of AEs, as shown in Figure 2.

Figure 2.

Figure representing the spaces where AEs exist. The black circle indicates the ball of the non-trivial lower bounds of the minimum adversarial perturbations of ; the blue line indicates the classification bound of the network when is input; and the red circle means the ball of adding perturbations on . When examples are inside the blue line, they can be classified as the original label by the network. However, when they are outside the blue line, they are AEs. The gray shadow indicates the space where AEs exist under the ball of the . The yellow boundary is another classification border of .

As Figure 2a shows, we have the following analysis. When adding adversarial perturbations lower than , no AEs of exist.

When adding adversarial perturbations larger than , AEs of exist. The gray shadow between the red circle and the blue line is the space where AEs exist. However, whether AEs can be found depends on the direction of adding perturbations. As the figure shows, the perturbations of and all equal , and they are all located on the bound of the ball of ; however, we can see that is inside the gray shadow while is not.

Therefore, some conclusions that were previously well known hypotheses can be proven. Different AEs generation methods generate AEs with varying accuracy. For a clean input , when adding the same perturbations on it, method A can acquire the adversarial input . In contrast, method B obtains located inside the blue line and can still be correctly classified by the network. Hence, the key to generating AEs is finding the direction of where AEs exist. As shown in Figure 2a, when it is along the path of X, the added perturbations are the smallest.

Meanwhile, for different clean samples, the added perturbations of generating AEs are different. When a specific perturbation is fixed, different clean samples will obtain different perturbed examples after adding those perturbations. As shown in Figure 2b, the blue boundary and the yellow boundary are the different classification boundaries of two different samples, respectively. The perturbed examples acquired by adding the same perturbations are within the yellow boundary but outside the blue border. Therefore, they are the AEs of the blue boundary constraint samples which can be correctly classified by the yellow boundary constraints. Thus, the adversarial example needs to be researched for a specific sample.

Therefore, according to the analysis of the existence of AEs, we have the following conclusions. In order to generate practical AEs, the added perturbations quantity needs to meet the requirement and it needs to be larger than the classification boundary in this direction. At the same time, due to the limitation of invisibility of the AEs, it should be as small as possible. Thus, the generation direction of the AEs should be closer to the direction of minimum AEs.

According to Theorem 1, searching for the minimum AEs of sample is an NPC problem. In this paper, we try to generate the minimum AEs under a numerical approximation as Definition 6.

According to Figure 2a, in order to generate an effective adversarial example, the perturbations should be larger than the lower bound and the perturbations needed to cross the boundary of the classifier. When fixing , it defines a ball with a center of and radius . As shown in Figure 2a, the points on the ball are not all AEs. Using the + method is the same as selecting a random direction to generate perturbed examples that are highly unlikely to be adversarial. Therefore, it is necessary to calculate the direction of adding and make . is the direct tensor of effective AEs.

4.2. Model of Constraint

We model the problem of generating the approximation of minimum AEs. For a neural network F, the input distribution , a point and given the approximation of minimum adversarial perturbations , the problem of generating the approximation of the minimum adversarial example can be modeled as

According to the analysis of the existence of AEs and Theorem 2, when the added adversarial perturbations , AEs certainly exist and the model must have a solution.

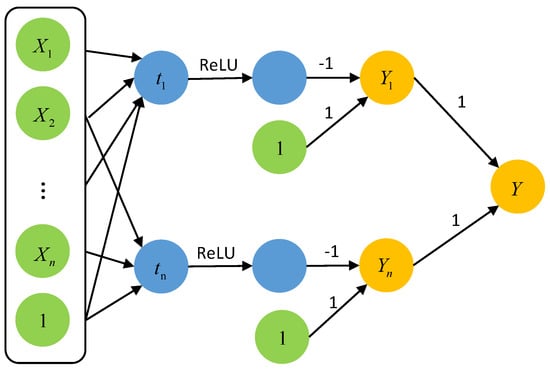

4.3. Framework of AE Generation under Constraint

According to Definition 6, we transform the problem of calculating the approximation of minimum adversarial example into searching for the direct tensor .

For a neural network F, input distribution , a point and given the approximation of minimum adversarial perturbations , according to Definition 5, the approximation of the minimum adversarial example is , and , which means:

This model must have solutions, and we can consider a special solution. We set one element of as 1 and the others are 0, fulfilling Equation (10). When the clean input is an image, it means modifying one channel of one pixel of the image, as proposed in [15]. However, this attack only has a success rate on VGG-16 [30] of cifar-10 [29]. Furthermore, the perturbations of this pixel are too large to be set as .

It is difficult to directly calculate ; thus, to solve Equation (10), we decompose into the two tensors and , and each element of and are defined as and , respectively. The n-order tensor determines the location of the added perturbations and the importance of the target label while the n-order tensor determines the size of the added perturbations, that is, the percentage of the total perturbations.

According to Equation (10), we obtain the following derivation:

Therefore:

However, in Equation (13), the two tensors are all unknown and all of them have n elements, so it is a multivariate n-order equation and still unsolvable. Although it is unsolvable, we can certify it as a trivial solution. We can certify when:

Equation (14) is workable. The proof is shown as follows.

Proof.

□

We only need to search for one solution of the model (9). That is, we only need to generate one approximation of a minimum adversarial example corresponding to the requirements (9). The trivial solution Equation (14) is therefore the result.

Therefore, the problem of generating the approximation of minimum AEs is transformed into generating the tensor by Definition 6 and it is then transformed into calculating the two tensors by Equation (13). Moreover, it is finally transformed into calculating the tensor . However, it is still an unsolvable question. Although the only thing we need to do is calculate the tensor , it is an n-order tensor in the real world so that there are n elements that remain unknown and need to be calculated. According to Equation (13), when tensor is known, the problem of solving the multivariate n-order equation is turned into a multivariate 1-order equation. If we want to solve the multivariate 1-order equation, we need n equations. However, we only have one equation, which is Equation (13). Therefore, this paper proposes the solution framework for generating the approximation of minimum AEs and a heuristic method to solve the problem.

4.4. Method of Generating Controllable AEs under Constraint

According to the definition of the AEs, we decompose the tensor into , . Each element of and are defined as and , respectively.

Because the N-order tensor determines the position of adding perturbations and the importance of the position to the target label, it contains two factors that restrict the value of the AEs. One is to improve the invisibility of the AEs so that added perturbations should be insensitive to human eyes. Another is to improve the effectiveness of the AEs so that the added perturbations should be able to push the sample away from the original classification boundary (in the case of non-target attack) or close to the target classification boundary (in the case of target attack. Obtaining a balance between the two factors is a key problem in the study of AEs. Therefore, we decompose the into and .

Importantly, is the tensor to determine the effectiveness of AEs and is the tensor to determine the invisibility of AEs. According to Equation (13), we have:

Therefore, the perturbations added on each element are:

According to the above analysis, we transform the approximation of minimum AEs generation into calculating the and .

4.4.1. Calculating

According to the analysis of Equation (5), when is lower than , the input X is an adversarial example. This means that the upper bound of the network under the original label of input X is lower than the lower bound of the network under other labels. Therefore, we let:

In the initial update step, the perturbed examples are not in the shadow space so that they are still correctly recognized by the model and . At this time, we need to make the examples as close as possible to reducing the , so the update direction is opposite to the gradient. When the value is less than 0, the absolute value of the needs to be larger, but the real value still needs to decrease so that the update direction remains the opposite of the gradient direction.

4.4.2. Calculating

According to the definition of , is the tensor to determine the invisibility of AEs. DCT transformation [31] can transform the data from host space to frequency domain space, and the data in the time-domain or space-domain can be transformed into a frequency-domain that is easy to analyze and process. When data are image data, after transformation, much crucial visual information about the images is concentrated in a small part of the coefficient of DCT transformation. The high-frequency signal corresponds to the non-smooth region in the image, while the low-frequency signal corresponds to the smoother region in the image.

According to the human visual system (HVS) [17], (1) human eyes are more sensitive to the noise of the smooth area of the image than the noise of the non-smooth area or the texture area; (2) human eyes are more sensitive to the edge information of the image and the information is easily affected by external noise.

Therefore, according to the definition of DCT, we can distinguish the features of each region of the image and selectively add perturbations. Given that the N-order tensor input data can be seen as a superposition of two-order tensor :

and .

In this paper, according to the definition of the tensor of :

Above all, we give the algorithm that generates the approximation of minimum AEs under the Lp constraint in Algorithm 1.

| Algorithm 1: Algorithm of the generating approximation of minimum AEs under Lp constraint |

| Input: a point approximation of minimum adversarial perturbations , a neural network F, the non-trivial lower bounds of the minimum adversarial perturbations of |

| Input: Parameters: number of iterations n, α |

Output:

|

5. AEs Generation under SSIM Constraint

We model the problem of generating AEs under [17] measure metrics as follows. We use to replace the D measure metrics in Equation (8). For a neural network F, input distribution , a point , the problem of generating controllable AEs of can be modeled as

According to the definition of the similarity measurement , for gray-scale images as

where defines the luminance, defines the contrast comparison function, and defines the structure comparison function. Furthermore, define the mean value of inputs , respectively, define the standard deviation of , respectively, and is the covariance between x and y. and are constants. According to [17], when setting and , Equation (24) can be simplified as,

Furthermore, according to Lagrangian constraint, we formulate Equation (26) as

where is the Lagrangian valuable, t is the one-hot tensor of the target label and is the cross-entropy loss function as shown in Equation (27).

Cross-entropy can measure the difference between two different probability distributions in the same random variable. In machine learning, it is expressed as the difference between the target probability distribution t and the predicted probability distribution .

6. Experimental Results and Discussion

6.1. Experimental Setting

Dataset: In this work, we evaluate our methods under two widely used datasets. MNIST is a handwriting digit recognition dataset from 0 to 9, including 70,000 gray images and 60,000 for training and 10,000 for testing. CIFAR-10 [32] has 60,000 images of ten classes, including airplane, automobile, bird, cat, deer, dog, frog, horse, ship and truck.

Threat model: In our paper, we generate the AEs of trained threat models. Due to limited computational resources, we train a feed-forward network with p layers and q neurons per layer. For all the networks, we use the ReLU activation function. We denote the networks as . For the MNIST dataset, we train the network as a threat model. For the CIFAR-10 dataset, we train , and as threat models.

Baseline attack: For comparing our method with other adversarial attacks, we generate AEs by different attack methods. Our method can adapt to different constraint measurements. In this part, due to limited computational resources, we adopt the -constrained measurement. Therefore, we use other the -constrained attack methods as the baseline, including SA- [10], FGSM- [11], BIM- [33], PGD- [14] and DF- [6]. We compare the performance of those attacks with our method under different constraint.

6.2. Evaluation Results

6.2.1. Results of Attack Ability

We calculate the success rates of the attacks to compare the attack ability. Due to the uncontrollable ability of the perturbations of other baseline attack, we first set the as , and for the MNIST dataset and 20, 25, 30 and 37 for the CIFAR-10 dataset, and we obtain the average perturbations of the baseline attacks under the constraint, as shown in Table 1 and Table 2, and then we use their average perturbations as the of our method under the same constraint and make a comparison of the success rates.

Table 1.

Table of the average perturbations of different attack methods under MNIST. We compare our method with PGD-, FGSM-, BIM- attacks. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer.

Table 2.

Table of the average perturbations of different attack methods under CIFAR. We compare our method with PGD-, FGSM-, BIM- attacks. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer.

The criteria for selecting the values for the baseline for each dataset is that the value is sufficiently adequate for the baseline attack. This means that under that value, the baseline attack will not jump out of the circulation of attack in advance due to an excessively large value, which leads to the measured average perturbations not having enough correlation with that value. Meanwhile, that value will not lead to the low success rate of the baseline attack due to it being too small. Specifically, because the baseline attack cannot control the average perturbations, we first take the way of binary search that the range is and the value interval is five and test the attack success rate and average perturbations of the baseline attack under different values. We then remove the points where either the difference between the average perturbations and that value is too large or the success rate is too low, that is, the points where that value overflows or is insufficient.

Due to the same average perturbations of the PGD- and BIM- attacks, we show their results in one table, namely Table 3 for MNIST and Table 4 for CIFAR. Furthermore, the comparison of the FGSM- attack and our method is shown in Table 5 for MNIST and Table 6 for CIFAR. As the four tables show, under the same constraint, our attack has a better attacking performance than other PGD-(), BIM-() and FGSM-() attacks.

Table 3.

Table of the success rate of PGD-, BIM- and our attacks under MNIST. We denote the feed-forward networks as whilst p denotes the number of layers and q is the number of neurons per layer.

Table 4.

Table of the success rate of PGD-, BIM- and our attacks under CIFAR. We compare our method with PGD-, FGSM-, BIM- attacks. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer.

Table 5.

Table of the success rate of FGSM- and our attacks under MNIST. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer.

Table 6.

Table of the success rate of PGD-, BIM- and our attacks under CIFAR. We compare our method with PGD-, FGSM-, BIM- attacks. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer.

In addition to the attacks that have a fixed , we also compare the attacks without a value to constrain the perturbations including the SA- and DF attacks. We also calculate the average perturbations of those attacks. Furthermore, then we use the same average perturbations as the of our method and make a comparison in Table 7 and Table 8. For MNIST, our method has a better performance than the DF and SA attacks.

Table 7.

Table of the success rate of different attack methods under MNIST. We compare our method with SA- and DF attacks. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer.

Table 8.

Table of the success rate of different attack methods under CIFAR. We compare our method with SA- and DF attacks. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer.

In addition to the above two small-sized datasets, the experiment also evaluates the performance of the algorithm on the larger and more complex dataset that is TinyImagenet. The dataset has 200 classes, each class has 500 pictures and we extract 200 pictures as the experimental data. For this dataset, we select the CNN model with seven layers that is denoted by ’CNN-7layer’ [34] as the threat model. Furthermore, we set the as and . The experiment first measures the average perturbation of the baseline attack under the selected . Furthermore, it then sets the average perturbation as the to compare the success rate of our algorithm and the baseline attack under that same value. The average perturbation of the baseline attack is shown in Table 9 and the comparison of the attack ability is shown in Table 10. As shown in Table 10, our algorithm has a better performance than the FGSM attack under the same .

Table 9.

Table of the average perturbations of different attack methods under TinyImagenet. We compare our method with FGSM- attack. We use the feed-forward network cnn-7layer as the target model.

Table 10.

Table of the success rate of FGSM- and our attacks under TinyImagenet. We use the feed-forward network cnn-7layer as the target model.

Furthermore, we also evaluate the attack ability of our algorithm on more complex models. We select Wide-ResNet, ResNeXt, and DenseNet as the target models and train them under the CIFAR dataset. The detail is the same as in [34]. The benchmark values we selected are and . Similarly, we first calculate the average perturbations of the baseline attack under that values. Then, we evaluate the results of the success rate of our algorithm and the baseline attack under the same of our algorithm which is the same as the average perturbations calculated beforehand. Table 11 shows the average perturbations. We make a comparison of the attack ability in Table 12 and Due to Table 12, we find that under the benchmark values and , our algorithm performs better than the FGSM attack. However, under the , in the Wide-ResNet and ResNeXt, the FGSM attack performs better.

Table 11.

Table of the average perturbations of different attack methods under Cifar. We compare our method with FGSM- attack. We use the feed-forward networks Wide-ResNet, ResNeXt and DenseNet as the target models.

Table 12.

Table of the success rate of FGSM- and our attacks under Cifar. We use the feed-forward networks Wide-ResNet, ResNeXt and DenseNet as the target models.

6.2.2. Results of Constraint under Different

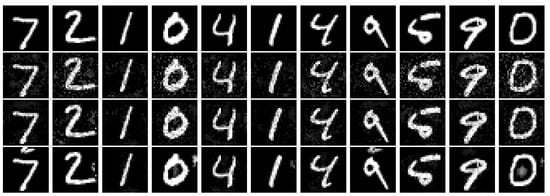

In this part, we evaluate our method described in Section 5. Due to there being no work devised for the same purpose as our method, we only show the results of our method without any comparison with others. We show the controllable ability under the constraint of our method and record its success rate in Table 13. We also show the adversarial images under different constraints in Figure 3.

Table 13.

Table of the controllable ability and attack ability of our method under the constraint. The perturbation coefficient of the attack is marked in brackets as SSIM-. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer.

Figure 3.

Figure of the images of the AEs generated under the constraint with different . The first line is the clean images and the second line shows the adversarial images under constraint. The third line is the adversarial images under constraint. The last line is the adversarial images under constraint.

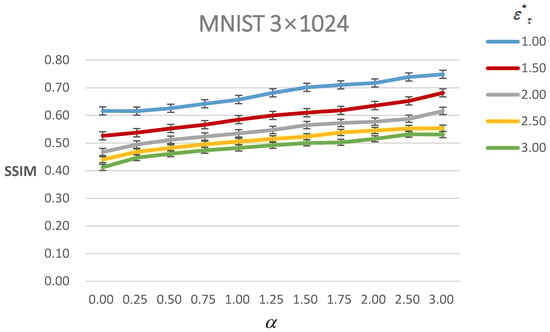

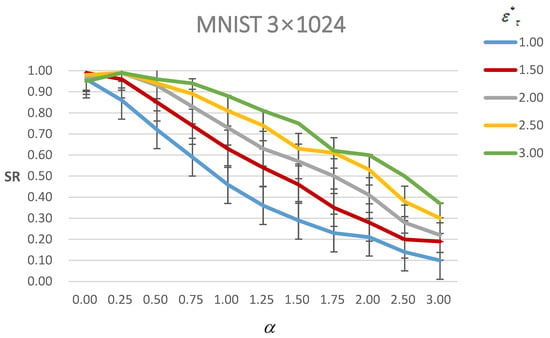

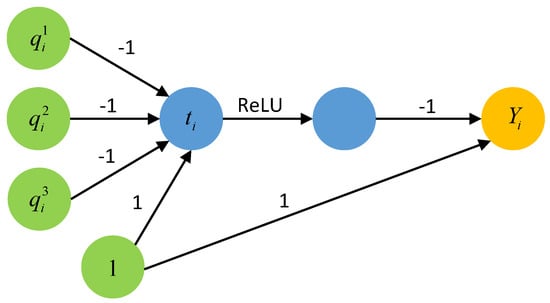

6.2.3. Results of under Constraint

In this section, we discuss the results of our method under with the constraint. Through the different , we can not only generate the controllable AEs but also improve the perceptual visual quality under the same constraint. When the under the constraint is large, the perceptual visual quality is poor. In order to adapt to this situation, our paper devises to improve the perceptual visual quality. However, there is a trade-off between the visual quality of the AEs and their success rate. Figure 4 shows the SSIM value of AEs under different with different . As it shows, the SSIM value increases with the increasing under the same constraint. Furthermore, we can see that with the increasing , the SSIM value has a trend of decreasing under the same . This means that the visual quality becomes poorer when more perturbations are added to the inputs, which is in line with the intuition of the AEs. Meanwhile, the SSIM value rises rapidly before under the same constraint; after that, its trend tends to be flatter.

Figure 4.

Figure of the SSIM value of AEs of MNIST under different with different . The line with different color means different .

Figure 5 shows the success rate of AEs under different with different . As it shows, the success rate decreases with the increasing under the same constraint. Moreover, with the increasing , the success rate increases under the same constraint. It is also consistent with the general nature of the AEs that when more perturbations are added, the probability of a successful attack becomes greater. Furthermore, the still tends to be a boundary that before , the success rate decreases slower and then it decreases faster when and . However, it has a nearly consistent trend of decreasing with , and . It means that when the perturbations remain small, excessive attention to visual quality will lead to a greater loss of attack success rate. Therefore, it corresponds to the actual meaning of the parameter that only needs to be set to when is large. We set and compare the results between and in Table 14.

Figure 5.

Figure of the success rate (SR) of AEs of MNIST under different with different . The line with a different color means different .

Table 14.

Table for the AEs under and of the constraint. We denote the feed-forward networks as and p denotes the number of layers and q is the number of neurons per layer. The , , and denote the average perturbations, the success rate, the SSIM value between the original image and adversarial image and the time taken to generate AEs, respectively.

Figure 6 shows the time of generating AEs under different with different . As it shows, the time decreases with the increasing under the same constraint. Moreover, with the increasing, the time increases under the same constraint.

Figure 6.

Figure of time of generating AEs of MNIST under different with different . The line with a different color means different .

7. Conclusions

Aiming at the two fundamental problems of generating the minimum AEs, we first define the concept of the minimum AEs and prove that generating the minimum AEs is an NPC problem. Based on this conclusion, we then establish a new third kind of optimization model that takes the successful attack as the target and the adversarial perturbations equal the lower bound of the minimum adversarial distortion plus a controllable approximation. This model generates the controllable approximation of the minimum AEs. We give a heuristic solution method of that model. From the theoretical analysis and experimental verification, our model’s AEs have a better attack ability and can generate more accurate and controllable AEs to adapt to different environmental settings. However, the method in this paper of the model does not perfectly determine the solution of the model, which will be the focus of future research.

Author Contributions

Supervision, F.L. and X.Y.; Writing—review & editing, Z.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Proof of NPC of Calculating the Minimum Adversarial Perturbations

Definition A1.

(3-SAT). Given a finite set of Boolean variables , , each variable takes 0 or 1, and a set of clauses , , ; each is a disjunctive normal form composed of three variables, that is, . Question: given a Boolean variable set X and clause set C, whether there is a true value assignment so that C is true and then each clause is true.

Theorem A1.

Given a neural network F, a distribution , and a distance measurement between X and , a point , searching for a minimum adversarial example of is an NPC problem.

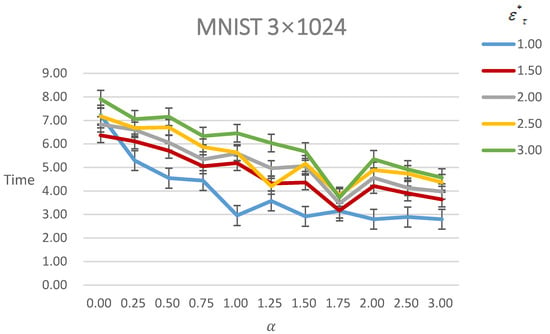

Proof.

We now prove Theorem A1. We first reduce the problem into a decision problem, and then according to the definition of an NPC problem, we prove that the problem belongs to the type of NP problem, and finally, we prove that a known NPC problem can be reduced to the decision problem in polynomial time.

We first reduce the problem of finding the minimum AEs into a series of decision problems. Though many important problems are not decision problems when they appear in the most natural form, they can be reduced to a series of decision problems that are easier to study, for example, the coloring problem of a graph. When coloring the vertices of a graph, we need at least n colors to make any two adjacent vertices have different colors. Then, it can be transformed into another question. Can we color the vertices of the graph with no more than m colors, ? The first m value in the set that makes the problem solvable is the optimal solution of the coloring problem. Similarly, we can also transform the optimization problem of finding the minimum AEs into the following series of decision problems: given the precision of perturbations , and initial perturbations , , whether we can use the perturbations , to make the inequality true, and the first value in the sequence that makes the inequality true is the optimal solution of the optimization problem.

The decision problem is reduced and formalized as follows. For the neural network attribute, , is the mapping from the AEs generated by the AEs generation function to label 1, that is, . is the mapping from the AEs generated by the AEs generation function to , that is, . When , its value is 1. When , its value is 0. When there is an assignment , , is the output of the neural network and determines whether the value of the attribute is true.

Obviously, it is an problem. In the guessing stage, given any perturbations , assuming the is a candidate solution of the decision problem. Furthermore, then in the verification stage, since the process of inputting perturbations and samples to the neural network and then outputting the results can be completed in polynomial time, it is polynomial in the verification stage. Therefore, the solution to the decision problem is an uncertain polynomial algorithm. Furthermore, according to the definition of the problem, the decision problem is an problem.

Finally, we prove that any problem in can be reduced to the decision problem in polynomial time. Due to the transitivity of polynomial simplification, we can prove a known problem: the problem can be transformed into the decision problem in polynomial time and then complete this proof.

Since the problem is an problem, according to the definition of an problem—that is, any problem in the set of problems that can be reduced to an problem in polynomial time—if the problem can be reduced to the aforementioned decision problem of searching for the AEs, according to transitivity, any problem in can be reduced to that decision problem in polynomial time and it can be proven that the decision problem is an -hard problem. We then prove how the problem is Turing reduced to the decision problem.

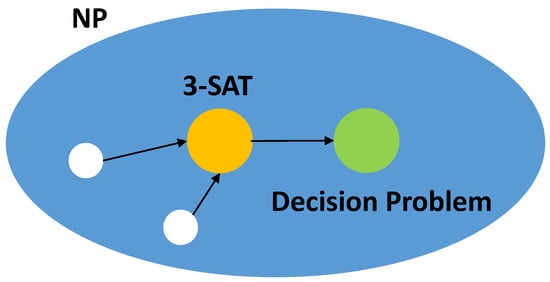

According to the definition of an problem, given the Ternary satisfiability formula on the variable set , each clause is a disjunction of three terms: , where , and are variables from X or their negative values. The problem is turned into that determining whether there is an assignment to satisfy , that is, whether there is an assignment that makes all clauses valid at the same time.

To simplify, we first assume that the input node , and is a sub-statement constructed when the discrete value is 0 or 1. Then, we will explain how to relax this restriction so that the only restriction on the input nodes is that they are in the range of .

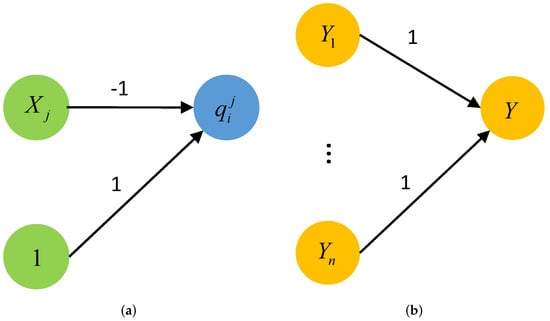

Firstly, we introduce the disjunctive tool, that is, given nodes and the output node is . When , , otherwise . The following Figure A2 shows the situation when is the variable itself (that is, it is not the negative value of the variable).

The disjunctive tool can be seen as the process of calculating Equation (A1):

If it has one variable of input which is at least 1, then . If all the variables of input are 0, then . The key of the tool is that the function can ensure that the output remains exactly 1 even if multiple inputs are set to 1.

For processing any negative item , we introduce a negative tool before inputting the negative item into the disjunctive tool, as shown in Figure A3a.

The tool that calculates and then continues to calculate is the aforementioned disjunctive tool. The last step involves a conjunction widget, as shown in Figure A3b.

Assuming that all nodes are in the range of , we require node Y in the range of . Obviously, this requirement only holds if all nodes are 1.

Lastly, in order to check if all the clauses are satisfied at the same time, we construct a conjunction gadget(using the negative value tool as input as needed) and combine it with a conjunction gadget, as shown in Figure A4.

The input variable is mapped to each node according to the definition of clause , that is, . According the above discussion, if the clause is satisfied, then ; otherwise, . Therefore, the node Y is the range of if and only if all the clauses are satisfied at the same time. Thus, an assignment of input satisfies the constraint between the input and the output of neural networks if and only if that assignment also satisfies the original item .

The above construction is based on the assumption that the input node takes values from discrete values , that is, . However, it does not accord with the assumption that is the conjunction of linear constraints. We will then prove how to relax the restriction to make the original proposition true.

Letting is a very small number. We suppose that each variable is in the range of but ensure that any feasible solution satisfies or . We add an auxiliary gadget to each input variable , that is, using the function node to calculate Equation (A2) as follows:

Furthermore, the output node of Equation (A2) is required to be within the range . This expression can directly indicate that when or , it is true for .

The disjunctive expression in our construction is Equation (A1). The value of its disjunctive expression changes with the inputs. If all inputs are in or , then at least one input is in and then the end output node of each disjunctive gadget will no longer use discrete values but will be in .

If at least one node of each input clause is in the range , then all nodes will be in and Y will be in . However, if at least one clause does not have a node in the range , Y will be less than (when ). Therefore, keeping the requirements true, if and only if is satisfied, its input and output will be satisfied, and the satisfied assignment can be constructed by making each and each . □

Figure A1.

Figure for the transitivity of polynomial simplification.

Figure A2.

Figure for the disjunctive gadget when are variables.

Figure A3.

Figure for negative disjunction and conjunction gadgets. (a) Figure representing a negative disjunction gadget. (b) Figure representing a conjunction gadget.

Figure A4.

Figure for the 3-SAT-DNN conjunction gadget.

Appendix A.2. Analysis of SSIM Constraint Method

We also try to directly calculate the adversarial perturbations as the constraint of our method. However, we find that it is difficult to perform the same operation under the constraint. The analysis is as follows. According to Equation (25) and substituting its inputs x and y as and , respectively, Equation (25) can be seen as the product of two parts [35]. That is:

where:

Therefore, the can be divided into the f function of and and the g function of and . In order to solve the condition of Equation (23), i.e., the product of the two functions needs to be a constant, we try to transform prime factorization to decompose d into the product of two values. Furthermore, the input is given so a set of prime factorization can be seen as solving a that meets the criteria of Equation (A3).

However, the solutions of the criteria of Equation (23) are not certain whether they are the AEs of the model F. Moreover, the solution of prime factorization is limited and it is a small set that meets the constraints, so it is more difficult to find AEs in that smaller set.

Appendix A.3. The Definition of the Lower and Upper Bound of a Network

We recall this definition from [23] as follows:

Definition A2.

(lower bound , upper bound ). Given a neural network F, a distribution , a point , a label y, and the output of the network under y label , we say that and are the lower bound and the upper bound of the network F under the label y such that .

According to Definition A2, we give a further explanation of Equation (5). Give a point and a perturbation when inputting the perturbed point X of under , if the upper bound of the network under the original label y is lower than the lower bound of the network under the label , meaning that the output so that X is an adversarial example.

References

- Eykholt, K.; Evtimov, I.; Fernandes, E.; Li, B.; Rahmati, A.; Xiao, C.; Prakash, A.; Kohno, T.; Song, D. Robust Physical-World Attacks on Deep Learning Models. arXiv 2017, arXiv:1707.08945. [Google Scholar]

- Liu, A.; Wang, J.; Liu, X.; Cao, B.; Zhang, C.; Yu, H. Bias-Based Universal Adversarial Patch Attack for Automatic Check-Out. ECCV 2020, 12358, 395–410. [Google Scholar] [CrossRef]

- Bontrager, P.; Roy, A.; Togelius, J.; Memon, N.; Ross, A. DeepMasterPrints: Generating masterprints for dictionary attacks via latent variable evolution. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems, BTAS 2018, Redondo Beach, CA, USA, 22–25 October 2018. [Google Scholar] [CrossRef] [Green Version]

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199. [Google Scholar]

- Carlini, N.; Wagner, D. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the IEEE Symposium on Security and Privacy, San Jose, CA, USA, 22–26 May 2016; pp. 39–57. [Google Scholar] [CrossRef] [Green Version]

- Moosavi-Dezfooli, S.M.M.; Fawzi, A.; Frossard, P. DeepFool: A simple and accurate method to fool deep neural networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2574–2582. [Google Scholar] [CrossRef] [Green Version]

- Carlini, N.; Wagner, D. Adversarial examples are not easily detected: Bypassing ten detection methods. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, Co-Located with CCS 2017, AISec 2017, Dallas, TX, USA, 3 November 2017; pp. 3–14. [Google Scholar] [CrossRef]

- Moosavi-Dezfooli, S.M.; Fawzi, A.; Fawzi, O.; Frossard, P. Universal adversarial perturbations. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 86–94. [Google Scholar] [CrossRef] [Green Version]

- Athalye, A.; Carlini, N.; Wagner, D. Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholm, Sweden, 10–15 July 2018; Volume 1, pp. 436–448. [Google Scholar]

- Andriushchenko, M.; Croce, F.; Flammarion, N.; Hein, M. Square Attack: A Query-Efficient Black-Box Adversarial Attack via Random Search. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2020; Volume 12368, pp. 484–501. [Google Scholar] [CrossRef]

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; pp. 1–11. [Google Scholar]

- Papernot, N.; Mcdaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The limitations of deep learning in adversarial settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy, EURO S and P 2016, Hong Kong, China, 21–24 March 2016; pp. 372–387. [Google Scholar] [CrossRef] [Green Version]

- Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017 - Workshop Track Proceedings, Toulon, France, 24–26 April 2017; pp. 1–14. [Google Scholar]

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. arXiv 2017, arXiv:1706.06083. [Google Scholar]

- Su, J.; Vargas, D.V.; Sakurai, K. One Pixel Attack for Fooling Deep Neural Networks. IEEE Trans. Evol. Comput. 2019, 23, 828–841. [Google Scholar] [CrossRef] [Green Version]

- Hameed, M.Z.; Gyorgy, A. Perceptually Constrained Adversarial Attacks. arXiv 2021, arXiv:2102.07140. [Google Scholar]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gragnaniello, D.; Marra, F.; Verdoliva, L.; Poggi, G. Perceptual quality-preserving black-box attack against deep learning image classifiers. Pattern Recognit. Lett. 2021, 147, 142–149. [Google Scholar] [CrossRef]

- Zhao, Z.; Liu, Z.; Larson, M. Towards Large Yet Imperceptible Adversarial Image Perturbations with Perceptual Color Distance. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1036–1045. [Google Scholar] [CrossRef]

- Weng, T.W.; Zhang, H.; Chen, P.Y.; Yi, J.; Su, D.; Gao, Y.; Hsieh, C.J.; Daniel, L. Evaluating the Robustness of Neural Networks: An Extreme Value Theory Approach. In Proceedings of the 6th International Conference on Learning Representations ICLR, Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Weng, T.w.; Zhang, H.; Chen, P.y.; Lozano, A.; Hsieh, C.j.; Daniel, L. On Extensions of CLEVER: A Neural Network Robustness Evaluation Algorithm. arXiv 2018, arXiv:1810.08640. [Google Scholar]

- Weng, T.W.; Zhang, H.; Chen, H.; Song, Z.; Hsieh, C.J.; Boning, D.; Dhillon, I.S.; Daniel, L. Towards fast computation of certified robustness for relu networks. arXiv 2018, arXiv:1804.09699v4. [Google Scholar]

- Zhang, H.; Weng, T.w.; Chen, P.y.; Hsieh, C.j.; Daniel, L. Efficient Neural Network Robustness Certification with General Activation Function. arXiv 2018, arXiv:1811.00866v1. [Google Scholar]

- Boopathy, A.; Weng, T.W.; Chen, P.Y.; Liu, S.; Daniel, L. CNN-Cert: An efficient framework for certifying robustness of convolutional neural networks. In Proceedings of the the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19), Honolulu, HI, USA, 27 January–1 February 2019; pp. 3240–3247. [Google Scholar] [CrossRef] [Green Version]

- Sinha, A.; Namkoong, H.; Duchi, J. Certifying some distributional robustness with principled adversarial training. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018—Conference Track Proceedings, Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–49. [Google Scholar]

- Kolda, T.G.; Bader, B.W. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500. [Google Scholar] [CrossRef]

- Eykholt, K.; Evtimov, I.; Fernandes, E.; Li, B.; Rahmati, A.; Tramèr, F.; Prakash, A.; Kohno, T.; Song, D. Physical adversarial examples for object detectors. In Proceedings of the 12th USENIX Workshop on Offensive Technologies, WOOT 2018, co-located with USENIX Security 2018, Baltimore, MD, USA, 13–14 August 2018. [Google Scholar]

- Haykin, S.; Kosko, B. GradientBased Learning Applied to Document Recognition. Intell. Signal Process. 2010, 306–351. [Google Scholar] [CrossRef]

- CIFAR-10—Object Recognition in Images@Kaggle. Available online: https://www.kaggle.com/c/cifar-10 (accessed on 20 January 2022).

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Ahmed, N.; Natarajan, T.; Rao, K.R. Discrete Cosine Transform. IEEE Trans. Comput. 1974, C-23, 90–93. [Google Scholar] [CrossRef]

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images; Science Department, University of Toronto: Toronto, ON, Canada, 2009; pp. 1–60. [Google Scholar]

- Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial machine learning at scale. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017- Conference Track Proceedings, Toulon, France, 24–26 April 2017. [Google Scholar]

- Xu, K.; Shi, Z.; Zhang, H.; Wang, Y.; Chang, K.W.; Huang, M.; Kailkhura, B.; Lin, X.; Hsieh, C.J. Automatic perturbation analysis for scalable certified robustness and beyond. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, BC, Canada, 6–12 December 2020. [Google Scholar]

- Brunet, D.; Vrscay, E.R.; Wang, Z. On the mathematical properties of the structural similarity index. IEEE Trans. Image Process. 2012, 21, 1488–1495. [Google Scholar] [CrossRef] [PubMed]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).