Abstract

In this work, we define cumulative residual q-Fisher (CRQF) information measures for the survival function (SF) of the underlying random variables as well as for the model parameter. We also propose the q-hazard rate (QHR) function, via the q-logarithmic function, as a new extension of the hazard rate function. We show that the CRQF information measure can be expressed in terms of the QHR function. We further define generalized cumulative residual χ² divergence measures between two SFs. We then examine the cumulative residual q-Fisher information for two well-known mixture models, and the corresponding results reveal some interesting connections between the cumulative residual q-Fisher information and the generalized cumulative residual χ² divergence measures. Further, we define the Jensen-cumulative residual χ² (JCR-χ²) measure and a parametric version of the Jensen-cumulative residual Fisher information measure and then discuss their properties and inter-connections. Finally, for illustrative purposes, we examine a real example of image processing and provide some numerical results in terms of the CRQF information measure.

1. Introduction

Entropy-type and Fisher-type information measures have attracted great attention from researchers in information theory. Although these two types of information measures have different geneses, they are complementary to each other in the study of information resources. Among them, Fisher information and Shannon entropy are the fundamental information measures and have been used very broadly. Systems with complex structure can be thoroughly described in terms of their architecture (Fisher information) and their behavior (Shannon entropy) measures. For more details, one may refer to Cover and Thomas [1] and Zegers [2]. Fisher [3] proposed an information measure for describing the interior properties of a probabilistic model. Shannon entropy originated from the pioneering work of Shannon [4], based on a study of the global behavior of systems modeled by a probability structure. Both Fisher information and Shannon entropy have become fundamental quantities in numerous applied disciplines. These information measures and their extensions have been considered by several researchers in recent years. In addition, some divergence measures, based on Fisher information and Shannon entropy, have been introduced for measuring similarity and dissimilarity between two statistical models. For example, Kullback-Leibler, chi-square, and relative Fisher information divergences and their extensions have been used in this regard. For pertinent details, one may refer to Nielsen and Nock [5], Zegers [2], Popescu et al. [6], Sánchez-Moreno et al. [7], and Bercher [8].

Tsallis entropy (see [9]) is a generalized form of Shannon entropy and has found key applications in the context of non-extensive thermo-statistics. Some generalized forms of Fisher information that match well in the context of non-extensive thermo-statistics have also been introduced by some authors; see Johnson and Vignat [10], Furuichi [11], and Lutwak et al. [12]. One of the common ways of generalizing information measures is through their accumulated forms. This has been done for Shannon entropy, Tsallis entropy, and their related versions.

Let X denote a continuous random variable on the support $\mathcal{X}$ with survival function $\bar{F}_\theta$. The cumulative residual Fisher (CRF) information measure, introduced by Kharazmi and Balakrishnan [13], is then defined as

$$I(\theta) = \int_{\mathcal{X}} \left(\frac{\partial \log \bar{F}_\theta(x)}{\partial \theta}\right)^2 \bar{F}_\theta(x)\,dx, \tag{1}$$

where log stands for the natural logarithm. Throughout this paper, we will suppress $\mathcal{X}$ in the integration with respect to x for ease of notation, unless a distinction becomes necessary. Due to the term $\bar{F}_\theta(x)$ in the integrand in (1), it can be readily seen that the CRF information measure in (1) provides decreasing weights for larger values of X. Hence, this information measure will naturally be robust to the presence of outliers. Kharazmi and Balakrishnan [13] have analogously defined the CRF information measure for the survival function $\bar{F}$ as

$$I(\bar{F}) = \int \left(\frac{\partial \log \bar{F}(x)}{\partial x}\right)^2 \bar{F}(x)\,dx. \tag{2}$$

They also provided an interesting representation for the information measure in (2), based on the hazard function, $r(x) = f(x)/\bar{F}(x)$, as

$$I(\bar{F}) = \int r^2(x)\,\bar{F}(x)\,dx = E[r(X)]. \tag{3}$$

In the present paper, our primary goal is to introduce the cumulative residual q-Fisher (CRQF) information, the cumulative residual generalized χ² (CRG-χ²) divergence, the Jensen-cumulative residual χ² (JCR-χ²) divergence, and a parametric version of the Jensen-cumulative residual Fisher (JCRF) information measure. We then examine some properties of these information measures and their interconnections in terms of two well-known mixture models that are commonly used in reliability, economics, and survival analysis.

The rest of this paper is organized as follows. In Section 2, we briefly describe some key information and entropy measures that are essential for all subsequent developments. Next, in Section 3, we define a cumulative residual q-Fisher (CRQF) information measure and the q-hazard rate function. It is then shown that the CRQF information measure can be expressed via an expectation involving the q-hazard rate (QHR) function under the proportional hazard (PH) model with proportionality parameter q. In Section 4, we propose the cumulative version of a generalized chi-square measure, called the cumulative residual generalized χ² (CRG-χ²) divergence measure. We show that the first derivative of the CRG-χ² measure with respect to the associated parameter is connected to the cumulative residual entropy measure, and when the parameter tends to zero, it is connected to the variance of the ratio of two survival functions. Next, we obtain the CRQF information measure for two well-known mixture models in Section 5. It is shown that the CRQF information measures for the arithmetic mixture and harmonic mixture models are connected to the CRG-χ² divergence measure. In addition, we show that the harmonic mixture model involves optimal information under three optimization problems regarding the cumulative residual chi-square divergence measure. In Section 6, we first define the Jensen-cumulative residual χ² (JCR-χ²) measure and a parametric version of the Jensen-cumulative residual Fisher information measure and then discuss some of their properties. We also show that these two information measures are connected through arithmetic mixture models. In Section 7, we consider a real example of image processing and present some numerical results in terms of the CRQF information measure. Finally, some concluding remarks are made in Section 8.

2. Preliminaries

In this section, we briefly review some information measures that will be used in the sequel. The chi-square divergence between two SFs, called the cumulative residual χ² (CR-χ²) divergence, is defined as

The divergence with the roles of the two SFs interchanged can be defined in an analogous manner. For more details, see Kharazmi and Balakrishnan [13].

For a given continuous random variable X with survival function $\bar{F}$, the cumulative residual entropy (CRE) was defined by Rao et al. [14] as

$$\mathcal{E}(X) = -\int \bar{F}(x)\log \bar{F}(x)\,dx. \tag{5}$$

The relative cumulative residual Fisher (RCRF) information between two absolutely continuous survival functions and is defined as

For given absolutely continuous survival functions , the Jensen-cumulative residual Fisher (JCRF) information measure was defined by Kharazmi and Balakrishnan [13] as

where are non-negative real values with .
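As a quick numerical illustration of two of the measures reviewed above, the following Python sketch evaluates the CRE in (5) and a CR-χ² divergence for two exponential survival functions. The exponential rates, the truncation point `upper`, the helper names, and in particular the form ∫(F̄ − Ḡ)²/Ḡ dx assumed for the divergence in (4) are illustrative assumptions rather than details taken from the text.

```python
import math

def simpson(g, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n is the (even) number of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3.0

def exp_sf(rate):
    """Survival function of an exponential distribution (illustrative choice)."""
    return lambda x: math.exp(-rate * x)

def cre(sf, upper=60.0):
    """Cumulative residual entropy: -int sf(x) * log sf(x) dx, truncated at `upper`."""
    return simpson(lambda x: -sf(x) * math.log(sf(x)), 0.0, upper)

def cr_chi2(sf_f, sf_g, upper=60.0):
    """Assumed CR chi-square form: int (sf_f - sf_g)^2 / sf_g dx."""
    return simpson(lambda x: (sf_f(x) - sf_g(x)) ** 2 / sf_g(x), 0.0, upper)

F, G = exp_sf(1.0), exp_sf(1.5)
print(cre(F))         # exact value for an exponential with rate a is 1/a
print(cr_chi2(F, G))  # exact value is 1/(2a - b) - 2/a + 1/b for rates a, b with 2a > b
```

For exponential survival functions both integrals have closed forms, so the sketch doubles as a check on the quadrature.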

3. CRQF Information Measure

Here, we first define the cumulative residual q-Fisher (CRQF) information measure and the q-hazard rate (QHR) function. We then study some properties of the CRQF information measure and its connection to the QHR function.

The q-Fisher information of a density function f, defined by Lutwak et al. [12], is given by

where $\log_q$ is the q-logarithmic function defined as

For more details, see Furuichi [11], Yamano [15], and Masi [16]. Using this q-logarithmic function, we now propose two cumulative versions of the q-Fisher information in (8).
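To make the role of the q-logarithm concrete, the following minimal Python sketch implements one common convention, log_q(x) = (x^(q−1) − 1)/(q − 1), which reduces to the natural logarithm as q → 1. The choice of this convention (rather than the alternative with exponent 1 − q) is an assumption; it is adopted here because it appears consistent with Lemma 1, where the CRQF information is decreasing in q. The function name `q_log` is hypothetical.

```python
import math

def q_log(x: float, q: float) -> float:
    """q-logarithm under the assumed convention (x**(q-1) - 1) / (q - 1)."""
    if x <= 0:
        raise ValueError("q_log is defined for x > 0 only")
    if abs(q - 1.0) < 1e-12:
        return math.log(x)  # limiting case q -> 1
    return (x ** (q - 1.0) - 1.0) / (q - 1.0)

# Values of q close to 1 should nearly reproduce the natural logarithm.
for q in (0.5, 0.9, 0.999, 1.0, 1.5):
    print(q, q_log(0.3, q), math.log(0.3))
```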

Definition 1.

Let denote the survival function of variable X. Then, the CRQF information about parameter is defined as

Definition 2.

The CRQF information measure for survival function associated with variable X is defined as

Example 1.

Let X have a Weibull distribution with CDF , . Then, the CRQF information measure of variable X, for , is obtained as

where $\Gamma(\cdot)$ is the complete gamma function defined by

$$\Gamma(a) = \int_0^\infty t^{a-1} e^{-t}\,dt, \quad a > 0.$$

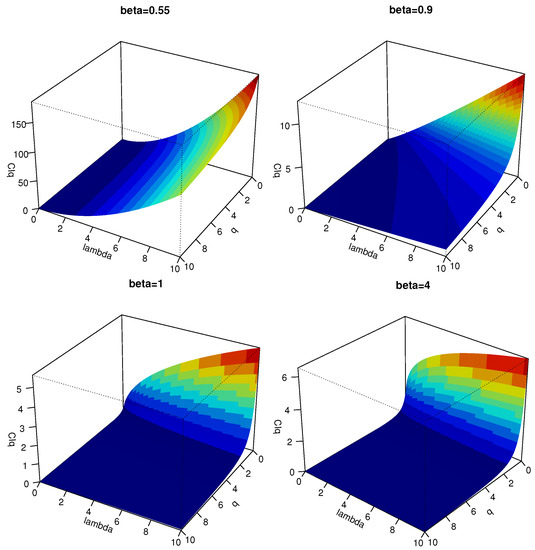

Figure 1 plots the CRQF information measure in (12) for different choices of parameters, from which we observe that gets maximized when q is decreased and the parameter is increased.

Figure 1. 3D plots of the CRQF information measure in (12) for some selected choices of the Weibull parameters.
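The behavior described in Example 1 can be explored numerically. The sketch below is written under several assumptions that are not confirmed by the text: the Weibull survival function is parametrized as S(x) = exp(−(λx)^α), the q-logarithm is log_q(x) = (x^(q−1) − 1)/(q − 1), and the CRQF information of a survival function is ∫(∂ log_q S(x)/∂x)² S(x) dx, which then equals ∫ r(x)² S(x)^(2q−1) dx with r the hazard rate. Under these assumptions, the substitution u = (2q−1)(λx)^α gives the closed form αλΓ(2 − 1/α)/(2q−1)^(2 − 1/α) for q > 1/2 and α > 1/2; this may differ in form from the expression in (12).

```python
import math

def simpson(g, a, b, n=4000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3.0

def crqf_weibull_numeric(alpha, lam, q):
    """Integrate r(x)^2 * S(x)^(2q-1) with S(x) = exp(-(lam*x)^alpha).

    Assumes alpha >= 1 (finite integrand at 0) and q > 1/2 (convergent tail).
    """
    def integrand(x):
        hazard = alpha * lam ** alpha * x ** (alpha - 1.0)
        surv = math.exp(-((lam * x) ** alpha))
        return hazard ** 2 * surv ** (2.0 * q - 1.0)
    # Truncate where the exponential factor is about exp(-50).
    upper = (50.0 / (2.0 * q - 1.0)) ** (1.0 / alpha) / lam
    return simpson(integrand, 0.0, upper)

def crqf_weibull_closed(alpha, lam, q):
    """Closed form from the substitution u = (2q-1)(lam*x)^alpha."""
    s = 2.0 - 1.0 / alpha
    return alpha * lam * math.gamma(s) / (2.0 * q - 1.0) ** s

for q in (0.75, 1.0, 1.5):
    print(q, crqf_weibull_numeric(2.0, 1.0, q), crqf_weibull_closed(2.0, 1.0, q))
```

Under these assumptions the value grows as q decreases toward 1/2 and as λ increases, which agrees qualitatively with the pattern reported for Figure 1.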

q-Hazard Rate Function and Its Connection to CRQF Information Measure

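The hazard rate (HR) function of variable X with survival function $\bar{F}$ and density function f is given by

$$r(x) = \frac{f(x)}{\bar{F}(x)} = -\frac{d}{dx}\log \bar{F}(x). \tag{13}$$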

The HR function is a basic concept in reliability theory; see Barlow and Proschan [17] for elaborate details.

Now, we first propose a new extension of the HR function based on the q-logarithmic function in (9) and then study its connection to the CRQF information measure.

Definition 3.

For a random variable X with an absolutely continuous survival function , the q-hazard rate (QHR) (or q-logarithmic hazard rate) function is defined as

where is the hazard rate function defined in (13).
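A small Python sketch may help to see how a q-hazard rate relates to the ordinary hazard rate. It assumes the convention log_q(x) = (x^(q−1) − 1)/(q − 1) and takes the QHR function to be −d/dx log_q S(x), which then equals S(x)^(q−1) r(x); both choices are assumptions about the form of Definition 3. The exponential survival function and all function names are illustrative.

```python
import math

def q_log(x, q):
    """Assumed q-logarithm convention: (x**(q-1) - 1) / (q - 1)."""
    if abs(q - 1.0) < 1e-12:
        return math.log(x)
    return (x ** (q - 1.0) - 1.0) / (q - 1.0)

rate = 2.0                            # illustrative exponential rate
sf = lambda x: math.exp(-rate * x)    # survival function
hazard = lambda x: rate               # constant hazard of the exponential

def q_hazard(x, q):
    """Assumed QHR form: sf(x)**(q-1) * hazard(x)."""
    return sf(x) ** (q - 1.0) * hazard(x)

def neg_dlogq(x, q, eps=1e-6):
    """Central-difference approximation of -d/dx log_q sf(x)."""
    return -(q_log(sf(x + eps), q) - q_log(sf(x - eps), q)) / (2.0 * eps)

for q in (0.5, 1.0, 2.0):
    print(q, q_hazard(0.4, q), neg_dlogq(0.4, q))
```

For q = 1 the two columns reduce to the usual hazard rate in (13).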

Theorem 1.

Let variable X have its absolutely continuous survival function and q-hazard rate function for . Then, for , we have

where has proportional hazard model corresponding to baseline variable X with proportionality parameter q.

Proof.

From the definition of in (11), we get

as required. □
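The kind of representation stated in Theorem 1 can be checked numerically. The sketch below assumes the convention log_q(x) = (x^(q−1) − 1)/(q − 1), the QHR form r_q(x) = S(x)^(q−1) r(x), and a proportional hazard variable X_q with survival function S(x)^q. Under these assumptions one obtains I_q(S) = ∫ r(x)² S(x)^(2q−1) dx = (1/q) E[r_q(X_q)]; whether this coincides exactly with the displayed statement of Theorem 1 is itself an assumption. The exponential baseline is an illustrative choice.

```python
import math

def simpson(g, a, b, n=4000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3.0

def both_sides(rate, q):
    """Return (I_q, E[r_q(X_q)] / q) for an exponential baseline; requires q > 1/2."""
    sf = lambda x: math.exp(-rate * x)
    upper = 60.0 / ((2.0 * q - 1.0) * rate)  # integrand is about exp(-60) here

    # Left side: int r^2 * S^(2q-1) dx with constant hazard r = rate.
    lhs = simpson(lambda x: rate ** 2 * sf(x) ** (2.0 * q - 1.0), 0.0, upper)

    # Right side: X_q has density q * S^(q-1) * f, where f = rate * S.
    def weighted_qhr(x):
        r_q = sf(x) ** (q - 1.0) * rate
        density_q = q * sf(x) ** (q - 1.0) * rate * sf(x)
        return r_q * density_q
    rhs = simpson(weighted_qhr, 0.0, upper) / q
    return lhs, rhs

for q in (0.8, 1.0, 2.0):
    print(q, both_sides(2.0, q), 2.0 / (2.0 * q - 1.0))
```

For the exponential baseline both sides equal rate/(2q − 1), which is printed in the last column for comparison.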

Lemma 1.

The information measure is decreasing with respect to .

Proof.

From the definition of in (11), upon making use of Theorem 1, for each , we have

as required. □

Theorem 2.

Let the non-negative random variable X have survival function , CRF information , and CRQF information . Then, we have:

- (i) If , then ;

- (ii) If , then ,

with equality holding if and only if .

Proof.

From the definition of CRQF information measure in (11), and since for , we have

which proves Part (i). Part (ii) can be proved in a similar manner. □

4. Generalized Cumulative Residual χ² Divergence Measures

In this section, we first define a cumulative form of a generalized chi-square divergence measure and then examine some of its properties. A generalized version of the χ² divergence between two densities f and g, for , considered by Basu et al. [18], is defined as

For more details, see also Ghosh et al. [19].

Definition 4.

The cumulative residual generalized χ² (CRG-χ²) divergence between two survival functions and , for , is defined as

Theorem 3.

Let the variables X and Y have survival functions and , respectively, and be the corresponding CRG-χ² divergence measure between them. Then, we have

where is the CRE information measure defined in (5) and is the cumulative residual inaccuracy measure given by

Proof.

Upon considering the CRG-χ² measure in (16) and differentiating it with respect to , we obtain

as required. □

Theorem 4.

Let the non-negative continuous variables X and Y have survival functions and , respectively, and have a common mean μ. Then,

where and is the equilibrium distribution of variable X.

Proof.

From the definition of the CRG-χ² divergence measure in (16) and the facts that , we obtain

as required. □

Theorem 5.

Let the variables X and Y have survival functions and , respectively, and be the corresponding CRG-χ² divergence measure between them. Then, for each , we have

Proof.

From the definition of CRG-χ² in (16) and the fact that for , we have

as required. □

5. Cumulative Residual q-Fisher Information for Two Well-Known Mixture Models

In this section, we study the CRQF information measure for the well-known arithmetic mixture and harmonic mixture distributions.

5.1. Arithmetic Mixture Distribution

The arithmetic mixture distribution based on survival functions and is given by

For more details about the mixture model in (18), see Marshall and Olkin [20]. The CRQF information measure about parameter in (18) is given by

Theorem 6.

The CRQF information measure in (19) is given by

where is power mean with exponent , defined as for positive x and y.

Proof.

From the mixture model in (18), we have

Now, from the definition of the CRQF information measure in (19), we find

Then, from (21), we obtain

as required. □
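The CRQF information about the mixing parameter of an arithmetic mixture can also be approximated numerically. The sketch below assumes the mixture in (18) has the form θ S₁(x) + (1 − θ) S₂(x), the convention log_q(x) = (x^(q−1) − 1)/(q − 1), and the parameter version of the CRQF information ∫(∂ log_q S_θ(x)/∂θ)² S_θ(x) dx; all three are assumptions about equations not reproduced here. The exponential components and function names are illustrative.

```python
import math

def simpson(g, a, b, n=4000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3.0

def q_log(x, q):
    """Assumed q-logarithm convention: (x**(q-1) - 1) / (q - 1)."""
    if abs(q - 1.0) < 1e-12:
        return math.log(x)
    return (x ** (q - 1.0) - 1.0) / (q - 1.0)

sf1 = lambda x: math.exp(-x)         # illustrative component survival functions
sf2 = lambda x: math.exp(-3.0 * x)

def am_sf(x, theta):
    """Assumed arithmetic mixture: theta * sf1 + (1 - theta) * sf2."""
    return theta * sf1(x) + (1.0 - theta) * sf2(x)

def dlogq_dtheta(x, theta, q):
    """d/dtheta of log_q(am_sf): am_sf^(q-2) * (sf1 - sf2)."""
    return am_sf(x, theta) ** (q - 2.0) * (sf1(x) - sf2(x))

def crqf_theta(theta, q, upper=60.0):
    """Assumed CRQF information about theta; the tail converges for q > 1/2."""
    return simpson(lambda x: dlogq_dtheta(x, theta, q) ** 2 * am_sf(x, theta), 0.0, upper)

for q in (0.75, 1.0, 1.5):
    print(q, crqf_theta(0.4, q))
```

For q = 1 the integrand reduces to (S₁ − S₂)²/S_θ, a chi-square-type quantity, which is in line with the connection to divergence measures discussed in this section.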

Theorem 7.

Let the non-negative random variable X have arithmetic mixture survival function in (18) and with CRF information and CRQF information . Then, we have:

- (i) If , then ;

- (ii) If , then ,

with equality holding if and only if .

Proof.

From the definition of CRF information measure, we have

Furthermore, from the definition of the CRQF information measure, we find

which proves Part (i). Part (ii) can be proved in an analogous manner. □

5.2. Harmonic Mixture Distribution

The harmonic mixture (HM) distribution based on survival functions and is given by

For more details about harmonic mixture distributions, one may refer to Schmidt [21]. The CRQF information measure about parameter in (22) is given by

Theorem 8.

and is as defined earlier in Theorem 6.

Proof.

Because , it is readily seen that

Adding the above two inequalities, we readily get

as required. □
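For readers who wish to experiment with the two mixture models of this section, the following Python sketch constructs both mixtures and compares them pointwise. It assumes the arithmetic mixture is θ S₁(x) + (1 − θ) S₂(x) and the harmonic mixture is 1/(θ/S₁(x) + (1 − θ)/S₂(x)); these forms, the exponential components, and the function names are illustrative assumptions.

```python
import math

def arithmetic_mixture(sf1, sf2, theta):
    """Assumed arithmetic mixture of two survival functions."""
    return lambda x: theta * sf1(x) + (1.0 - theta) * sf2(x)

def harmonic_mixture(sf1, sf2, theta):
    """Assumed harmonic mixture: reciprocal of the weighted mean of reciprocals."""
    return lambda x: 1.0 / (theta / sf1(x) + (1.0 - theta) / sf2(x))

sf1 = lambda x: math.exp(-x)         # illustrative component survival functions
sf2 = lambda x: math.exp(-3.0 * x)
am = arithmetic_mixture(sf1, sf2, 0.4)
hm = harmonic_mixture(sf1, sf2, 0.4)

grid = [0.1 * i for i in range(51)]
for x in grid[::10]:
    # The weighted harmonic mean never exceeds the weighted arithmetic mean.
    print(round(x, 1), hm(x), am(x))
```

Both mixtures start at 1 and are non-increasing, so each is again a survival function; the harmonic mixture lies below the arithmetic mixture by the arithmetic mean-harmonic mean inequality.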

5.3. HM Distribution Having Optimal Information under CR-χ² Divergence Measure

In this section, we discuss the optimal information property of the harmonic mixture survival function in (22). For this purpose, we consider the optimization problem for cumulative residual chi-square divergence under three types of constraints. For more details about optimal information properties of some mixture distributions (arithmetic, geometric, and mixture distributions), one may refer to Asadi et al. [22] and the references therein.

Theorem 9.

Let , , and be three survival functions. Then, the solution to the information problem

is the HM distribution in (22) with mixing parameter and is the Lagrangian multiplier.

Proof.

We use the Lagrange multiplier technique to find the solution of the optimization problem stated in (25). Hence, we have

Now, differentiating with respect to , we obtain

Setting (26) to zero, we get the optimal survival function as

where . □

Theorem 10.

Let , , and be three survival functions. Then, the solution to the information problem

is the HM distribution in (22) with mixing parameter .

Proof.

Making use of the Lagrangian multiplier technique, and proceeding in the same way as in the proof of Theorem 9, the required result can be obtained. □

Theorem 11.

Let , , and be three survival functions and . Then, the solution to the information problem

is HM model with mixing parameter and is the Lagrangian multiplier, where is the equilibrium distribution as defined in Theorem 4.

Proof.

Making use of the Lagrangian multiplier technique, and proceeding in the same way as in the proof of Theorem 9, the required result is obtained. □

6. Jensen-Cumulative Residual χ² and Parametric Version of Jensen-Cumulative Residual Fisher Divergence Measures

In this section, we first introduce the Jensen-cumulative residual χ² divergence measure and then propose a parametric version of the Jensen-cumulative residual Fisher information in (7). Next, we show that these two Jensen-type divergence measures are connected through arithmetic mixture distributions.

6.1. Jensen-Cumulative Residual χ² Divergence Measure

Definition 5.

Consider the survival functions and . Then, the Jensen-cumulative residual χ² (JCR-χ²) information measure is defined as

where are non-negative real values with .

Theorem 12.

The JCR-χ² information measure defined in (29) is non-negative.

Proof.

From the definition of the CR-χ² measure, we have

and

Upon making use of the above results, from the definition of the JCR-χ² measure in (29), we find

Finally, from the arithmetic mean-harmonic mean inequality (see Theorem 5.1 of Cvetkovski [23]), we get

as required. □

6.2. Parametric Version of Jensen-Cumulative Residual Fisher Information Divergence

In this subsection, we introduce a parametric form of the JCRF information measure in (7).

Definition 6.

Consider the survival functions . Then, a parametric form of the JCRF (P-JCRF) information measure about parameter θ, for non-negative real values with , is defined as

where

Theorem 13.

Proof.

From the definition in (30), we have

Furthermore, we have

as required. □

6.3. Connection between the P-JCRF Information and JCR-χ² Divergence Measures

Let and be arbitrary continuous survival functions. Consider the arithmetic mixture distributions with survival functions

and

where are non-negative real values with and

The P-JCRF information measure of survival functions , about the mixing parameter , for , is given by

Proof.

From the definition of in (33), we find

as required. □

7. Application of CRQF Information Measure

We now demonstrate an application of the CRQF information measure to image processing. Let $X_1, \ldots, X_n$ be a random sample from density f with corresponding CDF F. The kernel estimate of density f, based on kernel function K with bandwidth $h > 0$, is given by

$$\hat{f}(x) = \frac{1}{nh}\sum_{i=1}^{n} K\!\left(\frac{x - X_i}{h}\right). \tag{34}$$

Further, the non-parametric estimate of the survival function $\bar{F}$, at a given point x, is given by

$$\hat{\bar{F}}(x) = \frac{1}{n}\sum_{i=1}^{n} I[X_i > x], \tag{35}$$

where I is the indicator function taking the value 1 if the condition inside brackets is satisfied and 0 otherwise. Then, the integrated non-parametric estimate of in (11) is given by

From (36), and with the use of the Gaussian kernel $K(u) = \frac{1}{\sqrt{2\pi}} e^{-u^2/2}$, we have

Thus, from (37) and using the Cavalieri-Simpson rule for numerical integration, the empirical estimate of the CRQF information measure can be obtained.
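The estimation steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the procedure used to produce the reported results: the integrand is taken to be f̂(x)² Ŝ(x)^(2q−3), which follows from the assumed convention log_q(x) = (x^(q−1) − 1)/(q − 1); the survival function is estimated by a kernel-smoothed version (the average of 1 − Φ((x − X_i)/h)) rather than by indicators, so that it stays positive on the integration grid; and the bandwidth, the integration range, and the synthetic Beta-distributed gray levels are arbitrary stand-ins.

```python
import math
import random

def gaussian_kde(sample, h):
    """Kernel density estimate with a Gaussian kernel and bandwidth h."""
    c = 1.0 / (len(sample) * h * math.sqrt(2.0 * math.pi))
    return lambda x: c * sum(math.exp(-0.5 * ((x - xi) / h) ** 2) for xi in sample)

def smoothed_sf(sample, h):
    """Kernel-smoothed survival estimate: average of 1 - Phi((x - xi)/h)."""
    n = len(sample)
    root2 = math.sqrt(2.0)
    return lambda x: sum(0.5 * math.erfc((x - xi) / (h * root2)) for xi in sample) / n

def crqf_estimate(sample, h, q, n_grid=400):
    """Simpson-rule estimate of int f(x)^2 * S(x)^(2q-3) dx (assumed integrand)."""
    f_hat, s_hat = gaussian_kde(sample, h), smoothed_sf(sample, h)
    lo, hi = min(sample) - 4.0 * h, max(sample) + 4.0 * h
    step = (hi - lo) / n_grid  # n_grid must be even for Simpson's rule
    total = 0.0
    for i in range(n_grid + 1):
        x = lo + i * step
        weight = 1 if i in (0, n_grid) else (4 if i % 2 else 2)
        total += weight * f_hat(x) ** 2 * s_hat(x) ** (2.0 * q - 3.0)
    return total * step / 3.0

rng = random.Random(0)
gray_levels = [rng.betavariate(2.0, 5.0) for _ in range(200)]  # synthetic stand-in data
for q in (1.0, 1.5, 2.0):
    print(q, crqf_estimate(gray_levels, h=0.05, q=q))
```

Values of q below 1 put heavy weight on the right tail, where the estimated survival function is close to zero, so the result then becomes sensitive to the chosen integration range; this is why the sketch only uses q ≥ 1.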

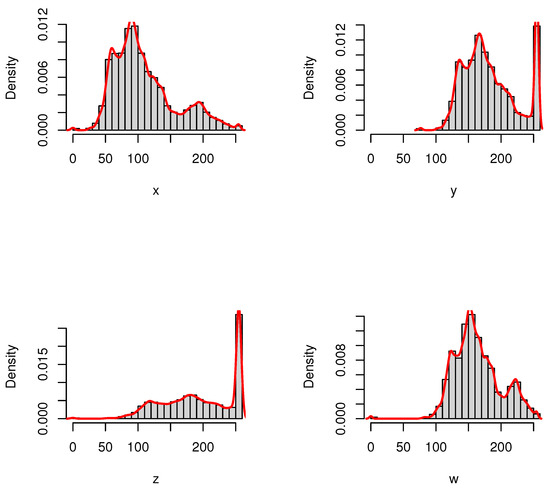

Next, we provide an example of image processing and compute the CRQF information and Fisher information (FI) measures for the original picture and its adjusted versions. Figure 2 shows a sample picture of two parrots (original picture) labeled as X and three adjusted versions of the original picture labeled as Y (increasing brightness), Z (increasing contrast), and W (gamma corrected). The available data of the main picture are

cells and the gray level of each cell has a value between 0 (black) and 1 (white). In order to examine the CRQF information content of the original picture and compare it with that of the adjusted versions, we consider three cases as Y(), Z(), and W(). For pertinent details, see the EBImage package in R (Pau et al. [24]).

Figure 2. Sample picture of two parrots with its adjustments.

We have plotted in Figure 3 the extracted histograms with the corresponding empirical densities for pictures X, Y, Z, and W. As we can see from Figure 2 and Figure 3, the highest degree of similarity is first related to W and then to Y, whereas Z has the highest degree of divergence with respect to the original picture X. We have presented the CRQF information (for selected values of and ) and Fisher information (FI) measures for all four pictures in Table 1. It is easily seen that both information measures increase as the similarity to the original picture decreases. This fact coincides with the minimum Fisher information principle. Therefore, the CRQF information measure can be considered an efficient criterion, just like the Fisher information measure, for analyzing interior properties of complex systems.

Figure 3. The histograms and the corresponding empirical densities for pictures X, Y, Z, and W.

Table 1. The CRQF information and FI measures.

8. Concluding Remarks

In this paper, we have proposed the cumulative residual q-Fisher (CRQF) information, the q-hazard rate (QHR) function, the cumulative residual generalized χ² (CRG-χ²) divergence, and the Jensen-cumulative residual χ² (JCR-χ²) divergence measures. We have shown that the CRQF information measure can be expressed in terms of an expectation involving the q-hazard rate function. Further, we have established some results concerning the CRQF information and CRG-χ² divergence measures. We have specifically shown that the first derivative of the CRG-χ² divergence with respect to the associated parameter is connected to the cumulative residual entropy measure and, when its parameter tends to zero, it is connected to the variance of the ratio of two survival functions. We have also presented some results associated with the CRQF information measure for two well-known mixture models, namely, the arithmetic mixture (AM) and harmonic mixture (HM) models. We have specifically shown that the CRQF information of the AM and HM models can be expressed in terms of the power mean of CRG-χ² divergence measures. Interestingly, we have shown that the harmonic mixture model possesses optimal information under three optimization problems associated with the cumulative residual χ² divergence measure. We have also proposed a Jensen-cumulative residual χ² divergence and a parametric version of the Jensen-cumulative residual Fisher (P-JCRF) information measure and have shown that they are connected. Finally, we have described an application of the CRQF information measure by considering an example in image processing. It will naturally be of great interest to study empirical versions of these measures and their potential applications to inferential problems. We are currently looking into this problem and hope to report the findings in a future paper.

Author Contributions

All authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Cover, T.M.; Thomas, J.A. Information theory and statistics. Elem. Inf. Theory 1991, 1, 279–335.

2. Zegers, P. Fisher information properties. Entropy 2015, 17, 4918–4939.

3. Fisher, R.A. Tests of significance in harmonic analysis. Proc. R. Soc. Lond. Ser. A 1929, 125, 54–59.

4. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

5. Nielsen, F.; Nock, R. On the chi square and higher-order chi distances for approximating f-divergences. IEEE Signal Process. Lett. 2013, 21, 10–13.

6. Popescu, P.G.; Preda, V.; Sluşanschi, E.I. Bounds for Jeffreys-Tsallis and Jensen-Shannon-Tsallis divergences. Phys. A Stat. Mech. Its Appl. 2014, 413, 280–283.

7. Sánchez-Moreno, P.; Zarzo, A.; Dehesa, J.S. Jensen divergence based on Fisher’s information. J. Phys. A Math. Theor. 2012, 45, 125305.

8. Bercher, J.F. Some properties of generalized Fisher information in the context of nonextensive thermostatistics. Phys. A Stat. Mech. Its Appl. 2013, 392, 3140–3154.

9. Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

10. Johnson, O.; Vignat, C. Some results concerning maximum Rényi entropy distributions. Ann. Inst. Henri Poincaré (B) Probab. Stat. 2007, 43, 339–351.

11. Furuichi, S. On the maximum entropy principle and the minimization of the Fisher information in Tsallis statistics. J. Math. Phys. 2009, 50, 013303.

12. Lutwak, E.; Lv, S.; Yang, D.; Zhang, G. Extensions of Fisher information and Stam’s inequality. IEEE Trans. Inf. Theory 2012, 58, 1319–1327.

13. Kharazmi, O.; Balakrishnan, N. Cumulative residual and relative cumulative residual Fisher information and their properties. IEEE Trans. Inf. Theory 2021, 67, 6306–6312.

14. Rao, M.; Chen, Y.; Vemuri, B.C.; Wang, F. Cumulative residual entropy: A new measure of information. IEEE Trans. Inf. Theory 2004, 50, 1220–1228.

15. Yamano, T. Some properties of q-logarithm and q-exponential functions in Tsallis statistics. Phys. A Stat. Mech. Its Appl. 2002, 305, 486–496.

16. Masi, M. A step beyond Tsallis and Rényi entropies. Phys. Lett. A 2005, 338, 217–224.

17. Barlow, R.E.; Proschan, F. Statistical Theory of Reliability and Life Testing: Probability Models; Holt, Rinehart and Winston: New York, NY, USA, 1975.

18. Basu, A.; Harris, I.R.; Hjort, N.L.; Jones, M.C. Robust and efficient estimation by minimising a density power divergence. Biometrika 1998, 85, 549–559.

19. Ghosh, A.; Harris, I.R.; Maji, A.; Basu, A.; Pardo, L. A generalized divergence for statistical inference. Bernoulli 2017, 23, 2746–2783.

20. Marshall, A.W.; Olkin, I. Life Distributions; Springer: New York, NY, USA, 2007.

21. Schmidt, U. Axiomatic Utility Theory Under Risk: Non-Archimedean Representations and Application to Insurance Economics; Springer: Berlin, Germany, 2012.

22. Asadi, M.; Ebrahimi, N.; Kharazmi, O.; Soofi, E.S. Mixture models, Bayes Fisher information, and divergence measures. IEEE Trans. Inf. Theory 2018, 65, 2316–2321.

23. Cvetkovski, Z. Inequalities: Theorems, Techniques and Selected Problems; Springer: New York, NY, USA, 2012.

24. Pau, G.; Fuchs, F.; Sklyar, O.; Boutros, M.; Huber, W. EBImage-an R package for image processing with applications to cellular phenotypes. Bioinformatics 2010, 26, 979–981.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).