BMEFIQA: Blind Quality Assessment of Multi-Exposure Fused Images Based on Several Characteristics

Abstract

1. Introduction

- (1)

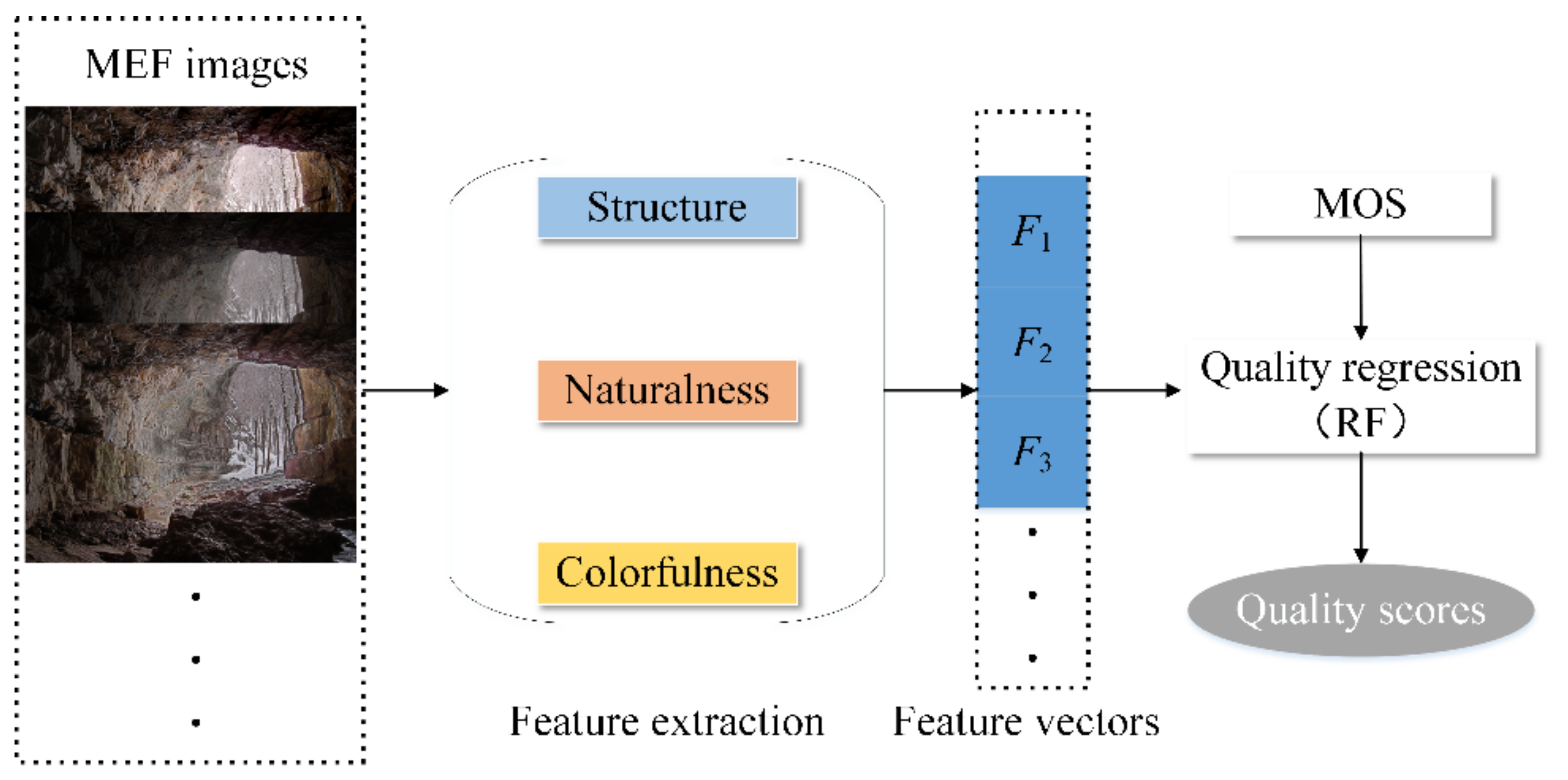

- Inspired by the various characteristics of MEF images, structural, naturalness, and colorfulness features are extracted from different standpoints to perceive their distortion.

- (2)

- On account of structure loss produced by abnormal exposure, the exposure map is weighted to the gradient similarity to detect structural distortion.

- (3)

- The experimental results demonstrate that the proposed method is competent for MEF images and superior to several NR-IQA methods.
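The idea in contribution (2) can be illustrated with a minimal sketch. It is not the authors' implementation: the exposure map (a Gaussian weight centered on mid-gray), the similarity constant `c`, the weighted-mean pooling, and the use of a second comparison image `other` are all assumptions made for illustration, and every function name is hypothetical.

```python
import numpy as np


def gradient_magnitude(img):
    """Gradient magnitude from finite differences (np.gradient)."""
    gy, gx = np.gradient(np.asarray(img, dtype=np.float64))
    return np.hypot(gx, gy)


def exposure_map(img, sigma=0.2):
    """Assumed exposure map: a Gaussian weight that peaks at mid-gray (0.5)
    for intensities in [0, 1]. The paper's own map may differ."""
    img = np.asarray(img, dtype=np.float64)
    return np.exp(-((img - 0.5) ** 2) / (2.0 * sigma ** 2))


def exposure_weighted_gradient_similarity(fused, other, c=1e-3, sigma=0.2):
    """Pool a pixel-wise gradient similarity map with exposure weights.

    `other` is a hypothetical comparison image of the same shape; `c` is an
    assumed stabilizing constant that avoids division by zero.
    """
    g1 = gradient_magnitude(fused)
    g2 = gradient_magnitude(other)
    similarity = (2.0 * g1 * g2 + c) / (g1 ** 2 + g2 ** 2 + c)
    weights = exposure_map(fused, sigma)
    # Weighted mean: pixels with larger exposure weight contribute more.
    return float(np.sum(weights * similarity) / np.sum(weights))


# Usage with synthetic grayscale images in [0, 1].
rng = np.random.default_rng(0)
image_a = rng.random((16, 16))
image_b = rng.random((16, 16))
score_same = exposure_weighted_gradient_similarity(image_a, image_a)
score_diff = exposure_weighted_gradient_similarity(image_a, image_b)
```

In this sketch, identical inputs score about 1.0 and the score drops as the gradient structure diverges. Whether the weighting should emphasize well-exposed or abnormally exposed regions is a design choice that the sketch does not settle.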

2. Related Works

3. The Proposed BMEFIQA Method

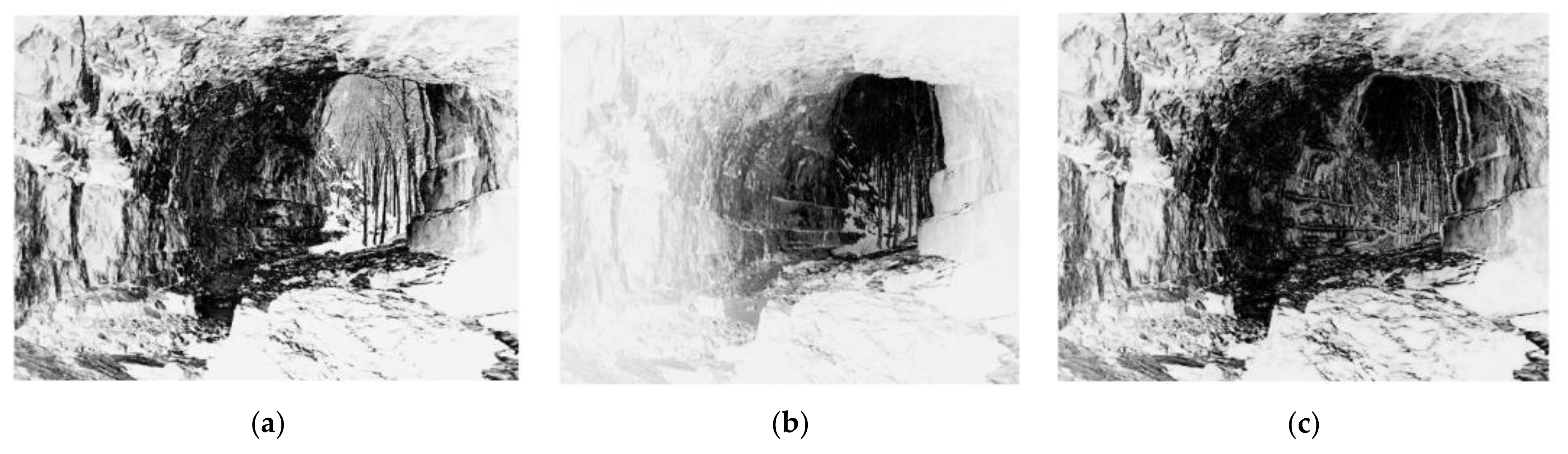

3.1. Structural Features

3.2. Naturalness Features

3.3. Colorfulness Features

3.4. Feature Aggregation and Quality Regression

4. Experiment Results

4.1. Experimental Protocol

4.2. Performance Comparison

4.3. Impacts of Different Features and Block Sizes

4.4. Run Time

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| No. | Source Sequences | Size | Image Source |
|---|---|---|---|
| 1 | Balloons | 339 × 512 × 9 | Erik Reinhard |
| 2 | Belgium house | 512 × 384 × 9 | Dani Lischinski |
| 3 | Lamp1 | 512 × 384 × 15 | Martin Cadik |
| 4 | Candle | 512 × 364 × 10 | HDR Projects |
| 5 | Cave | 512 × 384 × 4 | Bartlomiej Okonek |
| 6 | Chinese garden | 512 × 340 × 3 | Bartlomiej Okonek |
| 7 | Farmhouse | 512 × 341 × 3 | HDR Projects |
| 8 | House | 512 × 340 × 4 | Tom Mertens |
| 9 | Kluki | 512 × 341 × 3 | Bartlomiej Okonek |
| 10 | Lamp2 | 512 × 342 × 6 | HDR Projects |
| 11 | Landscape | 512 × 341 × 3 | HDRsoft |
| 12 | Lighthouse | 512 × 340 × 3 | HDRsoft |
| 13 | Madison capitol | 512 × 384 × 30 | Chaman Singh Verma |
| 14 | Memorial | 341 × 512 × 16 | Paul Debevec |
| 15 | Office | 512 × 340 × 6 | Matlab |
| 16 | Tower | 341 × 512 × 3 | Jacques Joffre |
| 17 | Venice | 512 × 341 × 3 | HDRsoft |

| Metrics | PLCC | SROCC | RMSE |
|---|---|---|---|
| DIIVINE | 0.491 | 0.403 | 1.452 |
| BLINDS-II | 0.534 | 0.346 | 1.409 |
| BRISQUE | 0.414 | 0.380 | 1.517 |
| CurveletQA | 0.371 | 0.337 | 1.548 |
| GradLog | 0.631 | 0.567 | 1.293 |
| ContrastQA | 0.458 | 0.412 | 1.482 |
| GWH-GLBP | 0.163 | 0.113 | 1.645 |
| OG | 0.523 | 0.525 | 1.421 |
| NIQMC | 0.519 | 0.404 | 1.425 |
| SCORER | 0.481 | 0.494 | 1.461 |
| BTMQI | 0.452 | 0.343 | 1.487 |
| HIGRADE-1 | 0.561 | 0.566 | 1.380 |
| HIGRADE-2 | 0.585 | 0.583 | 1.352 |
| Proposed | 0.694 | 0.673 | 1.200 |
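The three criteria in the table above are standard: PLCC (Pearson linear correlation) and SROCC (Spearman rank-order correlation) are better when higher, and RMSE is better when lower. The following is a minimal sketch of how they might be computed from predicted scores and subjective scores. The score values are made up for illustration, the function names are hypothetical, and the nonlinear logistic mapping that is often applied before PLCC and RMSE in IQA studies is omitted here.

```python
import math


def plcc(x, y):
    """Pearson linear correlation coefficient between two score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def srocc(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(average_ranks(x), average_ranks(y))


def rmse(x, y):
    """Root mean squared error between predictions and subjective scores."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


# Hypothetical predicted scores and subjective scores, for illustration only.
predicted = [2.1, 4.0, 5.5, 7.9, 9.0]
subjective = [2.0, 3.5, 6.0, 8.0, 8.5]
results = {
    "PLCC": plcc(predicted, subjective),
    "SROCC": srocc(predicted, subjective),
    "RMSE": rmse(predicted, subjective),
}
```

SROCC depends only on the ordering of the scores, so it is unaffected by any monotonic rescaling of the predictions, whereas PLCC and RMSE are sensitive to the scale on which predictions are reported.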

| Models | Structural (SF) | Naturalness (NF) | Colorfulness (CF) | PLCC | SROCC | RMSE |
|---|---|---|---|---|---|---|
| Model-1 | √ | × | × | 0.646 | 0.506 | 1.272 |
| Model-2 | × | √ | × | 0.557 | 0.457 | 1.385 |
| Model-3 | × | × | √ | 0.389 | 0.416 | 1.535 |
| Model-4 | √ | √ | × | 0.651 | 0.520 | 1.263 |
| Model-5 | √ | × | √ | 0.658 | 0.640 | 1.255 |
| Model-6 | × | √ | √ | 0.604 | 0.599 | 1.328 |
| Model-7 | √ | √ | √ | 0.694 | 0.673 | 1.200 |

| Block Size | PLCC | SROCC | RMSE | Time (s) |
|---|---|---|---|---|
| 8 × 8 | 0.694 | 0.673 | 1.200 | 1.3383 |
| 16 × 16 | 0.677 | 0.655 | 1.227 | 0.4744 |
| 32 × 32 | 0.683 | 0.658 | 1.218 | 0.2857 |
| 64 × 64 | 0.688 | 0.659 | 1.210 | 0.2347 |

| Method | Time (s) |
|---|---|
| DIIVINE | 6.6024 |
| BLINDS-II | 14.7361 |
| BRISQUE | 0.0414 |
| CurveletQA | 2.5141 |
| GradLog | 0.0343 |
| ContrastQA | 0.0259 |
| GWH-GLBP | 0.0576 |
| OG | 0.0314 |
| NIQMC | 1.9241 |
| SCORER | 0.5878 |
| BTMQI | 0.0758 |
| HIGRADE-1 | 0.2602 |
| HIGRADE-2 | 1.9040 |
| Proposed | 1.3383 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, J.; Li, H.; Zhong, C.; He, Z.; Ma, Y. BMEFIQA: Blind Quality Assessment of Multi-Exposure Fused Images Based on Several Characteristics. Entropy 2022, 24, 285. https://doi.org/10.3390/e24020285