Abstract

Control problems with incomplete information and memory limitation appear in many practical situations. Although partially observable stochastic control (POSC) is a conventional theoretical framework that considers the optimal control problem with incomplete information, it cannot consider memory limitation. Furthermore, POSC cannot be solved in practice except in special cases. In order to address these issues, we propose an alternative theoretical framework, memory-limited POSC (ML-POSC). ML-POSC directly considers memory limitation as well as incomplete information, and it can be solved in practice by employing the technique of mean-field control theory. ML-POSC can generalize the linear-quadratic-Gaussian (LQG) problem to include memory limitation. Because estimation and control are not clearly separated in the LQG problem with memory limitation, the Riccati equation is modified to the partially observable Riccati equation, which improves estimation as well as control. Furthermore, we demonstrate the effectiveness of ML-POSC for a non-LQG problem by comparing it with the local LQG approximation.

1. Introduction

Control problems of systems with incomplete information and memory limitation appear in many practical situations. These constraints become especially prominent when designing the control of small devices [1,2], and are important for understanding the control mechanisms of biological systems [3,4,5,6,7,8], because the sensors of such systems are extremely noisy and their controllers can have only severely limited memories.

Partially observable stochastic control (POSC) is a conventional theoretical framework that considers the optimal control problem with one of these constraints, namely, the incomplete information of the system state (Figure 1b) [9]. Because the POSC controller cannot completely observe the state of the system, it determines the control based on the noisy observation history of the state. POSC can be solved in principle [10,11,12] by converting it to a completely observable stochastic control (COSC) of the posterior probability of the state, as the posterior probability is a sufficient statistic of the observation history. The posterior probability and the optimal control are obtained by solving the Zakai equation and the Bellman equation, respectively.

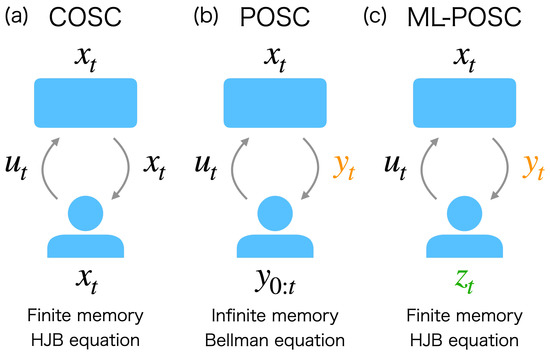

Figure 1.

Schematic diagram of (a) completely observable stochastic control (COSC), (b) partially observable stochastic control (POSC), and (c) memory-limited partially observable stochastic control (ML-POSC). The top and bottom figures represent the system and controller, respectively; $x_t$ is the state of the system; $y_t$, $z_t$, and $u_t$ are the observation, memory, and control of the controller, respectively. (a) In COSC, the controller can completely observe the state $x_t$, and determines the control $u_t$ based on the state $x_t$, i.e., $u_t = u(t, x_t)$. Only finite-dimensional memory is required to store the state $x_t$, and the optimal control $u_t^{*}$ is obtained by solving the Hamilton–Jacobi–Bellman (HJB) equation, which is a partial differential equation. (b) In POSC, the controller cannot completely observe the state $x_t$; instead, it obtains the noisy observation $y_t$ of the state $x_t$. The control $u_t$ is determined based on the observation history $y_{0:t}$, i.e., $u_t = u(t, y_{0:t})$. An infinite-dimensional memory is implicitly assumed to store the observation history $y_{0:t}$. Furthermore, to obtain the optimal control $u_t^{*}$, the Bellman equation (a functional differential equation) needs to be solved, which is generally intractable, even numerically. (c) In ML-POSC, the controller has access only to the noisy observation $y_t$ of the state $x_t$, as in POSC. In addition, it has only finite-dimensional memory $z_t$, which cannot completely memorize the observation history $y_{0:t}$. The controller of ML-POSC compresses the observation history $y_{0:t}$ into the finite-dimensional memory $z_t$, then determines the control $u_t$ based on the memory $z_t$, i.e., $u_t = u(t, z_t)$. The optimal control $u_t^{*}$ is obtained by solving the HJB equation (a partial differential equation), as in COSC.

However, POSC has three practical problems with respect to the implementation of the controller, all of which originate from ignoring the other constraint, namely, the memory limitation of the controller [1,2]. First, a controller designed by POSC should ideally have an infinite-dimensional memory to store and compute the posterior probability from the observation history. Second, the memory of the controller must be free of intrinsic stochasticity other than the observation noise in order to compute the posterior probability accurately via the Zakai equation. Third, POSC does not consider the cost originating from the memory update, which can be regarded as a cost of estimation. Given the dual roles played by estimation and control, accounting for the control cost while ignoring the estimation cost is asymmetric. As a result, POSC is not practical for control problems where the memory size, noise, and cost are non-negligible. Therefore, we need an alternative theoretical framework that considers memory limitation in order to circumvent these three problems.

Furthermore, POSC has another crucial problem in obtaining the optimal state control by solving the Bellman equation [3,4]. Because the posterior probability of the state is infinite-dimensional, POSC corresponds to an infinite-dimensional COSC. In the infinite-dimensional COSC, the Bellman equation becomes a functional differential equation, which needs to be solved in order to obtain the optimal state control. However, solving a functional differential equation is generally intractable, even numerically.

In this work, we propose an alternative theoretical framework to the conventional POSC which can address the two above-mentioned issues. We call it memory-limited POSC (ML-POSC), in which memory limitation as well as incomplete information is directly accounted for (Figure 1c). The conventional POSC derives the Zakai equation without considering memory limitations. Then, the optimal state control is supposed to be derived by solving the Bellman equation, even though we do not have any practical way to do this. In contrast, ML-POSC first postulates the finite-dimensional and stochastic memory dynamics explicitly by taking the memory limitation into account and then jointly optimizes the memory dynamics and state control by considering the memory and control costs. As a result, unlike the conventional POSC, ML-POSC finds both the optimal state control and the optimal memory dynamics under the given memory limitations. Furthermore, we show that the Bellman equation of ML-POSC can be reduced to the Hamilton–Jacobi–Bellman (HJB) equation by employing a technique from mean-field control theory [13,14,15]. While the Bellman equation is a functional differential equation, the HJB equation is a partial differential equation. As a result, ML-POSC can be solved, at least numerically.

The idea behind ML-POSC is closely related to that of the finite-state controller [16,17,18,19,20,21,22]. Finite-state controllers have been studied using the partially observable Markov decision process (POMDP), that is, the discrete time and state POSC. The finite-dimensional memory of ML-POSC can be regarded as an extension of the finite-state controller of POMDP to the continuous time and state setting. Nonetheless, the algorithms of the finite-state controller cannot be directly extended to the continuous setting, as they strongly depend on the discreteness. Although Fox and Tishby extended the finite-state controller to the continuous setting, their algorithm is restricted to a special case [1,2]. ML-POSC resolves this problem by employing the technique of mean-field control theory.

In the linear-quadratic-Gaussian (LQG) problem of the conventional POSC, the Zakai equation and the Bellman equation are reduced to the Kalman filter and the Riccati equation, respectively [9,23]. Because the infinite-dimensional Zakai equation is reduced to the finite-dimensional Kalman filter, the LQG problem of the conventional POSC can be discussed in terms of ML-POSC. We show that the Kalman filter corresponds to the optimal memory dynamics of ML-POSC. Moreover, ML-POSC can generalize the LQG problem to include memory limitations such as the memory noise and cost. Because estimation and control are not clearly separated in the LQG problem with memory limitation, the Riccati equation for control is modified to include estimation; in this paper, we call the modified equation the partially observable Riccati equation. We demonstrate that the partially observable Riccati equation outperforms the conventional Riccati equation in the LQG problem with memory limitation.

Then, we investigate the potential effectiveness of ML-POSC for a non-LQG problem by comparing it with the local LQG approximation of the conventional POSC [3,4]. In the local LQG approximation, the Zakai equation and the Bellman equation are locally approximated by the Kalman filter and the Riccati equation, respectively. Because the Bellman equation (a functional differential equation) is reduced to the Riccati equation (an ordinary differential equation), the local LQG approximation can be solved numerically. However, the performance of the local LQG approximation may be poor in a highly non-LQG problem, as the local LQG approximation ignores non-LQG information. In contrast, ML-POSC reduces the Bellman equation to the HJB equation while maintaining non-LQG information. We demonstrate that ML-POSC can provide a better result than the local LQG approximation in a non-LQG problem.

This paper is organized as follows: In Section 2, we briefly review the conventional POSC. In Section 3, we formulate ML-POSC. In Section 4, we propose the mean-field control approach to ML-POSC. In Section 5, we investigate the LQG problem of the conventional POSC based on ML-POSC. In Section 6, we generalize the LQG problem to include memory limitation. In Section 7, we show numerical experiments involving an LQG problem with memory limitation and a non-LQG problem. Finally, in Section 8, we discuss our work.

2. Review of Partially Observable Stochastic Control

In this section, we briefly review the conventional POSC [11,15].

2.1. Problem Formulation

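In this subsection, we formulate the conventional POSC [11,15]. The state $x_t \in \mathbb{R}^{d_x}$ and the observation $y_t \in \mathbb{R}^{d_y}$ at time $t \in [0, T]$ evolve by the following stochastic differential equations (SDEs):

$$dx_t = b(t, x_t, u_t)\,dt + \sigma(t, x_t, u_t)\,d\omega_t, \tag{1}$$

$$dy_t = h(t, x_t)\,dt + \gamma(t)\,d\nu_t, \tag{2}$$

where $x_0$ and $y_0$ obey $p_0(x_0)$ and $p_0(y_0)$, respectively, $\omega_t$ and $\nu_t$ are independent standard Wiener processes, and $u_t$ is the control. Here, $\gamma(t)\gamma^{\top}(t)$ is assumed to be invertible. In POSC, because the controller cannot completely observe the state $x_t$, the control $u_t$ is determined based on the observation history $y_{0:t}$, as follows:

$$u_t = u(t, y_{0:t}). \tag{3}$$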

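The objective function of POSC is provided by the following expected cumulative cost function:

$$J[u] := \mathbb{E}_{p(x_{0:T},\, y_{0:T};\, u)}\left[\int_0^T f(t, x_t, u_t)\,dt + g(x_T)\right], \tag{4}$$

where f is the cost function, g is the terminal cost function, $p(x_{0:T}, y_{0:T}; u)$ is the probability of $x_{0:T}$ and $y_{0:T}$ given u as a parameter, and $\mathbb{E}_p[\cdot]$ is the expectation with respect to probability p. Throughout this paper, the time horizon T is assumed to be finite.

POSC is the problem of finding the optimal control function $u^{*}$ that minimizes the objective function $J[u]$ as follows:

$$u^{*} := \operatorname*{argmin}_{u} J[u]. \tag{5}$$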

2.2. Derivation of Optimal Control Function

In this subsection, we briefly review the derivation of the optimal control function of the conventional POSC [11,15]. We first define the unnormalized posterior probability density function . We omit for notational simplicity. Here, obeys the following Zakai equation:

where and is the forward diffusion operator, which is defined by

where . Then, the objective function (4) can be calculated as follows:

where and . From (6) and (8), POSC is converted into a COSC of . As a result, POSC can be approached in the similar way as COSC, and the optimal control function is provided by the following proposition.

Proposition 1

([11,15]). The optimal control function of POSC is provided by

where is the Hamiltonian, which is defined by

is the backward diffusion operator, which is defined by

We note that is the conjugate of ; furthermore, is the value function, which is the solution of the following Bellman equation:

where .

Proof.

The proof is shown in [11,15]. □

The optimal control function is obtained by solving the Bellman Equation (12). The controller determines the optimal control based on the posterior probability . The posterior probability is obtained by solving the Zakai Equation (6). As a result, POSC can be solved in principle.

However, POSC has three practical problems with respect to the memory of the controller. First, the controller should have an infinite-dimensional memory to store and compute the posterior probability from the observation history. Second, the memory of the controller must be free of intrinsic stochasticity other than the observation noise in order to compute the posterior probability accurately via the Zakai Equation (6). Third, POSC does not consider the cost originating from the memory update, which can be regarded as a cost of estimation. Given the dual roles played by estimation and control, accounting for the control cost while ignoring the estimation cost is asymmetric. As a result, POSC is not practical for control problems where the memory size, noise, and cost are non-negligible.

Furthermore, POSC has another crucial problem in obtaining the optimal control function by solving the Bellman Equation (12). Because the posterior probability q is infinite-dimensional, the associated Bellman Equation (12) becomes a functional differential equation. However, solving a functional differential equation is generally intractable, even numerically. As a result, POSC cannot be solved in practice.

3. Memory-Limited Partially Observable Stochastic Control

In order to address the above-mentioned problems, we propose an alternative theoretical framework to the conventional POSC called ML-POSC. In this section, we formulate ML-POSC.

3.1. Problem Formulation

In this subsection, we formulate ML-POSC. ML-POSC determines the control $u_t$ based on the finite-dimensional memory $z_t \in \mathbb{R}^{d_z}$ as follows:

$$u_t = u(t, z_t). \tag{13}$$

The memory dimension $d_z$ is determined not by the optimization but by the prescribed memory limitation of the controller to be used. Comparing (3) and (13), the memory $z_t$ can be interpreted as a compression of the observation history $y_{0:t}$. While the conventional POSC compresses the observation history into the infinite-dimensional posterior probability, ML-POSC compresses it into the finite-dimensional memory $z_t$.

ML-POSC formulates the memory dynamics with the following SDE:

where obeys , is the standard Wiener process, and is the control for the memory dynamics. This memory dynamics has three important properties: (i) because it depends on the observation , the memory can be interpreted as the compression of the observation history ; (ii) because it depends on the standard Wiener process , ML-POSC can consider the memory noise explicitly; (iii) because it depends on the control , it can be optimized through the control .
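The three properties above can be illustrated with a minimal simulation sketch. It assumes a hypothetical scalar linear model that is not taken from this paper: the state relaxes as $dx = -x\,dt + \sigma\,dW$, the observation is $dy = x\,dt + \gamma\,dV$, and the memory is driven by the observation increment, its own noise, and a fixed gain `kappa` that plays the role of a memory control. The function name `simulate_ml_posc` and all parameter names are assumptions for illustration only.

```python
import numpy as np


def simulate_ml_posc(T=1.0, n_steps=1000, sigma=0.5, gamma=0.5, eta=0.1,
                     kappa=1.0, seed=0):
    """Euler-Maruyama simulation of a scalar state x, observation y, memory z.

    Hypothetical linear model (an assumption, not the paper's example):
        dx = -x dt + sigma dW               state, no state control
        dy =  x dt + gamma dV               noisy observation of the state
        dz = -z dt + kappa dy + eta dXi     memory driven by dy, with memory noise
    """
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    x = np.empty(n_steps + 1)
    y = np.empty(n_steps + 1)
    z = np.empty(n_steps + 1)
    x[0], y[0], z[0] = 1.0, 0.0, 0.0
    for k in range(n_steps):
        # Independent Wiener increments for state, observation, and memory.
        dW, dV, dXi = rng.normal(0.0, np.sqrt(dt), size=3)
        dx = -x[k] * dt + sigma * dW
        dy = x[k] * dt + gamma * dV
        dz = -z[k] * dt + kappa * dy + eta * dXi
        x[k + 1] = x[k] + dx
        y[k + 1] = y[k] + dy
        z[k + 1] = z[k] + dz
    return x, y, z
```

Setting `eta=0` removes the memory noise, and changing `kappa` changes how strongly the memory responds to new observations, which is the kind of quantity that ML-POSC would optimize rather than fix by hand.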

The objective function of ML-POSC is provided by the following expected cumulative cost function:

Because the cost function f depends on the memory control as well as the state control , ML-POSC can consider the memory control cost (state estimation cost) as well as the state control cost explicitly.

ML-POSC optimizes the state control function u and the memory control function v based on the objective function , as follows:

ML-POSC first postulates the finite-dimensional and stochastic memory dynamics explicitly, then jointly optimizes the state and memory control functions by considering the state and memory control costs. As a result, unlike the conventional POSC, ML-POSC can consider memory limitation as well as incomplete information.

3.2. Problem Reformulation

Although the formulation of ML-POSC in the previous subsection clarifies its relationship with that of the conventional POSC, it is inconvenient for further mathematical investigations. In order to resolve this problem, we reformulate ML-POSC in this subsection. The formulation in this subsection is simpler and more general than that in the previous subsection.

We first define the extended state as follows:

where . The extended state evolves by the following SDE:

where obeys , is the standard Wiener process, and is the control. ML-POSC determines the control based solely on the memory , as follows:

The extended state SDE (18) includes the previous state, observation, and memory SDEs (1), (2) and (14) as a special case; they can be represented as follows:

where .

The objective function of ML-POSC is provided by the following expected cumulative cost function:

where is the cost function and is the terminal cost function. It is obvious that this objective function (21) is more general than the previous one (15).

ML-POSC is the problem of finding the optimal control function that minimizes the objective function as follows:

In the following section, we mainly consider the formulation in this subsection rather than that of the previous subsection, as it is simpler and more general. Moreover, we omit for the notational simplicity.

4. Mean-Field Control Approach

If the control is determined based on the extended state , i.e., , ML-POSC is the same as COSC of the extended state , and can be solved by the conventional COSC approach [10]. However, because ML-POSC determines the control based solely on the memory , i.e., , ML-POSC cannot be solved in a similar way as COSC. In order to solve ML-POSC, we propose the mean-field control approach in this section. Because the mean-field control approach is more general than the COSC approach, it can solve COSC and ML-POSC in a unified way.

4.1. Derivation of Optimal Control Function

In this subsection, we propose the mean-field control approach to ML-POSC. We first show that ML-POSC can be converted into a deterministic control of the probability density function, which is similar to the conventional POSC [11,15]. This approach is used in the mean-field control as well [13,14,24,25]. The extended state SDE (18) can be converted into the following Fokker–Planck (FP) equation:

where the initial condition is provided by and the forward diffusion operator is defined by (7). The objective function of ML-POSC (21) can be calculated as follows:

where and . From (23) and (24), ML-POSC is converted into a deterministic control of . As a result, ML-POSC can be approached in a similar way as the deterministic control, and the optimal control function is provided by the following lemma.

Lemma 1.

Proof.

The proof is shown in Appendix A. □

The controller of ML-POSC determines the optimal control based on the memory , not the posterior probability . Therefore, ML-POSC can consider memory limitation as well as incomplete information.

However, because the Bellman Equation (26) is a functional differential equation, it cannot be solved, even numerically, which is the same problem as the conventional POSC. We resolve this problem by employing the technique of the mean-field control theory [13,14] as follows.

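Theorem 1.

The optimal control function of ML-POSC is provided by

$$u^{*}(t, z) = \operatorname*{argmin}_{u} \mathbb{E}_{p_t(x|z)}\left[H(t, s, u, w)\right], \tag{27}$$

where H is the Hamiltonian (10), $p_t(x|z) = p_t(s) / \int p_t(s)\,dx$ is the conditional probability density function of the state x given the memory z, $p_t(s)$ is the solution of the FP Equation (23), and $w(t, s)$ is the solution of the following HJB equation:

$$-\frac{\partial w(t, s)}{\partial t} = H(t, s, u^{*}, w), \tag{28}$$

where $w(T, s) = g(s)$.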

Proof.

The proof is shown in Appendix B. □

While the Bellman Equation (26) is a functional differential equation, the HJB Equation (28) is a partial differential equation. As a result, unlike the conventional POSC, ML-POSC can be solved in practice.

We note that the mean-field control technique is applicable to the conventional POSC as well, and we obtain the HJB equation of the conventional POSC [15]. However, the HJB equation of the conventional POSC does not close as a partial differential equation due to the last term of the Bellman Equation (12). As a result, the mean-field control technique is not effective with the conventional POSC except in a special case [15].

In the conventional POSC, the state estimation (memory control) and the state control are clearly separated. As a result, the state estimation and the state control are optimized by the Zakai Equation (6) and the Bellman Equation (12), respectively. In contrast, because ML-POSC considers memory limitation as well as incomplete information, the state estimation and the state control are not clearly separated. As a result, ML-POSC jointly optimizes the state estimation and the state control based on the FP Equation (23) and the HJB Equation (28).

4.2. Comparison with Completely Observable Stochastic Control

In this subsection, we show the similarities and differences between ML-POSC and COSC of the extended state. While ML-POSC determines the control based solely on the memory , i.e., , COSC of the extended state determines the control based on the extended state , i.e., . The optimal control function of COSC of the extended state is provided by the following proposition.

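Proposition 2

The optimal control function of COSC of the extended state is provided by

$$u^{*}(t, s) = \operatorname*{argmin}_{u} H(t, s, u, w), \tag{29}$$

where $w(t, s)$ is the solution of the HJB Equation (28).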

Proof.

The conventional proof is shown in [10]. We note that it can be proven in a similar way as ML-POSC, which is shown in Appendix C. □

Although the HJB Equation (28) is the same between ML-POSC and COSC, the optimal control function is different. While the optimal control function of COSC is provided by the minimization of the Hamiltonian (29), that of ML-POSC is provided by the minimization of the conditional expectation of the Hamiltonian (27). This is reasonable, as the controller of ML-POSC needs to estimate the state from the memory.
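The difference can be made concrete with a small worked example. As an illustrative assumption (not a form stated in this paper), suppose the Hamiltonian is quadratic in the control, with a positive definite matrix $R$ and hypothetical functions $a$ and $c$ that do not depend on $u$. Setting the gradient with respect to $u$ to zero gives the two minimizers:

```latex
% Assumed quadratic-in-control Hamiltonian (illustrative only)
H(t, s, u, w) = u^{\top} R\, u + 2\, u^{\top} a(t, s) + c(t, s), \qquad R \succ 0 .

% COSC of the extended state: pointwise minimization
u^{*}_{\mathrm{COSC}}(t, s) = \operatorname*{argmin}_{u} H(t, s, u, w) = -R^{-1} a(t, s) .

% ML-POSC: minimization of the conditional expectation given the memory z
u^{*}_{\mathrm{ML\text{-}POSC}}(t, z)
  = \operatorname*{argmin}_{u} \mathbb{E}_{p_t(x|z)}\!\left[ H(t, s, u, w) \right]
  = -R^{-1}\, \mathbb{E}_{p_t(x|z)}\!\left[ a(t, s) \right] .
```

Under this assumption, the ML-POSC control is the COSC control with the state-dependent term replaced by its estimate given the memory, which matches the interpretation that the controller must estimate the state from the memory.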

4.3. Numerical Algorithm

In this subsection, we briefly explain a numerical algorithm to obtain the optimal control function of ML-POSC (27). Because the optimal control function of COSC (29) depends only on the backward HJB Equation (28), it can be obtained by solving the HJB equation backwards from the terminal condition [10,26,27]. In contrast, because the optimal control function of ML-POSC (27) depends on the forward FP Equation (23) as well as the backward HJB Equation (28), it cannot be obtained in a similar way as COSC. Because the backward HJB equation depends on the forward FP equation through the optimal control function of ML-POSC, the HJB equation cannot be solved backwards from the terminal condition. As a result, ML-POSC needs to solve the system of HJB-FP equations.

The system of HJB-FP equations appears in the mean-field game and control [28,29,30], and many numerical algorithms have been developed [31,32,33]. Therefore, unlike the conventional POSC, ML-POSC can be solved in practice using these algorithms. Furthermore, unlike the mean-field game and control, the coupling of HJB-FP equations is limited to the optimal control function in ML-POSC. By exploiting this property, more efficient algorithms may be proposed for ML-POSC [34].

In this paper, we use the forward–backward sweep method (the fixed-point iteration method) to obtain the optimal control function of ML-POSC [33,34,35,36,37], which is one of the most basic algorithms for the system of HJB-FP equations. The forward–backward sweep method computes the forward FP Equation (23) and the backward HJB Equation (28) alternately. In the mean-field game and control, the convergence of the forward–backward sweep method is not guaranteed. In contrast, it is guaranteed in ML-POSC because the coupling of HJB-FP equations is limited to the optimal control function [34].
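The alternation pattern of the forward–backward sweep method can be sketched on a much simpler problem. The example below is not the HJB-FP system of ML-POSC: as a stated assumption, it replaces the forward FP equation with a forward state ODE and the backward HJB equation with a backward adjoint ODE for the deterministic toy problem of minimizing $\int_0^T (x^2 + u^2)\,dt$ subject to $dx/dt = u$, $x(0) = 1$. The function name, the relaxation parameter `relax`, and the stopping rule are all hypothetical choices.

```python
import numpy as np


def forward_backward_sweep(T=1.0, n=1000, relax=0.5, tol=1e-8, max_iter=200):
    """Fixed-point iteration for a toy deterministic control problem.

    Toy problem (an assumption for illustration):
        minimize  int_0^T (x^2 + u^2) dt   subject to  dx/dt = u,  x(0) = 1.
    Optimality conditions: dlam/dt = -2 x, lam(T) = 0, and u = -lam / 2.
    """
    dt = T / n
    u = np.zeros(n + 1)      # initial guess for the control
    x = np.empty(n + 1)
    lam = np.empty(n + 1)
    n_iter = 0
    for n_iter in range(1, max_iter + 1):
        # Forward sweep: integrate the state from the initial condition.
        x[0] = 1.0
        for k in range(n):
            x[k + 1] = x[k] + dt * u[k]
        # Backward sweep: integrate the adjoint from the terminal condition.
        lam[n] = 0.0
        for k in range(n, 0, -1):
            lam[k - 1] = lam[k] + dt * 2.0 * x[k]
        # Control update from the optimality condition, with relaxation.
        u_new = -0.5 * lam
        change = np.max(np.abs(u_new - u))
        u = relax * u + (1.0 - relax) * u_new
        if change < tol:
            break
    return x, u, n_iter
```

For this toy problem the exact solution is $x(t) = \cosh(T - t)/\cosh(T)$, so the result can be checked directly. In ML-POSC the forward pass would instead propagate the probability density and the backward pass would propagate the value function.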

5. Linear-Quadratic-Gaussian Problem without Memory Limitation

In the LQG problem of the conventional POSC, the Zakai Equation (6) and the Bellman Equation (12) are reduced to the Kalman filter and the Riccati equation, respectively [9,23]. Because the infinite-dimensional Zakai equation is reduced to the finite-dimensional Kalman filter, the LQG problem of the conventional POSC can be discussed in terms of ML-POSC. In this section, we briefly review the LQG problem of the conventional POSC, then reproduce the Kalman filter and the Riccati equation from the viewpoint of ML-POSC. The LQG problem of the conventional POSC corresponds to the LQG problem without memory limitation, as it does not consider the memory noise and cost.

5.1. Review of Partially Observable Stochastic Control

In this subsection, we briefly review the LQG problem of the conventional POSC [9,23]. The state and the observation at time evolve by the following SDEs:

where obeys the Gaussian distribution , is an arbitrary real vector, and are independent standard Wiener processes, and is the control. Here, is assumed to be invertible. The objective function is provided by the following expected cumulative cost function:

where , , and . The LQG problem of the conventional POSC is to find the optimal control function that minimizes the objective function , as follows:

In the LQG problem of the conventional POSC, the posterior probability is provided by the Gaussian distribution , and is reduced to without loss of performance.

Proposition 3

([9,23]). In the LQG problem without memory limitation, the optimal control function of POSC (33) is provided by

where and are the solutions of the following Kalman filter:

and where and . is the solution of the following Riccati equation:

where .

Proof.

The proof is shown in [9,23]. □
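As a numerical illustration of the Riccati equation in Proposition 3, the following minimal sketch integrates a matrix Riccati differential equation backwards in time with an explicit Euler scheme. It assumes the standard continuous-time linear-quadratic form $-d\Psi/dt = Q + A^{\top}\Psi + \Psi A - \Psi B R^{-1} B^{\top} \Psi$ with terminal condition $\Psi(T) = P$ and constant coefficients. The symbol names and the function `solve_riccati` are assumptions for illustration and may differ from the notation of this paper.

```python
import numpy as np


def solve_riccati(A, B, Q, R, P, T, n_steps=5000):
    """Integrate the matrix Riccati ODE backwards from t = T to t = 0.

    Assumed standard form (constant coefficients, for illustration):
        -dPsi/dt = Q + A^T Psi + Psi A - Psi B R^{-1} B^T Psi,   Psi(T) = P.
    Returns Psi(0).
    """
    dt = T / n_steps
    Psi = np.array(P, dtype=float)
    R_inv = np.linalg.inv(R)
    for _ in range(n_steps):
        rhs = Q + A.T @ Psi + Psi @ A - Psi @ B @ R_inv @ B.T @ Psi
        # Stepping backwards in time: Psi(t - dt) = Psi(t) + dt * rhs.
        Psi = Psi + dt * rhs
    return Psi
```

In the scalar case with $A = 0$ and $B = Q = R = 1$, $P = 0$, the exact solution is $\Psi(0) = \tanh(T)$, which gives a simple way to sanity-check the integrator.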

5.2. Memory-Limited Partially Observable Stochastic Control

Because the infinite-dimensional Zakai Equation (6) is reduced to the finite-dimensional Kalman filter (35) and (36), the LQG problem of the conventional POSC can be discussed in terms of ML-POSC. In this subsection, we reproduce the Kalman filter (35) and (36) and the Riccati Equation (37) from the viewpoint of ML-POSC.

ML-POSC defines the finite-dimensional memory . In the LQG problem of the conventional POSC, the memory dimension is the same as the state dimension . The controller of ML-POSC determines the control based on the memory , i.e., . The memory is assumed to evolve by the following SDE:

where , while and are the memory controls. We note that the LQG problem of the conventional POSC does not consider the memory noise. The objective function of ML-POSC is provided by the following expected cumulative cost function:

We note that the LQG problem of the conventional POSC does not consider the memory control cost. ML-POSC optimizes u, v, and based on , as follows:

In the LQG problem of the conventional POSC, the probability of the extended state (17) is provided by the Gaussian distribution . The posterior probability of the state given the memory is provided by the Gaussian distribution , where and are provided as follows:

Theorem 2.

In the LQG problem without memory limitation, the optimal control functions of ML-POSC (40) are provided by

From and , and obey the following equations:

where and . Furthermore, holds in this problem. is the solution of the Riccati Equation (37).

Proof.

The proof is shown in Appendix D. □

6. Linear-Quadratic-Gaussian Problem with Memory Limitation

The LQG problem of the conventional POSC does not consider memory limitation because it does not consider the memory noise and cost. Furthermore, because the memory dimension is restricted to the state dimension, the memory dimension cannot be determined according to a given controller. ML-POSC can generalize the LQG problem to include the memory limitation. In this section, we discuss the LQG problem with memory limitation based on ML-POSC.

6.1. Problem Formulation

In this subsection, we formulate the LQG problem with memory limitation. The state and observation SDEs are the same as in the previous section, which are provided by (30) and (31), respectively. The controller of ML-POSC determines the control based on the memory , i.e., . Unlike the LQG problem of the conventional POSC, the memory dimension is not necessarily the same as the state dimension .

The memory is assumed to evolve according to the following SDE:

where obeys the Gaussian distribution , is the standard Wiener process, and is the control. Because the initial condition is stochastic and the memory SDE (48) includes the intrinsic stochasticity , the LQG problem of ML-POSC can consider the memory noise explicitly. We note that is independent of the memory . If depends on the memory , the memory SDE (48) becomes non-linear and non-Gaussian. As a result, the optimal control functions cannot be derived explicitly in this case. In order to keep the memory SDE (48) linear and Gaussian for obtaining the optimal control functions explicitly, we restrict being independent of the memory in the LQG problem with memory limitation. The LQG problem without memory limitation is the special case in which the optimal control in (45) does not depend on the memory .

The objective function is provided by the following expected cumulative cost function:

where , , , and . Because the cost function includes , the LQG problem of ML-POSC can consider the memory control cost explicitly. ML-POSC optimizes the state control function u and the memory control function v based on the objective function , as follows:

For the sake of simplicity, we do not optimize , although this can be accomplished by considering unobservable stochastic control.

6.2. Problem Reformulation

Although the formulation of the LQG problem with memory limitation in the previous subsection clarifies its relationship with that of the LQG problem without memory limitation, it is inconvenient for further mathematical investigations. In order to resolve this problem, we reformulate the LQG problem with memory limitation based on the extended state (17). The formulation in this subsection is simpler and more general than that in the previous subsection.

In the LQG problem with memory limitation, the extended state SDE (18) is provided as follows:

where obeys the Gaussian distribution , is the standard Wiener process, and is the control. The extended state SDE (51) includes the previous state, observation, and memory SDEs (30), (31) and (48) as a special case because they can be represented as follows:

where .

The objective function (21) is provided by the following expected cumulative cost function:

where , , and . This objective function (53) includes the previous objective function (49) as a special case because it can be represented as follows:

The objective of the LQG problem with memory limitation is to find the optimal control function that minimizes the objective function , as follows:

In the following subsection, we mainly consider the formulation of this subsection rather than that of the previous subsection because it is simpler and more general. Moreover, we omit for notational simplicity.

6.3. Derivation of Optimal Control Function

In this subsection, we derive the optimal control function of the LQG problem with memory limitation by applying Theorem 1. In the LQG problem with memory limitation, the probability of the extended state s at time t is provided by the Gaussian distribution . By defining the stochastic extended state , is provided as follows:

where is defined by

By applying Theorem 1 to the LQG problem with memory limitation, we obtain the following theorem:

Theorem 3.

In the LQG problem with memory limitation, the optimal control function of ML-POSC is provided by

where (57) depends on , and and are the solutions of the following ordinary differential equations:

where and , while and are the solutions of the following ordinary differential equations:

where .

Proof.

The proof is shown in Appendix E. □

Here, (61) is the Riccati equation [9,10,23], which appears in the LQG problem without memory limitation as well (37). In contrast, (62) is a new equation of the LQG problem with memory limitation, which in this paper we call the partially observable Riccati equation. Because estimation and control are not clearly separated in the LQG problem with memory limitation, the Riccati Equation (61) for control is modified to include estimation, which corresponds to the partially observable Riccati Equation (62). As a result, the partially observable Riccati Equation (62) is able to improve estimation as well as control.

In order to support this interpretation, we analyze the partially observable Riccati Equation (62) by comparing it with the Riccati Equation (61). Because only the last term of (62) is different from (61), we denote it as follows:

can be calculated as follows:

where . Because and , and may be larger than and , respectively. Because and are the negative feedback gains of the state x and the memory z, respectively, may decrease and . Moreover, when is positive/negative, may be smaller/larger than , which may increase/decrease . A similar discussion is possible for , , and , as , , and are symmetric matrices. As a result, may decrease the following conditional covariance matrix:

which corresponds to the estimation error of the state from the memory. Therefore, the partially observable Riccati Equation (62) may improve estimation as well as control, which is different from the Riccati Equation (61).
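The estimation error of the state from the memory can be illustrated numerically. The following is a minimal sketch, assuming the conditional covariance takes the standard Gaussian conditioning form $\Sigma_{xx} - \Sigma_{xz}\Sigma_{zz}^{-1}\Sigma_{zx}$ for a jointly Gaussian extended state; the function name, the block names, and the example numbers are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def conditional_covariance(sigma, n_x):
    """Covariance of x given z for a jointly Gaussian (x, z).

    sigma is the joint covariance; its first n_x rows/columns belong to x.
    Uses the standard identity S_xx - S_xz S_zz^{-1} S_zx.
    """
    s_xx = sigma[:n_x, :n_x]
    s_xz = sigma[:n_x, n_x:]
    s_zz = sigma[n_x:, n_x:]
    # Solve S_zz W = S_zx rather than forming the inverse explicitly.
    return s_xx - s_xz @ np.linalg.solve(s_zz, s_xz.T)

# Hypothetical covariances: the only difference is the strength of the
# state-memory correlation (the off-diagonal entry).
weak = np.array([[1.0, 0.2], [0.2, 1.0]])
strong = np.array([[1.0, 0.8], [0.8, 1.0]])
cov_weak = conditional_covariance(weak, 1)
cov_strong = conditional_covariance(strong, 1)
```

With the state and memory variances held fixed, a larger magnitude of the cross-covariance leaves a smaller conditional covariance, which is the sense in which changing the covariance blocks can reduce the estimation error.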

Because the problem in Section 6.1 is specialized more than that in Section 6.2, we can carry out a more specific discussion. In the problem in Section 6.1, is the same as the solution of the Riccati equation of the conventional POSC (37), and , , and are satisfied. As a result, the memory control does not appear in the Riccati equation of ML-POSC (61). In contrast, because of the last term of the partially observable Riccati Equation (62), is not the solution of the Riccati Equation (37), and , , and are satisfied. As a result, the memory control appears in the partially observable Riccati Equation (62), which may improve the state estimation.

6.4. Comparison with Completely Observable Stochastic Control

In this subsection, we compare ML-POSC with COSC of the extended state. By applying Proposition 2 in the LQG problem, the optimal control function of COSC of the extended state can be obtained as follows:

Proposition 4 ([10,23]).

In the LQG problem, the optimal control function of COSC of the extended state is provided by

where is the solution of the Riccati Equation (61).

Proof.

The proof is shown in [10,23]. □

The optimal control function of COSC of the extended state (66) can be derived intuitively from that of ML-POSC (58). In ML-POSC, is the estimator of the stochastic extended state. In COSC of the extended state, because the stochastic extended state is completely observable, its estimator is provided by , which corresponds to . By changing the definition of K from (57) to , the partially observable Riccati Equation (62) is reduced to the Riccati Equation (61), and the optimal control function of ML-POSC (58) is reduced to that of COSC (66). As a result, the optimal control function of ML-POSC (58) can be interpreted as the generalization of that of COSC (66).

While the second term is the same between (58) and (66), the first term is different. The second term is the control of the expected extended state , which does not depend on the realization. In contrast, the first term is the control of the stochastic extended state , which depends on the realization. The first term has two different points: (i) The estimators of the stochastic extended state in COSC and ML-POSC are provided by and , respectively, which is reasonable because ML-POSC needs to estimate the state from the memory; and (ii) The control gains of the stochastic extended state in COSC and ML-POSC are provided by and , respectively. While improves only control, improves estimation as well as control.

6.5. Numerical Algorithm

In the LQG problem, the partial differential equations reduce to ordinary differential equations: the FP Equation (23) reduces to (59) and (60), and the HJB Equation (28) reduces to (61) and (62). As a result, the optimal control function (58) can be obtained more easily in the LQG problem.

The Riccati Equation (61) can be solved backward from its terminal condition. In contrast, the partially observable Riccati Equation (62) cannot be solved in the same way, because it depends on the forward equation (60) through K (57). Because the forward equation (60) in turn depends on the backward equation (62), the two must be solved simultaneously.
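As a minimal sketch of the backward solve, the code below integrates a Riccati equation from its terminal condition with explicit Euler steps. It assumes that (61) has the standard LQ form $-\dot{\Psi} = Q + A^{\top}\Psi + \Psi A - \Psi B R^{-1} B^{\top}\Psi$ with $\Psi(T) = P$; the matrix names, the function name, and the scalar example are assumptions made for illustration.

```python
import numpy as np

def solve_riccati_backward(A, B, Q, R, P, T, n_steps):
    """Integrate -dPsi/dt = Q + A'Psi + Psi A - Psi B R^{-1} B' Psi backward.

    Returns an array psi with psi[k] approximating Psi(k * T / n_steps).
    Explicit Euler is used for clarity, not for accuracy.
    """
    dt = T / n_steps
    G = B @ np.linalg.inv(R) @ B.T
    psi = np.empty((n_steps + 1,) + P.shape)
    psi[-1] = P  # terminal condition Psi(T) = P
    for k in range(n_steps, 0, -1):
        S = psi[k]
        rhs = Q + A.T @ S + S @ A - S @ G @ S
        # Stepping from t to t - dt adds dt * rhs because -dPsi/dt = rhs.
        psi[k - 1] = S + dt * rhs
    return psi

# Scalar check (hypothetical values): A = 0, B = Q = R = 1, P = 0 gives
# -dpsi/dt = 1 - psi^2, whose closed-form solution is tanh(T - t).
one = np.array([[1.0]])
zero = np.array([[0.0]])
psi = solve_riccati_backward(A=zero, B=one, Q=one, R=one, P=zero,
                             T=2.0, n_steps=2000)
```

A single backward pass suffices here because the right-hand side depends only on the unknown itself. The coupled pair (60) and (62) does not admit this one-pass treatment.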

A similar problem appears in mean-field games and control, and numerous numerical methods have been developed to deal with it [33]. In this paper, we solve the system of (60) and (62) using the forward–backward sweep method, which computes (60) and (62) alternately [33,34]. In ML-POSC, the convergence of the forward–backward sweep method is guaranteed [34].
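The alternation can be sketched as follows. This is an illustrative sketch under stated assumptions, not the implementation used for the experiments. Because the equations are not reproduced here, the code assumes the following forms: the estimator gain $K$ has blocks $[[O, \Sigma_{xz}\Sigma_{zz}^{-1}], [O, I]]$, the covariance obeys $\dot{\Sigma} = \sigma\sigma^{\top} + F\Sigma + \Sigma F^{\top}$ with $F = A - BR^{-1}B^{\top}\Pi K$, and the backward equation is the standard Riccati equation plus the term $(I-K)^{\top}\Pi BR^{-1}B^{\top}\Pi(I-K)$. All names and the example system are hypothetical, and explicit Euler steps are used for brevity.

```python
import numpy as np

def estimator_gain(Sigma, n_x):
    """Assumed form of K: estimate of (s - mu) from the memory block z."""
    n = Sigma.shape[0]
    K = np.zeros((n, n))
    K[:n_x, n_x:] = Sigma[:n_x, n_x:] @ np.linalg.inv(Sigma[n_x:, n_x:])
    K[n_x:, n_x:] = np.eye(n - n_x)
    return K

def forward_backward_sweep(A, B, Q, R, P, sig, Sigma0, n_x, T,
                           n_steps=200, max_iter=100, tol=1e-8):
    dt = T / n_steps
    n = A.shape[0]
    I = np.eye(n)
    G = B @ np.linalg.inv(R) @ B.T
    D = sig @ sig.T
    # Closed-loop drift at every time step; the first sweep is uncontrolled.
    F = np.tile(np.asarray(A, dtype=float), (n_steps + 1, 1, 1))
    Pi = np.empty((n_steps + 1, n, n))
    prev = None
    for it in range(1, max_iter + 1):
        # Forward pass: covariance under the feedback of the previous sweep.
        Sigma = np.empty((n_steps + 1, n, n))
        Sigma[0] = Sigma0
        for k in range(n_steps):
            S = Sigma[k]
            Sigma[k + 1] = S + dt * (D + F[k] @ S + S @ F[k].T)
        # Backward pass: assumed partially observable Riccati equation.
        Pi[-1] = P
        for k in range(n_steps, 0, -1):
            K = estimator_gain(Sigma[k], n_x)
            M = Pi[k]
            extra = (I - K).T @ M @ G @ M @ (I - K)
            Pi[k - 1] = M + dt * (Q + A.T @ M + M @ A - M @ G @ M + extra)
        # Update the feedback that the next forward pass will use.
        for k in range(n_steps + 1):
            F[k] = A - G @ Pi[k] @ estimator_gain(Sigma[k], n_x)
        # Stop once successive covariance trajectories agree.
        if prev is not None and np.max(np.abs(Sigma - prev)) < tol:
            break
        prev = Sigma
    return Sigma, Pi, it

# Hypothetical two-dimensional example: scalar state x and scalar memory z.
A = np.array([[1.0, 0.0], [1.0, 0.0]])
B = np.eye(2)
Q = np.diag([1.0, 0.0])
R = np.eye(2)
P = np.zeros((2, 2))
Sigma, Pi, n_iter = forward_backward_sweep(A, B, Q, R, P, sig=np.eye(2),
                                           Sigma0=np.eye(2), n_x=1, T=1.0)
```

The sketch keeps the feedback fixed during each forward pass and recomputes it only after the backward pass, so each pass integrates a single ordinary differential equation in one direction. The loop stops when successive covariance trajectories agree within `tol` or when `max_iter` is reached; the discretized sketch itself makes no convergence claim.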

7. Numerical Experiments

In this section, we demonstrate the effectiveness of ML-POSC using numerical experiments on the LQG problem with memory limitation as well as on the non-LQG problem.

7.1. LQG Problem with Memory Limitation

In this subsection, we show the significance of the partially observable Riccati Equation (62) by a numerical experiment of the LQG problem with memory limitation. We consider the state , the observation , and the memory , which evolve by the following SDEs:

where and obey standard Gaussian distributions, is an arbitrary real number, and are independent standard Wiener processes, and and are the controls. The objective function to be minimized is provided as follows:

Therefore, the objective of this problem is to minimize the state variance using small state and memory controls. Because this problem includes the memory control cost, it corresponds to the LQG problem with memory limitation.

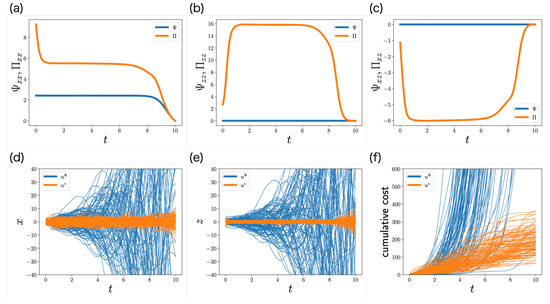

Figure 2a–c shows the trajectories of and ; and are larger than and , respectively, and is smaller than , which is consistent with our discussion in Section 6.3. Therefore, the partially observable Riccati equation may reduce the estimation error of the state from the memory. Moreover, while the memory control does not appear in the Riccati equation (), it appears in the partially observable Riccati equation (, ), which is consistent with our discussion in Section 6.3. As a result, the memory control plays an important role in estimating the state from the memory.

Figure 2.

Numerical simulation of the LQG problem with memory limitation. (a–c) Trajectories of the elements of and . Because and , and are not visualized. (d–f) Stochastic behaviors of the state (d), the memory (e), and the cumulative cost (f) for 100 samples. The expectation of the cumulative cost at corresponds to the objective function (70). Blue and orange curves are controlled by (71) and (58), respectively.

In order to clarify the significance of the partially observable Riccati Equation (62), we compare the performance of the optimal control function (58) with that of the following control function:

in which is replaced with . This result is shown in Figure 2d–f. In the control function (71), the distributions of the state and the memory are unstable, and the cumulative cost diverges. By contrast, in the optimal control function (58), the distributions of the state and memory are stable, and the cumulative cost is smaller. This result indicates that the partially observable Riccati Equation (62) plays an important role in the LQG problem with memory limitation.

7.2. Non-LQG Problem

In this subsection, we investigate the potential effectiveness of ML-POSC for a non-LQG problem by comparing it with the local LQG approximation of the conventional POSC [3,4]. We consider the state and the observation , which evolve according to the following SDEs:

where obeys the Gaussian distribution , is an arbitrary real number, and are independent standard Wiener processes, and is the control. The objective function to be minimized is provided as follows:

where

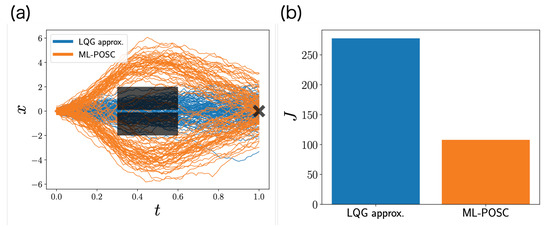

The cost function is high on the black rectangles in Figure 3a, which represent the obstacles. In addition, the terminal cost function is the lowest on the black cross in Figure 3a, which represents the desirable goal. Therefore, the system should avoid the obstacles and reach the goal with small control. Because the cost function is non-quadratic, this is a non-LQG problem, which cannot be solved exactly by the conventional POSC.

Figure 3.

Numerical simulation of the non-LQG problem for the local LQG approximation (blue) and ML-POSC (orange). (a) Stochastic behaviors of state for 100 samples. The black rectangles and cross represent the obstacles and goal, respectively. (b) The objective function (74), computed from 100 samples.

In the local LQG approximation of the conventional POSC [3,4], the Zakai equation and the Bellman equation are locally approximated by the Kalman filter and the Riccati equation, respectively. Because the Bellman equation is reduced to the Riccati equation, the local LQG approximation can be solved numerically even in the non-LQG problem.

ML-POSC determines the control based on the memory , i.e., . The memory dynamics is formulated with the following SDE:

where . For the sake of simplicity, the memory control is not considered.

Figure 3 shows the numerical results comparing the local LQG approximation and ML-POSC. Because the local LQG approximation reduces the Bellman equation to the Riccati equation by ignoring non-LQG information, it cannot avoid the obstacles, which results in a higher objective function. In contrast, because ML-POSC reduces the Bellman equation to the HJB equation while maintaining non-LQG information, it can avoid the obstacles, which results in a lower objective function. Therefore, our numerical experiment shows that ML-POSC can be superior to the local LQG approximation.

8. Discussion

In this work, we propose ML-POSC, which is an alternative theoretical framework to the conventional POSC. ML-POSC first formulates the finite-dimensional and stochastic memory dynamics explicitly, then optimizes the memory dynamics considering the memory cost. As a result, unlike the conventional POSC, ML-POSC can consider memory limitation as well as incomplete information. Furthermore, because the optimal control function of ML-POSC is obtained by solving the system of HJB-FP equations, ML-POSC can be solved in practice even in non-LQG problems. ML-POSC can generalize the LQG problem to include memory limitation. Because estimation and control are not clearly separated in the LQG problem with memory limitation, the Riccati equation can be modified to the partially observable Riccati equation, which improves estimation as well as control. Furthermore, ML-POSC can provide a better result than the local LQG approximation in a non-LQG problem, as ML-POSC reduces the Bellman equation while maintaining non-LQG information.

ML-POSC is effective for the state estimation problem as well, which is a part of the POSC problem. Although the state estimation problem can be solved in principle by the Zakai equation [38,39,40], it cannot be solved directly, as the Zakai equation is infinite-dimensional. In order to resolve this problem, a particle filter is often used to approximate the solution of the infinite-dimensional Zakai equation with a finite number of particles [38,39,40]. However, because the performance of the particle filter is guaranteed only in the limit of a large number of particles, a particle filter may not be practical in cases where the available memory size is severely limited. Furthermore, a particle filter cannot take the memory noise and cost into account. ML-POSC resolves these problems, as it can optimize the state estimation under memory limitation.

ML-POSC may be extended from a single-agent system to a multi-agent system. POSC of a multi-agent system is called decentralized stochastic control (DSC) [41,42,43], which consists of a system and multiple controllers. In DSC, each controller needs to estimate the controls of the other controllers as well as the state of the system, which is essentially different from the conventional POSC. Because the estimation among the controllers is generally intractable, the conventional POSC approach cannot be straightforwardly extended to DSC. In contrast, ML-POSC compresses the observation history into the finite-dimensional memory, which simplifies estimation among the controllers. Therefore, ML-POSC may provide an effective approach to DSC. Indeed, the finite-state controller, whose underlying idea is similar to that of ML-POSC, plays a key role in extending POMDP from a single-agent system to a multi-agent system [22,44,45,46,47,48]. ML-POSC may be extended to a multi-agent system in a similar way to the finite-state controller.

ML-POSC can be naturally extended to the mean-field control setting [28,29,30] because ML-POSC is solved based on the mean-field control theory. Therefore, ML-POSC can be applied to an infinite number of homogeneous agents. Furthermore, ML-POSC can be extended to a risk-sensitive setting, as this is a special case of the mean-field control setting [28,29,30]. Therefore, ML-POSC can consider the variance of the cost as well as its expectation.

Nonetheless, more efficient algorithms are needed in order to solve ML-POSC with a high-dimensional state and memory. In mean-field games and control, neural network-based algorithms have recently been proposed that can solve high-dimensional problems efficiently [49,50]. By extending these algorithms, it might be possible to solve high-dimensional ML-POSC efficiently. Furthermore, unlike in mean-field games and control, the coupling of the HJB-FP equations in ML-POSC is limited to the optimal control function. By exploiting this property, more efficient algorithms for ML-POSC may be proposed [34].

Author Contributions

Conceptualization, Formal analysis, Funding acquisition, Writing—original draft: T.T. and T.J.K.; Software, Visualization: T.T. All authors have read and agreed to the published version of the manuscript.

Funding

The first author received a JSPS Research Fellowship (Grant No. 21J20436). This work was supported by JSPS KAKENHI (Grant No. 19H05799) and JST CREST (Grant No. JPMJCR2011).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We thank Kenji Kashima and Kaito Ito for useful discussions.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| COSC | Completely Observable Stochastic Control |
| POSC | Partially Observable Stochastic Control |
| ML-POSC | Memory-Limited Partially Observable Stochastic Control |
| POMDP | Partially Observable Markov Decision Process |
| DSC | Decentralized Stochastic Control |
| LQG | Linear-Quadratic-Gaussian |
| HJB | Hamilton–Jacobi–Bellman |
| FP | Fokker–Planck |
| SDE | Stochastic Differential Equation |

Appendix A. Proof of Lemma 1

We define the value function as follows:

where is the solution of the FP Equation (23), where . Then, can be calculated as follows:

By rearranging the above equation, the following equation is obtained:

Because

the following equation is obtained:

From the definition of the Hamiltonian (10), the following Bellman equation is obtained:

Because the control u is the function of the memory z in ML-POSC, the minimization by u can be exchanged with the expectation by as follows:

Because the optimal control function is provided by the right-hand side of the Bellman Equation (A7) [10], the optimal control function is provided by

Because the FP Equation (23) is deterministic, the optimal control function is provided by .

Appendix B. Proof of Theorem 1

We first define

which satisfies . Differentiating the Bellman Equation (26) with respect to p, the following equation is obtained:

Because

the following equation is obtained:

We then define

where is the solution of the FP Equation (23). The time derivative of can be calculated as follows:

Appendix C. Proof of Proposition 2

From the proof of Lemma 1 (Appendix A), the Bellman Equation (A6) is obtained. Because the control u is the function of the extended state s in COSC of the extended state, the minimization by u can be exchanged with the expectation by as follows:

Because the optimal control function is provided by the right-hand side of the Bellman Equation (A16) [10], the optimal control function is provided by

Because the FP Equation (23) is deterministic, the optimal control function is provided by . The rest of the proof is the same as the proof of Theorem 1 (Appendix B).

Appendix D. Proof of Theorem 2

From Theorem 1, the optimal control functions , , and are provided by the minimization of the conditional expectation of the Hamiltonian, as follows:

In the LQG problem of the conventional POSC, the Hamiltonian (10) is provided by

From

the optimal control functions are provided by

We assume that is provided by the Gaussian distribution

and is provided by the quadratic function

From the initial condition of the FP equation,

are satisfied. From the terminal condition of the HJB equation, , , and are satisfied. In this case, , , and can be calculated as follows:

We then assume that the following equations are satisfied:

In this case, , , , , and can be calculated as follows:

Because is arbitrary when , we consider with the following equation:

In this case, the extended state SDE is provided by the following equation:

where , and

Because the drift and diffusion coefficients of (A41) are linear and constant with respect to s, respectively, becomes the Gaussian distribution, which is consistent with our assumption (A26), while and evolve by the following ordinary differential equations:

If and are satisfied, and are satisfied as well, which is consistent with our assumptions of and .

From and , the dynamics of is provided by

where . From and , the dynamics of is provided by

where . We note that (A46) and (A47) correspond to the Kalman filter (35) and (36).

By substituting , , , and into the HJB Equation (28), we obtain the following ordinary differential equations:

where , , and . If , , and satisfy (A48), (A49), and (A50), respectively, the HJB Equation (28) is satisfied, which is consistent with our assumption (A27). We note that (A48) corresponds to the Riccati Equation (37).

Appendix E. Proof of Theorem 3

From Theorem 1, the optimal control function is provided by the minimization of the conditional expectation of the Hamiltonian, as follows:

In the LQG problem with memory limitation, the Hamiltonian (10) is provided as follows:

From

the optimal control function is provided by

We assume that is provided by the Gaussian distribution

and is provided by the quadratic function

From the initial condition of the FP equation, and are satisfied. From the terminal condition of the HJB equation, , , and are satisfied. In this case, the optimal control function (A54) can be calculated as follows:

where we use (56). Because the optimal control function (A57) is linear with respect to , is the Gaussian distribution, which is consistent with our assumption (A55).

By substituting (A56) and (A57) into the HJB Equation (28), we obtain the following ordinary differential equations:

where . If , , and satisfy (A58), (A59), and (A60), respectively, the HJB Equation (28) is satisfied, which is consistent with our assumption (A56).

By defining by , the optimal control function (A57) can be calculated as follows:

In this case, obeys the following ordinary differential equation:

By defining , the optimal control function (A61) can be calculated as follows:

References

- Fox, R.; Tishby, N. Minimum-information LQG control Part I: Memoryless controllers. In Proceedings of the 2016 IEEE 55th Conference on Decision and Control (CDC), Las Vegas, NV, USA, 12–14 December 2016; pp. 5610–5616.

- Fox, R.; Tishby, N. Minimum-information LQG control Part II: Retentive controllers. In Proceedings of the 2016 IEEE 55th Conference on Decision and Control (CDC), Las Vegas, NV, USA, 12–14 December 2016; pp. 5603–5609.

- Li, W.; Todorov, E. An Iterative Optimal Control and Estimation Design for Nonlinear Stochastic System. In Proceedings of the 45th IEEE Conference on Decision and Control, San Diego, CA, USA, 13–15 December 2006; pp. 3242–3247.

- Li, W.; Todorov, E. Iterative linearization methods for approximately optimal control and estimation of non-linear stochastic system. Int. J. Control 2007, 80, 1439–1453.

- Nakamura, K.; Kobayashi, T.J. Connection between the Bacterial Chemotactic Network and Optimal Filtering. Phys. Rev. Lett. 2021, 126, 128102.

- Nakamura, K.; Kobayashi, T.J. Optimal sensing and control of run-and-tumble chemotaxis. Phys. Rev. Res. 2022, 4, 013120.

- Pezzotta, A.; Adorisio, M.; Celani, A. Chemotaxis emerges as the optimal solution to cooperative search games. Phys. Rev. E 2018, 98, 042401.

- Borra, F.; Cencini, M.; Celani, A. Optimal collision avoidance in swarms of active Brownian particles. J. Stat. Mech. Theory Exp. 2021, 2021, 083401.

- Bensoussan, A. Stochastic Control of Partially Observable Systems; Cambridge University Press: Cambridge, UK, 1992.

- Yong, J.; Zhou, X.Y. Stochastic Controls; Springer: New York, NY, USA, 1999.

- Nisio, M. Stochastic Control Theory. In Probability Theory and Stochastic Modelling; Springer: Tokyo, Japan, 2015; Volume 72.

- Fabbri, G.; Gozzi, F.; Święch, A. Stochastic Optimal Control in Infinite Dimension. In Probability Theory and Stochastic Modelling; Springer International Publishing: Cham, Switzerland, 2017; Volume 82.

- Bensoussan, A.; Frehse, J.; Yam, S.C.P. The Master equation in mean field theory. J. de Math. Pures et Appl. 2015, 103, 1441–1474.

- Bensoussan, A.; Frehse, J.; Yam, S.C.P. On the interpretation of the Master Equation. Stoch. Process. Their Appl. 2017, 127, 2093–2137.

- Bensoussan, A.; Yam, S.C.P. Mean field approach to stochastic control with partial information. ESAIM Control Optim. Calc. Var. 2021, 27, 89.

- Hansen, E. An Improved Policy Iteration Algorithm for Partially Observable MDPs. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 1998; Volume 10.

- Hansen, E.A. Solving POMDPs by Searching in Policy Space. In Proceedings of the Fourteenth Conference on Uncertainty in Artificial Intelligence, Madison, WI, USA, 24–26 July 1998; pp. 211–219.

- Kaelbling, L.P.; Littman, M.L.; Cassandra, A.R. Planning and acting in partially observable stochastic domains. Artif. Intell. 1998, 101, 99–134.

- Meuleau, N.; Kim, K.E.; Kaelbling, L.P.; Cassandra, A.R. Solving POMDPs by Searching the Space of Finite Policies. In Proceedings of the Fifteenth Conference on Uncertainty in Artificial Intelligence, Stockholm, Sweden, 30 July–1 August 1999; pp. 417–426.

- Meuleau, N.; Peshkin, L.; Kim, K.E.; Kaelbling, L.P. Learning Finite-State Controllers for Partially Observable Environments. In Proceedings of the Fifteenth Conference on Uncertainty in Artificial Intelligence, Stockholm, Sweden, 30 July–1 August 1999; pp. 427–436.

- Poupart, P.; Boutilier, C. Bounded Finite State Controllers. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2003; Volume 16.

- Amato, C.; Bonet, B.; Zilberstein, S. Finite-State Controllers Based on Mealy Machines for Centralized and Decentralized POMDPs. Proc. AAAI Conf. Artif. Intell. 2010, 24, 1052–1058.

- Bensoussan, A. Estimation and Control of Dynamical Systems. In Interdisciplinary Applied Mathematics; Springer International Publishing: Cham, Switzerland, 2018; Volume 48.

- Laurière, M.; Pironneau, O. Dynamic Programming for Mean-Field Type Control. J. Optim. Theory Appl. 2016, 169, 902–924.

- Pham, H.; Wei, X. Bellman equation and viscosity solutions for mean-field stochastic control problem. ESAIM Control Optim. Calc. Var. 2018, 24, 437–461.

- Kushner, H.J.; Dupuis, P.G. Numerical Methods for Stochastic Control Problems in Continuous Time; Springer: New York, NY, USA, 1992.

- Fleming, W.H.; Soner, H.M. Controlled Markov Processes and Viscosity Solutions, 2nd ed.; Number 25 in Applications of mathematics; Springer: New York, NY, USA, 2006.

- Bensoussan, A.; Frehse, J.; Yam, P. Mean Field Games and Mean Field Type Control Theory; Springer Briefs in Mathematics; Springer: New York, NY, USA, 2013.

- Carmona, R.; Delarue, F. Probabilistic Theory of Mean Field Games with Applications I; Number volume 83 in Probability theory and stochastic modelling; Springer Nature: Cham, Switzerland, 2018.

- Carmona, R.; Delarue, F. Probabilistic Theory of Mean Field Games with Applications II. In Probability Theory and Stochastic Modelling; Springer International Publishing: Cham, Switzerland, 2018; Volume 84.

- Achdou, Y. Finite Difference Methods for Mean Field Games. In Hamilton-Jacobi Equations: Approximations, Numerical Analysis and Applications: Cetraro, Italy 2011, Editors: Paola Loreti, Nicoletta Anna Tchou; Lecture Notes in Mathematics; Achdou, Y., Barles, G., Ishii, H., Litvinov, G.L., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 1–47.

- Achdou, Y.; Laurière, M. Mean Field Games and Applications: Numerical Aspects. In Mean Field Games: Cetraro, Italy 2019; Lecture Notes in Mathematics; Achdou, Y., Cardaliaguet, P., Delarue, F., Porretta, A., Santambrogio, F., Cardaliaguet, P., Porretta, A., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 249–307.

- Laurière, M. Numerical Methods for Mean Field Games and Mean Field Type Control. Mean Field Games 2021, 78, 221.

- Tottori, T.; Kobayashi, T.J. Pontryagin’s Minimum Principle and Forward-Backward Sweep Method for the System of HJB-FP Equations in Memory-Limited Partially Observable Stochastic Control. arXiv 2022, arXiv:2210.13040.

- Carlini, E.; Silva, F.J. Semi-Lagrangian schemes for mean field game models. In Proceedings of the 52nd IEEE Conference on Decision and Control, Firenze, Italy, 10–13 December 2013; pp. 3115–3120.

- Carlini, E.; Silva, F.J. A Fully Discrete Semi-Lagrangian Scheme for a First Order Mean Field Game Problem. SIAM J. Numer. Anal. 2014, 52, 45–67.

- Carlini, E.; Silva, F.J. A semi-Lagrangian scheme for a degenerate second order mean field game system. Discret. Contin. Dyn. Syst. 2015, 35, 4269.

- Crisan, D.; Doucet, A. A survey of convergence results on particle filtering methods for practitioners. IEEE Trans. Signal Process. 2002, 50, 736–746.

- Budhiraja, A.; Chen, L.; Lee, C. A survey of numerical methods for nonlinear filtering problems. Phys. D Nonlinear Phenom. 2007, 230, 27–36.

- Bain, A.; Crisan, D. Fundamentals of Stochastic Filtering. In Stochastic Modelling and Applied Probability; Springer: New York, NY, USA, 2009; Volume 60.

- Nayyar, A.; Mahajan, A.; Teneketzis, D. Decentralized Stochastic Control with Partial History Sharing: A Common Information Approach. IEEE Trans. Autom. Control 2013, 58, 1644–1658.

- Charalambous, C.D.; Ahmed, N.U. Centralized Versus Decentralized Optimization of Distributed Stochastic Differential Decision Systems With Different Information Structures—Part I: A General Theory. IEEE Trans. Autom. Control 2017, 62, 1194–1209.

- Charalambous, C.D.; Ahmed, N.U. Centralized Versus Decentralized Optimization of Distributed Stochastic Differential Decision Systems With Different Information Structures—Part II: Applications. IEEE Trans. Autom. Control 2018, 63, 1913–1928.

- Oliehoek, F.A.; Amato, C. A Concise Introduction to Decentralized POMDPs; SpringerBriefs in Intelligent Systems; Springer International Publishing: Cham, Switzerland, 2016.

- Bernstein, D.S. Bounded Policy Iteration for Decentralized POMDPs. In Proceedings of the Nineteenth International Joint Conference on Artificial Intelligence, Edinburgh, UK, 30 July–5 August 2005; pp. 1287–1292.

- Bernstein, D.S.; Amato, C.; Hansen, E.A.; Zilberstein, S. Policy Iteration for Decentralized Control of Markov Decision Processes. J. Artif. Intell. Res. 2009, 34, 89–132.

- Amato, C.; Bernstein, D.S.; Zilberstein, S. Optimizing Memory-Bounded Controllers for Decentralized POMDPs. In Proceedings of the Twenty-Third Conference on Uncertainty in Artificial Intelligence, Vancouver, BC, Canada, 19–22 July 2007; pp. 1–8.

- Tottori, T.; Kobayashi, T.J. Forward and Backward Bellman Equations Improve the Efficiency of the EM Algorithm for DEC-POMDP. Entropy 2021, 23, 551.

- Ruthotto, L.; Osher, S.J.; Li, W.; Nurbekyan, L.; Fung, S.W. A machine learning framework for solving high-dimensional mean field game and mean field control problems. Proc. Natl. Acad. Sci. USA 2020, 117, 9183–9193.

- Lin, A.T.; Fung, S.W.; Li, W.; Nurbekyan, L.; Osher, S.J. Alternating the population and control neural networks to solve high-dimensional stochastic mean-field games. Proc. Natl. Acad. Sci. USA 2021, 118, e2024713118.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).