A Dual Attention Encoding Network Using Gradient Profile Loss for Oil Spill Detection Based on SAR Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

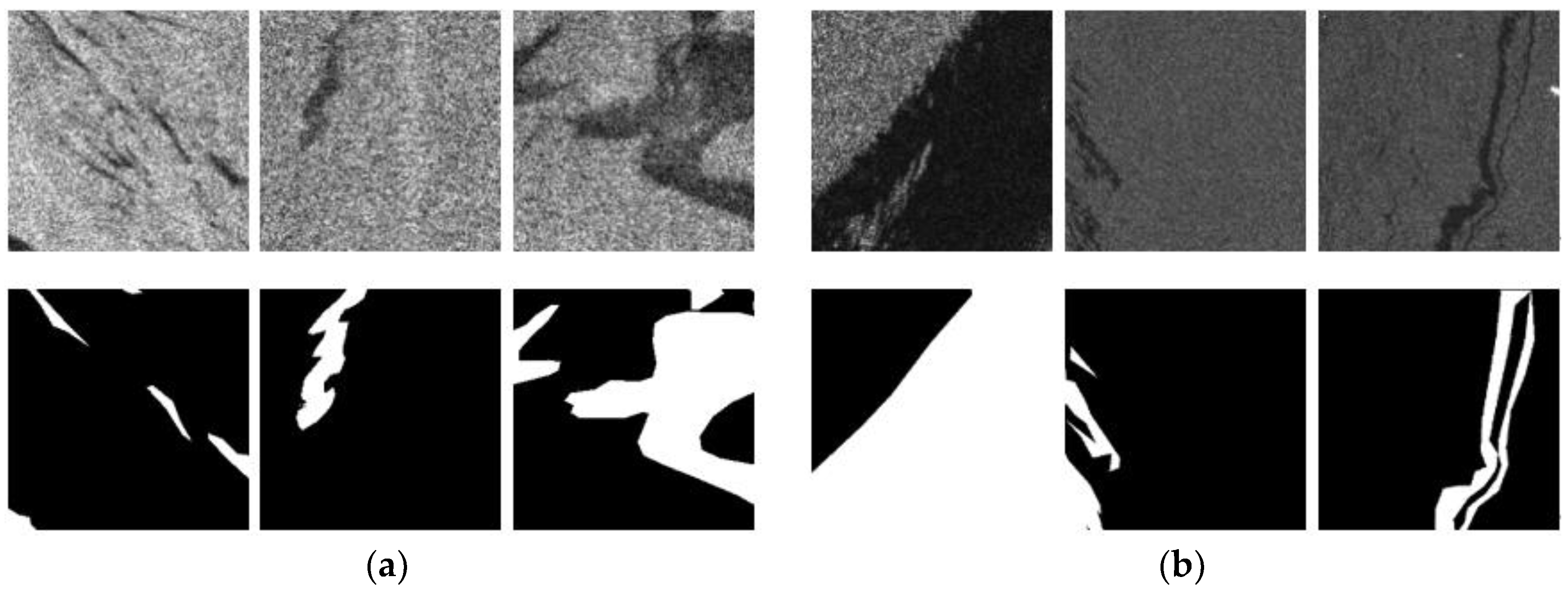

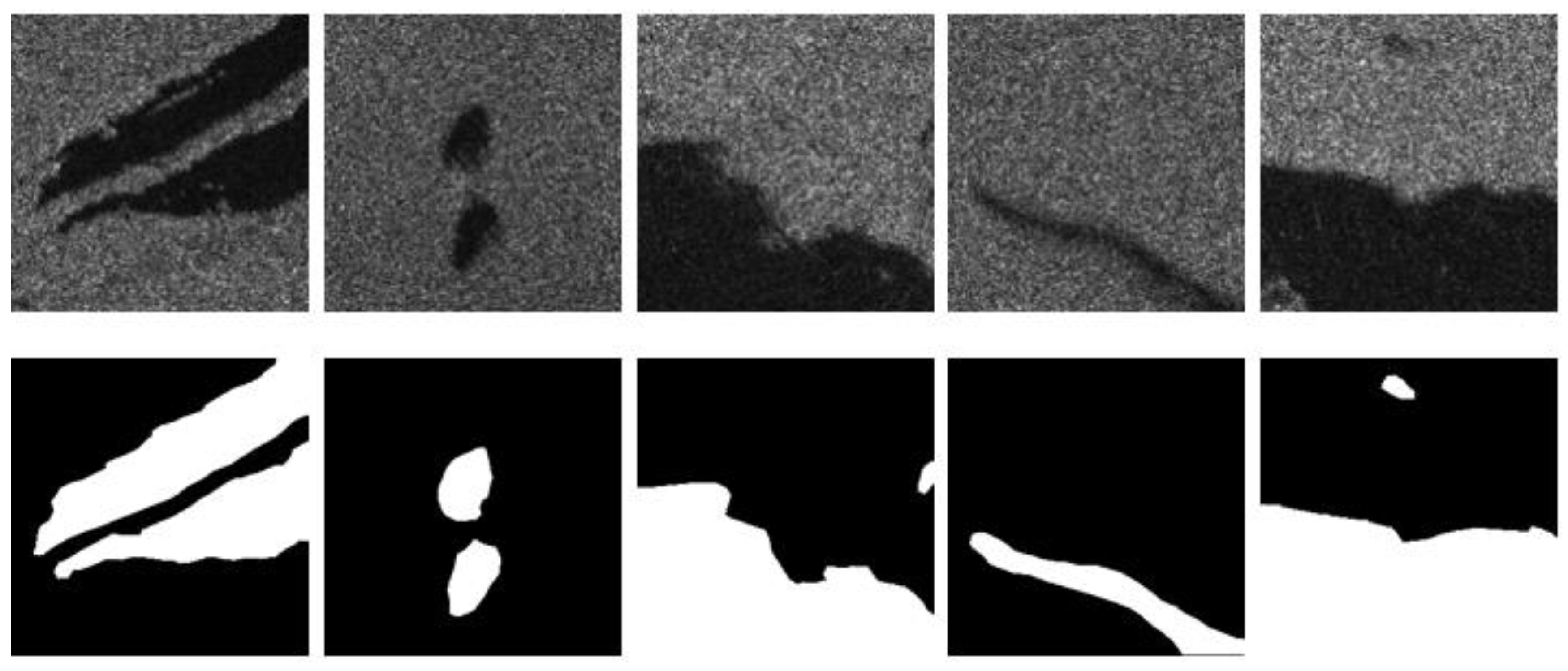

2.1.1. SOS Dataset

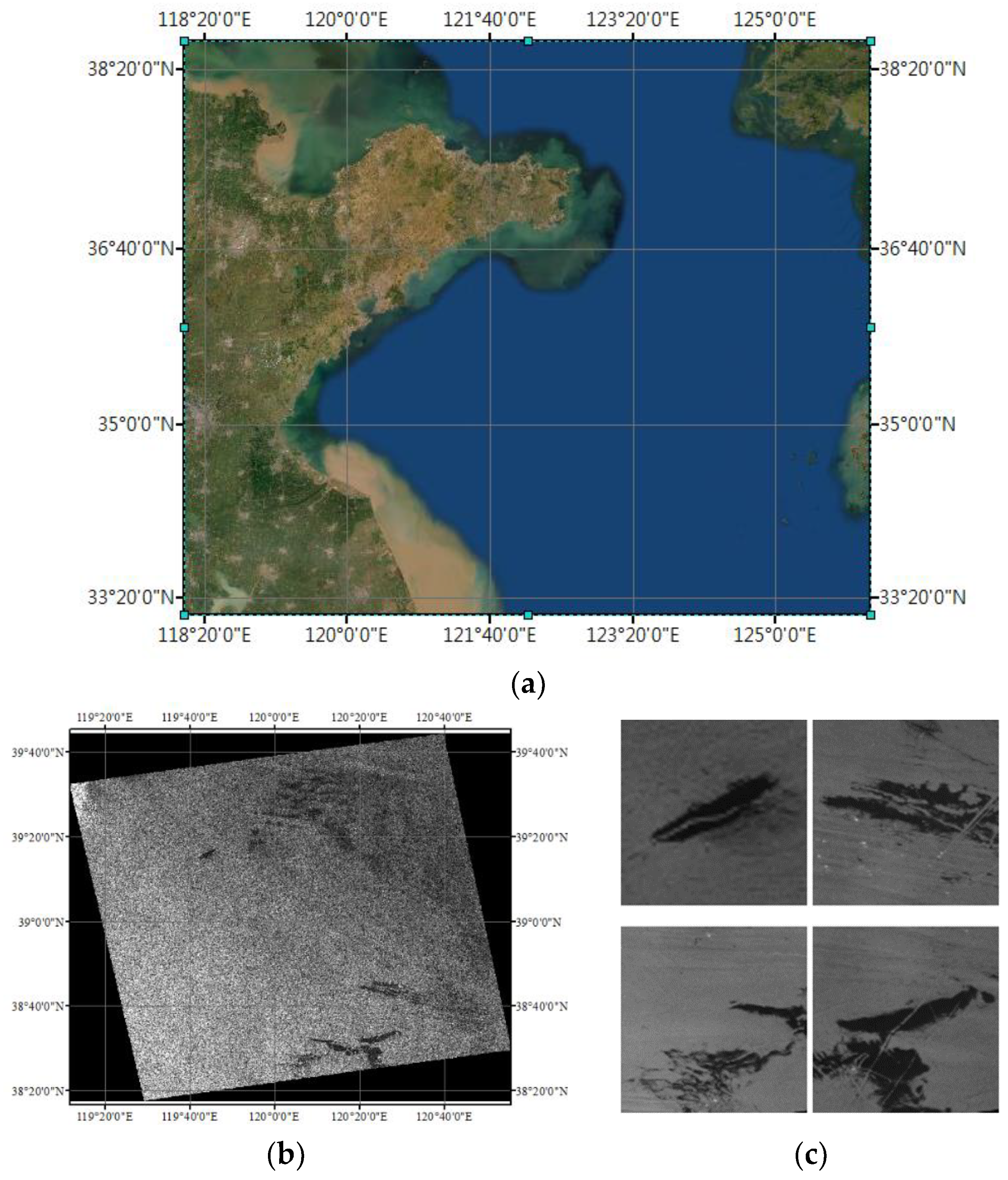

2.1.2. GaoFen-3 Dataset

2.2. Dual Attention Encoding Network (DAENet)

2.2.1. Encoder–Decoder Architecture

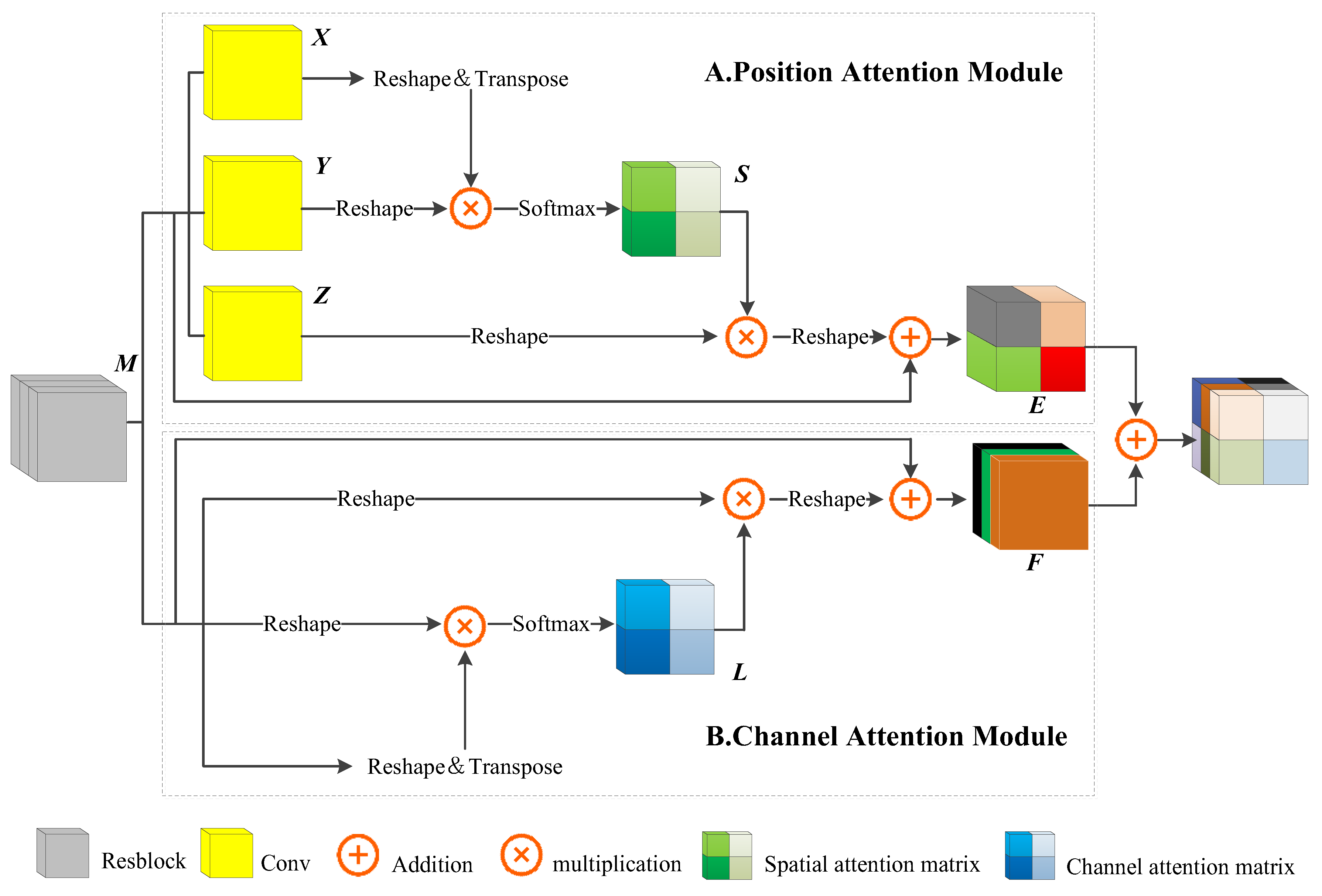

2.2.2. Dual Attention Encoding Module (DAEM)

2.3. Gradient Profile Loss

3. Results and Discussion

3.1. Evaluation Criteria

3.2. Comparison of DAENet with Other Technologies

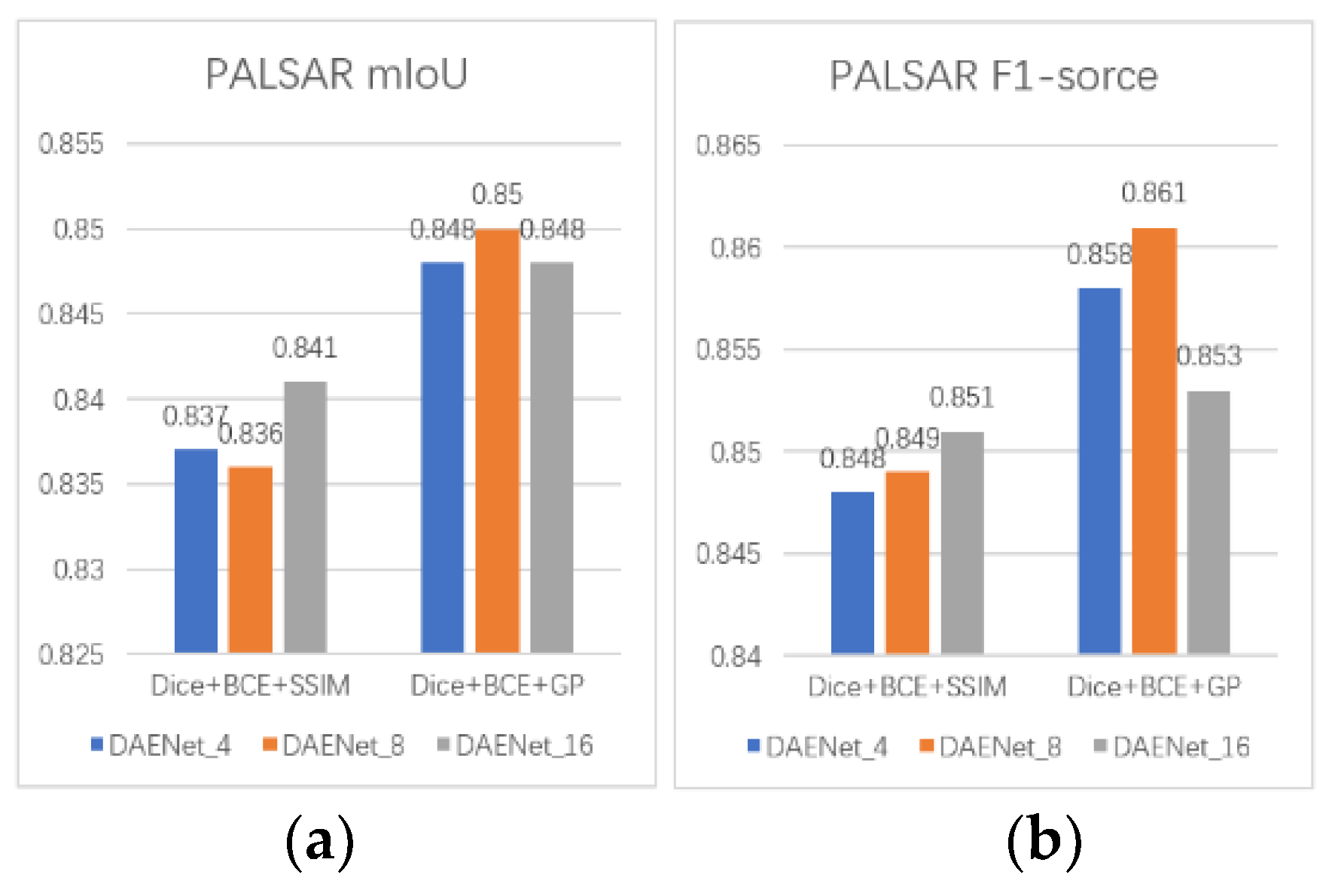

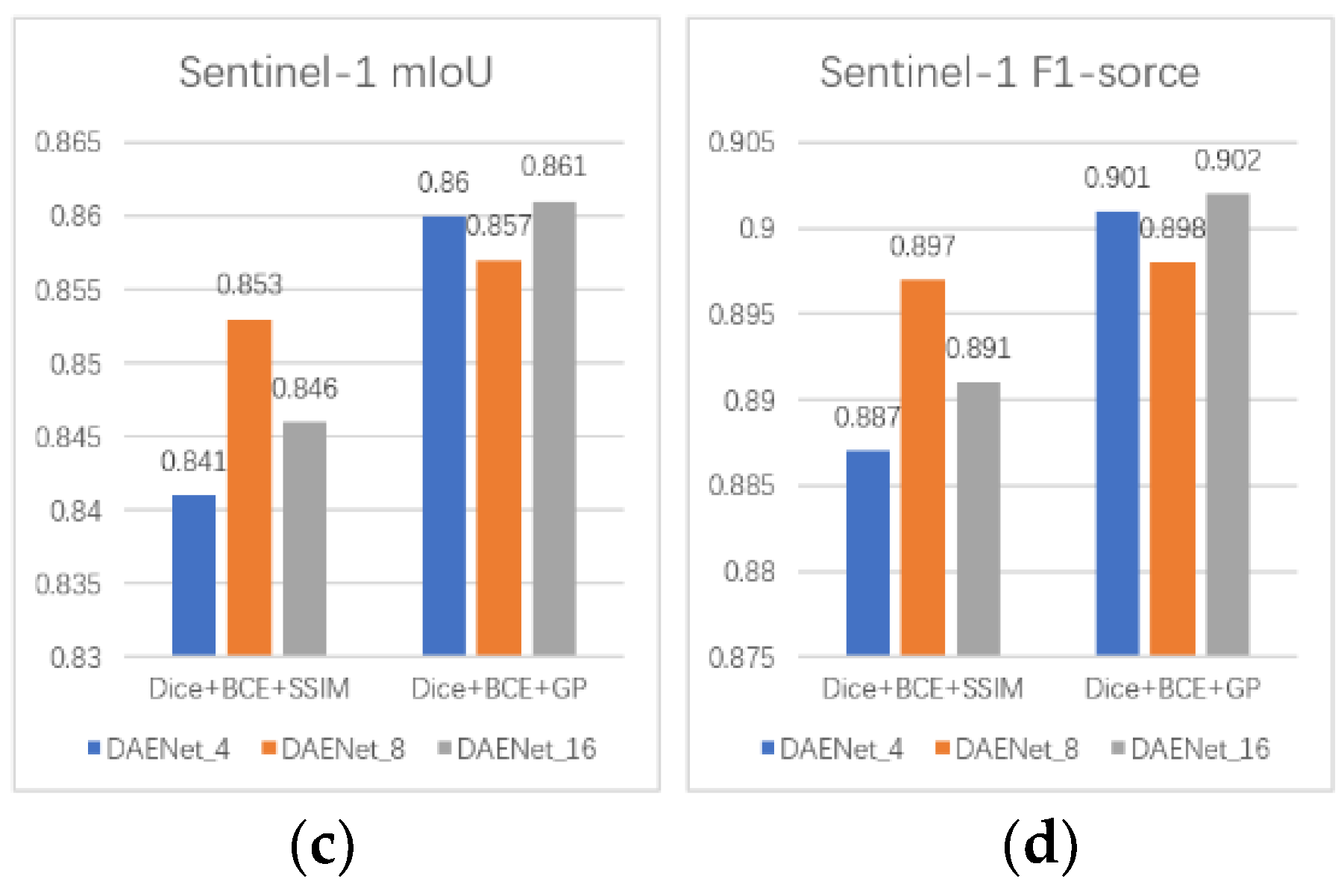

3.3. Ablation Study for Different Loss Functions

3.4. Overall Ablation Study

3.5. Validation Tests on the GaoFen-3 Dataset

3.6. Implementation Details

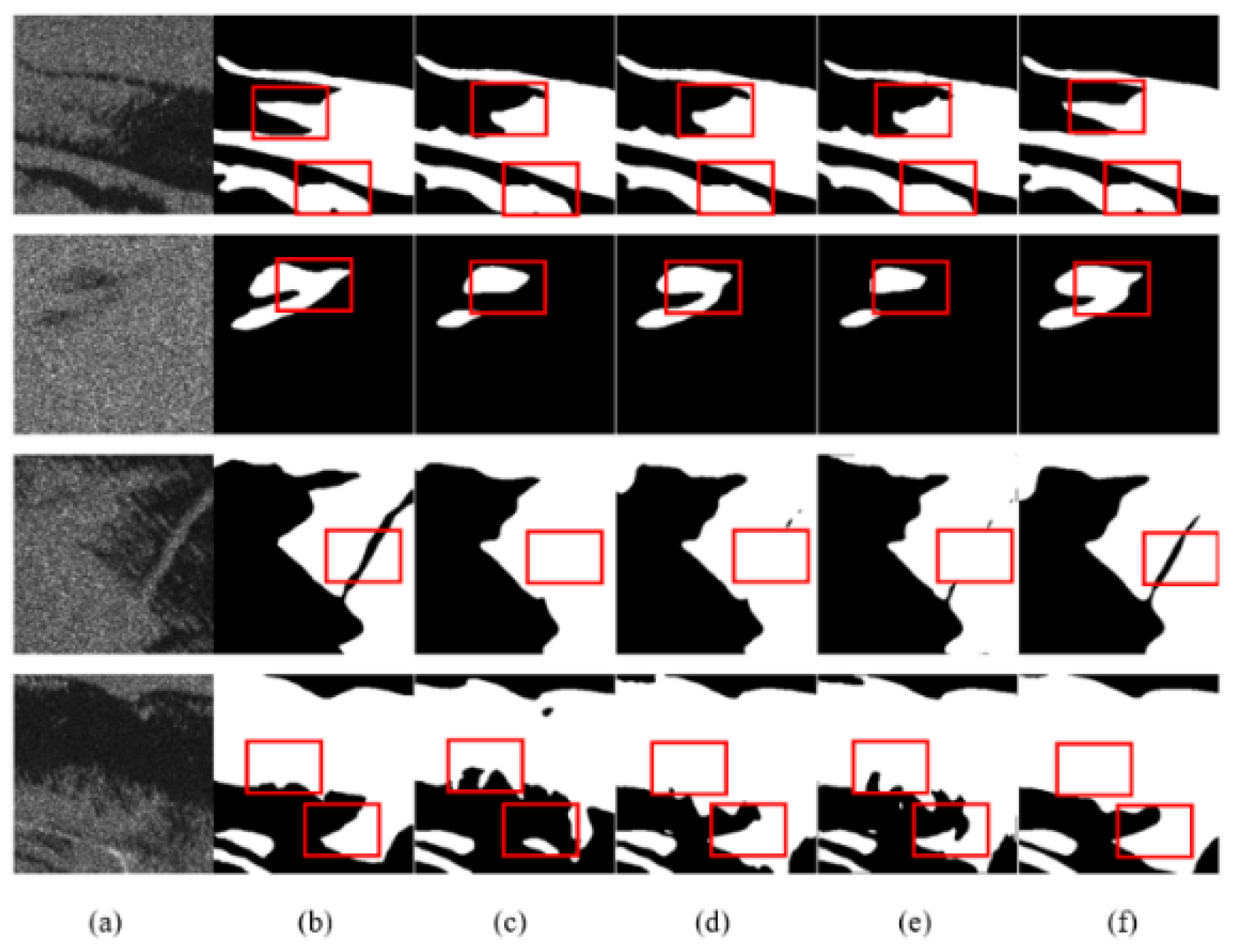

3.7. Visualization Result Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | mIoU (PALSAR) | F1-Score (PALSAR) | mIoU (Sentinel-1) | F1-Score (Sentinel-1) |
|---|---|---|---|---|
| U-Net | 0.818 | 0.831 | 0.832 | 0.882 |
| D-LinkNet | 0.827 | 0.838 | 0.831 | 0.882 |
| DeepLabv3 | 0.829 | 0.839 | 0.838 | 0.881 |
| DAENet_4 | 0.837 | 0.848 | 0.841 | 0.887 |
| DAENet_8 | 0.836 | 0.849 | 0.853 | 0.897 |
| DAENet_16 | 0.841 | 0.851 | 0.846 | 0.891 |
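The mIoU and F1-score columns in the table above are standard segmentation metrics. As a rough illustration of how such values can be obtained from a predicted mask and a ground-truth mask, the following is a minimal sketch, not the authors' evaluation code. It assumes binary masks (1 = oil, 0 = background) given as nested lists, mIoU averaged over the two classes, and F1 computed for the oil class; the function names `confusion_counts` and `miou_and_f1` are hypothetical.

```python
from itertools import chain


def confusion_counts(pred, target):
    """Count TP, FP, FN, TN over two 2D binary masks (nested lists of 0/1)."""
    tp = fp = fn = tn = 0
    for p, t in zip(chain.from_iterable(pred), chain.from_iterable(target)):
        p, t = bool(p), bool(t)
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif not p and t:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def _safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def miou_and_f1(pred, target):
    """Assumed definitions: mIoU = mean of oil IoU and background IoU;
    F1 = harmonic mean of precision and recall for the oil class."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    iou_oil = _safe_div(tp, tp + fp + fn)
    iou_background = _safe_div(tn, tn + fp + fn)
    miou = (iou_oil + iou_background) / 2
    precision = _safe_div(tp, tp + fp)
    recall = _safe_div(tp, tp + fn)
    f1 = _safe_div(2 * precision * recall, precision + recall)
    return miou, f1


# Toy 2x2 example: one correct oil pixel, one false alarm, two background pixels.
pred = [[1, 1], [0, 0]]
target = [[1, 0], [0, 0]]
miou, f1 = miou_and_f1(pred, target)
print(f"mIoU={miou:.3f}, F1={f1:.3f}")  # mIoU=0.583, F1=0.667
```

In practice these per-image values would be averaged over a test set; how the reported averages were aggregated is not stated in this extract.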

| Row | Encoder | Loss Function | mIoU (PALSAR) | F1-Score (PALSAR) | mIoU (Sentinel-1) | F1-Score (Sentinel-1) |
|---|---|---|---|---|---|---|
| 1 | ResNet34 | Dice + BCE + SSIM | 0.827 | 0.838 | 0.831 | 0.882 |
| 2 | ResNet34 | Dice + BCE + GP | 0.833 | 0.843 | 0.842 | 0.887 |
| 3 | DAEM | Dice + BCE + SSIM | 0.836 | 0.849 | 0.853 | 0.897 |
| 4 | DAEM | Dice + BCE + GP | 0.850 | 0.861 | 0.857 | 0.898 |

| Advantage | Example |
|---|---|
| Broken shape areas | Figure 8, third and fourth rows; Figure 9, second row; Figure 10, third row |
| Small differences in gray values between the target and background areas | Figure 9, first row |
| Higher noise and more complicated edge areas | Figure 8, first and second rows; Figure 9, fourth row; Figure 10, first row |

| Test Set | Spatial Resolution | Average mIoU | Average F1-Score |
|---|---|---|---|
| PALSAR | 12.5 m × 12.5 m | 0.827 | 0.839 |
| Sentinel-1 | 5 m × 20 m | 0.838 | 0.885 |
| GaoFen-3 | 25 m × 25 m | 0.913 | 0.944 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhai, J.; Mu, C.; Hou, Y.; Wang, J.; Wang, Y.; Chi, H. A Dual Attention Encoding Network Using Gradient Profile Loss for Oil Spill Detection Based on SAR Images. Entropy 2022, 24, 1453. https://doi.org/10.3390/e24101453
