Fusion of Infrared and Visible Images Based on Three-Scale Decomposition and ResNet Feature Transfer

Abstract

1. Introduction

2. Theoretical Foundation

2.1. Rolling Guidance Filter (RGF)

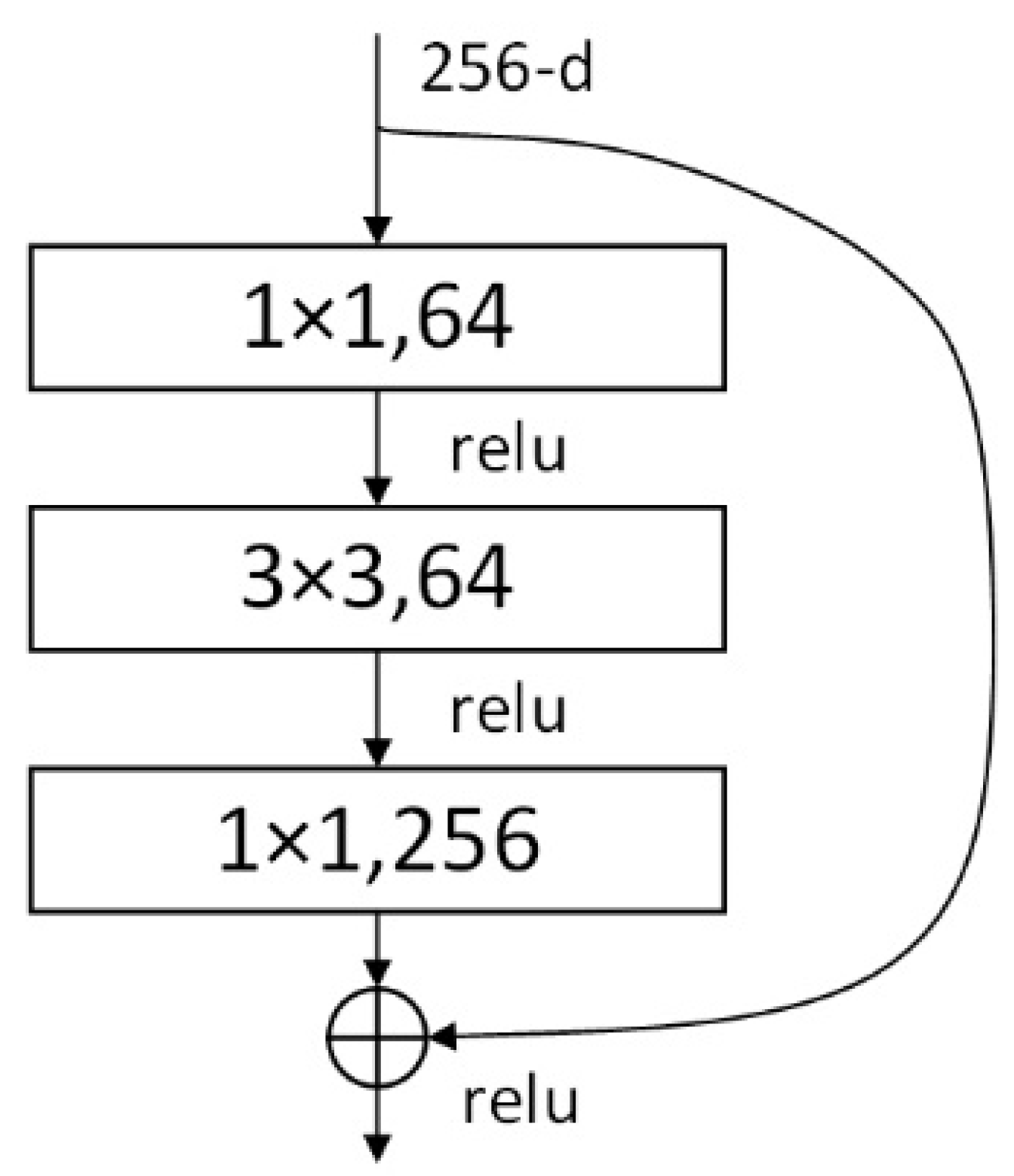

2.2. Deep Residual Networks

3. Algorithmic Framework

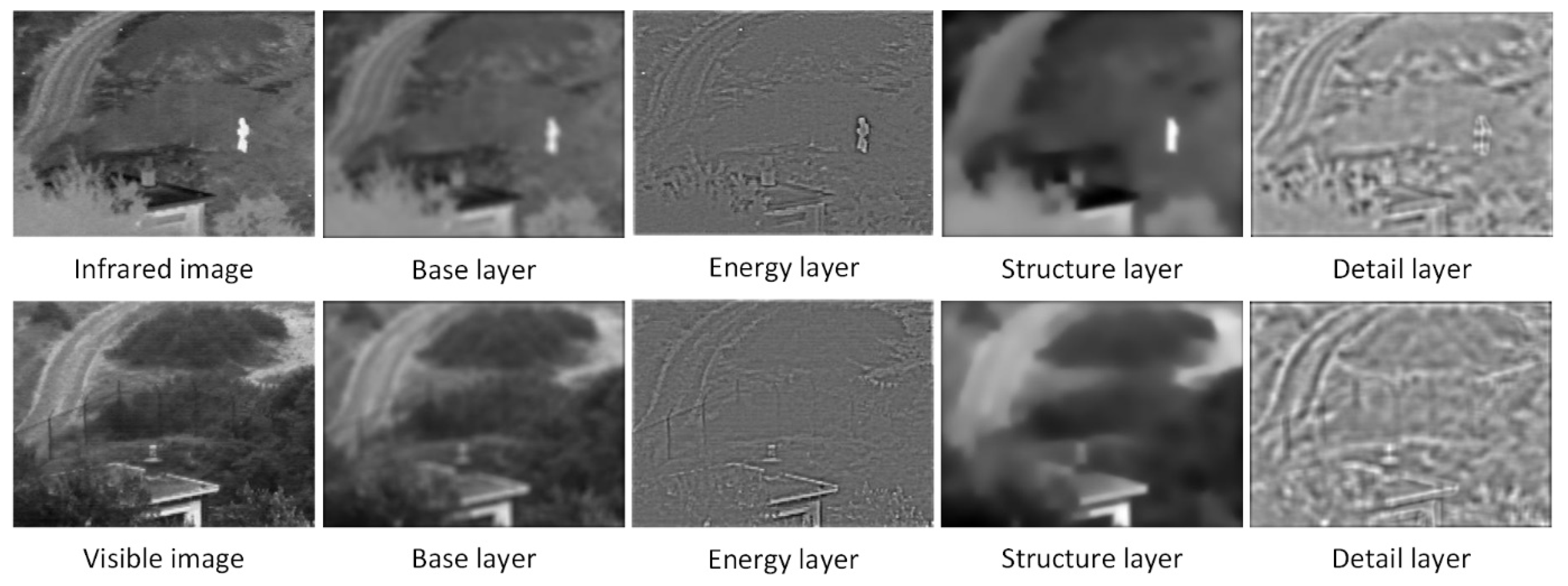

3.1. Three-Scale Decomposition Scheme

3.2. Fusion Scheme

3.2.1. Energy Layer Fusion

3.2.2. Detail Layer Fusion

3.2.3. Structural Layer Fusion

4. Experimental Results and Analysis

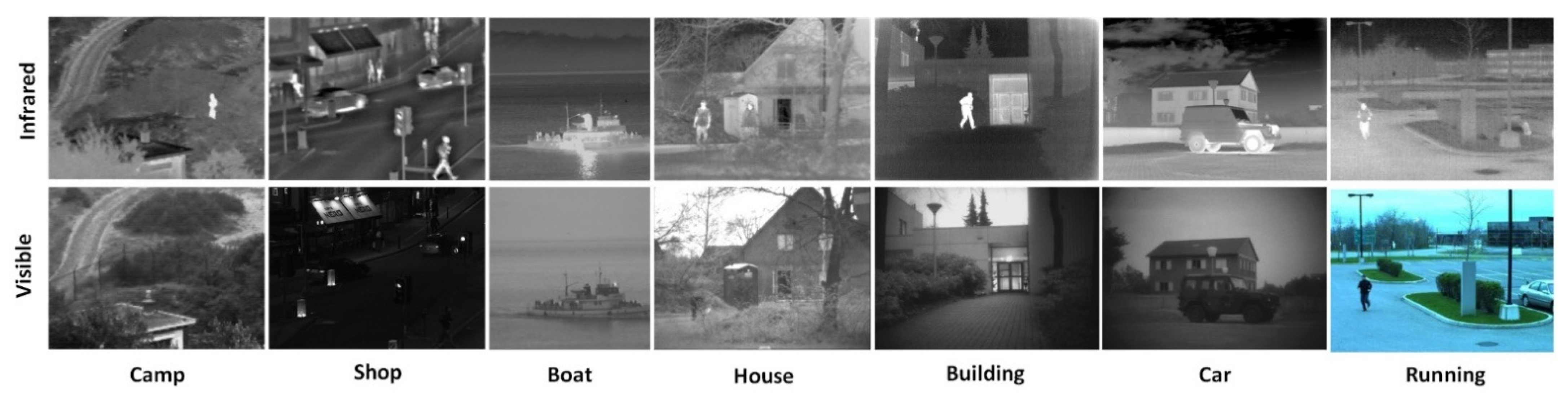

4.1. Experimental Setup

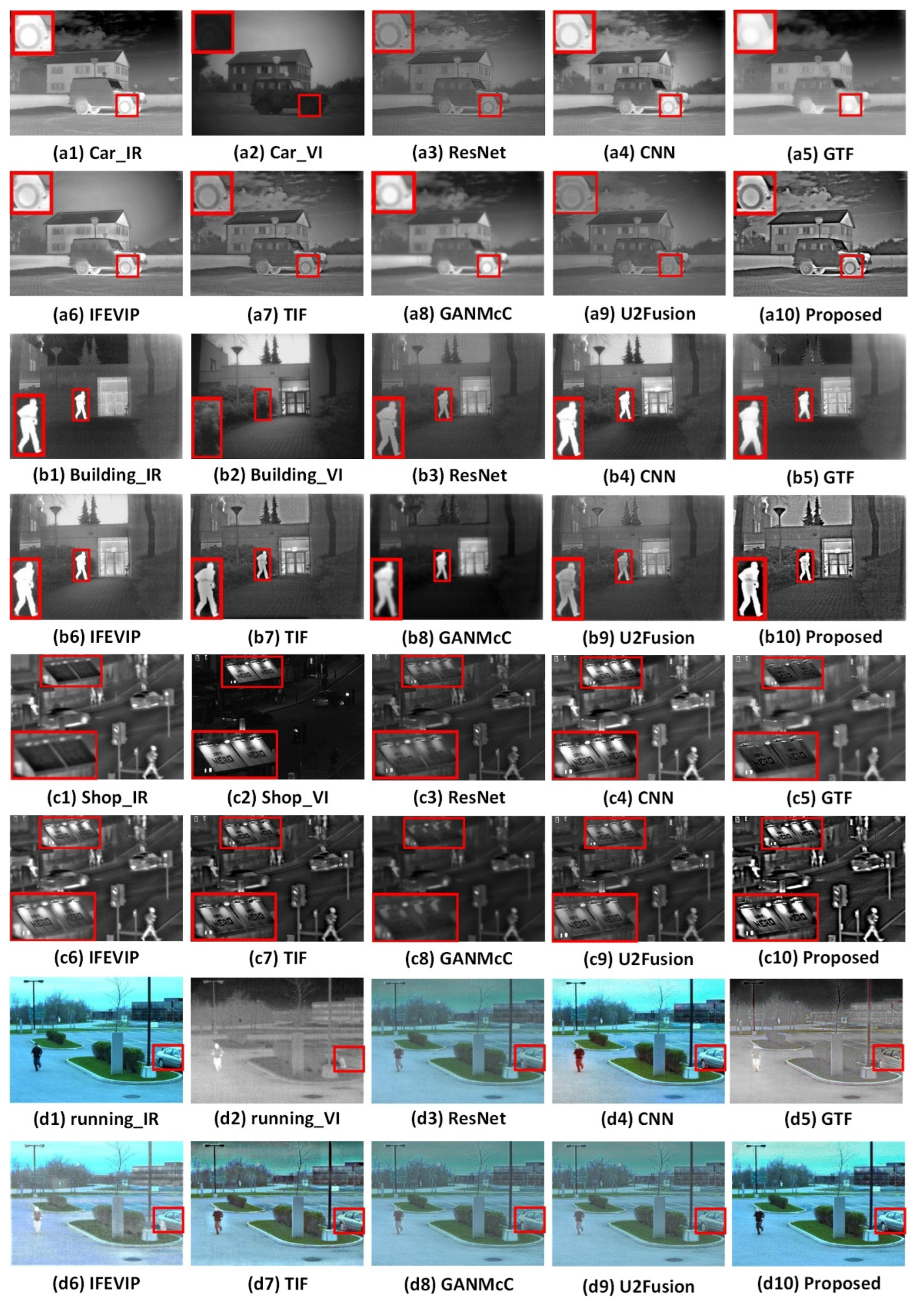

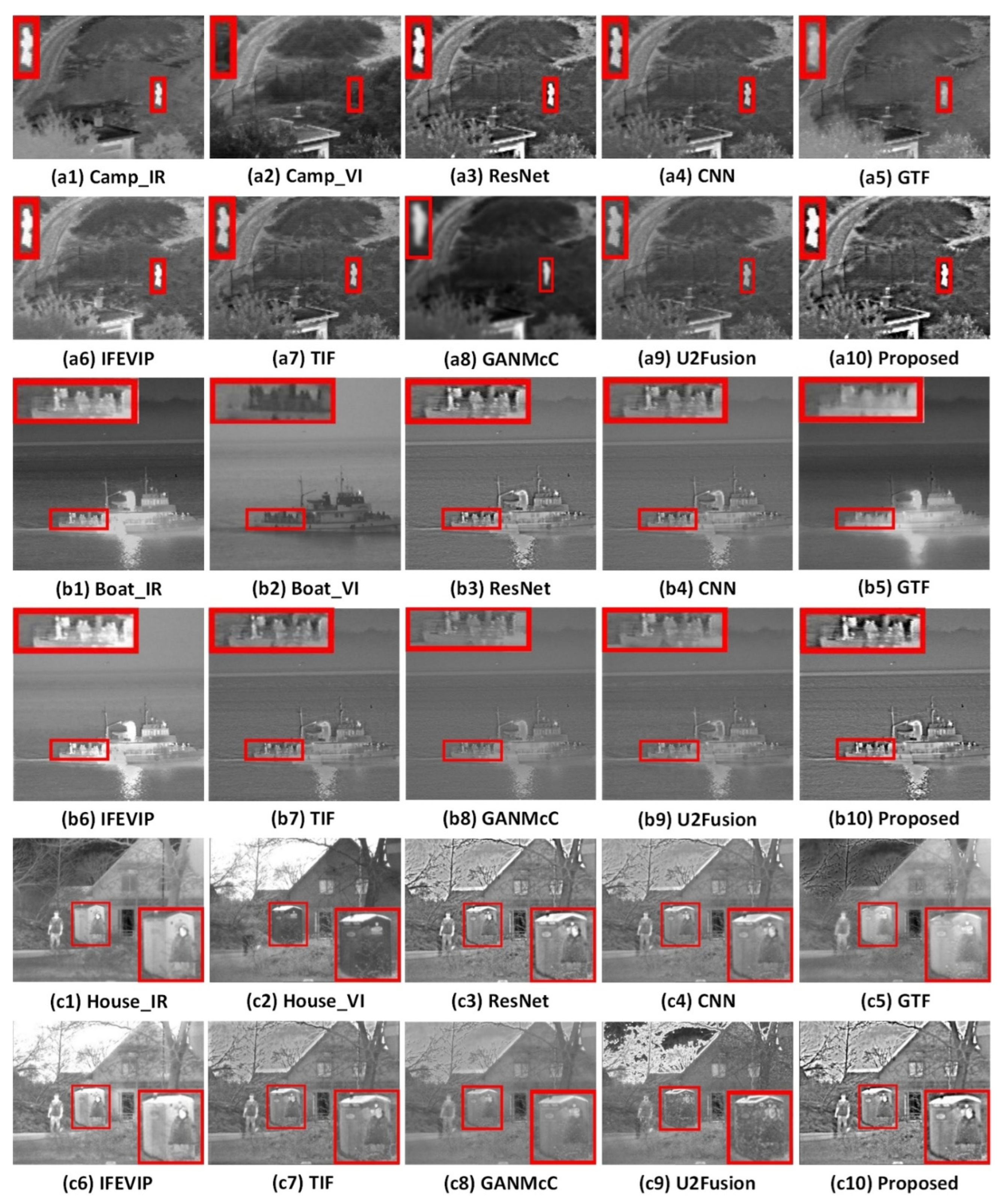

4.2. Subjective Evaluation

4.3. Objective Evaluation

4.4. Computational Efficiency

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yang, Z.; Zeng, S. TPFusion: Texture preserving fusion of infrared and visible images via dense networks. Entropy 2022, 24, 294.

- Zhao, Y.; Cheng, J.; Zhou, W.; Zhang, C.; Pan, X. Infrared pedestrian detection with converted temperature map. In Proceedings of the 2019 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Piscataway, NJ, USA, 18–21 November 2019; pp. 2025–2031.

- Arora, V.; Mulaveesala, R.; Rani, A.; Kumar, S.; Kher, V.; Mishra, P.; Kaur, J.; Dua, G.; Jha, R.K. Infrared Image Correlation for Non-destructive Testing and Evaluation of Materials. J. Nondestruct. Eval. 2021, 40, 75.

- Burt, P.J.; Adelson, E.H. The Laplacian pyramid as a compact image code. In Readings in Computer Vision; Fischler, M.A., Firschein, O., Eds.; Morgan Kaufmann: Burlington, MA, USA, 1987; pp. 671–679.

- Toet, A. Image fusion by a ratio of low-pass pyramid. Pattern Recognit. Lett. 1989, 9, 245–253.

- Toet, A. A morphological pyramidal image decomposition. Pattern Recognit. Lett. 1989, 9, 255–261.

- Ren, L.; Pan, Z.; Cao, J.; Zhang, H.; Wang, H. Infrared and visible image fusion based on edge-preserving guided filter and infrared feature decomposition. Signal Process. 2021, 186, 108108.

- Kumar, V.; Agrawal, P.; Agrawal, S. ALOS PALSAR and hyperion data fusion for land use land cover feature extraction. J. Indian Soc. Remote Sens. 2017, 45, 407–416.

- Ma, J.; Zhou, Z.; Wang, B.; Zong, H. Infrared and visible image fusion based on visual saliency map and weighted least square optimization. Infrared Phys. Technol. 2017, 82, 8–17.

- Li, H.; Wu, X.J.; Kittler, J. MDLatLRR: A novel decomposition method for infrared and visible image fusion. IEEE Trans. Image Process. 2020, 29, 4733–4746.

- Yang, B.; Li, S. Visual attention guided image fusion with sparse representation. Opt.-Int. J. Light Electron Opt. 2014, 125, 4881–4888.

- Liu, Y.; Liu, S.; Wang, Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 2015, 24, 147–164.

- Chen, G.; Li, L.; Jin, W.; Zhu, J.; Shi, F. Weighted sparse representation multi-scale transform fusion algorithm for high dynamic range imaging with a low-light dual-channel camera. Opt. Express 2019, 27, 10564–10579.

- Li, H.; Wu, X.J.; Kittler, J. Infrared and visible image fusion using a deep learning framework. In Proceedings of the 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 2705–2710.

- An, W.B.; Wang, H.M. Infrared and visible image fusion with supervised convolutional neural network. Opt.-Int. J. Light Electron Opt. 2020, 219, 165120.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Zhang, D.; Zhou, Y.; Zhao, J.; Zhou, Z.; Yao, R. Structural similarity preserving GAN for infrared and visible image fusion. Int. J. Wavelets Multiresolut. Inf. Process. 2021, 19, 2050063.

- Zhang, Q.; Shen, X.; Xu, L.; Jia, J. Rolling guidance filter. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 815–830.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1397–1409.

- Zhang, H.; Dana, K. Multi-style generative network for real-time transfer. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Kang, S.; Park, H.; Park, J.I. Identification of multiple image steganographic methods using hierarchical ResNets. IEICE Trans. Inf. Syst. 2021, 104, 350–353.

- Li, H.; Wu, X.; Durrani, T.S. Infrared and visible image fusion with ResNet and zero-phase component analysis. Infrared Phys. Technol. 2019, 102, 103039.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Yang, F.; Li, J.; Xu, S.; Pan, G. The research of a video segmentation algorithm based on image fusion in the wavelet domain. In Proceedings of the 5th International Symposium on Advanced Optical Manufacturing and Testing Technologies: Smart Structures and Materials in Manufacturing and Testing, Dalian, China, 12 October 2010; Volume 7659, pp. 279–285.

- Liu, Y.; Chen, X.; Cheng, J.; Peng, H.; Wang, Z. Infrared and visible image fusion with convolutional neural networks. Int. J. Wavelets Multiresolut. Inf. Process. 2018, 16, 1850018.

- Ma, J.; Chen, C.; Li, C.; Huang, J. Infrared and visible image fusion via gradient transfer and total variation minimization. Inf. Fusion 2016, 31, 100–109.

- Zhang, Y.; Zhang, L.; Bai, X.; Zhang, L. Infrared and visual image fusion through infrared feature extraction and visual information preservation. Infrared Phys. Technol. 2017, 83, 227–237.

- Bavirisetti, D.P.; Dhuli, R. Two-scale image fusion of visible and infrared images using saliency detection. Infrared Phys. Technol. 2016, 76, 52–64.

- Ma, J.; Zhang, H.; Shao, Z.; Liang, P.; Xu, H. GANMcC: A generative adversarial network with multiclassification constraints for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 5005014.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

- Chibani, Y. Additive integration of SAR features into multispectral SPOT images by means of the à trous wavelet decomposition. ISPRS J. Photogramm. Remote Sens. 2006, 60, 306–314.

- Xydeas, C.S.; Petrović, V. Objective image fusion performance measure. Mil. Tech. Cour. 2000, 56, 181–193.

- Chen, Y.; Blum, R.S. A new automated quality assessment algorithm for image fusion. Image Vis. Comput. 2009, 27, 1421–1432.

- Qu, G.; Zhang, D.; Yan, P. Information measure for performance of image fusion. Electron. Lett. 2002, 38, 313–315.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

| Feature | ResNet | CNN | GTF | IFEVIP | TIF | GANMcC | U2Fusion | Proposed |
|---|---|---|---|---|---|---|---|---|
| Energy | − | + | + | + | − | − | + | + |
| Texture detail | − | + | − | + | + | − | − | + |
| Contour structure | − | − | − | + | + | − | + | + |
| Chromaticity | − | + | − | − | − | − | − | + |
| Visual effects | − | − | − | − | − | − | − | + |

| Metric | ResNet | CNN | GTF | IFEVIP | TIF | GANMcC | U2Fusion | Proposed |
|---|---|---|---|---|---|---|---|---|
| EN | 6.46 | 6.746 | 6.675 | 6.319 | 6.668 | 6.426 | 6.451 | 6.94 |
| QABF | 0.446 | 0.441 | 0.338 | 0.38 | 0.468 | 0.417 | 0.423 | 0.487 |
| QCB | 0.498 | 0.487 | 0.394 | 0.436 | 0.451 | 0.413 | 0.422 | 0.527 |
| MI | 1.084 | 1.249 | 1.241 | 1.28 | 1.514 | 1.035 | 1.113 | 1.624 |
| SSIM | 0.358 | 0.656 | 0.513 | 0.508 | 0.493 | 0.341 | 0.398 | 0.901 |
| VIF | 0.432 | 0.443 | 0.416 | 0.397 | 0.438 | 0.401 | 0.419 | 0.452 |
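
The EN row above reports information entropy. As a minimal sketch, assuming EN is the Shannon entropy of the gray-level histogram of an 8-bit fused image (the common definition in the fusion literature; the exact formula used for the table is not given here), it could be computed as follows. The function name `image_entropy` and the `levels` parameter are illustrative, not taken from the paper.

```python
import numpy as np


def image_entropy(image: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy, in bits, of the gray-level histogram of an integer image.

    Assumption: pixel values are non-negative integers below `levels`.
    """
    # Count how often each gray level occurs.
    hist = np.bincount(image.ravel().astype(np.int64), minlength=levels)
    # Turn counts into probabilities and drop empty bins (0 * log 0 is taken as 0).
    prob = hist / hist.sum()
    prob = prob[prob > 0]
    return float(-(prob * np.log2(prob)).sum())


if __name__ == "__main__":
    # A synthetic image using every 8-bit level equally often has maximal entropy.
    ramp = np.arange(256, dtype=np.uint8).reshape(16, 16)
    print(image_entropy(ramp))
```

Under this definition an 8-bit image cannot exceed 8 bits of entropy, so the EN values in the table (between 6.3 and 7.0) are on the scale one would expect.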

| Method | ResNet | CNN | GTF | IFEVIP | TIF | GANMcC | U2Fusion | Proposed |
|---|---|---|---|---|---|---|---|---|
| Time (s) | 20.73 | 23.16 | 2.91 | 1.34 | 1.03 | 13.41 | 15.02 | 3.16 |
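
To put the running times above in relative terms, the following short sketch divides each method's time by that of the proposed method. The numbers are copied from the table; the variable names are illustrative only.

```python
# Running times in seconds, copied from the table above.
times_s = {
    "ResNet": 20.73, "CNN": 23.16, "GTF": 2.91, "IFEVIP": 1.34,
    "TIF": 1.03, "GANMcC": 13.41, "U2Fusion": 15.02, "Proposed": 3.16,
}

proposed = times_s["Proposed"]

# Ratio > 1 means the method takes longer than the proposed one.
ratios = {name: t / proposed for name, t in times_s.items() if name != "Proposed"}

slower_than_proposed = sorted(name for name, r in ratios.items() if r > 1.0)
faster_than_proposed = sorted(name for name, r in ratios.items() if r < 1.0)

if __name__ == "__main__":
    for name, r in sorted(ratios.items(), key=lambda kv: kv[1]):
        print(f"{name}: {r:.2f}x the proposed method's time")
```

On these figures the proposed method is faster than the four deep-learning baselines (ResNet, CNN, GANMcC, U2Fusion) and slower than GTF, IFEVIP, and TIF.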

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ji, J.; Zhang, Y.; Hu, Y.; Li, Y.; Wang, C.; Lin, Z.; Huang, F.; Yao, J. Fusion of Infrared and Visible Images Based on Three-Scale Decomposition and ResNet Feature Transfer. Entropy 2022, 24, 1356. https://doi.org/10.3390/e24101356
