Abstract

We propose a reinforcement learning (RL) approach to compute the expression of the quasi-stationary distribution (QSD). Based on the fixed-point formulation of the QSD, we minimize the KL-divergence of two Markovian path distributions induced by the candidate distribution and the true target distribution. To solve this challenging minimization problem by gradient descent, we apply a reinforcement learning technique by introducing reward and value functions. We derive the corresponding policy gradient theorem and design an actor-critic algorithm to learn the optimal solution and the value function. Numerical examples of finite-state Markov chains are tested to demonstrate the new method.

1. Introduction

The quasi-stationary distribution (QSD) describes the long-time statistical behavior of a stochastic process that will surely be killed, conditioned on the process surviving [1]. This concept has been widely used in applications such as biology and ecology [2,3], chemical kinetics [4,5], epidemics [6,7,8], medicine [9] and neuroscience [10,11]. Many works on rare events in metastable systems also focus on the quasi-stationary distribution [12,13]. In addition, some new Monte Carlo sampling methods, for instance, the Quasi-stationary Monte Carlo method [14,15], arise by using the QSD instead of the true stationary distribution.

We are interested in the numerical computation of the QSD and focus on finite-state Markov chains in this paper. Mathematically, the quasi-stationary distribution is the principal left eigenvector of a sub-Markovian transition matrix, normalized to a probability vector. Thus, traditional numerical linear algebra methods can be applied to compute the quasi-stationary distribution in a finite state space, for example, the power method [16], the multi-grid method [17] and Arnoldi’s algorithm [18]. These eigenvector methods produce a stochastic vector for the QSD instead of generating samples from the QSD.
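To make the eigenvector characterization concrete, here is a minimal NumPy sketch of the power method for a small sub-Markovian matrix. The 3-state matrix `K` and the function name `qsd_power_method` are hypothetical illustrations, not taken from this paper; the sketch assumes `K` is irreducible and aperiodic, so that the renormalized iteration converges to the left Perron eigenvector.

```python
import numpy as np

def qsd_power_method(K, tol=1e-13, max_iter=10_000):
    """Approximate the QSD of a sub-Markovian matrix K by power iteration.

    The QSD is the left Perron eigenvector of K, normalized to sum to one.
    """
    n = K.shape[0]
    alpha = np.full(n, 1.0 / n)        # start from the uniform distribution
    for _ in range(max_iter):
        new = alpha @ K                # one step of the killed chain (mass is lost)
        new /= new.sum()               # condition on survival by renormalizing
        if np.abs(new - alpha).sum() < tol:
            return new
        alpha = new
    return alpha

# Hypothetical example: every state is killed with probability 0.1 per step.
K = np.array([[0.5, 0.3, 0.1],
              [0.2, 0.5, 0.2],
              [0.1, 0.3, 0.5]])
alpha = qsd_power_method(K)
print(alpha)
```

Because every row of this `K` sums to 0.9, its QSD coincides with the stationary distribution of `K / 0.9`, which is (2/7, 3/7, 2/7).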

In search of efficient algorithms for large state spaces, stochastic approaches either sample the QSD or compute its expression, and these methods can be applied or extended easily to continuous state spaces. A popular approach for sampling the quasi-stationary distribution is the Fleming–Viot stochastic method [19]. The Fleming–Viot method first simulates N particles independently. When any one of the particles falls into the absorbing state and is killed, it is replaced by a copy of a particle selected uniformly from the remaining surviving particles, and the simulation continues. When time and N tend to infinity, the particles’ empirical distribution converges to the quasi-stationary distribution.

In [20,21,22], the authors proposed to recursively update the expression of the QSD at each iteration based on the empirical distribution of a single-particle simulation. It is shown in [21] that the convergence rate can be , where n is the iteration number. This method was later improved in [23,24] by applying the stochastic approximation method [25] and the Polyak–Ruppert averaging technique [26]. These improved algorithms allow a flexible choice of step size but require a projection operator onto the probability simplex, which carries extra computational overhead that grows with the number of states. Ref. [15] extended the algorithm to diffusion processes.

In this paper, we focus on how to compute the expression of the quasi-stationary distribution, which is denoted by $\alpha$ on a metric space $E$. If $E$ is finite, $\alpha$ is a probability vector, and if $E$ is a domain in $\mathbb{R}^d$, then $\alpha$ is a probability density function on $E$. We assume that $\alpha$ can be numerically represented in a parametric form $\alpha_\theta$ with $\theta \in \Theta$. This family can be in tabular form or given by any neural network. Then, the problem of finding the QSD becomes the question of how to compute the optimal parameter $\theta$ in $\Theta$. We call this problem the learning problem for the QSD. In addition, we want to learn the QSD directly, rather than use the distribution family to fit samples generated by other, traditional simulation methods.

Our minimization problem for the QSD is similar to variational inference (VI) [27], which minimizes an objective functional measuring the distance between the target and candidate distributions. However, unlike mainstream VI methods such as the evidence lower bound (ELBO) technique [28], particle-based methods [29] or flow-based methods [30], our approach builds on recent progress in reinforcement learning (RL) [31], particularly the policy gradient method and the actor-critic algorithm. We regard the learning process of the quasi-stationary distribution as an interaction with an environment, which is constructed from the defining property of the QSD. Reinforcement learning has recently shown tremendous advances and remarkable successes in applications (e.g., [32,33,34]). The RL framework provides an innovative and powerful modeling and computational approach for many scientific computing problems.

The essential question is how to formulate the QSD problem as an RL problem. Firstly, for the sub-Markovian kernel K of a Markov process, we can define a Markovian kernel $K_\alpha$ on $E$ (see Definition 1), and then the QSD $\alpha$ is defined by the equation $\alpha K_\alpha = \alpha$, which means that $\alpha$ is both the initial distribution and the distribution after one step. Secondly, we seek an optimal $\alpha_\theta$ (in our parametric family of distributions) that minimizes the Kullback–Leibler divergence (i.e., relative entropy) between the two path distributions associated with the Markovian kernels $K_{\alpha_\theta}$ and $K_{\beta_\theta}$, where $\beta_\theta = \alpha_\theta K_{\alpha_\theta}$. Thirdly, inspired by the recent work [35] on using RL for rare-event sampling problems, we transform the minimization of this KL divergence into the maximization of a time-averaged reward and define the corresponding value function $V(x)$ at each state x. This completes our RL modeling of the quasi-stationary distribution problem. Lastly, we derive the policy gradient theorem (Theorem 1) to compute the gradient of the averaged reward with respect to $\theta$, which drives the learning dynamics of $\theta$. This is known as the “actor” part. The “critic” part learns the value function V in a parametric form. The actor-critic algorithm uses stochastic gradient methods to train both the policy parameter $\theta$ and the parameter of the value function (see Algorithm 1).

Our main contribution is a method that transforms the QSD problem into an RL problem. Similar to [35], our paper also uses the KL-divergence to define the RL problem. However, our formulation fully exploits the unique property of the QSD, namely that it solves a fixed-point problem.

Our learning method allows a flexible parametrization of the distribution and uses the stochastic gradient method to train the optimal distribution. The optimization is easy to implement and scales up to large state spaces. The numerical examples we tested show that our method converges faster than the existing methods in [22,23].

Finally, we remark that our method works well for the QSD of a strictly sub-Markovian kernel K but is not applicable to computing the invariant distribution when K is Markovian. This is because we transform the problem into a variational problem between two Markovian kernels $K_\alpha$ and $K_\beta$ (where $\beta = \alpha K_\alpha$). Note that $K_\mu(x,\cdot) = K(x,\cdot) + (1 - K(x,E))\,\mu(\cdot)$ (Definition 1), and our method is based on the fact that $K_\mu = K_\nu$ if and only if $\mu = \nu$. If K is a Markovian kernel, then $K_\mu = K$ for any $\mu$, and our method cannot work. Thus, $K(x,E)$ has to be strictly less than 1 for some $x \in E$.

This paper is organized as follows. Section 2 gives a short review of the quasi-stationary distribution and some basic simulation methods for the QSD. In Section 3, we first formulate the reinforcement learning problem via the KL-divergence and derive the policy gradient theorem (Theorem 1). Using this formulation, we then develop the actor-critic algorithm to estimate the quasi-stationary distribution. In Section 4, the efficiency of our algorithm is illustrated by two examples, each with two parameter settings, and compared with the simulation methods in [24].

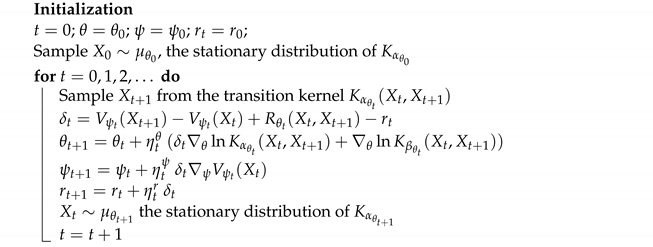

| Algorithm 1: (ac- method) Actor-critic algorithm for quasi-stationary distribution |

|

2. Problem Setup and Review

2.1. Quasi-Stationary Distribution

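We start with an abstract setting. Let $E$ be a finite state space equipped with the Borel $\sigma$-field $\mathcal{B}(E)$, and let $\mathcal{P}(E)$ be the space of probability measures over $E$. A sub-Markovian kernel on $E$ is defined as a map $K: E \times \mathcal{B}(E) \to [0, 1]$ such that, for all $x \in E$, $A \mapsto K(x, A)$ is a nonzero measure with $K(x, E) \le 1$ and, for all $A \in \mathcal{B}(E)$, $x \mapsto K(x, A)$ is measurable. In particular, if $K(x, E) = 1$ for all $x \in E$, then K is called a Markovian kernel. Throughout the paper, we assume that K is strictly sub-Markovian, i.e., $K(x, E) < 1$ for some $x \in E$.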

Let $(X_n)_{n \ge 0}$ be a Markov chain with values in $E \cup \{\partial\}$, where $\partial$ denotes an absorbing state. We define the extinction time

$$\tau = \inf\{n \ge 0 : X_n = \partial\}.$$

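We define the quasi-stationary distribution (QSD) $\alpha$ as the long-time limit of the conditional distribution, if there exists a probability distribution $\alpha$ on $E$ such that

$$\lim_{n \to \infty} \mathbb{P}_\nu(X_n \in A \mid \tau > n) = \alpha(A), \quad \forall A \in \mathcal{B}(E),$$

where $\mathbb{P}_\nu$ refers to the probability distribution of $(X_n)$ associated with the initial distribution $\nu$ on $E$. Such a conditional distribution describes the behavior of the process before extinction well, and it is easy to see that $\alpha$ satisfies the following fixed-point problem:

$$\mathbb{P}_\alpha(X_1 \in A \mid \tau > 1) = \alpha(A), \quad \forall A \in \mathcal{B}(E), \tag{2}$$

where $\mathbb{P}_\alpha$ refers to the probability distribution of $(X_n)$ associated with the initial distribution $\alpha$ on $E$. Equation (2) is equivalent to the following stationary condition:

$$\alpha K = (\alpha K \mathbf{1})\, \alpha,$$

where $\alpha$ is a row vector, $\mathbf{1}$ denotes the column vector with all entries equal to one, and $\alpha K \mathbf{1} = \mathbb{P}_\alpha(\tau > 1)$ is the one-step survival probability.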

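For any sub-Markovian kernel K, we can associate with K a Markovian kernel $\tilde K$ on $E \cup \{\partial\}$ defined by the following:

$$\tilde K(x, A) = K(x, A), \qquad \tilde K(x, \{\partial\}) = 1 - K(x, E), \qquad \tilde K(\partial, \{\partial\}) = 1,$$

for all $x \in E$ and $A \in \mathcal{B}(E)$. The kernel $\tilde K$ can be understood as the Markovian transition kernel of the Markov chain on $E \cup \{\partial\}$ whose transitions within $E$ are specified by K, but which is “killed” forever once it leaves $E$.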

In this paper, we assume that $E$ is a finite state space and that the process under consideration has a unique QSD. If K is irreducible, the existence and uniqueness of the quasi-stationary distribution follow from the Perron–Frobenius theorem [36].

An important Markovian kernel is the following $K_\mu$, which is defined on $E$ only and has a “regenerative probability” $\mu$.

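Definition 1.

For any given $\mu \in \mathcal{P}(E)$ and a sub-Markovian kernel K on $E$, we define $K_\mu$, a Markovian kernel on $E$, as follows:

$$K_\mu(x, A) = K(x, A) + \big(1 - K(x, E)\big)\, \mu(A) \tag{4}$$

for all $x \in E$ and $A \in \mathcal{B}(E)$.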

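$K_\mu$ is a Markovian kernel because $K_\mu(x, E) = K(x, E) + (1 - K(x, E)) = 1$. It is easy to sample from $K_\mu(x, \cdot)$ for any state $x \in E$: run the transition as usual using $\tilde K$ to obtain a next state, denoted by Y; then set $X_1 = Y$ if $Y \in E$; otherwise, sample $X_1$ from $\mu$.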
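The sampling recipe for $K_\mu$ can be sketched in a few lines of NumPy. This is an illustration under our own assumptions: the states of $E$ are indexed 0, 1, 2 and ∂ is encoded as the extra index 3, the matrix `K` and the distribution `mu` are hypothetical, and `sample_K_mu` and `K_mu_matrix` are illustrative names. The empirical frequencies of the sampler should match the dense matrix form of Definition 1.

```python
import numpy as np

def K_mu_matrix(K, mu):
    """Dense form of Definition 1: K_mu(x, .) = K(x, .) + (1 - K(x, E)) mu."""
    return K + np.outer(1.0 - K.sum(axis=1), mu)

def sample_K_mu(x, K, mu, rng):
    """Draw one step from K_mu(x, .) via the killed chain K~ and regeneration from mu."""
    n = K.shape[0]
    row = np.append(K[x], 1.0 - K[x].sum())    # row x of K~; last entry = exit to ∂
    y = rng.choice(n + 1, p=row)
    if y < n:                                   # Y stayed in E: accept it
        return int(y)
    return int(rng.choice(n, p=mu))             # Y = ∂: regenerate from mu

rng = np.random.default_rng(0)
K = np.array([[0.5, 0.3, 0.1],
              [0.2, 0.5, 0.2],
              [0.1, 0.3, 0.5]])
mu = np.array([0.6, 0.3, 0.1])
draws = [sample_K_mu(0, K, mu, rng) for _ in range(10_000)]
freq = np.bincount(draws, minlength=3) / len(draws)
print(freq, K_mu_matrix(K, mu)[0])
```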

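We know that $\alpha$ is the quasi-stationary distribution of K if and only if it is the stationary distribution of $K_\alpha$, i.e.,

$$\alpha K_\alpha = \alpha. \tag{5}$$

Indeed, $\alpha K_\alpha = \alpha K + (1 - \alpha K \mathbf{1})\, \alpha$, so (5) is equivalent to the stationary condition $\alpha K = (\alpha K \mathbf{1})\, \alpha$ above.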

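It is easy to observe that $K_\mu = K_\nu$ if and only if $\mu = \nu$, for any two distributions $\mu$ and $\nu$, because $K(x, E) < 1$ for some $x$. Moreover, for every $\mu \in \mathcal{P}(E)$, $K_\mu$ has a unique invariant probability, denoted by $\Gamma(\mu)$. Then, $\Gamma(\mu)$ is continuous in $\mu$ (for the topology of weak convergence), and there exists $\alpha$ such that $\Gamma(\alpha) = \alpha$ or, equivalently, $\alpha$ is a QSD for K.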

2.2. Review of Simulation Methods for Quasi-Stationary Distribution

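According to the above subsection, the QSD satisfies the following fixed-point problem:

$$\alpha = \Gamma(\alpha), \tag{6}$$

where $\Gamma(\alpha)$ is the stationary distribution of $K_\alpha$ on $E$. In general, (6) can be solved recursively by $\alpha_{n+1} = \Gamma(\alpha_n)$.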
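For small state spaces the recursion $\alpha_{n+1} = \Gamma(\alpha_n)$ can be implemented densely: build $K_\alpha$, solve for its stationary distribution, and repeat. The sketch below assumes every $K_\mu$ is irreducible and that the iteration converges, which holds for the hypothetical 3-state example used here; the helper names `stationary`, `gamma` and `qsd_fixed_point` are ours.

```python
import numpy as np

def stationary(P):
    """Stationary distribution of an irreducible stochastic matrix P."""
    n = P.shape[0]
    A = np.vstack([(P - np.eye(n)).T, np.ones(n)])   # pi (P - I) = 0 and sum(pi) = 1
    b = np.append(np.zeros(n), 1.0)
    return np.linalg.lstsq(A, b, rcond=None)[0]

def gamma(K, mu):
    """Gamma(mu): invariant probability of K_mu = K + (1 - K 1) mu."""
    return stationary(K + np.outer(1.0 - K.sum(axis=1), mu))

def qsd_fixed_point(K, n_iter=100):
    """Iterate alpha_{n+1} = Gamma(alpha_n), starting from the uniform distribution."""
    alpha = np.full(K.shape[0], 1.0 / K.shape[0])
    for _ in range(n_iter):
        alpha = gamma(K, alpha)
    return alpha

K = np.array([[0.5, 0.3, 0.1],
              [0.2, 0.5, 0.2],
              [0.1, 0.3, 0.5]])
alpha = qsd_fixed_point(K)
print(alpha)
```

Each iteration costs a linear solve with the full matrix, which is why the simulation methods below avoid forming $K_\alpha$ explicitly.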

The Fleming–Viot (FV) method [19] evolves N particles independently of each other, each as a Markov chain with transition kernel $\tilde K$, until one of them jumps to the absorbing state ∂. At that time, the killed particle is immediately moved to the position of one of the remaining particles, chosen uniformly at random, and restarted from there. The QSD is approximated by the empirical distribution of the N particles, which can be regarded as samples from the quasi-stationary distribution, as in MCMC methods.
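The following NumPy sketch is one simple discrete-time reading of the Fleming–Viot method. Synchronous moves, averaging the occupation measure over time after a burn-in, and the parameter values are our assumptions, and the 3-state matrix is the same hypothetical example as in the other sketches (its QSD is (2/7, 3/7, 2/7)).

```python
import numpy as np

def fleming_viot(K, n_particles=200, n_steps=2_000, burn_in=500, seed=0):
    """Minimal discrete-time Fleming–Viot sketch for a sub-Markovian matrix K.

    Particles move independently with K~; a particle absorbed at ∂ jumps to the
    current position of a uniformly chosen surviving particle.
    """
    rng = np.random.default_rng(seed)
    n = K.shape[0]
    cum = np.cumsum(K, axis=1)                  # CDF over E; u >= K(x, E) means killed
    pos = rng.integers(n, size=n_particles)
    counts = np.zeros(n)
    for t in range(n_steps):
        u = rng.random(n_particles)
        new = (u[:, None] >= cum[pos]).sum(axis=1)   # index n encodes absorption at ∂
        dead = np.flatnonzero(new == n)
        alive = np.flatnonzero(new < n)
        if dead.size and alive.size:
            new[dead] = new[rng.choice(alive, size=dead.size)]
        elif dead.size:                         # every particle died: keep old positions
            new = pos.copy()
        pos = new
        if t >= burn_in:
            counts += np.bincount(pos, minlength=n)
    return counts / counts.sum()

K = np.array([[0.5, 0.3, 0.1],
              [0.2, 0.5, 0.2],
              [0.1, 0.3, 0.5]])
alpha_fv = fleming_viot(K)
print(alpha_fv)
```

With a finite number of particles the FV estimate carries a bias of order 1/N, so `n_particles` controls accuracy as well as cost.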

Ref. [37] proposed a simulation method by only using one particle at each iteration to update . At iteration n, given an , one can run a discrete-time Markov chain as normal on with initial ; then, is computed as the following weighted average of empirical distributions:

where and I are the indicator functions, and is the first extinction time for the process . This iterative scheme has a convergence rate of .
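Because the displayed formula for this scheme is not reproduced here, the sketch below assumes the standard weighted-average form: each run started from $\mu_k$ contributes its occupation counts before absorption, and $\mu_{k+1}$ is the total occupation divided by the total survival time $\sum_k \tau_k$. It illustrates that assumed form on a hypothetical example matrix; it is not a transcription of (7).

```python
import numpy as np

def vanilla_qsd(K, n_iter=5_000, seed=0):
    """Single-particle recursive QSD estimate (sketch of an assumed form).

    Run k starts from the current estimate mu_k and stops at absorption;
    mu_{k+1} = (all occupation counts so far) / (sum of survival times so far).
    """
    rng = np.random.default_rng(seed)
    n = K.shape[0]
    cum = np.cumsum(K, axis=1)
    visits = np.zeros(n)
    total_time = 0
    mu = np.full(n, 1.0 / n)                  # mu_0: uniform initial guess
    for _ in range(n_iter):
        x = int(rng.choice(n, p=mu))          # initial state drawn from mu_k
        while x < n:                          # x == n encodes the absorbing state ∂
            visits[x] += 1
            total_time += 1
            x = int(np.searchsorted(cum[x], rng.random(), side="right"))
        mu = visits / total_time              # tau-weighted average of empirical laws
    return mu

K = np.array([[0.5, 0.3, 0.1],
              [0.2, 0.5, 0.2],
              [0.1, 0.3, 0.5]])
mu_hat = vanilla_qsd(K)
print(mu_hat)
```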

In [23,24], the above method is extended to the stochastic approximation framework:

where denotes the projection onto the probability simplex, and is the step size satisfying and . Specifically, if for , then under suitable conditions they obtain for some matrix V [23,24]. If the Polyak–Ruppert averaging technique is applied to generate the following:

then the convergence rate of becomes [23,24].

The simulation schemes (7) and (8) need to sample the initial states according to and to accumulate the empirical distributions and pointwise at each x. Thus, they are suitable for a finite state space, where is a probability vector stored in tabular form. In (8), there is no need to record all exit times , but the additional projection operation in (8) is computationally expensive since its cost is where [38,39].
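For reference, a standard sort-based Euclidean projection onto the probability simplex is sketched below; the sort dominates its cost. This is a well-known generic algorithm and not necessarily the exact procedure of [38,39], and the step in the usage lines is a hypothetical stochastic-approximation update that leaves the simplex.

```python
import numpy as np

def project_to_simplex(v):
    """Euclidean projection of v onto {p : p >= 0, sum(p) = 1} (sort-based)."""
    v = np.asarray(v, dtype=float)
    u = np.sort(v)[::-1]                               # entries in decreasing order
    css = np.cumsum(u)
    k = np.arange(1, v.size + 1)
    rho = np.nonzero(u + (1.0 - css) / k > 0)[0][-1]   # last index passing the test
    shift = (1.0 - css[rho]) / (rho + 1)
    return np.maximum(v + shift, 0.0)

# Usage: a hypothetical update pushes one coordinate negative; projecting restores
# a probability vector.
mu = np.array([0.2, 0.3, 0.5])
step = mu + 0.5 * np.array([0.4, -0.7, 0.1])
print(project_to_simplex(step))
```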

3. Learn Quasi-Stationary Distribution

We focus on the computation of the expression of the quasi-stationary distribution. In particular, when this distribution is parametrized in a certain manner by $\theta$, we can extend the tabular form for finite-state Markov chains to any flexible form, even neural networks for probability density functions in $\mathbb{R}^d$. However, we do not pursue this representation and expressivity issue here and restrict our discussion to finite state spaces only, to illustrate our main idea first. In a finite state space, $\alpha_\theta(x)$ for $x \in E$ can simply be described as a softmax function with parameter $\theta \in \mathbb{R}^{|E|}$, i.e., $\alpha_\theta(x) = e^{\theta_x} / \sum_{y \in E} e^{\theta_y}$. This introduces no representation error. For the generalization to continuous spaces in jump and diffusion processes, or even for a huge finite state space, a good representation of $\alpha_\theta$ is important in practice.
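A minimal sketch of the softmax parametrization and its Jacobian, which is all a tabular actor needs; the function names and the value of `theta` are illustrative. It also shows that adding a constant to every component of $\theta$ leaves $\alpha_\theta$ unchanged, so the parametrization has one redundant direction.

```python
import numpy as np

def softmax(theta):
    """alpha_theta(x) = exp(theta_x) / sum_y exp(theta_y), computed stably."""
    z = np.exp(theta - np.max(theta))
    return z / z.sum()

def softmax_jacobian(theta):
    """J[y, j] = d alpha_theta(y) / d theta_j = alpha(y) * (delta_yj - alpha(j))."""
    a = softmax(theta)
    return np.diag(a) - np.outer(a, a)

theta = np.array([0.3, -1.2, 2.0, 0.0])
alpha = softmax(theta)
same = np.allclose(softmax(theta + 5.0), alpha)   # shift invariance of the softmax
print(alpha, same)
```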

In this section, we shall formulate our QSD problem in terms of reinforcement learning (RL) so that the problem of seeking optimal parameters becomes a policy optimization problem. We derive the policy gradient theorem to construct a gradient descent method for the optimal parameter. We then show a method for designing actor-critic algorithms based on stochastic optimization.

3.1. Formulation of RL and Policy Gradient Theorem

Before introducing the RL method of our QSD problem, we develop a general formulation by introducing the KL-divergence between two path distributions.

Let and be two families of Markovian kernels on in parametric forms with the same set of parameters . Assume both and are ergodic for any . Let and denote a path up to time T by . Define the path distributions under the Markov chain kernel and , respectively.

Define the KL divergence from to on :

where the expectation is for the path generated by the transition kernel , and the following is called the (one-step) reward.

Define the average reward as the time averaged negative KL divergence in the limit of .

Due to ergodicity of , where is the invariant measure of , is independent of initial state . Obviously, for any .
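Since the reward formula itself is not reproduced above, the sketch below assumes the standard reading of a path-space KL divergence: $r(x, y) = \log Q(x, y) - \log P(x, y)$, where P and Q are the two one-step kernels. Under this assumption the average reward equals $-\sum_x \pi(x)\,\mathrm{KL}(P(x,\cdot)\,\|\,Q(x,\cdot))$, and the code compares this closed form with a time average along one simulated path, which is what ergodicity justifies. The matrices are hypothetical.

```python
import numpy as np

def stationary(P):
    """Stationary distribution of an irreducible stochastic matrix P."""
    n = P.shape[0]
    A = np.vstack([(P - np.eye(n)).T, np.ones(n)])
    b = np.append(np.zeros(n), 1.0)
    return np.linalg.lstsq(A, b, rcond=None)[0]

def average_reward(P, Q):
    """Exact R = sum_x pi(x) sum_y P(x,y) r(x,y), with r = log Q - log P (assumed)."""
    r = np.log(Q) - np.log(P)
    return stationary(P) @ (P * r).sum(axis=1)   # = -sum_x pi(x) KL(P(x,.) || Q(x,.))

def average_reward_mc(P, Q, T=100_000, seed=0):
    """Ergodic estimate: time average of r(X_t, X_{t+1}) along one path of P."""
    rng = np.random.default_rng(seed)
    r = np.log(Q) - np.log(P)
    cum = np.cumsum(P, axis=1)
    n = P.shape[0]
    x, total = 0, 0.0
    for _ in range(T):
        y = min(int(np.searchsorted(cum[x], rng.random(), side="right")), n - 1)
        total += r[x, y]
        x = y
    return total / T

P = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.3, 0.3, 0.4]])
Q = np.array([[0.4, 0.4, 0.2], [0.3, 0.4, 0.3], [0.2, 0.2, 0.6]])
R_exact, R_mc = average_reward(P, Q), average_reward_mc(P, Q)
print(R_exact, R_mc)
```

The closed form is never positive and vanishes when the two kernels coincide, in line with Property 1 below.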

Property 1.

The following are equivalent:

1. reaches its maximal value 0 at ;
2. in for any ;
3. ;
4. .

Proof.

We only need to show . It is easy to see that

If , since , then

Thus, we have . □

The above property establishes the relationship between the RL problem and QSD problem.

We now state our main theoretical result, which is the foundation of the algorithm developed later. This theorem can be regarded as a version of the policy gradient theorem used by policy gradient methods in reinforcement learning [31].

Define the following value function ([31], Chapter 13).

Clearly, V also depends on θ, although we do not write this dependence explicitly.

Theorem 1 (policy gradient theorem).

We have the following two properties:

1. At any θ and for any , the following Bellman-type equation holds for the value function V and the average reward :

2. The gradient of the average reward is the following:

where expectations are for the joint distribution where is the stationary measure of .

Proof.

We first prove the Bellman equation and then use it to derive the gradient of the average reward . For any , by writing and defining

we have the following:

which proves (15); in other words, we have the following.

Next, we compute the gradient of . By the trivial identity

and the definition (12), we can write the gradient of as follows.

We keep the term in the first line here, even though it makes no contribution (in fact, adding any constant to it is also fine). Since this equation holds for every state x on the right-hand side, we take the expectation with respect to , the stationary distribution of . Thus, we have the following.

In fact, we can add any constant number b (independent of x and y) inside the square bracket of the last line without changing the equality, due to the following fact similar to (18): . (16) is a special case of . □
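The gradient formula can be checked numerically. The sketch assumes the reward $r = \log Q_\theta - \log P_\theta$ and evaluates $\mathbb{E}\big[(r - R + V(y))\,\nabla \log P_\theta(x, y) + \nabla r(x, y)\big]$ over $x \sim \pi$, $y \sim P_\theta(x, \cdot)$, which is the standard average-reward form with baseline b = R and which we assume corresponds to (16); it then compares the result with a central finite difference of the exact average reward. The scalar-parameter families built from random matrices `A`, `B`, `C`, `D` are hypothetical test cases.

```python
import numpy as np

def stationary(P):
    n = P.shape[0]
    A = np.vstack([(P - np.eye(n)).T, np.ones(n)])
    return np.linalg.lstsq(A, np.append(np.zeros(n), 1.0), rcond=None)[0]

def row_softmax(L):
    Z = np.exp(L - L.max(axis=1, keepdims=True))
    return Z / Z.sum(axis=1, keepdims=True)

# Hypothetical families: P_theta = row_softmax(A + theta B), Q_theta = row_softmax(C + theta D).
rng = np.random.default_rng(1)
A, B, C, D = (rng.normal(size=(4, 4)) for _ in range(4))

def kernels(theta):
    return row_softmax(A + theta * B), row_softmax(C + theta * D)

def average_reward(theta):
    P, Q = kernels(theta)
    r = np.log(Q) - np.log(P)                        # one-step reward (assumed form)
    return stationary(P) @ (P * r).sum(axis=1)

def policy_gradient(theta):
    P, Q = kernels(theta)
    n = P.shape[0]
    pi = stationary(P)
    r = np.log(Q) - np.log(P)
    rbar = (P * r).sum(axis=1)
    R = pi @ rbar
    # Differential value function: V = rbar - R + P V, pinned down by pi @ V = 0.
    V = np.linalg.lstsq(np.vstack([np.eye(n) - P, pi]),
                        np.append(rbar - R, 0.0), rcond=None)[0]
    dlogP = B - (P * B).sum(axis=1, keepdims=True)   # d/dtheta log P_theta(x, y)
    dlogQ = D - (Q * D).sum(axis=1, keepdims=True)   # d/dtheta log Q_theta(x, y)
    dr = dlogQ - dlogP                               # d/dtheta r_theta(x, y)
    integrand = (r - R + V[None, :]) * dlogP + dr    # V[None, :] supplies V(y)
    return pi @ (P * integrand).sum(axis=1)

theta0, h = 0.3, 1e-5
fd = (average_reward(theta0 + h) - average_reward(theta0 - h)) / (2 * h)
pg = policy_gradient(theta0)
print(fd, pg)
```

Replacing R in the bracket by any other constant leaves `pg` unchanged, because each row of `P * dlogP` sums to zero.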

Remark 1.

As shown in the proof, (16) holds if at the right-hand side is replaced by any constant number b. is a good choice to reduce the variance since can be regarded as the expectation of .

Remark 2.

Remark 3.

The name “policy” here refers to the role of θ as the policy that a decision maker adjusts to improve the reward .

3.2. Learn QSD

Now, we discuss how to connect the QSD with the results of the previous subsection. In view of Equation (5), we introduce as the one-step distribution when starting from the initial distribution ; in other words, we have the following.

By (5), is a QSD if and only if . However, we do not directly compare these two distributions and . Instead, we consider the Markovian kernels they induce via (4): and . Our approach is to consider a KL divergence similar to (11) between the two kernels and , since if and only if . In this manner, one can view and (note ) as the two transition matrices and of the previous subsection, in which the parameter here is in fact the distribution .

To further represent the distribution $\alpha$, which is a (probability mass) function on $E$, we propose a parametrized family for $\alpha$ in the form $\alpha_\theta$, where $\theta$ is a generic parameter. In the simplest case, $\alpha_\theta$ takes the so-called softmax form $\alpha_\theta(x) = e^{\theta_x} / \sum_{y \in E} e^{\theta_y}$ for $x \in E$. This parametrization represents $\alpha$ without any approximation error for a finite state space, and the effective space of $\theta$ is just . For certain problems, particularly with a large state space, if one has some prior knowledge about the structure of the function $\alpha$ on $E$, one might propose other parametric forms of $\alpha_\theta$ in which the dimension of $\theta$ is less than the cardinality $|E|$, to improve efficiency, although this introduces extra representation error.

For any given , the corresponding Markovian kernel is defined in (4), and is defined by (19). is likewise defined by (4). To use the formulation in Section 3.1, we choose and . Define the objective function as before:

where the following is the case.

The value function is defined similarly. Theorem 1 now provides the expression of the following gradient:

where and where is the stationary measure of .
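The objective of this subsection can be evaluated exactly for a small chain. The sketch assumes $P_\theta = K_{\alpha_\theta}$ and $Q_\theta = K_{\beta_\theta}$ with $\beta_\theta = \alpha_\theta K_{\alpha_\theta}$ and $\alpha_\theta$ the softmax of $\theta$, so that $R(\theta) = -\sum_x \pi_\theta(x)\,\mathrm{KL}(K_{\alpha_\theta}(x,\cdot)\,\|\,K_{\beta_\theta}(x,\cdot))$; it checks that R vanishes at the QSD of a hypothetical 3-state matrix and is negative elsewhere. The names and the example are ours.

```python
import numpy as np

def stationary(P):
    n = P.shape[0]
    A = np.vstack([(P - np.eye(n)).T, np.ones(n)])
    return np.linalg.lstsq(A, np.append(np.zeros(n), 1.0), rcond=None)[0]

def K_mu(K, mu):
    """Definition 1: K_mu = K + (1 - K 1) mu."""
    return K + np.outer(1.0 - K.sum(axis=1), mu)

def qsd_objective(theta, K):
    """R(theta) = -sum_x pi(x) KL(K_alpha(x, .) || K_beta(x, .)); K_alpha, K_beta > 0 assumed."""
    alpha = np.exp(theta - theta.max())
    alpha /= alpha.sum()
    Ka = K_mu(K, alpha)
    beta = alpha @ Ka                     # one-step distribution started from alpha
    Kb = K_mu(K, beta)
    pi = stationary(Ka)                   # stationary measure of the sampling kernel
    kl_rows = (Ka * (np.log(Ka) - np.log(Kb))).sum(axis=1)
    return -pi @ kl_rows

K = np.array([[0.5, 0.3, 0.1],
              [0.2, 0.5, 0.2],
              [0.1, 0.3, 0.5]])
theta_qsd = np.log([2 / 7, 3 / 7, 2 / 7])  # the QSD of this K
print(qsd_objective(theta_qsd, K), qsd_objective(np.zeros(3), K))
```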

The optimal for the QSD maximizes , and this can be solved by the gradient ascent algorithm:

where is the step size. In practice, the stochastic gradient is applied:

where are sampled based on the Markovian kernel (see Algorithm 1) and the differential temporal-difference (TD) error is as follows.

Next, we address the remaining question of how to compute the value function V and the average reward in the TD error (22). In addition, we also need to show the details of computing and .

3.3. Actor-Critic Algorithm

With the stochastic gradient method (21), we can obtain the optimal policy . We refer to (21) as the learning dynamics for the policy, generally known as the actor. To calculate the value function V appearing in , we need another learning dynamics, called the critic. The overall policy-gradient method is then termed the actor-critic method.

We start with the Bellman Equation (15) for the value function and consider the following mean-square-error loss:

where is any distribution supported on . The loss vanishes if and only if V satisfies the Bellman Equation (15), i.e., V is the value function. To learn V, we introduce a function approximation for the value function, , with the parameter , and minimize the following:

by the semi-gradient method ([31], Chapter 9).

Here, the term is frozen first and then approximated by since it could be treated as a prior guess of the value function for the future state.

Then, for the gradient descent iteration where is the step size, we obtain the following stochastic gradient iteration:

where the differential temporal-difference (TD) error is defined in (22).

Here, for the sake of simplicity, are the same samples as in the actor method for . This means that distribution above is chosen as used for the gradient .

Next, we consider the estimation of the average reward using the Bellman Equation (15).

Let be the estimate of the reward at time t. We can update our estimate of the reward every time a transition occurs as follows:

where is the TD error before

In conclusion, (21), (23) and (24) together constitute the actor-critic algorithm, which is summarized in Algorithm 1. We remark that Algorithm 1 can easily be adapted to the mini-batch gradient method, where several copies of are sampled and their average is used to update the parameters. The stationary distribution of is sampled by running the corresponding Markov chain for several steps with a “warm start”: the initial for is set to the final state generated in the previous iteration at . In practice, the length of this “burn-in” period can be set to just one step for efficiency.
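Putting the pieces together, the following is a compact single-sample actor-critic sketch in NumPy. It is not a transcription of Algorithm 1: the choices $P_\theta = K_{\alpha_\theta}$ and $Q_\theta = K_{\beta_\theta}$, the reward $\log K_\beta - \log K_\alpha$, the exact softmax Jacobians, the tabular critic, the step sizes and the 3-state test chain (each state exits with probability 0.5 and otherwise jumps uniformly, so its QSD is uniform) are all our assumptions. The actor follows the stochastic gradient $\delta\,\nabla \log K_\alpha(x, y) + \nabla r(x, y)$, the critic performs tabular TD(0) updates, and the average reward is tracked with the same TD error.

```python
import numpy as np

def softmax(theta):
    z = np.exp(theta - theta.max())
    return z / z.sum()

def actor_critic_qsd(K, theta0, n_steps=25_000, lr_theta=0.02, lr_v=0.05,
                     lr_r=0.01, seed=0):
    """Single-sample actor-critic sketch for the QSD of a sub-Markovian matrix K."""
    rng = np.random.default_rng(seed)
    n = K.shape[0]
    out = 1.0 - K.sum(axis=1)                      # exit probabilities 1 - K(x, E)
    theta = np.array(theta0, dtype=float)
    V = np.zeros(n)                                # critic: tabular value function
    R_hat = 0.0                                    # running average-reward estimate
    x = int(rng.integers(n))
    for _ in range(n_steps):
        alpha = softmax(theta)
        J = np.diag(alpha) - np.outer(alpha, alpha)           # d alpha(y) / d theta_j
        s = alpha @ out                                       # mass killed in one step
        beta = alpha @ K + s * alpha                          # beta = alpha K_alpha
        J_beta = K.T @ J + np.outer(alpha, out @ J) + s * J   # d beta(y) / d theta_j
        # Sample y ~ K_alpha(x, .): move with K~, regenerate from alpha if killed.
        y = int(rng.choice(n + 1, p=np.append(K[x], out[x])))
        if y == n:
            y = int(rng.choice(n, p=alpha))
        ka = K[x, y] + out[x] * alpha[y]
        kb = K[x, y] + out[x] * beta[y]
        r = np.log(kb) - np.log(ka)                # one-step reward
        g_log_ka = out[x] * J[y] / ka              # gradient of log K_alpha(x, y)
        g_log_kb = out[x] * J_beta[y] / kb         # gradient of log K_beta(x, y)
        delta = r - R_hat + V[y] - V[x]            # differential TD error
        theta += lr_theta * (delta * g_log_ka + (g_log_kb - g_log_ka))  # actor (ascent)
        V[x] += lr_v * delta                       # critic: tabular TD(0)
        R_hat += lr_r * delta                      # average-reward update
        x = y                                      # warm start for the next step
    return softmax(theta)

eps = 0.5
K_loop = np.full((3, 3), (1.0 - eps) / 3.0)        # hypothetical chain with uniform QSD
theta_init = [1.0, 0.0, -1.0]
alpha_hat = actor_critic_qsd(K_loop, theta_init)
init_err = np.abs(softmax(np.array(theta_init)) - 1 / 3).sum()
final_err = np.abs(alpha_hat - 1 / 3).sum()
print(alpha_hat, init_err, final_err)
```

Using $V(x)$ and the running estimate of R inside $\delta$ does not change the expected actor update, since $\sum_y \nabla K_\alpha(x, y) = 0$; these terms only reduce variance.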

Remark 4.

Finally, we remark on the computation of and in Algorithm 1. The details are shown in Appendix A. We comment that the main computational cost is the function , which has to be pre-computed and stored. If the problem has some special structure, the function could be approximated in parametric form. Another special case is our second example where .

4. Numerical Experiment

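In this section, we present two examples to demonstrate Algorithm 1. We refer to the algorithms (7), (8) and (9) of Section 2.2, used in [23,24], as the Vanilla Algorithm, the Projection Algorithm and the Polyak Averaging Algorithm, respectively. Let 0 be the absorbing state and $1, \dots, N$ the non-absorbing states; the Markov transition matrix on $\{0, 1, \dots, N\}$ is denoted by the following:

$$\tilde K = \begin{pmatrix} 1 & \mathbf{0}^\top \\ \mathbf{1} - K\mathbf{1} & K \end{pmatrix},$$

where K is an N-by-N sub-Markovian matrix. For Algorithm 1, the distribution $\alpha_\theta$ on $\{1, \dots, N\}$ is always parametrized as follows:

$$\alpha_\theta(i) = \frac{e^{\theta_i}}{\sum_{j=1}^{N} e^{\theta_j}}, \quad i = 1, \dots, N,$$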

and the value function is represented in tabular form for simplicity:

where .

4.1. Loopy Markov Chain

We test a toy example of the three-state loopy Markov chain, which was considered in [23,24]. The transition probability matrix for the four states is as follows.

The state 0 is the absorbing state ∂ and . K is the sub-matrix of corresponding to the states . With the probability , the process exits directly from state 1, 2 or 3. The true quasi-stationary distribution of this example is the uniform distribution for any .

To show the advantage of our algorithm, we consider two cases: (1) and (2) . For a larger , the original Markov chain exits more easily; thus, each iteration takes less time, but the Vanilla Algorithm converges more slowly.

In order to quantify the accuracy of the learned quasi-stationary distribution, we compute the norm of the error between the learned quasi-stationary distribution and the true values.

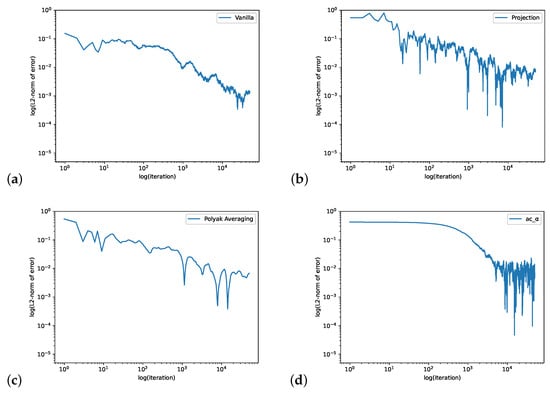

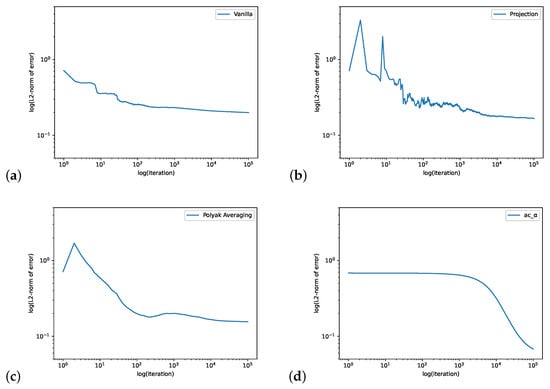

In Figure 1, we compute the QSD when . We set the initial value , the learning rate and the batch size 4. The step size for the Projection Algorithm is . Figure 2 is for the case when . We set the initial value , the learning rate and the batch size 32. The step size for the Projection Algorithm is .

Figure 1.

The loopy Markov chain example with . The figure shows the log–log plots of -norm error of the Vanilla Algorithm (a), Projection Algorithm (b), Polyak Averaging Algorithm (c) and our actor-critic algorithm (d). The iteration for the actor-critic algorithm is defined as one step of gradient descent (“t” in Algorithm 1).

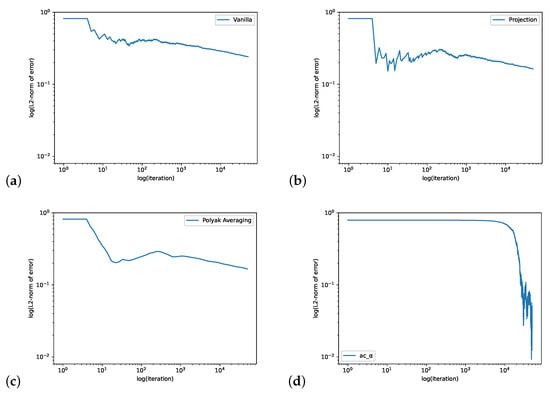

Figure 2.

The loopy Markov chain example with . The figure shows the log–log plots of -norm error of Vanilla Algorithm (a), Projection Algorithm (b), Polyak Averaging Algorithm (c) and our actor-critic algorithm (d).

4.2. M/M/1/N Queue with Finite Capacity and Absorption

Our second example is an M/M/1 queue with finite capacity. State 0 is set as an absorbing state. The transition probability matrix on takes the following form:

where , , . means a higher chance to jump to the right than to the left. A larger will have less probability of exiting . Note that for . Thus, for any if and Then, if and by (20), the gradient is simplified as follows:

where Y follows distribution .

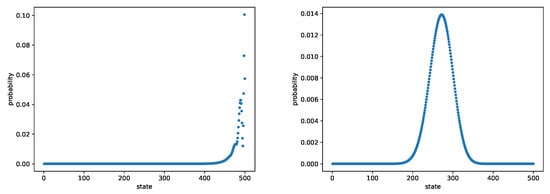

We consider two cases: (1) a constant and (2) a state-dependent . Note that gives an equal probability of jumping to the left and to the right. Thus, in case (1), there is a boundary layer at the rightmost end, and in case (2), we expect to see a peak of the QSD near . Figure 3 shows the true QSD in both cases. We set .

Figure 3.

The QSD for M/M/1/500 queue with (left) and (right).
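The qualitative shapes described above can be reproduced with a small eigenvector computation. Because the transition matrix of this example is not reproduced here, the sketch assumes a birth–death form: from state i the queue moves right with probability $p_i$ and left with probability $1 - p_i$, a right move is blocked at the capacity N (the chain stays), and a left move from state 1 is absorption at 0. It uses N = 100 rather than 500 to keep the run short, and the tanh profile for the state-dependent case is a hypothetical choice with $p = 1/2$ at state 50.

```python
import numpy as np

def birth_death_K(p):
    """Sub-Markovian K on states 1..N (index i <-> state i + 1) of an assumed queue."""
    N = len(p)
    K = np.zeros((N, N))
    for i in range(N):
        if i + 1 < N:
            K[i, i + 1] = p[i]          # move right
        else:
            K[i, i] = p[i]              # capacity N: a right move is blocked
        if i > 0:
            K[i, i - 1] = 1.0 - p[i]    # move left (from state 1 this is absorption)
    return K

def qsd_eig(K):
    """Left Perron eigenvector of K, normalized to a probability vector."""
    w, v = np.linalg.eig(K.T)
    vec = np.real(v[:, np.argmax(np.real(w))])
    return vec / vec.sum()

N = 100
states = np.arange(1, N + 1)
alpha_const = qsd_eig(birth_death_K(np.full(N, 0.6)))                         # drift to the right
alpha_peak = qsd_eig(birth_death_K(0.5 + 0.1 * np.tanh((50 - states) / 5)))  # p = 1/2 at state 50
print(states[np.argmax(alpha_const)], states[np.argmax(alpha_peak)])
```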

In Figure 4, we consider the case when and compute errors. We set the initial value for and , , and the learning rate , with batch size 64. The step size for the Projection Algorithm is . Figure 5 plots the errors for the state-dependent . We set the initial value for , , for , , and for , and the learning rate , with batch size 128. The step size for the Projection Algorithm is . Both figures demonstrate that the actor-critic algorithm performs quite well on this example.

Figure 4.

The M/M/1/500 queue with . The figure shows the log–log plots of -norm error of Vanilla Algorithm (a), Projection Algorithm (b), Polyak Averaging Algorithm (c) and our actor-critic algorithm (d).

Figure 5.

The M/M/1/500 queue with . The figure shows the log–log plots of -norm error of Vanilla Algorithm (a), Projection Algorithm (b), Polyak Averaging Algorithm (c) and our actor-critic algorithm (d).

In Table 1, we compare the CPU time each algorithm needs on the M/M/1/500 queue to reach an accuracy of . Our algorithm takes less time on this example.

Table 1.

The CPU time of each algorithm on the M/M/1/500 queue to reach an accuracy of .

5. Summary and Conclusions

In this paper, we propose a reinforcement learning (RL) method for the quasi-stationary distribution (QSD) of discrete-time finite-state Markov chains. By minimizing the KL-divergence of two Markovian path distributions induced by the candidate distribution and the true target distribution, we introduce a formulation in terms of RL and derive the corresponding policy gradient theorem. We devise an actor-critic algorithm to learn the QSD in its parametrized form . This RL formulation can benefit from developments in RL methods and optimization theory. We illustrated our actor-critic method on two numerical examples using a simple tabular parametrization and gradient descent optimization. We observed that the advantage of our method is more pronounced for larger-scale problems.

We only demonstrate the preliminary mechanism of the idea here, and there is much room left for improving efficiency and for extensions in future work. The generalization from finite-state Markov chains to jump Markov processes and diffusions is under consideration. More importantly, for very large or high-dimensional state spaces, modern function approximation methods such as kernel methods or neural networks should be used for the distribution and the value function . The recent tremendous advances in optimization techniques for policy gradients in reinforcement learning could also contribute much to improving the efficiency of our current formulation.

Author Contributions

Conceptualization, Z.C. and L.L.; Investigation, Z.C.; Methodology, Z.C. and X.Z.; Software, Z.C.; Supervision, X.Z.; Writing—original draft, Z.C.; Writing—review & editing, L.L. and X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Government of Hong Kong, Grant Number 11305318; NSFC Grant Number 11871486.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

L.L. acknowledges the support of NSFC 11871486. X.Z. acknowledges the support of Hong Kong RGC GRF 11305318.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

In this appendix, we discuss the computation of the gradient of and . Note that is straightforward since we model in its parametrization form . By definition (4), we have the following:

and the following as well:

where . The vector for any x can be pre-computed and saved in tabular form.

By (19), the one-step distribution is computed below.

Here, the samples could be approximated by stationary distribution ; thus, one may simply use the known sample to replace with .

To find , we use stochastic approximation again.

References

- Collet, P.; Martínez, S.; Martín, J.S. Quasi-Stationary Distributions: Markov Chains, Diffusions and Dynamical Systems; Springer Science & Business Media: Cham, Switzerlands, 2012. [Google Scholar]

- Buckley, F.; Pollett, P. Analytical methods for a stochastic mainland–island metapopulation model. Ecol. Model. 2010, 221, 2526–2530. [Google Scholar] [CrossRef]

- Lambert, A. Population dynamics and random genealogies. Stoch. Model. 2008, 24, 45–163. [Google Scholar] [CrossRef]

- De Oliveira, M.M.; Dickman, R. Quasi-stationary distributions for models of heterogeneous catalysis. Phys. Stat. Mech. Appl. 2004, 343, 525–542. [Google Scholar] [CrossRef][Green Version]

- Dykman, M.I.; Horita, T.; Ross, J. Statistical distribution and stochastic resonance in a periodically driven chemical system. J. Chem. Phys. 1995, 103, 966–972. [Google Scholar] [CrossRef][Green Version]

- Artalejo, J.R.; Economou, A.; Lopez-Herrero, M.J. Stochastic epidemic models with random environment: Quasi-stationarity, extinction and final size. J. Math. Biol. 2013, 67, 799–831. [Google Scholar] [CrossRef] [PubMed]

- Clancy, D.; Mendy, S.T. Approximating the quasi-stationary distribution of the sis model for endemic infection. Methodol. Comput. Appl. Probab. 2011, 13, 603–618. [Google Scholar] [CrossRef]

- Sani, A.; Kroese, D.; Pollett, P. Stochastic models for the spread of hiv in a mobile heterosexual population. Math. Biosci. 2007, 208, 98–124. [Google Scholar] [CrossRef]

- Chan, D.C.; Pollett, P.K.; Weinstein, M.C. Quantitative risk stratification in markov chains with limiting conditional distributions. Med. Decis. Mak. 2009, 29, 532–540. [Google Scholar] [CrossRef]

- Berglund, N.; Landon, D. Mixed-mode oscillations and interspike interval statistics in the stochastic fitzhugh–nagumo model. Nonlinearity 2012, 25, 2303. [Google Scholar] [CrossRef]

- Landon, D. Perturbation et Excitabilité Dans des Modeles Stochastiques de Transmission de l’Influx Nerveux. Ph.D. Thesis, Université d’Orléans, Orléans, France, 2012. [Google Scholar]

- Gesù, G.D.; Lelièvre, T.; Peutrec, D.L.; Nectoux, B. Jump markov models and transition state theory: The quasi-stationary distribution approach. Faraday Discuss. 2017, 195, 469–495. [Google Scholar] [CrossRef]

- Lelièvre, T.; Nier, F. Low temperature asymptotics for quasistationary distributions in a bounded domain. Anal. PDE 2015, 8, 561–628. [Google Scholar] [CrossRef]

- Pollock, M.; Fearnhead, P.; Johansen, A.M.; Roberts, G.O. The scalable langevin exact algorithm: Bayesian inference for big data. arXiv 2016, arXiv:1609.03436. [Google Scholar]

- Wang, A.Q.; Roberts, G.O.; Steinsaltz, D. An approximation scheme for quasi-stationary distributions of killed diffusions. Stoch. Process. Appl. 2020, 130, 3193–3219. [Google Scholar] [CrossRef]

- Watkins, D.S. Fundamentals of Matrix Computations; John Wiley & Sons: Hoboken, NJ, USA, 2004; Volume 64.

- Bebbington, M. Parallel implementation of an aggregation/disaggregation method for evaluating quasi-stationary behavior in continuous-time Markov chains. Parallel Comput. 1997, 23, 1545–1559.

- Pollett, P.; Stewart, D. An efficient procedure for computing quasi-stationary distributions of Markov chains with sparse transition structure. Adv. Appl. Probab. 1994, 26, 68–79.

- Martínez, S.; San Martín, J. Quasi-stationary distributions for a Brownian motion with drift and associated limit laws. J. Appl. Probab. 1994, 31, 911–920.

- Aldous, D.; Flannery, B.; Palacios, J.L. Two applications of urn processes: The fringe analysis of search trees and the simulation of quasi-stationary distributions of Markov chains. Probab. Eng. Inform. Sci. 1988, 2, 293–307.

- Benaïm, M.; Cloez, B. A stochastic approximation approach to quasi-stationary distributions on finite spaces. Electron. Commun. Probab. 2015, 20, 1–13.

- De Oliveira, M.M.; Dickman, R. How to simulate the quasistationary state. Phys. Rev. E 2005, 71, 016129.

- Blanchet, J.; Glynn, P.; Zheng, S. Analysis of a stochastic approximation algorithm for computing quasi-stationary distributions. Adv. Appl. Probab. 2016, 48, 792–811.

- Zheng, S. Stochastic Approximation Algorithms in the Estimation of Quasi-Stationary Distribution of Finite and General State Space Markov Chains. Ph.D. Thesis, Columbia University, New York, NY, USA, 2014.

- Kushner, H.; Yin, G.G. Stochastic Approximation and Recursive Algorithms and Applications; Springer Science & Business Media: Cham, Switzerland, 2003; Volume 35.

- Polyak, B.T.; Juditsky, A.B. Acceleration of stochastic approximation by averaging. SIAM J. Control. Optim. 1992, 30, 838–855.

- Blei, D.M.; Kucukelbir, A.; McAuliffe, J.D. Variational inference: A review for statisticians. J. Am. Stat. Assoc. 2017, 112, 859–877.

- Jordan, M.I.; Ghahramani, Z.; Jaakkola, T.S.; Saul, L.K. An Introduction to Variational Methods for Graphical Models. Mach. Learn. 1999, 37, 183–233.

- Liu, Q.; Wang, D. Stein variational gradient descent: A general purpose Bayesian inference algorithm. In Advances in Neural Information Processing Systems; Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

- Rezende, D.; Mohamed, S. Variational inference with normalizing flows. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Bach, F., Blei, D., Eds.; Volume 37, pp. 1530–1538.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

- Popova, M.; Isayev, O.; Tropsha, A. Deep reinforcement learning for de novo drug design. Sci. Adv. 2018, 4, eaap7885.

- Silver, D.; Hubert, T.; Schrittwieser, J.; Antonoglou, I.; Lai, M.; Guez, A.; Lanctot, M.; Sifre, L.; Kumaran, D.; Graepel, T.; et al. A general reinforcement learning algorithm that masters chess, shogi, and go through self-play. Science 2018, 362, 1140–1144.

- Rose, D.C.; Mair, J.F.; Garrahan, J.P. A reinforcement learning approach to rare trajectory sampling. New J. Phys. 2021, 23, 013013.

- Méléard, S.; Villemonais, D. Quasi-stationary distributions and population processes. Probab. Surv. 2012, 9, 340–410.

- Blanchet, J.; Glynn, P.; Zheng, S. Empirical analysis of a stochastic approximation approach for computing quasi-stationary distributions. In EVOLVE—A Bridge between Probability, Set Oriented Numerics, and Evolutionary Computation II; Schütze, O., Coello, C.A.C., Tantar, A.-A., Tantar, E., Bouvry, P., Moral, P.D., Legrand, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 19–37.

- Boyd, S.P.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Wang, W.; Carreira-Perpiñán, M.A. Projection onto the probability simplex: An efficient algorithm with a simple proof, and an application. arXiv 2013, arXiv:1309.1541.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).