1. Introduction

Distributed source coding (DSC) is a non-traditional data compression architecture in which two or more correlated sources are encoded independently and decoded jointly. It differs from traditional multi-source joint coding schemes, which require the encoders to communicate with each other. DSC is based on two important theorems: the first is the Slepian–Wolf theorem [1], which proves that, for lossless compression, independent coding and joint coding of correlated sources are equally effective; the second is the Wyner–Ziv theorem [2], which establishes the foundation for lossy DSC schemes. Today, DSC is used in many practical applications, such as distributed video coding [3], hyperspectral image compression [4], multi-view video coding [5], wireless sensor network communication [6], image authentication [7], and biometric information recognition [8].

Consider the following application scenario: two correlated wireless sensor nodes (i.e., sources X and Y) send data to a base station (the receiver). As long as the code rates $R_Y \ge H(Y)$ and $R_X \ge H(X|Y)$ are met after Y and X are encoded, lossless compression can be achieved. Source Y can be encoded using any traditional entropy coder. If X and Y can communicate with each other, it is straightforward to encode source X at a rate $R_X \ge H(X|Y)$ with an existing source coding scheme. However, communication between encoders consumes computing resources and the energy of the sensor nodes. Fortunately, the Slepian–Wolf theorem guarantees that even if no communication is established between the two nodes, source X can still be encoded at a rate $R_X \ge H(X|Y)$ and be losslessly decoded at the decoder, although the encoder for source X knows nothing about source Y (hence the name distributed source coding). This is the asymmetric Slepian–Wolf problem that this work focuses on. In this scenario, source Y is regarded as the side information, which is encoded using a traditional source code at a rate $R_Y \ge H(Y)$. The coding scheme for source X is quite different, which is why the word ’asymmetric’ is used. Therefore, the research goals of asymmetric Slepian–Wolf coding [9,10] are to design a method that enables X to be encoded at a rate $R_X$ less than $H(X)$ without knowing the characteristics of Y, and to use the correlation between X and Y to losslessly recover X during decoding. The coding of Y is not the research focus of the asymmetric Slepian–Wolf problem; Y can usually be encoded by any lossless coding technology.
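As a numerical illustration of the rate bounds discussed above, the following sketch evaluates $H(X)$, $H(Y)$ and $H(X|Y)$ for a pair of binary sources. It assumes that X is uniform and that Y is X passed through a binary symmetric channel with crossover probability `eps`; the function names and the value `eps = 0.1` are illustrative choices, not taken from the paper.

```python
import math

def binary_entropy(p: float) -> float:
    """Binary entropy H_b(p) in bits; taken as 0 at p = 0 or p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)

def slepian_wolf_bounds(eps: float) -> dict:
    """Entropies for uniform binary X and Y = X xor BSC(eps) noise (assumed model)."""
    h_x = 1.0                          # uniform binary source
    h_y = 1.0                          # BSC output of a uniform input is uniform
    h_x_given_y = binary_entropy(eps)  # uncertainty about X left after seeing Y
    return {
        "H(X)": h_x,
        "H(Y)": h_y,
        "H(X|Y)": h_x_given_y,
        "H(X,Y)": h_y + h_x_given_y,   # chain rule
    }

bounds = slepian_wolf_bounds(0.1)
```

Under this assumed model, encoding Y at about `bounds["H(Y)"]` bits/symbol and X at about `bounds["H(X|Y)"]` bits/symbol corresponds to the asymmetric operating point described in the text, and the total is smaller than `H(X) + H(Y)`.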

Although the coding theory of DSC was proposed long ago, extensive research on specific DSC coding schemes did not begin until the 21st century, when the important DSC scheme based on syndromes (DISCUS) was proposed for the asymmetric Slepian–Wolf problem in [11]. After this, DSC schemes based on channel coding were proposed successively, including implementations using Turbo codes [12], LDPC codes [13], and Polar codes [14]. The basic idea of DSC schemes based on channel codes is that the encoder only needs to send the syndromes or parity bits generated from the source sequence X to the decoder, without any knowledge of Y. The number of bits required to transmit the syndromes is smaller than the number of bits required to transmit X directly using traditional source codes, thereby achieving further compression of X. The essence of the decoding process is that the decoder uses the received syndromes to ‘correct’ the side information sequence Y (which can be regarded as an erroneous version of X). The coding methods of DSC schemes based on channel codes do not depend on the statistical characteristics of the source. This means that such schemes can only use the correlation between the sources, and cannot exploit the internal correlation among the symbols of source X. Furthermore, DSC schemes based on channel codes perform well only when coding long blocks of source symbols.
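The syndrome idea described above can be illustrated with a toy example. The sketch below uses the (7,4) Hamming code, which is chosen here only for brevity and is not one of the codes used in the cited schemes; it assumes that a 7-bit block of X and the corresponding block of Y differ in at most one position. The function names are hypothetical.

```python
# Parity-check matrix of the (7,4) Hamming code: column j (1-indexed) holds
# the binary digits of j, so a single flipped bit at position j gives syndrome j.
H = [[(j >> k) & 1 for j in range(1, 8)] for k in range(3)]

def syndrome(bits):
    """3-bit syndrome H * bits over GF(2)."""
    return [sum(h * b for h, b in zip(row, bits)) % 2 for row in H]

def dsc_encode(x):
    """Encoder side: compress a 7-bit block x to its 3-bit syndrome (y is not needed)."""
    return syndrome(x)

def dsc_decode(s_x, y):
    """Decoder side: 'correct' the side information y into x.

    Assumes x and y differ in at most one of the 7 positions.
    """
    diff = [a ^ b for a, b in zip(s_x, syndrome(y))]  # syndrome of (x xor y)
    pos = diff[0] + 2 * diff[1] + 4 * diff[2]         # 0 means x == y
    x_hat = list(y)
    if pos:
        x_hat[pos - 1] ^= 1                           # flip the differing bit
    return x_hat

x = [1, 0, 1, 1, 0, 0, 1]
y = [1, 0, 1, 0, 0, 0, 1]   # side information: differs from x in position 4
x_hat = dsc_decode(dsc_encode(x), y)
```

Here 7 source bits are represented by 3 transmitted bits, and the decoder recovers `x` only because `y` is close to it. Note that the encoder never inspects the statistics of `x`, which matches the remark that such schemes exploit only the inter-source correlation.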

In addition to DSC schemes based on channel codes, many scholars have also studied DSC based on source codes, among which schemes based on arithmetic coding are the most popular. Distributed arithmetic coding (DAC) [15] exploits the fact that arithmetic coding can freely adjust the encoding probability distribution of symbols during the encoding process; it obtains a shorter codelength by purposely expanding the probability interval of each symbol. However, the expanded probability intervals overlap, which may cause decoding ambiguity because the received codeword may fall into an overlapping area during decoding. The decoder needs to build a decoding tree to save all possible decoding results, and uses the maximum a posteriori (MAP) decoding algorithm, assisted by the side information sequence, to find the decoded sequence with the largest cumulative product of posterior probabilities. Compared with DSC schemes based on channel codes, DAC adapts better to non-stationary sources and performs better on smaller data blocks [9]. After DAC was proposed, a series of similar DSC schemes based on expanding the probability intervals followed. In [16], a Slepian–Wolf code that is a quasi-arithmetic code based on overlapping coding intervals is described and applied to a distributed coding platform. Two non-binary DAC compression schemes, multi-interval DAC and Huffman-coded DAC, are proposed in [17]; they extend DAC from binary to non-binary sources. In [18], another DAC scheme was proposed in which the encoder imitates the operation of the decoder and puts ambiguous symbols in a list. If the decoder detects that decoding has failed (e.g., by using a cyclic redundancy check code), it can request the encoder to send the stored ambiguous symbols through a feedback channel. In [19], the DAC scheme was extended to noisy communication by introducing a ‘forbidden symbol’ into DAC; the resulting scheme is referred to as DJSCAC. This is a very convenient way to improve decoding error performance. On the basis of DAC, the scheme adds a non-coding interval to the original probability intervals; this interval does not correspond to any symbol that can actually be encoded. The decoder determines whether the codeword is wrong by checking whether the ‘forbidden symbol’ is obtained during decoding. However, when a DSC scheme based on expanding probability intervals encodes a biased source (one in which the probability of one of the symbols is relatively large), the large probability leaves very little room for expanding the probability interval. This limits the potential compression gain of such schemes when the source has a highly skewed probability distribution, especially when a context model is used.
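The limitation for skewed sources can be made concrete with a small calculation. The sketch below assumes, as a simplification, that the probability `p_max` of the more likely symbol is enlarged multiplicatively by a factor `alpha`, and that the enlarged value cannot exceed 1 minus an optional forbidden-interval length. Since an ideal arithmetic coder spends $-\log_2 q$ bits on a symbol coded with probability $q$, the saving for that symbol is $\log_2 \alpha$. The function name and the parameterization are assumptions of this sketch.

```python
import math

def max_saving_bits(p_max: float, forbidden: float = 0.0) -> float:
    """Largest per-symbol saving (bits) for the more likely symbol.

    Assumes multiplicative enlargement alpha * p_max <= 1 - forbidden,
    so the largest admissible alpha is (1 - forbidden) / p_max.
    """
    alpha_max = (1.0 - forbidden) / p_max
    return math.log2(alpha_max)

mild = max_saving_bits(0.6)     # mildly skewed distribution
skewed = max_saving_bits(0.95)  # highly skewed distribution
```

Under these assumptions, `skewed` is roughly a tenth of `mild`, which illustrates why interval expansion yields little extra compression once a context model has made the coding distribution highly skewed.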

Another way to implement a DSC scheme based on arithmetic coding is to puncture the output bitstream of the arithmetic encoder. This is a very convenient way to obtain additional compression gain, and it is not affected by a skewed probability distribution of the source. A DSC scheme using a punctured quasi-arithmetic code was proposed in [20]: quasi-arithmetic coding is first used to encode the source, and the resulting bitstream is then punctured (some of its bits are deleted) to further reduce the bit rate. A similar scheme based on puncturing codewords is proposed in [21]. It uses arithmetic coding based on context models to encode the source symbols and then punctures the output bitstream to further reduce the code rate; a hierarchical interleaving scheme is used to avoid deleting the correct decoding path from the decoding tree too early. A coupled distributed arithmetic coding scheme, which also obtains additional compression gain by puncturing the arithmetic codeword, was described in [22]. This scheme divides the original binary source sequence into a pair of coupled streams before arithmetic coding, and the decoder recovers these streams to overcome the de-synchronization problem. As long as the two streams do not lose synchronization at the same time, the decoder can still recover the source sequence. However, the price of this coupled method is that the original correlation between adjacent symbols in the source sequence is broken. In addition, the punctured bits in the codeword cannot be directly recovered; the decoder needs to insert bits into the received bitstream to restore the original length of the codeword. Meanwhile, there is no correlation in the bitstream that can be used to assist the restoration of the discarded bits. The decoder needs to perform bit insertion operations on each received byte, which increases the decoding complexity.

Studies have established that a good arithmetic code-based DSC scheme needs to have the following characteristics at the same time: it should make full use of both the correlation between adjacent symbols within the source and the correlation between corresponding symbols of the two sources [23], and, while extending the compression capability of traditional arithmetic coding, it should also achieve a low bit error rate at the decoder. Taking into account the shortcomings of the DSC schemes that puncture the bitstream, puncturing the codeword can be replaced by puncturing the source sequence, that is, purging some of the symbols from the source sequence.

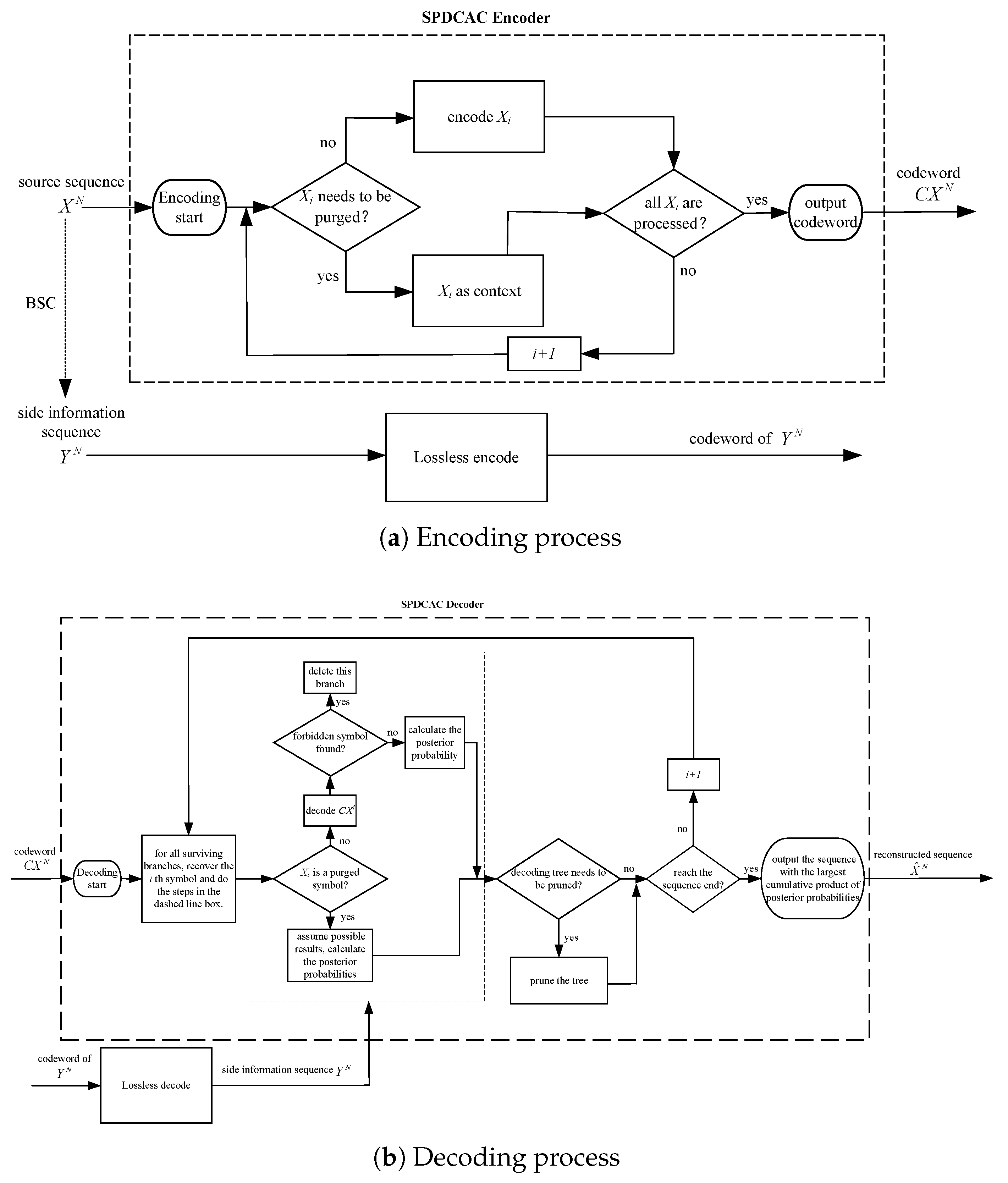

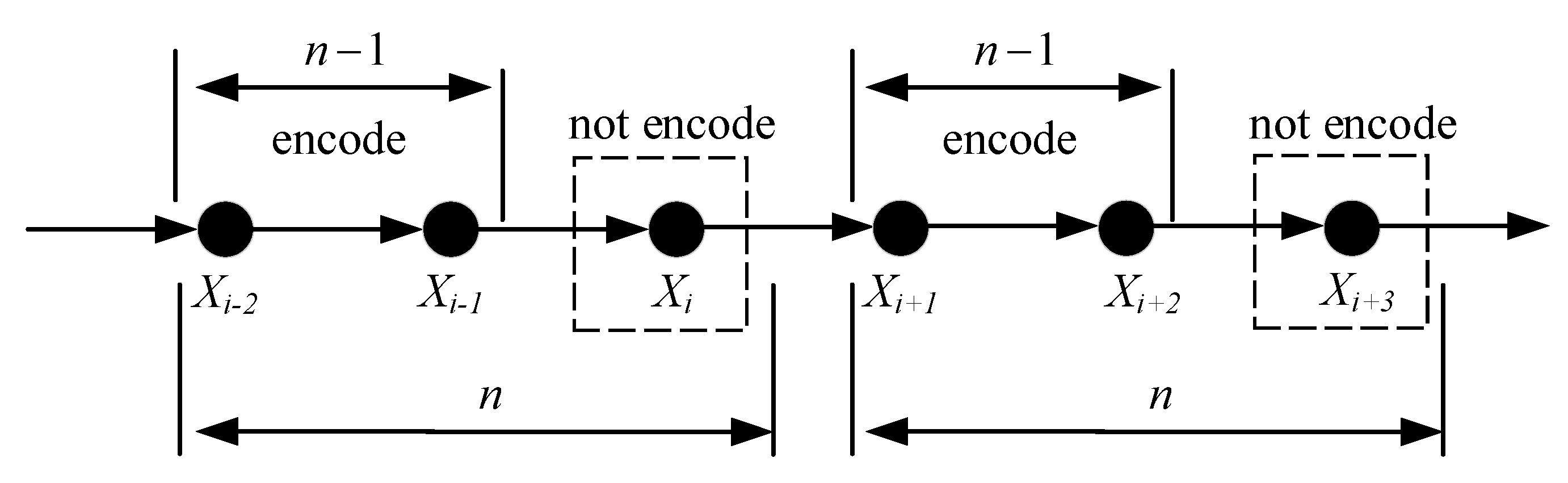

Based on the above analysis, a new DSC algorithm named source symbol purging-based distributed conditional arithmetic coding (SPDCAC) is proposed in this paper. Unlike the previous puncturing-based schemes, in which the punctured bits are simply discarded, the purged symbols in the proposed scheme are not only removed from the source sequence to further reduce the bit rate, but are also used as the context for coding the subsequent symbols. The advantage of this method is that the original correlation between adjacent symbols in the source sequence is preserved, and the additional compression gain is not affected by a skewed probability distribution of the source. Meanwhile, the decoder can still use the correlation between adjacent source symbols (the internal correlation of the source) to restore the purged symbols more accurately during decoding. An improved method for calculating the posterior probability for SPDCAC is also proposed. Compared with the traditional method in [15], the proposed method exhibits a better bit error rate performance in the decoding process. A forbidden symbol is also added to the coding probability distribution; it expedites error detection and further reduces the bit error rate at the decoder without breaking the correlation between adjacent symbols within the source and without significantly affecting the compression ratio. The simulation results show that SPDCAC exhibits an excellent coding performance.

The rest of this paper is arranged as follows: In Section 2, the SPDCAC encoding and decoding details are described. Section 3 introduces the posterior probability calculation method proposed for SPDCAC. The simulation results are presented in Section 4. Section 5 is the summary of this paper.

3. The Calculation of the Posterior Probability

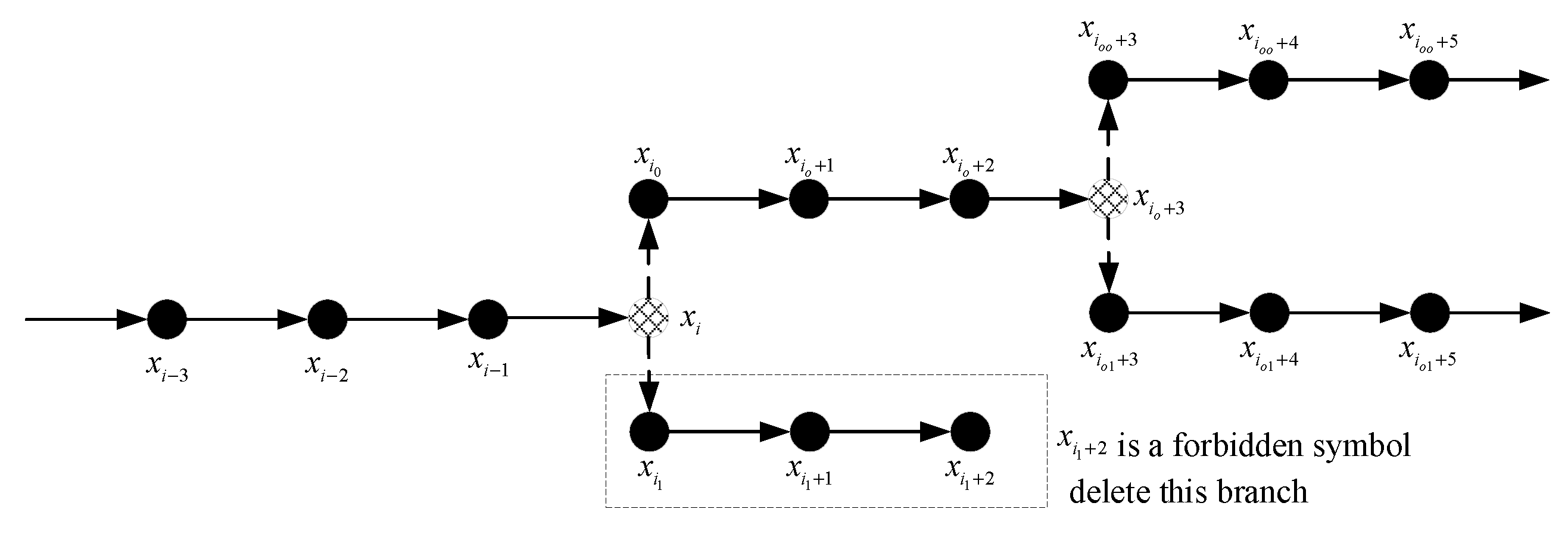

According to the theory of the context-based arithmetic coding, only if the decoder uses the same conditional probability distributions in the context model and follows the same updating rules as that of the encoder, can the source sequence be recovered correctly using the received codeword . Since only part of the symbols in are encoded by an arithmetic encoder based on the context model, the remaining purged symbols are not encoded (they are still used as the context); it is impossible for the decoder to know the correct updating rules for those conditional probability distributions in the context model. Without the correctly updated context model, the decoded sequence from is erroneous and irrelevant to the original source sequence . However, with the help of the side information sequence, for moment i, we can utilize the correlation between and to estimate the source sequence as accurately as possible such that the context model could be updated properly, and the correct symbol could be decoded from . To this end, since we have the side information sequence , we can calculate the cumulative product of posterior probabilities of all estimated sequences, and use the one with the largest cumulative product of posterior probabilities as the estimate of .

In DAC research [

15], Formula (

1) is usually used to calculate the cumulative product of posterior probabilities of each decoding path from moment 1 to

N

where

represents one of the decoded symbol sequences in the decoding tree from moment 1 to

N,

represents the corresponding sequence of side information

Y,

is the SPDCAC encoder output codeword after encoding

, and

is the codeword received up to the moment

i. A virtual binary symmetric channel (BSC) is usually used in the DSC research to describe the correlation between any

and

pair [

24], the transition probability

of the channel measures the correlation between the two sources. A small transition probability represents the strong correlation between the

and

pairs. Since BSC is a memoryless channel, the side information symbol

is considered only related to the corresponding original source symbol

, but not related to

,

,

and

. However, this posterior probability calculation method only considers the correlation between the symbols of two sources at moment

i, but does not consider the situation of sources with memory. In the decoding process of SPDCAC, the correlation between adjacent symbols within the source sequence should also be used as an important part to determine whether the decoding result is correct. The conditional probability in the context model can be added to the posterior probability calculation as a means of using this correlation.

Since the side information sequence

is known during the calculation of the posterior probability, the joint probability

is considered as the prior probability of the side information sequence, and is also known and is a constant.

is the unique codeword received by the decoder; it is fixed for all decoding branches in the decoding tree. Therefore, the probability

is also considered as the prior probability of

during the decoding process. From the perspective of the decoder, before the correct source sequence

is found, the codeword

and the source sequence

are independent because the decoder does not know which path in the decoding tree corresponds to the correct source sequence

. Since the side information sequence

is only correlated with

, the codeword

is actually independent of the side information

at the decoder side until the correct source sequence

is estimated and the context model is updated properly. Which means

. In this way, multiplying

to both sides of (

1), the equality also holds.

The above

can be regarded as an estimate of

. At any moment

i during the decoding process, the rightmost term of Equation (

2) can be rewritten as

and decomposed recursively according to Equation (

3) which expresses the relationships that exist among the decoded sequence

, the side information sequence

, and the codeword sequence

:

As mentioned before that the relationship between

and

is represented by a virtual BSC channel, and

is only related to

, and independent of

,

,

and

, the probability

can be represented as

in (

3). Since the codeword

is independent of

,

can be further reduced to

.

In the encoding process, if the encoder uses

as the context conditions to encode

, then, in the decoding process, the decoder must decode

before they can be used as the conditions to decode

. Obviously,

is related to

, but it is considered that

is not related to the previously decoded symbols

. Similarly, according to the property of the BSC channel,

is not related to

. Therefore,

in (

3) can be simplified to

.

The posterior probability calculation method similar to (

3) has been used in [

21], that is

, which not only calculates the probability of each

relative to the known

, but also calculates the probability of each

appearing under the condition

. This method utilizes the correlation among adjacent symbols in the source sequence to reduce the bit error rate of the decoding result. However, SPDCAC is a DSC scheme that purges the source sequence; those purged symbols are not encoded. The recovery method of the purged symbols should be different from that of other symbols. Therefore, the posterior probability calculation method of SPDCAC in different decoding cases should be considered.

In the actual encoding and decoding processes, the codeword contains the information of the encoded symbols. In other words, when the context condition is known, the codeword must be decoded to a determined symbol . For , if both and are known, then there must be a determined decoding result ; in this case . In the encoding process of SPDCAC, if a symbol is not encoded at moment i, the information of is not included in the codeword . In the decoding process at this moment, cannot provide any information about , and it is necessary to make two alternative decoding attempts at this position. Therefore, at this moment, , there should be . However, if is an encoded symbol, regardless of the previous decoding process; it should be considered that both and are known at moment i, and we have .

In this way, the posterior probability calculation method of SPDCAC can be represented by:

The logarithmic form of (

4) is:

where

This calculation method depends only on the BSC crossover probability and the conditional probability distributions in the context model. It uses the correlation between the source sequence and the side information sequence, and it selectively uses the correlation among source symbols according to the decoding case, to ensure a better decoding BER (bit error rate) performance. It should be noted that DSC schemes based on expanding probability intervals, such as DAC and DJSCAC, cannot employ the proposed method. In these schemes, the moment at which the codeword falls into the overlapping area during decoding is not predetermined, so the timing and number of occurrences of decoding ambiguity differ between decoding paths. It is difficult to fairly compare the cumulative product of posterior probabilities in different paths, since the calculation of

depends on the occurrence of decoding ambiguity. If the DAC decoder used (5) to calculate the posterior probability, the cumulative product of posterior probabilities of a decoding path with many ambiguities would be much smaller than that of a decoding path with few ambiguities. Such a difference between the cumulative products of posterior probabilities is not caused by different correlations between the corresponding decoded sequences and the side information sequence.
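The case distinction described in this section can be sketched in code. The function below is a reading of the prose only: it adds the BSC term at every position and adds the context-model term only at purged positions, since an encoded symbol is determined by the codeword and its context (factor 1, i.e., nothing added in the log domain). It assumes a fixed, symmetric order-1 context model with stay probability `p_stay` strictly between 0 and 1, whereas the actual scheme may update its context model adaptively. All names and parameters are hypothetical.

```python
import math

def purging_aware_log_metric(path, side_info, purged, eps, p_stay, first_context=0):
    """Log-domain path metric with a context term at purged positions only.

    path, side_info : bit lists of equal length
    purged          : list of bools, True where the symbol was purged (not encoded)
    eps             : BSC crossover probability between source and side information
    p_stay          : assumed P(x_i == x_{i-1}) of a symmetric order-1 context model
    first_context   : assumed context for the first symbol
    """
    total = 0.0
    prev = first_context
    for x_i, y_i, is_purged in zip(path, side_info, purged):
        # Correlation with the side information is used at every position.
        total += math.log2(1.0 - eps if x_i == y_i else eps)
        if is_purged:
            # The codeword carries no information about a purged symbol,
            # so the context-model probability enters the metric.
            total += math.log2(p_stay if x_i == prev else 1.0 - p_stay)
        prev = x_i
    return total
```

In this sketch all decoding paths share the same `purged` pattern, so every path accumulates the same number of context terms and the metrics remain comparable. That is the property that schemes with randomly occurring ambiguity lack, as discussed above.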

4. Simulation Results

This section presents simulation results to evaluate the performance of the proposed scheme. Since the SPDCAC encoder encodes the source sequence

X with a code rate less than

, the SPDCAC decoder cannot directly decode the received codeword to restore

X. Therefore, we investigate the encoding performance of SPDCAC and the minimum code rate required of SPDCAC to achieve lossless decoding with the help of a side information sequence that has a strong correlation with

X. As the correlation between the source sequence and the side information sequence weakens, errors in the decoded results gradually increase. The decoding performance of SPDCAC with the assistance of different side information is therefore also examined. Since the proposed scheme uses a context model in the coding process, a randomly generated binary order-1 Markov source sequence of length

N will be used as the source sequence

X. The transition probability matrix of the Markov source is shown in

Table 1, where the value of

is positively correlated with the strength of the correlation between adjacent symbols in the source sequence. All schemes based on arithmetic coding in subsequent experiments will use the order-1 context model in the coding process.
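A test source of this kind can be generated as in the sketch below. Because the transition matrix of Table 1 is not reproduced in this section, the sketch assumes a symmetric chain in which each symbol repeats the previous one with probability `p_stay`; this parameter, the function name, and the seed are hypothetical stand-ins.

```python
import random

def markov_source(n, p_stay, seed=None):
    """Generate n bits from a binary order-1 Markov chain.

    Assumed transition rule (stand-in for Table 1):
    P(x_i == x_{i-1}) = p_stay and P(x_i != x_{i-1}) = 1 - p_stay.
    """
    if n <= 0:
        return []
    rng = random.Random(seed)
    x = [rng.randint(0, 1)]  # first symbol drawn uniformly
    for _ in range(n - 1):
        x.append(x[-1] if rng.random() < p_stay else 1 - x[-1])
    return x

x = markov_source(1024, p_stay=0.9, seed=1)
```

A larger `p_stay` produces longer runs of identical symbols, i.e., a stronger correlation between adjacent symbols that an order-1 context model can exploit.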

The code rate unit of all DSC schemes is bit/symbol (

), which is obtained by dividing the number of bits of the codeword output from the encoder by the number of source symbols. The side information sequence

Y is generated by sending the source sequence

X through a virtual BSC. Every symbol in

Y is transmitted to the decoder losslessly. The correlation between

X and

Y is controlled by the transition probability of BSC (the transition probability of BSC is

), and the conditional entropy

between

X and

Y is used to represent the correlation between them [24]. A smaller

means there is a higher similarity between

X and

Y. The conditional entropy

was set to

in experiments. Many DSC studies use BER as the performance evaluation index of decoding, which is used to indicate the ratio of the number of error bits to the total number of bits in the decoding result. It is also used to show the decoding performance in our experiments. In our experiment, the purging rates of the encoder and the decoder were preset to be the same. The interval expansion factor

of the DSC scheme based on expanding probability intervals was also preset to be the same in the encoder and the decoder. Due to the randomness of the generated source sequence, each result shown in the following figures and tables is the averaged value of

experiments.
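The side information generation and the BER measure described above can be sketched as follows. The function names, the seed handling, and the example sequence are illustrative assumptions; the sketch shows only the virtual BSC and the error count, not the codec itself.

```python
import random

def bsc(x, eps, seed=None):
    """Pass the bit list x through a binary symmetric channel with crossover eps."""
    rng = random.Random(seed)
    return [b ^ 1 if rng.random() < eps else b for b in x]

def bit_error_rate(decoded, original):
    """Ratio of erroneous bits to the total number of bits in the decoded result."""
    if len(decoded) != len(original):
        raise ValueError("sequences must have equal length")
    if not original:
        return 0.0
    return sum(d != o for d, o in zip(decoded, original)) / len(original)

x = [0, 1, 1, 0, 1, 0, 0, 1]
y = bsc(x, eps=0.1, seed=0)      # side information for one trial
ber_of_y = bit_error_rate(y, x)  # disagreement rate between Y and X
```

Averaging `bit_error_rate` over many independently generated sequences, as done for the reported results, reduces the influence of any single random realization.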

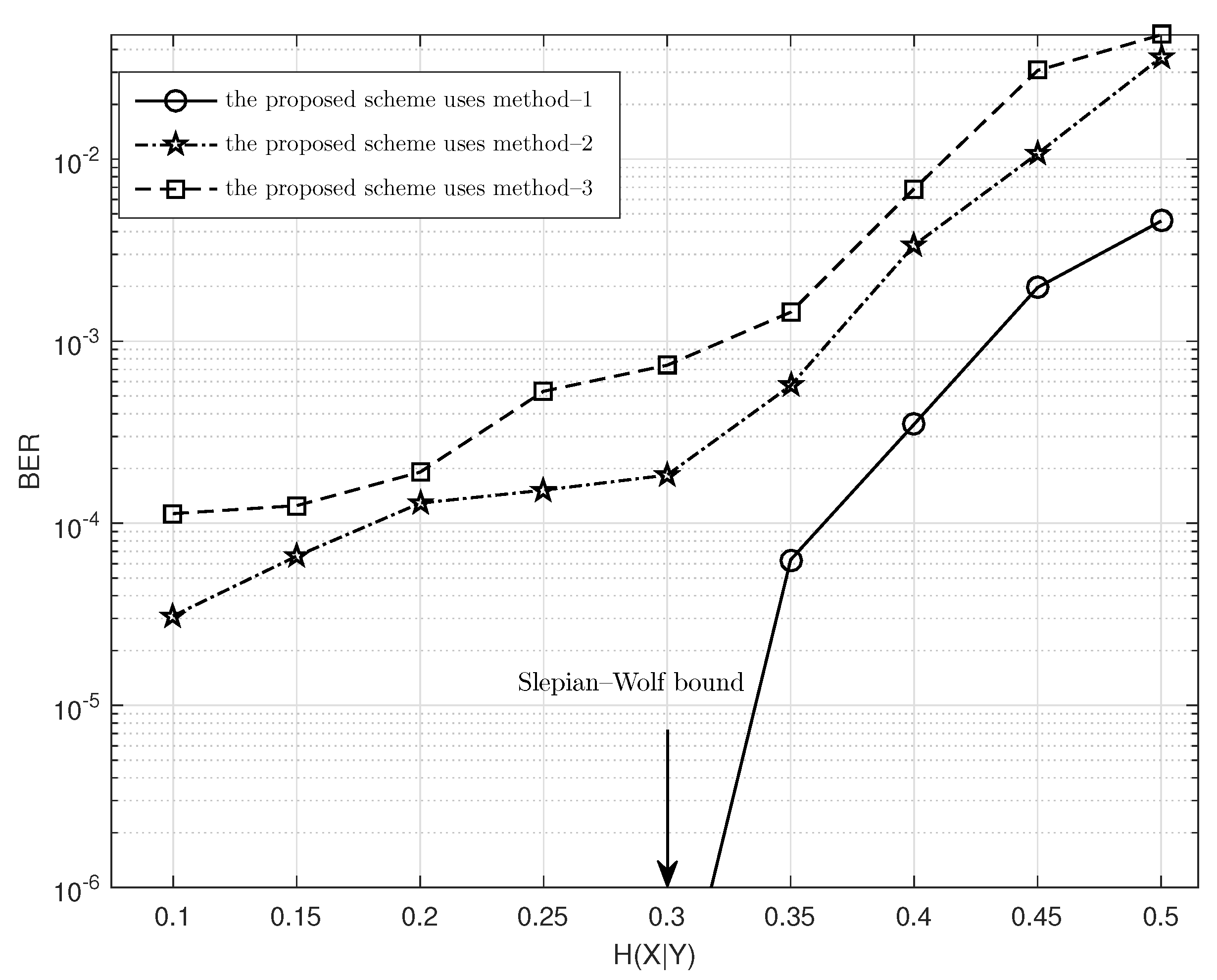

4.1. Performance of the Improved Posterior Probability Calculation Method

The decoding performance of SPDCAC is closely related to the posterior probability calculation method used in decoding.

Figure 5 shows the BER performance of SPDCAC using three different posterior probability calculation methods in the decoding process. After the encoder of SPDCAC encodes a binary Markov source (

) of length

at a rate about 0.3

, the decoder of SPDCAC uses three different posterior probability calculation methods to decode the source sequence under different

(the maximum width of the decoding tree is limited to 256), and then, their respective BER performance results are obtained. The three calculation methods for the posterior probability are: 1. the method proposed in (

5); 2. the method used in [

21], that is

; 3. the method used in DAC [

15]. It can be seen from the figure that the application of the context model for the calculation of the posterior probability during the decoding process has a significant impact on the BER performance of the decoder. Both methods 1 and 2 use the context model in the posterior probability calculation, and their BER performances are significantly better than method 3 which does not employ the context model. Meanwhile, the BER performance of method 1 is far better than that of the other two. Especially when

, method 1 can obtain lossless decoding results, while the other two methods cannot.

The posterior probability calculation in [

21] makes use of the conditional probability

, so it exhibits a better BER performance than the method in [

15]. However, that scheme does not consider the different decoding cases in the decoding process (whether the decoding ambiguity appears). Those cases are taken into account in the proposed algorithm, where the conditional probability

is only calculated at the positions where

are purged. This can expand the gap between the values of the cumulative product of posterior probabilities of different decoding paths. The most likely correct decoding path will quickly accumulate to a greater posterior probability. Therefore, the algorithm proposed in this paper is more effective than the scheme in [

21] in terms of BER performance.

4.2. The Required Minimum Code Rate for Lossless Decoding

For lossless decoding, the required minimum code rate (RMCR) of a DSC scheme can be used to present the coding efficiency of that DSC scheme. In this experiment, the RMCR of SPDCAC was compared with that of the traditional distributed arithmetic coding. However, due to a ‘forbidden symbol’ being added in the SPDCAC codec, we chose DJSCAC to compare with SPDCAC, and the results of arithmetic coding (AC) are provided for reference. We adjusted the value of

to obtain binary Markov sources with different internal correlation strengths. The length of each sequence is 1024. In a DSC scheme based on expanding probability intervals, the probability intervals of the encoding distribution need to be enlarged to obtain additional compression. However, if the probability of the current symbol is already large, the extent to which it can be enlarged is very limited, because the symbol probability cannot exceed 1. Therefore, for DJSCAC to obtain the largest possible compression, its coding probability interval is maximized by choosing the largest factor

, which meets the inequality.

where

p represents the larger probability in the binary conditional distribution used for coding the current symbol. It should be noted that in DJSCAC, the forbidden interval is located at the right end of the probability intervals, adjacent to the interval of symbol ‘1’; its length

is set to 0.01. Similarly, the length of

is set to 0.01 for SPDCAC.

Table 2 shows the RMCR of SPDCAC and DJSCAC coding different sources when

. From the table, we can find that the code rate of the arithmetic coding when encoding a source with a larger

will be smaller than that when coding a source with a smaller

. This shows that arithmetic coding can effectively utilize the internal correlation of the source to reduce the code rate. All DSC schemes based on arithmetic coding share this property: as the internal correlation strength of the source increases, lossless decoding can be realized at a smaller code rate. The RMCR of DJSCAC is smaller than that of SPDCAC only when coding a source with a weaker internal correlation (

). However, in other cases, the RMCR of SPDCAC is significantly smaller than that of DJSCAC. In particular, when coding a source with

, SPDCAC can save

of the code rate relative to AC, while DJSCAC can only save

of the code rate relative to AC. This shows that the RMCR of SPDCAC is significantly better than that of DJSCAC when coding a source with a stronger internal correlation.

4.3. Decoding Performance

To evaluate the decoding performance of SPDCAC, the proposed scheme is compared with two other DSC schemes: DJSCAC and a DSC scheme based on an irregular LDPC code, which uses the approach proposed in [13]. After the DSC schemes encode the same source sequence at the same code rate, the same side information sequence is used to assist their decoding. Both DJSCAC and SPDCAC use the same forbidden interval

. DJSCAC controls the code rate by adjusting the maximum admissible range of

in (

7). During the coding process for each symbol,

is firstly calculated by (

7), if its value exceeds a predetermined threshold, set

to this threshold; otherwise,

remains unchanged. In this experiment, the maximum width of the decoding tree in the MAP algorithm used by DJSCAC and SPDCAC is limited to 256.

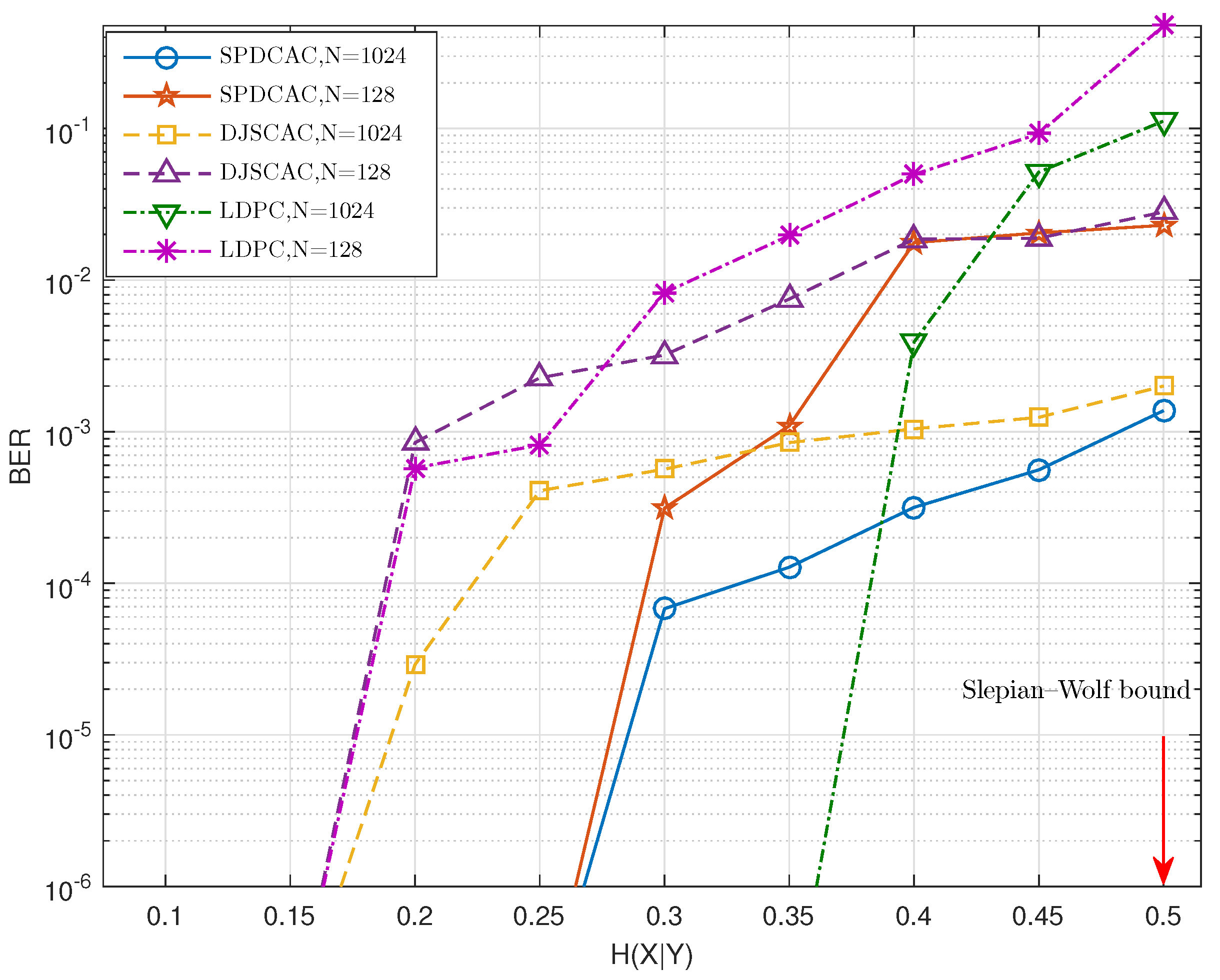

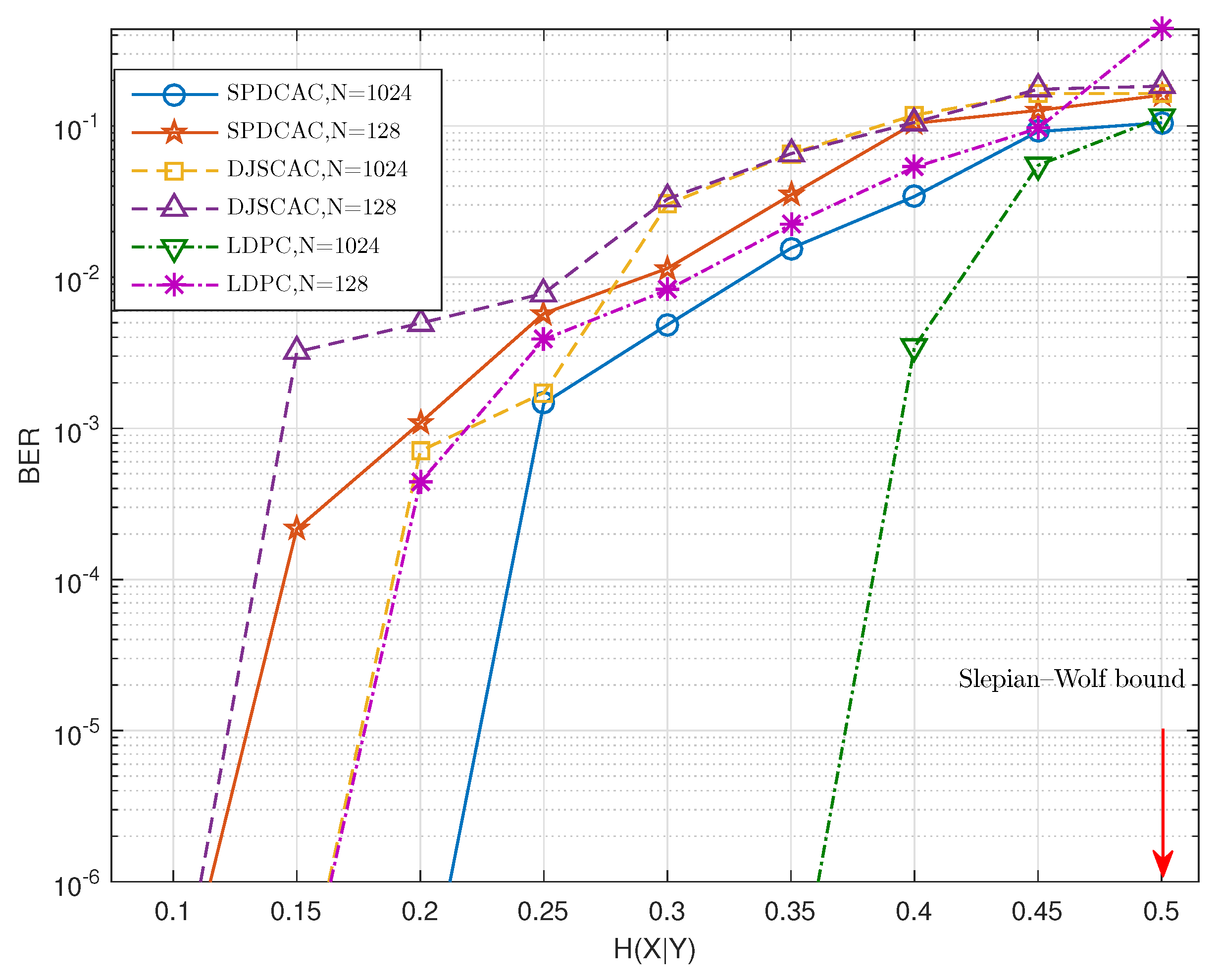

Figure 6 and

Figure 7 show the decoding performance of SPDCAC for different sources with different

N under a fixed code rate

b/s. If we compare the results in

Figure 6 with the results in

Figure 7, we can find that SPDCAC can more easily achieve a better BER performance when decoding a source with a stronger internal correlation strength. This is because the posterior probability calculation method proposed in this paper can efficiently make use of the correlation among adjacent symbols in the source sequence to improve the decoding performance. Compared with DJSCAC, SPDCAC has the same or better BER performance under all

, which reflects that SPDCAC can better utilize the internal correlation of the source to improve the decoding performance than DJSCAC.

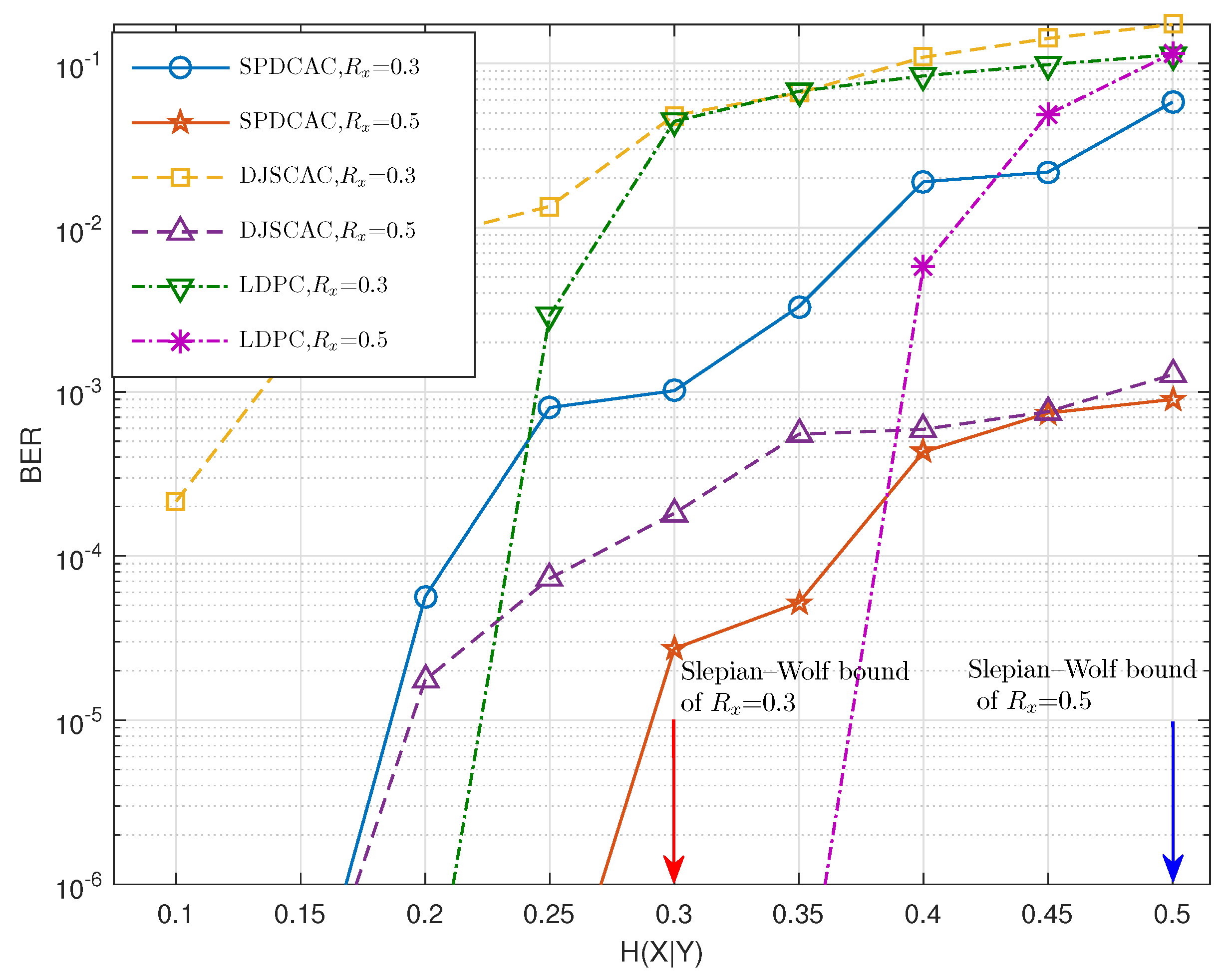

Figure 8 shows the decoding performance of different schemes when coding the same source at different code rates. It can be seen that at some code rates, SPDCAC exhibits a better BER performance than DJSCAC. Compared with the DSC scheme based on the LDPC code, SPDCAC exhibits a better BER performance when

is larger, and this advantage is more significant when the code rate is lower.

The decoding performance of a LDPC code-based DSC scheme is related to the number of parity bits (or the block size). Therefore, when the length of the source sequence is large, and is small, the decoder can easily become lossless when decoding results. However, the BER performance of the DSC scheme based on LDPC codes will deteriorate rapidly as increases. This shows that it is difficult for an LDPC code-based DSC scheme to ensure a better decoding performance when the correlation strength between X and Y is weak. Although the DSC scheme based on the LDPC code will ensure a better decoding performance when coding the source with weaker internal correlation strength, in the case of coding a source with strong internal correlation strength, the BER performance of the DSC scheme based on the LDPC code can only be better than that of SPDCAC when N is large and is small. However, when the internal correlation strength of the source is strong and N is small, the decoding performance of the DSC scheme based on the LDPC code is completely inferior to that of SPDCAC.

4.4. Coding Complexity

The decoding complexity of DAC increases with the maximum width M of the decoding tree, and the encoding complexity of DAC is linear, like that of classical AC [9]. In the SPDCAC encoding process,

of the total number of the source symbols are purged from the source sequence, and the remaining source symbols are encoded using a conditional AC. Therefore, the encoding complexity of SPDCAC is proportional to

, which is slightly lower than that of DAC and DJSCAC. The decoding tree construction method of SPDCAC is the same as that of DAC, so the computational complexity of the decoding algorithm is the same as that of DAC and DJSCAC, which is proportional to

. However, in the actual decoding process, decoding ambiguity will simultaneously occur in all decoding paths in the proposed scheme, while the decoding ambiguity of each decoding path in the DAC and DJSCAC appears randomly. Therefore, the computational complexity of the decoding algorithm in the proposed scheme will be slightly higher than those of the DAC and DJSCAC.
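The role of the maximum tree width can be illustrated with a generic width-limited tree search. The sketch below is not the decoder of the paper: it omits the arithmetic decoder entirely and takes the per-symbol log-metric increments as given, so that only the branch-and-prune mechanism is shown. The function names and the example increments are hypothetical.

```python
import heapq

def extend(paths, branch_metrics):
    """Branch every surviving path into one child per candidate symbol.

    paths          : list of (log_metric, symbols) tuples
    branch_metrics : dict {symbol: log-metric increment} for this position
    """
    return [(m + inc, syms + [s])
            for m, syms in paths
            for s, inc in branch_metrics.items()]

def prune(paths, max_width):
    """Keep the max_width paths with the largest log-metric, best first."""
    return heapq.nlargest(max_width, paths, key=lambda p: p[0])

# Three ambiguous positions, each with two candidate symbols.
increments = [{0: -0.1, 1: -3.0}, {0: -2.0, 1: -0.2}, {0: -0.1, 1: -3.0}]
paths = [(0.0, [])]
for inc in increments:
    paths = prune(extend(paths, inc), max_width=2)
best_metric, best_symbols = paths[0]
```

At every ambiguous position the number of candidate paths doubles before pruning, so the work per position grows with `max_width`. When ambiguity occurs in all paths at the same positions, as in the proposed scheme, every surviving path is branched at those positions.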

Table 3 shows the average encoding and decoding times (seconds) for SPDCAC and DJSCAC under different lengths and different maximum decoding tree widths (code rate =

,

,

). SPDCAC and DJSCAC were run on a personal computer equipped with an Intel Core i7-6700HQ 2.60 GHz processor.

From Table 3, it can be seen that the actual decoding times of SPDCAC and DJSCAC grow as the maximum width of the decoding tree and the sequence length increase. The actual decoding time of SPDCAC is about

times that of DJSCAC, which means that the decoding complexities of SPDCAC and DJSCAC are of the same order of magnitude. Although the actual decoding time of SPDCAC is longer than that of DJSCAC, its actual encoding time is shorter, because SPDCAC reduces the number of source symbols that need to be encoded by purging the source sequence. This shows that SPDCAC meets the low-energy-consumption requirement that DSC places on the encoder.