Abstract

Measures of information transfer corresponding to non-additive entropies have been studied intensively in previous decades. Most of this work concerns entropies belonging to the Sharma–Mittal class, such as the Rényi, the Tsallis, the Landsberg–Vedral and the Gaussian entropies. All of these considerations follow the same approach, mimicking some of the various and mutually equivalent definitions of Shannon information measures: the information transfer is quantified by an appropriately defined measure of mutual information, while the maximal information transfer is considered as a generalized channel capacity. However, all of the previous approaches fail to satisfy at least one of the ineluctable properties which a measure of (maximal) information transfer should satisfy, leading to counterintuitive conclusions and predicting nonphysical behavior even in the case of very simple communication channels. This paper fills the gap by proposing two-parameter measures named the α-q-mutual information and the α-q-capacity. In addition to the standard Shannon approaches, special cases of these measures include the α-mutual information and the α-capacity, which are well established in the information theory literature as measures of additive Rényi information transfer, while the cases of the Tsallis, the Landsberg–Vedral and the Gaussian entropies can also be accessed by special choices of the parameters α and q. It is shown that, unlike the previous definitions, the α-q-mutual information and the α-q-capacity satisfy a set of properties, stated as axioms, by which they reduce to zero in the case of totally destructive channels and to the (maximal) input Sharma–Mittal entropy in the case of perfect transmission, which is consistent with the maximum likelihood detection error. In addition, they are non-negative and less than or equal to the input and the output Sharma–Mittal entropies, in general. Thus, unlike the previous approaches, the proposed (maximal) information transfer measures do not manifest nonphysical behaviors such as sub-capacitance or super-capacitance, which could qualify them as appropriate measures of the Sharma–Mittal information transfer.

1. Introduction

In the past, extensive work has been devoted to defining information measures which generalize the Shannon entropy [1], such as the one-parameter Rényi entropy [2], the Tsallis entropy [3], the Landsberg–Vedral entropy [4], the Gaussian entropy [5], and the two-parameter Sharma–Mittal entropy [5,6], which reduces to the former ones for special choices of the parameters. The Sharma–Mittal entropy can be founded axiomatically as the unique q-additive measure [7,8] which satisfies the generalized Shannon–Khinchin axioms [9,10], and it has been widely explored in different research fields, from statistics [11] and thermodynamics [12,13] to quantum mechanics [14,15], machine learning [16,17] and cosmology [18,19]. The Sharma–Mittal entropy has also been recognized in the field of information theory, where the measures of conditional Sharma–Mittal entropy [20], Sharma–Mittal divergences [21] and Sharma–Mittal entropy rate [22] have been established and analyzed.

Considerable research has also been done in the field of communication theory in order to analyze information transmission in the presence of noise when, instead of Shannon’s entropy, the information is quantified with (instances of) Sharma–Mittal entropy. In general, the information transfer is quantified by an appropriately defined measure of mutual information, while the maximal information transfer is considered as a generalized channel capacity. Thus, after Rényi’s proposal for the additive generalization of Shannon entropy [2], several different definitions for Rényi information transfer were proposed by Sibson [23], Arimoto [24], Augustin [25], Csiszár [26], Lapidoth and Pfister [27] and Tomamichel and Hayashi [28]. These measures have been explored thoroughly and their operational characterization in coding theory, hypothesis testing, cryptography and quantum information theory has been established, which qualifies them as reasonable measures of Rényi information transfer [29]. Similar attempts have also been made in the case of non-additive entropies. Thus, starting from the work of Daróczy [30], who introduced a measure for generalized information transfer related to the Tsallis entropy, several attempts followed for the measures which correspond to non-additive particular instances of the Sharma–Mittal entropy, so the definitions for the Rényi information transfer were considered in [24,31], for the Tsallis information transfer in [32] and for the Landsberg–Vedral information transfer in [4,33].

In this paper we provide a general treatment of the Sharma–Mittal entropy transfer and a detailed analysis of existing measures, showing that all of the definitions related to non-additive entropies fail to satisfy at least one of the ineluctable properties common to the Shannon case, which we state as axioms: the information transfer has to be non-negative, no greater than the input and the output uncertainty, equal to the input uncertainty in the case of perfect transmission, and equal to zero in the case of a totally destructive channel. Breaking some of these axioms implies unexpected and counterintuitive conclusions about the channels, such as achieving super-capacitance or sub-capacitance [4], which could be treated as nonphysical behavior. As an alternative, we propose the α-q-mutual information as a measure of Sharma–Mittal information transfer, whose maximum is the α-q-capacity. The α-q-mutual information generalizes the α-mutual information by Arimoto [24] and is defined as a q-difference between the input Sharma–Mittal entropy and the appropriately defined conditional Sharma–Mittal entropy given the output, while the α-q-capacity generalizes Arimoto’s α-capacity, which is recovered for q = 1. In addition, several other instances can be obtained by specifying the values of the parameters α and q, which include the information transfer measures for the Tsallis, the Landsberg–Vedral and the Shannon entropy, as well as the case of the Gaussian entropy, which was not considered before in the context of information transmission.

The paper is organized as follows. The basic properties and special instances of the Sharma–Mittal entropy are listed in Section 2. Section 3 reviews the basics of communication theory, introduces the basic communication channels and establishes the set of axioms which information transfer measures should satisfy. The information transfer measures which are defined by Arimoto are introduced in Section 4, and the alternative definitions for Rényi information transfer measures are discussed in Section 5. Finally, the α-q-mutual information and the α-q-capacities are proposed and their properties analyzed in Section 6, while the previously proposed measures of Sharma–Mittal entropy transfer are discussed in Section 7.

2. Sharma–Mittal Entropy

Let the sets of positive and nonnegative real numbers be denoted with and , respectively, and let the mapping be defined in

so that its inverse is given in

The mapping ηq and its inverse are increasing continuous (hence invertible) functions such that ηq(0) = 0. The q-logarithm is defined in

and its inverse, the q-exponential, is defined in

for . Using ηq, we can define the pseudo-addition operation [7,8]

and its inverse operation, the pseudo-subtraction

The can be rewritten in terms of the generalized logarithm by settings and so that
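The mapping, the q-logarithm and the pseudo-operations introduced above can be illustrated numerically. The following is a minimal sketch, not the authors' implementation. Since the defining equations are not reproduced here, it assumes base-2 logarithms and the form ηq(x) = (2^((1−q)x) − 1)/((1−q) ln 2) for q ≠ 1, with the identity for q = 1; the function names `eta`, `eta_inv`, `log_q`, `exp_q`, `q_add` and `q_sub` are hypothetical. Under this assumption the pseudo-addition has the closed form x + y + (1−q) ln 2 · xy.

```python
import math

LN2 = math.log(2.0)


def eta(x: float, q: float) -> float:
    """Assumed form of the mapping eta_q (identity for q = 1)."""
    if q == 1.0:
        return x
    return (2.0 ** ((1.0 - q) * x) - 1.0) / ((1.0 - q) * LN2)


def eta_inv(y: float, q: float) -> float:
    """Inverse of eta_q; requires 1 + (1 - q) * ln(2) * y > 0."""
    if q == 1.0:
        return y
    return math.log2(1.0 + (1.0 - q) * LN2 * y) / (1.0 - q)


def log_q(u: float, q: float) -> float:
    """q-logarithm, taken here as eta_q applied to log2(u)."""
    return eta(math.log2(u), q)


def exp_q(y: float, q: float) -> float:
    """q-exponential, the inverse of log_q."""
    return 2.0 ** eta_inv(y, q)


def q_add(x: float, y: float, q: float) -> float:
    """Pseudo-addition: map back with eta_inv, add, map forward with eta."""
    return eta(eta_inv(x, q) + eta_inv(y, q), q)


def q_sub(x: float, y: float, q: float) -> float:
    """Pseudo-subtraction, the inverse operation of q_add."""
    return eta(eta_inv(x, q) - eta_inv(y, q), q)
```

With a different normalization of ηq the constant in the cross term changes, but the pattern (map back with the inverse, add or subtract, map forward) stays the same.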

Let the set of all n-dimensional distributions be denoted with

Let the function satisfy the following generalized Shannon–Khinchin axioms, for all , .

- GSK1

- is continuous in ;

- GSK2

- takes its largest value for the uniform distribution, , i.e., , for any ;

- GSK3

- is expandable: for all ;

- GSK4

- Let , , , such that , and , where and are some fixed parameters. Then,where f is an invertible continuous function and is the -escort distribution of distribution defined in

- GSK5

- .

As shown in [9], the unique function , which satisfies [GSK1]-[GSK5], is Sharma–Mittal entropy [6].

In the following paragraphs we will assume that X and Y are discrete jointly distributed random variables taking values from sample spaces and , and distributed in accordance with and , respectively. In addition, the joint distribution of X and Y will be denoted by and the conditional distribution of X given Y will be denoted by , provided that . We will identify the entropy of a random variable X with the entropy of its distribution, and the Sharma–Mittal entropy will be denoted by .

Thus, for a random variable X, the Sharma–Mittal entropy can be expressed as

and it can equivalently be expressed as the ηq transformation of Rényi entropy as in

The Sharma–Mittal entropy is a continuous function of the parameters α and q, and the sums go over the support of the distribution of X. Thus, in the case of q = 1, α ≠ 1, the Sharma–Mittal entropy reduces to the Rényi entropy of order α [2]

which further reduces to Shannon entropy for ,34]

while in the case of α = 1, it reduces to the Gaussian entropy [5]

In addition, the Tsallis entropy [3] is obtained for q = α,

while in the case of q = 2 − α it reduces to the Landsberg–Vedral entropy [4]
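The reductions listed above can be checked numerically by writing the Sharma–Mittal entropy as the ηq transformation of the Rényi entropy. This is a sketch under stated assumptions rather than the paper's own code: it uses base-2 logarithms and the assumed form ηq(x) = (2^((1−q)x) − 1)/((1−q) ln 2), and all function names are hypothetical.

```python
import math
from typing import Sequence

LN2 = math.log(2.0)


def eta(x: float, q: float) -> float:
    """Assumed mapping eta_q: identity for q = 1."""
    if q == 1.0:
        return x
    return (2.0 ** ((1.0 - q) * x) - 1.0) / ((1.0 - q) * LN2)


def renyi(p: Sequence[float], alpha: float) -> float:
    """Rényi entropy in bits; alpha = 1 is handled as the Shannon entropy."""
    support = [pi for pi in p if pi > 0.0]  # sums go over the support
    if alpha == 1.0:
        return -sum(pi * math.log2(pi) for pi in support)
    return math.log2(sum(pi ** alpha for pi in support)) / (1.0 - alpha)


def sharma_mittal(p: Sequence[float], alpha: float, q: float) -> float:
    """Sharma–Mittal entropy as eta_q applied to the Rényi entropy."""
    return eta(renyi(p, alpha), q)


def tsallis(p: Sequence[float], q: float) -> float:
    """Special case q = alpha."""
    return sharma_mittal(p, q, q)


def landsberg_vedral(p: Sequence[float], alpha: float) -> float:
    """Special case q = 2 - alpha."""
    return sharma_mittal(p, alpha, 2.0 - alpha)


def gaussian(p: Sequence[float], q: float) -> float:
    """Special case alpha = 1."""
    return sharma_mittal(p, 1.0, q)
```

For example, `sharma_mittal(p, alpha, 1.0)` returns the Rényi entropy and `sharma_mittal(p, 1.0, 1.0)` the Shannon entropy, both in bits.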

3. Sharma–Mittal Information Transfer Axioms

One of the main goals of information and communication theories is characterization and analysis of the information transfer between sender X and receiver Y, which communicate through a channel. The sender and receiver are described by probability distributions and while the communication channel with the input X and the output Y is described by the transition matrix :

We assume that maximum likelihood detection is performed at the receiver, which is defined by the mapping as follows:

assuming that the inequality in (19) is uniquely satisfied. Thus, if an input symbol is sent and an output symbol is received, the sent symbol is detected if the detector maps the received symbol to it, and a detection error is made otherwise. We define the error function as in

the detection error if a symbol is sent

as well as the average detection error

Totally destructive channel: A channel is said to be totally destructive if

i.e., if the sender X and receiver Y are described by independent random variables,

where the relationship of independence is denoted in . In this case, for all and the probability of error is ; for all , as well as the average probability of error , which means that a correct maximum likelihood detection is not possible.

Perfect communication channel: A channel is said to be perfect if for every ,

and for every

Note that in this case can still take a zero value for some and that for any non-zero . Thus, the error probability is equal to zero ; for all , as well as the average probability of error , which means that perfect detection is possible by means of a maximum likelihood detector.
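The behavior of the maximum likelihood detector on the two extreme channels can be sketched in a few lines. The code below is an illustration under stated assumptions, not the paper's definition: the channel is a row-stochastic matrix `w[x][y]` of transition probabilities, the detector picks the input with the strictly largest transition probability for each output, and an output with no unique maximizer is counted as a detection error (an assumed convention, chosen to match the statement that the error is one for a totally destructive channel). The function names are hypothetical.

```python
from typing import List, Optional, Sequence


def ml_detector(w: Sequence[Sequence[float]]) -> List[Optional[int]]:
    """For each output y, return the unique input maximizing w[x][y], or None on a tie."""
    decisions: List[Optional[int]] = []
    for y in range(len(w[0])):
        column = [w[x][y] for x in range(len(w))]
        best = max(column)
        winners = [x for x, value in enumerate(column) if value == best]
        decisions.append(winners[0] if len(winners) == 1 else None)
    return decisions


def average_error(p_x: Sequence[float], w: Sequence[Sequence[float]]) -> float:
    """Average detection error: sum over x of P(x) * P(detector output differs from x | x)."""
    decisions = ml_detector(w)
    total = 0.0
    for x, px in enumerate(p_x):
        error_given_x = sum(w[x][y] for y in range(len(w[0])) if decisions[y] != x)
        total += px * error_given_x
    return total
```

Ties are detected with exact floating-point equality, which is adequate for hand-written matrices such as the ones in this section but would need a tolerance for computed ones.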

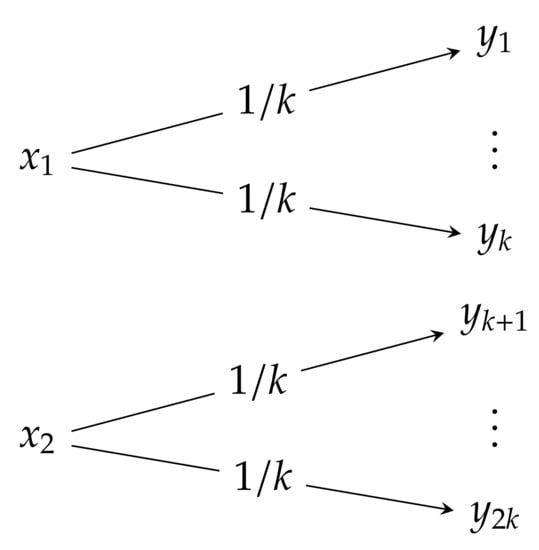

Noisy channel with non-overlapping outputs: A simple example of a perfect transmission channel is the noisy channel with non-overlapping outputs (NOC), which is schematically described in Figure 1. It is a 2-input -output channel () defined by the transition matrix:

(in this and in the following matrices, the symbol “⋯” stands for the k-fold repetition). In the case of and , the channel reduces to the noiseless channel. Although the channel is noisy, the input can always be recovered from the output (if is received and , the input symbol is sent, otherwise is sent). Thus, it is expected that the information which is passed through the channel is equal to the information that can be generated by the input. Note that for a channel input distributed in accordance with

the joint probability distribution can be expressed as in:

and the output distribution , which can be obtained by the summations over columns, is

Figure 1.

Noisy channel with non-overlapping outputs.

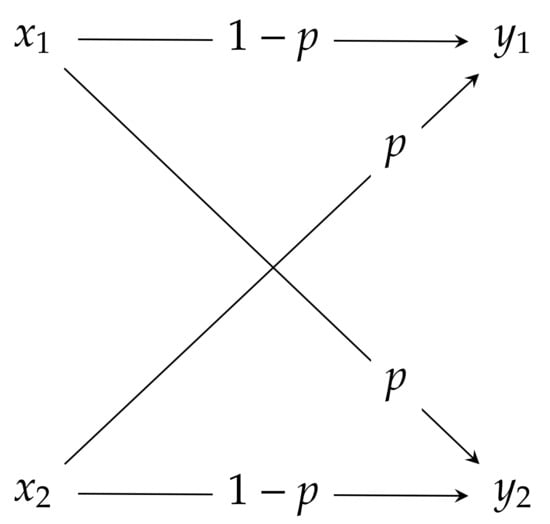

Binary symmetric channels: The binary symmetric channel (BSC) is a two-input, two-output channel described by the transition matrix

$$\begin{bmatrix} 1-p & p \\ p & 1-p \end{bmatrix}$$

which is schematically described in Figure 2. Note that for p = 1/2 the BSC reduces to a totally destructive channel, while in the case of p = 0 it reduces to a perfect channel.

Figure 2.

Binary symmetric channel.

Sharma–Mittal Information Transfer Axioms

In this paper, we search for information theoretical measures of information transfer between sender X and receiver Y, which communicate through a channel, if the information is measured with Sharma–Mittal entropy. Thus, we are interested in the information transfer measure which is called the α-q-mutual information, and in its maximum,

which is called the α-q-capacity and which requires the following set of axioms to be satisfied.

- (A1)

- The channel cannot convey negative information, i.e.,

- (A2)

- The information transfer is zero in the case of a totally destructive channel, i.e.,which is consistent with the conclusion that the average probability of error is one, , in the case of a totally destructive channel.

- (A3)

- In the case of perfect transmission, the information transfer is equal to the input information, i.e.,which is consistent with the conclusion that the average probability of error is zero, , in the case of a perfect transmission channel, so that all the information from the input is conveyed.

- (A4)

- The channel cannot transfer more information than it is possible to be sent, i.e.,which means that a channel cannot add additional information.

- (A5)

- The channel cannot transfer more information than it is possible to be received, i.e.,which means that a channel cannot add additional information.

- (A6)

- Consistency with the Shannon case:

Thus, the axioms (A2) and (A3) ensure that the information measures are consistent with the maximum likelihood detection (19)–(21). On the other hand, the axioms jointly prevent a situation in which a physical system conveys information in spite of going through a completely destructive channel, or in which a negative information transfer is observed, indicating that the channel adds or removes information by itself, which could be treated as nonphysical behavior without an intuitive explanation. Finally, the property (A6) ensures that the information transfer measures can be considered as generalizations of the corresponding Shannon measures. For these reasons, we assume that the satisfaction of the properties (A1)–(A6) is mandatory for any reasonable definition of Sharma–Mittal information transfer measures.

4. The α-Mutual Information and the α-Capacity

One of the first proposals for the Rényi mutual information goes back to Arimoto [24], who considered the following definition of mutual information:

where the escort distribution is defined as in (10), and he also invented an iterative algorithm for the computation of the α-capacity [35], which is defined from the α-mutual information:

Notably, Arimoto’s mutual information can equivalently be represented using the conditional Rényi entropy

as in

which can be interpreted as the input uncertainty reduction after the output symbols are received and, in the case of α → 1, the previous definition reduces to the Shannon case. In addition, this measure is directly related to the famous Gallager exponent

which has been widely used to establish the upper bound of error probability in channel coded communication systems [36] via the relationship [29]

In addition, in the case of α → 1, it reduces to

where

stands for Shannon’s mutual information [37].
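The representation of Arimoto's mutual information as a difference between the Rényi entropy and a conditional Rényi entropy can be sketched numerically. The code below is illustrative only and rests on an assumption: the conditional entropy is taken in the form usually attributed to Arimoto, (α/(1−α)) log2 Σ_y (Σ_x P(x,y)^α)^(1/α), because the corresponding equation is not reproduced here. The function names and the row-stochastic channel matrix `w[x][y]` are hypothetical conventions.

```python
import math
from typing import Sequence


def renyi(p: Sequence[float], alpha: float) -> float:
    """Rényi entropy of order alpha (alpha > 0, alpha != 1), in bits."""
    return math.log2(sum(pi ** alpha for pi in p if pi > 0.0)) / (1.0 - alpha)


def arimoto_conditional_renyi(
    p_x: Sequence[float], w: Sequence[Sequence[float]], alpha: float
) -> float:
    """Assumed Arimoto form of the conditional Rényi entropy of X given Y."""
    total = 0.0
    for y in range(len(w[0])):
        # Inner sum over the joint probabilities P(x, y) = P(x) * w[x][y].
        inner = sum((p_x[x] * w[x][y]) ** alpha for x in range(len(p_x)))
        total += inner ** (1.0 / alpha)
    return alpha / (1.0 - alpha) * math.log2(total)


def arimoto_mi(
    p_x: Sequence[float], w: Sequence[Sequence[float]], alpha: float
) -> float:
    """Input uncertainty minus the uncertainty remaining once the output is known."""
    return renyi(p_x, alpha) - arimoto_conditional_renyi(p_x, w, alpha)
```

Under this assumed form, a channel with identical rows gives a value of zero and the identity channel gives the Rényi entropy of the input, which are the two boundary behaviors required by the axioms of Section 3.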

The α-mutual information and the α-capacity satisfy the axioms (A1)–(A6) for and , as stated by the following theorem, which further justifies their usage as the measures of (maximal) information transfer.

Theorem 1.

The α-mutual information and the α-capacity satisfy the following set of properties:

- (A1)

- The channel cannot convey negative information, i.e.,

- (A2)

- The (maximal) information transfer is zero in the case of a totally destructive channel, i.e.,

- (A3)

- In the case of perfect transmission, the (maximal) information transfer is equal to the (maximal) input information, i.e.,

- (A4)

- The channel cannot transfer more information than it is possible to be sent, i.e.,

- (A5)

- The channel cannot transfer more information than it is possible to be received, i.e.,

- (A6)

- Consistency with the Shannon case:

Proof.

As shown in [38], , and the nonnegativity property (A1) follows from the definition of Arimoto’s mutual information (42). In addition, if , then so that the definition (61) implies the property (A2). Furthermore, in the case of a perfect transmission channel, the mutual information (61) can be represented in

and since

we obtain , which proves the property (A3). Moreover, as shown in [38], Arimoto’s conditional entropy is positive and satisfies the weak chain rule , so that the properties (A4) and (A5) follow from the definition of Arimoto’s mutual information (42). Finally, the property (A6) follows directly from the equation (45) and can be proved using L’Hôpital’s rule, which completes the proof of the theorem. □

5. Alternative Definitions of the α-Mutual Information and the α-Channel Capacity

Since Rényi’s proposal, there have been several lines of research to find an appropriate definition and characterization of information transfer measures related to Rényi entropy, which are established by the substitution of the Rényi divergence measure

instead of the Kullback–Leibler one,

in some of the various definitions which are equivalent in the case of Shannon information measures (46) [29]:

where stands for the Shannon conditional entropy,

All of these measures are consistent with the Shannon case in view of the property (A6), but their direct usage as measures of Rényi information transfer leads to a breaking of some of the properties (A1)–(A5), which justifies the usage of Arimoto’s measures from the previous section as appropriate ones in the context of this research. In the following subsections, we review the alternative definitions.

5.1. Information Transfer Measures by Sibson

Alternative approaches based on Rényi divergence were proposed by Sibson [23] and considered later by several authors in the context of quantum secure communications [39,40,41,42,43,44], who introduced

which can be represented as in [26]

and, in the discrete setting, can be related to the Gallager exponent as in [29]:

which differs from Arimoto’s definition (61) since in this case the escort distribution does not participate in the error exponent, but an ordinary one does. However, in the case of a perfect channel for which , the conditional distribution for and zero otherwise, so Sibson’s measure (60) reduces to the Rényi entropy of order 1/α rather than of order α, thus breaking the axiom (A3). This disadvantage can be overcome by the reparametrization α ↔ 1/α, so that the measure of order 1/α is used as a measure of Rényi information transfer, and the properties of the resulting measure can be considered in a manner similar to the case of Arimoto.
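The behavior of Sibson's measure on a perfect channel can be reproduced with a short numerical check. This sketch assumes the discrete form commonly used for Sibson's measure, (α/(α−1)) log2 Σ_y (Σ_x P(x) P(y|x)^α)^(1/α), since the defining equation is not reproduced here; the function names are hypothetical.

```python
import math
from typing import Sequence


def renyi(p: Sequence[float], alpha: float) -> float:
    """Rényi entropy of order alpha (alpha > 0, alpha != 1), in bits."""
    return math.log2(sum(pi ** alpha for pi in p if pi > 0.0)) / (1.0 - alpha)


def sibson_mi(
    p_x: Sequence[float], w: Sequence[Sequence[float]], alpha: float
) -> float:
    """Assumed discrete form of Sibson's measure; w[x][y] is P(y | x)."""
    total = 0.0
    for y in range(len(w[0])):
        # The ordinary input distribution (not the escort one) weights the sum.
        inner = sum(p_x[x] * w[x][y] ** alpha for x in range(len(p_x)))
        total += inner ** (1.0 / alpha)
    return alpha / (alpha - 1.0) * math.log2(total)


identity = [[1.0, 0.0], [0.0, 1.0]]
p_in = [0.7, 0.3]
value = sibson_mi(p_in, identity, 2.0)
```

For the non-uniform input above, `value` agrees with `renyi(p_in, 0.5)` and differs from `renyi(p_in, 2.0)`, which is the order mismatch that the reparametrization is meant to remove.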

5.2. Information Transfer Measures by Augustin and Csiszar

An alternative definition of Rényi mutual information was also presented by Augustin [25], and later Csiszar [26], who defined

However, in the case of perfect transmission, for which , the measure reduces to Shannon entropy

which breaks the axiom (A3).

5.3. Information Transfer Measures by Lapidoth, Pfister, Tomamichel and Hayashi

A similar obstacle to the case of the Augustin–Csiszar measure can be observed in the case of mutual information which was considered by Lapidoth and Pfister [27] and Tomamichel and Hayashi [28], who proposed

As shown in [27] (Lemma 11), if , then

so the axiom (A3) is broken in this case, as well.

Remark 1.

Despite the difference between the definitions of information transfer, in the discrete setting, the alternative definitions discussed above reach the same maximum over the set of input probability distributions, ,26,29,45].

5.4. Information Transfer Measures by Chapeau-Blondeau, Delahaies, Rousseau, Tridenski, Zamir, Ingber and Harremoes

Chapeau-Blondeau, Delahaies and Rousseau [31], and independently Tridenski, Zamir and Ingber [46] and Harremoes [47], defined the Rényi mutual information using the Rényi divergence (55) as

for and , while in the case of it reduces to Shannon mutual information. However, the original definition can correspond only to a Rényi entropy of order since in the case of it reduces to (see also [47]), which can be overcome by the reparametrization , similar to the case of Sibson’s measure. This measure has been discussed in the past with various operational characterizations, and could also be considered as a measure of information transfer, although the satisfaction of all of the axioms (A1)–(A6) is not self-evident for general channels.

5.5. Information Transfer Measures by Jizba, Kleinert and Shefaat

Finally, we will mention the definition by Jizba, Kleinert and Shefaat [48],

which is defined in the same manner as in Arimoto’s case (42), but with another choice of conditional Rényi entropy

which arises from the generalized Shannon–Khinchin axiom [GSK4] if the pseudo-additivity in the equation (9) is restricted to an ordinary addition, in which case the GSK axioms uniquely determine Rényi entropy [49]. However, despite its wide applicability in the modeling of causality and financial time series, this mutual information can take negative values, which breaks the axiom (A1), assumed to be mandatory in this paper. For further discussion of the physical meaning of negative mutual information in the domain of financial time series analysis, the reader is referred to [48].

6. The α-q Mutual Information and the α-q-Capacity

In the past, several attempts have been made to define an appropriate channel capacity measure which corresponds to instances of the Sharma–Mittal entropy class. All of them follow a similar recipe by which the channel capacity is defined as in (32), as a maximum of appropriately defined mutual information . However, all of them consider only special cases of Sharma–Mittal entropy and all of them fail to satisfy at least one of the properties (A1)–(A6) which an information transfer measure has to satisfy, as will be discussed in Section 7.

In this section we propose general measures, the α-q mutual information and the α-q-capacity, by the requirement that the axioms (A1)–(A6) are satisfied, which could qualify them as appropriate measures of information transfer, without nonphysical properties. The special instances of the α-q (maximal) information transfer measures are also discussed and the analytic expressions for a binary symmetric channel are provided.

6.1. The α-q Information Transfer Measures and Its Instances

The α-q-mutual information (42) is defined using the q-subtraction defined in (6), as follows:

where we introduced the conditional Sharma–Mittal entropy as in

stands for Arimoto’s definition of the conditional Rényi entropy (41). The expression (69) can also be obtained if the mapping ηq is applied to both sides of the equality (42), by which Arimoto’s mutual information is defined, so we may establish the relationship

which can be represented using the Gallager error exponent (43) as in

Arimoto’s α-q-capacity is now defined in

and using the fact that ηq is increasing, it can be related with the corresponding α-capacity as in

Using the expressions (45) and (71), in the case of α = 1, the α-q mutual information reduces to

The α-q-capacity is given in

and these measures can serve as (maximal) information transfer measures corresponding to Gaussian entropy, which was not considered before in the context of information transmission. Naturally, if in addition q = 1, the measures reduce to Shannon’s mutual information and Shannon capacity [37].

Additional special cases of the α-q (maximal) information transfer include the α-mutual information (42) and the α-capacity (40), which are obtained for q = 1; the measures which correspond to Tsallis entropy can be obtained for q = α and the ones which correspond to Landsberg–Vedral entropy for q = 2 − α. These special instances are listed in Table 1.

Table 1.

Instances of the α-q-mutual information for different values of the parameters and corresponding expressions for the BSC α-q-capacities.

As discussed in Section 7, previously considered information measures cover only particular special cases and break at least one of the axioms (A1)–(A6), which leads to unexpected and counterintuitive conclusions about the channels, such as negative information transfer and achieving super-capacitance or sub-capacitance [4], which could be treated as nonphysical behavior. On the other hand, apart from their generality, the α-q information transfer measures proposed in this paper overcome these disadvantages, which could qualify them as appropriate measures, as stated in the following theorem.

Theorem 2.

The α-q-mutual information and the α-q-capacity satisfy the set of axioms (A1)–(A6).

Proof.

The proof is a straightforward application of the mapping ηq to the equations in the α-mutual information properties (A1)–(A5), while the property (A6) follows from the above discussion. □
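The argument of the proof, applying an increasing mapping that fixes zero to inequalities that already hold for the α-mutual information, can be illustrated numerically. The sketch below is not a proof and not the authors' code. It assumes the form ηq(x) = (2^((1−q)x) − 1)/((1−q) ln 2) and the Arimoto-type conditional Rényi entropy (α/(1−α)) log2 Σ_y (Σ_x P(x,y)^α)^(1/α); the function names and the dictionary keys are hypothetical.

```python
import math
from typing import Dict, Sequence

LN2 = math.log(2.0)


def eta(x: float, q: float) -> float:
    """Assumed mapping eta_q: increasing, eta_q(0) = 0, identity for q = 1."""
    if q == 1.0:
        return x
    return (2.0 ** ((1.0 - q) * x) - 1.0) / ((1.0 - q) * LN2)


def renyi(p: Sequence[float], alpha: float) -> float:
    """Rényi entropy of order alpha (alpha > 0, alpha != 1), in bits."""
    return math.log2(sum(pi ** alpha for pi in p if pi > 0.0)) / (1.0 - alpha)


def arimoto_mi(p_x: Sequence[float], w: Sequence[Sequence[float]], alpha: float) -> float:
    """Rényi entropy of X minus the assumed Arimoto conditional Rényi entropy."""
    total = sum(
        sum((p_x[x] * w[x][y]) ** alpha for x in range(len(p_x))) ** (1.0 / alpha)
        for y in range(len(w[0]))
    )
    return renyi(p_x, alpha) - alpha / (1.0 - alpha) * math.log2(total)


def alpha_q_mi(p_x: Sequence[float], w: Sequence[Sequence[float]], alpha: float, q: float) -> float:
    """alpha-q-mutual information as eta_q applied to the alpha-mutual information."""
    return eta(arimoto_mi(p_x, w, alpha), q)


def check_bounds(
    p_x: Sequence[float], w: Sequence[Sequence[float]], alpha: float, q: float, tol: float = 1e-9
) -> Dict[str, bool]:
    """Check non-negativity and the input/output entropy bounds for one channel."""
    p_y = [sum(p_x[x] * w[x][y] for x in range(len(p_x))) for y in range(len(w[0]))]
    info = alpha_q_mi(p_x, w, alpha, q)
    h_in = eta(renyi(p_x, alpha), q)
    h_out = eta(renyi(p_y, alpha), q)
    return {"A1": info >= -tol, "A4": info <= h_in + tol, "A5": info <= h_out + tol}
```

A call such as `check_bounds([0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]], 2.0, 0.5)` only tests a single channel and input, so it can expose a violation but cannot establish the axioms in general.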

Remark 2.

Note that the symmetry does not hold in general in the case of the α-q mutual information nor in the case of the α mutual information [50,51], and if the mutual information is defined so that the symmetry is preserved, some of the axioms (A1)–(A6) might be broken. In addition, the alternative definition of the mutual information, , which uses an ordinary subtraction operator instead of the q-subtraction, can also be introduced, but in this case the property () might not hold in general, as discussed in Section 7.

6.2. The α-q-Capacity of Binary Symmetric Channels

As shown by Cai and Verdú [45], the α-mutual information of Arimoto’s type is maximized for the uniform input distribution, and Arimoto’s α-capacity has the value

where the binary entropy function is defined as

for , , while in the limit of α → 1, the expression (78) reduces to the well-known result for the Shannon capacity (see Fano [52])

The analytic expressions for the α-q-capacities of binary symmetric channels can be obtained from the expressions (74) and (77), so that

in the case of q = 1, it reduces to the case of Rényi entropy while, in the case of α = 1, to the case of Gaussian entropy (77)

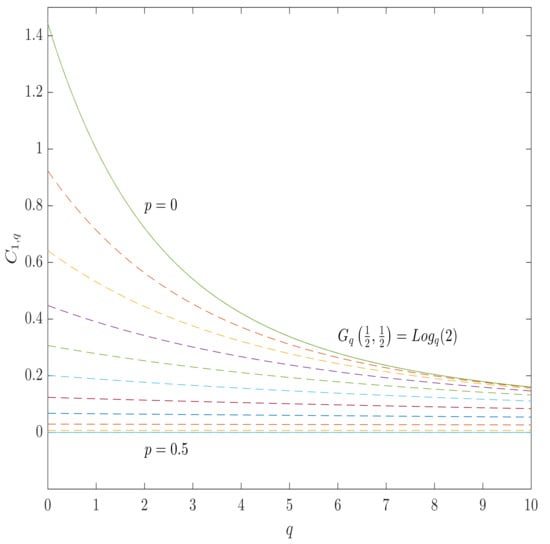

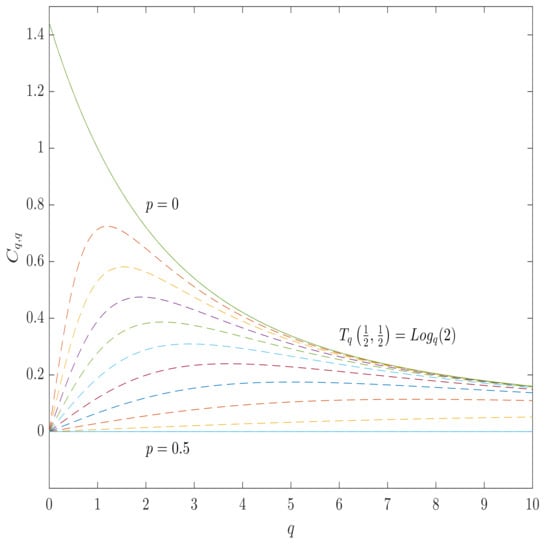

The analytic expressions for the BSC α-q-capacities for other instances can straightforwardly be obtained by specifying the values of the parameters, whose instances are listed in Table 1, while the plots of the BSC α-q-capacities which correspond to the Gaussian and the Tsallis entropies are shown in Figure 3 and Figure 4.

Figure 3.

The α-q-capacity of BSC for the Gaussian entropy (the case of α = 1) as a function of q for various values of the channel parameter p from 1/2 (totally destructive channel) to 0 (perfect transmission). All of the curves lie between 0 and the maximum value of the Gaussian entropy.

Figure 4.

The α-q-capacity of BSC for the Tsallis entropy (the case of q = α) as a function of q for various values of the channel parameter p from 1/2 (totally destructive channel) to 0 (perfect transmission). All of the curves lie between 0 and the maximum value of the Tsallis entropy.

The α-q-capacity (80) can equivalently be expressed in

where the Sharma–Mittal binary entropy function is defined in

which reduces to the Rényi binary entropy function, in the case of q = 1,

to the Tsallis binary entropy function, in the case of q = α,

to the Gaussian binary entropy function, in the case of α = 1,

and to the Shannon binary entropy function, in the case of α = q = 1,

The expression (82) can be interpreted similarly as in the Shannon case. Thus, a BSC with input X and output Y can be modeled with the input–output relation Y = X ⊕ Z, where ⊕ stands for the modulo 2 sum and Z is the channel noise taking values from {0, 1}, with Z = 1 occurring with probability p. If we measure the information which is lost per bit during transmission with the Sharma–Mittal binary entropy of the noise, then the α-q-capacity stands for the useful information left over for every bit of information received.
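The two equivalent ways of writing the BSC capacity, as the ηq transformation of the α-capacity and as a q-difference between the maximal input entropy and a binary entropy of the noise, can be compared numerically. This is a sketch under assumptions, not the paper's expressions: it uses the assumed ηq(x) = (2^((1−q)x) − 1)/((1−q) ln 2), a uniform input, and the binary Rényi entropy of order α that results from the Arimoto-type conditional entropy assumed in this sketch (the order used in the source equations may be parametrized differently). The function names are hypothetical.

```python
import math

LN2 = math.log(2.0)


def eta(x: float, q: float) -> float:
    """Assumed mapping eta_q (identity for q = 1)."""
    if q == 1.0:
        return x
    return (2.0 ** ((1.0 - q) * x) - 1.0) / ((1.0 - q) * LN2)


def eta_inv(y: float, q: float) -> float:
    """Inverse of the assumed eta_q."""
    if q == 1.0:
        return y
    return math.log2(1.0 + (1.0 - q) * LN2 * y) / (1.0 - q)


def q_sub(x: float, y: float, q: float) -> float:
    """q-subtraction built from eta_q and its inverse."""
    return eta(eta_inv(x, q) - eta_inv(y, q), q)


def binary_renyi(p: float, alpha: float) -> float:
    """Binary Rényi entropy in bits; alpha = 1 gives the Shannon binary entropy."""
    if alpha == 1.0:
        return -sum(t * math.log2(t) for t in (p, 1.0 - p) if t > 0.0)
    return math.log2(p ** alpha + (1.0 - p) ** alpha) / (1.0 - alpha)


def bsc_capacity(p: float, alpha: float, q: float) -> float:
    """eta_q applied to the (assumed) alpha-capacity 1 - r_alpha(p)."""
    return eta(1.0 - binary_renyi(p, alpha), q)


def bsc_capacity_as_difference(p: float, alpha: float, q: float) -> float:
    """Maximal binary input entropy eta_q(1) minus, in the q-sense, the noise entropy."""
    return q_sub(eta(1.0, q), eta(binary_renyi(p, alpha), q), q)
```

Both functions return zero at p = 1/2 and the maximal binary Sharma–Mittal entropy `eta(1.0, q)` at p = 0, which mirrors the range described in the captions of Figure 3 and Figure 4.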

7. An Overview of the Previous Approaches to Sharma–Mittal Information Transfer Measures

In this section, we review the previous attempts at a definition of Sharma–Mittal information transfer measures, which are defined from the basic requirement of consistency with the Shannon measure as given by the axiom (A6). However, as we show in the following paragraphs, all of them break at least one of the axioms (A1)–(A6), which are satisfied in the case of the α-q (maximal) information transfer measures (69) and (73), in accordance with the discussion in Section 6.

7.1. Daróczy’s Capacity

The first considerations of generalized channel capacities and generalized mutual information for the q-entropy go back to Daróczy [30], who introduced conditional Tsallis entropy

where the row entropies are defined as in

and the mutual information is defined as in

However, in the case of a totally destructive channel, , , and

so that

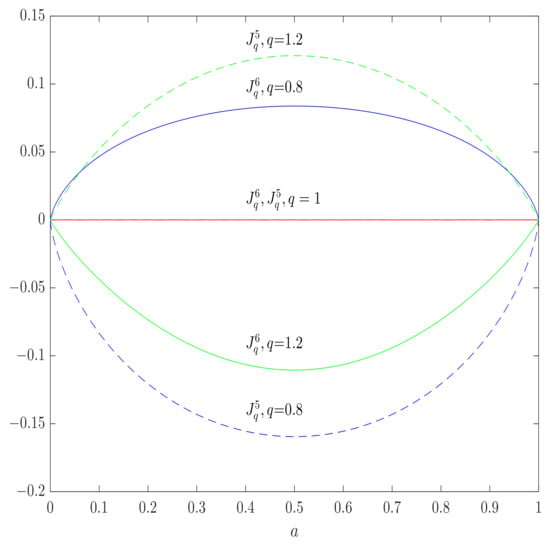

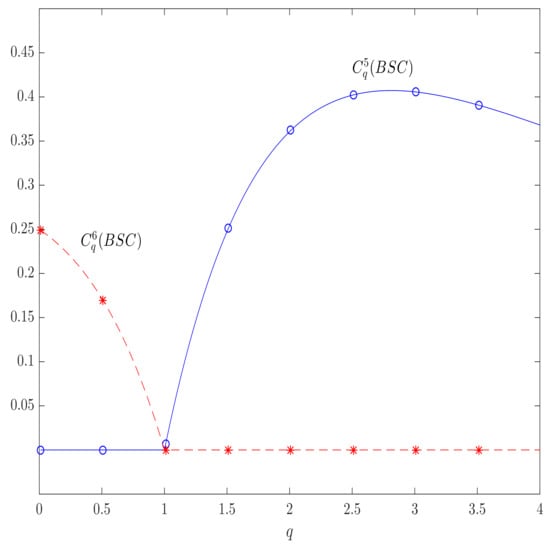

This expression is zero for an input probability distribution and its permutations, but, in general, it is negative for q < 1, positive for q > 1 and 0 only for q = 1, so the axiom (A2) is broken (see Figure 5). As a result, the channel capacity, which is defined in accordance with (32), is zero for q < 1 and positive for q > 1, as illustrated in Figure 6 by the example of BSC, for which Daróczy’s channel capacity can be computed as in [30,53]

In the same figure, we plotted the graph for the α-q channel capacities proposed in this paper, and all of them remain zero in the case of a totally destructive BSC, as expected.
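The sign behavior on a totally destructive channel can be reproduced with a small numerical experiment. The sketch below rests on assumptions, because the defining equations are not reproduced here: the Tsallis entropy is normalized as (Σ p^q − 1)/((1−q) ln 2), and Daróczy's conditional entropy is taken as the sum of the row entropies weighted by P(x)^q. The function names are hypothetical.

```python
import math
from typing import Sequence

LN2 = math.log(2.0)


def tsallis(p: Sequence[float], q: float) -> float:
    """Tsallis entropy (q != 1) with the assumed 1/((1-q) ln 2) normalization."""
    return (sum(pi ** q for pi in p if pi > 0.0) - 1.0) / ((1.0 - q) * LN2)


def daroczy_mi(p_x: Sequence[float], w: Sequence[Sequence[float]], q: float) -> float:
    """Output entropy minus the assumed P(x)^q-weighted sum of row entropies."""
    p_y = [sum(p_x[x] * w[x][y] for x in range(len(p_x))) for y in range(len(w[0]))]
    conditional = sum(p_x[x] ** q * tsallis(w[x], q) for x in range(len(p_x)))
    return tsallis(p_y, q) - conditional


# Totally destructive BSC: both rows of the transition matrix coincide.
destructive_bsc = [[0.5, 0.5], [0.5, 0.5]]
below_one = daroczy_mi([0.3, 0.7], destructive_bsc, 0.5)
above_one = daroczy_mi([0.3, 0.7], destructive_bsc, 2.0)
degenerate = daroczy_mi([1.0, 0.0], destructive_bsc, 0.5)
```

Under these assumptions `below_one` is negative, `above_one` is positive and `degenerate` is zero, even though no information can pass through the channel.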

Figure 5.

Daróczy’s (solid lines) and Yamano’s (dashed lines) mutual information in the case of a totally destructive BSC as functions of the input distribution parameter a, for different values of q, obtaining negative values for and , respectively, breaking the axioms (A1) and (A2). The α-q-mutual information is zero for all q, and satisfies (A1) and (A2).

Figure 6.

Daróczy’s (solid lines) and Yamano’s (dashed lines) capacities in the case of a totally destructive BSC as functions of the parameter q. In the regions of and , respectively, the corresponding negative mutual information is maximized for (zero capacity), having positive values outside these regions and breaking the axiom (A2). The α-q-capacity is zero for all q, and satisfies (A2).

7.2. Yamano Capacities

Similar problems to the ones mentioned above arise in the case of mutual information and corresponding capacity measures considered by Yamano [33], who addressed the information transmission characterized by Landsberg–Vedral entropy , given in (17).

Thus, the first proposal is based on the mutual information of the form

where the joint entropy is defined in

However, in the case of a fully destructive channel, and , so that

which can be simplified to

Similarly to the case of Daróczy’s capacity, this expression is zero for an input probability distribution and its permutations but, in general, it is negative for , positive for and 0 only for , so the axiom (A2) is broken (see Figure 5). In Figure 6 we illustrate the Yamano channel capacity as a function of the parameter q, in the case of two input channels with , the channel capacity is zero for (which is obtained for ), and

for (which is obtained for ). In the same figure, we plotted the graph for the α-q channel capacities proposed in this paper, and, as before, all of them remain zero in the case of a totally destructive BSC, as expected.

Further attempts were made in [33], where the mutual information is defined in an analogous manner to (66) and (66), with the generalized divergence measure introduced in [54]. Thus, the alternative measure for mutual information is defined in

However, in the case of the simplest perfect communication channel for which , the mutual information reduces to

which breaks the axiom (A3).

7.3. Landsberg–Vedral Capacities

To avoid these problems, Landsberg and Vedral [4] proposed the mutual information measure and related channel capacities for the Sharma–Mittal entropy class , particularly considering the choice of , which corresponds to Tsallis entropy, , and the case of , which corresponds to the Rényi entropy

where the conditional entropy is defined as in

and

Although this definition bears some similarities to the α-q mutual information proposed in formula (69), several key differences can be observed. First of all, it characterizes the information transfer as the output uncertainty reduction after the input symbols are known, instead of the input uncertainty reduction after the output symbols are known (42). In addition, it uses the ordinary subtraction operation (−) instead of the one. Finally, note that the definition of conditional entropy (102) generally differs from the definition proposed in (70).

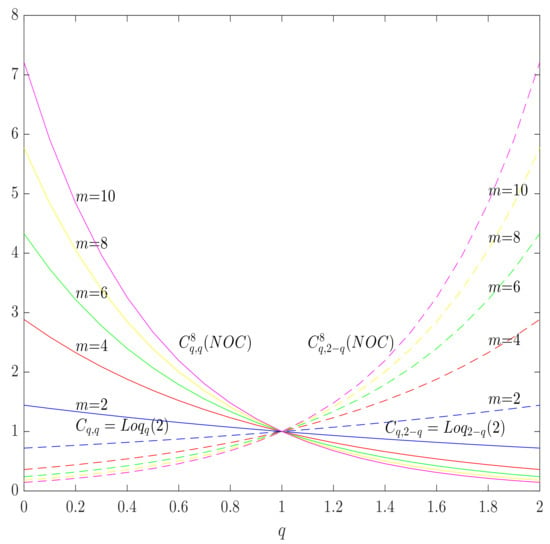

The definition (101) resolves the issue of the axiom () which appears in the case of the Daróczy capacity, since in the case of a totally destructive channel (), and and , so that . However, the problems remain with the axiom (), which can be observed in the case of a noisy channel with non-overlapping outputs if the number of channel inputs is lower than the number of channel outputs . Indeed, in the case of a noisy channel with non-overlapping outputs given by the transition matrix (27), both of the row entropies have the same value, which is independent of x

and the maximal value of Landsberg–Vedral mutual information (101) is obtained only by maximizing over , which is achieved if X is uniformly distributed, since in this case Y is uniformly distributed, as well as ( in (28)), so the maximal value of the output entropy is and the mutual information is maximized for

which is greater than for , i.e., for outputs, so the axiom () is broken, which is illustrated in Figure 7.
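The argument above can be illustrated numerically for the Tsallis case. The following is a minimal sketch under stated assumptions: the channel is taken as one in which each of the n inputs is spread uniformly over its own block of m/n outputs, and the conditional entropy is taken as the input-weighted mean of the row entropies (any normalized weighting gives the same value here, since all row entropies coincide). The names `tsallis_entropy`, `non_overlapping_channel` and `difference_mi` are hypothetical.

```python
import numpy as np


def tsallis_entropy(p, q):
    """Tsallis entropy of a distribution, for q != 1."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return (np.sum(p ** q) - 1.0) / (1.0 - q)


def non_overlapping_channel(n, m):
    """n x m transition matrix; input i maps uniformly onto its own m // n outputs."""
    if m % n != 0:
        raise ValueError("m must be a multiple of n in this sketch")
    k = m // n
    w = np.zeros((n, m))
    for i in range(n):
        w[i, i * k:(i + 1) * k] = 1.0 / k
    return w


def difference_mi(p_x, channel, q):
    """ASSUMED form: H(Y) minus the input-weighted mean of the row entropies."""
    p_x = np.asarray(p_x, dtype=float)
    p_y = p_x @ channel
    row_entropies = np.array([tsallis_entropy(row, q) for row in channel])
    return tsallis_entropy(p_y, q) - np.dot(p_x, row_entropies)


q, n = 0.5, 2
uniform_input = np.full(n, 1.0 / n)
input_entropy = tsallis_entropy(uniform_input, q)
for m in (2, 4, 8):
    mi = difference_mi(uniform_input, non_overlapping_channel(n, m), q)
    print(f"m={m}: I = {mi:.4f}, input entropy = {input_entropy:.4f}")
```

Under these assumptions, the two quantities coincide for m = n, while for m > n and q < 1 the difference-based measure exceeds the input entropy, although the input can still be recovered without error from the output.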

Figure 7.

Landsberg–Vedral capacities for the Tsallis (solid lines) and the Landsberg–Vedral (dashed lines) entropies in the case of a (perfect) noisy channel with non-overlapping outputs with m outputs as functions of q, for different values of m. The axiom () is broken for all and satisfied in the case of the corresponding α-q-capacities, and .

7.4. Chapeau-Blondeau–Delahaies–Rousseau Capacities

Following a similar approach to the one in Section 5.4, Chapeau-Blondeau, Delahaies and Rousseau considered the definition of mutual information which corresponds to the Tsallis entropy using Tsallis divergence,

which can be written as

However, this definition is not directly applicable as a measure of information transfer to the Tsallis entropy with index q, since in the case of it reduces to , and requires the reparametrization , similar to Section 5.4, while the satisfaction of the axioms () and () is not self-evident.
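The index mismatch can be made concrete with a short numerical check. The following is a minimal sketch, assuming the standard form of the Tsallis divergence, D_q(P‖R) = (Σ p^q r^(1−q) − 1)/(q − 1), applied to the joint distribution and the product of its marginals; this need not be the exact expression used in the cited work. The names `tsallis_divergence` and `divergence_mi` are hypothetical.

```python
import numpy as np


def tsallis_entropy(p, q):
    """Tsallis entropy of a distribution, for q != 1."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return (np.sum(p ** q) - 1.0) / (1.0 - q)


def tsallis_divergence(p, r, q):
    """ASSUMED standard Tsallis divergence D_q(p || r), summed over the support of p."""
    p = np.asarray(p, dtype=float)
    r = np.asarray(r, dtype=float)
    mask = p > 0
    return (np.sum(p[mask] ** q * r[mask] ** (1.0 - q)) - 1.0) / (q - 1.0)


def divergence_mi(p_x, channel, q):
    """Divergence between the joint distribution and the product of its marginals."""
    p_x = np.asarray(p_x, dtype=float)
    joint = p_x[:, None] * np.asarray(channel, dtype=float)
    p_y = joint.sum(axis=0)
    product = np.outer(p_x, p_y)
    return tsallis_divergence(joint.ravel(), product.ravel(), q)


# Perfect (identity) channel: the output equals the input.
p_x = np.array([0.3, 0.7])
identity = np.eye(2)
q = 0.5

print("divergence-based MI:", divergence_mi(p_x, identity, q))
print("Tsallis entropy, index q:", tsallis_entropy(p_x, q))
print("Tsallis entropy, index 2 - q:", tsallis_entropy(p_x, 2.0 - q))
```

Under this assumption, the divergence-based quantity on a perfect channel coincides with the input Tsallis entropy of index 2 − q rather than of index q, which is the kind of mismatch that a reparametrization is meant to remove.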

8. Conclusions and Future Work

A general treatment of the Sharma–Mittal entropy transfer was provided, together with an analysis of the existing measures of non-additive Sharma–Mittal information transfer. It was shown that the existing definitions fail to satisfy at least one of the axioms common to the Shannon case, by which the information transfer has to be non-negative, no greater than the input and output uncertainty, equal to the input uncertainty in the case of perfect transmission and equal to zero in the case of a totally destructive channel. Breaking some of these axioms implies unexpected and counterintuitive conclusions about the channels, such as achieving super-capacitance or sub-capacitance [4], which could be treated as nonphysical behavior. In this paper, the α-q mutual information and the α-q channel capacity were proposed as alternative measures which satisfy all of the axioms broken by the previously considered Sharma–Mittal information transfer measures, which could qualify them as physically consistent measures of information transfer.

Previous research on non-extensive statistical mechanics [3] has recognized the linear growth of physical quantities as a critical property in non-extensive [55] and non-exponentially growing systems [56], and previous research in information theory has considered the Sharma–Mittal entropy an appropriate scaling measure which provides extensive information rates [21]. In light of these results, the α-q mutual information and the α-q channel capacity seem to be promising measures for the characterization of information transmission in systems where the Shannon entropy rate diverges or vanishes in the infinite-time limit. In addition, as was shown in this paper, the proposed information transfer measures are compatible with maximum likelihood detection, which indicates their potential for the operational characterization of coding theory and hypothesis testing problems [26].

Author Contributions

Conceptualization, V.M.I. and I.B.D.; validation, V.M.I. and I.B.D.; formal analysis, V.M.I.; funding acquisition, I.B.D.; project administration, I.B.D.; writing—original draft preparation, V.M.I.; writing—review and editing, V.M.I. and I.B.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by NSF under grants 1907918 and 1828132 and by Ministry of Science and Technological Development, Republic of Serbia, Grants Nos. ON 174026 and III 044006. The APC was funded by NSF under grants 1907918 and 1828132.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ilić, V.M.; Stanković, M.S. A unified characterization of generalized information and certainty measures. Phys. A Stat. Mech. Appl. 2014, 415, 229–239.

2. Rényi, A. Probability Theory; North-Holland Series in Applied Mathematics and Mechanics; North-Holland Publishing Company: Amsterdam, The Netherlands, 1970.

3. Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

4. Landsberg, P.T.; Vedral, V. Distributions and channel capacities in generalized statistical mechanics. Phys. Lett. A 1998, 247, 211–217.

5. Frank, T.; Daffertshofer, A. Exact time-dependent solutions of the Renyi Fokker-Planck equation and the Fokker-Planck equations related to the entropies proposed by Sharma and Mittal. Phys. A Stat. Mech. Appl. 2000, 285, 351–366.

6. Sharma, B.; Mittal, D. New non-additive measures of entropy for discrete probability distributions. J. Math. Sci. 1975, 10, 28–40.

7. Tsallis, C. What are the numbers that experiments provide. Quim. Nova 1994, 17, 468–471.

8. Nivanen, L.; Le Méhauté, A.; Wang, Q.A. Generalized algebra within a nonextensive statistics. Rep. Math. Phys. 2003, 52, 437–444.

9. Ilić, V.M.; Stanković, M.S. Generalized Shannon-Khinchin axioms and uniqueness theorem for pseudo-additive entropies. Phys. A Stat. Mech. Appl. 2014, 411, 138–145.

10. Jizba, P.; Korbel, J. When Shannon and Khinchin meet Shore and Johnson: Equivalence of information theory and statistical inference axiomatics. Phys. Rev. E 2020, 101, 042126.

11. Esteban, M.D.; Morales, D. A summary on entropy statistics. Kybernetika 1995, 31, 337–346.

12. Lenzi, E.; Scarfone, A. Extensive-like and intensive-like thermodynamical variables in generalized thermostatistics. Phys. A Stat. Mech. Appl. 2012, 391, 2543–2555.

13. Frank, T.; Plastino, A. Generalized thermostatistics based on the Sharma-Mittal entropy and escort mean values. Eur. Phys. J. B Condens. Matter Complex Syst. 2002, 30, 543–549.

14. Aktürk, O.Ü.; Aktürk, E.; Tomak, M. Can Sobolev inequality be written for Sharma-Mittal entropy? Int. J. Theor. Phys. 2008, 47, 3310–3320.

15. Mazumdar, S.; Dutta, S.; Guha, P. Sharma–Mittal quantum discord. Quantum Inf. Process. 2019, 18, 1–26.

16. Elhoseiny, M.; Elgammal, A. Generalized Twin Gaussian processes using Sharma–Mittal divergence. Mach. Learn. 2015, 100, 399–424.

17. Koltcov, S.; Ignatenko, V.; Koltsova, O. Estimating Topic Modeling Performance with Sharma–Mittal Entropy. Entropy 2019, 21, 660.

18. Jawad, A.; Bamba, K.; Younas, M.; Qummer, S.; Rani, S. Tsallis, Rényi and Sharma-Mittal holographic dark energy models in loop quantum cosmology. Symmetry 2018, 10, 635.

19. Ghaffari, S.; Ziaie, A.; Moradpour, H.; Asghariyan, F.; Feleppa, F.; Tavayef, M. Black hole thermodynamics in Sharma–Mittal generalized entropy formalism. Gen. Relativ. Gravit. 2019, 51, 1–11.

20. Américo, A.; Khouzani, M.; Malacaria, P. Conditional Entropy and Data Processing: An Axiomatic Approach Based on Core-Concavity. IEEE Trans. Inf. Theory 2020, 66, 5537–5547.

21. Girardin, V.; Lhote, L. Rescaling entropy and divergence rates. IEEE Trans. Inf. Theory 2015, 61, 5868–5882.

22. Ciuperca, G.; Girardin, V.; Lhote, L. Computation and estimation of generalized entropy rates for denumerable Markov chains. IEEE Trans. Inf. Theory 2011, 57, 4026–4034.

23. Sibson, R. Information radius. Z. Wahrscheinlichkeitstheorie Verwandte Geb. 1969, 14, 149–160.

24. Arimoto, S. Information Measures and Capacity of Order α for Discrete Memoryless Channels. In Topics in Information Theory; Colloquia Mathematica Societatis János Bolyai; Csiszár, I., Elias, P., Eds.; North-Holland Pub. Co.: Amsterdam, The Netherlands, 1977; Volume 16, pp. 41–52.

25. Augustin, U. Noisy Channels. Ph.D. Thesis, Universität Erlangen-Nürnberg, Erlangen, Germany, 1978.

26. Csiszár, I. Generalized cutoff rates and Rényi’s information measures. IEEE Trans. Inf. Theory 1995, 41, 26–34.

27. Lapidoth, A.; Pfister, C. Two measures of dependence. Entropy 2019, 21, 778.

28. Tomamichel, M.; Hayashi, M. Operational interpretation of Rényi information measures via composite hypothesis testing against product and Markov distributions. IEEE Trans. Inf. Theory 2017, 64, 1064–1082.

29. Verdú, S. α-mutual information. In Proceedings of the 2015 Information Theory and Applications Workshop (ITA), San Diego, CA, USA, 1–6 February 2015; pp. 1–6.

30. Daróczy, Z. Generalized information functions. Inf. Control 1970, 16, 36–51.

31. Chapeau-Blondeau, F.; Rousseau, D.; Delahaies, A. Renyi entropy measure of noise-aided information transmission in a binary channel. Phys. Rev. E 2010, 81, 051112.

32. Chapeau-Blondeau, F.; Delahaies, A.; Rousseau, D. Tsallis entropy measure of noise-aided information transmission in a binary channel. Phys. Lett. A 2011, 375, 2211–2219.

33. Yamano, T. A possible extension of Shannon’s information theory. Entropy 2001, 3, 280–292.

34. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

35. Arimoto, S. Computation of random coding exponent functions. IEEE Trans. Inf. Theory 1976, 22, 665–671.

36. Gallager, R. A simple derivation of the coding theorem and some applications. IEEE Trans. Inf. Theory 1965, 11, 3–18.

37. Cover, T.M.; Thomas, J.A. Elements of Information Theory (Wiley Series in Telecommunications and Signal Processing); John Wiley & Sons, Inc: Hoboken, NJ, USA, 2006.

38. Fehr, S.; Berens, S. On the conditional Rényi entropy. IEEE Trans. Inf. Theory 2014, 60, 6801–6810.

39. Wilde, M.M.; Winter, A.; Yang, D. Strong converse for the classical capacity of entanglement-breaking and Hadamard channels via a sandwiched Rényi relative entropy. Commun. Math. Phys. 2014, 331, 593–622.

40. Gupta, M.K.; Wilde, M.M. Multiplicativity of completely bounded p-norms implies a strong converse for entanglement-assisted capacity. Commun. Math. Phys. 2015, 334, 867–887.

41. Beigi, S. Sandwiched Rényi divergence satisfies data processing inequality. J. Math. Phys. 2013, 54, 122202.

42. Hayashi, M.; Tomamichel, M. Correlation detection and an operational interpretation of the Rényi mutual information. J. Math. Phys. 2016, 57, 102201.

43. Hayashi, M.; Tajima, H. Measurement-based formulation of quantum heat engines. Phys. Rev. A 2017, 95, 032132.

44. Hayashi, M. Quantum Wiretap Channel With Non-Uniform Random Number and Its Exponent and Equivocation Rate of Leaked Information. IEEE Trans. Inf. Theory 2015, 61, 5595–5622.

45. Cai, C.; Verdú, S. Conditional Rényi Divergence Saddlepoint and the Maximization of α-Mutual Information. Entropy 2019, 21, 969.

46. Tridenski, S.; Zamir, R.; Ingber, A. The Ziv–Zakai–Rényi bound for joint source-channel coding. IEEE Trans. Inf. Theory 2015, 61, 4293–4315.

47. Harremoës, P. Interpretations of Rényi entropies and divergences. Phys. A Stat. Mech. Appl. 2006, 365, 57–62.

48. Jizba, P.; Kleinert, H.; Shefaat, M. Rényi’s information transfer between financial time series. Phys. A Stat. Mech. Appl. 2012, 391, 2971–2989.

49. Jizba, P.; Arimitsu, T. The world according to Rényi: Thermodynamics of multifractal systems. Ann. Phys. 2004, 312, 17–59.

50. Iwamoto, M.; Shikata, J. Information theoretic security for encryption based on conditional Rényi entropies. In Proceedings of the International Conference on Information Theoretic Security, Singapore, 28–30 November 2013; pp. 103–121.

51. Ilić, V.; Djordjević, I.; Stanković, M. On a general definition of conditional Rényi entropies. Proceedings 2018, 2, 166.

52. Fano, R.M. Transmission of Information; M.I.T. Press: Cambridge, MA, USA, 1961.

53. Ilic, V.M.; Djordjevic, I.B.; Küeppers, F. On the Daróczy-Tsallis capacities of discrete channels. Entropy 2015, 20, 2.

54. Yamano, T. Information theory based on nonadditive information content. Phys. Rev. E 2001, 63, 046105.

55. Tsallis, C.; Gell-Mann, M.; Sato, Y. Asymptotically scale-invariant occupancy of phase space makes the entropy Sq extensive. Proc. Natl. Acad. Sci. USA 2005, 102, 15377–15382.

56. Korbel, J.; Hanel, R.; Thurner, S. Classification of complex systems by their sample-space scaling exponents. New J. Phys. 2018, 20, 093007.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).