1. Introduction

Thanks to the recent progress in deep learning for conditional generation, we know how to build systems that can address questions such as: “How does an animal that is gray and has a trunk look?” As humans, we immediately picture an elephant. Performing the same task with neural networks requires large amounts of data that contain labels for all desired properties. Collecting the data, finding a suitable architecture, tuning all meta-parameters, and training the network on state-of-the-art compute nodes require enormous resources in terms of infrastructure and human involvement. Once the network is trained, we have a powerful and highly specialized system that can rapidly answer our original, as well as a small set of related questions.

There are numerous applications that warrant this effort, but our original question is probably not one of them, as are most conceivable questions. Nevertheless, an intelligent system should be capable of answering them through reasoning. Often, the relevant questions are not known beforehand, and they require flexible answering as they emerge. The purpose of this paper was proposing a way to design such intelligent systems.

The approach worked as follows. The context of a question (e.g., “animal” in our example) will be represented by a generative network, which translates latent variables into a member of the context (e.g., an image of a “cat”). These might be obtained from Variational Auto-Encoders (VAEs) [

1] or Generative Adversarial Nets (GANs) [

2], which learn to represent systems on which they are trained. The output of the generator is then passed to one or several classifier networks (identifying the color or the presence of a trunk). A likelihood distribution quantifies the adherence of the generator output with the posed constraint. Together with the prior probability distribution, this constitutes a Bayesian inference problem in terms of the latent variables of the generator. There is no need to train a specialized network for each new question, but solving the inference problem itself can still be a computationally expensive operation.

Of course, this approach relies on the availability of the individual networks as fundamental building blocks. These, however, are simple, serve a single purpose, and can be recycled throughout a large variety of questions. The scope of questions that can be answered this way grows super-exponentially with the number of available building blocks. For a set of

n possible features

of an object

o with

, there are

possible combinations of their presence

. A binary question about this object can test for any of the subsets of these

m distinguishable object realizations. The number of subsets of a set with

m elements is

, as for each element, one can decide individually whether it is part of the subset or not. Thus, there are

possible questions (page 13 of Jaynes [

3]).

For example, in the example with the context animal and the two features for “gray” and for “trunk” , animal types can be distinguished , allowing for the following possible questions: Is it an animal with ?

Not all these conceivable questions are reasonable, and many are nonsense. However, just because a certain combination of traits does not occur in reality does not mean the question is invalid. With the presented approach, we can ask for things that do not exist, for example a purple elephant. This property allows exploring truly novel concepts, compared to the strict recitation of the training set of conventional deep learning approaches.

To solve a specific problem, the task has to be translated into the corresponding inference problem by assembling the individual modules, which represent the parts in the question. Missing modules might have to be acquired by a dedicated training process, but once obtained, they can be added to a library for future use.

Therefore, our approach is no replacement for traditional conditional generators. As we will demonstrate, it is better to train a dedicated network for a specific task, not factoring in the initial data curation and fine-tuning. It can work along side and on top of existing architectures. Its strength is the flexibility to answer questions the designer of the individual components did not originally have in mind. With this flexibility, it is potentially possible to approach far more elaborate tasks, for which no training data are available.

Do the answers adhere to the rules of logic? Yes, as Bayesian probabilistic reasoning is the generalization of binary logic (true or false) to uncertainties, i.e., a statement has a probability

to be true [

4]. Thus, binary logic is embedded in this approach and applied in the presence of certainty. Our second example demonstrates logical reasoning, as it solves a mathematical riddle in terms of hand-written digits.

Here, we provided a proof of concept and illuminated certain facets of the approach. As demonstrations of the technique, we first discuss a generator subject to one constraint, second, how to use multiple constraints to pose and solve riddles, and, third, how to combine the approach with conventional measurement data in a large-scale inference problem using modern network architectures.

2. Related Work

Deep generative models as a description of complex systems are especially helpful in various imaging-related problems, such as de-noising, in-painting, or super-resolution by recovering the latent variables of the generator [

5,

6]. Even untrained generators can provide good models for such tasks [

7]. The mentioned methods rely on point-estimates that return a single solution as the answer. Using the deep generative models in a Bayesian context, the posterior distribution allows accounting for complex uncertainty structures [

8,

9]. In essence, we followed these works and extended them by adding constraints that were expressed through trained classification or regression networks. The fundamental idea was also proposed by Nguyen et al. [

10]. Here, we rigorously derived the approach from a Bayesian perspective, showed how this can lead to reasoning on complex tasks, and explored its properties. We discussed how this could provide a path to more general intelligent systems.

Many of the tasks addressed by the methods above are also directly approached by deep learning through end-to-end solutions, for example super-resolution [

11]. Similarly, our approach gave another perspective on conditional generative models. Usually, these are obtained by providing labels or more general constraints [

12,

13,

14,

15] during a training phase. We instead used unconstrained generators and flexibly added further constraints through independently trained networks in a modular fashion, allowing combining information from multiple sources. This way, we had a clear understanding of the role of the individual modules, despite they themselves remaining a black box.

Some related methods manipulate the latent variables of unconstrained generators to change samples in a desired direction [

16]. Furthermore, more implicit information in the form of the preference of one sample over the other can guide the generation [

17]. Our posterior distribution was in principle also a manipulation of the latent variable distribution, such that the constraints were fulfilled.

Interestingly, we can regard our approach as a form of continual variational learning [

18]. When approximating our posterior variationally, we conceptually added an additional set of layers to the original generator. This was due to the reparametrization trick [

1], which formulates the approximate distribution in terms of a simple source distribution together with a transformation, i.e., another generator. The new layers are then responsible for satisfying the posed constraint. Repeating this for multiple constraints, we can partially train generators with the help of already trained classification/regression networks, instead of data alone.

3. Trained Neural Networks as Models

3.1. Constraints through Neural Networks

We often know about one or several properties of some system

x. With some appropriate function

F, we can check whether these properties

are actually present. In our case, such functions trained a neural network. Given a desired feature value

y as the data, a candidate system

x is judged for its adherence to the feature via a likelihood probability, i.e.,

. For continuous quantities with some given uncertainty, we often can choose the Gaussian distribution:

Here, serves as the mean of the Gaussian and N is the covariance matrix. The more certain a feature is, the narrower the distribution is centered on its mean.

In the case of discrete categories, the function

F might provide classification probabilities

for the feature of

x being in class

i. An appropriate choice for a likelihood could then be the categorical distribution:

Mathematically, this distribution describes the outcome of one trial. It does not directly encode how much we trust the estimate of the network. A simple way to introduce this is to raise this distribution to a certain power

.

Positive integer values for are equivalent to multiple consecutive draws with the same outcome, resembling the multinomial distribution. This is analogous to a narrower variance of the Gaussian in the continuous case, and we used this parameter to encode our certainty in a categorical feature.

In the case of multiple constraints, e.g.,

, we can expand the likelihood using the product rule:

Ideally, we would like to assume independence between the two constraints, as this allowed us to compile the likelihoods as independent modules. In the limiting case of certainty, i.e., a delta-likelihood, this assumption holds, as there is no conditional dependence. Therefore, in the case of small uncertainties, neglecting the dependence can be justified as well.

Otherwise, the question is where the potential dependence of the data arises. This origin determines whether the dependency needs to be explicitly modeled or whether independent likelihoods can be assumed for the different measured data features. In order to gain clarity on this question, we distinguished and discussed three different origins:

intrinsic correlations of features;

the measurement of similar or identical features;

correlations in the noise among the different feature measurements.

Intrinsic correlations of features appear when quantities of the investigated system are coupled. For example, the age and height of children are strongly correlated. However, measured heights and ages have independent likelihoods, despite the fact that the true ages of children might affect the ability to measure their height accurately (by their differing ability to stay motionless). This is the case because the measured age has no influence on the height measurement, but only the true age does. The likelihood is the probability to obtain the observed data given the property of the investigated object. The correlation of these properties is not encoded in the likelihood, they are part of the prior.

Measurement of similar or identical features requires a bit more thought. Here, one has to distinguish between independent measurements, such as measuring the height of a child with two independent devices, each having its own noise process, and dependent measurements, just as stating the same measurement twice as an extreme case of dependency. In the former case, the likelihoods of the two measurements are independent and in the latter case not, and in the extreme example of replicated data, only one likelihood should be used. If both data replications are to be used, their correlation has to be modeled in the likelihood.

Correlations in the noise among the different feature measurements need to be taken into account. In the example of measuring the age and height of children, this could for example occur if the measurements were performed with two neural networks that shared some part of the infrastructure, which would cause correlated disturbances to both measurements. Such correlated noise needs to be identified and characterized before the application, for example by analyzing the measurement and classification errors and their correlations with well-labeled training data. If this is then accurately described in terms of the joint likelihood function for both feature measurements, its effect will properly be taken into account and be corrected in the Bayesian inference. If not, unwanted bias will enter the results.

Judging whether independence of different features can be assumed or might not be delicate, as the boundary between the generative network representing the prior and the analyzing network representing elements of the likelihood could be placed at different locations in the overall network. At this stage of this research, we did not want to give a premature answer to the general problem of dependencies in the likelihood and recommend that the individual situations be analyzed on a case-by-case basis. The generic answer will hopefully be given by future research. Here, we assumed the independency of the different data likelihoods and accepted the potential error.

3.2. Deep Generative Priors

We can also encode the properties of a system within a prior probability distribution

. An equivalent representation of this distribution is a generative model

. These transform latent variables with simple distributions

into system realizations

x. Deep generative models, such as GANs and VAEs, represent complex systems that so far have evaded an explicit mathematical formulation. They acquire their capabilities through large amounts of examples within a training set. The Bayes theorem allows combining this generative prior with any further information in the form of a likelihood

with data

, posing an inference problem in terms of the latent variables of the generator.

The prior of the latent variables is the simple source distribution of the generator. Without loss of generality, we can assume a standard Gaussian distribution over the latent variable

, as this provides a convenient parametrization for inference [

19,

20]. In case the generator was originally trained on another source distribution, an appropriate transformation can be absorbed in the network architecture.

5. Demonstrations

5.1. Conditional Digit Generation

In the first example, we wanted to illustrate how our approach yielded conditional generative models. We constrained a generator of hand-written digits to a certain label, the value of the digit. As the likelihood, we used the categorical distribution, containing the trained classification network

attached to the output of the generator

. The prior for latent variables

is the source distribution of the generator, i.e., the standard Gaussian. The posterior is proportional to the product of prior and likelihood with a certain choice for

to express our confidence in the constraint.

As the generative model, we used a Wasserstein-GAN [

29,

30] with three hidden layers, a convolutional architecture, and 128 latent variables trained on the MNIST dataset [

31]. The digit classification was performed by a deep three-layer convolutional neural network [

32] trained on cross-entropy and achieving

test accuracy. We strongly enforced the constraint by setting

.

As a reference, we used a dedicatedly trained, conditional Wasserstein-GAN (cWGAN) [

12,

33]. This network used the same architecture and training routine as for the unconstrained GAN above. We provided the label information in one-hot encoding in the latent layer of the generator and in the form of an outer product with the image to the discriminator. The capabilities of the networks should therefore be comparable. We used 8000 samples for each category.

In this example, we solved the inference problem in two ways, via HMC sampling and a variational mean-field approximation. For every digit, we used eight HMC-chains to draw 80,000 samples in total, after disregarding an initial burn-in and tuning phase. We aimed for an HMC acceptance rate of

and adapted a diagonal HMC mass matrix. For every sample, we performed 10 leapfrog integration steps, and all chains were initialized at a prior sample. One chain required 36 minutes. Each chain ran on one core of an AMD EPYC 7662 64-Core Processor. To ensure convergence, we calculated the Gelman–Rubin test statistic

for all latent variables [

34]. Their mean value was

, which indicated well-converged chains. The average effective sample size was

.

For the variational mean-field approximation, we employed a modified version of the Adam optimizer [

35], as described in Kucukelbir et al. [

20]. We chose

and optimized for a total time of 600 seconds. To stochastically estimate the KL-divergence, we used five pairs of antithetic samples. Antithetic sampling [

36] allows reducing the stochasticity of the estimates on gradient and loss. Every sample drawn from the approximation was accompanied by a totally anti-correlated partner, obtained by mirroring the sample at the center of the Gaussian. As for HMC, we also used 8000 samples from the approximate posterior for our further analysis.

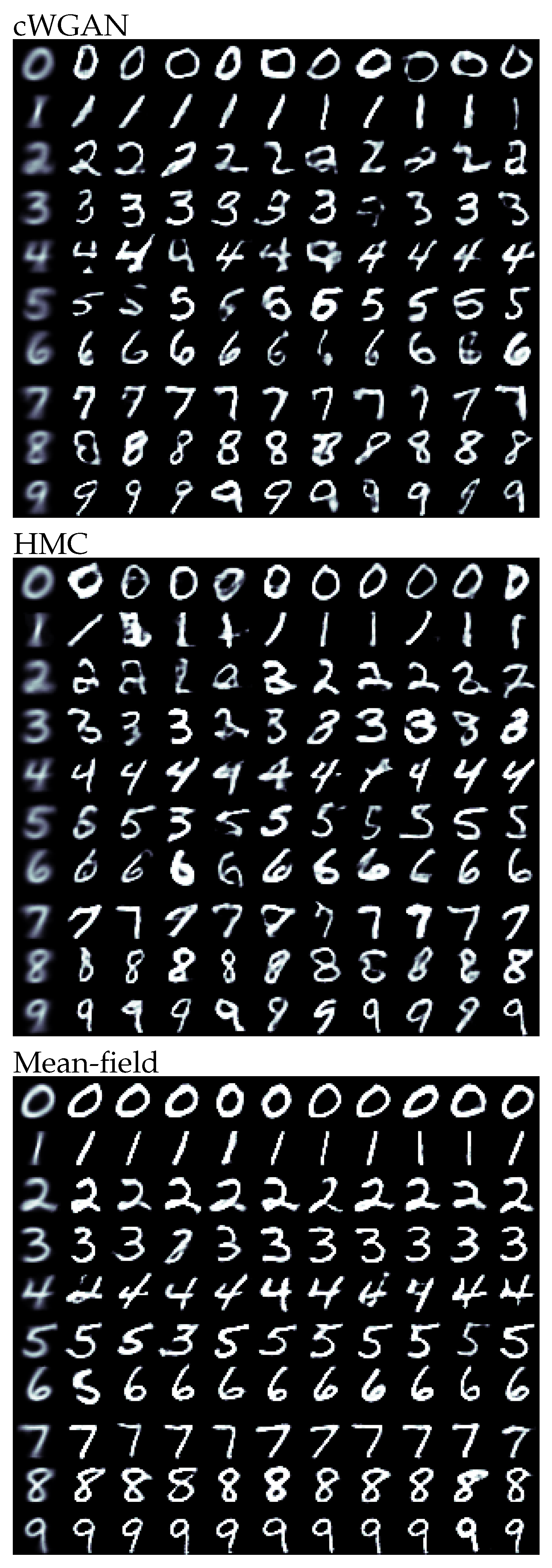

The results for all methods and digits are illustrated in

Figure 1. Visually, the HMC and cWGAN samples were almost indistinguishable. Both exhibited strong morphological variability, while satisfying the posed constraint. Compared to them, the mean-field samples were more uniform in shape, but mostly readable. As the variational approximation was unimodal and tended to underestimate the uncertainty of the posterior, it could not express all the fringe structures of the target distribution and narrow in on a typical mode.

For a more quantitative evaluation of the methods, we classified the samples using two instances of the digit classifier, the one used in the model, as well as an independently trained network with the same architecture. We compared the sample classification to the posed constraint and calculated an accuracy, which is given in

Table 1.

In addition to that, we used the Fréchet Inception Distance (FID) [

37] as a metric to evaluate the quality of the generated samples. It used a trained Inception-v3 architecture [

38] to project an ensemble of samples through several convolutional layers into a 2048-dimensional feature space. In this space, we compared the multivariate Gaussian statistics of ensembles of different origin. As a reference, we always used the original MNIST training set with the respective label, and we compared it to the samples from the various methods. Ideally, those sets were statistically indistinguishable, in which case the FID vanished. We also report this metric in

Table 1.

As indicated by the visual assessment, we found a strong similarity between cWGAN and HMC for the independently trained classifier with an overall accuracy of and , respectively. The cWGAN performed slightly better, which was not surprising as it was trained to directly perform this task. The mean-field approximation had a comparable accuracy of . When using the classifier from the model, the HMC samples were almost exclusively classified correctly. This illustrated that all network properties were inherited to the inference, including their flaws. As HMC explores all posterior features, the pathological cases will be part of the samples. Interestingly, the mean-field samples appeared to be unaffected. As the approximation settled on a typical mode, the samples were less controversial and, therefore, more robust against the employed classifier.

Regarding the Fréchet inception distance, the samples from HMC actually outperformed the cWGAN results with averages of and , respectively. The posterior samples therefore had a statistically better resemblance to the MNIST dataset. The visual impression of the more uniform samples from the mean-field approximation was reflected in the higher FID of . As expected, the approximate posterior performed worse than the full distribution. However, in some cases (for example, the digit eight), it also outperformed the cWGAN and therefore might be a valid option in many applications.

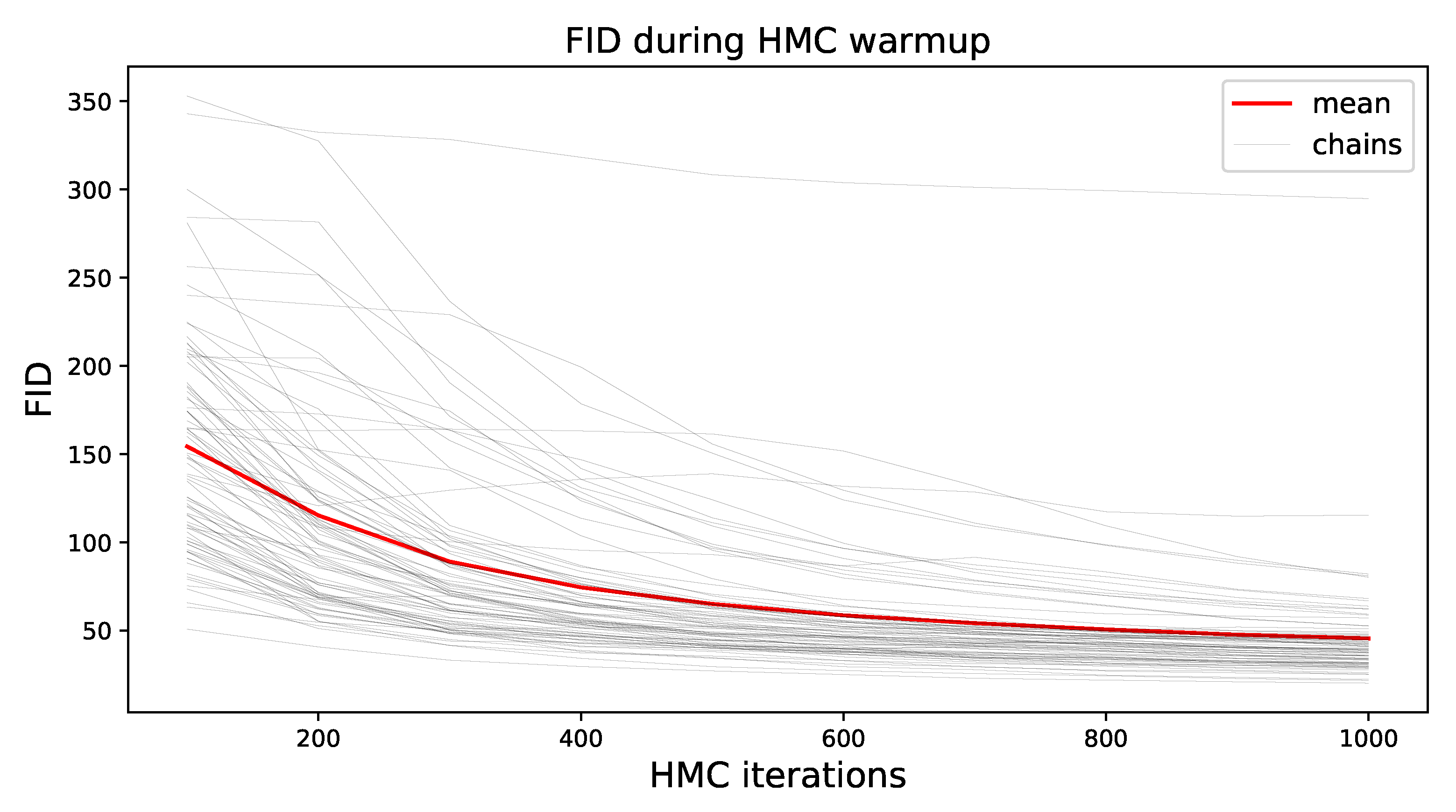

To demonstrate the convergence behavior of HMC, we show the FID of all samples during the warmup phase for all chains in

Figure 2. Most chains dropped off fast, but overall, the FID was higher in this initial phase due to burn-in, as well as strongly correlated samples. Note that a portion of the observed decrease can be attributed to the increased number of samples with time, as the estimate of the statistics became better.

5.2. Solving Riddles

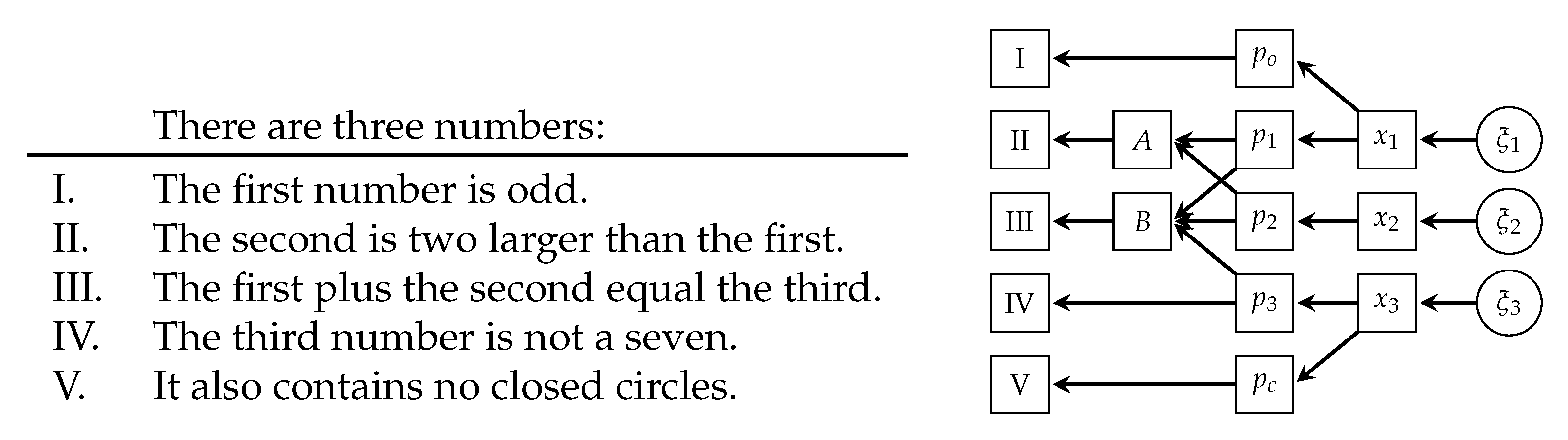

Here, we wanted to solve a riddle by enforcing multiple constraints simultaneously. We knew a priori that we were looking for three single-digit, handwritten numbers. We wanted them to fulfil the five constraints outlined on the left of

Figure 3. The only viable solution was the combination 134. In the model, the three digits were generated through three instances of the same generator used in the previous example, i.e.,

, resulting in a total of 384 latent variables in

. For each of the five constraints, we assembled a function

that checked whether it was fulfilled or not. For Constraints I, IV, and V, those corresponded to three independently trained convolutional neural networks applied to the respective digit. For the fourth constraint, we re-used the digit classification network from the previous example. The other two constraints used the same architecture, but were trained on the respective task, i.e., whether

or

, respectively.

The remaining Constraints II and III involved multiple numbers simultaneously. Both required the classification probabilities of the digits to calculate how likely they were satisfied. For every digit, this was a 10-dimensional vector. The mathematical logics were directly implemented in the model, represented by a two-tensor

A and a three-tensor

B with ones at locations corresponding to valid expressions and zeros elsewhere. Contracting these tensors with the classification probabilities provided the overall probability the constraint was fulfilled. The graphical structure of the inference problem is outlined on the right of

Figure 3.

The posterior distribution was proportional to the product of all likelihood terms and the prior (We note that Constraint IV contains redundant information, as the combination of I, II, and III already enforced the non-evenness of the third digit. This, however, was a property of the prior model (that used Arabic digits) and not of the way these properties were measured. Therefore, we can regard their measurements as independent and just multiply their likelihoods. In other words, the ability of one network to detect a seven (given that digit) was independent of the ability of another network to spot the respective constraints.).

As we were only interested in the solution of the riddle, and not the full posterior structure, we performed a variational mean-field approximation. The challenge in this case was the multi-modality, as there were many combinations of numbers that partially fulfilled the constraints. An annealing strategy [

39] partially mitigated this issue. Initially, we chose a small

to allow the optimization to explore the posterior landscape and, later on, increased it to learn the structure of the resulting mode.

Regarding the optimization scheme, we had issues with the convergence of common momentum-based stochastic optimizers and, therefore, used non-stochastic optimizers in combination with good gradient estimates, antithetic sampling, and line-search.

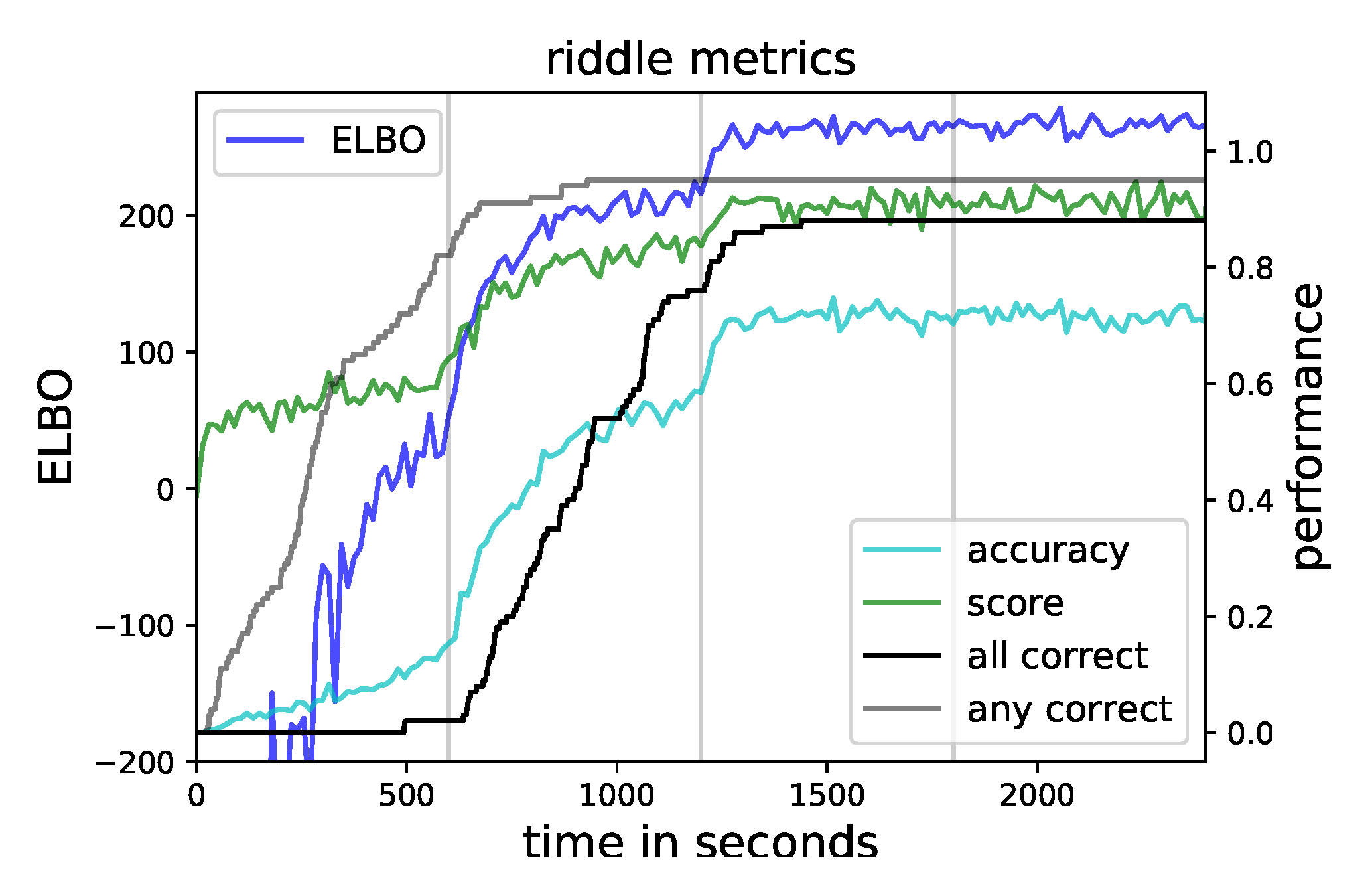

Five sample pairs were used to estimate the ELBO, its gradient, and the other quantities. The overall runtime was 2400 s. We chose and increased it after every 600 s. To illustrate the behavior quantitatively, we repeated the approximation for 100 different random seeds.

In the end, eighty-eight percent of the runs ended up with the correct solution. To track the progress during the optimization, we followed the evolution of five quantities. First, the ELBO for the final

as the overall optimization goal. Second, our accuracy was in terms of the average conditional categorical likelihood that the samples showed the correct solution. Third, the average conditional categorical likelihood over samples and constraints was a score to quantify how well the conditions were met. These quantities were between zero and one. The fourth and fifth showed cumulatively the fraction of runs that were able to achieve exclusively or any correct samples up to that time. All these quantities, averaged over the hundred runs, are shown in

Figure 4.

In the first quarter, the constraints were only weakly enforced, and the approximation explored the posterior distribution. For most runs, the first occurrence of a correct sample fell in this section, and afterwards, this line flattened. The capability to explore was in contradiction to well approximating the local mode, so only in a small number of cases, exclusively correct samples were achieved in this phase. At the end, the score was significantly above the accuracy, so although all constraints were satisfied, the solution itself was not necessarily correct.

How this is possible can be seen in the two examples shown in

Figure 5. Here, samples and their mean from two different runs are shown. The first one correctly identified the solution, whereas the second one ended up in an almost correct mode. In this, all constraints were satisfied, except that some “cheating” was involved in fulfilling Constraint IV: the last digit was an eight, drawn in such a way that it had no closed circles in order to comply with all constraints. Qualitatively, all runs that did not show the correct solution ended up in this mode. This was possible because two distinct networks were used to check for the constraints, and these samples corresponded to a fringe case in which both were satisfied. This is reminiscent of adversarial examples [

40].

To avoid this behavior, additional information could be added to the likelihood. In fact, our third constraint, i.e., the last digit not being a seven, was mathematically not necessary. Its purpose was to remove a similar local minimum to make it easier to find the correct solution. Based on this, a heuristic could be developed to iteratively add insights to wrong solutions to the problem to come up with the correct answer.

5.3. Reconstructing Faces

In the last example, we reconstructed faces from degraded, noisy, and incomplete data, making use of the additional information of age and gender. We compared them to reconstructions without this additional information, using only the image data. As the generative model of face images

, we used the stylegan architecture trained on the Flickr-Faces-HQ dataset [

41]. It generated photo-realistic images of faces at a resolution of

pixels from 512 latent parameters and contained 23 million weights. We used the ResNet-50 architecture [

42], trained on the IMDB-WIKI dataset [

43,

44] for age and gender estimates,

and

, respectively, which contained 10 million weights each.

We compiled a set of faces drawn from the generator as the ground truth and degraded them in several ways to obtain the image data. The three color channels were added up to generate a grayscale image. Then, the resolution was reduced to pixels via coarse-graining, followed by masking the left part. These steps are summarized in the linear degradation operator . Finally, Gaussian white noise with unit variance was added, providing image data and noise covariance . We obtained age and gender estimates of the ground truth by applying the corresponding classifier. To account for different input and output shapes, a re-scaling from to pixels linked the generator and classifiers.

All likelihood terms contained the generator applied to the respective operator or network. The image data entered via a Gaussian likelihood. For the age prediction, we calculated the weighted average provided by the classification probabilities, resulting in a continuous estimate. On this, we also imposed a Gaussian likelihood, centered on the true age

and assuming a standard deviation of one year, i.e.,

. The gender was constrained via a categorical likelihood with data

in favor of the respective category, and we used an

. The posterior was then proportional to:

The posterior distribution was approximated using MGVI [

28] instead of the previous mean-field approach to achieve faster convergence. We performed fifteen iterations with five antithetic sample pairs and thirty natural gradient steps, followed by another five steps with twenty-five pairs. MGVI is an iterative procedure, not directly optimizing an ELBO. The hardware used in this example was an Intel Xeon CPU E5-2680 with 2.4 GHz and an NVIDIA GeForce GTX 1080ti. One reconstruction required about 30 h.

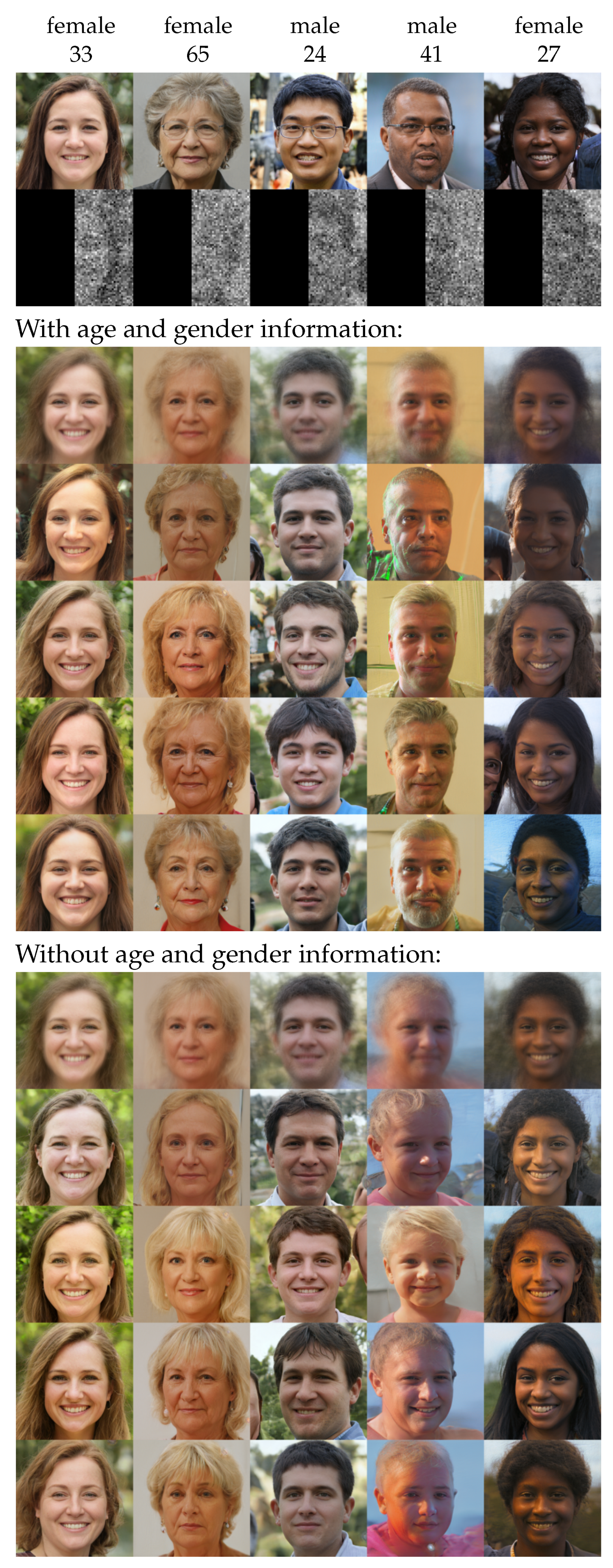

The ground truth, data, and results with and without the age and gender information for five cases are shown in

Figure 6. Overall, the sample means in both cases exhibited strong similarity with the ground truth including facial expression, posture, the background, and overall color scheme. Small-scale features, such as hairstyle and background details, were washed out. The gender seemed to be already well constrained by the image data, and adding this information did not provide much benefit.

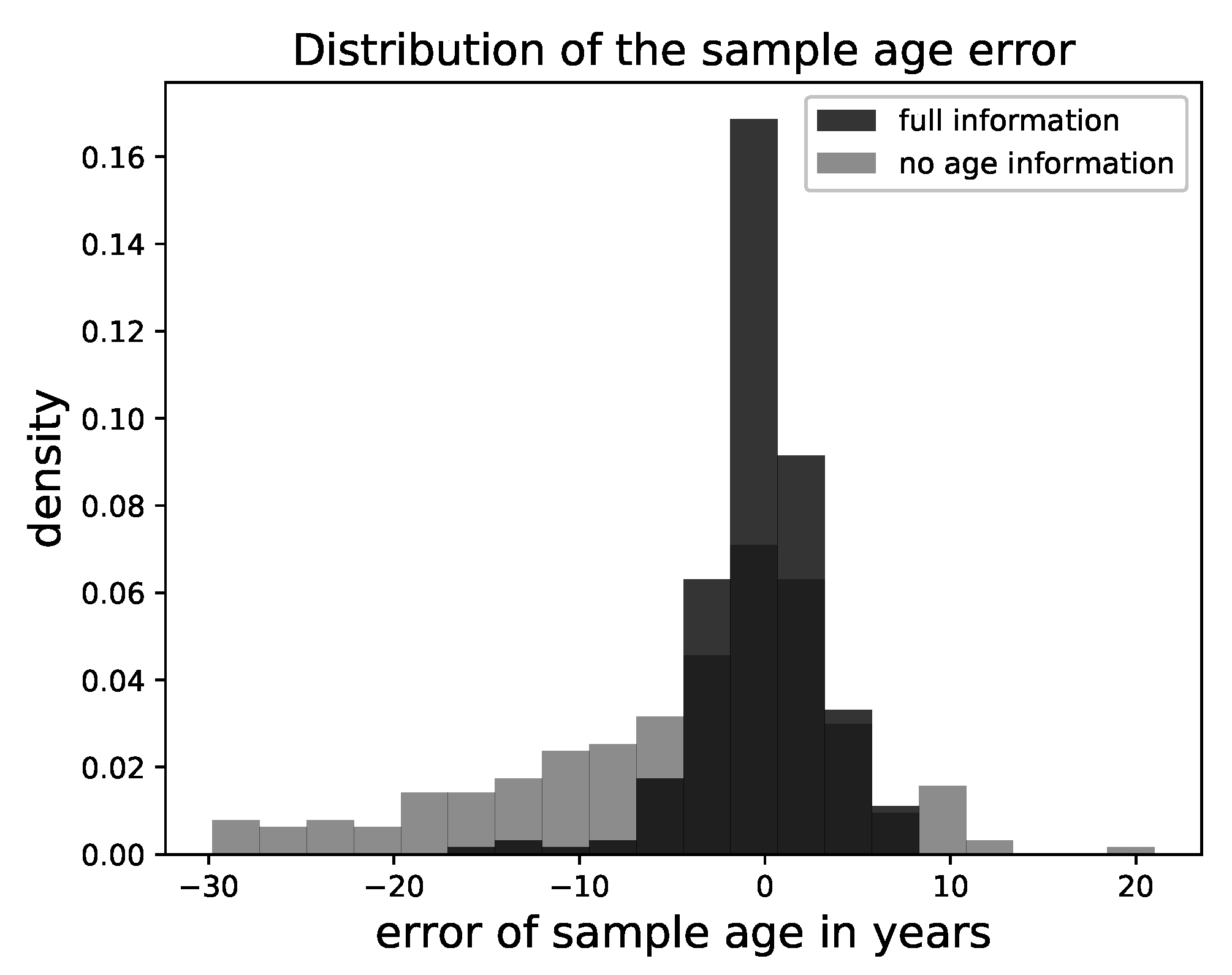

In contrast to that, the age information significantly impacted the result. In the case it was provided, the variance was visually reduced. This made sense, as the age was mostly associated with small-scale or color features, which were removed by the degradation. In

Figure 7, we show the deviation of the attributed age of the samples from the ground truth over all faces. This validated our visual impression, as the deviation was small in the case the age information was provided and the samples followed the posed constraint.

Given the bad quality of the image data, the fidelity of the reconstruction might be surprising. Every image from the generator was parametrized by 512 latent numbers, which could only describe a vanishingly small subset of all possible face images. The reconstruction was only performed within this subset, resulting in this accuracy. A more realistic generator would cover a wider range of possibilities and is expected to provide a larger variety of posterior samples and, therefore, a less accurate reconstruction.

Another issue with this approach was the inherited bias from the employed networks. If a certain feature was not sufficiently constrained by any further information, the result reproduced the prior and, therefore, the balances within the training set of the generator. In our examples, this could be observed for age, as well as ethnic features. Furthermore, the classifiers might push the results in unintended directions. However, with our approach, we could explicitly add additional information through additional networks to counteract this behavior and de-bias the results to some extent.

6. Conclusions

We demonstrated how to conditionally sample from trained generative models with the help of trained classification/regression networks. By combining several constraints, we could build a collection of neural networks that jointly solved novel tasks through reasoning and quantifying their uncertainty. The approach could built upon state-of-the-art network architectures and provided answers in the form of potentially high-dimensional posterior distributions. Information on high-level concepts could be included to support reconstructions with conventional measurement data. The optimal configuration, architectures, and inference schemes for the reasoning need to be identified by future research.

One could envision large-scale reasoning systems that flexibly answer a large variety of complex questions by assembling appropriate modules from a library of trained networks. The inherent super-exponential reach could allow building systems that derive conclusions for changing tasks. Therefore, our approach could be a step towards more generic artificial intelligence. One has to be aware of unintended biases that enter through the trained networks. These influence the reasoning, leading to potentially unintended consequences.

Furthermore, our approach posed the question of whether similar processes are at work in human/natural intelligence. The reasoning of our approach entirely depended on information that was already stored within networks, without the need for further external input. Depending on the task, generative and discriminative networks are connected on the fly. As this backward-deduction is computational expensive, repeatedly occurring tasks might not be solved this way, but are better written into forward models. Nevertheless, any new cognitive task of this kind an intelligence faces might initially be solved in the way described here, and later optimized if required.