Augmenting Paraphrase Generation with Syntax Information Using Graph Convolutional Networks

Abstract

1. Introduction

2. Background

2.1. Sequence-to-Sequence Models and Attention

2.2. Graph Convolutional Networks

- node representation: feature descriptions for all nodes summarized as the feature matrix X; and

- graph structure representation: the topology of the graph, which is typically represented in the form of an adjacency matrix A.
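Given these two inputs, a GCN layer updates every node's representation by aggregating the representations of its neighbours. The sketch below is illustrative only. It assumes the widely used propagation rule of Kipf and Welling, H' = ReLU(D^-1/2 (A + I) D^-1/2 X W), where I adds self-loops and D is the degree matrix of A + I. The weight matrix W and all function names are hypothetical, and the exact formulation used in this work may differ. Plain Python lists stand in for a tensor library.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product: rows of a times columns of b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """Return D^-1/2 (A + I) D^-1/2, the symmetric normalization with self-loops."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # node degrees in A + I (always >= 1)
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj: Matrix, x: Matrix, w: Matrix) -> Matrix:
    """One GCN layer: ReLU(A_norm X W), mixing each node's features with its neighbours'."""
    h = matmul(matmul(normalized_adjacency(adj), x), w)
    return [[max(0.0, v) for v in row] for row in h]


# Usage: a 3-node path graph 0 - 1 - 2 with 2-dimensional node features.
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
X = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
W = [[1.0], [1.0]]  # hypothetical weights projecting 2 features to 1 hidden unit
H = gcn_layer(A, X, W)
```

Stacking k such layers lets information flow along paths of up to k edges, because each layer only mixes immediate neighbours.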

Syntactic GCNs
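In the syntactic GCNs of Marcheggiani and Titov [25], the graph is a dependency tree. Each message is transformed with a weight matrix chosen by edge direction: along the head-to-dependent arc, against it, or a self-loop. The sketch below is a simplified, hypothetical version. It omits the edge-wise gates of the original formulation and keys the biases by direction rather than by dependency label. Dependency heads are given as 0-based indices, with -1 marking the root, and all names are illustrative.

```python
Vector = list[float]
Matrix = list[list[float]]


def matvec(w: Matrix, v: Vector) -> Vector:
    """Matrix-vector product with plain lists."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in w]


def dependency_edges(heads: list[int]) -> list[tuple[int, int, str]]:
    """Turn 0-based head indices (-1 = root) into (source, target, direction) triples."""
    edges = []
    for dep, head in enumerate(heads):
        edges.append((dep, dep, "self"))              # self-loop keeps the token's own state
        if head >= 0:
            edges.append((head, dep, "head_to_dep"))  # message along the dependency arc
            edges.append((dep, head, "dep_to_head"))  # message against the arc
    return edges


def syntactic_gcn_layer(h: list[Vector], heads: list[int],
                        weights: dict[str, Matrix], biases: dict[str, Vector]) -> list[Vector]:
    """h'_v = ReLU(sum over incoming edges (u, v) of W_dir h_u + b_dir); gates omitted."""
    out_dim = len(weights["self"])
    out = [[0.0] * out_dim for _ in h]
    for src, tgt, direction in dependency_edges(heads):
        message = matvec(weights[direction], h[src])
        out[tgt] = [o + m + bias for o, m, bias in zip(out[tgt], message, biases[direction])]
    return [[max(0.0, x) for x in row] for row in out]


# Usage: "dogs chase cats", where "chase" (index 1) is the root.
heads = [1, -1, 1]
h0 = [[1.0], [2.0], [3.0]]  # toy 1-dimensional token states
W_dir = {"self": [[1.0]], "head_to_dep": [[0.5]], "dep_to_head": [[0.25]]}
b_dir = {"self": [0.0], "head_to_dep": [0.0], "dep_to_head": [0.0]}
h1 = syntactic_gcn_layer(h0, heads, W_dir, b_dir)
```

Using separate matrices per direction lets the layer distinguish a token's head from its dependents. Sharing them across dependency labels keeps the parameter count small.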

3. Related Work

3.1. Linguistic Aware Methods for Paraphrase Generation

3.2. GCN for NLP

4. Methods

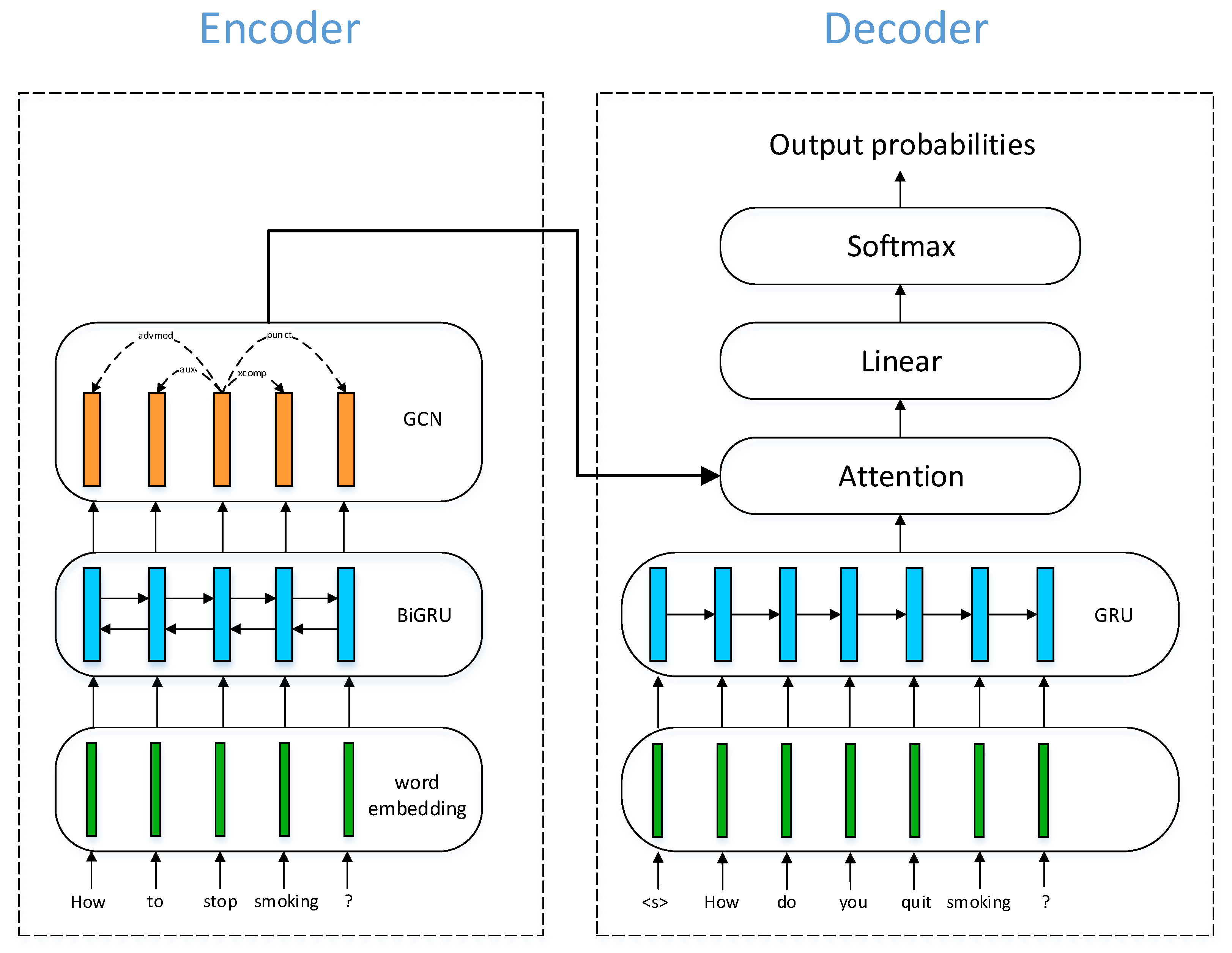

4.1. Encoder

4.2. Decoder

5. Experiments

5.1. Datasets

5.2. Training Configuration

- For the three Quora datasets, the model was evaluated on the validation set at the end of each epoch. The learning rate was halved whenever the validation loss failed to decrease for two consecutive evaluations. Early stopping was based on the BLEU score with a tolerance of 5: training was terminated if BLEU on the validation set did not improve for 5 consecutive evaluations. Since the Quora datasets are relatively small, we used a single layer in both the (bidirectional) encoder and the decoder.

- For the ParaNMT dataset, the model was evaluated every 2000 training steps. Early stopping was again based on BLEU, with a tolerance of 10, which is the minimum number of evaluations needed to cover an entire epoch. Both the encoder (again bidirectional) and the decoder had two layers.
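The two schedules described above can be sketched as a small controller called after every evaluation. The first halves the learning rate on a validation-loss plateau; the second stops training on a BLEU plateau. The class and field names are hypothetical. Resetting the loss counter after each halving is an assumption, not a detail stated in the text.

```python
from dataclasses import dataclass


@dataclass
class PlateauController:
    """Hypothetical helper: halves the LR on a loss plateau and signals early stopping on a BLEU plateau."""
    lr: float
    lr_patience: int = 2      # evaluations without a lower validation loss before halving the LR
    stop_patience: int = 5    # evaluations without a higher BLEU before stopping (10 for ParaNMT)
    best_loss: float = float("inf")
    best_bleu: float = float("-inf")
    loss_bad_evals: int = 0
    bleu_bad_evals: int = 0

    def step(self, val_loss: float, val_bleu: float) -> bool:
        """Record one evaluation; return True if training should stop."""
        if val_loss < self.best_loss:
            self.best_loss, self.loss_bad_evals = val_loss, 0
        else:
            self.loss_bad_evals += 1
            if self.loss_bad_evals >= self.lr_patience:
                self.lr /= 2                # halve the learning rate
                self.loss_bad_evals = 0     # assumption: restart the count after each halving
        if val_bleu > self.best_bleu:
            self.best_bleu, self.bleu_bad_evals = val_bleu, 0
        else:
            self.bleu_bad_evals += 1
        return self.bleu_bad_evals >= self.stop_patience


# Usage sketch: one call per evaluation (per epoch for Quora, every 2000 steps for ParaNMT).
controller = PlateauController(lr=1e-3)
for val_loss, val_bleu in [(3.0, 10.0), (3.1, 11.0), (3.2, 10.5)]:
    if controller.step(val_loss, val_bleu):
        break
```

In PyTorch, the learning-rate part roughly corresponds to `torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="min", factor=0.5, patience=1)`. That scheduler reduces the rate only once the number of bad evaluations exceeds `patience`, and by default it also applies a small relative improvement threshold.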

5.3. Evaluation Metrics

- BLEU [33] measures the n-gram overlap between a candidate translation produced by an NMT system and one or more reference translations. Suppose there are two reference translations and one candidate translation. For each word type in the candidate, the number of times it occurs in each reference is counted, and the larger of the two counts is taken as an upper bound. The count of that word in the candidate is then clipped to this upper bound. The clipped counts are summed over all candidate words, and the sum is divided by the total (unclipped) number of words in the candidate. This gives the modified precision for single words, or unigrams; the precision for longer n-grams is computed in the same way, with n typically ranging from 1 to 4. The final BLEU score is the geometric mean of these n-gram precisions multiplied by a brevity penalty, which penalizes candidates that are shorter than the references.

- METEOR [34] generalizes unigram matching. Whereas BLEU matches unigrams only by their surface form, METEOR also matches stems, synonyms, and paraphrases between the candidate and the reference.

- ROUGE [35] is commonly used to evaluate automatically generated summaries, but it is also used to assess paraphrases. Of the four types of ROUGE, ROUGE-N, which deals with n-grams, is the most pertinent to paraphrase evaluation. Suppose there are two reference sentences. For each n-gram in each reference, the number of times it occurs in the candidate is counted, and the larger of the two counts is kept. These maximum counts are summed over all n-grams and divided by the total number of n-grams in the references. We also report results with ROUGE-L, which is based on the longest common subsequence (LCS) between the candidate and the reference. All ROUGE scores presented in this work are F-measures, which balance precision and recall.
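The counting procedures above can be sketched in a few functions. This is a simplified, sentence-level illustration under stated assumptions, not the scoring scripts behind the reported results, and the function names are hypothetical:
- BLEU combines clipped n-gram precisions (n = 1 to 4) through their geometric mean and a brevity penalty, without smoothing.
- ROUGE-N and ROUGE-L are computed against a single reference and reported as F1, with precision and recall weighted equally.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Multiset of the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate: list[str], references: list[list[str]], n: int) -> tuple[int, int]:
    """BLEU's modified precision as (clipped matches, total candidate n-grams)."""
    cand = ngrams(candidate, n)
    max_ref: Counter = Counter()
    for ref in references:
        max_ref |= ngrams(ref, n)  # `|` keeps the maximum count over the references
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
    return clipped, sum(cand.values())


def sentence_bleu(candidate: list[str], references: list[list[str]], max_n: int = 4) -> float:
    """Geometric mean of clipped 1..max_n-gram precisions times a brevity penalty (no smoothing)."""
    log_precision = 0.0
    for n in range(1, max_n + 1):
        clipped, total = clipped_precision(candidate, references, n)
        if clipped == 0:
            return 0.0
        log_precision += math.log(clipped / total) / max_n
    c = len(candidate)
    # Effective reference length: the reference closest in length to the candidate.
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision)


def f1(overlap: int, cand_total: int, ref_total: int) -> float:
    """Harmonic mean of precision (overlap / cand_total) and recall (overlap / ref_total)."""
    if overlap == 0:
        return 0.0
    p, r = overlap / cand_total, overlap / ref_total
    return 2 * p * r / (p + r)


def rouge_n(candidate: list[str], reference: list[str], n: int) -> float:
    """ROUGE-N F1: n-gram co-occurrences clipped to the smaller count (`&` is multiset min)."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    return f1(sum((cand & ref).values()), sum(cand.values()), sum(ref.values()))


def lcs_length(a: list[str], b: list[str]) -> int:
    """Longest common subsequence length via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b):
            cur.append(prev[j] + 1 if x == y else max(prev[j + 1], cur[j]))
        prev = cur
    return prev[-1]


def rouge_l(candidate: list[str], reference: list[str]) -> float:
    """ROUGE-L F1 based on the longest common subsequence."""
    return f1(lcs_length(candidate, reference), len(candidate), len(reference))


cand = "the cat sat on the mat".split()
ref = "the cat is on the mat".split()
scores = sentence_bleu(cand, [ref]), rouge_n(cand, ref, 1), rouge_l(cand, ref)
```

Because there is no smoothing, a candidate that shares no 4-gram with any reference scores a BLEU of 0. This is the case for `cand` above. Sentence-level evaluations therefore usually add smoothing.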

6. Results and Discussion

6.1. Prediction Scores

6.2. Models for Comparison

- Residual LSTM [11] is the first seq2seq model proposed for paraphrase generation.

- FSET [36] is a retrieval-based method for paraphrase generation. It extracts the relations between the two sentences of a retrieved sentence pair into an edit vector, and uses this vector to edit the input sequence into a paraphrase.

- KEPN [16] employs an off-the-shelf dictionary of word-level paraphrase pairs (synonyms). It uses these synonym pairs to guide the decision of whether to generate a new word or replace a word with its synonym. In addition, it integrates word-position information through the positional encoding layer of the Transformer.

- VAE-SVG-eq [37] is a neural variational autoencoder (VAE) in which both the encoder and the decoder are conditioned on the input sentence.

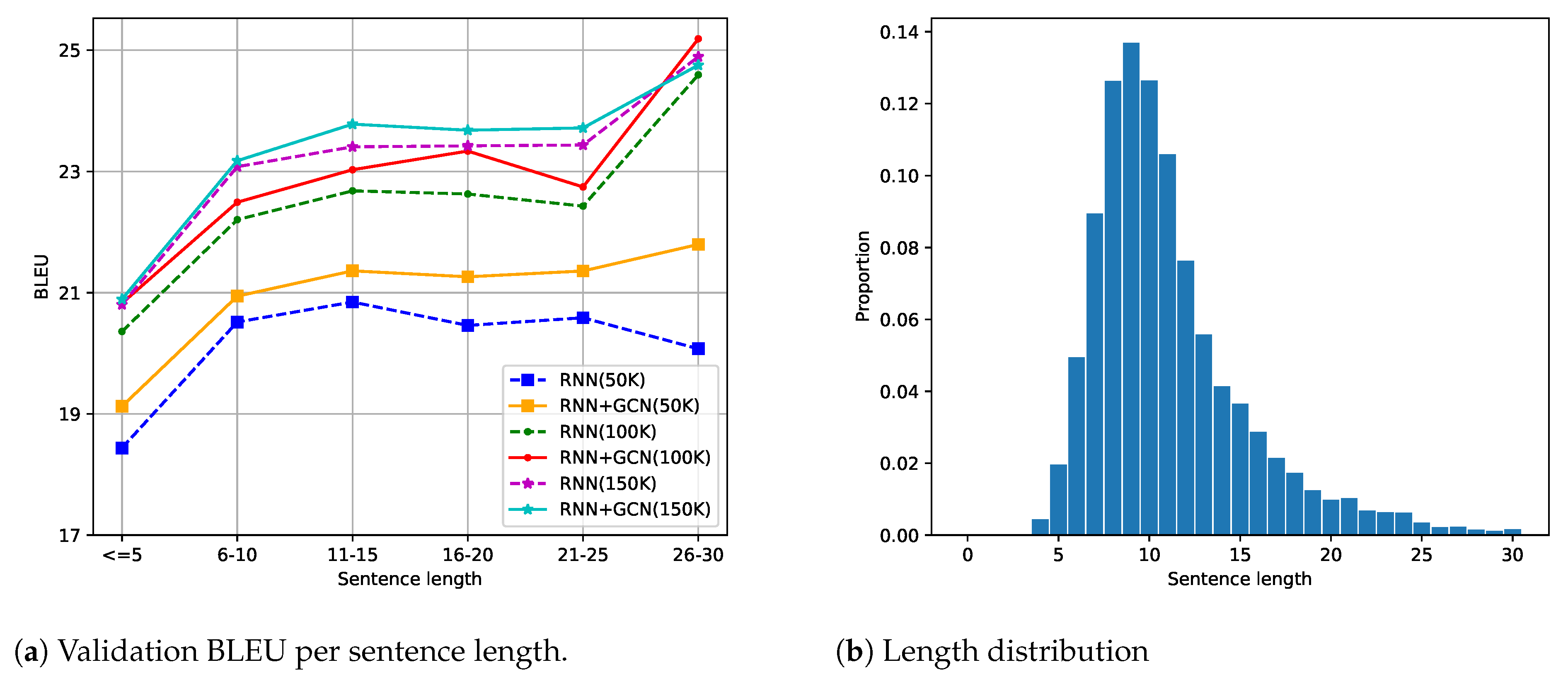

6.3. Effect of Sentence Length

6.4. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| NLP | Natural Language Processing |
| GCN | Graph Convolutional Network |
| RNN | Recurrent Neural Network |
| BiRNN | Bidirectional Recurrent Neural Network |
| GRU | Gated Recurrent Unit |
| LSTM | Long Short-Term Memory |
| NMT | Neural Machine Translation |
| CBOW | Continuous Bag-of-Words |

References

1. Androutsopoulos, I.; Malakasiotis, P. A survey of paraphrasing and textual entailment methods. J. Artif. Intell. Res. 2010, 38, 135–187.

2. Madnani, N.; Dorr, B.J. Generating phrasal and sentential paraphrases: A survey of data-driven methods. Comput. Linguist. 2010, 36, 341–387.

3. Shinyama, Y.; Sekine, S. Paraphrase Acquisition for Information Extraction. In Proceedings of the Second International Workshop on Paraphrasing, Sapporo, Japan, 11 July 2003; pp. 65–71.

4. Wallis, P. Information Retrieval Based on Paraphrase. Proceedings of PACLING Conference. Citeseer. 1993. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.11.8885&rep=rep1&type=pdf (accessed on 30 January 2021).

5. Yan, Z.; Duan, N.; Bao, J.; Chen, P.; Zhou, M.; Li, Z.; Zhou, J. Docchat: An information retrieval approach for chatbot engines using unstructured documents. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 516–525.

6. Fader, A.; Zettlemoyer, L.; Etzioni, O. Open question answering over curated and extracted knowledge bases. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 1156–1165.

7. Berant, J.; Liang, P. Semantic Parsing via Paraphrasing. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Baltimore, MD, USA, 22–27 June 2014; pp. 1415–1425.

8. Zhang, C.; Sah, S.; Nguyen, T.; Peri, D.; Loui, A.; Salvaggio, C.; Ptucha, R. Semantic sentence embeddings for paraphrasing and text summarization. In Proceedings of the 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Montreal, QC, Canada, 14–16 November 2017; pp. 705–709.

9. Zhao, S.; Meng, R.; He, D.; Andi, S.; Bambang, P. Integrating transformer and paraphrase rules for sentence simplification. arXiv 2018, arXiv:1810.11193.

10. Guo, H.; Pasunuru, R.; Bansal, M. Dynamic Multi-Level Multi-Task Learning for Sentence Simplification. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 462–476.

11. Prakash, A.; Hasan, S.A.; Lee, K.; Datla, V.; Qadir, A.; Liu, J.; Farri, O. Neural Paraphrase Generation with Stacked Residual LSTM Networks. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 13–16 December 2016; pp. 2923–2934.

12. Cao, Z.; Luo, C.; Li, W.; Li, S. Joint copying and restricted generation for paraphrase. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–10 February 2017.

13. Mallinson, J.; Sennrich, R.; Lapata, M. Paraphrasing Revisited with Neural Machine Translation. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers, Valencia, Spain, 3–7 April 2017; pp. 881–893.

14. Wieting, J.; Gimpel, K. ParaNMT-50M: Pushing the Limits of Paraphrastic Sentence Embeddings with Millions of Machine Translations. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 451–462.

15. Huang, S.; Wu, Y.; Wei, F.; Luan, Z. Dictionary-guided editing networks for paraphrase generation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6546–6553.

16. Lin, Z.; Li, Z.; Ding, N.; Zheng, H.T.; Shen, Y.; Wang, W.; Zhao, C.Z. Integrating Linguistic Knowledge to Sentence Paraphrase Generation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 8368–8375.

17. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

18. Wang, S.; Gupta, R.; Chang, N.; Baldridge, J. A task in a suit and a tie: Paraphrase generation with semantic augmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7176–7183.

19. Palmer, M.; Gildea, D.; Kingsbury, P. The proposition bank: An annotated corpus of semantic roles. Comput. Linguist. 2005, 31, 71–106.

20. Iyyer, M.; Wieting, J.; Gimpel, K.; Zettlemoyer, L. Adversarial Example Generation with Syntactically Controlled Paraphrase Networks. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; pp. 1875–1885.

21. Chen, M.; Tang, Q.; Wiseman, S.; Gimpel, K. Controllable Paraphrase Generation with a Syntactic Exemplar. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 5972–5984.

22. Kumar, A.; Ahuja, K.; Vadapalli, R.; Talukdar, P. Syntax-Guided Controlled Generation of Paraphrases. Trans. Assoc. Comput. Linguist. 2020, 8, 330–345.

23. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. In Proceedings of the 27th Conference on Neural Information Processing Systems (NIPS 2014), Montreal, QC, Canada, 8–11 December 2014; pp. 1–9.

24. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

25. Marcheggiani, D.; Titov, I. Encoding Sentences with Graph Convolutional Networks for Semantic Role Labeling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1506–1515.

26. Ma, S.; Sun, X.; Li, W.; Li, S.; Li, W.; Ren, X. Query and Output: Generating Words by Querying Distributed Word Representations for Paraphrase Generation. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; pp. 196–206.

27. Vashishth, S.; Bhandari, M.; Yadav, P.; Rai, P.; Bhattacharyya, C.; Talukdar, P. Incorporating Syntactic and Semantic Information in Word Embeddings using Graph Convolutional Networks. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3308–3318.

28. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. In Proceedings of the International Conference on Learning Representations (ICLR 2013), Scottsdale, AZ, USA, 2–4 May 2013.

29. Bastings, J.; Titov, I.; Aziz, W.; Marcheggiani, D.; Sima’an, K. Graph Convolutional Encoders for Syntax-aware Neural Machine Translation. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1957–1967.

30. Bastings, J. The Annotated Encoder-Decoder with Attention. 2018. Available online: https://bastings.github.io/annotated_encoder_decoder/ (accessed on 4 January 2021).

31. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015.

32. Caruana, R.; Lawrence, S.; Giles, C.L. Overfitting in neural nets: Backpropagation, conjugate gradient, and early stopping. In Advances in Neural Information Processing Systems, Proceedings of the 2000 Conference, Vancouver, BC, Canada, 3–8 December 2001; The MIT Press: Cambridge, MA, USA; London, UK, 2001; pp. 402–408.

33. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

34. Banerjee, S.; Lavie, A. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

35. Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

36. Kazemnejad, A.; Salehi, M.; Soleymani Baghshah, M. Paraphrase Generation by Learning How to Edit from Samples. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6010–6021.

37. Gupta, A.; Agarwal, A.; Singh, P.; Rai, P. A deep generative framework for paraphrase generation. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

| Dataset | Model | BLEU | METEOR | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|---|---|---|
| Quora50K | RNN | 20.57 (±0.12) | 47.3 (±0.14) | 48.63 (±0.15) | 28.12 (±0.15) | 46.84 (±0.13) |
| | RNN + GCN | 21.09 (±0.07) | 48.41 (±0.1) | 49.84 (±0.1) | 28.93 (±0.09) | 48.0 (±0.1) |
| Quora100K | RNN | 22.38 (±0.08) | 49.85 (±0.07) | 51.64 (±0.12) | 30.57 (±0.08) | 49.56 (±0.09) |
| | RNN + GCN | 22.74 (±0.1) | 50.59 (±0.1) | 52.72 (±0.09) | 31.42 (±0.09) | 50.67 (±0.11) |
| Quora150K | RNN | 23.19 (±0.06) | 50.89 (±0.05) | 52.86 (±0.07) | 31.71 (±0.02) | 50.7 (±0.06) |
| | RNN + GCN | 23.4 (±0.13) † | 51.4 (±0.19) | 53.68 (±0.11) | 32.31 (±0.16) | 51.53 (±0.11) |
| ParaNMT | RNN | 16.15 (±0.37) | 43.32 (±0.49) | 46.66 (±0.34) | 22.58 (±0.35) | 43.48 (±0.33) |
| | RNN + GCN | 17.09 (±0.15) | 44.52 (±0.27) | 47.65 (±0.19) | 23.5 (±0.18) | 44.49 (±0.2) |

| Model | METEOR | BLEU | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|---|---|
| Residual LSTM [11] * | 28.9 | 27.4 | - | 38.52 | - | 24.56 |
| FSET [36] | 38.57 | - | - | 51.03 | - | 33.46 |
| KEPN [16] | 30.4 | 29.2 | - | - | - | - |
| VAE-SVG-eq [37] | 33.6 | 38.3 | - | - | - | - |
| RNN (ours) | 50.88 | 23.14 | 49.0 | 27.78 | 17.72 | 11.89 |
| RNN + GCN (ours) | 51.39 | 23.34 | 49.04 | 28.13 | 17.95 | 11.99 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chi, X.; Xiang, Y. Augmenting Paraphrase Generation with Syntax Information Using Graph Convolutional Networks. Entropy 2021, 23, 566. https://doi.org/10.3390/e23050566
