Abstract

Living systems are open systems in which the laws of nonequilibrium thermodynamics play an important role. Studying living systems from the perspective of nonequilibrium thermodynamics is therefore both interesting and useful. In this review, we briefly introduce the history and current development of nonequilibrium thermodynamics, especially in biochemical systems. We first recount how the importance of studying biological systems from a thermodynamic point of view came to be recognized. We then introduce the development of stochastic thermodynamics, focusing on three landmarks: the Jarzynski equality, Crooks’ fluctuation theorem and the thermodynamic uncertainty relation. We also summarize the current theoretical framework for stochastic thermodynamics in biochemical reaction networks, especially the thermodynamic concepts and tools at the nonequilibrium steady state. Finally, we present two applications and research paradigms for the thermodynamic study of biological systems.

1. Introduction

How should a living system, e.g., a cell, be described from a physical perspective? In 1943, Erwin Schrödinger offered a systematic point of view in his lectures, which explained the basic physical logic of cellular activities. The content, which was novel and interesting, especially to people with a physics background, was later collected into the book What Is Life? [1], which became famous worldwide. In this book, he proposed that the key difference between a living cell and a “dead” system is its order. In a living cell, countless biochemical reactions occur regularly, orderly and endlessly, which does not happen in a non-living system. A system without living activity, for example a box of mixed gases placed in a fixed environment, soon relaxes to the inactive, stable, disordered thermodynamic equilibrium state, where all ordered internal activity stops and the entropy of the system reaches its maximum. A living system, in contrast, maintains an ordered and relatively steady state and never reaches equilibrium. How can this ordered steady state be maintained? According to the 2nd law of thermodynamics, the entropy of an isolated system never decreases. Hence, as Schrödinger pointed out, the key is that living cells are not isolated: they exchange energy and materials with the external environment, absorbing the energy and nutrients needed for biochemical reactions and expelling the waste and heat that the reactions generate. In terms of statistical physics, the system stays alive by remaining in a low-entropy state, absorbing “negative entropy” from the environment to offset the entropy produced by its necessary biological activities.

Schrödinger’s point of view had a great impact on biology and physics. It is now widely accepted that metabolism, i.e., the continuous consumption of nutrients and energy taken from the environment to sustain life, is the essence of living activity. This point of view was also the first to explicitly connect biological phenomena with the theory of statistical physics, and it drew the attention of many statistical physicists to systems far from equilibrium, i.e., nonequilibrium systems. Despite its success in explaining various non-living phenomena, traditional equilibrium and near-equilibrium statistical physics had long been difficult to apply to living systems. After Schrödinger proposed his idea, physicists started to realize that open nonequilibrium systems (represented by living systems) are quite complicated and that a new angle was needed.

Another revolutionary idea came later from the Brussels School. During the 1950s, Prigogine, the founder of the Brussels School, and other researchers discovered the so-called “minimal entropy production principle” [2,3,4] for systems near equilibrium. This principle states that, for systems near equilibrium where the response to a thermodynamic force is linear, the steady state (under a given thermodynamic force) is the state in which the entropy production rate is minimal. However, this principle cannot explain some complicated nonequilibrium phenomena, for example, Rayleigh-Bénard convection [5,6,7,8] and the oscillations of the Belousov–Zhabotinsky reaction [9,10,11,12,13]. These systems are maintained in a highly ordered state, so their entropy production must be high rather than minimal. A common characteristic of these systems is that the response to the thermodynamic force is highly nonlinear; this nonlinearity is why the minimal entropy production principle does not apply and why ordered patterns arise.

Since Prigogine and colleagues found that the minimal entropy production principle cannot be applied to systems arbitrarily far from equilibrium, they proposed the famous dissipative structure theory [14,15]: for systems far from equilibrium, the response functions to various thermodynamic forces go beyond the linear regime described by linear response theory and enter the nonlinear regime. In this regime, the system may be maintained in a relatively steady state (the nonequilibrium steady state) by dissipating free energy and producing entropy, and this state can exhibit complex and ordered dynamics.

Dissipative structure theory, combined with Schrödinger’s novel idea, pointed out several fundamental requirements for ordered systems including living systems in terms of statistical physics:

- The system must be open. By exchanging energy with the external environment, the system maintains its ordered steady state while free energy is dissipated and the entropy produced by nonequilibrium activities is expelled. In living systems and other chemical reaction systems, mass exchange in the form of chemical reactants is also needed.

- The system must be driven far enough from equilibrium. A system at or near equilibrium is governed by the minimal entropy production principle and cannot sustain a steady ordered state.

- The nonlinearity in the system must be strong enough. Weak nonlinearity does not lead to complex, non-trivial dynamics.

When the above requirements are satisfied, a self-organized, steady, ordered structure, i.e., a dissipative structure, may arise.

Prigogine’s theory shed light on the thermodynamics of systems far from equilibrium and its relation to complex nonlinear systems, including living systems. With a series of subsequent works on nonequilibrium thermodynamics since the 1980s, it is now possible to explore the thermodynamic rules within living systems.

2. The Development of Nonequilibrium Statistical Physics and Stochastic Thermodynamics

Modern statistical mechanics is based on stochastic theory, in which the dynamics of a system is described by a stochastic process [16,17]. When the system is near equilibrium, its dynamics consists of simple fluctuations around the equilibrium state. As introduced above, physicists have long known how to describe such fluctuations. However, universal rules applicable to systems arbitrarily far from equilibrium were hard to find until the last few decades. The landmark results are the Jarzynski equality [18,19], a series of fluctuation theorems [20,21,22,23], of which the most famous is Crooks’ fluctuation theorem [21], and the thermodynamic uncertainty relation (TUR) [24,25,26,27].

The Jarzynski equality relates the work done to the free energy change during a general isothermal process. When the system is in a given macroscopic state A, its Hamiltonian $H_A$ is fixed. When it is put into a heat bath at temperature T, according to equilibrium statistical physics, it corresponds to a definite equilibrium state in which the probability of each microscopic state follows the Boltzmann distribution, $p_A(s) = e^{-\beta H_A(s)}/Z_A$, with $H_A(s)$ the Hamiltonian for the microscopic state s, $Z_A = \sum_s e^{-\beta H_A(s)}$ the partition function, and $\beta = 1/k_B T$. The free energy of this equilibrium state, $F_A = -k_B T \ln Z_A$, is also fixed. When the macroscopic state is changed to another macroscopic state B by doing work, e.g., pulling a spring, pushing a piston, or changing the conformation of a protein complex, the Hamiltonian is changed to $H_B$. As above, macroscopic state B also corresponds to a definite equilibrium state, with free energy $F_B$. According to the 2nd law, in the thermodynamic limit, the work done during this process should be no smaller than the free energy difference $\Delta F = F_B - F_A$. However, the thermodynamic limit only applies in the ensemble sense. In each single realization of this stochastic process, the initial state of the system is drawn from the Boltzmann distribution and the system is affected by noise from the heat bath. Hence the system does not always follow the same trajectory in phase space, and the work W done in each single realization may also differ. Previous studies, including the 2nd law, actually only stated that the average work satisfies $\langle W \rangle \geq \Delta F$. Jarzynski pointed out that we should focus not only on the average work $\langle W \rangle$ but also on the distribution of work over realizations, and he found the following equality:

$$\left\langle e^{-\beta W} \right\rangle = e^{-\beta \Delta F}. \tag{1}$$

It can also be easily checked with Jensen’s inequality, $\langle e^{-\beta W} \rangle \geq e^{-\beta \langle W \rangle}$, that Equation (1) implies the 2nd law, $\langle W \rangle \geq \Delta F$.
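The equality $\langle e^{-W/k_B T} \rangle = e^{-\Delta F/k_B T}$ can be checked numerically on synthetic data. The following is a minimal sketch, assuming a hypothetical Gaussian work distribution (the mean, standard deviation and sample size are illustrative choices, not values from the literature). For Gaussian work the equality gives $\Delta F = \langle W \rangle - \mathrm{Var}(W)/(2 k_B T)$, which provides a reference value.

```python
import math
import random

def jarzynski_free_energy(work_samples, kT=1.0):
    """Estimate Delta F = -kT * ln < exp(-W / kT) > from sampled work values."""
    # Shift by the minimum work before exponentiating, for numerical stability.
    w_min = min(work_samples)
    mean_exp = sum(math.exp(-(w - w_min) / kT) for w in work_samples) / len(work_samples)
    return w_min - kT * math.log(mean_exp)

# Hypothetical Gaussian work distribution (illustrative parameters, not data).
rng = random.Random(0)
kT, mean_work, std_work = 1.0, 2.0, 1.0
samples = [rng.gauss(mean_work, std_work) for _ in range(200_000)]

delta_f_estimate = jarzynski_free_energy(samples, kT)
# For Gaussian work, the equality gives Delta F = <W> - Var(W) / (2 kT).
delta_f_exact = mean_work - std_work**2 / (2 * kT)
average_work = sum(samples) / len(samples)
```

The estimate should lie close to `delta_f_exact`, while `average_work` stays above it, as the 2nd law requires. The exponential average is dominated by rare low-work realizations, so in practice the estimator converges slowly when the work fluctuations are much larger than $k_B T$.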

The Jarzynski equality is a major advance beyond the 2nd law, and was confirmed by experiments in the 2000s [28,29,30]. For the first time, it shows how the 2nd law governs thermodynamic processes in the form of an equality rather than an inequality, and it is applicable to systems arbitrarily far from equilibrium.

Crooks’ fluctuation theorem relates the probability of a trajectory in phase space to the corresponding entropy production [21]. Suppose an amount of entropy $\Delta S$ is produced along one trajectory (the forward trajectory); then the corresponding reverse process (the reverse trajectory) produces entropy $-\Delta S$, i.e., it absorbs entropy. Denote the probability of the forward trajectory as $P_F$ and the probability of the reverse trajectory as $P_R$. When $\Delta S > 0$, according to the 2nd law, in the thermodynamic limit $P_R = 0$, i.e., the process that decreases the overall entropy never occurs. However, in small systems where stochasticity is not negligible, it can occur with a small probability. This stochasticity is described by Crooks’ fluctuation theorem, which states that in small systems the ratio $P_R / P_F$ is exponentially suppressed by $\Delta S$, i.e.,

$$\frac{P_R}{P_F} = e^{-\Delta S / k_B}. \tag{2}$$

According to this formula, the larger $\Delta S$ is, the less likely the reverse process is. This is compatible with the 2nd law, since $\Delta S$ is an extensive quantity and is proportional to the particle number. For a “macroscopic” trajectory involving around 1 mol of particles, the entropy production is of the order of $N_A k_B \sim 10^{23} k_B$, so $\Delta S / k_B$ is very large. In this sense, $P_R / P_F \approx 0$, and the reverse process is almost impossible. For a small system, $\Delta S / k_B$ is finite, so processes that “seemingly violate” the 2nd law occur more frequently. Such fluctuations, which result from stochasticity, are how the “fluctuation theorem” gets its name. This quantitative relation between the fluctuation probability and the corresponding entropy production has been confirmed by experiments [31,32,33].
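To get a feeling for the exponential suppression, the ratio $P_R / P_F = e^{-\Delta S / k_B}$ can be evaluated for a few entropy productions. This is a small illustrative sketch; the function name and the chosen values of $\Delta S$ are hypothetical.

```python
import math

def reverse_to_forward_ratio(entropy_production_in_kB):
    """Crooks ratio P_reverse / P_forward = exp(-Delta S / k_B)."""
    return math.exp(-entropy_production_in_kB)

for delta_s in (0.0, 1.0, 10.0, 100.0):
    ratio = reverse_to_forward_ratio(delta_s)
    print(f"Delta S = {delta_s:6.1f} k_B -> P_R / P_F = {ratio:.3e}")

# A macroscopic entropy production of order 1e23 k_B underflows to exactly 0.0.
macroscopic_ratio = reverse_to_forward_ratio(1e23)
```

For entropy productions of a few $k_B$, typical of single-molecule processes, the reverse trajectory is rare but observable, whereas at macroscopic scales it is indistinguishable from impossible.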

Crooks’ fluctuation theorem, just like the Jarzynski equality, is also a major advance beyond the 2nd law. In fact, in the setting of the Jarzynski equality, Crooks’ fluctuation theorem can be expressed in a simpler form in terms of the work W done in the forward process and the free energy difference $\Delta F$:

$$\frac{P_F(W)}{P_R(-W)} = e^{(W - \Delta F) / k_B T}. \tag{3}$$

These theories are among the most important breakthroughs of the last 30 years. With these theoretical tools, physicists could for the first time explore laws of statistical physics far away from equilibrium. They also indicate that only the statistical laws of mesoscopic systems, which are small enough that fluctuations are not negligible but not so small that statistical physics fails, are fundamental.

Seifert and subsequent researchers extended these theories, together with the definitions of other thermodynamic variables, to the motion of a single particle. They calculated the entropy production rate in this case and proved the corresponding fluctuation theorem [34]:

$$\frac{P(\Delta s_{\mathrm{tot}})}{P(-\Delta s_{\mathrm{tot}})} = e^{\Delta s_{\mathrm{tot}} / k_B}, \tag{4}$$

where $\Delta s_{\mathrm{tot}}$ is the entropy production of a single particle along a trajectory. This has the same form as Crooks’ fluctuation theorem. There are also other forms of fluctuation theorems, which are catalogued in a review by Seifert [23].

After these works, statistical physics can be applied to a much wider range of problems. Researchers are now able to discuss, in thermodynamic terms, not only systems at or near equilibrium but also systems arbitrarily far from equilibrium, and even systems as small as a single particle subject to an external stochastic force. Thus, a new branch named stochastic thermodynamics [23,34,35,36,37,38,39,40] was established and soon became a research hotspot in statistical physics and related fields.

With this branch established, an inequality named the “thermodynamic uncertainty relation” (TUR) was recently proposed [24,25,26,27]. It relates fluctuations to entropy production at the nonequilibrium steady state. In general, the microstates of the system can form many cycles. At the nonequilibrium steady state, there are cyclic fluxes on these cycles (see Section 3.3 below for more details on cycle theory). Quantitatively, denoting the stochastic net cyclic flux on a cycle as j and the corresponding entropy production rate on this cycle as $\sigma$, the following relation holds:

$$\frac{\mathrm{Var}(j)}{\langle j \rangle^2} \geq \frac{2 k_B}{\sigma}, \tag{5}$$

where $\mathrm{Var}(j)$ is the variance of j and $\langle j \rangle$ is the average of j. Clearly, if the entropy production rate is increased, the lower bound on the relative fluctuation of the cyclic flux is reduced. This relation can also be written in integral form over a finite time interval $\tau$:

$$\frac{\mathrm{Var}(n_\tau)}{\langle n_\tau \rangle^2} \geq \frac{2 k_B}{\Delta S_\tau}, \tag{6}$$

where $n_\tau$ is the net number of times the cycle is completed in the time interval $\tau$, $\langle n_\tau \rangle$ is its average, and $\Delta S_\tau$ is the entropy produced in $\tau$. These relations are called thermodynamic uncertainty relations (TURs).

The TUR shows how stochastic dynamic quantities (fluctuations) are related to thermodynamic quantities (entropy production rates). Increasing the entropy production rate allows the noise of the dynamics to be suppressed; conversely, a low entropy production rate forces the relative fluctuation to be large. The TUR also reveals that the fluctuation and the entropy production rate cannot both be made arbitrarily small at the same time. This relation is attracting more and more attention, and is one of the most important theoretical results in this field in recent years.
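The trade-off between precision and dissipation can be made concrete with the simplest possible model. The sketch below assumes a single cycle that is completed forward at rate `k_plus` and backward at rate `k_minus`, as two independent Poisson processes; this toy model and its parameter values are illustrative assumptions, not taken from the cited works. In this model each net cycle produces entropy $k_B \ln(k_+/k_-)$, and the product of the squared relative fluctuation and the entropy produced (in units of $k_B$) should never fall below 2.

```python
import math

def tur_product(k_plus, k_minus, tau=1.0):
    """Return Var(n) / <n>^2 * (Delta S / k_B) for a one-cycle Poisson model."""
    mean_n = (k_plus - k_minus) * tau   # average net number of completed cycles
    var_n = (k_plus + k_minus) * tau    # independent Poisson counts: variances add
    entropy_in_kB = mean_n * math.log(k_plus / k_minus)  # ln(k+/k-) per net cycle
    return var_n / mean_n**2 * entropy_in_kB

# Hypothetical driving strengths k_plus / k_minus, from near to far from equilibrium.
products = {ratio: tur_product(ratio, 1.0) for ratio in (1.01, 2.0, 10.0, 1000.0)}
```

In this model the bound is approached close to equilibrium (`k_plus` only slightly larger than `k_minus`) and becomes loose under strong driving, and the product does not depend on the observation time `tau`.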

3. Nonequilibrium Steady State in Chemical Reaction Networks and Its Thermodynamics

As introduced above, a self-organized dissipative structure maintains its ordered state for a relatively long time, although it is far from equilibrium. The thermodynamic rules and properties of such steady states, i.e., nonequilibrium steady states (NESSs), are therefore of great interest to physicists, especially biophysicists. The thermodynamic laws and methodologies for NESSs, especially biochemical NESSs, are of great importance for studying the thermodynamic properties of living systems. Here we introduce the current thermodynamic theories of chemical reaction networks.

3.1. Description of Chemical Reactions with Stochastic Process

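In the framework of stochastic processes, chemical reactions are treated as Markov processes described by chemical master equations (CMEs) [41,42]. Consider a general chemical reaction:

$$\sum_i a_i X_i \; \underset{k_-}{\overset{k_+}{\rightleftharpoons}} \; \sum_i b_i X_i,$$

where $X_i$ is the i-th component, and $a_i$ and $b_i$ are the corresponding stoichiometric coefficients on the reactant and product sides. Denoting by $n_i$ the molecule number of $X_i$, the microscopic state of the system can be represented by $\mathbf{n} = (n_1, n_2, \ldots)$. When the forward reaction happens, $n_i$ is changed to $n_i - a_i + b_i$, with a reaction frequency proportional to the probability that the reactant molecules collide, which is in turn proportional to

$$\prod_i \frac{n_i!}{(n_i - a_i)!}.$$

Similarly, when the reverse reaction happens, $n_i$ is changed to $n_i + a_i - b_i$, with a reaction frequency proportional to

$$\prod_i \frac{n_i!}{(n_i - b_i)!}.$$

Hence, taking into account other effects such as the volume, the corresponding CME describing the evolution of the system’s probability distribution $P(\mathbf{n}, t)$ can be written as [41]

$$\frac{\partial P(\mathbf{n}, t)}{\partial t} = k_+ V \left( \prod_i \mathbb{E}_i^{a_i - b_i} - 1 \right) \prod_i \frac{n_i!}{(n_i - a_i)! \, V^{a_i}} P(\mathbf{n}, t) + k_- V \left( \prod_i \mathbb{E}_i^{b_i - a_i} - 1 \right) \prod_i \frac{n_i!}{(n_i - b_i)! \, V^{b_i}} P(\mathbf{n}, t),$$

where V is the volume and $\mathbb{E}_i$ is the “step operator”, defined by its effect on an arbitrary function f: $\mathbb{E}_i f(\ldots, n_i, \ldots) = f(\ldots, n_i + 1, \ldots)$.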
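The combinatorial factor that sets the reaction frequency is easy to compute directly. The following sketch assumes the usual volume scaling $k V \prod_i n_i! / [(n_i - s_i)! \, V^{s_i}]$ for one reaction direction, where $s_i$ is the number of molecules of species i consumed; the function name and the example numbers are hypothetical.

```python
import math

def propensity(rate_constant, counts, stoich, volume):
    """Propensity k * V * prod_i n_i! / ((n_i - s_i)! * V**s_i) of one reaction direction."""
    value = rate_constant * volume
    for n, s in zip(counts, stoich):
        if n < s:
            return 0.0  # not enough molecules of this species for the reaction to fire
        # math.perm(n, s) = n! / (n - s)!, the number of ordered choices of s reactants.
        value *= math.perm(n, s) / volume**s
    return value

# Hypothetical example: 2 X1 + X2 -> products, with 10 copies of X1 and 5 of X2.
example = propensity(1.0, counts=(10, 5), stoich=(2, 1), volume=1.0)
```

The falling factorial rather than a plain power $n^s$ matters at low copy numbers: with a single molecule of $X_1$ the dimerization above cannot occur at all, and the propensity correctly vanishes.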

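If the system consists of m reactions ($\rho = 1, \ldots, m$) forming a chemical reaction network (CRN),

$$\sum_i a_i^{\rho} X_i \; \underset{k_-^{\rho}}{\overset{k_+^{\rho}}{\rightleftharpoons}} \; \sum_i b_i^{\rho} X_i, \qquad \rho = 1, \ldots, m,$$

the CME that describes this CRN adds up the terms corresponding to each reaction, i.e.,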

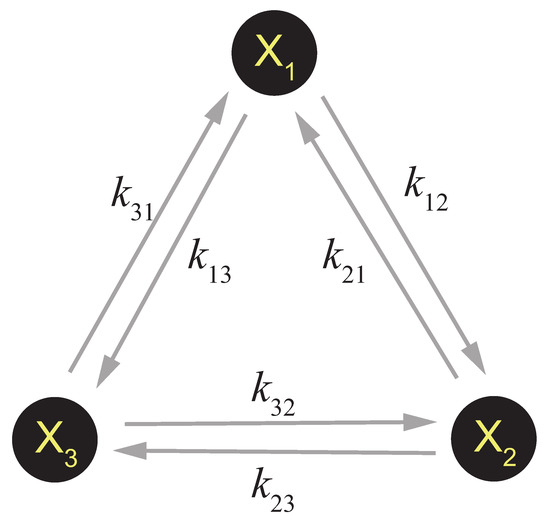

This is how a general CRN is described. Sometimes the description can be simplified. If the reaction system consists only of unimolecular reactions (for example, a CRN that describes the modification or allosteric transition of a single protein complex), a simplified framework can be applied [36]. As shown in Figure 1, suppose the system consists of three components A, B and C, which can be converted into each other by unimolecular reactions, i.e.,

$$A \underset{k_{-1}}{\overset{k_1}{\rightleftharpoons}} B, \qquad B \underset{k_{-2}}{\overset{k_2}{\rightleftharpoons}} C, \qquad C \underset{k_{-3}}{\overset{k_3}{\rightleftharpoons}} A.$$

Figure 1.

A simple 3-component unimolecular CRN.

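The dynamics of this system can be regarded as a single molecule jumping between the three states A, B and C, with the steady-state probability of occupying each state equal to the steady-state fraction of the corresponding component. Writing $k_1$, $k_2$, $k_3$ for the rates of the clockwise transitions A→B, B→C, C→A and $k_{-1}$, $k_{-2}$, $k_{-3}$ for the rates of their reverse transitions, the evolution of the probabilities $p_A$, $p_B$, $p_C$ is governed by the following master equation:

$$\begin{aligned} \frac{dp_A}{dt} &= k_{-1} p_B + k_3 p_C - (k_1 + k_{-3}) p_A, \\ \frac{dp_B}{dt} &= k_1 p_A + k_{-2} p_C - (k_{-1} + k_2) p_B, \\ \frac{dp_C}{dt} &= k_2 p_B + k_{-3} p_A - (k_{-2} + k_3) p_C, \end{aligned}$$

which has a much simpler form than the general Equation (10).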
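A three-state master equation of this kind can be integrated numerically in a few lines. The sketch below assumes the states are labelled A, B, C and the rates are ordered as (A→B, B→A, B→C, C→B, C→A, A→C); these labels, the explicit Euler scheme and the rate values are illustrative assumptions rather than the notation of the original figure.

```python
def three_state_step(p, k, dt):
    """One explicit Euler step of the master equation for the cycle A <-> B <-> C <-> A."""
    pA, pB, pC = p
    k1, km1, k2, km2, k3, km3 = k  # A->B, B->A, B->C, C->B, C->A, A->C
    dA = km1 * pB + k3 * pC - (k1 + km3) * pA
    dB = k1 * pA + km2 * pC - (km1 + k2) * pB
    dC = k2 * pB + km3 * pA - (km2 + k3) * pC
    return (pA + dt * dA, pB + dt * dB, pC + dt * dC)

def relax_to_steady_state(k, p0=(1.0, 0.0, 0.0), dt=1e-3, steps=50_000):
    """Iterate Euler steps from p0; returns the distribution after steps * dt time units."""
    p = p0
    for _ in range(steps):
        p = three_state_step(p, k, dt)
    return p

rates = (2.0, 1.0, 2.0, 1.0, 2.0, 1.0)  # hypothetical rate constants
p_ss = relax_to_steady_state(rates)
```

With these symmetric rates the steady state is uniform, yet the clockwise and counter-clockwise rates differ, so a net probability current keeps circulating. The time step must be small compared with the inverse of the largest rate for the Euler scheme to remain stable.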

In summary, the dynamics of a CRN can generally be regarded as the system jumping between different microscopic states via Markov processes, and can be described by a CME. In fact, this stochastic description was proposed much earlier than the research on NESSs and stochastic thermodynamics, and had already led to many important applications, for example the exact stochastic simulation of chemical reactions with the Gillespie algorithm [43].
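As an illustration of how such a simulation works, here is a minimal sketch of Gillespie’s direct method for a reversible isomerization A ⇌ B. The reaction, rate constants, copy numbers and function name are hypothetical choices made for brevity.

```python
import random

def gillespie_isomerization(n_a, n_b, k_f, k_r, t_end, rng):
    """Simulate A <-> B with Gillespie's direct method; return the final (n_a, n_b)."""
    t = 0.0
    while True:
        a_forward = k_f * n_a   # propensity of A -> B
        a_reverse = k_r * n_b   # propensity of B -> A
        a_total = a_forward + a_reverse
        if a_total == 0.0:
            break               # no reaction can fire
        t += rng.expovariate(a_total)  # exponentially distributed waiting time
        if t > t_end:
            break
        # Choose which reaction fires, with probability proportional to its propensity.
        if rng.random() * a_total < a_forward:
            n_a, n_b = n_a - 1, n_b + 1
        else:
            n_a, n_b = n_a + 1, n_b - 1
    return n_a, n_b

rng = random.Random(1)
finals = [gillespie_isomerization(100, 0, 1.0, 1.0, 10.0, rng) for _ in range(200)]
mean_a = sum(n_a for n_a, _ in finals) / len(finals)
```

Each run is one exact sample path of the CME, so averaging many runs recovers the distribution that the master equation describes; here the mean number of A molecules settles near half of the total because the two rate constants are equal.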

3.2. Thermodynamic Quantities in Chemical Reaction Networks Out of Equilibrium

How should thermodynamic quantities be defined in chemical reaction systems? Although they are well defined in equilibrium statistical mechanics, these definitions are traditionally only proved to hold at equilibrium. Fortunately, some of them can be extended to nonequilibrium systems. The extension was rigorously established by Ge and Qian [44] and is partly reproduced below. Their original work ignored the case where two microstates are connected by multiple reactions, so the content below is slightly modified. It should be noted that the extension naturally reduces to the standard equilibrium definitions of thermodynamics, similarly to what happens in other literature [45].

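Consider a general CRN in which any two microscopic states i and j may be connected by reactions. The dynamics of this CRN is governed by the following CME:

$$\frac{dp_i}{dt} = \sum_{j, \rho} \left( k_{ji}^{\rho} p_j - k_{ij}^{\rho} p_i \right),$$

with $p_i$ the probability of state i and $k_{ij}^{\rho}$ the rate of the $\rho$-th reaction that switches the system from state i to state j. Mathematically, it can be proved that for a quite wide range of CRNs there exists a unique steady-state distribution $p_i^{ss}$. Hence the internal energy of each microstate can be defined self-consistently:

$$u_i = -k_B T \ln p_i^{ss},$$

and the total internal energy is $U = \sum_i p_i u_i$. The entropy is defined as the Gibbs entropy:

$$S = -k_B \sum_i p_i \ln p_i,$$

thus the free energy is

$$F = U - TS = k_B T \sum_i p_i \ln \frac{p_i}{p_i^{ss}}.$$

Taking the time derivatives of F and S shows how the free energy and entropy vary over time:

$$\frac{dF}{dt} = \frac{dU}{dt} + h_d - T \sigma$$

and

$$\frac{dS}{dt} = \sigma - \frac{h_d}{T},$$

where

$$\sigma = \frac{k_B}{2} \sum_{i, j, \rho} \left( k_{ij}^{\rho} p_i - k_{ji}^{\rho} p_j \right) \ln \frac{k_{ij}^{\rho} p_i}{k_{ji}^{\rho} p_j} \tag{18}$$

is the entropy production rate of the chemical reactions, which is never negative, and $h_d = \frac{k_B T}{2} \sum_{i, j, \rho} \left( k_{ij}^{\rho} p_i - k_{ji}^{\rho} p_j \right) \ln \left( k_{ij}^{\rho} / k_{ji}^{\rho} \right)$ is the heat dissipation rate, describing how much heat is dissipated and expelled to the external environment.

At steady state, $dF/dt = dS/dt = dU/dt = 0$, so clearly $T \sigma = h_d$. This indicates that, when $\sigma = 0$, the system is at equilibrium and there is no heat exchange with the external environment. When $\sigma > 0$, the system is driven to a NESS, continuously dissipating energy at a rate $T \sigma$ and expelling the produced entropy to the external environment at a rate $\sigma$ in the form of heat. Therefore, by energy conservation, the free energy dissipation rate of the system is

$$W = T \sigma = h_d.$$

In some literature, it is also called the “housekeeping heat” [44], a name suggesting that it is the energy input necessary to maintain such a NESS. In addition, $W > 0$ for a NESS, which is in accordance with the theories of Schrödinger and Prigogine.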
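The entropy production rate of a Markov network can be evaluated directly from the rates and the state probabilities. The sketch below assumes the standard expression in which each reversible pair of transitions contributes (forward flux − backward flux) × ln(forward flux / backward flux), in units of $k_B$, and, for simplicity, at most one reaction per pair of states. The data layout (a dictionary keyed by state pairs) is a hypothetical choice.

```python
import math

def entropy_production_rate(rates, p):
    """Entropy production rate in units of k_B for a Markov network.

    rates maps (i, j) to the transition rate from state i to state j;
    every listed transition is assumed to have a listed reverse transition.
    """
    total = 0.0
    for (i, j), k_ij in rates.items():
        if i < j:  # visit each reversible pair once
            forward = k_ij * p[i]
            backward = rates[(j, i)] * p[j]
            total += (forward - backward) * math.log(forward / backward)
    return total

# Hypothetical two-state system at equilibrium (detailed balance holds).
two_state = {(0, 1): 2.0, (1, 0): 1.0}
sigma_equilibrium = entropy_production_rate(two_state, (1 / 3, 2 / 3))

# Hypothetical driven three-state cycle at its uniform steady state.
cycle = {(0, 1): 2.0, (1, 0): 1.0, (1, 2): 2.0, (2, 1): 1.0, (2, 0): 2.0, (0, 2): 1.0}
sigma_ness = entropy_production_rate(cycle, (1 / 3, 1 / 3, 1 / 3))
```

Every term has the form $(x - y) \ln(x / y)$, which is non-negative for positive x and y, so the non-negativity of the entropy production rate is visible term by term. The function assumes strictly positive fluxes; an irreversible transition would make the logarithm diverge.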

When the system’s microscopic states are so close together that they can be described by continuous variables $\mathbf{x}$ (for example, in the thermodynamic limit where the total molecule number goes to infinity, the system is described by its concentrations), the CME can be approximated by a Fokker-Planck equation [41,46]:

$$\frac{\partial P(\mathbf{x}, t)}{\partial t} = -\sum_{\rho} \frac{\partial J_{\rho}}{\partial x_{\rho}}, \qquad J_{\rho} = f_{\rho} P - D_{\rho} \frac{\partial P}{\partial x_{\rho}},$$

with $\rho$ the reaction index; the sum runs over all possible chemical reactions. $J_{\rho}$ is the chemical flux resulting from the $\rho$-th reaction, with $x_{\rho}$ the generalized coordinate along the direction of this reaction, and $f_{\rho}$ and $D_{\rho}$ the corresponding probability drift force and diffusion constant. Note that $x_{\rho}$ may differ from every $x_i$, because the direction of the reaction may not be parallel to any of the coordinate axes. For example, a system consisting of n components can be described by the concentration $x_i$ of each component, thus $\mathbf{x} = (x_1, \ldots, x_n)$. Suppose there is a reaction $X_1 \rightleftharpoons X_2$, whose forward reaction decreases $x_1$ and increases $x_2$ by equal amounts. The generalized coordinate of this reaction lies along the direction $(-1, 1, 0, \ldots, 0)$, which is not parallel to any of the $x_i$ axes.

3.3. Cycle Theory and the Break of Detailed Balance

As stated above, a CRN generally has a steady state. However, the steady state has different physical meanings in different cases. If the reaction rates in the CRN satisfy the so-called detailed balance condition, i.e., each forward probability flux of a reaction is cancelled by the reverse probability flux of the corresponding reverse reaction, the steady state is the equilibrium state and there is no net probability flux in the system. If the detailed balance condition is not satisfied, net probability fluxes arise and entropy is continuously produced. For a system without a source or a sink, such a net flux must be a cyclic flux. There has been much work on such cyclic fluxes since Hill [48], and it is widely accepted that the existence of cyclic flux is one of the most important signatures of a nonequilibrium system.

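The system shown in Figure 1 is a simple case that illustrates this concept. Label the three states A, B and C, with $k_1$, $k_2$, $k_3$ the rates of the clockwise transitions A→B, B→C, C→A and $k_{-1}$, $k_{-2}$, $k_{-3}$ the rates of their reverse transitions. Writing $J_i^{+}$ and $J_i^{-}$ for the forward and reverse probability fluxes of the i-th reaction (e.g., $J_1^{+} = k_1 p_A$ and $J_1^{-} = k_{-1} p_B$), by definition the net probability flux of each reaction and the thermodynamic force are [48]

$$J_i = J_i^{+} - J_i^{-}, \qquad \Delta\mu_i = k_B T \ln \frac{J_i^{+}}{J_i^{-}}.$$

According to Equation (18), the entropy production rate of each chemical reaction is $J_i \Delta\mu_i / T$. At steady state the fluxes satisfy $J_1 = J_2 = J_3 \equiv J$. Hence the total free energy dissipation rate at steady state is

$$W = \sum_{i=1}^{3} J_i \Delta\mu_i = J \, k_B T \ln \gamma, \tag{23}$$

with $\gamma = k_1 k_2 k_3 / (k_{-1} k_{-2} k_{-3})$.

Each factor in Equation (23) has a clear physical meaning. J is the net cyclic flux in the cycle. When $\gamma > 1$ there is a clockwise net flux, $J > 0$, and when $\gamma < 1$ there is a counter-clockwise net flux, $J < 0$. $\gamma$ is the ratio of the clockwise rate product to the counter-clockwise rate product, and $k_B T \ln \gamma$ is the thermodynamic force of the cycle. When detailed balance is satisfied and $J_i^{+} = J_i^{-}$ holds for every reaction, $\gamma = 1$, so both the net cyclic flux and the thermodynamic force are 0; the entropy production rate is then 0 and the system is at equilibrium. When detailed balance is broken, both the net cyclic flux and the thermodynamic force arise, $\gamma \neq 1$, and the positive entropy production rate indicates that the system is in a nonequilibrium state. Hence, $\gamma \neq 1$ is equivalent to the breaking of detailed balance.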
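For a single three-state cycle, the steady state, the net flux and the dissipation rate can all be written in closed form. The sketch below assumes states A, B, C with clockwise rates `k1`, `k2`, `k3` (A→B, B→C, C→A) and reverse rates `km1`, `km2`, `km3`; these labels and the test values are illustrative assumptions. The steady-state weights are obtained by summing products of rates over the spanning trees directed toward each state.

```python
import math

def cycle_thermodynamics(k1, km1, k2, km2, k3, km3, kT=1.0):
    """Steady-state net flux, rate-product ratio and dissipation rate of a three-state cycle."""
    # Unnormalized steady-state weights (sums over spanning trees directed to each state).
    wA = k2 * k3 + km1 * k3 + km1 * km2
    wB = k3 * k1 + km2 * k1 + km2 * km3
    wC = k1 * k2 + km3 * k2 + km3 * km1
    norm = wA + wB + wC
    pA, pB = wA / norm, wB / norm
    flux = k1 * pA - km1 * pB                    # net clockwise flux J, equal on every edge
    gamma = (k1 * k2 * k3) / (km1 * km2 * km3)   # clockwise over counter-clockwise rate product
    dissipation = flux * kT * math.log(gamma)    # J * k_B T * ln(gamma)
    return flux, gamma, dissipation

driven = cycle_thermodynamics(2.0, 1.0, 2.0, 1.0, 2.0, 1.0)
balanced = cycle_thermodynamics(1.0, 1.0, 1.0, 1.0, 1.0, 1.0)
reversed_drive = cycle_thermodynamics(1.0, 2.0, 1.0, 2.0, 1.0, 2.0)
```

Reversing the drive flips the sign of both the flux and the force, so their product, the dissipation rate, stays positive; it vanishes only when the rate-product ratio equals 1.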

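How is the thermodynamic force introduced and detailed balance broken in a realistic system? Qian illustrated the answer with a simple example in a review [36]. In short, when detailed balance is broken, the network must be coupled to reactions involving molecules whose amounts are controlled by external constraints or regulation. For example, in the three-state cycle A ⇌ B ⇌ C ⇌ A shown in Figure 1, with clockwise rates $k_1$, $k_2$, $k_3$ and reverse rates $k_{-1}$, $k_{-2}$, $k_{-3}$, suppose the reaction $A \rightleftharpoons B$ is actually coupled with an ATP hydrolysis reaction and the real elementary reaction is

$$A + \mathrm{ATP} \; \underset{k_{-1}^{0}}{\overset{k_1^{0}}{\rightleftharpoons}} \; B + \mathrm{ADP} + \mathrm{Pi},$$

where the concentration of inorganic phosphate Pi can be considered constant and is ignored. The effective rates and the elementary rates are related by $k_1 = k_1^{0} [\mathrm{ATP}]$, $k_{-1} = k_{-1}^{0} [\mathrm{ADP}]$. By definition, the reaction potential of each reaction is $\Delta\mu_i = k_B T \ln (J_i^{+} / J_i^{-})$, with $J_i^{\pm}$ the forward and reverse probability fluxes, and it is easy to show that the thermodynamic force over a cycle is $\Delta\mu = \sum_i \Delta\mu_i = k_B T \ln \gamma$, with $\gamma = k_1 k_2 k_3 / (k_{-1} k_{-2} k_{-3})$. In the absence of external regulation, the system is closed and must satisfy detailed balance, thus $\gamma = 1$ and

$$\left( \frac{[\mathrm{ATP}]}{[\mathrm{ADP}]} \right)_{eq} = \frac{k_{-1}^{0} k_{-2} k_{-3}}{k_1^{0} k_2 k_3}.$$

When external regulation takes place and fixes the concentration ratio $[\mathrm{ATP}]/[\mathrm{ADP}]$ at a constant value different from $([\mathrm{ATP}]/[\mathrm{ADP}])_{eq}$, the rate ratio $k_1 / k_{-1}$ is effectively tuned, thus

$$\gamma = \frac{k_1^{0} k_2 k_3 \, [\mathrm{ATP}]}{k_{-1}^{0} k_{-2} k_{-3} \, [\mathrm{ADP}]} = \frac{[\mathrm{ATP}]/[\mathrm{ADP}]}{([\mathrm{ATP}]/[\mathrm{ADP}])_{eq}} \neq 1$$

and $\Delta\mu \neq 0$. Therefore, for a general reaction coupled with ATP hydrolysis, e.g., phosphorylation, tuning the concentration ratio between ATP and ADP tunes the thermodynamic force on a cycle and can break detailed balance. In addition, it can be checked that

$$k_B T \ln \gamma = \Delta\mu^{0} + k_B T \ln \frac{[\mathrm{ATP}]}{[\mathrm{ADP}]},$$

where $\Delta\mu^{0} = -k_B T \ln ([\mathrm{ATP}]/[\mathrm{ADP}])_{eq}$ is the ATP hydrolysis energy under standard conditions. Therefore, $k_B T \ln \gamma$ is exactly the free energy that one ATP molecule releases by hydrolysis under the given conditions. This important result shows explicitly how an external energy source is pumped in to drive the system.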
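The way a clamped ATP/ADP ratio sets the driving force can be expressed in a couple of lines. The sketch below assumes that the forward rate of the coupled reaction scales with [ATP] and the reverse rate with [ADP], so that the rate-product ratio $\gamma$ equals the clamped ATP/ADP ratio divided by its equilibrium value. The function name and the concentration ratios are hypothetical and are not physiological data.

```python
import math

def cycle_driving_force(atp_over_adp, atp_over_adp_eq, kT=1.0):
    """Return gamma and the cycle force kT * ln(gamma) when the ATP/ADP ratio is clamped."""
    gamma = atp_over_adp / atp_over_adp_eq
    return gamma, kT * math.log(gamma)

# Hypothetical ratios: clamped at equilibrium, and clamped 1e5-fold above equilibrium.
gamma_eq, force_eq = cycle_driving_force(1e-5, 1e-5)
gamma_driven, force_driven = cycle_driving_force(1.0, 1e-5)
```

Because the force depends only logarithmically on the concentration ratio, even a very large displacement from the equilibrium ratio translates into a driving force of only a modest number of $k_B T$ per cycle.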

The above discussion can be extended to arbitrary CRNs without sinks or sources. If there is no cycle in the network, detailed balance is never broken and the steady state is always the equilibrium state. If there is more than one cycle, the cycles can be decomposed into a set of linearly independent basis cycles $\{c_l\}$. For each basis cycle, the ratio $\gamma_l$ of the clockwise rate product to the counter-clockwise rate product determines whether detailed balance is broken on that cycle and gives the corresponding thermodynamic force $k_B T \ln \gamma_l$. The net flux in the system at steady state can also be decomposed into a set of cyclic fluxes $\{J_l\}$, and the total free energy dissipation rate is the sum of the dissipation on each basis cycle, i.e., $W = \sum_l J_l \, k_B T \ln \gamma_l$. More details about the cycle decomposition can be found in a review by Schnakenberg and subsequent literature [49,50,51,52]. That work suggests that this decomposition of thermodynamic observables into cycles might be even more fundamental than the transitions themselves.

At the end of this section, we note that a CRN can also be studied with deterministic thermodynamics, without invoking stochastic theory. For example, the above cycle theory can be obtained purely by deterministic methods, as shown in the work of Rao and Esposito [52]. In the framework of mean-field thermodynamics, analyzing the stoichiometric matrix of the CRN with methods from linear algebra yields the conservation laws and basis cycles. Calculating the chemical potential difference around each basis cycle then determines the thermodynamic force. The dynamics of the system is governed by the law of mass action, so the steady-state flux can also be determined. Hence, the total dissipation rate can be calculated just as above, in accordance with stochastic theory. In addition, such mean-field methods can also determine whether detailed balance is broken in a CRN with irreversible reactions, as in the work of Gorban and collaborators [53,54]. Their results show that, to prevent the entropy production from diverging, irreversible reactions cannot be part of any cycle, which is also in accordance with stochastic theory. Other notable results have been obtained in recent decades with similar methodology [55,56,57,58,59,60,61,62]. This mean-field approach to thermodynamics is the one traditionally used by the Brussels School.
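The linear-algebra step can be illustrated on the smallest cyclic network. The sketch below assumes a stoichiometric matrix with one row per species and one column per reaction, taken in its net forward direction; right null vectors then correspond to cycles and left null vectors to conservation laws. The three-species cycle A→B→C→A and the helper `null_space` are illustrative choices, not code from the cited works.

```python
from fractions import Fraction

def null_space(matrix):
    """Basis of {x : matrix @ x = 0}, by Gauss-Jordan elimination over the rationals."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    n_cols = len(rows[0])
    pivot_cols = []
    r = 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column: it is a free variable
        rows[r], rows[pivot] = rows[pivot], rows[r]
        rows[r] = [v / rows[r][c] for v in rows[r]]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        pivot_cols.append(c)
        r += 1
        if r == len(rows):
            break
    basis = []
    for free in (c for c in range(n_cols) if c not in pivot_cols):
        vec = [Fraction(0)] * n_cols
        vec[free] = Fraction(1)
        for row_index, pc in enumerate(pivot_cols):
            vec[pc] = -rows[row_index][free]
        basis.append(vec)
    return basis

# Rows: species A, B, C; columns: reactions A->B, B->C, C->A (net forward direction).
S = [[-1, 0, 1],
     [1, -1, 0],
     [0, 1, -1]]
cycles = null_space(S)                                            # right null vectors
conservation_laws = null_space([list(col) for col in zip(*S)])    # left null vectors
```

For this network both null spaces are one-dimensional: running the three reactions once each returns the system to its starting composition (one cycle), and the total number of molecules A + B + C is conserved.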

The advantage of the stochastic theory is that we can calculate not only thermodynamic quantities such as the entropy production rate but also their uncertainties and errors. This is critical in living systems, where the system size is usually small and fluctuations or noise may play an important role in various biological mechanisms [63,64,65]. Such fluctuations are deeply related to the entropy production rate through the TUR, Equations (5) and (6) above. The TUR shows that, no matter how the parameters of a CRN are designed, the relative fluctuation and the entropy production rate cannot both be made arbitrarily small at the same time.

4. Thermodynamics for Information Processing in Living Systems

In realistic systems, maintaining the steady state by dissipating free energy takes the form of fulfilling various biological functions by burning molecular fuels. Some functions directly convert the chemical energy of the fuel into mechanical energy, for example the well-known molecular motors [66,67,68,69,70,71], which play an important role in intracellular transport and the motion of bacteria. Some functions involve the synthesis of complex molecules [72,73,74,75,76], e.g., DNA and protein complexes.

Apart from these functions, where the energy has a clear physical or chemical purpose, there is another large group of functions that are involved only in signal transduction. These are called information processing functions. The signal is usually transduced through a series of pathways and networks, in which allosteric transitions and modifications (methylation, phosphorylation, ubiquitination, etc.) of the signaling molecules play an important role. For example, the key step in the chemotaxis network of E. coli is the methylation and demethylation of the chemoreceptor dimer [77]. Such modification processes are usually accompanied by the hydrolysis of energy molecules (ATP, GTP, etc.), hence free energy is dissipated when the information is processed.

A natural question is: from the point of view of physics, why is free energy dissipation needed during information processing? A hint comes from the development of information theory in modern statistical physics, especially a branch called “information thermodynamics” [55,78,79,80,81], which studies the thermodynamic cost of manipulating information and vice versa. An intuitive understanding is therefore that free energy dissipation is the necessary cost of processing information, or can be used to improve the processing accuracy. Indeed, pioneering works have revealed the cost of different information processing functions, suggesting that this understanding is generally correct. Here we show some typical results as examples.

4.1. The Accuracy of Specificity and Kinetic Proofreading

The study of the accuracy of specificity and kinetic proofreading is one of the earliest to apply nonequilibrium thermodynamic concepts to information processing in living systems. It can be traced back to Hopfield’s work in 1974 [82], which has become a classical research paradigm in this area.

This problem arises from the synthesis of complex molecules, such as DNA replication. As is widely known, successful DNA replication is achieved through base pairing. For a given site in the template DNA strand, the affinity of the “correct” base that should be paired to this site is much larger than that of a “wrong” base. This property, that a specific ligand binds to a specific substrate or receptor, is the so-called specificity. However, although small, the probability of a wrong base pair is not exactly zero. By calculating the free energy difference between correct and wrong base pairs, researchers found that, if the affinity difference were the only source of specificity, the error rate during DNA replication would be too large for genes to maintain their relative invariance. In reality, the error rate in eukaryotes is much smaller than the estimate from affinity alone [82]. Hence there must be some other mechanism that reduces the error, which has long been of interest to researchers.

Against this background, Hopfield proposed the so-called kinetic proofreading model [82]. The model contains one receptor and two ligands, one “correct” and one “wrong”. The affinity difference between the two ligands at equilibrium leads to a basic binding error rate $f_0$. On top of that, if an irreversible “proofreading reaction” is introduced, which dissociates the ligand from the receptor and allows the receptor to choose a ligand again, the error rate can be further reduced. In this case, the system is driven out of equilibrium because the “proofreading reaction” breaks detailed balance. Under some specific conditions the error rate can even be reduced to $f_0^2$, which explains well the small error rate of DNA replication.
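The gain from proofreading can be illustrated with a short calculation. The sketch below assumes that the basic error rate is set by a binding free energy gap through a Boltzmann factor, $f_0 = e^{-\Delta\Delta G / k_B T}$, and that each ideal proofreading step multiplies the error by another factor $f_0$, so that one step gives the squared error rate. The function names, the extension to several steps and the numerical gap are illustrative assumptions.

```python
import math

def equilibrium_error(delta_delta_g, kT=1.0):
    """Error rate set only by the binding free energy gap between wrong and correct ligands."""
    return math.exp(-delta_delta_g / kT)

def proofread_error(delta_delta_g, n_steps=1, kT=1.0):
    """Best-case error after n ideal proofreading steps: f0 ** (n_steps + 1)."""
    return equilibrium_error(delta_delta_g, kT) ** (n_steps + 1)

gap = 4.6  # hypothetical free energy gap in units of kT (f0 is then about 0.01)
f0 = equilibrium_error(gap)
f1 = proofread_error(gap, n_steps=1)
```

These values are lower bounds on the error reached only in the idealized, strongly driven limit; for a finite energy input the achievable error lies between the equilibrium and the fully proofread values.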

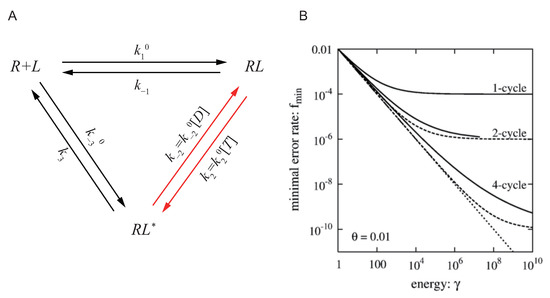

Because thermodynamic theory was less developed at the time, Hopfield did not carefully discuss how the error rate reduction relates to how far the system is driven out of equilibrium. In a more recent work in 2006, Qian discussed the same question in more detail [83]. As shown in Figure 2A, Qian presented Hopfield’s model more rigorously, using nonequilibrium thermodynamics and cycle theory. Ligand L can bind to receptor R to form the complex $RL$, which can be activated to $RL^*$ by hydrolyzing ATP. The activated $RL^*$ can then dissociate into free ligand L and receptor R. The cycle is characterized by its rate product ratio, whose binding rates include the ligand concentration: the product of the forward rate constants around the cycle divided by the product of the backward ones.

As discussed in the previous section, it can be proved that the logarithm of this ratio, multiplied by the thermal energy, is exactly the energy released by hydrolyzing one ATP molecule. When the concentration ratio of ATP to ADP is large enough, ATP is effectively hydrolyzed and the system is driven out of equilibrium.

Figure 2.

Thermodynamic limitation of specificity and kinetic proofreading, adapted from Ref. [83]. (A) The reaction cycle between receptor R and ligand L. R can bind L to form the complex $RL$, which can be activated to $RL^*$. The activated $RL^*$ can dissociate into free ligand L and receptor R. The activation of $RL$ is usually achieved by a modification such as phosphorylation, which is usually coupled to ATP hydrolysis, so this reaction arrow is colored red to indicate where the external energy is put in. T stands for ATP and D for ADP in the panel; Pi is not shown. (B) The accuracy of specificity is limited by the energy from ATP hydrolysis. The solid lines show the minimal error rate as a function of the energy parameter for different numbers of proofreading cycles. When the energy of ATP hydrolysis is fixed, the thermodynamic limit of Equation (33), shown by the dotted lines, cannot be broken however the proofreading reaction network is designed.

On top of that, suppose the system contains two ligands at the same concentration: a correct ligand and a wrong ligand. Their structures are similar, so they share the same rate product ratio, but the correct ligand has a much larger affinity for the receptor than the wrong one and hence a smaller dissociation rate.

The error rate f can be defined by the ratio of the affinities of the two ligands in the activated state.

It can be proved that, at equilibrium, f equals the baseline error rate set by the affinity difference. When the system is driven out of equilibrium, f can be smaller than this baseline. For a fixed rate product ratio, a minimal error rate can be calculated by tuning the other parameters.

It can be checked that this minimal error rate decreases monotonically with the rate product ratio and, in the limit of infinitely strong driving, approaches the square of the baseline error rate, which is exactly Hopfield’s result.
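The equilibrium value of the error rate and Hopfield’s squared value can be checked numerically on a three-state cycle R → RL → RL* → R. The sketch below is only an illustration under assumptions made here, not the parametrization of Ref. [83]. The rate names `k1`, `km1`, `k2`, `km2`, `k3`, `km3` are hypothetical, all rates are pseudo-first-order, and the wrong ligand is assumed to differ from the correct one only by dissociating faster from both RL and RL* by a factor `1/theta`, which keeps the rate product ratio the same for both ligands. Because both ligands compete for the same free receptor, the occupancy of each activated complex relative to free R follows from the steady-state balance of its own cycle.

```python
def activated_occupancy(k1, km1, k2, km2, k3, km3):
    """Steady-state ratio [RL*]/[R] for the cycle R -> RL -> RL* -> R.

    k1, k2, k3 are the forward rates around the cycle and km1, km2, km3 the
    corresponding backward rates (hypothetical names, pseudo-first-order).
    The formula solves the two linear balance equations for RL and RL*.
    """
    numerator = km3 * (km1 + k2) + k1 * k2
    denominator = km1 * km2 + k3 * km1 + k3 * k2
    return numerator / denominator


def rate_product_ratio(k1, km1, k2, km2, k3, km3):
    """Product of forward rates divided by product of backward rates."""
    return (k1 * k2 * k3) / (km1 * km2 * km3)


def error_rate(theta, k1, km1, k2, km2, k3, km3):
    """Ratio of activated wrong complex to activated correct complex.

    Assumption: the wrong ligand leaves RL and RL* faster by a factor
    1/theta (theta < 1); every other rate is shared by the two ligands.
    """
    correct = activated_occupancy(k1, km1, k2, km2, k3, km3)
    wrong = activated_occupancy(k1, km1 / theta, k2, km2, k3 / theta, km3)
    return wrong / correct


theta = 0.01  # baseline (equilibrium) error rate, illustrative value

# Detailed balance: the rate product ratio equals 1.
equilibrium_rates = (1.0, 1.0, 1.0, 1.0, 1.0, 1.0)
f_equilibrium = error_rate(theta, *equilibrium_rates)

# Strong driving: slow activation, nearly irreversible activation and release.
driven_rates = (1.0, 1.0, 1e-4, 1e-12, 1.0, 1e-12)
f_driven = error_rate(theta, *driven_rates)

print("rate product ratio (equilibrium):", rate_product_ratio(*equilibrium_rates))
print("error rate at equilibrium:", f_equilibrium)
print("rate product ratio (driven):", rate_product_ratio(*driven_rates))
print("error rate when strongly driven:", f_driven)
```

With these illustrative rates the equilibrium error rate stays at `theta`, while the strongly driven cycle comes close to `theta**2`. The sketch does not reproduce the minimal error rate at intermediate driving, which requires optimizing over the remaining rates.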

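In the same paper, Qian also proposed that the energy available from ATP hydrolysis imposes a universal thermodynamic limit on the error rate, which cannot be violated whatever the structure of the reaction network. The essence of specificity is the competitive binding of the two ligands to one receptor, which can be represented by a single exchange reaction in which one ligand replaces the other on the receptor.

The equilibrium constant of this reaction is determined by the affinity ratio of the two ligands, and the error rate can be defined as the concentration ratio of the wrong complex to the correct complex.

Given that the concentrations of the two ligands are equal, the free energy difference between the two complexes at steady state is proportional to the logarithm of the ratio between the error rate and its equilibrium value.

At equilibrium, this free energy difference is zero and the error rate equals its equilibrium value. If the reaction is coupled to ATP hydrolysis and driven out of equilibrium, the error rate can deviate from its equilibrium value, but only within a range bounded by the free energy of ATP hydrolysis.

This is the thermodynamic limit set by the energy of ATP hydrolysis.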

As shown in Figure 2B, the solid lines illustrate the minimal error rate as a function of the energy parameter for different numbers of proofreading cycles. The solid lines all lie above the dotted line representing the thermodynamic limit of Equation (33), confirming that the accuracy of specificity is limited by the thermodynamic cost.

4.2. The Accuracy of Oscillators and the Energy Cost

Oscillatory behaviors exist in many biological systems and are crucial for controlling the timing of various living activities. However, because of noise, no oscillator can keep its accuracy forever. The time the oscillator takes to complete one cycle is never exactly equal to the mean period: affected by noise, it is slightly longer or shorter. As more cycles are completed, the error accumulates, and the oscillator eventually loses its timing function. In physical terms, after a long time the phase of the oscillator loses its coherence with the initial phase. How to overcome the noise and maintain coherence over a relatively long time is an important question.

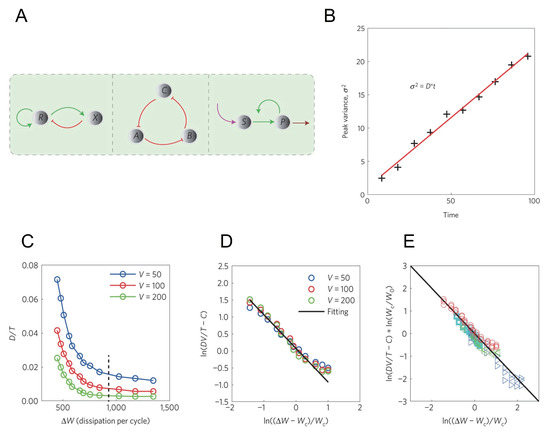

This problem was studied thoroughly in Ref. [84]. As shown in Figure 3A, the researchers enumerated all the possible motifs that can generate oscillation in three-node networks: the activator-inhibitor model, the repressilator and the substrate-depletion model. They provided a realistic example for each of the three motifs, along with the well-known Brusselator model (which belongs to the substrate-depletion class), and studied the oscillation accuracy and the energy cost in each case.

Figure 3.

The relation between the coherence of oscillation networks and their energy cost, adapted from Ref. [84]. (A) Three motifs that can generate oscillation. Left panel: activator-inhibitor model. Middle panel: repressilator model. Right panel: substrate-depletion model. In Ref. [84], both the Brusselator and glycolysis are classified as substrate-depletion models. (B) Definition of the phase diffusion constant D. The black crosses mark the variance of the peak time, which grows linearly with a slope of D. (C) The relation between D in the activator-inhibitor model and the energy dissipation per period. The black dashed line corresponds to the energy of hydrolyzing one ATP molecule. (D) The same data as (C) on a log scale after normalization by the volume V. The black solid line is the fit of Equation (34). (E) The fitting results for all four models after normalization by the volume V. All the simulation results collapse onto the same fitting line (black solid line). Different markers represent different models: red circles for the activator-inhibitor model, cyan squares for the repressilator, blue triangles for the Brusselator, and green triangles for glycolysis.

As shown in Figure 3B, the researchers defined a phase diffusion constant D to describe the coherence of the oscillatory network. The results reveal that all four models can oscillate only when far from equilibrium, and that the dimensionless phase diffusion constant $D/T$ (T is the oscillation period) depends inversely on the energy dissipation per period $\Delta W$:

$$V \times \frac{D}{T} \approx C + \frac{W_0}{\Delta W - W_c} \tag{34}$$

with $W_c$ a critical energy, V the system volume, and C and $W_0$ two constants. This equation shows that the accuracy of the oscillators depends inversely on the energy dissipated in excess of the minimum required for oscillation. It is used to fit the simulations of the four models, as shown in Figure 3C–E. The fitting shows that Equation (34) is applicable to all four models.
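The definition of D as the slope of the peak-time variance can be illustrated with a toy calculation. The sketch below is not one of the models of Ref. [84]. It assumes, purely for illustration, that successive cycle durations are independent Gaussian variables with mean `mean_period` and standard deviation `period_std` (hypothetical names). For such a toy oscillator the variance of the n-th peak time grows linearly with time, with slope `period_std**2 / mean_period`, and the code recovers this slope from simulated peak times.

```python
import random


def peak_time_statistics(mean_period, period_std, n_cycles, n_runs, seed=0):
    """Mean and variance of the k-th peak time over an ensemble of runs.

    Toy assumption: each cycle duration is drawn independently from a
    Gaussian with the given mean and standard deviation.
    """
    rng = random.Random(seed)
    peak_times = [[] for _ in range(n_cycles)]
    for _ in range(n_runs):
        t = 0.0
        for k in range(n_cycles):
            t += rng.gauss(mean_period, period_std)
            peak_times[k].append(t)

    means, variances = [], []
    for times in peak_times:
        mean = sum(times) / len(times)
        var = sum((x - mean) ** 2 for x in times) / (len(times) - 1)
        means.append(mean)
        variances.append(var)
    return means, variances


def slope_through_origin(x, y):
    """Least-squares slope of y against x for a line through the origin."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)


mean_period = 1.0
period_std = 0.1
means, variances = peak_time_statistics(mean_period, period_std,
                                        n_cycles=50, n_runs=2000)

# Phase diffusion constant: slope of peak-time variance versus time.
D_estimated = slope_through_origin(means, variances)
D_expected = period_std ** 2 / mean_period

print("estimated D:", D_estimated)
print("expected D for the toy model:", D_expected)
print("dimensionless D/T:", D_estimated / mean_period)
```

In a real model the peak times would come from stochastic simulations of the reaction network rather than from independent Gaussian periods; only the variance-versus-time fit carries over.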

To understand the origin of this inverse dependence, the researchers considered the noisy Stuart-Landau equation (Equation (35)), which describes a general system near a Hopf bifurcation and includes noise terms with prescribed correlations.

In this system, by calculating the energy dissipation and the phase diffusion constant in terms of the parameters of Equation (35), Equation (34) can be obtained analytically in an appropriate parameter regime, with its constants expressed through the Stuart-Landau parameters.

Hence the inverse relation of Equation (34) holds over a fairly large range and is relatively general.
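A noisy Stuart-Landau oscillator can be simulated with a simple Euler-Maruyama scheme. The sketch below uses the standard textbook normal form dz/dt = (a + ib) z − (c + id) |z|² z plus additive complex white noise. This parametrization, the parameter names `a`, `b`, `c`, `d`, `noise`, and the additive form of the noise are assumptions made here and need not match the form or noise correlations used in Ref. [84].

```python
import math
import random


def simulate_stuart_landau(a, b, c, d, noise, dt, steps, z0=0.1 + 0j, seed=0):
    """Euler-Maruyama integration of a noisy Stuart-Landau oscillator.

    Assumed normal form: dz/dt = (a + i b) z - (c + i d) |z|^2 z + eta(t),
    where eta is complex white noise of strength `noise` (hypothetical
    parametrization). Returns the list of complex states z(t).
    """
    rng = random.Random(seed)
    sigma = math.sqrt(2.0 * noise * dt)  # noise amplitude per time step
    z = z0
    trajectory = [z]
    for _ in range(steps):
        drift = (a + 1j * b) * z - (c + 1j * d) * abs(z) ** 2 * z
        kick = sigma * complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        z = z + drift * dt + kick
        trajectory.append(z)
    return trajectory


# Without noise the amplitude relaxes to the limit cycle radius sqrt(a / c).
quiet = simulate_stuart_landau(a=1.0, b=2.0, c=1.0, d=0.0,
                               noise=0.0, dt=0.01, steps=2000)
final_radius = abs(quiet[-1])
limit_cycle_radius = math.sqrt(1.0 / 1.0)

# With noise the phase diffuses, so different seeds drift apart in phase.
noisy = simulate_stuart_landau(a=1.0, b=2.0, c=1.0, d=0.0,
                               noise=0.01, dt=0.01, steps=2000, seed=1)

print("final radius without noise:", final_radius)
print("limit cycle radius sqrt(a/c):", limit_cycle_radius)
print("final radius with noise:", abs(noisy[-1]))
```

An ensemble of such noisy trajectories, run with different seeds, could be fed into a peak-time variance fit to estimate the phase diffusion constant.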

In some biological systems, the timing function is accomplished by the collective oscillation phase of a group of oscillating molecules. For such systems, synchronization among the molecular oscillators is of great importance for generating and maintaining the correlation of the collective phase, which is critical for macroscopic oscillation accuracy. A recent work shows that the maximal achievable synchrony among a group of identical molecular oscillators also depends inversely on the additional energy cost [85]. This result, along with Equation (34), shows that oscillation accuracy can be improved by additional energy at both the single-oscillator level and the collective level.

5. Outlook

In this review, we have summarized the history and current research status of biological thermodynamics. The thermodynamics of living systems remains a young and active research area, one that could lead to fundamental advances in both physics and biology.

In terms of physics, living systems are real systems far from equilibrium, which makes them good research objects for nonequilibrium statistical physics. By studying biological functions and their thermodynamic cost, as in the examples shown above, the applicability of theories from nonequilibrium statistical physics can be examined in realistic cases. Furthermore, through such applications physicists can build intuition about which concepts matter most, so that they can develop more realistic and useful thermodynamic theories and instruments. It is also possible to adopt experimental tools from biological systems to test theoretical results in physics, as in the tests of the Jarzynski equality [28,29,30] and fluctuation theorems [31,32,33].

In terms of biology, theoretical tools from thermodynamics and statistical physics offer a new angle for understanding the general, fundamental laws of living systems. New paradigms can also be established with these tools to study quantitative behavior in living systems, such as spatial positioning by self-organization [86], fluctuations in cell growth [87] and the characterization of enzymes as active matter [88]. In addition, the laws of thermodynamics could help uncover the design principles of biological systems, which might be useful for synthetic biology [89,90,91,92] and other biological engineering with in silico design.

Author Contributions

Conceptualization, D.Z. and Q.O.; methodology and investigation, D.Z.; writing—original draft preparation, D.Z.; writing—review and editing, D.Z. and Q.O.; supervision, Q.O. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partially funded by NSFC (12090054,11774011) and China Postdoctoral Science Foundation (2020M680180).

Acknowledgments

We thank Yuhai Tu for continuous discussion and instruction on this topic. This work is partially supported by NSFC (12090054,11774011) and China Postdoctoral Science Foundation (2020M680180).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Schrödinger, E. What Is Life? The Physical Aspect of the Living Cell; Cambridge University Press: Cambridge, UK, 1944.

- Prigogine, I. Etude Thermodynamique des Phénomènes Irréversibles; Desoer: Liège, Belgium, 1947.

- De Groot, S.R. Thermodynamics of Irreversible Processes; North-Holland Publishing Company: Amsterdam, The Netherlands, 1951; Volume 336.

- Jaynes, E.T. The minimum entropy production principle. Annu. Rev. Phys. Chem. 1980, 31, 579–601.

- Bodenschatz, E.; Pesch, W.; Ahlers, G. Recent developments in Rayleigh-Bénard convection. Annu. Rev. Fluid Mech. 2000, 32, 709–778.

- Cross, M.C.; Hohenberg, P.C. Pattern formation outside of equilibrium. Rev. Mod. Phys. 1993, 65, 851.

- Greenside, H.; Coughran, W., Jr.; Schryer, N. Nonlinear pattern formation near the onset of Rayleigh-Bénard convection. Phys. Rev. Lett. 1982, 49, 726.

- Caldwell, D. Non-linear effects in a Rayleigh–Bénard experiment. J. Fluid Mech. 1970, 42, 161–175.

- Petrov, V.; Gaspar, V.; Masere, J.; Showalter, K. Controlling chaos in the Belousov–Zhabotinsky reaction. Nature 1993, 361, 240–243.

- Zhang, D.; Györgyi, L.; Peltier, W.R. Deterministic chaos in the Belousov–Zhabotinsky reaction: Experiments and simulations. Chaos Interdiscip. J. Nonlinear Sci. 1993, 3, 723–745.

- Györgyi, L.; Field, R.J. A three-variable model of deterministic chaos in the Belousov–Zhabotinsky reaction. Nature 1992, 355, 808–810.

- Tyson, J.J. Oscillations, bistability, and echo waves in models of the belousov-zhabotinskii reaction. Ann. N. Y. Acad. Sci. 1979, 316, 279–295.

- Swinney, H.L. Observations of order and chaos in nonlinear systems. Phys. D Nonlinear Phenom. 1983, 7, 3–15.

- Nicolis, G.; Prigogine, I. Self-Organization in Nonequilibrium Systems: From Dissipative Structures to Order through Fluctuations; Wiley: New York, NY, USA, 1977.

- Prigogine, I. Introduction to Thermodynamics of Irreversible Processes; Wiley: New York, NY, USA, 1967.

- Gardiner, C.W. Handbook of Stochastic Methods; Springer: Berlin, Germany, 1985; Volume 3.

- Sekimoto, K. Stochastic Energetics; Springer: Berlin/Heidelberg, Germany, 2010; Volume 799.

- Jarzynski, C. Nonequilibrium equality for free energy differences. Phys. Rev. Lett. 1997, 78, 2690.

- Jarzynski, C. Equilibrium free-energy differences from nonequilibrium measurements: A master-equation approach. Phys. Rev. E 1997, 56, 5018.

- Kurchan, J. Fluctuation theorem for stochastic dynamics. J. Phys. A Math. Gen. 1998, 31, 3719.

- Crooks, G.E. Entropy production fluctuation theorem and the nonequilibrium work relation for free energy differences. Phys. Rev. E 1999, 60, 2721.

- Esposito, M.; Van den Broeck, C. Three detailed fluctuation theorems. Phys. Rev. Lett. 2010, 104, 090601.

- Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 2012, 75, 126001.

- Barato, A.C.; Seifert, U. Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 2015, 114, 158101.

- Polettini, M.; Lazarescu, A.; Esposito, M. Tightening the uncertainty principle for stochastic currents. Phys. Rev. E 2016, 94, 052104.

- Pietzonka, P.; Ritort, F.; Seifert, U. Finite-time generalization of the thermodynamic uncertainty relation. Phys. Rev. E 2017, 96, 012101.

- Horowitz, J.M.; Gingrich, T.R. Thermodynamic uncertainty relations constrain non-equilibrium fluctuations. Nat. Phys. 2020, 16, 15–20.

- Liphardt, J.; Dumont, S.; Smith, S.B.; Tinoco, I.; Bustamante, C. Equilibrium information from nonequilibrium measurements in an experimental test of Jarzynski’s equality. Science 2002, 296, 1832–1835.

- Mossa, A.; de Lorenzo, S.; Huguet, J.M.; Ritort, F. Measurement of work in single-molecule pulling experiments. J. Chem. Phys. 2009, 130, 234116.

- Berg, J. Out-of-equilibrium dynamics of gene expression and the Jarzynski equality. Phys. Rev. Lett. 2008, 100, 188101.

- Collin, D.; Ritort, F.; Jarzynski, C.; Smith, S.B.; Tinoco, I.; Bustamante, C. Verification of the Crooks fluctuation theorem and recovery of RNA folding free energies. Nature 2005, 437, 231–234.

- Schuler, S.; Speck, T.; Tietz, C.; Wrachtrup, J.; Seifert, U. Experimental test of the fluctuation theorem for a driven two-level system with time-dependent rates. Phys. Rev. Lett. 2005, 94, 180602.

- Wang, G.; Reid, J.; Carberry, D.; Williams, D.; Sevick, E.M.; Evans, D.J. Experimental study of the fluctuation theorem in a nonequilibrium steady state. Phys. Rev. E 2005, 71, 046142.

- Seifert, U. Entropy production along a stochastic trajectory and an integral fluctuation theorem. Phys. Rev. Lett. 2005, 95, 040602.

- Seifert, U. Stochastic thermodynamics: Principles and perspectives. Eur. Phys. J. B 2008, 64, 423–431.

- Qian, H. Phosphorylation energy hypothesis: Open chemical systems and their biological functions. Annu. Rev. Phys. Chem. 2007, 58, 113–142.

- Horowitz, J.M. Quantum-trajectory approach to the stochastic thermodynamics of a forced harmonic oscillator. Phys. Rev. E 2012, 85, 031110.

- Barato, A.C.; Seifert, U. Unifying three perspectives on information processing in stochastic thermodynamics. Phys. Rev. Lett. 2014, 112, 090601.

- Prost, J.; Joanny, J.F.; Parrondo, J. Generalized fluctuation-dissipation theorem for steady-state systems. Phys. Rev. Lett. 2009, 103, 090601.

- Gong, Z.; Quan, H.T. Jarzynski equality, Crooks fluctuation theorem, and the fluctuation theorems of heat for arbitrary initial states. Phys. Rev. E 2015, 92, 012131.

- Van Kampen, N.G. Stochastic Processes in Physics and Chemistry; Elsevier: Amsterdam, The Netherlands, 1992.

- Polettini, M.; Esposito, M. Irreversible thermodynamics of open chemical networks. I. Emergent cycles and broken conservation laws. J. Chem. Phys. 2014, 141, 024117.

- Gillespie, D.T. A general method for numerically simulating the stochastic time evolution of coupled chemical reactions. J. Comput. Phys. 1976, 22, 403–434.

- Ge, H.; Qian, H. Physical origins of entropy production, free energy dissipation, and their mathematical representations. Phys. Rev. E 2010, 81, 051133.

- Esposito, M.; Van den Broeck, C. Second law and Landauer principle far from equilibrium. EPL Europhys. Lett. 2011, 95, 40004.

- Risken, H. Fokker-Planck Equation; Springer: Berlin/Heidelberg, Germany, 1996.

- Tomé, T.; de Oliveira, M.J. Entropy production in irreversible systems described by a Fokker-Planck equation. Phys. Rev. E 2010, 82, 021120.

- Hill, T.L. Free Energy Transduction in Biology; Academic Press: New York, NY, USA, 1977.

- Schnakenberg, J. Network theory of microscopic and macroscopic behavior of master equation systems. Rev. Mod. Phys. 1976, 48, 571.

- Qian, H.; Kjelstrup, S.; Kolomeisky, A.B.; Bedeaux, D. Entropy production in mesoscopic stochastic thermodynamics: nonequilibrium kinetic cycles driven by chemical potentials, temperatures, and mechanical forces. J. Phys. Condens. Matter 2016, 28, 153004.

- Wachtel, A.; Vollmer, J.; Altaner, B. Fluctuating currents in stochastic thermodynamics. I. Gauge invariance of asymptotic statistics. Phys. Rev. E 2015, 92, 042132.

- Rao, R.; Esposito, M. Nonequilibrium thermodynamics of chemical reaction networks: Wisdom from stochastic thermodynamics. Phys. Rev. X 2016, 6, 041064.

- Gorban, A.; Yablonsky, G. Extended detailed balance for systems with irreversible reactions. Chem. Eng. Sci. 2011, 66, 5388–5399.

- Gorban, A.; Mirkes, E.; Yablonsky, G. Thermodynamics in the limit of irreversible reactions. Phys. A Stat. Mech. Its Appl. 2013, 392, 1318–1335.

- Gorban, A.N.; Gorban, P.A.; Judge, G. Entropy: The Markov ordering approach. Entropy 2010, 12, 1145–1193.

- Gorban, A.N.; Shahzad, M. The michaelis-menten-stueckelberg theorem. Entropy 2011, 13, 966–1019.

- Yablonsky, G.S.; Constales, D.; Marin, G.B. Joint kinetics: A new paradigm for chemical kinetics and chemical engineering. Curr. Opin. Chem. Eng. 2020, 29, 83–88.

- Marin, G.B.; Yablonsky, G.S.; Constales, D. Kinetics of Chemical Reactions: Decoding Complexity; John Wiley & Sons: Hoboken, NJ, USA, 2019.

- Yablonsky, G.S.; Constales, D.; Marin, G.B. Equilibrium relationships for non-equilibrium chemical dependencies. Chem. Eng. Sci. 2011, 66, 111–114.

- Yablonsky, G.; Gorban, A.; Constales, D.; Galvita, V.; Marin, G. Reciprocal relations between kinetic curves. EPL Europhys. Lett. 2011, 93, 20004.

- Gorban, A.; Yablonsky, G. Three waves of chemical dynamics. Math. Model. Nat. Phenom. 2015, 10, 1–5.

- Branco, P.D.; Yablonsky, G.; Marin, G.B.; Constales, D. The switching point between kinetic and thermodynamic control. Comput. Chem. Eng. 2019, 125, 606–611.

- Paulsson, J. Summing up the noise in gene networks. Nature 2004, 427, 415–418.

- Bar-Even, A.; Paulsson, J.; Maheshri, N.; Carmi, M.; O’Shea, E.; Pilpel, Y.; Barkai, N. Noise in protein expression scales with natural protein abundance. Nat. Genet. 2006, 38, 636–643.

- McDonnell, M.D.; Abbott, D. What is stochastic resonance? Definitions, misconceptions, debates, and its relevance to biology. PLoS Comput. Biol. 2009, 5, e1000348.

- Jülicher, F.; Ajdari, A.; Prost, J. Modeling molecular motors. Rev. Mod. Phys. 1997, 69, 1269.

- Qian, H. A simple theory of motor protein kinetics and energetics. Biophys. Chem. 1997, 67, 263–267.

- Qian, H. A simple theory of motor protein kinetics and energetics. II. Biophys. Chem. 2000, 83, 35–43.

- Lau, A.W.C.; Lacoste, D.; Mallick, K. Nonequilibrium fluctuations and mechanochemical couplings of a molecular motor. Phys. Rev. Lett. 2007, 99, 158102.

- Mandadapu, K.K.; Nirody, J.A.; Berry, R.M.; Oster, G. Mechanics of torque generation in the bacterial flagellar motor. Proc. Natl. Acad. Sci. USA 2015, 112, E4381–E4389.

- Tu, Y.; Cao, Y. Design principles and optimal performance for molecular motors under realistic constraints. Phys. Rev. E 2018, 97, 022403.

- Doi, M.; Edwards, S.F. The Theory of Polymer Dynamics; Oxford University Press: Oxford, UK, 1988; Volume 73.

- Andrieux, D.; Gaspard, P. Nonequilibrium generation of information in copolymerization processes. Proc. Natl. Acad. Sci. USA 2008, 105, 9516–9521.

- Mast, C.B.; Schink, S.; Gerland, U.; Braun, D. Escalation of polymerization in a thermal gradient. Proc. Natl. Acad. Sci. USA 2013, 110, 8030–8035.

- Banerjee, K.; Kolomeisky, A.B.; Igoshin, O.A. Elucidating interplay of speed and accuracy in biological error correction. Proc. Natl. Acad. Sci. USA 2017, 114, 5183–5188.

- Chiuchiú, D.; Tu, Y.; Pigolotti, S. Error-speed correlations in biopolymer synthesis. Phys. Rev. Lett. 2019, 123, 038101.

- Lan, G.; Sartori, P.; Neumann, S.; Sourjik, V.; Tu, Y. The energy–speed–accuracy trade-off in sensory adaptation. Nat. Phys. 2012, 8, 422–428.

- Landauer, R. Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 1961, 5, 183–191.

- Sagawa, T.; Ueda, M. Second law of thermodynamics with discrete quantum feedback control. Phys. Rev. Lett. 2008, 100, 080403.

- Sagawa, T.; Ueda, M. Generalized Jarzynski equality under nonequilibrium feedback control. Phys. Rev. Lett. 2010, 104, 090602.

- Deffner, S.; Jarzynski, C. Information processing and the second law of thermodynamics: An inclusive, Hamiltonian approach. Phys. Rev. X 2013, 3, 041003.

- Hopfield, J.J. Kinetic proofreading: A new mechanism for reducing errors in biosynthetic processes requiring high specificity. Proc. Natl. Acad. Sci. USA 1974, 71, 4135–4139.

- Qian, H. Reducing intrinsic biochemical noise in cells and its thermodynamic limit. J. Mol. Biol. 2006, 362, 387–392.

- Cao, Y.; Wang, H.; Ouyang, Q.; Tu, Y. The free-energy cost of accurate biochemical oscillations. Nat. Phys. 2015, 11, 772–778.

- Zhang, D.; Cao, Y.; Ouyang, Q.; Tu, Y. The energy cost and optimal design for synchronization of coupled molecular oscillators. Nat. Phys. 2020, 16, 95–100.

- Murray, S.M.; Sourjik, V. Self-organization and positioning of bacterial protein clusters. Nat. Phys. 2017, 13, 1006–1013.

- Iyer-Biswas, S.; Crooks, G.E.; Scherer, N.F.; Dinner, A.R. Universality in stochastic exponential growth. Phys. Rev. Lett. 2014, 113, 028101.

- Jee, A.Y.; Cho, Y.K.; Granick, S.; Tlusty, T. Catalytic enzymes are active matter. Proc. Natl. Acad. Sci. USA 2018, 115, E10812–E10821.

- Elowitz, M.B.; Leibler, S. A synthetic oscillatory network of transcriptional regulators. Nature 2000, 403, 335–338.

- Benner, S.A.; Sismour, A.M. Synthetic biology. Nat. Rev. Genet. 2005, 6, 533–543.

- Purnick, P.E.; Weiss, R. The second wave of synthetic biology: From modules to systems. Nat. Rev. Mol. Cell Biol. 2009, 10, 410–422.

- Cameron, D.E.; Bashor, C.J.; Collins, J.J. A brief history of synthetic biology. Nat. Rev. Microbiol. 2014, 12, 381–390.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).