Weighted Schatten p-Norm Low Rank Error Constraint for Image Denoising

Abstract

1. Introduction

2. Related Work

2.1. Weighted Schatten p-Norm Minimization
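For reference, Xie et al. [24] define the weighted Schatten p-norm of a matrix X, with singular values σ_i(X) and non-negative weights w_i (0 < p ≤ 1), together with the generic minimization problem built on it. The sketch shows only the plain Frobenius data term; any noise-dependent scaling of that term used in a particular denoising model is not shown here. For p = 1 the norm reduces to the weighted nuclear norm of WNNM [23].

```latex
\|\mathbf{X}\|_{w,S_p} = \Big( \sum_{i=1}^{\min(n,m)} w_i \, \sigma_i^{p}(\mathbf{X}) \Big)^{1/p},
\qquad
\hat{\mathbf{X}} = \arg\min_{\mathbf{X}} \; \|\mathbf{Y} - \mathbf{X}\|_F^2 + \|\mathbf{X}\|_{w,S_p}^{p}
```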

2.2. Nonlocal Self-Similarity
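The nonlocal self-similarity prior is usually exploited by grouping patches that resemble a reference patch, as done by the block matching method [27] in Step (4) of Algorithm 1. The following is a minimal sketch of that grouping using a plain squared Euclidean distance inside a square search window. The function name `block_match` and the values of `patch`, `window` and `m` are hypothetical, illustrative choices, not the settings used in this paper.

```python
import numpy as np


def block_match(img, ref_row, ref_col, patch=7, window=10, m=16):
    """Group the m patches most similar to the reference patch.

    Hypothetical sketch: candidates are all patch positions within +/- window
    pixels of (ref_row, ref_col); similarity is the squared Euclidean distance.
    Returns a (patch*patch) x m matrix (one vectorized patch per column)
    and the top-left coordinates of the selected patches.
    """
    H, W = img.shape
    ref = img[ref_row:ref_row + patch, ref_col:ref_col + patch]
    r0, r1 = max(0, ref_row - window), min(H - patch, ref_row + window)
    c0, c1 = max(0, ref_col - window), min(W - patch, ref_col + window)

    candidates = []
    for r in range(r0, r1 + 1):
        for c in range(c0, c1 + 1):
            blk = img[r:r + patch, c:c + patch]
            candidates.append((float(np.sum((blk - ref) ** 2)), r, c))

    candidates.sort(key=lambda t: t[0])  # most similar first (reference has distance 0)
    chosen = candidates[:m]
    group = np.stack(
        [img[r:r + patch, c:c + patch].ravel() for _, r, c in chosen], axis=1
    )
    coords = [(r, c) for _, r, c in chosen]
    return group, coords
```

Stacking the vectorized patches as columns yields the similarity-group matrix whose approximately low rank structure the weighted Schatten p-norm then exploits.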

3. Principle and Method of WSNLEC

3.1. Low Rank Error

3.2. Core Idea of WSNLEC

3.3. Solution Method

3.4. WSNLEC in Image Denoising

| Algorithm 1: Weighted Schatten p-norm low rank error constraint (WSNLEC) for image denoising. |

Input: Noisy image Y
(1) Initialize , ;
(2) For k = 1 : K do
(3) Iterative regularization ;
(4) Construct similar groups and by block matching method [27];
(5) For each local image patch do
(6) Estimate the k-th weight vector by ;
(7) Update by GST algorithm;
(8) Update by GST algorithm;
(9) Update by Equation (10);
(10) End For
(11) Aggregate to form the denoised image ;
(12) End For
Output: Denoised image
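Steps (3) and (6)–(8) of Algorithm 1 rely on an iterative regularization update, a weight vector, and the generalized soft-thresholding (GST) algorithm of [26]. The sketch shows the weighted Schatten p-norm shrinkage that WSNM [24] builds from GST, a WNNM-style weight rule [23], and the iterative-regularization form common to this family of methods. All three rules, the 0.5 scaling of the data term, the parameter names (`lam`, `c`, `eps`, `delta`) and the default of three GST iterations are assumptions for illustration. This section does not give the exact WSNLEC update equations, and the low rank error R is not modelled here.

```python
import numpy as np


def gst(y: float, lam: float, p: float, iters: int = 3) -> float:
    """Generalized soft-thresholding (GST) of Zuo et al. [26]:
    approximately solves min_x 0.5*(x - y)**2 + lam*|x|**p, 0 < p <= 1."""
    base = 2.0 * lam * (1.0 - p)
    # GST threshold; for p = 1 it equals lam (0.0 ** 0.0 == 1.0 in Python)
    tau = base ** (1.0 / (2.0 - p)) + lam * p * base ** ((p - 1.0) / (2.0 - p))
    if abs(y) <= tau:
        return 0.0
    x = abs(y)
    for _ in range(iters):  # fixed-point iteration started at |y|
        x = abs(y) - lam * p * x ** (p - 1.0)
    return float(np.sign(y) * x)


def wsn_shrink(Y, w, p, iters=3):
    """Shrinkage for 0.5*||Y - X||_F^2 + sum_i w_i * sigma_i(X)**p:
    SVD of Y, GST on each singular value with its own weight, then rebuild.
    Assumes w is non-descending so the singular-value ordering is preserved."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    d = np.array([gst(si, wi, p, iters) for si, wi in zip(s, w)])
    return (U * d) @ Vt  # U * d scales the columns of U by the shrunk values


def wsn_weights(s_noisy, n_patches, sigma_n, c, eps=1e-16):
    """WNNM-style weights (assumption): w_i = c*sqrt(n) / (sigma_i(X) + eps),
    where sigma_i(X) = sqrt(max(sigma_i(Y)**2 - n*sigma_n**2, 0))."""
    s_clean = np.sqrt(np.maximum(s_noisy ** 2 - n_patches * sigma_n ** 2, 0.0))
    return c * np.sqrt(n_patches) / (s_clean + eps)


def iterative_regularization(y, x_prev, delta):
    """Assumed form of Step (3): y_k = x_{k-1} + delta * (y - x_{k-1})."""
    return x_prev + delta * (y - x_prev)
```

With p = 1, `gst` reduces to ordinary soft-thresholding by `lam`. Small singular values, which mostly carry noise, receive large weights and are shrunk hardest, while the large, structure-carrying singular values are kept nearly intact.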

4. Experimental Results and Analysis

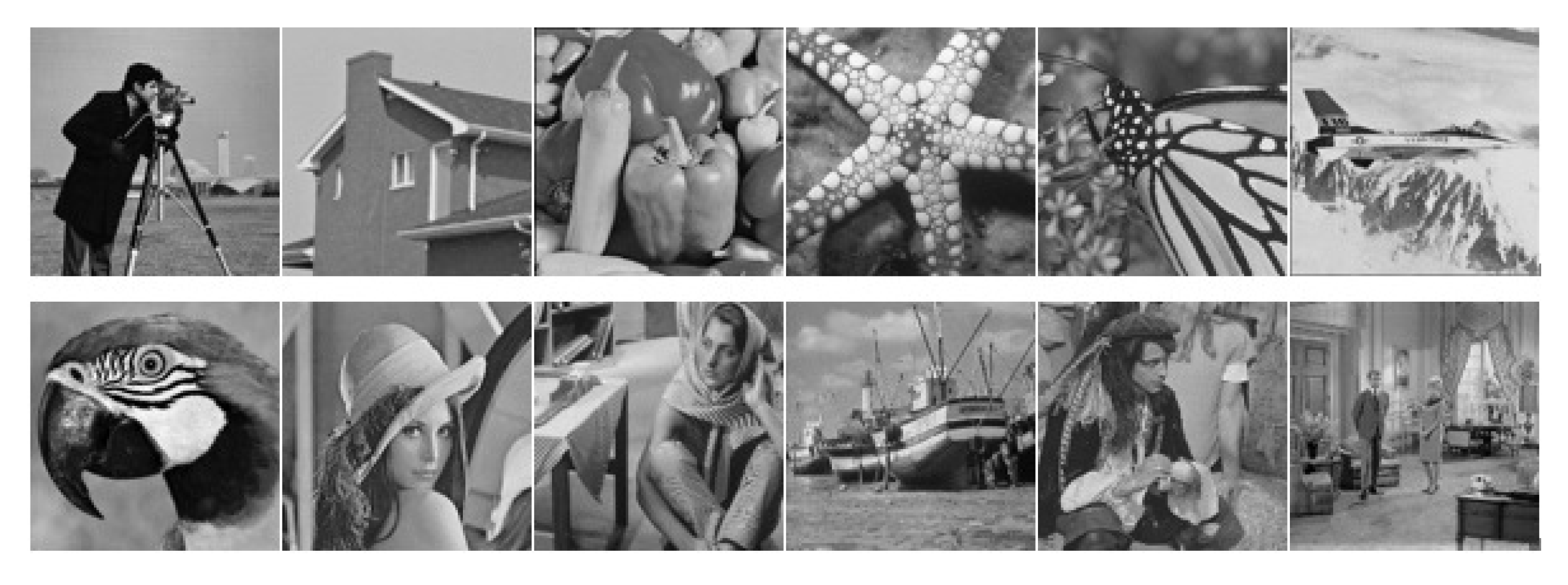

4.1. Experimental Setup

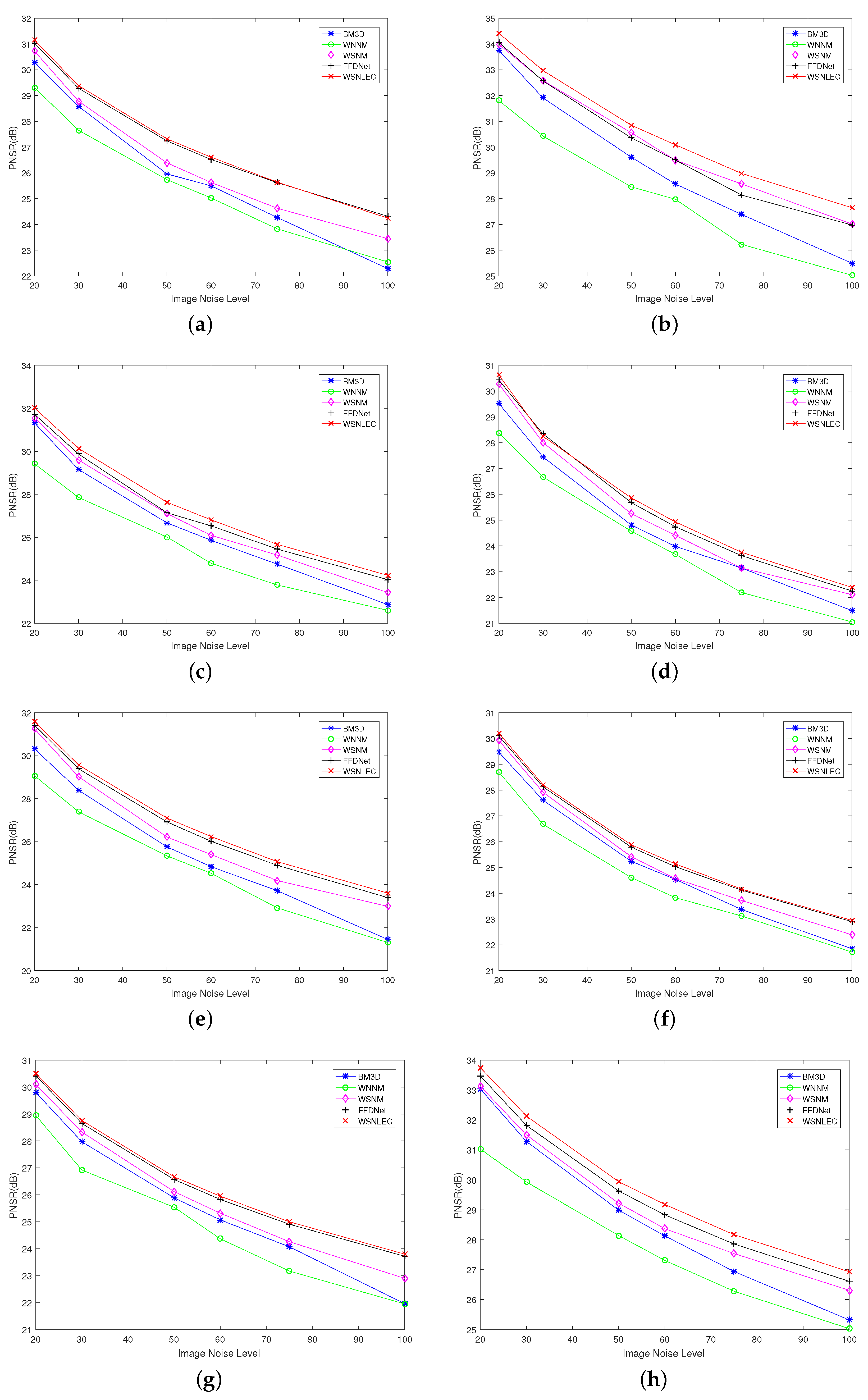

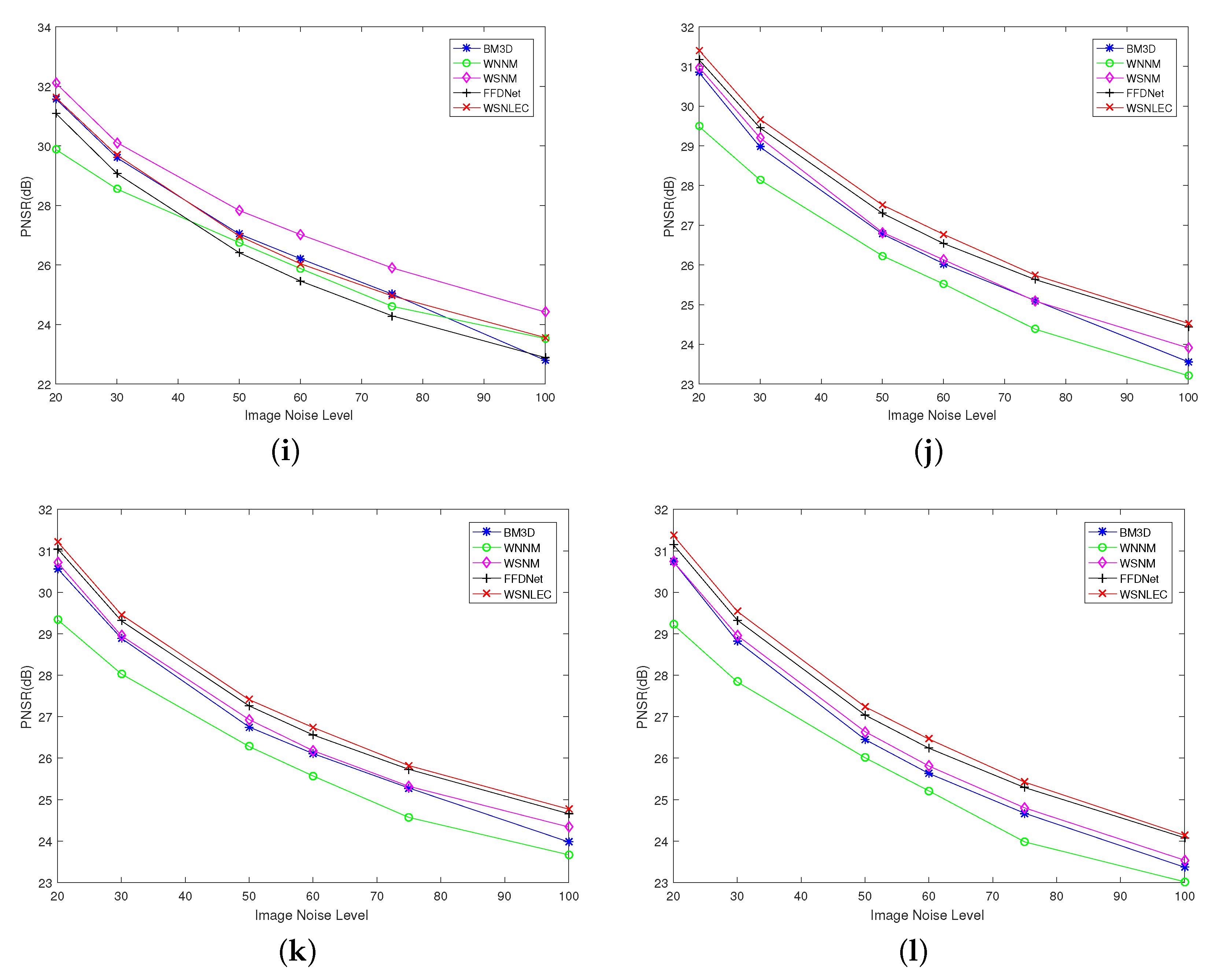

4.2. Experimental Results of Denoising Algorithms with Different Noise Standard Deviations

4.3. Experimental Results of Denoising Algorithms for Different Test Images

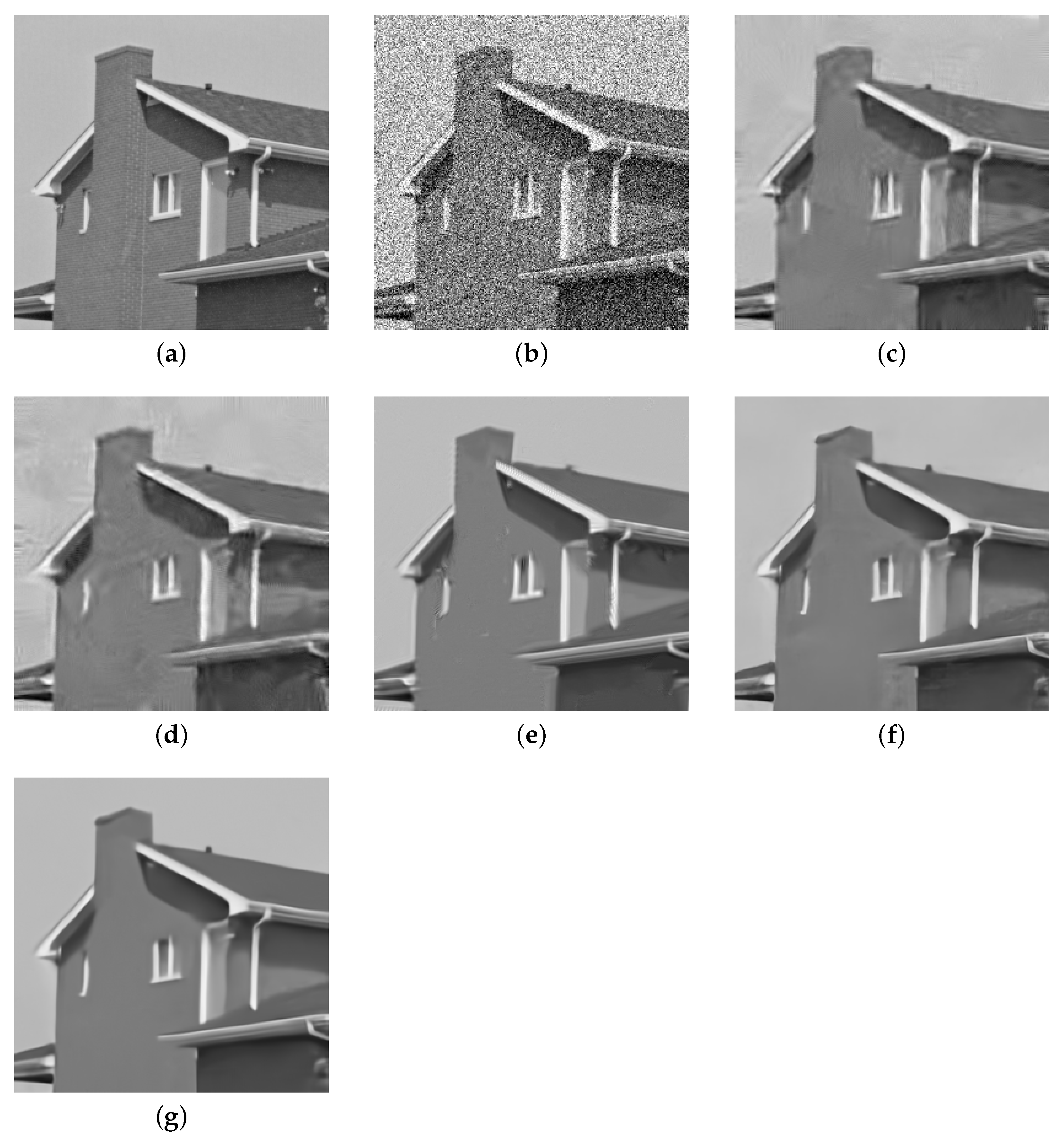

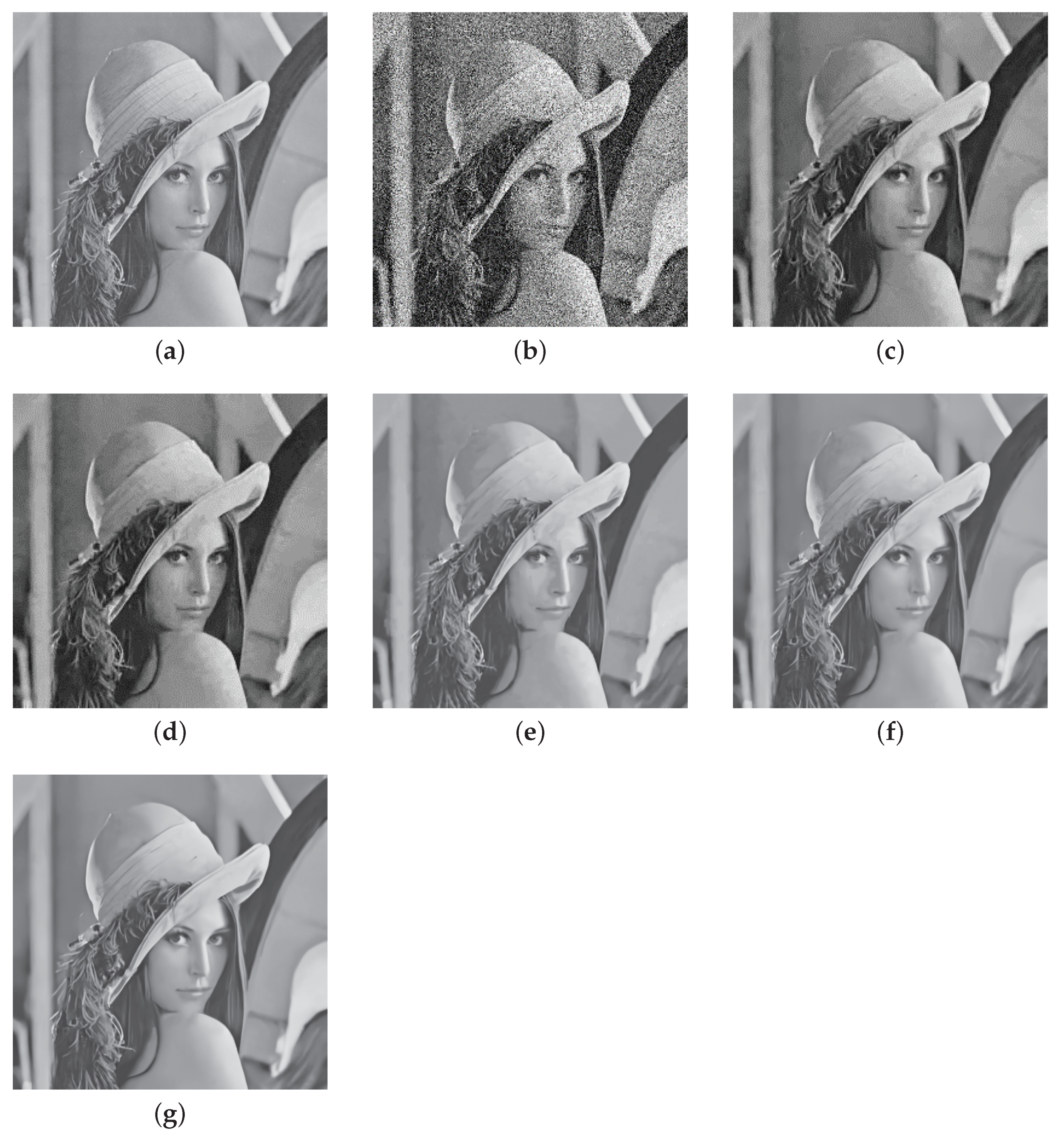

4.4. Visual Effects of Different Denoising Algorithms

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Symbols Explanation

| Symbol | Meaning |
|---|---|
| Y | Noisy image |
| X | Clear image |
| N | Noise |
| | Regularization parameter |
| | Denoised image |
| | Similarity group of clear image |
| | Low rank matrix from clear image |
| | Similarity group of noisy image |
| R | Low rank matrix error |
| | Image obtained by preprocessing the noisy image Y with the FFDNet method |
| | Noise variance |
| p | Power (exponent) of the Schatten p-norm |
| | Weight |
| | The i-th singular value of a matrix |
| | Threshold |
| | Constant term |
| k | The number of iterations |
| | Relaxation parameter |

References

- Jin, C.; Luan, N. An image denoising iterative approach based on total variation and weighting function. Multimed. Tools Appl. 2020, 79, 20947–20971.

- Huang, S.; Zhou, P.; Shi, H.; Sun, Y.; Wan, S.R. Image speckle noise denoising by a multi-layer fusion enhancement method based on block matching and 3D filtering. Imaging Sci. J. 2020, 67, 224–235.

- Sun, L.; Jeon, B.; Zheng, Y.; Wu, Z.B. A novel weighted cross total variation method for hyperspectral image mixed denoising. IEEE Access 2017, 5, 27172–27188.

- Shen, J.; Chan, T. Mathematical models for local nontexture inpaintings. SIAM J. Appl. Math. 2002, 62, 1019–1043.

- Wang, W.; Yao, M.J.; Ng, M.K. Color image multiplicative noise and blur removal by saturation-value total variation. Appl. Math. Model. 2021, 90, 240–264.

- He, W.; Zhang, H.; Shen, H.; Zhang, L. Hyperspectral image denoising using local low-rank matrix recovery and global spatial-spectral total variation. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 713–729.

- Xie, Z.; Liu, L.; Yang, C. An entropy-based algorithm with nonlocal residual learning for image compressive sensing recovery. Entropy 2019, 21, 900.

- Dong, W.; Shi, G.; Li, X. Nonlocal image restoration with bilateral variance estimation: A low-rank approach. IEEE Trans. Image Process. 2013, 22, 700–711.

- Kaloorazi, M.F.; de Lamare, R.C. Subspace-orbit randomized decomposition for low-rank matrix approximations. IEEE Trans. Signal Process. 2018, 66, 4409–4424.

- Xu, J.C.; Wang, N.; Xu, Z.W.; Xu, K.Q. Weighted lp norm sparse error constraint based ADMM for image denoising. Math. Probl. Eng. 2019, 2019, 1–15.

- Zuo, C.; Jovanov, L.; Goossens, B. Image denoising using quadtree-based nonlocal means with locally adaptive principal component analysis. IEEE Signal Process. Lett. 2016, 23, 434–438.

- Zhang, K.; Zuo, W.M.; Chen, Y. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Fu, Y.L.; Xu, J.W.; Xiang, Y.J.; Chen, Z.; Zhu, T.; Cai, L.; He, W.H. Multi-scale patches based image denoising using weighted nuclear norm minimisation. IET Image Process. 2020, 14, 3161–3168.

- Cao, C.H.; Yu, J.; Zhou, C.Y.; Hu, K.; Xiao, F.; Gao, X.P. Hyperspectral image denoising via subspace-based nonlocal low-rank and sparse factorization. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2019, 12, 973–988.

- Ren, F.J.; Wen, R.P. A new method based on the manifold-alternative approximating for low-rank matrix completion. Entropy 2018.

- Cai, J.F.; Candès, E.J.; Shen, Z.W. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

- Liu, X.; Zhao, G.Y.; Yao, J.W.; Qi, C. Background subtraction based on low-rank and structured sparse decomposition. IEEE Trans. Image Process. 2015, 24, 2302–2314.

- Shang, F.; Cheng, J.; Liu, Y.; Luo, Z.; Lin, Z. Bilinear factor matrix norm minimization for robust PCA: Algorithms and applications. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2066–2080.

- Zha, Z.Y.; Yuan, X.; Wen, B.H.; Zhou, J.T.; Zhang, J.C.; Zhu, C. From rank estimation to rank approximation: Rank residual constraint for image restoration. IEEE Trans. Image Process. 2020, 29, 3254–3269.

- Zeng, H.Z.; Xie, X.Z.; Kong, W.F.; Cui, S.; Ning, J.F. Hyperspectral image denoising via combined non-local self-similarity and local low-rank regularization. IEEE Access 2020, 8, 50190–50208.

- An, J.L.; Lei, J.H.; Song, Y.Z.; Zhang, X.R.; Guo, J.M. Tensor based multiscale low rank decomposition for hyperspectral images dimensionality reduction. Remote Sens. 2019, 11, 1485–1503.

- Nie, F.; Huang, H.; Ding, C. Low-rank matrix recovery via efficient Schatten p-norm minimization. In Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; pp. 655–661.

- Gu, S.H.; Zhang, L.; Zuo, W.M. Weighted nuclear norm minimization with application to image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2862–2869.

- Xie, Y.; Gu, S.; Liu, Y. Weighted Schatten p-norm minimization for image denoising and background subtraction. IEEE Trans. Image Process. 2016, 25, 4842–4857.

- Zhang, H.M.; Qian, J.; Zhang, B.; Yang, J.; Gong, C.; Wei, Y. Low-rank matrix recovery via modified Schatten-p norm minimization with convergence guarantees. IEEE Trans. Image Process. 2020, 29, 3132–3142.

- Zuo, W.M.; Meng, D.Y.; Zhang, L. A generalized iterated shrinkage algorithm for non-convex sparse coding. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 217–224.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a fast and flexible solution for CNN-based image denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622.

Noise standard deviation σ = 20

| Image | BM3D | WNNM | WSNM | FFDNet | WSNLEC |
|---|---|---|---|---|---|
| C.Man | 30.28 | 29.31 | 30.72 | 31.03 | 31.15 |
| House | 33.75 | 31.81 | 33.99 | 34.06 | 34.42 |
| Peppers | 31.32 | 29.42 | 31.55 | 31.72 | 32.02 |
| Starfish | 29.52 | 28.38 | 30.28 | 30.43 | 30.63 |
| Monarch | 30.32 | 29.07 | 31.25 | 31.42 | 31.59 |
| Airplane | 29.47 | 28.70 | 29.93 | 30.10 | 30.21 |
| Parrot | 29.80 | 28.95 | 30.10 | 30.42 | 30.51 |
| Lena | 33.04 | 31.30 | 33.13 | 33.47 | 33.75 |
| Barbara | 31.58 | 29.88 | 32.11 | 31.09 | 31.62 |
| Boat | 30.85 | 29.49 | 30.98 | 31.17 | 31.41 |
| Man | 30.57 | 29.34 | 30.72 | 31.05 | 31.22 |
| Couple | 30.74 | 29.22 | 30.74 | 31.15 | 31.37 |
| Average | 30.94 | 29.57 | 31.29 | 31.43 | 31.66 |
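The Average row can be checked directly from the per-image values. The snippet copies the σ = 20 results, recomputes the column means, and counts on how many images each method scores highest. The metric is assumed to be PSNR in dB, which is not stated in this part of the text. The names `ROWS`, `REPORTED_AVERAGE` and `wins` are illustrative only.

```python
import numpy as np

# Per-image results copied from the sigma = 20 table
# (metric assumed to be PSNR in dB; columns follow the table order).
METHODS = ["BM3D", "WNNM", "WSNM", "FFDNet", "WSNLEC"]
ROWS = {
    "C.Man":    [30.28, 29.31, 30.72, 31.03, 31.15],
    "House":    [33.75, 31.81, 33.99, 34.06, 34.42],
    "Peppers":  [31.32, 29.42, 31.55, 31.72, 32.02],
    "Starfish": [29.52, 28.38, 30.28, 30.43, 30.63],
    "Monarch":  [30.32, 29.07, 31.25, 31.42, 31.59],
    "Airplane": [29.47, 28.70, 29.93, 30.10, 30.21],
    "Parrot":   [29.80, 28.95, 30.10, 30.42, 30.51],
    "Lena":     [33.04, 31.30, 33.13, 33.47, 33.75],
    "Barbara":  [31.58, 29.88, 32.11, 31.09, 31.62],
    "Boat":     [30.85, 29.49, 30.98, 31.17, 31.41],
    "Man":      [30.57, 29.34, 30.72, 31.05, 31.22],
    "Couple":   [30.74, 29.22, 30.74, 31.15, 31.37],
}
REPORTED_AVERAGE = [30.94, 29.57, 31.29, 31.43, 31.66]

scores = np.array(list(ROWS.values()))  # shape (12 images, 5 methods)
means = scores.mean(axis=0)             # column-wise arithmetic mean
best = scores.argmax(axis=1)            # index of the highest-scoring method per image
wins = {m: int(np.sum(best == j)) for j, m in enumerate(METHODS)}
gain_vs_ffdnet = means[METHODS.index("WSNLEC")] - means[METHODS.index("FFDNet")]

for m, mu, rep in zip(METHODS, means, REPORTED_AVERAGE):
    print(f"{m:7s} mean={mu:.4f} reported={rep:.2f}")
print("images won:", wins)
print(f"average gain of WSNLEC over FFDNet: {gain_vs_ffdnet:.4f}")
```

Run on these values, the recomputed means agree with the Average row to two decimals, and WSNLEC scores highest on 11 of the 12 images. Barbara is the exception, where WSNM is higher.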

Noise standard deviation σ = 30

| Image | BM3D | WNNM | WSNM | FFDNet | WSNLEC |
|---|---|---|---|---|---|
| C.Man | 28.58 | 27.65 | 28.78 | 29.28 | 29.38 |
| House | 31.91 | 30.43 | 32.58 | 32.57 | 32.97 |
| Peppers | 29.15 | 27.85 | 29.59 | 29.87 | 30.13 |
| Starfish | 27.45 | 26.67 | 28.01 | 28.34 | 28.25 |
| Monarch | 28.39 | 27.39 | 29.02 | 29.39 | 29.57 |
| Airplane | 27.61 | 26.68 | 27.92 | 28.13 | 28.21 |
| Parrot | 27.97 | 26.92 | 28.33 | 28.65 | 28.76 |
| Lena | 31.28 | 29.93 | 31.50 | 31.82 | 32.13 |
| Barbara | 29.60 | 28.55 | 30.31 | 29.07 | 29.69 |
| Boat | 28.97 | 28.14 | 29.20 | 29.45 | 29.67 |
| Man | 28.89 | 28.03 | 28.95 | 29.31 | 29.46 |
| Couple | 28.82 | 27.84 | 28.96 | 29.33 | 29.54 |
| Average | 29.05 | 28.01 | 29.43 | 29.60 | 29.81 |

Noise standard deviation σ = 50

| Image | BM3D | WNNM | WSNM | FFDNet | WSNLEC |
|---|---|---|---|---|---|
| C.Man | 25.96 | 25.74 | 26.39 | 27.24 | 27.32 |
| House | 29.61 | 28.46 | 30.56 | 30.36 | 30.85 |
| Peppers | 26.67 | 26.01 | 27.10 | 27.41 | 27.63 |
| Starfish | 24.81 | 24.57 | 25.25 | 25.68 | 25.86 |
| Monarch | 25.76 | 25.34 | 26.22 | 26.92 | 27.10 |
| Airplane | 25.24 | 24.61 | 25.42 | 25.79 | 25.88 |
| Parrot | 25.89 | 25.54 | 26.12 | 26.57 | 26.67 |
| Lena | 28.99 | 28.14 | 29.22 | 29.63 | 29.94 |
| Barbara | 27.04 | 26.75 | 27.83 | 26.41 | 26.97 |
| Boat | 26.78 | 26.23 | 26.82 | 27.30 | 27.51 |
| Man | 26.75 | 26.28 | 26.93 | 27.26 | 27.41 |
| Couple | 26.45 | 26.01 | 26.64 | 27.04 | 27.24 |
| Average | 26.66 | 26.14 | 27.04 | 27.30 | 27.53 |

Noise standard deviation σ = 60

| Image | BM3D | WNNM | WSNM | FFDNet | WSNLEC |
|---|---|---|---|---|---|
| C.Man | 25.50 | 25.03 | 25.63 | 26.52 | 26.61 |
| House | 28.57 | 27.98 | 29.49 | 29.50 | 30.09 |
| Peppers | 25.86 | 24.79 | 26.09 | 26.53 | 26.81 |
| Starfish | 23.98 | 23.67 | 24.41 | 24.74 | 24.93 |
| Monarch | 24.84 | 24.53 | 25.40 | 26.02 | 26.24 |
| Airplane | 24.54 | 23.83 | 24.57 | 25.03 | 25.14 |
| Parrot | 25.07 | 24.37 | 25.32 | 25.83 | 25.95 |
| Lena | 28.13 | 27.31 | 28.37 | 28.83 | 29.18 |
| Barbara | 26.21 | 25.88 | 27.02 | 25.46 | 26.04 |
| Boat | 26.03 | 25.52 | 26.13 | 26.54 | 26.77 |
| Man | 26.11 | 25.57 | 26.18 | 26.56 | 26.74 |
| Couple | 25.63 | 25.21 | 25.81 | 26.24 | 26.46 |
| Average | 25.87 | 25.31 | 26.20 | 26.48 | 26.75 |

Noise standard deviation σ = 75

| Image | BM3D | WNNM | WSNM | FFDNet | WSNLEC |
|---|---|---|---|---|---|
| C.Man | 24.27 | 23.83 | 24.63 | 25.62 | 25.64 |
| House | 27.39 | 26.23 | 28.57 | 28.14 | 28.98 |
| Peppers | 24.75 | 23.78 | 25.17 | 25.45 | 25.67 |
| Starfish | 23.14 | 22.19 | 23.14 | 23.62 | 23.75 |
| Monarch | 23.72 | 22.92 | 24.19 | 24.90 | 25.07 |
| Airplane | 23.37 | 23.12 | 23.72 | 24.12 | 24.16 |
| Parrot | 24.07 | 23.17 | 24.26 | 24.91 | 25.00 |
| Lena | 26.94 | 26.28 | 27.54 | 27.86 | 28.17 |
| Barbara | 25.02 | 24.61 | 25.90 | 24.29 | 24.96 |
| Boat | 25.10 | 24.38 | 25.09 | 25.63 | 25.74 |
| Man | 25.28 | 24.57 | 25.32 | 25.73 | 25.82 |
| Couple | 24.67 | 23.98 | 24.80 | 25.29 | 25.42 |
| Average | 24.81 | 24.09 | 25.19 | 25.48 | 25.70 |

Noise standard deviation σ = 100

| Image | BM3D | WNNM | WSNM | FFDNet | WSNLEC |
|---|---|---|---|---|---|
| C.Man | 22.29 | 22.55 | 23.45 | 24.30 | 24.25 |
| House | 25.50 | 25.03 | 27.02 | 26.98 | 27.65 |
| Peppers | 22.87 | 22.60 | 23.43 | 24.03 | 24.23 |
| Starfish | 21.49 | 21.05 | 22.11 | 22.25 | 22.39 |
| Monarch | 21.45 | 21.31 | 22.99 | 23.40 | 23.61 |
| Airplane | 21.85 | 21.72 | 22.39 | 22.90 | 22.95 |
| Parrot | 21.96 | 21.95 | 22.90 | 23.72 | 23.80 |
| Lena | 25.32 | 25.03 | 26.31 | 26.61 | 26.93 |
| Barbara | 22.80 | 23.53 | 24.42 | 22.89 | 23.56 |
| Boat | 23.56 | 23.21 | 23.91 | 24.44 | 24.53 |
| Man | 23.98 | 23.67 | 24.34 | 24.66 | 24.77 |
| Couple | 23.37 | 23.02 | 23.54 | 24.08 | 24.14 |
| Average | 23.04 | 22.89 | 23.90 | 24.19 | 24.40 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xu, J.; Cheng, Y.; Ma, Y. Weighted Schatten p-Norm Low Rank Error Constraint for Image Denoising. Entropy 2021, 23, 158. https://doi.org/10.3390/e23020158
