Statistical and Visual Analysis of Audio, Text, and Image Features for Multi-Modal Music Genre Recognition

Abstract

1. Introduction

2. Related Work

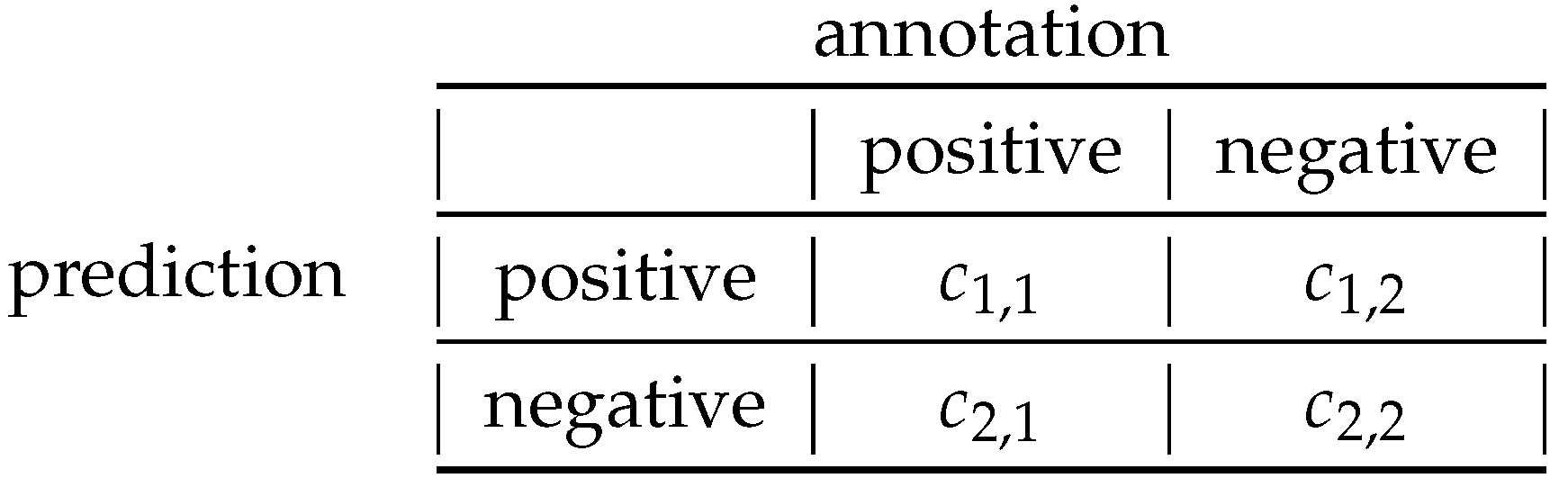

3. A Multi-Modal Approach to Music Genre Recognition

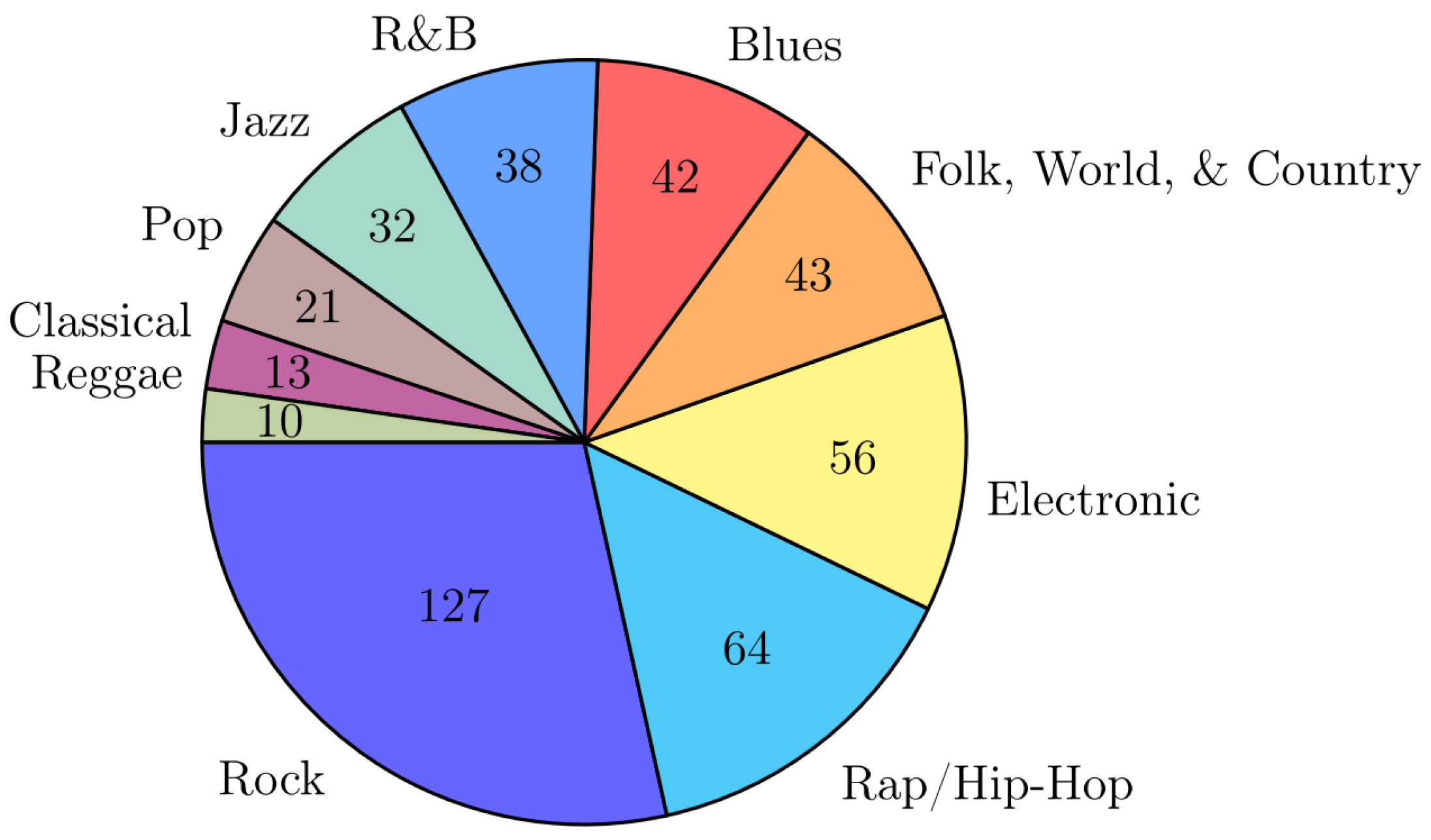

3.1. Data Set

3.2. General Approach

3.3. Features

3.3.1. Audio Features

Tempo and Rhythm

Timbre

Harmony

Semantic Features

3.3.2. Text Features

Bag-of-Words (BoW) Feature

Doc2vec Feature

3.3.3. Image Features

Bag-of-Features (BoF) with SIFT Descriptors

Features of Deep Convolutional Neural Networks

3.3.4. Reduction of Dimension by Principal Component Analysis

3.4. Classifiers

Linear Support Vector Machine

Random Forest

3.5. Fusion of Binary Models Trained for Individual Genres and Modalities

4. Evaluation

4.1. Configuration of Experiments

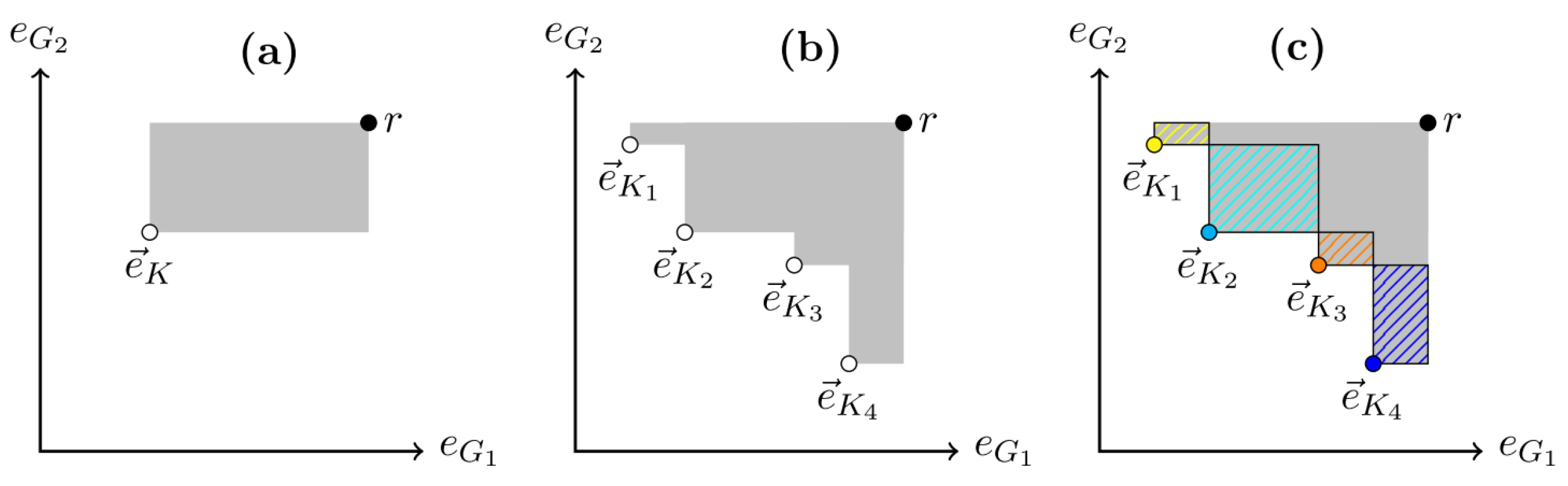

4.2. Visual Data Analysis

4.2.1. Aggregation of the Same Combinations of Features

SIFT_BOF (v = 400, pca = 16) + TIMBRE,

SIFT_BOF (v = 400, pca = 64) + TIMBRE,

4.2.2. Removal of Dominated Results

4.2.3. Filtering of Less Relevant Results

4.3. Feature-Related Hypotheses

- :

- The classification with audio-based features achieves a better error rate than the classification with non-audio-based features. Feature combinations are not examined here.

- :

- The combination of features of different modalities leads to a better error rate. More specifically:

- :

- The combination of any features of two modalities results in a better error rate compared to using any features of one of the two modalities.

- :

- The combination of any features of three modalities results in a better error rate compared to using any features of two of the three modalities.

- :

- Non-audio-based features achieve a better error rate for certain genres whose error rate is high when classified via audio features.

- :

- The use of principal component analysis for text and image features does not degrade the results with respect to the classification error.

- :

- The use of BoW features without PCA achieves the same error rate as the use of BoW features with a PCA with dimensionality reduction to 64 dimensions.

- :

- The use of BoW features without PCA achieves the same error rate as the use of BoW features with a PCA with dimensionality reduction to 32 dimensions.

- :

- The use of BoF features without PCA achieves the same error rate as the use of BoF features with a PCA with dimensionality reduction to 64 dimensions.

- and are not combinations of individual feature groups.

- and are only features of type BoW, doc2vec, or BoF.

- and are the same feature type.

- does not use PCA, uses PCA.

- belongs to a partition of exactly one modality (e.g., audio),

- belongs to a partition of two modalities (e.g., audio + text),

- the modalities of the partition of include the modality of the partition of .

- belongs to a partition of exactly two modalities (e.g., audio + text),

- belongs to a partition of exactly three modalities (e.g., audio + text + image),

- the modalities of ’s partition include all modalities of the partition of .
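
The pairing conditions listed above can be sketched as a small set check. This is a minimal illustration only: the function name `is_valid_pair` and the representation of a partition as a set of modality names are assumptions made here, not part of the original study. It treats a pair as eligible when the smaller partition has one or two modalities, the larger has exactly one more, and the larger contains every modality of the smaller.

```python
def is_valid_pair(modalities_a, modalities_b):
    """Return True if a result from partition A may be compared with one from B.

    Hypothetical helper: A must use one or two modalities, B must use
    exactly one modality more, and B must include all modalities of A.
    """
    a = frozenset(modalities_a)
    b = frozenset(modalities_b)
    if len(a) not in (1, 2):
        return False
    # "a < b" is the proper-subset test for sets.
    return len(b) == len(a) + 1 and a < b


# One modality against two modalities that include it.
print(is_valid_pair({"audio"}, {"audio", "text"}))
# Two modalities against all three.
print(is_valid_pair({"audio", "text"}, {"audio", "text", "image"}))
# Not eligible: the larger partition does not contain "image".
print(is_valid_pair({"image"}, {"audio", "text"}))
```

Under these assumptions, a comparison such as audio against text + image is excluded, which matches the pairs that appear in the test tables of Appendix E.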

4.4. Classifier-Related Hypotheses

- :

- The different classification methods have different error rates for the same features.

- :

- There are genres for which certain classifiers achieve a better error rate than other classifiers for the same features.

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| BoF | Bag-of-Features |

| BoW | Bag-of-Words |

| CARTs | Classification and Regression Trees |

| DNNF | Deep Neural Network Features |

| MFCCs | Mel Frequency Cepstral Coefficients |

| SIFT | Scale-Invariant Feature Transform |

| SVM | Support Vector Machine |

| TF-IDF | Term Frequency-Inverse Document Frequency |

| PCA | Principal Component Analysis |

Appendix A. Data Sets

- 1517 artist data set:

- 1000 songs data set:

- SALAMI data set:

- SLAC data set:

- CDs data set:

Appendix B. Reassignment of Music Genres

| Original Genre | New Genre |

| Alternative Pop/Rock | - |

| Alternative and Punk | Rock |

| Alternative-Rock | Rock |

| Ambient | Electronic |

| Avant-Garde | Jazz |

| Big Band | Jazz |

| Big Beat | Electronic |

| Bluegrass | Folk, World, & Country |

| Blues-Contemporary Blues | Blues |

| Blues-Country Blues | Blues |

| Blues-Urban Blues | Blues |

| Classic | Classical |

| Classical-Classical | Classical |

| Country | Folk, World, & Country |

| Dance | Electronic |

| Dance Pop | Pop |

| Deutscher Rock Pop | - |

| Disco | Electronic |

| Easy Listening and Vocals | - |

| Electronic and Dance | Electronic |

| Electronica | Electronic |

| Eletronica | Electronic |

| Euro-Techno | Electronic |

| Folk | Folk, World, & Country |

| Funk/Soul | R&B |

| Grunge | Rock |

| Heavy Metal | Rock |

| Hip Hop/Rap | Rap/Hip-Hop |

| Hip Hop | Rap/Hip-Hop |

| Hip-Hop | Rap/Hip-Hop |

| House | Electronic |

| Humor | - |

| Indie | Rock |

| International | Folk, World, & Country |

| Jazz & Vocal | Jazz |

| Jazz-Acid Jazz | Jazz |

| Jazz-Bebop | Jazz |

| Jazz-Dixieland | Jazz |

| Jazz-Post-Bop | Jazz |

| Jazz-Soul Jazz | Jazz |

| Kölsch-Rock | Rock |

| Latin | - |

| Metal | Rock |

| Modern Folk-Alternative Folk | Folk, World, & Country |

| Modern Folk-Singer/Songwriter | Folk, World, & Country |

| Non-Music | - |

| Oldies | - |

| Other | - |

| Pop/Rock | - |

| PopRock | - |

| Progressive Rock | Rock |

| R and B and Soul | R&B |

| R&B-Contemporary R&B | R&B |

| R&B-Funk | R&B |

| R&B-Gospel | R&B |

| R&B-Rock & Roll | R&B |

| R&B-Soul | R&B |

| Rap | Rap/Hip-Hop |

| Rave | Electronic |

| Religious | - |

| RnB | R&B |

| Rock & Pop | - |

| Rock-Alternative Metal/Punk | Rock |

| Rock-Classic Rock | Rock |

| Rock-Metal | Rock |

| Rock-Roots Rock | Rock |

| Rock Pop | - |

| Rock and Pop | - |

| Soul | R&B |

| Soundtrack | - |

| Soundtracks and More | - |

| Stage & Screen | - |

| Symphonic Metal | Rock |

| Synthpop | Pop |

| Trance | Electronic |

| Trip-Hop | Rap/Hip-Hop |

| World-African | Folk, World, & Country |

| World-Calypso | Folk, World, & Country |

| World-Celtic | Folk, World, & Country |

| World-Chanson | Folk, World, & Country |

| World-Cuban | Folk, World, & Country |

| World-Fusion | Folk, World, & Country |

| World-Klezmer | Folk, World, & Country |

| World-U.S. Traditional | Folk, World, & Country |

| World | Folk, World, & Country |

Appendix C. Audio Features

Appendix C.1. Audio Features of the TEMPO Feature Group. For Each Feature, the Average and Standard Deviation per Calculated Time Window Are Calculated

| Feature | AMUSE-ID | Dim. | Reference |

| Duration | 400 | 1 | Theimer et al. [78] |

| Characteristics of fluctuation patterns | 410 | 7 | Theimer et al. [78] |

| Rhythmic clarity | 418 | 1 | Lartillot [79] |

| Estimated onset number per minute | 420 | 1 | Theimer et al. [78] |

| Estimated beat number per minute | 421 | 1 | Theimer et al. [78] |

| Estimated tatum number per minute | 422 | 1 | Theimer et al. [78] |

| Tempo based on onset times | 425 | 1 | Lartillot [79] |

| Five peaks of fluctuation curves summed across all bands | 427 | 5 | Lartillot [79] |

Appendix C.2. Audio Features of the Feature Group TIMBRE. For Each Feature, the Average and Standard Deviation per Calculated Time Window Are Calculated

| Domain | Feature | AMUSE-ID | Dim. | Reference |

| Time | Root mean square | 4 | 1 | Theimer et al. [78] |

| Time | Low energy | 6 | 1 | Theimer et al. [78] |

| Time | RMS peak number in 3 s | 11 | 1 | Lartillot [79] |

| Time | RMS peak number above mean amplitude in 3 s | 12 | 1 | Lartillot [79] |

| Frequency | Tristimulus | 1 | 2 | Theimer et al. [78] |

| Frequency | Spectral centroid | 14 | 1 | Theimer et al. [78] |

| Frequency | Spectral irregularity | 15 | 1 | Lartillot [79] |

| Frequency | Spectral bandwidth | 16 | 1 | Theimer et al. [78] |

| Frequency | Spectral skewness | 17 | 1 | Theimer et al. [78] |

| Frequency | Spectral kurtosis | 18 | 1 | Theimer et al. [78] |

| Frequency | Spectral crest factor | 19 | 4 | Theimer et al. [78] |

| Frequency | Spectral flatness measure | 20 | 4 | Theimer et al. [78] |

| Frequency | Spectral extent | 21 | 1 | Theimer et al. [78] |

| Frequency | Spectral flux | 22 | 1 | Theimer et al. [78] |

| Frequency | Sub-band energy ratio | 25 | 4 | Theimer et al. [78] |

| Frequency | Spectral slope | 29 | 1 | Theimer et al. [78] |

| Phase | Angles in phase domain | 32 | 1 | Theimer et al. [78] |

| Phase | Distances in phase domain | 33 | 1 | Theimer et al. [78] |

| Cepstral | Mel frequency cepstral coefficients (MIR-Toolbox-Implementation) | 39 | 13 | Theimer et al. [78] |

| Cepstral | Delta MFCCs (MIR-Toolbox-Implementation) | 48 | 13 | Lartillot [79] |

Appendix C.3. Features of the HARMONY Feature Group. For Each Feature, the Average and Standard Deviation per Calculated Time Window Are Calculated

| Feature | AMUSE-ID | Dim. | Reference |

| Fundamental frequency | 200 | 1 | Theimer et al. [78] |

| Inharmonicity | 217 | 1 | Lartillot [79] |

| Chroma Energy Normalized Statistics | 218 | 12 | Müller [80] |

| Chroma DCT-Reduced log Pitch | 219 | 12 | Müller and Ewert [81] |

| Local tuning (NNLS Implementation) | 253 | 1 | Mauch and Dixon [82] |

| Harmonic change (NNLS Implementation) | 254 | 1 | Mauch and Dixon [82] |

| Consonance (NNLS Implementation) | 255 | 1 | Mauch and Dixon [82] |

| Number of different chords | 257 | 1 | Vatolkin [43] |

| Number of chord changes | 258 | 1 | Vatolkin [43] |

| Shares of the most frequent 20, 40 and 60 percent of chords with regard to their duration | 259 | 3 | Vatolkin [43] |

| Key and its clarity 4096 | 10202 | 2 | Lartillot [79] |

| Major/minor alignment 4096 | 10203 | 1 | Lartillot [79] |

| Strengths of major keys 4096 | 10209 | 12 | Lartillot [79] |

| Tonal centroid vector 4096 | 10216 | 6 | Lartillot [79] |

| Harmonic change detection function 4096 | 10217 | 1 | Lartillot [79] |

Appendix C.4. Audio Features of the SEMANTIC Feature Group. For Each Feature, the Average and Standard Deviation per Calculated Time Window Are Calculated

| Feature | AMUSE-ID | Dim. | Reference |

| Guitar RF Chord-based | 2001 | 1 | Vatolkin [43] |

| Guitar SVM Chord-based | 2003 | 1 | Vatolkin [43] |

| Piano RF Chord-based | 2021 | 1 | Vatolkin [43] |

| Piano SVM Chord-based | 2023 | 1 | Vatolkin [43] |

| Wind RF Chord-based | 2041 | 1 | Vatolkin [43] |

| Wind SVM Chord-based | 2043 | 1 | Vatolkin [43] |

| Strings RF Chord-based | 2061 | 1 | Vatolkin [43] |

| Strings SVM Chord-based | 2063 | 1 | Vatolkin [43] |

| AMG mood Aggressive best RF model | 4002 | 1 | Vatolkin [43] |

| AMG mood Aggressive best SVM model | 4006 | 1 | Vatolkin [43] |

| AMG mood Energetic best RF model | 4062 | 1 | Vatolkin [43] |

| AMG mood Energetic best SVM model | 4066 | 1 | Vatolkin [43] |

| AMG mood Sentimental best RF model | 4122 | 1 | Vatolkin [43] |

| AMG mood Sentimental best SVM model | 4126 | 1 | Vatolkin [43] |

| AMG mood Stylish best RF model | 4142 | 1 | Vatolkin [43] |

| AMG mood Stylish best SVM model | 4146 | 1 | Vatolkin [43] |

| AMG mood Reflective best RF model | 4102 | 1 | Vatolkin [43] |

| AMG mood Reflective best SVM model | 4106 | 1 | Vatolkin [43] |

| AMG mood Confident best RF model | 4022 | 1 | Vatolkin [43] |

| AMG mood Confident best SVM model | 4026 | 1 | Vatolkin [43] |

| AMG mood Earnest best RF model | 4042 | 1 | Vatolkin [43] |

| AMG mood Earnest best SVM model | 4046 | 1 | Vatolkin [43] |

| AMG mood PartyCelebratory best RF model | 4082 | 1 | Vatolkin [43] |

| AMG mood PartyCelebratory best SVM model | 4086 | 1 | Vatolkin [43] |

| GFKL2011 Activation Level High best RF model | 6002 | 1 | Vatolkin [43] |

| GFKL2011 Activation Level High best SVM model | 6006 | 1 | Vatolkin [43] |

| GFKL2011 Effects Distortion best RF model | 6022 | 1 | Vatolkin [43] |

| GFKL2011 Effects Distortion best SVM model | 6026 | 1 | Vatolkin [43] |

| GFKL2011 Singing clear best RF model | 6042 | 1 | Vatolkin [43] |

| GFKL2011 Singing clear best SVM model | 6046 | 1 | Vatolkin [43] |

| GFKL2011 Singing Range middle best RF model | 6062 | 1 | Vatolkin [43] |

| GFKL2011 Melodic range > octave best RF model | 6242 | 1 | Vatolkin [43] |

| GFKL2011 Melodic range > octave best SVM model | 6246 | 1 | Vatolkin [43] |

| GFKL2011 Melodic range ≤ octave best RF model | 6262 | 1 | Vatolkin [43] |

| GFKL2011 Melodic range ≤ octave best SVM model | 6266 | 1 | Vatolkin [43] |

| GFKL2011 Melodic range linear best RF model | 6282 | 1 | Vatolkin [43] |

| GFKL2011 Melodic range linear best SVM model | 6286 | 1 | Vatolkin [43] |

| GFKL2011 Melodic range volatile best RF model | 6302 | 1 | Vatolkin [43] |

| GFKL2011 Melodic range volatile best SVM model | 6306 | 1 | Vatolkin [43] |

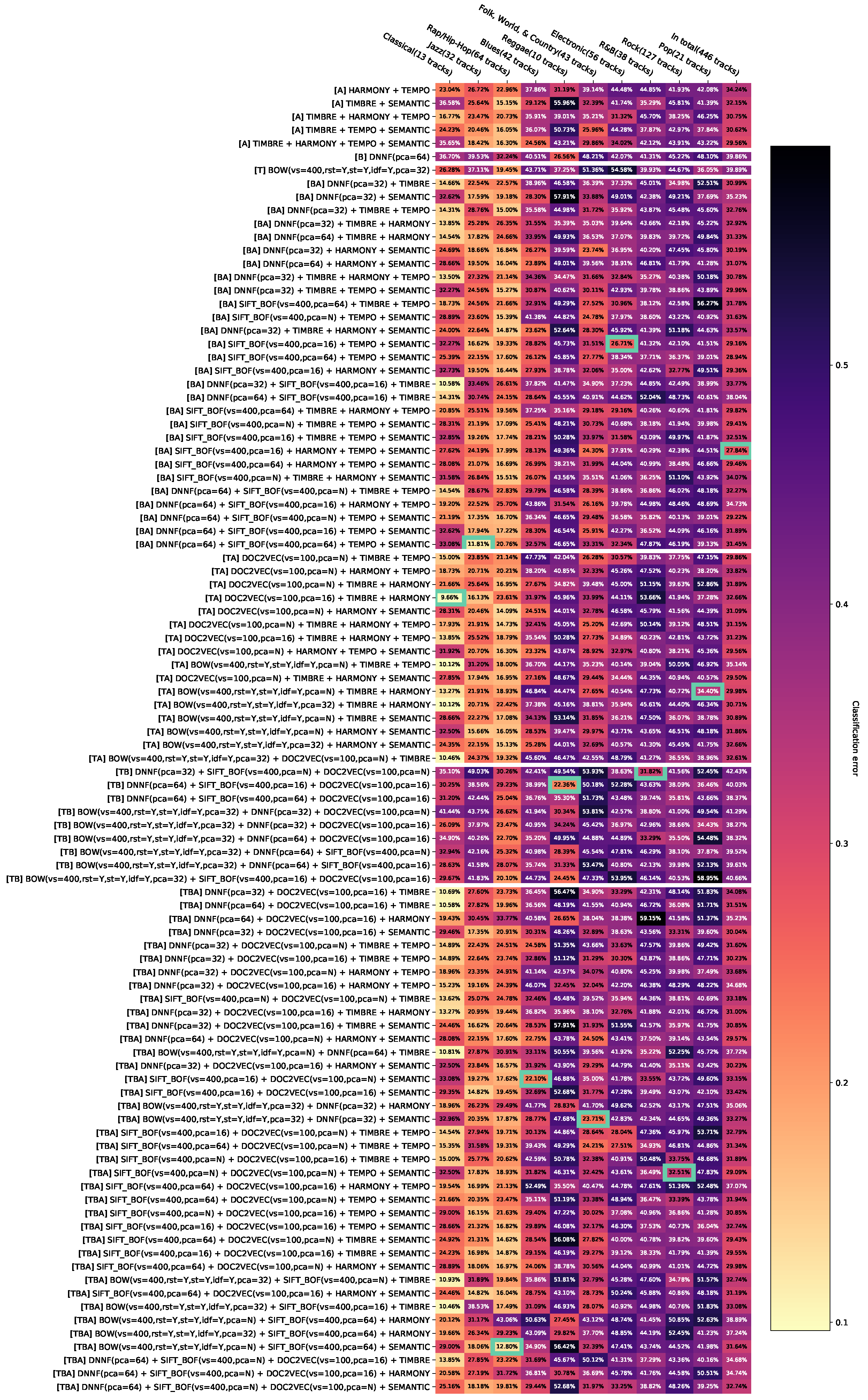

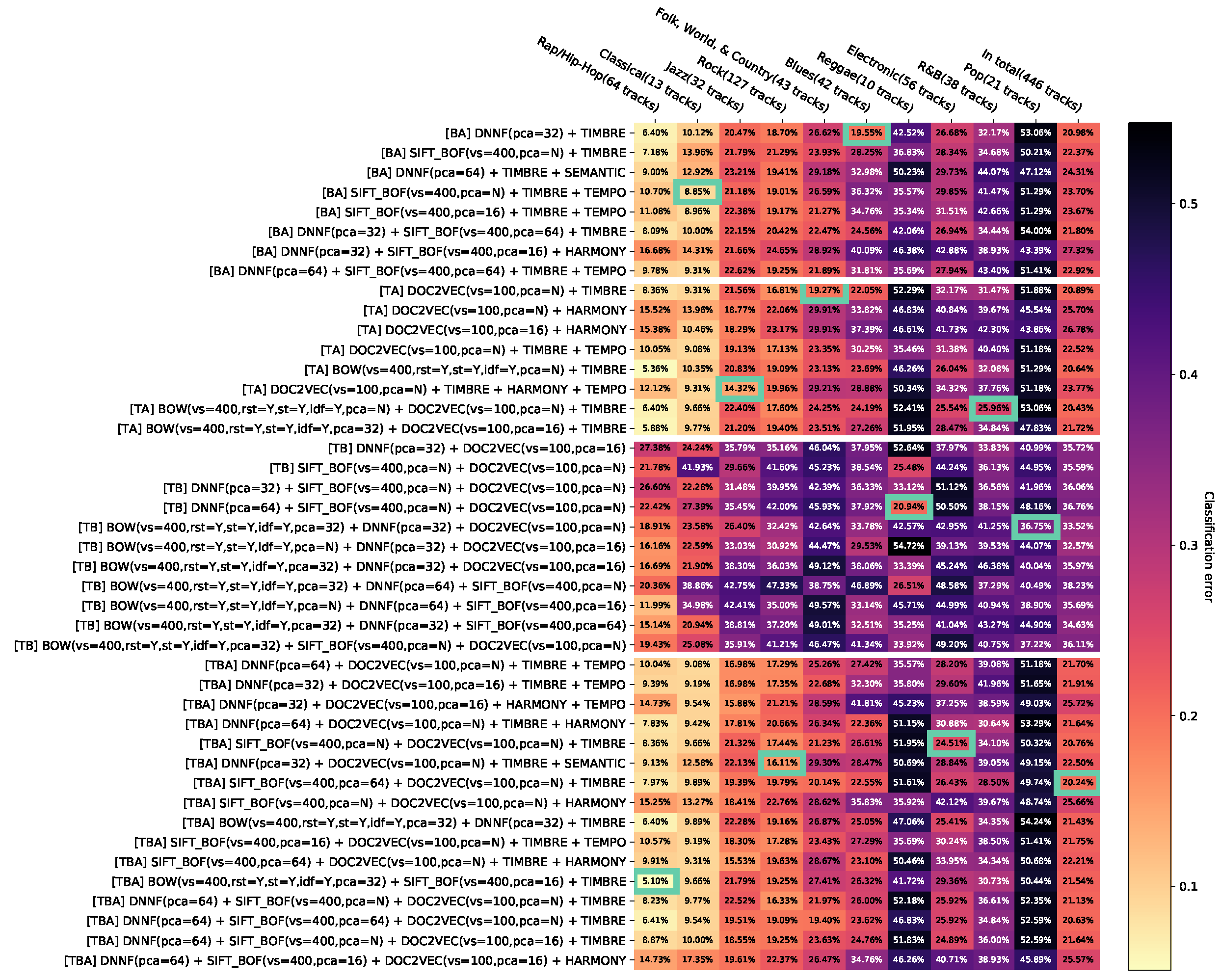

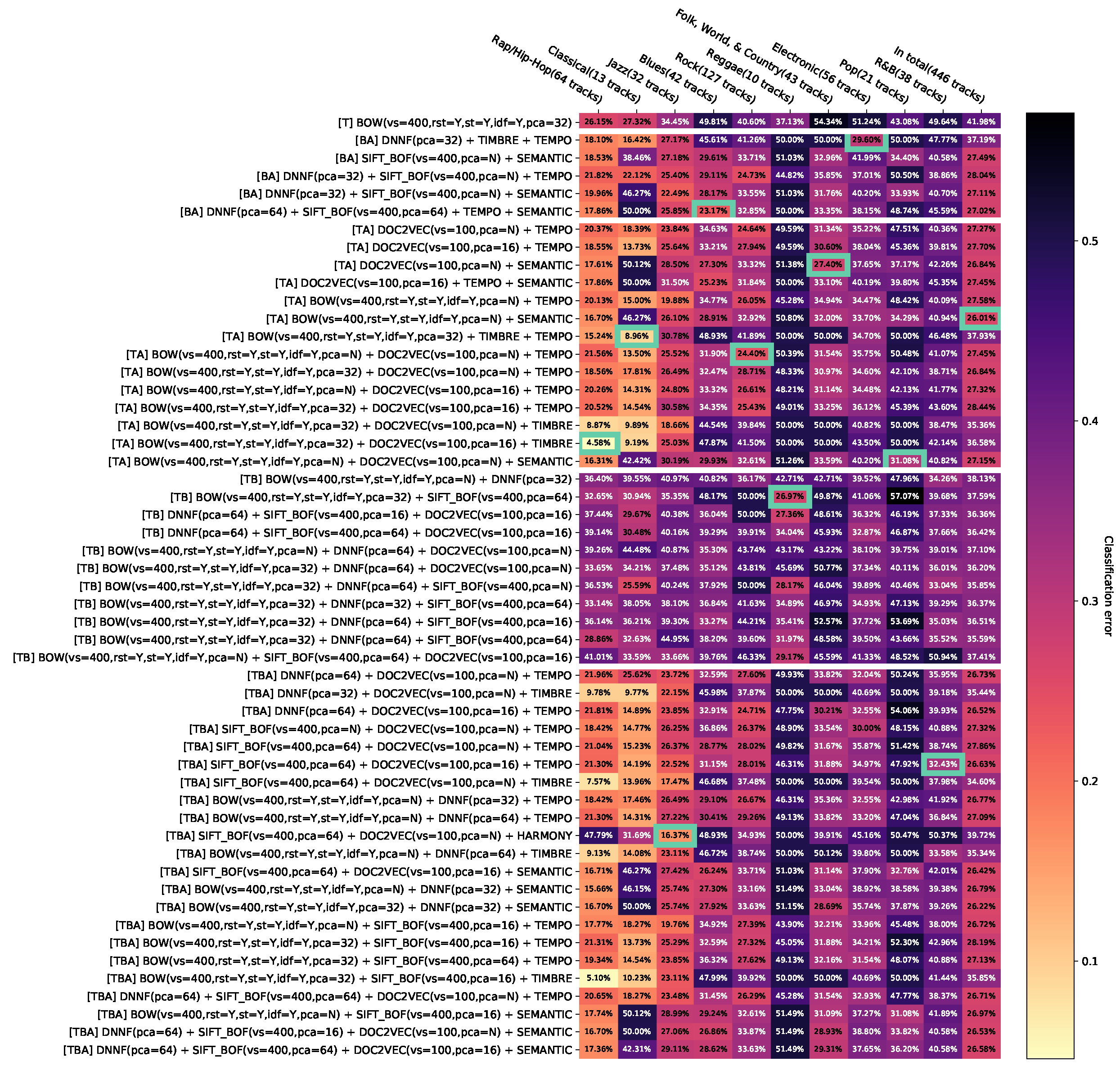

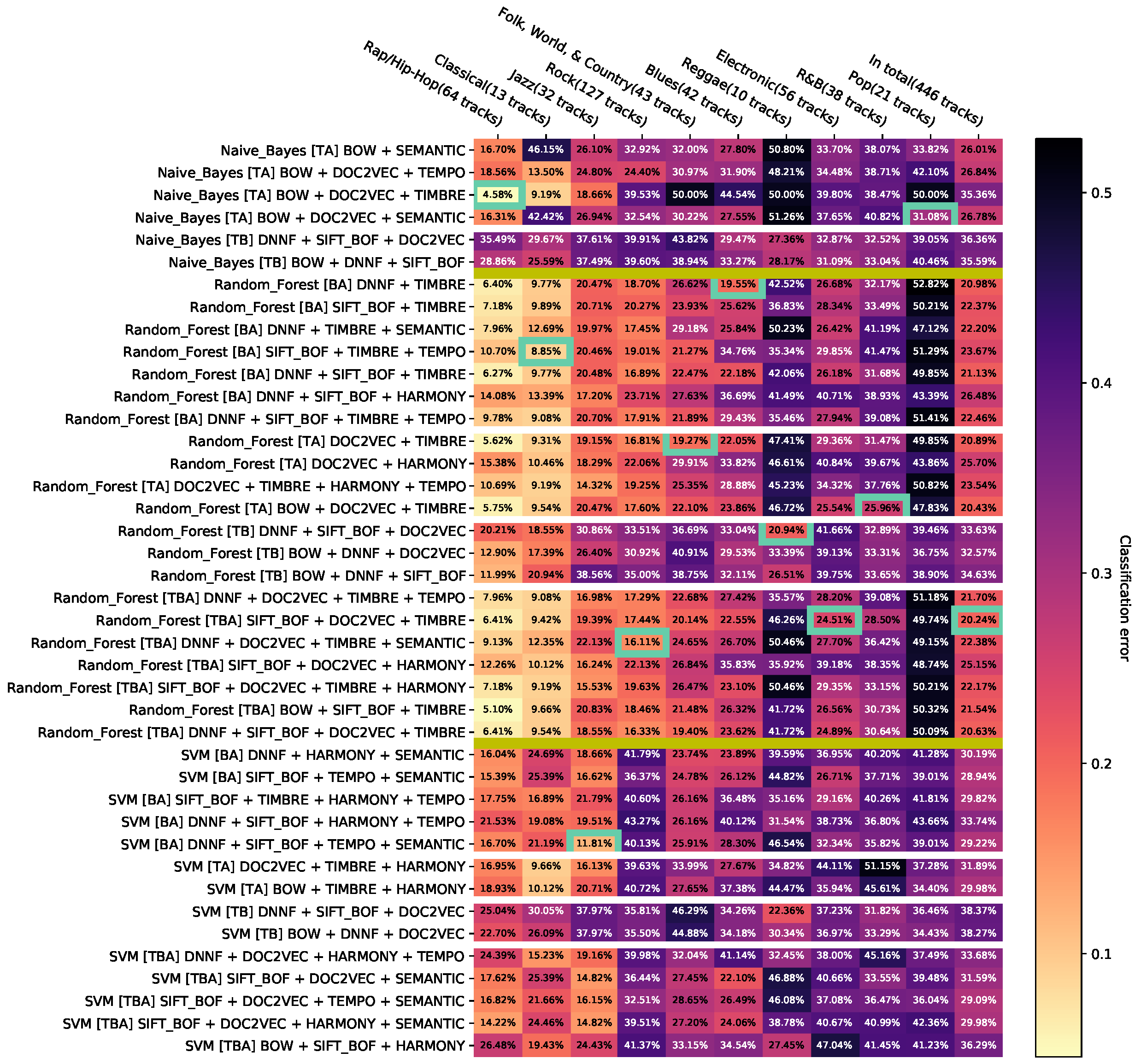

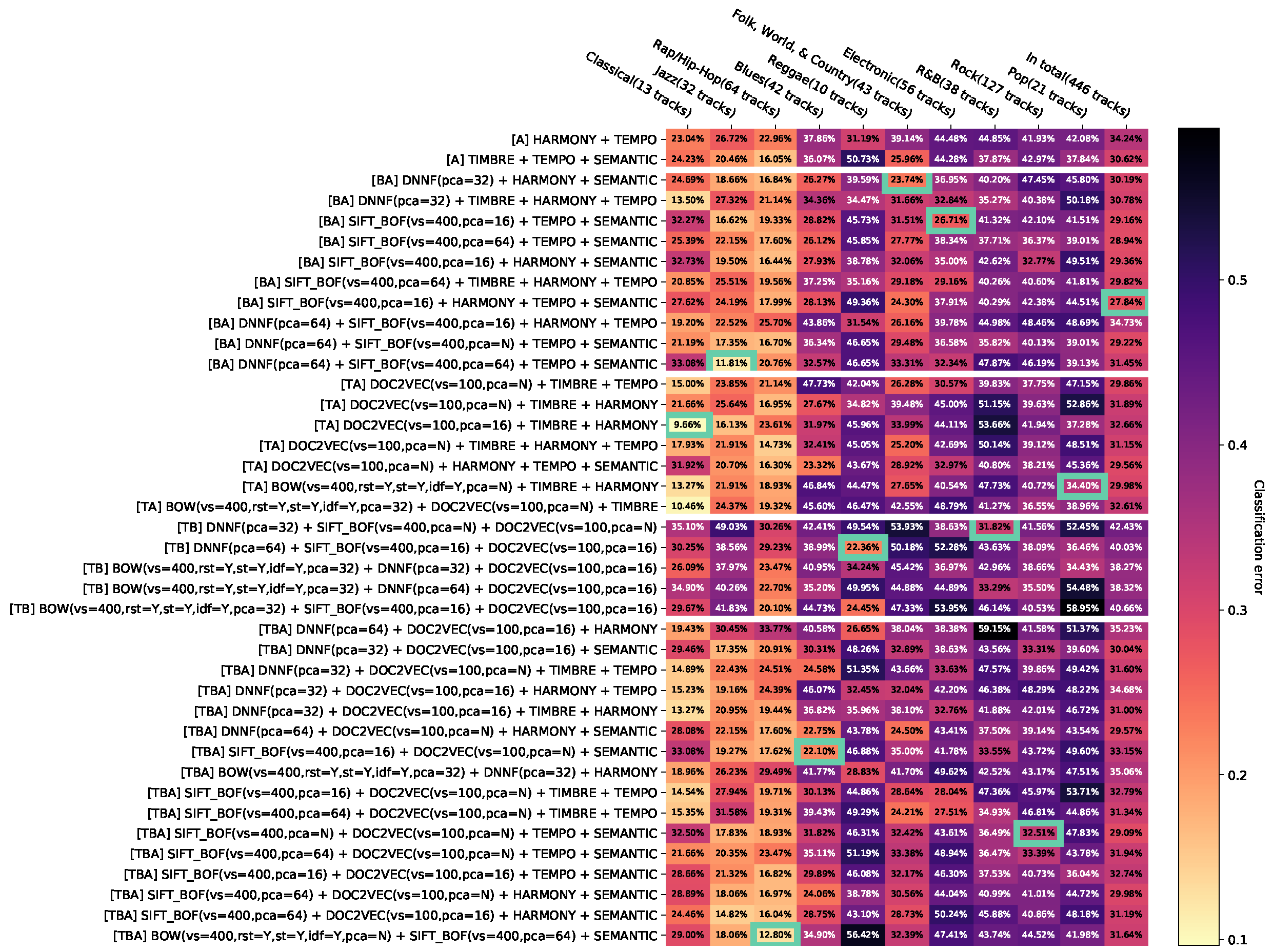

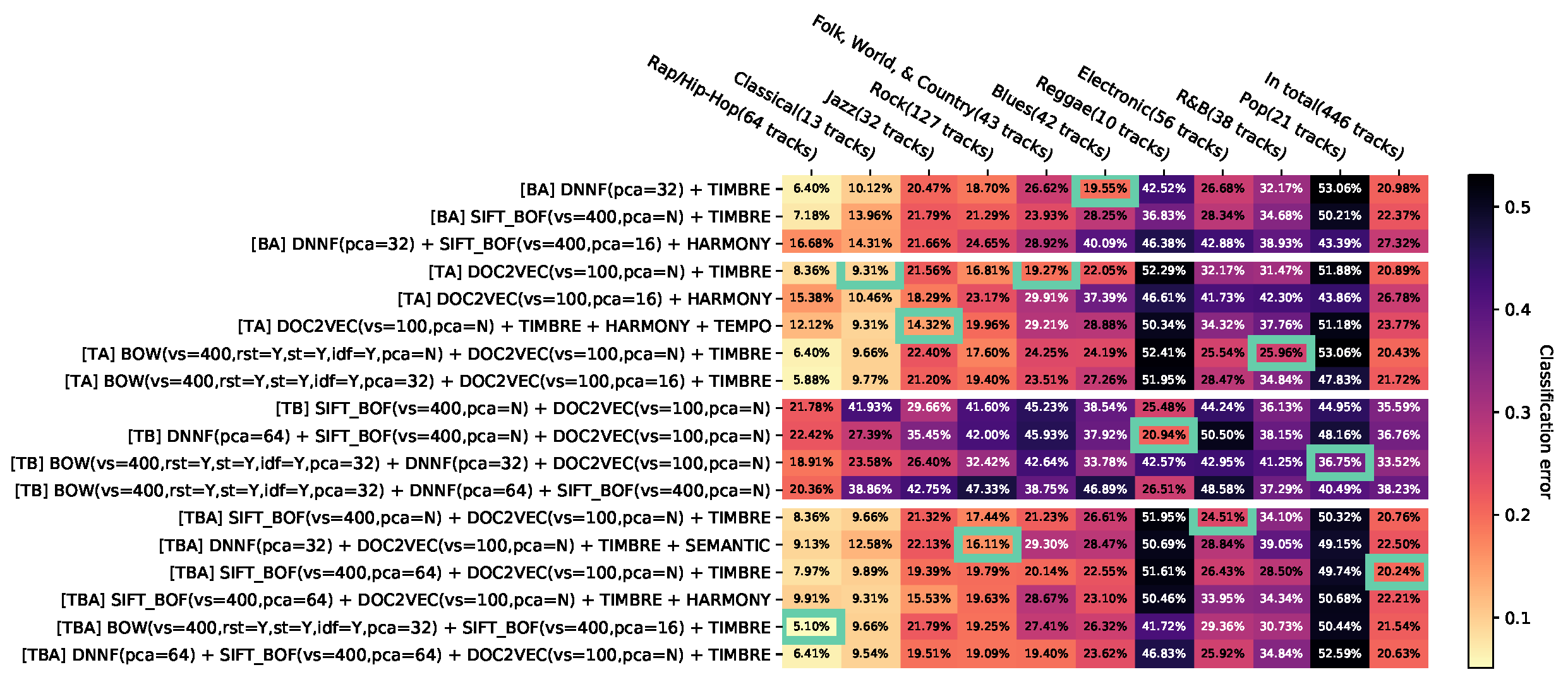

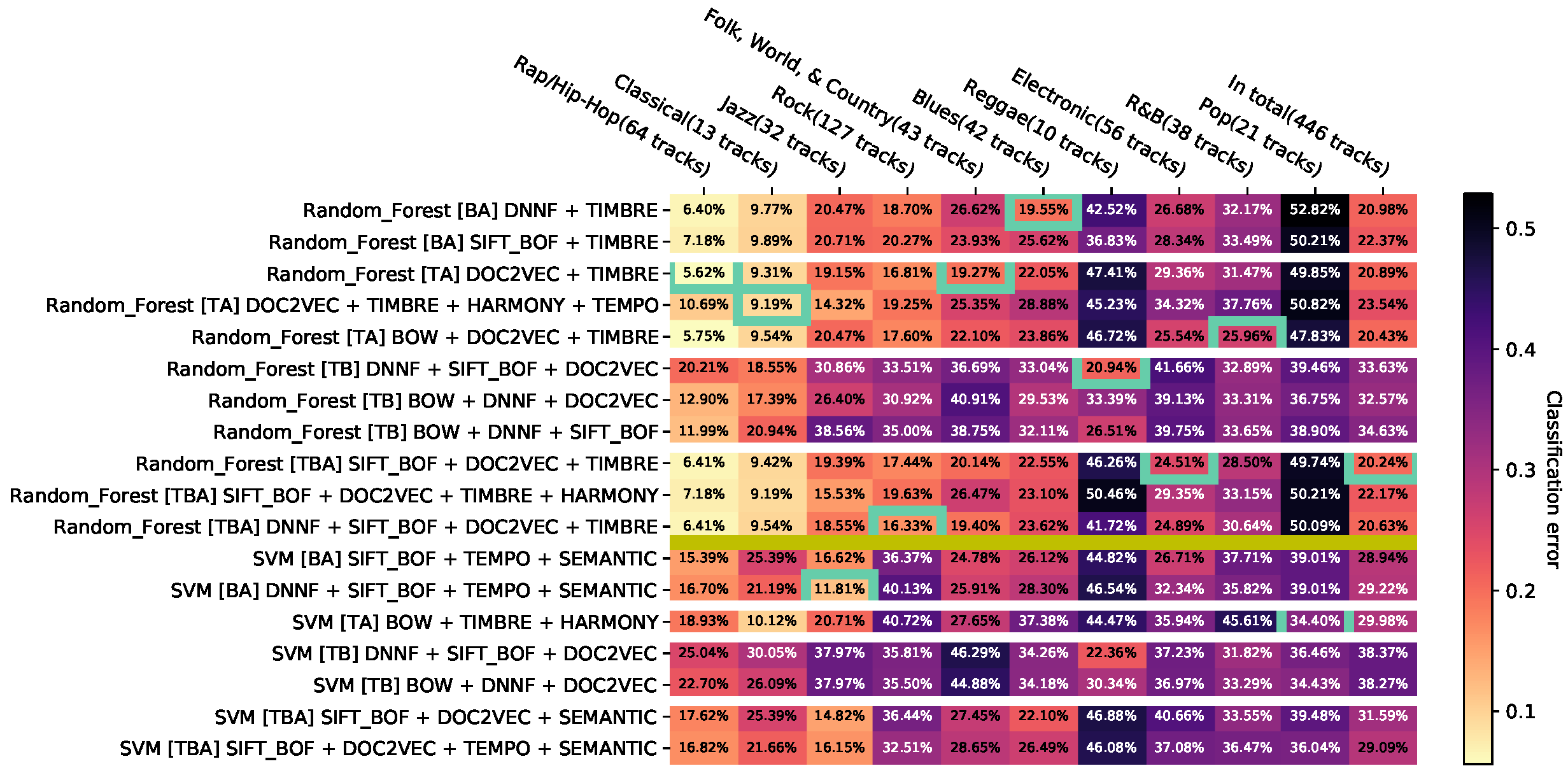

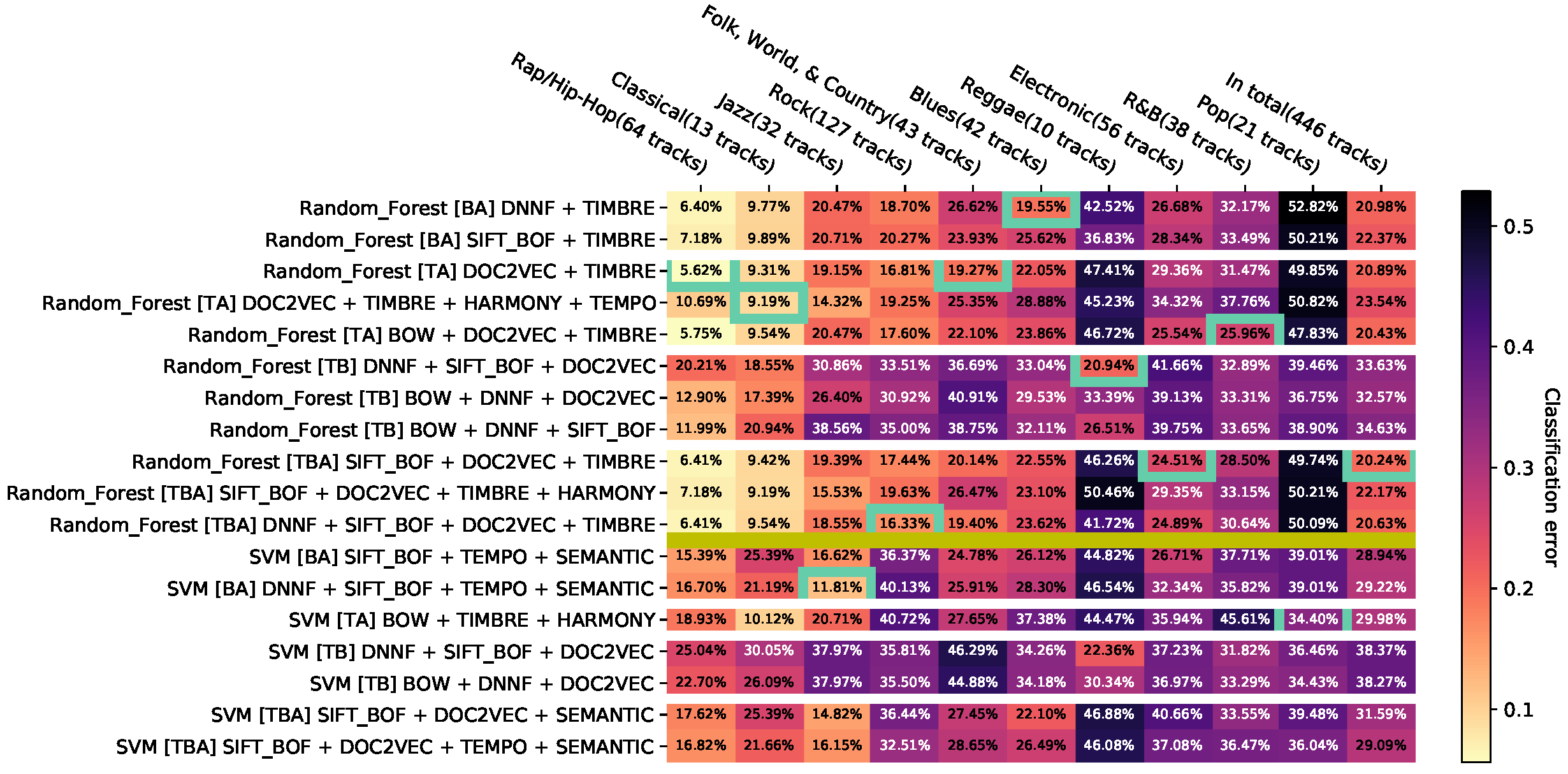

Appendix D. Visualizations of the Test Results

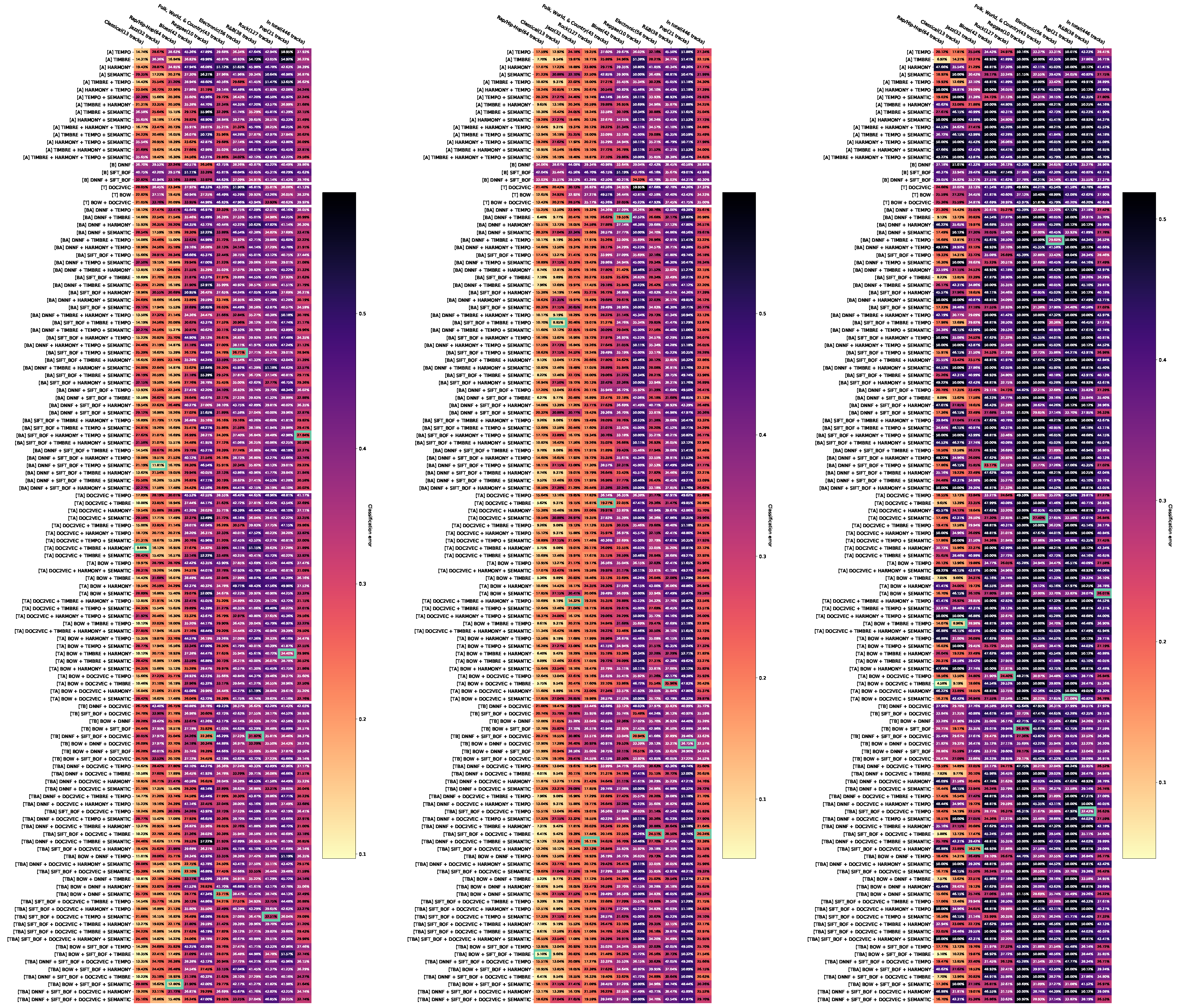

Appendix D.1. Error Rates of the Three Classifiers for the Different Combinations of Features

Appendix D.2. Error Rates of the Three Classifiers with Aggregated Feature Combinations

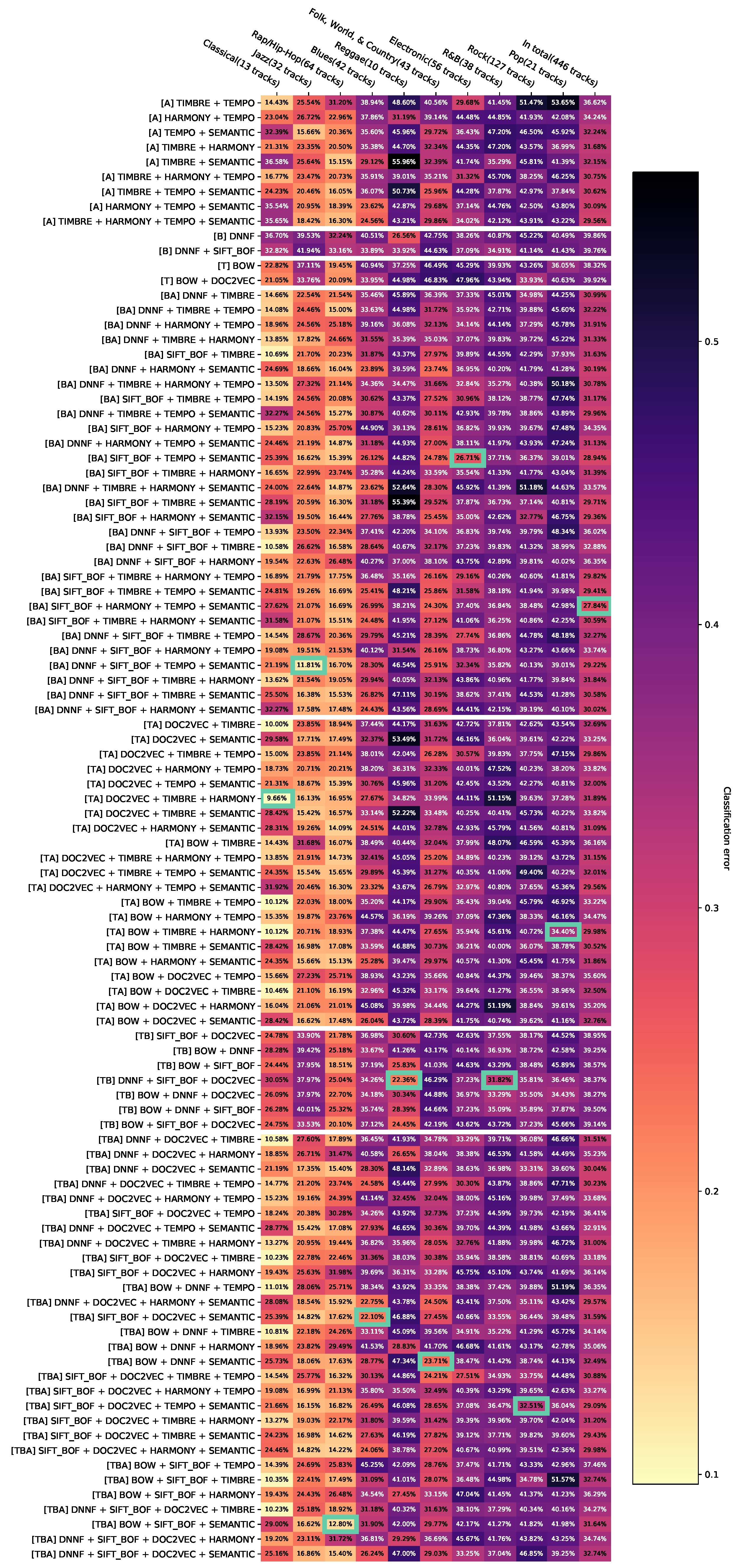

Appendix D.3. Error Rates for Aggregated Feature Combinations with SVM as a Classifier without Dominated Feature Combinations

Appendix D.4. Error Rates for Aggregated Feature Combinations with the Random Forest Classifier without Dominated Feature Combinations

Appendix D.5. Error Rates for Aggregated Feature Combinations with the Naïve Bayes Classifier without Dominated Feature Combinations

Appendix D.6. Error Rates of All Classifiers for Aggregated Feature Combinations without Dominated Feature Combinations

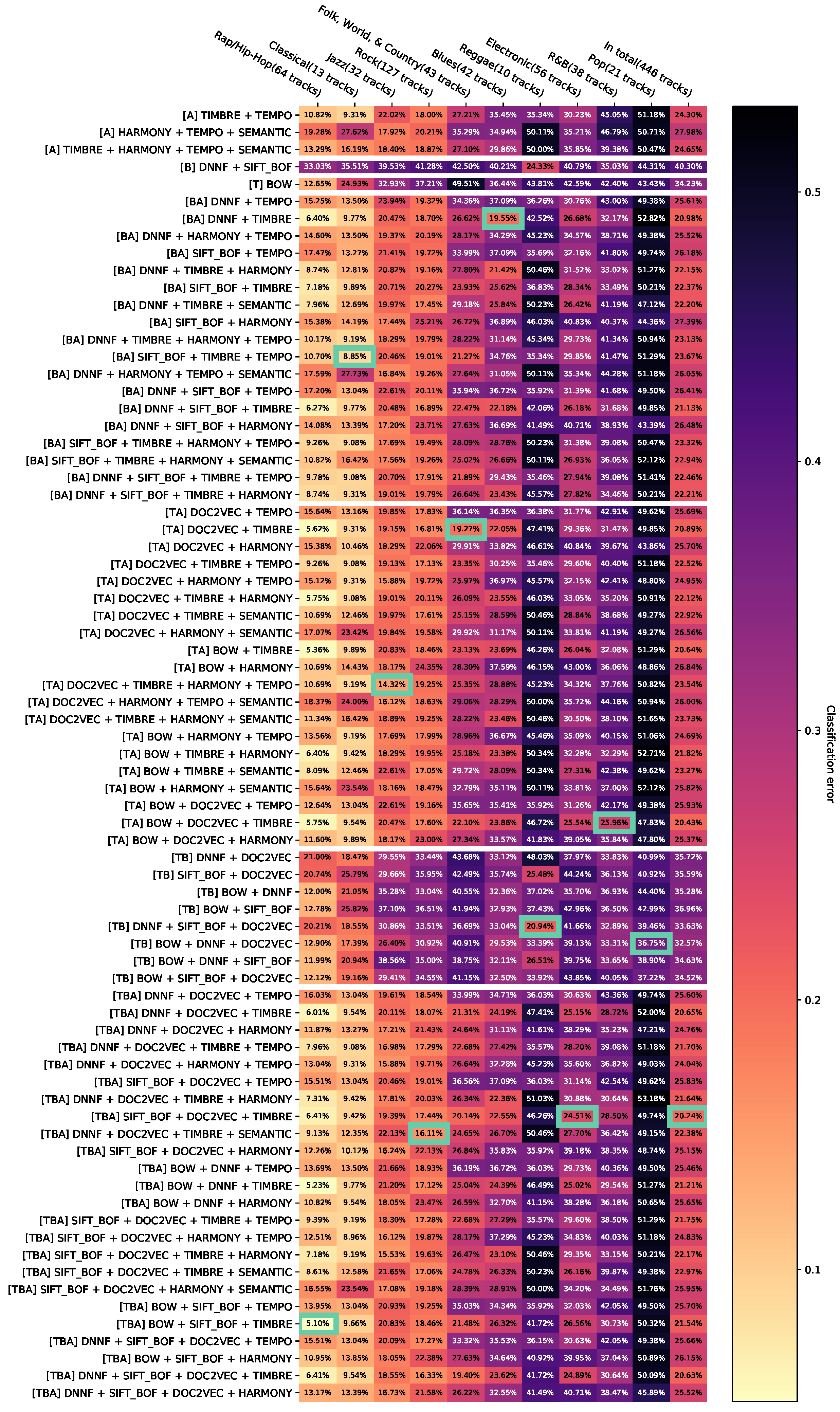

Appendix D.7. Error Rates for Aggregated Feature Combinations with SVM as a Classifier without Feature Combinations Contributing Less than T=0.01 to the Dominated Hypervolume

Appendix D.8. Error Rates for Aggregated Feature Combinations with the Random Forest Classifier without Feature Combinations Contributing Less than T=0.01 to the Dominated Hypervolume

Appendix D.9. Error Rates for Aggregated Feature Combinations with the Naïve Bayes Classifier without Feature Combinations Contributing Less than T=0.01 to the Dominated Hypervolume

Appendix D.10. Error Rates of All Classifiers for Aggregated Feature Combinations without Feature Combinations Contributing Less than T=0.01 to the Dominated Hypervolume

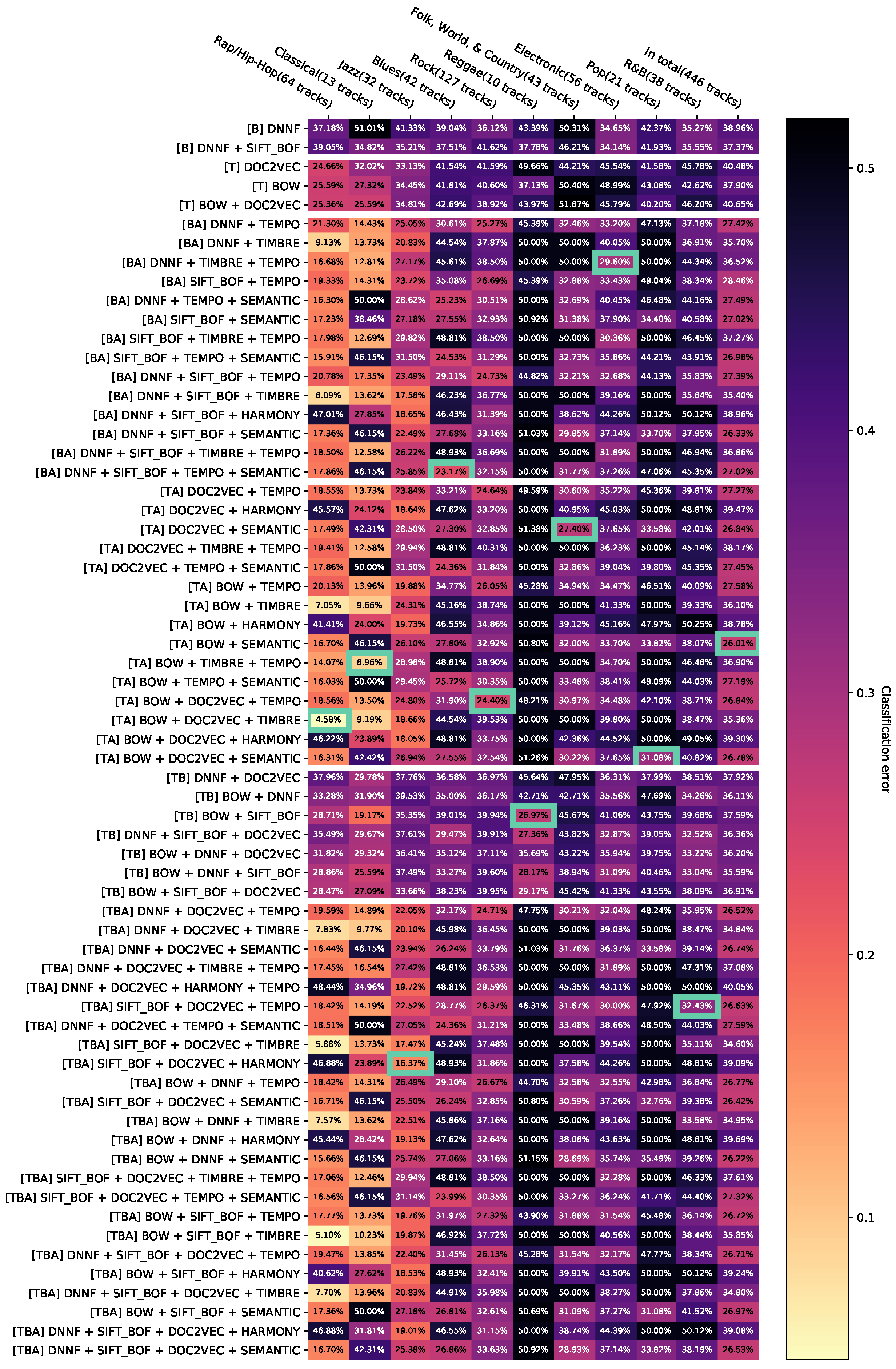

Appendix D.11. Error Rates for Aggregated Feature Combinations with SVM as a Classifier without Feature Combinations Contributing Less than T=0.05 to the Dominated Hypervolume

Appendix D.12. Error Rates for Aggregated Feature Combinations with the Random Forest Classifier without Feature Combinations Contributing Less than T=0.05 to the Dominated Hypervolume

Appendix D.13. Error Rates for Aggregated Feature Combinations with the Naïve Bayes Classifier without Feature Combinations Contributing Less than T=0.05 to the Dominated Hypervolume

Appendix D.14. Error Rates of All Classifiers for Aggregated Feature Combinations without Feature Combinations Contributing Less than T=0.05 to the Dominated Hypervolume

Appendix E. Test Results

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Audio against image | 0.062 | 0.124 | 0.368 | 0.399 | retained |
| Audio against text | 0.328 | 0.656 | 0.368 | 0.372 | retained |
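
The adapted p-values in the table above are numerically consistent with a Bonferroni-style correction, in which each raw p-value is multiplied by the number of comparisons in the table and capped at 1. The sketch below reproduces that arithmetic. It is an inference from the tabulated numbers rather than a statement of the procedure actually used, and both the function name `bonferroni` and the significance level of 0.05 are assumptions.

```python
def bonferroni(p_values, alpha=0.05):
    """Adjust p-values by the number of tests and decide on each null hypothesis.

    Assumptions: Bonferroni correction and alpha = 0.05; neither is
    confirmed by the source.
    """
    m = len(p_values)
    # Multiply each raw p-value by the number of tests, capped at 1.
    adjusted = [min(1.0, p * m) for p in p_values]
    decisions = ["rejected" if p < alpha else "retained" for p in adjusted]
    return adjusted, decisions


# Raw p-values from the two-row table above (audio vs. image, audio vs. text).
adjusted, decisions = bonferroni([0.062, 0.328])
for p, d in zip(adjusted, decisions):
    print(f"{p:.3f} {d}")
```

Because the tabulated raw p-values are already rounded, adapted values recomputed this way can differ from the printed ones in the last digit for tables with more rows.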

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Audio against image | 0.010 | 0.020 | 0.250 | 0.390 | rejected |
| Audio against text | 0.006 | 0.012 | 0.250 | 0.368 | rejected |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Audio against image | 0.041 | 0.082 | 0.282 | 0.380 | retained |
| Audio against text | 0.021 | 0.042 | 0.282 | 0.406 | rejected |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Audio against image + audio | 0.037 | 0.220 | 0.296 | 0.267 | retained |
| Audio against text + audio | 0.248 | 1.000 | 0.296 | 0.295 | retained |
| Image against image + audio | 0.010 | 0.060 | 0.371 | 0.267 | retained |
| Image against text + image | 0.003 | 0.020 | 0.371 | 0.337 | rejected |
| Text against text + audio | 0.006 | 0.035 | 0.361 | 0.295 | rejected |
| Text against text + image | 0.075 | 0.452 | 0.360 | 0.337 | retained |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Audio against image + audio | 0.005 | 0.030 | 0.250 | 0.210 | rejected |
| Audio against text + audio | 0.004 | 0.026 | 0.250 | 0.204 | rejected |
| Image against image + audio | 0.010 | 0.060 | 0.388 | 0.210 | retained |
| Image against text + image | 0.003 | 0.020 | 0.388 | 0.309 | rejected |
| Text against text + audio | 0.004 | 0.027 | 0.352 | 0.204 | rejected |
| Text against text + image | 0.003 | 0.020 | 0.352 | 0.309 | rejected |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Audio against image + audio | 0.010 | 0.060 | 0.278 | 0.263 | retained |
| Audio against text + audio | 0.010 | 0.060 | 0.278 | 0.260 | retained |
| Image against image + audio | 0.010 | 0.060 | 0.372 | 0.263 | retained |
| Image against text + image | 0.004 | 0.026 | 0.372 | 0.325 | rejected |
| Text against text + audio | 0.006 | 0.035 | 0.389 | 0.260 | rejected |
| Text against text + image | 0.016 | 0.098 | 0.389 | 0.325 | retained |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Image + audio against text + image + audio | 0.213 | 0.640 | 0.267 | 0.267 | retained |
| Text + audio against text + image + audio | 0.033 | 0.100 | 0.295 | 0.267 | retained |
| Text + image against text + image + audio | 0.021 | 0.0624 | 0.337 | 0.267 | retained |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Image + audio against text + image + audio | 0.328 | 0.984 | 0.210 | 0.202 | retained |
| Text + audio against text + image + audio | 0.534 | 1.000 | 0.204 | 0.202 | retained |
| Text + image against text + image + audio | 0.091 | 0.273 | 0.310 | 0.202 | retained |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| Image + audio against text + image + audio | 0.021 | 0.062 | 0.263 | 0.262 | retained |
| Text + audio against text + image + audio | 0.333 | 0.999 | 0.260 | 0.262 | retained |
| Text + image against text + image + audio | 0.033 | 0.099 | 0.325 | 0.262 | retained |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| [B] SIFT_BOF (vs = 400, pca = N) against [B] SIFT_BOF (vs = 400, pca = 16) | 0.213 | 0.853 | 0.480 | 0.501 | retained |
| [B] SIFT_BOF (vs = 400, pca = N) against [B] SIFT_BOF (vs = 400, pca = 64) | 0.374 | 1.000 | 0.480 | 0.482 | retained |
| [T] DOC2VEC (vs = 100, pca = N) against [T] DOC2VEC (vs = 100, pca = 16) | 0.155 | 0.619 | 0.413 | 0.408 | retained |
| [T] BOW(..., pca = N) against [T] BOW(..., pca = 32) | 0.657 | 1.000 | 0.409 | 0.399 | retained |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| [B] SIFT_BOF (vs = 400, pca = N) against [B] SIFT_BOF (vs = 400, pca = 16) | 0.131 | 0.523 | 0.461 | 0.474 | retained |
| [B] SIFT_BOF (vs = 400, pca = N) against [B] SIFT_BOF (vs = 400, pca = 64) | 0.722 | 1.000 | 0.461 | 0.474 | retained |
| [T] DOC2VEC (vs = 100, pca = N) against [T] DOC2VEC (vs = 100, pca = 16) | 0.575 | 1.000 | 0.393 | 0.397 | retained |
| [T] BOW(..., pca = N) against [T] BOW(..., pca = 32) | 0.213 | 0.853 | 0.372 | 0.434 | retained |

| Test of Variables A against B | p-Value | Adapted p-Value | Median A | Median B | Null Hypothesis |
|---|---|---|---|---|---|
| [B] SIFT_BOF (vs = 400, pca = N) against [B] SIFT_BOF (vs = 400, pca = 16) | 0.286 | 1.000 | 0.432 | 0.435 | retained |
| [B] SIFT_BOF (vs = 400, pca = N) against [B] SIFT_BOF (vs = 400, pca = 64) | 0.721 | 1.000 | 0.432 | 0.447 | retained |
| [T] DOC2VEC (vs = 100, pca = N) against [T] DOC2VEC (vs = 100, pca = 16) | 0.062 | 0.248 | 0.458 | 0.416 | retained |
| [T] BOW(..., pca = N) against [T] BOW(..., pca = 32) | 0.594 | 1.000 | 0.426 | 0.420 | retained |

References

- Sturm, B.L. A Survey of Evaluation in Music Genre Recognition. In Proceedings of the 10th International Workshop on Adaptive Multimedia Retrieval: Semantics, Context, and Adaptation (AMR), Copenhagen, Denmark, 24–25 October 2012; pp. 29–66.

- Oramas, S.; Nieto, O.; Barbieri, F.; Serra, X. Multi-Label Music Genre Classification from Audio, Text and Images Using Deep Features. In Proceedings of the 18th International Society for Music Information Retrieval Conference (ISMIR), Suzhou, China, 23–27 October 2017; pp. 23–30.

- Oramas, S.; Barbieri, F.; Nieto, O.; Serra, X. Multimodal Deep Learning for Music Genre Classification. Trans. Int. Soc. Music Inf. Retr. 2018, 1, 4–21.

- Tzanetakis, G.; Cook, P. Musical Genre Classification of Audio Signals. IEEE Trans. Speech Audio Process. 2002, 10, 293–302.

- Lidy, T.; Rauber, A. Evaluation of Feature Extractors and Psycho-Acoustic Transformations for Music Genre Classification. In Proceedings of the 6th International Society for Music Information Retrieval Conference (ISMIR), Montreal, QC, Canada, 11–16 October 2005; pp. 34–41.

- Scaringella, N.; Zoia, G.; Mlynek, D. Automatic Genre Classification of Music Content: A Survey. IEEE Signal Process. Mag. 2006, 23, 133–141.

- Bainbridge, D.; Bell, T. The Challenge of Optical Music Recognition. Comput. Humanit. 2001, 35, 95–121.

- Burgoyne, J.; Devaney, J.; Ouyang, Y.; Pugin, L.; Himmelman, T.; Fujinaga, I. Lyric Extraction and Recognition on Digital Images of Early Music Sources. In Proceedings of the 10th International Society for Music Information Retrieval Conference (ISMIR), Kobe, Japan, 26–30 October 2009; pp. 723–728.

- Ke, Y.; Hoiem, D.; Sukthankar, R. Computer Vision for Music Identification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–26 June 2005; IEEE Computer Society: Washington, DC, USA, 2005; Volume 1, pp. 597–604.

- Dorochowicz, A.; Kostek, B. Relationship between Album Cover Design and Music Genres. In Proceedings of the 2019 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 18–20 September 2019; pp. 93–98.

- Le, V. Visual Metaphors on Album Covers: An Analysis into Graphic Design’s Effectiveness at Conveying Music Genres. Bachelor’s Thesis, Honors College, Oregon State University, Corvallis, OR, USA, 2020.

- Schindler, A. Multi-Modal Music Information Retrieval: Augmenting Audio-Analysis with Visual Computing for Improved Music Video Analysis. Ph.D. Thesis, Faculty of Informatics, TU Wien, Vienna, Austria, 2019.

- Libeks, J.; Turnbull, D. You Can Judge an Artist by an Album Cover: Using Images for Music Annotation. IEEE Multimed. 2011, 18, 30–37.

- Logan, B.; Kositsky, A.; Moreno, P. Semantic Analysis of Song Lyrics. In Proceedings of the 2004 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 27–30 June 2004; IEEE Computer Society: Washington, DC, USA, 2004; pp. 827–830.

- Xia, Y.; Wang, L.; Wong, K. Sentiment Vector Space Model for Lyric-Based Song Sentiment Classification. Int. J. Comput. Process. Lang. 2008, 21, 309–330.

- Tsaptsinos, A. Lyrics-Based Music Genre Classification Using a Hierarchical Attention Network. In Proceedings of the 18th International Society for Music Information Retrieval Conference (ISMIR), Suzhou, China, 23–27 October 2017; pp. 694–701.

- Simonetta, F.; Ntalampiras, S.; Avanzini, F. Multimodal Music Information Processing and Retrieval: Survey and Future Challenges. In Proceedings of the 2019 International Workshop on Multilayer Music Representation and Processing (MMRP), Milano, Italy, 24–25 January 2019; pp. 10–18.

- Neumayer, R.; Rauber, A. Integration of Text and Audio Features for Genre Classification in Music Information Retrieval. In Proceedings of the 29th European Conference on IR Research (ECIR), Rome, Italy, 2–5 April 2007; pp. 724–727.

- Mayer, R.; Neumayer, R.; Rauber, A. Combination of Audio and Lyrics Features for Genre Classification in Digital Audio Collections. In Proceedings of the 16th ACM International Conference on Multimedia (MM), Vancouver, BC, Canada, 27–31 October 2008; pp. 159–168.

- Mayer, R.; Rauber, A. Multimodal Aspects of Music Retrieval: Audio, Song Lyrics-and Beyond? In Advances in Music Information Retrieval; Ras, Z.W., Wieczorkowska, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 333–363.

- Mayer, R.; Rauber, A. Music Genre Classification by Ensembles of Audio and Lyrics Features. In Proceedings of the 12th International Society for Music Information Retrieval Conference (ISMIR), Miami, FL, USA, 24–28 October 2011; pp. 675–680.

- Laurier, C.; Grivolla, J.; Herrera, P. Multimodal Music Mood Classification Using Audio and Lyrics. In Proceedings of the 7th International Conference on Machine Learning and Applications, San Diego, CA, USA, 11–13 December 2008; pp. 688–693.

- Yang, D.; Lee, W.S. Music Emotion Identification from Lyrics. In Proceedings of the 11th IEEE International Symposium on Multimedia (ISM), San Diego, CA, USA, 14–16 December 2009; pp. 624–629.

- Xiong, Y.; Su, F.; Wang, Q. Automatic Music Mood Classification by Learning Cross-Media Relevance between Audio and Lyrics. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 961–966.

- Delbouys, R.; Hennequin, R.; Piccoli, F.; Royo-Letelier, J.; Moussallam, M. Music Mood Detection Based on Audio and Lyrics with Deep Neural Net. In Proceedings of the 19th International Society for Music Information Retrieval Conference (ISMIR), Paris, France, 23–27 September 2018; pp. 370–375.

- Suzuki, M.; Hosoya, T.; Ito, A.; Makino, S. Music Information Retrieval from a Singing Voice Using Lyrics and Melody Information. EURASIP J. Appl. Signal Process. 2007, 2007, 38727.

- Dhanaraj, R.; Logan, B. Automatic Prediction Of Hit Songs. In Proceedings of the 6th International Conference on Music Information Retrieval (ISMIR), London, UK, 11–15 September 2005; pp. 488–491.

- Zangerle, E.; Tschuggnall, M.; Wurzinger, S.; Specht, G. ALF-200k: Towards Extensive Multimodal Analyses of Music Tracks and Playlists. In Advances in Information Retrieval; Pasi, G., Piwowarski, B., Azzopardi, L., Hanbury, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; pp. 584–590.

- Cataltepe, Z.; Yaslan, Y.; Sonmez, A. Music Genre Classification Using MIDI and Audio Features. EURASIP J. Appl. Signal Process. 2007, 2007, 36409.

- Velarde, G.; Chacón, C.C.; Meredith, D.; Weyde, T.; Grachten, M. Convolution-based Classification of Audio and Symbolic Representations of Music. J. New Music Res. 2018, 47, 191–205.

- Dunker, P.; Nowak, S.; Begau, A.; Lanz, C. Content-based mood classification for photos and music: A generic multi-modal classification framework and evaluation approach. In Proceedings of the 1st ACM SIGMM International Conference on Multimedia Information Retrieval (MIR), Vancouver, BC, Canada, 30–31 October 2008; pp. 97–104.

- McKay, C.; Burgoyne, J.A.; Hockman, J.; Smith, J.B.L.; Vigliensoni, G.; Fujinaga, I. Evaluating the Genre Classification Performance of Lyrical Features Relative to Audio, Symbolic and Cultural Features. In Proceedings of the 11th International Society for Music Information Retrieval Conference (ISMIR), Utrecht, The Netherlands, 9–13 August 2010; pp. 213–218.

- Panda, R.; Malheiro, R.; Rocha, B.; Oliveira, A.; Paiva, R.P. Multi-Modal Music Emotion Recognition: A New Dataset, Methodology and Comparative Analysis. In Proceedings of the 10th International Symposium on Computer Music Multidisciplinary Research (CMMR), Marseille, France, 15–18 October 2013.

- Moore, A.F. Categorical Conventions in Music Discourse: Style and Genre. Music Lett. 2001, 82, 432–442.

- Pachet, F.; Cazaly, D. A taxonomy of musical genres. In Proceedings of the 6th International Conference on Content-Based Multimedia Information Access (RIAO), Paris, France, 12–14 April 2000; pp. 1238–1245.

- Discogs. Available online: https://www.discogs.com (accessed on 30 October 2021).

- MusicBrainz. Available online: https://musicbrainz.org (accessed on 30 October 2021).

- MetroLyrics. Available online: https://en.wikipedia.org/wiki/MetroLyrics (accessed on 30 October 2021).

- LyricWiki. Available online: https://de.wikipedia.org/wiki/LyricWiki (accessed on 30 October 2021).

- CajunLyrics. Available online: http://www.cajunlyrics.com (accessed on 30 October 2021).

- Lololyrics. Available online: https://www.lololyrics.com (accessed on 30 October 2021).

- Apiseeds Lyrics. Available online: https://apiseeds.com/documentation/lyrics (accessed on 30 October 2021).

- Vatolkin, I. Improving Supervised Music Classification by Means of Multi-Objective Evolutionary Feature Selection. Ph.D. Thesis, Department of Computer Science, TU Dortmund University, Dortmund, Germany, 2013.

- Kamien, R. Music: An Appreciation; McGraw-Hill Education: New York, NY, USA, 2014.

- Pampalk, E. Computational Models of Music Similarity and their Application in Music Information Retrieval. Ph.D. Thesis, Department of Computer Science, Vienna University of Technology, Vienna, Austria, 2006.

- American National Standards Institute. USA Standard Acoustical Terminology; ANSI: New York, NY, USA, 1960.

- Randel, D.M. The Harvard Dictionary of Music; Belknap Press: Cambridge, MA, USA, 2003.

- Harris, Z.S. Distributional Structure. WORD 1954, 10, 146–162.

- Bramer, M. Principles of Data Mining; Undergraduate Topics in Computer Science; Springer: London, UK, 2013.

- Le, Q.; Mikolov, T. Distributed Representations of Sentences and Documents. In Proceedings of the 31st International Conference on Machine Learning (ICML), Beijing, China, 21–26 June 2014; Volume 32, pp. 1188–1196.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; Dean, J. Distributed Representations of Words and Phrases and Their Compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 5–10 December 2013; Curran Associates Inc.: Red Hook, NY, USA, 2013; pp. 3111–3119. [Google Scholar]

- Skansi, S. Introduction to Deep Learning-From Logical Calculus to Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2018. [Google Scholar]

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Lloyd, S. Least Squares Quantization in PCM. IEEE Trans. Inf. Theory 1982, 28, 129–137. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Pearson, K. LIII. On Lines and Planes of Closest Fit to Systems of Points in Space. Lond. Edinb. Dublin Philos. Mag. J. Sci. 1901, 2, 559–572. [Google Scholar] [CrossRef]

- Maron, M.E. Automatic Indexing: An Experimental Inquiry. J. Assoc. Comput. Mach. 1961, 8, 404–417. [Google Scholar] [CrossRef]

- Qiang, G. An Effective Algorithm for Improving the Performance of Naive Bayes for Text Classification. In Proceedings of the 2nd International Conference on Computer Research and Development (ICCRD), Kuala Lumpur, Malaysia, 7–10 May 2010; pp. 699–701. [Google Scholar]

- Vapnik, V.N.; Chervonenkis, A.Y. Theory of Pattern Recognition; USSR: Nauka, MA, USA, 1974. [Google Scholar]

- Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-based Learning Methods; Cambridge University Press: Cambridge, UK, 2000. [Google Scholar]

- Ho, T.K. Random Decision Forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition (ICDAR), Montreal, QC, Canada, 14–16 August 1995; pp. 278–282. [Google Scholar]

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Wiley: Wadsworth, OH, USA, 1984. [Google Scholar]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar]

- Au, T. Random Forests, Decision Trees, and Categorical Predictors: The “Absent Levels” Problem. J. Mach. Learn. Res. 2018, 19, 1–30. [Google Scholar]

- Breiman, L. Bagging Predictors. Mach. Learn. 1996, 24, 123–140. [Google Scholar] [CrossRef]

- Vatolkin, I.; Theimer, W.; Botteck, M. AMUSE (Advanced Music Explorer)—A Multitool framework for music data analysis. In Proceedings of the 11th International Society for Music Information Retrieval Conference (ISMIR), Utrecht, The Netherlands, 9–13 August 2010; pp. 33–38. [Google Scholar]

- Kohavi, R. A Study of Cross-validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI), Montreal, QC, Canada, 20–25 August 1995; pp. 1137–1143. [Google Scholar]

- Zitzler, E.; Knowles, J.; Thiele, L. Quality Assessment of Pareto Set Approximations. In Multiobjective Optimization: Interactive and Evolutionary Approaches; Branke, J., Deb, K., Miettinen, K., Słowiński, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 373–404. [Google Scholar]

- Wilcoxon, F. Individual Comparisons by Ranking Methods. Biom. Bull. 1945, 1, 80–83. [Google Scholar] [CrossRef]

- Weihs, C.; Jannach, D.; Vatolkin, I.; Rudolph, G. Music Data Analysis: Foundations and Applications; CRC Press: Boca Raton, FL, USA, 2016. [Google Scholar]

- Choi, K.; Fazekas, G.; Sandler, M.B.; Cho, K. Transfer Learning for Music Classification and Regression Tasks. In Proceedings of the 18th International Society for Music Information Retrieval Conference (ISMIR), Suzhou, China, 23–27 October 2017; pp. 141–149. [Google Scholar]

- Seyerlehner, K.; Widmer, G.; Knees, P. Frame Level Audio Similarity-A Codebook Approach. In Proceedings of the 11th International Conference on Digital Audio Effects (DAFx), Espoo, Finland, 1–4 September 2008. [Google Scholar]

- Soleymani, M.; Caro, M.N.; Schmidt, E.M.; Sha, C.Y.; Yang, Y.H. 1000 Songs for Emotional Analysis of Music. In Proceedings of the 2nd ACM International Workshop on Crowdsourcing for Multimedia (CrowdMM), Barcelona, Spain, 21 October 2013; ACM: New York, NY, USA, 2013; pp. 1–6. [Google Scholar]

- Smith, J.B.L.; Burgoyne, J.A.; Fujinaga, I.; Roure, D.D.; Downie, J.S. Design and Creation of a Large-Scale Database of Structural Annotations. In Proceedings of the 12th International Society for Music Information Retrieval Conference (ISMIR), Miami, FL, USA, 24–28 October 2011; pp. 555–560. [Google Scholar]

- Last.FM. Available online: https://www.last.fm. (accessed on 30 October 2021).

- TU Dortmund, Department of Computer Science, Chair for Algorithm Engineering Music Collection. Available online: https://ls11-www.cs.tu-dortmund.de/rudolph/mi/albumlist (accessed on 30 October 2021).

- TU Dortmund, Department of Computer Science, Chair for Algorithm Engineering Music Collection TAS 120. Available online: https://ls11-www.cs.tu-dortmund.de/rudolph/mi/tsai120 (accessed on 30 October 2021).

- Theimer, W.; Vatolkin, I.; Eronen, A. Definitions of Audio Features for Music Content Description; Technical Report TR08-2-001; Department of Computer Science, TU Dortmund University: Dortmund, Germany, 2008. [Google Scholar]

- Lartillot, O. MIRtoolbox 1.4 User’s Manual. Technical report, Finnish Centre of Excellence in Interdisciplinary Music Research and Swiss Center for Affective Sciences. 2012. Available online: https://www.jyu.fi/hytk/fi/laitokset/mutku/en/research/materials/mirtoolbox/MIRtoolbox%20Users%20Guide%201.4/@@download/file/manual1.4.pdf (accessed on 30 October 2021).

- Müller, M. Information Retrieval for Music and Motion; Springer: Berlin/Heidelberg, Germany, 2007. [Google Scholar]

- Müller, M.; Ewert, S. Chroma Toolbox: Matlab Implementations for Extracting Variants of Chroma-Based Audio Features. In Proceedings of the 12th International Conference on Music Information Retrieval (ISMIR), Miami, FL, USA, 24–28 October 2011; pp. 215–220. [Google Scholar]

- Mauch, M.; Dixon, S. Approximate Note Transcription for the Improved Identification of Difficult Chords. In Proceedings of the 11th International Society for Music Information Retrieval Conference (ISMIR), Utrecht, The Netherlands, 9–13 August 2010; pp. 135–140. [Google Scholar]

| | Vocabulary Size | Stop Word Removal | Stemming | TF-IDF | PCA |
|---|---|---|---|---|---|
| Configuration 1 | 400 | yes | yes | yes | no |
| Configuration 2 | 400 | yes | yes | yes | 32 |

| | Size of the Hidden Layer | PCA |
|---|---|---|
| Configuration 1 | 100 | no |
| Configuration 2 | 100 | 16 |

| | Vocabulary Size | PCA |
|---|---|---|
| Configuration 1 | 400 | no |
| Configuration 2 | 400 | 16 |
| Configuration 3 | 400 | 64 |

| | PCA |
|---|---|
| Configuration 1 | 32 |
| Configuration 2 | 64 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wilkes, B.; Vatolkin, I.; Müller, H. Statistical and Visual Analysis of Audio, Text, and Image Features for Multi-Modal Music Genre Recognition. Entropy 2021, 23, 1502. https://doi.org/10.3390/e23111502
