Abstract

A pursuit–evasion game is a classical maneuver confrontation problem in the multi-agent systems (MASs) domain. An online decision technique based on deep reinforcement learning (DRL) was developed in this paper to address the problem of environment sensing and decision-making in pursuit–evasion games. A control-oriented framework developed from the DRL-based multi-agent deep deterministic policy gradient (MADDPG) algorithm was built to implement multi-agent cooperative decision-making to overcome the limitation of the tedious state variables required for the traditionally complicated modeling process. To address the effects of errors between a model and a real scenario, this paper introduces adversarial disturbances. It also proposes a novel adversarial attack trick and adversarial learning MADDPG (A2-MADDPG) algorithm. By introducing an adversarial attack trick for the agents themselves, uncertainties of the real world are modeled, thereby optimizing robust training. During the training process, adversarial learning was incorporated into our algorithm to preprocess the actions of multiple agents, which enabled them to properly respond to uncertain dynamic changes in MASs. Experimental results verified that the proposed approach provides superior performance and effectiveness for pursuers and evaders, and both can learn the corresponding confrontational strategy during training.

1. Introduction

With the development of the three generations of artificial intelligence [1], the technology of multi-agent systems (MASs) has been widely used in many areas of society, such as multi-agent motion planning, complex IT systems, and computer communication technology [2,3,4,5]. Pursuit–evasion games have been widely investigated in MASs in recent years and have been extended to various fields, including maneuvering target tracking, surveillance early warning, anti-intrusion protection, and intelligent transportation [6,7]. The goal of these studies is to provide good strategies for pursuers and evaders. Pursuers aim to round up as many evaders as possible through cooperative decision-making. Evaders need to choose the best strategy based on the actions of the pursuers and plan an escape path that prevents capture [8].

To address this problem, a series of studies on agent-based pursuit–evasion games has been carried out in the field of differential games. Isaacs [9] proposed a one-to-one robot hunting problem in which partial differential equations describing the pursuer and the evader were formulated and solved analytically. Furthermore, a generalized maximum–minimum method for solving the Hamilton–Jacobi equation for pursuit–evasion games was provided by Krasovskii [10]. Because directly solving differential equations in complex control problems is very complicated and consumes many computing resources, researchers proposed intelligent optimization algorithms that provide new ideas for solving the differential equation problems associated with pursuit–evasion games. Chen et al. [11] simulated fish foraging behavior and proposed a cooperative pursuit strategy for the case in which trackers have a constrained turning rate. Wang et al. [12] introduced an alliance generation algorithm that generates a synergistic strategy based on the emotional factors of multirobot systems, which ensured that a team’s agents worked towards a common goal. However, the complicated control process governing these problems involves many constraints and state variables, which makes the solution intricate, especially in a complex and dynamic scenario with multi-agent confrontation. Therefore, more intelligent algorithms are needed to effectively solve pursuit–evasion games.

By combining deep learning’s ability to perceive high-dimensional data [13] and reinforcement learning’s decision-making ability [14], deep reinforcement learning (DRL) provides a new optimization scheme for intelligent decision-making and control. Because techniques based on deep reinforcement learning do not require the establishment of a differential game model, and agents can learn the optimal confrontation strategy purely through interaction with the environment [15], some scholars introduced deep reinforcement learning into pursuit–evasion games and obtained the Nash equilibrium of the problem. Xu et al. [16] established a multi-agent reinforcement learning model for UAV pursuit–evasion in which relative motion state equations were employed. As a result, the pursuit–evasion problem was converted into a zero-sum game addressed through minimax-Q learning. In predatory games, Park et al. [17] set up a co-evolution framework for predator and prey that allows multiple agents to learn good policies by deep reinforcement learning. Gu et al. [18] presented an attention-based fault-tolerant model that could also be applied to pursuit–evasion games; its key idea was to use a multihead attention mechanism to select the correct and useful information for estimating the critics. To solve the complicated training problems caused by the discrete action sets introduced by deep Q networks [19], Liu et al. [20] transformed a space rendezvous optimization problem between a space vehicle and a noncooperative target into a pursuit–evasion differential game. They introduced a branching architecture with a group of parallel neural networks and shared decision modules. To overcome the unstable recognition ability of pursuers, Qadir et al. [21] proposed a novel approach that combines self-organizing feature maps and deep reinforcement learning and is based on the agent group role membership function model. Experiments verified the effectiveness of this method for facilitating the capture of evaders by mobile agents. Singh et al. [22] built on the actor–critic, model-free multi-agent deep deterministic policy gradient algorithm to operate over the continuous spaces of pursuit–evasion games. In their approach, the evader’s strategy is not learned. It is based on Voronoi regions that pursuers try to minimize and evaders try to maximize.

Although they represent progress, previous studies on DRL-based pursuit–evasion games are still in their early stages. In these studies, pursuing platforms are assumed to be equipped with error-free identification and measurement systems that allow them to acquire precise information about the position, velocity, and other characteristics of evaders and cooperators [6,23]. In reality, however, the sensors and other equipment of an unmanned system are subject to positioning, sensing, and actuator errors [24,25]. These errors make the environment uncertain, which affects the strategies of the pursuers and evaders and degrades their performance. Designing a robust algorithm for MASs that effectively mitigates these errors is therefore significant for applied research in real-world multi-agent decision-making.

This paper introduces a novel multi-agent algorithm to address the decision-making problem of pursuit–evasion games. The algorithm can solve pursuit–evasion games in complex virtual and real environments, where there are static or moving obstacles and pursuers and evaders need to avoid them while making decisions. Specifically, we make the following contributions in this paper:

(1) We develop an actor–critic-based motion control framework based on the multi-agent deep deterministic policy gradient (MADDPG) [26], which can take the state and behavior of other partners into account and is used to provide collaborative decision-making capabilities for each agent in the MAS;

(2) We propose an advanced algorithm called A2-MADDPG, which uses two techniques to make the trained strategy robust. The first is an adversarial attack trick for the agents themselves: states are sampled after stochastic Gaussian noise is applied, which trains a robust agent that can cope with measurement errors in the real world. The second is an optimized adversarial learning technique [27], which is introduced to improve agent stability and to help agents adapt to the noise produced by interactions between multiple agents;

(3) We verified the effectiveness and robustness of the algorithm in simulation experiments. We compared the performance of the proposed method with two common and advanced algorithms, namely the MADDPG and the independent multiagent deep deterministic policy gradient (IMDDPG), where the IMDDPG is a natural extension of the DDPG [28] in the field of multi-agents. Through a series of experiments, we show that the proposed method presents excellent performance for both pursuers and evaders compared with the MADDPG and IMDDPG in the case of the same hyperparameter settings and simulation environment parameter settings, and it can help them both develop robust motion strategies.

The rest of the paper is structured as follows: Section 2 provides background information about multi-agent pursuit–evasion games and describes related theoretical approaches. Section 3 introduces a framework for collaborative pursuit missions and an improved A2-MADDPG algorithm where an adversarial attack trick and an adversarial learning-based optimization method are combined with the MADDPG. Section 4 verifies the robustness and high performance of the algorithm through simulation experiments. Section 5 provides a conclusion and envisages future work.

2. Background

In this section, the kinematic and observation model of agents executing a pursuit–evasion task is presented. In addition, the essential theoretical background of the DRL-based MADDPG algorithm and adversarial learning is introduced.

2.1. Problem Definition

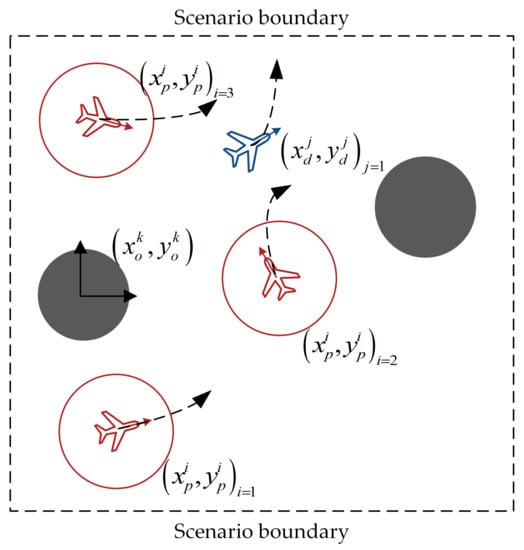

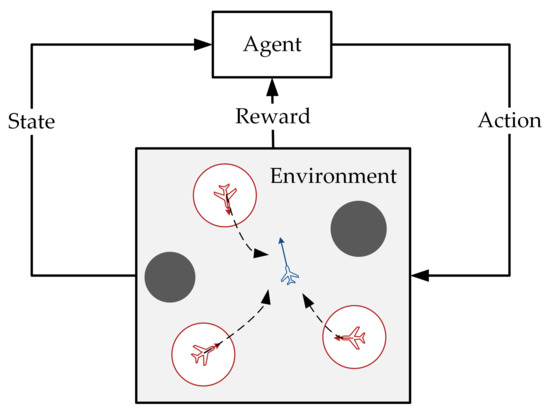

The multi-agent pursuit–evasion game problem can be described as follows: there are pursuers (red agents) and evaders (blue agents), as shown in Figure 1. The two agent types have different tasks based on their maneuverability. Each agent can perceive the relative position of the threat zone (gray circle) using radar and sensors. The velocity and position of each agent are provided by its navigation equipment, and agents share this information through a signaling connection. Pursuers are equipped with an attack or shielding interference device (the red circle represents the attack range of the pursuer), and their mission is considered successful when they suppress an evader by approaching it. Evaders must stay away from pursuers. Neither pursuers nor evaders can exceed their boundaries.

Figure 1.

The scenario of multi-agent pursuit–evasion games.

2.1.1. Kinematic Model

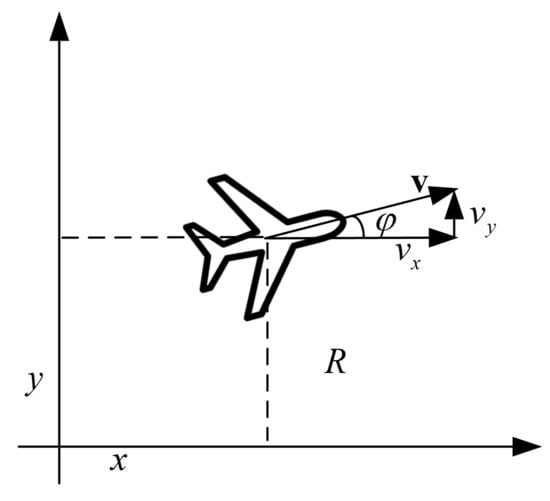

A general decision-making program for pursuit–evasion gaming is primarily used to determine the communication and cooperation between platforms and achieve target pursuit. This is performed without fully considering the maneuvering characteristics of the platforms. Both agents in this paper are mobile UAVs flying at a fixed altitude with nonholonomic constraints [29], as portrayed in Figure 2. The status update of each UAV can be described as:

where the state variables are the position, velocity, and yaw angle of the UAV. The superscript t represents time t, the update is applied over a fixed time interval, and a is the UAV’s acceleration. Considering power system and mechanical limitations, the velocity and acceleration are bounded by maximal values, which are specified in the simulation settings below.

Figure 2.

Motion analysis of the UAV.
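The status update described above can be illustrated with a minimal point-mass sketch. It is not a reproduction of Equation (1): the function name `step`, the argument names, and the choice to clip the acceleration and speed magnitudes and to read the yaw angle from the velocity direction are all assumptions made for illustration.

```python
import math

def step(x, y, vx, vy, ax, ay, dt, v_max, a_max):
    """Advance a planar UAV state by one time interval dt (hypothetical model)."""
    # Limit the acceleration magnitude to a_max (assumed mechanical limit).
    a = math.hypot(ax, ay)
    if a > a_max:
        ax, ay = ax * a_max / a, ay * a_max / a
    # Integrate the velocity, then limit the speed to v_max.
    vx, vy = vx + ax * dt, vy + ay * dt
    v = math.hypot(vx, vy)
    if v > v_max:
        vx, vy = vx * v_max / v, vy * v_max / v
    # Integrate the position with the updated velocity.
    x, y = x + vx * dt, y + vy * dt
    # Yaw angle taken as the direction of travel (assumption).
    psi = math.atan2(vy, vx)
    return x, y, vx, vy, psi
```

For example, a UAV moving at speed 4 along the x axis that accelerates by 2 for one unit of time with `v_max = 5` has its speed capped at 5 rather than reaching 6.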

2.1.2. Observation Model

The observation model gives each agent the ability to sense the environment [30]. In this multi-agent pursuit–evasion task, the model uses the position of each agent in the pursuit formation together with the positions of the center points of the evader and of the threatened area. The numbers of pursuers, evaders, and obstacles in the environment are defined separately. Since pursuers and evaders must avoid obstacles when making decisions, these obstacles make the pursuit–evasion problem more difficult to solve. The formation of all pursuers is denoted as A. An agent i on the pursuers’ team can use radar detection and communication transmission to obtain its own local observations from the environment as follows:

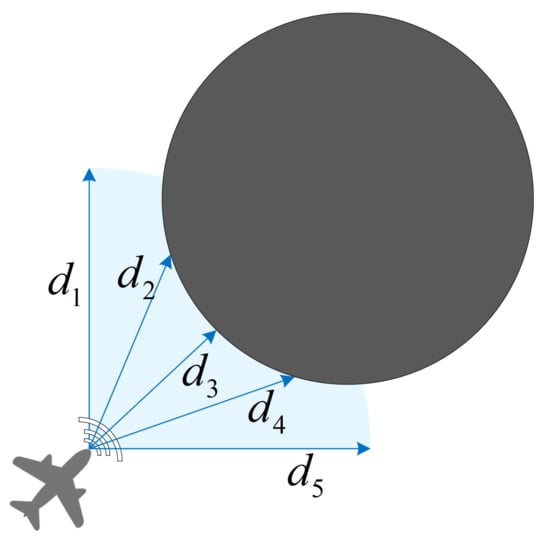

Here, the observation contains the self-observed velocity and position of the agent along the x and y axes; the observed locations of the other pursuers in the formation, where l is the sequence number of another pursuer on the team; the observed location of an evader, where j is its sequence number; and the information observed about an obstacle, where k is the obstacle number. Considering a real mission scenario, a set of range sensors is employed to help the unmanned system detect possible threats from obstacles ahead of it within the sensor range. As shown in Figure 3, the 90° sector bounded by the blue arc within the sensor range is the agent’s threat detection area. An agent’s observations about an obstacle are divided into five parts:

where each of the five parts is the indication of one sensor. A default value is assigned when a threat is not detected. Based on the comprehensive observation information above, an agent can perceive and assess the situation.

Figure 3.

Unmanned system obstacle threat detection based on range sensors.
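The five-part obstacle observation can be illustrated with a small ray-casting sketch. Several details are assumptions rather than the paper's model: the five rays are spaced evenly across the 90° sector centered on the heading, obstacles are circles given as `(cx, cy, radius)`, and a sensor that detects nothing reports the full sensor range. The function names are hypothetical.

```python
import math

def ray_circle_distance(px, py, angle, cx, cy, radius):
    """Distance along a ray from (px, py) to a circle, or None if it misses."""
    dx, dy = math.cos(angle), math.sin(angle)
    fx, fy = cx - px, cy - py
    t_closest = fx * dx + fy * dy              # projection of the center onto the ray
    d2 = fx * fx + fy * fy - t_closest ** 2    # squared distance from center to ray line
    if d2 > radius ** 2:
        return None                            # the ray line misses the circle
    half = math.sqrt(radius ** 2 - d2)
    if t_closest + half < 0:
        return None                            # the circle lies entirely behind the sensor
    return max(t_closest - half, 0.0)          # 0.0 if the sensor is inside the circle

def sensor_readings(px, py, heading, obstacles, sensor_range,
                    n_sensors=5, fov=math.pi / 2):
    """Return one range reading per sensor across the detection sector."""
    readings = []
    for k in range(n_sensors):
        angle = heading - fov / 2 + fov * k / (n_sensors - 1)
        best = sensor_range                    # assumed default: nothing detected
        for cx, cy, r in obstacles:
            t = ray_circle_distance(px, py, angle, cx, cy, r)
            if t is not None and t < best:
                best = t
        readings.append(best)
    return readings
```

With an agent at the origin heading along the x axis and a unit-radius obstacle centered at (3, 0), only the central ray intersects the obstacle, at a distance of 2.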

2.2. Theoretical Context

Deep reinforcement learning is a representative intelligent machine learning algorithm, and adversarial learning can increase the stability and robustness of the model trained by reinforcement learning [31]. They provide new research ideas for multi-agent pursuit–evasion decision-making. In this section, adversarial learning, the DRL-based DDPG algorithm, and the MADDPG algorithm are introduced.

2.2.1. Adversarial Learning

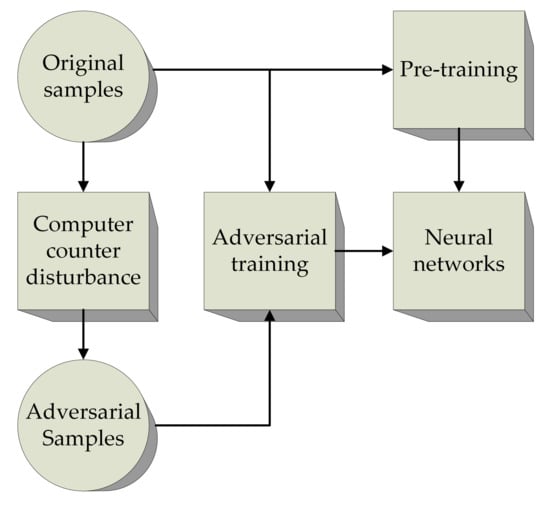

Adversarial learning is a technique for defending against adversarial samples [32]. This approach attempts to improve the accuracy of neural network models by training on adversarial samples and normal samples together, which reduces the interference of the adversarial samples and improves the robustness and generalization ability of the resulting network. Adversarial training can be expressed as follows:
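In the commonly used min–max form, this objective can be sketched as follows. This is a reconstruction, and the perturbation bound $\eta$ is an assumed symbol that does not appear in the text:

$$
\min_{\theta} \; \mathbb{E}_{(x, y)} \left[ \max_{D(x, x') \le \eta} J(\theta, x', y) \right]
$$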

where $x$ and $x'$ denote the original sample and the adversarial sample, respectively, $y$ is the label value, and $\theta$ is the weight of the networks. $D(x, x')$ represents the distance measurement between the original sample and the adversarial sample, and $J(\cdot)$ represents the adversarial loss function. In the min–max form, the inner maximization finds the optimal adversarial sample, and the outer minimization minimizes the loss function. The learning process of adversarial training is depicted in Figure 4.

Figure 4.

Schematic of adversarial learning.

The fast gradient sign method (FGSM) efficiently generates adversarial samples [33]. The FGSM uses the gradient of a model’s objective loss function with respect to the input vector to calculate an adversarial perturbation, which it adds to the corresponding input. This generates adversarial samples that correspond to the original samples. Suppose that, in a classification problem, the model outputs a label for each input sample. After adversarial training, the model will have higher prediction confidence; that is, the model will output the correct sample label even if a small adversarial disturbance is added to the sample. This process can be defined as:

where $r$ represents the added sample perturbation and $x' = x + r$ represents the adversarial sample after the perturbation is added. Each time the model is trained, the FGSM takes a step along the gradient direction of the adversarial loss function $J$ to obtain adversarial samples. The generation of the sample perturbation can be expressed as:
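In the standard FGSM formulation, which is assumed to be the one intended here, the perturbation is:

$$
r = \epsilon \cdot \mathrm{sign}(g), \qquad g = \nabla_{x} J(\theta, x, y)
$$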

where $\epsilon$ denotes the magnitude of the disturbance and g is the gradient of the loss with respect to the input vector, obtained by backpropagation. Moreover, the FGSM-based target loss function can be defined as:
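A common form of this objective, based on the standard FGSM training loss rather than a verbatim copy of the original equation, is:

$$
\tilde{J}(\theta, x, y) = c \, J(\theta, x, y) + (1 - c) \, J\big(\theta,\; x + \epsilon \cdot \mathrm{sign}(\nabla_{x} J(\theta, x, y)),\; y\big)
$$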

where c is an equilibrium coefficient that balances the original and adversarial samples. As a result, the adversarial examples used in the adversarial learning method can improve the generalization ability of a model by adding a regularization term to the loss function. The goal of adversarial training is to minimize the loss function in the worst case.
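As a concrete illustration of the FGSM steps above, the following sketch applies them to a logistic-regression classifier, for which the input gradient has a closed form. The model, the function names, and the numerical values are assumptions chosen for illustration and are unrelated to the networks used in this paper.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, b, x, y):
    """Binary cross-entropy J(theta, x, y) of a logistic-regression model."""
    p = sigmoid(x @ w + b)
    return -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

def fgsm_sample(w, b, x, y, eps):
    """Adversarial sample x' = x + eps * sign(g), with g the input gradient."""
    g = (sigmoid(x @ w + b) - y) * w   # closed-form gradient of the loss w.r.t. x
    return x + eps * np.sign(g)

def mixed_loss(w, b, x, y, eps, c):
    """Weighted sum of the clean loss and the adversarial loss."""
    x_adv = fgsm_sample(w, b, x, y, eps)
    return c * loss(w, b, x, y) + (1.0 - c) * loss(w, b, x_adv, y)
```

Because the perturbation follows the sign of the loss gradient, the loss on the adversarial sample is larger than on the original sample, and the mixed loss lies between the two.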

2.2.2. DRL-Based DDPG Algorithm

During the deep reinforcement learning process, an agent completes its interaction with the environment by perceiving the environment and taking appropriate actions. It performs adaptive iterative optimization according to a reward signal, as shown in Figure 5. An effective approach to describe the DRL-based training process is the Markov decision process (MDP) [34], which is represented by a five-tuple $(S, A, P, R, \gamma)$. At each time step, an agent interacts with the environment and makes observations, which comprise the agent’s state $s \in S$. The agent then performs the action $a \in A$, obtains the reward R, and moves from s to a new state $s'$. P denotes the environmental model and represents the probability distribution of transitioning to a new state. $\gamma$ is a discount factor used to balance the impact of instantaneous returns and long-term returns on cumulative rewards.

Figure 5.

The basic process of deep reinforcement learning.

The deep deterministic policy gradient (DDPG) is an algorithm that combines a policy-based actor neural network with a value-based critic neural network and can be employed for continuous control [28]. The actor online network $\mu$ reacts to the agent’s current observation state and generates an action $a = \mu(s \mid \theta^{\mu})$. The critic online network Q is responsible for evaluating the current action and outputting the action value function $Q(s, a \mid \theta^{Q})$. $\theta^{\mu}$ and $\theta^{Q}$ denote the corresponding parameters of the actor online network and the critic online network. In addition, an actor target network $\mu'$ and a critic target network $Q'$ are constructed for future updates.

After each decision, a training sample $(s_t, a_t, r_t, s_{t+1})$ for time t is collected in the experience buffer M, which is used to iteratively improve the agent’s strategies. In the update phase, a random mini-batch of N samples in this format is extracted at every training step. The critic online network is updated according to the TD-error, which is defined as:
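In the standard DDPG formulation [28], with the online critic $Q(\cdot \mid \theta^{Q})$ and the target networks $\mu'$ and $Q'$ (notation assumed here), this loss reads:

$$
L = \frac{1}{N} \sum_{i} \big( y_i - Q(s_i, a_i \mid \theta^{Q}) \big)^2, \qquad y_i = r_i + \gamma \, Q'\big(s_{i+1}, \mu'(s_{i+1} \mid \theta^{\mu'}) \mid \theta^{Q'}\big)
$$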

Here, L is the loss function of the critic network, y is the target value (Q-target), and i indexes the extracted samples. Additionally, the actor online network is trained using the following sampled policy gradient:
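In standard DDPG notation (assumed here), the sampled deterministic policy gradient is:

$$
\nabla_{\theta^{\mu}} J \approx \frac{1}{N} \sum_{i} \nabla_{a} Q(s, a \mid \theta^{Q}) \big|_{s = s_i,\, a = \mu(s_i)} \; \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu}) \big|_{s = s_i}
$$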

At regular intervals, the soft update approach with update factor $\tau$ is used to blend the online network parameters into the target networks, which can be expressed as:
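With $\tau$ denoting the soft update rate (as in Algorithm 2) and the parameter notation assumed from standard DDPG, the update is:

$$
\theta^{Q'} \leftarrow \tau \theta^{Q} + (1 - \tau) \theta^{Q'}, \qquad \theta^{\mu'} \leftarrow \tau \theta^{\mu} + (1 - \tau) \theta^{\mu'} \tag{13}
$$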

2.2.3. Multi-Agent Deep Deterministic Policy Gradient Algorithm

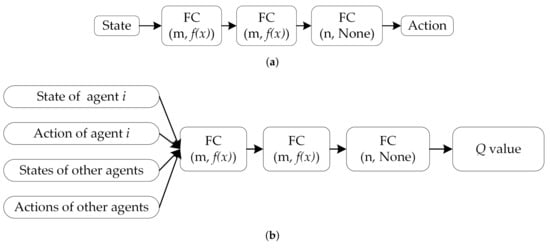

The MADDPG algorithm is an effective DRL algorithm derived from the DDPG algorithm and can be used to address problems with multi-agent strategies. In the MADDPG, each agent has its own actor–critic framework [35]. For a multi-agent system, the observation set of the n agents is $O = \{o_1, \ldots, o_n\}$, the action set is $A = \{a_1, \ldots, a_n\}$, and the reward set is $R = \{r_1, \ldots, r_n\}$. For each agent i, its observation and action at a point in time are denoted as $o_i$ and $a_i$. The agent’s actor online network outputs a policy $\mu_i(o_i)$ according to the agent’s own observations, and its critic online network estimates a centralized action value function $Q_i(x, a_1, \ldots, a_n)$. This function is based on the states and actions of all agents, as depicted in Figure 6.

Figure 6.

Critic and actor network structures of the MADDPG algorithm. (a) Actor and (b) critic network structures.

During each interaction within the environment, an agent will store relevant experiences in the experience buffer. Unlike the DDPG, the N comprehensive learning samples in the MADDPG are drawn and spliced from the experience buffer of all agents each time one is trained. For agent i, the critic online network is updated according to:
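Following the standard MADDPG formulation [26], with x denoting the joint state and primes denoting target networks and next-step quantities (notation assumed here), the critic loss for agent i over the sampled mini-batch is:

$$
L(\theta_i) = \frac{1}{N} \sum_{k} \big( y^{k} - Q_i(x^{k}, a_1^{k}, \ldots, a_n^{k}) \big)^2, \qquad y^{k} = r_i^{k} + \gamma \, Q_i'\big({x'}^{k}, a_1', \ldots, a_n'\big) \big|_{a_j' = \mu_j'({o_j'}^{k})}
$$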

Meanwhile, the agent’s actor online network is optimized using the sampled policy gradient, which is expressed as:
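In the standard MADDPG notation (assumed here, with $\theta_i$ the actor parameters of agent i), this gradient is:

$$
\nabla_{\theta_i} J \approx \frac{1}{N} \sum_{k} \nabla_{\theta_i} \mu_i(o_i^{k}) \; \nabla_{a_i} Q_i(x^{k}, a_1^{k}, \ldots, a_i, \ldots, a_n^{k}) \big|_{a_i = \mu_i(o_i^{k})}
$$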

The MADDPG algorithm also borrows the soft update technique described in Equation (13) from DDPG.
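The soft update and the TD target shared by the DDPG and the MADDPG can be sketched in a few lines. The dictionary-of-arrays parameter layout, the function names, and the handling of terminal transitions through a `done` flag are assumptions made for this illustration.

```python
import numpy as np

def soft_update(target_params, online_params, tau):
    """Blend online parameters into target parameters with rate tau."""
    return {name: tau * online_params[name] + (1.0 - tau) * target_params[name]
            for name in target_params}

def td_target(reward, gamma, q_next, done):
    """Q-target y = r + gamma * Q'(next state, next action).

    The bootstrap term is dropped on terminal transitions (an assumption;
    the text does not state how episode ends are handled).
    """
    return reward + (0.0 if done else gamma * q_next)
```

A small `tau` (for example 0.01) makes the target networks track the online networks slowly, which stabilizes the Q-target used in the critic loss.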

Although agents trained with the MADDPG can achieve good results in some simple environments, the multi-agent system is very sensitive to changes in the training environment, and the strategies to which agents converge are likely to fall into a local optimum; that is, when the strategies of other agents change, the agent cannot produce the optimal action strategy. To improve the robustness of the strategy, this paper combines the MADDPG and adversarial learning to propose the A2-MADDPG algorithm, which is introduced in Section 3.

3. Proposed Method

This section proposes an approach for realizing control for pursuers and evaders in a game played in an uncertain environment. There are obstacles in the environment that both pursuers and evaders need to avoid. In addition, when specific values in the state space are acquired, sensors and other devices are subject to positioning, sensing, and actuator errors, which produce inaccurate values, so the environment is uncertain for pursuers and evaders. An MADDPG-based control framework for multi-agent systems is presented, and it includes the action space, the state space, and specific reward functions. Furthermore, an improved approach called the A2-MADDPG is described. The A2-MADDPG incorporates an adversarial attack trick and adversarial learning into the MADDPG algorithm.

3.1. MADDPG-Based Framework

3.1.1. Action Space

When addressing DRL-based multi-agent decision-making, state and action spaces must be defined based on the MDP framework. To ensure that mission control is more similar to the real world, UAVs use dual-channel control; that is, the force on a UAV is controlled directly. The effects of this force are then applied to the UAV’s movement attitude and flight velocity. The action output of a dual-channel thrust UAV can be expressed as:

where the superscript i represents the sequence number of the UAV in an MAS, and the two components are the forces applied to the UAV along the x and y axes. Therefore, the acceleration can be given by:

where the mass of the UAV relates the applied force to the resulting acceleration. The UAV’s attitude can then be updated by combining this acceleration with Equation (1).

3.1.2. State Space

The state space of a UAV provides useful information based on an agent’s observation model. This is used to help the agent sense its surroundings and make decisions. To support both sides during confrontation training, the state spaces of both pursuers and evaders are presented. As per Section 2.1.2, each pursuer’s state information is processed and integrated, and it includes the pursuer’s position relative to its partners, to the evader targets, and to the obstacles, as mentioned in Equation (3). The state space of pursuer i can be defined as:

where the self-state term denotes the pursuer’s own position and speed, based on self-observed information that has not been processed. Similarly, the state space of an evader j can be defined as:

where the terms represent, in turn, the position and speed of evader j, its position relative to other evaders, its position relative to pursuers, and the sensed obstacles.

3.1.3. Reward Function

In the traditional MADDPG algorithm, formation cooperation cannot be uniformly controlled since each agent has an independent actor and critic network. When a unit successfully hunts down a target, all agents belonging to the formation receive a positive reward regardless of whether the agent was in effective tracking range or played a positive role in the mission. This is contrary to the incentive policy of real pursuit–evasion scenarios.

To address this problem, a reward function based on the team strategy is presented. An agent receives a positive reward only if it is within a certain distance of the target when the mission terminates. The reward is shaped by three basic elements: (1) distance: the Euclidean distance is used to judge whether the agent successfully pursued the evader; (2) maneuvering safety: the agent is punished if it has collided with obstacles or collaborators; and (3) mission criteria: these are used to judge whether the agent completed the mission. These three subreward functions can be defined as:

where i is the sequence number of the agent, and a distance function is used to calculate the Euclidean distance between positions a and b. The safety term depends on the radius of an obstruction and on the minimum safe distance between pursuers. To summarize, the reward function for a pursuer i can be formulated as:

Three relative gain factors are introduced, which represent the respective weights of the three rewards or punishments. Among them, one is negative, and the other two are positive. To train an autonomous evader, a specific reward function was built according to the distances among the pursuers, evaders, and obstacles, and its weights are the inverse of those in the pursuer’s reward function.
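The structure of such a team-based reward can be sketched as follows. The exact subreward definitions and weights are not recoverable from the text, so everything concrete here is an assumption: the distance term is the distance to the nearest evader and carries the negative weight, the safety term is -1 on a collision, the mission term is 1 only for a pursuer inside the capture range when the episode ends, and the default weights are placeholders.

```python
import math

def pursuer_reward(pos, evaders, obstacles, partners, capture_range,
                   obstacle_radius, safe_dist, done,
                   w_dist=-0.1, w_safe=1.0, w_mission=10.0):
    """Weighted sum of distance, safety, and mission subrewards (hypothetical)."""
    # (1) Distance: Euclidean distance to the nearest evader.
    d_min = min(math.dist(pos, e) for e in evaders)
    r_dist = d_min
    # (2) Maneuvering safety: penalize collisions with obstacles or partners.
    hit_obstacle = any(math.dist(pos, o) <= obstacle_radius for o in obstacles)
    too_close = any(math.dist(pos, p) < safe_dist for p in partners)
    r_safe = -1.0 if (hit_obstacle or too_close) else 0.0
    # (3) Mission: only a pursuer within capture range at termination is rewarded.
    r_mission = 1.0 if (done and d_min <= capture_range) else 0.0
    return w_dist * r_dist + w_safe * r_safe + w_mission * r_mission
```

Under these assumptions, a pursuer that ends the episode 1 km from the evader receives the mission bonus, whereas a teammate 5 km away receives only the distance penalty, which reflects the idea that agents outside the effective range should not share the positive reward.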

3.2. A2-MADDPG Algorithm

3.2.1. Adversarial Attack Trick for the Agent

When perceiving the environment in a real scenario, an agent would encounter unavoidable errors due to the detection process, image recognition, signal processing, and satellite position-based parameter measurements. Improving model robustness is of great significance, especially in key intelligent control fields such as UAVs and robots, where tiny errors or noise could lead to immeasurable and undesirable consequences.

To train a robust agent to adapt to measurement errors and other noise in real environments, an adversarial attack trick for the agent itself is proposed. This approach aims to generate random noise in the agent’s state, thereby confusing its perception and helping it produce a strategy for abnormal conditions [36]. Algorithm 1 summarizes the adversarial attack process. Its inputs are the actor online network, the critic target network, and the state of agent i. Over a limited number of iterations, the state is perturbed with stochastic Gaussian noise, and the perturbed state that yields the minimum Q value found is retained.

| Algorithm 1 Adversarial attack trick (with the MADDPG). |

| 1: |

| 2: |

| 3: for do |

| 4: |

| 5: |

| 6: |

| 7: if then |

| 8: |

| 9: end if |

| 10: end for |

| 11: return |

The action of agent i can be remodeled based on its state after including stochastic Gaussian noise. Similarly, the robustness of the multi-agent intelligent control model could be optimized according to the adversarial attack of all agents by modeling the indeterminacies of the real world.
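One plausible reading of the attack process summarized in Algorithm 1 is sketched below: sample several Gaussian-perturbed copies of the state and keep the one with the lowest Q value. The function signature, the callables `actor` and `critic`, and the noise scale `sigma` are assumptions, since the original pseudocode is not reproduced here.

```python
import numpy as np

def adversarial_state(state, actor, critic, n_iters, sigma, rng):
    """Return the Gaussian-perturbed state with the lowest Q value found.

    actor(state) -> action and critic(state, action) -> float are
    hypothetical stand-ins for the actor online and critic target networks.
    """
    best_state = state
    best_q = critic(state, actor(state))
    for _ in range(n_iters):
        # Perturb the clean state with zero-mean Gaussian noise.
        candidate = state + rng.normal(0.0, sigma, size=state.shape)
        q = critic(candidate, actor(candidate))
        # Keep the perturbation that is worst for the agent (minimum Q).
        if q < best_q:
            best_q, best_state = q, candidate
    return best_state
```

The agent then selects its action from the returned state, so during training it regularly acts on a pessimistic, noisy view of the environment.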

3.2.2. Adversarial Learning for Cooperators

In addition to uncertain influences from a real environment, an agent is susceptible to strategy changes made by other agents in the overall system [37]. In other words, an agent cannot produce the optimal action strategy to match other agents when those agents change strategies. Our algorithm preprocesses the actions of cooperators using adversarial training techniques, so the agent’s strategy is updated based on the worst decisions of other agents. Specifically, as the neural network is updated, the cumulative return of agent i is optimized under the condition that all cooperators use adversarial strategies. The cumulative return of agent i combined with adversarial learning can be formulated as:

where represents the state distribution. That means would be influenced by the actions of all agents. Furthermore, the action value Q function could be defined in a recursive form as:

The single-step gradient descent method was introduced to overcome high computing costs [38]. Using this method, the actions taken by cooperators are those exhibiting a mixed disturbance, and the direction of the disturbance is the orientation in which the Q function is decreasing. To summarize, the update process of the critic online network can be formulated as:
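One way to write this update, assuming MADDPG-style notation with joint state x, mini-batch size m, target critic $Q_i'$, target actions $a_j'$, and per-agent disturbances $\epsilon_j$ (a reconstruction, not the original equation), is:

$$
L(\theta_i) = \frac{1}{m} \sum_{k} \big( y^{k} - Q_i(x^{k}, a_1^{k}, \ldots, a_n^{k}) \big)^2, \qquad y^{k} = r_i^{k} + \gamma \, Q_i'\big({x'}^{k}, a_1' + \epsilon_1, \ldots, a_i', \ldots, a_n' + \epsilon_n\big)
$$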

where the target value is computed with the critic target network, the actions of the other agents are taken under their worst-case (Q-minimizing) conditions, and a disturbance is added to the action of each other agent j. By linearizing the Q function, the disturbance can be approximated by a step along the gradient direction of the Q function with respect to that agent’s action, which replaces the inner minimization with this gradient approximation:
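A one-step approximation of this kind is typically written as follows, where the step size $\alpha$ is an assumed symbol:

$$
\epsilon_j \approx -\alpha \, \nabla_{a_j} Q_i(x, a_1, \ldots, a_n)
$$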

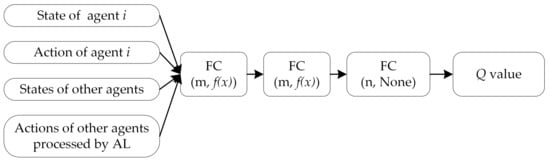

The critic network structure of the MADDPG combined with adversarial learning is illustrated in Figure 7. When the MADDPG is implemented, adversarial interference only needs to be added to the actions of other agents, and the critic network does not need to be remodeled.

Figure 7.

Critic network structures of the MADDPG algorithm combined with AL (the information about the actions of other agents in the input layer is processed by AL).
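The preprocessing of cooperators' actions can be illustrated with a toy sketch in which each other agent's action is moved one step in the direction that decreases the critic value. The finite-difference gradient, the callable `q_fn(x, actions)`, and the step size `alpha` are assumptions; a practical implementation would obtain the gradient by automatic differentiation through the critic network.

```python
import numpy as np

def grad_wrt_action(q_fn, x, actions, j, h=1e-5):
    """Central finite-difference gradient of Q with respect to action j."""
    g = np.zeros_like(actions[j])
    for d in range(actions[j].size):
        up = [a.copy() for a in actions]
        dn = [a.copy() for a in actions]
        up[j][d] += h
        dn[j][d] -= h
        g[d] = (q_fn(x, up) - q_fn(x, dn)) / (2.0 * h)
    return g

def perturb_others(q_fn, x, actions, i, alpha):
    """Shift every agent's action except agent i's along the Q-decreasing direction."""
    out = [a.copy() for a in actions]
    for j in range(len(actions)):
        if j != i:
            out[j] = actions[j] - alpha * grad_wrt_action(q_fn, x, actions, j)
    return out
```

The perturbed actions are used only inside the critic target of agent i, so the agent learns against a locally worst-case version of its cooperators without changing the critic architecture.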

To summarize, the A2-MADDPG algorithm proposed in this paper employs an adversarial attack trick and adversarial learning to process an agent’s state information and other agents’ actions during training. This bridges the gap between simulated training and the real world by adding adversarial disturbances. The overall A2-MADDPG algorithm is described in Algorithm 2.

| Algorithm 2 Adversarial attack trick and adversarial learning MADDPG (A2-MADDPG) algorithm. |

| 1: for N agents, randomly initialize their critic network and actor network |

| 2: synchronize target networks and with |

| 3: initialize hyperparameters: experience buffer , mini-batch size m, max episode M, max step T, actor learning rate la, critic learning rate lc, discount factor γ, soft update rate τ |

| 4: for episode = 1, M do |

| 5: reset environment, and receive the initial state x |

| 6: initialize exploration noise of action |

| 7: for t = 1, T do |

| 8: |

| 9: for each agent i, select action |

| 10: execute , rewards , and next state x’ |

| 11: store sample in |

| 12: for agent i = 1, n do |

| 13: randomly extract m samples |

| 14: update critic network by Equation (27) |

| 15: update actor network by the policy gradient |

| 16: end for |

| 17: update target networks by |

| 18: end for |

| 19: end for |

4. Experiment and Result Analysis

This section describes the simulation’s settings and a series of experiments implemented to analyze the effectiveness and performance of the approaches proposed in previous sections.

4.1. Simulation Environment Settings

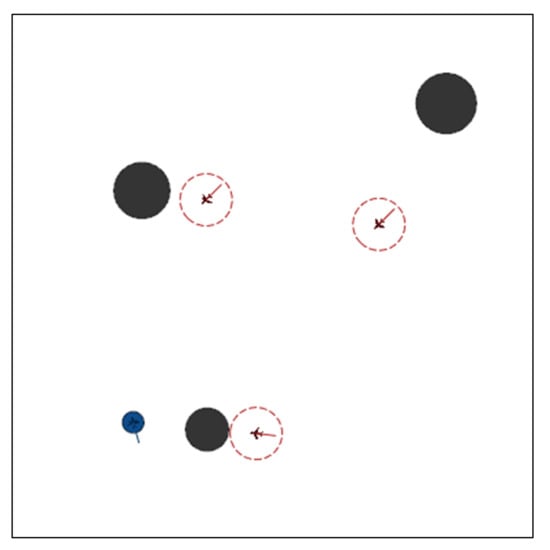

The experiments were conducted using PyCharm and the gym module on an Ubuntu 16.04 system with an Intel i7-6700K CPU, a GeForce GTX 1660 Ti graphics card, and 16 GB of RAM. As shown in Figure 8, the testbed was a square (20 km on a side) in a two-dimensional plane. Each obstacle was assumed to have a round threat area of fixed radius (black circle). The attack range of the pursuers (red circles with a UAV inside) was set to 1.2 km, which means the mission was considered successful for pursuers when the distance between them and at least one evader (blue UAVs) was within 1.2 km. Table 1 provides the parameters used for the platforms.

Figure 8.

Simulation testbed for the multi-agent pursuit–evasion game.

Table 1.

The detailed parameter settings of agent platforms in the pursuit–evasion game.

In the DRL-based multipursuer framework, a two-layer perceptron model was constructed for the actor and critic networks. Two fully connected 15 × 64 × 64 × 2 neural networks were created for the actor network and its target. Furthermore, two fully connected 17 × 64 × 64 × 1 neural networks were created for the critic network and its target. Each round ends when the pursuers capture an evader, a platform collides with an obstacle, or the simulation reaches the maximum number of steps. After each round, the environment was reset, and the next round began. Network training ended when the experience buffer was full, and an Adam optimizer was used to determine the neural network parameters. The hyperparameters of the network are shown in Table 2.

Table 2.

The detailed hyperparameter settings of the networks.

4.2. Experiment on the Performance of the A2-MADDPG

4.2.1. Performance of Pursuers

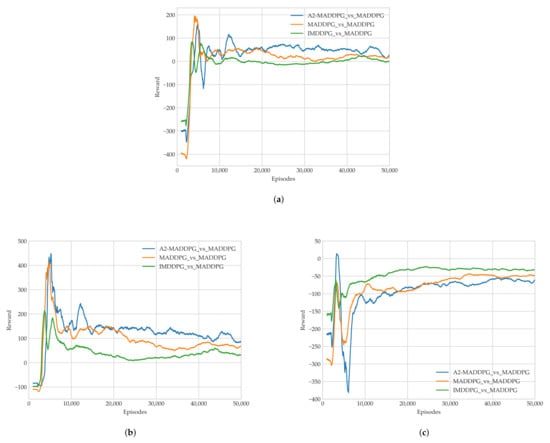

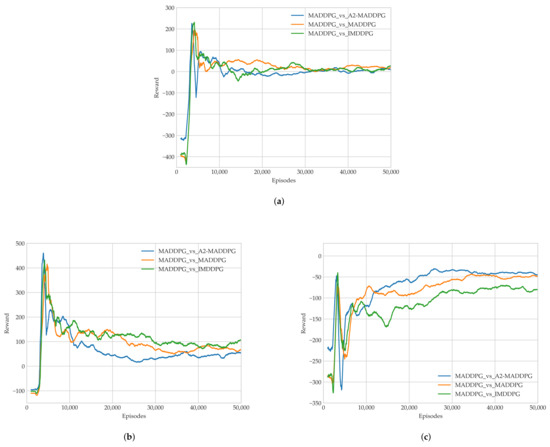

To examine the performance of trained pursuers, Experiment 1 trained them with different algorithms against evaders that were always trained using the MADDPG algorithm. Specifically, we employed the IMDDPG, MADDPG, and A2-MADDPG algorithms in the MAS of pursuers and present comparative data on the average return values over the last 1000 training episodes in Figure 9.
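One plausible way to obtain curves of this kind is a trailing mean over the most recent 1000 episode returns. The sketch below is an assumption about the smoothing, not a description of the authors' plotting code; the function name `trailing_mean` is hypothetical.

```python
from collections import deque


def trailing_mean(values, window=1000):
    """Yield the mean of the most recent `window` values after each new value.

    Early outputs average over fewer than `window` values.
    """
    buf = deque(maxlen=window)
    total = 0.0
    for v in values:
        if len(buf) == window:
            total -= buf[0]   # drop the value about to be evicted
        buf.append(v)
        total += v
        yield total / len(buf)
```

With a window of 2, `list(trailing_mean([1, 2, 3, 4], window=2))` returns `[1.0, 1.5, 2.5, 3.5]`.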

Figure 9.

Average rewards in each episode during Training Experiment 1 of (a) all agents, (b) pursuers, and (c) evaders.

As illustrated in Figure 9a, UAVs trained using all three algorithms required roughly 8000 episodes for the average reward to converge to a steady value. In Figure 9b,c, the pursuers and evaders play against each other, and their respective average rewards do not stabilize until about 16,000 episodes. The A2-MADDPG converged to a higher average reward for pursuers and a lower one for evaders. This means the proposed algorithm produced pursuers that were highly successful at pursuing and at preventing targets from escaping, which lowered the evaders' rewards. To further assess the performance of our algorithm, the average time of first pursuit and the average success of the pursuers in the last 1000 training episodes are recorded in Figure 10.

Figure 10.

Algorithm performance in Experiment 1. (a) Earliest pursuit time and (b) successful number of pursuers.

Figure 10a shows that the MAS pursuers can complete their pursuit after being trained using any of the three DRL algorithms. As training progresses, A2-MADDPG UAV formations pursue evaders in a shorter time. This means that the A2-MADDPG model has more advanced cooperative encirclement capabilities. Figure 10b shows how the number of successful pursuits varied during training. The pursuers initially completed a large number of pursuits in each round, but their success gradually decreased and then stabilized as the two sides confronted one another. This means that MADDPG evaders could also make intelligent decisions autonomously to flee. Ultimately, the average number of successful pursuits by A2-MADDPG UAVs was about four per round, which is better than the other two algorithms.

To present the pros and cons of each algorithm in the steady state, the last 10,000 rounds (from 40,000 to 50,000 episodes in Figure 9 and Figure 10) were analyzed. The results are presented in Table 3 and include the average return values for pursuers and evaders, the average and maximum first pursuit time, and the average and maximum successes.

Table 3.

Comparison of the training results (sampled from 40,000 to 50,000 episodes in Experiment 1).

Table 3 shows the performance of each algorithm after stable convergence. The IMDDPG pursuers that used a distributed critic network had an average return value of 42.15, while the MADDPG pursuers had 72.87. Meanwhile, the A2-MADDPG algorithm improved the pursuers’ performance, causing the average return value to rise to 103.20. Driven by the shaped reward function, A2-MADDPG pursuers developed efficient strategies, thereby obtaining a higher round reward. The earliest pursuit time indicator reflects how time-efficient the pursuers’ decisions were. Table 3 shows that for the A2-MADDPG, the earliest pursuit time became shorter: its average value was reduced from the IMDDPG’s 32.17 to 24.61, and its maximum was reduced from 35.662 to 26.802. The pursuer success number describes how many pursuits were successful in each round. This indicator was better for the A2-MADDPG than for the other two algorithms, which indicates that the MAS pursuers based on it had better performance.
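The size of these gaps is easier to judge as relative changes. The short sketch below recomputes them from the figures quoted in this paragraph; the helper `relative_change` and the dictionary name are hypothetical, and the percentages are derived here rather than reported in Table 3.

```python
# Average pursuer return values quoted in the text for Experiment 1.
PURSUER_RETURNS = {"IMDDPG": 42.15, "MADDPG": 72.87, "A2-MADDPG": 103.20}


def relative_change(baseline, value):
    """Fractional change of `value` with respect to `baseline`."""
    return (value - baseline) / baseline


gain_vs_maddpg = relative_change(
    PURSUER_RETURNS["MADDPG"], PURSUER_RETURNS["A2-MADDPG"]
)
gain_vs_imddpg = relative_change(
    PURSUER_RETURNS["IMDDPG"], PURSUER_RETURNS["A2-MADDPG"]
)
# Average and maximum earliest pursuit time, IMDDPG versus A2-MADDPG.
mean_time_change = relative_change(32.17, 24.61)
max_time_change = relative_change(35.662, 26.802)
```

Rounded to one decimal place, the A2-MADDPG average return is about 41.6% higher than the MADDPG's and about 144.8% higher than the IMDDPG's, while the average and maximum earliest pursuit times fall by about 23.5% and 24.8% relative to the IMDDPG.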

4.2.2. Performance of Evaders

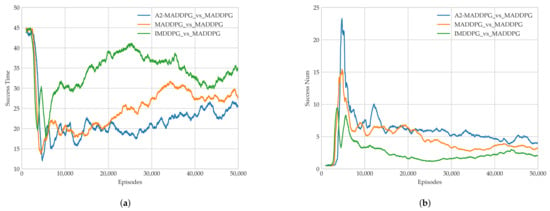

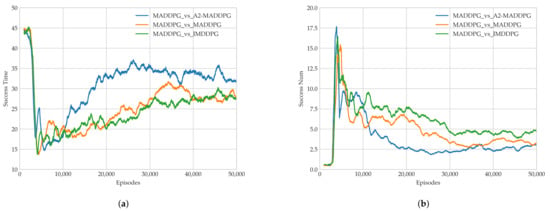

In Experiment 2, we trained evaders using the IMDDPG, MADDPG, and A2-MADDPG to challenge MADDPG pursuers. The average reward results for the last 1000 training episodes are presented in Figure 11.

Figure 11.

Average rewards in each episode during Training Experiment 2 of (a) all agents, (b) pursuers, and (c) evaders.

As illustrated in Figure 11c, the evaders achieved the highest round average using the A2-MADDPG, followed by MADDPG, and finally, IMDDPG. This means that A2-MADDPG evaders had a larger advantage during confrontations, which helped them avoid being attacked by pursuers more often.

We recorded the earliest completion time and the number of successful pursuits by the pursuers in each round to verify the algorithm’s performance, as shown in Figure 12. In Figure 12a, the blue curve has the highest values once the experiment stabilized; that is, the first pursuit completion time was longest in confrontations between MADDPG pursuers and A2-MADDPG evaders. This means that A2-MADDPG evaders made efficient decisions when avoiding pursuers. Figure 12b shows the number of successful pursuits over 50,000 training episodes, from which we observed that the average was smallest in the confrontation between MADDPG pursuers and A2-MADDPG evaders. A2-MADDPG evaders thus demonstrated better escape strategies. In addition, experimental data from the last 10,000 rounds were analyzed, as presented in Table 4. The analysis included the average return value of the pursuing UAV formation and evader, the average and minimum first pursuit completion time, and the average and maximum number of successful pursuits.

Figure 12.

Algorithm performance in Experiment 2. (a) Earliest pursuit time and (b) number of successful pursuers.

Table 4.

Comparison of training results (sampled from 40,000 to 50,000 episodes in Experiment 2).

Table 4 gives the results of each algorithm after stable convergence. Compared with the IMDDPG, which uses a distributed critic network, centralized MADDPG evaders had higher average return values, survived longer before being caught, and were caught less often. A2-MADDPG evaders, with superior maneuverability, could generate effective actions to flee from capture by pursuers. That means the proposed A2-MADDPG also optimized the evaders’ strategies.

4.3. Experiment on the Effectiveness of the A2-MADDPG

4.3.1. Effectiveness of Pursuing

In this section, the algorithm for a specific 3V1 pursuit and evasion confrontation was simulated and analyzed. Table 5 provides the initial positions of the UAVs and obstacles in Experiment 3.

Table 5.

Initial positions of UAVs and obstacles in Experiment 3.

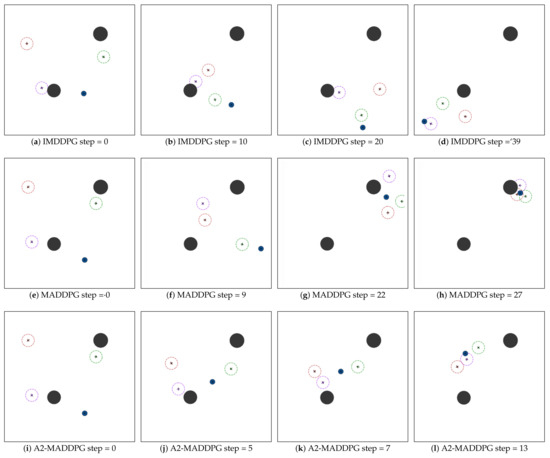

Figure 13 presents the confrontation process of the mission starting from the same initial state. The IMDDPG pursuer formation successfully hit the evader after 39 steps. The MADDPG pursuer formation generated better encirclement strategies and took less time to hunt down the target (27 steps). The A2-MADDPG formation adjusted the direction and speed of each UAV to more effectively reach the more maneuverable evader, and its pursuers needed only 13 steps to reach the escaping target.

Figure 13.

Experiment 3: pursuit–evasion game with one MADDPG-based evader and three groups of pursuers driven by different algorithms. The IMDDPG took 39 steps, MADDPG 27 steps, and A2-MADDPG 13 steps to reach the escape target for the first time.

In Experiment 4, the algorithms were examined in a random test environment with stochastic initial states. Based on the pursuit game trained in Section 4.2.1, the task completion results for 1000 test episodes are shown in Table 6. The improved A2-MADDPG achieved a mission success rate of 88.9% for pursuers, higher than the MADDPG’s 75.3% and the IMDDPG’s 70.4%. Moreover, the A2-MADDPG pursuers were able to catch the evader in less time: their average first pursuit completion time was 23.698, lower than that of the MADDPG and IMDDPG, which shows that the effectiveness of their pursuits was enhanced.
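The two indicators reported for Experiment 4 can be computed from per-episode test records along the following lines. This is a minimal sketch, assuming that the average first completion time is taken over successful episodes only; the `EpisodeResult` record and the `summarize` helper are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class EpisodeResult:
    # Step of the first successful pursuit, or None if no evader was caught.
    first_capture_step: Optional[int]


def summarize(results: Sequence[EpisodeResult]) -> Tuple[float, Optional[float]]:
    """Return (success rate, mean first-capture step over successful episodes)."""
    captures = [
        r.first_capture_step
        for r in results
        if r.first_capture_step is not None
    ]
    success_rate = len(captures) / len(results)
    mean_first = sum(captures) / len(captures) if captures else None
    return success_rate, mean_first
```

Under this definition, a success rate of 88.9% over 1000 test episodes corresponds to 889 episodes with at least one capture.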

Table 6.

Effectiveness of pursuing in Experiment 4.

4.3.2. Effectiveness of Escaping

To test the effectiveness of escaping, 1000 random environments were generated in Experiment 5 to compare the IMDDPG, MADDPG, and A2-MADDPG. The results are presented in Table 7. IMDDPG evaders had a success rate of 17.9%, whereas A2-MADDPG evaders created better evasion strategies, which raised the success rate to 31.4%. The A2-MADDPG evaders also had the largest average first time of being pursued (30.692), which further supports the effectiveness of the A2-MADDPG for evaders. This means that A2-MADDPG evaders devised more effective escape strategies.

Table 7.

Effectiveness of escaping in Experiment 5.

In summary, compared with the IMDDPG and MADDPG, the evaluation indicators of the A2-MADDPG were significantly better under the same hyperparameter and training environment settings; in the same test environment, the pursuit and escape strategies trained by the A2-MADDPG were obviously more robust and more efficient than those trained by the other two algorithms. Therefore, the A2-MADDPG had a superior performance in the experiments.

5. Conclusions

In this paper, deep reinforcement learning was applied to multi-agent pursuit–evasion decision-making without building a complicated control system, as is commonly done in traditional approaches. An elaborate MADDPG-based framework was constructed for providing online decision-making schemes and determining the cooperative control of multi-agent systems. By introducing adversarial disturbances, an improved A2-MADDPG was proposed that effectively reduced the influence of errors between models and real scenarios. Introducing an adversarial attack trick optimized the robustness of the multi-agent intelligent control model by incorporating adversarial attacks from all agents. An adversarial learning technique was incorporated into our algorithm to overcome the vulnerability of responding to the changes introduced by other agents. This was performed by processing data in the input layer of a critic network. Experimental results showed that the proposed algorithm improved the performance of both types of players in pursuit–evasion games and that the trained agents could devise effective strategies autonomously in confrontational missions.

We intend to expand the pursuit–evasion missions by changing the number of pursuers and evaders in the future and increasing the number of obstacles to make the environment more complex, so as to evaluate the performance, efficiency, and robustness of our algorithms in a more realistic and dynamic space. In addition, we would like to apply the trained robust strategies to drones or unmanned vehicles, so that they can make decisions based on the environmental information obtained by the cameras with an authentic range. This will accelerate the conversion of this work from virtual digital simulations to real multi-agent systems.

Author Contributions

Conceptualization, investigation, methodology, and writing—original draft preparation, K.W.; resources, software, visualization, and validation, D.W. and Y.Z.; writing—review and editing, B.L.; project administration and funding acquisition, X.G.; data curation and formal analysis, Z.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 62003267), the Natural Science Foundation of Shaanxi Province (Grant No. 2020JQ-220), and the Open Project of Science and Technology on Electronic Information Control Laboratory (Grant No. JS20201100339).

Acknowledgments

The authors would like to acknowledge the National Natural Science Foundation of China (Grant No. 62003267), the Natural Science Foundation of Shaanxi Province (Grant No. 2020JQ-220), and the Open Project of Science and Technology on Electronic Information Control Laboratory (Grant No. JS20201100339) for providing the funding to conduct these experiments. We thank LetPub (www.letpub.com, accessed on 25 July 2021) for its linguistic assistance during the preparation of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, Y.; De Luca, G. Technologies Supporting Artificial Intelligence and Robotics Application Development. J. Artif. Intell. Technol. 2021, 1, 1–8.

- Wu, D.; Wan, K.; Gao, X.; Hu, Z. Multiagent Motion Planning Based on Deep Reinforcement Learning in Complex Environments. In Proceedings of the 2021 6th International Conference on Control and Robotics Engineering (ICCRE), Beijing, China, 16–18 April 2021; pp. 123–128.

- Czap, H. Self-Organization and Autonomic Informatics (I); IOS Press: Amsterdam, The Netherlands, 2005; Volume 1.

- Folino, G.; Forestiero, A.; Spezzano, G. A Jxta Based Asynchronous Peer-to-Peer Implementation of Genetic Programming. J. Softw. 2006, 1, 12–23.

- Forestiero, A.; Mastroianni, C.; Papuzzo, G.; Spezzano, G. A proximity-based self-organizing framework for service composition and discovery. In Proceedings of the 2010 10th IEEE/ACM International Conference on Cluster, Cloud and Grid Computing, Melbourne, VIC, Australia, 17–20 May 2010; pp. 428–437.

- Lopez, V.G.; Lewis, F.L.; Wan, Y.; Sanchez, E.N.; Fan, L. Solutions for Multiagent Pursuit-Evasion Games on Communication Graphs: Finite-Time Capture and Asymptotic Behaviors. IEEE Trans. Autom. Control 2019, 65, 1911–1923.

- Zhou, Z.; Xu, H. Mean Field Game and Decentralized Intelligent Adaptive Pursuit Evasion Strategy for Massive Multi-Agent System under Uncertain Environment with Detailed Proof. In Proceedings of the Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications II, Online, 27 April–8 May 2020.

- Liu, K.; Jia, B.; Chen, G.; Pham, K.; Blasch, E. A real-time orbit SATellites Uncertainty propagation and visualization system using graphics computing unit and multi-threading processing. In Proceedings of the IEEE/AIAA Digital Avionics Systems Conference, Prague, Czech Republic, 13–17 September 2015.

- Differential Games. A Mathematical Theory with Applications to Warfare and Pursuit, Control and Optimization. Math. Gaz. 1967, 51, 80.

- Unification of differential games, generalized solutions of the Hamilton-Jacobi equations, and a stochastic guide. Differ. Equ. 2009, 45, 1653–1668.

- Chen, J.; Zha, W.; Peng, Z.; Gu, D. Multi-player pursuit–evasion games with one superior evader. Automatica 2016, 71, 24–32.

- Hao, W.; Cheng, L.; Fang, B. An alliance generation algorithm based on modified particle swarm optimization for multiple emotional robots pursuit-evader problem. In Proceedings of the 2014 11th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD), Xiamen, China, 19–21 August 2014.

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

- Botvinick, M.; Ritter, S.; Wang, J.X.; Kurth-Nelson, Z.; Blundell, C.; Hassabis, D. Reinforcement Learning, Fast and Slow. Trends Cogn. Sci. 2019, 23, 408–422.

- Wang, Y.; Dong, L.; Sun, C. Cooperative control for multi-player pursuit–evasion games with reinforcement learning. Neurocomputing 2020, 412, 101–114.

- Xu, G.; Zhao, Y.; Liu, H. Pursuit and evasion game between UVAs based on multi-agent reinforcement learning. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019; pp. 1261–1266.

- Park, J.; Lee, J.; Kim, T.; Ahn, I.; Park, J. Co-Evolution of Predator-Prey Ecosystems by Reinforcement Learning Agents. Entropy 2021, 23, 461.

- Gu, S.; Geng, M.; Lan, L. Attention-Based Fault-Tolerant Approach for Multi-Agent Reinforcement Learning Systems. Entropy 2021, 23, 1133.

- Sewak, M. Deep q network (dqn), double dqn, and dueling dqn. In Deep Reinforcement Learning; Springer: Berlin/Heidelberg, Germany, 2019; pp. 95–108.

- Liu, B.; Ye, X.; Dong, X.; Ni, L. Branching improved Deep Q Networks for solving pursuit–evasion strategy solution of spacecraft. J. Ind. Manag. Optim. 2017, 13.

- Qadir, M.Z.; Piao, S.; Jiang, H.; Souidi, M.E.H. A novel approach for multi-agent cooperative pursuit to capture grouped evaders. J. Supercomput. 2020, 76, 3416–3426.

- Singh, G.; Lofaro, D.M.; Sofge, D. Pursuit-evasion with Decentralized Robotic Swarm in Continuous State Space and Action Space via Deep Reinforcement Learning. In Proceedings of the 12th International Conference on Agents and Artificial Intelligence, Valletta, Malta, 22–24 February 2020; pp. 226–233.

- Wang, X.; Xuan, S.; Ke, L. Cooperatively pursuing a target unmanned aerial vehicle by multiple unmanned aerial vehicles based on multiagent reinforcement learning. Adv. Control Appl. Eng. Ind. Syst. 2020, 2, e27.

- Pang, C.; Xu, G.G.; Shan, G.L.; Zhang, Y.P. A new energy efficient management approach for wireless sensor networks in target tracking. Def. Technol. 2021, 17, 932–947.

- Di, K.; Yang, S.; Wang, W.; Yan, F.; Xing, H.; Jiang, J.; Jiang, Y. Optimizing evasive strategies for an evader with imperfect vision capacity. J. Intell. Robot. Syst. 2019, 96, 419–437.

- Lowe, R.; Wu, Y.; Tamar, A.; Harb, J.; Abbeel, P.; Mordatch, I. Multi-agent actor–critic for mixed cooperative-competitive environments. arXiv 2017, arXiv:1706.02275.

- Zhang, B.H.; Lemoine, B.; Mitchell, M. Mitigating unwanted biases with adversarial learning. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, New Orleans, LA, USA, 2–3 February 2018; pp. 335–340.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

- Li, B.; Gan, Z.; Chen, D.; Sergey Aleksandrovich, D. UAV Maneuvering Target Tracking in Uncertain Environments Based on Deep Reinforcement Learning and Meta-Learning. Remote Sens. 2020, 12, 3789.

- Li, B.; Yang, Z.P.; Chen, D.Q.; Liang, S.Y.; Ma, H. Maneuvering target tracking of UAV based on MN-DDPG and transfer learning. Def. Technol. 2021, 17, 457–466.

- Li, S.; Wu, Y.; Cui, X.; Dong, H.; Fang, F.; Russell, S. Robust multi-agent reinforcement learning via minimax deep deterministic policy gradient. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 4213–4220.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

- Liu, Y.; Mao, S.; Mei, X.; Yang, T.; Zhao, X. Sensitivity of Adversarial Perturbation in Fast Gradient Sign Method. In Proceedings of the 2019 IEEE Symposium Series on Computational Intelligence (SSCI), Xiamen, China, 6–9 December 2019; pp. 433–436.

- Papadimitriou, C.H.; Tsitsiklis, J.N. The complexity of Markov decision processes. Math. Oper. Res. 1987, 12, 441–450.

- Guo, H.; Liu, T.; Wang, Y.; Chen, F.; Fan, J. Research on actor–critic reinforcement learning in RoboCup. In Proceedings of the 2006 6th World Congress on Intelligent Control and Automation, Dalian, China, 21–23 June 2006; Volume 2, pp. 9212–9216.

- Wan, K.; Gao, X.; Hu, Z.; Wu, G. Robust motion control for UAV in dynamic uncertain environments using deep reinforcement learning. Remote Sens. 2020, 12, 640.

- Hernandez-Leal, P.; Kartal, B.; Taylor, M.E. A survey and critique of multiagent deep reinforcement learning. Auton. Agents Multi-Agent Syst. 2019, 33, 750–797.

- Vivek, B.; Babu, R.V. Single-step adversarial training with dropout scheduling. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 947–956.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).